Abstract

Increasing global food production to address challenges from population growth, labor shortages, and climate change necessitates a significant enhancement of agricultural sustainability. Autonomous agricultural machinery, a recognized application of precision agriculture, offers a promising solution to boost productivity, resource efficiency, and environmental sustainability. This study presents a systematic review of autonomous driving technologies for agricultural machinery based on 506 rigorously selected publications. The review emphasizes three core aspects: navigation reliability assurance, motion control mechanisms for both vehicles and implements, and actuator fault-tolerance strategies in complex agricultural environments. Applications in farmland, orchards, and livestock farming demonstrate substantial potential. This study also discusses current challenges and future development trends. It aims to provide a reference and technical guidance for the engineering implementation of intelligent agricultural machinery and to support sustainable agricultural transformation.

1. Introduction

Agriculture plays a fundamental role in global food supply, economic stability, and sustainable development. However, the world’s population is projected to reach 10 billion by 2050, necessitating a substantial increase in agricultural productivity to meet rising food demand [1]. Historically, gains in agricultural productivity have resulted primarily from structural changes, and agriculture has undergone four major transformations to date. The technological features characterizing each stage of agricultural development are illustrated in Figure 1. Traditional cultivation methods, from ancient times to the late 19th century, are commonly classified as Agriculture 1.0. During this period, agriculture relied heavily on localized tools and intensive manual labor, resulting in a labor-intensive production model. Although production efficiency was low, this period established standardized workflows for agricultural operations, which later provided a logical foundation for operations performed by agricultural robots.

Figure 1.

Four development stages of agricultural production.

Building on the Industrial Revolution and the widespread adoption of power machinery, agricultural production advanced to the 2.0 stage in the early 20th century. Combined agricultural tool systems driven by steam and internal combustion engines began replacing traditional manual tools, significantly increasing grain output per unit of labor. This transformation laid the groundwork for the power and mechanical structure of autonomous agricultural robots and directly promoted the development of mechanical guidance devices. Patent records indicate that mechanical guidance devices based on furrow following were already being developed during this period. By the late 1930s, facilitated by advances in electromechanical control systems, the first complete circular farming system was deployed. This system used piano wire deployed from a central spool to mechanically define an accurate travel path, providing an early engineering precedent for the autonomous driving of agricultural machinery [2].

From the 1960s to the 1990s, advances in embedded systems, software development, and communication technologies catalyzed the automation of agricultural production systems. Scholars have termed this shift the Third Agricultural Revolution (Agriculture 3.0), and it marked a critical breakthrough period for the autonomy of agricultural robots. The core contributions of this period were advances in positioning and sensing technologies. Specifically, the engineering deployment of the Global Positioning System (GPS) provided the positioning support needed for the autonomous navigation of agricultural machinery, enabling precise positioning for large-scale field operations. By the 1980s, the integration of computers and image sensors had led to early field robots with vision-based navigation systems [3,4]. As GPS and computer vision technologies matured, the positioning accuracy of autonomous agricultural machinery reached the centimeter level, and such machinery has been widely adopted in operations including sowing, fertilizing, and harvesting. This evolution produced automated systems such as tractor navigation platforms, unmanned orchard spraying technologies, and crop harvesting guidance systems [5]. Because these devices can operate continuously and with high precision, they marked a shift in agricultural robots from single-function machinery to autonomous operation systems.

With the rapid advancement of artificial intelligence and information technology in the 21st century, the Fourth Industrial Revolution has fundamentally transformed agricultural activities. Agricultural production has entered the 4.0 era, often referred to as the digital agriculture era, in which agricultural robots have acquired the core capabilities of intelligence and autonomy [6,7]. Meanwhile, continued population growth and urbanization have driven a sustained increase in global food demand, while environmental challenges such as climate change, farmland degradation, and water scarcity further constrain agricultural development potential [8,9]. In addition, the agricultural sector faces labor shortages and an aging workforce, partly because younger generations are increasingly uninterested in agricultural work. Compared with office employment, agricultural work is more physically demanding and typically pays less, and shifting societal and cultural values have further diminished the attractiveness of agricultural careers [10,11]. Together, these factors depress agricultural productivity and output. It is therefore imperative to develop innovative and sustainable strategies to boost agricultural productivity and capacity.

To address this challenge, precision agriculture (PA) has been introduced as a transformative management approach. It is defined as “a management strategy that collects, processes, and analyzes temporal, spatial, and individual plant and animal data, and integrates this information with other sources to support management decisions based on estimated variability, thereby enhancing resource use efficiency, productivity, quality, profitability, and the sustainability of agricultural production” [12]. PA emerged in the 1990s alongside the development of GPS, GIS, and various data collection tools, and Pierre C. Robert described it as an information revolution driven by new technologies [13]. PA uses GPS, sensors, data processing systems, and automation to collect accurate data on crop conditions, weather, soil, and the environment, thereby enabling reliable, data-driven decision-making. Autonomous agricultural machinery, comprising unmanned aerial vehicles, unmanned ground vehicles, and autonomous navigation robots, represents one of the most recent and practical advances within PA. These systems integrate core technologies including positioning, perception, control, and actuation. They can navigate autonomously across diverse agricultural settings, including farmlands, orchards, and livestock facilities, and perform a wide range of precise operational tasks such as sowing, fertilizing, weeding, pest control, harvesting, livestock feeding, environmental monitoring, and facility cleaning. These capabilities allow them to fill numerous operational gaps that traditional machinery cannot, thereby substituting for human labor in large-scale and repetitive agricultural tasks [14,15,16].
In the long run, the sustainability and economic advantages of autonomous agricultural robots make them highly valuable for future agricultural development [17], and several empirical studies support this assessment. From a cost perspective, Sørensen et al. conducted an economic study of mechanical weeding robots and reported that if the robots removed weeds with 100% efficiency, labor could be reduced by 85% in organic sugar beet cultivation and 60% in organic carrot cultivation; at a weeding efficiency of 75%, labor costs were projected to fall by 50% [18]. Results from Pedersen et al. likewise indicated that using autonomous spraying robots could reduce operating costs by 24% [19]. Regarding operational efficiency, Hussain et al. estimated that a 20 W laser weeding robot requires 23.7 h (excluding charging) or 35.7 h (including charging) to treat a 1-acre plot, whereas manual labor typically takes several days [20]. Al-Amin et al. reported that autonomous agricultural swarm robots can operate for up to 22 h per day (including 2 h of maintenance), compared with only about 10 h for traditional machinery [21]. In terms of environmental benefits, autonomous agricultural robots not only reduce reliance on pesticides but also minimize soil compaction. For instance, the SprayBox crop protection robot in the United States, which integrates 50 nozzles and a complex computer system, can target weeds with millimeter-level precision, reducing chemical herbicide use by approximately 95% compared with traditional spraying technology [22]. Similarly, the SwarmBot autonomous fertilizing robot, developed by an Australian team, uses machine learning algorithms to acquire crop physiological data and applies fertilizer precisely as needed, thereby preventing the environmental pollution caused by excessive fertilizer application [23]. Additionally, the 28 kW small autonomous machines employed by Al-Amin et al. are considerably lighter than traditional 221 kW large machinery, which reduces soil compaction. Furthermore, combining strip intercropping with robotic operation can further safeguard the biodiversity and soil health of agricultural ecosystems [24].

At present, although numerous scholarly articles address the key technologies and application scenarios of specific categories of autonomous driving agricultural machinery, several limitations in research perspectives remain. These studies often fail to deeply examine the distinctive characteristics of autonomous driving agricultural machinery compared to other autonomous systems, lack comparative analyses of core technologies such as navigation control and machine operation control, and seldom address coping strategies for frequent actuator failures under complex agricultural conditions. To address these gaps, this paper conducts a systematic review of the evolution and practical application of key technologies in the domain of autonomous driving agricultural machinery and further investigates the unique challenges specific to this field. The structural framework of the study is presented in Table 1. The main contributions of this paper are as follows:

Table 1.

An overview of the review paper outline.

(1) A novel systematic framework for key technologies is introduced. Unlike previous reviews, which often treat technologies in isolation, an integrated framework is proposed that systematically categorizes and analyzes the core technologies of autonomous agricultural machinery.

(2) A pioneering comparative analysis of navigation control and implement control is presented. This review is among the first to explicitly compare and contrast the motion control mechanisms used for vehicle navigation and for implement operations. It highlights their distinct design requirements and associated technical challenges, which are often overlooked in existing literature that focuses primarily on platform mobility.

(3) An in-depth focus on actuator fault tolerance in agricultural contexts is provided. Moving beyond conventional reviews that concentrate on perception and navigation, this paper offers a dedicated investigation into fault detection and fault-tolerant control strategies for actuators. This addresses a critical gap in ensuring reliability and safety under the harsh and unpredictable conditions inherent to agricultural operations.

(4) A multi-dimensional review of applicability across diverse agricultural scenarios is presented. This study offers a comprehensive evaluation of the applicability and practical deployment of various autonomous agricultural machines across diverse environments, including farmlands, orchards, and livestock farming. Their performance is assessed from multiple dimensions, such as technical feasibility, operational efficiency, and environmental adaptability.

The rest of this paper is organized as follows: Section 2 presents the literature review methodology. Section 3 analyzes the key technologies for the autonomous driving of agricultural machinery. Section 4 discusses the current applications of autonomous driving in agricultural machinery. Section 5 describes existing challenges and future development trends. Finally, the paper concludes with a summary.

2. Literature Review and Analysis Methods

2.1. Overview of the Search Strategy

In this study, the literature was retrieved primarily from ScienceDirect, IEEE Xplore, and Web of Science. The search keywords included “agricultural automatic driving,” “agricultural unmanned vehicle,” “agricultural UAV,” “agricultural robot,” “orchard robot,” “livestock and poultry breeding robot,” “agricultural machinery positioning,” “agricultural machinery perception,” “agricultural machinery control,” and “agricultural actuator.” The inclusion criteria covered peer-reviewed journal articles, conference proceedings, and relevant book chapters published between 1 January 2011 and 30 May 2025. A small number of publications released before 2011 were also included to provide background information or clarify specific technical concepts. Duplicate entries retrieved from multiple databases were removed. The titles and abstracts of the remaining articles were then screened against the research scope, yielding 506 relevant documents. Each document was exported in TXT format and converted into a structured full-text record. A synonym substitution file and the literature dataset were imported into VOSviewer 1.6.20 for bibliometric analysis, and keyword co-occurrence maps were generated. To illustrate the temporal and geographic distribution of research on autonomous driving agricultural machinery more intuitively, the relevant data from VOSviewer were then imported into Origin 2025 and Scimago Graphics 1.0.51 for visualization.
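Removing duplicates retrieved from several databases is commonly done by matching records on DOI and, when a DOI is missing, on a normalized title. The sketch below illustrates that idea under stated assumptions: the record fields (`doi`, `title`, `source`), the normalization rule, and the example DOIs are hypothetical, and this is not necessarily the procedure used in this review.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lower-case a title, strip accents and punctuation, and collapse spaces."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first copy of each record, matching on DOI or normalized title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        keys = set()
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add("doi:" + doi)
        title = normalize_title(rec.get("title") or "")
        if title:
            keys.add("title:" + title)
        if keys & seen:
            continue  # same paper already retrieved from another database
        seen |= keys
        unique.append(rec)
    return unique


# Hypothetical exports; the DOIs are made-up placeholders.
records = [
    {"source": "Web of Science", "doi": "10.1000/ABC", "title": "Autonomous Tractor Navigation"},
    {"source": "IEEE Xplore", "doi": "10.1000/abc", "title": "Autonomous tractor navigation."},
    {"source": "ScienceDirect", "doi": "", "title": "Autonomous  Tractor Navigation"},
    {"source": "IEEE Xplore", "doi": "", "title": "Orchard robot localization"},
]
unique = deduplicate(records)
print(len(unique))  # 2
```

Registering both the DOI key and the title key for every kept record lets a later copy that lacks a DOI still be caught by its title.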

2.2. Literature Classification and Focus Analysis

Table 2 shows the distribution of agricultural machinery reference types cited in this review. Because a single reference may address multiple key technologies, it can be classified into several categories simultaneously. “Perception and Vision” is the most concentrated research area, accounting for 35.3% of references. This concentration is driven primarily by advances in deep learning, which are being applied to the core challenges of target recognition and scene understanding in unstructured agricultural environments. Research on “Actuators and Fault-Tolerant Control” also accounts for a relatively high proportion (24.4%), indicating growing academic attention to converting intelligent decisions into precise and reliable physical actions. However, the sub-direction of “Fault Diagnosis and Fault-Tolerant Control” remains at a nascent stage and represents a critical research avenue for achieving system robustness in the future. Regarding application scenarios, the distribution of research closely mirrors agricultural production structures. The “Farmland” scenario accounts for over half of the research (50.4%); its large scale and regular layout allow technologies to be applied throughout the entire agricultural production cycle. In contrast, research in the “Fruit Orchard and Greenhouse” scenario (30.7%) focuses predominantly on the perception and operational challenges posed by high-value crops in complex environments, which entail higher technical requirements. “Livestock Breeding” (14.4%), an emerging scenario for automation, has a smaller research base but shows robust growth potential, signaling a future expansion of research scope. Finally, regarding research maturity, a significant “innovation–application” gap exists. Although over half (55.7%) of the studies have reached the prototype experimental verification stage, demonstrating technical feasibility, only a small proportion (7.7%) have been commercialized. This indicates that most technical solutions still face substantial engineering challenges related to cost, reliability, applicability, and system integration. Bridging the transition from “usable” to “user-friendly” and ultimately to market adoption remains an urgent priority for the entire field.
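Because one reference can carry several category labels, each share is computed against the total number of references, so the shares need not sum to 100%. The minimal sketch below shows that arithmetic; the tags for the four references are invented for illustration and are not the Table 2 data.

```python
from collections import Counter


def category_shares(labels_per_reference: list[set[str]]) -> dict[str, float]:
    """Percentage of references carrying each label; one reference may carry several."""
    total = len(labels_per_reference)
    counts = Counter(label for labels in labels_per_reference for label in labels)
    return {label: round(100.0 * n / total, 1) for label, n in counts.items()}


# Hypothetical tags for four references (illustrative only, not the review's data).
refs = [
    {"Perception and Vision", "Farmland"},
    {"Perception and Vision", "Actuators and Fault-Tolerant Control", "Farmland"},
    {"Positioning", "Fruit Orchard and Greenhouse"},
    {"Livestock Breeding"},
]
shares = category_shares(refs)
print(shares["Perception and Vision"], sum(shares.values()))  # 50.0 200.0
```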

Table 2.

Literature classification statistics.

2.3. Literature Selection Bias

It should be noted that the literature search methodology employed in this study is subject to potential selection biases, which may affect the comprehensiveness and objectivity of its conclusions. First, at the database level, this study did not search freely accessible search engines such as Google Scholar or multidisciplinary databases such as Scopus and SpringerLink, which may have led to the omission of relevant cross-disciplinary research. Second, the search keywords did not comprehensively cover synonyms and term combinations, which limited the search scope and may have missed research on specific technical directions. Third, regarding time range and literature type, the core literature consists predominantly of publications from 2011 onward, so coverage of foundational agricultural robot technologies from 2000 to 2010 is limited, which hinders a complete tracing of technological evolution. Moreover, while prioritizing journal and conference papers, this study did not include patents, industry reports, or promotional reports from commercial or research institutions. As a result, the analysis of the link between academic research and industrial implementation is incomplete, and evidence for evaluating commercialization potential and global regulatory progress is limited. Finally, the search did not explicitly include non-English studies, although research from non-English-speaking countries such as Germany, Japan, and China offers significant reference value in areas including agricultural robot safety standards, orchard picking technology, and BeiDou navigation applications. These omissions may reduce the comprehensiveness and objectivity of the conclusions, particularly regarding the cross-disciplinary integration of results, the analysis of technological evolution, and the assessment of commercialization potential. Future research should therefore optimize the search strategy by expanding database coverage and integrating diverse literature types.

2.4. Year of Publication

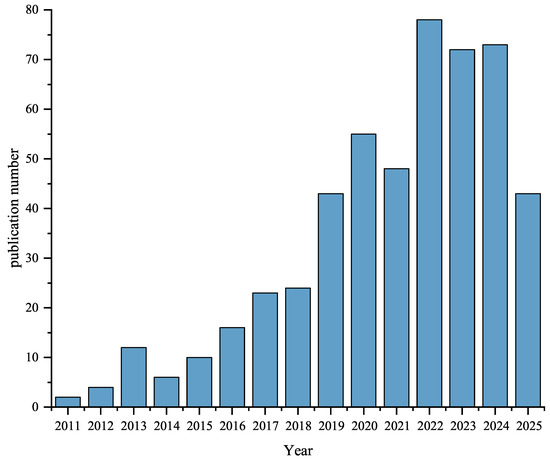

Figure 2 shows the annual number of publications related to autonomous driving in agricultural machinery over the past fifteen years. As research on precision agriculture and smart farming has expanded globally, research on autonomous farming machinery has intensified. Driven by rising global food demand and an aging agricultural workforce, automated agricultural machinery with autonomous navigation and precision operation capabilities has become an increasingly feasible, and widely anticipated, means of replacing human labor in agricultural tasks.

Figure 2.

Statistics on the amount of relevant research corresponding to each year from 1 January 2011 to 31 May 2025.

2.5. Country

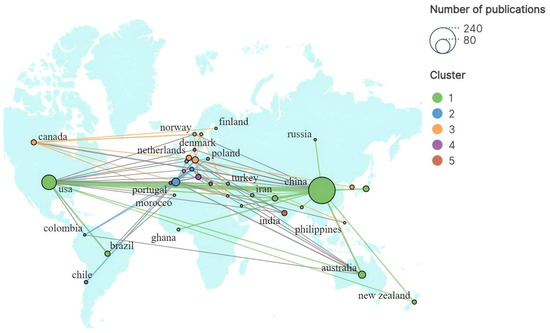

Figure 3 illustrates the global distribution of contributing countries and their collaborative networks in the field of autonomous driving agricultural machinery research. The figure reveals that scholars from China and the United States rank highest in publication output, suggesting strong market demand and substantial research investments in precision agriculture and intelligent production in both countries. Additionally, Australia, Japan, India, Brazil, and several European nations have also made notable contributions. These countries either possess large populations with high food demand or exhibit advanced agricultural systems with a strong emphasis on intelligent production, thereby promoting the development of autonomous driving agricultural machinery.

Figure 3.

Co-occurrence map of author countries.

2.6. Keywords

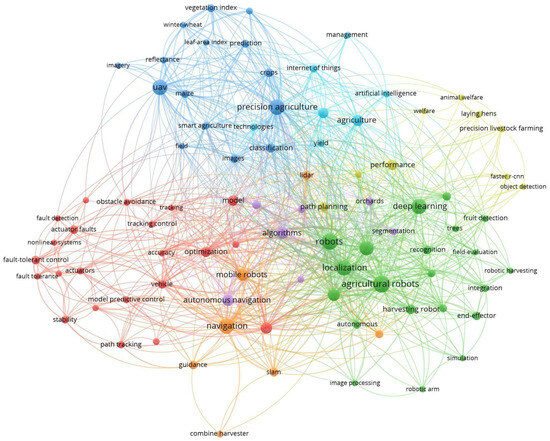

Figure 4 shows the keyword co-occurrence network across all studies. VOSviewer was used to analyze the keywords extracted from the 506 selected papers. To reduce redundancy, keywords with similar meanings were consolidated, such as “robotics” and “robots”, “smart farming” and “smart agriculture”, and “unmanned aerial vehicle” and “uav”. The keyword frequency threshold was set to 5, and generic terms such as “design” and “system” were excluded, yielding a final set of 88 relevant keywords. In the visualization, circle size represents keyword frequency, with larger circles indicating higher frequencies; colors denote distinct clusters, and links between circles indicate co-occurrence relationships. The frequent appearance of terms such as “robots”, “machine vision”, “navigation”, and “deep learning” suggests that the functions of autonomous agricultural machinery are diversifying and that both navigation performance and the level of intelligence are continuously improving.
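The consolidation, exclusion, and threshold steps described above can be sketched as follows. This is an illustration of the counting logic only, not VOSviewer's implementation; the `SYNONYMS` thesaurus, the `EXCLUDED` stop list, and the sample papers are hypothetical, and each paper contributes at most one count per keyword and per keyword pair (roughly analogous to a full-counting scheme).

```python
from collections import Counter
from itertools import combinations

# Hypothetical thesaurus and stop list, modeled on the consolidation rules above.
SYNONYMS = {"robotics": "robots", "smart agriculture": "smart farming", "uav": "unmanned aerial vehicle"}
EXCLUDED = {"design", "system"}


def keyword_network(papers: list[list[str]], min_freq: int = 5):
    """Return (frequency of retained keywords, co-occurrence count per keyword pair).

    Each paper counts once per distinct keyword and once per distinct pair.
    """
    cleaned = []
    for keywords in papers:
        terms = {SYNONYMS.get(k.strip().lower(), k.strip().lower()) for k in keywords}
        cleaned.append(terms - EXCLUDED)
    freq = Counter(term for terms in cleaned for term in terms)
    kept = {term for term, n in freq.items() if n >= min_freq}
    links = Counter()
    for terms in cleaned:
        for pair in combinations(sorted(terms & kept), 2):
            links[pair] += 1
    return {term: freq[term] for term in kept}, links


papers = [["Robots", "navigation"], ["robotics", "Navigation", "design"], ["UAV", "robots"]]
freq, links = keyword_network(papers, min_freq=2)
print(freq["robots"], links[("navigation", "robots")])  # 3 2
```

Circle sizes in a map like Figure 4 correspond to `freq`, and link weights to `links`; clustering and layout are separate steps not shown here.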

Figure 4.

Keywords co-occurrence network.

3. Key Technologies

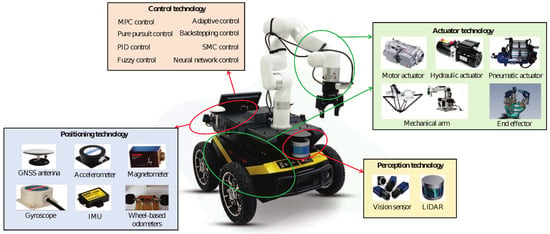

The autonomous driving of agricultural machinery is a complex systems engineering problem involving four key technologies: positioning, perception, control, and actuation, as shown in Figure 5. These technologies operate collaboratively to enable the autonomous driving and operation of agricultural machinery and equipment. Specifically: (1) positioning technology is the cornerstone of autonomous driving, providing the machinery with accurate position, velocity, and time information; (2) perception technology gives the machinery “vision” and “touch”, helping it capture information about its surroundings and about the target objects of operation; (3) motion planning and control technology acts as the “brain”, planning motion paths and formulating control strategies; and (4) actuator technology, the “muscle” of the machinery, drives the movement of the mechanical equipment and completes specific operational tasks.

Figure 5.

Components of an autonomous agricultural machine.

3.1. Positioning Technology

High-precision positioning is a prerequisite for the autonomous operation of agricultural machinery. This section systematically reviews positioning technologies applied in agricultural environments. Based on their technical principles and reference systems, these methods can be broadly divided into three types: absolute positioning, relative positioning, and fusion positioning.

3.1.1. Absolute Positioning

Absolute positioning determines the coordinates of autonomous agricultural machinery in a global coordinate system using a Global Navigation Satellite System (GNSS), such as the Global Positioning System (GPS) or the BeiDou Navigation Satellite System (BDS), or using beacons [25]. A standalone GNSS can provide positioning accuracy of 2–4 m, and some studies have successfully applied it to the autonomous driving of agricultural machinery [26,27]. Using the differential principle of real-time kinematic (RTK) technology, a GNSS can further achieve centimeter-level accuracy. RTK-GNSS relies on wireless communication between a reference receiver (fixed base station) and a mobile receiver (rover) installed on the autonomous machine, which are usually no more than 10 km apart [28]. Specifically, the base station transmits the carrier phase of the GNSS signals it receives to the rover, which compares it with the carrier phase of the satellite signals it observes itself to compute its spatial coordinates. Centimeter-level accuracy generally requires the base station and the rover to receive signals from at least five common satellites simultaneously [29,30]. Chou et al. [31] developed an autonomous agricultural vehicle based on an RTK-GPS module, mounting the rover antenna on the front frame of a multi-functional all-terrain vehicle and the base station antenna on top of a tripod. In the absence of signal interruptions, navigation position errors remained within 20 mm. Xiong et al. [32] designed an autonomous orchard spraying robot based on RTK-BDS. Field experiments showed that the positioning offset error increased with driving speed: the average offset error was about 0.03 m, while at a driving speed of 2 km/h, the maximum offset error reached about 0.13 m. Perez-Ruiz et al. geospatially mapped crop plants using a single RTK-GPS system installed on a tractor; the resulting crop map had an along-track error of 2.67 cm, and 95% of the plants lay within a 5.58 cm radius of their mapped positions [33]. Given the high accuracy of RTK-GNSS, some studies suggest using multiple receivers to determine the heading angle of autonomous agricultural machinery as well [34,35,36]. However, integrating RTK-GNSS into autonomous agricultural machinery is costly, especially when multiple RTK-GNSS receivers are used [29,37]. Promoting wider adoption therefore requires low-cost, compact RTK-GNSS modules. Valente et al. [38] compared two open-source, low-cost, single-frequency RTK-GNSS systems, Emlid Reach RTK (ER-RTK) and NavSpark RTK (NS-RTK). Across all tests, ER-RTK and NS-RTK achieved fixed solutions 94.0% and 71.5% of the time, respectively, and ER-RTK was also more accurate. Although GNSS offers the significant advantage of continuous, around-the-clock availability, its performance can be degraded by driving speed, radio frequency interference, obstructions, atmospheric conditions, and satellite geometry, and it is unsuitable for indoor use or for orchards with dense foliage [32,39,40,41].
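Two quantities mentioned above, the share of epochs with a fixed RTK solution and the heading obtained from two receivers, can be sketched as follows. The sketch assumes the receivers output NMEA 0183 GGA sentences, whose seventh comma-separated field is the fix-quality indicator (4 = RTK fixed, 5 = RTK float), and that antenna positions are already expressed as local east/north coordinates in metres. The sample sentences are made up, their checksums are placeholders, and the function names are illustrative rather than taken from the cited studies.

```python
import math


def rtk_fix_rate(nmea_lines: list[str]) -> float:
    """Percentage of GGA epochs whose fix-quality field is 4 (RTK fixed).

    Quality 5 (RTK float) and lower values count as non-fixed epochs.
    Checksums are not validated in this sketch.
    """
    qualities = []
    for line in nmea_lines:
        fields = line.strip().split(",")
        if len(fields) > 6 and fields[0].endswith("GGA") and fields[6].isdigit():
            qualities.append(int(fields[6]))
    if not qualities:
        return 0.0
    return 100.0 * sum(q == 4 for q in qualities) / len(qualities)


def dual_antenna_heading(rear_en: tuple[float, float], front_en: tuple[float, float]) -> float:
    """Heading in degrees clockwise from north from two antenna positions.

    Positions are (east, north) in metres in a local tangent plane, and the
    antenna baseline is assumed to be parallel to the vehicle's centreline.
    """
    d_east = front_en[0] - rear_en[0]
    d_north = front_en[1] - rear_en[1]
    return math.degrees(math.atan2(d_east, d_north)) % 360.0


log = [
    "$GNGGA,120000.00,3000.0000,N,12000.0000,E,4,12,0.8,50.0,M,0.0,M,1.0,0001*00",
    "$GNGGA,120001.00,3000.0000,N,12000.0000,E,4,12,0.8,50.0,M,0.0,M,1.0,0001*00",
    "$GNGGA,120002.00,3000.0000,N,12000.0000,E,5,11,0.9,50.0,M,0.0,M,1.0,0001*00",
]
print(round(rtk_fix_rate(log), 1))  # 66.7
heading = dual_antenna_heading((0.0, 0.0), (1.0, 1.0))  # front antenna to the north-east
```

A longer antenna baseline reduces the heading error caused by a given position error, which is one reason multi-receiver setups favor wide antenna spacing.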

To achieve absolute positioning indoors, active and passive beacon technologies have been introduced. Active beacon systems, such as ultra-wideband (UWB) technology, rely on fixed beacon nodes that actively transmit signals. Passive beacons, which are typically placed on the floor, on the ceiling, or around facilities, require the autonomous machine to obtain beacon positions through active sensing (e.g., vision, LiDAR, RFID, or magnetic sensors) [42,43,44]. Active beacon technology offers high positioning accuracy and strong real-time performance, but it incurs high installation and maintenance costs and is constrained by the environmental structure and obstacles [45]. In contrast, passive beacon technology has a lower initial installation cost and suits structured, smooth-surfaced livestock and poultry houses; however, it still requires regular maintenance to prevent corrosion, wear, and dust accumulation [42]. Consequently, existing beacon technologies still carry relatively high maintenance costs and are generally economical only for large farms.
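With active beacons such as UWB anchors, a common approach is to measure the range to each fixed anchor and solve for the position by least-squares multilateration. The sketch below shows the linearized 2D form; the anchor layout, the noise-free ranges, and the function name are hypothetical, and real systems usually add outlier rejection and filtering.

```python
import numpy as np


def multilaterate(anchors, ranges):
    """Least-squares 2D position from measured ranges to fixed beacons.

    Needs at least three beacons that are not collinear.
    """
    anchors = np.asarray(anchors, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    # Subtracting the first range equation (x - xi)^2 + (y - yi)^2 = ri^2 from
    # the others cancels the quadratic terms and leaves A @ [x, y] = b.
    A = 2.0 * (anchors[1:] - anchors[0])
    b = (ranges[0] ** 2 - ranges[1:] ** 2
         + np.sum(anchors[1:] ** 2, axis=1) - np.sum(anchors[0] ** 2))
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position


# Hypothetical 10 m x 10 m barn with a UWB anchor in each corner.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
true_pos = np.array([3.0, 4.0])
ranges = [np.hypot(*(true_pos - np.array(a))) for a in anchors]
estimate = multilaterate(anchors, ranges)  # close to (3, 4)
```

Using more than three anchors over-determines the system, so `lstsq` averages out part of the ranging noise.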

3.1.2. Relative Positioning

Relative positioning usually relies on machine vision sensors, light detection and ranging (LiDAR), inertial measurement units (IMUs), gyroscopes, accelerometers, magnetometers, and wheel odometers to calculate heading and position. The advantages and disadvantages of these technologies are summarized in Table 3 and Table 4. Unlike absolute positioning, relative positioning can also estimate the attitude (roll, pitch, and yaw) of the machine, and its accuracy does not degrade when satellite signals are lost. Machine vision sensors provide a passive navigation and positioning method: cameras identify reference objects such as landmarks and crops and capture continuous image sequences of them, from which the relative position and orientation between the machine and the reference objects are measured [46]. The process of estimating the machine’s motion from consecutive images using feature matching and tracking is called visual odometry (VO). Combining image processing methods (e.g., the Hough transform and Census transform) [47,48,49], filtering algorithms (e.g., Kalman filtering) [50], object detection algorithms (e.g., YOLO) [51,52], semantic segmentation algorithms [53,54], and control algorithms (e.g., PID and fuzzy control) [49] can further improve recognition and positioning accuracy [55,56].
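As an example of the Hough transform mentioned above, the sketch below finds the dominant crop-row line from plant centre points. It assumes that plant detection has already produced (x, y) centres in a metric, bird's-eye frame; the resolution parameters, function name, and sample points are hypothetical, and the cited works may apply the transform to image pixels instead.

```python
import numpy as np


def dominant_line(points, theta_steps=180, rho_res=0.05):
    """Hough transform over 2D points; returns (theta, rho) of the best-supported line.

    The line is x*cos(theta) + y*sin(theta) = rho, with theta in [0, pi).
    """
    pts = np.asarray(points, dtype=float)
    thetas = np.linspace(0.0, np.pi, theta_steps, endpoint=False)
    # Every point votes for all (theta, rho) cells it could lie on: shape (n, theta_steps).
    rhos = np.outer(pts[:, 0], np.cos(thetas)) + np.outer(pts[:, 1], np.sin(thetas))
    rho_max = np.abs(rhos).max() + rho_res
    n_bins = int(np.ceil(2.0 * rho_max / rho_res))
    rho_idx = np.floor((rhos + rho_max) / rho_res).astype(int)
    theta_idx = np.broadcast_to(np.arange(theta_steps), rho_idx.shape)
    votes = np.zeros((theta_steps, n_bins), dtype=int)
    np.add.at(votes, (theta_idx, rho_idx), 1)  # accumulate, counting repeated cells
    t, r = np.unravel_index(np.argmax(votes), votes.shape)
    return thetas[t], (r + 0.5) * rho_res - rho_max  # centre of the winning rho bin


row = [(2.0, float(y)) for y in range(10)]   # plant centres along one row (m)
outliers = [(5.0, 1.3), (0.7, 8.2)]          # e.g. weeds or false detections
theta, rho = dominant_line(row + outliers)   # row at x = 2 m, i.e. theta = 0
```

Voting makes the estimate robust to a few outliers, which is why Hough-based methods remain popular for crop-row guidance.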

Table 3.

Advantages of different positioning sensors.

Table 4.

Disadvantages of different positioning sensors.

LiDAR sensors include 2D and 3D LiDAR [66,67]. A 2D LiDAR provides range data in only a single scanning plane, so its view can be blocked by weeds or leaves and detailed 3D information is lost [68]. Researchers therefore recommend sensors with a wider vertical field of view, such as 3D LiDAR or rotating 2D LiDAR, to capture richer information [69,70]. Analogous to visual odometry, estimating the machine’s motion by registering successive LiDAR point clouds is called LiDAR odometry (LO). LO and VO typically serve as front-end modules of simultaneous localization and mapping (SLAM), providing relative motion observations and building local maps. The SLAM back end then optimizes the machine’s pose trajectory and map using optimization algorithms or filters and uses loop closure to correct accumulated drift. Finally, the SLAM algorithm builds a real-time environmental map from the optimized results [71]. Typical visual SLAM algorithms include ORB-SLAM [72] and LSD-SLAM [73], while common LiDAR SLAM algorithms include LeGO-LOAM [74] and Cartographer [75]. Table 5 presents the performance and characteristics of these algorithms in structured and unstructured agricultural environments.
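The point cloud registration at the heart of LiDAR odometry can be illustrated with basic point-to-point ICP in 2D: match each point to its nearest neighbour, solve for the best rigid transform with the SVD (Kabsch) method, and repeat. This is a minimal sketch under simplifying assumptions (small motion between scans, brute-force matching, synthetic L-shaped scan); the SLAM systems named above use more elaborate feature- or submap-based matching.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src[i] + t ~ dst[i] (Kabsch / SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # reject a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, dst_c - R @ src_c


def icp_2d(prev_scan, curr_scan, iterations=30):
    """Point-to-point ICP: R, t mapping the current scan onto the previous one.

    (R, t) is the sensor's motion between scans, expressed in the previous frame.
    """
    src = np.asarray(curr_scan, dtype=float)
    dst = np.asarray(prev_scan, dtype=float)
    R_total, t_total = np.eye(2), np.zeros(2)
    for _ in range(iterations):
        moved = src @ R_total.T + t_total
        # Brute-force nearest neighbours; a k-d tree would be used for real scans.
        dists = np.linalg.norm(moved[:, None, :] - dst[None, :, :], axis=2)
        matched = dst[np.argmin(dists, axis=1)]
        R, t = best_rigid_transform(moved, matched)
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total


# Hypothetical L-shaped wall scan (m) and a small known motion between scans.
xs = np.arange(0.0, 5.01, 0.25)
ys = np.arange(0.25, 3.01, 0.25)
prev = np.vstack([np.c_[xs, np.zeros_like(xs)], np.c_[np.zeros_like(ys), ys]])
angle = np.deg2rad(1.0)
R_true = np.array([[np.cos(angle), -np.sin(angle)], [np.sin(angle), np.cos(angle)]])
t_true = np.array([0.05, -0.03])
curr = (prev - t_true) @ R_true  # so that prev = R_true @ curr + t_true
R_est, t_est = icp_2d(prev, curr)
```

Chaining the per-scan (R, t) estimates yields the LO trajectory, whose accumulated drift is what the SLAM back end and loop closure are meant to correct.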

Table 5.

Performance of the SLAM algorithm in structured and unstructured agricultural environments.
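The point cloud registration at the heart of LO can be illustrated with a minimal 2D point-to-point ICP (iterative closest point) sketch. This is not the pipeline of LeGO-LOAM, Cartographer, or any cited system. It assumes clean 2D scans and brute-force nearest-neighbour matching, whereas real implementations use feature points and k-d trees. All function names are hypothetical.

```python
import math


def best_rigid_transform_2d(src, dst):
    """Least-squares rotation + translation mapping paired points src[i] -> dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate the dot and cross terms of the centred point pairs.
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)  # rotation that best aligns the pairs
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply_transform(theta, tx, ty, pts):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def icp_2d(scan, ref, iterations=20):
    """Point-to-point ICP: estimate the motion (theta, tx, ty) aligning scan to ref."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = apply_transform(theta, tx, ty, scan)
        # Brute-force nearest-neighbour correspondences (a k-d tree in practice).
        pairs = [min(ref, key=lambda r, m=m: (r[0] - m[0]) ** 2 + (r[1] - m[1]) ** 2)
                 for m in moved]
        d_theta, d_tx, d_ty = best_rigid_transform_2d(moved, pairs)
        # Compose the increment with the running estimate: t <- R_d t + t_d.
        c, s = math.cos(d_theta), math.sin(d_theta)
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
    return theta, tx, ty
```

Each iteration re-matches points and solves the alignment in closed form. The accumulated transform is the relative motion that an LO front end would pass to the SLAM back end.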

Wheel odometers are commonly used in autonomous agricultural vehicles; they infer changes in the vehicle’s position and heading from wheel rotation. Because wheel odometry is sensitive to slip, the vehicle’s weight distribution should be uniform and the wheels should remain in full, even contact with the ground during autonomous positioning, to minimize skidding [62]. Accelerometers sense acceleration by measuring the inertial force acting on a proof mass, while gyroscopes measure angular velocity (classically by exploiting the conservation of angular momentum), from which the device’s orientation can be derived. An IMU combines accelerometers and gyroscopes to measure the device’s motion and attitude about three axes. Researchers usually combine it with the Global Positioning System (GPS), magnetometers, or machine vision, or apply sensor calibration procedures, to improve heading estimation accuracy [59,76]. Magnetometers are commonly used to calibrate heading because they can determine an object’s absolute heading by measuring the strength and direction of the Earth’s magnetic field [61].
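The wheel odometry and magnetometer heading described above can be sketched as follows. The sketch assumes a differential-drive (skid-steer) vehicle with a known track width, wheel encoders, and a level, already calibrated magnetometer with x pointing forward and y pointing left. Sign conventions differ between devices, and all names are hypothetical.

```python
import math


def wheel_distance(ticks, ticks_per_rev, wheel_radius):
    """Distance rolled by one wheel from encoder ticks (assumes no slip)."""
    return 2.0 * math.pi * wheel_radius * ticks / ticks_per_rev


def odometry_step(x, y, theta, d_left, d_right, track_width):
    """Dead-reckoning pose update for a differential-drive (skid-steer) vehicle."""
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / track_width
    # Midpoint integration: advance along the average heading over the step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Wrap the heading to (-pi, pi].
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta


def magnetometer_yaw(mx, my):
    """Yaw counter-clockwise from magnetic north for a level, calibrated sensor
    with x forward and y left (conventions differ between devices)."""
    return math.atan2(-my, mx)
```

Wheel slip enters this update directly as position and heading error. That is why the text stresses even wheel contact, and why an absolute heading source such as a magnetometer is used to bound the drift.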

3.1.3. Fusion Positioning

In real agricultural scenarios, positioning must also cope with complex environmental interference such as occlusion, terrain undulation, and lighting fluctuations. Under these conditions, a single sensor cannot provide accurate position and attitude information for autonomous agricultural machinery. Integrating multiple information sources (sensor data) can effectively address this problem.

To address GNSS signal obstruction by fruit tree canopies, Fei et al. proposed an error-state Kalman filter (ESKF) fusion strategy. It uses non-holonomic constraints (NHCs) to limit the IMU’s lateral and vertical velocity errors and corrects IMU drift through periodic and non-periodic zero-velocity updates (ZUPTs). The average positioning error was kept within 0.15 m, with a maximum error of approximately 0.3 m, and the system remained stable when the satellite signal was briefly blocked [77]. Leanza et al. used adaptive Kalman filtering and linear regression to correct raw IMU/GPS data. In a sloping vineyard with loose soil and severe vegetation occlusion, they achieved a full-path relative error of 1.9% and a straight-segment error of 0.7%, an improvement of more than 85% over the uncorrected IMU/GPS combination [61].
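The predict/update structure behind this kind of IMU/GNSS fusion can be illustrated with a linear Kalman filter along a single axis. This is deliberately simpler than the ESKF of Fei et al. The state is only position and velocity, IMU acceleration drives the prediction, GNSS supplies position updates, and a ZUPT is modelled as a direct measurement of zero velocity. The class name and noise values are assumptions. In a full 3D filter, NHCs would be added as further pseudo-measurements of zero lateral and vertical velocity, in the same way as the ZUPT.

```python
class AxisKalmanFilter:
    """Linear KF along one axis: state [position (m), velocity (m/s)]."""

    def __init__(self, accel_noise_var=0.5):
        self.x = [0.0, 0.0]
        self.P = [[1.0, 0.0], [0.0, 1.0]]
        self.q = accel_noise_var  # assumed IMU acceleration noise variance

    def predict(self, accel, dt):
        """Propagate with the IMU acceleration; uncertainty grows during GNSS outages."""
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        P = self.P
        # P <- F P F^T + G q G^T with F = [[1, dt], [0, 1]], G = [dt^2/2, dt]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[p00 + self.q * g0 * g0, p01 + self.q * g0 * g1],
                  [p10 + self.q * g1 * g0, P[1][1] + self.q * g1 * g1]]

    def _update(self, idx, z, r):
        """Scalar update for a direct measurement of state component idx."""
        P = self.P
        s = P[idx][idx] + r                  # innovation variance
        k = [P[0][idx] / s, P[1][idx] / s]   # Kalman gain
        innovation = z - self.x[idx]
        self.x = [self.x[0] + k[0] * innovation, self.x[1] + k[1] * innovation]
        self.P = [[P[i][j] - k[i] * P[idx][j] for j in range(2)] for i in range(2)]

    def gnss_update(self, position, r=0.02 ** 2):
        self._update(0, position, r)

    def zero_velocity_update(self, r=1e-4):
        """ZUPT: when the machine is known to be stationary, observe v = 0."""
        self._update(1, 0.0, r)
```

During a canopy-induced GNSS outage, only `predict` runs and the position variance grows. Each ZUPT then pins the velocity and slows that growth, which is the mechanism the cited strategy exploits.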

To overcome the performance limitations of visual sensors under harsh lighting, Fu et al. proposed a visual–inertial fusion scheme. Thermal images were enhanced using adaptive bilateral filtering and Sobel gradient enhancement, and Sage–Husa adaptive filtering was integrated to mitigate non-Gaussian noise. Under normal lighting, low lighting, and low-light jitter, the positioning error was reduced by 58.69%, 57.24%, and 60.23%, respectively, relative to the traditional IEKF algorithm. In real-world complex lighting environments, the root mean square error (RMSE) of the absolute trajectory error (ATE) remained within 0.2826 m [78].

To address LiDAR point cloud occlusion in dense foliage, Qu et al. designed a LiDAR SLAM point cloud mapping scheme and used the GNU Image Manipulation Program (GIMP) to remove noise points from the map. The average positioning error in outdoor tests was 0.205 m [79]. Separately, Hiremath et al. constructed a probabilistic sensor model by combining LiDAR with particle filtering. Even with curved crop rows and uneven plant gaps, the robot’s heading error stayed within 2.4° and its lateral deviation within 0.04 m, improving its adaptability to semi-structured farmland [80]. Terrain undulation can also exacerbate LiDAR positioning errors. In indoor–outdoor comparison experiments by Qu et al., the average lateral error over 15 m of indoor navigation was 0.1717 m, increasing to 0.237 m over the same distance on undulating outdoor terrain. Complementary filtering that integrates an IMU and wheel speed odometry is regarded as one way to address this issue [79].
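A heading complementary filter of the kind mentioned above can be sketched in a few lines. This is a generic formulation, not the filter used by Qu et al. It assumes a gyroscope yaw rate and an odometry-derived heading as inputs, and the blending weight `alpha` is an illustrative value rather than a tuned one.

```python
import math


def wrap_angle(a):
    return math.atan2(math.sin(a), math.cos(a))


def complementary_heading(prev_heading, gyro_rate, odo_heading, dt, alpha=0.98):
    """One step of a heading complementary filter.

    The integrated gyro rate is trusted in the short term (smooth, but it drifts),
    and the odometry heading corrects it slowly (weight 1 - alpha).
    """
    gyro_heading = prev_heading + gyro_rate * dt
    correction = wrap_angle(odo_heading - gyro_heading)  # handle the +/-pi seam
    return wrap_angle(gyro_heading + (1.0 - alpha) * correction)
```

With a constant gyro bias b, pure integration drifts without limit, whereas this filter settles at a bounded offset of alpha·b·dt/(1 − alpha). For b = 0.01 rad/s, dt = 0.1 s, and alpha = 0.98, that offset is 0.049 rad.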

Most of the above studies use filtering (such as Kalman or particle filtering) for multi-sensor fusion. Another common fusion approach is optimization (such as pose graphs and factor graphs) [81,82]. Filtering methods recursively update state estimates and covariance matrices in real time, enabling efficient real-time positioning [83]. Optimization methods instead minimize positioning errors jointly over a set of measurements to achieve a globally optimal estimate [84]. Table 6 lists applications of both approaches in autonomous agricultural machinery, and Table 7 and Table 8 present the performance of the filtering and optimization algorithms mentioned in Table 6 in different environments.

Table 6.

Examples of integrated positioning for autonomous driving of agricultural machinery.

Table 7.

Performance of filtering algorithms in structured and unstructured environments.

Table 8.

Performance of optimization algorithms in structured and unstructured environments.
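The difference between recursive filtering and batch optimization can be seen in a toy one-dimensional pose graph. The example assumes four poses, three odometry edges, and one high-weight loop closure. It minimizes the weighted sum of squared residuals over all poses at once by solving the normal equations. Real systems use dedicated solvers on 2D/3D poses, and the names here are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph_1d(n_poses, edges, anchor_weight=1e6):
    """Batch least squares over all poses at once.

    edges: (i, j, z, w) meaning 'x_j - x_i was measured as z with weight w'.
    Pose 0 is anchored at 0 to remove the free translation (gauge).
    """
    H = [[0.0] * n_poses for _ in range(n_poses)]
    g = [0.0] * n_poses
    H[0][0] += anchor_weight
    for i, j, z, w in edges:
        # Residual x_j - x_i - z has Jacobian -1 w.r.t. x_i and +1 w.r.t. x_j.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        g[i] -= w * z
        g[j] += w * z
    return solve_linear(H, g)


# Odometry says 1.1 m out, 1.1 m out, 2.0 m back (dead reckoning ends at 0.2 m);
# a loop closure recognises the start, so x3 - x0 = 0 with a high weight.
edges = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -2.0, 1.0), (0, 3, 0.0, 100.0)]
poses = optimize_pose_graph_1d(4, edges)
```

A filter that integrates the odometry alone would end at 0.2 m. The batch solution instead spreads the 0.2 m loop error over all three odometry edges, so the final pose ends close to 0. This mirrors the loop-closure correction performed by graph-based SLAM back ends.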

Multi-sensor fusion is also widely used in SLAM, where multi-sensor algorithms show higher robustness and positioning accuracy than single-sensor SLAM. Peng et al. tested three SLAM algorithms in a caged chicken house. The algorithm using only 2D LiDAR deviated so much that map construction failed. The particle-filter-based algorithm fusing 2D LiDAR, wheel odometer, and IMU data had a mapping deviation of 15°, while the graph-optimization-based algorithm fusing the same three sensors had a deviation of only 3° [96]. Jiang et al. fused IMU and 3D LiDAR data to build an autonomous navigation scheme for orchard spraying robots based on multi-sensor devices and SLAM. The robot’s average lateral navigation error and average heading deviation did not exceed 16 cm and 8°, respectively [97]. Zhu et al. used binocular cameras to extract crop boundary lines as navigation references and integrated visual SLAM with inertial guidance information to achieve real-time positioning of unmanned harvesters [98]. To position unmanned agricultural vehicles without GNSS assistance, Zhao et al. proposed an efficient, adaptive LiDAR–visual–inertial odometry SLAM system. It maintained accurate and stable positioning over long periods in several typical scenarios (greenhouses, farmland, and engineering buildings) [99]. Because SLAM algorithms construct real-time environmental maps from sensor measurements, map matching can align the SLAM-built map with a pre-constructed high-precision map to achieve more accurate positioning.
Map matching is also a fusion positioning method, combining the relative and absolute positioning information produced by sensors. It is usually applicable to indoor environments with relatively fixed internal structures, such as livestock and poultry houses. Joffe et al. [100] used ultrasonic beacons to obtain absolute position information and computed the robot’s motion state with wheel odometers and IMUs. They used this to develop an autonomous egg-picking robot with a positioning accuracy within 2 cm.

3.2. Perception Technology

Perception technology is crucial for autonomous agricultural machinery, endowing it with the ability to “see” and “touch” its surroundings. It enables the system to acquire vital information about the environment and target operational objects, thereby facilitating precise navigation and operation. This section elaborates on key environmental perception technologies, which are essential for tasks such as object positioning and identification, status monitoring, and environmental assessment.

Various perception devices have been developed for autonomous agricultural machinery to capture data such as light, humidity, temperature, odor, gas concentration, chemical concentration, images, and tactile and force feedback [101,102,103]. Images are the main data type because they carry rich information, are easy to acquire, and can be collected without contact. They are widely used in the navigation and operation of autonomous agricultural machinery, so this section focuses on common image perception devices (imaging technologies). Imaging technologies can be classified by different criteria. By spectral resolution (spectral width) or number of bands, they can be divided into visible-spectrum imaging (VSI), multispectral imaging (MSI), and hyperspectral imaging (HSI) [104]. By imaging dimension, they can be further divided into 2D cameras and 3D (depth) cameras [105]. Table 9 and Table 10 list the characteristics, advantages, and disadvantages of these imaging technologies, and Figure 6 presents several commonly used perception technologies. Among them, visible-spectrum imaging has low cost, is easy to deploy, and supports plug-and-play 3D perception, making it suitable for crop recognition, localization, and simple modeling. Although it is highly susceptible to lighting conditions, its overall cost-effectiveness is high, so it is well suited to small- and medium-sized agricultural scenarios. MSI equipment is moderately priced and acquires spectral information in bands including the red edge and near infrared (NIR).
MSI supports vegetation index analysis and crop health monitoring. However, its data processing requirements limit it mainly to farmers with adequate technical expertise, sufficient funding, and a need for high-efficiency precision management. By contrast, HSI delivers highly detailed spectral information, making it suitable for component analysis and precise crop disease identification. However, HSI equipment remains costly, requires complex data processing, and is sensitive to environmental conditions. Its applicability to small-scale farming is therefore limited, and it is better suited to large-scale agricultural production systems or research with stringent precision requirements.

Table 9.

Characteristics of different imaging techniques.

Table 10.

Advantages and disadvantages of different imaging techniques.

Figure 6.

Commonly used perception techniques: (a) monocular camera, (b) LiDAR, (c) imaging spectrometer, (d) binocular stereo vision camera, (e) structured light camera, (f) ToF camera.

3.2.1. Visible-Spectrum Imaging Technology

Typical visible-spectrum imaging devices mainly include RGB cameras and RGB-D cameras. Both use color filter arrays to capture RGB images, which can clearly distinguish target objects from the background. Such images have therefore been widely used to identify objects with different characteristics and to detect key features of targets [106,107,108,109]. RGB-D cameras additionally generate depth images that represent the distance to the surrounding environment. When a depth image is rendered in grayscale, each pixel’s gray level encodes the physical distance between the corresponding scene point and the sensor. In the rendering described here, darker pixels are closer and lighter pixels are farther. By combining this depth information with the target’s 2D image coordinates or RGB information, the target can be located [110,111]. Localization based on 2D coordinates requires coordinate transformation to obtain 3D coordinates, whereas localization based on RGB information requires segmenting the target from the generated full-scene point cloud [112,113].
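The coordinate transformation that turns a 2D pixel plus a depth value into a 3D position can be sketched with the standard pinhole camera model. The sketch assumes a depth image already aligned to the color image and metric depth. The intrinsics (fx, fy, cx, cy) and the camera-to-vehicle extrinsics (R, t) are placeholders; real values would come from the camera’s calibration, and the function names are hypothetical.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with metric depth Z into camera coordinates."""
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return x, y, z


def camera_to_vehicle(p, R, t):
    """Apply an extrinsic rotation R (3x3 nested list) and translation t."""
    return tuple(sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
```

For example, with fx = fy = 600 px and the principal point at (320, 240), a fruit detected at pixel (420, 240) with 2.0 m depth lies about 0.33 m to the side of the optical axis. The extrinsic step then expresses that point in the vehicle or arm frame used by the planner.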

3.2.2. Multispectral Imaging Technology

Because multispectral imaging acquires data across multiple spectral bands, and different materials reflect and absorb radiation differently across these bands, researchers typically select the bands with the highest discriminatory power for the features of interest for target recognition and feature analysis [114]. In autonomous agricultural machinery, the most commonly used bands are the red-edge, near-infrared (NIR), and infrared (IR) bands.

The red-edge and NIR bands are highly sensitive to variations in green vegetation and soil moisture content. These spectral regions are therefore frequently used in precision agriculture for non-destructive, non-invasive analyses, such as estimating vegetation indices (e.g., NDVI, NDRE) [115,116,117], drought indices (e.g., PDI, MPDI) [118,119], and chlorophyll concentration [120,121], as well as monitoring crop growth and health [122,123]. Vegetation reflects strongly in the NIR band. The red-edge region, where reflectance rises steeply from strong chlorophyll absorption in the red to high NIR reflectance, is sensitive to chlorophyll content. Water molecules absorb in parts of these bands [124]. Consequently, higher vegetation density and chlorophyll content correspond to higher reflectance in these bands, whereas higher soil moisture content reduces it [125,126]. In addition, NIR absorption arises from overtones and combinations of the vibrations of C–H, N–H, O–H, S–H, and C=O bonds, which are key components of organic matter. This property makes NIR widely applicable to soil component analysis [127,128].
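The two vegetation indices named above are both normalized differences: NDVI = (NIR − Red)/(NIR + Red) and NDRE = (NIR − RedEdge)/(NIR + RedEdge). A per-pixel sketch follows. It assumes reflectance values already calibrated to a common scale and images given as nested lists of equal shape; the helper names are hypothetical.

```python
def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0.0 where both bands are zero (e.g. masked pixels)."""
    s = a + b
    return 0.0 if s == 0 else (a - b) / s


def ndvi(nir, red):
    return normalized_difference(nir, red)


def ndre(nir, red_edge):
    return normalized_difference(nir, red_edge)


def ndvi_image(nir_img, red_img):
    """Apply NDVI pixel by pixel to two co-registered band images."""
    return [[ndvi(n, r) for n, r in zip(nrow, rrow)]
            for nrow, rrow in zip(nir_img, red_img)]
```

Because the difference is normalized by the sum, both indices range from −1 to 1 and are partly insensitive to overall illumination changes, which is one reason they are favored for field monitoring.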

Infrared (IR) radiation is an important form of energy exchange between objects and their surroundings, and all objects above absolute zero emit infrared energy. Thermal imaging converts the invisible IR radiation in a scene into a visible two-dimensional image, showing the spatial distribution of temperature differences [129]. The technique is non-invasive, non-contact, non-destructive, and widely applicable, and it can form images even in the absence of light [130]. Plant temperature is closely related to physiological processes such as transpiration, water metabolism, and stress responses. Thermal imaging is therefore widely used for crop maturity and bruise analysis [131,132], water stress monitoring [133], pest and disease detection, and yield estimation [130]. Infrared thermal imagers can be broadly divided into cooled and uncooled types. Cooled thermal imagers resolve finer temperature differences, but they are large, expensive, and energy-intensive. Uncooled thermal imagers are small, lightweight, and affordable, making them suitable for installation on autonomous agricultural machinery [134]. However, the low contrast of thermal images can cause significant errors when generating orthophotos. To address this, researchers have developed calibration algorithms that improve the accuracy of uncooled thermal imagers [135,136].

3.2.3. Hyperspectral Imaging Technology

Hyperspectral imaging (HSI) is capable of capturing reflectance, transmittance, or radiance information from a target object across dozens to hundreds of narrow spectral bands, which is then compiled into a series of images and subsequently integrated into a three-dimensional hyperspectral data cube. These three dimensions correspond to the two spatial dimensions of the scene and the spectral dimension representing various wavelengths [137,138]. In recent years, HSI technology has been widely employed in crop type identification [139,140], crop component analysis [141,142,143], and the detection of weeds, pests, and diseases [144,145,146]; however, it still faces challenges including high costs, large data volumes, and environmental sensitivity. To address these challenges, principal component analysis (PCA) and continuous wavelet transform (CWT) can be utilized to identify and aggregate redundant data across multiple channels [123,139].
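Reducing band redundancy with PCA can be sketched on a hyperspectral cube flattened to one spectrum per pixel. To stay dependency-free, the sketch computes the band covariance matrix and extracts the leading principal axes by power iteration with deflation. In practice, an SVD from a numerical library would normally be used. CWT is not shown, and the function name and parameters are hypothetical.

```python
import math
import random


def pca_bands(pixels, k=2, iters=200, seed=0):
    """pixels: list of spectra (one list of band values per pixel).

    Returns (components, scores): the top-k principal axes across bands and each
    pixel's coordinates on them, i.e. k channels instead of the original bands.
    """
    n, d = len(pixels), len(pixels[0])
    mean = [sum(p[j] for p in pixels) / n for j in range(d)]
    X = [[p[j] - mean[j] for j in range(d)] for p in pixels]
    # Band-by-band covariance matrix.
    C = [[sum(r[i] * r[j] for r in X) / (n - 1) for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    comps = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iters):  # power iteration -> dominant eigenvector of C
            w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(C[i][j] * v[j] for j in range(d)) for i in range(d))
        comps.append(v)
        # Deflate so the next power iteration finds the next component.
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    scores = [[sum(r[j] * c[j] for j in range(d)) for c in comps] for r in X]
    return comps, scores
```

Strongly correlated neighboring bands collapse onto a few components, so downstream classifiers can work with far fewer channels than the raw cube.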

3.2.4. Two-Dimensional Cameras

In agriculture, 2D (monocular) cameras can perform object recognition [147,148] and phenotypic analysis [149,150], and can also obtain object positions through perspective transformation [151]. Because a single 2D image does not directly provide depth, researchers have developed depth estimation algorithms that recover object distance from two-dimensional images. These algorithms fall into two categories: traditional machine learning methods and deep learning methods. The former estimate depth by solving for the unknown parameters of an assumed model or by searching a large depth dataset for similar images [152,153]. The latter use classification methods to predict the depth value of each pixel in the image [154].

3.2.5. Three-Dimensional Camera

Common 3D cameras primarily include stereo vision cameras, structured light (SL) cameras, and time-of-flight (ToF) cameras. Stereo vision cameras typically consist of two or more monocular cameras and derive high-resolution depth information from the disparity between images acquired from different viewpoints [105,155]. However, their stereo matching and calibration processes are often complex and time-consuming [156,157]. SL cameras combine one or more monocular cameras with a projector and measure the 3D shape of objects from projected light patterns. The projector casts a known pattern (such as a grid) onto the target surface, and the camera captures the reflected pattern image [158]. This technique acquires depth rapidly and does not depend on the object’s texture, but its accuracy decreases with measurement distance and under intense lighting. ToF cameras, including LiDAR systems, emit light toward the target, receive the reflected light, and calculate distance or depth from the round-trip flight time [105]. Compared with stereo vision and SL cameras, ToF measurement accuracy depends much less on object texture and degrades less with distance. ToF cameras are therefore well suited to large-scale 3D measurement tasks that require high accuracy and stable performance during motion. However, they have relatively low resolution and higher power consumption. Moreover, because light travels so fast, directly timing the optical round trip at close range requires extremely precise timing electronics, which makes direct ToF detection impractical in many cameras. Researchers therefore typically obtain 3D measurements by detecting the phase shift of modulated light waves [159].
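The two depth principles above reduce to short formulas. For a rectified stereo pair, depth is Z = f·B/d, with focal length f in pixels, baseline B in meters, and disparity d in pixels. For continuous-wave ToF, distance is d = c·Δφ/(4π·f_mod), and the unambiguous range is c/(2·f_mod). The sketch below evaluates them; the parameter values used in the usage note are illustrative assumptions, not specifications of any camera.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in vacuum (m/s)


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def tof_phase_distance(phase_rad, mod_freq_hz):
    """Distance from the phase shift of amplitude-modulated light.

    The light travels to the target and back, hence 4*pi rather than 2*pi.
    """
    return C_LIGHT * phase_rad / (4 * math.pi * mod_freq_hz)


def tof_unambiguous_range(mod_freq_hz):
    """Beyond this distance the phase wraps and range becomes ambiguous."""
    return C_LIGHT / (2 * mod_freq_hz)
```

With 20 MHz modulation, the unambiguous range is about 7.5 m. Resolving 1 cm by timing the round trip directly would require measuring about 67 ps, which illustrates why phase detection is preferred. The stereo formula also shows why stereo accuracy degrades with distance: depth is inversely proportional to disparity, so a one-pixel error matters more as disparity shrinks.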
Within the agricultural sector, 3D cameras have been widely employed for crop localization [86,160,161], crop detection [162,163,164], crop phenotypic analysis [165,166,167], and livestock monitoring [168,169,170].

3.2.6. Fusion of Multi-Perception Technologies

Each individual sensor has its own limitations. Advanced data processing methods can fuse data from multiple sensors to improve the accuracy and reliability of perception, especially under challenging conditions or occlusion [171,172]. Different sensor combinations suit different scenarios. Combinations that include LiDAR are typically used for positioning and measurement. For example, Kang et al. [173] located fruits by fusing LiDAR depth information with RGB camera color information. Their experiments showed accurate and robust localization even under strong afternoon sunlight, with positioning standard deviations of 0.253, 0.230, and 0.285 at 0.5 m, 1.2 m, and 1.8 m, respectively. Zhang et al. [174] equipped unmanned aerial vehicles (UAVs) with LiDAR and multispectral cameras to collect long-range images over large crop areas and estimate crop yields. Combinations that incorporate multispectral or hyperspectral imaging are typically employed for large-scale monitoring of crop or environmental conditions. For instance, Dash et al. [175] assessed moisture conditions in intensively managed farmland using UAV thermal and multispectral imagery, and Javidan et al. [176] detected disease infection in tomatoes from RGB and hyperspectral image data. Combinations containing RGB cameras are typically used for feature recognition or phenotypic analysis of operation targets. Bhole et al. [177] used RGB and thermal images to identify Holstein cattle within herds, and Gutierrez et al. [178] used RGB cameras and hyperspectral line-scan cameras to estimate mango maturity.

3.2.7. Deep Learning Training Methods for Environments with Scarce Labels

Deep learning methods are effective for a wide range of complex perception tasks, but realizing their full potential requires large amounts of labeled data. In agricultural environments, obtaining large-scale, high-quality labeled data is costly and time-consuming. Semi-supervised learning (SSL) and domain transfer techniques have therefore become effective alternatives, enabling high-performance visual perception with limited labeled samples. SSL significantly reduces reliance on labels by using a small amount of labeled data together with a large amount of unlabeled data. For example, Zhang et al. proposed a semi-supervised weed detection method based on a diffusion model, which integrates generated and real features through an attention mechanism and a semi-diffusion loss function. It achieved an accuracy of 0.94, a recall of 0.90, and an mAP@50 of 0.92, improving accuracy and recall by approximately 10% and 8%, respectively, over the fully supervised DETR [179]. Li et al. proposed a semi-supervised few-shot learning method that adaptively selects pseudo-labeled samples from a confidence interval to fine-tune the model, improving few-shot classification accuracy. The single semi-supervised variant improved accuracy by 2.8% on average, and the iterative variant by 4.6% [180]. Benchallal et al. developed a deep learning framework combining a ConvNeXt encoder with consistency-regularized semi-supervised learning to exploit both labeled and unlabeled data. It achieved high classification accuracy on public datasets such as DeepWeeds and 4-Weeds, outperforming a fully supervised model trained only on the limited labeled data [181].
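The pseudo-labeling step common to many SSL methods can be sketched as a confidence filter on model outputs. This uses a simple fixed threshold, not the adaptive confidence-interval selection of Li et al. It assumes a model has already produced class logits for each unlabeled image, and the function names and the 0.9 threshold are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def select_pseudo_labels(unlabeled_logits, threshold=0.9):
    """Return (index, pseudo_label, confidence) for predictions whose top softmax
    probability clears `threshold`; low-confidence samples stay unlabeled."""
    selected = []
    for idx, logits in enumerate(unlabeled_logits):
        probs = softmax(logits)
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((idx, label, probs[label]))
    return selected
```

The selected samples are added to the labeled set for the next round of fine-tuning. Raising the threshold trades the number of pseudo-labels for their reliability, which is the balance that adaptive selection schemes try to tune automatically.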

Domain transfer is another effective approach, particularly for pre-training models in a source domain (such as a laboratory environment) and then transferring them to a target domain (such as real fields). For example, in a sugarcane quality estimation task, Ashour et al. pre-trained on bamboo (source domain) and then adapted the model to the sugarcane target domain through transfer learning. Using only sparse labeled data, they achieved average estimation errors of 4.5% and 5.9% for bamboo and sugarcane, respectively. This method avoids costly frame-by-frame labeling and can train deep networks using only labels integrated over time [182]. Transfer learning can also reduce the computational resources and data needed to train deep learning models by fine-tuning models already pre-trained on large datasets on a smaller dataset. It can further reduce the risk of overfitting and improve prediction accuracy and generalization across environments [183]. For example, Simhadri et al. applied transfer learning to 15 pre-trained CNN models for automatic recognition of rice leaf diseases. InceptionV3 performed best, with an average accuracy of 99.64%, and AlexNet performed worst, with an average accuracy of 97.35% [184]. Buchke et al. applied transfer learning to tomato leaf disease datasets of 3000, 8000, and 10,000 images, achieving accuracies of 97.3%, 99.2%, and 99.5%, respectively. These results show that transfer learning can perform well even on small- and medium-sized datasets [185].

3.3. Motion Planning and Control Technology

Motion planning typically builds environmental maps from positioning and perception data and then plans safe, efficient driving (motion) paths. Control technology then applies inverse kinematics or control algorithms to determine the specific motion parameters needed for stable, accurate trajectory tracking [186,187]. In autonomous agricultural machinery, motion planning and control usually cover both vehicle navigation and machinery operation. Vehicle navigation moves the machine between operation sites, which is a prerequisite for large-scale automated operations. Machinery operation uses robotic arms and end effectors to perform specific agricultural tasks such as plowing, sowing, fertilizing, weeding, picking, feeding livestock and poultry, and environmental cleaning, which require precise actions such as feeding, cutting, and grasping.

3.3.1. Motion Planning

Motion planning is key to enabling autonomous agricultural machinery to move autonomously and operate precisely. A sound motion planning algorithm must account for various constraints, including obstacles, coverage rate, and operational efficiency [188,189,190]. Planning for machinery operations must also consider the pose of the target object and the coordination among multiple robotic arms (in multi-arm robots) to improve operational success rate and efficiency [191,192,193]. Depending on environmental knowledge and the state of obstacles, motion planning can be divided into global planning and local planning. Global planning is usually static and can be completed offline: paths are planned from the known, fixed obstacle positions and environmental structure in the map. Global planning therefore determines the logic by which autonomous machinery performs its tasks and affects task efficiency. In actual operation, however, the machine may encounter unknown or changing conditions such as sudden obstacles, moving people, or livestock and poultry. In such cases, local (dynamic) motion planning can avoid collisions while continuing the agricultural task, because it adjusts the plan online in real time [96,194,195]. Both global and local path planning consist of two stages: front-end path search and back-end path optimization. Path search aims to quickly find a feasible path between the start and goal that satisfies specific constraints. Path optimization then refines the front-end path to make it more continuous, efficient, and smooth [196,197].
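Front-end path search on a static global map can be illustrated with A*, a classic graph-search planner. The sketch assumes a hypothetical occupancy grid (0 = free, 1 = obstacle), 4-connected unit-cost moves, and a Manhattan-distance heuristic. It omits the back-end smoothing and vehicle kinematics discussed in the text.

```python
import heapq


def astar(grid, start, goal):
    """4-connected A* on an occupancy grid (0 = free, 1 = obstacle).

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

The returned cell sequence is only geometrically feasible. For a tractor or sprayer, it would still need back-end optimization to respect turning radius and smoothness.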

A wide array of path search algorithms have been developed to address various navigation requirements. Based on their underlying technical principles, these algorithms can be categorized into four types: graph-search-based, sampling-based, optimization-based, and learning-based algorithms [198]. The key features and representative algorithms of each category are summarized in Table 11 and Table 12. Furthermore, according to specific task demands, path planning algorithms can be classified into point-to-point, multi-objective, and complete-coverage path planning methods [96]. Point-to-point algorithms are commonly applied in navigation strategies for fixed-route autonomous robots or beacon-guided systems operating in semi-structured agricultural environments, where precise stopping at predefined locations is essential for task execution [199]. Multi-objective path planning aims to determine optimal routes that connect multiple target points, thereby enhancing operational efficiency and reducing energy consumption. These target points are often discrete and variable. For instance, in motion planning for autonomous feeding robots, routes must be dynamically adjusted to account for dispersed feed buckets and uncertain animal movement patterns in semi-intensive farms, which may lead to varying levels of feed availability at each feeding point [200]. In harvesting operations, autonomous picking robots must plan optimal picking trajectories for robotic arms to ensure all ripe fruits on a tree are harvested swiftly and efficiently [201,202]. Complete-coverage path planning focuses on ensuring full spatial coverage for operations such as sowing, monitoring, and cleaning [203,204]. Applications like floor egg collection or weeding also require a comprehensive inspection of the operating environment to determine exhaustive and efficient movement trajectories [205,206,207]. 
However, most conventional path planning algorithms primarily account for geometric constraints and often neglect kinematic and safety considerations. To address this, back-end path optimization refines the initial paths by incorporating additional constraints to produce executable, smooth, and collision-free trajectories [208]. Some advanced algorithms, such as Kinodynamic RRT* [209], Hybrid A* [210], and Covariant Hamiltonian Optimization for Motion Planning (CHOMP) [211], explicitly consider kinematic constraints during path generation to ensure feasibility. Despite this progress, path planning algorithms still face significant challenges in unstructured and dynamic agricultural environments, including limited accuracy, low computational efficiency, and difficulties with trajectory convergence. In addition, many algorithms insufficiently account for external factors such as terrain variability, vehicle structure, machine dynamics, and energy consumption [212,213]. Recent studies have proposed hybrid approaches that combine multiple algorithms to improve adaptability, real-time performance, and planning robustness [214]. Future research could explore two promising directions: (1) integrating conventional path planning algorithms with end-to-end learning-based approaches and developing high-performance, dedicated computing platforms for path planning, and (2) leveraging multi-robot collaboration to enhance the efficiency and scalability of agricultural task execution [215].

Table 11.

Definition of each type of path-finding algorithm.

Table 12.

Characteristics of each type of path-finding algorithm.

3.3.2. Motion Control

After a path has been planned, autonomous agricultural machinery must still rely on control technology to track the trajectory stably and accurately. Motion control for autonomous agricultural machinery has been widely studied, and control methods can be divided into two types: model-based and model-free. Model-based methods, such as pure pursuit control, backstepping control, model predictive control (MPC), and adaptive control, build robust controllers on kinematic or dynamic models of the vehicle or machine to achieve precise control. However, these methods usually rely on high-precision sensors to obtain real-time state information. When autonomous agricultural machinery operates in complex, unstructured environments, sensor or actuator failures are likely, and they directly degrade the performance of the control algorithm. Most model-based methods also remain limited by parameter uncertainty, modeling inaccuracy, unknown noise, and disturbances [216,217], which makes high-precision trajectory tracking difficult. In contrast, model-free methods can adapt to environmental changes or reject disturbances through learning or feedback mechanisms. They also tend to respond faster to dynamic changes in the system and are suitable for complex systems and real-time control tasks [218]. Model-free methods mainly include fuzzy control, proportional–integral–derivative (PID) control, sliding mode control (SMC), and neural network control. However, each has its own limitations, and their accuracy and stability are often insufficient. Table 13 and Table 14 summarize the applicable scenarios and characteristics of each control algorithm.
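Pure pursuit control (Table 13) is the simplest of the model-based trackers and shows how a kinematic model turns path geometry into a steering command. The sketch below assumes a kinematic bicycle model referenced to the rear axle, for which the standard pure pursuit law gives a steering angle δ = atan(2L·sin α / L_d), where L is the wheelbase, L_d the lookahead distance, and α the bearing of the lookahead point relative to the vehicle heading. All function names, the 35° steering limit, and the numeric values in the demo are illustrative assumptions, not parameters from the cited studies.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def find_lookahead_point(path: List[Point], x: float, y: float,
                         lookahead: float) -> Point:
    """First waypoint at least `lookahead` away, searching forward from the nearest one."""
    nearest = min(range(len(path)),
                  key=lambda i: math.hypot(path[i][0] - x, path[i][1] - y))
    for px, py in path[nearest:]:
        if math.hypot(px - x, py - y) >= lookahead:
            return px, py
    return path[-1]  # near the end of the path: aim at the final waypoint


def pure_pursuit_steering(x: float, y: float, yaw: float,
                          goal: Point, wheelbase: float) -> float:
    """Front-wheel steering angle (rad) that puts the rear axle on an arc through `goal`."""
    dx, dy = goal[0] - x, goal[1] - y
    ld = math.hypot(dx, dy)                 # actual lookahead distance
    alpha = math.atan2(dy, dx) - yaw        # bearing of goal relative to heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


def simulate(path: List[Point], x: float, y: float, yaw: float, speed: float,
             wheelbase: float, lookahead: float, dt: float, steps: int,
             max_steer: float = math.radians(35.0)) -> Tuple[float, float, float]:
    """Kinematic bicycle model driven by pure pursuit (explicit Euler integration)."""
    for _ in range(steps):
        goal = find_lookahead_point(path, x, y, lookahead)
        delta = pure_pursuit_steering(x, y, yaw, goal, wheelbase)
        delta = max(-max_steer, min(max_steer, delta))   # steering limit
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        yaw += speed / wheelbase * math.tan(delta) * dt
    return x, y, yaw


if __name__ == "__main__":
    # Straight crop row at y = 1 m; the vehicle starts 1 m off the row.
    row = [(0.5 * i, 1.0) for i in range(200)]
    print(simulate(row, 0.0, 0.0, 0.0, speed=1.0, wheelbase=2.5,
                   lookahead=3.0, dt=0.05, steps=400))
```

The lookahead distance is the main tuning knob, which matches the sensitivity noted in Table 14: a short lookahead tracks tightly but can oscillate, while a long one is smoother but cuts corners on curved paths.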

Given the diverse and changeable agricultural operating environment, traditional control methods have certain limitations. Researchers have therefore improved and combined traditional control algorithms or developed more accurate and stable ones. To address the wind disturbances, payload variations, and propeller failures that agricultural unmanned aerial vehicles (UAVs) face during ultra-low-altitude phenotypic remote sensing and precision spraying, Wang et al. [219] proposed an adaptive composite disturbance rejection control algorithm. To account for variations in terrain conditions, Kraus et al. [220] introduced a nonlinear model predictive control strategy for autonomous tractor navigation, achieving an average deviation of 0.60 m during headland turning on damp, uneven grassland. Tang et al. [221] developed a linear active disturbance rejection control (LADRC) method, tuned with an improved particle swarm optimization algorithm, to mitigate the impact of environmental disturbances such as stones and potholes on the stability of agricultural machinery. Yang et al. [96] applied adaptive and model predictive controllers in floor-based poultry houses to reduce wheel slippage caused by loose bedding material. Several studies have implemented fault-tolerant control algorithms to handle actuator faults in agricultural vehicles, thereby improving the stability of path tracking control. These methods reduce the worst-case discrepancy between the desired and actual control inputs while enhancing the fault tolerance and robustness of system fault detection and diagnosis [222,223]; they are discussed in more detail in the following section. In addition, autonomous driving systems for agricultural machinery must coordinate the control of both the mobile platform and the operating equipment. Yue et al. [224] proposed a multi-level coordinated control strategy that enables dynamic collaborative obstacle avoidance and trajectory planning for towed implements and mobile platforms.

A notable aspect of motion control in autonomous agricultural machinery is the precision required in task execution: matching the control strategy to the requirements of the task and the characteristics of the target object is crucial for improving the operation success rate and reducing crop losses. To reduce the fruit drop and damage that the picking action of autonomous robots tends to cause, Sun et al. [225] used PID control to track the target position of the robotic arm and proposed adaptive input shaping control to suppress vibration of the end effector. Considering the fragility of mushrooms, Yin et al. [226] adopted model predictive control as the low-level position and velocity tracker and admittance control as the high-level controller, which improved the compliance of the robotic picking operation. Chen et al. [227] proposed a force-feedback dynamic control method with slip detection: an ultrasonic sensor measures the distance between the end effector and the target object, and this feedback is used to adjust the servo output torque, which increased the operation success rate.
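The principle behind linear active disturbance rejection control, as used by Tang et al. [221], can be sketched for a generic second-order plant y'' = f + b·u, where f lumps unknown dynamics and external disturbances (for example, a wheel hitting a stone). A linear extended state observer (LESO) estimates y, y', and f, and the controller cancels the estimated f. The sketch assumes the common bandwidth parameterization (observer poles at -ω_o, controller poles at -ω_c) with hand-picked bandwidths; it does not reproduce the particle swarm tuning of the cited work, and the plant, disturbance step, and all numbers are hypothetical.

```python
from typing import Tuple


def simulate_ladrc(r: float = 1.0, wc: float = 4.0, wo: float = 20.0,
                   b0: float = 1.0, dt: float = 1e-3, t_end: float = 5.0,
                   dist: float = 2.0, t_dist: float = 1.0) -> Tuple[float, float]:
    """Second-order LADRC on a hypothetical plant y'' = -y' + d(t) + b*u.

    Returns the final output y and the final total-disturbance estimate z3.
    The damping term -y' and the step disturbance d are unknown to the controller.
    """
    b = 1.0                                   # true input gain (assumed equal to b0)
    kp, kd = wc ** 2, 2.0 * wc                # PD gains: double pole at -wc
    beta1, beta2, beta3 = 3 * wo, 3 * wo ** 2, wo ** 3   # LESO: triple pole at -wo
    y, ydot = 0.0, 0.0                        # plant states
    z1, z2, z3 = 0.0, 0.0, 0.0                # estimates of y, y', total disturbance f
    for k in range(int(round(t_end / dt))):
        t = k * dt
        u0 = kp * (r - z1) - kd * z2          # PD law on the estimated states
        u = (u0 - z3) / b0                    # cancel the estimated disturbance
        d = dist if t >= t_dist else 0.0      # e.g. terrain-induced load step
        # Extended state observer (explicit Euler).
        e = z1 - y
        z1 += (z2 - beta1 * e) * dt
        z2 += (z3 - beta2 * e + b0 * u) * dt
        z3 += (-beta3 * e) * dt
        # Plant (explicit Euler).
        yddot = -ydot + d + b * u
        y += ydot * dt
        ydot += yddot * dt
    return y, z3


if __name__ == "__main__":
    print(simulate_ladrc())
```

The appeal for field machinery is that only b0 and two bandwidths need to be chosen; the observer absorbs unmodeled terrain effects into z3, which is why automatic tuners such as particle swarm optimization can work on just a few parameters.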

Table 13.

Applicable scenarios of different control algorithms.

| Category | Algorithm | Applicable Scenarios | Example Reference |
|---|---|---|---|
| Model-Based Control | Pure Pursuit Control | Low-speed path tracking in structured environments such as dry farmland and livestock barns (e.g., AGV linear navigation, agricultural vehicle straight-line guidance). | [228,229] |
| | Backstepping Control | Multi-degree-of-freedom coupled systems (e.g., UAV attitude control, robotic arm trajectory tracking). | [230,231] |
| | MPC | High-precision dynamic trajectory tracking (e.g., cornering maneuvers, dynamic obstacle avoidance), multi-constraint optimization tasks (e.g., spray painting, sorting operations). | [232,233] |
| | Adaptive Control | Systems with uncertain parameters (e.g., agricultural machinery or robotic arms with varying payloads). | [234,235] |
| Model-Free Control | Fuzzy Control | Unstructured environments (e.g., muddy farmland, poultry house robot navigation), robotic arm vibration suppression. | [197,236] |
| | PID | Steady-state environments (fixed-trajectory tracking), low-speed low-disturbance scenarios (e.g., cage chicken house inspection). | [43,237] |
| | SMC | High-disturbance environments (e.g., paddy field skidding), scenarios requiring rapid response (e.g., emergency vehicle obstacle avoidance). | [238,239] |
| | Neural Network Control | Complex dynamic tasks (e.g., lettuce sorting, apple picking), end-to-end control (e.g., autonomous driving vision navigation). | [240,241] |

Table 14.

Characteristics of different control algorithms.

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Pure Pursuit Control | ① Simple implementation with low computational cost; ② Well suited to low-speed structured path tracking | ① Performance highly sensitive to tuning of the lookahead distance; ② Degraded performance under dynamic disturbances |
| Backstepping Control | ① Naturally suited to nonlinear systems; ② Flexible hierarchical design, compatible with adaptive/robust strategies | ① High computational complexity due to recursive derivation; ② Moderate dependence on model accuracy |
| MPC | ① Multi-step predictive optimization with explicit constraint handling; ② Adaptable to dynamic changes and complex paths | ① High computational overhead requiring real-time solvers; ② Performance degradation under model mismatch |
| Adaptive Control | ① Online parameter adjustment for rejecting time-varying disturbances; ② No prior information on disturbance bounds required | ① Convergence depends on parameter estimation accuracy; ② Stability analysis is difficult for complex systems |
| Fuzzy Control | ① No precise mathematical model required, relying instead on expert knowledge; ② Strong capability in handling nonlinearities | ① Time-consuming rule-base design; ② Limited adaptability without sufficient data validation |
| PID | ① Simple structure, easy to implement in engineering practice; ② Low computational cost, suitable for embedded systems | ① Poor performance in nonlinear/time-varying systems; ② Dependence on manual parameter tuning, with weak robustness |
| SMC | ① Strong robustness against disturbances and parameter variations; ② Fast convergence, well suited to nonlinear systems | ① High-frequency chattering in conventional SMC implementations; ② Requires disturbance-bound information (mitigated in improved versions) |
| Neural Network Control | ① Model-free, data-driven approach; ② Capable of handling high-dimensional nonlinearities and dynamic changes | ① Requires large amounts of training data; ② High computational resource demands and poor interpretability |
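The chattering drawback of conventional SMC listed in Table 14, and one common mitigation, can be shown on a toy double-integrator plant y'' = u + d with a bounded, unknown disturbance d. The sketch uses the sliding surface s = y' + λ(y - r) and compares the ideal sign() switching term with a boundary-layer saturation sat(s/φ). The plant, gains λ and K, and layer width φ are hypothetical; the flip counter simply counts sign changes of the control signal as a rough chattering indicator.

```python
from typing import Tuple


def simulate_smc(boundary_layer: float, lam: float = 2.0, K: float = 2.0,
                 d: float = 0.5, r: float = 1.0, dt: float = 1e-3,
                 t_end: float = 5.0) -> Tuple[float, int]:
    """Sliding mode control of y'' = u + d. Returns (final y, control sign flips).

    boundary_layer == 0 uses the ideal sign() switch; > 0 uses sat(s / phi).
    Requires K > |d| so the sliding surface is reached.
    """
    y, ydot = 0.0, 0.0
    u_prev = 0.0
    flips = 0
    for _ in range(int(round(t_end / dt))):
        s = ydot + lam * (y - r)                       # sliding variable
        if boundary_layer > 0:
            switch = max(-1.0, min(1.0, s / boundary_layer))   # saturation
        else:
            switch = float((s > 0) - (s < 0))                  # ideal sign()
        u = -lam * ydot - K * switch                   # equivalent + switching term
        if u * u_prev < 0:
            flips += 1                                 # control changed sign
        u_prev = u
        yddot = u + d                                  # disturbance unknown to controller
        y += ydot * dt
        ydot += yddot * dt
    return y, flips


if __name__ == "__main__":
    print("sign():", simulate_smc(0.0))
    print("sat(): ", simulate_smc(0.05))
```

The boundary layer removes the rapid switching at the cost of a small steady-state offset (here of order φ·d/(λK)), which is the usual accuracy-versus-smoothness trade-off behind the "improved versions" mentioned in the table.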

In practical applications, motion control algorithms for autonomous agricultural machinery still face inherent challenges. First, because agricultural robots typically operate outdoors or in harsh environments, their hardware is frequently constrained in computing resources and energy supply. These robots are predominantly equipped with embedded systems with limited processor performance, memory capacity, and battery endurance, so the feasibility of a control algorithm on such resource-constrained hardware must be evaluated carefully, and a judicious balance between accuracy and efficiency must be struck when selecting it [242]. Model-based control methods can deliver high-precision control, but they incur substantial computational costs and depend on high-precision sensors, which makes them difficult to deploy on devices with limited computing resources. For example, although MPC can handle multi-constraint optimization problems, solving it online requires considerable computing power and typically a high-performance processor, which is often infeasible given the power and cost constraints of agricultural robots. In contrast, model-free control methods are more readily deployed on devices with limited computing capabilities. PID control, with its simple structure and low computational burden, is particularly well suited to fixed-trajectory tracking and to low-speed, low-disturbance scenarios. However, PID control has clear limitations in nonlinear and time-varying systems, including reliance on manual parameter tuning and weak robustness. Neural network control can handle high-dimensional nonlinear problems, but its training and inference are computationally intensive, which makes real-time execution on low-power microcontrollers difficult [243]. In practice, therefore, acceptable tracking performance with limited resources is frequently achieved by using simplified models, reducing controller complexity (e.g., reducing multi-degree-of-freedom models to equivalent low-dimensional models), or adopting lightweight algorithms (such as fuzzy PID and adaptive sliding mode control). In addition, to cope with the uncertainties and dynamic changes of agricultural environments without excessive computational and storage overhead, algorithms should have online learning or adaptive capabilities [242]. As edge computing hardware and algorithm lightweighting techniques continue to develop, more complex control strategies are expected to become deployable on resource-constrained agricultural robot platforms.

Second, although diverse motion control algorithms have been developed for various agricultural operating scenarios, most have been validated only under low-speed, small-curvature experimental conditions, and control schemes for high-speed, high-curvature, and other complex conditions remain a notable gap [244,245]. Third, owing to the absence of a unified data communication protocol, research on coordinated control between mobile platforms and operating equipment remains limited [246]. Finally, dynamic and kinematic models of the agricultural autonomous driving chassis are indispensable for effective control algorithm design; future research should further investigate multi-parameter fusion intelligent control technologies for each subsystem of the chassis [247,248].
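To make the point about PID's low computational burden concrete, the sketch below shows a discrete positional PID of the kind that fits comfortably on a microcontroller: each update costs a handful of multiplications and additions. Two common embedded refinements are included as assumptions rather than as anything prescribed by the cited works: derivative-on-measurement (no derivative kick on setpoint steps) and conditional-integration anti-windup (the integrator is frozen while the output is saturated). The first-order plant, gains, and limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Positional PID with derivative-on-measurement and conditional-integration anti-windup."""
    kp: float
    ki: float
    kd: float
    dt: float
    u_min: float
    u_max: float
    integral: float = 0.0
    prev_meas: Optional[float] = None

    def update(self, setpoint: float, meas: float) -> float:
        error = setpoint - meas
        # Differentiate the measurement, not the error, to avoid a kick on setpoint steps.
        deriv = 0.0 if self.prev_meas is None else -(meas - self.prev_meas) / self.dt
        self.prev_meas = meas
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * deriv
        if u > self.u_max:
            return self.u_max        # saturated: keep the old integral (anti-windup)
        if u < self.u_min:
            return self.u_min
        self.integral = candidate    # accept integration only while unsaturated
        return u


def simulate_first_order(pid: PID, setpoint: float, tau: float, steps: int) -> float:
    """Hypothetical first-order actuator/plant tau*y' = -y + u, explicit Euler."""
    y = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, y)
        y += pid.dt * (-y + u) / tau
    return y


if __name__ == "__main__":
    controller = PID(kp=2.0, ki=4.0, kd=0.0, dt=0.01, u_min=-1.5, u_max=1.5)
    print(simulate_first_order(controller, setpoint=1.0, tau=0.5, steps=1000))
```

Lightweight variants such as fuzzy PID typically keep this same update loop and only adjust kp, ki, and kd online from a small rule table, which is why they remain practical on embedded hardware.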

3.4. Actuator Technology

Actuators are fundamental to autonomous agricultural machinery, translating control system signals (electrical, hydraulic, or pneumatic) into mechanical motion to enable diverse robotic tasks. Their performance and reliability are paramount, directly affecting the precision, efficiency, and safety of agricultural operations. Because functional requirements vary, actuators are broadly classified by application: drive actuators handle locomotion and vehicle control, while manipulator actuators perform intricate interaction tasks (e.g., grasping and cutting). The harsh agricultural environment often leads to actuator failures, so robust fault detection and isolation (FDI) systems and effective fault-tolerant control strategies are necessary for continuous, reliable, and safe operation.
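The detection half of FDI is often built on residuals: the measured actuator response is compared with what a nominal model predicts for the same commands, and a fault is declared when the discrepancy stays large. The minimal sketch below assumes a hypothetical first-order lag model of the actuator (time constant `tau`), a fixed residual threshold, and a persistence count that suppresses one-off noise spikes; the function names and numbers are illustrative. It covers detection only, not fault isolation or the fault-tolerant reconfiguration that would follow, and practical systems usually replace the open-loop model with an observer or Kalman filter.

```python
from typing import List, Optional, Sequence


def first_order_response(commands: Sequence[float], dt: float, tau: float,
                         y0: float = 0.0,
                         stuck_from: Optional[int] = None) -> List[float]:
    """Simulated actuator output; if `stuck_from` is set, the output freezes from that sample on."""
    ys: List[float] = []
    y = y0
    for k, u in enumerate(commands):
        ys.append(y)
        if stuck_from is None or k < stuck_from:
            y += dt * (u - y) / tau
    return ys


def detect_actuator_fault(commands: Sequence[float], measurements: Sequence[float],
                          dt: float, tau: float, threshold: float,
                          persistence: int) -> Optional[int]:
    """Return the sample index at which a fault is declared, or None if none is detected."""
    predicted = measurements[0]   # model and sensor start aligned
    over = 0                      # consecutive samples with residual above threshold
    for k, (u, y) in enumerate(zip(commands, measurements)):
        residual = abs(y - predicted)
        over = over + 1 if residual > threshold else 0
        if over >= persistence:   # persistence check suppresses isolated spikes
            return k
        predicted += dt * (u - predicted) / tau   # propagate the nominal model
    return None


if __name__ == "__main__":
    cmds = [0.0] * 100 + [1.0] * 200              # step command at sample 100
    healthy = first_order_response(cmds, 0.01, 0.2)
    jammed = first_order_response(cmds, 0.01, 0.2, stuck_from=50)
    print(detect_actuator_fault(cmds, healthy, 0.01, 0.2, 0.1, 5))
    print(detect_actuator_fault(cmds, jammed, 0.01, 0.2, 0.1, 5))
```

Note that the jammed actuator only becomes detectable once it is commanded to move; a fault on an idle actuator produces no residual, which is one reason field systems combine residual checks with periodic self-tests.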

3.4.1. Drive Actuator