Abstract

Lower limb exoskeletons have been developed to improve functionality and assist with daily activities in various environments. Although these systems use sensors for gait phase detection, they lack anticipatory information about environmental changes, which limits their adaptability. This paper presents a vision-based intelligent gait environment detection algorithm for a lightweight ankle exosuit designed to enhance gait stability and safety for stroke patients, particularly during stair negotiation. The proposed system employs YOLOv8 for real-time environment classification, combined with a long short-term memory (LSTM) network for spatio-temporal feature extraction, enabling precise detection of environmental transitions. An experimental study evaluated the classification algorithm and the soft ankle exosuit under three conditions using kinematic analysis and muscle activation measurements. The algorithm achieved an accuracy of over 95% for each class, which significantly enhanced the exosuit’s ability to detect environmental changes and thereby improved its responsiveness to various conditions. Notably, the exosuit increased the ankle dorsiflexion angle and reduced muscle activation during stair ascent, which enhanced foot clearance. These results indicate that spatio-temporal feature analysis combined with environment classification improves the exoskeleton’s gait assistance and its adaptability in complex environments for stroke patients.

1. Introduction

Stroke patients commonly exhibit functional impairments, such as muscle weakness and sensorimotor deficits, and gait disturbances significantly restrict their functional independence in daily activities [1,2,3]. Due to decreased strength and impaired balance, they exhibit a pathological hemiparetic gait pattern characterized by reduced gait speed, a prolonged stance phase, and an extended double support period [4,5]. Many stroke patients also exhibit foot drop, resulting from weakness of the primary dorsiflexor muscles or shortening of the plantarflexor muscles, which impairs their ability to dorsiflex the ankle during gait [6,7,8]. The resulting reduction in foot clearance during the swing phase leads to pathological gait patterns, such as circumduction gait or steppage gait [9,10,11,12]. In particular, during stair ascent, reduced foot clearance due to foot drop leaves insufficient clearance between the foot and the stair nosing, increasing the risk of a fall caused by the foot contacting the nosing [13].

Stair ambulation is a common activity in daily life; however, it is more challenging than level walking because it places greater demands on the joint range of motion and muscular moments of the lower extremities [14]. Additionally, stroke patients exert greater force and consume more oxygen during stair ambulation than healthy individuals, leading to an increased metabolic cost [15,16]. Consequently, lower limb exoskeletons have been developed to assist gait in stair environments [17,18,19]. Lower limb exoskeletons enhance user functionality and assist in daily activities by transmitting assistive forces to the lower limb joints during locomotion. Yinsheng Xu et al. proposed a soft exosuit that assists hip flexion during stair climbing using hip joint angle information, effectively reducing users’ metabolic consumption and muscle fatigue [20]. Ying Fang et al. developed a wearable adaptive ankle exoskeleton that uses torque balance information based on forefoot force to assist the ankle moment [21]. Lee et al. created a knee assistive exosuit that aids stair ascent and descent by identifying the current gait phase through insole sensors [22].

Insole sensors and inertial measurement unit (IMU) sensors provide valuable information regarding the current state of the gait, making them useful for gait phase detection [23,24]. However, they do not offer prior information about the gait environment, which impedes the ability to anticipate environmental changes in advance. Consequently, research on vision-based environmental perception algorithms for exoskeletons was conducted [25,26]. This approach is motivated by the observation that humans inherently utilize visual information to acquire prior knowledge of their gait environments, thereby facilitating more effective navigation and adaptation.

Visual factors play a critical role in stair negotiation. According to the Stair Behavior Model, the process of stair negotiation involves several stages: an initial conceptual scan for sensory input, hazard detection, route choice, visual perception of the step location, and continuous monitoring scans [27]. Disturbances during these stages can lead to an increased risk of a fall [28]. Camera-based gait environment recognition functions similarly to the visual recognition of step location and continuous monitoring stages within the human vision system, thereby enhancing gait stability during assistance with a lower limb exoskeleton. Specifically, the vision system of an exoskeleton performs the role of ambient vision by detecting peripheral fields, which contributes to the awareness of the surrounding environment, improves posture stabilization, and provides guidance for locomotion.

Deep learning-based methods can be employed to enhance the overall performance of the vision system. Recent advances in deep learning models have significantly influenced diverse fields, including robotics applications such as robot control and intention detection, leading to enhanced performance and novel methodologies [29]. Large Language Models (LLMs) have revolutionized natural language processing by advancing capabilities in text generation, high-level reasoning, and multimodal tasks, thereby transforming human–robot interaction across various domains [30,31]. Kolmogorov–Arnold Networks (KANs) offer a novel approach that replaces the linear weights of conventional neural networks with learnable activation functions, providing enhanced accuracy and mechanistic interpretability [32,33]. Furthermore, YOLOv8 represents a significant advance in real-time object detection, improving both computational speed and accuracy, and has proven effective for video analysis and environment classification [34,35]. Nevertheless, despite these advances, the integration of deep learning-based environment classification into assistive robotics remains underexplored.

Enrica Tricomi et al. created a hip exosuit that integrates IMU-based gait phase detection with RGB camera vision and convolutional neural network (CNN)-based environmental classification for adaptive gait assistance [36]. This approach achieved an overall accuracy of 85% in real-time gait environment classification and reduced the user’s metabolic cost by approximately 20%. However, its accuracy dropped below 80% during transitions between gait environments, increasing the misclassification rate. Moreover, that study did not specifically target stroke patients, who exhibit greater asymmetry at the ankle joint than at the hip joint [37]. Therefore, ankle assistance is more critical than hip assistance for maintaining lower limb stability in this population.

This paper presents a vision-based intelligent gait environment detection algorithm for a lightweight ankle exosuit for stroke patients. The proposed algorithm uses YOLOv8 for fine-grained gait environment classification and applies an LSTM that exploits spatio-temporal features to improve accuracy during transitions between environments. In stair negotiation, visual confirmation of the first step is considered a critical factor in transitioning from level walking to stair navigation [38]. In particular, falls on stairs typically occur on the first or last three steps, where the gait environment changes [27]. Therefore, stairs must be recognized accurately and rapidly during gait assistance so that the exosuit can adapt to environmental changes. Dividing the stair environment into multiple stages, including the initial and final stages of stair ascent and descent, can thus enhance gait stability and improve environmental recognition accuracy. To verify the performance of the proposed method, experiments were conducted with a healthy subject.

2. Soft Exosuit

2.1. Soft Exosuit Design

The soft ankle exosuit is constructed from flexible, lightweight materials that conform to the user’s movements, and it provides assistive forces to the ankle through a tendon-driven mechanism. The exosuit consists of a vest, a waist belt, a leg brace, and a foot wearable. The vest integrates the actuation unit and a camera (Logitech BRIO 4K Ultra HD Pro), which is mounted at chest level to capture the gait environment. The leg brace connects the thigh strap and the shin brace along the lateral side of the lower limb, forming a tendon path. To measure the kinematics of the shin and thigh for gait phase detection, an IMU (MPU6050) is attached to each of the shin and thigh positions of the leg brace. The foot wearable includes anchor points in the toe and heel regions to assist ankle dorsiflexion and plantarflexion.

The actuation unit of the exosuit consists of a motor, two Bowden cables, and a single pulley. The pulley, with a diameter of 61 mm, is directly connected to the motor shaft (AK80-9, Cubemars, China). The system uses an antagonistic structure in which the two cables are wound in opposite directions to produce both dorsiflexion and plantarflexion. The cables attached to the pulley are connected to anchor points at the toe and heel of the foot wearable, thereby transmitting torque to the ankle and providing assistive support. When driven counterclockwise, the mechanism pulls the toe section to assist dorsiflexion while simultaneously slackening the cable connected to the heel anchor point. Conversely, when driven clockwise, it supports plantarflexion by pulling the heel section and slackens the cable attached to the toe anchor point, thereby preventing restriction of the ankle. The actuation unit, which includes the motor, a 22.2 V Li-ion battery, and the control device (Arduino Uno), is mounted on the back of the vest. This placement keeps the load close to the body’s center of gravity, distributing the applied forces more effectively across the user’s body. The cables are bicycle brake cables that run from the pulley of the back-mounted actuation unit through a sheath along the shin and attach to the anchor points on the foot wearable.

2.2. Control Strategy

Environmental data captured by the camera are classified by the vision-based system into one of three conditions (level ground, stair ascent, or stair descent). The resulting walking environment label, denoted as the vision data k and defined in Equation (1), is then transmitted to Simulink via User Datagram Protocol (UDP) communication.
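The label hand-off to Simulink can be illustrated with a minimal Python sketch of the sending side. It assumes, hypothetically, that k is encoded as 0, 1, and 2 for level ground, stair ascent, and stair descent and that each UDP datagram carries one unsigned byte; the actual values of k are given by Equation (1), and the wire format and port are assumptions, not the authors’ configuration.

```python
import socket
import struct

# Hypothetical label codes; Equation (1) in the text defines the actual values of k.
LABELS = {"level_ground": 0, "stair_ascent": 1, "stair_descent": 2}


def encode_label(k: int) -> bytes:
    """Pack the environment label k as one unsigned byte (assumed wire format)."""
    if k not in LABELS.values():
        raise ValueError(f"unknown environment label: {k}")
    return struct.pack("B", k)


def decode_label(payload: bytes) -> int:
    """Inverse of encode_label, i.e. what the receiver would read back."""
    (k,) = struct.unpack("B", payload)
    return k


def send_label(sock: socket.socket, k: int, addr: tuple) -> None:
    """Send one label per datagram; UDP needs no connection setup."""
    sock.sendto(encode_label(k), addr)


def open_sender() -> socket.socket:
    """Create an IPv4 UDP socket for sending labels."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
```

For example, `send_label(open_sender(), LABELS["stair_ascent"], ("127.0.0.1", 25000))` would send the ascent label to a hypothetical local port 25000; the receiving Simulink model would need to be configured to interpret each packet as a single uint8. UDP suits this use because a stale label is simply superseded by the next one, so retransmission is unnecessary.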

The values processed according to Equation (3) determine the motor input parameters, which are derived from the shank and thigh angular velocities as outlined in Equation (2). These parameters are tailored to each environmental context, so that the motor operates differently in each gait environment.

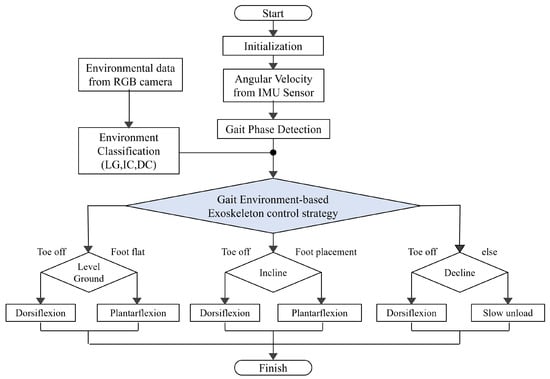

The motor operates based on the value processed by Equation (3), which depends on the classified gait environment, so that the control method appropriate to each condition is applied, as shown in Figure 1. In level walking, dorsiflexion is assisted during the toe-off phase, and plantarflexion is supported during the foot-flat phase to keep the foot horizontally aligned [39,40,41]. During stair ascent, greater assistive force is provided than in level walking, with enhanced dorsiflexion support at the toe-off phase (forward continuance) and gradual unloading using current control until just before toe-off [42]. During stair descent, dorsiflexion assistance during the toe-off phase (leg pull-through) is complemented by plantarflexion assistance just before foot placement, ensuring that the foot is level upon contact.
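The environment-dependent strategy described for level walking, stair ascent, and stair descent can be summarized as a lookup from (environment, gait event) to an assistance action. The following is a minimal sketch: the integer codes, event names, and action strings are hypothetical, no force magnitudes are encoded because none are given here, and the gradual unloading phase during ascent is omitted for brevity.

```python
from enum import IntEnum


class Env(IntEnum):
    # Hypothetical integer codes for the three environment classes.
    LEVEL = 0
    ASCENT = 1
    DESCENT = 2


# Gait event -> assistance action for each environment, following the
# qualitative strategy in the text (event and action names are illustrative).
ASSIST_SCHEDULE = {
    Env.LEVEL: {"toe_off": "dorsiflexion", "foot_flat": "plantarflexion"},
    Env.ASCENT: {"toe_off": "dorsiflexion_enhanced"},
    Env.DESCENT: {"toe_off": "dorsiflexion", "pre_foot_placement": "plantarflexion"},
}


def assist_action(env: int, event: str) -> str:
    """Return the assistance to apply for this environment and gait event, or 'none'."""
    return ASSIST_SCHEDULE[Env(env)].get(event, "none")
```

Keeping the schedule as data rather than branching logic makes it straightforward to add further environment classes or events without changing the dispatch code.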

Figure 1.

Flowchart of gait-environment-based exoskeleton control strategy.

Toe-off detection uses IMUs positioned on the subject’s affected thigh and shin. Each IMU is oriented with the positive Y-axis pointing toward the head and the positive Z-axis pointing outward, away from the thigh or shank. Because gait phase detection from thigh and shank IMU signals is sensitive to extraneous noise, the input signals must be corrected before use. To this end, a Kalman filter is implemented in Simulink to process the IMU data collected via the Arduino Uno [43]. The timing of toe-off within the gait cycle is determined from the Z-axis angular velocities of the thigh and shank across various walking environments [44,45]. The Z-axis angular velocity is zero in a stationary state and follows a consistent pattern across gait cycles, irrespective of the walking environment. From the foot-flat phase through the heel-off phase, the Z-axis angular velocity decreases in the negative direction, reaching a minimum just before toe-off. It then increases at the onset of toe-off, peaks during mid-swing, and returns to zero at heel strike. This repetitive pattern allows toe-off initiation to be identified as the point at which the angular velocity starts increasing from a negative value immediately after reaching its minimum. To prevent noise in a stationary state from being misidentified as toe-off, a threshold is set so that only values exceeding twice the maximum absolute angular velocity measured at rest are recognized as part of the gait phase. Dorsiflexion assistance is therefore initiated only once this threshold is exceeded and the signal exhibits the toe-off signature.
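The filtering and toe-off rule above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors’ Simulink implementation: the Kalman filter uses a hypothetical random-walk model with made-up noise variances, and the threshold is applied to the magnitude of the negative minimum, which is one reading of the rule in the text.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter with a random-walk state model.

    The state model and the noise variances q and r are assumptions for
    illustration; the paper does not specify its Kalman filter design.
    """
    q: float = 1e-3  # process noise variance (hypothetical)
    r: float = 1e-1  # measurement noise variance (hypothetical)
    x: float = 0.0   # current estimate of the angular velocity
    p: float = 1.0   # variance of the estimate

    def update(self, z: float) -> float:
        self.p += self.q                # predict: state unchanged, uncertainty grows
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)      # correct the estimate with measurement z
        self.p *= 1.0 - k               # shrink uncertainty after the correction
        return self.x


def rest_threshold(rest_samples) -> float:
    """Twice the maximum absolute angular velocity recorded while standing still."""
    return 2.0 * max(abs(w) for w in rest_samples)


def detect_toe_off(omega_z, threshold: float) -> list:
    """Indices where toe-off onset is detected in a Z-axis angular velocity trace.

    Toe-off is flagged at the first rising sample after a negative local minimum
    whose magnitude exceeds the rest threshold (the magnitude test is an assumption).
    """
    events = []
    for i in range(1, len(omega_z) - 1):
        prev, cur, nxt = omega_z[i - 1], omega_z[i], omega_z[i + 1]
        if cur < prev and nxt > cur and cur < 0 and abs(cur) > threshold:
            events.append(i + 1)
    return events
```

In use, each raw sample would pass through `ScalarKalman.update` before being appended to the trace given to `detect_toe_off`. A real-time version would evaluate the same three-sample test on a sliding buffer, which introduces a one-sample delay because the rise after the minimum must be observed.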

Motor control is performed in position mode through the motor’s integrated electronic speed controller (ESC). The control device communicates with the ESC over the controller area network (CAN) protocol, using an MCP2515 module connected to the Arduino. Once the toe-off characteristics are detected, the drive command is sent from Simulink to the ESC as a CAN frame, and the motor rotates far enough to wind the cable and transmit the appropriate torque at the toe-off phase. The motor angle is calculated by dividing the stroke length required to position the heel or toe by the pulley radius.
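The angle computation follows from the arc-length relation s = rθ for a cable wound on the 61 mm pulley (radius 30.5 mm). A minimal sketch is shown below; the 30 mm stroke used in the example is hypothetical, since the required stroke lengths are not reported.

```python
import math

PULLEY_DIAMETER_MM = 61.0  # from the actuation unit description


def motor_angle_rad(stroke_mm: float, diameter_mm: float = PULLEY_DIAMETER_MM) -> float:
    """Motor angle needed to wind stroke_mm of cable: theta = s / r."""
    radius_mm = diameter_mm / 2.0
    return stroke_mm / radius_mm


# Hypothetical 30 mm stroke to position the toe anchor.
theta = motor_angle_rad(30.0)
print(round(theta, 4), round(math.degrees(theta), 1))  # 0.9836 56.4
```

Because the relation is linear, each millimeter of stroke corresponds to about 1.88° of motor rotation for this pulley.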

3. Environment Recognition System

3.1. Data Acquisition and Class Definition

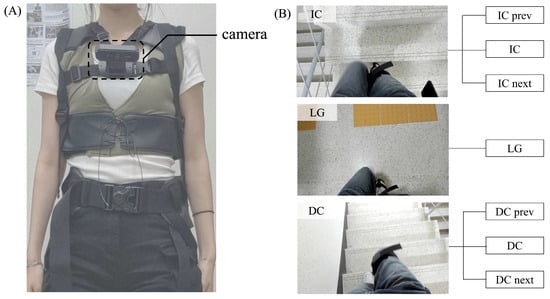

A customized dataset was constructed by recording a single female participant walking at her preferred pace on level ground and stairs while wearing the soft ankle exosuit. Each video frame was captured at a resolution of 960 × 540 pixels. The training set initially consisted of 8000 images; data augmentation (rotation, shear, brightness adjustment, blur, and noise addition) was applied to expand it to 16,000 images. To address class imbalance, undersampling and oversampling were applied to different labels. The final dataset was partitioned into 16,000 training images, 2000 validation images, and 2000 test images, an 8:1:1 ratio. As shown in Figure 2, the dataset was categorized into seven gait environments to improve accuracy during transition phases: level ground, initial incline, incline, final incline, initial decline, decline, and final decline.
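The 8:1:1 partition and the per-class under/oversampling can be sketched as below. This is a minimal illustration, not the authors’ pipeline: `target_per_class` is a hypothetical parameter, and the sketch assumes the split is made before augmentation and balancing so that augmented copies of one image cannot end up in different subsets.

```python
import random
from collections import defaultdict


def split_811(samples, seed=0):
    """Shuffle and split into train/validation/test subsets at an 8:1:1 ratio."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n = len(shuffled)
    n_train, n_val = n * 8 // 10, n // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def balance_classes(samples, target_per_class, seed=0):
    """Undersample classes above target_per_class, oversample classes below it.

    samples is a list of (item, label) pairs; target_per_class is a
    hypothetical choice, not a value reported in the paper.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append((item, label))
    balanced = []
    for label in sorted(by_label):
        group = by_label[label]
        if len(group) >= target_per_class:
            balanced.extend(rng.sample(group, target_per_class))  # undersample
        else:
            extra = rng.choices(group, k=target_per_class - len(group))  # oversample
            balanced.extend(group + extra)
    return balanced
```

With 20,000 samples, `split_811` yields 16,000, 2000, and 2000 items, matching the reported partition sizes.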

Figure 2.

Exoskeleton setup for dataset collection and the acquired gait environment image data: (A) wearable camera system of soft exosuit prototype and (B) RGB images of gait environments categorized by stair incline and decline, including specific walking environment classes and training datasets.

Labels were assigned based on the proportion of the frame occupied by stairs and on the weight acceptance phase of the gait cycle. The weight acceptance phase, in which both feet are in contact with the ground, is a relatively stable double-stance phase with minimal dynamic motion, which facilitates more accurate image classification. The labeling criteria are summarized in Table 1.

Table 1.

Definitions and labeling criteria for the seven classes that distinguished specific gait environments, including the initial and final stages of level ground and stair environments.

3.2. YOLO Model Training

The YOLO algorithm is an object detection framework widely used in computer vision [46]. In contrast to conventional two-stage detectors, which first generate region proposals and then classify them, YOLO adopts a one-stage approach that frames object detection as a regression problem. A single convolutional network predicts multiple bounding boxes and class probabilities concurrently. This requires a simpler pipeline than other object detection systems and enables very fast real-time detection. Furthermore, YOLO processes the whole input image at once, which lets it exploit contextual information and generalize well. In this study, rapid detection of gait environment transitions and the ability to analyze global spatial information were crucial, which made YOLO particularly suitable. The recently released YOLOv8 uses a modified CSPDarknet53 backbone [47], which enhances the model’s performance across diverse scenarios and tasks. YOLOv8 was selected for its lightweight design, low computational requirements, and higher accuracy than previous versions. It differs from earlier YOLO networks in several key respects. It replaces the C3 module with the C2f module, which has slightly fewer parameters while maintaining similar performance. Its backbone replaces 6 × 6 convolutional layers with 3 × 3 convolutional layers, effectively halving the total number of parameters. Furthermore, YOLOv8 removes the objectness branch responsible for calculating confidence scores and implements a decoupled head for regression and classification. This change yields faster processing and higher average precision (AP) than the single-head approach of earlier YOLO models. Additionally, YOLOv8 uses anchor-free detection, predicting object centers directly rather than adjusting anchor boxes to fit object sizes. This reduces the number of bounding box predictions and accelerates the non-maximum suppression (NMS) process.
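To make the NMS step concrete, the following is a generic greedy NMS sketch in Python: boxes are kept in descending score order, and any remaining box whose intersection over union (IoU) with a kept box reaches the threshold is discarded. It illustrates the standard technique only; it is not the Ultralytics implementation, and the 0.5 threshold is an illustrative default.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep
```

The cost of this loop grows with the number of candidate boxes, which is why producing fewer predictions, as the anchor-free head does, speeds up this stage.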

The YOLOv8 model was trained using the PyTorch (Meta Platforms, Inc., Menlo Park, CA, USA) framework on a workstation equipped with an Intel i5-13600KF CPU (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA RTX 3070 GPU (NVIDIA Corporation, Santa Clara, CA, USA). The hyperparameters were selected through tuning. Training was set for 100 epochs but was halted at epoch 92 by early stopping. The batch size was 16, the learning rate was 0.0001, and the Adam optimizer was used to improve training efficiency. On the test dataset, the trained YOLO model achieved an accuracy of 99.3%.

3.3. LSTM-Based Spatio-Temporal Feature Extraction

Gait is time-series data in which characteristics such as the gait environment and joint angles change over time. To classify the gait environment accurately, it is more appropriate to consider both past and future context relative to the current frame than to rely on the current frame alone. Accordingly, an LSTM model, which is designed for sequential time-series data, was selected.

LSTM is a recurrent neural network (RNN) designed to learn long-term dependencies in sequential data; it outperforms traditional RNNs by mitigating the vanishing gradient problem from which they suffer [48]. An LSTM unit consists of a forget gate, an input gate, an output gate, and a memory cell. The gates regulate the flow of information through the cell: the forget gate determines which information from the previous cell state is discarded, the input gate determines how much new information, computed from the current input and the previous hidden state, is added to the cell state, and the output gate determines which information is output from the memory cell. LSTM networks have been applied in domains such as computer vision, text recognition, and natural language processing, and they perform well when analyzing the spatio-temporal features of sequential data.
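For reference, the gate behavior described above corresponds to the standard LSTM update with a forget gate, written here for time step t. The notation is the conventional one rather than the paper’s: σ is the logistic sigmoid, ⊙ is element-wise multiplication, W, U, and b are learned parameters, and in this application x_t would be the eight-feature vector of one time step.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive cell update c_t = f_t ⊙ c_{t-1} + i_t ⊙ c̃_t is what lets gradients flow across many time steps without vanishing when the forget gate stays close to 1.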

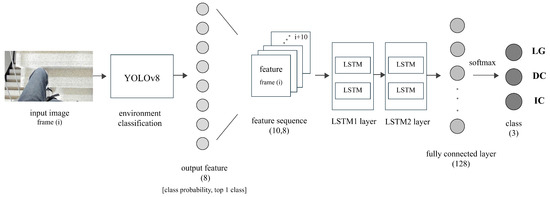

Figure 3 illustrates the overall vision system for the gait environment classification. The YOLO framework performed real-time analysis of each video frame, classifying the environment into distinct environment conditions, such as level ground, early stair, mid stair, and late stair. This environmental classification provided crucial contextual information for the LSTM, facilitating its understanding of transitions across diverse environments. These classification outputs were subsequently organized into sequences that reflected the temporal progression of the gait environment. Each frame within these sequences was annotated with its corresponding environmental classification, thereby preserving temporal features for the LSTM’s analysis. By providing subdivided context regarding the current gait environment through the YOLO output, the LSTM effectively extracted relevant features corresponding to each phase of the stair negotiation, which improved its predictive capability for environment transitions and enhanced the overall classification accuracy between the level ground and stair conditions.

Figure 3.

Overall architecture and model design of the gait environment classification algorithm combining YOLO for environment classification and LSTM for sequence feature extraction.

In this study, the LSTM model was trained on the frame-wise class probabilities and the most probable class output by YOLO. To create the time-series data, frames were extracted from the training videos at 20 frames per second (FPS), and one class label was assigned to every ten frames. The stride was set to five frames, which yields four labels per second. When preparing the LSTM data, a maximum voting technique was first applied to assign the most probable class; in case of a tie, an average voting technique was used, selecting the class with the highest average probability. The LSTM data had the shape (1714, 10, 8), that is, 1714 sequences of ten time steps, each containing eight features. The validation set, split at an 8:1 ratio relative to the training set, contained 209 sequences. The LSTM model was trained for 1000 epochs with a batch size of 32, a learning rate of 0.001, and the Adam optimizer. After training, the model was evaluated on the test dataset using accuracy and other evaluation metrics, and it achieved an accuracy of 98.93%.
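The sequence construction described in this paragraph can be sketched in Python: a ten-frame window moves with a stride of five, each frame contributes eight features (seven class probabilities plus the index of the most probable class), and each window is labeled by majority vote over per-frame predictions, with ties broken by the highest mean probability. This is one plausible reading of the voting procedure, not the authors’ code. The sketch labels windows with the seven YOLO class indices; how those are mapped to the three LSTM target classes is not specified and is left out.

```python
from collections import Counter
from statistics import fmean

WINDOW = 10  # frames per sequence (from the text)
STRIDE = 5   # frames between sequence starts -> 20 FPS / 5 = 4 labels per second


def top_class(probs):
    """Index of the most probable class for one frame."""
    return max(range(len(probs)), key=probs.__getitem__)


def frame_features(probs):
    """Eight features per frame: seven class probabilities plus the top class index."""
    return list(probs) + [top_class(probs)]


def vote_label(window_probs):
    """Majority vote over per-frame top classes; ties go to the highest mean probability."""
    counts = Counter(top_class(p) for p in window_probs)
    best = max(counts.values())
    tied = [c for c, n in counts.items() if n == best]
    if len(tied) == 1:
        return tied[0]
    return max(tied, key=lambda c: fmean(p[c] for p in window_probs))


def make_sequences(frame_probs):
    """Return (X, y): X has shape (n_seq, WINDOW, 8) as nested lists, y one label per window."""
    X, y = [], []
    for start in range(0, len(frame_probs) - WINDOW + 1, STRIDE):
        window = frame_probs[start:start + WINDOW]
        X.append([frame_features(p) for p in window])
        y.append(vote_label(window))
    return X, y
```

The overlap between consecutive windows (five shared frames) is what gives four labels per second while each label still sees half a second of context.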

4. Experiment

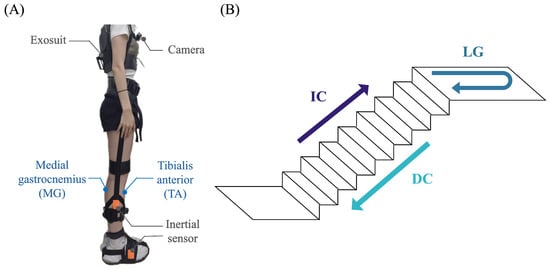

To evaluate the performance of the gait environment classification algorithm in real time, an experimental study was conducted with a female participant (height: 162 cm, weight: 47 kg). The experiments were conducted in an indoor environment within a building, utilizing straight stairs with a tread depth of 28 cm and a rise height of 16 cm. The experimental setup was characterized by uniform lighting conditions, unaffected by sunlight, and featured a tread width of 1.2 m, which ensured that both edges of the staircase were captured by the camera. The procedure involved the participant walking at a constant speed from a starting point on level ground, ascending a staircase consisting of eight steps, traversing a circular path on the level ground, and subsequently descending the same staircase to return to the initial starting point. The experimental protocol for this study is illustrated in Figure 4.

Figure 4.

Experimental protocol for evaluating the exosuit performance across various walking environments, including (A) the experimental prototype that consisted of an exosuit, camera, and inertial sensor. The blue lines represent the TA and MG muscles for measuring the dorsiflexion and plantarflexion muscle activity, respectively. (B) The participant performed stair ascent, stair descent, and level ground gaits in both stair and level ground experimental environments.

To assess the performance of the soft ankle exosuit, the experiment was conducted under three distinct conditions: (1) without the exosuit (no exo), (2) the exosuit worn but unpowered (exo off), and (3) the exosuit worn and powered (exo on). Prior to the experiment, the participant underwent a 10-minute familiarization process with the exosuit to adapt to its movement. Additionally, a 20-minute rest interval was implemented between each experimental condition to prevent any residual effects from impacting subsequent conditions.

For kinematic analysis, an inertial motion capture system, the Xsens MTw Awinda System (Xsens Technologies BV, Enschede, The Netherlands), was used. Its sensors were attached to the participant’s foot and mid-shank to measure the ankle joint angles in the sagittal plane, and the data were collected using Xsens Analyze software (version 2022.2, Xsens Technologies B.V., Enschede, Netherlands). Surface electromyography (sEMG) sensors (LAXTHA Inc., Daejeon, Republic of Korea) were used to measure muscle activation during gait in each experimental condition. Surface electrodes were attached to the tibialis anterior (TA) and medial gastrocnemius (MG) muscles following the guidelines of the Surface Electromyography for Non-Invasive Assessment of Muscles (SENIAM) protocol. The maximum voluntary contraction (MVC) of each lower limb muscle was measured before the experiment [49,50]. EMG data were collected using Matlab (R2023a, MathWorks Inc., Natick, MA, USA, Win10) and normalized to the pre-measured MVC values. The raw EMG signals were low-pass filtered using a fourth-order Butterworth filter with a cutoff frequency of 6 Hz [51]. Gait cycles were segmented from 0% to 100% based on the heel-off event of the left foot, and gait data were analyzed for each experimental condition and gait environment. To ensure reliable analysis, the first and last steps of each trial, which were unstable and did not reflect the typical gait pattern, were excluded.
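The post-processing steps above (MVC normalization, segmentation at heel-off events, exclusion of the first and last cycles, and time normalization to 0–100% of the gait cycle) can be sketched in plain Python. This assumes the signal has already been filtered and that heel-off sample indices are known. Linear resampling to 101 points is a common convention assumed here, and treating the excluded "steps" as whole cycles is an interpretation.

```python
def normalize_to_mvc(envelope, mvc):
    """Express a filtered EMG envelope as a percentage of the muscle's MVC value."""
    if mvc <= 0:
        raise ValueError("MVC must be positive")
    return [100.0 * v / mvc for v in envelope]


def segment_cycles(signal, event_idx):
    """Cut a trial into cycles between consecutive gait events (e.g. heel-off),
    then drop the first and last cycles, mirroring the exclusion rule in the text."""
    cycles = [signal[a:b] for a, b in zip(event_idx[:-1], event_idx[1:])]
    return cycles[1:-1]


def time_normalize(cycle, n_points=101):
    """Linearly resample one cycle onto 0%, 1%, ..., 100% of the gait cycle."""
    m = len(cycle)
    if m < 2:
        raise ValueError("a cycle needs at least two samples")
    out = []
    for k in range(n_points):
        pos = k * (m - 1) / (n_points - 1)  # fractional sample index
        i = min(int(pos), m - 2)
        frac = pos - i
        out.append(cycle[i] * (1.0 - frac) + cycle[i + 1] * frac)
    return out
```

Resampling every cycle to the same number of points is what allows cycles of different durations to be averaged point by point, as in the condition-wise mean curves.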

5. Results

5.1. Real-Time Environment Classification Performance

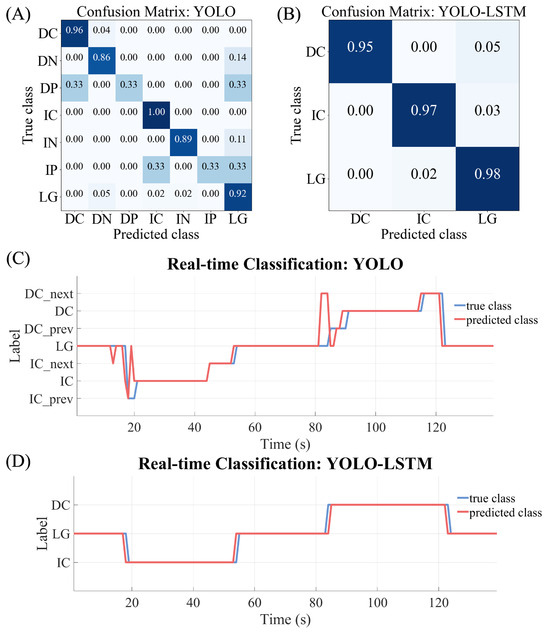

The real-time classification performance of the vision system was evaluated by classifying the gait environment through the camera mounted on the exosuit in the exo on condition. Performance evaluation metrics are critical for assessing the effectiveness of a classification system; they categorize predictions into true positive (TP), false positive (FP), false negative (FN), and true negative (TN) outcomes. The confusion matrices for gait environment classification by the YOLO system and the YOLO-LSTM system, normalized to the range 0 to 1, are illustrated in the upper part of Figure 5. Additionally, Table 2 lists the precision, recall, F1 score, and accuracy for each environment. Precision measures the proportion of true positives among all positive predictions, indicating how reliable the model’s positive predictions are, and is defined as

$$\text{Precision} = \frac{TP}{TP + FP} \tag{4}$$

Figure 5.

Evaluation metrics for the real-time gait environment classification vision system. (A) Confusion matrix for the seven gait environment classes classified by the YOLO model. (B) Confusion matrix for the three gait environment classes classified by the YOLO-LSTM vision system. (C) YOLO-based environment classification results over time, used to assess transition timing during real-time application. (D) Integrated vision-system-based environment classification results over time.

Table 2.

Environment classification performance.

Recall measures the proportion of actual positive instances that the model correctly identifies, reflecting its ability to detect positive instances, and is defined as

$$\text{Recall} = \frac{TP}{TP + FN} \tag{5}$$

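The F1 score provides a balanced evaluation by combining precision and recall, as given by Equation (6). Accuracy measures the overall correctness of the model, as defined in Equation (7), and provides a general overview of performance.

$$F1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{6}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}$$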
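Precision, recall, F1 score, and per-class accuracy can all be derived from a multi-class confusion matrix by treating each class one-vs-rest. The following is a minimal sketch, assuming rows are true classes and columns are predicted classes and that raw counts (not normalized values) are supplied.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix of counts.

    cm[i][j] is the number of samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(map(sum, cm))
    results = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c but truly another class
        fn = sum(cm[c]) - tp                       # truly c but predicted another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "accuracy": (tp + tn) / total})
    return results
```

For a class with one true positive, no false positives, and two false negatives, this gives a precision of 1, a recall of 0.333, and an F1 score of 0.500, the same pattern of imbalance reported for the YOLO-only system.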

The real-time evaluation of the YOLO system revealed instances of misclassification, and its overall F1 score was below 0.96. In particular, accuracy was markedly lower during the initial transition from level ground to a stair environment. For two of the classes, the precision was 1 while the recall was only 0.333, a pronounced imbalance that resulted in an F1 score of 0.500. In another class, the precision, recall, and F1 score were 0.600, 0.857, and 0.706, respectively, indicating relatively low performance.

Conversely, the confusion matrix for the YOLO-LSTM system showed high accuracy for the incline and decline environments, with fewer misclassifications. This vision system improved the precision, recall, F1 score, and accuracy for the level ground, stair ascent, and stair descent classes relative to YOLO alone. In particular, the overall F1 score exceeded 0.96, indicating balanced classification performance across classes.

As shown in Figure 5, the gait environment class labels were analyzed over time to evaluate the timing of environment transitions during real-time classification. The YOLO results indicate that, during the transition from level ground to stairs, the system misclassified level ground as stairs 1.5 s (6 frames) before stair entry. In contrast, the YOLO-LSTM vision system distinguished environmental changes rapidly and with appropriate timing during the transition phases. It identified the initial entry onto the stairs in the descent phase with a delay of one frame (0.25 s). Conversely, it detected the stair environment one frame early during the ascent and when switching from the stairs to level ground during the descent. The system also correctly identified the environment throughout stair ascent and descent, with no misclassification between level ground and stair gait.
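The early and late detections reported above can be quantified by comparing the first switch into a target class in the predicted and reference label sequences. In the minimal sketch below, one "frame" is one label step at the four-labels-per-second rate from Section 3.3, so 6 steps correspond to 1.5 s. The function names and the use of a reference label sequence are assumptions for illustration.

```python
LABEL_RATE_HZ = 4.0  # 20 FPS sampled with a stride of 5 frames -> 4 labels per second


def first_switch(labels, to_class):
    """Index of the first label equal to to_class whose predecessor differs, or None."""
    for i in range(1, len(labels)):
        if labels[i] == to_class and labels[i - 1] != to_class:
            return i
    return None


def transition_offset(true_labels, pred_labels, to_class, rate_hz=LABEL_RATE_HZ):
    """(offset in label steps, offset in seconds); negative = early, positive = late."""
    t = first_switch(true_labels, to_class)
    p = first_switch(pred_labels, to_class)
    if t is None or p is None:
        return None
    steps = p - t
    return steps, steps / rate_hz
```

A one-step offset in either direction corresponds to the 0.25 s resolution of the labeling scheme, which bounds how finely transition timing can be resolved.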

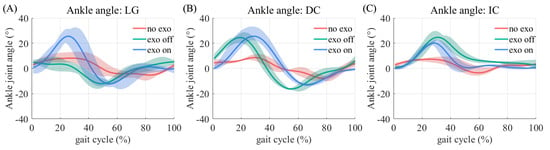

5.2. Joint Kinematics

The average ankle joint angles for each experimental condition are illustrated in Figure 6. On level ground, the peak ankle dorsiflexion angles were (mean ± SD) in the no exo condition, in the exo off condition, and in the exo on condition, indicating a slight reduction in dorsiflexion in the exo off condition and a substantial increase in the exo on condition relative to the no exo condition. In the stair incline environment, the peak ankle dorsiflexion angles were under the no exo condition, under the exo off condition, and under the exo on condition. In the stair decline environment, the peak dorsiflexion angles were under the no exo condition, under the exo off condition, and under the exo on condition, indicating an increase in peak dorsiflexion when the exosuit was worn. In both stair environments, the peak ankle dorsiflexion angles were similar between the exo off and exo on conditions.
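Curves such as those in Figure 6 are commonly produced by time-normalizing each stride to 0–100% of the gait cycle and averaging across strides, while the peak dorsiflexion is the maximum positive angle within each stride, summarized as mean ± SD. The Python sketch below illustrates that procedure; the 101-point normalization, linear interpolation, and toy stride data are assumptions, not the authors’ documented processing pipeline.

```python
import statistics
from typing import List, Sequence, Tuple


def normalize_cycle(samples: Sequence[float], n_points: int = 101) -> List[float]:
    """Linearly resample one stride (heel strike to heel strike) onto 0..100% of the gait cycle."""
    if len(samples) < 2 or n_points < 2:
        raise ValueError("need at least two samples and two output points")
    last = len(samples) - 1
    out = []
    for k in range(n_points):
        x = k * last / (n_points - 1)  # fractional index into the original stride
        i = min(int(x), last - 1)
        frac = x - i
        out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
    return out


def mean_curve(strides: Sequence[Sequence[float]], n_points: int = 101) -> List[float]:
    """Average ankle-angle curve across strides after time normalization."""
    normalized = [normalize_cycle(s, n_points) for s in strides]
    return [statistics.mean(column) for column in zip(*normalized)]


def peak_dorsiflexion(strides: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Mean and sample SD of the per-stride maximum ankle angle (positive = dorsiflexion).

    Peaks are taken from the raw samples, because resampling can clip a peak
    that falls between grid points.
    """
    peaks = [max(s) for s in strides]
    return statistics.mean(peaks), statistics.stdev(peaks)


# Toy strides of unequal length (degrees).
strides = [[-5.0, 3.0, 10.0, 2.0, -8.0],
           [-4.0, 5.0, 12.0, 6.0, 0.0, -6.0],
           [-6.0, 4.0, 11.0, -7.0]]
mean_peak, sd_peak = peak_dorsiflexion(strides)
print(f"peak dorsiflexion: {mean_peak:.1f} ± {sd_peak:.1f} deg")  # 11.0 ± 1.0 deg
```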

Figure 6.

Ankle joint angle over the gait cycle for each gait environment. Positive values indicate dorsiflexion. The red line represents the no exo condition, the green line the exo off condition, and the blue line the exo on condition. Ankle angle (A) in the level ground environment, (B) during stair ascent, and (C) during stair descent.

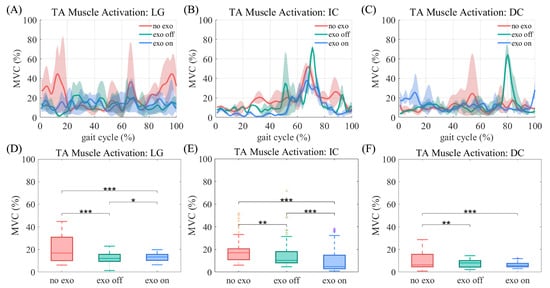

5.3. Muscle Reduction

Reduced muscle activation was observed in both the TA and MG muscles in the exo on condition. Paired t-tests were performed on the EMG signals recorded under the three conditions (no exo, exo off, and exo on) to evaluate the effect of the exosuit on muscle activation during the gait cycle. The TA muscle activation for each gait environment is presented in Figure 7. On level ground, the average TA activation was 20.63 ± 11.56%, 12.77 ± 5.02%, and 13.22 ± 3.43% for the no exo, exo off, and exo on conditions, respectively. The paired t-tests revealed significant differences for all condition pairs: no exo vs. exo off (p < 0.001, 95% CI = 6.08, 11.05), no exo vs. exo on (p < 0.001, 95% CI = −9.79, −5.03), and exo off vs. exo on (p = 0.04, 95% CI = −2.34, 0.03). Relative to the no exo condition, activation decreased by similar amounts: 7.86 ± 13.98% in the exo off condition and 7.41 ± 11.89% in the exo on condition.
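Each comparison above reports a p-value together with a 95% confidence interval (CI) of the paired difference. The stdlib-only Python sketch below shows how such values follow from paired samples, using the standard identity between Student’s t distribution and the regularized incomplete beta function, evaluated with the Numerical Recipes continued fraction. Pairing by stride index and the toy numbers are assumptions for illustration; in practice a library routine such as SciPy’s `scipy.stats.ttest_rel` would normally be used.

```python
import math
import statistics
from typing import Sequence, Tuple


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 1e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    delta = 1.0
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:  # converged after the odd step
            break
    return h


def _reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b  # symmetry for faster convergence


def t_two_sided_p(t: float, df: float) -> float:
    """P(|T| >= |t|) for Student's t with df degrees of freedom."""
    return _reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def t_critical(df: float, alpha: float = 0.05) -> float:
    """Two-sided critical value t* with P(|T| >= t*) = alpha, found by bisection."""
    lo, hi = 0.0, 1e3
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if t_two_sided_p(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def paired_t_test(a: Sequence[float], b: Sequence[float],
                  alpha: float = 0.05) -> Tuple[float, float, float, Tuple[float, float]]:
    """Paired t-test on d = a - b: returns (mean_d, t, two-sided p, (1 - alpha) CI of mean_d)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    if se == 0:
        raise ValueError("differences have zero variance")
    t = mean_d / se
    half_width = t_critical(n - 1, alpha) * se
    return mean_d, t, t_two_sided_p(t, n - 1), (mean_d - half_width, mean_d + half_width)


# Toy TA activation values (%) for two conditions, paired by stride index.
no_exo = [20.0, 22.0, 19.0, 24.0, 21.0]
exo_on = [13.0, 12.0, 14.0, 13.0, 12.0]
print(paired_t_test(no_exo, exo_on))
print(paired_t_test(exo_on, no_exo))  # same p-value, CI with flipped sign
```

Because the CI describes the mean of a − b, swapping the order of the two conditions flips its sign; the sign of a reported CI therefore depends on which condition is subtracted from which.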

Figure 7.

TA muscle activation across the gait cycle for each gait environment. The red line indicates the no exo condition, the green line the exo off condition, and the blue line the exo on condition. TA muscle activation in the (A) level ground, (B) stair incline, and (C) stair decline environments. Range of TA muscle activation in the (D) level ground, (E) stair incline, and (F) stair decline environments. Asterisks denote statistically significant differences between conditions: * p < 0.05, ** p < 0.01, *** p < 0.001.

In the stair incline environment, TA muscle activation was significantly reduced for all condition pairs: no exo vs. exo off (p = 0.002, 95% CI = 0.02, 6.40), no exo vs. exo on (p < 0.001, 95% CI = 2.23, 8.66), and exo off vs. exo on (p < 0.001, 95% CI = −11.45, −5.86). Specifically, relative to the no exo condition (18.44 ± 9.92%), activation decreased by 3.21 ± 9.92% in the exo off condition (15.24 ± 12.77%) and by 8.65 ± 7.07% in the exo on condition (9.79 ± 10.12%).

In the stair decline environment, the TA muscle activation showed similar values across the no exo (10.75 ± 4.25%), exo off (12.34 ± 10.93%), and exo on (12.09 ± 4.81%) conditions, with slight increases of 1.59 ± 11.19% and 1.34 ± 7.10% in the exo off and exo on conditions, respectively, compared with the no exo condition (p = 0.007, 95% CI = −5.06, −0.62; p < 0.001, 95% CI = 1.81, 3.98). However, no statistically significant differences were observed between the exo off and exo on conditions (p = 0.97, 95% CI = −2.39, 2.28).

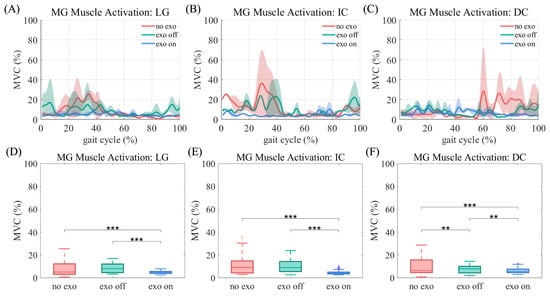

For the MG muscle, which is active during plantarflexion, a significant reduction was observed on level ground: activation in the exo on condition decreased by 3.01 ± 6.16% compared with the no exo condition (p < 0.001, 95% CI = −4.33, −1.68) and by 3.64 ± 4.27% compared with the exo off condition (p < 0.001, 95% CI = 2.81, 4.47), as illustrated in Figure 8 (no exo: 7.88 ± 6.61%, exo off: 8.51 ± 4.04%, exo on: 4.87 ± 1.21%). No statistically significant difference was observed between the no exo and exo off conditions (p = 0.31, 95% CI = −2.16, 0.90).

Figure 8.

MG muscle activation throughout the gait cycle for each gait environment. The red line represents the no exo condition, the green line the exo off condition, and the blue line the exo on condition. MG muscle activation in the (A) level ground, (B) stair incline, and (C) stair decline environments. Range of MG muscle activation in the (D) level ground, (E) stair incline, and (F) stair decline environments. Asterisks denote statistically significant differences between conditions: * p < 0.05, ** p < 0.01, *** p < 0.001.

Similarly, in the stair incline environment, MG muscle activation in the exo on condition decreased by 7.01 ± 9.70% compared with the no exo condition (p < 0.001, 95% CI = −8.79, −5.24) and by 5.96 ± 6.80% compared with the exo off condition (p < 0.001, 95% CI = −4.73, 7.19) (no exo: 11.71 ± 8.83%, exo off: 10.66 ± 5.99%, exo on: 4.70 ± 1.69%). The reduction between the no exo and exo off conditions was not statistically significant (p = 0.15, 95% CI = −1.05, 3.16).

In the stair decline environment, MG muscle activation decreased by 2.35 ± 6.49% and 3.57 ± 4.51% in the exo off and exo on conditions, respectively, relative to the no exo condition (no exo: 9.97 ± 7.35%, exo off: 7.62 ± 3.41%, exo on: 6.41 ± 2.19%). These differences were statistically significant for all condition pairs: no exo vs. exo off (p = 0.006, 95% CI = 0.75, 3.95), no exo vs. exo on (p < 0.001, 95% CI = −5.08, −2.06), and exo off vs. exo on (p = 0.04, 95% CI = −2.34, 0.03).

6. Discussion

The vision system for the ankle exoskeleton developed in this study combines YOLO’s object classification with the LSTM’s temporal pattern recognition to respond rapidly to environmental changes. Compared with YOLO alone, the system improved precision, recall, F1 score, and accuracy, and substantially improved the timing of real-time environment transitions. During the initial transition from level ground to a stair environment, YOLO alone showed high precision but notably low recall, indicating that it missed a substantial portion of the actual instances of these classes and performed in an imbalanced manner. In contrast, the low precision for the class indicates that, although the system detected most actual instances, it also produced a substantial number of false positives.

The YOLO-LSTM vision system offered substantial improvements over YOLO alone, particularly in the decline environment. The improved precision, recall, and F1 score for each class support the effectiveness of the YOLO-LSTM approach in handling the complexities of real-world gait analysis. Overall, the system identified actual instances effectively while minimizing misclassification, reflecting reliable and robust performance. A previous study reported accuracies of 97.5%, 85.5%, and 91.1% for level ground, stair incline, and stair decline environments, respectively [36]; in this study, the corresponding accuracies were 98.4%, 97.2%, and 95.0%. This suggests that our method achieves high accuracy across terrains, which is important for real-world applications. These improvements may be attributed to combining frame-wise YOLO classification with LSTM-based modeling of temporal context.

Although the system detected environmental changes in the transition phase with a timing variation of ±1 frame (±0.25 s), this small temporal discrepancy did not noticeably affect the overall exosuit performance. In particular, when the YOLO model misclassified a frame, the LSTM model compensated by using the preceding context and patterns in the sequence data to make accurate predictions. This capability underscores the role of spatio-temporal feature extraction in adaptive exoskeletons, enabling rapid and accurate responses to dynamic environmental changes and thereby enhancing system adaptability and real-time performance.
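The compensation mechanism described above can be illustrated with a minimal LSTM forward pass over a sliding window of per-frame YOLO outputs. In the Python sketch below, the input representation (one class-probability vector per frame), the window length, the hidden size, and the class names are all assumptions, and the weights are random rather than trained; it shows only how the cell state carries context from earlier frames into the final prediction, not the authors’ network.

```python
import math
import random
from typing import List, Sequence


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTMClassifier:
    """Single-layer LSTM followed by a softmax layer; weights are random (untrained)."""

    def __init__(self, n_in: int, n_hidden: int, n_classes: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def rand(rows: int, cols: int) -> List[List[float]]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.n_hidden = n_hidden
        # Input (i), forget (f), candidate (g), and output (o) gates, each acting on [x_t, h_{t-1}].
        self.W = {gate: rand(n_hidden, n_in + n_hidden) for gate in "ifgo"}
        self.b = {gate: [0.0] * n_hidden for gate in "ifgo"}
        self.W_out = rand(n_classes, n_hidden)
        self.b_out = [0.0] * n_classes

    @staticmethod
    def _affine(W: List[List[float]], b: List[float], z: Sequence[float]) -> List[float]:
        return [sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(W, b)]

    def predict_proba(self, window: Sequence[Sequence[float]]) -> List[float]:
        """Run the frames oldest-first and return class probabilities for the latest frame."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x_t in window:
            z = list(x_t) + h  # concatenate current input with previous hidden state
            i = [_sigmoid(v) for v in self._affine(self.W["i"], self.b["i"], z)]
            f = [_sigmoid(v) for v in self._affine(self.W["f"], self.b["f"], z)]
            g = [math.tanh(v) for v in self._affine(self.W["g"], self.b["g"], z)]
            o = [_sigmoid(v) for v in self._affine(self.W["o"], self.b["o"], z)]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]  # cell state keeps context
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        logits = self._affine(self.W_out, self.b_out, h)
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]  # numerically stable softmax
        total = sum(exps)
        return [e / total for e in exps]


# Hypothetical 8-frame window of YOLO probabilities for [level, stair_ascent, stair_descent];
# frame 5 is an isolated misclassification.
window = [[0.9, 0.05, 0.05]] * 4 + [[0.1, 0.8, 0.1]] + [[0.85, 0.1, 0.05]] * 3
model = TinyLSTMClassifier(n_in=3, n_hidden=8, n_classes=3)
print(model.predict_proba(window))
```

Untrained, the output is arbitrary; after training on labeled windows, the forget gate can let the cell state retain evidence from the preceding frames, so a single inconsistent YOLO frame need not flip the final prediction.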

The analysis of the ankle joint angles revealed a substantial increase in the ankle dorsiflexion angle in the exo on condition on level ground. An increased dorsiflexion angle can alleviate the symptoms of foot drop in stroke patients and improve foot clearance during gait. In the stair environments, the ankle angle showed notable improvements in the exo off and exo on conditions compared with the no exo condition, and similar ankle angles were observed whether the exosuit was unpowered or powered. During stair ascent, the powered exosuit reduced TA and MG muscle activation by 5.44% and 5.95%, respectively, compared with the unpowered condition. This reduction indicates that the exosuit effectively supports ankle movement during stair climbing, lowering muscle activation while maintaining comparable ankle angles. In contrast, during stair descent, muscle activation was similar regardless of whether the exosuit was powered. The assistance pattern applied during stair descent may not directly influence muscle activation: because gravity assists the downward motion of the body during stair descent, the required muscle activation may already be low without the exosuit. Consequently, despite the additional assistance provided by the exosuit, TA activation did not differ significantly between the exo off and exo on conditions during stair descent (p = 0.97). Similarly, MG activation did not differ significantly between the no exo and exo off conditions on level ground (p = 0.31) or during stair ascent (p = 0.15). These results may be attributed to experimental constraints, as this study was conducted under limited environmental conditions with a single participant, which may have reduced the statistical power of certain comparisons. However, TA activation was significantly lower in the exo on condition than in the no exo condition on level ground and during stair ascent (both p < 0.001), indicating a reliable reduction in muscle activation due to the exosuit. Moreover, MG activation differed significantly between the exo on and exo off conditions in all three environments, which further supports the performance of the exosuit.

Although the YOLO-LSTM system demonstrated improved classification accuracy and response time, future work could explore more advanced deep learning models or hybrid approaches to further refine the vision system’s ability to predict complex environmental transitions, especially in highly dynamic environments. Training the model on a more diverse dataset that includes various terrains, lighting conditions, and user profiles would also enhance the system’s robustness and help ensure consistent performance across a wide range of real-world scenarios. Furthermore, future work should refine the system’s ability to detect gait phases in real time, enabling more precise, context-specific control of the exosuit. Finally, future studies should include a diverse cohort of participants, particularly stroke patients with foot drop and varying levels of stroke severity and motor capability, to validate the effectiveness of the exosuit in real-world scenarios.

7. Conclusions

This paper proposes a vision-based intelligent gait environment detection algorithm for an ankle exosuit aimed at stroke patients. By integrating YOLO’s object classification capabilities with an LSTM for spatio-temporal pattern recognition, the proposed system substantially improves environmental recognition and response timing. The analysis indicated that the exosuit substantially increased the ankle dorsiflexion angle during level ground walking, which can mitigate foot drop symptoms and enhance foot clearance for stroke patients. During stair ascent, the exosuit reduced TA and MG muscle activation while maintaining similar ankle angles, demonstrating its capacity to support ankle movement. Overall, the findings highlight the critical role of spatio-temporal feature extraction in enhancing the performance of adaptive exoskeletons. The integration of YOLO and LSTM in the vision system provides robust environmental recognition and responsiveness, which can contribute to improved gait stability and safety for individuals with foot drop. Future work should validate these results in diverse real-world scenarios and with multiple participants, particularly stroke patients, to further establish the exosuit’s effectiveness and generalizability.

This approach establishes a framework for future developments in robotic rehabilitation technologies, emphasizing the importance of real-time environmental awareness in improving user outcomes. It advances the understanding of adaptive exoskeleton design through the integration of YOLO’s object classification capabilities with LSTM’s temporal pattern recognition. Furthermore, by demonstrating the effects of the exosuit on ankle dorsiflexion and muscle activation during various gait scenarios, this work provides new insights into advancing robotic interventions for stroke patients, thereby informing future designs and applications in therapeutic robotics.

Author Contributions

Conceptualization, G.Y. and B.B.K.; methodology, G.Y.; software, G.Y. and J.H.; validation, G.Y. and J.H.; investigation, G.Y.; resources, G.Y.; data curation, G.Y.; writing—original draft preparation, G.Y. and J.H.; writing—review and editing, G.Y. and B.B.K.; visualization, G.Y.; supervision, B.B.K.; project administration, B.B.K.; funding acquisition, B.B.K. All authors read and agreed to the published version of this manuscript.

Funding

This study was supported by the Translational Research Program for Rehabilitation Robots (NRCTR-EX22008), National Rehabilitation Center, Ministry of Health and Welfare, Republic of Korea.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Winstein, C.J.; Stein, J.; Arena, R.; Bates, B.; Cherney, L.R.; Cramer, S.C.; Deruyter, F.; Eng, J.J.; Fisher, B.; Harvey, R.L.; et al. Guidelines for Adult Stroke Rehabilitation and Recovery. Stroke 2016, 47, e98–e169.

- Perry, J.; Garrett, M.; Gronley, J.; Mulroy, S. Classification of Walking Handicap in the Stroke Population. Stroke 1995, 26, 982–989.

- Bohannon, R. Muscle strength and muscle training after stroke. J. Rehabil. Med. 2007, 39, 14–20.

- Woolley, S.M. Characteristics of gait in hemiplegia. Top. Stroke Rehabil. 2001, 7, 1–18.

- Olney, S.J.; Richards, C. Hemiparetic gait following stroke. Part I: Characteristics. Gait Posture 1996, 4, 136–148.

- Balaban, B.; Tok, F. Gait Disturbances in Patients With Stroke. PM & R 2014, 6, 635–642.

- Ramsay, J.W.; Wessel, M.A.; Buchanan, T.S.; Higginson, J.S. Poststroke muscle architectural parameters of the tibialis anterior and the potential implications for rehabilitation of foot drop. Stroke Res. Treat. 2014, 2014, 948475.

- Moseley, A.; Wales, A.; Herbert, R.; Schurr, K.; Moore, S. Observation and analysis of hemiplegic gait: Stance phase. Aust. J. Physiother. 1993, 39, 259–267.

- Chen, G.; Patten, C.; Kothari, D.H.; Zajac, F.E. Gait differences between individuals with post-stroke hemiparesis and non-disabled controls at matched speeds. Gait Posture 2005, 22, 51–56.

- Li, S.; Francisco, G.E.; Zhou, P. Post-stroke hemiplegic gait: New perspective and insights. Front. Physiol. 2018, 9, 1021.

- Moosabhoy, M.A.; Gard, S.A. Methodology for determining the sensitivity of swing leg toe clearance and leg length to swing leg joint angles during gait. Gait Posture 2006, 24, 493–501.

- Roche, N.; Bonnyaud, C.; Geiger, M.; Bussel, B.; Bensmail, D. Relationship between hip flexion and ankle dorsiflexion during swing phase in chronic stroke patients. Clin. Biomech. 2015, 30, 219–225.

- Agha, S.; Levine, I.C.; Novak, A.C. Determining the effect of stair nosing shape on foot trajectory during stair ambulation in healthy and post-stroke individuals. Appl. Ergon. 2021, 91, 103304.

- Andriacchi, T.; Andersson, G.; Fermier, R.; Stern, D.; Galante, J. A study of lower-limb mechanics during stair-climbing. JBJS 1980, 62, 749–757.

- Jacobs, J.V. A review of stairway falls and stair negotiation: Lessons learned and future needs to reduce injury. Gait Posture 2016, 49, 159–167.

- Novak, A.C.; Brouwer, B. Strength and aerobic requirements during stair ambulation in persons with chronic stroke and healthy adults. Arch. Phys. Med. Rehabil. 2012, 93, 683–689.

- Hsu, S.H.; Changcheng, C.; Lee, H.J.; Chen, C.T. Design and implementation of a robotic hip exoskeleton for gait rehabilitation. Actuators 2021, 10, 212.

- Lee, H.D.; Park, H.; Hong, D.H.; Kang, T.H. Development of a series elastic tendon actuator (SETA) based on gait analysis for a knee assistive exosuit. Actuators 2022, 11, 166.

- Bishe, S.S.P.A.; Nguyen, T.; Fang, Y.; Lerner, Z.F. Adaptive ankle exoskeleton control: Validation across diverse walking conditions. IEEE Trans. Med. Robot. Bionics 2021, 3, 801–812.

- Xu, Y.; Li, W.; Chen, C.; Chen, S.; Wang, Z.; Yang, F.; Liu, Y.; Wu, X. A Portable Soft Exosuit to Assist Stair Climbing with Hip Flexion. Electronics 2023, 12, 2467.

- Fang, Y.; Orekhov, G.; Lerner, Z.F. Improving the energy cost of incline walking and stair ascent with ankle exoskeleton assistance in cerebral palsy. IEEE Trans. Biomed. Eng. 2021, 69, 2143–2152.

- Lee, H.D.; Park, H.; Seongho, B.; Kang, T.H. Development of a soft exosuit system for walking assistance during stair ascent and descent. Int. J. Control. Autom. Syst. 2020, 18, 2678–2686.

- Han, Y.C.; Wong, K.I.; Murray, I. Gait phase detection for normal and abnormal gaits using IMU. IEEE Sens. J. 2019, 19, 3439–3448.

- Pappas, I.P.; Popovic, M.R.; Keller, T.; Dietz, V.; Morari, M. A reliable gait phase detection system. IEEE Trans. Neural Syst. Rehabil. Eng. 2001, 9, 113–125.

- Liu, D.X.; Xu, J.; Chen, C.; Long, X.; Tao, D.; Wu, X. Vision-assisted autonomous lower-limb exoskeleton robot. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 3759–3770.

- Yang, F.; Chen, C.; Wang, Z.; Chen, H.; Liu, Y.; Li, G.; Wu, X. ViT-based terrain recognition system for wearable soft exosuit. Biomim. Intell. Robot. 2023, 3, 100087.

- Templer, J. The Staircase: Studies of Hazards, Falls, and Safer Design; MIT Press: Cambridge, MA, USA, 1995; Volume 2.

- Coleman, A.L.; Stone, K.; Ewing, S.K.; Nevitt, M.; Cummings, S.; Cauley, J.A.; Ensrud, K.E.; Harris, E.L.; Hochberg, M.C.; Mangione, C.M. Higher risk of multiple falls among elderly women who lose visual acuity. Ophthalmology 2004, 111, 857–862.

- Coser, O.; Tamantini, C.; Soda, P.; Zollo, L. AI-based methodologies for exoskeleton-assisted rehabilitation of the lower limb: A review. Front. Robot. AI 2024, 11, 1341580.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.

- Zhang, C.; Chen, J.; Li, J.; Peng, Y.; Mao, Z. Large language models for human-robot interaction: A review. Biomim. Intell. Robot. 2023, 3, 100131.

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold networks. arXiv 2024, arXiv:2404.19756.

- Cheon, M. Demonstrating the efficacy of Kolmogorov-Arnold networks in vision tasks. arXiv 2024, arXiv:2406.14916.

- Sohan, M.; Sai Ram, T.; Reddy, R.; Venkata, C. A review on YOLOv8 and its advancements. In Data Intelligence and Cognitive Informatics; Springer: Berlin/Heidelberg, Germany, 2024; pp. 529–545.

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

- Tricomi, E.; Mossini, M.; Missiroli, F.; Lotti, N.; Zhang, X.; Xiloyannis, M.; Roveda, L.; Masia, L. Environment-based assistance modulation for a hip exosuit via computer vision. IEEE Robot. Autom. Lett. 2023, 8, 2550–2557.

- Ogihara, H.; Tsushima, E.; Kamo, T.; Sato, T.; Matsushima, A.; Niioka, Y.; Asahi, R.; Azami, M. Kinematic gait asymmetry assessment using joint angle data in patients with chronic stroke—A normalized cross-correlation approach. Gait Posture 2020, 80, 168–173.

- Archea, J.; Collins, B.L.; Stahl, F.I. Guidelines for Stair Safety; The Bureau: Washington, DC, USA, 1979; Number 120.

- Inman, V.T.; Eberhart, H.D. The major determinants in normal and pathological gait. JBJS 1953, 35, 543–558.

- Shorter, K.A.; Li, Y.; Bretl, T.; Hsiao-Wecksler, E.T. Modeling, control, and analysis of a robotic assist device. Mechatronics 2012, 22, 1067–1077.

- Whittle, M.W. Gait Analysis: An Introduction; Butterworth-Heinemann: Oxford, UK, 2014.

- Karekla, X.; Tyler, N. Maintaining balance on a moving bus: The importance of three-peak steps whilst climbing stairs. Transp. Res. Part A Policy Pract. 2018, 116, 339–349.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; ACM: New York, NY, USA, 1995.

- Winter, D.A. Biomechanics and Motor Control of Human Movement; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Zeng, H.; Zhao, Y. Sensing movement: Microsensors for body motion measurement. Sensors 2011, 11, 638–660.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

- Allison, G.; Marshall, R.; Singer, K. EMG signal amplitude normalization technique in stretch-shortening cycle movements. J. Electromyogr. Kinesiol. 1993, 3, 236–244.

- Burden, A. How should we normalize electromyograms obtained from healthy participants? What we have learned from over 25 years of research. J. Electromyogr. Kinesiol. 2010, 20, 1023–1035.

- Mello, R.G.; Oliveira, L.F.; Nadal, J. Digital Butterworth filter for subtracting noise from low magnitude surface electromyogram. Comput. Methods Programs Biomed. 2007, 87, 28–35.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).