1. Introduction

In today’s large-scale production environments, equipment often operates under high-load conditions, which accelerates the wear of critical components, significantly increases the risk of failure, and can lead to severe economic losses and unplanned downtime. Traditional maintenance strategies, such as corrective maintenance, time-based maintenance, and condition-based maintenance, are no longer sufficient to meet the demands of modern, efficient operations [1]. As a key technology in Prognostics and Health Management (PHM), remaining useful life (RUL) prediction provides timely and accurate assessments of equipment degradation and thus valuable guidance for predictive maintenance. However, modern industrial systems vary over time and operate under complex conditions, so equipment conditions change progressively or abruptly, and the system state can differ substantially from one point in time to another. Moreover, under diverse operating conditions, both the equipment’s state and its RUL are strongly affected, and the interactions between different feature dimensions become highly complex [2]. These challenges highlight the need for advanced predictive methods that better capture the intricate interactions among temporal features, as well as the long-term and short-term dependencies that are critical for accurate RUL prediction.

Currently, RUL prediction methods can be categorized into three main types: physics-based models, data-driven models, and hybrid models. Physics-based models rely on an understanding of equipment failure mechanisms and analyze degradation from first principles and the behavior of key components. However, as equipment becomes increasingly complex, building a physics-based model requires fitting a large number of parameters, which makes model construction very challenging [3]. Hybrid models integrate the strengths of physics-based and data-driven approaches to improve RUL prediction accuracy; however, their inherent complexity limits their application in real-world engineering. In contrast, data-driven models, supported by technologies such as big data and the Internet of Things (IoT), automatically extract deep features from large historical datasets, significantly reducing the need for domain expertise and showing broader application prospects [4].

Data-driven RUL prediction methods can be broadly classified into two categories: statistical learning-based methods and machine learning-based methods. Statistical methods model the degradation process by constructing statistical models of the probability distribution of equipment failure. Machine learning-based approaches extract degradation information from historical monitoring data and treat RUL prediction as a regression problem. However, traditional machine learning methods rely heavily on domain expertise for feature engineering. With the development of deep learning, an increasing number of studies have shifted toward deep models, which automatically extract deep features from data and reduce the dependence on manual feature engineering. Therefore, this paper focuses on the application of deep learning methods to RUL prediction [5].

With advances in sensor deployment and computing power, deep learning has enabled neural networks to automatically extract deeper features from historical data, resulting in more accurate RUL predictions. Currently, the most widely used models are Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs): RNNs excel at capturing temporal features, while CNNs are effective at extracting multi-scale features [6]. RNNs are often employed to model temporal dependencies in time series data. For example, Khandelwal et al. proposed an RNN-based model for predicting the RUL of lithium-ion batteries by analyzing battery degradation under various operating conditions [7]. Sayah et al. proposed a robustness testing framework for deep LSTM models in RUL prediction, analyzed the model’s resilience and performance under stress functions, and validated it on the C-MAPSS dataset [8]. However, because the hidden state at each time step of an RNN depends on the output of the previous time step, information can be lost over long sequences, and the vanishing gradient problem can occur. In contrast, CNNs process data in parallel, which mitigates the information loss and vanishing gradient issues caused by sequential processing in RNNs. When handling time series data, CNNs typically use sliding time windows and perform convolutions along the temporal dimension to learn the mapping between each time window and its RUL label. For instance, Keshun et al. proposed a 3D attention-enhanced hybrid neural network based on CNN and BiLSTM, which extracts local features with a convolutional network, uses the BiLSTM to learn long-range nonlinear dependencies, and incorporates a 3D attention module to improve the model’s learning capability and interpretability [9]. Additionally, Multilayer Perceptrons (MLPs) have recently shown great potential in time series prediction; with their strong representation learning capabilities, MLPs can effectively capture complex temporal dependencies and patterns. For instance, the MLP-Mixer architecture proposed by Tolstikhin et al., although originally designed for image processing, has proven effective when adapted to RUL prediction, where it mixes local features within each time step and global features across time steps. This approach captures temporal degradation information without relying on convolutions or attention mechanisms, offering a new way to process time series data [10]. Although these studies have improved the accuracy of RUL prediction, certain limitations remain.

Firstly, many of these methods focus primarily on feature extraction along the temporal dimension while neglecting the diversity of features in other dimensions. For example, Zhang et al. proposed a multi-head dual sparse self-attention network that introduces ProbSparse and LoSparse strategies to improve the efficiency of time series processing and reduce computational complexity [11]. Zhang et al. developed a bidirectional gated recurrent unit with a temporal self-attention mechanism (BiGRU-TSAM) that assigns self-learned weights to different time steps, improving the model’s ability to capture temporal dependencies [12]. Li et al. introduced an RUL prediction method that combines domain knowledge with gated recurrent units (GRUs) and a dual attention mechanism, using temporal attention together with domain knowledge to enhance both accuracy and interpretability [13]. Chen et al. used convolutional neural networks to extract temporal features and combined them with a Bayesian long short-term memory network (B-LSTM) to construct a multivariate time series prediction model [14]. Ekambaram et al. introduced a lightweight MLP architecture called TSMixer, demonstrating the potential of MLPs in time series prediction, although it lacks effective interaction across different feature scales [15]. Zhao et al. combined a GRU with a multi-head attention mechanism to encode temporal features from battery capacity time series and used a re-zero MLP-Mixer to extract higher-level features, improving RUL prediction accuracy for lithium-ion batteries [16]. Jiang et al. proposed a dual-attention-based multiscale convolutional neural network (DAMCNN) that uses precise time-stage division and multiscale temporal feature extraction to efficiently predict the RUL of rolling bearings [17]. Wang et al. proposed a hybrid model combining Bi-LSTM, Temporal Convolutional Networks (TCNs), and an attention mechanism, which captures time series features at different levels and better handles dynamic degradation in complex systems [18]. Mao et al. proposed a method combining Multi-Scale Convolutional Neural Networks (MCNNs), a decomposition linear layer, and Conformal Quantile Regression (CQR), which improves RUL prediction accuracy by capturing both long-term trends and local variations in time series features [19]. Lei et al. proposed an interpretable, operational condition-aware, attention-based domain adaptive network, which aligns the temporal features of the source and target domains through domain adaptation and removes the influence of time-varying operating conditions on the monitoring data via an Operational Condition Attention (OCA) subnetwork, thereby improving predictive accuracy under complex conditions [20]. Wang et al. proposed a prediction model based on the self-attention mechanism and a TCN, in which the TCN captures temporal dependencies and self-attention adaptively assigns weights to temporal features, improving RUL prediction accuracy [21]. Ren et al. proposed a continuous learning framework based on kernel-space gradient projection, which uses a multi-kernel group convolution module to combine convolutions at different scales, automatically capturing degradation features over time and adaptively adjusting attention according to operating condition information [22]. Although these methods have made significant progress in temporal feature extraction, they do not adequately consider information along the feature dimension, which may cause the loss of key global information and feature interrelationships and degrade RUL prediction performance under complex working conditions.

Secondly, many of these studies focus on local feature extraction at specific scales and do not explore cross-scale feature correlations in depth. For instance, Zhang et al. proposed a parallel hybrid neural network combining 1D convolutional neural networks (1-DCNNs) and bidirectional gated recurrent units (BiGRUs) to extract temporal and feature dimension information from historical data simultaneously [23]. Remadna et al. introduced a hybrid deep learning model that combines a CNN with bidirectional long short-term memory networks, extracting features across both the temporal and feature dimensions to improve RUL prediction [24]. En et al. proposed a dual-mixer model based on supervised contrastive learning, which progressively integrates the temporal and feature dimensions while preserving the consistency between sample features and degradation processes through a training method called Feature Space Global Relationship Invariance (FSGRI) [25]. Zhang et al. proposed a Self-Attention Mechanism Network with Spatio-Temporal Feature Extraction (SAMN-STFE), which uses CNNs and bidirectional LSTM networks to extract spatial and temporal features [26]. Deng et al. proposed an RUL prediction method for aero engines based on a CNN-LSTM-Attention framework, in which local features extracted by a CNN are combined with an LSTM and an attention mechanism [27]. While these methods improve performance on time series data, they lack a flexible weight allocation mechanism during feature fusion, which makes it difficult to adjust the model’s focus for different types of degradation information and leads to suboptimal results.

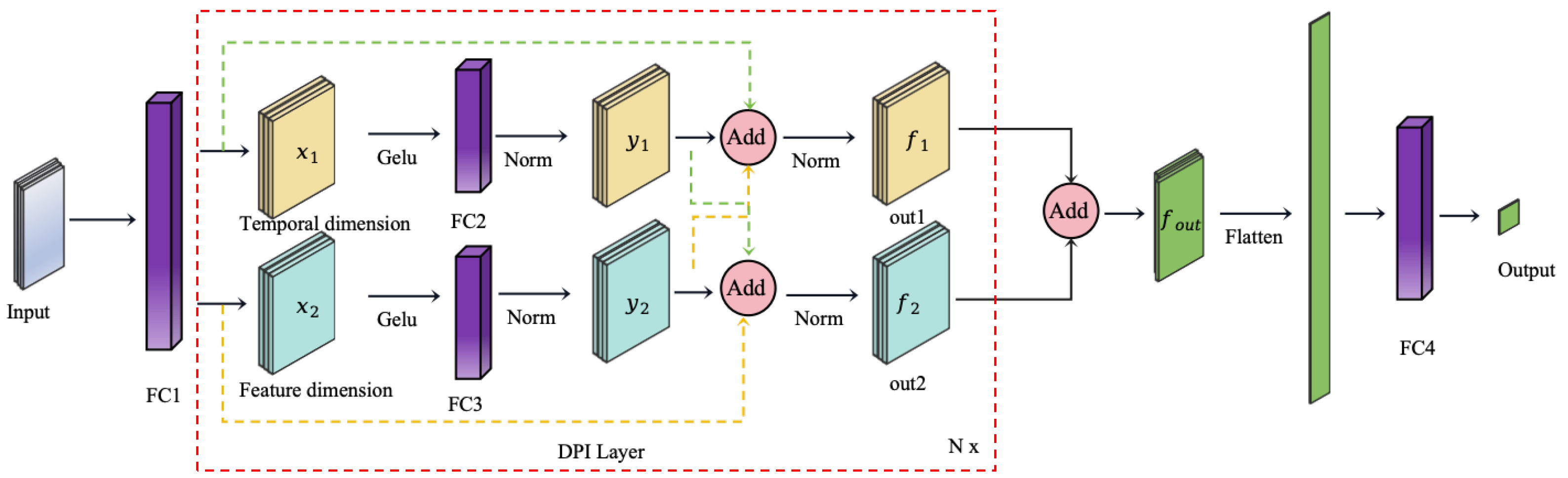

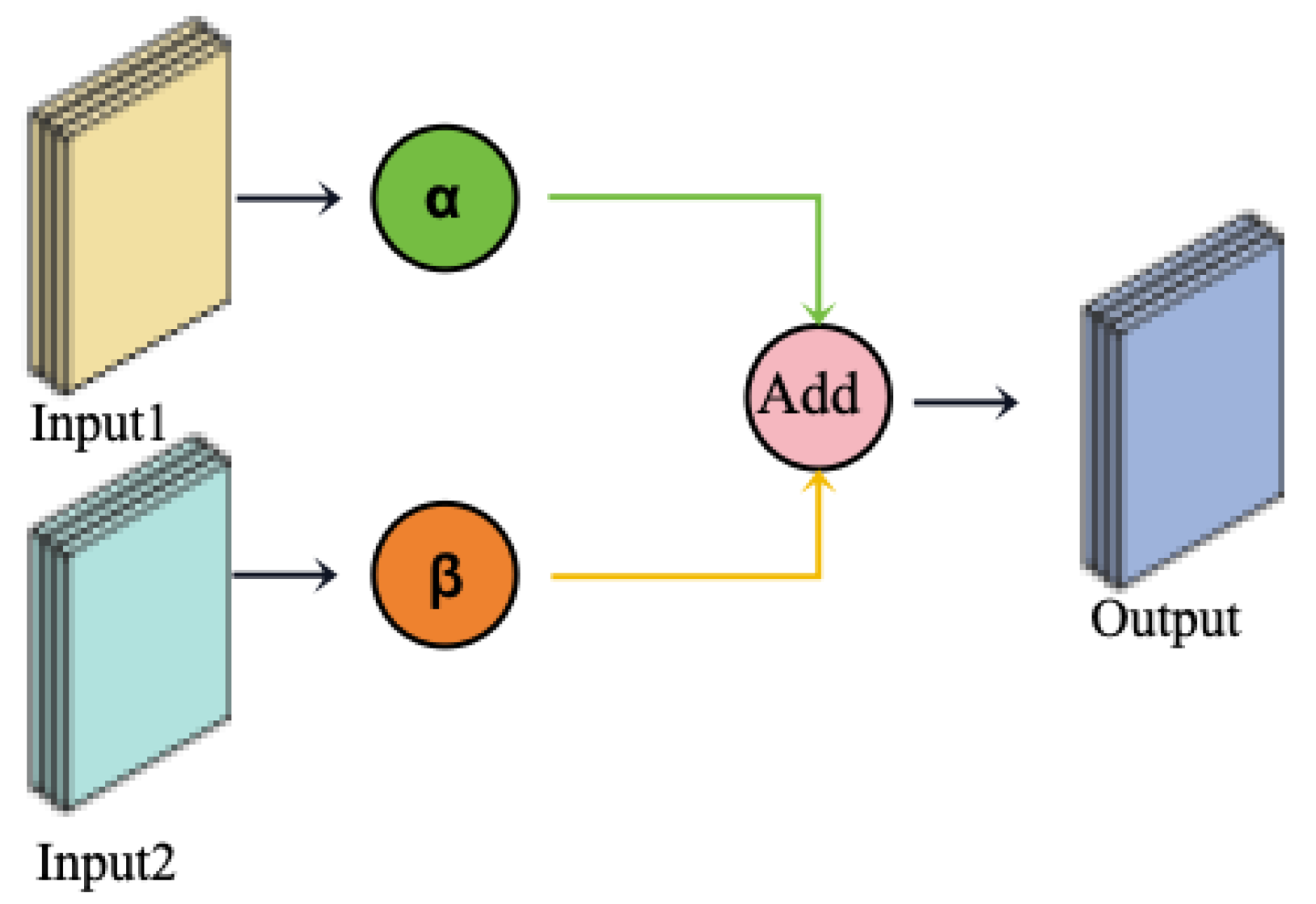

Inspired by the MLP-Mixer architecture, this paper proposes a Dual-Path Interaction Network model to address the aforementioned issues. The model captures complex relationships between the temporal and feature dimensions through a dual-path structure, enhancing information interaction during feature extraction, thereby improving the quality of feature representation and the accuracy of predictions. This approach effectively avoids the limitations of single-path structures, which may overlook critical features. However, despite the dual-path structure’s ability to capture both temporal and feature dimension information, the feature extraction process still focuses mainly on local and specific scales, failing to fully cover cross-scale information correlations. To further enhance the comprehensiveness and richness of feature extraction, this paper designs a Multiscale Temporal-Feature Convolution Fusion Module (MTF-CFM). This module introduces multiple convolution kernels of different scales through a 1D Convolutional Neural Network for multiscale feature extraction, and generates more expressive feature representations for both the temporal and feature dimensions through concatenation and splitting operations. The multiscale convolution kernel design enables more comprehensive capture of information across different temporal and feature scales. Nevertheless, the effectiveness of feature interaction and fusion largely depends on the proper allocation and dynamic adjustment of weights. To address this, the paper further introduces a Dynamic Weight Adaptation Module (DWAM), which enables dynamic interaction and adaptive weight adjustments between the temporal and feature dimensions, enhancing the model’s predictive performance and flexibility. As a result, the proposed model significantly improves RUL prediction accuracy.
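To make the dual-path idea concrete, the following is a minimal NumPy sketch of an MLP-Mixer-style block with a sigmoid gate, written under our own assumptions; it is not the DPIN, MTF-CFM, or DWAM implementation. A single input window of shape (W, F) is mixed along time in one path and along features in the other, and a gate computed from both paths decides, element by element, how much of each path to keep. All function and parameter names (`dual_path_block`, `t_w1`, `g_w`, and so on), the hidden size, and the random initialization are hypothetical.

```python
import numpy as np


def mlp(x, w1, w2):
    """Two-layer MLP with a ReLU hidden layer, applied along the last axis of x."""
    return np.maximum(x @ w1, 0.0) @ w2


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def dual_path_block(x, params):
    """Toy dual-path block for one window x of shape (W, F).

    Returns the fused output (W, F) and the gate (W, F) used to weight the paths.
    """
    # Temporal path: transpose to (F, W) so the MLP mixes across time steps,
    # then transpose back to (W, F).
    t = mlp(x.T, params["t_w1"], params["t_w2"]).T
    # Feature path: the MLP mixes across the F features at each time step.
    f = mlp(x, params["f_w1"], params["f_w2"])
    # Gate computed from both paths; sigmoid keeps every weight in (0, 1).
    gate = sigmoid(np.concatenate([t, f], axis=-1) @ params["g_w"])
    # Input-dependent weighted fusion of the two paths plus a residual connection.
    out = gate * t + (1.0 - gate) * f + x
    return out, gate


def init_params(W, F, hidden, seed=0):
    """Small random weights (hypothetical initialisation, for illustration only)."""
    rng = np.random.default_rng(seed)
    shapes = {
        "t_w1": (W, hidden), "t_w2": (hidden, W),   # time-mixing MLP
        "f_w1": (F, hidden), "f_w2": (hidden, F),   # feature-mixing MLP
        "g_w": (2 * F, F),                          # gate projection
    }
    return {k: 0.1 * rng.standard_normal(s) for k, s in shapes.items()}


# Usage: one window of 30 time steps x 15 sensors, already scaled to [-1, 1].
W, F = 30, 15
x = np.random.default_rng(1).uniform(-1.0, 1.0, size=(W, F))
out, gate = dual_path_block(x, init_params(W, F, hidden=32))
```

In a trained model these weights would be learned; because the gate is computed from the current input, the balance between the two paths can change from window to window.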

The contributions of this paper are as follows:

Proposing the Dual-Path Interaction Network architecture: This paper introduces a novel dual-path interaction network architecture, which utilizes a dual-path structure to capture the complex relationships between the temporal and feature dimensions. By preserving more information interactions during the feature extraction process, the dual-path structure improves the quality of feature representations and the accuracy of predictions, effectively avoiding the issue of single-path models neglecting critical features.

Designing the Multiscale Temporal-Feature Convolution Fusion Module (MTF-CFM): To further enhance the comprehensiveness and richness of feature extraction, this paper designs the MTF-CFM module. By introducing multiple convolutional kernels of different scales for multiscale feature extraction, combined with operations such as concatenation and splitting, the module captures features across both temporal and feature dimensions, generating feature representations with richer expressive capabilities.

Introducing the Dynamic Weight Adaptation Module (DWAM): To address the challenges of weight allocation and dynamic adjustment in feature interaction and fusion, this paper introduces the Dynamic Weight Adaptation Module. This module enables dynamic interaction and adaptive weight adjustment between the temporal and feature dimensions, improving the model’s prediction performance and flexibility, thereby enhancing the accuracy of RUL prediction.

Experimental validation on the C-MAPSS dataset: Comprehensive comparative and ablation experiments were conducted on the C-MAPSS dataset to systematically validate the effectiveness and superiority of the proposed method. The results show that the proposed approach significantly outperforms existing methods in terms of RMSE and other evaluation metrics, highlighting its practical applicability. In particular, independent experiments on the MTF-CFM module demonstrated its wide applicability and substantial improvements in model performance across various network architectures, further underscoring its versatility and generalization capability.
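The multiscale idea behind the MTF-CFM contribution can be illustrated with a small NumPy sketch, again under our own assumptions rather than as the module itself: fixed moving-average kernels of sizes 3, 5, and 7 stand in for learned 1D convolution kernels, convolutions are applied along time (per sensor) and along the feature axis (per time step), the resulting maps are concatenated, mixed across scales with a weight matrix, and split back into a temporal part and a feature part. The names `multiscale_conv1d` and `multiscale_fusion`, the kernel choice, and the mixing matrix are hypothetical.

```python
import numpy as np


def multiscale_conv1d(seq, kernels):
    """Convolve a 1D sequence with each kernel ('same' length); returns (S, len(seq))."""
    return np.stack([np.convolve(seq, k, mode="same") for k in kernels])


def multiscale_fusion(x, kernels, mix):
    """Toy multiscale temporal-feature fusion for one window x of shape (W, F).

    mix is a (2S, 2S) matrix that linearly combines all scale maps, so every
    output map can draw on every scale of both branches.
    """
    W, F = x.shape
    # Temporal branch: convolve each sensor's time series (column) at every scale.
    temporal = np.stack([multiscale_conv1d(x[:, j], kernels) for j in range(F)], axis=-1)  # (S, W, F)
    # Feature branch: convolve each time step's feature vector (row) at every scale.
    feature = np.stack([multiscale_conv1d(x[i, :], kernels) for i in range(W)], axis=1)   # (S, W, F)
    # Concatenate along the scale axis, mix across scales, then split into two halves.
    fused = np.concatenate([temporal, feature], axis=0)   # (2S, W, F)
    mixed = np.einsum("cd,dwf->cwf", mix, fused)          # (2S, W, F)
    t_part, f_part = np.split(mixed, 2, axis=0)           # (S, W, F) each
    # Average over scales to get one temporal and one feature representation.
    return t_part.mean(axis=0), f_part.mean(axis=0)


# Usage: moving averages as stand-in kernels; identity mix means no cross-scale mixing.
kernels = [np.ones(k) / k for k in (3, 5, 7)]
x = np.random.default_rng(0).uniform(-1.0, 1.0, size=(30, 15))
mix = np.eye(2 * len(kernels))
t_rep, f_rep = multiscale_fusion(x, kernels, mix)
```

With a learned, non-diagonal `mix`, each output map combines information from several kernel sizes and from both axes, which is the kind of cross-scale interaction the contribution describes.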

The rest of this paper is structured as follows. Section 2 introduces the proposed method. Section 3 presents a comprehensive analysis and comparison of the proposed method and validates it on the C-MAPSS dataset. Finally, the research work of this paper is summarized, and future work is discussed.

3. Experiment

In this section, we evaluate the proposed method on the C-MAPSS dataset, which is widely used in RUL research, and compare it with other methods. Section 3.1 describes the dataset, Section 3.2 presents the data preprocessing, Section 3.3 reports the hyperparameter experiments, Section 3.4 presents comparison experiments that verify the effectiveness of the model, and Section 3.5 presents ablation experiments that verify the effectiveness of each module.

All experiments were conducted on a platform running PyTorch 2.2.2 and Python 3.9.19 with an NVIDIA GeForce RTX 4080 GPU. To reduce the effect of randomness, each experiment was repeated five times and the results were averaged.
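The five-run protocol can be sketched as follows. This is a minimal pure-Python illustration in which `run_experiment` is a hypothetical stand-in for one full train/evaluate cycle; using the sample standard deviation (n − 1 denominator) is our assumption, since the text does not specify which estimator is reported. In a PyTorch setup, each run would typically also seed the framework, for example with `torch.manual_seed(seed)`.

```python
import random
import statistics


def run_experiment(seed):
    """Hypothetical stand-in for one train/evaluate cycle; returns a test RMSE."""
    rng = random.Random(seed)  # a real run would also seed NumPy and PyTorch
    return 0.10 + rng.gauss(0.0, 0.002)


def repeated_runs(run_fn, seeds):
    """Run run_fn once per seed; return the mean and sample standard deviation."""
    scores = [run_fn(seed) for seed in seeds]
    return statistics.mean(scores), statistics.stdev(scores)


mean_rmse, std_rmse = repeated_runs(run_experiment, seeds=range(5))
print(f"RMSE = {mean_rmse:.4f} ± {std_rmse:.4f} over 5 runs")
```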

3.1. Dataset Description

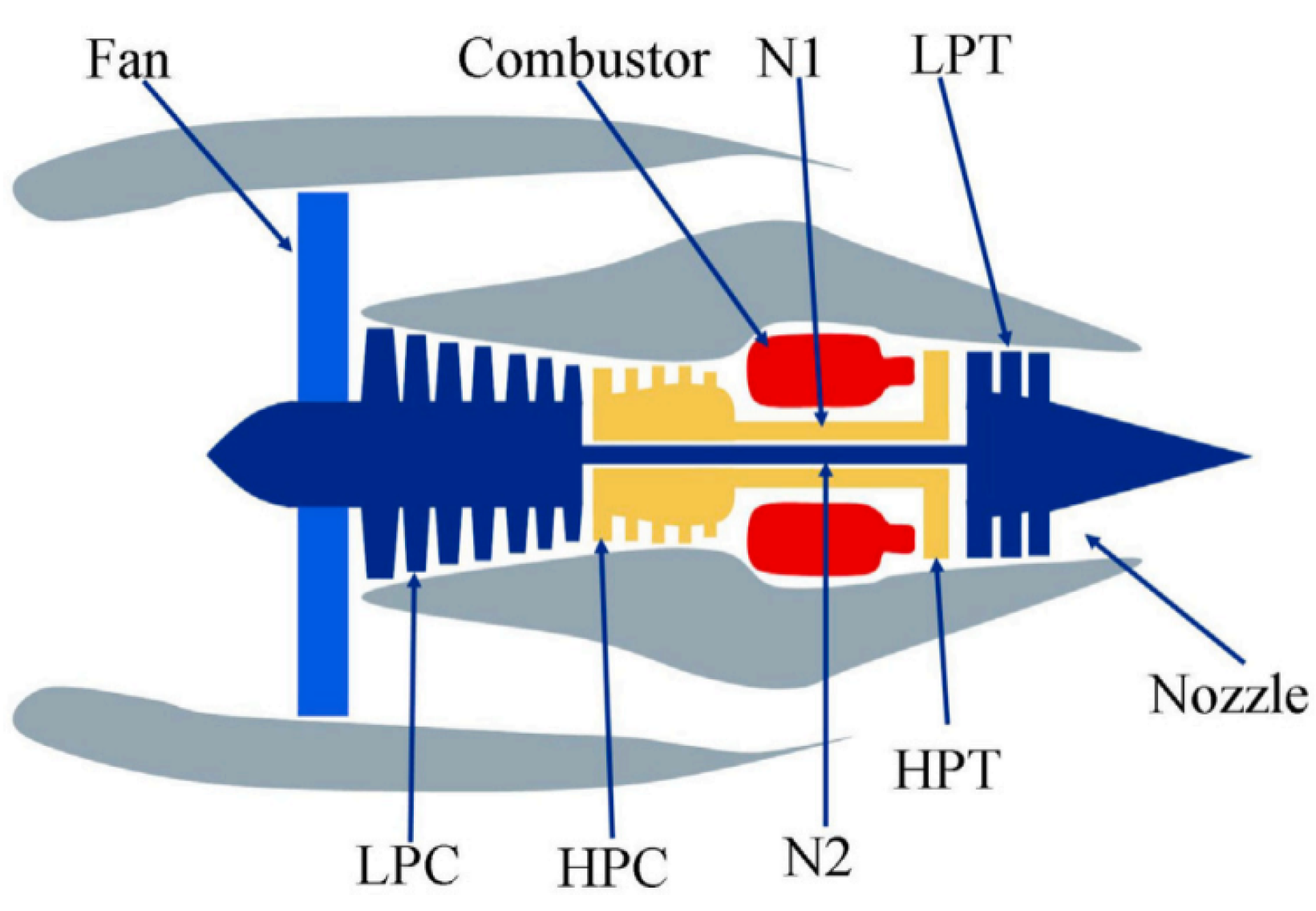

The experiments in this paper use the Commercial Modular Aero-Propulsion System Simulation (C-MAPSS) dataset, a simulated aero-engine degradation dataset developed and released by NASA for engine health management research and widely used in the PHM field.

Figure 6 shows the architecture of the aircraft engine, with its specific parameters detailed in Table 1, and Table 2 provides detailed information on the data collected by the deployed sensors.

The C-MAPSS dataset consists of four sub-datasets: FD001, FD002, FD003, and FD004. Each sub-dataset contains multiple engine degradation instances, and for each instance, several time steps of data are recorded before failure occurs. Each time step’s record includes various sensor measurements and operating conditions. The dataset is described in Table 3.

In addition, because engine RUL prediction can be formulated as a regression problem, we adopt RMSE, MAPE, and Score, which are commonly used in RUL prediction, as the evaluation metrics of model performance.

where $N$ is the number of test engines, and $\hat{y}_i$ and $y_i$ represent the predicted and actual RUL of the $i$th engine, respectively.
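The sketch below assumes the metric definitions most common in the C-MAPSS literature: RMSE over the test engines, MAPE in percent, and the asymmetric scoring function from the PHM08 challenge, which penalizes late predictions (predicted RUL above the actual RUL) with a time constant of 10 and early predictions with 13. The function names are ours. Note that MAPE is undefined when an actual RUL equals 0, so such engines would need handling that the text does not describe.

```python
import numpy as np


def rmse(y_pred, y_true):
    """Root mean square error over all test engines."""
    d = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.sqrt(np.mean(d ** 2)))


def mape(y_pred, y_true):
    """Mean absolute percentage error in percent (undefined if any y_true is 0)."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    return float(100.0 * np.mean(np.abs((y_pred - y_true) / y_true)))


def phm_score(y_pred, y_true):
    """PHM08-style asymmetric score: late predictions (d > 0) cost more than early ones."""
    d = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    penalties = np.where(d < 0, np.exp(-d / 13.0) - 1.0, np.exp(d / 10.0) - 1.0)
    return float(np.sum(penalties))


# Usage with three hypothetical engines (RUL in cycles).
y_true = [112.0, 98.0, 69.0]
y_pred = [110.0, 104.0, 60.0]
print(rmse(y_pred, y_true), mape(y_pred, y_true), phm_score(y_pred, y_true))
```

The asymmetry reflects the maintenance cost: predicting failure too late is riskier than predicting it too early.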

3.2. Data Pre-Processing

3.2.1. Data Selection and Normalization

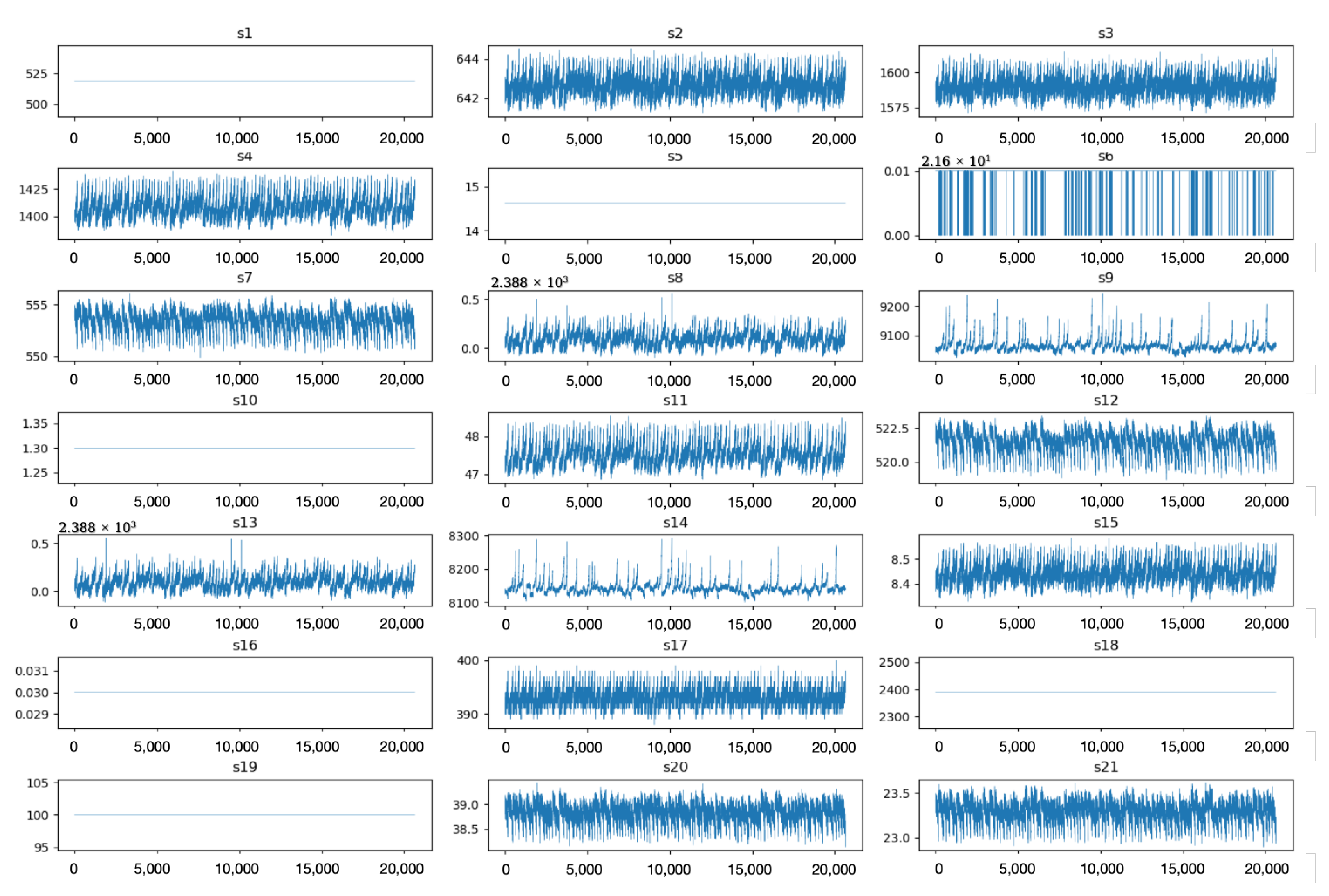

In real-world industrial applications, different sensors can contribute very differently to remaining useful life (RUL) prediction. Therefore, to ensure data quality, we analyzed the 21 sensor channels in the C-MAPSS dataset (its 24 monitored variables also include three operational settings) and removed those that correlate poorly with RUL. Specifically, we analyzed the data collected by all sensors in the FD001 subset. As shown in Figure 7, the values of sensors S1, S5, S10, S16, S18, and S19 remain nearly constant throughout the entire lifecycle of the aircraft engine, indicating that these sensors are only weakly relevant to the RUL prediction task.

We further confirmed this by calculating the Pearson correlation coefficients between each sensor’s readings and RUL. As shown in Figure 8, sensors S1, S5, S10, S16, S18, and S19 have correlation coefficients close to 0 with RUL, confirming their weak contribution to the prediction task. Consequently, we selected the remaining 15 sensors, S2, S3, S4, S6, S7, S8, S9, S11, S12, S13, S14, S15, S17, S20, and S21, as inputs to our model.
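The two-step screening described above (discard near-constant sensors, then check the Pearson correlation with RUL) can be sketched in NumPy as follows. The function name `select_sensors` and both thresholds (`std_tol` and `corr_tol = 0.1`) are hypothetical; the text reports only that the discarded sensors are nearly constant and have correlations close to 0. A strictly constant sensor has zero variance, so its Pearson coefficient is undefined, which is why it is filtered out before the correlation is computed.

```python
import numpy as np


def select_sensors(readings, rul, names, std_tol=1e-6, corr_tol=0.1):
    """Keep sensors that vary over the lifecycle and correlate with RUL.

    readings: (num_samples, num_sensors) raw sensor values
    rul:      (num_samples,) RUL label of each sample
    names:    sensor names in column order
    """
    kept = []
    for j, name in enumerate(names):
        column = readings[:, j]
        if column.std() < std_tol:          # (near-)constant: no degradation signal
            continue
        r = np.corrcoef(column, rul)[0, 1]  # Pearson correlation with RUL
        if abs(r) >= corr_tol:
            kept.append(name)
    return kept


# Usage on synthetic data: "A" tracks RUL, "B" is constant, "C" is almost unrelated.
rul = np.arange(100, 0, -1, dtype=float)
readings = np.column_stack([
    -0.5 * rul + 640.0,         # A: strongly (negatively) correlated with RUL
    np.full_like(rul, 518.67),  # B: constant reading
    np.tile([1.0, -1.0], 50),   # C: alternating pattern, correlation near 0
])
print(select_sensors(readings, rul, ["A", "B", "C"]))
```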

Furthermore, sensor readings in the C-MAPSS dataset span very different ranges, resulting in large differences in absolute values and magnitudes. To address this issue, we applied Min-Max normalization to scale all sensor values to the range [−1, 1], eliminating scale differences. This ensures that large-valued features do not disproportionately influence the model and that small-valued features are not overlooked. Keeping the inputs in a bounded range also improves numerical stability and accelerates model convergence.

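$$X_{\text{norm}}=\frac{2\left(X-X_{\min}\right)}{X_{\max}-X_{\min}}-1$$

where $X_{\text{norm}}$ represents the normalized data, $X$ is the original data, and $X_{\max}$ and $X_{\min}$ represent the maximum and minimum values in the data.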
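A minimal NumPy sketch of the [−1, 1] Min-Max scaling follows, assuming the per-sensor minimum and maximum are computed on the training data and then reused for the test data; the text does not say which split the statistics come from, or whether they are computed per operating condition. The function names and the small `eps` guard against a zero range are our additions.

```python
import numpy as np


def fit_minmax(train):
    """Per-sensor minimum and maximum over the training samples (rows)."""
    return train.min(axis=0), train.max(axis=0)


def minmax_scale(x, x_min, x_max, eps=1e-12):
    """Map each sensor linearly so that x_min -> -1 and x_max -> 1."""
    return 2.0 * (x - x_min) / np.maximum(x_max - x_min, eps) - 1.0


train = np.array([[641.8, 1589.7], [642.4, 1591.8], [643.0, 1600.0]])
x_min, x_max = fit_minmax(train)
train_scaled = minmax_scale(train, x_min, x_max)
test_scaled = minmax_scale(np.array([[642.0, 1595.0]]), x_min, x_max)  # reuse training stats
```

Test readings that fall outside the training range map slightly outside [−1, 1]; whether to clip them is a further design choice the text does not address.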

3.2.2. Sliding Window

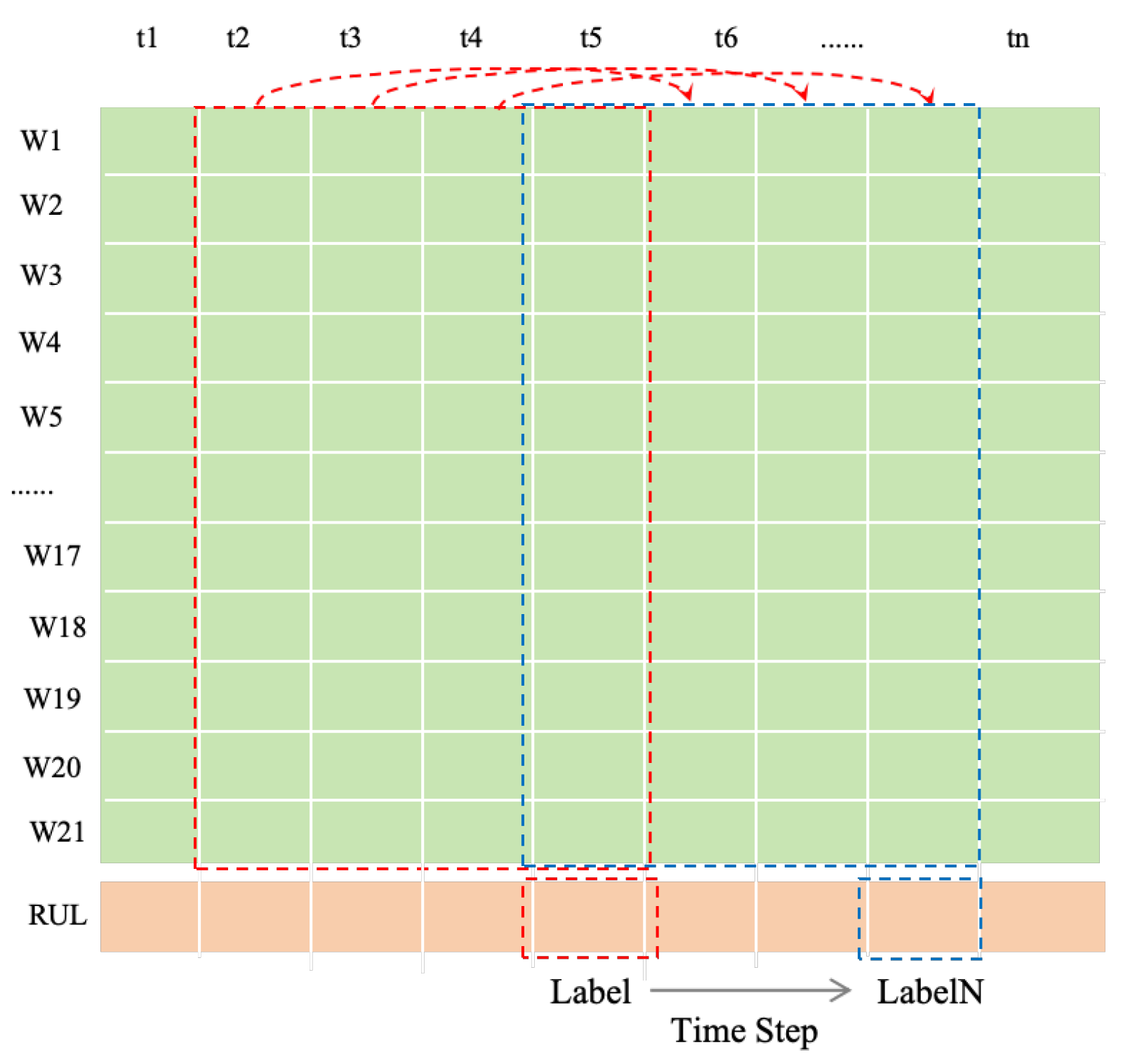

In time series tasks, the main interest lies in how each variable changes between adjacent time steps, and a sliding window is an effective way to extract such features. For engine RUL prediction on the C-MAPSS dataset, using data from multiple time steps typically captures more detailed degradation trends than single time-step data and significantly improves the model’s predictive performance, as illustrated in Figure 9.
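The windowing step can be sketched as below for a single engine, assuming a stride of one cycle, which the text does not state; `sliding_windows` is a hypothetical helper name. Each window is a (W, F) matrix of consecutive cycles. NumPy’s `np.lib.stride_tricks.sliding_window_view` offers a copy-free alternative, though it places the window axis last.

```python
import numpy as np


def sliding_windows(series, window):
    """Split one engine's readings (T, F) into overlapping windows (T - window + 1, window, F).

    Consecutive windows start one cycle apart (stride 1).
    """
    num_cycles = series.shape[0]
    if num_cycles < window:
        raise ValueError("trajectory is shorter than the window size")
    return np.stack([series[s:s + window] for s in range(num_cycles - window + 1)])


# Usage: 5 cycles x 2 sensors, window of 3 cycles -> 3 windows.
series = np.arange(10, dtype=float).reshape(5, 2)
windows = sliding_windows(series, window=3)
```

Engines whose trajectories are shorter than the window would need padding, which this sketch does not perform.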

3.2.3. RUL Label Reconstruction

The entire lifecycle of an engine can be divided into two phases: a healthy phase and a degradation phase. During the healthy phase, the RUL remains at a relatively high, constant value, whereas in the degradation phase it declines steadily. To better reflect this lifecycle and provide more accurate RUL predictions, a piecewise linear RUL target is employed. Multiple studies have shown that capping the RUL at 125 during the healthy phase is effective, and we adopt the same value of 125 in this work. After samples are constructed with the sliding window, the RUL at the last cycle of each window is used as that sample’s label, as illustrated in Figure 10.
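For a run-to-failure training trajectory, the piecewise linear labels and the last-cycle labeling rule can be sketched as follows. We assume the RUL counts down to 0 at the final recorded cycle (some implementations end at 1 instead) and a window stride of one cycle; `piecewise_rul` and `window_labels` are hypothetical names, and the cap of 125 is the value stated in the text.

```python
import numpy as np


def piecewise_rul(num_cycles, max_rul=125):
    """Linear countdown to 0 at the last cycle, capped at max_rul during the healthy phase."""
    countdown = np.arange(num_cycles - 1, -1, -1)  # T-1, T-2, ..., 0
    return np.minimum(countdown, max_rul)


def window_labels(rul, window):
    """Label of each stride-1 window = RUL at the window's last cycle."""
    return rul[window - 1:]


rul = piecewise_rul(200)  # an engine that fails after 200 cycles
labels = window_labels(rul, window=30)
```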

Additionally, to reduce the impact of differing degradation rates across engines on model optimization, the RUL labels are standardized [25], as shown in Figure 11. This transforms the RUL labels from absolute values (numbers of cycles) into relative proportions (RUL percentages), so samples with different degradation rates can be compared on the same scale, which enhances the model’s generalization ability and improves optimization.

$$\tilde{y}=\frac{y}{T_{\max}}$$

where $\tilde{y}$ is the standardized RUL label, with values in [0, 1], $y$ is the original RUL label, and $T_{\max}$ is the maximum lifecycle of this engine.
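A sketch of the label standardization follows, assuming that each engine’s per-cycle labels are divided by the number of cycles in that engine’s trajectory (its lifecycle length); this reading of “maximum lifecycle” and the helper name `standardize_labels` are our assumptions. Because an RUL label never exceeds the lifecycle length, the results fall in [0, 1].

```python
import numpy as np


def standardize_labels(rul_per_engine):
    """Divide each engine's RUL labels by that engine's lifecycle length.

    rul_per_engine: list of 1D arrays, one per engine, holding the RUL of every cycle.
    Returns a list of arrays with values in [0, 1].
    """
    return [np.asarray(r, dtype=float) / len(r) for r in rul_per_engine]


# Usage: a short-lived and a long-lived engine end up on the same relative scale.
fast = np.arange(3, -1, -1)  # 4-cycle engine: RUL 3, 2, 1, 0
slow = np.arange(7, -1, -1)  # 8-cycle engine: RUL 7, ..., 0
fast_std, slow_std = standardize_labels([fast, slow])
```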

3.3. Hyperparameter Experiments

In this section, we study two key hyperparameters of the model, the window size and the number of DPI layers, to explore their influence under different complex working conditions and to preliminarily verify the model’s effectiveness.

The time window size W determines the length of the input sequence that the model processes and directly influences its ability to capture temporal dependencies. Related studies [11,12,23] suggest W = 30 as the optimal window size for capturing these dependencies in the C-MAPSS dataset. However, [29] tested a broader range of window sizes (1, 10, 20, 30, 40, 50, 60) to assess their impact on model performance. Based on this, we vary W from 10 to 60 to explore the optimal W for our proposed model, with W = … serving as the baseline for our experiments.

The number of DPI layers N controls the depth of feature extraction and the interaction between the temporal and feature dimensions. Similar research [25] explored N in depth, testing N ∈ {…} to determine its effect on model performance, with N = … yielding the best results. Drawing on this work, we vary N from 2 to 6 to investigate the optimal number of layers for our model, again using N = … as the baseline.

In summary, W ranges from 10 to 60 and N from 2 to 6 in our final setup, with W = … and N = … serving as the baseline model for the hyperparameter experiments. The root mean square error (RMSE) is used to identify the optimal combination of hyperparameters for our model.
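The search over W and N amounts to evaluating every combination and keeping the one with the lowest RMSE. In the sketch below, `evaluate` is a hypothetical callable that would train the model with a given (W, N) and return its test RMSE; the toy surface used here and the grid steps (10 for W, 1 for N) are assumptions, since the text states only the ranges.

```python
import itertools


def grid_search(evaluate, window_sizes, layer_counts):
    """Evaluate every (W, N) pair; return the pair with the lowest RMSE and all results."""
    results = {
        (w, n): evaluate(w, n)
        for w, n in itertools.product(window_sizes, layer_counts)
    }
    best_pair = min(results, key=results.get)
    return best_pair, results


def toy_evaluate(w, n):
    """Hypothetical U-shaped RMSE surface standing in for a full training run.

    The minimum is placed arbitrarily at W = 40, N = 4; it is not the paper's result.
    """
    return 0.1 + 1e-5 * (w - 40) ** 2 + 1e-3 * (n - 4) ** 2


best, results = grid_search(toy_evaluate, range(10, 61, 10), range(2, 7))
```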

As shown in Table 4, when N is fixed, the RMSE first decreases and then increases as the window size W increases from 10 to 60, and for the current number of DPI layers N the model performs best on all four datasets at W = …. Similarly, when W is fixed, the RMSE first decreases and then increases as N increases from 2 to 6, and for the current window size W the model performs best on all four datasets at N = …. Therefore, considering both results, the optimal parameter combination for our model is W = … and N = …. Compared with the baseline model (W = …, N = …), the RMSE decreases by 12.70%, 6.47%, 7.33%, and 11.08% on the FD001, FD002, FD003, and FD004 datasets, respectively.

Furthermore, as shown in Figure 12, the window size W directly influences the model’s ability to capture temporal features. Small windows may fail to capture long-term trends and dependencies, reducing performance, particularly under complex operating conditions. Conversely, an overly large window W includes excessive temporal information, introducing redundancy that increases computational complexity and the risk of overfitting, thereby reducing the model’s generalization ability. The number of DPI layers N controls the depth of feature extraction and the interaction between the temporal and feature dimensions. With too few layers, the model may not adequately capture complex patterns in the data, which hurts performance; with too many, it may fit noise and overfit, compromising generalization.

Thus, the experimental results for both the window size W and the number of DPI layers N show that an intermediate window size and an appropriate number of feature extraction layers allow the model to achieve optimal predictive performance without introducing redundancy or overfitting. The combination of W = … and N = … is identified as the optimal parameter setting for our model.

All hyperparameter settings adopted in the experiments of this paper are listed in Table 5.

3.4. Comparison Experiments

In this section, we compare our method with state-of-the-art approaches on the C-MAPSS dataset to validate the effectiveness of the model.

LSTM [8]: A standard Long Short-Term Memory network for time series prediction.

BTSAM [12]: A bidirectional gated recurrent unit with a temporal self-attention mechanism (BiGRU-TSAM) that assigns self-learned weights to different time steps.

CNN-GRU [23]: A multi-dimensional feature fusion network that combines Convolutional Neural Networks and Gated Recurrent Units.

DAMCNN [17]: A dual-attention-based multiscale convolutional neural network that extracts multiscale temporal features.

IMDSSN [11]: An Integrated Multi-head Dual Sparse Self-Attention Network based on an improved Transformer.

MLP-Mixer [10]: A deep network architecture for multidimensional feature extraction that can also be applied to time series data.

TS-Mixer [15]: A Mixer architecture for time series that extracts and mixes features across multiple temporal windows.

Dual-Mixer [25]: A dual-path feature extraction network that builds on the MLP-Mixer framework for parallel interaction and extraction along the temporal and feature dimensions.

In addition, the suffix “-M” denotes a model augmented with the Multiscale Temporal-Feature Convolution Fusion Module (MTF-CFM), which is used to verify the effectiveness of this module. The column labeled “Improvement” reports the resulting performance gains, and bold text highlights the globally optimal value of each metric.

As shown in Table 6, our proposed method achieves RMSE values of 0.0969, 0.1316, 0.086, and 0.1148 on the four datasets; MAPE values of 9.72%, 14.51%, 8.04%, and 11.27%; and Score values of 59.93, 209.39, 67.56, and 215.35. To evaluate each model comprehensively across all subsets, we also calculated the average of each metric.

Compared to the second-best method, our proposed approach improved RMSE by 4.81%, 2.95%, 2.82%, and 7.64% across the four datasets, with an average improvement of 4.56%. For MAPE, the improvements were 8.47%, 12.91%, 5.74%, and 19.44%, with an average improvement of 11.64%. For the Score metric, the improvements were 2.43%, 5.06%, 4.35%, and 12.25%, averaging 6.02%.
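The percentages above are relative reductions of lower-is-better metrics, and the reported averages are consistent with a plain arithmetic mean of the four per-dataset values (for RMSE, (4.81 + 2.95 + 2.82 + 7.64) / 4 ≈ 4.56). A minimal sketch, with hypothetical function names:

```python
def relative_improvement(baseline, ours):
    """Percentage reduction of a lower-is-better metric (RMSE, MAPE, Score)."""
    return 100.0 * (baseline - ours) / baseline


def average_improvement(per_dataset):
    """Arithmetic mean of per-dataset improvements (FD001 to FD004)."""
    return sum(per_dataset) / len(per_dataset)


rmse_gains = [4.81, 2.95, 2.82, 7.64]  # RMSE improvements reported in the text
print(f"{average_improvement(rmse_gains):.3f}")
```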

To validate the effectiveness of the MTF-CFM module, we averaged the improvement it brings over all models. On the four datasets, RMSE improved by 5.38%, 6.96%, 6.51%, and 9.63%, respectively, with an overall average of 7.12%. MAPE improved by 8.51%, 13.18%, 9.53%, and 11.24%, averaging 10.62%. The Score metric improved by 5.09%, 7.78%, 6.44%, and 9.52%, averaging 7.21%.

These results demonstrate that our proposed model outperforms current state-of-the-art methods across all four datasets, with particularly strong performance on the more complex FD002 and FD004 datasets. This indicates that our model can more accurately predict RUL under complex conditions. Furthermore, the MTF-CFM module consistently enhances RUL prediction performance across all scenarios, showcasing its robustness and potential for wide applicability.

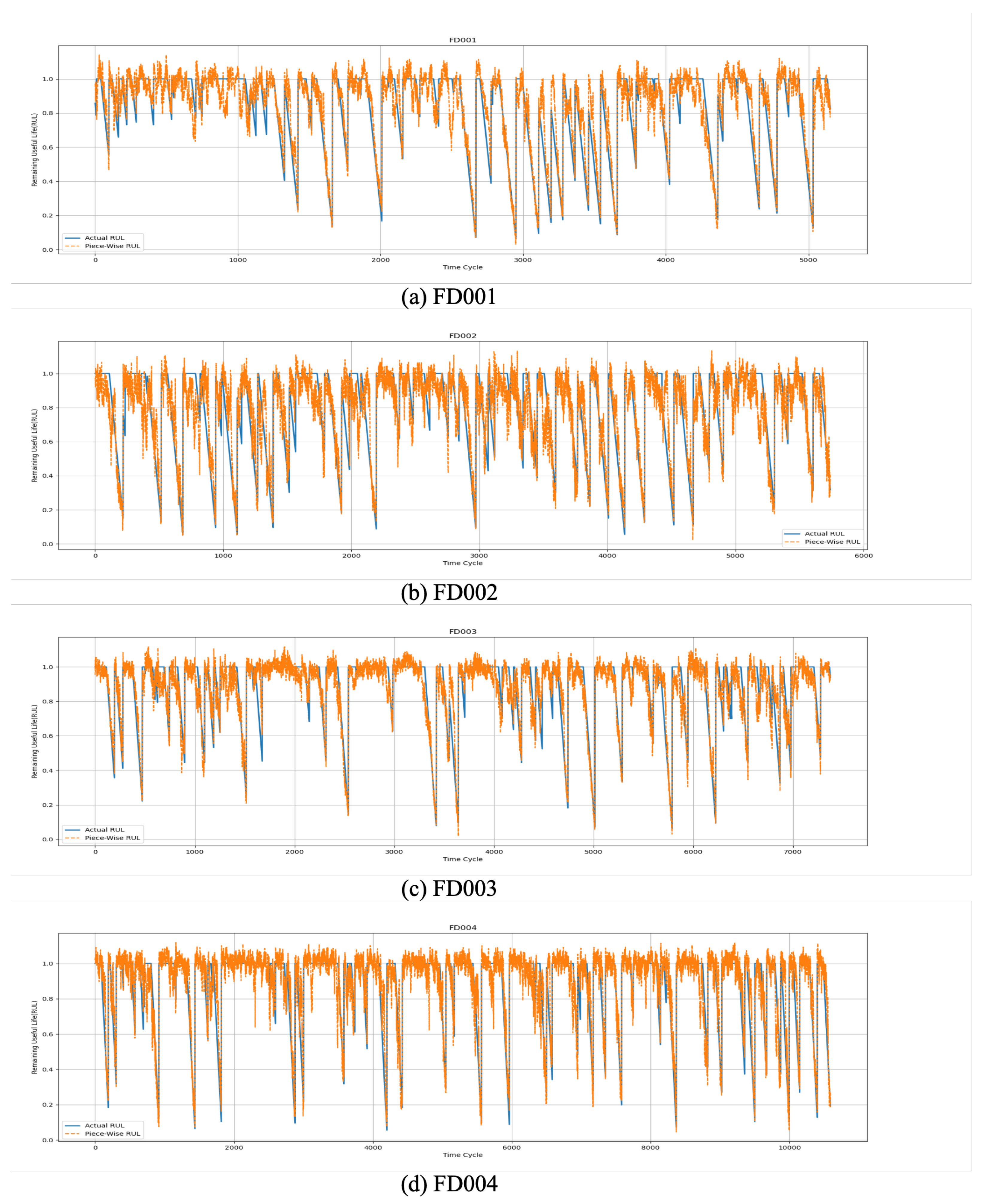

To demonstrate our algorithm’s performance on the C-MAPSS dataset more intuitively, we visualized the actual and predicted RUL values for selected engines, as shown in Figure 13. Apart from slight deviations at certain points, the predicted RUL values closely follow the actual RUL values in most cases, highlighting the accuracy of the proposed method in predicting engine RUL.

To further characterize the model’s computational cost, we take FD004, the most complex subset of the C-MAPSS dataset, as an example and report three additional measures: the number of parameters, the model’s average running time, and memory usage.

As shown in Table 7, our proposed model achieves a balanced trade-off among parameter count, average runtime, and memory usage. Its parameter count (81,944) is higher than that of lightweight models such as TS-Mixer (26,897) but far lower than that of the BTSAM model (2,824,528), indicating a good balance in model complexity. Its average runtime of 244.322 s is slower than that of CNN-GRU (78.016 s) and DAMCNN (58.9 s) but notably faster than that of the more complex BTSAM model (377.798 s). Its memory usage of 467 MiB is moderate: slightly higher than TS-Mixer (349 MiB) and MLP-Mixer (340 MiB), but lower than most other models.

Considering the inherent randomness of deep learning models, we conducted five repeated experiments on each dataset and report the standard deviation ($\sigma$) in Table 6 to provide a more comprehensive evaluation. The standard deviation reflects the dispersion of the results and thus indicates the model’s stability and consistency across runs. The $\sigma$ values in Table 6 show that our proposed model maintains relatively low RMSE and MAPE standard deviations on all four datasets. For instance, the RMSE $\sigma$ is 0.0011 on FD001 and 0.0029 on FD004, indicating minimal fluctuation in prediction errors and high stability. In contrast, the CNN-GRU and IMDSSN models exhibit higher standard deviations, especially on FD003 and FD004, where the larger variations in RMSE and MAPE suggest less reliable performance under complex conditions.

To further substantiate the effectiveness of the MTF-CFM module, we used a t-test to compare model performance before and after incorporating the module, treating the models with and without the MTF-CFM module as paired samples. After confirming that the differences between the paired samples follow a normal distribution, we performed a paired t-test with the following hypotheses:

Hypothesis 1 ($H_0$): The MTF-CFM module has no effect on model performance.

Hypothesis 2 ($H_1$): The MTF-CFM module significantly improves model performance.

The t statistic of the paired t-test is computed as follows:

$$t=\frac{\bar{d}}{s_d/\sqrt{n}}$$

where $\bar{d}$ is the mean difference between the paired samples, $s_d$ is the standard deviation of the differences, and $n$ is the number of paired observations.

As shown in Table 8, with the exception of the MAPE metric for the CNN-GRU model, the test statistics for all models are less than 0, indicating that the models incorporating the MTF-CFM module outperform those without it. Moreover, since p < 0.05, we reject the null hypothesis $H_0$ at the $\alpha = 0.05$ significance level and accept the alternative hypothesis $H_1$. This confirms a statistically significant difference between the two groups of models. Therefore, on the reported metrics, the models with the MTF-CFM module perform better than those without it.
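The paired t statistic can be computed directly from paired metric values, as in the pure-Python sketch below. The function name and the sample numbers are hypothetical; differences are taken as (with module) minus (without module), so for lower-is-better metrics a negative t favors the module, consistent with the reading of Table 8 above. The one-sided p-value would come from the t distribution with n − 1 degrees of freedom, for example via SciPy’s `scipy.stats.ttest_rel(with_m, without_m, alternative="less")`.

```python
import math
import statistics


def paired_t_statistic(with_module, without_module):
    """t = mean(d) / (s_d / sqrt(n)), with d = with_module - without_module."""
    if len(with_module) != len(without_module):
        raise ValueError("paired samples must have the same length")
    d = [a - b for a, b in zip(with_module, without_module)]
    n = len(d)
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(n))


# Hypothetical paired RMSE values (e.g., five models, each with and without the module).
rmse_with = [0.0971, 0.0965, 0.0982, 0.0968, 0.0975]
rmse_without = [0.1032, 0.1019, 0.1041, 0.1025, 0.1030]
t = paired_t_statistic(rmse_with, rmse_without)  # negative: lower RMSE with the module
```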

3.5. Ablation Experiment

In this section, we design four sets of ablation experiments to validate the DPIN architecture, the MTF-CFM module, and the DWAM module within the model. We also analyze the effectiveness of the proposed MTF-CFM module in comparison to existing attention-based modules.

DPIN-Del: This variant removes the feature interaction part of the dual-path network within the DPIN architecture, leaving only the dual-path outputs.

DPIN: This is the baseline architecture of the model, designed to verify the importance of dual-path feature interaction through comparison with DPIN-Del.

DPIN-MTF-CFM: This variant combines the baseline architecture with the proposed MTF-CFM module, aiming to validate the effectiveness of the MTF-CFM module.

DPIN-DWAM: This variant integrates the baseline architecture with the DWAM gating unit, aiming to verify the effectiveness of the DWAM unit.

DPIN-MTF-CFM-DWAM: This represents the full architecture of the model, serving as the benchmark for the ablation experiment.

The experimental results in Table 9 show that after the interaction of the dual-path network is added, the model’s RMSE improves by an average of 4.40%, MAPE by 7.53%, and Score by 5.38%. This indicates that effective interaction between the temporal and feature dimensions is crucial for improving the accuracy and stability of RUL prediction.

After adding the MTF-CFM module, RMSE improved by an average of 2.76%, MAPE by 6.09%, and Score by 5.38%. These results demonstrate that the Multiscale Temporal-Feature Convolution Fusion Module significantly enhances the model’s ability to capture complex degradation patterns. When the DWAM module was added, RMSE improved by an average of 3.46%, MAPE by 4.96%, and Score by 5.30%, indicating that DWAM enables the model to dynamically adjust weights according to changes in the input features, thereby improving the model’s flexibility and adaptability when handling varying and complex equipment conditions.

When both the MTF-CFM and DWAM modules were integrated, RMSE improved by an average of 8.23%, MAPE by 19.63%, and Score by 11.88%. This comprehensive improvement suggests that the synergy between these two modules allows the model to achieve a higher level of performance in capturing multi-dimensional feature information and performing dynamic adjustments. This synergy significantly boosts the model’s prediction accuracy and stability, particularly in complex RUL prediction tasks.

Overall, these experimental results underscore the importance of feature interaction between the time and feature dimensions and validate the critical role of each proposed module in the overall model performance. Each module is indispensable, and their combination maximizes the performance of the RUL prediction model.