Knowledge Graph Completion for High-Speed Railway Turnout Switch Machine Maintenance Based on the Multi-Level KBGC Model

Abstract

1. Introduction

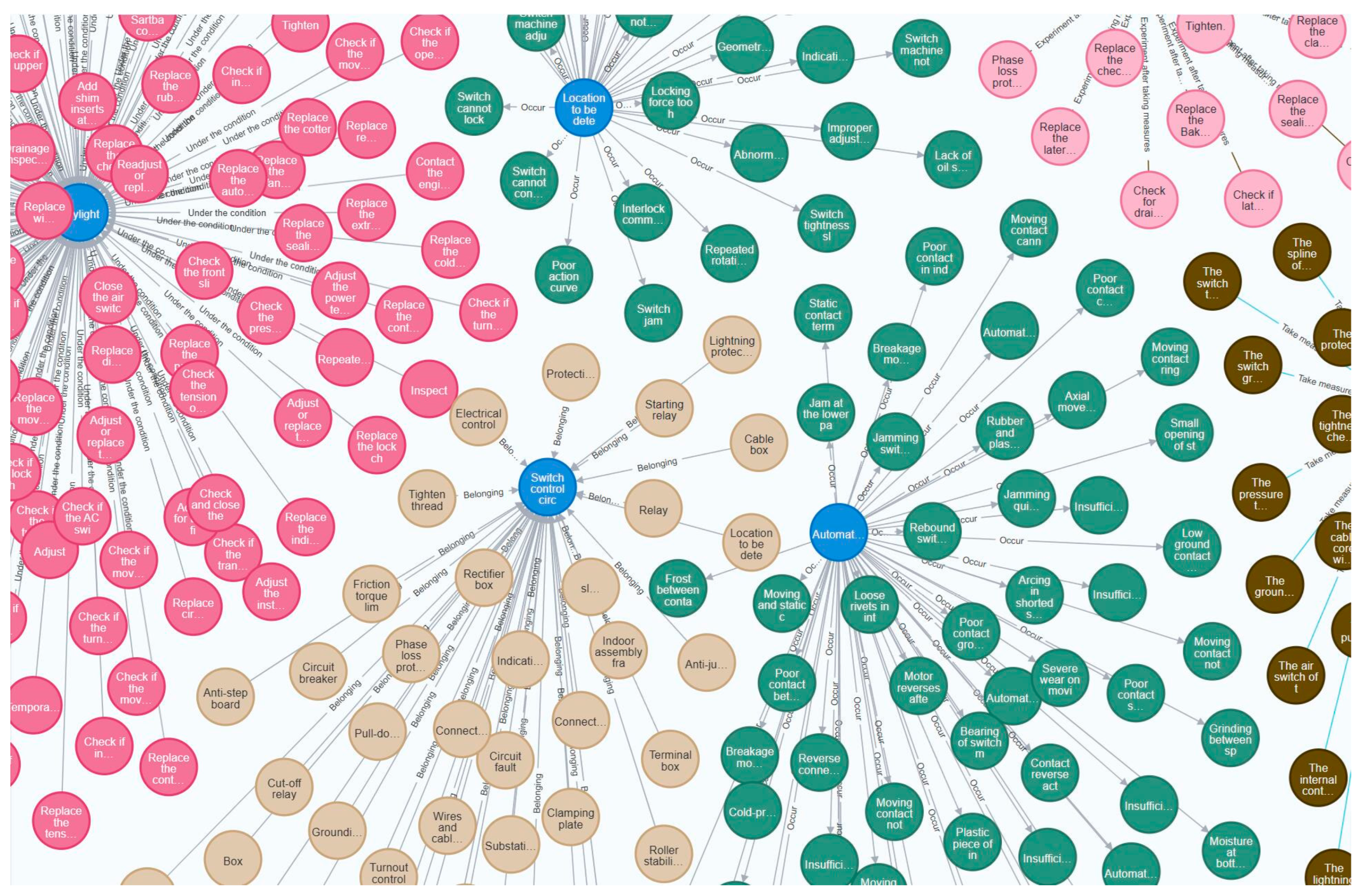

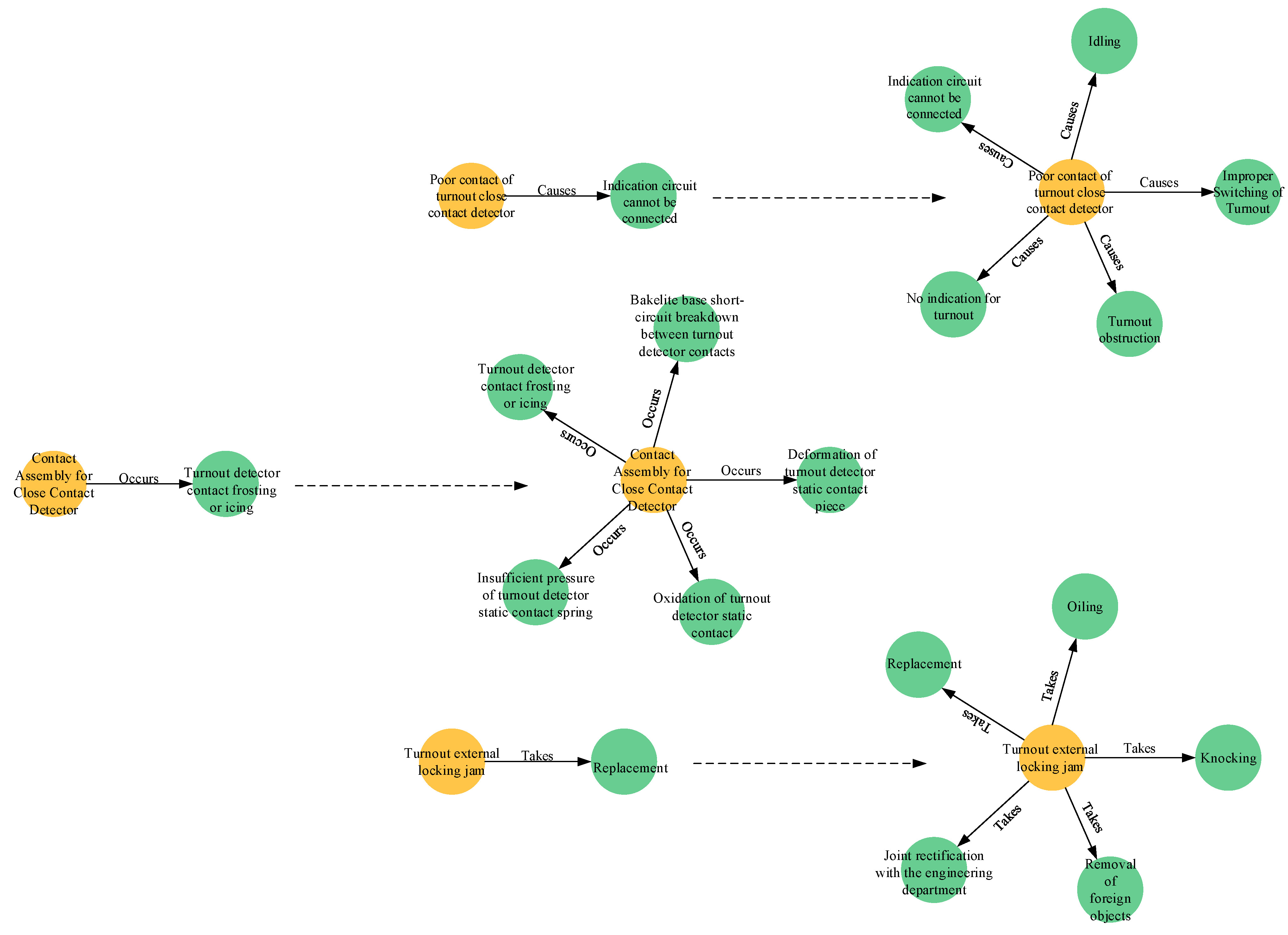

2. Construction of High-Speed Rail Turnout Switch Machine Maintenance Knowledge Graph

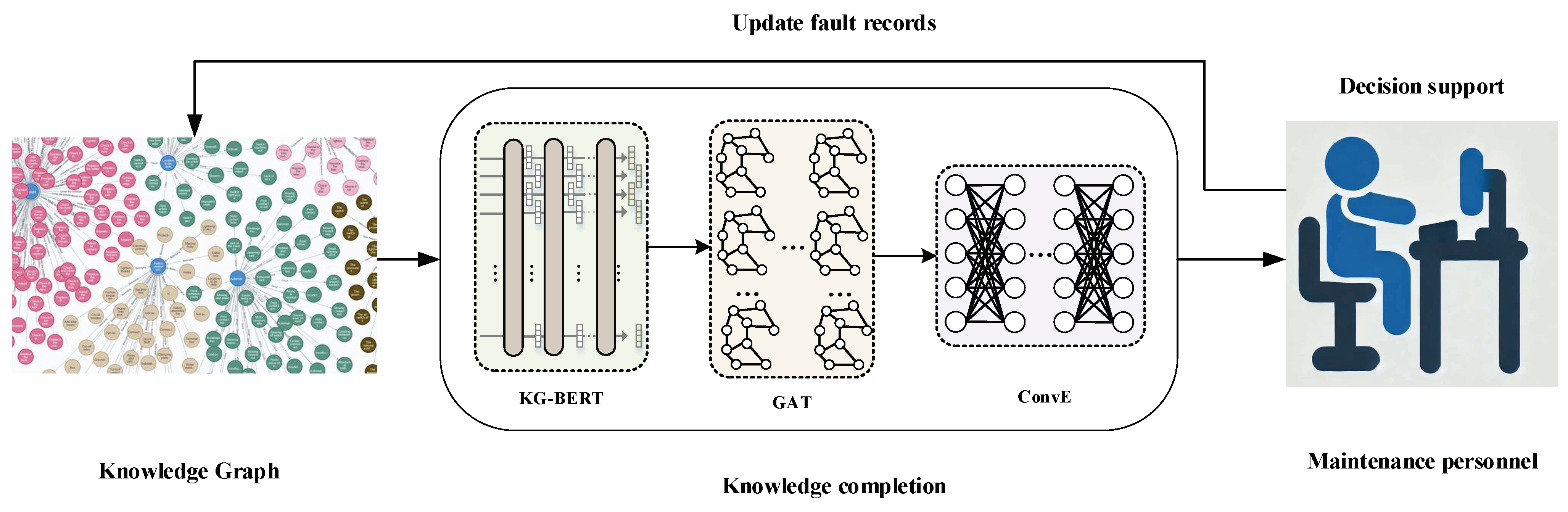

3. High-Speed Railway Turnout Switch Machine Maintenance Knowledge Graph Completion Model

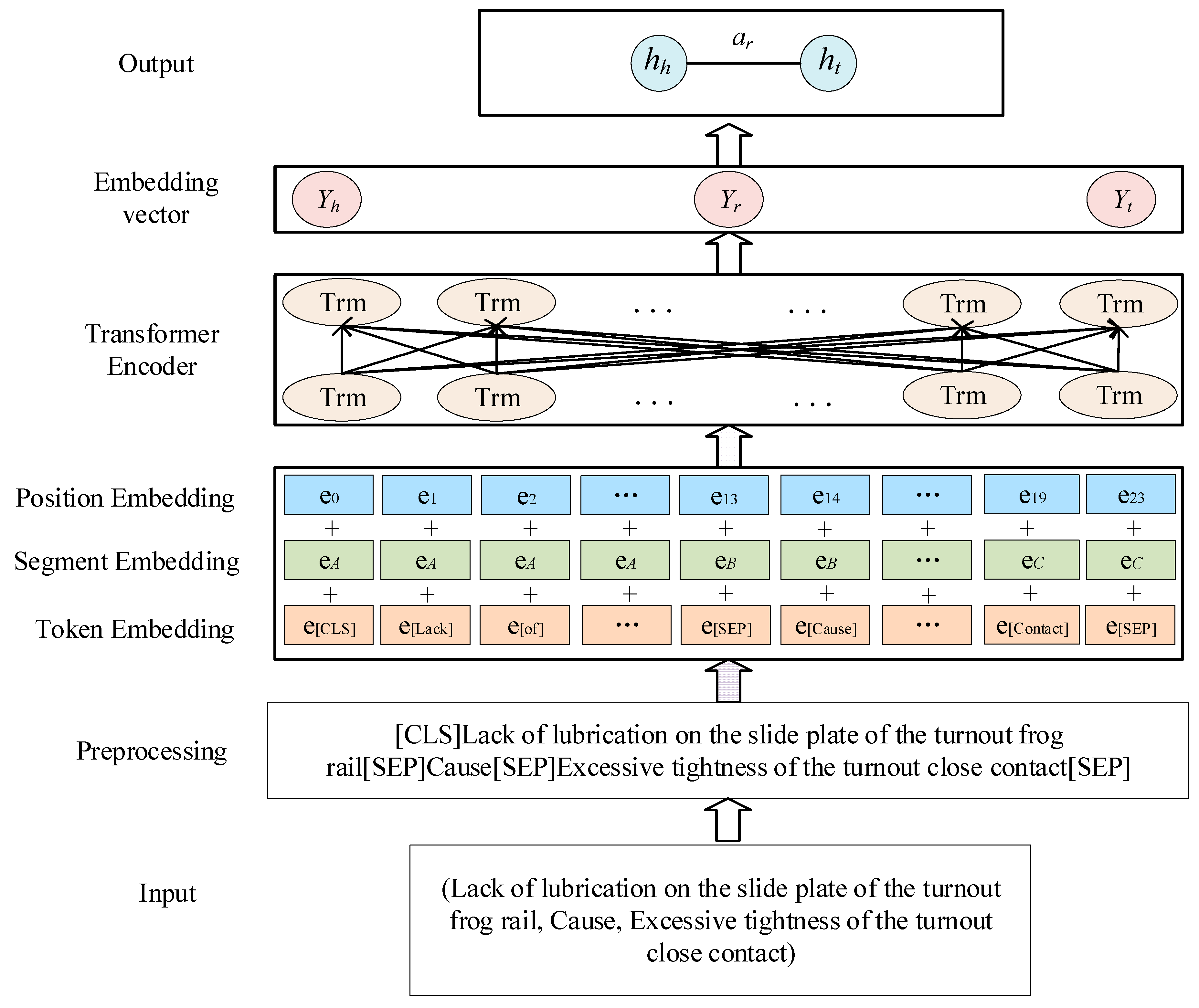

3.1. KG-BERT Layer

3.2. GAT Layer

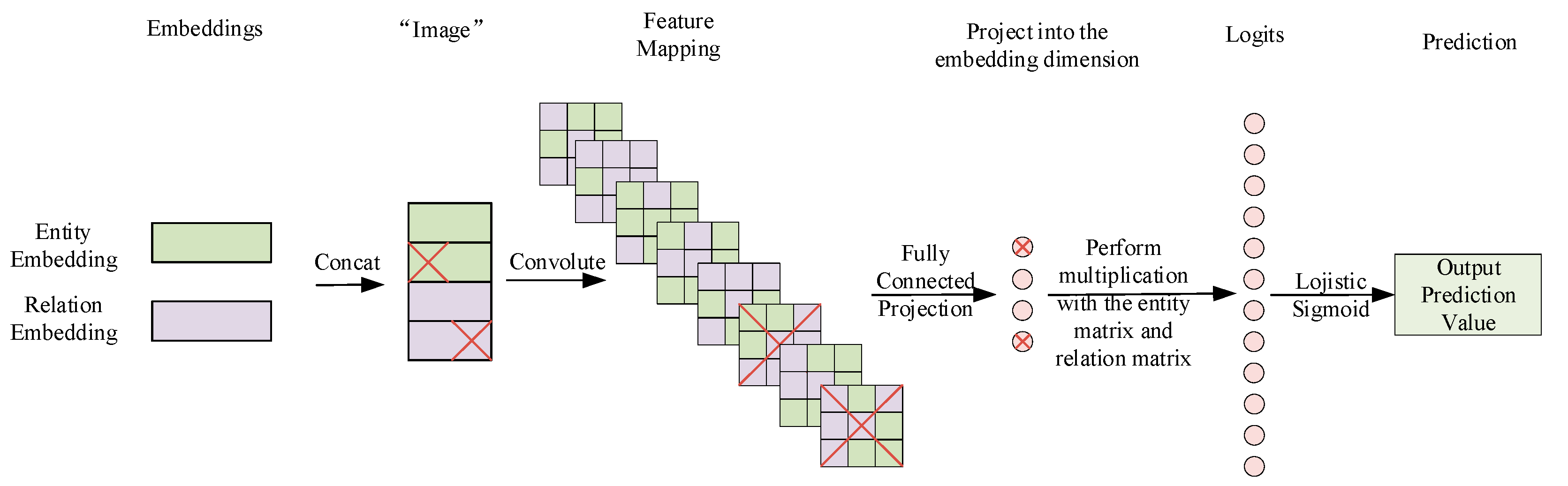

3.3. ConvE Layer

3.4. Loss Function

4. Empirical Results and Discussion

4.1. Experimental Datasets and Evaluation Metrics

4.2. Experimental Parameter Settings

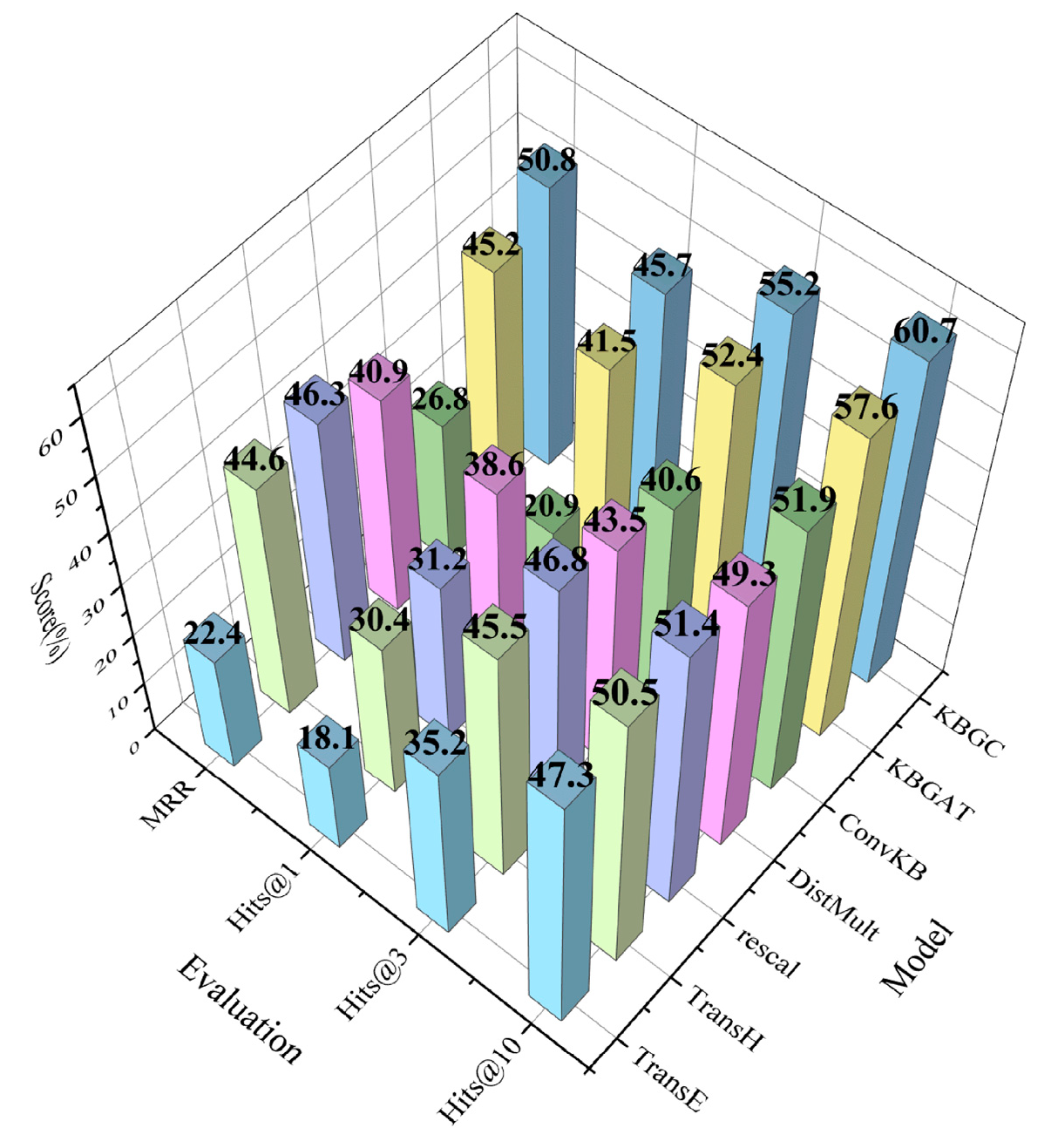

4.3. Comparative Experiments

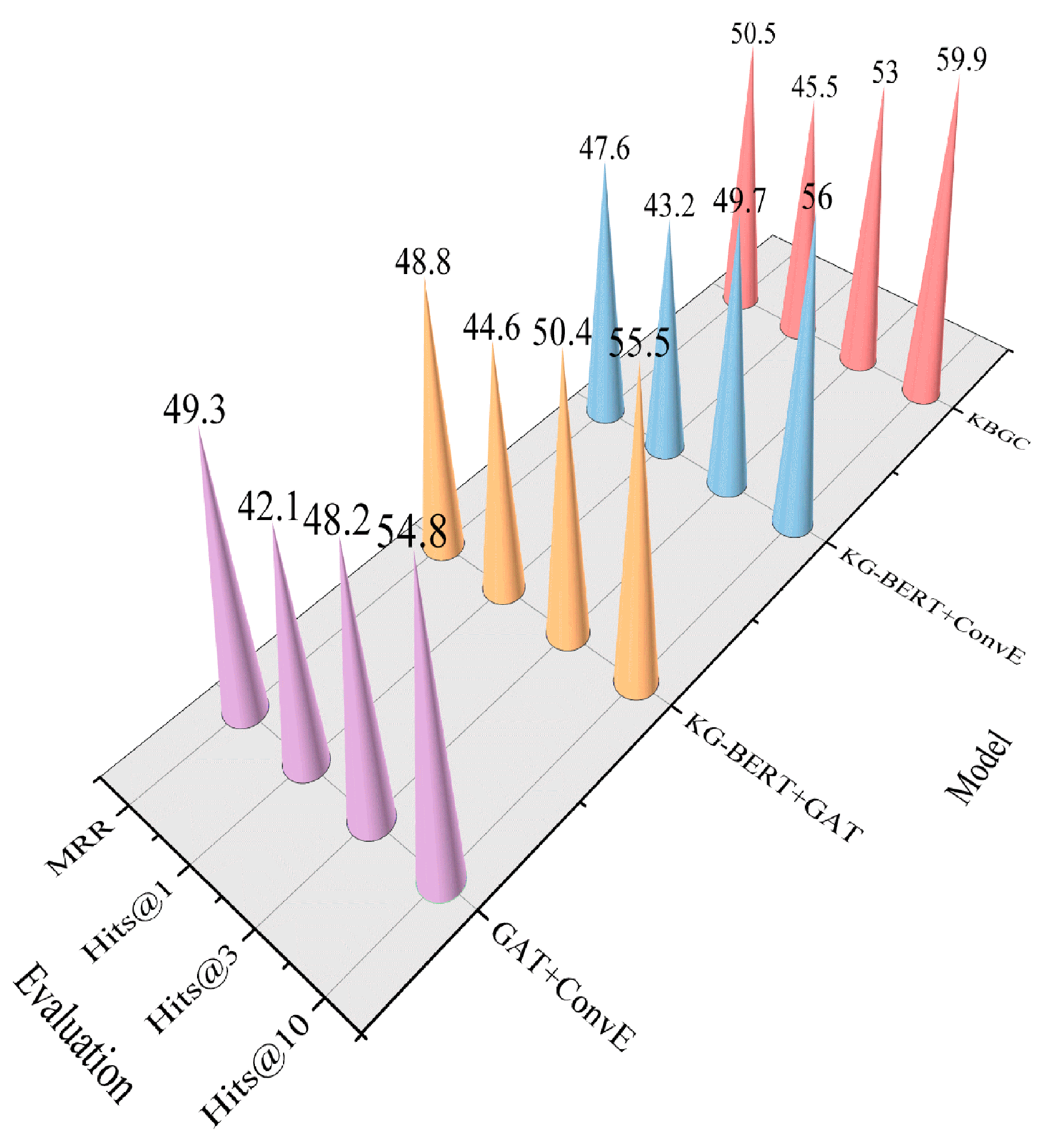

4.4. Ablation Experiments

- (1) GAT + ConvE: The pre-trained KG-BERT model is removed, leaving the GAT and ConvE modules. GAT captures graph structure by updating entity representations along the graph edges (relationships). ConvE then extracts local features from the entity and relationship embeddings through convolution operations and uses them for prediction.

- (2) KG-BERT + GAT: The ConvE module is removed, leaving the KG-BERT and GAT modules. KG-BERT uses the pre-trained BERT model to embed the entities and relationships of each triplet into a vector space, capturing complex semantic information. GAT then updates each entity representation according to the influence of its neighboring entities, with attention weights determining the importance of each neighbor.

- (3) KG-BERT + ConvE: The GAT module is removed, leaving the KG-BERT and ConvE modules. KG-BERT uses the pre-trained BERT model to capture global semantic information in the knowledge graph, while ConvE aggregates local features of the entities and relationships through convolution operations. This combination balances semantic understanding and local feature extraction, but it lacks the supplementary graph structure information that GAT provides.

- (4) KBGC: The complete model integrates all three modules into a multi-level architecture. KG-BERT performs global semantic encoding of the entities and relationships using the BERT model. The GAT module extracts graph structure features from the knowledge graph, supplementing local structural information. The ConvE convolutional network then aggregates features through interactions between the entities and relationships to accomplish the completion task.
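The four configurations above differ only in which of the three stages is switched off. The following is a minimal sketch of that bookkeeping, not the authors' implementation: the `AblationConfig` class, its field names, and the `stages` helper are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical container: which KBGC stages are enabled in one run."""
    name: str
    use_kg_bert: bool
    use_gat: bool
    use_conve: bool

    def stages(self) -> List[str]:
        """Return the enabled stages in pipeline order."""
        order = [
            ("KG-BERT", self.use_kg_bert),  # global semantic encoding
            ("GAT", self.use_gat),          # graph structure features
            ("ConvE", self.use_conve),      # convolutional feature aggregation
        ]
        return [stage for stage, enabled in order if enabled]


# The four variants described in the ablation list.
ABLATIONS = [
    AblationConfig("GAT + ConvE", use_kg_bert=False, use_gat=True, use_conve=True),
    AblationConfig("KG-BERT + GAT", use_kg_bert=True, use_gat=True, use_conve=False),
    AblationConfig("KG-BERT + ConvE", use_kg_bert=True, use_gat=False, use_conve=True),
    AblationConfig("KBGC", use_kg_bert=True, use_gat=True, use_conve=True),
]

for cfg in ABLATIONS:
    print(f"{cfg.name}: {' -> '.join(cfg.stages())}")
```

Each reduced variant drops exactly one stage, so a performance gap between it and the full KBGC model can be attributed to the missing module.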

4.5. Model Generalization Analysis

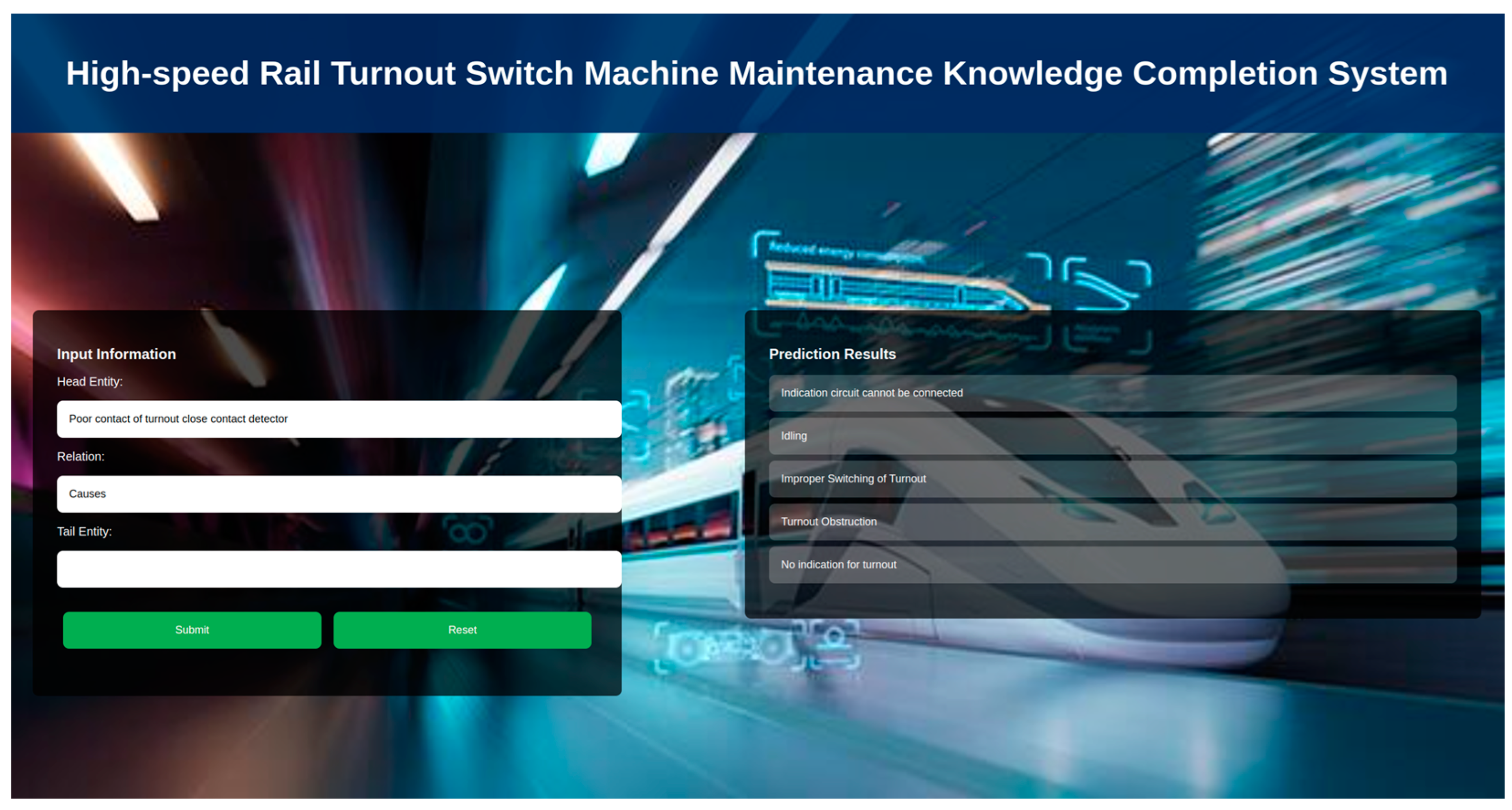

5. Knowledge Completion Visualization Application

6. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Head Entity Type | Relationship Type | Tail Entity Type | Example Triplet | Number of Triplets |
|---|---|---|---|---|
| Fault Location | Affiliation | Switch Equipment | (Switch locking device, affiliation, external locking and installation) | 127 |
| Fault Phenomenon | Cause | Fault Phenomenon | (Red light flashing during track switch, cause, switch start circuit cutoff) | 565 |
| Maintenance Measure | Obtain | Maintenance Result | (Simulation experiment, obtain, switch returns to normal) | 282 |
| Maintenance Condition | Permit | Maintenance Measure | (Maintenance window, permit, inspect the contact block assembly of the switch machine) | 1021 |
| Maintenance Measure | Verify | Maintenance Experiment | (Inspection and disconnection, verify, simulation experiment) | 346 |
| Equipment Phenomenon | Take | Maintenance Measure | (Irregular pointer, take, joint repair by union departments) | 1932 |
| Fault Location | Occur | Fault Phenomenon | (Sliding plate, occur, switch stock rail lacks lubrication) | 663 |
| Fault Phenomenon | Determine | Fault Nature | (Switch machine motor malfunction, determine, poor maintenance) | 278 |
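The example triplets in the table above map directly onto a (head, relationship, tail) data structure. The sketch below shows one way to hold them, to derive the neighbor lists a graph layer would consume, and to mask the tail for a completion query. The `Triplet` type and the helper names `build_neighbors` and `mask_tail` are assumptions made for illustration; the entity strings are copied from the table.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Triplet(NamedTuple):
    head: str
    relation: str
    tail: str


# A few example triplets taken from the table above.
TRIPLETS = [
    Triplet("Switch locking device", "affiliation", "external locking and installation"),
    Triplet("Red light flashing during track switch", "cause", "switch start circuit cutoff"),
    Triplet("Simulation experiment", "obtain", "switch returns to normal"),
    Triplet("Sliding plate", "occur", "switch stock rail lacks lubrication"),
]


def build_neighbors(triplets: List[Triplet]) -> Dict[str, List[Tuple[str, str]]]:
    """Map each head entity to its outgoing (relation, tail) edges."""
    neighbors = defaultdict(list)
    for t in triplets:
        neighbors[t.head].append((t.relation, t.tail))
    return dict(neighbors)


def mask_tail(t: Triplet) -> Tuple[str, str, str]:
    """Turn a known triplet into a tail-prediction query (head, relation, ?)."""
    return (t.head, t.relation, "?")


graph = build_neighbors(TRIPLETS)
print(graph["Sliding plate"])
print(mask_tail(TRIPLETS[1]))
```

A completion model is asked to rank candidate entities for the `"?"` slot; the known tail serves as the ground truth when scoring the ranking.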

| Parameter Name | Parameter Value |
|---|---|
| KG-BERT Learning Rate | 3 × 10⁻⁵ |
| KG-BERT Embedding Dimension | 768 |
| KG-BERT Batch Size | 16 |
| GAT Dropout Rate | 0.3 |
| Number of GAT Layers | 1 |
| Number of GAT Attention Heads | 8 |
| ConvE Convolution Kernel Size | 3 |
| Optimizer | Adam |
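For reproducibility, the settings in the table above can be collected in a single configuration object. This is a sketch only: the class name `KBGCHyperParams` and its field names are hypothetical, while the values are those listed in the table.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class KBGCHyperParams:
    """Hypothetical config object; values are copied from the parameter table."""
    kg_bert_learning_rate: float = 3e-5
    kg_bert_embedding_dim: int = 768
    kg_bert_batch_size: int = 16
    gat_dropout: float = 0.3
    gat_num_layers: int = 1
    gat_num_heads: int = 8
    conve_kernel_size: int = 3
    optimizer: str = "Adam"


params = KBGCHyperParams()
for key, value in asdict(params).items():
    print(f"{key} = {value}")
```

Freezing the dataclass prevents accidental changes between the comparative and ablation runs, so every variant is trained under the same settings.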

| Dataset | Model | MRR/% | Hits@1/% | Hits@3/% | Hits@10/% |
|---|---|---|---|---|---|
| FB15k-237 | TransE | 29.4 | 20.7 | 31.6 | 46.5 |
| FB15k-237 | TransH | 27.1 | 19.8 | 30.3 | 44.2 |
| FB15k-237 | RESCAL | 35.4 | 26.1 | 38.8 | 53.2 |
| FB15k-237 | DistMult | 24.1 | 15.5 | 26.3 | 41.7 |
| FB15k-237 | ConvKB | 25.3 | 16.0 | 27.9 | 42.2 |
| FB15k-237 | KBGAT | 51.8 | 25.8 | 39.8 | 62.1 |
| FB15k-237 | KBGC | 53.6 | 41.2 | 52.9 | 63.7 |
| WN18RR | TransE | 22.6 | 5.3 | 36.1 | 49.7 |
| WN18RR | TransH | 45.9 | 9.8 | 39.3 | 51.7 |
| WN18RR | RESCAL | 46.8 | 42.2 | 47.1 | 52 |
| WN18RR | DistMult | 42.8 | 39.0 | 44.0 | 49 |
| WN18RR | ConvKB | 24.7 | 5.4 | 36.4 | 52.4 |
| WN18RR | KBGAT | 44 | 45 | 48.6 | 58.1 |
| WN18RR | KBGC | 48.5 | 43.3 | 48.0 | 59.3 |
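The MRR and Hits@k columns above are computed from the rank that a model assigns to the correct entity for each test query. The sketch below assumes the standard link-prediction definitions (mean of reciprocal ranks, and the fraction of queries ranked within the top k); the function names and the `ranks` list are hypothetical illustration data, not results from the paper.

```python
from typing import Sequence


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/rank over all test queries (ranks start at 1)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of test queries whose correct entity is ranked in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Hypothetical ranks of the correct tail entity for four test queries.
ranks = [1, 2, 5, 12]

print(f"MRR     = {100 * mean_reciprocal_rank(ranks):.1f}%")
for k in (1, 3, 10):
    print(f"Hits@{k:<2} = {100 * hits_at_k(ranks, k):.1f}%")
```

For these four ranks, MRR is about 44.6%, Hits@1 is 25%, Hits@3 is 50%, and Hits@10 is 75%. MRR rewards every improvement in rank, whereas Hits@k only registers whether the correct entity crosses the top-k threshold.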

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, H.; Bao, J.; Hu, N.; Zhao, Z.; Bai, W.; Li, D. Knowledge Graph Completion for High-Speed Railway Turnout Switch Machine Maintenance Based on the Multi-Level KBGC Model. Actuators 2024, 13, 410. https://doi.org/10.3390/act13100410
