3.1. Miss-Distance Advanced Kalman Prediction Filtering Controller

To characterize how the LOS changes while an EODS tracks and aims at a target, a linear motion transformation model of aiming is established. In actual shooting, aiming and tracking are low-frequency, small-amplitude, small-range motions within 1.5 Hz. The LOS motion in the pitch and azimuth directions is essentially the same, so the LOS jitter can be approximated by a linear motion transformation model.

The signal collection of a tracking controller is a discrete process. According to the general model of the random linear discrete system, the mathematical equations for an LOS’s motion state and the image tracker’s measured value can be obtained.

Equation (5) involves the following quantities: the n-dimensional state vector at time k, the n × n state transition matrix, the n × p noise input matrix, the p-dimensional state noise sequence, the m-dimensional observation sequence, the m × n observation matrix, and the m-dimensional observation noise sequence.
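For orientation, a general random linear discrete system with these dimensions is commonly written in the form below. The symbols X, Φ, Γ, W, Z, H, and V and the time indexing are assumptions that follow common textbook notation; they may differ from the notation used in Equation (5).

```latex
\begin{aligned}
X(k) &= \Phi\,X(k-1) + \Gamma\,W(k-1), \\
Z(k) &= H\,X(k) + V(k),
\end{aligned}
\qquad
X \in \mathbb{R}^{n},\;
\Phi \in \mathbb{R}^{n \times n},\;
\Gamma \in \mathbb{R}^{n \times p},\;
W \in \mathbb{R}^{p},\;
Z, V \in \mathbb{R}^{m},\;
H \in \mathbb{R}^{m \times n}.
```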

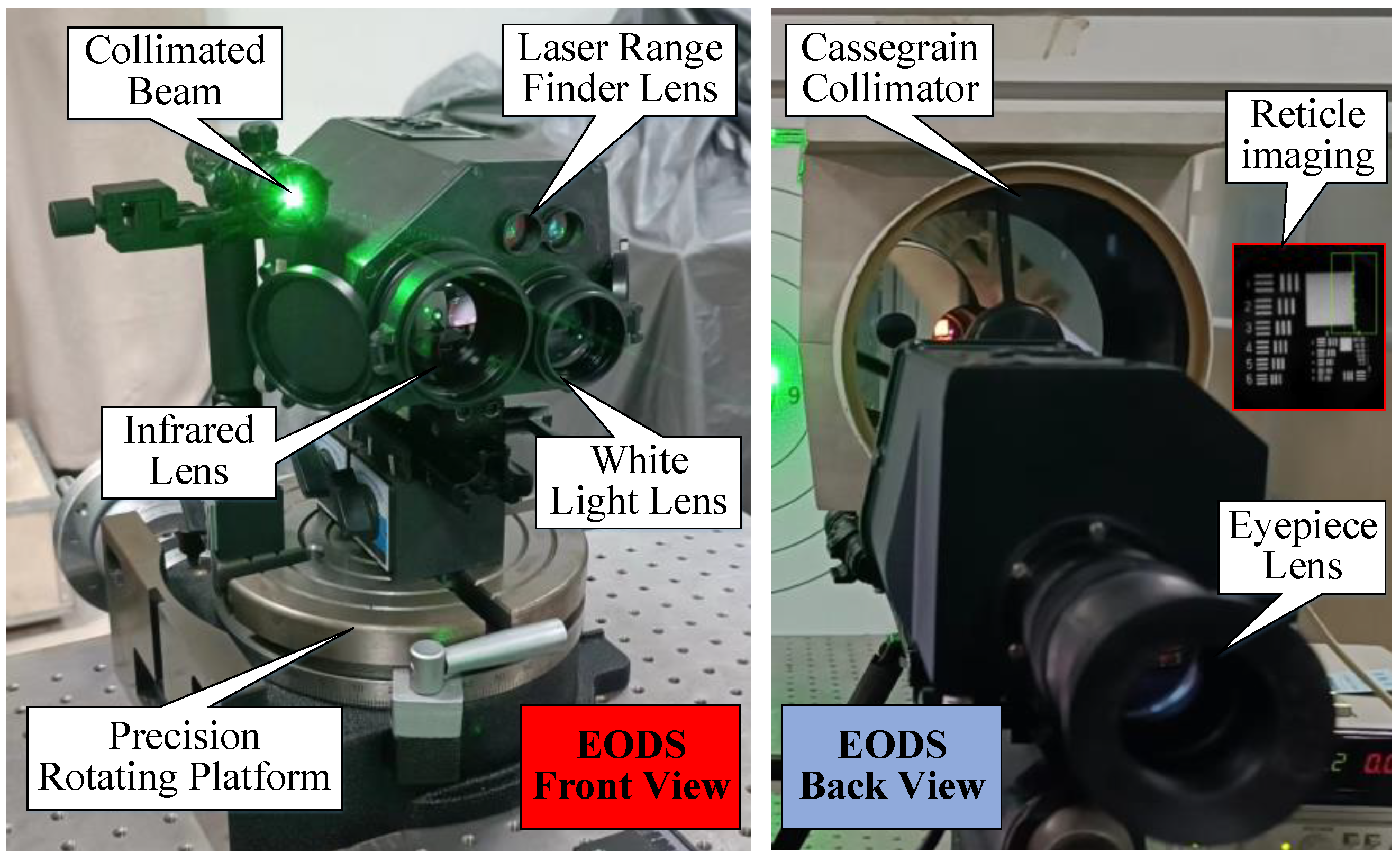

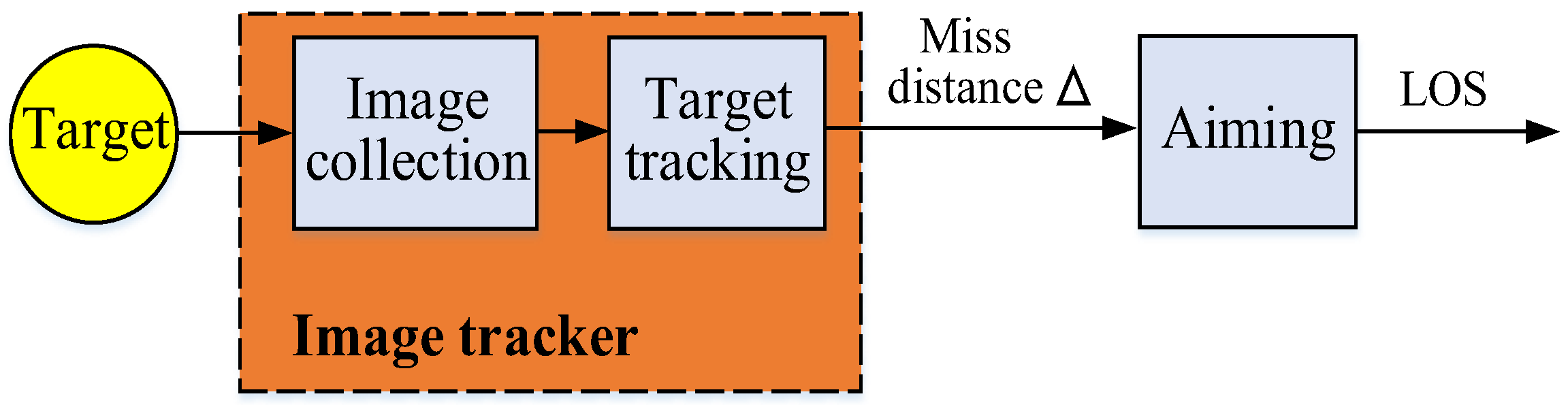

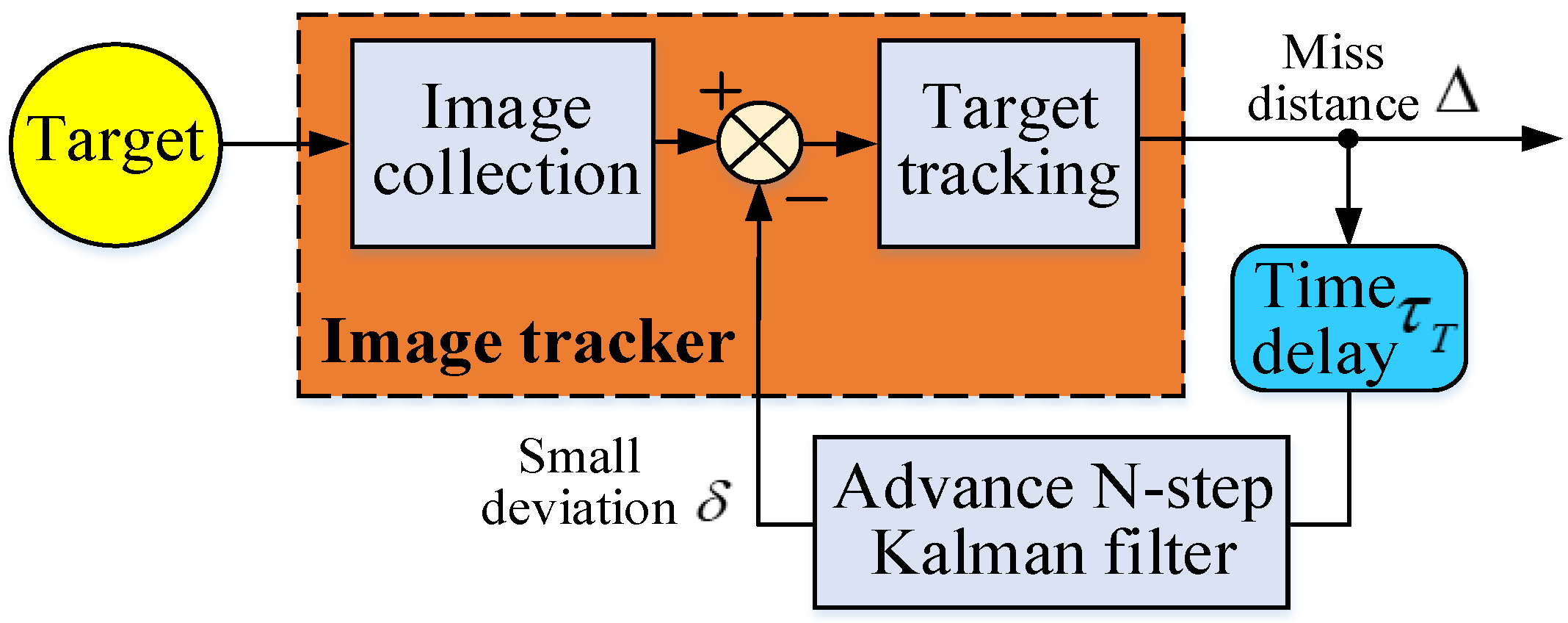

Figure 5 shows the optimized Kalman prediction filter of the EODS. Traditional signal fusion estimation does not demand very high precision, so it is usually limited to one-step prediction. However, for practical engineering applications such as EODS tracking and aiming, the traditional filter needs to be improved.

The optimized model satisfies the following two assumptions.

Assumption 1. The state noise and the observation noise are mutually uncorrelated, zero-mean white noise sequences with variances Q and R, respectively. Equation (6) expresses this property using the Kronecker delta function.

Assumption 2. The initial state value is uncorrelated with both the state noise and the observation noise.

On the basis of Assumptions 1 and 2, the optimized prediction equation for the current time can be deduced from the estimated value obtained a time NT earlier.

Similarly, the prediction mean square error equation for the current time can be deduced from the mean square error obtained a time NT earlier.

The Kalman prediction gain matrix equation can be obtained.

Then, using the data measured via the image tracker to correct the current state value, the current time-optimal prediction estimation equation can be deduced.

Finally, the advanced N-step optimal filter prediction mean square error equation after data update can be deduced.
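The five steps above follow the familiar predict, gain, correct, and update cycle of a Kalman filter, with the one-step time update replaced by an N-step one. A textbook form of such a recursion for a time-invariant model is sketched below. All symbols are assumptions introduced for illustration (the estimate is written with a hat, P is the mean square error, K is the gain, and Φ, Γ, and H are the state transition, noise input, and observation matrices); the sketch is not a reproduction of the equations referred to in the text.

```latex
\begin{aligned}
\hat{X}(k \mid k-N) &= \Phi^{N}\,\hat{X}(k-N \mid k-N), \\
P(k \mid k-N) &= \Phi^{N} P(k-N \mid k-N)\,(\Phi^{N})^{\mathsf T}
  + \sum_{i=0}^{N-1} \Phi^{i}\,\Gamma Q \Gamma^{\mathsf T}\,(\Phi^{i})^{\mathsf T}, \\
K(k) &= P(k \mid k-N)\,H^{\mathsf T}
  \left[ H P(k \mid k-N) H^{\mathsf T} + R \right]^{-1}, \\
\hat{X}(k \mid k) &= \hat{X}(k \mid k-N)
  + K(k)\left[ Z(k) - H \hat{X}(k \mid k-N) \right], \\
P(k \mid k) &= \left[ I - K(k) H \right] P(k \mid k-N).
\end{aligned}
```

With N = 1 this reduces to the standard one-step Kalman filter.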

The EODS’s image tracker uses an optimal prediction filter structure based on the LOS’s linear motion transformation. So, the state transition matrix in Equation (5) can be deduced.

If the initial values of the filter are known, the state estimation vector at time k can be calculated recursively from the tracker observation value at time k. If the observation value has a time-delay τ, the observation actually available at time k describes the LOS at an earlier instant. Therefore, the state estimate obtained at time k is actually an estimate of the LOS value at the past time k − n.

The MD signal differs from the angular velocity signal of the incremental encoder. The sampling period of the encoder is generally in the range of 10 μs to 500 μs and varies relatively little. The MD signal, by contrast, is affected by frame rate, lens resolution, and hardware computing power, and the sampling period of the tracker is generally in the range of 1 ms to 100 ms. As the tracking time increases, the amount of data processed by the algorithm grows exponentially, so the tracker samples quickly at first and then slows down. If the hardware performance is poor, the tracker gradually deteriorates from MD time-delay to stagnation in the later stage. Therefore, in contrast to the speed-loop incremental encoder, the MD signal of the position-loop tracker can be regarded as a non-uniformly sampled discrete signal.

By adjusting the step size n, the optimal Kalman algorithm suitable for different systems can be obtained. The parameters used in this paper are a frame rate of 25 Hz and a lens resolution of 1280 × 720, with good hardware computing power. Based on this EODS configuration, a simulation with n = 3 is used as an example to demonstrate the effectiveness of the algorithm. According to the single-stage Kalman filter equation, the past state vector at time k − 3 is estimated from the current observation value at time k. This estimate is then propagated through a three-step prediction to obtain the estimated LOS value at time k. Finally, the following mathematical equation is obtained.

According to the statistical results of the image tracker, the observation noise variance is R = 0.0019; the tracker time-delay is estimated from the same statistics. The filter initial value, the observation matrix, the mean square error of the initial value, and the initial value of the filter gain matrix are preset, and the model process noise Q is mainly obtained through comparative experiments.
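The idea of estimating the state at time k − 3 from the delayed observation and then predicting three steps ahead can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the model used in the paper: the state is taken as [angle, angular rate] with a constant-velocity transition matrix, the delay is exactly n = 3 frames at 25 Hz, the true LOS is a 0.5 Hz sinusoid of 1 mrad amplitude, and the process noise value `q_var` is a placeholder. Only R = 0.0019, the frame rate, and n = 3 come from the text.

```python
import numpy as np


def simulate(n_delay=3, frame_rate=25.0, r_var=0.0019, q_var=50.0,
             freq_hz=0.5, duration=15.0, seed=0):
    """Return (rms_raw, rms_pred): RMS error of the delayed measurement
    and of the n-step Kalman prediction, both against the true LOS."""
    T = 1.0 / frame_rate
    steps = int(duration * frame_rate)
    t = np.arange(steps) * T
    truth = np.sin(2.0 * np.pi * freq_hz * t)      # assumed LOS motion (mrad)
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, np.sqrt(r_var), steps)

    # Assumed constant-velocity model: state = [angle, angular rate].
    Phi = np.array([[1.0, T], [0.0, 1.0]])
    Gamma = np.array([[0.5 * T * T], [T]])
    H = np.array([[1.0, 0.0]])
    Qd = Gamma @ Gamma.T * q_var
    Phi_n = np.linalg.matrix_power(Phi, n_delay)

    x = np.zeros((2, 1))
    P = np.eye(2)
    raw_err, pred_err = [], []
    for k in range(n_delay, steps):
        # The measurement available at time k describes the LOS at k - n_delay.
        z = truth[k - n_delay] + noise[k]

        # Single-stage filter: time update, then measurement update,
        # giving the estimate of the past state at k - n_delay.
        x = Phi @ x
        P = Phi @ P @ Phi.T + Qd
        S = H @ P @ H.T + r_var
        K = P @ H.T / S
        x = x + K * (z - (H @ x)[0, 0])
        P = (np.eye(2) - K @ H) @ P

        # n-step prediction from k - n_delay up to the current time k.
        los_now = (Phi_n @ x)[0, 0]

        raw_err.append(z - truth[k])
        pred_err.append(los_now - truth[k])

    def rms(e):
        return float(np.sqrt(np.mean(np.square(e))))

    return rms(raw_err), rms(pred_err)


if __name__ == "__main__":
    rms_raw, rms_pred = simulate()
    print(f"delayed measurement RMS error: {rms_raw:.3f} mrad")
    print(f"3-step prediction RMS error:   {rms_pred:.3f} mrad")
```

Under these assumptions the delayed measurement lags the true LOS by three frames, while the predicted value is extrapolated to the current frame, so the prediction error is expected to be the smaller of the two.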

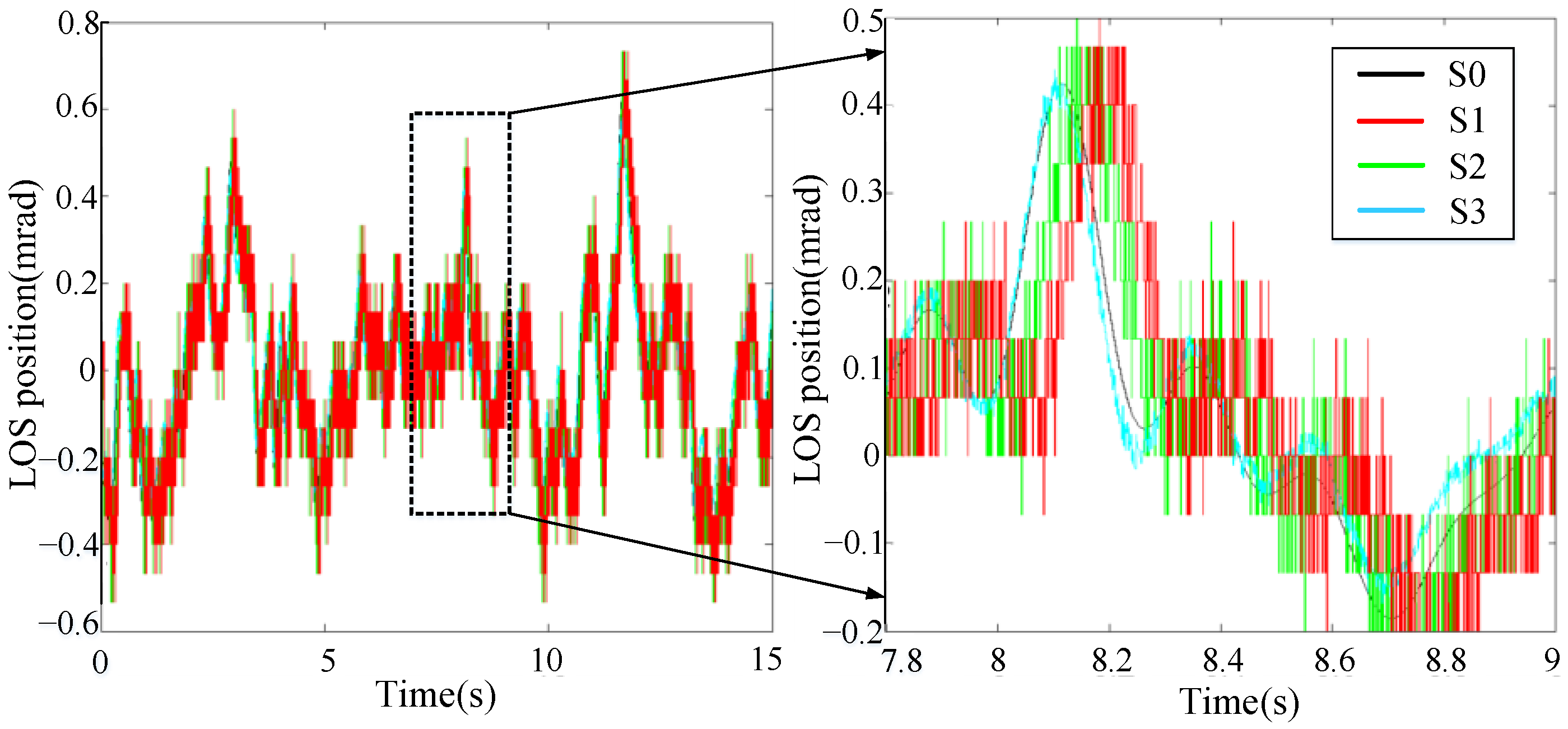

The model is simulated with the fourth-order Runge-Kutta method, and the step length is set to 0.001 s. According to the linear motion transformation model, the LOS statistical data in the X azimuth direction are fitted to a frequency spectrum function, which is then fed to the filter model as the true value for testing. As shown in Figure 6, the black curve S0 represents the true value of the LOS, the red curve S1 represents the tracker measurement, the green curve S2 represents the traditional moving-average filter method, and the blue curve S3 represents the optimized advanced N-step Kalman filter prediction method.

The black curve is taken as the reference. In Figure 6, the red, green, and blue curves follow the same trend as the black curve, which shows that all three methods basically reflect the dynamic change in the LOS true value. In the locally enlarged area of Figure 6 (7.8~9 s), the blue curve is closest to the black curve, which shows that the optimized method has the highest test accuracy of the three.

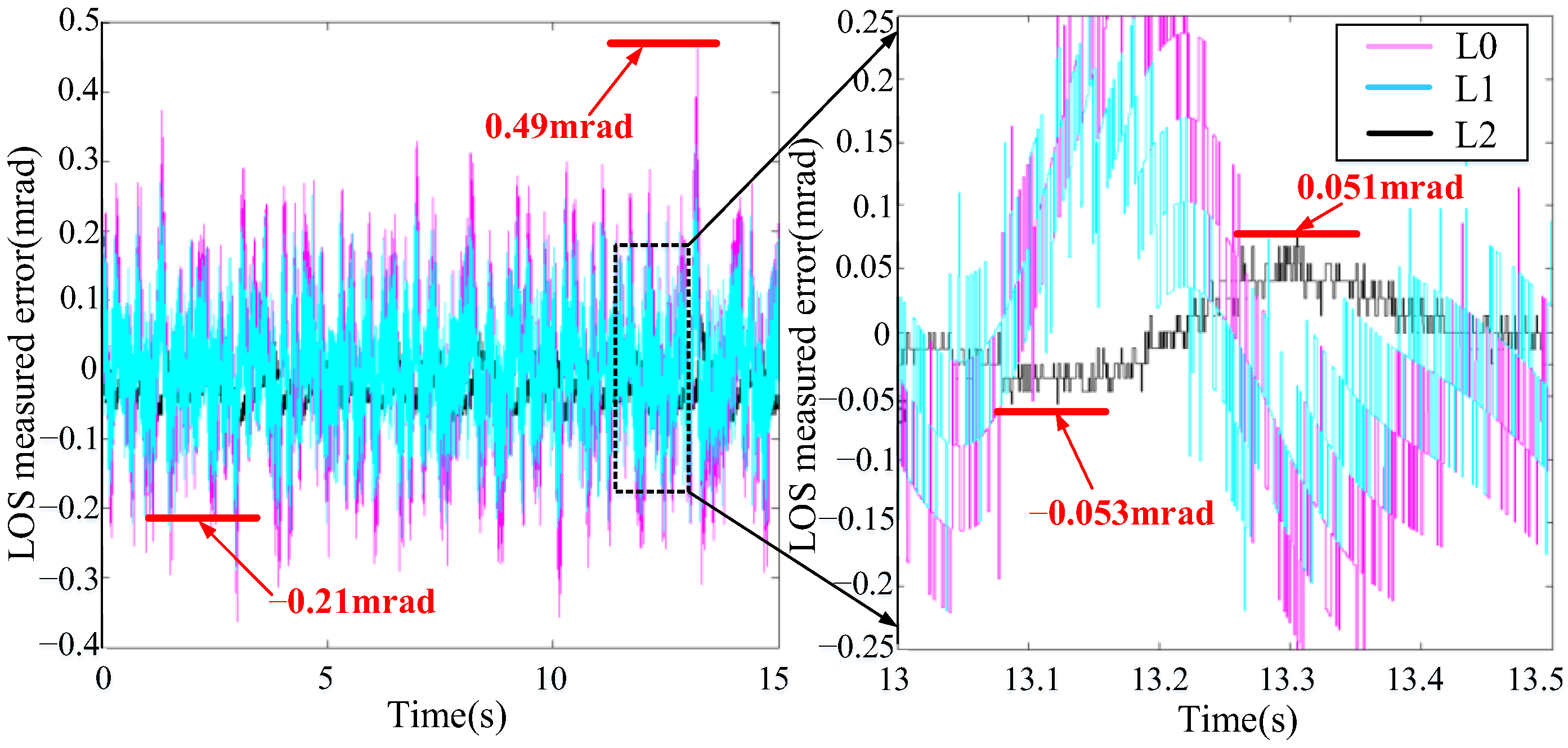

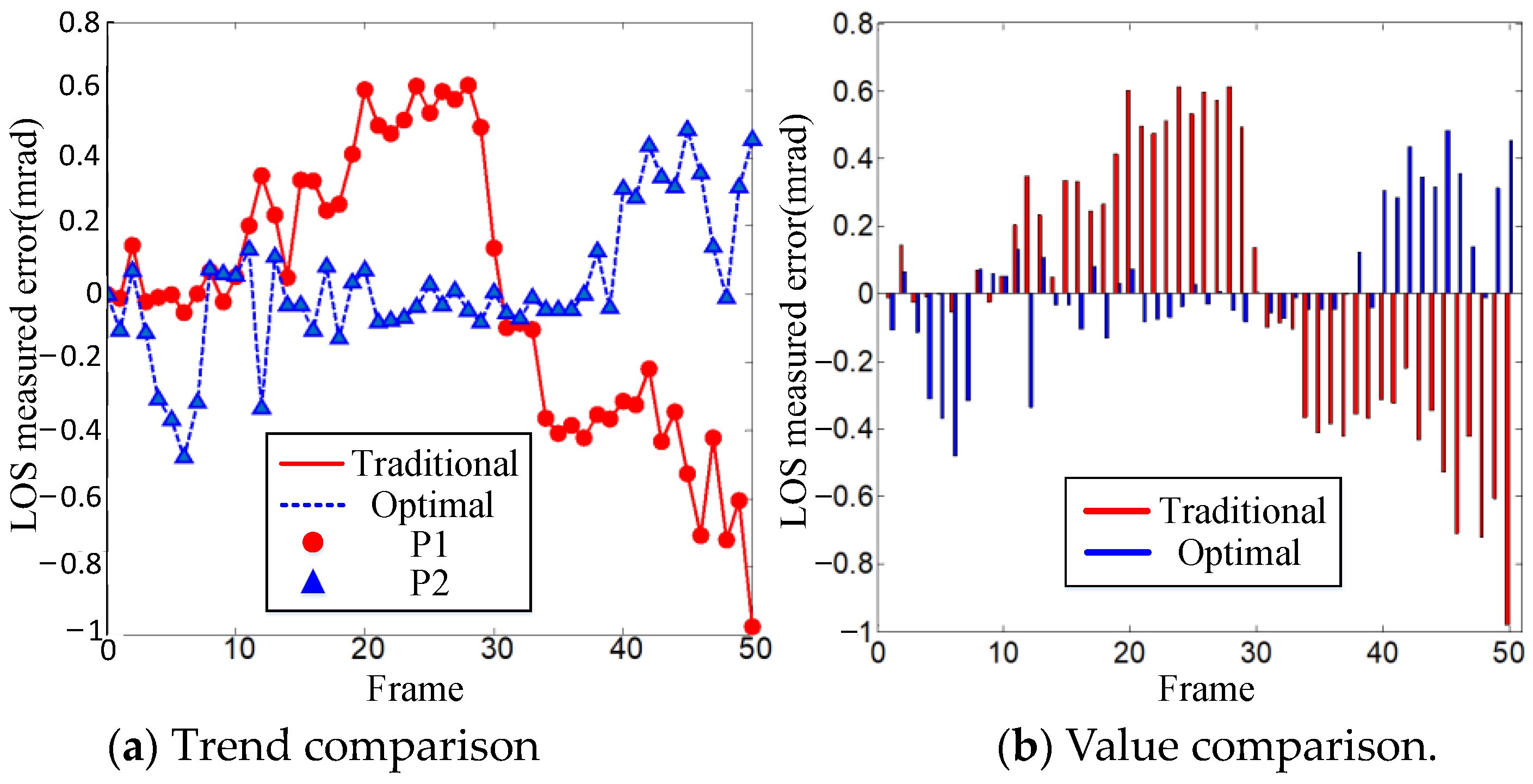

In Figure 7, the red curve L0 represents the inherent measured error between the image tracker’s measured value and the LOS true value. The blue curve L1 represents the traditional method’s measured error between the moving-average filtering value and the LOS true value. The black curve L2 represents the optimal method’s measured error between the advanced N-step Kalman predicted value and the LOS true value.

Comparing the three curves in Figure 7, the red curve has the largest peak value, the black curve has the smallest, and the blue curve lies in between. Detailed data are shown in Table 1. The inherent measured error is 0.49 mrad (10~15 s), and the traditional method’s measured error is 0.21 mrad (0~5 s), so the traditional method reduces the error by 57.1%. This shows that the traditional method can reduce the tracker’s inherent measured error to a certain extent. In the locally enlarged area of Figure 7 (13~13.5 s), the black curve has two peaks, with an upper bound of 0.051 mrad and a lower bound of −0.053 mrad. The optimal method’s measured error is therefore 0.053 mrad, a reduction of 89.2% relative to the inherent error. Both the traditional and the optimal methods thus reduce the tracker’s measured error, but the error of the optimal method is a further 74.8% lower than that of the traditional method. This shows that the advanced N-step Kalman filter prediction controller can effectively correct the small deviation caused by the tracker time-delay and improve the shooting accuracy of an EODS.
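The three percentages quoted above can be checked with a few lines of arithmetic. The helper name `reduction_percent` is introduced here only for illustration; the error values are those given in the text.

```python
def reduction_percent(reference, value):
    """Relative reduction of `value` with respect to `reference`, in percent."""
    return 100.0 * (reference - value) / reference


inherent, traditional, optimal = 0.49, 0.21, 0.053  # peak errors in mrad

print(round(reduction_percent(inherent, traditional), 1))  # 57.1
print(round(reduction_percent(inherent, optimal), 1))      # 89.2
print(round(reduction_percent(traditional, optimal), 1))   # 74.8
```

Note that the first two figures are measured against the tracker’s inherent error, whereas the third is measured against the traditional method’s error.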

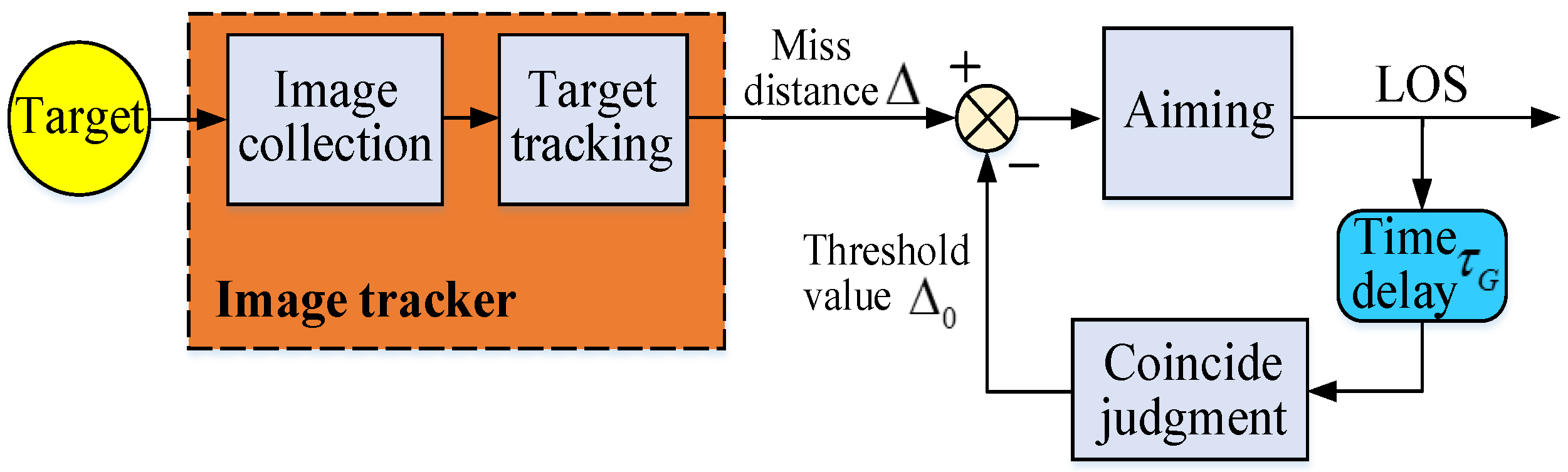

3.2. Miss-Distance Judgment of LOS Firing Controller

The optimized filter controller reduces the tracking time-delay error between the measured value and the true value and outputs an accurate LOS predicted value, that is, the miss-distance Δ in Figure 8. To improve the tracking precision, the LOS predicted position must also coincide with the target’s actual position within the firing threshold value, so as to reduce the adverse impact of the tracker time-delay error on EODS firing.

The miss-distance Δ is subject to the tracker time-delay discussed in Section 3.1. The aiming and tracking actions of an EODS are low-frequency, small-amplitude, small-range motions within 1.5 Hz. According to the linear motion transformation model, the mathematical equation of the LOS firing control judgment correction can be obtained.

Equation (17) involves the LOS predicted value, the time-delay, the LOS angular velocity, the firing judgment threshold, and the LOS fusion value.
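One possible reading of a linear-motion judgment correction built from these five quantities is sketched below. It is an assumption made for illustration, not the form of Equation (17): the fusion value is taken to be the predicted value extrapolated linearly over the time-delay at the current angular velocity, and firing is permitted when its magnitude does not exceed the threshold. The function names and the numeric inputs are hypothetical.

```python
def fusion_value(predicted, angular_velocity, time_delay):
    """Assumed linear extrapolation: predicted LOS value (mrad) advanced by
    angular_velocity (mrad/s) over time_delay (s)."""
    return predicted + angular_velocity * time_delay


def may_fire(predicted, angular_velocity, time_delay, threshold):
    """Assumed firing judgment: the fusion value lies within +/- threshold."""
    return abs(fusion_value(predicted, angular_velocity, time_delay)) <= threshold


# Hypothetical numbers, chosen only to exercise both outcomes.
print(may_fire(0.03, -0.5, 0.04, 0.05))  # LOS moving toward the target
print(may_fire(0.20, 0.5, 0.04, 0.05))   # LOS far away and moving off
```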

Ideally, the shooting accuracy ε is attained when the LOS fusion value coincides with the actual target position, so the firing threshold value should be less than ε. However, the actual manual tracking and aiming process is complex, and the LOS linear motion model is affected by external factors. Therefore, a composite constraint, Equation (18), is added to the LOS judgment correction in Equation (17).

Combining Equations (17) and (18), a new mathematical equation can be obtained.

The lens resolution is N = 1280 × 720, and the lens field angle is 3.6125° × 2.034°. When the tracker’s measured value lies n pixels from the center of the field of view, the quantitative relationship for the miss-distance Δ can be obtained.

Then, the deviation value δ0 of a single pixel is converted to 0.0493 mrad using Equation (20). For long-distance precision shooting, the EODS needs to keep the small deviation δ within 1~3 pixels at a lens resolution of 1280 × 720 [6,7]. Suppose the small deviation δ caused by the tracker time-delay is n = ±3 pixels. The tracker accuracy in the X azimuth and Y pitch directions is then ±0.14775 mrad and ±0.1479 mrad, respectively. The mathematical equation of the preset accuracy ε can be obtained.
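The pixel-to-angle conversion can be reproduced approximately with the short calculation below. It assumes that the angular size of one pixel is simply the field angle divided by the pixel count along that axis, which may not be the exact form of Equation (20); the function name is introduced for illustration.

```python
import math


def mrad_per_pixel(field_angle_deg, pixels):
    """Angular size of one pixel in mrad, assuming the field angle is
    divided evenly over the pixels along that axis."""
    return math.radians(field_angle_deg) * 1000.0 / pixels


x_pixel = mrad_per_pixel(3.6125, 1280)  # X azimuth direction
y_pixel = mrad_per_pixel(2.034, 720)    # Y pitch direction

print(f"single pixel: X {x_pixel:.4f} mrad, Y {y_pixel:.4f} mrad")
print(f"3 pixels:     X {3 * x_pixel:.4f} mrad, Y {3 * y_pixel:.4f} mrad")
```

Under this assumption both axes give roughly 0.0493 mrad per pixel and about 0.148 mrad for three pixels, in line with the values quoted in the text and with a preset accuracy on the order of 0.15 mrad.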

As shown in Figure 9, the black curve P1 represents the LOS true value, the red curve P2 represents the LOS predicted value, and the green curve P0 represents the LOS firing judgment threshold. The green curve is produced by the optimized design method proposed in this paper.

The black curve is taken as the reference. In Figure 9, the green curve peaks three times in 0~15 s, which shows that the firing control judgment is met three times in this period. The third peak of the green curve occurs in 11.34~11.46 s. In the locally enlarged area of Figure 9, the LOS predicted value (red curve) and the LOS true value (black curve) coincide at 11.4 s. At this time, the LOS true value is about 0.01 mrad and the LOS predicted value is about 0.03 mrad, a difference of about 0.02 mrad. This shows that the optimized method can effectively select the firing time.

As shown in Table 2, the test accuracy is 0.02 mrad. Compared with the preset accuracy of 0.15 mrad, the test accuracy is improved by 86.7%. This shows that the optimized method can keep the tracker time-delay error within 1~3 pixels. The LOS firing time is effectively judged so that the target is identified at the center of the field of view of the EODS lens, which improves the tracking precision.

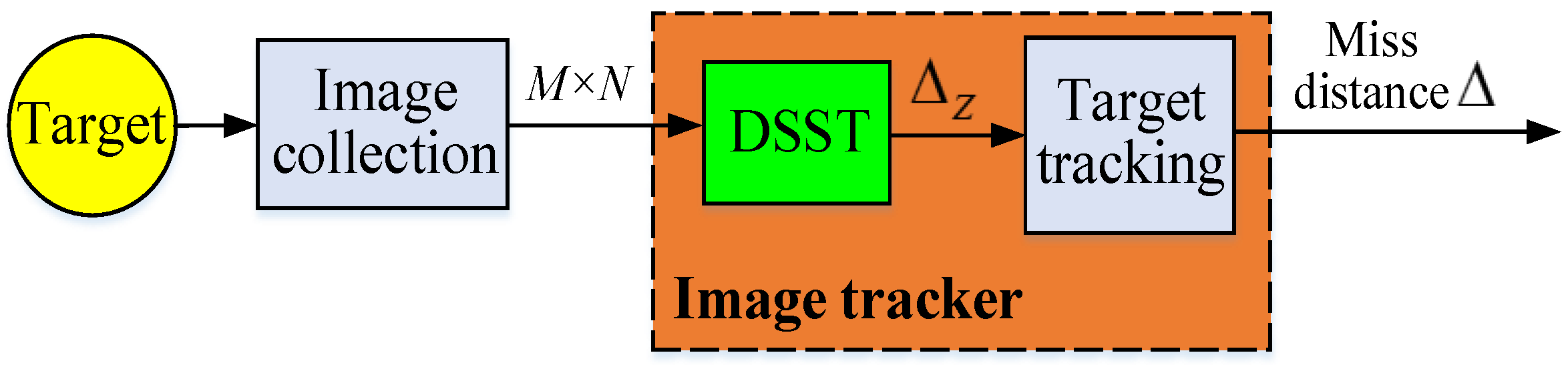

3.3. Miss-Distance Anti-Occlusion Detection and Tracking Controller

The precondition for correct operation of the LOS firing controller model is that the image tracker stably outputs the miss-distance Δ signal. In Figure 10, the tracker time-delay can be compensated via filter prediction. However, once the miss-distance Δ is lost during tracking and aiming, the LOS firing controller cannot operate, which reduces the tracking precision of the EODS. As shown in Figure 10, the basic principle of the optimized image tracker is to obtain, through image processing, a DSST filter that locates the target in resolution coordinates. The DSST filter then stably outputs the pixel coordinate position of the target in the next frame.

The DSST filter is used to extract the image blocks f with gray-level features from the single-sample detection area with resolution M × N [19,20,21]. Then, the filter h is solved to obtain the gray-level response value g corresponding to each image block f. A Gaussian function is selected as the expected response function g, with its peak located at the center of the corresponding sample f. Finally, the mathematical equation of the DSST filter h is obtained.

In Equation (22), ∗ represents convolution and σ is the minimum mean square error. The parameters f, g, and h are extracted from the M × N detection area: f is the gray-level feature of the different image blocks from the previous frame, g is the response value constructed using the Gaussian function, and h is the template updated at each iteration. The response values follow a Gaussian distribution, and the response maximum is located at the center of the corresponding gray-level image block. The discrete Fourier transforms of the image block f, the response value g, and the DSST filter h are used. The underline indicates the complex conjugate of a parameter. Then, Equation (23) can be obtained.

To reduce the computational load of the DSST target tracker, the numerator and denominator of Equation (24) are recorded separately.

In Equation (24), η is the adjustment coefficient, which represents the learning rate of the optimal filter. The remaining symbols denote the parameters of the current frame and the previous frame, respectively.

If the sample image Y of the next frame has resolution M × N, the updated response value Z can be obtained through Equation (25).

In Equation (25), the inverse discrete Fourier transform is applied, and λ is the adjustment coefficient.

The area with the maximum gray-level response is the target tracking position of the current frame. Where the response value Z reaches its maximum, the center-point resolution coordinate of the corresponding image block is the new target tracking position. The target coordinate is converted into the miss-distance Δ and stably output to the optimized prediction filter.
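The train, update, and detect cycle described above can be sketched with a single-channel correlation filter in the frequency domain. This is a simplified illustration in the style of MOSSE/DSST translation filters, not the tracker used in the paper: it uses raw gray levels only, omits the scale filter, and the Gaussian width and the values of η and λ are assumed. The function names are introduced for illustration.

```python
import numpy as np


def gaussian_response(shape, width=2.0):
    """Expected response g: a Gaussian whose peak sits at the patch center."""
    rows, cols = shape
    r = np.arange(rows) - rows // 2
    c = np.arange(cols) - cols // 2
    rr, cc = np.meshgrid(r, c, indexing="ij")
    return np.exp(-(rr ** 2 + cc ** 2) / (2.0 * width ** 2))


def train(patch, g):
    """Numerator and denominator of the filter for one gray-level patch f."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(g)
    numerator = np.conj(G) * F
    denominator = (np.conj(F) * F).real
    return numerator, denominator


def update(num_prev, den_prev, patch, g, eta=0.025):
    """Blend the previous-frame and current-frame terms with learning rate eta."""
    num_new, den_new = train(patch, g)
    return ((1.0 - eta) * num_prev + eta * num_new,
            (1.0 - eta) * den_prev + eta * den_new)


def detect(numerator, denominator, patch, lam=1e-2):
    """Response map for a new patch and the pixel coordinate of its maximum."""
    Z = np.fft.fft2(patch)
    response = np.real(np.fft.ifft2(np.conj(numerator) * Z / (denominator + lam)))
    peak = np.unravel_index(np.argmax(response), response.shape)
    return response, (int(peak[0]), int(peak[1]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch = rng.normal(size=(32, 32))            # stand-in for a gray-level block
    g = gaussian_response(patch.shape)
    num, den = train(patch, g)

    moved = np.roll(patch, shift=(3, 5), axis=(0, 1))  # target shifted by (3, 5)
    _, peak = detect(num, den, moved)
    print("response peak:", peak, "patch center:", (16, 16))
```

The offset between the response peak and the patch center gives the displacement of the target in pixels, which is the quantity that would be converted into the miss-distance Δ.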

As shown in Figure 11a, an aerial view of the complex background is used as the single-sample training data for anti-occlusion DSST target tracking.

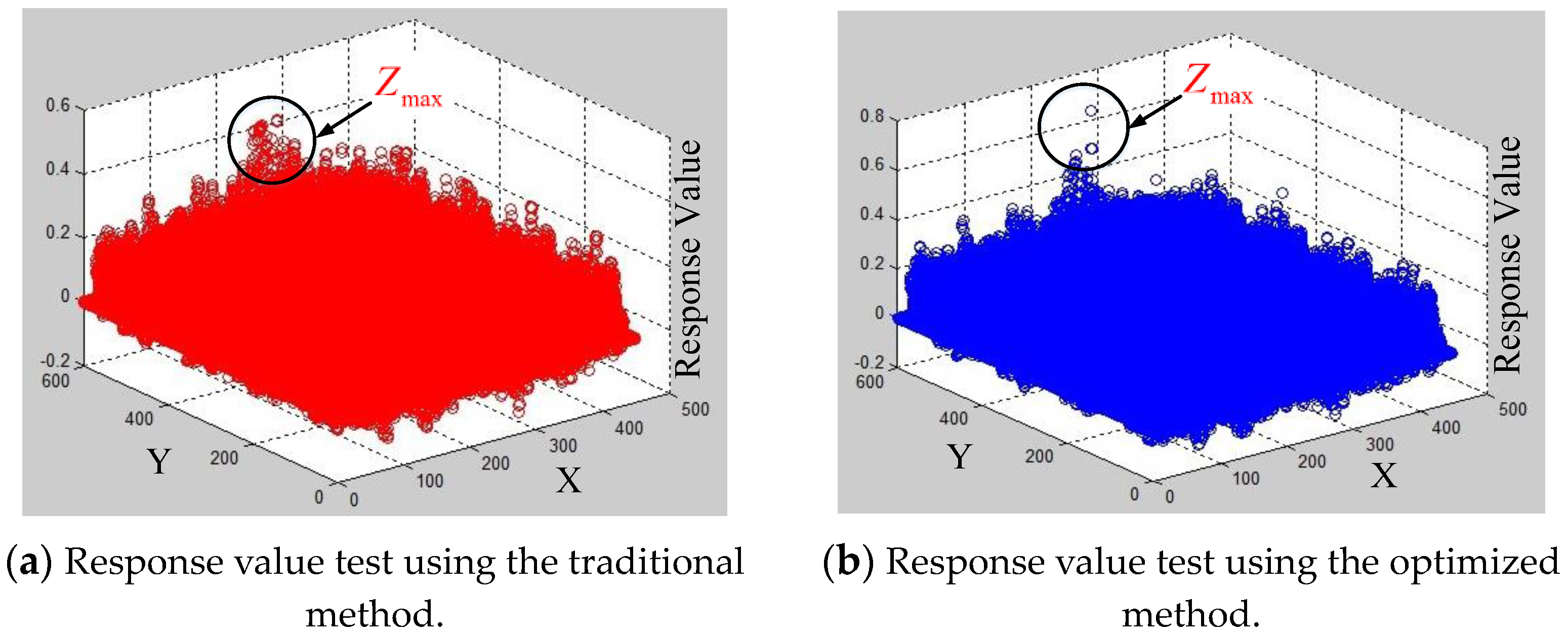

Figure 12a,b compares the response value distributions of the two methods. In Figure 12, the X and Y axes represent the resolution coordinates of the examination image, and the Z axis represents the response value distribution. The black circle contains multiple scatter points, and the highest of them on the Z axis is the response maximum. Apart from this maximum, the more interference scatter points there are in the black circle, the more likely false detection becomes. Compared with the traditional template matching method, the optimized DSST tracking method produces significantly fewer interference scatter points. The response maximum is 0.4 for the traditional method and 0.7 for the optimized method, and both can detect the cross target position. However, the response ratio of the optimized method increases by 42.9%, computed as (0.7 − 0.4)/0.7. This shows that the target tracking stability of the optimized method is significantly improved, so it can effectively avoid target detection failure.

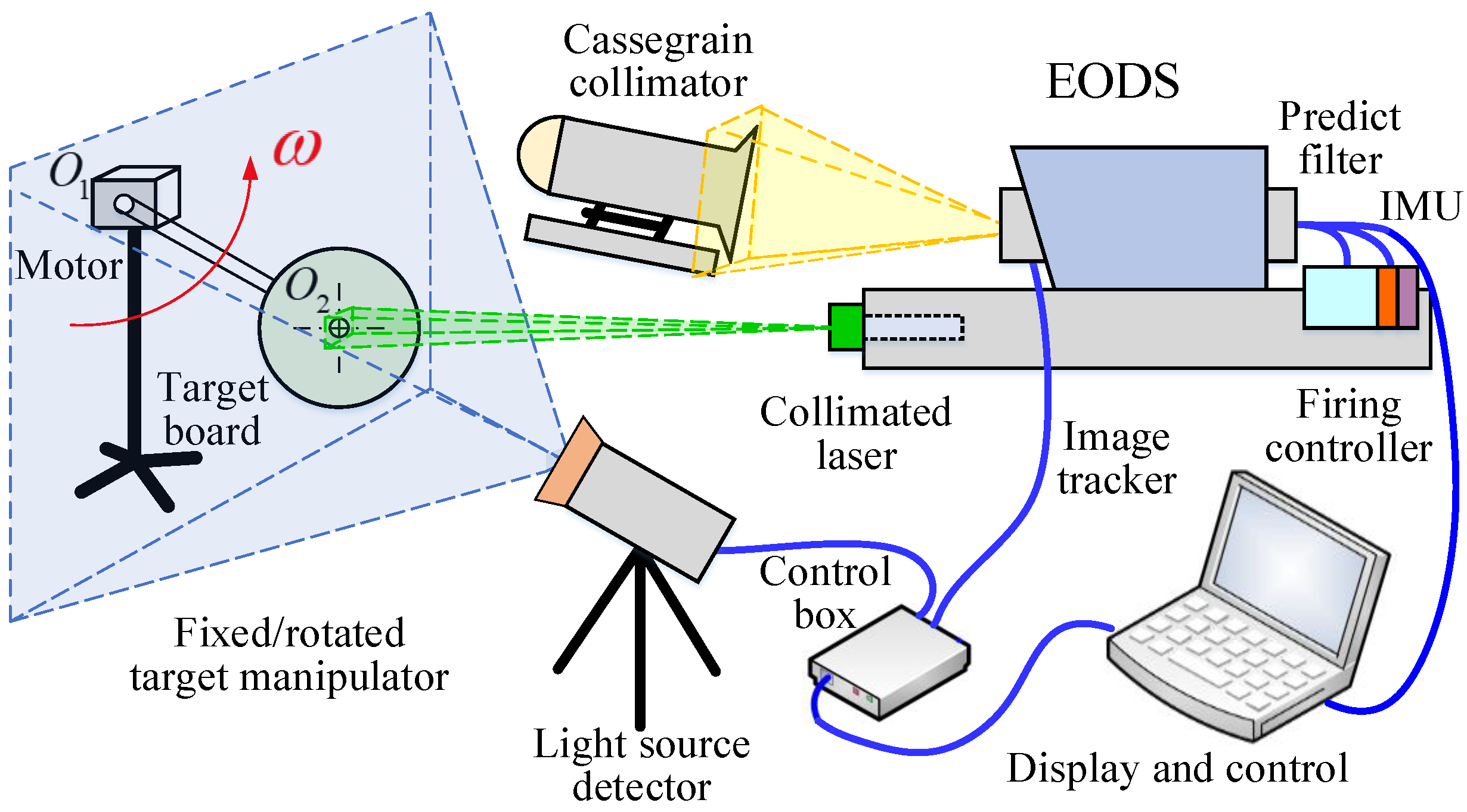

Figure 11b displays a target manipulator. As shown in Figure 2 and Figure 11b, the length of the connecting rod is 70 cm. The target board rotates clockwise at an angular speed of 10°/s.

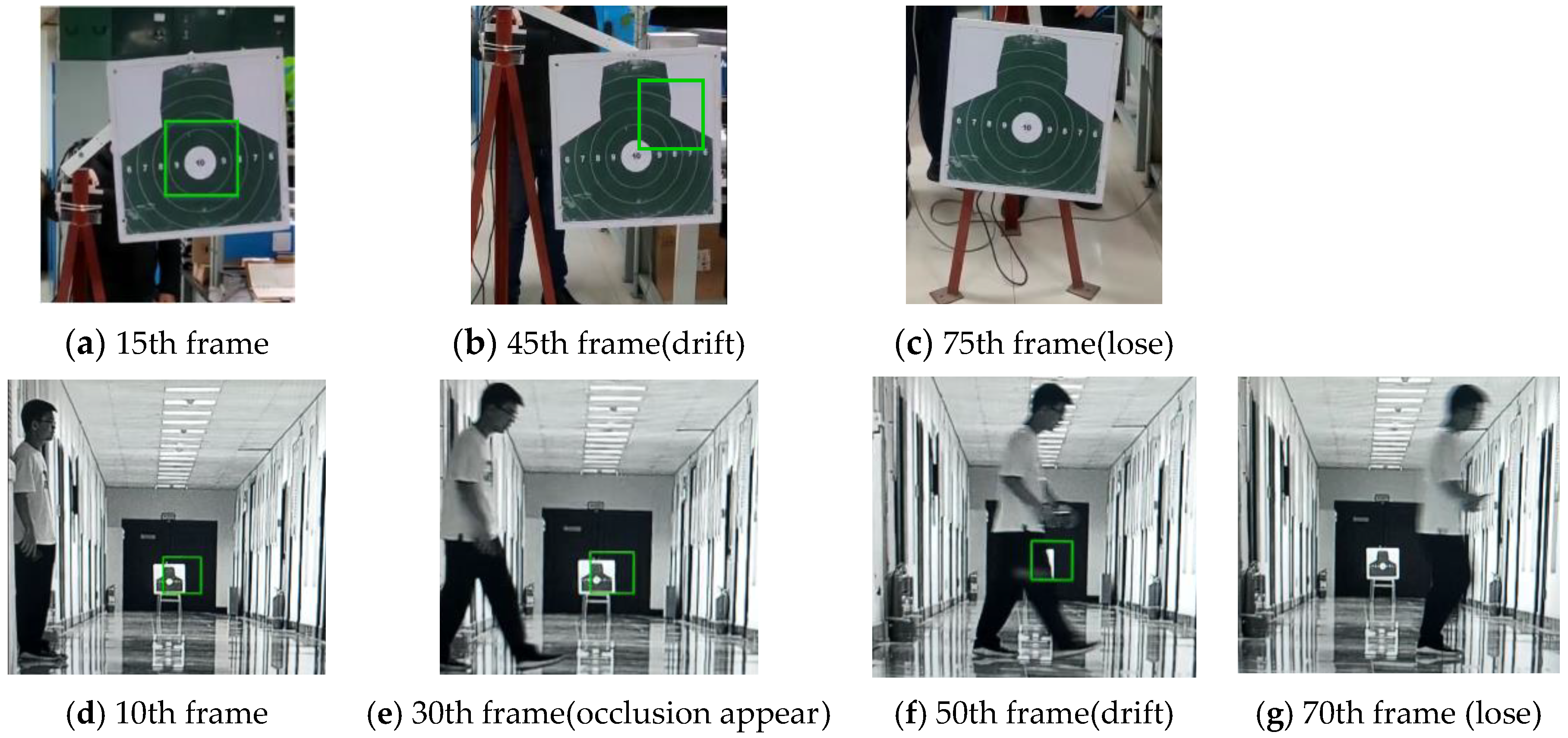

Figure 13 and Figure 14 show the target tracking test results for the four groups of image sequences. The tracking distance of the rotated target is 50 m. The moving speed of the occlusion object is about 1.2 m/s. The tracking distance of the occluded target is 100 m.

In Figure 13a–c and Figure 14a–c, the traditional method shows a tracking drift error at the 45th frame and completely loses the target at the 75th frame, whereas the optimized method tracks the target’s motion accurately and stably. In Figure 13d–g and Figure 14d–g, the optimized method also resists target occlusion better than the traditional method. At the 30th frame, the occlusion object appears from the left. The traditional method shows a tracking drift error at the 50th frame and completely loses the target at the 70th frame. This shows that the anti-occlusion DSST tracking method can stably output the miss-distance Δ and improve the tracking precision.