1. Introduction

According to the latest statistics released by the International Renewable Energy Agency (IRENA), the global cumulative installed renewable energy capacity reached 3064 GW in 2021. In China, the cumulative installed renewable energy capacity reached 1063 GW in 2021, accounting for 31.9% of the global installed capacity. In particular, the global cumulative installed wind energy capacity is about 732 GW, and China leads the world with a cumulative installed wind energy capacity of 282 GW, accounting for 38.5% of the global total [1]. In the foreseeable future, wind energy will continue to develop and become an increasingly important part of energy consumption. Below an altitude of 1000 m, the wind speed increases by 0.1 m/s per 100 m of altitude. Wind energy is often abundant in cold regions or high-altitude areas [2]. Because cold air is denser than warm air, air density is higher in cold regions. Consequently, the potential wind energy is 10% higher in cold regions than in other regions [3]. Therefore, most wind farms are located in these regions. During the winter, however, these regions are more prone to blade icing [4]. Ice accumulates on the blades very slowly, and many environmental variables, including air humidity, wind speed, and ambient temperature, are associated with blade icing [5]. When a blade ices, its lift force decreases and its drag force increases; the ice also increases the blade weight and can even lead to blade fracture [6]. The most common effect of blade icing is a reduction in the actual power output of the wind turbine [7]. In regions with extremely cold climates, annual power production can be reduced by 20% to 50% [8]. Early diagnosis of blade icing helps to reduce power loss and improve the safety of wind turbine operation [9].

Figure 1 shows a wind turbine blade that has accumulated ice due to the cold climate.

Wind turbine blade icing detection is an emerging research field in which many researchers are currently engaged [10]. Current blade icing detection techniques follow two main directions: model-based approaches and data-driven approaches [11]. Model-based approaches build accurate mathematical models from the internal workings of the object. In [12], a multi-resolution analysis method is constructed to extract the current frequency components of wind turbines. Then, because the inertia of the wind turbine gradually increases as the blades ice, wavelet analysis is used to analyze the change in the current frequency components and detect whether the blades are icing. Three methods for creating power threshold curves have been proposed to distinguish icing production periods from non-icing production periods; they were applied to the wind turbines of four wind farms, and their effectiveness was verified by comparative analysis [13]. In [14], early detection of blade icing is achieved using controlled acoustic waves propagating in a wind turbine blade. The ice observations are analyzed using three metrics: fast Fourier transform (FFT), amplitude attenuation, and root-mean-square relative error (RMS). However, building a model-based approach is challenging because of the unstable operating environment of wind turbines and the complex structure of their components [15].

Because wind farms are usually located in remote areas, supervisory control and data acquisition (SCADA) systems are widely used in them [16]. These systems allow technicians to collect and analyze real-time data remotely and to monitor the operating status of wind turbines based on the acquired data. The collection of real-time data provides the basis for data-driven approaches [17], which are therefore widely used in blade icing detection.

Data-driven approaches build intelligent models by mining the latent features of a large number of data samples. As the main data-driven method, supervised learning has been widely used in blade icing detection. In [18], after feature extraction with recursive feature elimination, an ensemble learning approach combining Random Forest (RF) and Support Vector Machine (SVM) is used to improve model accuracy. Reference [19] proposes an MBK-SMOTE algorithm, which combines the MiniBatch K-means clustering algorithm with the SMOTE algorithm to address the severe class imbalance in blade icing data. An end-to-end CNN-LSTM model is proposed in [20], which converts SCADA data into multivariate time series for automatic feature extraction. In [21], deep autoencoders are used to extract multi-level fault features from SCADA data, and ensemble learning methods are used to build icing detection models. In [22], short-term and long-term features affecting blade icing are extracted according to icing physics, hybrid features combining the two are also considered, and these features are used to build a Stacked-XGBoost algorithm. A multi-level convolutional recurrent neural network (MCRNN) is proposed in [23] for blade icing detection; it uses discrete wavelet decomposition together with a parallel structure of LSTM and CNN branches to extract multilevel features in the time and frequency domains. A temporal attention-based convolutional neural network (TACNN) is proposed in [24], in which a temporal attention module automatically identifies discriminative features in the original data. Compared with model-based methods, supervised learning methods reduce the reliance on long-term expert knowledge and avoid the complexity of physically modeling blade icing. However, these methods depend heavily on the quality of both the data and the labels [25]. In actual wind turbine operation, it is impossible to gather large volumes of labeled data through human observation [26], and manually annotated labels frequently contain errors. Because practical datasets contain a large amount of inaccurately labeled data, supervised learning methods are difficult to apply effectively to wind turbine blade icing detection.

To address the scarcity of icing periods, class imbalance, and inaccurate manual labels, this paper proposes a tri-XGBoost method that combines the tri-training [27] semi-supervised learning algorithm with the XGBoost [28] algorithm. Tri-training is an important semi-supervised learning algorithm that can be applied to many real-world scenarios because it does not require any special learning algorithm. It uses three classifiers, which need not be built with different supervised learning algorithms, and the agreement among them determines how the unlabeled data are pseudo-labeled. With a limited number of labels, tri-training can greatly improve classification ability. Overall, the proposed method makes use of incorrectly labeled data and uses domain knowledge to construct new features from the original ones. Pearson correlation coefficients are then used for feature selection [29], and Focal Loss [30] is chosen as the loss function for the iterative updating of the classifiers. The proposed method provides a new solution to the problems of inaccurate labeling and class imbalance in blade icing detection.

The contributions of this study are as follows. Because it is difficult to obtain a large number of labeled icing samples while the turbine is generating electricity, the tri-training algorithm is used, for the first time, to detect icing on wind turbine blades. It fully exploits the latent information in unlabeled samples to help the model identify icing, which helps maintain the safe operation of wind turbines and improve power generation efficiency. The proposed method combines feature standardization, cost-sensitive learning, the XGBoost algorithm, and the tri-training semi-supervised learning algorithm to enhance the model's ability to identify icing. It not only addresses the class imbalance of the labeled samples but also converts incorrectly labeled samples into usable data, which greatly reduces label dependence.

The rest of the paper is organized as follows. Section 2 briefly describes the theoretical background of the proposed method. Section 3 describes how the proposed blade icing detection method is implemented. Section 4 presents a case study of the proposed method on real data. The experimental results of the case study are discussed and analyzed in Section 5. Finally, Section 6 summarizes the work.

3. Proposed Wind Turbine Blade Ice Detection Algorithm

3.1. Tri-XGBoost Algorithm

To address the problems of inaccurate labels and class imbalance in SCADA data, this paper constructs a wind turbine blade icing detection method based on tri-XGBoost, which combines the XGBoost machine learning algorithm with the tri-training semi-supervised learning algorithm. On the one hand, Focal Loss is used for class imbalance learning, focusing training on a sparse set of hard samples. On the other hand, samples with low label reliability are treated as unlabeled samples, and their pseudo-labels are generated by the tri-training algorithm. Hence, the icing detection model can make full use of unlabeled data and reduce its reliance on labeled data, and it can still achieve good training performance with fewer minority-class labels.

The pseudo-code of the proposed tri-XGBoost algorithm is shown in Algorithm 1. First, bootstrap sampling is performed on the original labeled data to obtain three labeled training sets, and three base classifiers are initially trained on these sets. The unlabeled samples are then fed into the initially trained base classifiers, which generate pseudo-labels for them. In each round of tri-training, the newly labeled samples for each classifier are provided jointly by the other two classifiers: if both of them assign the same label to an unlabeled sample, the sample is considered to have high confidence and can be added to the labeled training set of the first classifier. Because the number of samples added to a classifier's labeled training set varies from round to round, the sets of samples added in the previous round and in the current round are tracked separately. In each round of iteration, the previously added samples are put back into the unlabeled dataset for reprocessing.
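The tri-training loop described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. A toy nearest-centroid learner (`NearestCentroid`, hypothetical) stands in for XGBoost with Focal Loss. Retraining uses the original labeled data plus the current pseudo-labeled set, as in the original tri-training algorithm [27]. The error-rate and under-sampling safeguards discussed in the following paragraphs are omitted. The names `tri_train`, `vote`, `max_rounds`, and `seed` are also hypothetical.

```python
import random
from collections import Counter


class NearestCentroid:
    """Toy base learner standing in for XGBoost (assumption made for this sketch)."""

    def fit(self, X, y):
        sums, counts = {}, Counter(y)
        for x, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(x))
            for d, v in enumerate(x):
                acc[d] += v
        self.centroids = {c: [v / counts[c] for v in s] for c, s in sums.items()}
        return self

    def predict(self, x):
        # label of the closest class centroid (squared Euclidean distance)
        return min(self.centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, self.centroids[c])))


def tri_train(X_lab, y_lab, X_unlab, make_learner=NearestCentroid, max_rounds=10, seed=0):
    rng = random.Random(seed)
    n = len(X_lab)
    learners = []
    for _ in range(3):
        # one bootstrap sample of the labeled data per base classifier
        idx = [rng.randrange(n) for _ in range(n)]
        learners.append(make_learner().fit([X_lab[k] for k in idx], [y_lab[k] for k in idx]))

    prev_sets = None
    for _ in range(max_rounds):
        new_sets = []
        for i in range(3):
            j, k = (m for m in range(3) if m != i)
            # pseudo-label the unlabeled samples on which the other two classifiers agree;
            # the whole unlabeled pool is re-examined in every round
            agreed = []
            for x in X_unlab:
                label = learners[j].predict(x)
                if label == learners[k].predict(x):
                    agreed.append((x, label))
            new_sets.append(agreed)
        if new_sets == prev_sets:  # no classifier would change: stop
            break
        for i in range(3):
            learners[i] = make_learner().fit(X_lab + [x for x, _ in new_sets[i]],
                                             y_lab + [lab for _, lab in new_sets[i]])
        prev_sets = new_sets
    return learners


def vote(learners, x):
    """Final prediction by majority voting over the three classifiers."""
    return Counter(h.predict(x) for h in learners).most_common(1)[0][0]
```

Every round re-examines the whole unlabeled pool. A sample pseudo-labeled earlier can therefore be relabeled or dropped later, which mirrors putting the previously added samples back into the unlabeled dataset.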

| Algorithm 1: tri-XGBoost algorithm |

| Input: labeled dataset, unlabeled dataset, base classifiers |

| Output: final classifier obtained by the voting method |

However, if the other two classifiers both predict a sample incorrectly, the sample is given a noisy label. Adding samples with noisy labels to the training set of a classifier has a negative impact on its classification performance. According to [27], this negative impact can be compensated if the number of newly labeled training samples is sufficiently large and satisfies certain conditions. Therefore, the new samples added to the training set of each classifier need to satisfy the condition in Equation (9). In that condition, the error-rate term denotes the upper bound of the classification error rate of the other two classifiers. If the new samples added to the training set do not satisfy Equation (9), random under-sampling is performed on the newly labeled set to remove part of its samples. The number of retained samples should satisfy Equation (10), so that after under-sampling the newly labeled set is still larger than the one added in the previous round.

At initialization, the classification error rate threshold is set to 0.5, the size of the set of new samples added to each classifier's training set is set to 0, and the number of iteration rounds is set to 0.
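The safeguard described above can be sketched with the update rule of the original tri-training algorithm [27]. We assume Equations (9) and (10) follow this rule, although their exact form here may differ. Let $e^t$ be the estimated error of the other two classifiers in round $t$ and $L^t$ the newly pseudo-labeled set. An update is accepted when $e^t |L^t| < e^{t-1} |L^{t-1}|$. Otherwise, if $|L^{t-1}| > e^t/(e^{t-1} - e^t)$, $L^t$ is randomly under-sampled to $\lceil e^{t-1}|L^{t-1}|/e^t - 1 \rceil$ samples, which keeps it larger than $L^{t-1}$. The function and argument names are hypothetical. The starting values e_prev = 0.5 and l_prev = 0 correspond to the initialization stated above.

```python
import math


def tri_training_update(e_cur, e_prev, n_new, l_prev):
    """Decide whether one classifier may be retrained in this round (rule from [27]).

    e_cur  : estimated error of the other two classifiers in round t
    e_prev : the same quantity when this classifier was last updated (initially 0.5)
    n_new  : number of pseudo-labeled samples found in round t
    l_prev : size of the pseudo-labeled set used at the last update (initially 0)
    Returns (update, keep), where keep is how many pseudo-labeled samples to retain.
    """
    if e_cur >= e_prev:                        # error did not decrease: no update
        return False, 0
    if l_prev == 0:                            # never updated: smallest size worth comparing to
        l_prev = math.floor(e_cur / (e_prev - e_cur) + 1)
    if l_prev < n_new:
        if e_cur * n_new < e_prev * l_prev:    # noise-compensation condition already holds
            return True, n_new
        if l_prev > e_cur / (e_prev - e_cur):  # under-sampling can restore the condition
            return True, math.ceil(e_prev * l_prev / e_cur - 1)
    return False, 0
```

Consider e_prev = 0.5, l_prev = 0, e_cur = 0.25, and 100 new samples. The sketch keeps only 3 of them, because the first accepted set must stay small while the error estimate is still close to the initial threshold.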

3.2. Modeling Method for Blade Ice Detection

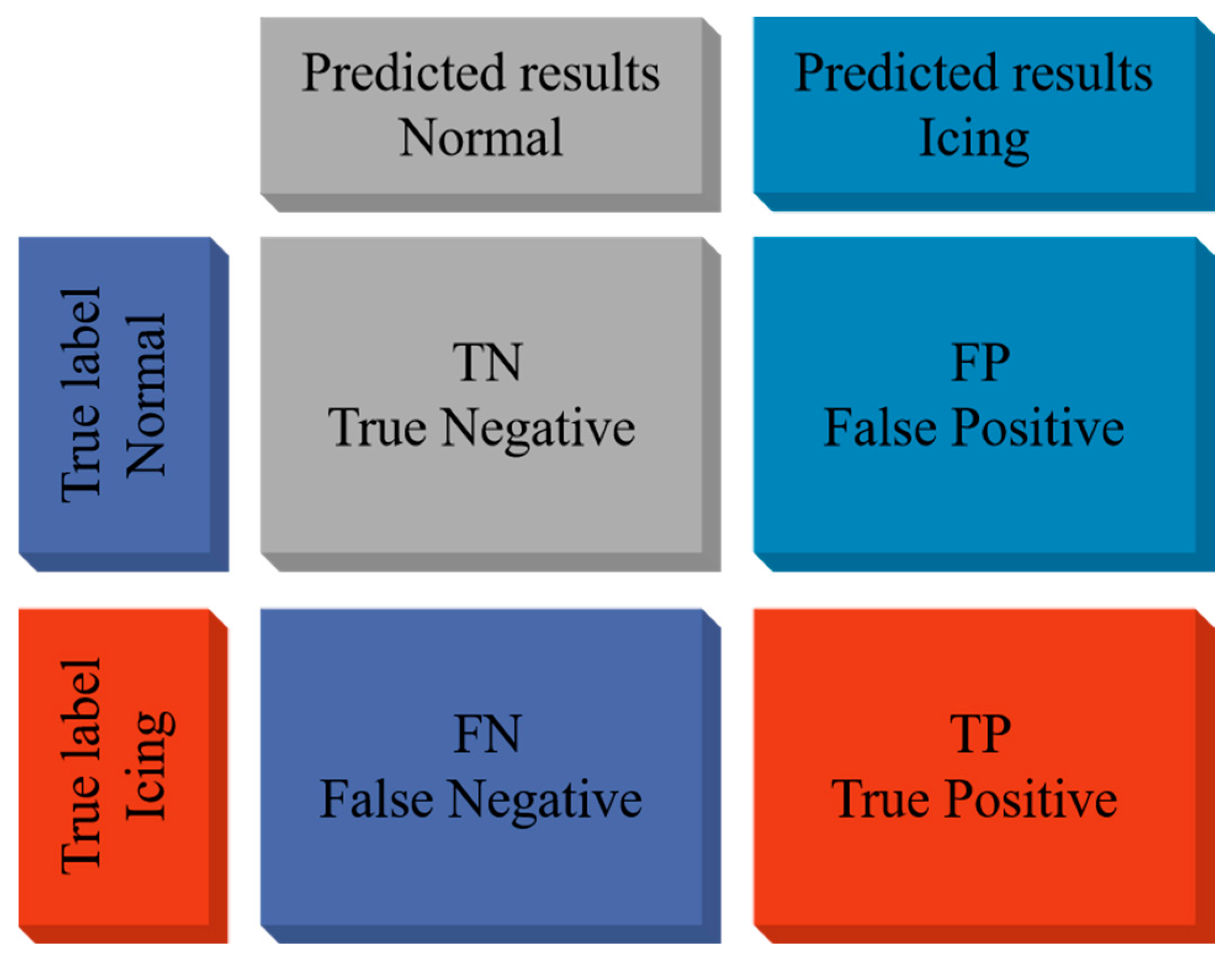

In this paper, we use data collected from the wind turbine SCADA system to build a data-driven model for blade icing detection. The collected data include 18 continuous variables, such as wind speed, active power, generator speed, and pitch angle. Blade icing detection is formulated as a binary classification task, and the tri-XGBoost algorithm is proposed to handle the inaccurate labeling and class imbalance of SCADA data. The general flow chart of blade icing detection modeling based on tri-XGBoost is shown in Figure 2.

Data-driven modeling for blade icing detection generally consists of several components: icing cause analysis, data pre-processing, feature processing, class imbalance learning, and model evaluation. The specific modeling steps are as follows.

Step 1 Training set construction and data pre-processing. As mentioned before, ice accumulates on the blades slowly over time. Blade icing labels are obtained through observation by professionals, so early icing samples may be mislabeled as normal when observations are not timely. Using these mislabeled samples for model training degrades the accuracy and generalization ability of the model. Therefore, based on the tri-XGBoost algorithm described above, the original SCADA dataset is divided into a labeled dataset and an unlabeled dataset.

Blade icing can cause the turbine to operate at limited power. According to expert knowledge, turbines may also operate at limited power for several other reasons. First, the electrical grid may impose a dispatch power limit, because it is difficult to store the power transmitted from the wind farm to the grid when the wind speed is too high. Second, when the main components of the turbine have problems, the turbine must operate at limited power to avoid accidents. Third, some wind farms are close to residential areas, where turbines must operate at limited power during the night to avoid excessive noise. We found that samples with these non-icing causes of limited-power operation can be filtered using two features: the pitch angle and the active power of the turbine. Therefore, to improve the accuracy and generalization performance of the model, these samples are eliminated in the data pre-processing stage.
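The exact filtering rule is not stated, so the following is only a hypothetical sketch of a filter based on pitch angle and active power. It assumes that curtailment shows up as blades pitched beyond a small angle while the output stays clearly below rated power. It also assumes that an iced turbine running below rated wind speed keeps its blades near fine pitch. The class `ScadaSample`, the thresholds `pitch_min_deg` and `power_frac`, and the rated power used in the example are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ScadaSample:
    wind_speed: float    # m/s
    active_power: float  # kW
    pitch_angle: float   # degrees


def is_non_icing_limited(s, rated_power_kw, pitch_min_deg=3.0, power_frac=0.95):
    """Hypothetical rule: blades pitched out while output stays clearly below
    rated power is taken as curtailment (grid dispatch, component protection,
    or noise limits) rather than icing."""
    return s.pitch_angle > pitch_min_deg and s.active_power < power_frac * rated_power_kw


def remove_non_icing_limited(samples, rated_power_kw, **rule_kwargs):
    """Drop the flagged samples before training (data pre-processing)."""
    return [s for s in samples
            if not is_non_icing_limited(s, rated_power_kw, **rule_kwargs)]
```

In practice the thresholds would have to be tuned per turbine type, since pitch control strategies and rated power differ between models.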

Step 2 Feature processing. During blade icing, the wind speed, power, and generator speed of the wind turbine deviate significantly from normal operating conditions: for a given wind speed, both the power and the generator speed are lower than normal. In this paper, the existing features of the original data are combined to create three new features that are input into the model. Pearson correlation coefficients are then used to reduce the feature dimensionality by screening out and eliminating features whose correlation is too high. Finally, to eliminate the effect of differences in units and scale between features, the data are scaled by standardization.
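The correlation screening and standardization steps can be sketched as follows. This is a minimal illustration, assuming a greedy rule that keeps a feature only if its absolute Pearson correlation with every already-kept feature is at most a threshold. The threshold of 0.9 and the function names are hypothetical, and the result depends on feature order. The scaler statistics are fitted on training data and then reused, consistent with the test set receiving the same processing.

```python
import math
from statistics import mean, pstdev


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / den if den else 0.0  # treat constant columns as uncorrelated


def select_features(columns, threshold=0.9):
    """Greedy screening: keep a feature only if |r| <= threshold against every kept feature."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


def fit_scaler(values):
    """Mean and standard deviation estimated on the training data only."""
    sigma = pstdev(values)
    return mean(values), (sigma if sigma > 0 else 1.0)


def transform(values, mu, sigma):
    """Apply z-score standardization with previously fitted statistics."""
    return [(v - mu) / sigma for v in values]
```

For example, with columns `{"a": [1, 2, 3, 4], "b": [2, 4, 6, 8], "c": [4, 1, 3, 2]}`, feature `b` is perfectly correlated with `a` and is dropped, while `c` (r = -0.4 with `a`) is kept.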

Step 3 Class imbalance learning and model training. Methods for dealing with class imbalance fall into three main categories: under-sampling, over-sampling, and cost-sensitive learning. To retain as much of the original data as possible, this paper adopts cost-sensitive learning and replaces the original loss function of the XGBoost algorithm with Focal Loss. Unlike data resampling, this approach does not change the original distribution of the data, which reduces the risk of model overfitting. During training, different examples have different degrees of classification difficulty. Focal Loss focuses learning on the examples that are hard to classify and reduces the weights of the examples that are easily classified. A class-weighting term is also introduced into Focal Loss, so that it focuses on both hard examples and minority-class examples.
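Let $z$ be the raw boosting margin and $p = \sigma(z)$ the predicted icing probability. The class-weighted (α-balanced) Focal Loss is $-\alpha (1-p)^\gamma \log p$ for an icing sample and $-(1-\alpha) p^\gamma \log(1-p)$ for a normal one. The sketch below evaluates this loss together with its gradient and Hessian with respect to $z$, the two quantities a gradient-boosting library needs from a custom objective. In XGBoost, vectorized versions would be supplied through the `obj` argument of `xgboost.train`. Several choices here are illustrative assumptions, not settings reported in this paper: α = 0.75, γ = 2, the label convention (1 = icing), and a finite-difference Hessian clipped to a small positive floor.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def focal_loss(z, y, alpha=0.75, gamma=2.0, eps=1e-12):
    """Alpha-balanced Focal Loss for one sample; y = 1 means icing (assumed)."""
    p = sigmoid(z)
    if y == 1:
        return -alpha * (1 - p) ** gamma * math.log(max(p, eps))
    return -(1 - alpha) * p ** gamma * math.log(max(1 - p, eps))


def focal_grad(z, y, alpha=0.75, gamma=2.0, eps=1e-12):
    """Analytic dL/dz; reduces to alpha_t * (p - y) when gamma = 0."""
    p = sigmoid(z)
    if y == 1:
        return alpha * (1 - p) ** gamma * (gamma * p * math.log(max(p, eps)) - (1 - p))
    return (1 - alpha) * p ** gamma * (p - gamma * (1 - p) * math.log(max(1 - p, eps)))


def focal_hess(z, y, alpha=0.75, gamma=2.0, h=1e-4, floor=1e-6):
    """Central finite difference of the gradient, clipped to stay positive."""
    d = (focal_grad(z + h, y, alpha, gamma) - focal_grad(z - h, y, alpha, gamma)) / (2 * h)
    return max(d, floor)
```

Setting γ = 0 recovers class-weighted logistic loss, whose gradient is $\alpha_t (p - y)$. The factor $(1-p)^\gamma$ shrinks the contribution of confidently correct icing samples, which is how Focal Loss shifts training toward hard examples.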

The labeled and unlabeled data are input into the tri-XGBoost model for collaborative training. After the base classifiers are initialized with the labeled data, pseudo-labels for the unlabeled data are generated by the trained base classifiers. The base classifiers are updated iteratively until none of them changes, and the final classification model is obtained by majority voting.

Step 4 Parameter optimization and model evaluation. Common parameter optimization methods include grid search, random search, and Bayesian optimization. In this paper, grid search is used to determine the optimal hyperparameters. It exhaustively traverses the given parameter space and selects the parameter combination with the highest accuracy on the test set. The test set is subjected to the same data pre-processing and feature engineering to ensure the same feature dimensionality, and the processed test set is used to evaluate the classification performance of the model.
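Grid search as described above amounts to an exhaustive loop over the Cartesian product of the candidate values. In this minimal sketch, `score` is a placeholder for training the model with a given parameter combination and returning its accuracy on the evaluation data. The parameter names `max_depth` and `learning_rate` are real XGBoost hyperparameters, but the grid values and the toy score function are hypothetical.

```python
from itertools import product


def grid_search(param_grid, score):
    """Evaluate every combination in param_grid; score(params) -> float, higher is better."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        s = score(params)
        if s > best_score:  # keep the first best combination on ties
            best_params, best_score = params, s
    return best_params, best_score


# Usage with a toy score peaking at max_depth = 4, learning_rate = 0.1
grid = {"max_depth": [3, 4, 6], "learning_rate": [0.05, 0.1, 0.3]}
toy_score = lambda p: -(p["max_depth"] - 4) ** 2 - (p["learning_rate"] - 0.1) ** 2
best, best_s = grid_search(grid, toy_score)
# best == {"max_depth": 4, "learning_rate": 0.1}
```

The cost grows multiplicatively with each added hyperparameter, which is why random search and Bayesian optimization are listed as alternatives.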

5. Discussion of Results

To verify the effectiveness of the proposed feature processing method, comparison experiments are conducted using the data before and after feature processing.

Figure 6 shows the test results of the model trained at R = 0.5 on each of the two kinds of data. After feature processing, all metrics of the model improve substantially: Acc improves by 7.2%, Pre by 29.6%, Rec by 16.3%, F1 by 22.7%, and MCC by 31%. These results show that the proposed feature processing method effectively improves the classification performance of the model.
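The five metrics used in this section can be computed from the binary confusion matrix. The sketch below uses the standard definitions and assumes the icing class is encoded as 1. MCC uses all four confusion-matrix cells, which makes it more informative than Acc when normal samples dominate.

```python
import math


def binary_metrics(y_true, y_pred, positive=1):
    """Acc, Pre, Rec, F1 and MCC; `positive` marks the icing class (assumed to be 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    acc = (tp + tn) / len(pairs)
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / den if den else 0.0
    return {"Acc": acc, "Pre": pre, "Rec": rec, "F1": f1, "MCC": mcc}
```

For example, with 3 icing and 5 normal samples, of which 2 icing samples are detected and 1 normal sample is falsely flagged, Acc = 0.75, Pre = Rec = F1 = 2/3, and MCC = 7/15.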

To verify the superiority of the proposed tri-XGBoost method, we compare it with supervised XGBoost at three representative labeled rates: R = 0.2, 0.5, and 0.8. As shown in Table 3, at R = 0.2, supervised XGBoost and the proposed model perform almost identically in Acc and Pre. However, there is already a large gap in Rec, F1, and MCC, which are particularly important for blade icing detection. At R = 0.5, the proposed method significantly outperforms supervised XGBoost in all metrics, and its Pre reaches 0.941, the highest value in this experiment. At R = 0.8, our model improves Rec by 20%, F1 by 15.9%, and MCC by 21% compared with supervised XGBoost. The performance of supervised XGBoost at R = 0.8 is lower than at R = 0.5, possibly because the labeled data contain inaccurately labeled samples that introduce noise into the model. This indicates that the proposed method is less affected by labeling inaccuracies. Overall, even with extremely limited labels, the proposed method still shows a large improvement in Rec, F1, and MCC. These three metrics reflect the benefit of the proposed method for blade icing detection better than Acc and Pre do.
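As an illustration, assume the labeled rate R denotes the fraction of training samples whose labels are kept, with the remaining samples' labels hidden to form the unlabeled pool. The sketch below builds such a split. The uniformly random selection and the name `split_by_label_rate` are assumptions. In the proposed method, samples with low label reliability may instead be the ones treated as unlabeled.

```python
import random


def split_by_label_rate(X, y, R, seed=0):
    """Keep the labels of a random fraction R of the samples and hide the rest."""
    if not 0.0 < R <= 1.0:
        raise ValueError("R must be in (0, 1]")
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_lab = round(R * len(X))
    lab, unlab = idx[:n_lab], idx[n_lab:]
    X_lab = [X[i] for i in lab]
    y_lab = [y[i] for i in lab]
    X_unlab = [X[i] for i in unlab]  # labels discarded: these form the unlabeled pool
    return X_lab, y_lab, X_unlab
```

With a strongly imbalanced dataset, a stratified variant (splitting each class separately) would keep the icing ratio the same in the labeled part.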

In this study, the loss function of the base classifier XGBoost is changed to Focal Loss, and we compare XGBoost with Focal Loss against XGBoost with Logistic Loss. The application scenario of this study suffers from class imbalance and inaccurate labeling, and hard samples arise when the tri-training method is applied to generate pseudo-labels. In this experiment, we select three illustrative labeled rates: R = 0.1, 0.2, and 0.3.

As shown in Table 4, when labeled data are insufficient, using Focal Loss as the loss function of the base classifier improves all metrics compared with Logistic Loss. With the same amount of labeled data, the training time of the model is also reduced, and the less labeled data there are, the more training time the proposed method saves. For labeled rates R = 0.1, 0.2, and 0.3, the training time is reduced by 14.7%, 10%, and 7%, respectively. Focal Loss thus speeds up model fitting and effectively improves classification. For the class imbalance problem of this binary classification task, Focal Loss demonstrates good rebalancing ability. However, if future studies go further into predicting the degree of blade icing, the suitability of Focal Loss may need to be reconsidered.

The self-training algorithm [38] is a semi-supervised learning algorithm that is often compared with the tri-training algorithm, and here it uses the same base classifier. Unlike tri-training, in each round the classifier generates pseudo-labels for the unlabeled samples by itself, instead of having them labeled by the other two classifiers. With the base classifier uniformly set to XGBoost with Focal Loss, we carry out a comparative analysis of these two classical semi-supervised strategies.
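The sketch below shows a common self-training variant. A single classifier adds its own confident predictions (probability at least `conf`) to its training set each round, so its own mistakes are fed back into training. Tri-training, by contrast, requires two other classifiers to agree. `MidpointStump` is a hypothetical one-dimensional learner standing in for XGBoost with Focal Loss. The confidence threshold of 0.9 is an assumption, and the self-training setup in [38] may differ.

```python
import math


class MidpointStump:
    """Toy 1-D learner standing in for XGBoost with Focal Loss (assumption)."""

    def fit(self, xs, ys):
        pos = [x for x, y in zip(xs, ys) if y == 1]
        neg = [x for x, y in zip(xs, ys) if y == 0]
        m_pos, m_neg = sum(pos) / len(pos), sum(neg) / len(neg)
        self.cut = (m_pos + m_neg) / 2
        self.sign = 1.0 if m_pos > m_neg else -1.0
        return self

    def proba(self, x):
        # P(y = 1 | x): logistic squashing of the signed distance to the cut point
        # (inputs are assumed to be on a modest scale, so exp() does not overflow)
        return 1.0 / (1.0 + math.exp(-self.sign * (x - self.cut)))


def self_train(xs_lab, ys_lab, xs_unlab, conf=0.9, max_rounds=10):
    """One classifier pseudo-labels its own confident predictions each round."""
    xs, ys = list(xs_lab), list(ys_lab)
    pool = list(xs_unlab)
    model = MidpointStump().fit(xs, ys)
    for _ in range(max_rounds):
        scored = [(x, model.proba(x)) for x in pool]
        confident = [(x, p) for x, p in scored if max(p, 1 - p) >= conf]
        if not confident:
            break
        for x, p in confident:
            xs.append(x)
            ys.append(1 if p >= 0.5 else 0)
            pool.remove(x)
        model = MidpointStump().fit(xs, ys)
    return model, len(xs) - len(xs_lab)  # model and number of pseudo-labeled samples
```

With labeled points 0, 1 (normal) and 9, 10 (icing), the unlabeled points 0.5, 2, 8, and 9.5 are confidently pseudo-labeled, while 4.9, close to the decision boundary, is never added.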

Table 5 shows the results of detecting blade icing with the two semi-supervised learning strategies at labeled rates R = 0.2, 0.4, 0.6, and 0.8. Compared with the self-training algorithm, the tri-training algorithm improves Rec, F1, and MCC, respectively, as follows:

- By 19.6%, 12.7%, and 19.2% at R = 0.2.
- By 9.9%, 8.6%, and 11.3% at R = 0.4.
- By 11.7%, 9.7%, and 12.6% at R = 0.6.
- By 4.4%, 2.2%, and 12.6% at R = 0.8.

Regardless of the labeled rate, the classification performance of the tri-training algorithm is generally better than that of the self-training algorithm. The only exception is a slight decrease in Pre at R = 0.8. It is worth noting that the advantage of the tri-training strategy over the self-training strategy in detecting blade icing tends to diminish as the labeled rate increases.

Under the same experimental setup, the base classifier XGBoost is replaced with other commonly used classification algorithms for comparison: SVM, K-nearest neighbors (KNN), RF, and LightGBM, all of which are trained using the tri-training method. In this experiment, the labeled rate R ranges from 0.1 to 0.9, and the test results are shown in Figure 7.

As shown in Figure 7, the proposed model achieves the best performance on all five evaluation metrics when the labeled rate R is greater than 0.3. The performance of the models improves as the labeled rate R increases, except when the base classifier is KNN. When R is less than 0.3, KNN performs even better than all the other base classifiers. However, because its metric values are still low, it cannot effectively detect blade icing in real-world scenarios. SVM is particularly prone to false alarms in blade icing detection, because its Rec is at the same level as that of the other base classifiers while its Pre is quite low. When R is greater than 0.3, RF and LightGBM lag far behind the proposed model in Rec, F1, and MCC. Notably, RF, LightGBM, and XGBoost show similar growth trends as R increases. All three are decision-tree-based ensemble methods, and among them XGBoost shows the best classification performance in blade icing detection. It can be concluded that the proposed model works well with a limited number of labels.

In summary, the proposed blade icing detection method effectively extracts the features characterizing blade icing and reduces the dependence of machine learning models on labeled data. The method also generalizes well on hard samples: by penalizing hard samples more heavily than easy samples, the algorithm performs better. Compared with other base classifiers such as SVM, KNN, RF, and LightGBM, XGBoost achieves a good detection rate and a low false alarm rate, making it well suited as the base classifier for blade icing detection.