Abstract

A human–machine interface (HMI) is presented that switches lights on and off according to the left/right yaw rotation of the head. The HMI consists of a cap that acquires the brain's electrical activity (i.e., an electroencephalogram, EEG) sampled at 500 Hz on 8 channels, with electrodes positioned according to the standard 10–20 system. In addition, the HMI includes a controller based on an input–output function that computes the head position (defined as left, right, or forward with respect to the yaw angle) from short intervals (10 samples) of the signals coming from three electrodes positioned at O1, O2, and Cz. An artificial neural network (ANN) trained with the Levenberg–Marquardt backpropagation algorithm was used to identify the input–output function. The HMI controller was tested on 22 participants. The proposed classifier achieved an average accuracy of 88%, with a best value of 96.85%. After calibration for each specific subject, the HMI was used as a binary controller to verify its ability to switch lamps on and off according to head-turning movements. With open eyes, head movements were correctly predicted more than 75% of the time in 90% of the participants. With closed eyes, the prediction accuracy reached 75% in 11 of the 22 participants. One participant controlled the light system with 100% success in both experiments, with open and with closed eyes. The control results achieved in this work can be considered an important milestone towards humanoid neck systems.

1. Introduction

Advances in cognitive engineering support the design, analysis, and development of complex human–machine–environment systems [1]. Recently, special interest has been devoted to identifying the cognitive reaction of humans to adaptive human–computer interaction (HCI) [2] or to their perception of the environment. In HCI research, brain–computer interface (BCI) systems represent one of the most challenging research activities. Reviews of BCI and HMI can be found in [3,4]. BCI technologies that monitor brain activity may be classified as either invasive or noninvasive. Invasive BCI technologies generally consist of electrodes implanted in the human cortex. Noninvasive BCI technologies usually rely on sensors placed on or near the scalp, as in electroencephalography (EEG) or magnetoencephalography (MEG). EEG and MEG monitor, respectively, the electrical and the magnetic fields generated by neuronal activity.

This work relates to the use of EEG for recognizing head movements in a human participant. A survey of EEG in this context can be found in [5]. EEG is one of the most promising tools to monitor brain cognitive functionality because of its higher temporal precision compared with other tools such as functional magnetic resonance imaging (fMRI), which offers higher spatial resolution [6]. EEG-based biometric recognition systems have been used in a wide range of clinical and research applications, among others: interpreting human emotional states [7]; monitoring participants' alertness or mental fatigue [8]; identifying sleep stages [9]; assessing memory workload [10]; locating brain areas damaged by injury or disease [11]; automatically diagnosing patients with learning difficulties [12]; and, in general, diagnosing brain disorders [13]. EEG monitoring, analysis, and processing are useful techniques to identify intentional cognitive activities from brain electrical signals. Some studies investigated event-related potentials when the decisions of the monitored participants are affected by external stimuli during workload sessions. In fact, workload performance depends not only on the operator but also on the surrounding environment and on their interactions. A cognitive stimulus is an event that involves the subject while performing different planned tasks during EEG data collection. Several recent examples may be considered. EEG signals have been analyzed to evaluate a driver's behavior [14,15] or drowsiness [16,17]. Specifically, a Multitask Deep Neural Network (MTDNN) was set up in [18] to distinguish between alert and drowsy states of mind. To prevent road accidents, the driver's fear, detected from EEG signals, was evaluated when he/she was subjected to unpredictable acoustic or visual external stimuli [19].
In the automotive context, the interaction between the driver and an onboard Human Machine Interface (HMI) may support safer driving, especially in critical situations [20]. Emergency braking, a rapid lane change, or the avoidance of an obstacle on the route can also be detected in the driver's brain ahead of the vehicle dynamics, and this contribution may improve the quality of driver intent prediction [21] and overall system safety [22].

BCI technologies aim to translate the electrical brain signals generated by the mental activity of the human participant into control commands and feedback for external devices such as robot systems [23]. In addition, BCIs may include paradigms that identify patterns of brain activity for a reliable interpretation of the observed signals [24]. In a control-oriented approach, the BCI takes the user's EEG features as input and translates the user's intention into output commands that make peripheral devices carry out an action. In this context, most BCI applications deal with neuro-aid devices [25]. A quadratic discriminant analysis (QDA) classifier was used to generate the control signals to start, stop, and reverse the rotation of a DC motor [26]. Other intelligent classification systems have recently been used: a feedforward multilayer neural network [27] and two linear discriminant analysis (LDA) classifiers [28] were implemented to control a robot chair. Other applications relate to the control of drones [29], robot arms [30,31], virtual objects [32], or speech communication [33].

One of the hardest tasks in an EEG BCI application is the implementation of pattern recognition to identify the classes of data coming from brain activity. The pattern recognition task depends on classification algorithms, a survey of which is presented in [34]. Nonlinear classifiers, such as ANNs and support vector machines (SVMs), have been shown to produce slightly better classification results than linear techniques (such as linear discriminant analysis) in the context of mental tasks [35]. Further research has been carried out to classify neural activity when cognitive stimuli are related to olfactory [36] or visual [37] perception. The correlation between the complexity of visual stimuli and the EEG alpha power variations in the parieto–occipital, right parieto–temporal, and central–frontal regions was demonstrated in [38]; this is in agreement with the findings in [19]. In the context of mental imagery and eye state recognition, a novel learning algorithm based on the Hamilton–Jacobi–Bellman equation was proposed to train neural network classifiers, obtaining better accuracy than other traditional approaches [39]. A neuro-fuzzy classifier was used to decode EEG signals during the visual alertness, motor planning, and motor execution phases of the driver [40]. Other studies demonstrated the feasibility of interpreting EEG signals correlated with drivers' movements in simulated and real car environments [21]. Cognitive activities related to motor movements have been observed in the EEG for both executed and imagined actions [41]. A comparison between actual and imaginary movements based on neural signals concluded that the associated brain activities are similar [42]. The classification accuracy for predicting standing and sitting actions by motor imagery (MI) and motor execution (ME) was evaluated in [43].
The results showed that the classification of MI provides the highest mean accuracy, 82.73 ± 2.54%, in the stand-to-sit transition. Another study demonstrated that ANNs perform better than SVMs in motor imagery classification [44]. Different classification algorithms, including LDA, QDA, the k-nearest neighbor (KNN) algorithm, linear SVM, radial basis function (RBF) SVM, and naïve Bayes, were compared in [45] to classify left/right hand movements, with the RBF SVM reaching an accuracy of about 82%. A 2D study investigated the movements of the dominant hand when a participant had to track a moving cursor on a screen with an imaginary mouse on the table [46]; in this study, the prediction accuracy for horizontal movement intention was found to be higher than for vertical movement intention. An EEG-based classifier has been implemented to identify the driver's arm movements when he/she must rotate the steering wheel to perform a right or left turn in a virtual driving environment [47].

The classification of head movements appears more complex since, compared with other monitored actions, the EEG signals are affected by artifacts to a greater extent [48]. In this case, the EEG signals may also include noncerebral activity coming from sources such as hair, eye activity, or muscle movements [49]. Many techniques have been used to analyze such artifacts [50]. In [48], EEG artifacts were identified using the signal from a gyroscope located on the EEG device. An SVM-based automatic detection system, in which the artifacts were treated as a distinct class, was developed in [51]. Artifacts have also been classified using autoregressive models [52] and hidden Markov models [53,54], or by introducing heuristic approaches based on independent component analysis (ICA) [55]. ICA and an adaptive filter (AF) were used in [56] to remove ocular artifacts from EEG signals. An automatic technique to remove artifacts in head movements was proposed in [57], where the signals from an accelerometer located on the participant's head were acquired together with the EEG signals; EEG components correlated with the accelerometer signals were subsequently removed from the EEG.

A significant relationship between head movements and the emotions generated by visual stimuli was found in [58]. In [59], EEG spectral analysis was carried out in the context of visual-motor tasks, and human head movements following visual stimuli were predicted with high accuracy by an ANN classifier. A significant correlation of r = 0.98 was obtained between training and test trials of the same person, but relevant difficulties emerged in predicting the head movements of a participant when the classifier had been trained on another subject. A positive correlation has been found between brain activity in the upper alpha band, recorded from a single frontal electrode, and the task of drawing an image boundary on a screen by moving a mouse [60]. In a similar experiment involving the fingers of one hand, alpha band variations were found to be correlated with visual-motor performance, while the coherence of alpha and beta oscillations implies an integration among visual-motor brain areas [61]. While a large body of work has been devoted to eye or hand movements, little work has discussed the correlation of EEG signals with head movements in visual-motor tasks.

2. Methods

2.1. System Architecture

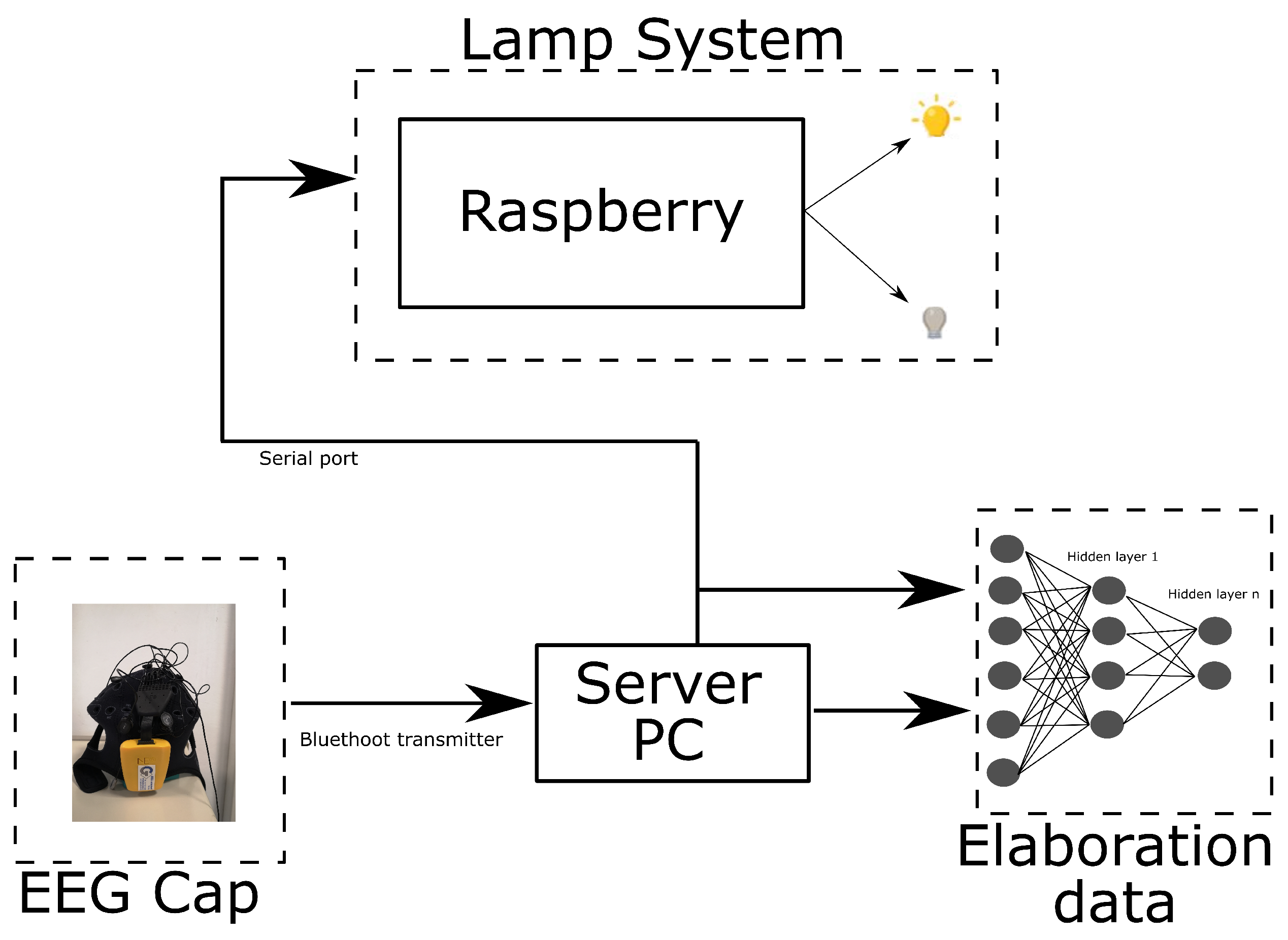

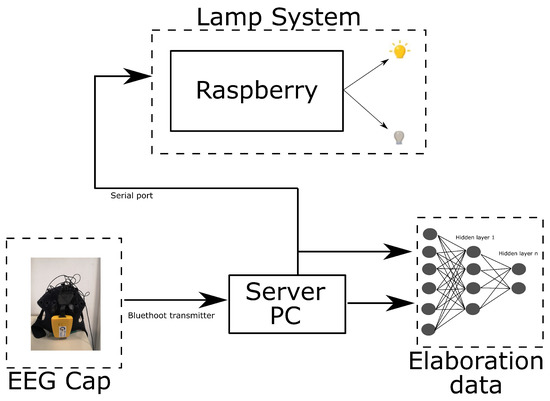

The proposed EEG-based HMI consists of two main components (Figure 1). The first component is the lamp system, which has a twofold role: it generates the external visual stimuli used to train and test the ANNs, and it subsequently acquires the participant's response, which allows the predictive model to be evaluated. The second component is the EEG Enobio cap [62], used to acquire the EEG signals from the users. The two subsystems transmit their data to a common PC server through a serial port and a Bluetooth connection, respectively.

Figure 1.

System architecture.

2.1.1. Lamp System

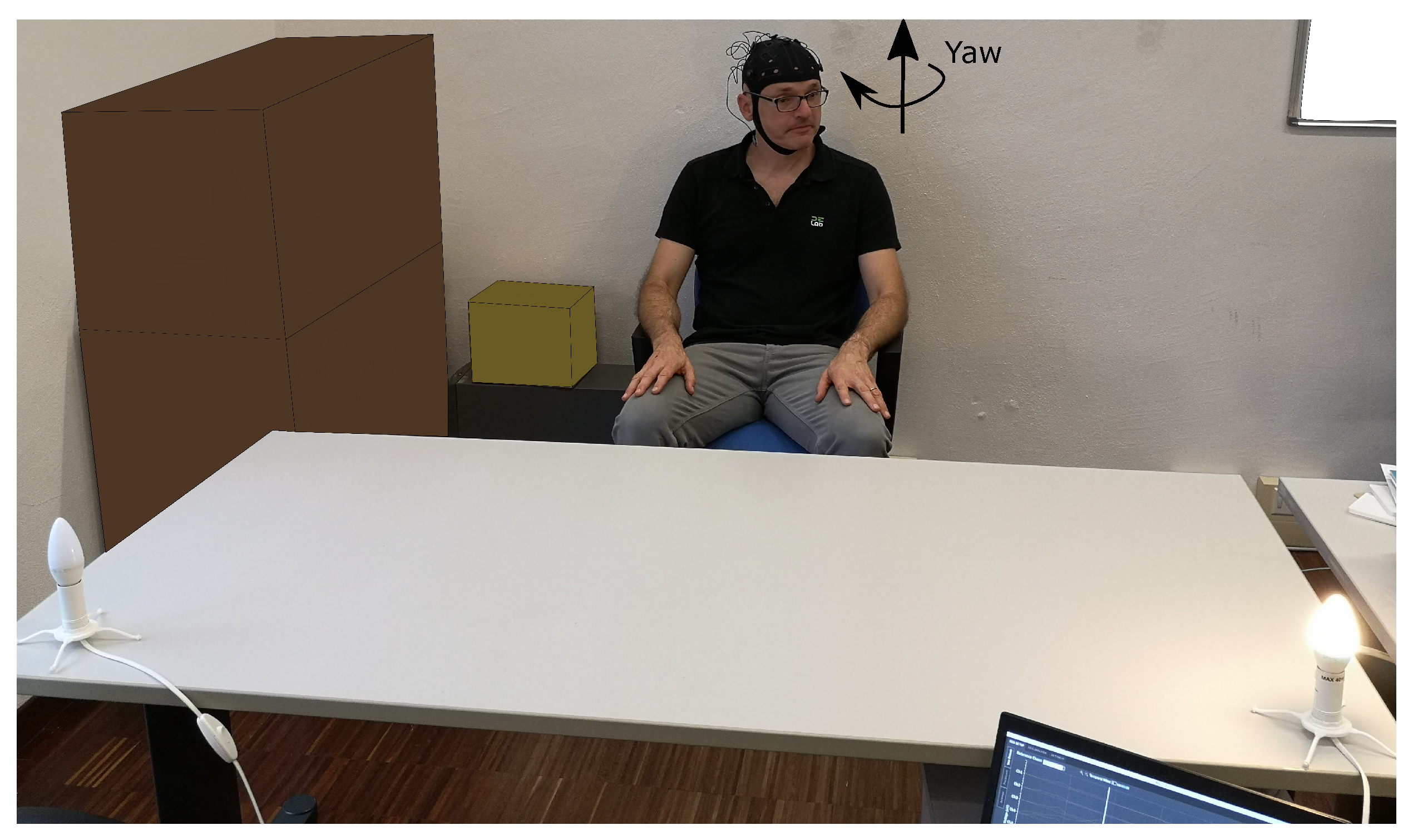

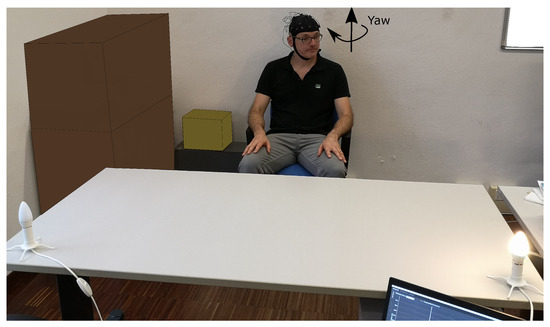

The lamp system consisted of a Raspberry Pi 3 control unit and two lamps. The PC server ran a Python application that sent random commands to the Raspberry Pi through a serial cable, where another Python application received the commands and switched the two lamps on or off. The two lamps were located at the extreme sides of a 1.3 × 0.6 m table, and the participant could rotate their head in the yaw angle range between −45° and 45°. Figure 2 shows the experimental setup.

Figure 2.

Top view of the layout of the experimental setup.

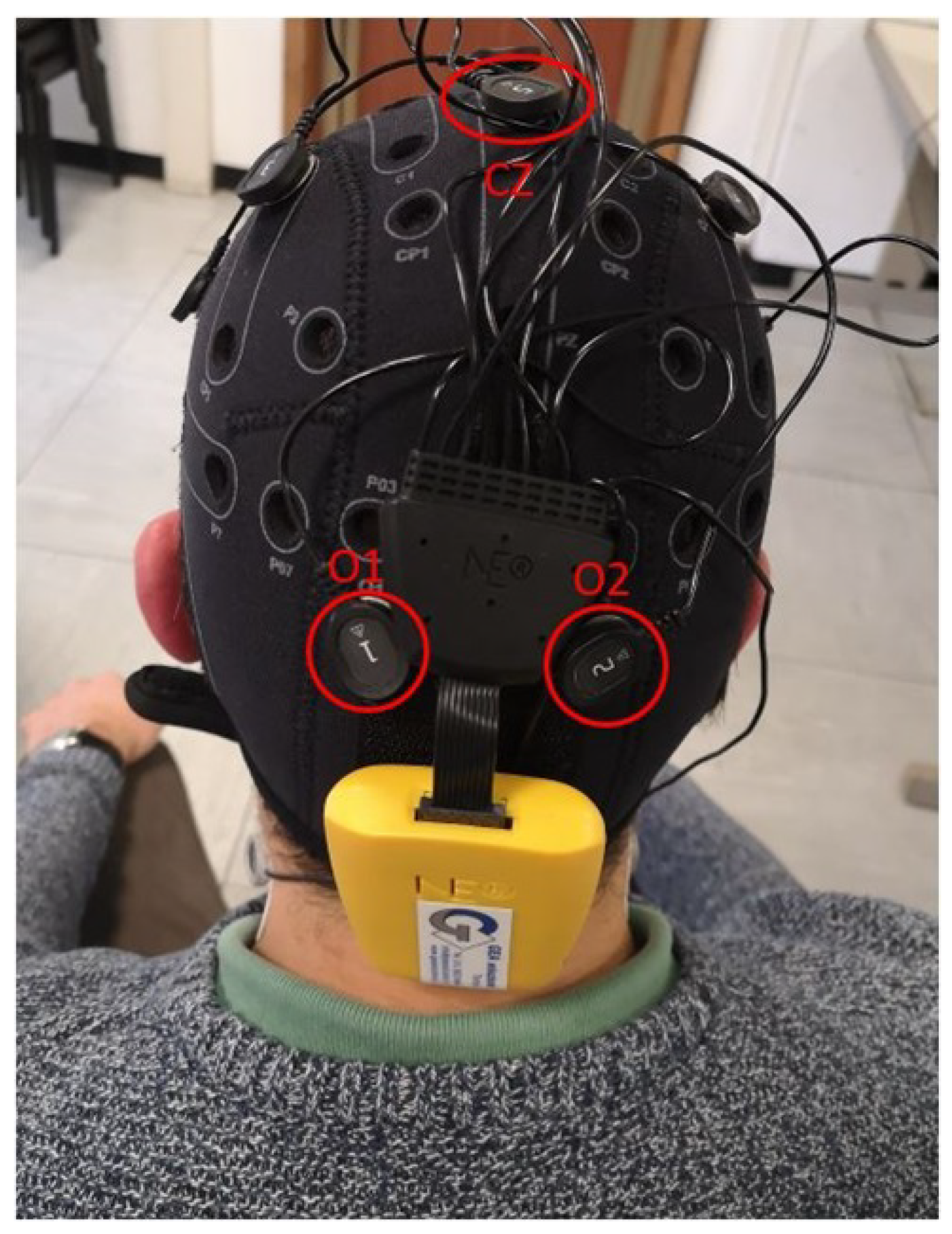

2.1.2. Enobio Cap

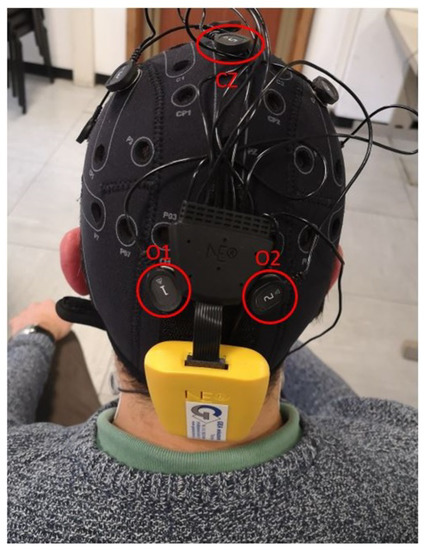

The Enobio cap contained 8 electrodes, which acquire the brain's electrical activity (i.e., an electroencephalogram, EEG) sampled at 500 Hz on 8 channels and are positioned according to the standard 10–20 system. Each sample in each signal therefore corresponds to a duration of 1/500 s = 0.002 s (2 ms). In addition, 2 signals from electrodes positioned on the neck were used to perform differential filtering and to limit artifacts due to the electrical activity of the muscles. Three channels were used: O1 and O2, over the occipital lobe, and Cz, located over the parietal lobe (Figure 3). The occipital lobe is associated with visual processing, while the parietal lobe is associated with recognition, perception of stimuli, movement, and orientation. In addition, visual-motor performance may be detected in the occipital and centroparietal areas [59]. These three electrodes were also chosen because they are located in a brain region unaffected by movement.

Figure 3.

EEG Enobio Cap.

2.2. Experimental Set-Up

The EEG signals collected from 22 participants were processed by the sequential application of a Time Delay Neural Network (TDNN) and a Pattern Recognition Neural Network (PRNN) to classify left or right head yaw rotations in response to external visual stimuli. The result is an HMI that uses the user's EEG signals, in real time, as commands to switch the lamps on and off. All subjects gave their informed consent for inclusion before they participated in the study. The lamp system was used in two different phases of the experiments.

In the first phase (hereinafter referred to as “ANN training”), the participant followed the visual stimulus with a yaw rotation and looked towards the switched-on lamp, while in the second phase (hereinafter referred to as “binary controller”), the participant decided on their own when to rotate the head and, according to a runtime interpretation of the EEG, the corresponding lamp was switched on or off.

During ANN training, the lamps were switched on randomly, one at a time, for 5 s. Both lamps were then switched off for a period varying between 6 and 9 s. As soon as one lamp switched on, the participant had to turn his/her head towards the light; when the lamp switched off, the participant returned to the rest position. Each ANN training experiment lasted 5 min, and the participants wore an Enobio cap with dry sensors for EEG recording. The EEG file was used to identify an input–output function between the EEG signal (input) and the state of the lamp system (defined by which lamp was switched on). The approach to the identification of this function is described in Section 2.5.
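The stimulus timing of the training phase can be sketched in Python as follows. This is a minimal illustration, not the authors' Python application: the function name `stimulus_schedule`, the choice to start each session with a rest period, and the uniform distribution of the 6 to 9 s off interval are assumptions; only the 5 s on-time, the off-time range, the random choice of lamp, and the 5 min session length come from the text.

```python
import random


def stimulus_schedule(total_s=300.0, on_s=5.0, off_min_s=6.0, off_max_s=9.0, seed=None):
    """Return (start_s, end_s, lamp) tuples for one training session.

    A randomly chosen lamp ('left' or 'right') stays on for on_s seconds,
    then both lamps stay off for a random time in [off_min_s, off_max_s].
    Assumption: the session starts with a rest period (both lamps off).
    """
    rng = random.Random(seed)
    events = []
    t = rng.uniform(off_min_s, off_max_s)          # initial rest period
    while t + on_s <= total_s:                     # keep only stimuli that end within the session
        lamp = rng.choice(["left", "right"])
        events.append((t, t + on_s, lamp))
        t += on_s + rng.uniform(off_min_s, off_max_s)
    return events


schedule = stimulus_schedule(seed=1)
```

With one stimulus every 11 to 14 s, a 5 min session contains between 21 and 27 head-turning events per participant.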

In the second phase of the experiment, the participant decided to turn his/her head to the left or right side, controlling the lamp system using his/her EEG and generating control through the input–output function. This control phase lasted 3 min.

2.3. Data Acquisition Protocol

Data were transmitted from the EEG cap to the server PC using the TCP/IP protocol: the two systems were connected via Bluetooth, and the raw data stream was managed over TCP/IP in the EDF+ data format. For the lamp system, a proprietary protocol with a raw data format was used. No separate protocol was needed for the artifacts, because the neck/head movements were captured within the EEG signal; the whole signal was therefore acquired during the experiment, and artifacts were subsequently removed in the data processing phase.

2.4. Data Processing Analysis

Preprocessing Data

The main issue in using EEG signals relates to artifacts and to the exogenous and endogenous noise that affects the acquired data. For example, electrical fields present in the environment, eye movements, and muscle activity of the human participants can affect the EEG signal [50]. Muscle-derived artifacts were limited by introducing the signals coming from the differential electrodes placed on the neck. In addition, a bandstop (notch) filter between 49 and 51 Hz was applied to reduce the noise produced by the 50 Hz power-line frequency of electrical devices [63].
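A minimal NumPy sketch of the two preprocessing steps described above is shown below. It makes two assumptions that the text does not specify: the neck-based differential filtering is modeled as subtracting the mean of the two neck channels from each scalp channel, and the 49 to 51 Hz bandstop is implemented as an ideal FFT mask (zeroing the spectral bins in that band) rather than the filter design actually used, which is not reported. The helper names are hypothetical.

```python
import numpy as np

FS = 500.0  # sampling rate in Hz (from the text)


def neck_referenced(scalp, neck):
    """Subtract the mean of the neck channels from every scalp channel.

    scalp: (n_channels, n_samples); neck: (2, n_samples).
    Assumption: the differential filtering is modeled as simple re-referencing.
    """
    scalp = np.asarray(scalp, dtype=float)
    neck = np.asarray(neck, dtype=float)
    return scalp - neck.mean(axis=0, keepdims=True)


def fft_bandstop(x, low_hz=49.0, high_hz=51.0, fs=FS):
    """Ideal bandstop: zero all spectral bins in [low_hz, high_hz] along the last axis."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spectrum[..., (freqs >= low_hz) & (freqs <= high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=x.shape[-1], axis=-1)


# Example: 2 s of a synthetic 10 Hz rhythm with 50 Hz hum and a slow neck artifact.
t = np.arange(1000) / FS
alpha = np.sin(2 * np.pi * 10 * t)
hum = 0.5 * np.sin(2 * np.pi * 50 * t)
artifact = 0.3 * np.cos(2 * np.pi * 3 * t)
scalp = np.vstack([alpha + hum + artifact] * 3)   # stand-ins for O1, O2, Cz
neck = np.vstack([artifact, artifact])            # stand-ins for the two neck electrodes
clean = fft_bandstop(neck_referenced(scalp, neck))
```

An ideal FFT mask is easy to reason about but can cause ringing on short or non-stationary segments; a causal IIR notch filter would be the more usual choice inside a real-time controller.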

Further techniques were applied to limit the artifacts, because the resulting time-series data were still contaminated with artifacts, such as eye blinks and movements, that can considerably distort any further analysis. During data acquisition, two electrodes located on a hairless part of the head, CMS and DRL, were used, similar to a dual reference electrode. The reference and ground EEG electrodes were fixed on the mastoids of the user for noise reduction. The EEG cap is very lightweight, which reduces movement artifacts. The cap adapts to different head shapes and keeps the device in position, guaranteeing solid contact between the electrodes and the scalp, which is a key requirement for high-quality EEG recordings. In EEG-based BCI systems, low-pass, high-pass, or bandpass filters have been successfully used to remove physiological artifacts [34]. Independent component analysis (ICA) has also been applied to remove these artifacts [55].

Preliminary tests, including a correlation analysis, were performed to reduce the number of channels and thus speed up the computation of the controller. This analysis suggested limiting the available information to the signals coming from three main electrodes, labeled O1, O2, and Cz. The resulting samples were normalized by and limited between −1 and 1.
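One plausible reading of the channel reduction and normalization steps is sketched below: channels are ranked by the absolute Pearson correlation between each channel and the head-position signal, and samples are divided by a scale factor and clipped to [−1, 1]. Both the ranking criterion and the `scale` parameter are assumptions for illustration; the exact correlation analysis and the normalization factor are not given in the text.

```python
import numpy as np


def rank_channels(eeg, target, names):
    """Rank channels by |Pearson r| with the target, strongest first.

    eeg: (n_channels, n_samples); target: (n_samples,); names: channel labels.
    """
    scores = [abs(np.corrcoef(channel, target)[0, 1]) for channel in eeg]
    order = np.argsort(scores)[::-1]
    return [(names[i], float(scores[i])) for i in order]


def normalize_clip(x, scale):
    """Divide by a (hypothetical) scale factor and clip the result to [-1, 1]."""
    return np.clip(np.asarray(x, dtype=float) / scale, -1.0, 1.0)


target = np.linspace(-1.0, 1.0, 200)
demo = np.vstack([np.cos(np.linspace(0.0, 20 * np.pi, 200)),  # weakly related channel
                  -3.0 * target + 1.0])                        # strongly related channel
ranking = rank_channels(demo, target, ["A", "B"])
```

Keeping only the top-ranked channels shrinks the TDNN input (10 samples per channel), which is consistent with the stated goal of speeding up the controller.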

The signal that identifies the head position was encoded as −1 for left, 1 for right, and 0 for forward positions. The transition between one position and another (e.g., from left to forward) was linearly smoothed using a moving average computed over a window of 300 samples (i.e., a duration of 0.6 s). The window size was chosen empirically: 0.6 s corresponds to the time needed by the participants to reach the final position, averaged over all head movements recorded during the trials.
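The construction of the smoothed head-position target can be illustrated with the following sketch. It assumes a trailing (causal) moving average over 300 samples at 500 Hz, so that a step from one position to another becomes a linear ramp lasting 0.6 s; whether the authors used a trailing or a centered window is not stated, and the function names are hypothetical.

```python
import numpy as np

FS = 500          # sampling rate in Hz (from the text)
WINDOW = 300      # smoothing window in samples: 300 / 500 Hz = 0.6 s

# Encoding of the head position, as given in the text.
POSITION = {"left": -1.0, "forward": 0.0, "right": 1.0}


def head_position_target(labels):
    """Map per-sample labels ('left', 'forward', 'right') to -1, 0, 1."""
    return np.array([POSITION[label] for label in labels], dtype=float)


def trailing_moving_average(x, window=WINDOW):
    """Average of the current sample and the previous window-1 samples.

    At the start of the signal, where fewer than `window` samples exist,
    the average is taken over the samples available so far.
    """
    x = np.asarray(x, dtype=float)
    csum = np.concatenate(([0.0], np.cumsum(x)))
    idx = np.arange(len(x))
    lo = np.maximum(0, idx - window + 1)
    return (csum[idx + 1] - csum[lo]) / (idx + 1 - lo)


# Example: 1.2 s looking forward, then a turn to the right.
labels = ["forward"] * 600 + ["right"] * 600
target = trailing_moving_average(head_position_target(labels))
# The step becomes a linear ramp that reaches 1.0 after 300 samples (0.6 s).
```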

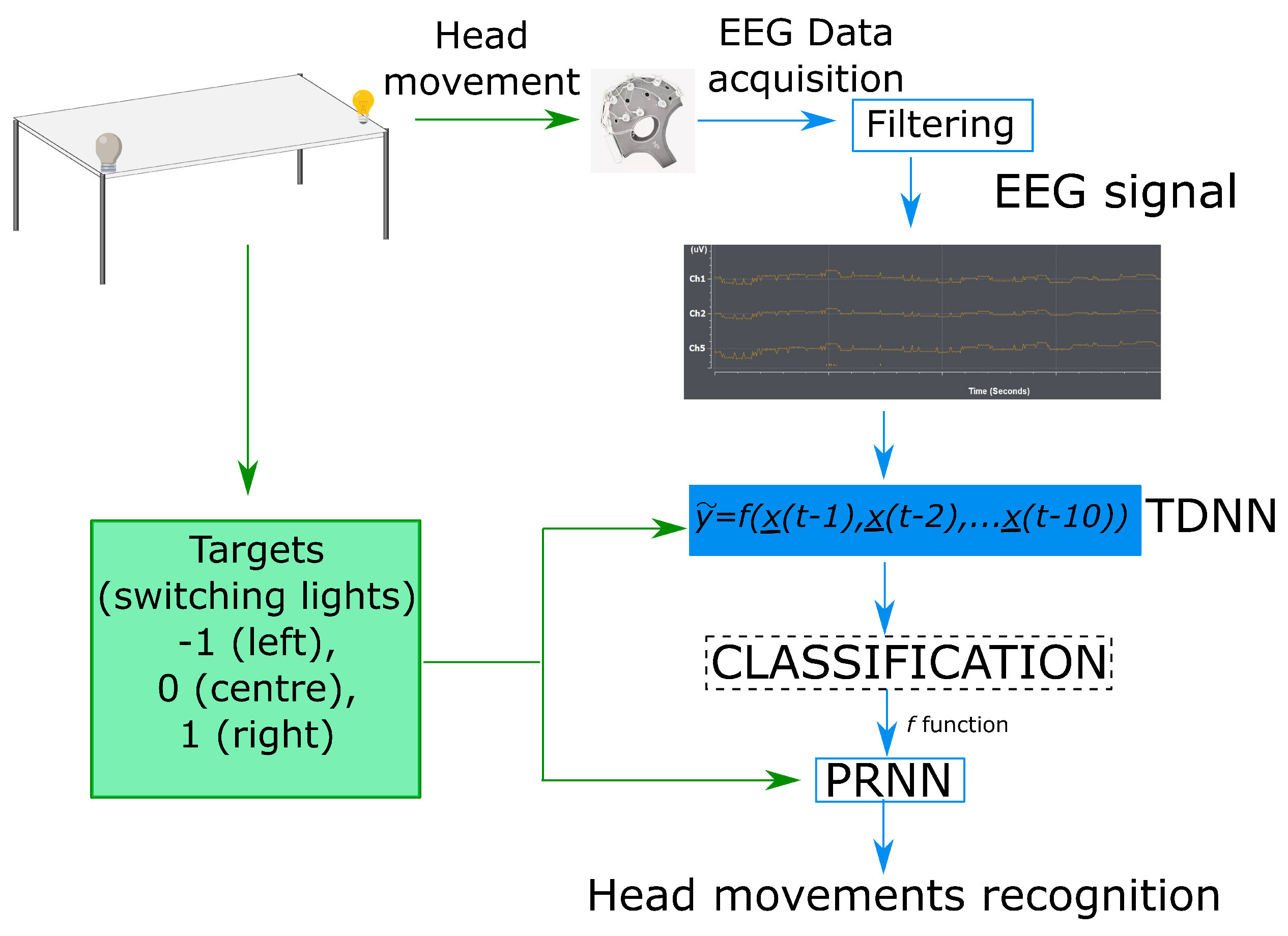

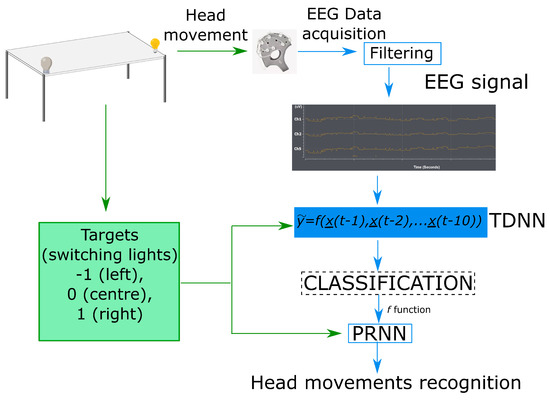

2.5. Input–Output Function Identification

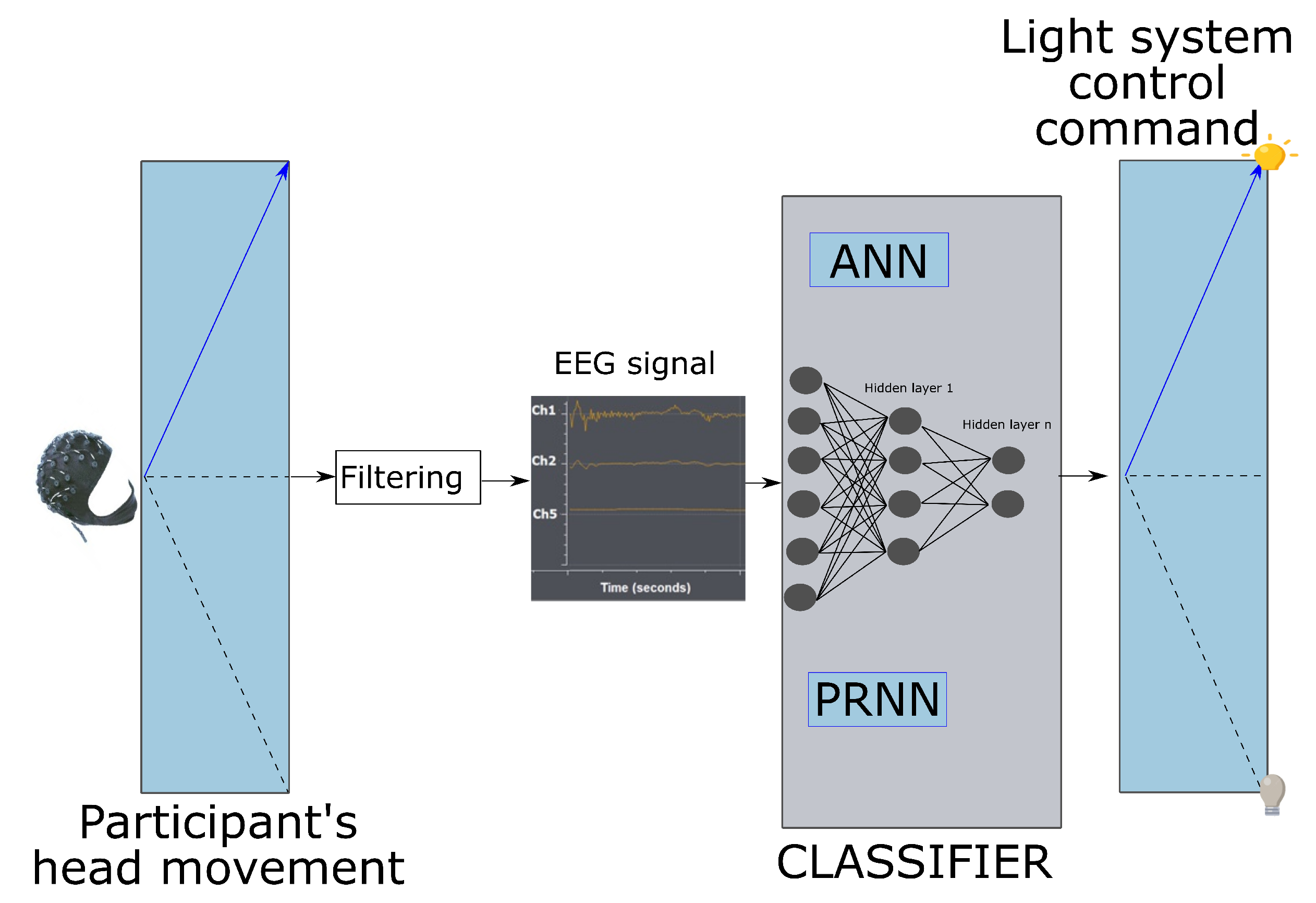

The main objective in this phase was to define the best direct input–output function based on the relationship between a specific set of EEG samples and the related value of the head position. In this context, it has been shown that the variance [6] and the participants' sensitivity [1] affect the experimental results. Because of the complexity of this task, a two-step approach was adopted to obtain the classification results. Figure 4 shows the scheme of the proposed architecture, in which two different ANNs are applied sequentially. First, a Time Delay Neural Network (TDNN) was adopted to model the temporal relationships of the input EEG signals. The TDNN outputs were then used as input to a Pattern Recognition Neural Network (PRNN).

Figure 4.

Architecture of the proposed system.

2.5.1. The Time Delay Neural Network (TDNN)

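The main goal was to identify a nonlinear input–output function between 10 consecutive EEG samples taken from O1, O2, and Cz (hereinafter defined as $\mathbf{x}_t$) and the value of the head position in the sample just following the EEG recording (hereinafter defined as $y_{t+1}$). A nonlinear function f between the input pattern and the output pattern must be identified, such that the values $\hat{y}_{t+1}$ resulting from (1) minimize the mean squared error (MSE) between the $y_{t+1}$ and $\hat{y}_{t+1}$ values:

$$\hat{y}_{t+1} = f(\mathbf{x}_t), \qquad \mathbf{x}_t = \big[\, \mathbf{s}(t-9), \ldots, \mathbf{s}(t) \,\big] \tag{1}$$

where $\mathbf{s}(t) = \big[ s_{\mathrm{O1}}(t), s_{\mathrm{O2}}(t), s_{\mathrm{Cz}}(t) \big]$ collects the samples of the three channels at time t.

The MSE computed on one prediction is given by

$$e_{t+1} = \left( y_{t+1} - \hat{y}_{t+1} \right)^2$$

To make predictions less sensitive to input noise, the predicted values are then averaged by a moving average of the 300 preceding samples, that is,

$$\bar{y}_{t+1} = \frac{1}{300} \sum_{j=0}^{299} \hat{y}_{t+1-j}$$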
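The input–output pairs for the TDNN can be assembled with a sliding window, as in the minimal sketch below. It assumes that each input concatenates the last 10 samples of every selected channel (channel by channel) and that the target is the head position at the very next sample; the function name and the array layout are illustrative choices, not taken from the original implementation.

```python
import numpy as np


def make_tdnn_pairs(eeg, position, n_lags=10):
    """Build TDNN training pairs from multichannel EEG.

    eeg:      array of shape (n_channels, n_samples), e.g. O1, O2, Cz.
    position: array of shape (n_samples,) with the head-position target.
    Each input row holds samples t-n_lags .. t-1 of every channel
    (channel by channel); its target is the position at sample t.
    """
    eeg = np.asarray(eeg, dtype=float)
    n_channels, n_samples = eeg.shape
    inputs = np.stack([eeg[:, t - n_lags:t].ravel() for t in range(n_lags, n_samples)])
    targets = np.asarray(position, dtype=float)[n_lags:]
    return inputs, targets


# Example with 3 channels and 15 samples: 5 windows of 3 x 10 = 30 values each.
demo_eeg = np.arange(3 * 15, dtype=float).reshape(3, 15)
demo_pos = np.arange(15, dtype=float)
X, T = make_tdnn_pairs(demo_eeg, demo_pos)
```

At 500 Hz, each 10-sample window spans only 20 ms, which keeps the per-prediction cost low enough for real-time use.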

The nonlinear input–output function was identified by a TDNN with 10 neurons in the hidden layer. The identification process is based on the Levenberg–Marquardt backpropagation algorithm [64,65] (Matlab® software version 2020). On average, training converged after 87 steps (about 45 s) on a common laptop (Intel i5-3360M CPU, 2.8 GHz, 8 GB RAM).
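To illustrate the Levenberg–Marquardt update itself, the sketch below fits a two-parameter model y = a·tanh(b·x) instead of the 10-neuron TDNN. At each iteration it solves (JᵀJ + μI)δ = Jᵀr for the step δ, where J is the Jacobian of the model with respect to the parameters and r is the residual vector; the step is accepted and μ decreased when the squared error falls, and μ is increased otherwise. This is a didactic sketch under these simplifications; MATLAB's `trainlm` implementation and its default settings are not reproduced.

```python
import numpy as np


def model(p, x):
    """Toy model y = a * tanh(b * x), standing in for the TDNN."""
    a, b = p
    return a * np.tanh(b * x)


def jacobian(p, x):
    """Partial derivatives of the model with respect to a and b."""
    a, b = p
    t = np.tanh(b * x)
    return np.column_stack([t, a * x * (1.0 - t ** 2)])


def levenberg_marquardt(x, y, p0, mu=1e-2, max_iter=100, tol=1e-12):
    """Minimize the sum of squared errors with Levenberg-Marquardt steps."""
    p = np.asarray(p0, dtype=float)
    sse = np.sum((y - model(p, x)) ** 2)
    for _ in range(max_iter):
        if sse < tol:
            break
        r = y - model(p, x)                      # residuals at the current parameters
        J = jacobian(p, x)
        # Damped normal equations: (J^T J + mu I) delta = J^T r
        delta = np.linalg.solve(J.T @ J + mu * np.eye(p.size), J.T @ r)
        p_trial = p + delta
        sse_trial = np.sum((y - model(p_trial, x)) ** 2)
        if sse_trial < sse:                      # accept: behave more like Gauss-Newton
            p, sse, mu = p_trial, sse_trial, mu / 10.0
        else:                                    # reject: behave more like gradient descent
            mu *= 10.0
    return p, sse


x = np.linspace(-2.0, 2.0, 50)
y = model([1.5, 0.8], x)                         # noise-free synthetic data
p_fit, sse_fit = levenberg_marquardt(x, y, p0=[1.0, 1.0])
```

A small μ makes the step close to a Gauss–Newton step, while a large μ turns it into a short step along the negative gradient, which is why μ is adapted after each trial step.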

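Two key performance indexes were used to evaluate the reliability of the function f generated by the TDNN: the MSE and the Pearson correlation coefficient r, defined as follows:

$$r = \frac{\operatorname{cov}(y, \hat{y})}{\sigma_y \, \sigma_{\hat{y}}}$$

where

- $\sigma_y$ and $\sigma_{\hat{y}}$ are the standard deviations of $y$ and $\hat{y}$;

- $\operatorname{cov}(y, \hat{y})$ is the covariance of $y$ and $\hat{y}$.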
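The two TDNN performance indexes can be computed directly from their definitions, as in the following plain-Python sketch (`y` holds the observed head positions and `y_hat` the predicted values; the function names are illustrative). The population normalization used for the covariance and the standard deviations cancels out in r.

```python
import math


def mse(y, y_hat):
    """Mean squared error between observed and predicted values."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def pearson_r(y, y_hat):
    """Pearson correlation r = cov(y, y_hat) / (sigma_y * sigma_y_hat)."""
    n = len(y)
    mean_y = sum(y) / n
    mean_h = sum(y_hat) / n
    cov = sum((a - mean_y) * (b - mean_h) for a, b in zip(y, y_hat)) / n
    sigma_y = math.sqrt(sum((a - mean_y) ** 2 for a in y) / n)
    sigma_h = math.sqrt(sum((b - mean_h) ** 2 for b in y_hat) / n)
    return cov / (sigma_y * sigma_h)


observed = [-1.0, -0.5, 0.0, 0.5, 1.0]
predicted = [-0.9, -0.4, 0.1, 0.4, 0.8]
```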

2.5.2. The Pattern Recognition Neural Network (PRNN)

The aim of this computation was to generate the binary control. The PRNN was defined on 3 layers. The input layer was modeled in units related to an input pattern, where the i-th pattern has the values resulting from

where N is the number of time intervals with duration s contained in each resulting . The hidden layer was modeled in 10 units. The output layer was modeled in three units to match the output binary target , defined on three bits 1..3, coded as 100 for left, 010 for right, and 001 for forward positions. For each pattern, the position was the one found at the end of each time interval (e.g., for the 0-th pattern in the time intervals 98 and 99). The identification of the PRNN has been carried out using the scaled conjugate gradient backpropagation algorithm (Matlab® software version 2020).
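The three-bit target coding described above (100 for left, 010 for right, 001 for forward) corresponds to a one-hot encoding. The sketch below maps the head-position labels −1, 1, and 0 to these codes and back; decoding by taking the unit with the largest output is an assumption about how the PRNN outputs are turned into a class, and the helper names are hypothetical.

```python
# Three-bit target codes from the text: 100 = left, 010 = right, 001 = forward.
CODES = {-1: (1, 0, 0), 1: (0, 1, 0), 0: (0, 0, 1)}
UNIT_TO_POSITION = (-1, 1, 0)  # head position associated with output units 1, 2, 3


def encode(position):
    """Return the one-hot target for a head position (-1, 0 or 1)."""
    return CODES[position]


def decode(outputs):
    """Return the head position whose output unit has the largest activation."""
    best_unit = max(range(len(outputs)), key=lambda i: outputs[i])
    return UNIT_TO_POSITION[best_unit]
```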

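In the PRNN, the results were evaluated by the cross-entropy loss H [66], given by

$$H = -\frac{1}{M} \sum_{i=1}^{M} \sum_{k=1}^{3} t_{ik} \ln p_{ik}$$

where M is the number of patterns, $t_{ik}$ is the k-th bit of the binary target of the i-th pattern, and $p_{ik}$ is the corresponding PRNN output.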
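As an illustration of how a cross-entropy value such as H can be computed, the sketch below implements the mean categorical cross-entropy between one-hot targets and predicted class probabilities. The averaging over patterns, the natural logarithm, and the small `eps` used to avoid log(0) are assumptions; the exact normalization used in the original computation is not stated.

```python
import math


def cross_entropy(targets, outputs, eps=1e-12):
    """Mean categorical cross-entropy over patterns (natural logarithm).

    targets: one-hot rows such as (1, 0, 0); outputs: predicted class
    probabilities for the same patterns. eps guards against log(0).
    """
    total = 0.0
    for t_row, p_row in zip(targets, outputs):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
    return total / len(targets)
```

A confident correct prediction contributes almost nothing to H, while a pattern whose true class receives probability 0.5 contributes ln 2 ≈ 0.69, which is why lower H values accompany higher accuracy.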

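To evaluate the accuracy of the results in the presence of unbalanced classes, the Matthews correlation coefficient (MCC) was used. This index is frequently encountered in biomedical applications [67] and is generally applied in machine learning problems to evaluate the quality of binary classifications. Its generalization to the multiclass case, used here, is

$$\mathrm{MCC} = \frac{\sum_{k}\sum_{l}\sum_{m} \left( C_{kk} C_{lm} - C_{kl} C_{mk} \right)}{\sqrt{\sum_{k} \Big( \sum_{l} C_{kl} \Big) \Big( \sum_{k' \neq k} \sum_{l'} C_{k'l'} \Big)} \; \sqrt{\sum_{k} \Big( \sum_{l} C_{lk} \Big) \Big( \sum_{k' \neq k} \sum_{l'} C_{l'k'} \Big)}} \tag{9}$$

where $C_{kk}$ is the number of samples correctly predicted in category k, and $C_{kl}$ is the number of samples predicted to belong to class k but actually belonging to class l, with $k \neq l$.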
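A minimal implementation of the multiclass MCC is sketched below. Instead of the triple sum, it uses the algebraically equivalent count-based form MCC = (c·s − Σₖ pₖtₖ) / √((s² − Σₖ pₖ²)(s² − Σₖ tₖ²)), where c is the number of correct predictions, s the total number of samples, and pₖ and tₖ the predicted and true counts of class k. The orientation of the confusion matrix (rows as true classes) is a convention chosen here; the MCC value does not depend on it.

```python
import math


def multiclass_mcc(confusion):
    """Multiclass Matthews correlation coefficient from a K x K confusion matrix.

    confusion[i][j] = number of samples of true class i predicted as class j.
    Returns 0.0 when the denominator vanishes (e.g. a single predicted class).
    """
    k = len(confusion)
    s = sum(sum(row) for row in confusion)                           # all samples
    c = sum(confusion[i][i] for i in range(k))                       # correct predictions
    t = [sum(confusion[i]) for i in range(k)]                        # true count per class
    p = [sum(confusion[i][j] for i in range(k)) for j in range(k)]   # predicted count per class
    numerator = c * s - sum(pk * tk for pk, tk in zip(p, t))
    denominator = math.sqrt((s * s - sum(pk * pk for pk in p)) * (s * s - sum(tk * tk for tk in t)))
    return numerator / denominator if denominator else 0.0
```

For two classes this reduces to the familiar (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)).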

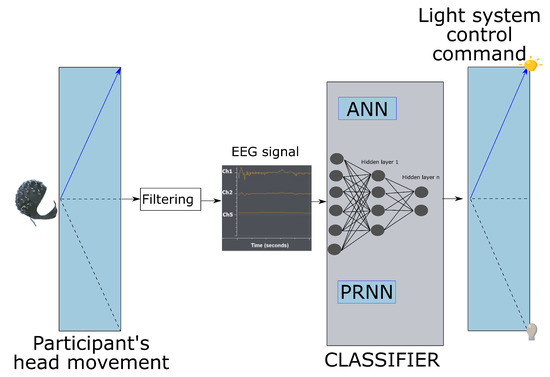

2.6. Binary Controller Testing in Real-Time

To test the reliability of the binary controller, the same participants were involved in the second phase of the experiments. In this phase, each participant, wearing the Enobio cap, decided the direction of the head yaw rotation towards one of the two lamps. The real-time binary controller, implemented in Matlab® software version 2020 and based on the classifier previously identified for each participant (Figure 5), should then switch on the corresponding lamp.

Figure 5.

Binary controller framework.
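The mapping from classifier output to lamp commands can be sketched as follows: a predicted left or right position switches on the lamp on the same side, and a forward position switches both lamps off. Emitting a command only when the requested state changes is an assumption made here to avoid resending identical commands to the lamp system; the table `LAMP_STATE` and the function name are hypothetical.

```python
# Requested (left lamp, right lamp) state for each predicted head position.
LAMP_STATE = {-1: ("on", "off"), 1: ("off", "on"), 0: ("off", "off")}


def lamp_commands(predicted_positions):
    """Turn a stream of predicted positions (-1, 0, 1) into lamp commands.

    Returns (sample_index, left_state, right_state) tuples, emitted only
    when the requested state differs from the current one.
    """
    commands = []
    current = None
    for index, position in enumerate(predicted_positions):
        state = LAMP_STATE[position]
        if state != current:
            commands.append((index, *state))
            current = state
    return commands
```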

When working properly, the binary controller should switch off the lamp once the user turns the head back to the frontal position. In the testing protocol, the participant turned the head to the right or left, according to his/her preference, for about 5 s and then stayed in the frontal position for about 8 s. The on/off switching test was performed by the participants with both open and closed eyes. In this test, the action resulting from the binary controller was defined as correct (a) if, in the period with the head turned to one side (i.e., left/right), the lamp on the same side (i.e., left/right) switched on with no switching of the other lamp (i.e., right/left); or (b) if, in the period with the head in the forward position, the lamp switched off with no other switching.
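The correctness rules (a) and (b) can be expressed as a small scoring function, shown below as a hedged sketch. The event representation (a chronological list of `(lamp, state)` switch events per period) and the function names are assumptions; the rules themselves follow the text.

```python
def period_correct(head, events):
    """Apply rules (a) and (b) to one test period.

    head:   'left', 'right' or 'forward' (actual head position in the period).
    events: chronological (lamp, state) switch events in the period,
            with lamp in {'left', 'right'} and state in {'on', 'off'}.
    """
    if head in ("left", "right"):
        other = "right" if head == "left" else "left"
        same_side_on = any(lamp == head and state == "on" for lamp, state in events)
        other_switched = any(lamp == other for lamp, _ in events)
        return same_side_on and not other_switched              # rule (a)
    # Rule (b): in the forward period the lamp switches off and nothing else happens.
    return len(events) > 0 and all(state == "off" for _, state in events)


def accuracy_percent(results):
    """Percentage of periods judged correct."""
    return 100.0 * sum(results) / len(results)
```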

3. Results

3.1. Prediction Accuracy

The trials involved 22 participants: 5 women and 17 men, aged 18 to 62 years, including 4 left-handed participants. For each participant, one trial lasting 5 min was recorded. To quantify how much information the signals contribute to the final classification, mutual information (MI) was considered more appropriate in this study than classic statistical methods such as regression. MI is a metric that quantifies the dependence between two random variables, taking into account both the linear and the nonlinear information obtained about one variable by observing the other. It is often used as a nonlinear counterpart of the correlation index [68]. When applied to two EEGs, MI gives a measure of the dynamical coupling between them. Compared with other connectivity measures, MI is a measure of statistical dependence between signals that makes no assumptions about the nature of the system generating the signals and gives stable estimates with reasonably long data sets. MI is not limited to real-valued variables, and it measures how much the joint distribution of the pair differs from the product of the two marginal distributions.

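Considering two random variables X and Y with probability distributions p(x) and p(y), respectively, and joint probability p(x, y), the MI quantifies the reduction in uncertainty about X obtained from measurements made on Y and is given as

$$I(X;Y) = \sum_{x} \sum_{y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$$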

The MI is zero if and only if the two variables are independent, and values close to zero indicate a weak dependence. Table 1 reports the MI between each of the three channels and the output patterns. In all cases, the MI values are close to zero. This low information content of each single channel justifies the design of a more complex predictive model.
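A simple plug-in estimator of this MI is sketched below for discrete sequences, together with an equal-width binning helper for continuous EEG amplitudes. The text does not state which estimator, number of bins, or logarithm base was used; this sketch uses the natural logarithm (so MI is in nats), and all names are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats for two equally long discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    # Sum over observed pairs of p(x,y) * log(p(x,y) / (p(x) p(y))), written with counts.
    return sum(nxy / n * math.log(nxy * n / (px[x] * py[y])) for (x, y), nxy in joint.items())


def equal_width_bins(values, n_bins=8):
    """Discretize continuous samples (e.g. EEG amplitudes) into n_bins equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0   # avoid division by zero for a constant signal
    return [min(int((v - lo) / width), n_bins - 1) for v in values]
```

Plug-in estimates are biased upwards for small samples and depend on the binning, so values "close to zero" should be compared across channels computed with the same settings.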

Table 1.

Mutual information between each of the three channels and the output features.

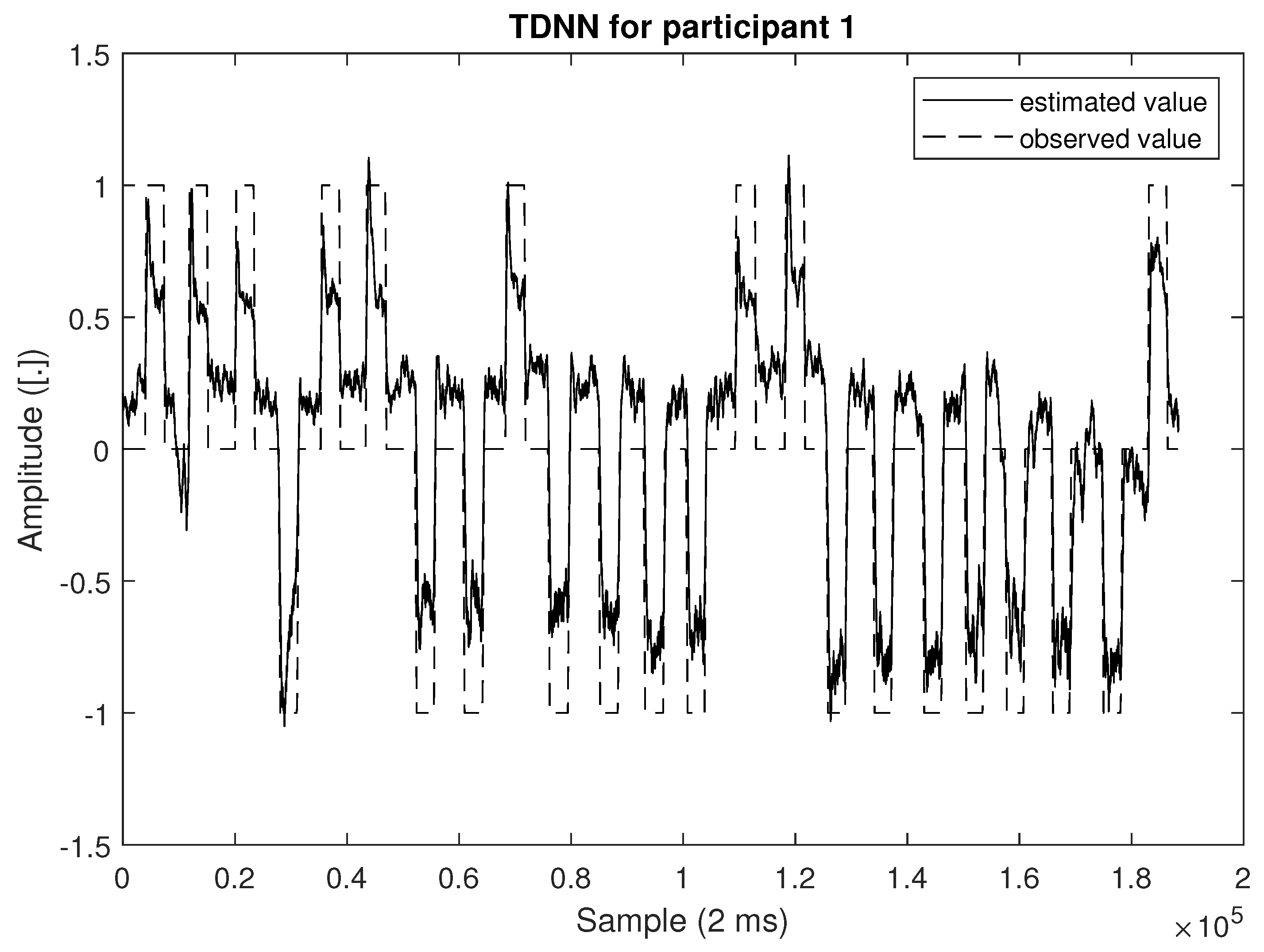

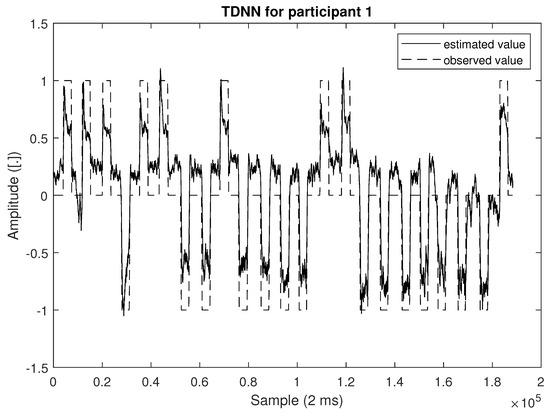

For the TDNN, each file was divided into two equal parts, with training performed on the first half and testing on the second half. Table 2 shows the MSE and the correlation coefficient obtained by the TDNN on the test data only. According to Cohen's classification [69], the r values indicate a strong correlation, which supports the reliability of the TDNN classifier. In all experiments, the r values are greater than 0.5 and, in 15 out of 22 cases, they exceed 0.75 with a related MSE smaller than 0.55. All results are statistically significant at the 0.01 level.
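The conventional benchmarks usually attributed to Cohen label correlations of about 0.1, 0.3, and 0.5 as small, medium, and large, respectively. The sketch below applies these thresholds and computes the fraction of participants whose r exceeds a given value; for example, 15 out of 22 participants above r = 0.75 gives a fraction of about 0.68. The function names and the example r values are illustrative, not the measured data.

```python
def cohen_label(r):
    """Conventional label for the magnitude of a correlation coefficient."""
    magnitude = abs(r)
    if magnitude >= 0.5:
        return "large"
    if magnitude >= 0.3:
        return "medium"
    if magnitude >= 0.1:
        return "small"
    return "negligible"


def fraction_above(values, threshold):
    """Fraction of values strictly greater than threshold."""
    return sum(v > threshold for v in values) / len(values)


# Illustrative (not measured) r values: 15 of 22 participants above 0.75.
example_r = [0.8] * 15 + [0.6] * 7
```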

Table 2.

TDNN performance indices in the second half of the signal recorded: correlation coefficient r and MSE between the predictive and observed values.

Figure 6 compares the observed value and estimated value in the TDNN during the training phase for participant 1. The r value from the previous table is , which indicates a strong correlation between these two variables.

Figure 6.

Comparison between the observed value and estimated value in the TDNN.

The results related to the PRNN performance are shown in Table 3. For this model, 70% of the EEG signal was used for training and the remaining 30% for testing. Table 3 reports the results on the test patterns, evaluated by the percentage of correctly predicted patterns (referred to as accuracy) and by the cross-entropy loss H. The PRNN accuracy is greater than 74% in all cases, with a mean value of 88%. The best performance is 96% for P1. The H values in Table 3 confirm that higher accuracy corresponds to lower cross-entropy: for the participants with an accuracy greater than 90%, H does not exceed 0.3. The average value of H over all experiments is 0.67, with the minimum for P10 (accuracy = 94.72% and H = 0.16) and the maximum for P18 (accuracy = 74.19% and H = 0.6751).

Table 3.

PRNN classification results for the three classes.

The accuracy results were further evaluated with the Matthews correlation coefficient (MCC), starting with the P22 data. Table 4 shows the corresponding confusion matrix; the values on the diagonal are the number of times the classifier correctly predicted the head position associated with label 1 (right movement of the head), label 0 (forward position), and label −1 (left movement). The off-diagonal values represent wrong predictions, that is, mismatches between the output class and the related target. The MCC (9) for Table 4 is 92.04%; this high value confirms a good prediction, consistent with the previously calculated H value. Table 5 shows the global confusion matrix, aggregated over the test sets of all participants; the MCC (9) for these data is 74.3%.

Table 4.

Confusion matrix for P22.

Table 5.

Confusion matrix for all participants.

The Matthews index confirms the good results shown in Table 3. Regarding comparative performance analysis in terms of classifier accuracy, it is important to note that the literature on EEG signal classification for HMI applications involving human movements mainly focuses on hands, eyes, arms, or movement intention detection. In contrast, the proposed classifier, and the resulting HMI controller, concern head movements and aim to evaluate whether head turning can be identified when participants are subjected to visual stimuli, in order to provide a noninvasive interface tool to control external devices.

3.2. Binary Controller Performance

Using the same trials, the same preprocessing pipelines, and the related training described in Section 3.1, the performance of the real-time binary controller based on the electrical brain activity related to head yaw rotation was tested. Table 6 shows the percentage of correct predictions of the HMI used as a binary controller. The tests were performed both with open eyes and with closed eyes; the analysis in “blind” conditions allows us to verify how the light stimuli affect the controller. The percentage of correct predictions is greater than 75% for 20 of 22 participants with open eyes and for 11 of 22 with closed eyes. The comparison favors open eyes, with an average difference of 9%. Participant P1 controlled the light system without errors in both experiments, with 100% success.

Table 6.

HMI accuracy.

4. Conclusions and Future Directions

This work presents an HMI system that implements a binary controller; in the case study, the controller switches lamps on and off according to the electrical brain activity associated with head turning movements. The approach targets two main applications. First, it may support workers’ safety in the workplace, with special reference to the driving context. Specifically, head position and rotation are strongly associated with a driver’s attention during a driving task [70]. Recognizing head movements when the participant is subjected to visual stimuli supports the development of the automatic safety devices with which the next generation of vehicles will be equipped to avoid accidents. In general, detecting a driver’s anomalous movements may prevent accidents. Second, the HMI system described here may be a preliminary step toward assistive devices that improve the mobility of physically disabled people who cannot use their arms or legs, for example, to steer or control motorized wheelchairs or vehicles or to use a PC. The aim is an HMI system that lets the patient interact with devices driven directly by head movements. Most work carried out so far has used EEG to monitor brain activity related to the participant’s movement intention [71] or to track eye movements [72], or has used a gyroscope to drive external components [73]. The approach adopted here is a novel contribution in this field. Its main purpose is to demonstrate the feasibility of using human signals as input to an HMI that switches external devices on and off. The results show that left and right head movements can be identified by a nonlinear input–output function applied to brain signals recorded from three channels of an EEG cap. The correlation is substantial: for our set of 22 participants, it is greater than 0.75 in 68% of the experiments.
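This section does not restate which correlation measure is reported. The reference list includes a study on the Matthews correlation coefficient (MCC) for imbalanced data [67], so, as an assumption, the sketch below computes MCC for a binary left/right labeling and the share of experiments whose score exceeds 0.75. The 0/1 encoding, the function names, and the example labels are hypothetical.

```python
import math

def matthews_corrcoef(y_true, y_pred):
    """Matthews correlation coefficient for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Common convention: report 0 when a row or column of the confusion matrix is empty.
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom

def fraction_above(scores, threshold=0.75):
    """Share of experiments whose score is strictly greater than `threshold`."""
    return sum(s > threshold for s in scores) / len(scores)

# Hypothetical labels: 1 = "right", 0 = "left".
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
mcc = matthews_corrcoef(y_true, y_pred)
print(mcc)  # 0.5
```

Unlike plain accuracy, MCC uses all four cells of the confusion matrix, so it stays informative when one head direction occurs more often than the other.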

The proposed approach applies two consecutive NNs, first a TDNN and then a PRNN, and gives promising results in predicting movements from participants’ EEG signals. The accuracy of the classifier, computed as the ratio of correct predictions to total examples, has a mean value of 88%. In addition, the low computational time required by the TDNN and PRNN allows them to be applied in a real-time context.
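To make the input format concrete, the sketch below shows one way to form the short windows that a time-delay network consumes: with the 500 Hz sampling rate and 10-sample windows stated in the abstract, each window spans 20 ms. The flattened 10 × 3 layout (channels O1, O2, Cz), the function names, and the synthetic data are assumptions for illustration, not the authors’ implementation; the accuracy function follows the definition given in the text (correct predictions divided by total examples).

```python
import numpy as np

FS_HZ = 500   # sampling rate stated in the abstract
WINDOW = 10   # samples per input window, as stated in the abstract

def delay_windows(eeg, window=WINDOW):
    """Stack sliding windows of a multichannel recording.

    eeg: array of shape (n_samples, n_channels), e.g. columns O1, O2, Cz.
    Returns an array of shape (n_samples - window + 1, window * n_channels);
    row i holds samples i .. i + window - 1 of every channel, flattened
    sample by sample (the channel values of one sample are adjacent).
    """
    eeg = np.asarray(eeg, dtype=float)
    n_samples, n_channels = eeg.shape
    rows = [eeg[t - window + 1 : t + 1].ravel() for t in range(window - 1, n_samples)]
    return np.asarray(rows).reshape(-1, window * n_channels)

def accuracy(y_true, y_pred):
    """Ratio of correct predictions to total examples."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))

eeg = np.arange(36, dtype=float).reshape(12, 3)   # synthetic: 12 samples x 3 channels
X = delay_windows(eeg)
window_ms = WINDOW * 1000 / FS_HZ
acc = accuracy(["L", "R", "F", "R"], ["L", "R", "R", "R"])
print(X.shape)      # (3, 30)
print(window_ms)    # 20.0
print(acc)          # 0.75
```

A TDNN usually realizes the same idea internally with a tapped delay line; building the windows explicitly, as here, also makes it easy to check how many predictions a recording of a given length can produce.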

The main achievement of this work is the reliability of the resulting controller. Tests in which the classifier recognized the user’s movements and, consequently, controlled the light system from the EEG signals show a substantial capability of the binary controller to identify the subject’s intention to turn the head to the right or left. On the other hand, one drawback is the need to customize the classifier: a TDNN–PRNN system identified on one participant cannot be applied to another. Several further issues remain open. The first relates to the presence of artifacts in the EEG signal, which may affect the overall identification process. Although classic filtering was applied, signals from other sources (for example, the effect of the lamp light on the participant’s eyes) may still be present. As for the effect of the light on the participant’s eyes, it is encouraging that the controller works quite well without relying on an external stimulus, since the lamps are switched on by the controller itself. In addition, reasonable results are also obtained with closed eyes.

The control results achieved in this work can also be considered an important milestone in the development of humanoid neck systems. Several researchers have developed humanoid neck mechanisms, in particular soft robotic necks. Soft robotics is emerging as a solution to many problems in robotics, such as weight, cost, and human interaction. In this respect, a novel tuning method for a fractional-order proportional derivative controller has been proposed and applied to the control of a soft robotic neck [74]. A cable-driven soft robotic mechanism has also been proposed as a first step toward softer humanoid robots that are simple, accessible, and safe [75]. The complexity of the models required for control has increased dramatically, and geometrical modeling approaches, widely used for rigid dynamics, are not sufficient for these new types of hardware [76].

Clearly, better results could have been obtained in this work with a simple, cheaper gyroscope positioned on the head. It is therefore important to emphasize that the goal of our study is to understand the association between electrical brain activity and a specific action. The electrical activity of the neck muscles is 10–100 times stronger in magnitude than the electrical activity of the brain; thus, despite the techniques used to reduce artifacts, it is highly probable that the developed system uses muscle activity as a hint for the classification task.
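A quick decibel conversion puts the 10–100 ratio in perspective. Assuming the ratio refers to signal amplitude (the symbols A_EMG and A_EEG are introduced here only for illustration), neck-muscle activity exceeds brain activity by:

```latex
20\log_{10}\!\left(\frac{A_{\mathrm{EMG}}}{A_{\mathrm{EEG}}}\right) \in \left[\,20\log_{10}10,\; 20\log_{10}100\,\right] = [\,20,\; 40\,]\ \mathrm{dB}
```

If the ratio referred to power instead, the gap would be 10 to 20 dB. Either way, the brain signal of interest lies well below the muscle contribution, which is consistent with the concern that the classifier may rely on muscle activity.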

However, as noted above, we believe that such a hint could be useful in the first phases of machine learning for degenerative diseases that reduce human mobility, while movement is still possible. A further step, which could not be achieved with an accelerometer or a gyroscope, is to verify this association when the movement is not actually performed and the participant is “just thinking” about making it. Further work could address the relative contributions of the EEG and the electromyogram (EMG) by monitoring both in parallel, to verify the actual information contributed by the EEG. Other studies [77] have shown that this further step is possible. A study on post foot motor imagery [78] focused on the postimagery beta rebound to realize a brain switch using a single EEG channel. An analogous approach, an asynchronous BCI switch based on imagined movement, was designed in [79]; the focus of this work was to demonstrate that the 1–4 Hz feature basis has the minimal power to discriminate voluntary movement-related potentials. A key issue for EEG-based brain switches is detecting the control and idle states asynchronously. A P300-based threshold-free brain switch to control an intelligent wheelchair was proposed in [80]; experiments showed the effectiveness of the proposed method and its possible application to participants with spinal cord injuries.
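As a rough illustration of the brain-switch idea discussed above, the sketch below flags a window as a “switch” event when its power in a beta band exceeds a per-user threshold. It is a generic band-power threshold detector, not the method of [78], [79], or [80]; the 15–30 Hz band, the threshold value, the function names, and the synthetic signals are all assumptions.

```python
import numpy as np

def band_power(x, fs, f_lo, f_hi):
    """Mean periodogram power of signal x within [f_lo, f_hi] Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                  # remove DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2 / len(x)   # one-sided periodogram (unscaled)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= f_lo) & (freqs <= f_hi)
    return float(spectrum[in_band].mean())

def brain_switch(x, fs, threshold, band=(15.0, 30.0)):
    """Hypothetical switch: fire when band power exceeds a per-user threshold."""
    return band_power(x, fs, *band) > threshold

fs = 500
t = np.arange(fs) / fs                   # 1 s of synthetic data
beta_burst = np.sin(2 * np.pi * 20 * t)  # 20 Hz, inside the assumed band
slow_wave = np.sin(2 * np.pi * 5 * t)    # 5 Hz, outside the assumed band
print(brain_switch(beta_burst, fs, threshold=1.0))  # True
print(brain_switch(slow_wave, fs, threshold=1.0))   # False
```

In practice the threshold would need per-user calibration, in line with the subject-specific calibration that the HMI described here already requires.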

Future developments of this work will follow three directions. First, further efforts should be devoted to improving the reliability of the EEG data by enhancing filtering while retaining an inexpensive, noninvasive architecture, so that practical implementation is feasible. While independent component analysis (ICA) is one of the most widely used artifact removal techniques in this context, recent work based on “ad hoc” heuristics that detect influential independent components shows better performance [55]. Second, we will investigate generalizing the identification of the controller to multiple participants rather than one, through an additional cluster analysis of the participants. Finally, and probably most important, the method will be applied in a real application context. Two applications are envisaged. The first relates to the automotive context, as recently investigated [21]. The second relates to identification based on the intended movement rather than on the movement itself, which could have important applications for severely disabled people.

Author Contributions

Conceptualization, E.Z., C.B. and R.S.; methodology, E.Z., C.B. and R.S.; software, E.Z., C.B. and R.S.; validation, E.Z., C.B. and R.S.; formal analysis, E.Z., C.B. and R.S.; investigation, E.Z., C.B. and R.S.; resources, E.Z., C.B. and R.S.; data curation, E.Z., C.B. and R.S.; writing—original draft preparation, E.Z., C.B. and R.S.; visualization, E.Z., C.B. and R.S.; supervision, E.Z., C.B. and R.S.; project administration, E.Z., C.B. and R.S.; funding acquisition, E.Z., C.B. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This study is supported by the SysE2021 project (2021–2023), “Centre d’excellence transfrontalier pour la formation en ingénierie de systèmes” developed in the framework of the Interreg V-A France-Italie (ALCOTRA) (2014–2020), funding number 5665, Programme de coopération trans-frontalière européenne entre la France et l’Italie.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

The authors wish to thank Prof. Carmelina Ruggiero for their help in revising the manuscript and for the valuable suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sharit, J. A Human Factors Engineering Perspective to Aging and Work. In Current and Emerging Trends in Aging and Work; Springer: Berlin/Heidelberg, Germany, 2020; pp. 191–218.

- Zhang, J.; Yin, Z.; Wang, R. Recognition of mental workload levels under complex human–machine collaboration by using physiological features and adaptive support vector machines. IEEE Trans. Hum.-Mach. Syst. 2014, 45, 200–214.

- Tan, D.; Nijholt, A. Brain-computer interfaces and human-computer interaction. In Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2010; pp. 3–19.

- Stegman, P.; Crawford, C.S.; Andujar, M.; Nijholt, A.; Gilbert, J.E. Brain–computer interface software: A review and discussion. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 101–115.

- Yang, S.; Deravi, F. On the usability of electroencephalographic signals for biometric recognition: A survey. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 958–969.

- Sakkalis, V. Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 2011, 41, 1110–1117.

- Zhong, P.; Wang, D.; Miao, C. EEG-based emotion recognition using regularized graph neural networks. IEEE Trans. Affect. Comput. 2020.

- Monteiro, T.G.; Skourup, C.; Zhang, H. Using EEG for mental fatigue assessment: A comprehensive look into the current state of the art. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 599–610.

- Supriya, S.; Siuly, S.; Wang, H.; Zhang, Y. EEG sleep stages analysis and classification based on weighed complex network features. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 5, 236–246.

- Wang, S.; Gwizdka, J.; Chaovalitwongse, W.A. Using wireless EEG signals to assess memory workload in the n-back task. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 424–435.

- Yu, H.; Zhu, L.; Cai, L.; Wang, J.; Liu, C.; Shi, N.; Liu, J. Variation of functional brain connectivity in epileptic seizures: An EEG analysis with cross-frequency phase synchronization. Cogn. Neurodyn. 2020, 14, 35–49.

- Ghosh, L.; Konar, A.; Rakshit, P.; Nagar, A.K. Mimicking short-term memory in shape-reconstruction task using an EEG-induced type-2 fuzzy deep brain learning network. IEEE Trans. Emerg. Top. Comput. Intell. 2019, 4, 571–588.

- Acharya, U.R.; Sree, S.V.; Swapna, G.; Martis, R.J.; Suri, J.S. Automated EEG analysis of epilepsy: A review. Knowl.-Based Syst. 2013, 45, 147–165.

- Zhang, C.; Eskandarian, A. A survey and tutorial of EEG-based brain monitoring for driver state analysis. arXiv 2020, arXiv:2008.11226.

- He, D.; Donmez, B.; Liu, C.C.; Plataniotis, K.N. High cognitive load assessment in drivers through wireless electroencephalography and the validation of a modified n-back task. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 362–371.

- Ming, Y.; Wu, D.; Wang, Y.K.; Shi, Y.; Lin, C.T. EEG-based drowsiness estimation for driving safety using deep Q-learning. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 5, 583–594.

- Li, G.; Chung, W.Y. Combined EEG-gyroscope-tDCS brain machine interface system for early management of driver drowsiness. IEEE Trans. Hum.-Mach. Syst. 2017, 48, 50–62.

- Reddy, T.K.; Arora, V.; Kumar, S.; Behera, L.; Wang, Y.K.; Lin, C.T. Electroencephalogram based reaction time prediction with differential phase synchrony representations using co-operative multi-task deep neural networks. IEEE Trans. Emerg. Top. Comput. Intell. 2019, 3, 369–379.

- Zero, E.; Bersani, C.; Zero, L.; Sacile, R. Towards real-time monitoring of fear in driving sessions. IFAC-PapersOnLine 2019, 52, 299–304.

- Graffione, S.; Bersani, C.; Sacile, R.; Zero, E. Model predictive control of a vehicle platoon. In Proceedings of the 2020 IEEE 15th International Conference of System of Systems Engineering (SoSE), Budapest, Hungary, 2–4 June 2020; pp. 513–518.

- Chavarriaga, R.; Ušćumlić, M.; Zhang, H.; Khaliliardali, Z.; Aydarkhanov, R.; Saeedi, S.; Gheorghe, L.; Millán, J.d.R. Decoding neural correlates of cognitive states to enhance driving experience. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 288–297.

- Zero, E.; Graffione, S.; Bersani, C.; Sacile, R. A BCI driving system to understand brain signals related to steering. In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics, ICINCO 2021, Online streaming, 6–8 July 2021; pp. 745–751.

- Nourmohammadi, A.; Jafari, M.; Zander, T.O. A survey on unmanned aerial vehicle remote control using brain–computer interface. IEEE Trans. Hum.-Mach. Syst. 2018, 48, 337–348.

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001.

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Gupta, G.S.; Dave, G.B.; Tripathi, P.R.; Mohanta, D.K.; Ghosh, S.; Sinha, R.K. Brain computer interface controlled automatic electric drive for neuro-aid system. Biomed. Signal Process. Control 2021, 63, 102175.

- Nataraj, S.K.; Paulraj, M.P.; Yaacob, S.B.; Adom, A.H.B. Classification of thought evoked potentials for navigation and communication using multilayer neural network. J. Chin. Inst. Eng. 2021, 44, 53–63.

- Tsui, C.S.L.; Gan, J.Q.; Hu, H. A self-paced motor imagery based brain-computer interface for robotic wheelchair control. Clin. EEG Neurosci. 2011, 42, 225–229.

- Holm, N.S.; Puthusserypady, S. An improved five class MI based BCI scheme for drone control using filter bank CSP. In Proceedings of the 2019 7th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 18–20 February 2019; pp. 1–6.

- Meng, J.; Zhang, S.; Bekyo, A.; Olsoe, J.; Baxter, B.; He, B. Noninvasive electroencephalogram based control of a robotic arm for reach and grasp tasks. Sci. Rep. 2016, 6, 38565.

- Rakshit, A.; Konar, A.; Nagar, A.K. A hybrid brain-computer interface for closed-loop position control of a robot arm. IEEE/CAA J. Autom. Sin. 2020, 7, 1344–1360.

- Zhao, Q.; Zhang, L.; Cichocki, A. EEG-based asynchronous BCI control of a car in 3D virtual reality environments. Chin. Sci. Bull. 2009, 54, 78–87.

- Brumberg, J.S.; Nieto-Castanon, A.; Kennedy, P.R.; Guenther, F.H. Brain–computer interfaces for speech communication. Speech Commun. 2010, 52, 367–379.

- Lotte, F.; Congedo, M.; Lécuyer, A.; Lamarche, F.; Arnaldi, B. A review of classification algorithms for EEG-based brain–computer interfaces. J. Neural Eng. 2007, 4, R1.

- Garrett, D.; Peterson, D.A.; Anderson, C.W.; Thaut, M.H. Comparison of linear, nonlinear, and feature selection methods for EEG signal classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2003, 11, 141–144.

- Saha, A.; Konar, A.; Chatterjee, A.; Ralescu, A.; Nagar, A.K. EEG analysis for olfactory perceptual-ability measurement using a recurrent neural classifier. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 717–730.

- Jiang, J.; Fares, A.; Zhong, S.H. A context-supported deep learning framework for multimodal brain imaging classification. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 611–622.

- Müller, V.; Lutzenberger, W.; Preißl, H.; Pulvermüller, F.; Birbaumer, N. Complexity of visual stimuli and non-linear EEG dynamics in humans. Cogn. Brain Res. 2003, 16, 104–110.

- Reddy, T.K.; Arora, V.; Behera, L. HJB-equation-based optimal learning scheme for neural networks with applications in brain–computer interface. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 4, 159–170.

- Saha, A.; Konar, A.; Nagar, A.K. EEG analysis for cognitive failure detection in driving using type-2 fuzzy classifiers. IEEE Trans. Emerg. Top. Comput. Intell. 2017, 1, 437–453.

- Liu, C.; Fu, Y.; Yang, J.; Xiong, X.; Sun, H.; Yu, Z. Discrimination of motor imagery patterns by electroencephalogram phase synchronization combined with frequency band energy. IEEE/CAA J. Autom. Sin. 2016, 4, 551–557.

- Athanasiou, A.; Chatzitheodorou, E.; Kalogianni, K.; Lithari, C.; Moulos, I.; Bamidis, P. Comparing sensorimotor cortex activation during actual and imaginary movement. In Proceedings of the XII Mediterranean Conference on Medical and Biological Engineering and Computing 2010, Chalkidiki, Greece, 27–30 May 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 111–114.

- Chaisaen, R.; Autthasan, P.; Mingchinda, N.; Leelaarporn, P.; Kunaseth, N.; Tammajarung, S.; Manoonpong, P.; Mukhopadhyay, S.C.; Wilaiprasitporn, T. Decoding EEG rhythms during action observation, motor imagery, and execution for standing and sitting. IEEE Sens. J. 2020, 20, 13776–13786.

- Al-dabag, M.L.; Ozkurt, N. EEG motor movement classification based on cross-correlation with effective channel. Signal Image Video Process. 2019, 13, 567–573.

- Bhattacharyya, S.; Khasnobish, A.; Konar, A.; Tibarewala, D.; Nagar, A.K. Performance analysis of left/right hand movement classification from EEG signal by intelligent algorithms. In Proceedings of the 2011 IEEE Symposium on Computational Intelligence, Cognitive Algorithms, Mind, and Brain (CCMB), Paris, France, 11–15 April 2011; pp. 1–8.

- Abiri, R.; Borhani, S.; Kilmarx, J.; Esterwood, C.; Jiang, Y.; Zhao, X. A usability study of low-cost wireless brain-computer interface for cursor control using online linear model. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 287–297.

- Zero, E.; Bersani, C.; Sacile, R. EEG Based BCI System for Driver’s Arm Movements Identification. Proc. Autom. Robot. Commun. Ind. 4.0 2021, 77.

- O’Regan, S.; Marnane, W. Multimodal detection of head-movement artefacts in EEG. J. Neurosci. Methods 2013, 218, 110–120.

- Uke, N.; Kulkarni, D. Recent Artifacts Handling Algorithms in Electroencephalogram. 2020. Available online: https://www.academia.edu/43232803/Recent_Artifacts_Handling_Algorithms_in_Electroencephalogram?from=cover_page (accessed on 1 January 2020).

- Jiang, X.; Bian, G.B.; Tian, Z. Removal of artifacts from EEG signals: A review. Sensors 2019, 19, 987.

- O’Regan, S.; Faul, S.; Marnane, W. Automatic detection of EEG artefacts arising from head movements using EEG and gyroscope signals. Med. Eng. Phys. 2013, 35, 867–874.

- Lawhern, V.; Hairston, W.D.; McDowell, K.; Westerfield, M.; Robbins, K. Detection and classification of subject-generated artifacts in EEG signals using autoregressive models. J. Neurosci. Methods 2012, 208, 181–189.

- Chadwick, N.A.; McMeekin, D.A.; Tan, T. Classifying eye and head movement artifacts in EEG signals. In Proceedings of the 5th IEEE International Conference on Digital Ecosystems and Technologies (IEEE DEST 2011), Daejeon, Korea, 31 May–3 June 2011; pp. 285–291.

- Wang, M.; Abdelfattah, S.; Moustafa, N.; Hu, J. Deep Gaussian mixture-hidden Markov model for classification of EEG signals. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 278–287.

- Goh, S.K.; Abbass, H.A.; Tan, K.C.; Al-Mamun, A.; Wang, C.; Guan, C. Automatic EEG artifact removal techniques by detecting influential independent components. IEEE Trans. Emerg. Top. Comput. Intell. 2017, 1, 270–279.

- Kim, C.S.; Sun, J.; Liu, D.; Wang, Q.; Paek, S.G. Removal of ocular artifacts using ICA and adaptive filter for motor imagery-based BCI. IEEE/CAA J. Autom. Sin. 2017, 1–8.

- Daly, I.; Billinger, M.; Scherer, R.; Müller-Putz, G. On the automated removal of artifacts related to head movement from the EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 427–434.

- Li, B.J.; Bailenson, J.N.; Pines, A.; Greenleaf, W.J.; Williams, L.M. A public database of immersive VR videos with corresponding ratings of arousal, valence, and correlations between head movements and self report measures. Front. Psychol. 2017, 8, 2116.

- Zero, E.; Bersani, C.; Sacile, R. Identification of Brain Electrical Activity Related to Head Yaw Rotations. Sensors 2021, 21, 3345.

- Mak, J.N.; Chan, R.H.; Wong, S.W. Evaluation of mental workload in visual-motor task: Spectral analysis of single-channel frontal EEG. In Proceedings of the IECON 2013-39th Annual Conference of the IEEE Industrial Electronics Society, Vienna, Austria, 10–13 November 2013; pp. 8426–8430.

- Rilk, A.J.; Soekadar, S.R.; Sauseng, P.; Plewnia, C. Alpha coherence predicts accuracy during a visuomotor tracking task. Neuropsychologia 2011, 49, 3704–3709.

- Enobio® EEG Systems. Available online: https://www.neuroelectrics.com/solutions/enobio (accessed on 1 January 2020).

- Singh, V.; Veer, K.; Sharma, R.; Kumar, S. Comparative study of FIR and IIR filters for the removal of 50 Hz noise from EEG signal. Int. J. Biomed. Eng. Technol. 2016, 22, 250–257.

- Sapna, S.; Tamilarasi, A.; Kumar, M.P. Backpropagation learning algorithm based on Levenberg Marquardt Algorithm. Comp. Sci. Inf. Technol. (CS IT) 2012, 2, 393–398.

- Lv, C.; Xing, Y.; Zhang, J.; Na, X.; Li, Y.; Liu, T.; Cao, D.; Wang, F.Y. Levenberg–Marquardt backpropagation training of multilayer neural networks for state estimation of a safety-critical cyber-physical system. IEEE Trans. Ind. Inform. 2017, 14, 3436–3446.

- Feng, D.; Chen, L.; Chen, P. Intention Recognition of Upper Limb Movement on Electroencephalogram Signal Based on CSP-CNN. In Proceedings of the 2021 5th International Conference on Robotics and Automation Sciences (ICRAS), Wuhan, China, 11–13 June 2021; pp. 267–271.

- Boughorbel, S.; Jarray, F.; El-Anbari, M. Optimal classifier for imbalanced data using Matthews Correlation Coefficient metric. PLoS ONE 2017, 12, e0177678.

- Pravitha Ramanand, M.C.B.; Bruce, E.N. Mutual information analysis of EEG signals indicates age-related changes in cortical interdependence during sleep in middle-aged vs. elderly women. J. Clin. Neurophysiol. Off. Publ. Am. Electroencephalogr. Soc. 2010, 27, 274.

- Cohen, L.H. Life Events and Psychological Functioning: Theoretical and Methodological Issues; SAGE Publications, Incorporated: Thousand Oaks, CA, USA, 1988; Volume 90.

- Tawari, A.; Martin, S.; Trivedi, M.M. Continuous head movement estimator for driver assistance: Issues, algorithms, and on-road evaluations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 818–830.

- Izzuddin, T.A.; Ariffin, M.; Bohari, Z.H.; Ghazali, R.; Jali, M.H. Movement intention detection using neural network for quadriplegic assistive machine. In Proceedings of the 2015 IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 27–29 November 2015; pp. 275–280.

- Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Kalafatakis, K.; Tsipouras, M.G.; Giannakeas, N.; Tzallas, A.T. EEG-based eye movement recognition using brain–computer interface and random forests. Sensors 2021, 21, 2339.

- Rudigkeit, N.; Gebhard, M. AMiCUS—A head motion-based interface for control of an assistive robot. Sensors 2019, 19, 2836.

- Nagua, L.; Munoz, J.; Monje, C.A.; Balaguer, C. A first approach to a proposal of a soft robotic link acting as a neck. In Proceedings of the XXXIX Jornadas de Automática. Área de Ingeniería de Sistemas y Automática, Universidad de Extremadura, Badajoz, Spain, 5–7 September 2018; pp. 522–529.

- Nagua, L.; Monje, C.; Yañez-Barnuevo, J.M.; Balaguer, C. Design and performance validation of a cable-driven soft robotic neck. In Proceedings of the Actas de las Jornadas Nacionales de Robótica, Valladolid, Spain, 14–15 June 2018; pp. 1–5.

- Quevedo, F.; Muñoz, J.; Castano Pena, J.A.; Monje, C.A. 3D Model Identification of a Soft Robotic Neck. Mathematics 2021, 9, 1652.

- Bhattacharyya, S.; Konar, A.; Tibarewala, D. Motor imagery and error related potential induced position control of a robotic arm. IEEE/CAA J. Autom. Sin. 2017, 4, 639–650.

- Pfurtscheller, G.; Solis-Escalante, T. Could the beta rebound in the EEG be suitable to realize a “brain switch”? Clin. Neurophysiol. 2009, 120, 24–29.

- Mason, S.G.; Birch, G.E. A brain-controlled switch for asynchronous control applications. IEEE Trans. Biomed. Eng. 2000, 47, 1297–1307.

- He, S.; Zhang, R.; Wang, Q.; Chen, Y.; Yang, T.; Feng, Z.; Zhang, Y.; Shao, M.; Li, Y. A P300-based threshold-free brain switch and its application in wheelchair control. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 715–725.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).