Integrating Open Science Principles into Quasi-Experimental Social Science Research

Abstract

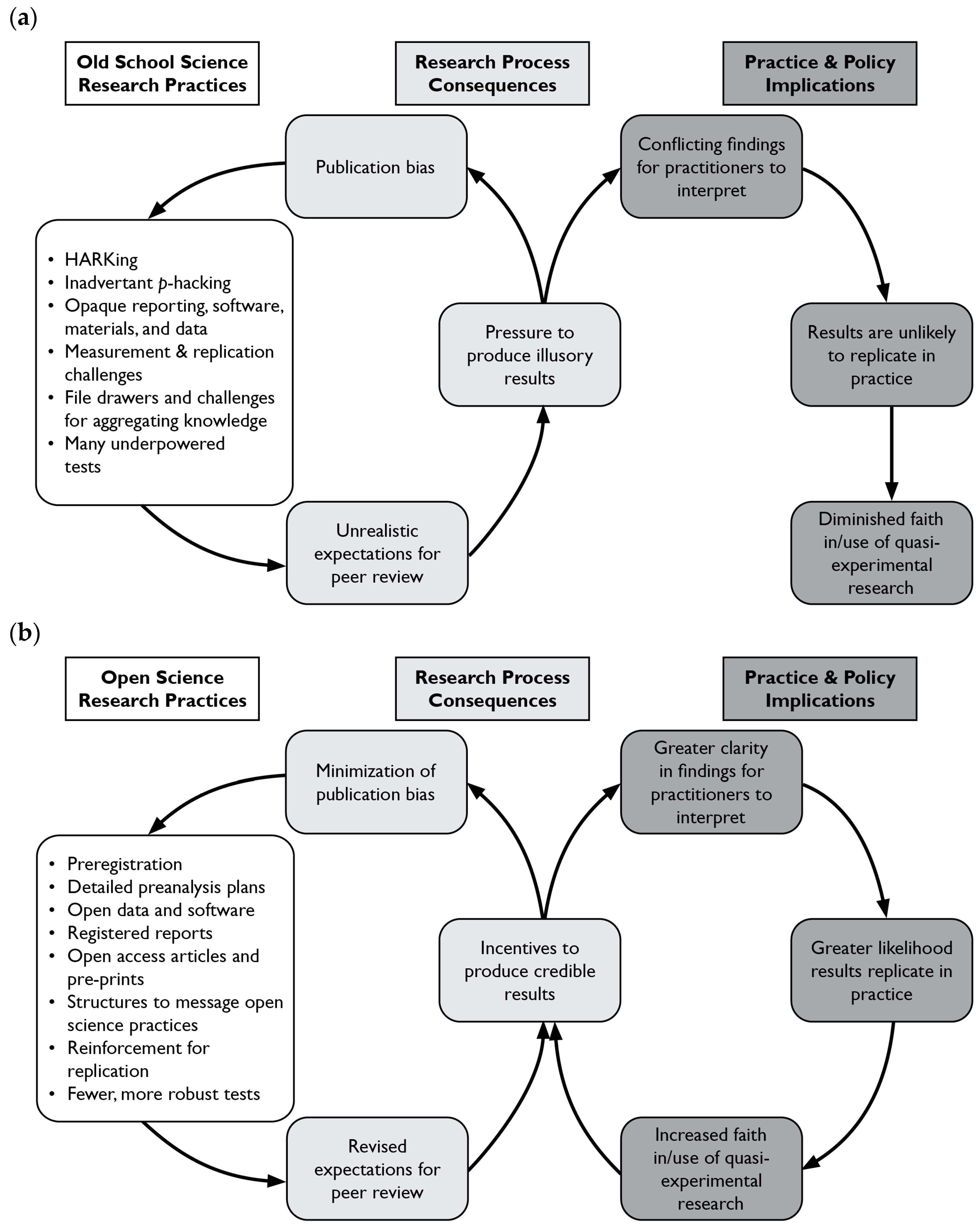

1. Motivation

2. Background on Open Science and Causal Inference

3. Practical Advice for Researchers

3.1. Preregistration

- Establishes the timeline of when a research project was initiated;

- Establishes the primary hypotheses that the project is designed to test;

- Increases the transparency of methods to facilitate replication and limit scope for HARKing;

- Allows for a principled approach to multiple hypothesis correction; and

- Enhances the credibility of prespecified results versus those that emerge from exploratory analyses.
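The multiple-hypothesis point above can be made concrete with a short sketch. It assumes, purely for illustration, that a preregistration fixed a family of three primary hypotheses and that the Holm step-down procedure was the prespecified correction; the hypothesis names and p-values are hypothetical and do not come from any study discussed here.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return {hypothesis: rejected?} using the Holm step-down procedure."""
    # Sort hypotheses from smallest to largest p-value.
    ordered = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ordered)
    rejected = {}
    still_rejecting = True
    for rank, (name, p) in enumerate(ordered):
        # The k-th smallest p-value (rank = k - 1) is compared with alpha / (m - k + 1).
        threshold = alpha / (m - rank)
        # Once one hypothesis fails, every later (larger) p-value fails too.
        still_rejecting = still_rejecting and p <= threshold
        rejected[name] = still_rejecting
    return rejected


# Hypothetical prespecified family of primary hypotheses (illustrative p-values).
prespecified = {
    "H1_enrollment": 0.004,
    "H2_persistence": 0.030,
    "H3_completion": 0.026,
}
decisions = holm_bonferroni(prespecified)
print(decisions)
```

Because the family of tests is fixed before outcomes are observed, the number of comparisons `m` is known in advance and cannot quietly grow or shrink with the results. Exploratory tests outside the prespecified family would be reported separately.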

3.2. Preanalysis Plans

3.3. Open Data and Software

3.4. Registered Reports

3.5. Open Access Articles and Preprints

3.6. Messaging Open Science Practices (Or Lack Thereof)

3.7. Replication

3.8. Summary

4. Practical Advice for Other Stakeholders

4.1. Data Providers

- Provide access to simulated datasets;

- Offer partitioned datasets that may omit key outcome variables or time periods; and

- Certify when researchers gained access to restricted-use data.
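One way a data provider might implement the partitioning idea above is sketched below. This is an assumption-laden illustration rather than any provider's actual workflow: the function names, the `year` field, and the toy records are all hypothetical, and the SHA-256 fingerprint is just one possible way to tie an access certificate to the exact file that was released.

```python
import hashlib
import json


def make_design_partition(records, outcome_vars, withheld_periods, period_key="year"):
    """Return a copy of `records` without outcome columns or withheld periods."""
    partition = []
    for row in records:
        if row[period_key] in withheld_periods:
            continue  # drop whole time periods reserved for the confirmatory analysis
        # Copy every field except the withheld outcome variables.
        partition.append({k: v for k, v in row.items() if k not in outcome_vars})
    return partition


def fingerprint(records):
    """SHA-256 digest of a canonical JSON dump of the released records."""
    canonical = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


# Made-up toy records, for illustration only.
full_data = [
    {"id": 1, "year": 2019, "treated": 0, "earnings": 31000},
    {"id": 2, "year": 2019, "treated": 1, "earnings": 33500},
    {"id": 3, "year": 2020, "treated": 1, "earnings": 35200},
]
design_data = make_design_partition(
    full_data, outcome_vars={"earnings"}, withheld_periods={2020}
)
print(design_data)
print(fingerprint(design_data))
```

Researchers could use a partition like `design_data` to check sample sizes and covariate balance and to draft a preanalysis plan before the outcome variables and withheld periods are released.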

4.2. Funders

4.3. Journal Editors and Peer Reviewers

4.4. Registries and Repositories

- Allow quasi-experimental studies to be preregistered;

- Provide adaptable or open-ended templates that are compatible with quasi-experimental research designs;

- Provide templates for specific quasi-experimental designs; and

- Add quasi-experimental features to sortable meta-data, badges, etc.

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AEA | American Economic Association |
| APSA | American Political Science Association |
| BOAI | Budapest Open Access Initiative |
| COARA | Coalition for Advancing Research Assessment |
| CRediT | Contributor Role Taxonomy |
| DORA | Declaration on Research Assessment |
| EGAP | Evidence in Governance and Politics |
| FAIR | Findable, accessible, interoperable, and reusable |
| FOSS | Free and open source software |
| GED | No formal meaning; formerly “General Educational Development” |
| HARKing | Hypothesizing after results are known |
| ICPSR | Inter-university Consortium for Political and Social Research |
| NBER | National Bureau of Economic Research |
| OECD | Organization for Economic Co-operation and Development |
| OSF | Open Science Framework |
| QE | Quasi-experimental |
| RCT | Randomized controlled trial |
| REES | Registry of Efficacy and Effectiveness Studies |
| RIDIE | Registry for International Development Impact Evaluations |
| SSRN | No formal meaning; formerly “Social Science Research Network” |
| TOP | Transparency and Openness Promotion |
| UNESCO | United Nations Educational, Scientific, and Cultural Organization |
| U.S. | United States |

| Note | Source |
|---|---|
| 1 | Harvard Dataverse: https://dataverse.harvard.edu/. |
| 2 | ICPSR: https://www.icpsr.umich.edu/web/pages/, accessed on 13 August 2025. |
| 3 | OSF: https://osf.io/. |
| 4 | Open Source Initiative: https://opensource.org/. |
| 5 | ResearchBox: https://researchbox.org/. |
| 6 | Scientific Data: https://www.nature.com/sdata/, accessed on 13 August 2025. |


| Feature ↓ / Repository → | AEA | As Predicted | OSF | REES | RIDIE |
|---|---|---|---|---|---|
| Allows QE studies to be registered | No | Yes | Yes | Yes | Yes |
| Flexible pre-registration template | No | Yes | Yes | Yes | Yes |
| QE methods can be selected within default template | No | Some | Some | Yes | Yes |
| Specific QE preregistration templates | No | No | No | Yes | No |
| Meta-data/tags to identify QE registrations | No | No | Some | Yes | Yes |
| Specific content or geographic limitations | No | No | No | Yes | Yes |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Heller, B.H.; Robinson, C.D. Integrating Open Science Principles into Quasi-Experimental Social Science Research. Soc. Sci. 2025, 14, 499. https://doi.org/10.3390/socsci14080499