Abstract

In this comprehensive study, insights from 1389 business school students and faculty members across the US, the UK, Germany, and Switzerland shed light on the multifaceted perceptions of artificial intelligence (AI). AI’s growing integration into everyday life promises enhanced efficiency and innovation. The European Commission’s Trustworthy AI principles, which emphasise data protection, security, and judicious governance, are a linchpin for AI’s widespread acceptance. A correlation emerged between societal interpretations of AI’s impact and factors such as trustworthiness, associated risks, and usage/acceptance. Those who perceive AI as a threat often view its prospective outcomes pessimistically, while proponents recognise its transformative potential. These inclinations are linked to trust and to AI’s perceived singularity. Consequently, factors such as trust, breadth of application, and perceived vulnerabilities shape public consensus, depicting AI as either humanity’s boon or its bane. The study also highlights the public’s divergent views on AI’s evolution, underlining the malleability of opinions amid polarising narratives.

1. Introduction

Artificial intelligence (AI) has the potential to revolutionise every area of our lives. The general public’s opinion on and acceptance of AI, however, remain largely unknown. Modern artificial intelligence has its origins in Alan Turing’s 1950 test of machine intelligence, and the term was coined by John McCarthy, then at Dartmouth College, for the 1956 Dartmouth summer research project. Today, the term refers to a wide variety of technologies, ideas, and applications. In this paper, “AI” denotes a collection of computer science methods that enable systems to carry out tasks that would typically require human intelligence, such as speech recognition, visual perception, decision making, and language translation. Rapid advances in AI technology have recently affected all social systems, including economics, politics, science, and education (Luan et al. 2020). According to Reinhart (2018), 85% of Americans used at least one AI-powered tool. Nevertheless, people frequently do not recognise the AI applications they use (Tai 2020). Owing to the rapid advancement of cybernetic technology, AI is applied in practically every aspect of life. However, some applications are still viewed as futuristic, even science-fiction, technologies disconnected from everyday reality.

According to Gansser and Reich (2021), AI is simply a technology created to improve human life and assist individuals in specific situations. Darko et al. (2020) describe AI as the primary technological advancement of the Fourth Industrial Revolution (Industry 4.0). AI is employed for many beneficial purposes, such as disease diagnosis, resource conservation, disaster prediction, educational advancement, crime prevention, and the reduction of risks at work (Brooks 2019). According to Hartwig (2021), AI will increase productivity, open up new opportunities, reduce human error, take on the burden of addressing complex problems, and complete monotonous chores. These advantages may therefore free up time for learning, experimentation, and discovery, which could ultimately improve human creativity and quality of life.

A study by the OECD (2019) lists numerous anticipated future breakthroughs in AI. The employment market, education, health care, and national security are just a few of the sectors in which AI technology is expected to bring improvements (Zhang and Dafoe 2019). Given these advantages, one would expect positive views towards these aspects of AI. Alongside such hopes, however, there are also concerns about the technology (Kaya et al. 2022). Potential ethical, social, and financial hazards have been discussed extensively (see, e.g., Neudert et al. 2020). A key concern is the economic risk that AI poses: according to Huang and Rust (2018), artificial intelligence threatens human service jobs.

According to Frey and Osborne (2017), automation, including AI and robots, will put 47% of American workers at risk of losing their jobs in the coming years. In a similar vein, Acemoglu and Restrepo (2017) noted that robots lower costs and estimated that robots had already cost the US economy between 360,000 and 670,000 jobs. They also emphasised that overall employment losses could be far higher if robot adoption proceeds at the projected rate. Gerlich’s (2023) study of virtual influencers found greater trust in and acceptance of AI-run influencers than of human influencers. Furthermore, according to Bossman (2016), employment losses will concentrate the gains from AI among a smaller group of individuals, exacerbating inequality worldwide. In addition to economic hazards, AI may give rise to several security issues. High-profile uses of AI are raising concerns because some have been unlawful, biased, or discriminatory and have infringed on human rights (Gillespie et al. 2021). The OECD (2019) has also emphasised that AI might lead to ethical problems and social anxiety. Frequently raised ethical issues include racism arising from AI-powered decision systems, potential data privacy violations such as the covert monitoring of clients using AI technologies, and biased, discriminatory algorithms that disregard human rights (Circiumaru 2022). Other frequently highlighted challenges include security vulnerabilities introduced by AI systems, legal and administrative problems arising from the deployment of these technologies, a lack of public confidence in AI, and unreasonable expectations of AI. These undesirable aspects may lead people to hold unfavourable views of AI. This study therefore aims to investigate citizens’ attitudes towards AI in four developed Western countries: the US, the UK, Germany, and Switzerland.

Research Contribution

To remain competitive, organisations must keep up with technological change, especially in the current fast-evolving digital era in which e-commerce, mobile technology, and the Internet of Things are gaining popularity. Businesses must adopt technological breakthroughs such as artificial intelligence, but it may be even more crucial to fully understand these approaches and their effects in order to implement them accurately and effectively. To improve the match between AI applications and consumer demands, businesses must understand both the technological and the behavioural characteristics of their customers. Taking all of this into account may enhance the overall impact at different stages of the adoption process, ultimately increasing user confidence and addressing people’s problems. This survey aims to shed light on how people in Western countries feel about artificial intelligence. The results are expected to improve knowledge of the variables influencing public acceptance of AI and their potential effects on its adoption and diffusion. The study may also identify obstacles to the widespread deployment of AI and suggest possible solutions.

The current scholarly landscape reveals a conspicuous paucity of empirical studies centred on the general public’s perceptions of artificial intelligence, which offers fertile ground for further inquiry (Pillai and Sivathanu 2020). Gerlich’s (2023) study uncovered intriguing patterns of consumer behaviour; in particular, the majority of participants professed a belief in the sincerity of AI-driven influencers, perceiving them as devoid of self-interest. Despite a large body of literature addressing multiple facets of artificial intelligence and its attendant challenges for the business sector, a discernible gap persists: it remains unclear how prepared society is for the imminent transformations and how people feel about avant-garde technologies such as chatbots, artificial intelligence, and other complex tools. Artificial intelligence is still at a nascent stage of development, and its future trajectory remains highly uncertain, as Kaplan and Haenlein (2019) underscore. A more nuanced understanding and judicious use of artificial intelligence require the consideration of multiple dimensions, including but not limited to enforcement, employment, ethics, education, entente, and evolution. According to prevailing academic discourse, public sentiment strongly influences the assimilation of emerging technologies and significantly affects adoption decisions (Lichtenthaler 2020). Many studies scrutinise intangible assets such as social networks, virtual worlds, and artificial intelligence (Borges et al. 2020). However, equal attention ought to be paid to the human element, specifically attitudes and perceptions regarding the incorporation of cutting-edge technology, as these are critical determinants of organisational success and competitive advantage.
Surprisingly little research assesses technological advancement and digital transformation within corporate settings from the perspective of employees’ attitudes towards technological innovation. The present investigation aims to partly fill this gap by examining public sentiments and perspectives on the adoption and deployment of artificial intelligence. By addressing this research gap, both academic institutions and businesses stand to gain valuable insights, enabling strategies that mitigate the most salient factors fuelling public resistance to AI.

Key questions addressed in this research work include the following:

- R1: What is the public’s perception of AI in Western countries?

- R2: What factors influence public attitudes towards AI?

- R3: What are the potential consequences of public attitudes towards AI for its adoption and diffusion?

The research was structured around these questions. It first analyses existing studies to identify the relevant factors, then uses these factors to design the primary data collection, and finally applies statistical tests and modelling to draw conclusions.

2. Materials and Methods

The research approach for this study was a correlational, cross-sectional, non-experimental ex post facto survey. The study is non-experimental because it relies on measurement and involves no researcher intervention. Non-experimental designs are intended to examine variance among the sample’s participants and enable analysis of the relationships between variables. McMillan and Schumacher (2014) give multiple justifications for the importance of such relationships in educational research; in particular, relationships allow the researcher to identify potential reasons for significant educational outcomes at a preliminary stage. The study is also correlational. According to Creswell and Guetterman (2019), a correlational design is appropriate when the purpose of the study is to relate two or more variables to examine whether they influence one another. They distinguish between two categories of correlational design: prediction designs, which are used to make predictions, and explanatory designs, which are used to explain associations between variables.

This study uses a mixed-methods approach, combining quantitative and qualitative data collection and analysis. A survey questionnaire was administered to business school students and faculty members in the US and three European countries (the UK, Germany, and Switzerland) to assess their perceptions of AI. The survey also included questions about demographic characteristics such as age, gender, education, and income.

Creswell and Guetterman (2019) contrast cross-sectional and longitudinal survey designs. A cross-sectional survey approach was employed for this study because the data were collected at a single point in time; according to Creswell and Guetterman (2019), cross-sectional survey methods are useful for learning about current attitudes, views, or beliefs. Ex post facto designs are used to examine whether one or more pre-existing conditions may have led to subsequent differences between groups of subjects (McMillan and Schumacher 2014).

The questionnaire was circulated in business schools in four countries, yielding 625 responses from the US, 321 from the UK, 265 from Germany, and 199 from Switzerland. Of the 1410 responses received, 21 were incomplete and were discarded, leaving a final sample of 1389. A hybrid sampling approach was employed, blending elements of convenience and volunteer sampling with stratified random sampling within a very specific demographic: students and faculty members of business schools. This choice was guided by the assumption that individuals in this demographic, by virtue of their educational background and professional orientation, are likely to have at least rudimentary, if not advanced, experience with AI technologies. Given that these individuals are often at the vanguard of technological adoption within organisations, we considered their perspectives particularly informative for the study.

The sample comprised a balanced representation of genders and diverse socio-economic backgrounds, thus mitigating potential biases. Moreover, the sample size of 1389 was derived from a power analysis, aligning with Cohen’s (1988) recommendations, and permits a margin of error of approximately 3% at a 95% confidence level.
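As a rough check on the stated precision, the sketch below recomputes the final sample from the reported country counts and the worst-case margin of error for a proportion. It assumes simple random sampling and the conservative proportion p = 0.5, which the partly convenience-based sample described above does not strictly satisfy, so the figure is indicative only; the function name is illustrative.

```python
import math

# Responses per country and discarded incomplete responses, as reported in the text
responses = {"US": 625, "UK": 321, "Germany": 265, "Switzerland": 199}
incomplete = 21

total_received = sum(responses.values())
final_n = total_received - incomplete


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes simple random sampling; p = 0.5 gives the largest (most conservative)
    value, and z = 1.96 corresponds to a 95% confidence level.
    """
    return z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(final_n)
print(total_received, final_n, f"{moe * 100:.2f}%")  # 1410 1389 2.63%
```

Under these assumptions the margin is about 2.6 percentage points, in line with the approximate 3% quoted above.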

A multistage data collection process was implemented, starting with an eligibility screen based on predefined criteria relating to business school affiliation and familiarity with AI. These safeguards were intended to support the validity and reliability of the findings. The survey was administered via the QuestionPro platform for participants’ convenience.

The sampling strategy, while focused on a specific educational and professional subset, was meticulously designed to capture nuanced viewpoints on AI from those most likely to be at the intersection of technology and business.

Focus groups are a qualitative research method used to elicit opinions, ideas, and comments from a broad range of people about a particular idea, concept, or subject. They are led by a qualified facilitator who keeps the conversation on track and encourages participants to be candid with one another. Focus groups are popular for several reasons: they yield in-depth understanding, capture non-verbal cues, elicit diverse opinions, and are cost-effective. However, focus groups also have limitations. Individual answers can be influenced by group dynamics, and participants may not always convey their genuine views because of social desirability bias or other factors. Owing to their small size, focus groups may also not be representative of the general public.

In addition to the survey, a series of focus group discussions was conducted to gather more in-depth insights into participants’ attitudes towards AI. Two focus groups were created for each country, one for positive and one for negative viewpoints, and all participants were students or faculty members of business schools. One group heard favourable arguments about AI, and the other heard unfavourable arguments. At the start of each session, members were asked whether they saw AI favourably (as a solution to many of the issues facing humanity) or negatively (as something that would bring about humanity’s demise). The methodology employed for all eight focus groups can be categorised as a staged, or phased, qualitative approach. This research design facilitates the capture of rich, nuanced data by organising the focus group interactions into distinct yet interconnected stages (Morgan 1996). Each stage serves specific research objectives, as delineated in progressive inquiry models (Krueger and Casey 2015).

In the initial stage, the research aims to establish baseline attitudes; this stage essentially serves as a diagnostic phase that provides foundational data against which the findings of subsequent stages can be compared (Maxwell 2013). The second stage introduces curated arguments to participants, acting as an initial intervention designed to provoke reflection and possibly modify entrenched beliefs (Flick 2018). The third stage introduces perspectives from authoritative figures, allowing us to examine the impact of expert opinion on public sentiment, a phenomenon studied extensively in the literature on the influence of perceived authority (Cialdini 1984). In methodological terms, this stage can be considered an ‘authority intervention’, aimed at assessing the sway of authoritative figures over lay opinions (Asch 1955). Finally, the fourth stage is a reflective phase in which participants acknowledge the limitations of their understanding (Kvale and Brinkmann 2009). This stage provides insights into the cumulative effects of diverse types of information and perspectives, contributing to a more nuanced understanding of the topic.

Overall, this staged approach resembles an iterative, multi-layered funnel that narrows from general opinions to specific, informed attitudes, incorporating different forms of informational input at each stage (Greenbaum 1998). It also has a longitudinal dimension, adding temporal depth to the data by tracking the evolution of participants’ attitudes within a single research setting (Longhurst 2016). This design is particularly useful for exploring complex and polarised topics (Denzin and Lincoln 2005), where initial viewpoints may be strongly held but can change when new, credible information is introduced (Creswell and Creswell 2017). The results of the focus groups were then used as an additional layer of validation for the survey data.

2.1. Structural Equation Modelling

According to Meyers et al. (2013), structural equation modelling (SEM) is a statistical technique for analysing structural models that contain latent variables. SEM involves two distinct types of model: measurement models and structural models. The measurement model evaluates how well the observed variables reflect the latent constructs they are intended to measure, that is, whether the relationships among the observed variables mirror the expected ones. If the observed data are consistent with the hypothesised model, the structural equation model can be used to represent the hypothesised relationships. The nature of the study’s hypotheses justifies the use of SEM for data analysis. The main focus of this study was the structural model, and its fit to the observed data was investigated.
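For orientation, the two sub-models can be written in the conventional covariance-based (LISREL-style) notation below, where x and y are observed indicators, ξ and η are exogenous and endogenous latent variables, Λ_x and Λ_y are loading matrices, B and Γ are path-coefficient matrices, and δ, ε, and ζ are error terms. This is a generic sketch rather than the study’s specification: GSCA, the component-based approach used in this study, works with weighted composites instead of common factors, so its own formulation differs.

```latex
\begin{aligned}
\text{Measurement model:}\quad & \mathbf{x} = \Lambda_x \boldsymbol{\xi} + \boldsymbol{\delta},
  \qquad \mathbf{y} = \Lambda_y \boldsymbol{\eta} + \boldsymbol{\varepsilon} \\
\text{Structural model:}\quad & \boldsymbol{\eta} = B\,\boldsymbol{\eta} + \Gamma\,\boldsymbol{\xi} + \boldsymbol{\zeta}
\end{aligned}
```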

Structural equation modelling was performed with the GSCA (generalized structured component analysis) software, version 1.2.1, to evaluate the hypotheses. Because the structural model can be examined independently of the measurement model, it was the primary focus of the investigation (Meyers et al. 2013).
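To make the split between the measurement and structural parts concrete, the following sketch builds each construct as the unit-weighted mean of its standardised indicators and then estimates a standardised path coefficient by ordinary least squares. It is a deliberately simplified stand-in, not GSCA itself (GSCA estimates indicator weights, loadings, and paths jointly by alternating least squares). All data are simulated, and the construct names, indicator counts, and noise level are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1389  # illustrative: same size as the study sample, but all data are simulated

# Simulated latent constructs with an assumed true standardised path of 0.5
trust = rng.standard_normal(n)
acceptance = 0.5 * trust + np.sqrt(1 - 0.5**2) * rng.standard_normal(n)


def make_indicators(latent: np.ndarray, k: int, noise: float = 0.6) -> np.ndarray:
    """Generate k noisy observed indicators of one latent construct (hypothetical data)."""
    return latent[:, None] + noise * rng.standard_normal((latent.shape[0], k))


def standardise(a: np.ndarray) -> np.ndarray:
    """Centre and scale to mean 0 and standard deviation 1 along axis 0."""
    return (a - a.mean(axis=0)) / a.std(axis=0)


# Measurement part: each composite is the mean of its standardised indicators
trust_items = make_indicators(trust, k=7)
accept_items = make_indicators(acceptance, k=5)
trust_score = standardise(standardise(trust_items).mean(axis=1))
accept_score = standardise(standardise(accept_items).mean(axis=1))

# Structural part: OLS path for acceptance ~ trust. Both scores are standardised,
# so no intercept is needed and the slope equals their correlation.
X = trust_score[:, None]
beta, *_ = np.linalg.lstsq(X, accept_score, rcond=None)
print(f"estimated standardised path: {beta[0]:.2f}")
```

The estimate comes out somewhat below the simulated 0.5 because unit-weighted composites still carry measurement error, which illustrates why the quality of the measurement model matters for the structural estimates.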

2.2. Definition of Variables

The questionnaire (Appendix A) comprised 46 questions, answered on a 6-point Likert scale. To capture respondents’ understanding and responses in detail while keeping the number of variables to a minimum, the questions were grouped into seven broad categories. The broad categories and their respective question attributes are listed below:

- Perceived benefits of AI: this variable explores people’s attitudes towards the benefits that AI can provide, such as increased efficiency, accuracy, and convenience.

- Perceived risks of AI: this variable explores people’s attitudes towards the risks associated with AI, such as job displacement, privacy concerns, and the potential for AI to be used for malicious purposes.

- Trust in AI: this variable explores the level of trust that people have in AI, including their perceptions of AI’s reliability and predictability.

- Societal issues and frustrations: this variable explores how societal issues such as politics, climate change, and social inequality may impact people’s attitudes towards AI, as well as how they perceive AI’s potential to address these issues.

- Demographic factors: this variable explores how factors such as age, gender, education level, and income may impact people’s attitudes towards AI.

- Exposure to AI: this variable explores how people’s level of exposure to AI, including their use of AI-powered products and services, may impact their attitudes towards AI.

- Cultural factors: this variable explores the impact of cultural factors on the usage and adoption of AI in society.

Demographics:

- Country;

- Age;

- Gender;

- Education;

- Income.

Perceived benefits of AI:

- Increases efficiency and accuracy;

- Offers convenience and saves time;

- Improves decision-making processes;

- Helps solve complex problems;

- Leads to cost savings;

- Creates new job opportunities.

Perceived risks of AI:

- Leads to job displacement;

- Raises privacy concerns;

- Used for malicious purposes;

- Causes errors and mistakes;

- Perpetuates bias and discrimination;

- Unintended consequences.

Trust in AI:

- Performs tasks accurately.

- Makes reliable decisions.

- Is predictable.

- Confidence in AI’s ability to learn.

- Keeps my personal data secure.

- Used ethically.

- Has fewer or no personal interests compared to humans.

Governmental/societal issues:

- The government does not solve important issues like climate change.

- AI can help address societal issues such as climate change and social inequality.

- Governments cannot solve global issues.

- AI has the potential to solve global issues.

- Politicians and countries have too many vested interests.

- AI has the potential to make society more equitable.

- AI can help create solutions to societal issues.

Usage of/exposure to AI:

- Use AI-powered products and services frequently.

- Basic understanding of what AI is and how it works.

- Zero experience with AI.

- Comfortable using AI-powered products and services.

- Encountered issues with AI-powered products and services in the past.

Cultural influence and AI:

- Cultural background influences my attitudes towards AI.

- Different cultures may have different perceptions of AI.

- AI development should take cultural differences into account.

- Cultural beliefs impact my level of trust in AI.

- Cultural diversity can bring unique perspectives to the development and use of AI.

Future perspectives:

- AI is the future of humankind.

- AI is the end of humankind.

In addition, respondents were asked free-text questions on each broad category to capture specific points, but the response rate for these questions was very low (~5%), so they were excluded from the quantitative analysis. The full questionnaire is provided in Appendix A.
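As an illustration of how 6-point items could be combined into one score per category, the sketch below averages the items of the usage/exposure category and reverse-codes the negatively worded item (“Zero experience with AI”) as 7 − x, so that higher values always indicate more exposure. Treating that item as reverse-keyed and using a simple mean are assumptions for illustration; the item keys and function names are hypothetical and do not describe the study’s actual scoring.

```python
SCALE_MIN, SCALE_MAX = 1, 6  # 6-point Likert scale

# Hypothetical item keys for the "Usage of/exposure to AI" category
USAGE_ITEMS = ["frequent_use", "basic_understanding", "zero_experience", "comfortable_using"]
REVERSE_KEYED = {"zero_experience"}  # assumption: agreement means *less* exposure


def reverse(score: int) -> int:
    """Reverse-code a response on the 1..6 scale (1 <-> 6, 2 <-> 5, 3 <-> 4)."""
    return SCALE_MIN + SCALE_MAX - score


def category_score(response: dict[str, int], items: list[str]) -> float:
    """Mean of a respondent's item scores after reverse-coding negatively worded items."""
    values = []
    for item in items:
        raw = response[item]
        if not SCALE_MIN <= raw <= SCALE_MAX:
            raise ValueError(f"{item}: {raw} is outside the {SCALE_MIN}-{SCALE_MAX} scale")
        values.append(reverse(raw) if item in REVERSE_KEYED else raw)
    return sum(values) / len(values)


respondent = {"frequent_use": 5, "basic_understanding": 4, "zero_experience": 2, "comfortable_using": 6}
print(category_score(respondent, USAGE_ITEMS))  # (5 + 4 + 5 + 6) / 4 = 5.0
```

Reverse-coding before averaging prevents a negatively worded item from cancelling out the positively worded ones in the same category.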

3. Literature Review

How people feel about artificial intelligence has recently come into focus and grown in significance, and perceptions of AI and the variables influencing them are of increasing interest (Schepman and Rodway 2022). In an extensive study covering 142 countries and 154,195 participants, Neudert et al. (2020) showed that many people worry about the hazards of using AI. Similarly, Zhang and Dafoe (2019) surveyed 2000 American adults and found that a sizable fraction of participants (41%) supported the development of AI, while 22% opposed it. A large-scale study of 27,901 individuals from several European countries found that the majority of people have a favourable attitude towards robots and AI (European Commission & Directorate-General for Communications Networks, Content & Technology 2017). That study also emphasised that attitudes are largely a function of knowledge: higher levels of education and Internet use were linked to more favourable attitudes towards AI. In addition, younger and male respondents held more favourable views of AI than older and female respondents. Although earlier studies have identified numerous demographic determinants of attitudes towards AI, there is still a significant need to explore them in diverse cultural contexts. Gerlich’s (2023) study of AI-run (virtual) influencers found that participants showed more trust in and comfort with AI influencers than with human ones. A variety of factors may therefore influence a person’s propensity to adopt AI in particular application areas.
In a thorough study, Park and Woo (2022) discovered that the adoption of AI-powered applications was predicted by personality traits; psychological factors like inner motivation, self-efficacy, voluntariness, and performance expectation; and technological factors like perceived practicality, perceived ease of use, technology complexity, and relative advantage. It was also discovered that facilitating factors, such as user experience and cost; factors related to personal values, such as optimism about science and technology, anthropocentrism, and ideology; and factors regarding risk perception, such as perceived risk, perceived benefit, positive views of technology, and trust in government, were significantly associated with the acceptance of smart information technologies. Additionally, subjective norms, culture, technological efficiency, perceived job loss, confidence, and hedonic variables all have an impact on people’s adoption of AI technologies (Kaya et al. 2022). The results of another study including 6054 individuals in the US, Australia, Canada, Germany, and the UK showed that people’s confidence in AI is low and that trust is crucial for AI acceptance (Gillespie et al. 2021).

The 2017 Special Eurobarometer investigated not only how digital technology affects society, the economy, and quality of life but also how the general public feels about artificial intelligence (AI), robots, and their ability to perform a variety of tasks. Overall, 61% of European respondents were enthusiastic about AI and robots, whereas 30% disapproved. It was also reported that exposure to robots or AI is likely to improve attitudes towards them. Furthermore, 68% of respondents agreed that AI and robots are good for society because they can assist with household chores. Nevertheless, over 90% thought that AI and robots need careful management to address hazards and security issues, and 70% voiced concern about possible job losses resulting from their use. Moreover, only a small percentage of respondents felt comfortable with AI or robots performing tasks such as driving them in a driverless car (22%), operating on patients (26%), or delivering goods by robot or drone (35%). As AI applications and their governance proliferate, understanding public views and opinions becomes increasingly important. To better understand Americans’ attitudes towards AI applications in general and in governance in particular, the Centre for the Governance of AI polled 2000 American adults in a manner similar to the Eurobarometer (2017). The results revealed mixed attitudes towards the future development of AI: 41% of respondents supported it, while 22% somewhat or strongly opposed it. However, only 23% of Americans held an unfavourable view of AI applications, whereas 77% believed that AI would have a positive influence or be somewhat useful in people’s work and lives over the next 10 years (Zhang and Dafoe 2019).

The research also identified the role of income, education, and gender in support for the development of AI: respondents who were male, more highly educated, wealthier, or had technical experience were more likely to favour AI and support its use. Notably, 82% of Americans agreed that the government should carefully monitor AI and robotics, given the hazards that have not yet been addressed.

It follows that attitudes towards AI, and the reasons for its acceptance, are complex constructs made up of several elements. To gain a wider perspective on, and a deeper understanding of, society’s response to these emerging technologies, it is useful to investigate the variables that influence attitudes towards AI. In the current study, we examined the influence of several personal variables and, in part, the interaction of broad and specific predictive variables in predicting attitudes towards AI. Before proceeding, it is useful to review the underlying theories.

3.1. The Unified Theory of Acceptance and Use of Technology

The technological and informational revolution of the 20th and 21st centuries has enabled people to use technology and information systems to raise their standard of living. Organisations use technology in their daily operations to increase efficiency, but to achieve that goal, the technology must be adopted and used by customers or employees. A critical challenge in the life of any information system is therefore to understand how users perceive innovative technology and which elements affect their acceptance or rejection of it (Sivathanu and Pillai 2019). The Unified Theory of Acceptance and Use of Technology (henceforth UTAUT), published by Venkatesh et al. in 2003, is regarded as one of the most effective models for predicting the acceptance of, and intention to adopt, new technology (Lai 2017). After reviewing eight prior theories of technology acceptance, Venkatesh et al. (2003) proposed performance expectancy, effort expectancy, and social influence as three direct determinants of behavioural intention, and behavioural intention and facilitating conditions as two direct determinants of usage behaviour. Subsequent theories of technology acceptance have developed from this line of work (Momani and Jamous 2017).
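In linear form, the UTAUT relationships described above can be sketched as two equations, with PE for performance expectancy, EE for effort expectancy, SI for social influence, FC for facilitating conditions, BI for behavioural intention, UB for usage behaviour, and ε and ζ as error terms. The coefficient symbols are illustrative notation rather than Venkatesh et al.’s own, and the moderators (gender, age, experience, and voluntariness of use) are omitted.

```latex
\begin{aligned}
\mathrm{BI} &= \beta_1\,\mathrm{PE} + \beta_2\,\mathrm{EE} + \beta_3\,\mathrm{SI} + \varepsilon \\
\mathrm{UB} &= \gamma_1\,\mathrm{BI} + \gamma_2\,\mathrm{FC} + \zeta
\end{aligned}
```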

Performance expectancy (PE) is the degree to which an individual expects AI to improve their performance or work. It is built from related constructs, including outcome expectancy, relative advantage, and perceived usefulness from the technology acceptance model (TAM) (Davis et al. 1989). According to Davis et al. (1989), perceived usefulness refers to the extent to which a person believes that using a technology would improve their performance. In research on system use, perceived performance impacts had the strongest association with individuals’ own predictions of their system usage. According to Brandtzaeg and Følstad (2017), a key motivator of the intention to use AI in the form of chatbots is the chatbot’s expected productivity, defined as how well it helps users obtain important information quickly and at almost any time.

Effort expectancy (EE) describes how easy it is to use AI systems or applications. Its components include perceived ease of use from the technology acceptance model (TAM) and the complexity of understanding and using the system. According to Davis (1989), perceived ease of use refers to the degree to which a person believes that using a particular system would be free of effort. Consequently, if one application is perceived as more user-friendly than another, it is more likely to be adopted. Perceived ease of use not only directly affects usage intention but also affects perceived usefulness since, all else being equal, the perceived usefulness of a system increases with its perceived ease of use.
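The dual role of perceived ease of use can be expressed as a simple mediation model, sketched below with PEOU for perceived ease of use, PU for perceived usefulness, BI for usage intention, e for error terms, and illustrative coefficients a, b, c′, and c. When all three linear equations are estimated by ordinary least squares on the same data, the total effect c of PEOU on BI decomposes exactly into the direct effect c′ plus the indirect effect ab that runs through PU.

```latex
\begin{aligned}
\mathrm{PU} &= a\,\mathrm{PEOU} + e_1 \\
\mathrm{BI} &= c'\,\mathrm{PEOU} + b\,\mathrm{PU} + e_2 \\
\mathrm{BI} &= c\,\mathrm{PEOU} + e_3, \qquad c = c' + ab
\end{aligned}
```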

According to Venkatesh et al. (2003), social influence (SI) is the extent to which a person perceives that important others, such as close friends or family members, believe he or she should adopt AI systems or applications. In the Theory of Planned Behaviour (Ajzen 2005), social influence is modelled as subjective norms, which act as a direct predictor of usage intention. Subjective norms reflect an individual’s perception of whether peers, significant persons, or major collective groups believe that a specific behaviour should be carried out. They are determined by normative beliefs, which relate to how significant others view or approve of the behaviour, and by the motivation to comply, which weighs the social pressure one feels to conform to others’ expectations (Hale et al. 2003). According to Gursoy et al. (2019), consumers consider their friends and family to be the most valuable sources of information when making decisions. Users have been observed to absorb the customs and ideas of their social groups and behave accordingly, particularly with regard to their intentions to use technological applications such as online services.
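In Ajzen’s expectancy-value formulation of the Theory of Planned Behaviour, the subjective norm is proportional to the sum, over salient referents, of each normative belief multiplied by the motivation to comply with that referent. The minimal sketch below illustrates only this arithmetic; the `Referent` class, the referents, and the scales (a bipolar -3 to +3 belief scale and a 1 to 7 motivation scale) are illustrative assumptions, not values from this study.

```python
from dataclasses import dataclass


@dataclass
class Referent:
    """A salient referent (e.g. friends, family) in the Theory of Planned Behaviour."""
    name: str
    normative_belief: int      # assumed bipolar scale: -3 (should not use) .. +3 (should use)
    motivation_to_comply: int  # assumed unipolar scale: 1 (not at all) .. 7 (very much)


def subjective_norm(referents: list[Referent]) -> int:
    """Expectancy-value index: sum over referents of belief x motivation to comply."""
    return sum(r.normative_belief * r.motivation_to_comply for r in referents)


# Hypothetical respondent deciding whether to use an AI chatbot
respondent = [
    Referent("friends", normative_belief=2, motivation_to_comply=6),
    Referent("family", normative_belief=1, motivation_to_comply=5),
    Referent("colleagues", normative_belief=-1, motivation_to_comply=3),
]
print(subjective_norm(respondent))  # 12 + 5 - 3 = 14
```

A referent who disapproves (negative belief) lowers the index more strongly the more the respondent cares about complying with that referent.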

Facilitating conditions (FC) describe how much a person believes they have the resources or organisational support to make using AI applications easier. In the Theory of Planned Behaviour, perceived behavioural control plays a role similar to that of facilitating conditions (Ajzen 2005). People who perceive themselves to have behavioural control believe they have the skills and resources needed to carry out the behaviour; the more capacity and resources people believe they possess, the greater the influence of perceived behavioural control on behaviour. The Theory of Planned Behaviour supports two effects of perceived behavioural control. First, it may affect someone’s intention to act in a particular way: the more control we believe we have over our behaviour, the stronger our intention to perform it. Second, to the extent that it reflects actual control, perceived behavioural control can also predict behaviour directly (Ajzen 2005). Further, Venkatesh et al. (2003) examined the moderating effects of gender, age, experience, and voluntariness of use and found that including these moderators improved the predictive power of the model. Age, for example, was shown to moderate all of the relationships between behavioural intention and its determinants (Venkatesh et al. 2003).
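As an illustration only, the UTAUT relationships described above can be written as a pair of linear equations, with age entering as a moderator through an interaction term. Here BI denotes behavioural intention and UB usage behaviour; the linear functional form, the coefficient symbols, and the use of a single moderator are simplifying assumptions for exposition, not the specification estimated in this study.

```latex
\begin{aligned}
\mathrm{BI} &= \beta_0 + \beta_1\,\mathrm{PE} + \beta_2\,\mathrm{EE} + \beta_3\,\mathrm{SI}
              + \delta_0\,\mathrm{Age} + \delta_1\,(\mathrm{PE}\times\mathrm{Age}) + \varepsilon_1, \\
\mathrm{UB} &= \gamma_0 + \gamma_1\,\mathrm{BI} + \gamma_2\,\mathrm{FC} + \varepsilon_2, \\
\frac{\partial\,\mathrm{BI}}{\partial\,\mathrm{PE}} &= \beta_1 + \delta_1\,\mathrm{Age}.
\end{aligned}
```

The last line shows what moderation means in this form: when $\delta_1 \neq 0$, the effect of performance expectancy on intention is not a single constant but changes with age.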

Additionally, Venkatesh et al. (2003) investigated attitude, self-efficacy, and anxiety and concluded that these three variables do not directly affect behavioural intention. Numerous academics nevertheless still examine the four primary UTAUT constructs together with such external factors in their models. Since its inception, UTAUT has become a popular framework for analysing technology adoption behaviour. In addition to re-examining the original model, researchers also incorporate new theoretical and external factors. Williams et al. (2015) found that perceived ease of use, usefulness, attitude, perceived risk, gender, income, and experience have a significant impact on behavioural intention, whereas age, anxiety, and training have less of an impact.

Depending on the particular study context, social influence and facilitating conditions have proved relevant in different ways. For instance, according to Alshehri et al. (2012), facilitating conditions have weak positive effects on Saudi residents’ intentions to use and accept e-government services, whereas social influence has little effect on such intentions. In contrast, a study by Jaradat and Al Rababaa (2013) on the drivers of mobile commerce acceptance in Jordan found social influence to be the most significant direct determinant of acceptance intention, whereas facilitating conditions had no significant effect on usage intention. Researchers are therefore advised to investigate moderating variables, because moderating effects may differ across study contexts.

3.2. AI Device Use Acceptance Model (AIDUA)

To improve the precision of our forecasts, we must take into account theoretical models created specifically to gauge the acceptance of AI. According to Gursoy et al. (2019), traditional technology acceptance models (i.e., TAM and UTAUT) were designed to examine non-intelligent technology, and their predictors may not be valid for AI adoption. To study consumer acceptance of AI technologies, they developed the AI device use acceptance model (AIDUA). The AIDUA builds on prior acceptance models by examining user acceptance of AI agents at three stages: primary appraisal, secondary appraisal, and the outcome stage.

According to Gursoy et al. (2019), in the primary appraisal, customers evaluate an AI device based on social influence, hedonic motivation, and anthropomorphism. Hedonic motivation describes the pleasure or enjoyment that using AI technology is expected to provide. Anthropomorphism refers to the human traits, such as human-like appearance, that a technology may try to replicate. In the secondary appraisal, consumers weigh the costs and benefits of the device based on their perceptions of its performance expectancy and effort expectancy, which gives rise to emotions towards the AI (Gursoy et al. 2019). These earlier evaluations in turn determine the outcome stage, that is, whether customers accept or reject the technology (Gursoy et al. 2019). Rejection takes the form of objection: the unwillingness to use AI technology in favour of human assistance. This two-pronged outcome stage differs from traditional acceptance models, which is useful for researchers who do not view acceptance and rejection as diametrically opposed concepts. For instance, a user may disapprove of Amazon due to moral concerns, yet nevertheless demonstrate acceptance by using the service.

3.3. Perceived Risks of AI

The term “perceived risk” was originally introduced by Bauer in 1960 and refers to worries about the negative consequences of a certain action. It typically arises when choosing between options, since the outcome of a choice cannot be known until after it has been made. This introduces uncertainty and hazards, which are typically perceived as unpleasant and anxiety-inducing. Numerous studies have examined the effects of perceived risk on the uptake of products and services (Featherman and Pavlou 2003). To better understand how perceived risk affects adoption behaviour, it has further been broken down into six primary dimensions: (1) financial, (2) performance, (3) psychological, (4) safety, (5) social, and (6) opportunity/time loss.

According to Man Hong et al. (2018), the following are the facets of perceived risks:

- Time risk: the risk of losing time, convenience, or effort when buying or replacing items.

- Physical risk: the risk of negative effects on one’s health and vitality.

- Psychological risk: the possibility of losing confidence or self-esteem.

- Social risk: the risk of harm to one’s standing or social group connections.

- Financial risk: the possibility that one’s assets or wealth may be harmed.

- Performance risk: the possibility that a product will not work as intended or will not produce the desired results.

Numerous studies of technology and information system adoption behaviour have also adopted this approach. For instance, in their study on predicting e-service adoption, Featherman and Pavlou (2003) investigated seven facets of perceived risk and concluded that performance-related risks (privacy, financial, and time risk) had a significant inhibitory effect on usage intentions for electronic bill payment systems.

3.4. AI Adoption and Cultural Bias

According to Arrow et al. (2017), the time it takes for an invention to be adopted depends on when people first learn about it. Restuccia and Rogerson (2017) found significant variations in innovation adoption patterns between markets, in addition to significant individual and aggregate variations among consumers. This raises the questions of whether consumer groups behave differently across nations and which factors drive differences in consumer adoption rates of AI. Combining these concepts yields a comprehensive model that accounts for the timing of adoption across diverse groups of individual adopters. According to Tubadji and Nijkamp (2016), if the local culture is not sufficiently receptive to new ideas, the generation of creative investment ideas may be inhibited. Moreover, even if a novel idea slips through the cultural net and comes to fruition, its adoption, replication, and dissemination will still be influenced by how interconnected local social and business networks are, and network interconnectedness is itself culturally dependent (Verdier and Zenou 2017). Beyond attitudes to uncertainty, many examples illustrate the effect of cultural bias on economic choice. Cultural biases are known to affect preferences for types of income distribution, gender roles, the development of financial institutions, crime, competitiveness, economic productivity, work culture, and socio-economic development (Guiso et al. 2006). Culture appears to pervade all economic systems, making it unlikely that any sector, including AI adoption, is spared from this powerful cultural effect.

4. Results and Discussion

4.1. Demographic Analysis

A total of 1389 responses were complete, and these formed the research sample. Of these, 53% were from males and 47% from females. This split was consistent across countries and in the combined data, so differences in gender composition are unlikely to skew the results.

Regarding age (Figure 1), all age groups were fairly represented in the sample. Approximately 26% of respondents were between 18 and 24 years old, another 25% between 25 and 34, and 23% between 35 and 44. The responses and the resulting findings are therefore not concentrated in a particular age group and can be taken as a reasonable reflection of the broader public.

Figure 1.

Age distribution of participants.

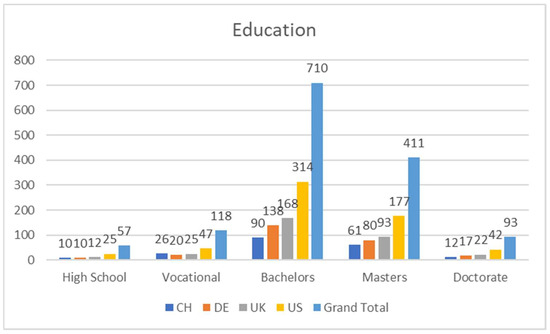

In terms of completed education (Figure 2), most respondents are educated to bachelor’s level. This suggests that respondents have a reasonable understanding of the subject and can give informed opinions rather than responses chosen at random for lack of knowledge. This group also forms a core part of society and includes major users of the Internet and of services related to artificial intelligence.

Figure 2.

Finished education distribution of participants.

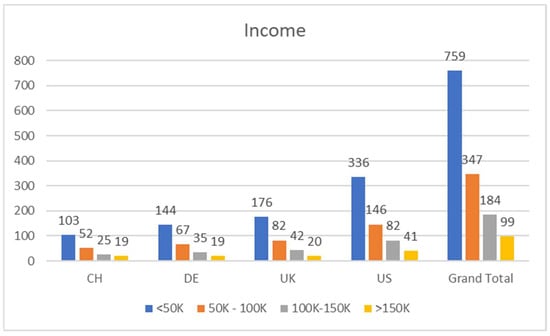

Regarding income (Figure 3), about 75% of respondents earn less than USD 100,000 per annum. This concentration likely reflects the channels used to circulate the questionnaire, which reach these groups most easily. These are also the people who tend to spend time filling in online questionnaires and who are widely affected by the adoption and proliferation of new technology.

Figure 3.

Income distribution of participants.

Table 1 shows the mean values of the demographic responses for each country and for the full sample. It supports the assumption that respondents are distributed almost identically across countries in terms of age, gender, education, and income, so no skewness or spurious results are expected from outliers in any particular category.

Table 1.

Mean values of the demography.

4.2. Regression Model

As a next step, a multiple regression (Table 3) was performed to check the validity of the responses to the perception-related questions. The perception of AI, or the future usage of AI tools, is taken as the dependent variable. The independent variables are the respondents’ basic understanding, comfort level, cultural aspects, and beliefs. The aim is to test whether these factors contribute to the adoption and acceptance of AI.
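To illustrate how regression coefficients of this kind are estimated, the sketch below solves an ordinary least squares (OLS) problem with an intercept from the normal equations, using only the standard library. It is a minimal sketch under stated assumptions: the function `ols`, the two predictors, and the data are hypothetical, and it does not compute the standard errors or p-values reported in Table 3, which a statistics package would provide.

```python
def ols(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares with an intercept.

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination
    with partial pivoting; returns [intercept, b1, b2, ...].
    """
    rows = [[1.0] + [float(v) for v in x] for x in X]  # prepend intercept column
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda i: abs(A[i][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("X'X is singular (collinear predictors)")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical Likert scores per respondent: [comfort_with_ai, ai_is_future]
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5], [2, 4]]
# Noise-free outcome built from known weights, so OLS should recover them exactly
y = [0.5 + 0.6 * c + 0.3 * f for c, f in X]
print([round(b, 6) for b in ols(X, y)])  # [0.5, 0.6, 0.3]
```

Because the outcome is constructed without noise, recovering the known weights is a simple correctness check; real survey data would leave residuals, from which standard errors and p-values are derived.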

The proposed hypotheses are shown in Table 2:

Table 2.

Hypothesis structure.

| Hypothesis No. | Null Hypothesis | Alternate Hypothesis |
|---|---|---|
| 1 | Basic understanding of AI does not relate to the perception that AI is the solution to societal, climatic, and global issues | Basic understanding of AI directly relates to the perception that AI is the solution to societal, climatic, and global issues |
| 2 | Comfort with using AI does not relate to the perception that AI is the solution to societal, climatic, and global issues | Comfort with using AI directly relates to the perception that AI is the solution to societal, climatic, and global issues |
| 3 | Cultural background of people does not relate to the perception that AI is the solution to societal, climatic, and global issues | Cultural background of people directly relates to the perception that AI is the solution to societal, climatic, and global issues |
| 4 | Cultural beliefs of people do not relate to the perception that AI is the solution to societal, climatic, and global issues | Cultural beliefs of people directly relate to the perception that AI is the solution to societal, climatic, and global issues |
| 5 | The belief that AI is the future does not relate to the perception that AI is the solution to societal, climatic, and global issues | The belief that AI is the future directly relates to the perception that AI is the solution to societal, climatic, and global issues |

Table 3.

Regression results.

| Parameter | CH | DE | UK | US | Total |
|---|---|---|---|---|---|
| Multiple R | 0.922 | 0.929 | 0.912 | 0.912 | 0.838 |
| R Square | 0.850 | 0.863 | 0.833 | 0.831 | 0.837 |
| *p-values* | | | | | |
| Intercept | 0.547 | 0.803 | 0.825 | 0.900 | 0.484 |
| Basic Understanding | 0.358 | 0.575 | 0.092 | 0.093 | 0.267 |
| Comfort with AI | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Cultural Background | 0.726 | 0.594 | 0.090 | 0.655 | 0.684 |
| Cultural Belief | 0.091 | 0.412 | 0.233 | 0.428 | 0.108 |
| AI is Future | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

The average score for the questions about AI being the future of mankind is 3.98, which indicates a positive inclination: respondents feel that AI is going to shape the future of life. But is this perception supported by underlying reasons? The regression model tested this, and the coefficients of the underlying factors (basic understanding, cultural background, and cultural belief) were mostly not significant (p > 0.1, i.e., at the 90% confidence level). Interestingly, each country showed a different combination. While respondents in all countries expressed comfort with AI and believed that AI is the future technology to solve their problems and ease their pain points (p = 0.000 for both predictors in every sample), the underlying factors gave a different picture. None of them had coefficients significant at the 95% confidence level, although at 90% confidence the picture was slightly better. Basic understanding of AI was significant only for the UK (p = 0.092) and the US (p = 0.093); for the other countries and the pooled sample, it was not. Cultural background was not significant in any sample except, marginally, the UK (p = 0.090), suggesting that respondents largely do not see cultural background as affecting the adoption and future use of AI. This finding is not in line with the past studies discussed in the literature review section. However, cultural belief was marginally significant for Swiss respondents (p = 0.091), suggesting that, for them, AI must be in line with their cultural beliefs to be trusted in the future. Thus, the regression also yielded mixed results, and more parameters need to be included in the study to explain the future usage of AI. This paves the way for SEM.
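The decision rule applied to Table 3 can be made explicit with a short sketch that compares each reported p-value against the 5% and 10% significance levels (95% and 90% confidence). The values are transcribed from Table 3; the names `P_VALUES` and `significant_samples` are hypothetical.

```python
# p-values transcribed from Table 3 (CH = Switzerland, DE = Germany)
P_VALUES = {
    "Basic Understanding": {"CH": 0.358, "DE": 0.575, "UK": 0.092, "US": 0.093, "Total": 0.267},
    "Comfort with AI": {"CH": 0.000, "DE": 0.000, "UK": 0.000, "US": 0.000, "Total": 0.000},
    "Cultural Background": {"CH": 0.726, "DE": 0.594, "UK": 0.090, "US": 0.655, "Total": 0.684},
    "Cultural Belief": {"CH": 0.091, "DE": 0.412, "UK": 0.233, "US": 0.428, "Total": 0.108},
    "AI is Future": {"CH": 0.000, "DE": 0.000, "UK": 0.000, "US": 0.000, "Total": 0.000},
}


def significant_samples(alpha: float) -> dict[str, list[str]]:
    """For each predictor, list the samples whose p-value is below alpha."""
    return {
        factor: [sample for sample, p in by_sample.items() if p < alpha]
        for factor, by_sample in P_VALUES.items()
    }


for alpha in (0.05, 0.10):  # 95% and 90% confidence levels
    print(f"alpha = {alpha:.2f}: {significant_samples(alpha)}")
```

Reported p-values of 0.000 are rounded, so they are treated as falling below any conventional threshold.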

The final decisions on the null hypotheses are shown in Table 4:

Table 4.

Hypothesis acceptance/rejection.

4.3. Structural Equation Modelling

SEM was performed on the entire data set, as described in the methodology section, using generalized structured component analysis (GSCA) software (version 1.2.1). The estimation settings and convergence details were as follows:

- Analysis Type: basic GSCA/single group.

- Number of bootstrap samples: 100.

- The alternating least squares (ALS) algorithm converged in six iterations (convergence criterion = 0.0001).

H0:

The proposed model is a good fit, and multiple factors directly influence the acceptability and usage of AI in the future.

H1:

The proposed model is not a good fit, and multiple factors do not influence the acceptability and usage of AI in the future.

4.3.1. Model Fit Measures

FIT is the proportion of the total variance of all variables (indicators and components) that a given model specification explains. FIT ranges from 0 to 1, like R squared in linear regression: the more variance the model accounts for, the larger the value. For instance, FIT = 0.50 means that the model explains 50% of the variance in all variables. There is no FIT cutoff that serves as a general indicator of good fit. Therefore, FIT alone is not sufficient to judge the model’s goodness of fit, but combined with residual-based measures such as the SRMR, it can support such a judgement.
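To make the definition concrete, the sketch below computes a FIT-style index as one minus the ratio of the residual sum of squares to the total sum of squares over all standardised variables. This is a simplified stand-in under stated assumptions: in GSCA, the index is computed from the model’s estimated weights and loadings over indicators and components jointly, which the sketch does not reproduce; the function name and data are hypothetical.

```python
def fit_index(observed: list[list[float]], fitted: list[list[float]]) -> float:
    """Proportion of total variation explained across all variables.

    Each inner list is one standardised (mean-zero) variable; FIT-style index
    = 1 - sum of squared residuals / sum of squared observed values.
    """
    ss_res = sum(
        (o - f) ** 2
        for obs, fit in zip(observed, fitted)
        for o, f in zip(obs, fit)
    )
    ss_tot = sum(o ** 2 for obs in observed for o in obs)
    return 1 - ss_res / ss_tot


# Hypothetical example: variable 1 is not explained at all, variable 2 perfectly
observed = [[1, -1, 1, -1], [1, -1, 1, -1]]
fitted = [[0, 0, 0, 0], [1, -1, 1, -1]]
print(fit_index(observed, fitted))  # 0.5
```

The example reproduces the interpretation given above: a model that explains one of two equally variable variables completely and the other not at all accounts for 50% of the total variation.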

The SRMR (standardised root mean square residual) and the GFI (goodness-of-fit index) are both functions of the difference between the sample covariances and the covariances reproduced by the GSCA parameter estimates: the SRMR increases as this difference grows, whereas the GFI decreases. According to recent research (Cho et al. 2020), the following cutoffs for GFI and SRMR in GSCA can serve as a general rule of thumb.

A GFI greater than 0.89 and an SRMR less than 1.4 imply a satisfactory fit when the sample size is 100. Although model fit can be evaluated with either index, it may be preferable to use the SRMR with the aforementioned cutoff rather than the GFI with its proposed cutoff. Additionally, a GFI below 0.85 may still indicate a good fit if the SRMR is less than 0.9.

When the sample size exceeds 100, a GFI greater than 0.93 or an SRMR less than 1.2 denotes a satisfactory fit. In this instance, there is no preference for utilising a combination of the indexes over using each one independently or for using one index over the other. The proposed cutoff value for each index can be used individually to evaluate the model’s fit.
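The sketch below shows how the two residual-based indices can be computed from an observed correlation matrix S and a model-implied matrix Sigma; the resulting values would then be compared with the cutoffs above. It assumes the unweighted least squares (ULS) form of the GFI and uses hypothetical matrices; it is not the GSCA software’s implementation.

```python
import math


def srmr(S: list[list[float]], Sigma: list[list[float]]) -> float:
    """Standardised root mean square residual over the p(p+1)/2 unique elements."""
    p = len(S)
    total = 0.0
    for i in range(p):
        for j in range(i + 1):  # lower triangle including the diagonal
            scale = math.sqrt(S[i][i] * S[j][j])
            total += ((S[i][j] - Sigma[i][j]) / scale) ** 2
    return math.sqrt(total / (p * (p + 1) / 2))


def gfi_uls(S: list[list[float]], Sigma: list[list[float]]) -> float:
    """Unweighted least squares GFI: 1 - sum((S - Sigma)^2) / sum(S^2)."""
    num = sum((s - t) ** 2 for rs, rt in zip(S, Sigma) for s, t in zip(rs, rt))
    den = sum(s ** 2 for rs in S for s in rs)
    return 1 - num / den


# Hypothetical observed (S) and model-implied (Sigma) correlation matrices
S = [[1.0, 0.5, 0.4], [0.5, 1.0, 0.3], [0.4, 0.3, 1.0]]
Sigma = [[1.0, 0.45, 0.4], [0.45, 1.0, 0.35], [0.4, 0.35, 1.0]]
print(round(srmr(S, Sigma), 4), round(gfi_uls(S, Sigma), 4))  # 0.0289 0.9975
```

A perfectly reproduced matrix gives SRMR = 0 and GFI = 1, which is why the two indices move in opposite directions as misfit grows.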

Based on the fit measures in Table 5, the null hypothesis is retained: the model fits well enough to understand and estimate the future acceptability of AI based on people’s perceptions of and attitudes towards the listed factors.

Table 5.

Model fit measures.

4.3.2. Path Coefficients

Table 6 shows the estimates of the path coefficients with their bootstrap standard errors (SE) and 95% confidence intervals (95% CI). A path coefficient is considered significant at the 5% level when its 95% CI does not include zero; by this criterion, all of the path coefficients are significant.

Table 6.

Path coefficients.
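The significance criterion can be illustrated with a percentile bootstrap that, as in the analysis above, uses 100 resamples. The sketch is a simplification under stated assumptions: it bootstraps a single OLS slope as a stand-in for a path coefficient, whereas GSCA re-estimates the whole model in every resample; the data and the function names are hypothetical.

```python
import random


def slope(x: list[float], y: list[float]) -> float:
    """OLS slope of y on x, standing in for a single path coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def bootstrap_ci(x, y, n_boot=100, level=0.95, seed=1):
    """Percentile bootstrap CI: resample respondents with replacement."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        if len(set(xs)) < 2:  # skip degenerate resamples with no variation in x
            continue
        estimates.append(slope(xs, [y[i] for i in idx]))
    estimates.sort()
    k = int(round((1 - level) / 2 * n_boot))  # estimates cut from each tail
    return estimates[k], estimates[n_boot - 1 - k]


def is_significant(lo: float, hi: float) -> bool:
    """Significant at the chosen level if the interval excludes zero."""
    return not (lo <= 0 <= hi)


# Hypothetical scores: trust in AI (x) and usage/acceptance of AI (y)
trust = [1, 2, 2, 3, 3, 4, 4, 5, 5, 5]
usage = [1.2, 1.9, 2.4, 2.8, 3.3, 3.9, 4.1, 4.8, 5.2, 4.9]
lo, hi = bootstrap_ci(trust, usage)
print(f"95% CI: [{lo:.3f}, {hi:.3f}], significant: {is_significant(lo, hi)}")
```

A fixed seed makes the interval reproducible; with only 100 resamples, each tail of the percentile interval rests on just a few estimates, so the bounds are sensitive to the number of resamples.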

4.3.3. Variable Correlations

As seen from the correlations in Table 7, the results are largely in line with the expectations and the theories discussed. Usage/acceptance of AI is positively associated with trust, social/governmental issues, and the current use cases of AI. Cultural factors show the weakest association with the usage of AI, whereas risks pertaining to AI are negatively associated with it. Trust emerges as the most important factor for the adoption and proliferation of AI technology: people need to trust the technology, and the responses indicate high trust in the calibre and prospects of AI. Cultural background and cultural beliefs are the least correlated factors, possibly because people first need a better understanding of AI; once they grasp the impact of AI on their cultural backgrounds and beliefs, these factors may start to play a role.

Table 7.

Component correlations.

4.4. Focus Groups

For each country, two focus groups with ten participants each were created. The focus group sessions were two hours long and guided by the author to ensure consistency over all focus groups. One focus group per study country received only positive arguments for AI development, while the other group received only negative arguments (from Stephen Hawking, James Barrat, and Gary Marcus).

The focus group study was an instructive investigation into public attitudes concerning the role of artificial intelligence (AI) in society. Utilising a meticulously designed four-stage process, the study not only revealed the initially steadfast opinions that participants held but also captured the nuanced ways in which these attitudes could change over the course of a structured discussion.

In the first stage, participants manifested strongly held initial beliefs, either in favour or against AI’s impact on society. Their initial reticence to deviate from these beliefs provided a compelling backdrop for the subsequent phases.

The second stage saw the moderator presenting a curated set of arguments, either advocating for or cautioning against AI. Notably, despite this added layer of complexity and nuance, the participants largely retained their original viewpoints. Nevertheless, a discernible shift towards contemplation was observed, suggesting that while their convictions had not been overturned, they had at least been questioned.

A critical juncture was reached in the third stage. At this point, the moderator introduced perspectives from figures of public repute, either endorsing or warning against AI. Remarkably, female participants were consistently the initial change agents, revealing a potentially gender-specific dynamic that warrants further study. Their willingness to modify their viewpoints seemed to act as a catalyst, enabling a more widespread attitudinal shift among the remainder of the group.

By the fourth and final stage, a commonality had emerged among the participants: an admission of their limited grasp of AI’s complexities. This collective acknowledgment suggests that the dialogues not only swayed viewpoints but also deepened participants’ understanding of AI’s intricate role in society.

The outcomes were remarkably uniform across all eight focus groups: participants exposed to cogent arguments and statements either changed their viewpoints or held to their existing beliefs. Participants who initially had a negative predisposition towards AI shifted towards a more favourable view when presented exclusively with arguments for the merits of AI. Likewise, participants who were ambivalent about AI before the discussion adopted a more positive stance after encountering only supportive arguments. Conversely, those who began the session with a favourable view of AI but were then exposed only to arguments challenging its societal implications shifted towards a more sceptical outlook. Participants who entered the discussion with mixed feelings about AI also shifted markedly, towards scepticism, after being confronted solely with critical arguments. Irrespective of participants’ initial attitudes, each group’s final consensus converged on a common perspective that was strongly shaped by the supportive or critical evidence provided during the discussion.

The third stage of the focus group process also revealed the marked influence of public intellectuals: when the perspectives of globally recognised figures who have spoken out on AI were introduced, a pronounced shift in viewpoints was observed. This finding corroborates the literature on the role of experts in shaping public opinion, a well-cited example being Collins and Evans’ “Rethinking Expertise” (Collins and Evans 2007). Such luminaries act as “boundary figures”, straddling the line between specialised knowledge and public discourse, and can therefore reshape commonly held viewpoints. In the focus groups, including the perspectives of noted experts such as Stephen Hawking markedly accelerated attitudinal change, highlighting the pivotal role these figures can play in shaping public debate and, ultimately, policy (Collins and Evans 2007; Brossard 2013).

The focus group findings align with the well-established theory of ‘agenda-setting’, according to which the mass media and opinion leaders, such as academics or renowned experts, play a pivotal role in moulding public opinion (McCombs and Shaw 1972). The strong effect of introducing comments from such experts is therefore consistent with existing scholarship on the dynamics of public opinion.

The implications of this study are particularly relevant to a scenario in which the hypothetical development of a superintelligence threatens humanity and requires decisive government action. In line with previous works such as Bostrom’s “Superintelligence” (Bostrom 2014), the focus group findings indicate that public opinion is malleable and can be influenced by authoritative figures, lending urgency to the dissemination of factual, well-reasoned information, especially in matters as consequential as the governance of transformative technologies.

Finally, the focus group study illuminates the challenges governments may face in adopting effective policies when public opinions are so susceptible to change. A divided and easily manipulated public makes it difficult, if not impossible, for governments to adopt coherent, long-term strategies, a concern supported by Zollmann’s study on public opinion and policy adoption (Zollmann 2017). This susceptibility further underscores the importance of equipping the general populace with a robust understanding of complex issues, as this could serve as a foundational pillar for democratic governance in an age increasingly influenced by advanced technologies.

To support these findings, the average response for each major factor was also correlated with people’s perception of AI, i.e., whether AI is good for mankind or will lead to the end of mankind (Table 8):

Table 8.

Component correlations and future perceptions of mankind.

The correlation results are in line with expectations. Perceived risk of AI correlates negatively with trust, uniqueness, and usage, as well as with the belief that AI is the future of mankind, and positively with the belief that AI will lead to the end of mankind. In contrast, usage of AI correlates positively with trust and uniqueness and with the belief that AI is the future of mankind. This suggests that trust, usage, uniqueness, and perceived risk together shape whether people regard AI as good or bad for mankind.
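The procedure behind Table 8 can be sketched as follows: each respondent’s item responses for a factor are averaged into a factor score, which is then correlated with the perception item. The item counts, the 1 to 5 Likert scale, and the data are hypothetical, and the use of Pearson’s coefficient is an assumption, since the correlation type is not stated.

```python
import math
from statistics import mean


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical respondents: three perceived-risk items each (1-5 Likert) ...
risk_items = [[5, 4, 5], [4, 4, 3], [2, 3, 2], [1, 2, 1], [3, 3, 4]]
# ... and agreement that "AI is good for mankind" (1-5 Likert)
ai_good_for_mankind = [1, 2, 4, 5, 3]

risk_score = [mean(items) for items in risk_items]  # average response per factor
print(round(pearson(risk_score, ai_good_for_mankind), 3))  # approx. -0.99
```

In this toy data, respondents with higher average risk scores agree less that AI is good for mankind, which yields the negative correlation pattern described above.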

The study results presented offer substantive evidence and a nuanced understanding to answer the research questions (R1, R2, and R3) posed at the beginning of the study.

For R1, concerning the public’s perception of AI in Western countries, the data imply a direct and significant relationship between trust and the adoption or usage of AI technology. The factor of trust substantially influences how the public perceives AI, corroborating earlier works that emphasise the centrality of trust in technology adoption (Lewicki et al. 1998; Mayer et al. 1995).

For R2, which asks what factors influence public attitudes towards AI, the results indicate multiple factors, including trust, risks, and uses, playing vital roles. Importantly, the factor of trust is observed to be the most crucial determinant in shaping public attitudes, consistent with the existing literature on trust as a catalyst for technological adoption (Vance et al. 2008). Risks and uses also have significant but negative path coefficients, suggesting that they inversely impact AI adoption or usage. This finding aligns with the technology acceptance model (TAM), which considers perceived ease of use and perceived usefulness as key variables (Davis 1989).

As for R3, regarding the potential consequences of public attitudes for the adoption and diffusion of AI, the results lend themselves to two primary interpretations. First, high levels of trust can serve as a facilitator for broader AI adoption, a notion that has been discussed in the context of other emergent technologies (Venkatesh and Davis 2000). Second, the negative coefficients for risks and uses indicate that misconceptions or apprehensions about AI can serve as barriers to its adoption, aligning with Rogers’ Diffusion of Innovations theory, which posits perceived risks as impediments to the adoption of new technologies (Rogers 2003).

The model fit indices, such as FIT, SRMR, and GFI, provide statistical validation for the proposed model. They meet the general rules of thumb for an acceptable fit as delineated by Cho et al. (2020). Therefore, the model appears to be robust and reliably explains the variance in the variables, thereby bolstering the study’s findings.

Moreover, the correlations between major factors and the public’s perception of AI’s impact on mankind are particularly illuminating. The results manifest that those who perceive AI as a risk tend to be sceptical about its benefits for mankind, supporting the previous findings about the impact of perceived risks on technological adoption (Venkatesh et al. 2012).

5. Discussion Summary

The salient finding that a mean score of 3.98 exists among respondents when evaluating AI as the future of mankind warrants an in-depth discussion. While the result superficially corresponds with Fountaine et al.’s (2019) observations about the organisational acceptance of AI, the implication transcends mere acceptance and signifies a broader cultural embrace. This delineation is crucial, particularly when situated against Brynjolfsson and McAfee’s (2014) cautionary narrative about the ‘AI expectation-reality gap.’ By critically analysing this juxtaposition, this research not only confirms but also challenges established paradigms about societal optimism surrounding AI. Here, the study adds a layer of complexity by demonstrating that this societal optimism persists despite a lack of statistically significant predictors in the data.

Equally compelling is the discovery of geographical variations in attitudes towards AI, which raises nuanced questions about the role of cultural conditioning in technology adoption. Hofstede’s (2001) seminal work on cultural dimensions is pertinent here. The divergence across countries in our data adds empirical richness to Hofstede’s theory, expanding it from traditional technologies to nascent fields like AI. This effectively opens the door for interdisciplinary inquiries that can explore the interaction between technology and culture through varying analytical frameworks, perhaps even challenging some of Hofstede’s dimensions in the context of rapidly evolving technologies.

Furthermore, the ethical considerations highlighted in this study add an empirical dimension to the normative frameworks proposed by the European Commission (2019) and the OECD (2019). The existing literature stresses the conceptual importance of ethics in AI (European Commission 2019; OECD 2019). The data from this study enrich this dialogue by pinpointing the specific ethical attributes that currently hold societal importance. This could have implications for policymakers and industry stakeholders, as it translates abstract ethical principles into empirically supported priorities.

Lastly, the use of SEM in this study finds validation in the methodological literature, particularly the works of Hair et al. (2016). Our results strengthen their assertion that SEM can effectively untangle complex interrelations in social science research. However, while Hair et al. (2016) primarily address SEM’s robustness as a methodology, our study takes this a step further by questioning the adequacy of existing models in explaining societal attitudes towards complex and continually evolving technologies like AI.

6. Conclusions

Notably, respondents predominantly regard the benefits of artificial intelligence (AI) to processes, such as greater innovation and productivity, as outweighing what AI can offer to human outcomes, including enhanced decision-making capabilities and potential. This inclination aligns with Reckwitz’s (2020) observation that society is gradually moving from a mindset focused on rationalisation and standardisation towards one that values the exceptional and unique. In this transformed landscape, technology, including AI, plays a central role. The increasing prominence of quantifiable metrics, such as rankings and scores, reinforces this shift. These metrics, enabled by the continually expanding data-processing capabilities of the digital era, serve to measure and define the exceptional (Reckwitz 2020). Johannessen (2020) reminds us that adopting these new technologies requires humanity to develop new skill sets. These include, in particular, the capacity for ethical reflection on the complexities such technological advances introduce. This is not merely an academic exercise; it is a societal imperative.

As our data demonstrate, trust and the practical applications of AI are significant factors in shaping public opinion of, and engagement with, the technology. A prevailing sentiment in the survey is that governmental institutions are ineffective in tackling global crises such as climate change and COVID-19. Respondents often attribute this failure to politicians’ vested interests. Consequently, AI is increasingly viewed as a reliable and unbiased tool for high-level decision making, particularly for addressing complex global issues. This account, then, does more than summarise the findings. It situates them within broader scholarly and societal discussions about the evolving role of AI. It also points to the multifaceted implications of our collective trust in, and adoption of, this technology.

The role of governmental institutions in regulating the dissemination of information has come to the forefront, especially at a time when the integrity of information is pivotal. The findings of this research underscore that we are at a critical juncture where digital platforms can easily amplify misinformation, thereby affecting public understanding and fuelling polarisation. This situation calls for enhanced governance to set precedents concerning what information should be shared and in what context. The public discourse is presently characterised by polarisation, fragmenting society into opposing camps susceptible to manipulation and sudden shifts in opinion. The implications of this research extend to scenarios where the unchecked development of superintelligence poses a potential threat to humanity. In such a context, governmental action, often influenced by the will of the voting public, becomes imperative to regulate and govern the trajectory of artificial intelligence to ensure it aligns with the best interests of human society. The fragmentation of public opinion and its susceptibility to manipulation serve as significant barriers to effective governmental action. Such divisions render decision making not only difficult but, in extreme cases, virtually unfeasible, thereby stymying the enactment of policies and regulations essential for societal well-being. The findings from the focus groups indicate that public intellectuals and renowned experts, such as Stephen Hawking, exert a considerable influence on shifts in public opinion, thereby acting as pivotal agents in shaping societal attitudes towards complex issues.

In this complex landscape, the need for leadership with foresight and deep understanding has never been more apparent. The findings raise an important question: can artificial intelligence assume a leadership role? AI might serve as an auxiliary tool for informed decision making. However, the acceptance and adoption of AI technologies among policymakers and key decision-makers remain topics that warrant further exploration.

This research has inherent limitations that must be acknowledged for a full understanding of its findings and implications. Primarily, the respondents were business school students or faculty members. Given their exposure to a contemporary curriculum, their views on AI technology and its applications are likely more developed than those of the general population. Additionally, the survey was conducted in English, which excluded non-English-speaking respondents who might offer culturally specific insights. The sample was also drawn from developed nations with high levels of Internet penetration and general awareness.

As for future research directions, the current study lays the groundwork for expansive inquiries into AI. It is crucial, however, to consider the cultural dimensions, which have not been fully integrated into the current study. Future researchers might benefit from employing a diverse sample encompassing multiple geographies, languages, and educational backgrounds. Such an approach would yield a more nuanced understanding of the topics explored in this study and could potentially illuminate how cultural beliefs and backgrounds influence the perceptions and applications of AI.

Funding

This research received no external funding.

Institutional Review Board Statement

Approval from the Ethics Committee of SBS Swiss Business School has been received.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Consent was given before subjects accessed the anonymous online questionnaire or began the focus groups.

Data Availability Statement

The data can be requested directly from the author.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

Questionnaire of the study.

- (1) What is your age?
  - 18–24
  - 25–34
  - 35–44
  - 45–54
  - 55–64
  - Above 64

- (2) What is your gender?
  - Female
  - Male
  - Other (specify)

- (3) What is your education?
  - High school (freshmen)
  - Trade/vocational/technical
  - Bachelors
  - Masters
  - Doctorate

- (4) What is your annual income level
  - under 50,000 USD
  - 50,000–100,000 USD
  - 100,000–150,000 USD
  - above 150,000 USD

- (5) AI can increase efficiency and accuracy in tasks (1 = I do not agree at all, 6 = I fully agree)

- (6) AI can offer convenience and save time. (1 = I do not agree at all, 6 = I fully agree)

- (7) AI can improve decision-making processes. (1 = I do not agree at all, 6 = I fully agree)

- (8) AI can help solve complex problems. (1 = I do not agree at all, 6 = I fully agree)

- (9) AI can lead to cost savings. (1 = I do not agree at all, 6 = I fully agree)

- (10) AI can create new job opportunities. (1 = I do not agree at all, 6 = I fully agree)

- (11) Other benefits of AI I consider to be important for me:

- (12) AI may lead to job displacement. (1 = I do not agree at all, 6 = I fully agree)

- (13) AI may violate privacy concerns. (1 = I do not agree at all, 6 = I fully agree)

- (14) AI may be used for malicious purposes. (1 = I do not agree at all, 6 = I fully agree)

- (15) AI may cause errors and mistakes. (1 = I do not agree at all, 6 = I fully agree)

- (16) AI may perpetuate bias and discrimination. (1 = I do not agree at all, 6 = I fully agree)

- (17) AI may have unintended consequences. (1 = I do not agree at all, 6 = I fully agree)

- (18) Other risks of AI I consider to be important for me:

- (19) I trust AI to perform tasks accurately. (1 = I do not agree at all, 6 = I fully agree)

- (20) I trust AI to make reliable decisions. (1 = I do not agree at all, 6 = I fully agree)

- (21) I believe AI is predictable. (1 = I do not agree at all, 6 = I fully agree)

- (22) I have confidence in AI’s ability to learn. (1 = I do not agree at all, 6 = I fully agree)

- (23) I trust AI to keep my personal data secure. (1 = I do not agree at all, 6 = I fully agree)

- (24) I trust AI to be used ethically. (1 = I do not agree at all, 6 = I fully agree)

- (25) I trust an AI has less or no personal interests compared to humans. (1 = I do not agree at all, 6 = I fully agree)

- (26) I trust AI because:

- (27) The government does not solve important issues like climate change. (1 = I do not agree at all, 6 = I fully agree)

- (28) AI can help address societal issues such as climate change and social inequality. (1 = I do not agree at all, 6 = I fully agree)

- (29) Governments cannot solve global issues. (1 = I do not agree at all, 6 = I fully agree)

- (30) AI has the potential to solve global issues. (1 = I do not agree at all, 6 = I fully agree)

- (31) Politicians and countries have too many vested interests. (1 = I do not agree at all, 6 = I fully agree)

- (32) AI has the potential to make society more equitable. (1 = I do not agree at all, 6 = I fully agree)

- (33) AI can help create solutions to societal issues. (1 = I do not agree at all, 6 = I fully agree)

- (34) I believe that AI can solve the following issue that humans failed to solve:

- (35) I use AI-powered products and services frequently. (1 = I do not agree at all, 6 = I fully agree)

- (36) I have a basic understanding of what AI is and how it works. (1 = I do not agree at all, 6 = I fully agree)

- (37) I have no experience with AI. (1 = I do not agree at all, 6 = I fully agree)

- (38) I am comfortable using AI-powered products and services. (1 = I do not agree at all, 6 = I fully agree)

- (39) I have encountered issues with AI-powered products and services in the past. (1 = I do not agree at all, 6 = I fully agree)

- (40) My cultural background influences my attitudes towards AI. (1 = I do not agree at all, 6 = I fully agree)

- (41) Different cultures may have different perceptions of AI. (1 = I do not agree at all, 6 = I fully agree)

- (42) AI development should take cultural differences into account. (1 = I do not agree at all, 6 = I fully agree)

- (43) My cultural beliefs impact my level of trust in AI. (1 = I do not agree at all, 6 = I fully agree)

- (44) Cultural diversity can bring unique perspectives to the development and use of AI. (1 = I do not agree at all, 6 = I fully agree)

- (45) I believe AI is the future for human kind (1 = I do not agree at all, 6 = I fully agree)

- (46) I believe AI is the end of human kind. (1 = I do not agree at all, 6 = I fully agree)

- (47) How optimistic or pessimistic are you about the future of AI and its impact on society?

References

- Acemoglu, Daron, and Pascual Restrepo. 2017. Robots and Jobs: Evidence from US Labor Markets. VoxEU. Available online: https://voxeu.org/article/robots-and-jobs-evidence-us (accessed on 28 April 2023).

- Ajzen, Icek. 2005. Attitudes, Personality, and Behavior, 2nd ed. New York: Open University Press.

- Alshehri, Mohammed, Steve Drew, and Rayed Alghamdi. 2012. Analysis of citizens’ acceptance for e-government services: Applying the UTAUT model. Paper presented at the IADIS International Conferences Theory and Practice in Modern Computing and Internet Applications and Research, Lisbon, Portugal, July 17–23.

- Arrow, Kenneth J., Kamran Bilir, and Alan Sorensen. 2017. The impact of information technology on the diffusion of new pharmaceuticals. American Economic Journal: Applied Economics 12: 1–39.

- Asch, Solomon E. 1955. Opinions and social pressure. Scientific American 193: 31–35.

- Borges, Alex F. S., Fernando J. B. Laurindo, M. S. Mauro, R. F. Gonçalves, and C. A. Mattos. 2020. The strategic use of artificial intelligence in the digital era: Systematic literature review and future research directions. International Journal of Information Management 57: 102225.

- Bossman, Jeremy. 2016. Top 9 Ethical Issues in Artificial Intelligence. World Economic Forum. Available online: https://www.weforum.org/agenda/2016/10/top-10-ethical-issues-in-artificial-intelligence/ (accessed on 15 March 2023).

- Bostrom, Nick. 2014. Superintelligence: Paths, Dangers, Strategies. Oxford: Oxford University Press.

- Brandtzaeg, Petter B., and Asbjørn Følstad. 2017. Why people use chatbots. Paper presented at the Internet Science: 4th International Conference, INSCI 2017, Thessaloniki, Greece, November 22–24.

- Brooks, Amanda. 2019. The Benefits of AI: 6 Societal Advantages of Automation. Rasmussen University. Available online: https://www.rasmussen.edu/degrees/technology/blog/benefits-of-ai/ (accessed on 15 March 2023).