1. Introduction

Artificial intelligence (AI) is significantly influencing human society. The shipbuilding and shipping industries are intensifying their efforts to leverage AI across various fields (Ye et al. 2023). As these industries evolve, Maritime Autonomous Surface Ships (MASS) are becoming prominent. These ships utilize Fourth Industrial Revolution technologies, such as AI, the Internet of Things (IoT), and information and communications technology (ICT). In 2023, the 105th session of the Maritime Safety Committee (MSC) of the International Maritime Organization (IMO) established a roadmap for developing a non-mandatory MASS Code among member states and initiated its development. Previously, in 2019, the IMO had introduced the Interim Guidelines for MASS Trials to provide a framework for an international agreement on autonomous ships and established the MASS Joint Working Group to collaborate with participating member states to develop the MASS Code and regulate detailed requirements for MASS functions (Issa et al. 2022).

Fully autonomous ships, corresponding to Level Four autonomous operation, may make decisions without the intervention of a crew member or remote shore operator, based on optimization algorithms developed by shipbuilders. However, considering AI’s autonomous and unpredictable nature, a regulatory framework must be established to ensure ethical and appropriate reliance on AI for the safe management of fully autonomous ships (Negenborn et al. 2023). Rather than relying solely on autonomy to address AI unpredictability, AI algorithms should be ethically designed so that, when they process future maritime data, they incorporate the values embedded in the experience of traditional seafarers operating under agreed-upon regulations.

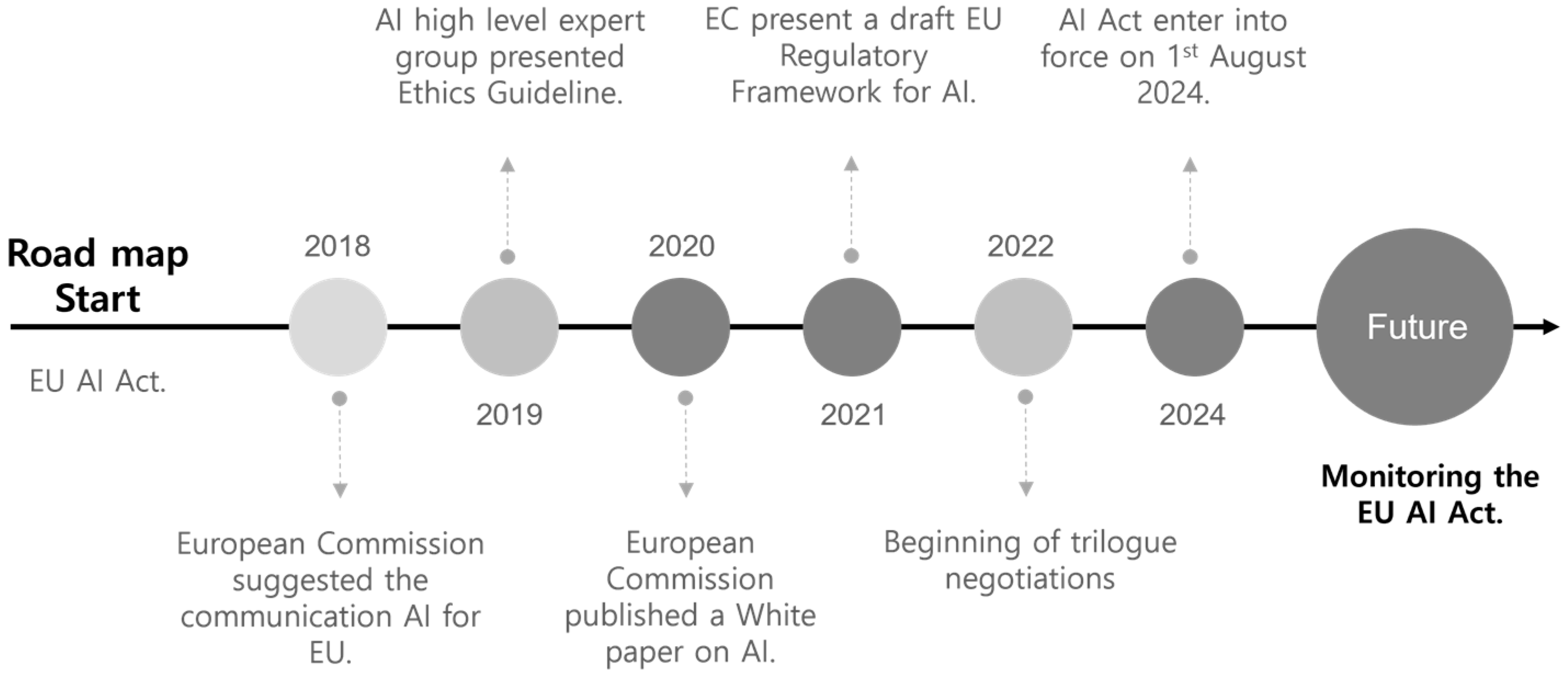

To tackle the issue of regulations lagging behind technological advancements, on 21 April 2021, the European Commission (EC) proposed an AI Act to establish harmonized AI rules and amend specific Union legislation. Subsequently, on 11 December 2023, the European Parliament, in collaboration with the EC and representatives of 27 European Union (EU) member states, endorsed the legislative proposal known as the AI Act (European Council 2023).

The EU AI Act is significant as it provides the EC with a pioneering legal framework to address the risks associated with the rapid advancement of AI technologies. This positions the EU as a global leader in AI regulation. In anticipation of its enforcement, the European AI Office was established within the EC in February 2024 to oversee the Act’s implementation in collaboration with the member states. Its objective is to establish an environment in which AI technologies respect human dignity, rights, and trust while fostering collaboration, innovation, and research among various stakeholders. Furthermore, the office serves as a pivotal conduit for international discourse and collaboration on AI matters, underscoring the necessity for global consensus on AI governance, and aims to establish Europe as a leader in the ethical and sustainable development of AI technologies. The Act is anticipated to regulate several stakeholders within the maritime cluster, particularly as the commercialization of autonomous ships gains momentum. It will also likely have a significant impact on the global discourse surrounding AI regulatory frameworks, including its interaction with related areas such as the EU’s General Data Protection Regulation (GDPR) and platform regulation (European Parliament 2024).

The EU AI Act officially entered into force on 1 August 2024, with full application set for two years later. However, certain provisions follow a different timetable. Prohibitions become effective after six months, governance rules and obligations for general-purpose AI models after 12 months, and rules for AI systems embedded in regulated products after 36 months. To facilitate the transition to this new regulatory framework, the Commission launched the AI Pact, a voluntary initiative encouraging AI developers in Europe and beyond to comply with the Act’s key obligations before the legal deadline. The first call for interest in the AI Pact, issued in November 2023, received responses from over 550 organizations across various sizes, sectors, and countries, demonstrating a significant commitment to proactive compliance (European Commission 2024).
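The staged timetable can be made concrete with a small date calculation. The following is a minimal sketch that only adds the month offsets named in the text to the entry-into-force date; the milestone labels and the helper `add_months` are illustrative assumptions, and the legally binding application dates are those fixed in the Act itself, which may differ slightly from this naive arithmetic.

```python
from datetime import date

# Entry-into-force date as stated in the text.
ENTRY_INTO_FORCE = date(2024, 8, 1)

# Month offsets named in the text; the labels are illustrative.
MILESTONES = {
    "prohibitions": 6,
    "general-purpose AI obligations": 12,
    "full application": 24,
}


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, keeping the day of month.

    Only safe for days 1-28, which is sufficient here because the
    start date falls on the first of the month.
    """
    total = start.month - 1 + months
    return date(start.year + total // 12, total % 12 + 1, start.day)


def timeline(start: date = ENTRY_INTO_FORCE) -> dict[str, date]:
    """Return each milestone label mapped to its offset date."""
    return {label: add_months(start, m) for label, m in MILESTONES.items()}


if __name__ == "__main__":
    for label, when in timeline().items():
        print(f"{label}: {when.isoformat()}")
```

A shipyard planning a compliance schedule could extend `MILESTONES` with its own internal deadlines, keeping the regulatory offsets as the fixed reference points.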

1.1. Study Objectives

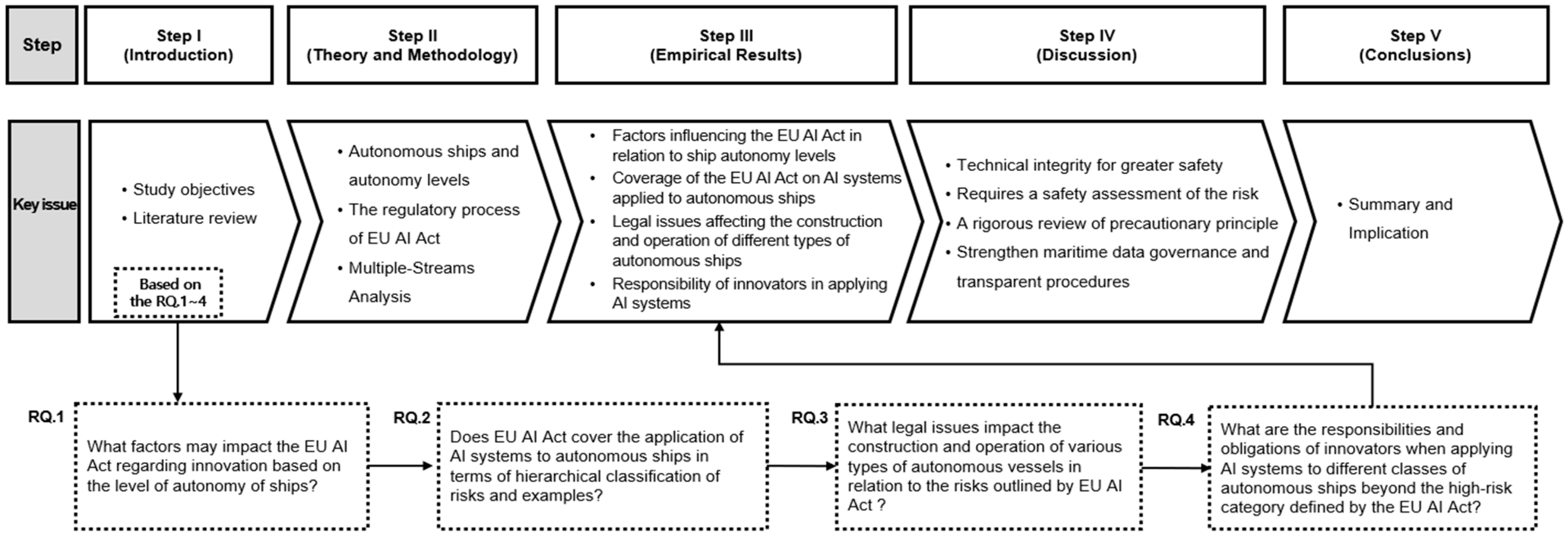

This study aimed to analyze and highlight how the EU AI Act impacts shipyards and shipping companies, focusing on ethical AI use and user safety. It employed a multiple-streams model to visualize how AI-related technological innovations could assist shipbuilders and shipping companies in dealing with unforeseen risks. The study uses interpretive empirical results to address the research questions (Figure 1), identifying and analyzing the multifaceted legal influences of the EU AI Act on AI risks in autonomous ships. Furthermore, this study proposes empirical enhancements to the EU AI legislation for AI-related industries and explores how major shipbuilding and shipping companies can respond to and navigate the new protectionist regulatory framework while retaining technological leadership.

Figure 1 depicts the research process, from the Introduction to the Conclusion, the corresponding key issues, and the emergent research questions that the study seeks to address.

1.2. Literature Review

The literature review highlights the technical and legal challenges associated with AI implementation in autonomous ships.

Thombre et al. (2020) examined the operational demands of autonomous and traditional ships, emphasizing the need to detect various situations during ship operations, monitor sensor system integrity, and develop a cognitive system that integrates sensor data and AI technology.

Chang et al. (2021) devised an approach for assessing the risk levels of primary hazards linked with MASS, quantifying them using the failure mode and effects analysis method, the evidential reasoning approach, and a rule-based Bayesian network. They concluded that collisions with third-party objects pose the highest risk to autonomous ship management, followed by cyberattacks, human error, and equipment failure.

Li and Fung (2019) scrutinized advanced autonomous vessels developed and introduced in Norway, evaluating their impact on maritime traffic, vessel safety, and regional development. Their findings suggested that reducing navigator fatigue and onboard workload in autonomous ships could enhance navigational safety and environmental protection.

Fan et al. (2020) proposed a comprehensive framework for navigational risk assessment, considering factors related to crew, vessels, environment, and technology pertinent to autonomous ships.

Kim et al. (2022) scrutinized legal concerns in anticipation of the commercial operation of autonomous ships, advocating for extending product liability to the software domain to safeguard consumer rights. Their study proposed vital legal guidance for evolving legislation and suggested implementation strategies to enhance maritime safety and sustainable development through mitigation and technological advancement.

Coito (2021) explored the opportunities and risks for autonomous ships across three domains: maritime search and rescue, maritime drug interdiction, and navigational risk control. The study argued that despite challenges, such as crime implications and human error, various technologies associated with autonomous operations can ensure ship safety.

Ringbom (2019) analyzed maritime law issues concerning autonomous ships based on the autonomy level in ship operations, categorizing them by the extent of human intervention required up to Level Four. The study hermeneutically examined how regulations directly related to ship management and navigation, as well as mariners’ judgment and negligence, affect maritime law.

This study examines previous studies to delineate the scope and limitations of the factors influencing the AI application risk concerning the EU AI Act framework.

Thelisson and Verma (2024) proposed a tool for conducting conformity assessments to scrutinize the governance structure outlined in the Act. They noted limited guidance on conducting conformity assessments and monitoring practices, highlighting the need for consensus.

Stuurman and Lachaud (2022) observed that the Act primarily focuses on regulating unacceptable risk systems through mandatory requirements and prohibitions. However, their study predominantly explored the limits of the Act’s impact on high-risk systems and the introduction of a voluntary labeling scheme to bolster protection against medium- and low-risk AI systems.

Neuwirth (2023) concluded that the approach of the EU AI Act strikes a suitable balance between proportionality and reliability. The study emphasized that justifying the approach to determine risk acceptability is crucial and called for detailed guidance from regulators and stakeholder engagement.

This study draws on previous studies to assess the suitability of the method employed. Multiple-stream analysis is a widely utilized theoretical framework in political science, initially developed to comprehend the policymaking process. In the methodology literature, Herweg et al. (2023) examined the empirical application of the multiple-streams framework (MSF), addressing recent publications and debates, specifically regarding innovations since 2000. Their study focused on five structural elements (problems, policies, politics, policy entrepreneurs, and policy instruments) to implement and refine the policy framework, discussing its application in the decision-making and implementation stages.

Knaggård (2015) asserted that the MSF serves as a decision-making tool, using multiple streams to demonstrate projected improvements through a strategic opening.

Fowler (2022) used the MSF to evaluate the relevance of policymaking and policy implementation and the conditional nature of the political, policy, and problem streams. The study concluded that policy and policy implementation are mutually dependent.

Herweg (2016) applied the MSF to the EU’s agenda-setting process, defining functional equivalents of framework elements within the EU context and analyzing shifts in the streams that open policy windows. Furthermore, the study explored the potential applications of the MSF in policy and regional contexts, concluding with a transparent overview of the breadth and diversity of research in this field.

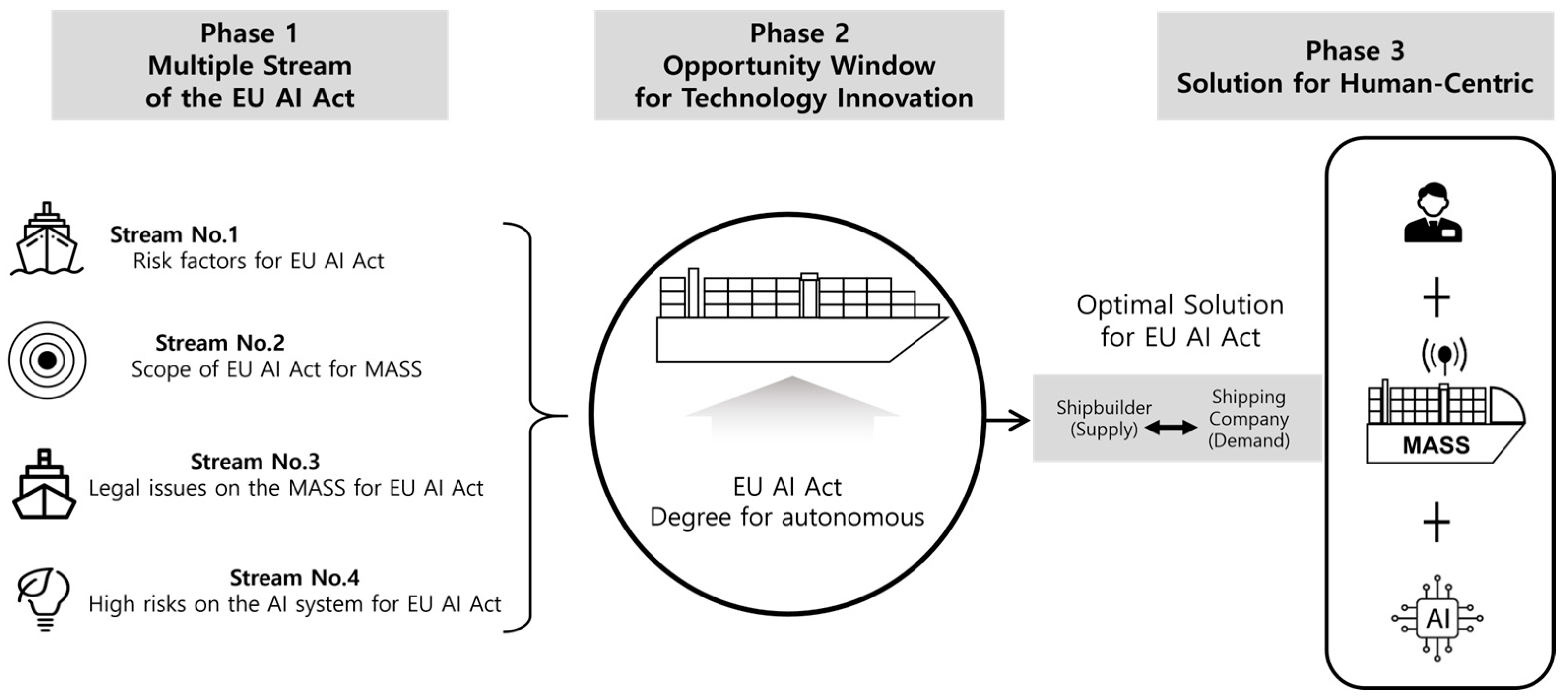

Previous studies on autonomous ships have predominantly concentrated on technical aspects, safety, operational efficiency, and safety-related regulations. However, research on the EU AI Act confirms the evolution of the regulatory framework for AI risks. Hence, this study addresses this legal literature gap concerning how a new legal framework, such as the EU AI Act, will impact the final product generated by the new technology of autonomous ships. This study employs multiple-stream analysis to enhance the understanding of the Act’s decision-making process and its context and analyze the potential impacts of these decisions on shipbuilders and shipping companies serving as innovators in the field of autonomous ships. This study’s findings are suitable for the development of future technologies within legal and ethical frameworks, avoiding the chasm phenomenon and ensuring commercialization.

3. Findings

3.1. Factors Influencing the EU AI Act Concerning Ship Autonomy Levels

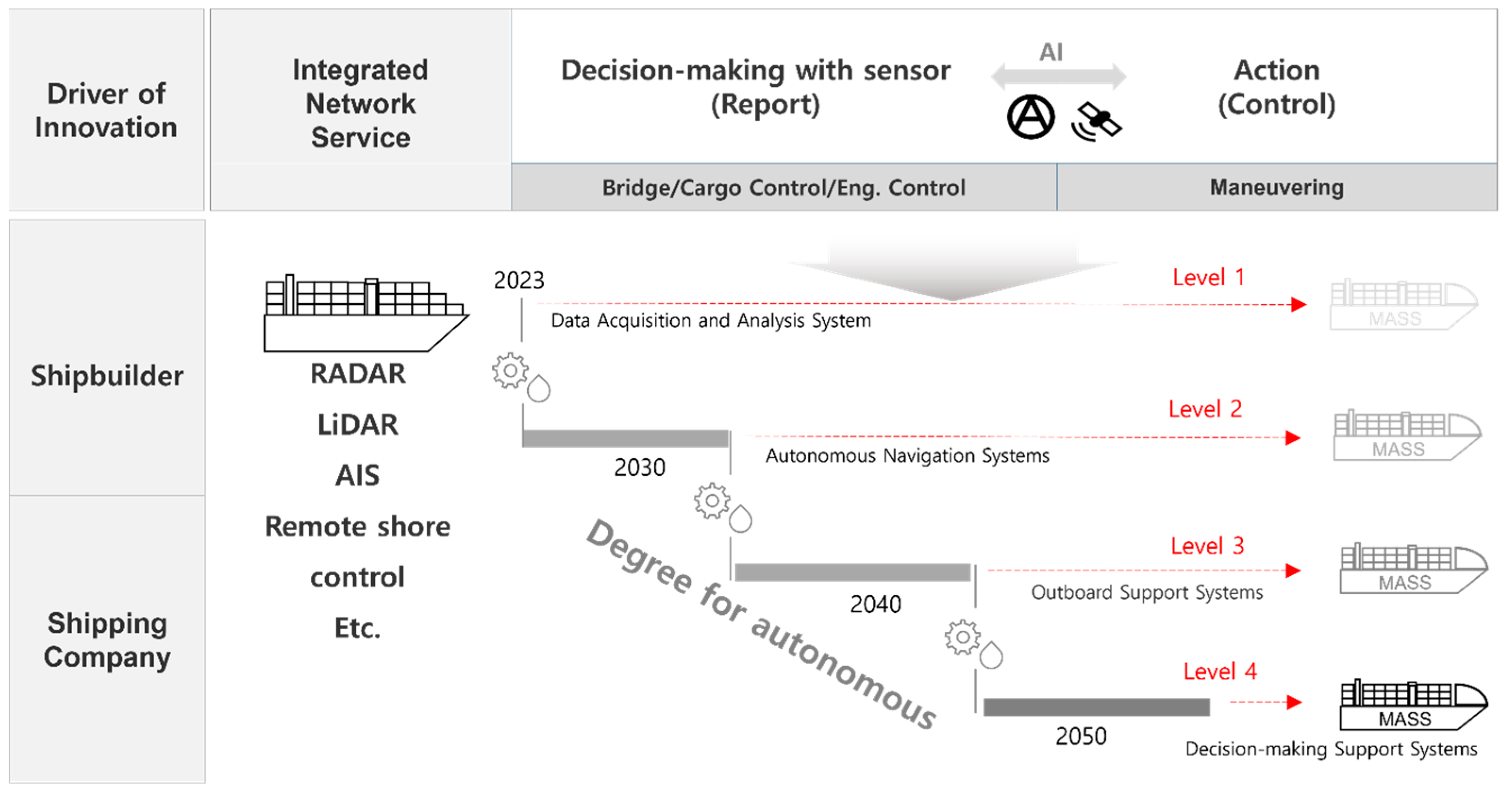

To ensure the safety of ship operations, the core technologies for autonomous ships are categorized into situational awareness, detection technology, judgment technology, action and control technology, and infrastructure technology based on technical completeness. These technologies utilize, as the primary method, vision systems, such as radio detection and ranging (RADAR), light detection and ranging (LiDAR), closed-circuit television, and automatic identification systems for fundamental situational awareness and detection. These are essential for autonomous ships to accurately detect and recognize weather conditions, other ships, and third-party objects. Nevertheless, in the secondary method, where AI judgment is necessary during decision-making, it can be applied to visualize automatic navigation, berthing, and the mooring of autonomous ships based on sensor data, both collected and predicted.

The EU AI Act defines an AI system as software developed using cutting-edge technologies, such as machine learning, logic and knowledge-based approaches, statistical methods, Bayesian estimation, and search and optimization methods. These technologies are employed to achieve specific goals (Kim et al. 2020). The system generates outputs, including forecasts, guidance, and determinations, which impact its operating environment. Therefore, in terms of the Act, an AI system is operational when an autonomous ship is guided by the decisions of an onboard AI to autonomously chart a course that ensures economical and safe navigation according to sea conditions, predicts failures, and takes proactive algorithmically designed actions (Sovrano et al. 2022). For instance, advancements in deep learning-enabled autonomous ships address collision avoidance by analyzing pre-collected and real-time sensor data and executing collision avoidance procedures based on the Convention on the International Regulations for Preventing Collisions at Sea, 1972 (COLREGs). However, the effectiveness of these systems hinges on the “judgment technique” at various levels. A critical concern arises when the onboard decision-making algorithm must respond to situations where the other vessel is not complying with COLREGs or is acting against them. In such cases, the ideal approach would involve data exchange between the two systems, similar to how pilots or navigators communicate via radio or phone when there is uncertainty about the safety of the other ship’s course.

Furthermore, the IMO documents on MASS should be considered as they highlight significant safety concerns that could elevate the risk level to four. The IMO Maritime Safety Committee’s list of instruments to be covered includes safety and maritime security (SOLAS), collision regulations (COLREGs), loading and stability (Load Lines), training of seafarers and fishers (STCW, STCW-F), search and rescue (SAR), tonnage measurement (Tonnage Convention), safe containers (CSC), and special trade passenger ship instruments (SPACE STP, STP). Addressing these issues is crucial to ensure the safety of autonomous ships and their compliance with international regulations, particularly in high-risk scenarios.

SAR operations provide an especially illustrative example of AI integration in MASS. AI-driven systems can notably reduce response times by deploying sophisticated sensors and data analysis techniques for the rapid detection of distress signals. Furthermore, AI can process extensive quantities of environmental data, enabling the prediction and mitigation of potential risks during SAR missions, which enhances the safety and efficacy of the operation. Additionally, the AI automation of hazardous tasks can minimize the physical and mental strain on human personnel, which reduces their exposure to danger (Burgén and Bram 2024). As Figure 4 illustrates, these technologies are applied across all stages of detection, judgment, and action. The crucial aspect is allocating decision-making authority to the onboard crew or a remote operator managing the external support system, as required.

Figure 4 presents the four stages of autonomy in maritime vessels, illustrating the transition from human-operated systems to fully autonomous control. AI plays a crucial role in supporting decision-making and maneuvering throughout. At lower autonomy levels, it draws on technologies such as RADAR, LiDAR, and remote shore control; at Level 4, full independence is achieved, with AI systems executing real-time data processing, situational analysis, and control actions without human intervention.

3.2. Coverage of the EU AI Act on AI Systems Applied to Autonomous Ships: Hierarchical Risk Classification and Key Cases

With the endorsement of the EU AI Act by the European Parliament in December 2023, the 27 EU member states agreed on comprehensive overarching rules governing AI technology. Thus, the EU AI legislation will serve as a critical benchmark for predicting the scope and application of autonomous ships as they progress through research and development, experimental design, commercialization, and operational deployment across different autonomy levels.

The criminal offenses listed in Annex II of the EU AI Act, referred to in Article 5(1), first subparagraph, point (h)(iii), encompass a wide range of serious crimes. These include terrorism, human trafficking, sexual exploitation of children and child pornography, illicit trafficking of narcotic drugs or psychotropic substances, illicit trafficking of weapons, munitions or explosives, murder, grievous bodily injury, illicit trade in human organs or tissues, illicit trafficking in nuclear or radioactive materials, kidnapping, illegal restraint or hostage-taking, crimes within the jurisdiction of the International Criminal Court, unlawful seizure of aircraft or ships, rape, environmental crime, organized or armed robbery, sabotage, and participation in a criminal organization involved in one or more of the aforementioned offenses (EU AI Act 2024).

According to the scope outlined in the EU AI legislation (Section 5.2: Detailed explanation of the specific provisions of the proposal; 5.2.1. Scope and Definitions, Title I), it applies to suppliers who place AI systems on the market or put them into service within the EU, and to users of AI systems established within the EU. It also extends to suppliers and users of AI systems located in third countries if the output of the system is utilized within the EU (European Commission 2021b).

To illustrate, this study considers a scenario in which a South Korean shipbuilder constructs an autonomous vessel equipped with AI control software designed and manufactured by a Norwegian company. If the vessel is subsequently operated by a German shipowner, the EU AI Act gives rise to several legal conflicts and enforcement challenges. Although the South Korean shipbuilder is not based in an EU member state, the installed AI system may fall under the jurisdiction of the EU AI Act, given that the system is utilized within the EU. Consequently, should the AI system be deemed in contravention of the EU AI Act, the South Korean shipbuilder may be held legally liable for non-compliance. Such liability could take the form of financial penalties, compensation claims, or limitations on its capacity to conduct business activities within the EU. If the German shipowner, as the end user of the AI system within the EU, suffers losses due to defects in the AI system or its regulatory non-compliance, the shipowner may seek legal remedies. As an operator within the EU, the German shipowner may be entitled to claim compensation from the manufacturer or software supplier for damages incurred. Litigation would probably ensue in a German court, with the potential for claims to be upheld under EU legislation. These potential conflicts and enforcement issues are particularly significant because of the global reach of the EU AI Act. They illustrate the complexities that can arise when AI systems developed or manufactured outside the EU are deployed within its borders. Effectively addressing these challenges will require careful consideration of how different legal frameworks and compliance procedures can be harmonized (Hacker 2023). It is essential to explore what forms of international legal recourse may be available to address losses or damages occurring within the EU as a result of such transnational deployments.
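The scope rules and the shipbuilder scenario can be expressed as a simple decision rule. The sketch below is a deliberate simplification under stated assumptions: the `Actor` fields, the role labels, the `in_scope` function, and the partial `EU_MEMBERS` set are all hypothetical, the treatment of the shipbuilder as a supplier is an assumption made for illustration, and the actual legal test involves definitions and exceptions not modeled here.

```python
from dataclasses import dataclass

# Illustrative subset of EU member states (assumption: not exhaustive).
EU_MEMBERS = {"DE", "FR", "NL"}


@dataclass(frozen=True)
class Actor:
    name: str
    role: str                       # "supplier" or "user" (hypothetical labels)
    country: str                    # country code of establishment
    places_system_in_eu: bool = False  # places on the market / puts into service in the EU
    output_used_in_eu: bool = False    # the system's output is utilized within the EU


def in_scope(actor: Actor) -> bool:
    """Simplified reading of the three scope limbs described in the text."""
    if actor.role == "supplier" and actor.places_system_in_eu:
        return True   # supplier placing the system on the EU market
    if actor.role == "user" and actor.country in EU_MEMBERS:
        return True   # user established within the EU
    return actor.output_used_in_eu  # third-country actor, output used in the EU


# The scenario from the text: Korean shipbuilder, Norwegian software
# company, German shipowner operating the vessel within the EU.
shipbuilder = Actor("shipbuilder", "supplier", "KR", output_used_in_eu=True)
software_co = Actor("AI software company", "supplier", "NO", output_used_in_eu=True)
shipowner = Actor("shipowner", "user", "DE")

if __name__ == "__main__":
    for a in (shipbuilder, software_co, shipowner):
        print(f"{a.name} ({a.country}): in scope = {in_scope(a)}")
```

Under these assumptions all three parties fall within scope, which mirrors the point made in the scenario: establishment outside the EU does not by itself remove an actor from the Act’s reach once the system’s output is used within the EU.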

Regarding the classification and examples of risks linked to AI system application to autonomous ships, the EU AI Act adopts a risk-based approach and treats the systems according to their risk levels (Figure 5).

AI system risks are classified as unacceptable, high, limited, and minimal, determining whether the products should be prohibited, subject to a premarket conformity assessment, or regulated according to each category. The certification of AI systems under the Act proactively addresses risks (Fraser and Villarino 2023). Rearranging the risks in an inverted pyramid and empirically applying examples of innovation risks that may affect an autonomous ship’s autonomy class revealed the following issues.

First, the study examined the definition of unacceptable risk outlined in the EU AI Act and its relevance to autonomous ships. Unacceptable risk is addressed in Article 5 (Prohibited Artificial Intelligence Practices) of the EU AI Act, which imposes a complete ban on specific AI uses and applications (Li and Yuen 2022). For instance, social scoring could involve ship owners analyzing the behavioral patterns of seafarers during the operation of autonomous ships. Dark-pattern AI employs manipulative or deceptive tactics to influence interactions with seafarers and other ships, potentially leading to inappropriate or hazardous decisions by an autonomous vessel. Additionally, real-time biometric identification systems could be used by an autonomous ship to identify individuals during interactions with crew members or other vessels (Xu et al. 2023). As such, the primary objective of the Act is to ensure that, despite AI’s economic benefits and advantages, public authorities prioritize the fundamental rights of ordinary citizens in EU member states. Moreover, it prohibits ship owners operating autonomous ships from using AI that deploys subliminal techniques beyond seafarers’ awareness to significantly alter their behavior, since this would lead to psychological or physical harm (Veitch and Alsos 2022). For instance, following Article 5 of the EU AI Act, as ships become more technologically advanced, a careful balance must be struck between maintaining safe operations and respecting the privacy and freedom of personal choice of seafarers aboard autonomous vessels at the first and second autonomy levels.

To protect the freedom and rights of data subjects, the Ministry of Oceans and Fisheries and the Coast Guard in South Korea emphasize strict adherence to the Personal Information Protection Act (PIPA) (Personal Information Protection Act 2017) and relevant regulations, ensuring that personal information is processed lawfully and managed securely. As such, the use of facial recognition technologies to identify individuals, including seafarers, through real-time remote biometric identification systems in public areas of ships, such as dining rooms, recreation rooms, and gyms, is strictly prohibited.

The second aspect relates to the definition of high risk in the EU AI legislation and its implications for autonomous ships. High-risk AI systems must undergo third-party assessments; this category includes AI systems integrated into products as safety components. This criterion can be extended to the AI integrated into autonomous ships as final products. These systems are governed by sector-specific legislation, which is amended to incorporate the obligations set out in the proposed regulation (Hohma and Lütge 2023). To comply with sectoral legislation, autonomous ships must adhere to the rules and regulations of classification societies and the applicable laws of their flag state. As briefly mentioned above, even in cases involving independent, non-integrated AI, certain uses may be deemed high risk, such as the utilization of AI-based systems or devices for biometric identification and classification of seafarers aboard, seafarer training and education, and the enforcement of international conventions and national laws during the operation of autonomous ships.

Third, the EU AI Act defines limited risk, which, as applied to autonomous ships, can encompass both high- and low-risk technologies. AI systems in this category are distinguished by the specific transparency issues they raise, which necessitate particular disclosure obligations. There are three types of technology with specific transparency requirements: deep fakes, human interaction AI systems, and AI-based emotion recognition or biometric categorization systems (Gaumond 2021). The Act’s Article 52 on transparency obligations for specific AI systems grants individuals residing in the EU the right to be informed in advance that specific videos are deep fakes, that the entity they are conversing with is a chatbot or voice assistant rather than a human, and that they are undergoing emotion recognition analysis or biometric categorization by an AI system. However, when considering the transparency obligation under the Act, legal regulations and transparency requirements concerning autonomous ships should acknowledge complex situations requiring diverse ethical and social considerations. For instance, a data subject (individual or corporation) who has suffered damage owing to a maritime accident may seek the disclosure of the AI’s functional operation and data logs from the entity operating and managing the autonomous ship. Nonetheless, even in this scenario, shipyards and shipping companies that operate and manage autonomous ships may decline to disclose information to the victims on the grounds of trade secrets. Hence, manufacturers of AI systems onboard autonomous ships should offer adequate recourse for data subjects affected by scientific flaws. AI systems whose flaws would warrant a ban should be made highly transparent (Wróbel et al. 2022).

Fourth, the study delves into the definition of low and minimal risk in the EU AI Act and how it pertains to autonomous ships (European Parliament 2023). These categories encompass all other existing AI systems, recognizing that most AI systems currently in use in the EU do not pose significant hazards (European Commission 2021a). Technologies such as simple spam filters and VR/XR/MR-based AI virtual interactive games fall into this category and are not subject to the new legal requirements. For instance, the autonomous navigation systems of autonomous ships operating at Levels 1–2 utilize various sensors and instruments alongside GPS to ensure efficient and safe operation, leveraging low-level AI technologies. Nevertheless, Article 69 (Codes of Conduct) of the EU AI Act strongly advises shipyards and shipping companies to develop voluntary codes of conduct to govern AI usage. The EC is instituting these soft-law regimes to encourage voluntary adherence to the transparency, human oversight, and robustness requirements that primarily target high-risk AI systems. Thus, they aim to foster innovation in AI-related technology ventures and startups across Europe (Hickman and Petrin 2021).
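The four-tier classification discussed in this section can be summarized as a lookup from risk tier to regulatory treatment. The following is a minimal sketch, assuming the treatments and maritime examples as paraphrased from the text; the names `RiskTier`, `TREATMENT`, `EXAMPLES`, and `treatment_for`, and the assignment of each example to a tier, are illustrative and do not represent a legal determination.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Regulatory treatment per tier, paraphrased from the discussion above.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "premarket conformity assessment by a third party",
    RiskTier.LIMITED: "transparency and disclosure obligations",
    RiskTier.MINIMAL: "no new legal requirements; voluntary codes of conduct",
}

# Illustrative assignment of the maritime examples mentioned in the text.
EXAMPLES = {
    "social scoring of seafarers": RiskTier.UNACCEPTABLE,
    "AI safety component integrated into an autonomous ship": RiskTier.HIGH,
    "onboard chatbot or voice assistant": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def treatment_for(use_case: str) -> str:
    """Return the regulatory treatment for a known example use case."""
    return TREATMENT[EXAMPLES[use_case]]


if __name__ == "__main__":
    for use_case, tier in EXAMPLES.items():
        print(f"{use_case}: {tier.value} -> {treatment_for(use_case)}")
```

Keeping the tier-to-treatment mapping separate from the example-to-tier mapping reflects the structure of the argument: the treatments are fixed by the Act, whereas the tier assigned to a given onboard system is the contestable judgment.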

3.3. Latest Regulatory Requirements of the EU AI Act Concerning Autonomous Ships

The finalization of the EU AI Act as Regulation (EU) 2024/1689 introduces a crucial regulatory framework that will have a significant impact on MASS. The Act targets general-purpose AI (GPAI) models with a risk-based approach that imposes new obligations on developers and users, diverging from previous classifications. GPAI models deployed in autonomous ships, particularly those posing a systemic risk, are required to comply with rigorous regulatory standards, including comprehensive documentation, systemic risk assessments, and robust cybersecurity measures. These regulations are fundamental to ensuring the safety and reliability of autonomous ships and addressing potential legal and ethical challenges that may arise in the maritime industry. The EU AI Act’s comprehensive regulation of GPAI models considers the broad impacts that these technologies can have across various sectors, establishing it as a critical standard that must be met for autonomous ships to enter the international market. Moreover, it seems probable that other countries, including the United States, will respond by introducing similar regulatory frameworks or harmonizing reporting requirements and standards. The EU AI Act establishes a precedent for global regulations, ensuring the safe and ethical deployment of autonomous ships through international collaboration and rigorous compliance standards (European Commission 2024).

3.4. Legal Issues Affecting the Construction and Operation of Different Types of Autonomous Ships Considering the EU AI Act Risks

The EU AI Act rigorously applies the precautionary principle of risk regulation, a high-level concept of technological innovation, to mitigate risks associated with AI technologies, specifically those not adequately marketed or tested. Autonomous ships engaged in international trade face numerous legal challenges regarding international standards and public interest. For instance, in the operation of autonomous ships, an AI-based automatic navigation system might prioritize algorithmic efficiency and economic considerations, leading to unethical decisions, such as ignoring distress signals to choose a shorter route. This could introduce uncertain risks due to the lack of human intervention and ethical oversight in decision-making (Fraser and Villarino 2023).

Introducing AI to autonomous ships necessitates the application of the precautionary principle during the technology development phase. This is because if AI in autonomous ships poses severe and irreversible risks, then precautionary measures should be implemented, even if the causal process is scientifically uncertain. However, in applying this strict precautionary principle to new technologies, such as AI, several limitations may be encountered. For instance, precautionary measures against one uncertain risk may increase another uncertain risk, and the application may be complex when it is based solely on speculation without a scientific basis.

For autonomous ships at autonomy levels 3–4 equipped with high-risk AI systems, practical limitations exist to thoroughly verify the risk implications of the technology, potentially leading to market failure in shipping. The commercial operation of the end product of the technological innovation process of autonomous ships is a hypothetical situation in which irreversible damage may occur.

An approach grounded in the precautionary principle, which delays technological development through extensive regulatory management, may hinder technological progress for autonomous ships and reduce the competitiveness of existing shipbuilders, shipbuilding equipment companies, and shipping companies. Anticipating all unforeseen risks and ensuring that all maritime data collected by AI systems onboard autonomous ships are relevant, representative, error-free, and complete are formal mandates based on a priori assumptions regarding AI systems (Chen et al. 2023).

Despite this regulatory philosophy, shipyards and shipping companies should consistently advocate for science and technology to overcome regulatory barriers through innovations based on actor–network theory (Aka and Labelle 2021). Therefore, this study examines the empirical enhancement of the EU AI Act by systematically applying it, primarily by mitigating risks based on the unacceptable risk category, assessing acceptable risks under minimal risk, and addressing the most detailed regulations on high risk (Table 1).

3.5. Responsibilities of Innovators in Applying AI Systems to Various Classes of Autonomous Ships beyond the High-Risk Category Defined by the EU AI Act

To commercialize autonomous ships successfully, they must be equipped with AI systems that exceed the high-risk level defined by the EU AI Act. The related empirical analysis is presented in Table 2, focusing on five innovation aspects based on the classification of autonomous ships.

4. Discussion

This study revealed how the EU AI Act will influence the regulatory landscape governing AI and data privacy. This is crucial for establishing a technological innovation system grounded in trust in and excellence of AI. The EU is spearheading advancements in the shipbuilding and shipping industry, particularly regarding autonomous ships. With the expected enactment of the EU AI Act in 2024, alongside the 2016 GDPR, the EU is expected to significantly shape the business strategies of technology innovators. This will ensure the EU's exclusive position as a global regulator across the entire value chain of data and algorithms, which act as the lifeblood of the digital economy. The integration of AI technology into end products, such as autonomous ships, is expected to foster collaboration among various innovators, facilitating innovations through a sequential flow of research, design, and development; regulatory framework establishment; pilot implementation; adjustments; and commercialization.

Through four research questions and an empirical analysis matrix, this study identified the following empirical enhancements in the structured hierarchical and interactive feedforward relationships among the actors accountable for each activity.

First, the influence of the EU AI legislation on autonomous ship innovation primarily concerns technological maturity, which improves safety. The fundamental technologies of autonomous ships, delineated by the legislation, include situational awareness and detection, judgment, action, control, and infrastructure technologies supported by AI systems. Specifically, AI systems governed by the Act are expected to impact autonomous ship innovators by algorithmically incorporating AI based on deep learning and COLREGs to elevate their autonomy level. This would enable autonomous structuring of ship collision avoidance and recommended routes, which could be remotely controlled with outboard support systems.

Second, the EU AI Act regulates specific categories of AI systems through a hierarchical risk classification. The inclusion of marine equipment in Annex II mandates a safety assessment. The EU AI legislation extends its jurisdiction to suppliers and users within the EU and to AI systems in third countries. The standardization and accreditation of cross-border safety assessment factors are necessary for the third-party operation of ships. Specifically, in applying the EU AI Act to autonomous ships, ethical and social concerns must be addressed, along with technical limitations stemming from unacceptable and high risks. The Act should prioritize ship safety and the protection of the marine environment from a legal and regulatory standpoint, ensuring an appropriate balance between the fundamental rights of seafarers and transparency.

Third, when applying the EU AI Act to AI systems for autonomous ships, the precautionary principle should guide strict measures to address uncertain risks. However, comprehensive verification for the commercialization of new technologies for autonomous ships may lead to market failure or a lack of participation and flexibility among innovators, causing them to remain at the pilot level and reducing the industry’s competitiveness. Therefore, in updating the MASS Code, the IMO must review the Act’s regulations to strike a balance with technological innovations. Shipbuilders and shipping companies, as innovators, should be encouraged to adopt a voluntary AI code of conduct in cooperation with classification societies.

Fourth, innovators, shipbuilders, and shipping companies should enhance maritime data governance and ensure transparent developmental processes in preparation for the application of high-risk AI systems to autonomous ships. High-risk AI systems must validate the relevance, representativeness, and accuracy of maritime data while maintaining transparency throughout the process. Moreover, stakeholders must identify and address shortcomings in AI systems. Establishing independent AI integrity and cybersecurity procedures is vital for ships’ seaworthiness. Additionally, implementing traceability and oversight standards for AI systems and ensuring transparency and compliance should be the foundation of a post-implementation oversight regime that promotes technological innovation and ethical accountability.

The implications of the EU AI Act on autonomous ship innovation can be inferred by analyzing—via the multiple-streams analysis method—the interactions among innovators regarding AI technologies installed in end products. Innovators should establish a voluntary code of conduct endorsed by third parties, such as classification societies, to pursue legal and technical flexibility and ensure the effectiveness of regulations, considering the continuous improvement of AI technologies through machine learning. Moreover, shipbuilders must enhance their technical flexibility to adapt to rapid changes in AI technology.

To achieve this, a compliant design approach should be adopted beginning in the initial design stage to develop autonomous ships that adhere to the EU AI legislation and IMO regulations. Fundamental design principles should encompass safety, privacy, transparency, and accountability. Cross-industry collaboration and standardization are vital to ensure technological compatibility and maintain competitiveness. Furthermore, certification and testing procedures should be used to verify the safety and efficacy of AI systems for autonomous ships. Shipping companies must devise strategies to mitigate AI-related risks. This involves implementing effective risk management protocols and response plans to minimize potential incidents or losses. Additionally, training and educational initiatives for seafarers and operational management personnel are crucial for enhancing their understanding of the technical aspects and operational protocols.

The EU AI Act is a regulation; unlike a directive, it does not require individual legislation from member states once it has been passed by the European Parliament and implemented. However, the Act may evolve based on feedback from the European Parliament and the member states during the consultation process.

As the EU AI Act’s impact on autonomous ships will extend across the shipbuilding and shipping sectors, future studies should consider its potential implications for global regulation. The EU’s significant role as an import and export market for shipbuilding and marine equipment should be recognized. Additional efforts must focus on preventing malicious use rather than stifling technological innovation through regulation. Moreover, enhanced transparency and accountability are required for practical use.

AI regulation is a crucial ethical factor that must be acknowledged in future maritime transport systems. It should foster the development of human-centered and sustainable AI technology applied to autonomous ships. This can be achieved through smooth communication and cooperation between the shipbuilding and shipping industries and regulatory agencies, which would advance autonomous ship technology and establish a stable regulatory framework.