1. Introduction

The integration of artificial intelligence (AI)-based technologies into higher education systems is no longer an option but an inevitable reality that fundamentally reconfigures the way educational, administrative, and decision-making processes are managed [1,2,3]. While AI brings considerable opportunities in terms of efficiency, personalisation of learning, and expanded access to educational resources, the use of these technologies raises major challenges in terms of algorithmic accountability, data protection, and legal compliance [4]. Although most proposed frameworks pursue common principles such as transparency, fairness, and algorithmic safety, the lack of a global consensus has led to normative fragmentation, with major challenges for the cross-border governance of AI [5]. Balancing innovation with ethical considerations is difficult: strict regulations on AI can stifle innovation, while permissive regulations increase risks (e.g., AI-driven cyberattacks, bias, and deepfake manipulation). International organisations such as the United Nations Educational, Scientific and Cultural Organization (UNESCO) and the Organisation for Economic Co-operation and Development (OECD) are advocating for a global AI ethics framework to guide AI governance [5,6,7].

However, the rapid development of artificial intelligence poses serious challenges for data protection law, as traditional regulatory systems are not always able to keep pace with technological changes. AI models that collect and process data, especially those based on machine learning and deep learning, often work better when they have access to large datasets [8,9,10].

Beyond these issues, other major challenges arise in relation to the fair and transparent use of technology: the lack of clarity in automated decisions, the risk of algorithmic bias, the difficulty of attributing responsibility for errors, and, last but not least, respect for fundamental human rights principles [11,12,13]. Given this complexity, academic institutions face a difficult task: to benefit from the advantages brought by AI while respecting ethical values and legal requirements at both the national and international levels.

Students are no longer simple beneficiaries of digital technologies but become active participants in AI-based educational ecosystems, in which the ability to understand and critically analyse the functioning of these systems becomes important. Thus, AI literacy, understood as a set of skills that allow the evaluation, interpretation, and responsible use of intelligent technologies, is inevitably intertwined with awareness of their legal and ethical implications [14,15,16]. These skills cannot be formed in the absence of an institutional framework that promotes AI governance, that is, the set of policies, principles, and control mechanisms through which AI technologies are regulated and supervised in the academic environment.

From this perspective, knowledge-based organisational strategies (such as knowledge valorisation, a learning-orientated culture, and active information sharing) play a decisive role in the ability of institutions to adopt effective AI governance [6,17]. Although the specialised literature frequently addresses the benefits of AI in education, few studies analyse in an integrated way the link between these strategies and the development of students’ legal and ethical skills [17,18,19]. The mediating role that AI governance can play between organisational culture and the responsible digital preparation of future graduates is even less explored [20,21,22].

Building on these considerations, the present study aims to address the following research questions:

RQ1: What role do students assign to knowledge-orientated strategies in helping them understand and engage with AI in a more informed and responsible way within academic settings?

RQ2: How do students interpret the importance of ethical and legal concerns—such as data protection, decision-making transparency, or fairness—when it comes to regulating the use of AI in education?

RQ3: To what degree do students view existing institutional efforts—rules, policies, and guidance around AI use—as meaningful in helping them build a clearer sense of what is legally and ethically appropriate when working with these technologies?

The study involved 270 students from different university programmes, each responding to a set of questions about how artificial intelligence is managed—or at least perceived—in their academic settings. The aim was not only to gather opinions but to understand how things like institutional culture, the way knowledge circulates, and governance practices shape their awareness of legal and ethical issues around AI. Instead of isolating governance as a technical factor, we looked at how it fits into the broader picture. Governance, in this context, acts as something in between—connected to how institutions value knowledge and how students begin to form their own judgements about AI’s role.

The model we followed paid attention to three core ideas: whether knowledge is treated as something worth engaging with, how that knowledge is shared among peers, and whether a culture exists that actually supports open learning and reflection. When those aspects came together, we noticed that governance mechanisms became more tangible—not just as policy, but as part of daily academic life.

The originality of this research lies in proposing and testing an integrated model that investigates how knowledge-based organisational strategies contribute to the formation of students’ legal and ethical literacy in the field of artificial intelligence through institutional governance mechanisms of AI. The study makes a relevant theoretical contribution by expanding the traditional conceptual framework focused on employability, reorienting the analysis towards critical digital skills such as understanding the legal, ethical and social norms that govern the use of intelligent technologies. At the same time, the research offers a rigorous methodological approach by applying a PLS-SEM model and has a broad applicability, going beyond the boundaries of a specific educational context, which recommends it for the formulation of educational policies in international contexts. Through this perspective, the paper contributes to the consolidation of an emerging literature on responsible AI and supports the development of educational models focused on regulation, ethics, and sustainable innovation.

It is important to note that the participants’ reflections also provide valuable insight into how artificial intelligence is perceived and applied in an educational context by users who are not AI specialists but who are learning to integrate it critically, ethically, and responsibly into their academic work.

The structure of the paper is as follows: the first part presents a review of the relevant literature on AI literacy and educational governance. The following section describes the methodology applied in conducting the research. The results are presented and analysed in the dedicated section, followed by their interpretation in relation to the specialised literature in Section 5. The paper concludes with the authors’ conclusions and the theoretical and practical implications identified.

2. Literature Review and Hypothesis Development

To meet the increasingly diverse challenges of today’s organisational environment, digital transformation has become a strategic objective for many institutions, including higher education institutions, which are increasingly considering the possibility of digitising their educational services and processes [23]. The study by Mhlongo et al. (2023) [24] highlights that the adoption of digital technologies in education has experienced rapid development, creating favourable contexts for the modernisation of the teaching process and supporting forms of learning more adapted to current needs. In the specialised literature, knowledge-based educational strategies are increasingly seen as a transformational factor for higher education institutions, which pursue not only academic performance but also social responsibility in relation to the use of emerging technologies [25,26,27]. This orientation is not reduced to the simple implementation of digital platforms or the automation of administrative functions but implies a paradigm shift in the way institutions capitalise on available cognitive resources.

In a university that cultivates such a vision, knowledge is not treated as a static entity but as a process in continuous reconfiguration, with a strategic role in the internal governance of advanced technologies [1,21]. By intentionally integrating the value of knowledge into educational policies, institutions become better able to formulate clear rules regarding the use of artificial intelligence, to establish procedures for assessing technological risks, and to develop control mechanisms that take into account the specifics of the academic field. Moreover, this form of educational orientation provides the necessary framework for an AI governance that transcends simple technological compliance and that is anchored in accountability, transparency, and institutional legitimacy [3,25]. In this context, AI governance is not limited to reactive policies but becomes a set of proactive tools through which the university can anticipate the social, legal, and ethical impact of the algorithms used in the educational process or in administrative decision-making.

Recent literature argues that this knowledge-based approach provides the premises for intelligent and participatory governance, in which not only technical experts but also teachers, students, and institutional decision-makers are involved in defining the norms regarding the use of AI [5,7,25,28]. Naturally, institutions that base their technology policies on a deep understanding of the dynamics of knowledge become better prepared to manage the complexity of AI regulation.

They can more easily build protocols for checking algorithmic bias, ethical guidelines for the use of digital assistants, or even curricular modules that develop critical thinking towards AI [5]. Thus, the knowledge-based educational orientation involves integrating the value of information, continuous learning, and cognitive infrastructures into institutional strategies, which can directly influence artificial intelligence governance (AIG), from the perspectives of both internal regulation and alignment with international good practices. Based on these previous studies, the following hypothesis is proposed:

Hypothesis 1 (H1). A knowledge-based educational orientation positively influences artificial intelligence governance within higher education institutions.

Higher education institutions that calibrate their educational strategies according to the potential value of information tend to develop forms of organisational culture in which issues such as algorithmic transparency, user rights, or the prevention of bias become topics of continuous reflection [29,30]. It is not just about formulating codes of ethics or adopting abstract principles but about a cultural transformation in which the entire academic community (from management to students) internalises the idea that AI is not neutral and that its application must be orientated towards the common good.

This form of organisational culture develops more easily in environments where educational strategies are designed to stimulate intellectual autonomy, reflective learning, and interactive communication [29,31,32]. Therefore, the knowledge-based educational orientation creates an ecosystem in which AI is introduced not only as a tool for efficiency but as an object of critical reflection and responsible action [18]. Moreover, in such contexts, institutions tend to integrate AI ethically even before formal regulations exist, and institutional culture functions as a value filter that guides technological decisions. Accordingly, the current study proposes the following hypothesis:

Hypothesis 2 (H2). A knowledge-based educational orientation positively influences the development of an ethical AI-orientated institutional culture.

In those institutions where knowledge sharing is constantly encouraged, regardless of hierarchical position (whether between teachers, researchers, students, or administrative staff), a more solid basis is created for the development of a form of AI governance that works not only formally but also in practice [33]. Where information flows freely and dialogue is treated as a resource, there is an increased capacity to assess the impact of new technologies, to anticipate algorithmic risks, and to adapt control mechanisms to the specifics of the institution [34]. In such conditions, AI governance takes on a natural character, not imposed from the outside, but gradually built through internal collaboration.

The literature has suggested that where knowledge is distributed and decisions are participatory, governance tends to be more responsive, transparent, and sustainable [35,36,37]. In this sense, the collaborative dynamics of knowledge are not just a “support” for AI governance but a catalyst for it: through collaboration, the institution gains the capacity to integrate AI regulation in a contextualised, intelligent, and reflective way [38]. The following is the third hypothesis of this study:

Hypothesis 3 (H3). Collaborative knowledge dynamics for AI awareness positively influence artificial intelligence governance within academic institutions.

In an academic environment where values are transmitted through dialogue and learning is reciprocal, the idea of “algorithmic ethics” becomes part of the organisational culture, not just an isolated chapter in regulations [39]. Institutional members begin to show sensitivity to the effects of technologies on equity, on individual rights, or on the way in which AI can sometimes amplify pre-existing inequalities. Thus, ethical culture is formed through continuous exposure to collaborative practices, which emphasise critical reflection, transparency, and shared responsibility.

This collaborative dimension contributes not only to the dissemination of technical knowledge about AI but also to the formation of a value framework in which the use of technologies is informally regulated by group norms [40]. In such a framework, behaviours related to AI (from the selection of tools to the way of interpreting algorithmic results) are influenced by shared expectations, and institutional culture becomes the space in which AI ethics is lived, not just declared.

Interdisciplinary groups, dialogue between academic generations, or transversal projects can reveal ethical tensions that formal governance might miss. From this perspective, an ethical culture orientated towards AI is not a by-product of institutional strategy but an expression of the organisation’s capacity to learn collectively, reflexively, and responsibly [41,42,43,44]. In universities that function as cognitive ecosystems, the collaborative dynamics of knowledge create a favourable backdrop for the emergence of an institutional culture in which ethics in the use of artificial intelligence is not treated as a formal obligation but as a natural, collectively shared concern. Thus, this study proposes the following hypothesis:

Hypothesis 4 (H4). Collaborative knowledge dynamics for AI awareness positively influence the emergence of an ethical AI-orientated institutional culture.

Institutional culture has a subtle but constant influence on how students perceive technologies, learn to question them critically, and form their value frameworks [45,46]. When this culture actively promotes reflection on the ethical and legal consequences of AI, when it encourages difficult questions and provides space for debate, literacy occurs not only on a cognitive level but also on a moral level [47]. Students do not just learn about AI but also learn how to relate to AI from the position of an informed citizen and a future professional aware of their responsibilities.

Moreover, institutional ethical culture functions as an informal regulatory framework: it draws the boundaries of what is acceptable and generates a climate in which issues such as data confidentiality, the explainability of algorithmic decisions, or the risks of discrimination become part of daily conversation [35,39]. In this vein, legal and ethical literacy is not reduced to an optional course or an occasional presentation but is organically integrated into the functioning of the institution.

Recent studies show that students who learn in an environment where ethical values are lived, not just stated, are more receptive to topics related to algorithmic responsibility and show a greater willingness to engage in reflections on the regulation of AI [43,48,49,50]. In addition, these institutions become models of good practice for other organisations, demonstrating that legal and ethical literacy is not a barrier to innovation but a condition for its sustainability. Thus, an institutional culture orientated towards ethical AI creates the necessary framework for legal and ethical literacy to be not an accidental result but an intended outcome of the educational process. Hence, the following hypothesis is proposed:

Hypothesis 5 (H5). An ethical AI-orientated institutional culture has a significant positive effect on students’ AI literacy and legal awareness.

AI governance, understood as the set of policies, procedures, and institutional mechanisms that regulate the use of artificial intelligence, is not just a technical–administrative framework [33]. It is a direct expression of how an institution positions itself towards technological responsibility, informational equity, and the moral obligation to train users capable of understanding and critically evaluating the implications of AI.

Where AI governance is clearly formulated, applicable, and visible in everyday academic life, students become familiar with the norms, rules, and principles that govern the use of algorithmic technologies [35]. They not only interact with AI but are also constantly exposed to a framework that teaches them how to use it responsibly, what questions to ask themselves about automated decisions, and how to recognise the risks hidden in algorithmic processes.

Moreover, institutional AI governance sends an indirect but powerful educational message: that technology is not neutral, and its use requires not only technical competence but also legal awareness and ethical sensitivity [33]. This form of implicit learning is often more sustainable than simple formal training because it is integrated into the institution’s operating rules, interactions between actors, and organisational culture.

Through measures such as ethical evaluation of AI applications used in learning, regulating access to generative models, establishing protocols for the use of AI in research, or promoting decision-making transparency in automated systems, institutions create a concrete framework that facilitates legal and ethical literacy [51,52,53]. Students thus learn not only what is permitted but especially why certain practices are considered acceptable and others are not.

AI governance functions, in this sense, as an invisible educational infrastructure that guides the formation of critical skills. It shapes not only behaviours but also values, providing students with clear guidelines in an ever-changing technological universe [33,47]. Without this framework, legal and ethical literacy risks remaining abstract, lacking real-world applicability. In contrast, where AI governance is active and well articulated, literacy becomes a complete formative experience. As a result, the following hypothesis is proposed:

Hypothesis 6 (H6). Artificial intelligence governance has a significant positive effect on students’ AI literacy and legal awareness.

3. Research Methodology

Perceived benefits, perceived technical effort, institutional support, and openness to emerging digital technologies have consistently influenced professional attitudes, according to previous studies [3,14,32,40]. Continuing in this direction, the present research proposes a coherent yet flexible interpretative framework that allows for a clearer understanding of the mechanisms through which governance systems can support the integration of AI into educational activities, starting with concrete institutional realities and the experiences of the actors involved.

In essence, this study looks at how students encounter artificial intelligence in their academic lives. It considers more than just the use of AI in classrooms or on digital platforms. The focus is on how these technologies are introduced, how people talk about them at the university, and what expectations are formed around their ethical and legal use.

The analysis brings together several interconnected aspects. It looks at whether knowledge is valued as something meaningful, whether collaboration and open dialogue are encouraged, and whether there is a culture that supports critical reflection on technology. When these conditions are present, students appear to engage with AI more thoughtfully. They tend to question what the systems do, why they are used, and what the implications are for fairness, privacy, and accountability.

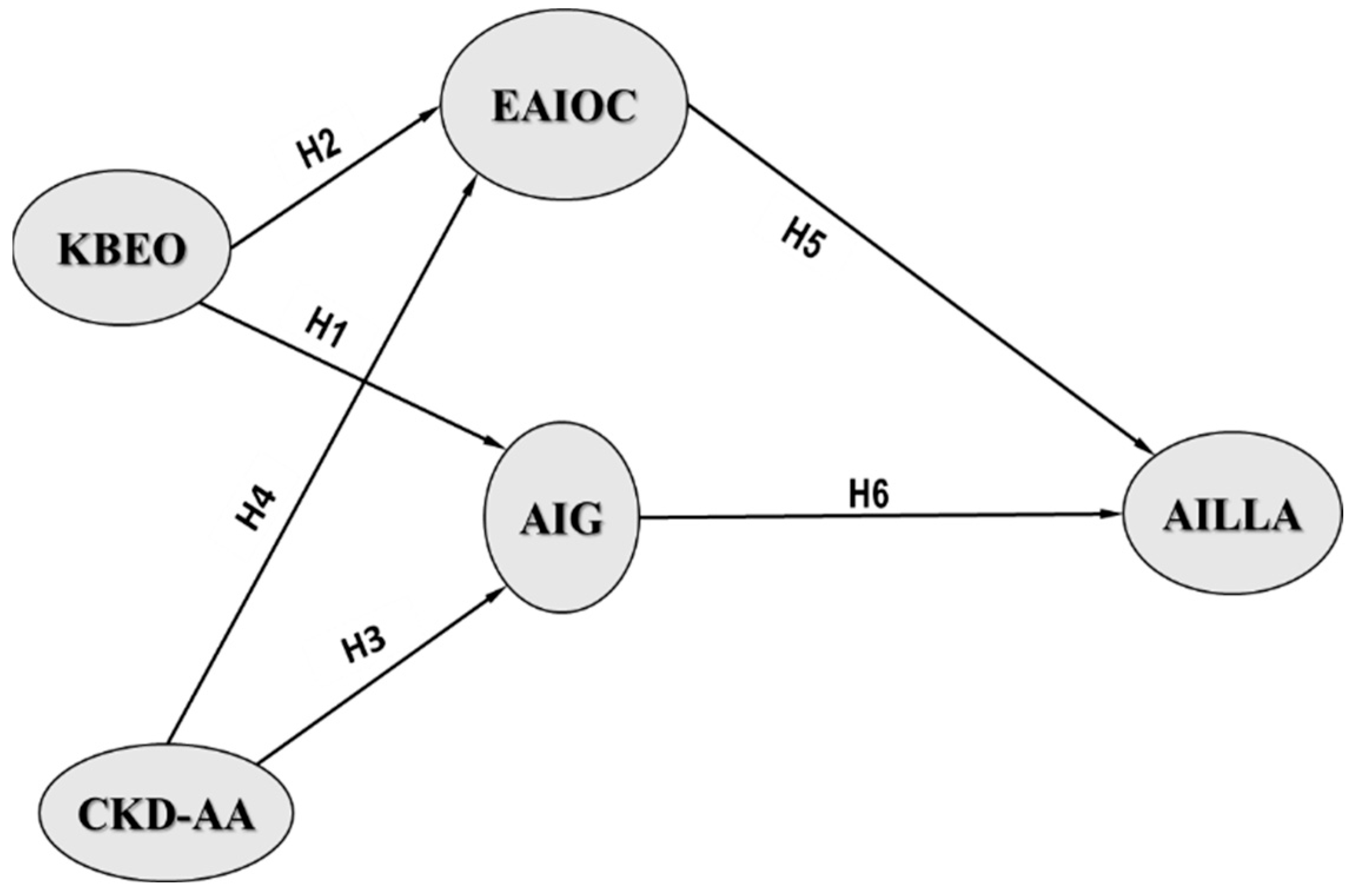

The framework developed for this research, shown in Figure 1, connects institutional knowledge practices with AI governance structures and students’ awareness of legal and ethical concerns. It does not attempt to measure adoption in a narrow sense. Instead, it asks whether the way AI is governed within educational institutions contributes to a more informed and responsible understanding among students.

This study followed a structured approach to develop survey items rooted in established theoretical frameworks, along with insights from recent empirical work. To ensure validity and relevance, the content was carefully refined to reflect the particular challenges and realities of artificial intelligence governance in higher education. As detailed in Table 1, the measurement scales were adapted from models related to technology adoption and institutional effectiveness, incorporating perspectives from current research on ethics, legal awareness, and responsible AI use in academic environments.

The data collection instrument was a standardised questionnaire, applied to a sample of 270 students enrolled in study programmes taught in an internationally spoken language at three higher education institutions. Participants were selected based on the criterion of active participation in digitalised educational contexts. The data collection process took place between November 2024 and March 2025, and the selection of participants was carried out through a stratified random sampling method. This methodological choice aimed not only to obtain a balanced distribution of respondents but also to minimise possible systematic errors that may occur within conventional samples.

The questionnaire was applied through the TEAMS online platform, and participation was completely anonymous. We only included individuals studying in higher education institutions who had demonstrated at least minimal interaction with digital educational platforms or artificial intelligence applications. The actual distribution of the questionnaire was achieved through a combination of the “snowball” method and dissemination through institutional channels (academic discussion lists, internal platforms).

The questionnaire, sent in English, was composed of several sets of items reflecting the constructs of the theoretical model: a knowledge-based educational orientation (KBEO), collaborative knowledge dynamics (CKD-AA), an ethical AI-orientated institutional culture (EAIOC), AI governance (AIG), as well as legal and ethical literacy in AI (AILLA). All items were evaluated on a 5-point Likert scale (1—completely disagree, 5—completely agree).

The instrument previously went through a pre-testing phase, carried out on a group of 20 academic staff and doctoral students specialised in educational technologies and digital educational policies from the three higher education institutions participating in the study. This stage allowed the wording of the items to be refined so that it corresponded to the real experiences of the respondents and ensured terminological coherence between the theoretical dimensions and the practical formulations. The changes resulting from the pre-test also increased the relevance of the instrument, which allowed for more precise and applicable answers in subsequent statistical interpretations.

Out of a total of 320 people initially contacted, 270 completed the questionnaire in full, which corresponds to a response rate of approximately 84.4% (270/320). This rate is considered high for research conducted online, especially in contexts that involve voluntary participation and anonymity. The database thus obtained provides a sufficient empirical basis to support subsequent statistical interpretations and to test the validity of the proposed conceptual model regarding the governance of artificial intelligence in the academic environment. Of the 270 people who completed the questionnaire in full, 62.5% were male. This distribution provides a general picture of the gender structure of the sample, without indicating an unusual imbalance for the context analysed.

Regarding the age of the respondents, most of them (54.28% of the total participants) reported being over 25 years old. A lower percentage, 5%, was recorded among those aged between 41 and 45. From the perspective of academic specialisations, most of the responses came from business administration, which accounted for 65.71% of the participants. This was followed by students from computer science, with 15.43%, and the remaining 18.86% came from programmes orientated towards digital media. Regarding institutional affiliation, the majority of respondents (61.07%) came from Titu Maiorescu University (Romania). Other participants were students from Firat University (Turkey) (28.57%), and a smaller share came from the Transilvania University of Brașov (Romania) (10.36%).

In order to test the proposed conceptual model and answer the formulated research questions, a quantitative approach based on Partial Least Squares Structural Equation Modelling (PLS-SEM) was applied [54]. SmartPLS 4, noted for its adaptability in handling non-normally distributed datasets and its efficiency in studies with modest sample sizes, was used for data analysis and to validate both the measurement and structural models. The validation of the instrument was carried out by analysing internal reliability (Cronbach’s alpha and composite reliability, CR) and convergent validity (average variance extracted, AVE), as well as by testing discriminant validity. The structural model was evaluated from the perspective of path coefficients, R² values, and statistical significance through bootstrapping.
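As a rough illustration of the bootstrapping step mentioned above, the following Python sketch resamples respondents with replacement and builds a percentile confidence interval for a single standardised path. It is not the SmartPLS procedure: it assumes one predictor and one outcome, in which case the standardised coefficient equals the Pearson correlation. The function name `bootstrap_path_ci`, the simulated data, and the parameter values are all hypothetical.

```python
import numpy as np


def bootstrap_path_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for a standardised single-predictor path.

    Simplifying assumption: with one predictor, the standardised
    coefficient equals the Pearson correlation between x and y.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.shape[0]
    estimates = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        estimates[b] = np.corrcoef(x[idx], y[idx])[0, 1]
    lower, upper = np.quantile(estimates, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)


# Simulated composite scores for 270 respondents (hypothetical, not study data).
rng = np.random.default_rng(42)
predictor = rng.normal(size=270)
outcome = 0.6 * predictor + rng.normal(scale=0.8, size=270)
lower, upper = bootstrap_path_ci(predictor, outcome)
print(f"95% bootstrap CI: [{lower:.3f}, {upper:.3f}]")
```

A path is usually read as significant at the chosen level when the interval excludes zero.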

4. Results

To ensure the quality of the measurement and the coherence of the theoretical constructs used, the research instrument was subjected to a statistical validation stage. First, the internal consistency of each scale was assessed using Cronbach’s alpha. The coefficient was calculated for each dimension and ranged from 0.843 (for ethical AI-orientated institutional culture, EAIOC) to 0.971 (for AI governance, AIG), indicating high internal consistency among the items within each construct.
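The internal-consistency check described above can be sketched in a few lines of Python. This is a minimal illustration of the standard Cronbach’s alpha formula, not the SmartPLS output; the function name `cronbach_alpha` and the toy responses are hypothetical.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents-by-items matrix of one construct."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]                              # number of items in the scale
    item_variances = items.var(axis=0, ddof=1)      # variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)  # variance of the summed score
    return float((k / (k - 1)) * (1.0 - item_variances.sum() / total_variance))


# Hypothetical toy data: 5 respondents, 3 items on a 1-5 Likert scale.
toy_responses = np.array([
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 3, 2],
    [1, 2, 1],
])
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items of a scale vary together, which is the sense in which the reported coefficients are read.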

Next, convergent validity was analysed, using the AVE (Average Variance Extracted) scores. All calculated values were above the minimum accepted level of 0.50, confirming that the individual items explain an adequate proportion of the variance in the constructs they represent. The results are summarised in Table 2, which presents the reliability and validity indicators associated with the constructs analysed in this study.
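To make the convergent-validity indicators concrete, the sketch below computes AVE and composite reliability from standardised outer loadings. The loadings are hypothetical placeholders, not the values in Table 2, and the CR formula shown (the common rho_c form) is an assumption, since the text only refers to “CR coefficients”.

```python
import numpy as np


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardised loadings of one construct."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def composite_reliability(loadings):
    """Composite reliability (rho_c form, assumed) from standardised loadings."""
    lam = np.asarray(loadings, dtype=float)
    squared_sum = lam.sum() ** 2
    error_variance = np.sum(1.0 - lam ** 2)  # indicator error variances
    return float(squared_sum / (squared_sum + error_variance))


# Hypothetical loadings for a three-item construct.
hypothetical_loadings = [0.80, 0.85, 0.90]
ave_value = average_variance_extracted(hypothetical_loadings)
cr_value = composite_reliability(hypothetical_loadings)
print(round(ave_value, 3), round(cr_value, 3))
```

With these illustrative loadings the AVE is above the 0.50 level cited in the text.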

In Table 3, the factor loadings on their respective variables are high, confirming that each item contributes substantially to defining its construct. At the same time, the cross-loadings on other constructs are considerably lower, indicating strong discriminant validity. These results support the use of this model to investigate the relationships between variables free from the risk of conceptual overlap across dimensions.

To assess the discriminability of the constructs, the Fornell–Larcker criterion was applied. In all cases, the square root of the AVE value for each construct was greater than its correlation with the other constructs in the model, which supports the existence of a clear separation between the analysed dimensions. The results presented in Table 4 confirm this criterion for all the analysed variables, indicating that each construct is well defined conceptually.
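The comparison behind the Fornell–Larcker criterion can be expressed as a short check. The following sketch uses hypothetical AVE values and a hypothetical construct correlation matrix; neither is taken from Table 4.

```python
import numpy as np


def fornell_larcker_holds(ave_values, construct_corr):
    """True if sqrt(AVE) of every construct exceeds its largest
    absolute correlation with any other construct."""
    sqrt_ave = np.sqrt(np.asarray(ave_values, dtype=float))
    corr = np.abs(np.array(construct_corr, dtype=float))  # copy, input untouched
    np.fill_diagonal(corr, 0.0)                           # ignore self-correlations
    return bool(np.all(sqrt_ave > corr.max(axis=1)))


# Hypothetical values for three constructs.
hypothetical_ave = [0.70, 0.65, 0.72]
hypothetical_corr = [
    [1.00, 0.55, 0.60],
    [0.55, 1.00, 0.48],
    [0.60, 0.48, 1.00],
]
criterion_met = fornell_larcker_holds(hypothetical_ave, hypothetical_corr)
print(criterion_met)
```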

In the final stage, the heterotrait–monotrait (HTMT) ratio criterion was employed for an additional assessment of discriminant validity. This criterion compares the HTMT coefficients against a specified threshold; values above this level suggest a possible lack of discriminant validity. The HTMT ratios of the reflective constructs are shown in Table 5.
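For readers unfamiliar with HTMT, the sketch below shows how the ratio could be computed for a pair of constructs from item-level data. It is an illustration on simulated data, not the SmartPLS computation, and the 0.85 cut-off used in the comments is a commonly cited convention assumed here, since the text does not state which threshold was applied.

```python
import numpy as np


def htmt(items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs (>= 2 items each)."""
    a = np.asarray(items_a, dtype=float)
    b = np.asarray(items_b, dtype=float)
    k_a, k_b = a.shape[1], b.shape[1]
    corr = np.corrcoef(np.hstack([a, b]), rowvar=False)
    # Mean correlation between items of different constructs.
    hetero = corr[:k_a, k_a:].mean()
    # Mean correlation among items of the same construct (upper triangle only).
    mono_a = corr[:k_a, :k_a][np.triu_indices(k_a, k=1)].mean()
    mono_b = corr[k_a:, k_a:][np.triu_indices(k_b, k=1)].mean()
    return float(hetero / np.sqrt(mono_a * mono_b))


# Simulated data (hypothetical): two independent latent factors.
rng = np.random.default_rng(7)
n = 500
factor_1 = rng.normal(size=(n, 1))
factor_2 = rng.normal(size=(n, 1))
items_1 = factor_1 + 0.5 * rng.normal(size=(n, 3))
items_2 = factor_2 + 0.5 * rng.normal(size=(n, 3))
items_1b = factor_1 + 0.5 * rng.normal(size=(n, 3))  # second block on factor 1

distinct = htmt(items_1, items_2)      # expected well below 0.85
overlapping = htmt(items_1, items_1b)  # expected close to 1
print(round(distinct, 3), round(overlapping, 3))
```

The second pair deliberately measures the same latent factor twice, which is the situation the criterion is designed to flag.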

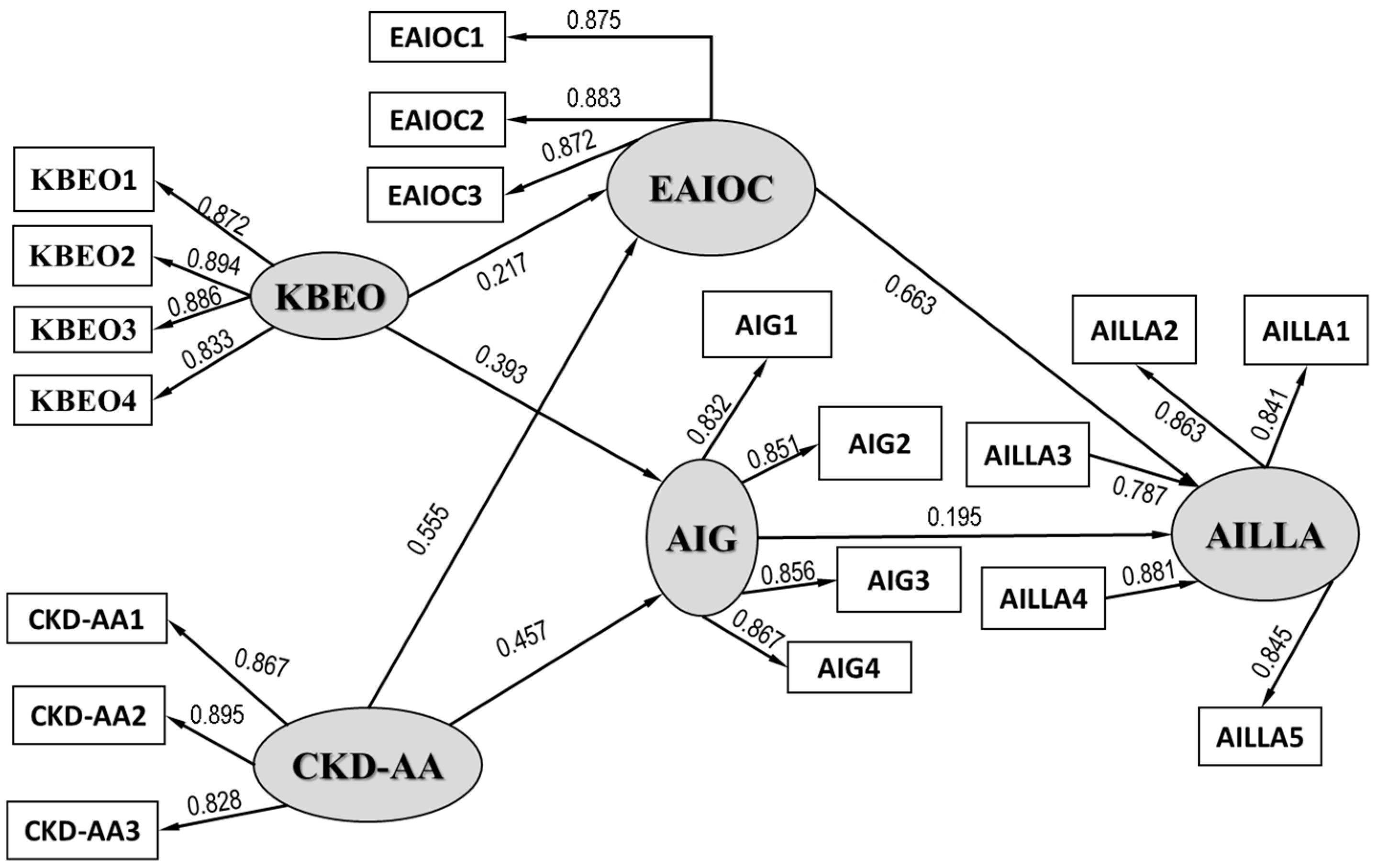

The hypothesis testing of the model confirms that all relationships between variables are statistically significant (p < 0.001), with path coefficients (β) indicating the strength of these associations. The effect size (f²) further confirms that the significant relationships exert a moderate to strong impact on the dependent variable, reinforcing the validity of the model. In addition, the R² value and confidence intervals are employed to validate the structural paths of the conceptual model. The results in Figure 2 show that all hypotheses are supported at a significance level of 0.05.
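The f² effect size referred to above is conventionally obtained by comparing the R² of an endogenous construct with and without a given predictor. The sketch below shows that calculation; the function name `f_squared` is hypothetical, and the "excluded" R² of 0.50 is an invented illustration, not a result of this study.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f-squared: change in R² when one predictor is removed,
    scaled by the unexplained variance of the full model."""
    if not 0.0 <= r2_excluded <= r2_included < 1.0:
        raise ValueError("expected 0 <= r2_excluded <= r2_included < 1")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


# Illustration only: 0.597 is the R² reported for AILLA in this section,
# while 0.50 is a hypothetical R² after dropping one predictor.
example_effect = f_squared(0.597, 0.50)
print(round(example_effect, 3))
```

Under Cohen's widely used guidelines, values around 0.02, 0.15, and 0.35 are read as small, medium, and large effects, respectively.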

The structural relationship analysis confirms the validity of the hypotheses formulated, highlighting significant links between the variables included in the model. A knowledge-based educational orientation (KBEO) has a positive effect on the institutional culture that promotes the ethical use of artificial intelligence (H1: β = 0.217), meaning that programmes aimed at encouraging independent thinking and valuing knowledge help create a clearer and better communicated set of rules for using AI within the institution.

At the same time, a KBEO exerts an additional influence on the policies and governance mechanisms of AI (H2: β = 0.393), supporting the idea that institutions that treat knowledge as a strategic resource are more inclined to develop coherent regulatory structures orientated towards the balanced use of algorithmic technologies in the educational process.

The collaborative dynamics of knowledge (CKD-AA) have, in turn, a direct impact on the two major institutional components: ethical organisational culture (H3: β = 0.555) and AI governance (H4: β = 0.457). These relationships confirm the importance of inter- and transdisciplinary dialogue spaces in shaping a university ecosystem in which AI is introduced not only as a technology but also as a topic of collective reflection, discussed and regulated in a participatory manner.

The results also highlight the fact that the level of legal and ethical literacy in AI (AILLA) is determined to a significant extent by the character of the institutional culture (H5: β = 0.663). When the institutional framework’s values of transparency, equity, and accountability are visible, students develop a deeper understanding of the legal and ethical implications associated with AI technologies.

Complementarily, AI governance has a positive, albeit more moderate, contribution to the development of the same construct (H6: β = 0.195). This relationship suggests that the existence of formal policies and procedures is not sufficient in the absence of an institutional context that supports them, communicates them effectively, and facilitates their integration into everyday academic life.

The coefficient of determination (R2) value for the artificial intelligence governance (AIG) construct is 0.614, indicating that the independent variables—knowledge-based educational orientation (KBEO) and collaborative knowledge dynamics (CKD-AA)—explain approximately 61.4% of the observed variation in the level of AI governance. This value is considered substantial in social research, suggesting a solid predictive capacity of the model on this institutional construct.

Regarding AI literacy and legal awareness (AILLA), the R2 coefficient is 0.597, indicating that almost 60% of the variation in this educational outcome can be attributed to the influence exerted by EAIOC and AIG. It can thus be appreciated that the proposed model has a satisfactory degree of robustness, being able to capture a significant part of the complexity of the processes underlying the formation of legal and ethical awareness in the context of AI.

In addition to these explanatory values, the predictive relevance indicators (Q2), calculated using the blindfolding technique, support the model’s ability to predict the observed values. For AIG, a Q2 value of 0.462 was obtained, and for AILLA the value is 0.498. Both exceed the minimum threshold of zero, which indicates good predictive relevance for the dependent constructs. According to the standards in the methodological literature [54], any positive Q2 value demonstrates that the model provides valuable predictive information, which strengthens confidence in the practical validity of the results. Overall, the results confirm the hypothesised structure of the proposed model and highlight the importance of integrating knowledge, collaboration, and organisational values in the effort to build sustainable institutional mechanisms capable of supporting the formation of a conscious and informed relationship between students and artificial intelligence.
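For reference, the Q2 statistic produced by blindfolding is usually written in the Stone-Geisser form shown below. This is a sketch of the standard definition rather than a formula taken from the study: D denotes the omission distance, SSE the sum of squared prediction errors for the omitted data points, and SSO the sum of squared errors obtained when the omitted points are replaced by the mean.

```latex
Q^2 = 1 - \frac{\sum_{D} \mathrm{SSE}_D}{\sum_{D} \mathrm{SSO}_D}
```

A value above zero means the model predicts the omitted data points better than the mean-replacement baseline, which is the sense in which any positive Q2 is taken to signal predictive relevance.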

5. Discussion

This study sheds light on how strategies grounded in knowledge influence both the way artificial intelligence is governed within universities and how students come to understand its ethical and legal dimensions. A knowledge-based educational orientation (KBEO) has a stronger effect on the mechanisms of governance of artificial intelligence (AIG), with a coefficient of 0.393, than on ethically orientated institutional culture (0.217). This indicates that institutions that promote reflection, contextualised learning, and the exchange of ideas are more likely to develop clear and coherent policies regarding the use of AI in the educational environment.

Where institutions make efforts to support deeper learning (through structured information, open reflection, and meaningful knowledge exchange), governance mechanisms tend to be clearer and more active. These findings echo previous studies [6, 28, 31, 55], which link knowledge-centred leadership with more grounded approaches to adopting new technologies.

A similar relationship was noted between a KBEO and students’ awareness of legal and ethical aspects of AI (AILLA). In learning environments where knowledge is not simply delivered but also reexamined, questioned, and refined, students appear to build a more nuanced understanding of what AI use entails. This supports the idea that knowledge is not just material to be absorbed; it is also a lens through which technology is interpreted. The conclusions of Chan (2023) [56] align with this, pointing to the importance of how institutional leadership positions knowledge within academic life.

The process of sharing knowledge, here framed as collaborative knowledge dynamics (CKD-AA), also stood out, showing relevance for both governance structures and student literacy. CKD-AA positively influences both ethically orientated organisational culture (0.555) and AI governance (0.457). These results indicate that collective learning processes and interdisciplinary dialogue contribute to building more transparent institutions that pay closer attention to the implications of technology in academic life.

In communities where dialogue flows easily and participation is encouraged, there is more space for governance to take shape in ways that feel grounded and applicable. This conclusion is consistent with previous studies [55, 57], which note that internal openness and communication can make institutional policies more accessible and visible to those they affect.

An unexpected result was the weaker role of ethically aligned institutional organisational culture (EAIOC) in shaping either governance or legal–ethical awareness. Thus, a knowledge-based educational orientation (KBEO) exerts a positive influence, of moderate intensity, on institutional culture orientated towards the ethical use of artificial intelligence (EAIOC), with a standardised path coefficient of 0.217. This relationship suggests that where the university environment authentically values knowledge as a strategic resource, there are favourable premises for the emergence of institutional norms that encourage the responsible use of intelligent technologies.

Although the data suggested a positive direction, the impact was not strong enough to be considered consistent. One explanation might lie in contextual variables—such as the role of administrative procedures or the weight of external regulatory demands. It is also possible that students simply do not associate the broader institutional culture with decisions about how AI is implemented or governed.

Further, mediation analysis indicated that a KBEO and CKD-AA contribute indirectly to ethical and legal literacy, with governance acting as the bridge. This reinforces the idea that literacy of this kind does not emerge in isolation. It develops in response to how knowledge is treated, how responsibilities are distributed, and how clearly institutions define the space in which technology is used.
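The indirect contributions described above can be illustrated with the product-of-coefficients approach, using only the standardised path coefficients reported for H1 to H6. This is a minimal sketch: the function names are hypothetical, the products are point estimates only, and they are not the study's own mediation results, whose significance would normally be assessed by bootstrapping.

```python
# Standardised path coefficients as reported in the text (H1-H6).
paths = {
    ("KBEO", "EAIOC"): 0.217,    # H1
    ("KBEO", "AIG"): 0.393,      # H2
    ("CKD-AA", "EAIOC"): 0.555,  # H3
    ("CKD-AA", "AIG"): 0.457,    # H4
    ("EAIOC", "AILLA"): 0.663,   # H5
    ("AIG", "AILLA"): 0.195,     # H6
}


def indirect_effect(source, mediator, target="AILLA"):
    """Point estimate of source -> mediator -> target (product of the two paths)."""
    return paths[(source, mediator)] * paths[(mediator, target)]


def total_indirect(source, target="AILLA", mediators=("EAIOC", "AIG")):
    """Sum of the specific indirect effects over all mediators (hypothetical helper)."""
    return sum(indirect_effect(source, m, target) for m in mediators)


if __name__ == "__main__":
    for source in ("KBEO", "CKD-AA"):
        for mediator in ("EAIOC", "AIG"):
            effect = indirect_effect(source, mediator)
            print(f"{source} -> {mediator} -> AILLA: {effect:.3f}")
        print(f"{source} total indirect effect on AILLA: {total_indirect(source):.3f}")
```

Because the model as described has no direct paths from KBEO or CKD-AA to AILLA, these sums would also be the total effects of the two predictors on AILLA under that assumption.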

Regarding the impact on AI legal and ethical literacy (AILLA), the data reveal two significant trajectories. On the one hand, an organisational culture aligned with ethical values has a considerable effect on students’ awareness of the legal and normative implications of AI (0.663). On the other hand, AI governance contributes to a lesser extent to this type of literacy (0.195), suggesting that the existence of policies is not sufficient in itself but requires cultural support to produce its formative effects.

The direct association between AI governance (AIG) and students’ ethical and legal literacy (AILLA) was clearly visible. When institutions establish understandable rules, ensure transparency, and maintain oversight, students tend to engage with AI differently. Their questions become sharper, and their ability to assess risk or fairness improves. These structures do not only regulate—they also inform and shape how students interpret the presence of intelligent systems in academic life.

The three research questions outlined in this study reflect a growing concern in academic circles: how do students engage with artificial intelligence when it becomes part of their learning experience? As universities continue to evolve through rapid digital transformation, AI is no longer just a background tool—it now appears in how assessments are designed, how content is delivered, and how information is processed. In this shifting context, higher education institutions are called not only to adopt new technologies, but also to create critical spaces where students are encouraged to understand, evaluate, and question the ways in which AI shapes their academic journey.

The first research question aims at understanding how a knowledge-based educational orientation (KBEO) influences the formation of critical thinking about AI. This refers not only to access to information but also to the valorisation of the learning process as a reflexive, collaborative, and contextual act. The question investigates whether, and to what extent, institutions that encourage the exchange of ideas, stimulate reflection, and develop a climate conducive to knowledge help students build a more mature and responsible stance towards artificial intelligence.

The second research question focuses on the normative dimension—more precisely, on how students perceive the legal and ethical implications associated with the use of AI in the academic environment. This question reflects the level of AI legal and ethical literacy (AILLA) and provides indications on how prepared future professionals are to recognise issues such as data protection, algorithmic transparency, decisional fairness, or responsibility in the use of intelligent technologies. In an era where AI can directly influence the assessment of academic performance or educational recommendations, understanding these principles becomes a fundamental part of student training.

The third research question analyses students’ perception of AI governance (AIG) within academic institutions. This dimension involves not only the existence of explicit policies or formal regulations but also how these mechanisms are perceived: are they clear, accessible, and legitimate? Are they accompanied by transparency and consultation? In this sense, AI governance is seen as a complex institutional mechanism that should function as a framework for ethical, legal, and practical guidance. The question explores the extent to which students believe that their institutions have such structures in place and whether these structures truly help them navigate the technological ecosystem of higher education responsibly and in an informed way.

The findings indicate that artificial intelligence is increasingly integrated into everyday academic life. However, many aspects concerning its implementation and management remain unclear or unresolved. While this study has primarily focused on students’ perspectives, incorporating insights from additional stakeholders would provide a more comprehensive understanding.

It would also be useful to examine how these perceptions evolve. Educational environments change quickly, especially with the adoption of new AI tools or shifts in legal frameworks. A longer-term view—following students or institutions across multiple academic cycles—could offer more context on how awareness and attitudes develop over time.

Another direction worth exploring is how ideas like transparency, fairness, or accountability actually show up in everyday academic settings. While these concepts often appear in official documents, it is less clear how students or staff experience them when they use AI tools in real teaching and learning situations.

6. Conclusions

This research sought to capture how AI-based tools are making their way into academic life and how they are perceived by students and faculty. While the technology promises quick fixes and useful automation, what we discovered suggests that the changes brought about by AI are deeper and, at times, difficult to assimilate without a clear framework of understanding and institutional support.

Some students reported that certain functionalities of platforms with intelligent components simplified routine activities. However, this ease was accompanied by uncertainties—especially regarding how the results are generated. Some responses showed that it was not clear who to approach with questions when inconsistencies or misunderstandings arose. The lack of transparency was felt not as a technological barrier, but rather as a communication gap between the system and the user.

On the other hand, teachers have in some cases been in the position of “post-factum” users, that is, without having been consulted or sufficiently prepared before certain platforms were introduced [58]. In an educational climate where there is increasing talk of strategic digitalisation, the absence of moments of shared reflection risks affecting the way in which technology is understood and integrated.

The need for explanation was repeatedly highlighted, not just about how the systems work, but also about why they are needed. Sometimes, the lack of this “why” was what reduced users’ trust. Students said, on several occasions, that they would have needed human validation of automated decisions, not so much to correct errors as to feel that someone also understands the context behind a result.

The institutions involved in the study approached technology with varying degrees of openness. Some created discussion frameworks—through workshops, guides or dedicated sessions. In these cases, the responses showed a greater acceptance of AI tools, but also a more active participation in the process. Where this type of support was lacking, there was a tendency to passively comply or, in some cases, to avoid interacting with the platform altogether.

A recurring idea was related to the speed with which new systems are implemented. While some administrative decisions were motivated by the need for efficiency, in practice, this haste did not leave enough room for questions or adaptation. Some participants highlighted that they would have liked more time to understand, test, or simply discuss the implications of the technology for their work or learning routines.

The results obtained in this research provide universities with clear guidelines for formulating institutional strategies that are better calibrated to new technological realities. The fact that collaborative dynamics of knowledge and knowledge-based educational orientation influence both ethical organisational culture and the level of AI governance suggests that the adoption of advanced digital technologies is not enough; a framework is needed in which knowledge can circulate freely, be discussed, re-evaluated, and linked to clear social and institutional purposes.

Thus, higher education institutions can consider revising curricular mechanisms to integrate themes related to algorithmic accountability, fairness in automated decision-making, and transparency of AI processes. The introduction of transdisciplinary modules, bringing together perspectives from social sciences, ethics, technology, and law, can create a conducive framework for the development of independent and applied thinking around AI.

At the same time, it is necessary to strengthen internal governance mechanisms so that policies related to the use of AI are not only formulated but also internalised by the entire academic community. This requires, among other things, the existence of clear consultation structures and accessible channels for reporting algorithmic malfunctions, as well as the creation of forms of participatory monitoring. Only in this way will AI governance be perceived as an integral part of university life, not as an external intervention imposed by regulations or trends.

The fact that an ethically orientated institutional culture has the strongest impact on legal and ethical literacy in AI indicates a privileged direction of action: investment in organisational values. It is advisable for universities to facilitate contexts of collective reflection on the implications of AI, including through open debates, case simulations, ethical scenarios, and practical deliberation exercises. In this sense, educational experiences that cultivate individual and collective responsibility for the use of technology are essential for the formation of a generation capable of navigating consciously in today’s digital ecology.

In conclusion, it can be said that the success of integrating artificial intelligence in higher education depends on more than the technical performance of the systems. Trust, clarity of decisions, access to explanations, and user involvement in the implementation process are important elements for these technologies to be not only useful but also accepted. Finally, the moderate but significant influence of AI governance on legal and ethical literacy highlights the need for technological regulation not to be treated exclusively as a technical or legal process but as an educational one.