Validation Using Structural Equations of the “Cursa-T” Scale to Measure Research and Digital Competencies in Undergraduate Students

Abstract

1. Introduction

1.1. Research Skills in Undergraduate Students

1.2. The Importance of Technology for Health Research

1.3. Measuring Competencies

2. Methodology

2.1. Sample

2.2. Data Collection Instrument

2.3. Data Collection and Analysis Procedure

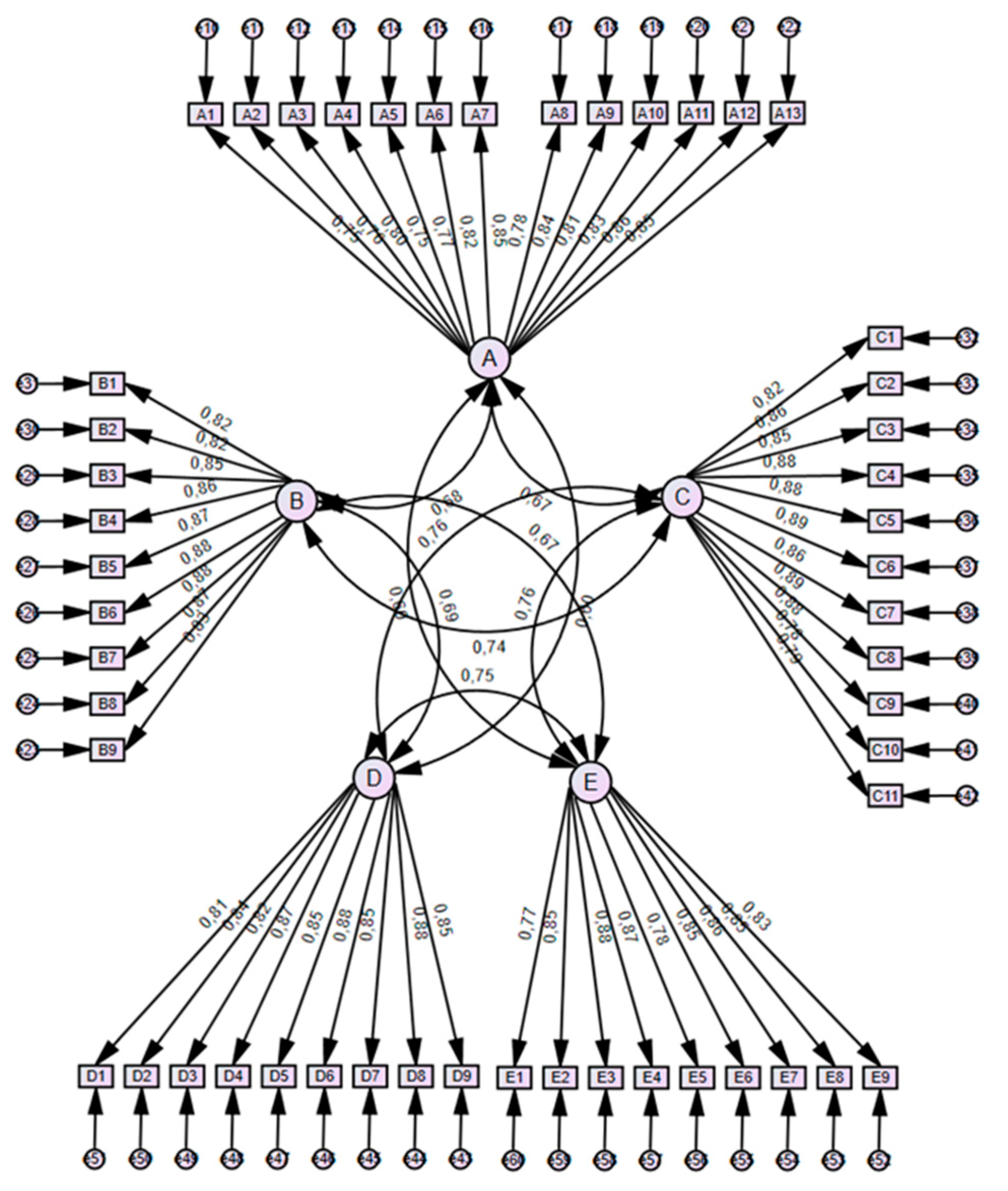

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Dimension | Item | Indicator |
|---|---|---|
| A. Knowledge of the research methodology (Dim. A) | A1 | I can build an innovative and relevant contribution into a research project. |
| | A2 | I construct, formulate or identify variables from the title, research question, hypothesis and research objectives. |
| | A3 | I recognise the types of methodology applied in a study, such as qualitative, quantitative or mixed. |
| | A4 | I identify the types of sampling (probability and non-probability). |
| | A5 | I am aware of the differences between inclusion, exclusion and elimination criteria. |
| | A6 | I use original and relevant ideas from scientific articles when arguing theoretical frameworks or the state of the art. |
| | A7 | I distinguish between the techniques and instruments of data collection in research. |
| | A8 | I compare the results of my research with those of other authors. |
| | A9 | I can reflect on and draw conclusions from research based on the results and the confirmation of the researcher’s hypothesis. |
| | A10 | I can distinguish between exploratory, descriptive, correlational or explanatory phases of research. |
| | A11 | I formulate a research question. |
| | A12 | I define a research problem on the basis of background information. |
| | A13 | I integrate information from primary sources to propose a possible solution to a problem. |
| B. Use of statistics for research (Dim. B) | B1 | I can recognise probability and non-probability samples in the results. |
| | B2 | I interpret the results of statistical tests. |
| | B3 | I can tell from reported results whether parametric or non-parametric statistics were used. |
| | B4 | I can determine the main research variables of a scientific paper. |
| | B5 | I identify measures of central tendency and dispersion in an article. |
| | B6 | I use descriptive statistics for reporting. |
| | B7 | I identify inferential statistics in research. |
| | B8 | I statistically estimate the sample size of my research project. |
| | B9 | I can predict the results of a research project by reviewing the theoretical and methodological framework. |
| C. Scientific report (Dim. C) | C1 | I identify popular science magazines or materials. |
| | C2 | I draft clear and precise objectives with their basic elements. |
| | C3 | I write research reports. |
| | C4 | I identify the parts of a research report. |
| | C5 | I can present the introduction, theoretical framework, results, analysis and discussion of a research topic. |
| | C6 | I know the parts that make up a research project. |
| | C7 | I distinguish between experimental and non-experimental research. |
| | C8 | I develop a full research report. |
| | C9 | I write a proper research report. |
| | C10 | I recognise spelling rules. |
| | C11 | I draft documents applying proper spelling. |
| D. Oral presentation of projects (Dim. D) | D1 | I participate in scientific poster exhibitions. |
| | D2 | I defend research topics in forums. |
| | D3 | I give presentations of free papers. |
| | D4 | I present popular science topics. |
| | D5 | I know of venues for receiving feedback on research topics. |
| | D6 | I present topics following the order of the scientific method. |
| | D7 | I have the oral skills to present a research poster in summary form. |
| | D8 | I use my written skills to present scientific papers. |
| | D9 | I have the skills to write a research poster. |
| E. Technological applications in research (Dim. E) | E1 | I am familiar with programmes for creating references or citations. |
| | E2 | I identify digital tools. |
| | E3 | I recognise the technological tools for developing a research project. |
| | E4 | I am familiar with platforms for searching scientific information. |
| | E5 | I know software for statistical analysis of data. |
| | E6 | I easily find scientific articles on the Internet. |
| | E7 | I am familiar with the process of strategic searching for scientific information. |
| | E8 | I am familiar with and apply standardised search terms. |
| | E9 | I recognise different programmes or digital platforms for developing a research project. |

| Dimension | CR | AVE | MSV |
|---|---|---|---|
| A | 0.960 | 0.650 | 0.469 |
| B | 0.961 | 0.730 | 0.553 |
| C | 0.967 | 0.726 | 0.585 |
| D | 0.960 | 0.726 | 0.584 |
| E | 0.955 | 0.704 | 0.585 |
| Criterion | CR > 0.7 | AVE > 0.5 | MSV < AVE |
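
The cut-offs in this table rest on the usual definitions of composite reliability (CR), average variance extracted (AVE) and maximum shared variance (MSV), which the text does not spell out. As a sketch, assuming standardized loadings $\lambda_{ij}$ (item $i$ on dimension $j$, with $k_j$ items), uncorrelated measurement errors, and writing $\phi_{jm}$ for the correlation between dimensions $j$ and $m$:

$$
\mathrm{CR}_j = \frac{\left(\sum_{i=1}^{k_j}\lambda_{ij}\right)^2}{\left(\sum_{i=1}^{k_j}\lambda_{ij}\right)^2 + \sum_{i=1}^{k_j}\left(1-\lambda_{ij}^2\right)},
\qquad
\mathrm{AVE}_j = \frac{1}{k_j}\sum_{i=1}^{k_j}\lambda_{ij}^2,
\qquad
\mathrm{MSV}_j = \max_{m \neq j}\,\phi_{jm}^2 .
$$

The discriminant-validity condition is then $\mathrm{MSV}_j < \mathrm{AVE}_j$. For Dim. A, for example, it reads $0.469 < 0.650$; the same inequality holds for the other four dimensions in the table.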

| Item | Dim. A | Dim. B | Dim. C | Dim. D | Dim. E |
|---|---|---|---|---|---|
| A1 | 0.772 | | | | |
| A2 | 0.779 | | | | |
| A3 | 0.812 | | | | |
| A4 | 0.770 | | | | |
| A5 | 0.795 | | | | |
| A6 | 0.833 | | | | |
| A7 | 0.844 | | | | |
| A8 | 0.791 | | | | |
| A9 | 0.835 | | | | |
| A10 | 0.819 | | | | |
| A11 | 0.823 | | | | |
| A12 | 0.848 | | | | |
| A13 | 0.842 | | | | |
| B1 | | 0.830 | | | |
| B2 | | 0.824 | | | |
| B3 | | 0.849 | | | |
| B4 | | 0.853 | | | |
| B5 | | 0.867 | | | |
| B6 | | 0.880 | | | |
| B7 | | 0.886 | | | |
| B8 | | 0.870 | | | |
| B9 | | 0.833 | | | |
| C1 | | | 0.817 | | |
| C2 | | | 0.862 | | |
| C3 | | | 0.842 | | |
| C4 | | | 0.875 | | |
| C5 | | | 0.869 | | |
| C6 | | | 0.877 | | |
| C7 | | | 0.855 | | |
| C8 | | | 0.875 | | |
| C9 | | | 0.863 | | |
| C10 | | | 0.807 | | |
| C11 | | | 0.816 | | |
| D1 | | | | 0.836 | |
| D2 | | | | 0.872 | |
| D3 | | | | 0.844 | |
| D4 | | | | 0.881 | |
| D5 | | | | 0.872 | |
| D6 | | | | 0.879 | |
| D7 | | | | 0.862 | |
| D8 | | | | 0.876 | |
| D9 | | | | 0.855 | |
| E1 | | | | | 0.812 |
| E2 | | | | | 0.845 |
| E3 | | | | | 0.876 |
| E4 | | | | | 0.863 |
| E5 | | | | | 0.809 |
| E6 | | | | | 0.843 |
| E7 | | | | | 0.867 |
| E8 | | | | | 0.856 |
| E9 | | | | | 0.847 |
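
Assuming the values in this table are standardized factor loadings (the table carries no caption saying so), CR and AVE for each dimension can be recomputed from them with the usual formulas. The sketch below does that; the name `LOADINGS` and the helper functions are illustrative only and are not part of the study's analysis.

```python
from collections.abc import Sequence

# Values copied from the preceding item table, grouped by dimension.
# Assumption: they are standardized factor loadings.
LOADINGS: dict[str, list[float]] = {
    "A": [0.772, 0.779, 0.812, 0.770, 0.795, 0.833, 0.844,
          0.791, 0.835, 0.819, 0.823, 0.848, 0.842],
    "B": [0.830, 0.824, 0.849, 0.853, 0.867, 0.880, 0.886, 0.870, 0.833],
    "C": [0.817, 0.862, 0.842, 0.875, 0.869, 0.877, 0.855,
          0.875, 0.863, 0.807, 0.816],
    "D": [0.836, 0.872, 0.844, 0.881, 0.872, 0.879, 0.862, 0.876, 0.855],
    "E": [0.812, 0.845, 0.876, 0.863, 0.809, 0.843, 0.867, 0.856, 0.847],
}


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    taking each error variance as 1 - loading^2 (standardized solution)."""
    total = sum(loadings)
    error_variance = sum(1.0 - lam * lam for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def summarize(loadings_by_dim: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Return {dimension: (CR, AVE)} for every dimension."""
    return {
        dim: (composite_reliability(ls), average_variance_extracted(ls))
        for dim, ls in loadings_by_dim.items()
    }


if __name__ == "__main__":
    for dim, (cr, ave) in summarize(LOADINGS).items():
        print(f"Dim. {dim}: CR = {cr:.3f} (> 0.7: {cr > 0.7}), "
              f"AVE = {ave:.3f} (> 0.5: {ave > 0.5})")
```

For Dim. A this gives CR ≈ 0.962 and AVE ≈ 0.661, close to, though not identical with, the reported 0.960 and 0.650; the source does not state exactly how its reported values were computed.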

| Item | Dim. A | Dim. B | Dim. C | Dim. D | Dim. E |
|---|---|---|---|---|---|
| A1 | 0.681 | | | | |
| A2 | 0.720 | | | | |
| A3 | 0.733 | | | | |
| A4 | 0.667 | | | | |
| A5 | 0.698 | | | | |
| A6 | 0.744 | | | | |
| A7 | 0.772 | | | | |
| A8 | 0.723 | | | | |
| A9 | 0.768 | | | | |
| A10 | 0.721 | | | | |
| A11 | 0.788 | | | | |
| A12 | 0.790 | | | | |
| A13 | 0.788 | | | | |
| B1 | | 0.706 | | | |
| B2 | | 0.644 | | | |
| B3 | | 0.773 | | | |
| B4 | | 0.673 | | | |
| B5 | | 0.736 | | | |
| B6 | | 0.768 | | | |
| B7 | | 0.783 | | | |
| B8 | | 0.714 | | | |
| B9 | | 0.640 | | | |
| C1 | | | 0.679 | | |
| C2 | | | 0.706 | | |
| C3 | | | 0.668 | | |
| C4 | | | 0.704 | | |
| C5 | | | 0.728 | | |
| C6 | | | 0.724 | | |
| C7 | | | 0.675 | | |
| C8 | | | 0.688 | | |
| C9 | | | 0.678 | | |
| C10 | | | 0.698 | | |
| C11 | | | 0.661 | | |
| D1 | | | | 0.680 | |
| D2 | | | | 0.749 | |
| D3 | | | | 0.712 | |
| D4 | | | | 0.762 | |
| D5 | | | | 0.748 | |
| D6 | | | | 0.730 | |
| D7 | | | | 0.731 | |
| D8 | | | | 0.700 | |
| D9 | | | | 0.688 | |
| E1 | | | | | 0.684 |
| E2 | | | | | 0.753 |
| E3 | | | | | 0.772 |
| E4 | | | | | 0.754 |
| E5 | | | | | 0.662 |
| E6 | | | | | 0.691 |
| E7 | | | | | 0.710 |
| E8 | | | | | 0.673 |
| E9 | | | | | 0.680 |

| Index | Result | Criterion |
|---|---|---|
| CMIN | 414.535 | CMIN < 500 |
| GFI | 0.929 | GFI > 0.7 |
| PGFI | 0.885 | PGFI > 0.7 |
| NFI | 0.911 | NFI > 0.7 |
| PNFI | 0.867 | PNFI > 0.7 |
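
The table pairs each global fit index with the cut-off applied to it. The sketch below restates those comparisons and, assuming the standard parsimony adjustments PGFI = GFI · d/d_b and PNFI = NFI · d/d_b (d: degrees of freedom of the fitted model; d_b: degrees of freedom of the corresponding baseline model, which differs between the two indices), shows the ratio d/d_b implied by each pair. The source reports neither d nor d_b, and `FIT`, `check_fit` and `parsimony_ratio` are hypothetical names.

```python
import operator

# (reported value, comparison, cut-off) for each index, as stated in the table.
FIT = {
    "CMIN": (414.535, operator.lt, 500.0),
    "GFI": (0.929, operator.gt, 0.7),
    "PGFI": (0.885, operator.gt, 0.7),
    "NFI": (0.911, operator.gt, 0.7),
    "PNFI": (0.867, operator.gt, 0.7),
}


def check_fit(fit):
    """Return {index: True/False}, applying each index's stated comparison."""
    return {name: compare(value, cutoff)
            for name, (value, compare, cutoff) in fit.items()}


def parsimony_ratio(adjusted: float, unadjusted: float) -> float:
    """Ratio d / d_b implied by an adjusted index, assuming
    adjusted = unadjusted * d / d_b."""
    return adjusted / unadjusted


if __name__ == "__main__":
    for name, passed in check_fit(FIT).items():
        print(f"{name}: {'meets' if passed else 'fails'} the stated criterion")
    print(f"PGFI / GFI = {parsimony_ratio(FIT['PGFI'][0], FIT['GFI'][0]):.3f}")
    print(f"PNFI / NFI = {parsimony_ratio(FIT['PNFI'][0], FIT['NFI'][0]):.3f}")
```

Both ratios come out at about 0.95, so under this assumption the parsimony adjustment lowers GFI and NFI by roughly 5%.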

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Duarte Ayala, R.E.; Palacios-Rodríguez, A.; Guzmán-Cedillo, Y.I.; Segura, L.R. Validation Using Structural Equations of the “Cursa-T” Scale to Measure Research and Digital Competencies in Undergraduate Students. Societies 2024, 14, 22. https://doi.org/10.3390/soc14020022

Duarte Ayala RE, Palacios-Rodríguez A, Guzmán-Cedillo YI, Segura LR. Validation Using Structural Equations of the “Cursa-T” Scale to Measure Research and Digital Competencies in Undergraduate Students. Societies. 2024; 14(2):22. https://doi.org/10.3390/soc14020022

Chicago/Turabian Style: Duarte Ayala, Rocío Elizabeth, Antonio Palacios-Rodríguez, Yunuen Ixchel Guzmán-Cedillo, and Leticia Rodríguez Segura. 2024. "Validation Using Structural Equations of the “Cursa-T” Scale to Measure Research and Digital Competencies in Undergraduate Students." Societies 14, no. 2: 22. https://doi.org/10.3390/soc14020022

APA Style: Duarte Ayala, R. E., Palacios-Rodríguez, A., Guzmán-Cedillo, Y. I., & Segura, L. R. (2024). Validation Using Structural Equations of the “Cursa-T” Scale to Measure Research and Digital Competencies in Undergraduate Students. Societies, 14(2), 22. https://doi.org/10.3390/soc14020022