Toward a Comprehensive Model of Fake News: A New Approach to Examine the Creation and Sharing of False Information

Abstract

1. Introduction

Fake News

2. Fake News Model Overview

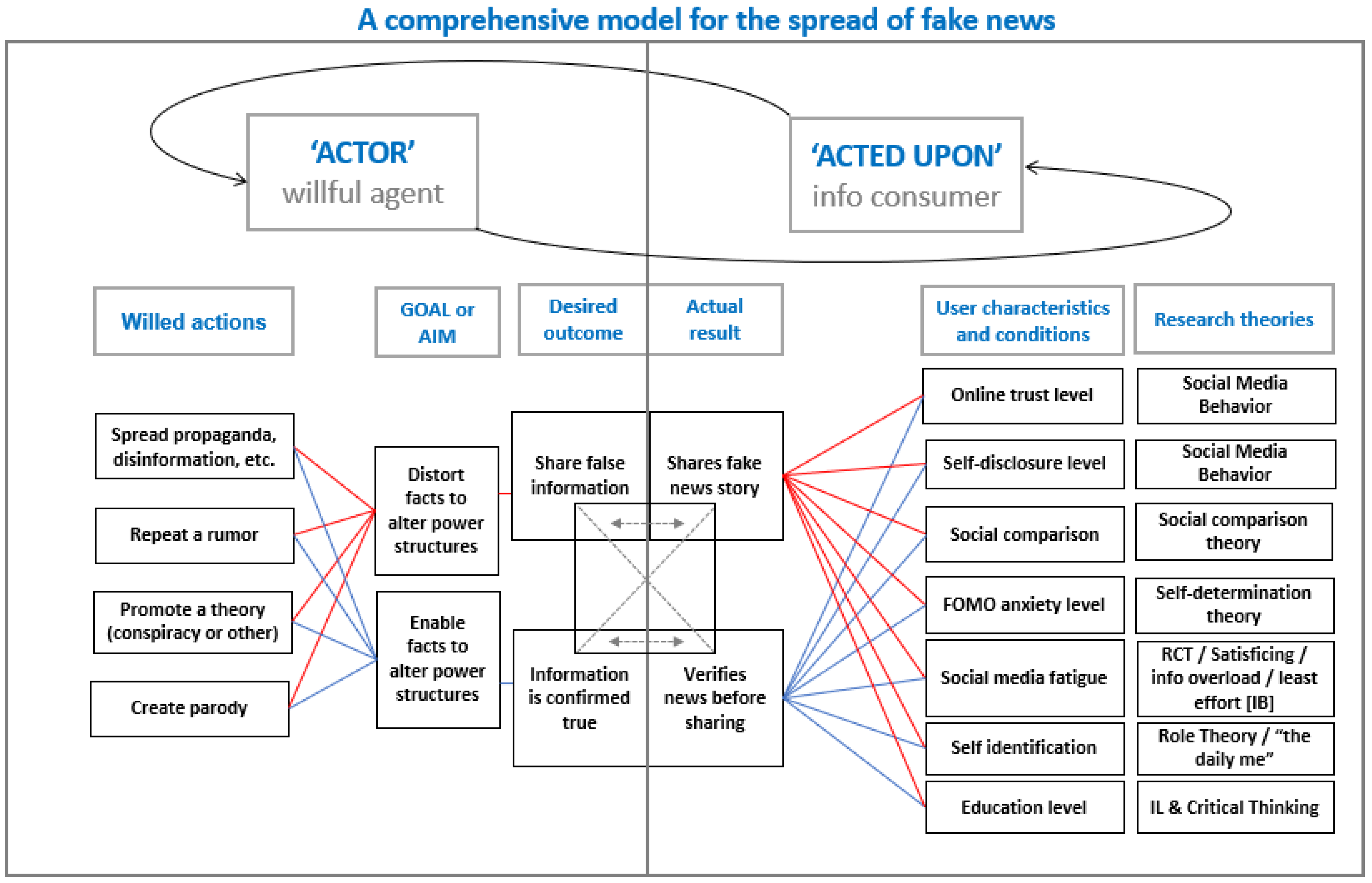

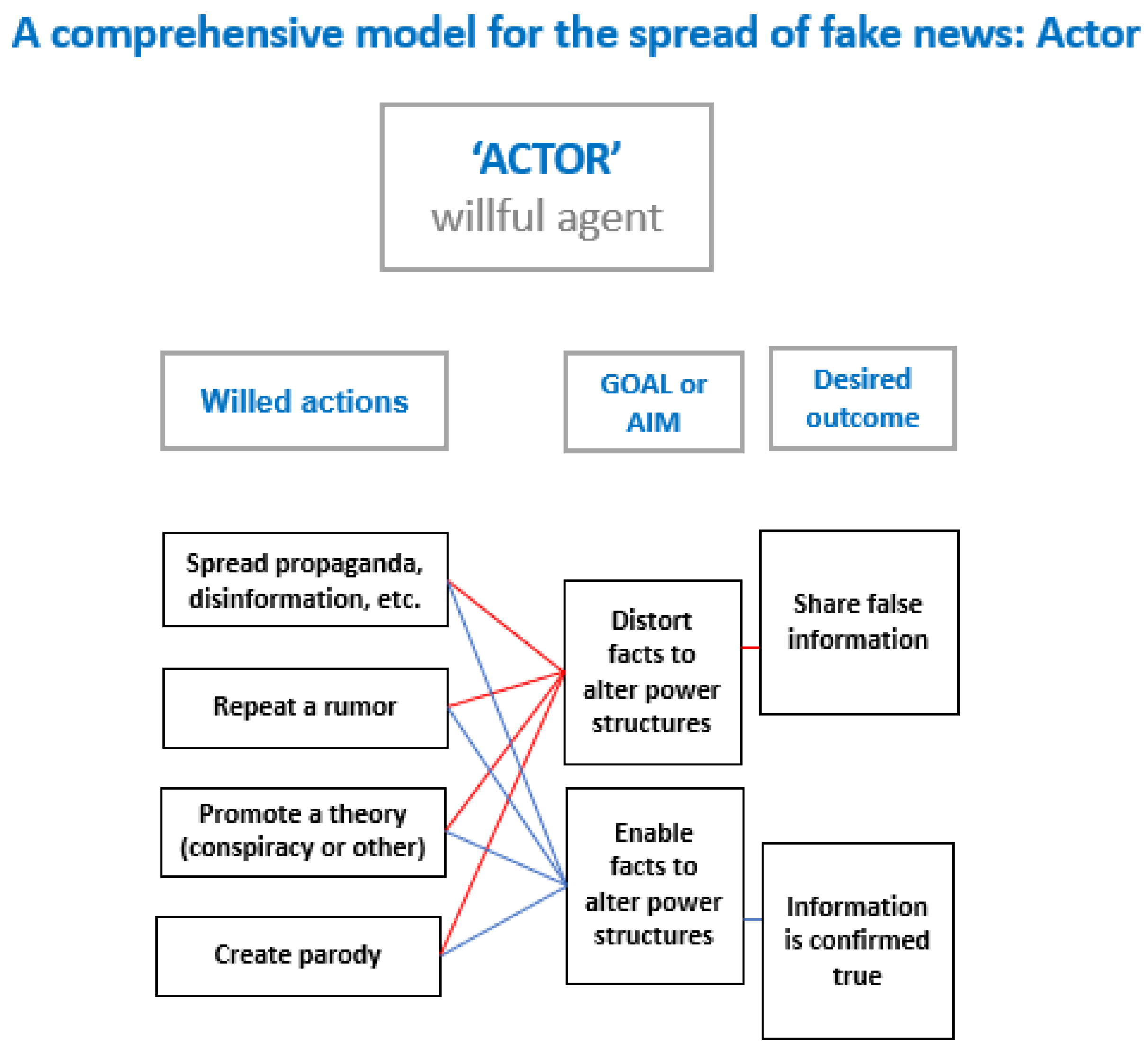

2.1. A New Comprehensive Model for Fake News

- Which characteristics and conditions are evident in users most and least likely to share fake news?

- What do the creators of fake news intend by sharing it?

- What combination of factors between the ‘actor’ and the ‘acted upon’ most closely aligns the desired outcomes of creating fake news with the actual results? (i.e., how effective or successful is a fake news story in attaining its intended goal?)

- What characteristics of fake news make it more or less likely to be shared?

- What factors contribute to an actor becoming acted upon? Conversely, what factors contribute to the acted upon becoming actors/agents in sharing fake news?

2.2. The Actor and Agency

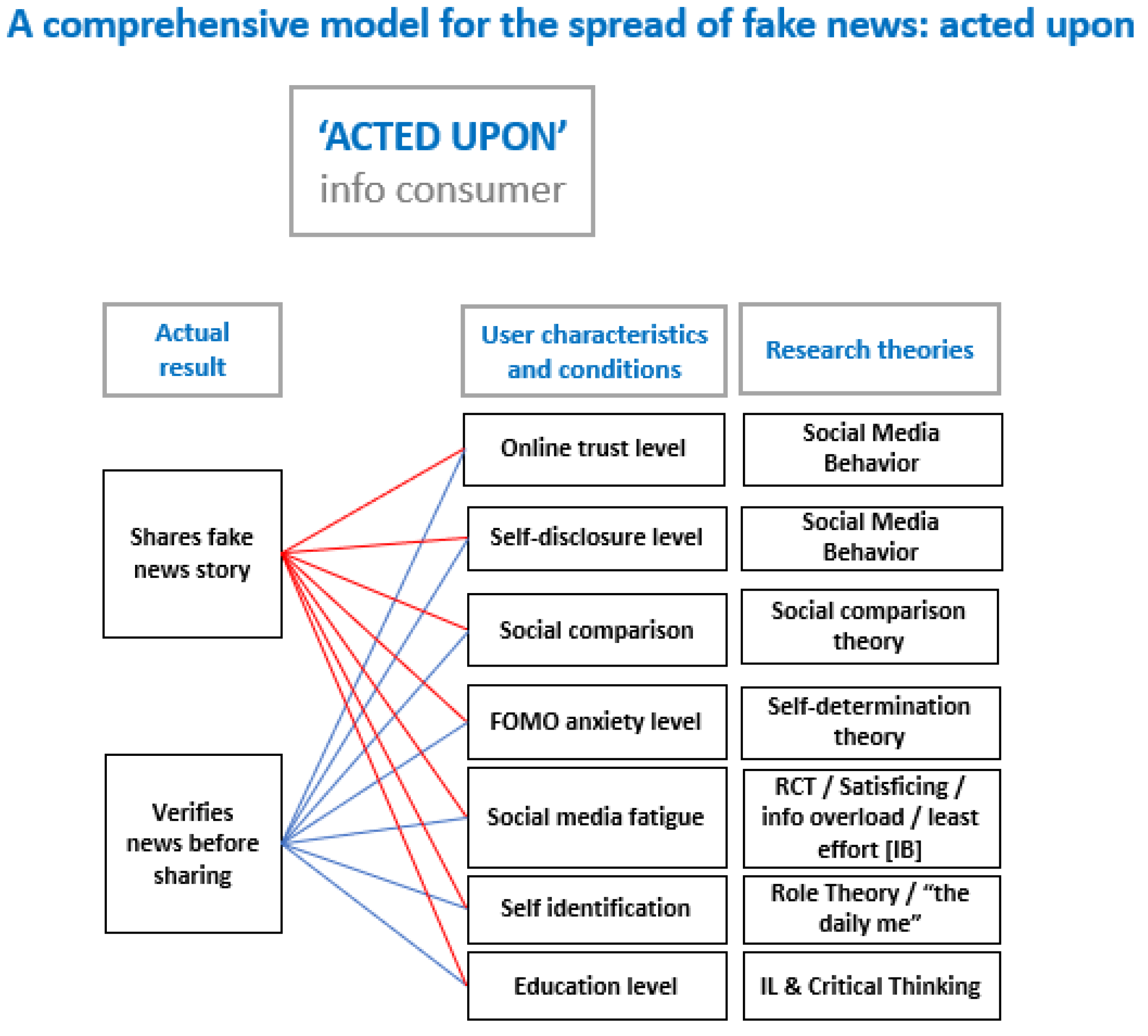

2.3. The ‘Acted Upon’

- (1) Users’ level of trust online;

- (2) Users’ level of online self-disclosure;

- (3) Users’ amount of social comparison;

- (4) Users’ level of ‘Fear Of Missing Out’ (FOMO) anxiety;

- (5) Users’ level of social media fatigue;

- (6) Users’ concepts of self and their role identity;

- (7) Users’ educational level/attainment.

2.4. New Additions: Self-Identification, Role, and Education Attainment

3. Discussion: Implications for Research and Potential Directions

3.1. ‘Solving’ Fake News with Critical Thinking and Information Literacy: The Limits to Relying Entirely on Educational Outcomes

3.2. Meeting in the Muddled Middle

3.2.1. When Does the Acted Upon Become the Actor (and Vice Versa)?

> Those who spend their time in the library of the unreal have an abundance of something that is scarce in college classrooms: information agency. One of the powers they feel elites have tried to withhold from them is the ability to define what constitutes knowledge. They don’t simply distrust what the experts say; they distrust the social systems that create expertise. They take pleasure in claiming expertise for themselves, on their own terms.[5]

3.2.2. How Effective Is Fake News?

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kornbluh, K.; Goldstein, A.; Weiner, E. New Study by Digital New Deal Finds Engagement with Deceptive Outlets Higher on Facebook Today than Run-Up to 2016 Election. The German Marshall Fund of the United States, 2020. Available online: https://www.gmfus.org/blog/2020/10/12/new-study-digital-new-deal-finds-engagement-deceptive-outlets-higher-facebook-today (accessed on 6 July 2021).

- Lee, T. The global rise of “fake news” and the threat to democratic elections in the USA. Public Adm. Policy Asia Pac. J. 2019, 22, 15–24.

- McDonald, K. Unreliable News Sites More than Doubled Their Share of Social Media Engagement in 2020. NewsGuard. 2020. Available online: https://www.newsguardtech.com/special-report-2020-engagement-analysis/ (accessed on 6 July 2021).

- Fielding, J. Rethinking CRAAP: Getting students thinking like fact-checkers in evaluating web sources. Coll. Res. Libr. News 2019, 80, 620.

- Fister, B. The Librarian War against QAnon. The Atlantic. 2021. Available online: https://www.theatlantic.com/education/archive/2021/02/how-librarians-can-fight-qanon/618047/ (accessed on 20 June 2021).

- Wineburg, S.; Breakstone, J.; Ziv, N.; Smith, M. Educating for Misunderstanding: How Approaches to Teaching Digital Literacy Make Students Susceptible to Scammers, Rogues, Bad Actors, and Hate Mongers; Working Paper A-21322; Stanford History Education Group/Stanford University: Stanford, CA, USA, 2020; Available online: https://purl.stanford.edu/mf412bt5333 (accessed on 20 June 2021).

- Wineburg, S.; Ziv, N. Op-ed: Why can’t a generation that grew up online spot the misinformation in front of them? Los Angeles Times. 6 November 2020. Available online: https://www.latimes.com/opinion/story/2020-11-06/colleges-students-recognize-misinformation (accessed on 8 July 2021).

- Alwan, A.; Garcia, E.; Kirakosian, A.; Weiss, A. Fake news and libraries: How teaching faculty in higher education view librarians’ roles in counteracting the spread of false information. Can. J. Libr. Inf. Pract. Res. under review.

- Weiss, A.; Alwan, A.; Garcia, E.P.; Garcia, J. Surveying fake news: Assessing university faculty’s fragmented definition of fake news and its impact on teaching critical thinking. Int. J. Educ. Integr. 2020, 16, 1–30.

- Burkhardt, J.M. Combating Fake News in the Digital Age; ALA TechSource: Chicago, IL, USA, 2017.

- Fallis, D. What is disinformation? Libr. Trends 2015, 63, 401–426.

- Derakhshan, H. Information Disorder: Toward an Interdisciplinary Framework for Research and Policy Making; Council of Europe Report DGI; Council of Europe: Strasbourg, France, 2017; Available online: https://bit.ly/3gTqUbV (accessed on 25 May 2021).

- Kim, S.; Kim, S. The Crisis of Public Health and Infodemic: Analyzing Belief Structure of Fake News about COVID-19 Pandemic. Sustainability 2020, 12, 9904.

- McNair, B. Fake News: Falsehood, Fabrication and Fantasy in Journalism; Routledge: New York, NY, USA, 2018.

- Balmas, M. When fake news becomes real: Combined exposure to multiple news sources and political attitudes of inefficacy, alienation, and cynicism. Commun. Res. 2014, 41, 430–454.

- Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–236. Available online: https://pubs.aeaweb.org/doi/pdfplus/10.1257/jep.31.2.211 (accessed on 21 June 2021).

- McGivney, C.; Kasten, K.; Haugh, D.; DeVito, J.A. Fake news & information literacy: Designing information literacy to empower students. Interdiscip. Perspect. Equal. Divers. 2017, 3, 1–18. Available online: http://journals.hw.ac.uk/index.php/IPED/article/viewFile/46/30 (accessed on 6 July 2021).

- Mustafaraj, E.; Metaxas, P.T. The Fake News Spreading Plague: Was it Preventable? Cornell University Library. arXiv 2017, arXiv:1703.06988.

- Golbeck, J.; Mauriello, M.; Auxier, B.; Bhanushali, K.H.; Bonk, C.; Bouzaghrane, M.A.; Buntain, C.; Chanduka, R.; Cheakalos, P.; Everett, J.B.; et al. Fake news vs satire: A dataset and analysis. In Proceedings of the 10th ACM Conference on Web Science, Amsterdam, The Netherlands, 27–30 May 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 17–21.

- Brennen, B. Making Sense of Lies, Deceptive Propaganda, and Fake News. J. Media Ethics 2017, 32, 179–181.

- Gupta, A.; Lamba, H.; Kumaraguru, P.; Joshi, A. Faking sandy: Characterizing and identifying fake images on twitter during hurricane sandy. In Proceedings of the 22nd International Conference on World Wide Web (WWW ’13 Companion), Rio de Janeiro, Brazil, 13–17 May 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 719–736.

- Caplan, R.; Hanson, L.; Donovan, J. Dead reckoning: Navigating content moderation after “fake news”. Data Soc. 2018. Available online: https://datasociety.net/pubs/oh/DataAndSociety_Dead_Reckoning_2018.pdf (accessed on 23 May 2021).

- Gray, J.; Bounegru, L.; Venturini, T. ‘Fake news’ as infrastructural uncanny. New Media Soc. 2020, 22, 317–341.

- Tandoc, E.; Lim, Z.; Ling, R. Defining “Fake News”: A typology of scholarly definitions. Digit. J. 2017, 6, 1–17.

- Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 22–36.

- De Beer, D.; Matthee, M. Approaches to identify fake news: A systematic literature review. In Integrated Science in Digital Age; Springer: Cham, Switzerland, 2020; pp. 13–22.

- Mustafaraj, E.; Metaxas, P.T. Fake News spreading plague. In Proceedings of the 2017 ACM on Web Science Conference, Troy, NY, USA, 25–28 June 2017.

- Talwar, S.; Dhir, A.; Kaur, P.; Zafar, N.; Alrasheedy, M. Why do people share fake news? Associations between the dark side of social media use and fake news sharing behavior. J. Retail. Consum. Serv. 2019, 51, 72–82.

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An integrative model of organizational trust. Acad. Manag. Rev. 1995, 20, 709–734.

- Schoorman, F.D.; Mayer, R.C.; Davis, J.H. An Integrative Model of Organizational Trust: Past, Present, and Future. Acad. Manag. Rev. 2007, 32, 344–354.

- DuBois, T.; Golbeck, J.; Srinivasan, A. Predicting trust and distrust in social networks. In Proceedings of the 2011 IEEE Third International Conference on Privacy, Security, Risk and Trust and 2011 IEEE Third International Conference on Social Computing, Boston, MA, USA, 9–11 October 2011; IEEE Computer Society: Boston, MA, USA, 2011; pp. 418–424.

- Grabner-Kräuter, S.; Bitter, S. Trust in online social networks: A multifaceted perspective. Forum Soc. Econ. 2013, 44, 48–68.

- Krasnova, H.; Spiekermann, S.; Koroleva, K.; Hildebrand, T. Online social networks: Why we disclose. J. Inf. Technol. 2010, 25, 109–125.

- Grosser, T.J.; Lopez-Kidwell, V.; Labianca, G. A social network of positive and negative gossip in organizational life. Group Organ. Manag. 2010, 35, 177–212.

- Wenger, E. Communities of practice: Learning as a social system. Syst. Think. 1998, 9, 2–3.

- Davies, E. Communities of practice. In Theories of Information Behavior; Fisher, K.E., Erdelez, S., McKechnie, L.E.F., Eds.; Information Today, Inc.: Medford, NJ, USA, 2006; pp. 104–109.

- Rioux, K. Information acquiring-and-sharing. In Theories of Information Behavior; Fisher, K., Erdelez, S., McKechnie, L., Eds.; Information Today: Medford, NJ, USA, 2005; pp. 169–173.

- Festinger, L. A theory of social comparison processes. Hum. Relat. 1954, 7, 117–140.

- Cramer, E.M.; Song, H.; Drent, A.M. Social comparison on Facebook: Motivation, affective consequences, self-esteem, and Facebook fatigue. Comput. Hum. Behav. 2016, 64, 739–746.

- Wert, S.R.; Salovey, P. A social comparison account of gossip. Rev. Gen. Psychol. 2004, 8, 122–137.

- Sundin, O.; Hedman, J. Professions and occupational identities. In Theories of Information Behavior; Fisher, K., Erdelez, S., McKechnie, L., Eds.; Information Today: Medford, NJ, USA, 2005; pp. 293–297.

- Johnson, C.A. Nan Lin’s theory of social capital. In Theories of Information Behavior; Fisher, K., Erdelez, S., McKechnie, L., Eds.; Information Today: Medford, NJ, USA, 2005; pp. 323–327.

- Deci, E.L.; Ryan, R.M. Intrinsic Motivation and Self-Determination in Human Behavior; Plenum Press: New York, NY, USA, 1985.

- Przybylski, A.K.; Murayama, K.; DeHaan, C.R.; Gladwell, V. Motivational, emotional, and behavioral correlates of fear of missing out. Comput. Hum. Behav. 2013, 29, 1841–1848.

- Alt, D. College students’ academic motivation, media engagement and fear of missing out. Comput. Hum. Behav. 2015, 49, 111–119.

- Beyens, I.; Frison, E.; Eggermont, S. I don’t want to miss a thing: Adolescents’ fear of missing out and its relationship to adolescents’ social needs, Facebook use, and Facebook related stress. Comput. Hum. Behav. 2016, 64, 1–8.

- Blackwell, D.; Leaman, C.; Tramposch, R.; Osborne, C.; Liss, M. Extraversion, neuroticism, attachment style and fear of missing out as predictors of social media use and addiction. Pers. Indiv. Differ. 2017, 116, 69–72.

- Buglass, S.L.; Binder, J.F.; Betts, L.R.; Underwood, J.D.M. Motivators of online vulnerability: The impact of social network site use and FoMO. Comput. Hum. Behav. 2017, 66, 248–255.

- Ryan, R.M.; Deci, E.L. Intrinsic and extrinsic motivations: Classic definitions and new directions. Contemp. Educ. Psychol. 2000, 25, 54–67.

- Watters, C.; Duffy, J. Motivational factors for interface design. In Theories of Information Behavior; Fisher, K., Erdelez, S., McKechnie, L., Eds.; Information Today: Medford, NJ, USA, 2005; pp. 242–246.

- Ravindran, T.; Yeow Kuan, A.C.; Hoe Lian, D.G. Antecedents and effects of social network fatigue. J. Assoc. Inf. Sci. Technol. 2014, 65, 2306–2320.

- Blair, A. Too Much to Know: Managing Scholarly Information Before the Modern Age; Yale University Press: New Haven, CT, USA, 2010.

- Eppler, M.; Mengis, J. The concept of information overload: A review of literature from organization science, accounting, marketing, MIS, and related disciplines. Inf. Soc. 2004, 20, 325–344.

- Good, A. The Rising Tide of Educated Aliteracy. The Walrus. 2017. Available online: https://thewalrus.ca/the-rising-tide-of-educated-aliteracy/ (accessed on 12 December 2020).

- Prabha, C.; Silipigni-Connaway, L.; Olszewski, L.; Jenkins, L.R. What is enough? Satisficing information needs. J. Doc. 2007, 63, 74–89.

- Zipf, G.K. Human Behavior and the Principle of Least Effort: An Introduction to Human Ecology; Addison-Wesley: Cambridge, MA, USA, 1949.

- Given, L.M. Social Positioning. In Theories of Information Behavior; Fisher, K., Erdelez, S., McKechnie, L., Eds.; Information Today: Medford, NJ, USA, 2005; pp. 334–338.

- Ogasawara, M. The Daily Us (vs. Them) from Online to Offline: Japan’s Media Manipulation and Cultural Transcoding of Collective Memories. J. Contemp. East. Asia 2019, 18, 49–67.

- Sunstein, C. Republic.Com; Princeton University Press: Princeton, NJ, USA, 2001.

- Batchelor, O. Getting out the truth: The role of libraries in the fight against fake news. Ref. Serv. Rev. 2017, 45, 143–148.

- De Paor, S.; Heravi, B. Information literacy and fake news: How the field of librarianship can help combat the epidemic of fake news. J. Acad. Librariansh. 2020, 46, 102218.

- Gardner, M.; Mazzola, N. Fighting Fake News: Tools and Resources to Combat Disinformation. Knowl. Quest 2018, 47, 6.

- Chatman, E.A. The impoverished life-world of outsiders. J. Am. Soc. Inf. Sci. 1996, 47, 193–206.

- Williamson, K. Discovered by Chance: The role of incidental information acquisition in an ecological model of information use. Libr. Inf. Sci. Res. 1998, 20, 23–40.

- Allcott, H.; Gentzkow, M.; Yu, C. Trends in the diffusion of misinformation on social media. Res. Politics 2019, 6.

- Flynn, D.J.; Nyhan, B.; Reifler, J. The Nature and Origins of Misperceptions: Understanding False and Unsupported Beliefs about Politics. Adv. Political Psychol. 2017, 38, 127–150.

- Smith, A. An Inquiry into the Nature and Causes of the Wealth of Nations. With a Memoir of the Author’s Life; Clark, A.G., Ed.; 1776/1848; George Clark and Son: Aberdeen, Scotland, 1848.

- Roth, M.S. Beyond critical thinking. Chron. High Educ. 2010, 56, B4–B5. Available online: https://www.chronicle.com/article/beyond-critical-thinking/ (accessed on 8 July 2021).

- Renaud, R.D.; Murray, H.G. A comparison of a subject-specific and a general measure of critical thinking. Think Ski. Creat. 2008, 3, 85–93.

- Behar-Horenstein, L.S.; Niu, L. Teaching critical thinking skills in higher education: A review of the literature. J. Coll. Teach. Learn. 2011, 8, 25–42. Available online: https://clutejournals.com/index.php/TLC (accessed on 8 July 2021).

- Allegretti, C.L.; Frederick, J.N. A model for thinking critically about ethical issues. Teach. Psychol. 1995, 22, 46–48.

- Hobbs, R. Multiple visions of multimedia literacy: Emerging areas of synthesis. In International Handbook of Literacy and Technology; McKenna, M.C., Labbo, L.D., Kieffer, R.D., Reinking, D., Eds.; Temple University: Philadelphia, PA, USA, 2006; pp. 15–28.

- Schuster, S.M. Information literacy as a core value. Biochem. Mol. Biol. Educ. 2007, 35, 372–373.

- Cooke, N.A. Fake News and Alternative Facts: Information Literacy in a Post-Truth Era; ALA Editions: Chicago, IL, USA, 2018.

- Zimmerman, M.S.; Ni, C. What we talk about when we talk about information literacy. IFLA J. 2021.

- Dixon, J. First impressions: LJ’s first year experience survey. Libr. J. 2017. Available online: https://www.libraryjournal.com/?detailStory=first-impressions-ljs-first-year-experience-survey (accessed on 8 July 2021).

- Buzzetto-Hollywood, N.; Wang, H.; Elobeid, M.; Elobaid, M. Addressing Information literacy and the digital divide in higher education. Interdiscip. J. E Ski. Lifelong Learn. 2018, 14, 77–93.

- Cullen, R. The digital divide: A global and national call to action. Electron. Libr. 2003, 21, 247–257.

- Wong, S.H.R.; Cmor, D. Measuring association between library instruction and graduation GPA. Coll. Res. Libr. 2011, 72, 464–473.

- Jardine, S.; Shropshire, S.; Koury, R. Credit-bearing information literacy courses in academic libraries: Comparing peers. Coll. Res. Libr. 2018, 79, 768–784.

- Cooke, N.A. Posttruth, truthiness, and alternative facts: Information behavior and critical information consumption for a new age. Libr. Q. 2017, 87, 211–221.

- Bluemle, S.R. Post-Facts: Information Literacy and Authority after the 2016 Election. Portal Libr. Acad. 2018, 18, 265–282.

- Pawley, C. Information literacy: A contradictory coupling. Libr. Q. Inf. Community Policy 2003, 73, 422–452. Available online: https://www.jstor.org/stable/4309685 (accessed on 19 June 2021).

- Fallis, D. A conceptual analysis of disinformation. iConference 2009. Available online: https://www.ideals.illinois.edu/handle/2142/15205 (accessed on 15 June 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weiss, A.P.; Alwan, A.; Garcia, E.P.; Kirakosian, A.T. Toward a Comprehensive Model of Fake News: A New Approach to Examine the Creation and Sharing of False Information. Societies 2021, 11, 82. https://doi.org/10.3390/soc11030082
