Abstract

Artificial intelligence (AI) and machine learning (ML) advancements increasingly impact society, and AI/ML ethics and governance discourses have emerged. Various countries have established AI/ML strategies. “AI for good” and “AI for social good” are just two discourses that focus on using AI/ML in a positive way. Disabled people are impacted by AI/ML in many ways, such as being potential therapeutic and non-therapeutic users of AI/ML advanced products and processes, and by the changing societal parameters enabled by AI/ML advancements. They are also impacted by AI/ML ethics and governance discussions and by discussions around the use of AI/ML for good and social good. Using identity, role, and stakeholder theories as our lenses, the aim of our scoping review is to identify and analyze to what extent, and how, AI/ML focused academic literature, Canadian newspapers, and Twitter tweets engage with disabled people. Performing manifest coding of the presence of the terms “AI”, “artificial intelligence”, or “machine learning” in conjunction with the terms “patient”, “disabled people”, or “people with disabilities”, we found that the term “patient” was used about 20 times more than the terms “disabled people” and “people with disabilities” together to identify disabled people within the AI/ML literature covered. As to the downloaded 1540 academic abstracts, 234 full-text Canadian English language newspaper articles, and 2879 tweets containing at least one of 58 terms used to depict disabled people (excluding the term “patient”) and the three AI terms, we found that health was one major focus, that the social good/for good discourse was not mentioned in relation to disabled people, that the tone of AI/ML coverage was mostly techno-optimistic, and that disabled people were mostly engaged with in their role of being therapeutic or non-therapeutic users of AI/ML influenced products. Problems with AI/ML were mentioned in relation to the user having a bodily problem, the usability of AI/ML influenced technologies, and problems disabled people face accessing such technologies. Problems caused for disabled people by AI/ML advancements, such as changing occupational landscapes, were not mentioned. Disabled people were not covered as knowledge producers or influencers of AI/ML discourses, including AI/ML governance and ethics discourses. Our findings suggest that AI/ML coverage must change if disabled people are to become meaningful contributors to, and beneficiaries of, discussions around AI/ML.

1. Introduction

Artificial intelligence (AI) and machine learning (ML) are applied in many areas [1], such as medical technologies [2,3], big data [4], various neuro-linked technologies [5,6,7,8,9,10,11,12,13,14,15], autonomous cars, drones, robotics, assistive technologies [16,17,18], gaming, urban design, various forms of surveillance, and the military. Many countries have AI strategies [19,20], and numerous societal and other implications of AI/ML have been identified [1,5,21,22,23,24,25,26,27,28]. Discourses under the header AI for “social good” [21,29,30,31,32,33,34,35,36] and other words with similar connotation, such as “common good” [37] and “for good” [38], explicitly look at how to make a positive contribution to society. According to the Canadian Institute for Advanced Research (CIFAR), which coordinates the Canadian AI strategy, its “AI & Society program aims to examine the questions AI will pose for society involving diverse topics, including the economy, ethics, policymaking, philosophy and the law” [39]. How to govern AI (if possible, all the way from the anticipatory to the implementation stage) is one focus of the discussions around the societal impact of AI [39,40,41,42,43,44,45,46,47,48] and includes terms such as social governance [19].

Disabled people¹ can be impacted by AI/ML-driven advancements in several ways:

- (a) as potential non-therapeutic users (consumer angle)

- (b) as potential therapeutic users

- (c) as potential diagnostic targets (diagnostics to prevent ‘disability’, or to judge ‘disability’)

- (d) by changing societal parameters caused by humans using AI/ML (military, changes in how humans interact, employers using it in the workplace, etc.)

- (e) AI/ML outperforming humans (e.g., workplace)

- (f) increasing autonomy of AI/ML (AI/ML judging disabled people)

As such, disabled people have a stake in AI/ML advancements and how they are governed. Furthermore, disabled people have many distinct roles through which to contribute to AI/ML advancement discussions in general, and to AI/ML ethics and governance discussions in particular, such as therapeutic and non-therapeutic user, knowledge producer, knowledge consumer, influencer of the discourses, and victim. At the same time, it is noted that disabled people face particular barriers to participation in governance discussions and to the production and consumption of knowledge about them [49].

Given the wide-reaching and diverse impacts of AI/ML on disabled people and the potential roles of disabled people in AI/ML discourses, the aim of our scoping review drawing from academic literature, Canadian newspapers, and Twitter tweets was to answer the following research questions: How does the AI/ML focused literature covered engage AI/ML in relation to disabled people? What is said and not said? What is the tone of AI/ML coverage? How are disabled people defined? Are disabled people mentioned in relation to “AI for good” or “AI for social good”? What roles, identities, and stakes are assigned to disabled people? Lastly, which of the above-mentioned potential effects of AI/ML on disabled people (a–f) are present in the literature?

1.1. Portrayal and Role, Identity, and Stake Narrative of Disabled People and AI/ML

Many factors influence how AI/ML is discussed and what is said or not said but could have been said [50,51,52,53]. How one defines disabled people [54,55,56] is one such factor that can influence how a problem is defined and what solution is sought in relation to disabled people [57,58], and how a disabled person is portrayed impacts discourses [59,60]. As such, how disabled people are defined and portrayed within AI/ML discourses influences how AI/ML is discussed and what is said or not said, but could have been said, in relation to disabled people.

There are many ways one can define and portray disabled people. The terms “disabled people” and “people with disabilities”, for example, are often used to depict the social group of disabled people [61] and the social issues they face. By contrast, the term “patient” is mostly used to focus on the medical aspect of the disabled person.

According to role theory, how one is portrayed impacts the role one is expected to have [62,63,64,65]. Role expectation of oneself is impacted by the role expectations others have of oneself [66]. According to identity theory, the perception of ‘self’ is influenced by the role one occupies in the social world [67]. In relation to AI/ML, disabled people could have roles such as being therapeutic and non-therapeutic users of AI/ML linked products, being victims of AI/ML product use and processes or being knowledge producers and knowledge consumers of the products and processes. Disabled people could also have the role of influencer of, and knowledge producer for, AI/ML ethics and governance discourses.

One can have many different identities whereby different identities have different weight for oneself [67]. Based on the roles disabled people can have within the AI/ML discourse, disabled people can choose various identities they exhibit in AI/ML discourses ranging from passive user of AI/ML products to active shaper of AI/ML discourses. However, it is well described that there are many barriers for disabled people to live out certain identities and perform certain roles such as being influencers of, and knowledge producers for, science and technology governance and ethics discourses [49].

How an individual perceives oneself influences how they perceive actions such as disabling actions towards themselves [68], which in turn influences what role they see themselves occupying in relation to AI/ML discourses. It also influences intergroup relationships [68,69] between disabled and non-disabled people within the AI/ML discourses and the relationship between different disability groups linked to different identities of self.

Indeed identities are seen to have five distinct features: identities are social products, self-meaning, symbolic in a sense that one’s response is similar to the response of others, reflexive, and a “source of motivation for action particularly actions that result in the social confirmation of the identity” [70] (p. 242), whereby all five features play themselves out within AI/ML discourses. Dirth and Branscombe asked: “For instance, to what degree do members of the disability community use medical, rehabilitation, and technological means to distance themselves from the stigmatized identity, and do orientations to treatment vary as a function of social identification strength?” [68] (p. 809). How this question is answered is one factor influencing the roles disabled people occupy in relation to AI/ML discourses.

Stakeholder theory has been applied to organizational management for some time, and it can also be applied to our study. According to stakeholder theory, a stakeholder’s action expresses their identity [71]. In our case, how disabled people are portrayed, what identity others attach to disabled people, and what identity disabled people attach to themselves could therefore be factors that influence stakeholder salience and stakeholder identification [72]. This includes whether disabled people see themselves or are seen by others as stakeholders in the AI/ML discourses and what is seen as the stake.

Regarding the potential role of disabled people, the question is whether only the ability to fulfill the role of the therapeutic or non-therapeutic user of AI/ML products is at stake or if there are other aspects deemed to be at stake, and who sees what as being at stake. According to Mitchell et al., there are three main factors identifying a stakeholder: “(1) the stakeholder’s power to influence the firm, (2) the legitimacy of the stakeholder’s relationship with the firm, and (3) the urgency of the stakeholder’s claim on the firm” [72] (p. 853). Applying this to disabled people and AI/ML discourses, the questions are whether disabled people have the stakeholder power to influence AI/ML discourse; whether disabled people are seen to be socially impacted by AI/ML discourses, which would give legitimacy to disabled people to be involved in AI/ML ethics and governance discussions; and whether there is a feeling of urgency for disabled people to be engaged in AI/ML discourses. Each of Mitchell et al.’s three points might be answered differently, and different actions might be flagged as needed in relation to AI/ML and disabled people depending on the role assigned to disabled people in relation to AI/ML, which in turn is impacted in part by the identity of the disabled person. Both a narrow and a broad definition of stakeholder exist [73,74]. Mitchell et al. list Freeman’s broad definition, “A stakeholder in an organization is (by definition) any group or individual who can affect or is affected by the achievement of the organization’s objectives”, and Clarkson’s narrow definition, “Voluntary stakeholders bear some form of risk as a result of having invested some form of capital, human or financial, something of value, in a firm. Involuntary stakeholders are placed at risk as a result of a firm’s activities” [72]. Both definitions would suggest that disabled people are stakeholders in the AI/ML discourses in all their potential roles already mentioned. Various articles outline ways to identify stakeholder groups [75]. However, depending on identity and role, disabled people have different stakes in AI/ML discourses.

1.2. The Tone of the Discourse

The tone of a discourse is another factor that can influence how AI/ML is discussed and what is said or not said but could have been said [50,51,52,53]. A techno-optimistic or techno-enthusiastic tone [76,77] may not lend itself to covering disabled people as being negatively impacted by AI/ML advancements (whereas a techno-skeptic or techno-pessimistic tone could), but rather facilitates the coverage of disabled people as potential therapeutic or non-therapeutic users. Words such as risk, challenge, and problem can be used to shape any given topic [78], including what is seen to be at stake for disabled people and which AI/ML developments are impacting disabled people. How one defines disabled people and the tone of discourse influence how such words are used. For many targets of AI/ML, such as assistive devices and technologies, it is known that disabled people already face many challenges such as costs, access, and design issues [79], the imagery of the disabled person [58,80], and the fear of being judged for using them [81,82] or not using them [79]. Such aspects would not be covered under a techno-optimistic tone.

1.3. The Issue of Social Good

Social good is for example described as achieving “human well-being on a large scale” [83], although no one definition is accepted. The concept of “social good” is applied to many areas such as education [84,85] (wherein, education is changing the meaning of “social good” [86]), water [87], health [88], food [89], meaningful work [90], healthcare [91], and sustainability [92]. Many conflicts are outlined around “social good” and some describe “social good” as a “dispensable commodity” [93].

AI is also discussed in relation to “social good” [21,29,30,31,32,33,34,35,36] and other words with similar connotation such as “common good” [37] and “for good” [38]. There are subfields which include “data for social good” [94,95], “IT for social good” [96], and “computer for social good” [97,98,99]. The ethical framework for “AI for the Social Good” defines AI as “addressing societal challenges, which have not yet received significant attention by the AI community or by the constellation of AI sub-communities, [the use of] AI methods to tackle unsolved societal challenges in a measurable manner” [37]. In a workshop focusing explicitly on “AI for social good”, the term “social good” is described as follows: “[social good] is intended to focus AI research on areas of endeavor that are to benefit a broad population in a way that may not have direct economic impact or return, but which will enhance the quality of life of a population of individuals through education, safety, health, living environment, and so forth” [36]. However, what exactly constitutes “AI for social good” [100,101] and what “makes AI socially good” [31] (p. 1) is still debated. Cowls et al. argued that the following seven factors are essential for AI for social good: “(1) falsifiability and incremental deployment; (2) safeguards against the manipulation of predictors; (3) receiver-contextualised intervention; (4) receiver-contextualised explanation and transparent purposes; (5) privacy protection and data subject consent; (6) situational fairness; and (7) human-friendly semanticisation” [31] (p. 3). AI can be a major force for social good; it depends in part on how we shape this new technology and the questions we use to inspire young researchers [36].

Disabled people are impacted by who defines “social goods”, what is defined as a “social good”, who has access to the “social good”, and whether “social good” discourses focus on just “doing good” or also “preventing bad”. Many of the problems faced by disabled people highlighted in the United Nations Convention on the Rights of Persons with Disabilities [102] indicate inequitable access to “social goods”. Discourses around “social good” often lead to detrimental consequences for disabled people, such as eugenic practices done for the “social good” [103], how and what meanings of work are seen as a “social good”, and how this is operationalized [90,104] or how social justice as a public good is conceived [93].

2. Methods

2.1. Study Design

We chose a modified scoping review drawing from another study [105] as the most appropriate approach for the study given our research questions to identify the current understanding of a given topic [106]; in our case, how the AI/ML literature covered engages with disabled people. Our study followed a modified version of the stages outlined by Arksey and O’Malley [105], namely, identifying the review’s research question, identifying databases to search, generating inclusion/exclusion criteria, recording the descriptive quantitative results, selecting literature based on descriptive quantitative results for directed content analysis of qualitative data, and reporting findings of qualitative analysis.

2.2. Identifying and Clarifying the Purpose and Research Questions

The objective of our study was to ascertain whether, to what extent, and how AI/ML focused academic literature, Canadian newspapers, and Twitter tweets engaged with disabled people in general and in relation to “for good” and “social good”. Our study focused on literature directly using the terms “artificial intelligence”, “AI”, or “machine learning”. As such, we did not engage with literature that mentioned only related terms such as “ICT” or “web accessibility” without also mentioning the AI related terms.

Our research questions were: How do academic literature, Canadian newspapers, and Twitter tweets cover AI/ML in relation to disabled people? What is said and not said? How are disabled people defined in the literature covered? Are disabled people mentioned in relation to “for good” or “social good”? What is the tone of AI/ML coverage of disabled people? What roles, identities, and stakes are assigned to disabled people in the AI/ML literature? Are disabled people engaged with as active agents, such as influencers of the development of AI/ML products and processes or of ethics and governance discussions? Lastly, which of the potential effects of AI/ML on disabled people listed below are present in the literature?

- (a) as potential non-therapeutic users (consumer angle)

- (b) as potential therapeutic users

- (c) as potential diagnostic targets (diagnostics to prevent disability or to judge disability)

- (d) by changing societal parameters caused by humans using AI/ML (military, changes in how humans interact, employers using it in the workplace, etc.)

- (e) AI/ML outperforming humans (e.g., workplace)

- (f) increasing autonomy of AI/ML (AI/ML judging disabled people)

2.3. Data Sources and Data Collection

Canadian newspapers were chosen as a source of data because (a) the government of Canada’s 2017 AI strategy includes the investigation of the impact of AI on society as one focus, which could be discussed in newspapers; (b) Canada has a developed AI/ML academic community that could contribute to newspaper coverage; and (c) over 75% of Canadians still read newspapers [107,108] and, as such, are influenced by what they read. Tweets from Twitter.com were searched because Twitter is seen to be highly effective in its message propagation [109,110,111]. Academic literature was chosen because academic discourses are meant to generate evidence that informs policies [112,113,114].

Eligibility criteria and search strategies for articles:

2.3.1. Search Strategy 1: Newspapers

We used the Canadian Newsstream, a database consisting of n = 300 English language Canadian newspapers, from January 1980 to June 2019. An explicit search strategy was employed to obtain the data [115].

We searched in the full-text of articles for the presence of AI related terms (“artificial intelligence” OR “machine learning” OR “AI”) in conjunction with the term patient (results not downloaded) and terms linked to disabled people (results downloaded): “disabled people” OR “people with a disability” OR “deaf people” OR “blind people” OR “people with disabilities” OR “people with a learning disability” OR “people with a physical disability” OR “people with a hearing impairment” OR “people with a visual impairment” OR “people with a mental disability” OR “people with a mental health” OR “learning disability people” OR “physical disability people” OR “physically disabled people” OR “hearing impaired people” OR “visually impaired people” OR “mental disability people” OR “mental health people” OR “autism people” OR “autistic people” OR “people with autism” OR “ADHD people” OR “people with ADHD” OR “people with a mental health” OR “people with a mental disability” OR “people with mental disabilities” OR “mental health people” OR “mental disability people” OR “mentally disabled people” OR “disabled person”, OR “person with a disability” OR “deaf person” OR “blind person” OR “person with disabilities” OR “person with a learning disability” OR “person with a physical disability” OR “person with a hearing impairment” OR “person with a visual impairment” OR “person with a mental disability” OR “person with a mental health” OR “learning disability person” OR “physical disability person” OR “physically disabled person” OR “hearing impaired person” OR “visually impaired person” OR “mental disability person” OR “mental health person” OR “autism person” OR “autistic person” OR “person with autism” OR “ADHD person” OR “person with ADHD” OR “person with a mental health” OR “person with a mental disability” OR “person with mental disabilities” OR “mental health person” OR “mental disability person” OR “mentally disabled person”.
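The Boolean structure of this search can be illustrated with a short sketch. It is not the query syntax of Canadian Newsstream or of any other database we searched; the function names (`or_group`, `build_query`) are hypothetical, and only a small subset of the disability-related terms is shown. The sketch simply joins an OR-group of AI terms with an OR-group of disability-related terms and drops repeated terms, since a repeated term does not change what an OR-group matches.

```python
def or_group(terms):
    """Return a parenthesized OR-group of quoted phrases, dropping repeats."""
    unique_terms = list(dict.fromkeys(terms))  # keeps first occurrence, preserves order
    return "(" + " OR ".join(f'"{term}"' for term in unique_terms) + ")"


def build_query(ai_terms, population_terms):
    """Combine the two OR-groups with AND (hypothetical, database-agnostic syntax)."""
    return f"{or_group(ai_terms)} AND {or_group(population_terms)}"


# The three AI terms named in the text.
AI_TERMS = ["artificial intelligence", "machine learning", "AI"]

# Illustrative subset only; the repeated entry mimics repeats in a long term list.
DISABILITY_TERMS = [
    "disabled people",
    "people with disabilities",
    "deaf people",
    "disabled people",
]

query = build_query(AI_TERMS, DISABILITY_TERMS)
print(query)
```

Real databases differ in their field codes and phrase-search operators, so a string built this way would need to be adapted to each interface.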

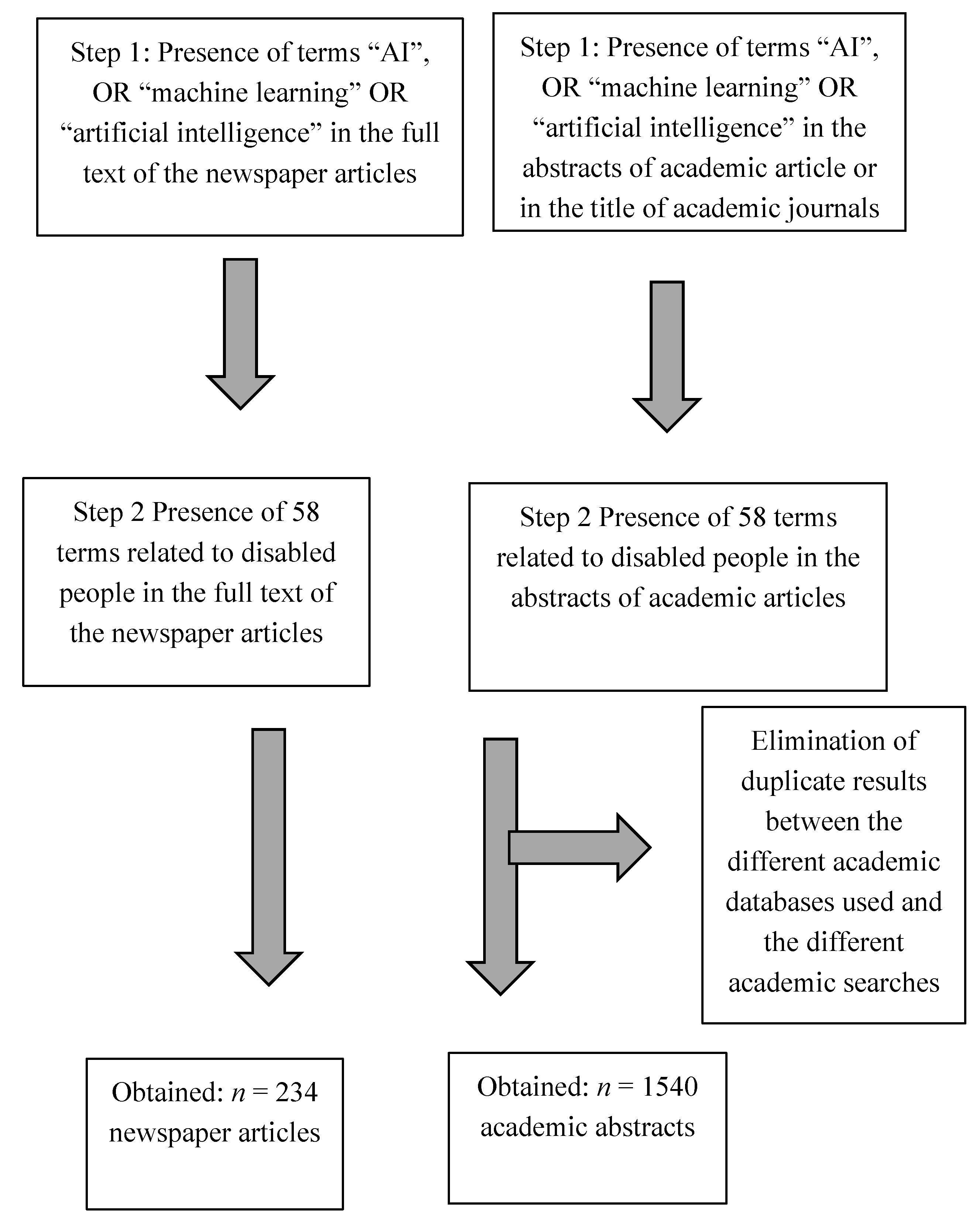

We obtained n = 234 non-duplicate newspaper articles for download (Figure 1).

Figure 1. Flow chart of the selection of academic abstracts and full-text newspaper articles for qualitative analysis.

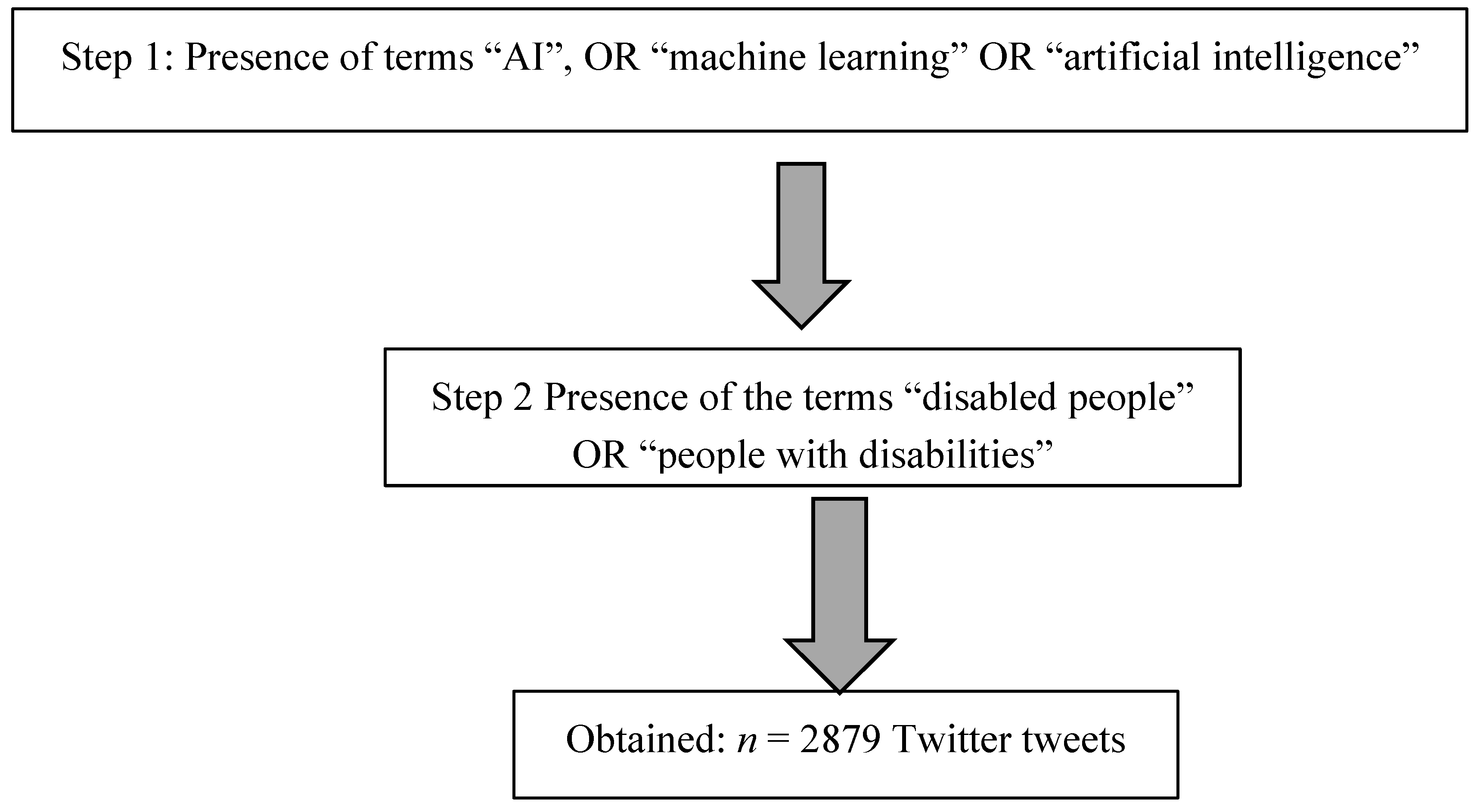

2.3.2. Search Strategy 2: Twitter

For tweets, we used the search engine of the Twitter.com webpage on 17 August 2018.

- Step 2a: We searched for the presence of “AI” OR “machine learning” OR “artificial intelligence”.

- Step 2b: We searched for the presence of “disabled people” OR “people with disabilities” within the tweets of step 2a.

We obtained n = 2879 unique tweets for download (Figure 2).

Figure 2. Flow chart of the selection of Twitter tweets for qualitative analysis.

2.3.3. Search Strategy 3: Academic Literature

Eligible articles were identified using explicit search strategies [115]. On 1 June 2019, we searched the academic databases EBSCO-ALL, an umbrella database that includes over 70 other databases, and Scopus, which incorporates the full Medline database collection with no time restrictions. These two databases were chosen because together they contain journals that cover a wide range of topics from areas relevant to answer the research questions. The two databases contain over 4.8 million articles published by journals that contain the terms “AI” OR “artificial intelligence” OR “machine learning” OR “IEEE” in the journal title and include journals focusing on societal aspects of AI such as the journal “AI and Society” and the proceedings of the AAAI/ACM Conference on AI, Ethics, and Society. We also searched the ACM Guide to Computing Literature, arXiv, and the IEEE Xplore digital databases.

- Strategy 3a: We searched the abstracts of EBSCO-ALL, Scopus, arXiv, IEEE Xplore, and ACM Guide to Computing Literature using the same search terms used for the newspapers and the same download criteria.

- Strategy 3b: We searched Scopus for the presence of the AI terms used for the newspapers in the academic journal title and the presence of the term “patient” and the 58 terms depicting disabled people we used for the newspapers in the abstracts of the academic articles; we used the same download criteria as mentioned under newspapers.

Altogether, after elimination of duplicates, we obtained n = 1540 unique academic abstracts for download (Figure 1).

Restricting the search to abstracts rather than full texts is an exclusion criterion that has been used in scoping reviews conducted by others [116]; we chose it to ensure that AI/ML and disabled people were the primary theme of the articles found. As an additional exclusion criterion, we only searched for scholarly peer-reviewed journals in EBSCO-ALL, while we searched for reviews, peer-reviewed articles, conference papers, and editorials in Scopus. The other databases were searched without exclusion limits. All databases were searched for the full time frame available.

2.4. Data Analysis

To answer the research questions, we (both authors) employed a) a descriptive quantitative analysis approach and b) a thematic qualitative content approach [117,118] using a combination of both manifest and latent content coding methods [118,119,120,121,122]. Manifest coding is used to examine “…the visible, surface, or obvious components of communication” [121], most specifically the frequency and location of a certain “recording unit” [120]. Latent coding, on the other hand, is used to assist with “…discovering underlying meanings of the words or the content” [117], including the discourses, settings, and tone reflected in the mentions of AI themes in relation to disabled people [117,121]. We employed the manifest coding approach on the level of the database searches (non-downloaded material) and on the level of the downloaded 1540 academic abstracts, 234 full-text newspaper articles, and 2879 tweets. We employed latent coding and a directed qualitative content analysis approach keeping in mind the research questions [117] on the level of the downloaded material. As to the coding procedure, we familiarized ourselves with the downloaded content [123]. We then independently identified and clustered the themes based on meaning, repetition, and the research questions [117,121].
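Manifest coding of this kind amounts to counting how many documents contain a given term. The following sketch shows one way such a count could be automated; it is an illustration only and not the procedure we carried out in ATLAS.ti. The function names and the three sample documents are hypothetical, matching is assumed to be case-insensitive and on whole words, and a trailing `*` is assumed to act as a truncation wildcard (as in “diagnos*”).

```python
import re


def term_pattern(term: str) -> re.Pattern:
    """Compile a whole-word, case-insensitive pattern for one search term.

    A trailing '*' is treated as a truncation wildcard (assumption).
    """
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)


def manifest_counts(documents, terms):
    """Return, for each term, the number of documents in which it occurs."""
    counts = {}
    for term in terms:
        pattern = term_pattern(term)
        counts[term] = sum(1 for doc in documents if pattern.search(doc))
    return counts


# Hypothetical sample documents, for illustration only.
SAMPLE_DOCUMENTS = [
    "AI supports diagnosis and patient care.",
    "Machine learning helps diagnose patients early.",
    "A health app uses AI for blind people.",
]

counts = manifest_counts(SAMPLE_DOCUMENTS, ["patient", "diagnos*", "health"])
print(counts)
```

Under whole-word matching, “patients” is not counted as a hit for “patient” unless the term is written with a wildcard; whether plural and compound forms should count is a coding decision that has to be fixed before counting.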

2.5. Trustworthiness Measures

Trustworthiness measures include confirmability, credibility, dependability, and transferability [124,125,126]. The two authors obtained identical hit counts for terms on the level of the databases and the downloaded material. Differences in codes and theme suggestions for the qualitative data using latent coding were few; they were discussed between the two authors, and codes were revised as needed. Confirmability is evident in the audit trail made possible by using the Memo and coding functions within ATLAS.ti 8™. As for transferability, our methods description gives all the required information for others to decide whether they want to apply our keyword searches on other data sources such as grey literature, or AI and machine learning literature in other languages, or whether they want to perform more in-depth latent coding based on our manifest coding.

2.6. Limitation

Our findings cannot be generalized to the whole academic literature, non-academic literature, or non-English literature. However, our findings allow for conclusions to be made within the parameters of the searches. Our newspaper results cannot be generalized to newspapers in general or newspapers in Canada. Our English language Twitter results cannot be generalized to non-English Twitter results or results one might obtain in other countries or with other social media platforms.

3. Results

The results are divided into four parts: In part 1, we give quantitative data from our first search stage (non-downloaded stage) and second stage (downloaded material) on some terms used for disabled people and the focus of the coverage. In parts 2–4, we present the results of the content analysis of the downloaded material. In part 2 we focus on the tone of the AI/ML coverage. In part 3, we focus on the role, identity, and stake narrative of AI/ML on disabled people and in part 4 we focus on the presence of the terms “social good” and “for good”.

3.1. Part 1: Classification of Disabled People and Focus of Coverage

How a disabled person is classified often sets the stage for what a discourse focuses on. In the first step, we searched for three terms (patient, disabled people, people with disabilities) in academic literature, newspapers, and Twitter tweets.

In all three sources, the term “patient” returned roughly 20 times as many hits, or more, as the terms “disabled people” and “people with disabilities” together. With the term “patient”, we obtained 23,990 academic hits and 6154 newspaper hits. With the terms “disabled people” and “people with disabilities” together, we obtained 1258 academic hits and 214 newspaper hits.

As to Twitter tweets, we found 2879 hits for the terms “disabled people” and “people with disabilities” from the beginning of Twitter until 17 August 2018, whereas the term “patient” generated 2700 hits from 1–17 August 2018 alone (an all-time hit count was not obtained for “patient”). The terms “disabled people” and “people with disabilities” generated 119 hits for that time frame.

The vastly higher numbers for the term “patient” indicate that health is a major focus for AI/ML discourse covering disabled people in the academic literature, newspapers, and Twitter tweets.
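The ratios behind this comparison can be recomputed from the hit counts reported in this section. The short sketch below does only that arithmetic; the variable names are ours, and the Twitter figures refer to the 1–17 August 2018 window, the only period for which both counts are available.

```python
# Hit counts as reported in the text: (hits for "patient",
# hits for "disabled people" and "people with disabilities" together).
HIT_COUNTS = {
    "academic": (23990, 1258),
    "newspapers": (6154, 214),
    "twitter_1_to_17_aug_2018": (2700, 119),
}

# Ratio of "patient" hits to disability-term hits for each source.
ratios = {
    source: patient_hits / disability_hits
    for source, (patient_hits, disability_hits) in HIT_COUNTS.items()
}

for source, ratio in ratios.items():
    print(f"{source}: patient term returned {ratio:.1f} times as many hits")
```

Rounded to one decimal, the ratios are about 19.1 for the academic literature, 28.8 for newspapers, and 22.7 for the Twitter window.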

In a second step we analyzed the downloaded 1540 academic abstracts, 234 full-text newspaper articles, and 2879 tweets obtained by using terms related to disabled people excluding the term “patient” (Figure 1 and Figure 2), for health-related content (other content is dealt with in another section).

Within the 1540 academic abstracts, the following health-linked terms were mentioned: “health” (254 abstracts), “patient” (167), “therapy” (34), “rehabilitation” (171), “care” (220), “medical” (62), “clinical” (45), “treatment” (33), “disease” (41), “disorder” (44), “healthy” (26), “diagnos*” (36), “mental health” (17), and “healthcare”/“health care” (67).

As to the newspapers, many of the health-related terms appeared frequently in the 234 newspaper articles (“health” was mentioned 720 times, “care” 790, “healthcare”/“health care” 173, “patient” 110, and “disease” 88). However, many of these hits were false positives: most did not relate to content that covered disabled people, and fewer still were linked to disabled people in relation to AI/ML. We found five articles that covered the terms “healthcare”/“health care” in relation to disabled people and AI/ML, three for “rehabilitation”, two for “care”, and one article each for “treatment”, “therapy”, “disease”, and “disorder”.

Within the 2879 Twitter tweets, the following health-linked terms were mentioned: “health” (117 times), “patient” (2), “therapy” (4), “rehabilitation” (2), “care” (56), “medical” (5), “clinical” (0), “treatment” (0), “disease” (1), “disorder” (0), “healthy” (1), “diagnos*” (0), “mental health” (3), and “healthcare”/“health care” (12).

The findings suggest that health is still a major focus even in articles downloaded based on terms related to disabled people excluding the term “patient”.

3.2. Part 2: Tone of Coverage

The tone in the downloaded content from all three sources was predominantly techno-optimistic. We found no content covering the negative effects of AI/ML use by society on disabled people or negative effects of autonomous AI/ML on disabled people in the academic literature and newspapers, and little in tweets. There are many terms, such as “ethic”, “risk”, “challenge”, “barrier”, “problem”, and “negative”, that have the potential to present a differentiated picture of what impact AI/ML advancements could have for disabled people, but they were not used to convey existing or potentially problematic societal issues disabled people might face. Other terms that could also be used to cover such issues were hardly present, such as “justice” (3), “equity” (2), and “equality” (4).

3.2.1. Academic Literature

Most abstracts followed a techno-optimistic narrative, for example “During the last decades, people with disabilities have gained access to Human-Computer Interfaces (HCI); with a resultant impact on their societal inclusion and participation possibilities, standard HCI must therefore be made with care to avoid a possible reduction in this accessibility” [127] (p. 247). The term “negative” was not used to indicate an impact of AI/ML on disabled people. “Challenge” was linked to the use of products to compensate for a ‘bodily deficiency’ [128] but not to indicate societal changes enabled by AI products and processes that might pose challenges for disabled people. “At risk” was used to indicate medical and social consequences linked to the ‘disability’ [129] or “at risk” to not have access to a product [130]. “At risk” was not used within the context of disabled people being “at risk” of AI related products and processes. “Barrier” was used in the sense of not having access to the product [131] or that technology eliminates barriers [132], not that AI/ML generates societal or other negative barriers for disabled people. The focus of the term “problem” was on products helping to solve problems disabled people face due to their ‘disability’ [133], and access to a new product was flagged as a problem. “Problem” was not used to indicate that AI/ML generates societal problems for disabled people. “Ethics” was only mentioned in four academic abstracts. In the first abstract, authors argued that ethical issues are often not covered if the focus is on the consumer angle [134]. According to the second abstract of a paper that focused on the very issue of how ethics are covered in relation to disabled people and AI/ML, the conclusion was that very few articles exist [1]. In the third abstract, the authors suggested that ethical problems appear when hearing computer scientists work on Sign Languages (SL) used by the deaf community [135]. 
The fourth abstract, which focused on AI applied to robots for children with disabilities, acknowledged that there are ethical considerations around data needed by AI algorithms [136] without mentioning them.

3.2.2. Newspapers

A techno-optimistic tone was present throughout all newspaper coverage. To give one example, “companies like Microsoft and Google try to harness the power of artificial intelligence to make life easier for people with disabilities” [137] (p. B2). Terms such as “risk”, “challenge”, “barrier”, “problem”, and “negative” were not linked to disabled people. “Ethics” was mentioned once, in an article that highlighted the ethical issue of whether to use invasive BCI or wait for non-invasive versions, although the article is not clear whether this was about non-disabled people [138]. If the focus was not on disabled people, articles often mentioned negative aspects of AI/ML such as Stephen Hawking warning about AI [139]. Many articles covered job loss by non-disabled people, for example “While numbers can vary wildly, one analysis says automation, robots and artificial intelligence (AI) have the potential to wipe out nearly 50 per cent of jobs around the globe over the next decade or two” [140] (p. A6). Not one article covered the threat of AI/ML to disabled people such as in relation to job situations.

3.2.3. Twitter

Within the 2879 tweets, the coverage was overwhelmingly techno-optimistic. Common phrases included “Empower people with disabilities”, n = 439; “AI to help people with disabilities”, n = 414; “help disabled people”, n = 268; “AI to empower people with disabilities”, n = 248; “Machine Learning Opens Up New Ways to Help Disabled People”, n = 170; “Artificial Intelligence Poised to Improve Lives of People With Disabilities”, n = 136; “AI can improve tech for people with disabilities”, n = 74; and “AI can be a game changer for people with disabilities”, n = 14. There were n = 1739 tweets linked to the accessibility initiative of Microsoft using wording such as “AI can do more for people with disabilities” and “Microsoft is launching a $25 million initiative”, finishing the sentence with various versions of “to use Artificial Intelligence (AI) to build better technology for people with disabilities.”

The term “ethics” was mentioned 10 times; seven of which did not mention ethics explicitly in relation to AI and disabled people. One indicated that ethics needs to be tackled [141]. Two tweets mentioned actual ethical issues [142,143]. As to “barrier” in 18 tweets, 16 saw AI enabling technology to break down barriers. The term was used once to indicate newly generated problems for disabled people [144]. “Challenge” was present in 49 tweets of which all were in regard to AI taking on the challenges disabled people face, such as “AI to help people with disabilities deal with challenges” [145]. “Risk” was mentioned eight times with three seeing risks of more inequity for disabled people. The term “problem” was used in 11 tweets, six of which indicated the problem of AI use causing problems for disabled people such as problematic use of an algorithm [146,147,148], problems around suicide [149], personality tests [150], and job hiring [151].

3.3. Part 3: Role, Identity, and Stake Narrative

In the content downloaded from all sources, the data we found engaged with disabled people predominantly as therapeutic and non-therapeutic users.

Within the 1540 academic abstracts, the term “user” was employed 1643 times and the term “consumer” 29 times. Linked to the user angle was the presence of terms such as “design” (1141), “access” (1756), “accessibility” (803), and “usability” (195). Within the 1141 times the term “design” was used, all but eight focused on products envisioned specifically for disabled people. Of these eight, one gave a general overview of design for all and the convention on the rights of persons with disabilities [152]; one covered the advancement on “access for all” for a part of Germany [153]; one was a review of social computing (SC) for social inclusion [154]; one made the case of access issues with the Prosperity4all platform [155]; one was a review of ICT and emergency management research [156]; and one was about urban design education [157].

Within the 234 full-text newspaper articles, the term “user” was mentioned 91 times but only 36 times in relation to disabled people and three times in relation to disabled people and AI/ML (AI making hearing aids better once and AI and robotics, twice). The term “consumer” was mentioned 41 times but only four times in relation to disabled people and not once in relation to disabled people and AI/ML. The term “design*” was mentioned 33 times in relation to disabled people and two times in relation to disabled people and AI/ML, one reporting on an autonomous homecare bot and one mentioning “AI for inclusive design”. “Access*” was mentioned 21 times in relation to disabled people; twice in conjunction with disabled people and AI covering the Microsoft AI for accessibility initiative and an accessibility sport hub chatbot that finds accessible sport programs and resources for disabled people.

Within the 2879 Twitter tweets, the term “user” was employed 17 times and the term “consumer” seven times. Linked to the user angle was the presence of terms such as “design” (161), “access” (989), “accessibility” (672), and “usability” (3).

In all sources, we did not find any discussions linked to AI/ML governance involving disabled people or disabled people as knowledge producers (outside of the consumer angle and being involved in development of AI/ML as consumers) (for tweet examples see [158,159,160]).

Two tweets questioned the helping narrative [161,162]. We did not find any engagement with the potential negative impacts of AI/ML use by members of society and autonomous AI/ML action for disabled people.

3.4. Part 4: Mentioning of “Social Good” or “for Good”

The term “social good” was mentioned once in the newspaper data covering Google’s initiative "AI for Social Good" and mentioning military and job losses as negative consequences; however, disabled people were not mentioned [163]. “For good” was mentioned twice. One article stated “Microsoft has committed $115 million to an "AI for Good" initiative that provides grants to organizations harnessing AI for humanitarian, accessibility and environmental projects” [163] (p. A47). The second article focused on the “Award for good”, which simply stated that AI has the possibility to improve the life of disabled people [164]. The phrases “social good” or “for good” were not mentioned in the academic abstracts or Twitter tweets downloaded.

4. Discussion

Our scoping review revealed that a) the term “patient” was used 20 times more than the terms “disabled people” and “people with disabilities” together; b) the tone of coverage was mostly techno-optimistic; c) the main role, identity, and stake narratives surrounding disabled people reflected the roles and identities of therapeutic and non-therapeutic users of AI/ML advanced products and processes and stakes linked to fulfilling these roles and identities; d) content related to AI/ML causing social problems for disabled people was nearly absent (beyond the need to actually access AI/ML related technologies or processes); e) discussions around disabled people being involved in, or impacted by, AI/ML ethics and governance discourses were absent; and f) content around “AI for good” and “AI for social good” in relation to disabled people was absent. These findings were evident for the academic abstracts, full-text newspaper articles, and Twitter tweets. In the remainder of the discussion section, we discuss our findings in relation to the four parts of the results section.

4.1. Part 1: Classification of Disabled People and Focus of Coverage

Our study showed that the term “patient” was present at least 20 times more than the terms “disabled people” and “people with disabilities” together in all the material used suggesting that AI/ML is much more discussed around the role and identity of the “patient” and what is at stake for “patients” than in relation to the terms “disabled people” or “people with disabilities”. Our study also showed that health is more of a focus in relation to disabled people than social issues experienced by disabled people in the material covered. These findings are problematic for disabled people. Objective information, good decision making, resource allocation, innovation, diffusion of new technologies [165], and functionality of the health care system [166] are some stakes identified for patients. However, stakes such as objective information, good decision making, resource allocation, innovation, and diffusion of new technologies will be different outside of the “patient” arena namely in the arena of the social life of disabled people. Furthermore, there are many other issues at stake for disabled people that are outside the health and healthcare arena, as evident by the many issues beyond health and healthcare flagged as problematic for disabled people in the UN Convention on the Rights of Persons with Disabilities [102]. Many of these issues are already or will be impacted by advancements in AI/ML, such as employment.

Whether AI/ML is discussed with a health or non-health focus and patient versus non-patient focus in relation to disabled people is one factor that influences how AI/ML is discussed and what is said or not said but could have been said [50,51,52,53]. A focus on health and content linked to the term “patient” would fit with the role and identity narrative of disabled people as therapeutic users and ‘disability’/‘impairment’ as a diagnostic target. The possible role and identity of disabled people as knowledge producers and consumers would be linked to the topics of health and healthcare and not societal issues disabled people face due to AI/ML use by others and the increasing appearance of autonomous AI. Furthermore, if disabled people were involved as influencers of, and knowledge producers for, AI/ML governance and ethics discourses, a role and identity that focuses on patient, health, and healthcare would lead to different interventions and contributions by disabled people, and would involve different groups of disabled people, than a role and identity that focuses on being negatively impacted as members of society by AI/ML use by others and the increasing appearance of autonomous AI. Indeed, a similar difference of focus and involvement of groups of disabled people based on their identity can be observed in discussions around anti-genetic discrimination laws [60].

That the literature covering AI/ML uses the term “patient” more frequently than the terms “disabled people” or “people with disabilities” is similar to many other technology discussions such as brain computer interfaces [80] or social robotics [58]. However, such bias in focus has an impact on AI/ML use not present in relation to other technologies. Machine learning (ML) is centered around the goal of making artificial machines learn without supervision. However, what the artificial machine learns depends on the data it obtains. Uneven data mean biased judgments. Amazon recently stopped their experiment of using AI instead of a human to deal with hiring procedures because the AI driven hiring process was too biased towards males [167]. Given the lopsided quantity of clinical/medical/health versus non-medical/clinical/health role, identity, and stake narratives related to disabled people present in the literature, one can predict that AI technology will learn a biased picture of disabled people and will not learn about many of the problems that AI/ML might cause for disabled people.

According to role theory, how one is portrayed impacts the role one is to have [62,63,64,65]. As such, one can predict that AI/ML will see certain roles (therapeutic and non-therapeutic user) as applicable to disabled people but not others (influencer of ethics and governance of AI/ML discussions). Role expectations of oneself are impacted by the role expectations others have of oneself [66]. This correlation is linked so far to human beings acting as the other, whereby one can debate with the other human being, at least in principle, if one does not agree. However, if the other is an autonomous AI, disabled people are disempowered from contesting the other, as one cannot argue with an autonomous AI entity.

According to identity theory, the perception of ‘self’ is influenced by the role one occupies in the social world [67]. As such, if the role within the AI/ML social world disabled people occupy continues to be mostly the role of patient and therapeutic and non-therapeutic user, it will bias the perception of self to move towards such patient and therapeutic and non-therapeutic user identity and make the exhibition of other identities difficult and problematic. Indeed, disabled people in an open forum discussion on sustainability stated that the medical role of disabled people predominantly present in many discourses hinders the involvement of disabled people in policy discussions [168,169]. In that consultation many demands were flagged in relation to academics [168]. Although the focus was on sustainable development these demands are also applicable to AI/ML discourses:

“[w]ork closely with all other stakeholders in the area and undertake research which can provide an evidence base for addressing relevant policy and practice challenges”;

“should undertake research on relevant topics to increase knowledge and understanding of the CRPD and the human rights-based approach to disability, and to develop tools for development programming and planning”;

“monitor CRPD”;

“provide evidence for effective inclusive practices in development/research”;

“to research, publish and interrogate reliable data on disability and ensure that it is disseminated to inform policy and programs and the appropriate levels”;

“to conduct action research to highlight and develop efficient tools and methods to accelerate disability-inclusive policies and practices”;

“teach universal design”;

“capacity building and awareness-raising throughout society” [168] (pp. 4162–4163).

One can predict that some demands, namely to “include disability as a topic in relevant study courses” and to “develop, organize and monitor specific study courses” [168] (pp. 4162–4163), are not met by the current AI/ML discourse although we did not investigate curricula content in our study.

Our data suggest that many of these roles and actions were not met in the AI/ML discourse. It is not enough to discuss disabled people as therapeutic or non-therapeutic users of AI/ML products. These aspects are certainly important, but disabled people have more at stake than access to AI/ML influenced products and processes, and role and identity must move beyond understanding disabled people only as therapeutic and non-therapeutic users of AI/ML influenced products and processes.

4.2. Parts 2 and 3: Tone of Coverage and Role, Identity, and Stake Narrative

The tone of coverage is another factor that influences how AI/ML is discussed and what is said or not said but could have been said [50,51,52,53]. We found that academic abstracts, newspaper articles, and Twitter tweets mostly exhibited a techno-optimistic tone. Situations such as where disabled people are not direct users but are impacted by bad design of autonomous AI, as outlined in an example of a sidewalk robot [170], were not present. This fits with the techno-optimistic focus of the literature covered.

Furthermore, all sources engaged in a techno-optimistic way with disabled people in their roles and identities as therapeutic and non-therapeutic users and the stakes linked to these roles and identities. The mostly techno-optimistic tone and the limited role, identity, and stake narrative come with consequences.

4.2.1. The Issue of Techno-Optimism

The report “Ethical and societal implications of algorithms, data, and artificial intelligence: a roadmap for research” [34] lists the following as essential research topics: economic impact, inclusion, and equality; AI, labor, and the economy; governance and accountability; social and societal influences of AI; AI morality and values; AI and “social good”; and managing AI risk. All these topics should include a focus on the negative impact of AI/ML on disabled people. However, our data suggest that these topics are not researched with disabled people in mind. Indeed, one would not cover these topics within a techno-optimistic tone. The same is true for the many impacts and principles listed in “appendix 2” of the same report [34]. Most of these impacts and principles are relevant to disabled people and, as such, should also be dealt with in relation to disabled people but cannot be if the coverage is predominantly techno-optimistic, as we found.

The same report acknowledges that “different publics concern themselves with different problems and have different perspectives on the same issues” [34] (p. 56) and that technology can be a threat or an opportunity [34]. However, different publics also have different issues at stake even if they work on the same problem. For example, there is increasing literature indicating that many disabled people feel left out of, and not taken into account in, climate change discussions; although disabled people also care about the environment and climate change, the discourse often instrumentalizes disabled people (for example, by using a medical identity of ‘disability’ [171]) or does not take into account the impact of demanded climate change actions [172] on disabled people. The AI/ML discourse in all three sources we covered does not come close to what is needed to understand the issues disabled people already face and increasingly will face in relation to AI/ML advancements.

Justice is mentioned in many documents linked to AI governance [21,22,23,24,25] as is solidarity [23,25] and equity or equality [23,25,173] demanding a differentiated engagement with disabled people beyond the techno-optimistic tone we found in our study.

Various countries have AI strategies [19,20]. However, of the 26 strategies that are listed [19,20] only five mention disabled people, whereby the rest is based on a techno-optimistic view of AI/ML and disabled people [174,175,176,177,178,179].

Various AI strategies and reports mention the media [177,179,180,181], although none mentioned media in relation to disabled people. Our findings suggest that readers of the Canadian newspapers and AI tweets covered will rarely be triggered to think about inappropriate use or negative consequences of AI/ML in all their forms for disabled people. In a UK report, it is stated that many AI researchers and witnesses connected to AI developments felt that the public had too negative a view of AI and its implications, and that more positive coverage was needed [179] (for the same point see also the New Zealand report [180]). Our findings do not support these views, given the techno-optimistic tone of coverage we found. If the AI researchers and witnesses connected with AI development are correct, then there is a two-tiered system of reporting on AI: one related to non-disabled people and one in relation to disabled people. Such a hierarchy is not surprising. A recent study looking at the coverage of robotics in academic literature and newspapers made the point that many discussions exist surrounding the negative impact of robotics on the employment situation of non-disabled people, and the study also revealed that coverage of the impacts of robotics on the employment situation of disabled people was highly techno-optimistic [182].

4.2.2. Linking Techno-Optimism to the Role, Identity, and Stake Narrative

A techno-optimistic tone facilitates the role and identity of disabled people as potential therapeutic and non-therapeutic users, both of which could be seen as consumer identities and a stake narrative that is linked to the user angle. As much as the therapeutic and non-therapeutic user role and identity is important, there is more to the relationship between AI/ML and disabled people. Indeed, the research topics, principles, and impacts mentioned in a report by Whittlestone and co-workers called “Ethical and societal implications of algorithms, data, and artificial intelligence: a roadmap for research” [34] cannot be dealt with if the role, identity, and stake narratives linked to disabled people are purely from a user angle. It ignores the identity problems that disabled people have flagged for so long. The consumer identity is engaged with outside [183] and in relation to disabled people [184]. Within the techno-optimistic coverage, the consumer identity diminishes disabled people to being cheerleaders of techno-advancements with the only problem being the lack of access to a given technology and technology consumption being the solution to the problems disabled people face. A consumer identity, especially in conjunction with a techno-optimistic narrative, is not enough to prevent the problems AI/ML might pose for disabled people. It might work for products such as webpages and computers and for therapeutics, but it does not work for societal problems disabled people might face due to AI/ML advancements, such as war and conflict or changing occupational landscapes and social and societal influences of AI/ML in general.

If oneself and others see disabled people only within a consumer identity, then this will limit what other roles oneself and others see disabled people as occupying in relation to AI/ML discourses. It furthermore influences the scope of a given role such as knowledge producer or influencer of AI/ML ethics and governance discourses. It also entails the danger that others instrumentalize disabled people of the consumer flavor to question disabled people who are looking at issues beyond consumerism. As such, AI/ML discourses that focus so exclusively on therapeutic and non-therapeutic consumer identities influence intergroup relationships [68,69] between disabled and non-disabled people within the AI/ML discourses and between disability groups and disabled people exhibiting different identities, roles, and stakes.

If the consumer identity is predominantly used, it will, in accordance with stakeholder theory, impact stakeholder salience and stakeholder identification [72]. It will impact for which aspects of AI/ML advancements disabled people see themselves as stakeholders or are seen by others in AI/ML discourses as stakeholders and what is seen as being at stake for disabled people.

Considering Mitchell’s three main factors identifying a stakeholder: “(1) the stakeholder’s power to influence the firm, (2) the legitimacy of the stakeholder’s relationship with the firm, and (3) the urgency of the stakeholder’s claim on the firm” [72] (p. 853), the techno-optimistic tone, consumer role, and consumer identity narrative raise the issue: if the only stakeholder power of disabled people is linked to being consumers, can they only influence AI/ML discussions linked to the consumer angle? Social, ethics, and governance of AI/ML discussions might not be seen as needing to involve disabled people as stakeholders, and disabled people might not be seen as being at risk; yet, under Clarkson’s definition of an involuntary stakeholder [74], disabled people are stakeholders who are put at risk as a result of the actions of AI/ML actors.

4.2.3. Techno-Optimism, User Narrative, and the Issue of Governance

Public engagement is seen as a necessary component for AI advancements [43,45,46] and AI governance. Many problems have been highlighted that diminish the opportunity for the involvement of disabled people in public policy and governance engagements [49,60] including the very medical imagery of disabled people [168,169]. Our findings suggest that the overall role expectation and identity of being therapeutic or non-therapeutic users and the techno-optimistic tone are two other factors that are barriers to the involvement of disabled people in the governance and ethics debate around the societal aspects of AI/ML.

In 2018, the Canadian government started a “national consultation to reinvigorate Canada’s support for science and to position Canada as a global leader in research excellence” [185]. One of the three main consultation areas is “Strengthen equity, diversity and inclusion in research”, and one pillar of that strategy is “Equitable participation: Increase participation of researchers from underrepresented groups in the research enterprise” [185]. Disabled people are an underrepresented group in the research enterprise. To entice disabled people to perform research, they need to be recognized in a broader role and identity narrative than being the user of AI/ML products and processes. Similarly, universities and funders need to be enticed to perform research that covers the breadth of impact of AI/ML on disabled people.

Our data suggest that although the Canadian AI strategy has the goal of being a globally recognized leader in the economic, ethical, policy, and legal implications of AI [186], so far, they do not indicate that they think disabled people are missing in AI/ML discourse, which might be a reflection of the dominant role and identity of ‘user’ linked to disabled people for which our data suggest disabled people are involved. Various articles outline ways to identify stakeholder groups [75]; however, given the main identity and role of disabled people as users, our data suggest that AI/ML discourses are not identifying disabled people as stakeholders outside of the user label.

4.3. The Social Good Discourse

Given the lack of presence in the literature covered, our data suggest the need for more engagement by the “AI for social good” actors with disabled people. Our data also indicate an opportunity for conceptual work on the meaning of “social good” and the conflicts between social groups in relation to “social good”. Furthermore, the AI/ML coverage in relation to disabled people in general falls short given discussions within the “AI for social good” literature.

Cowls et al. argued that the following seven factors are essential for AI for social good: “(1) falsifiability and incremental deployment; (2) safeguards against the manipulation of predictors; (3) receiver-contextualised intervention; (4) receiver-contextualised explanation and transparent purposes; (5) privacy protection and data subject consent; (6) situational fairness; and (7) human-friendly semanticisation” [31] (p. 3). If these seven factors are to work with disabled people, one needs an intricate understanding of disabled people and their situation, which is not provided within the literature covered. Furthermore, one needs to engage with disabled people outside of the therapeutic and non-therapeutic user role.

For example, Cowls et al., under situational fairness, concluded, “6) AI4SG designers should remove from relevant datasets variables and proxies that are irrelevant to an outcome, except when their inclusion supports inclusivity, safety, or other ethical imperatives” [31] (p. 17). Would people even know about the impact on inclusivity or safety in relation to disabled people if disabled people are not systemically engaged? For example, the focus of the 2018 “AI for Good Summit” was to bring stakeholders together to tackle the 17 sustainable development goals (SDGs). However, did they think about how AI could be used in a detrimental way by enabling problematic SDG goals, such as the one evident in paragraph number 26:

“We are committed to the prevention and treatment of non-communicable diseases, including behavioural, developmental and neurological disorders, which constitute a major challenge for sustainable development” [187]? This goal is certainly questionable if looked at through a disability rights lens.

A recent Dagstuhl workshop on “AI for social good” produced 10 challenges and many topics one has to think about [35]. Nearly all the challenges and topics indicate that the AI/ML coverage we found is lacking and needs to improve. However, the workshop itself showed the same limited framework in covering disabled people only as therapeutic and non-therapeutic users [35] as we identified in our study. According to an IEEE document, respect for human rights as set out in the UN Convention on the Rights of Persons with Disabilities is an important goal of AI [23], which indicates that the gap we found in our study has to be filled in relation to and outside of the “AI for social good” focus.

5. Conclusions and Future Research

The findings of our study suggest that the role, identity, and stake narrative of disabled people in the AI/ML literature covered was limited. The patient and user roles and identities were the most frequently used, as were stakes in sync with these roles. The social impact of AI/ML on disabled people was not engaged with, and disabled people were only seen as knowledge producers in relation to usability of AI/ML products, but not in relation to direct or indirect societal impact of AI/ML use by others on disabled people. Ethical issues were not engaged with in relation to disabled people; AI governance and public participation in AI/ML policy development was not a topic linked to disabled people; and the concepts of “social good” and “for good” were not engaged with in relation to AI/ML and disabled people.

Furthermore, our study found only minor differences in the coverage of AI/ML in relation to disabled people between the academic abstracts, newspaper articles, and Twitter tweets covered, suggesting a broader systemic problem and not a problem with any one source.

Given that the roles one expects of oneself are impacted by the role expectations others have of oneself [66] and given that the perception of ‘self’ is influenced by the role one occupies in the social world [67], the role narrative we found in our data is disempowering for disabled people. Our findings suggest that the role and identity narrative of disabled people in relation to AI/ML must change in academic literature, newspaper articles, and Twitter tweets.

Our findings of a mostly techno-optimistic coverage and a limited role narrative around disabled people might explain why disabled people are covered so limitedly and one-sidedly, if at all, in the AI strategies of various governments. To rectify the problematic findings of our study, our data suggest that we need a systemic change in how one engages with the topic of AI/ML and disabled people. It is not just about any one group such as researchers or journalists having to broaden their focus of reporting on AI/ML and disabled people. Many academic and newspaper articles engage with the negative impact of AI/ML on non-disabled people. Indeed, in government reports covering AI/ML, it is stated that the coverage is too negative [179,180]. If this is the case, the question is, why is the situation so different if disabled people are covered? This discrepancy of tone of coverage based on whether one covers disabled people or non-disabled people is also evident in the robotics coverage (academic articles, newspapers) [182], and as such, our findings suggest a broader systemic problem. So how does one achieve a more diverse and realistic coverage so that disabled people do not only benefit from AI/ML advancements but also are not negatively impacted by AI/ML?

It is argued that “understanding how AI will impact society requires interdisciplinary research, especially for the social sciences and humanities to understand its lived impacts and our everyday understandings of new technology” [48] (see also [39]). This argument and our findings suggest that there is a need for scholars, including community-based scholars (community members doing the research) [188] and students, to focus on the impact of AI/ML on the lived situation of disabled people beyond the user and techno-optimistic angle. We must better understand why scholars covering the social aspects of disabled people have not engaged with the topics we found lacking. Another angle of investigation could be why disabled students are not acting as knowledge producers on the topics we found lacking. Based on a study that investigated the experience of disabled postsecondary students in postsecondary education [189], we suggest that the experience reported (feeling medicalized, hesitant to self-advocate, to try to fit in with the norm) might hinder disabled students from being knowledge producers in relation to governance, public engagement, and ethics in relation to AI/ML and disabled people. Studies that directly investigate the question of why the topics we found lacking were not engaged with are warranted. Given the Canadian government’s effort to diversify its research force [185], our findings suggest that interviewing people involved in this diversification effort on how to deal with our problematic findings would be useful. We see the Canadian government initiative as a possibility to deal with some of the systemic problems [49,189] that contribute to the problems we found in our study.

Given our findings, further interview studies are warranted with disabled people, AI/ML policy makers, AI/ML academics, AI/ML funders, people involved in AI/ML governance and AI/ML ethics discussions, and people involved in developing and executing AI/ML strategies in numerous countries, regarding their views on AI/ML and disabled people. Questions could focus on the discrepancy in the tone of coverage and the limited understanding of the roles of disabled people.

Social media, such as Twitter, has become increasingly influential [109,110,111]. As such, a better understanding of our Twitter results is needed in order to address the problems we found. For example, why was the coverage so overwhelmingly techno-optimistic and focused on disabled people as users, and why did so few tweets address the social impact of AI/ML on disabled people? Studies are warranted that interview disabled people about how to influence public perception of AI/ML, for example on Twitter, and that seek to explain why we found so few tweets indicating problems of AI/ML for disabled people.

Given the problematic findings for newspapers, which are a source of information still read by many people, studies that interview journalism students about their knowledge of AI/ML and disabled people should also be useful.

We also need studies that investigate the views of the “AI for good” and “AI for social good” community on disabled people, including what this community thinks about how AI/ML impacts disabled people. Studies are equally needed on what disabled people think about the concepts of “for good” and “for social good”, both in general and in relation to AI/ML.

Finally, we think that other review studies using non-English material and grey literature would be useful. Although we expect the main findings of our study to hold in other sources, data on sources beyond those we focused on are needed. Possibilities include how social media in China, newspapers in India, or academic literature in German-language journals cover disabled people and AI/ML.

Author Contributions

Conceptualization, A.L. and G.W.; methodology, A.L. and G.W.; formal analysis, A.L. and G.W.; investigation, A.L. and G.W.; data curation, A.L. and G.W.; writing—original draft preparation, A.L. and G.W.; writing—review and editing, A.L. and G.W.; supervision, G.W.; project administration, G.W.; funding acquisition, G.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Government of Canada, Canadian Institutes of Health Research, Institute of Neurosciences, Mental Health and Addiction ERN 155204 in cooperation with ERA-NET NEURON JTC 2017.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lillywhite, A.; Wolbring, G. Coverage of ethics within the artificial intelligence and machine learning academic literature: The case of disabled people. Assist. Technol. 2019, 1–7.

- Feng, R.; Badgeley, M.; Mocco, J.; Oermann, E.K. Deep learning guided stroke management: A review of clinical applications. J. NeuroInterventional Surg. 2017, 10, 358–362.

- Ilyasova, N.; Kupriyanov, A.; Paringer, R.; Kirsh, D. Particular Use of BIG DATA in Medical Diagnostic Tasks. Pattern Recognit. Image Anal. 2018, 28, 114–121.

- André, Q.; Carmon, Z.; Wertenbroch, K.; Crum, A.; Frank, D.; Goldstein, W.; Huber, J.; Van Boven, L.; Weber, B.; Yang, H. Consumer Choice and Autonomy in the Age of Artificial Intelligence and Big Data. Cust. Needs Solutions 2017, 5, 28–37.

- Deloria, R.; Lillywhite, A.; Villamil, V.; Wolbring, G. How research literature and media cover the role and image of disabled people in relation to artificial intelligence and neuro-research. Eubios J. Asian Int. Bioeth. 2019, 29, 169–182.

- Hassabis, D.; Kumaran, D.; Summerfield, C.; Botvinick, M. Neuroscience-Inspired Artificial Intelligence. Neuron 2017, 95, 245–258.

- Bell, A.J. Levels and loops: The future of artificial intelligence and neuroscience. Philos. Trans. R. Soc. B Biol. Sci. 1999, 354, 2013–2020.

- Lee, J. Brain–computer interfaces and dualism: A problem of brain, mind, and body. AI Soc. 2014, 31, 29–40.

- Cavazza, M.; Aranyi, G.; Charles, F. BCI Control of Heuristic Search Algorithms. Front. Aging Neurosci. 2017, 11, 225.

- Buttazzo, G. Artificial consciousness: Utopia or real possibility? Computer 2001, 34, 24–30.

- De Garis, H. Artificial Brains. Inf. Process. Med. Imaging 2007, 8, 159–174.

- Catherwood, P.; Finlay, D.; McLaughlin, J. Intelligent Subcutaneous Body Area Networks: Anticipating Implantable Devices. IEEE Technol. Soc. Mag. 2016, 35, 73–80.

- Meeuws, M.; Pascoal, D.; Bermejo, I.; Artaso, M.; De Ceulaer, G.; Govaerts, P. Computer-assisted CI fitting: Is the learning capacity of the intelligent agent FOX beneficial for speech understanding? Cochlear Implants Int. 2017, 18, 198–206.

- Wu, Y.-C.; Feng, J.-W. Development and Application of Artificial Neural Network. Wirel. Pers. Commun. 2017, 102, 1645–1656.

- Garden, H.; Winickoff, D. Issues in Neurotechnology Governance. Available online: https://doi.org/10.1787/18151965 (accessed on 26 January 2020).

- Crowson, M.G.; Lin, V.; Chen, J.M.; Chan, T.C.Y. Machine Learning and Cochlear Implantation—A Structured Review of Opportunities and Challenges. Otol. Neurotol. 2020, 41, e36–e45.

- Wangmo, T.; Lipps, M.; Kressig, R.W.; Ienca, M. Ethical concerns with the use of intelligent assistive technology: Findings from a qualitative study with professional stakeholders. BMC Med. Ethics 2019, 20, 1–11.

- Neto, J.S.D.O.; Silva, A.L.M.; Nakano, F.; Pérez-Álcazar, J.J.; Kofuji, S.T. When Wearable Computing Meets Smart Cities. In Smart Cities and Smart Spaces; IGI Global: Pennsylvania, PA, USA, 2019; pp. 1356–1376.

- Ding, J. Deciphering China’s AI Dream. Available online: https://www.fhi.ox.ac.uk/wp-content/uploads/Deciphering_Chinas_AI-Dream.pdf (accessed on 26 January 2020).

- Dutton, T. An Overview of National AI Strategies. Available online: https://medium.com/politics-ai/an-overview-of-national-ai-strategies-2a70ec6edfd (accessed on 26 January 2020).

- Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018, 28, 689–707.

- Asilomar and AI Conference Participants. Asilomar AI Principles Principles Developed in Conjunction with the 2017 Asilomar Conference. Available online: https://futureoflife.org/ai-principles/?cn-reloaded=1 (accessed on 26 January 2020).

- The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. Ethically Aligned Design: A Vision for Prioritizing Human Well-Being with Autonomous and Intelligent Systems (A/IS). Available online: http://standards.ieee.org/develop/indconn/ec/ead_v2.pdf (accessed on 26 January 2020).

- Participants in the Forum on the Socially Responsible Development of AI. Montreal Declaration for a Responsible Development of Artificial Intelligence. Available online: https://www.montrealdeclaration-responsibleai.com/the-declaration (accessed on 26 January 2020).

- European Group on Ethics in Science and New Technologies. J. Med. Ethics 1998, 24, 247.

- University of Southern California USC Center for Artificial Intelligence in Society. USC Center for Artificial Intelligence in Society: Mission Statement. Available online: https://www.cais.usc.edu/wp-content/uploads/2017/05/USC-Center-for-Artificial-Intelligence-in-Society-Mission-Statement.pdf (accessed on 26 January 2020).

- Lehman-Wilzig, S.N. Frankenstein unbound: Towards a legal definition of artificial intelligence. Futures 1981, 13, 442–457.

- Brundage, M.; Avin, S.; Clark, J.; Toner, H.; Eckersley, P.; Garfinkel, B.; Dafoe, A.; Scharre, P.; Zeitzoff, T.; Filar, B.; et al. The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation. Available online: https://img1.wsimg.com/blobby/go/3d82daa4-97fe-4096-9c6b-376b92c619de/downloads/1c6q2kc4v_50335.pdf (accessed on 26 January 2020).

- Smith, K.J. The AI community and the united nations: A missing global conversation and a closer look at social good. In Proceedings of the AAAI Spring Symposium—Technical Report, Palo Alto, CA, USA, 27–29 March 2017; pp. 95–100.

- Prasad, M. Back to the future: A framework for modelling altruistic intelligence explosions. In Proceedings of the AAAI Spring Symposium—Technical Report, Palo Alto, CA, USA, 27–29 March 2017; pp. 60–63.

- Cowls, J.; King, T.; Taddeo, M.; Floridi, L. Designing AI for Social Good: Seven Essential Factors. SSRN Electron. J. 2019, 1–21.

- Varshney, K.R.; Mojsilovic, A. Open Platforms for Artificial Intelligence for Social Good: Common Patterns as a Pathway to True Impact. Available online: https://aiforsocialgood.github.io/icml2019/accepted/track1/pdfs/39_aisg_icml2019.pdf (accessed on 26 January 2020).

- Ortega, A.; Otero, M.; Steinberg, F.; Andrés, F. Technology Can Help to Right Technology’s Social Wrongs: Elements for a New Social Compact for Digitalisation. Available online: https://t20japan.org/policy-brief-technology-help-right-technology-social-wrongs/ (accessed on 26 January 2020).

- Whittlestone, J.; Nyrup, R.; Alexandrova, A.; Dihal, K.; Cave, S. Ethical and Societal Implications of Algorithms, Data, and Artificial Intelligence: A Roadmap for Research. Available online: https://www.nuffieldfoundation.org/sites/default/files/files/Ethical-and-Societal-Implications-of-Data-and-AI-report-Nuffield-Foundat.pdf (accessed on 26 January 2020).

- Clopath, C.; De Winne, R.; Emtiyaz Khan, M.; Schaul, T. Report from Dagstuhl Seminar 19082, AI for the Social Good. Available online: http://drops.dagstuhl.de/opus/volltexte/2019/10862/ (accessed on 26 January 2020).

- Hager, G.D.; Drobnis, A.; Fang, F.; Ghani, R.; Greenwald, A.; Lyons, T.; Parkes, D.C.; Schultz, J.; Saria, S.; Smith, S.F.; et al. Artificial Intelligence for Social Good. Available online: https://cra.org/ccc/wp-content/uploads/sites/2/2016/04/AI-for-Social-Good-Workshop-Report.pdf (accessed on 26 January 2020).

- Berendt, B. AI for the Common Good?! Pitfalls, challenges, and ethics pen-testing. Paladyn J. Behav. Robot. 2019, 10, 44–65.

- Efremova, N.; West, D.; Zausaev, D. AI-Based Evaluation of the SDGs: The Case of Crop Detection With Earth Observation Data. SSRN Electron. J. 2019, 1–4.

- Canadian Institute for Advanced Research (CIFAR). AI & Society. Available online: https://www.cifar.ca/ai/ai-society (accessed on 26 January 2020).

- Gasser, U.; Almeida, V. A Layered Model for AI Governance. IEEE Internet Comput. 2017, 21, 58–62.

- Lauterbach, B.; Bonim, A. Artificial Intelligence: A Strategic Business and Governance Imperative. Available online: https://gecrisk.com/wp-content/uploads/2016/09/ALauterbach-ABonimeBlanc-Artificial-Intelligence-Governance-NACD-Sept-2016.pdf (accessed on 26 January 2020).

- Rahwan, I. Society-in-the-loop: Programming the algorithmic social contract. Ethics Inf. Technol. 2017, 20, 5–14.