1. Introduction

Spodoptera frugiperda, which belongs to the order Lepidoptera, family Noctuidae, and genus Spodoptera [1], is a transboundary migratory agricultural pest listed on the global alert system of the Food and Agriculture Organization (FAO) of the United Nations. Native to the tropical regions of the Americas, it has now spread to more than 100 countries across Africa, Asia, and beyond [2]. Adult S. frugiperda are capable of long-distance migration, often covering several hundred kilometers in a single flight, which makes them a primary driver of regional pest outbreaks. Under optimal conditions (25 °C), a single female can lay between 800 and 1800 eggs (1482 on average), with an egg hatching rate exceeding 95%. The resulting larval populations grow exponentially and feed voraciously, which can cause crop yield losses of 20–70%. Staple crops such as maize and rice are particularly vulnerable to these destructive infestations [3]. Monitoring adult S. frugiperda populations with pheromone traps plays a vital role in forecasting population dynamics, disrupting reproductive chains, and implementing integrated pest management strategies. In particular, accurate identification and counting of trapped adults provide the technical foundation for quantitative surveillance and precision control of this invasive pest.

However, field-based monitoring of S. frugiperda adults faces three critical technical bottlenecks. First, because S. frugiperda is morphologically similar to other pests (e.g., Mythimna separata), field surveys show that many farmers misidentify the species, leading to pesticide misuse and heightened ecological risks [4]. Second, mainstream monitoring methods have inherent limitations: pheromone traps exhibit high false detection rates because of interference from non-target insects, and both pheromone- and light-based monitoring techniques offer insufficient classification accuracy and low efficiency in field deployments [5,6]. Third, current AI-based recognition models rely heavily on standardized laboratory samples, whereas in the field the scales of trapped adults detach progressively as the insects struggle. The rate of scale loss increases linearly with trapping duration, and after 72 h the wing surface patterns are severely degraded, resulting in a significant decline in recognition accuracy [7].

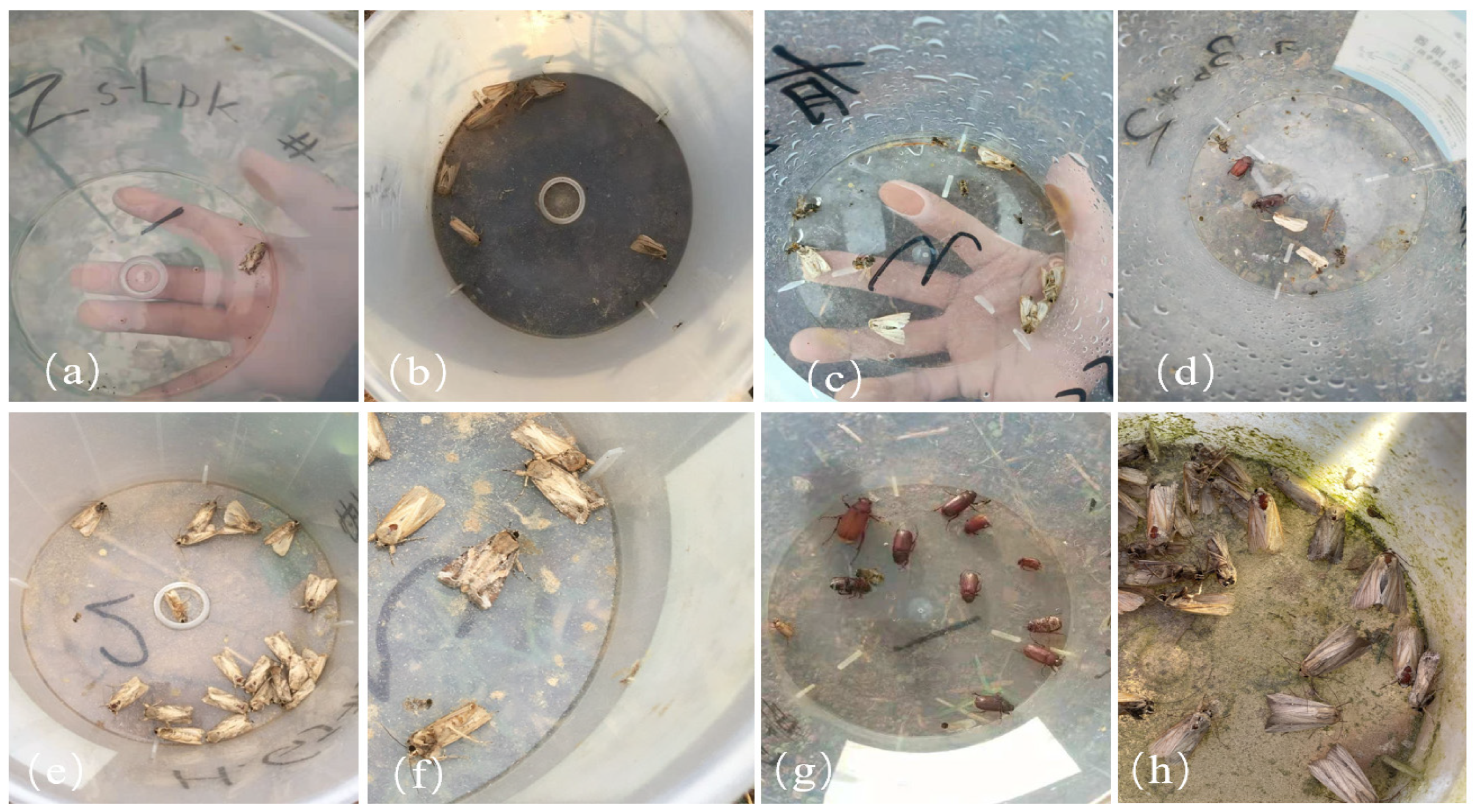

Field monitoring of S. frugiperda using pheromone traps presents multiple challenges. Firstly, the trapped adults often exhibit highly variable morphological features due to scale loss, and are frequently intermixed with a variety of non-target insect species. Secondly, the process of capturing images of trapped specimens within the collection buckets is subject to numerous uncontrolled variables. These include inconsistent camera models, leading to significant differences in image quality; uneven image resolution; uncontrollable lighting conditions; varying shooting distances (i.e., from the lens to the bottom of the bucket); diverse shooting angles; and complex, cluttered backgrounds. As a result, the collected images differ substantially in clarity and overall quality, complicating subsequent recognition tasks.

Traditional insect recognition methods have commonly combined classical machine learning classifiers such as Support Vector Machine (SVM) [8], Decision Tree (DT) [9], and Random Forest (RF) [10] with handcrafted feature extraction techniques such as the Gray-Level Co-occurrence Matrix (GLCM) [11], Histogram of Oriented Gradients (HOG) [12], and Local Binary Pattern (LBP) [13]. For instance, Larios et al. [14] used Haar features with an SVM classifier to identify stonefly larvae with high accuracy. Zhu Leqing et al. [15] extracted OpponentSIFT descriptors and color features from Lepidoptera specimens and classified them successfully with an SVM. Liu Deying et al. [16] binarized dorsal images of rice planthoppers and combined them with the grayscale images through logical AND operations to extract discriminative parameters, achieving a recognition accuracy above 90%. Xiao et al. [17] combined Scale-Invariant Feature Transform (SIFT) descriptors with a Bag-of-Features framework and classified vegetable pest images with an SVM, reaching an accuracy of 91.6% at an inference time of 0.39 s per image, which demonstrates suitability for real-time applications in precision agriculture. Bakkay et al. [18] proposed a multi-scale feature extraction approach based on HCS descriptors, coupled with an SVM, to detect and count moths in trap images with an accuracy of 95.8%.
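As a concrete illustration of one of the handcrafted descriptors listed above, the sketch below computes a symmetric, normalized GLCM for a single pixel offset and derives three common statistics from it (contrast, homogeneity, energy) using NumPy. It is a minimal sketch, not the implementation used in any of the cited studies; the function names, the horizontal offset (dx = 1, dy = 0), and the assumption that the image is already quantized to `levels` gray levels are illustrative choices. In a classical pipeline, such feature vectors would then be passed to a classifier such as an SVM.

```python
import numpy as np


def glcm(image, dx=1, dy=0, levels=8):
    """Symmetric, normalized gray-level co-occurrence matrix.

    Assumes `image` is a 2-D integer array already quantized to values in
    [0, levels) and that the offset (dx, dy) is non-negative.
    """
    img = np.asarray(image, dtype=np.int64)
    h, w = img.shape
    ref = img[: h - dy, : w - dx]  # reference pixels
    nbr = img[dy:, dx:]            # neighbours at offset (dy, dx)
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts += counts.T             # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """Contrast, homogeneity and energy of a normalized GLCM `p`."""
    i, j = np.indices(p.shape)
    diff2 = (i - j) ** 2
    return {
        "contrast": float(np.sum(p * diff2)),
        "homogeneity": float(np.sum(p / (1.0 + diff2))),
        "energy": float(np.sum(p ** 2)),
    }


if __name__ == "__main__":
    # Classic 4-level toy image often used to explain GLCMs.
    toy = np.array([[0, 0, 1, 1],
                    [0, 0, 1, 1],
                    [0, 2, 2, 2],
                    [2, 2, 3, 3]])
    print(glcm_features(glcm(toy, dx=1, dy=0, levels=4)))
```

In practice several offsets (e.g., 0°, 45°, 90°, 135°) are usually computed and their statistics concatenated or averaged to reduce orientation sensitivity.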

In 2006, Hinton et al. [19] introduced an unsupervised greedy layer-wise training algorithm for Deep Belief Networks (DBNs) [20], which eased the difficulty of training deep networks and significantly accelerated the advancement of the field. Watson et al. [21] developed an automated identification system for 35 species of Lepidoptera using Convolutional Neural Networks (CNNs), achieving an identification accuracy of 83% under natural field conditions. Proença et al. [22] applied YOLOv5 to identify green leafhoppers trapped on yellow sticky boards in vineyards, attaining a precision of 99.62% with an average inference time of approximately 2.5 s per image and thereby reducing manual labor costs. Zhang Yinsong et al. [23] enhanced the Faster R-CNN framework by replacing the VGG16 backbone with ResNet50 and replacing traditional non-maximum suppression (NMS) with Soft-NMS, achieving a detection accuracy of 90.7%. Li et al. [24] combined CNNs with NMS to locate and count rice aphids, obtaining 93% accuracy and an mAP of 0.885. Malathi et al. [25] proposed a tri-channel T-shaped deep CNN (T-CNN) for the rapid and effective identification of S. frugiperda. Feng et al. [26] developed a CNN-based method for detecting S. frugiperda on maize leaves and validated that a separable attention mechanism within ResNet50 enhances both accuracy and robustness. Zhang et al. [27] introduced MaizePestNet, a two-stage classification network that integrates Grad-CAM and knowledge distillation to identify 36 species of adult and larval maize field pests, achieving a precision of 93.85%. Although recent studies have notably improved the recognition accuracy for S. frugiperda, research on its monitoring remains limited. Despite the widespread use of pheromone trapping, few dedicated studies have addressed image-based identification of trap captures. Furthermore, the loss of wing scales after entrapment degrades key morphological features, which hinders feature extraction and reduces recognition accuracy. To address these challenges, a high-quality, field-based dataset that reflects real monitoring conditions is needed to improve the practical applicability of deep learning models for pest surveillance.
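Several of the detectors cited above rely on NMS to remove duplicate boxes, and Zhang Yinsong et al. [23] replaced it with Soft-NMS. The NumPy sketch below contrasts the two ideas: hard NMS discards any box whose IoU with a higher-scoring box exceeds a threshold, whereas Gaussian Soft-NMS only decays that box's score by a factor of exp(-IoU²/σ), which can help retain adjacent, overlapping insects in dense trap images. The IoU threshold of 0.5, σ = 0.5, the score floor, and the function names are illustrative assumptions, not the settings used in [23] or [24].

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def hard_nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Drop every remaining box that overlaps the current best too much.
        order = rest[iou(boxes[best], boxes[rest]) <= iou_thr]
    return keep


def soft_nms(boxes, scores, sigma=0.5, score_thr=0.001):
    """Gaussian Soft-NMS: decay overlapping scores instead of deleting boxes."""
    scores = scores.astype(np.float64).copy()
    remaining = list(range(len(scores)))
    keep = []
    while remaining:
        best = max(remaining, key=lambda k: scores[k])
        remaining.remove(best)
        if scores[best] < score_thr:
            break  # all other remaining scores are lower still
        keep.append(best)
        if remaining:
            idx = np.array(remaining)
            overlaps = iou(boxes[best], boxes[idx])
            scores[idx] *= np.exp(-(overlaps ** 2) / sigma)
    return keep, scores
```

For two strongly overlapping boxes, hard NMS keeps only the higher-scoring one, while Soft-NMS keeps both and leaves a reduced score on the second, so a final confidence threshold decides whether it is reported.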

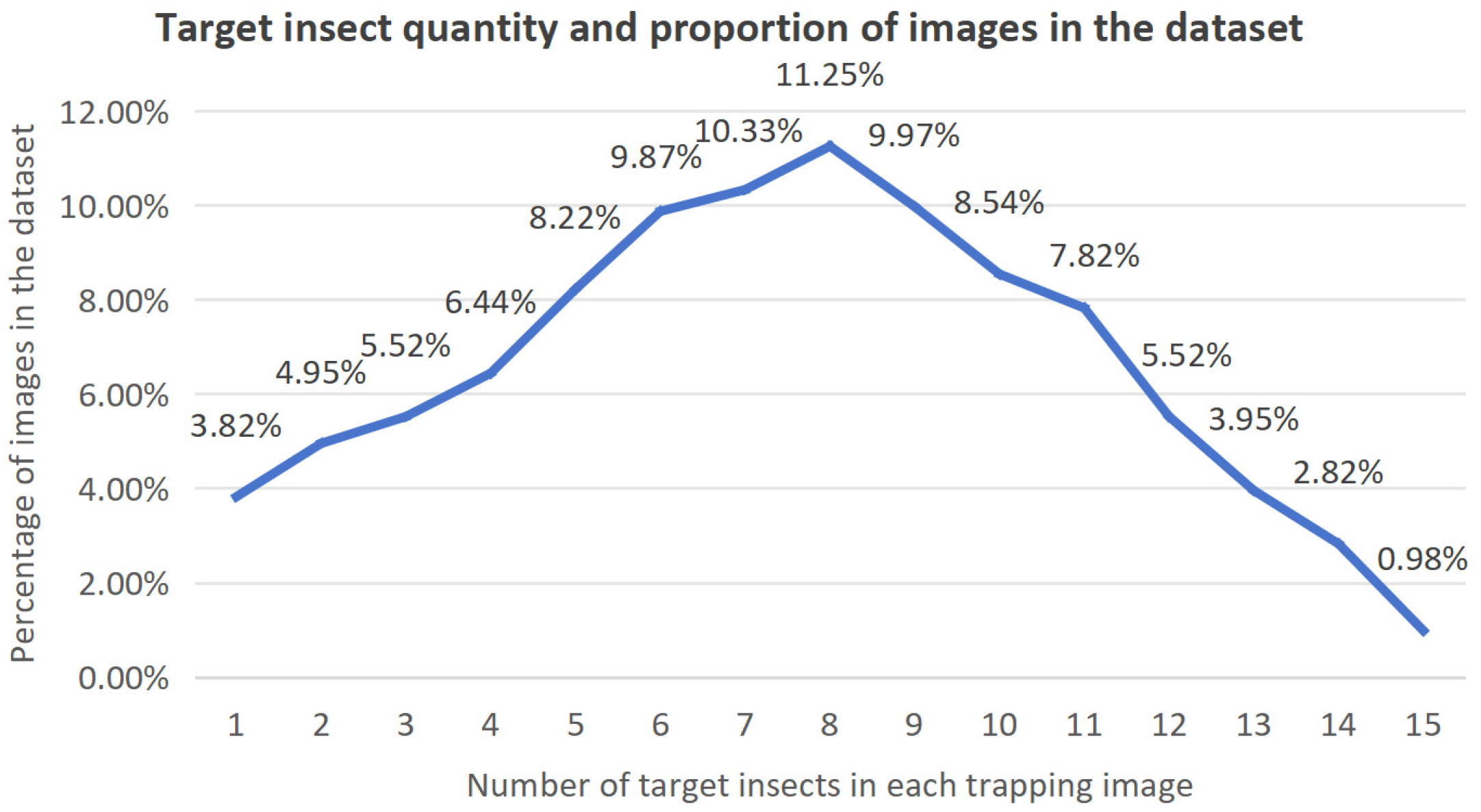

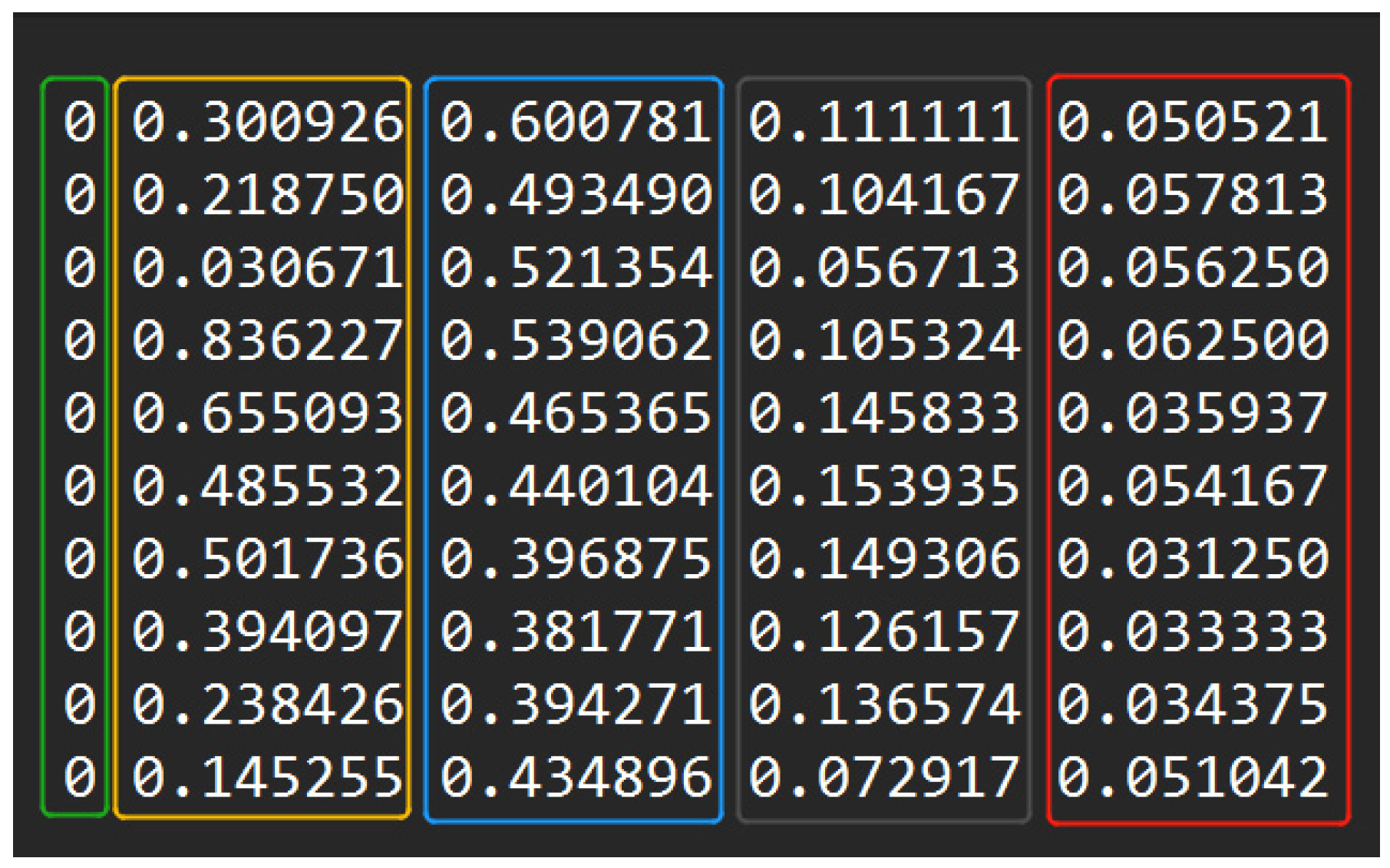

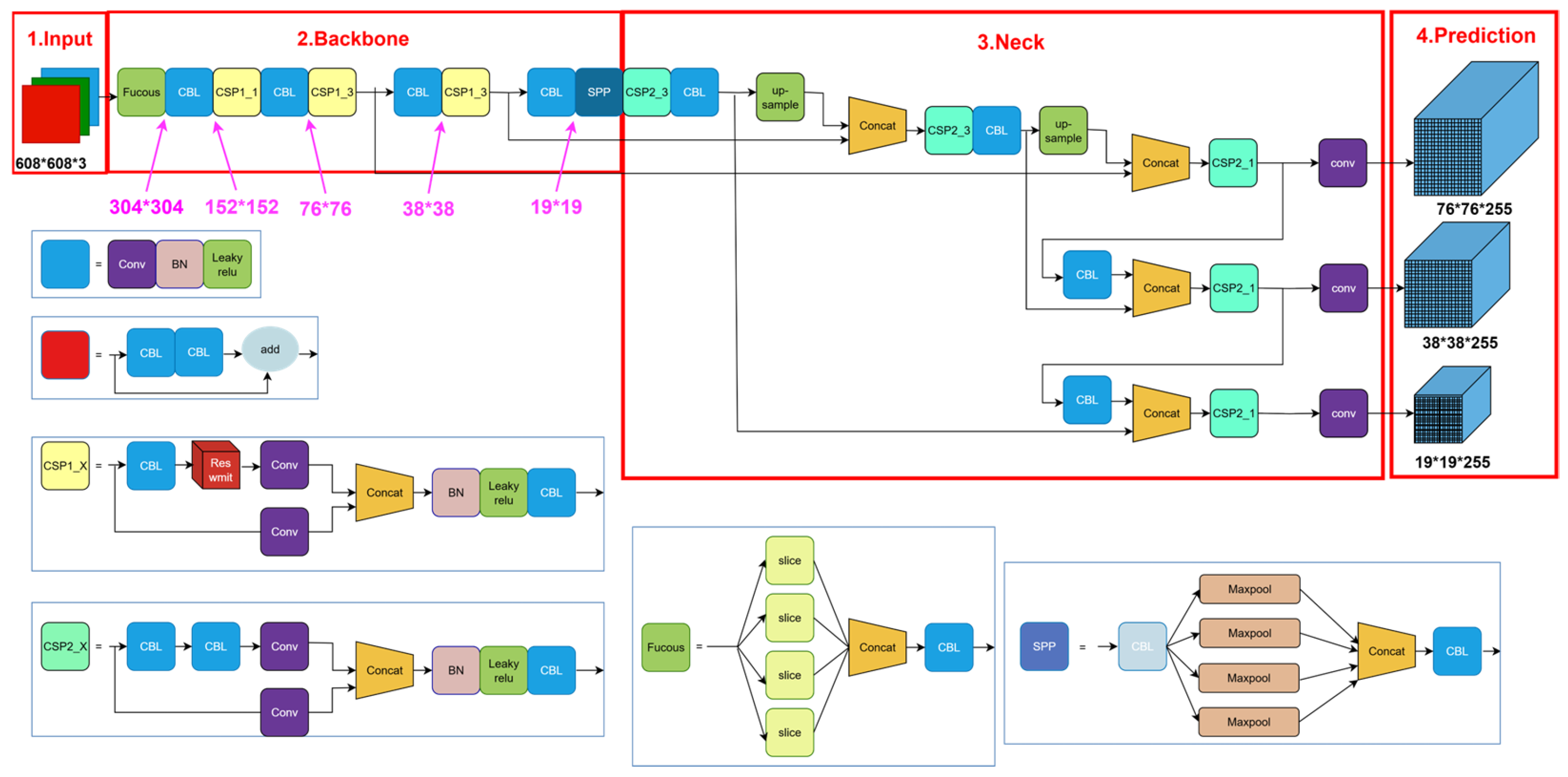

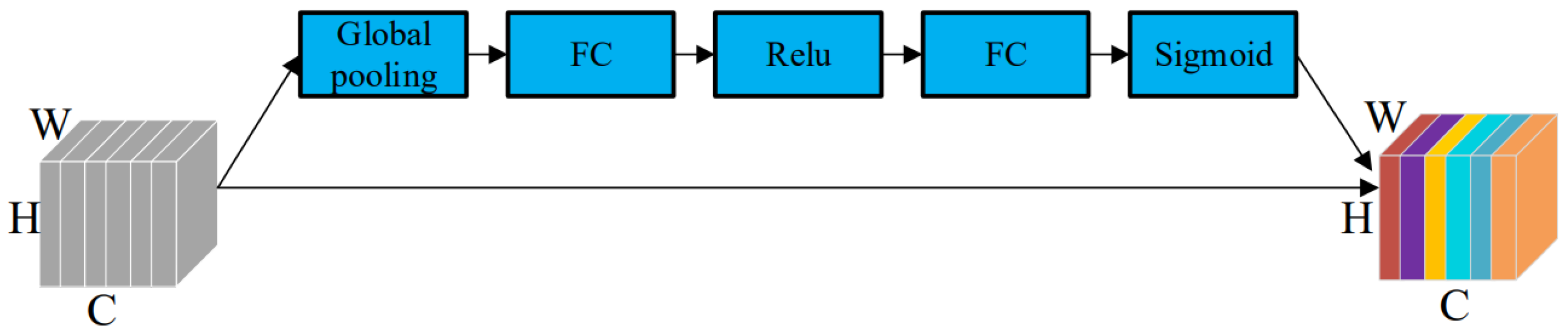

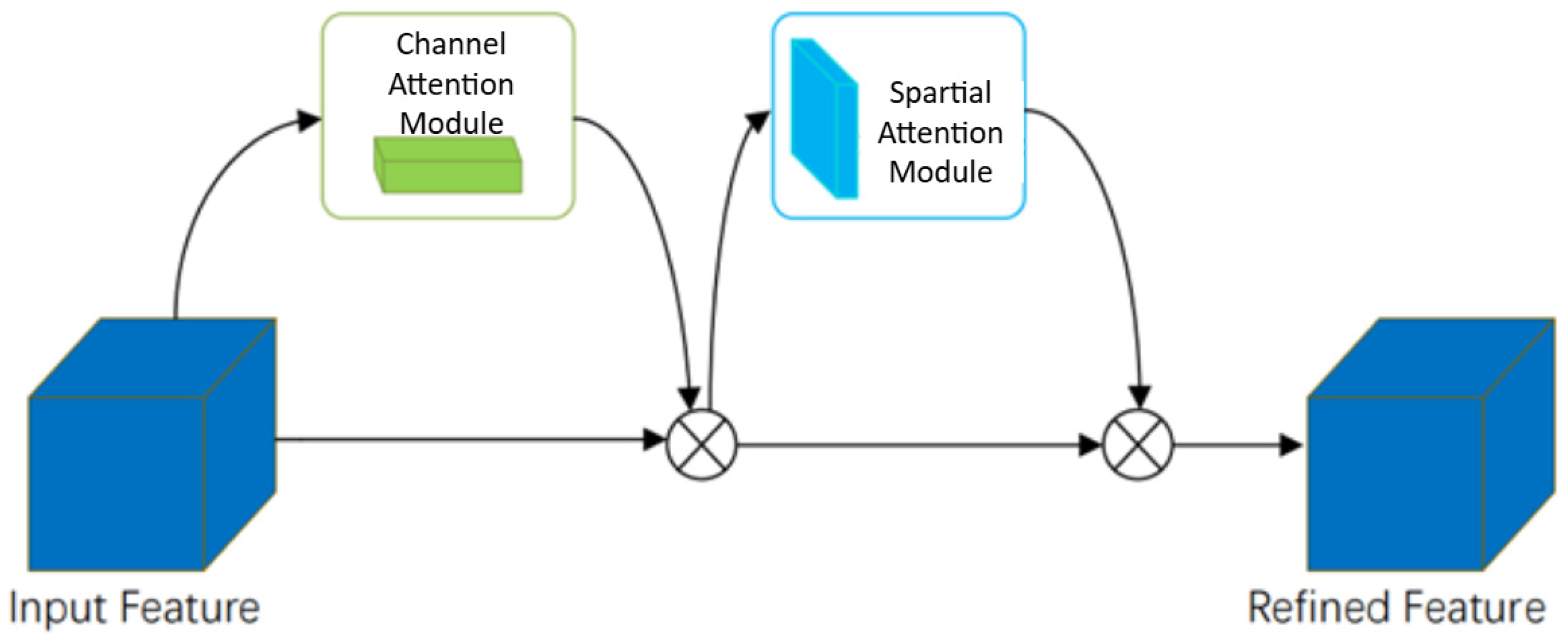

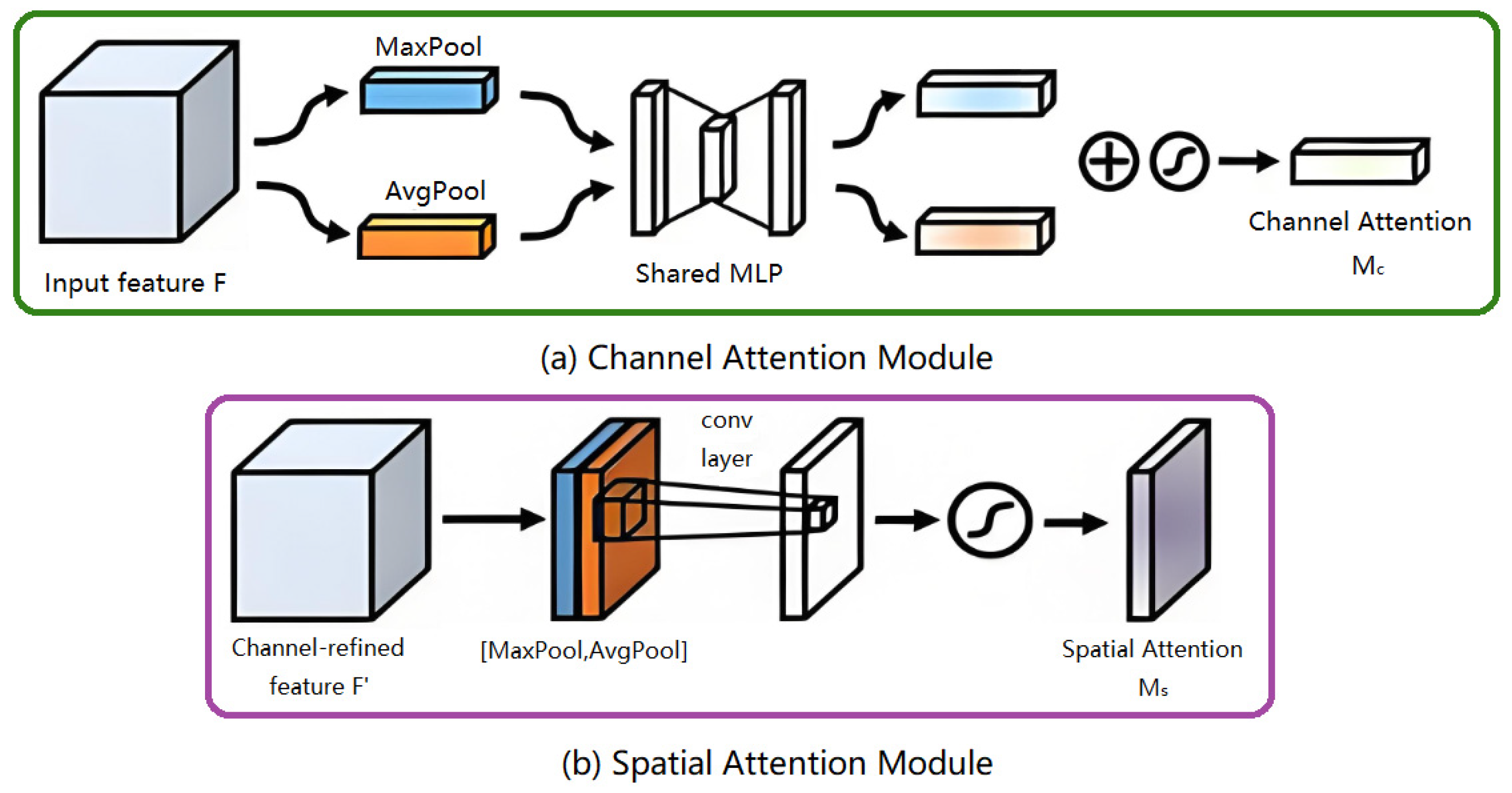

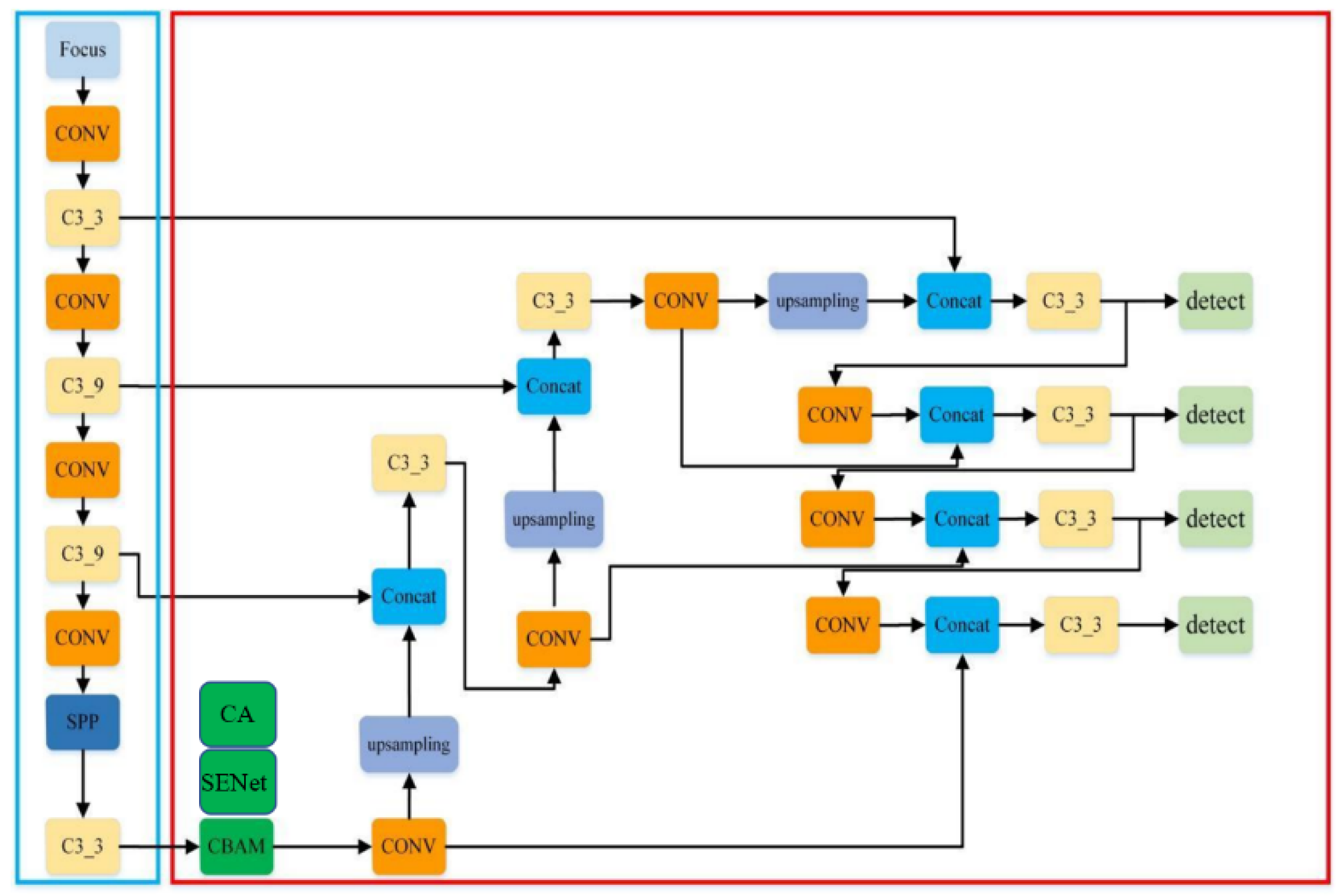

This study departs from conventional research paradigms by constructing a dynamic, field-based image dataset of pheromone-trapped insects comprising 9550 annotated specimens and by proposing an enhanced YOLOv5 detection framework. The key innovations of this work are threefold: (1) a multi-stage data augmentation strategy designed specifically to address the degradation of morphological features caused by scale loss in trapped moths; (2) the Convolutional Block Attention Module (CBAM), which coordinates channel and spatial attention, introduced to suppress background interference while compensating for degraded insect features; and (3) a lightweight insect counting algorithm with strong real-time processing capability.
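To make innovation (2) more concrete, the NumPy sketch below follows the standard CBAM formulation: a channel attention map is computed from average- and max-pooled channel descriptors passed through a shared two-layer MLP, and a spatial attention map is then computed by a 7 × 7 convolution over channel-wise average and max maps; the feature map is rescaled by each map in turn. This is an illustrative sketch, not the PyTorch module used in this study. It assumes a single feature map of shape (C, H, W), externally supplied weights, and no bias terms; the function names are our own.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Channel attention map for x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) are the shared MLP weights for a
    reduction ratio r; biases are omitted for brevity.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # FC -> ReLU -> FC

    avg = x.mean(axis=(1, 2))  # (C,) global average pooling
    mx = x.max(axis=(1, 2))    # (C,) global max pooling
    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(x, kernel):
    """Spatial attention map for x of shape (C, H, W); kernel: (2, k, k), k odd."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # 'same'-size cross-correlation, as in deep-learning conv layers
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(x, w1, w2, kernel):
    """Sequential CBAM: channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c, r = 16, 4
    feat = rng.standard_normal((c, 8, 8))
    out = cbam(feat,
               rng.standard_normal((c // r, c)) * 0.1,
               rng.standard_normal((c, c // r)) * 0.1,
               rng.standard_normal((2, 7, 7)) * 0.1)
    print(out.shape)
```

Because both attention maps lie in (0, 1), CBAM can only reweight activations: channels and locations that look like background are attenuated relative to those that carry insect features.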

Comprehensive comparative experiments confirm that the improved model achieves an mAP@0.5 of 97.8% even under trapping conditions involving 72 h of scale loss. These results provide a highly accurate and deployable algorithmic solution for intelligent pest-trapping devices.

3. Results

3.1. Experimental Setup

The experiments were conducted on a computer equipped with an AMD Ryzen 9 5950X processor and 192 GB of RAM. The models were implemented using the PyTorch (v2.1) deep learning framework and trained on an NVIDIA RTX 4090 GPU. The training process consisted of 300 epochs with a batch size of 8.

YOLOv5 was used as the baseline network for S. frugiperda detection and was trained with the same hyperparameters as all subsequent experiments. The primary hyperparameters are summarized in Table 4.

3.2. Comparison of Benchmark Experiments

To comprehensively compare the performance of different deep learning networks for S. frugiperda recognition, we conducted a systematic series of experiments. Specifically, four representative object detection and instance segmentation frameworks (YOLOv7, YOLOv8, DETR, and Mask R-CNN) were trained and tested on the same S. frugiperda image dataset. These networks embody distinct architectural designs and suit different application scenarios: the YOLO series emphasizes detection speed and real-time performance, DETR leverages the global modeling capability of Transformers, and Mask R-CNN provides pixel-level segmentation alongside object recognition. For a fair comparison, we used mean Average Precision (mAP), number of parameters (Params), floating-point operations (FLOPs), and per-image inference time as evaluation metrics, which allows a systematic analysis of the trade-offs among accuracy, computational complexity, and application efficiency.
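Because mAP is the primary accuracy metric in these comparisons, the sketch below shows one common way to compute average precision (AP) for a single class at an IoU threshold of 0.5: detections are sorted by confidence, each is greedily matched to an unmatched ground-truth box, and the area under the precision-recall curve is taken after making precision monotonically non-increasing (all-point interpolation, as in the PASCAL VOC protocol from 2010 onward). mAP@0.5 is then the mean of the per-class APs. This is a simplified sketch for one image and one class with function names of our own choosing; the evaluation code used in this study may differ, for example in how the precision-recall curve is interpolated.

```python
import numpy as np


def box_iou(a, b):
    """IoU between two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(pred_boxes, pred_scores, gt_boxes, iou_thr=0.5):
    """All-point interpolated AP for one class in one image."""
    if len(gt_boxes) == 0:
        return 0.0
    order = np.argsort(pred_scores)[::-1]  # highest confidence first
    matched = [False] * len(gt_boxes)
    tp = np.zeros(len(order))
    for rank, idx in enumerate(order):
        ious = [box_iou(pred_boxes[idx], g) for g in gt_boxes]
        best = int(np.argmax(ious))
        # A detection is a true positive only if its best match is still free.
        if ious[best] >= iou_thr and not matched[best]:
            matched[best] = True
            tp[rank] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / len(gt_boxes)
    precision = cum_tp / np.arange(1, len(order) + 1)
    # Pad the curve, enforce non-increasing precision, integrate over recall.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))
```

Ranking a false positive above the true positives lowers AP even when every ground-truth insect is eventually found, which is why mAP penalizes confident misclassifications of non-target insects.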

As shown in Table 5 and Table 6, the models exhibit distinct strengths in accuracy, inference speed, and parameter scale. YOLOv7 and YOLOv8 detect quickly but tend to miss targets in scenes with high insect density. DETR, built on the Transformer architecture, provides strong global modeling capability, but its relatively slow inference limits practical applicability. Mask R-CNN is capable of pixel-level segmentation, yet it shows a high false detection rate in dense, overlapping insect scenes and performs poorly on small targets, often misclassifying background elements or other insect species as S. frugiperda. In contrast, YOLOv5 achieves a favorable balance between accuracy and real-time performance, maintaining a relatively high mAP with fewer parameters and faster inference. Its lightweight, efficient design also enables stable deployment on resource-constrained embedded devices, which gives it greater practical value. Consequently, this study adopts YOLOv5 as the baseline network for subsequent improvements and optimizations, providing a reliable experimental foundation for enhancing the recognition of S. frugiperda.

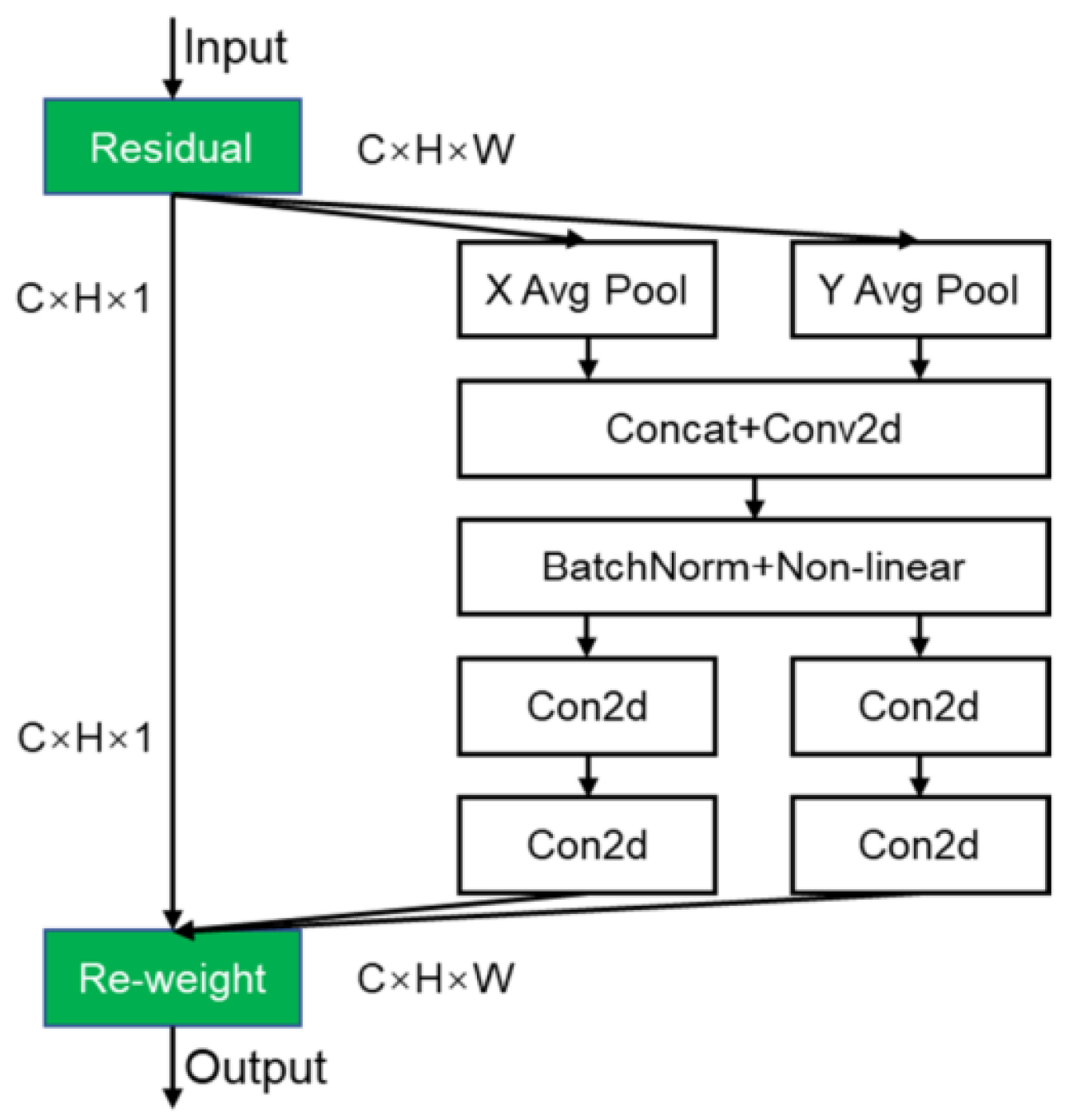

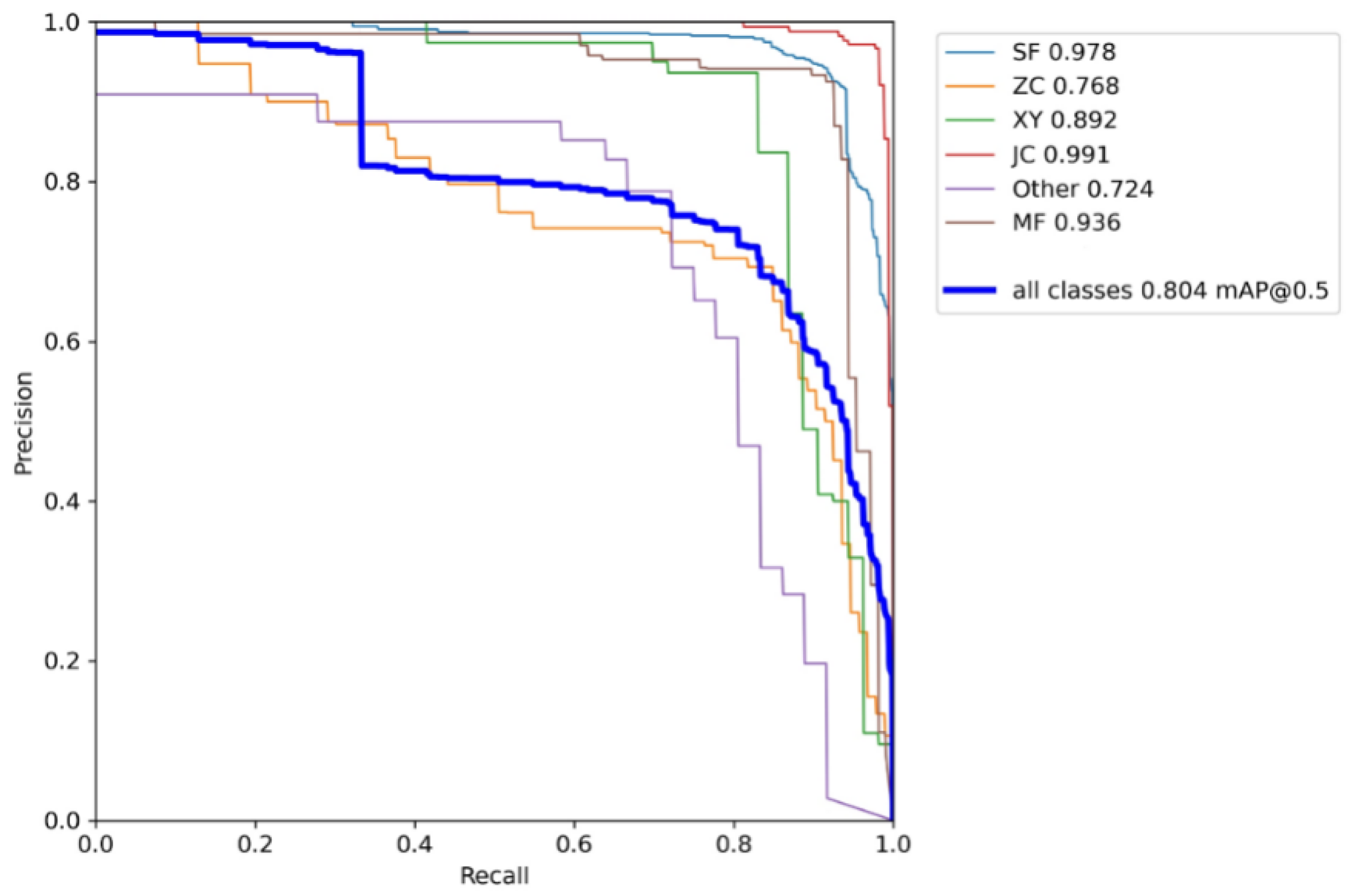

3.3. Experimental Results of the Attention-Enhanced YOLOv5 Algorithm

The S. frugiperda dataset was used to train improved YOLOv5 models incorporating three attention mechanisms: Coordinate Attention (CA), the Convolutional Block Attention Module (CBAM), and Squeeze-and-Excitation (SE). The hardware and software environment and the hyperparameter settings were identical to those of the baseline YOLOv5 model. The experimental results are summarized in Tables 7–10 and Figures 13–15.

The results show that integrating each of the three attention modules into the baseline YOLOv5 network generally improved, or at least maintained, precision, recall, and mean average precision (mAP) across the insect categories in the trap dataset. With the Coordinate Attention (CA) module, mAP improved for five insect categories, including S. frugiperda, but not for the “other insects” category; S. frugiperda’s mAP increased by 0.1%, while S. litura showed the largest improvement, a 21% increase. With the Convolutional Block Attention Module (CBAM), all five major categories except “other insects” showed mAP gains, and the overall improvement was the largest among the three attention mechanisms: S. frugiperda’s mAP increased by 0.8%, and S. litura again showed the largest gain, at 23.5%. In contrast, the model with the Squeeze-and-Excitation (SENet) module showed the smallest mAP improvement of the three, and its mAP for S. frugiperda declined, although only marginally.

Incorporating an attention mechanism (SENet, CBAM, or CA) increased the total number of parameters by only about 0.13 M. This increment is negligible relative to the original YOLOv5s model and has minimal influence on computational complexity and storage requirements, so the model remains lightweight. At the same time, the attention mechanisms strengthen the model’s ability to represent and discriminate key features, which improves detection accuracy. This is particularly important for embedded systems, where computational power, memory, and energy are severely constrained. The proposed improvement therefore retains the model’s efficiency and practicality while balancing real-time performance and accuracy, which is the fundamental objective of lightweight object detection.
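The small parameter overhead follows from how these modules are built. The sketch below counts the learnable parameters added by one SE block and one CBAM block on a feature map with C channels, using the standard formulations: two fully connected layers of sizes C × C/r and C/r × C (SE, and CBAM's shared channel MLP), plus a 2-to-1-channel k × k convolution for CBAM's spatial branch. The channel widths, r = 16, k = 7, and the bias handling are assumptions for illustration; the sketch does not reproduce this study's insertion points or its 0.13 M figure, and Coordinate Attention is omitted.

```python
def se_params(c, r=16, bias=True):
    """Parameters of an SE block: FC(C -> C/r), ReLU, FC(C/r -> C)."""
    hidden = c // r
    weights = c * hidden + hidden * c
    return weights + (hidden + c if bias else 0)


def cbam_params(c, r=16, k=7, bias=True):
    """CBAM: shared channel MLP (same shape as SE) + 2->1 channel k x k conv."""
    spatial = 2 * k * k + (1 if bias else 0)
    return se_params(c, r, bias) + spatial


if __name__ == "__main__":
    for width in (128, 256, 512):  # hypothetical insertion widths
        print(width, se_params(width), cbam_params(width))
```

For example, at C = 512 and r = 16 a single SE block adds 33,312 parameters with biases, and CBAM's spatial branch adds fewer than 100 more, so even several such blocks remain small next to a detector with several million parameters.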

3.4. A PyQt5-Based Visual Detection System for Adult S. frugiperda

Figure 16 shows the visual interface for fall armyworm (S. frugiperda) detection. The detection results demonstrate that the system accurately identifies and counts the target insects in the image. The interface also includes an information section about S. frugiperda that provides a brief introduction along with expandable content; clicking the prompt “Or you can learn more here” redirects users to the S. frugiperda page on Baidu Encyclopedia for further information. The system thus integrates the PyQt5-based graphical user interface with the YOLOv5 detection model for S. frugiperda, enabling real-time detection and visualization of images of trapped fall armyworms.
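The counting step behind such an interface can be as simple as tallying post-NMS detections per class above a confidence threshold. The sketch below assumes a hypothetical detection format of (class_name, confidence, box) tuples and a threshold of 0.25; it is not the counting algorithm of this study, whose details are not given in this section, and it leaves out the PyQt5 widgets entirely.

```python
from collections import Counter
from typing import Iterable, Tuple

Box = Tuple[float, float, float, float]
Detection = Tuple[str, float, Box]  # hypothetical: (class_name, confidence, box)


def count_insects(detections: Iterable[Detection], conf_thr: float = 0.25) -> Counter:
    """Count detections per class whose confidence reaches conf_thr.

    Assumes duplicates were already removed by NMS, so each remaining
    box corresponds to one trapped insect.
    """
    return Counter(cls for cls, conf, _ in detections if conf >= conf_thr)


def summary_text(counts: Counter, target: str = "S. frugiperda") -> str:
    """Build a short status string, e.g. for a label in the GUI."""
    others = sum(n for cls, n in counts.items() if cls != target)
    return f"{target}: {counts.get(target, 0)} | other insects: {others}"
```

Keeping the count logic separate from the GUI code makes it easy to reuse the same function on an embedded trap device without a display.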

6. Conclusions

S. frugiperda is a major agricultural pest that poses a serious threat to food security. Existing monitoring approaches, including manual field surveys, pest survey instruments, and trap-based methods, suffer from inaccurate identification and are time-consuming, and thus fail to meet the urgent demand for efficient and precise pest surveillance in smart agriculture. To improve the recognition capability and monitoring efficiency for S. frugiperda, this study established manual monitoring sites to collect field-trapped images of S. frugiperda, supplemented them with laboratory and publicly available web samples, and thereby constructed a dataset comprising six categories of trapped insects. To address sample imbalance, eleven image augmentation techniques were randomly combined to expand and balance the dataset, providing a stable foundation for model training. Based on this dataset, we developed and compared several detection models: Mask R-CNN, YOLOv7, YOLOv8, DETR, and YOLOv5. The results demonstrate that YOLOv5 outperformed the other networks in both detection accuracy and localization precision while also being more lightweight, so it can be regarded as a reliable baseline model. To further improve YOLOv5’s recognition accuracy and robustness, three attention mechanisms, namely SENet, CBAM, and Coordinate Attention (CA), were integrated for model optimization. The YOLOv5 model enhanced with CBAM achieved the best performance, reaching an mAP@0.5 of 97.8% on S. frugiperda trap images. This represents a significant improvement over the baseline, with notable precision gains for four major insect categories, excluding the “Other” class. Finally, the CBAM-optimized YOLOv5 model was deployed within a PyQt5-based graphical user interface, completing the construction of an S. frugiperda detection system.
The system integrates image recognition, result visualization, and pest information modules, enabling automatic identification and counting of trapped S. frugiperda individuals. It provides an efficient and practical technical tool for pest monitoring and intelligent pest management, with promising application prospects.
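The random combination of augmentations used for balancing can be sketched as follows. The eleven techniques used in this study are not listed in this section, so the four operations below (horizontal and vertical flips, brightness scaling, Gaussian noise) are hypothetical stand-ins. Boxes are assumed to be in YOLO label format (class, cx, cy, w, h, normalized to [0, 1]), and geometric operations update them so that labels stay aligned with the image. The helper `copies_needed` shows one simple, image-count-based way to decide how many augmented copies each minority class needs to approach the size of the largest class.

```python
from functools import partial

import numpy as np


def hflip(img, boxes):
    out = boxes.copy()
    out[:, 1] = 1.0 - out[:, 1]  # mirror normalized x-center
    return img[:, ::-1], out


def vflip(img, boxes):
    out = boxes.copy()
    out[:, 2] = 1.0 - out[:, 2]  # mirror normalized y-center
    return img[::-1, :], out


def brightness(img, boxes, rng):
    factor = rng.uniform(0.6, 1.4)  # mimic uneven field lighting
    return img * factor, boxes


def gauss_noise(img, boxes, rng):
    return img + rng.normal(0.0, 8.0, img.shape), boxes  # mimic sensor noise


def random_combo(img, boxes, rng, max_ops=3):
    """Apply a random subset (1..max_ops) of the hypothetical operations."""
    ops = [hflip, vflip, partial(brightness, rng=rng), partial(gauss_noise, rng=rng)]
    n = rng.integers(1, min(max_ops, len(ops)) + 1)
    for k in rng.choice(len(ops), size=n, replace=False):
        img, boxes = ops[k](img, boxes)
    return np.clip(img, 0, 255).round().astype(np.uint8), boxes


def copies_needed(class_counts):
    """Augmented images per class so each approaches the largest class."""
    target = max(class_counts.values())
    return {c: target - n for c, n in class_counts.items()}
```

Photometric operations leave the boxes untouched, while flips only move box centers; rotations or crops would additionally require recomputing box widths and heights.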