Preliminary Development of Global–Local Balanced Vision Transformer Deep Learning with DNA Barcoding for Automated Identification and Validation of Forensic Sarcosaphagous Flies

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Collection of Samples and Images

2.1.1. Collection of Samples

2.1.2. Collection of Images

2.2. Insect Species Determination

2.2.1. DNA Extraction of Samples

2.2.2. Polymerase Chain Reaction

2.2.3. Sanger Sequencing

2.2.4. Molecular Species Identification

2.3. Dataset Expansion and Model Training

2.3.1. Dataset Preparation

2.3.2. Model Architecture Selection

2.3.3. Training Strategies

2.4. Building and Testing the WMP

2.4.1. System Architecture

2.4.2. Model Deployment

2.4.3. Tests of AI Auto-Recognition Effectiveness

2.5. Establishment of a Molecular Species Identification System

2.5.1. Selection of Primers

2.5.2. Polymerase Chain Reaction

2.5.3. High Resolution Melting Analysis

3. Results

3.1. New Insect Species and Image Count

3.2. Status of AI Training

3.3. Establishment of WMP

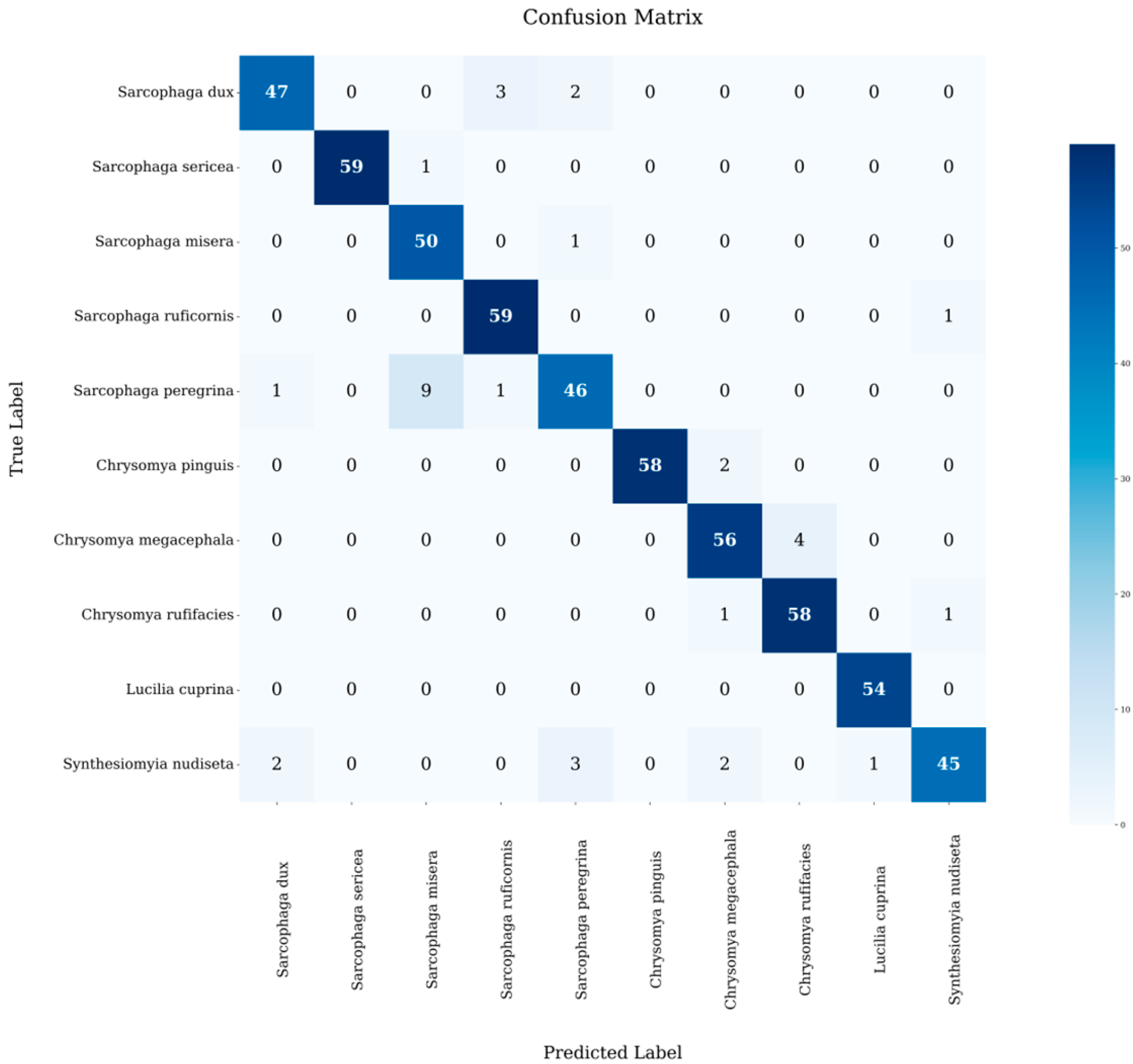

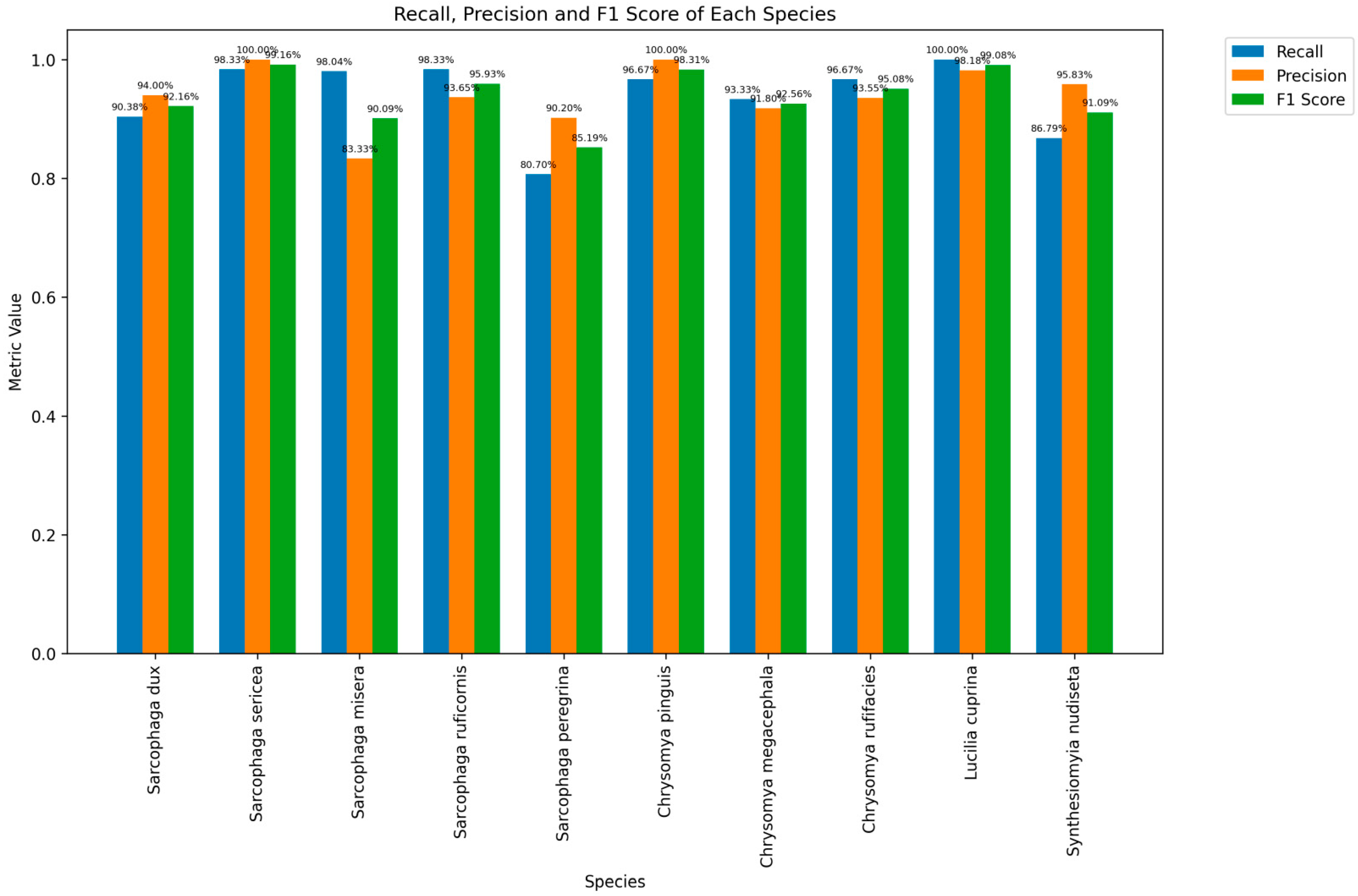

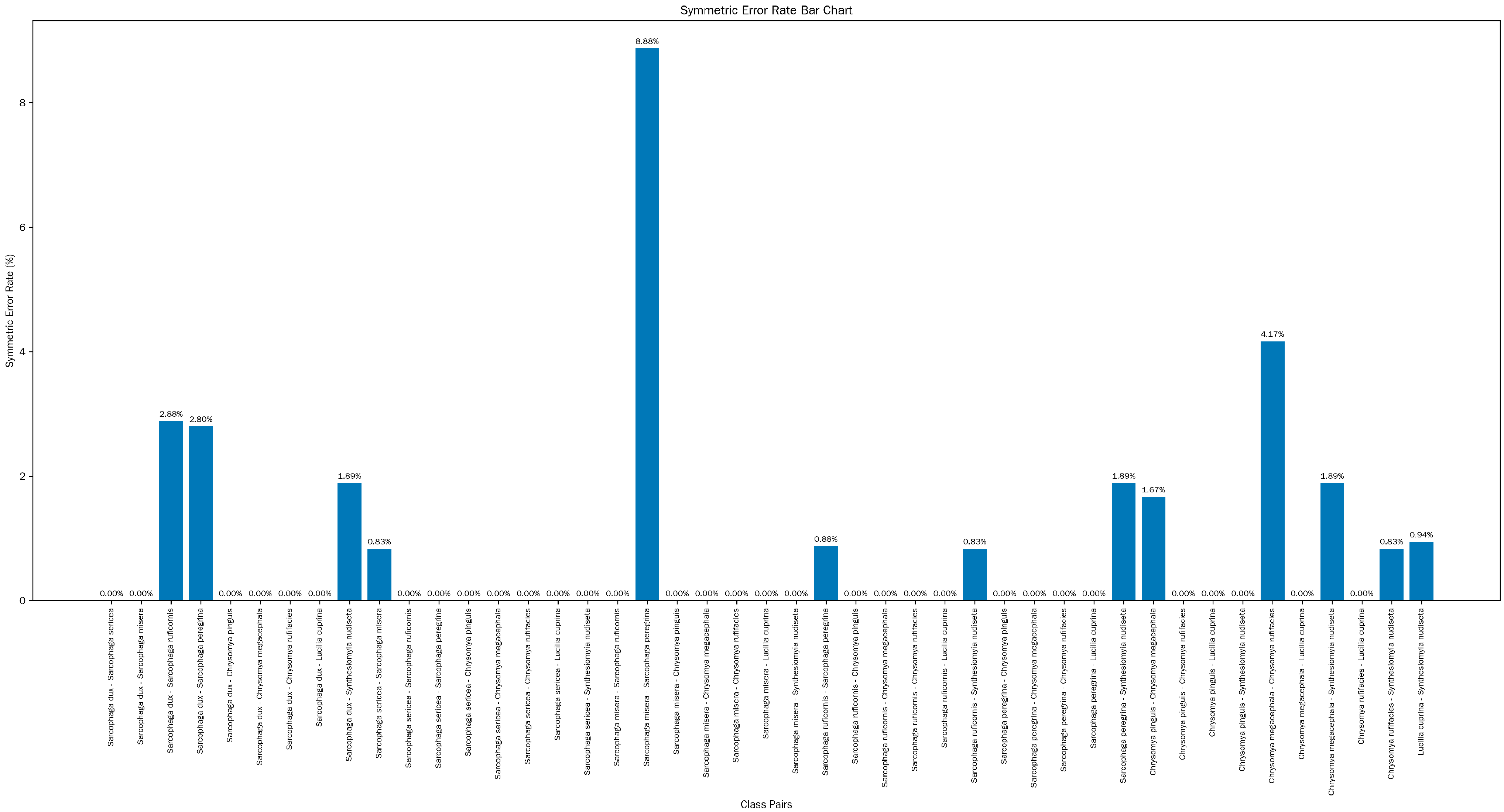

3.4. AI System Testing

3.5. Establishment of the HRM Rapid Inspection System

4. Discussion

4.1. Performance of the Model

4.2. Comparative Analysis with Similar Models

4.3. About the Molecular Rapid Test HRM

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Species | Initial | Increment | Total |
|---|---|---|---|
| Sarcophaga dux | 372 | 296 | 668 |
| Sarcophaga sericea | 405 | 0 | 405 |
| Sarcophaga misera | 505 | 0 | 505 |
| Sarcophaga peregrina | 72 | 502 | 574 |
| Chrysomya pinguis | 2029 | 393 | 2422 |
| Lucilia cuprina | 360 | 0 | 360 |
| Sarcophaga ruficornis * | 0 | 120 | 120 |
| Chrysomya megacephala * | 0 | 731 | 731 |
| Chrysomya rufifacies * | 0 | 796 | 796 |
| Synthesiomyia nudiseta * | 0 | 351 | 351 |
| Total | 3743 | 3189 | 6932 |

\* Species with no images in the initial dataset (Initial = 0).

| Species | Training | Validation | Testing | Total |
|---|---|---|---|---|
| Sarcophaga dux | 936 | 52 | 52 | 1040 |
| Sarcophaga sericea | 1080 | 60 | 60 | 1200 |
| Sarcophaga misera | 909 | 50 | 51 | 1010 |
| Sarcophaga ruficornis | 1080 | 60 | 60 | 1200 |
| Sarcophaga peregrina | 1033 | 58 | 57 | 1148 |
| Chrysomya pinguis | 1080 | 60 | 60 | 1200 |
| Chrysomya megacephala | 1080 | 60 | 60 | 1200 |
| Chrysomya rufifacies | 1080 | 60 | 60 | 1200 |
| Lucilia cuprina | 972 | 54 | 54 | 1080 |
| Synthesiomyia nudiseta | 947 | 53 | 53 | 1053 |
| Total | 10,197 | 567 | 567 | 11,331 |
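The per-species counts above are close to a 90/5/5 partition (for example, 1080/60/60 out of 1200 images). A minimal sketch of such a split is given below. The function name `split_dataset`, the fixed seed, the rounding rule, and the file names are assumptions for illustration, not the procedure used in the study.

```python
import random

def split_dataset(items, second_frac=0.05, test_frac=0.05, seed=0):
    """Shuffle items and split them into three disjoint subsets.

    Returns (train, second, test), where `second` is the held-out subset
    used during training and `test` is the final evaluation subset.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_second = round(len(shuffled) * second_frac)
    n_test = round(len(shuffled) * test_frac)
    second = shuffled[:n_second]
    test = shuffled[n_second:n_second + n_test]
    train = shuffled[n_second + n_test:]
    return train, second, test

# Hypothetical file names standing in for one species' 1200 images.
images = [f"img_{i:04d}.jpg" for i in range(1200)]
train, second, test = split_dataset(images)
print(len(train), len(second), len(test))
```

Splitting each species separately, as sketched here, keeps the class proportions the same in all three subsets.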

| Family | Genus | Species | Accuracy (Species) | Accuracy (Genus) | Accuracy (Family) |
|---|---|---|---|---|---|
| Sarcophagidae | Sarcophaga | Sarcophaga dux | 90.38% | 93.21% | 93.21% |
| Sarcophagidae | Sarcophaga | Sarcophaga misera | 98.33% | 93.21% | 93.21% |
| Sarcophagidae | Sarcophaga | Sarcophaga sericea | 98.04% | 93.21% | 93.21% |
| Sarcophagidae | Sarcophaga | Sarcophaga ruficornis | 98.33% | 93.21% | 93.21% |
| Sarcophagidae | Sarcophaga | Sarcophaga peregrina | 80.70% | 93.21% | 93.21% |
| Calliphoridae | Chrysomya | Chrysomya pinguis | 96.67% | 95.56% | 99.57% |
| Calliphoridae | Chrysomya | Chrysomya megacephala | 93.33% | 95.56% | 99.57% |
| Calliphoridae | Chrysomya | Chrysomya rufifacies | 96.67% | 95.56% | 99.57% |
| Calliphoridae | Lucilia | Lucilia cuprina | 100.00% | 100.00% | 99.57% |
| Muscidae | Synthesiomyia | Synthesiomyia nudiseta | 86.79% | 86.79% | 86.79% |
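One plausible reading of the genus and family columns is that a species prediction is scored at a coarser rank: it counts as correct when it falls in the same genus (or family) as the true species. The sketch below illustrates that scoring rule. It is an interpretation rather than a rule stated in the source, the helper name `accuracy_at_rank` is hypothetical, and the example predictions are invented only to exercise the function.

```python
# Taxonomy taken from the accuracy table: species -> (genus, family).
TAXONOMY = {
    "Sarcophaga dux": ("Sarcophaga", "Sarcophagidae"),
    "Sarcophaga misera": ("Sarcophaga", "Sarcophagidae"),
    "Sarcophaga sericea": ("Sarcophaga", "Sarcophagidae"),
    "Sarcophaga ruficornis": ("Sarcophaga", "Sarcophagidae"),
    "Sarcophaga peregrina": ("Sarcophaga", "Sarcophagidae"),
    "Chrysomya pinguis": ("Chrysomya", "Calliphoridae"),
    "Chrysomya megacephala": ("Chrysomya", "Calliphoridae"),
    "Chrysomya rufifacies": ("Chrysomya", "Calliphoridae"),
    "Lucilia cuprina": ("Lucilia", "Calliphoridae"),
    "Synthesiomyia nudiseta": ("Synthesiomyia", "Muscidae"),
}

def accuracy_at_rank(true_species, predicted_species, rank):
    """Fraction of predictions that match the truth at the given rank.

    rank is one of "species", "genus", or "family".
    """
    def key(species):
        genus, family = TAXONOMY[species]
        return {"species": species, "genus": genus, "family": family}[rank]

    pairs = list(zip(true_species, predicted_species))
    hits = sum(key(t) == key(p) for t, p in pairs)
    return hits / len(pairs)

# Hypothetical labels and predictions (not data from the study).
truth = ["Chrysomya pinguis", "Chrysomya pinguis", "Lucilia cuprina", "Sarcophaga dux"]
preds = ["Chrysomya megacephala", "Lucilia cuprina", "Lucilia cuprina", "Sarcophaga dux"]

for rank in ("species", "genus", "family"):
    print(rank, accuracy_at_rank(truth, preds, rank))
```

Under this rule a confusion between two Chrysomya species lowers species-level accuracy but not genus- or family-level accuracy, which is why the coarser ranks can score higher than the species they contain.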

| Replicate | Sarcophaga misera (Standard) | Sarcophaga misera (Testing) | Sarcophaga peregrina (Standard) | Sarcophaga peregrina (Testing) |
|---|---|---|---|---|
| Tm1 (°C) | 76.02 | 76.23 | 76.78 | 76.87 |
| Tm2 (°C) | 76.05 | 76.22 | 76.95 | 76.98 |
| Tm3 (°C) | 76.00 | 76.25 | 76.65 | 76.80 |

| Replicate | Chrysomya megacephala (Standard) | Chrysomya megacephala (Testing) | Chrysomya rufifacies (Standard) | Chrysomya rufifacies (Testing) |
|---|---|---|---|---|
| Tm1 (°C) | 76.85 | 76.65 | 75.53 | 75.48 |
| Tm2 (°C) | 76.20 | 76.25 | 75.75 | 75.62 |
| Tm3 (°C) | 76.45 | 76.60 | 75.88 | 75.75 |
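One simple way to compare the standard and testing melting temperatures is to average the three replicates for each sample and look at the difference. The sketch below does this for the values in the two HRM tables above. The dictionary layout and the helper names `mean_tm` and `tm_shift` are assumptions for illustration, and no acceptance threshold is implied because none is stated here.

```python
from statistics import mean

# Replicate Tm values (°C) copied from the two HRM tables.
TM = {
    "Sarcophaga misera": {
        "standard": [76.02, 76.05, 76.00],
        "testing": [76.23, 76.22, 76.25],
    },
    "Sarcophaga peregrina": {
        "standard": [76.78, 76.95, 76.65],
        "testing": [76.87, 76.98, 76.80],
    },
    "Chrysomya megacephala": {
        "standard": [76.85, 76.20, 76.45],
        "testing": [76.65, 76.25, 76.60],
    },
    "Chrysomya rufifacies": {
        "standard": [75.53, 75.75, 75.88],
        "testing": [75.48, 75.62, 75.75],
    },
}

def mean_tm(species, sample):
    """Mean of the three replicate Tm values for one species and sample type."""
    return mean(TM[species][sample])

def tm_shift(species):
    """Mean testing Tm minus mean standard Tm, in °C."""
    return mean_tm(species, "testing") - mean_tm(species, "standard")

for species in TM:
    print(
        f"{species}: standard {mean_tm(species, 'standard'):.2f} °C, "
        f"testing {mean_tm(species, 'testing'):.2f} °C, "
        f"shift {tm_shift(species):+.2f} °C"
    )
```

Averaging replicates before comparing reduces the influence of a single outlying run, such as the spread among the three Chrysomya megacephala standard values.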

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, Y.; Niu, L.; Wang, B.; Li, D.; Gao, Y.; Ha, S.; Fan, B.; Xiong, Y.; Cong, B.; Chen, J.; et al. Preliminary Development of Global–Local Balanced Vision Transformer Deep Learning with DNA Barcoding for Automated Identification and Validation of Forensic Sarcosaphagous Flies. Insects 2025, 16, 529. https://doi.org/10.3390/insects16050529
