Development of a Fundus Image-Based Deep Learning Diagnostic Tool for Various Retinal Diseases

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

2.2. Fundus Imaging

2.3. Augmentation of Data

2.4. Preprocessing

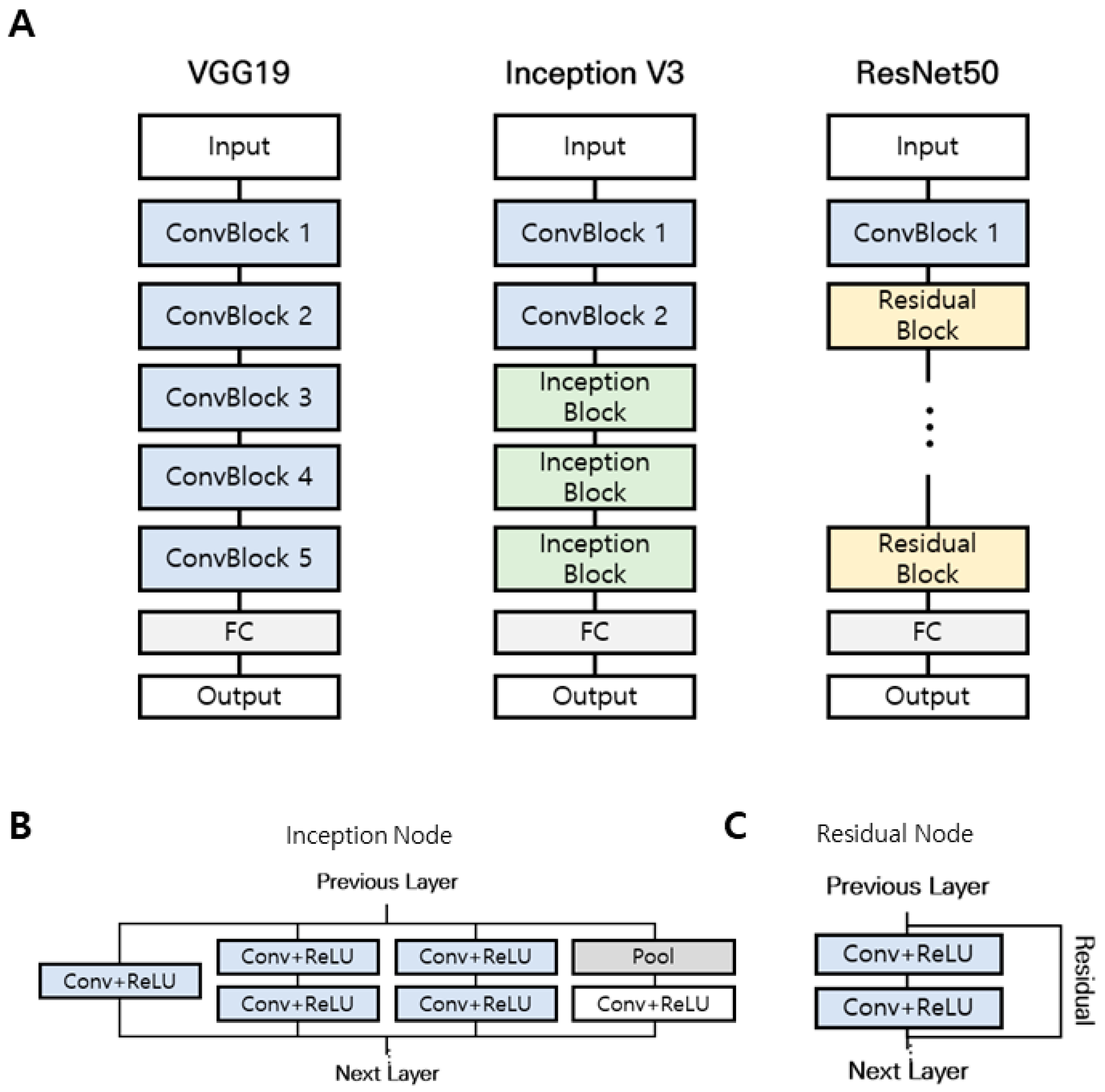

2.5. Convolutional Neural Network (CNN) Modeling

2.6. Cross-Validation of AI-Based Diagnosis

2.7. Classification Performance Evaluation Index

3. Results

3.1. Two-Class Diagnosis

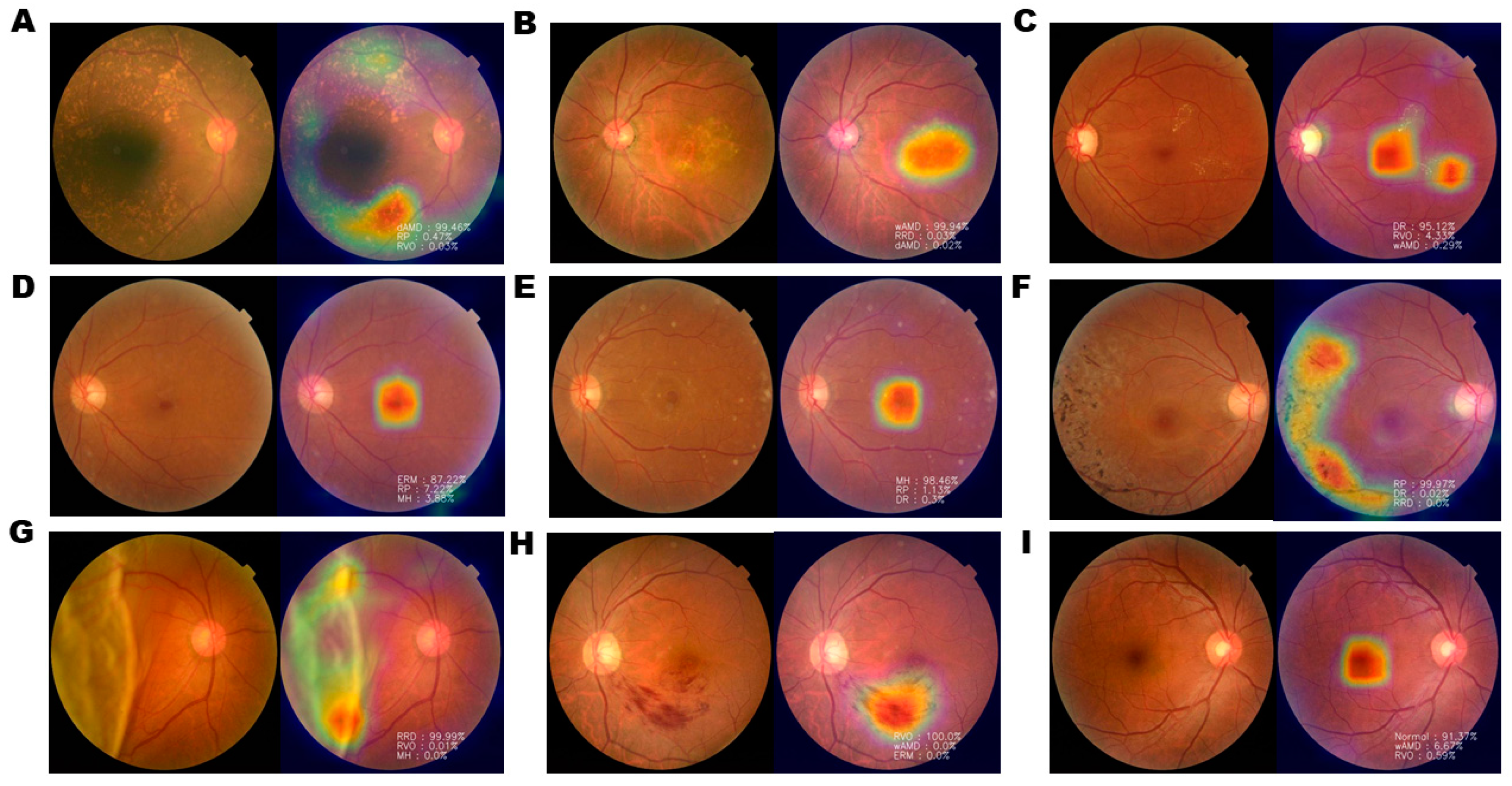

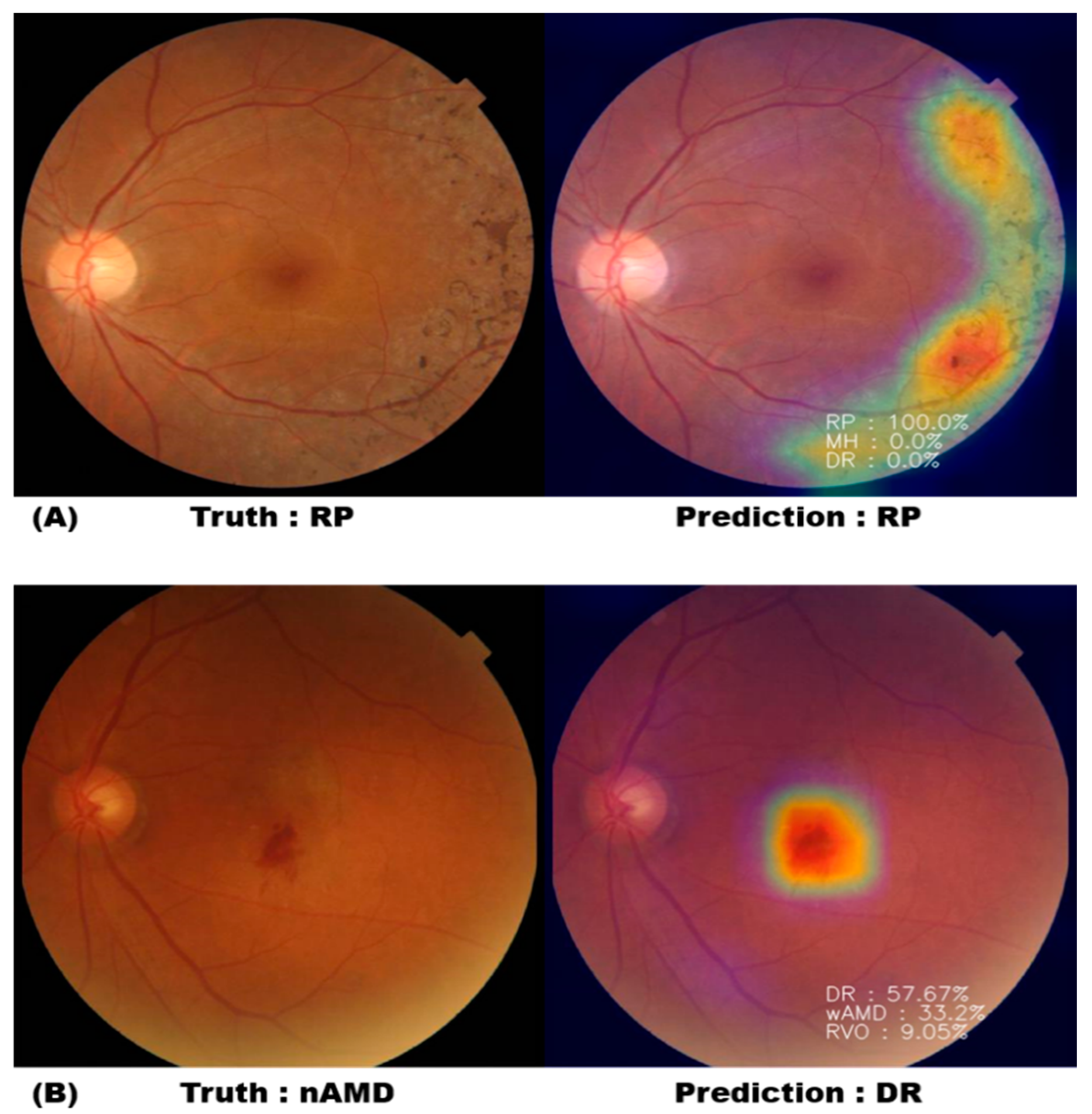

3.2. Nine-Class Diagnosis and Visualization

3.3. Classification Probability

3.4. Comparison of Accuracy Values of the Deep Learning Diagnostic Tool and Residents in Ophthalmology

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Disease | dAMD | nAMD | DR | ERM | RRD | RP | MH | RVO | Control |
|---|---|---|---|---|---|---|---|---|---|
| Fundus images (n) | 58 | 79 | 95 | 99 | 80 | 50 | 49 | 39 | 79 |
| Male (n) | 27 | 41 | 53 | 53 | 47 | 26 | 31 | 19 | 40 |
| Female (n) | 31 | 38 | 42 | 46 | 33 | 24 | 18 | 20 | 39 |
| Age (years) | 69.6 ± 8.0 | 69.1 ± 8.3 | 53.2 ± 10.4 | 63.6 ± 7.6 | 54.4 ± 14.6 | 53.4 ± 11.0 | 64.2 ± 8.9 | 67.5 ± 8.0 | 56.7 ± 7.3 |

| No. | Argument | Value |
|---|---|---|
| (1) | Width_shift_range | 0.4 |
| (2) | Height_shift_range | 0.2 |
| (3) | Rotation_range | 90 |
| (4) | Zoom_range | 0.1 |
| (5) | Horizontal_flip, Vertical_flip | True |
| (6) | Shear_range | 30 |
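
The argument names in the table above match the keyword arguments of the Keras `ImageDataGenerator` class, so the settings can be sketched as a plain configuration dictionary. This is a minimal sketch under two assumptions that the table does not confirm: that Keras was the augmentation library, and that the single `True` value applies to both the horizontal and the vertical flip. The helper `build_generator` is a hypothetical name introduced here for illustration.

```python
# Augmentation arguments copied from the table above.
# Assumption: the names map onto Keras ImageDataGenerator keyword arguments.
AUGMENTATION_ARGS = {
    "width_shift_range": 0.4,   # horizontal shift, as a fraction of image width
    "height_shift_range": 0.2,  # vertical shift, as a fraction of image height
    "rotation_range": 90,       # random rotation range, in degrees
    "zoom_range": 0.1,          # zoom sampled from [1 - 0.1, 1 + 0.1]
    "horizontal_flip": True,
    "vertical_flip": True,      # assumption: the merged "True" cell covers both flips
    "shear_range": 30,          # shear angle, in degrees
}


def build_generator(args=None):
    """Return a Keras ImageDataGenerator configured with the table values.

    TensorFlow is imported lazily so that the configuration itself can be
    inspected on a machine without TensorFlow installed. The class is only
    available in TensorFlow/Keras releases that still ship it.
    """
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    return ImageDataGenerator(**(AUGMENTATION_ARGS if args is None else args))
```

Keeping the values in one dictionary makes it easy to log the exact augmentation settings alongside each training run.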

| Model | VGG19 | Inception v3 | ResNet50 |
|---|---|---|---|
| Accuracy | 99.12% | 98.08% | 97.85% |

| Dense Layer | VGG19 | Inception v3 | ResNet50 |
|---|---|---|---|
| 128 nodes | 0.8200 ± 0.0282 | 0.8340 ± 0.0364 | 0.8742 ± 0.0349 |
| 256 nodes | 0.8135 ± 0.0315 | 0.8212 ± 0.0444 | 0.8646 ± 0.0205 |
| 128 nodes + 128 nodes | 0.8168 ± 0.0243 | 0.8360 ± 0.0115 | 0.8694 ± 0.0338 |
| 256 nodes + 256 nodes | 0.8026 ± 0.0365 | 0.8483 ± 0.0381 | 0.8452 ± 0.0351 |
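
The comparison in the table above amounts to picking the backbone and dense-layer setup with the highest mean accuracy. The short sketch below copies the table values and performs that selection. The names `RESULTS` and `best_configuration` are hypothetical, and the second number in each pair is simply the value reported after the ± sign.

```python
# (dense-layer setup, backbone) -> (mean accuracy, value reported after "±"),
# copied from the table above.
RESULTS = {
    ("128 nodes", "VGG19"): (0.8200, 0.0282),
    ("128 nodes", "Inception v3"): (0.8340, 0.0364),
    ("128 nodes", "ResNet50"): (0.8742, 0.0349),
    ("256 nodes", "VGG19"): (0.8135, 0.0315),
    ("256 nodes", "Inception v3"): (0.8212, 0.0444),
    ("256 nodes", "ResNet50"): (0.8646, 0.0205),
    ("128 nodes + 128 nodes", "VGG19"): (0.8168, 0.0243),
    ("128 nodes + 128 nodes", "Inception v3"): (0.8360, 0.0115),
    ("128 nodes + 128 nodes", "ResNet50"): (0.8694, 0.0338),
    ("256 nodes + 256 nodes", "VGG19"): (0.8026, 0.0365),
    ("256 nodes + 256 nodes", "Inception v3"): (0.8483, 0.0381),
    ("256 nodes + 256 nodes", "ResNet50"): (0.8452, 0.0351),
}


def best_configuration(results):
    """Return the (setup, backbone) key with the highest mean accuracy."""
    return max(results, key=lambda key: results[key][0])


best = best_configuration(RESULTS)
print(best, RESULTS[best])  # ('128 nodes', 'ResNet50') (0.8742, 0.0349)
```

Selecting on the mean alone ignores the spread; several configurations lie within one reported ± of each other, so the ranking should be read with that in mind.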

| Model | Accuracy | Class | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|---|
| ResNet50 with 128 nodes | 87.42% | dAMD | 0.8190 | 0.9844 | 0.8439 | 0.9770 |
| | | DR | 0.9262 | 0.9833 | 0.9052 | 0.9868 |
| | | ERM | 0.9252 | 0.9830 | 0.9089 | 0.9850 |
| | | MH | 0.8192 | 0.7960 | 0.7556 | 0.9861 |
| | | Normal | 0.8830 | 0.9873 | 0.9092 | 0.9800 |
| | | RP | 0.9085 | 0.9966 | 0.9600 | 0.9914 |
| | | RRD | 0.8143 | 0.9870 | 0.9125 | 0.9671 |
| | | RVO | 0.8514 | 0.9916 | 0.8750 | 0.9882 |
| | | wAMD | 0.9708 | 0.9667 | 0.7600 | 0.9964 |
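
Per-class sensitivity, specificity, PPV, and NPV such as those in the table above are conventionally obtained by treating each class in turn as "positive" and all other classes as "negative" (one-vs-rest). The sketch below shows that standard calculation from a multi-class confusion matrix. It is an illustration rather than the authors' code, the function names are hypothetical, and the 3-class counts are made up for the example and are not study data.

```python
def _ratio(numerator, denominator):
    """Divide safely, returning NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def one_vs_rest_metrics(confusion, labels):
    """Compute sensitivity, specificity, PPV and NPV for each class.

    confusion[i][j] counts samples whose true class is labels[i] and whose
    predicted class is labels[j].
    """
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]                         # true class k, predicted k
        fn = sum(confusion[k]) - tp                  # true class k, predicted other
        fp = sum(row[k] for row in confusion) - tp   # other class, predicted k
        tn = total - tp - fn - fp                    # everything else
        metrics[label] = {
            "sensitivity": _ratio(tp, tp + fn),
            "specificity": _ratio(tn, tn + fp),
            "ppv": _ratio(tp, tp + fp),
            "npv": _ratio(tn, tn + fn),
        }
    return metrics


# Hypothetical 3-class counts, for illustration only (not study data).
labels = ["A", "B", "C"]
confusion = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
for label, values in one_vs_rest_metrics(confusion, labels).items():
    print(label, {name: round(value, 4) for name, value in values.items()})
```

Because each class is scored against the pooled remainder, the negatives dominate in a nine-class problem, which is why specificity and NPV tend to be higher than sensitivity and PPV.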

| | AI results | Resident 1 (before referring to AI results) | Resident 2 (before) | Resident 3 (before) | Resident 4 (before) | Resident 1 (after referring to AI results) | Resident 2 (after) | Resident 3 (after) | Resident 4 (after) |
|---|---|---|---|---|---|---|---|---|---|
| Wrong count | 29 | 15 | 21 | 28 | 27 | 14 | 17 | 29 | 23 |
| Accuracy (%) | 83.9 | 91.7 | 88.3 | 84.4 | 85.0 | 92.2 | 90.6 | 83.9 | 87.2 |
| Time (min) | | 50 | 70 | 75 | 32 | 15 | 25 | 24 | 25 |
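
The accuracy row in the table above follows from the wrong counts once the number of test images is known. The table does not state that number; the sketch below assumes 180 images, a value inferred here because it reproduces every reported percentage (for example, 29 errors out of 180 gives 83.9%). The names `TEST_SET_SIZE` and `accuracy_percent` are hypothetical.

```python
# Assumption: 180 test images, inferred from the reported percentages and
# not stated in the table itself.
TEST_SET_SIZE = 180


def accuracy_percent(wrong, total=TEST_SET_SIZE):
    """Return accuracy in percent, rounded to one decimal place."""
    return round(100 * (total - wrong) / total, 1)


# Wrong counts and reported accuracies, paired column by column from the table.
wrong_counts = [29, 15, 21, 28, 27, 14, 17, 29, 23]
reported = [83.9, 91.7, 88.3, 84.4, 85.0, 92.2, 90.6, 83.9, 87.2]

for wrong, expected in zip(wrong_counts, reported):
    print(wrong, accuracy_percent(wrong), expected)
```

Under this assumption, a change of one misclassified image moves the accuracy by roughly 0.56 percentage points, which helps when judging how large the before/after differences between residents really are.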

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, K.M.; Heo, T.-Y.; Kim, A.; Kim, J.; Han, K.J.; Yun, J.; Min, J.K. Development of a Fundus Image-Based Deep Learning Diagnostic Tool for Various Retinal Diseases. J. Pers. Med. 2021, 11, 321. https://doi.org/10.3390/jpm11050321