1. Introduction

Post-thrombotic syndrome (PTS) is a frequent and clinically consequential complication of lower-extremity deep vein thrombosis (DVT). It is characterized by chronic pain, edema, skin changes, and functional limitation, with attendant impairment in quality of life and increased health-care utilization [1,2]. Patients with unprovoked DVT are of particular concern because long-term venous sequelae are difficult to predict at the point of care early in the clinical course [3,4]. The interventions most likely to mitigate PTS (prompt initiation and sustained use of anticoagulation, adherence to compression therapy, and structured follow-up) are time-sensitive and often resource-dependent [5]. However, routine practice seldom provides calibrated, horizon-specific estimates of absolute PTS risk, which limits shared decision-making and the targeted allocation of preventive care. Pragmatic, data-driven tools are therefore needed to translate routinely collected clinical information into individualized estimates of PTS risk during the first year after unprovoked DVT, thereby informing follow-up strategies and supportive interventions.

Venous pathophysiology provides a rationale for prespecifying candidate predictors. Proximal thrombus burden, particularly iliofemoral involvement, may impair venous outflow, damage valves, and sustain venous hypertension; in parallel, care-process variables (time to anticoagulation, duration of anticoagulation, and subsequent use of compression stockings) may modify downstream remodeling [5,6,7]. Beyond these mechanical and procedural factors, we deliberately incorporated a metabolic–inflammatory dimension: hyperuricemia was chosen as a candidate predictor, informed by prior reports linking serum urate to endothelial dysfunction, oxidative stress, and thrombo-inflammatory signaling [8,9]. To reduce nonspecific inflammatory confounding, major inflammatory conditions (e.g., active infection and autoimmune disease) were excluded, while hyperuricemia was retained as the exposure of interest. The model thus captures both actionable care processes and underlying thrombus anatomy, and it allows us to test whether a readily measurable metabolic marker adds independent prognostic information for post-thrombotic remodeling in unprovoked DVT.

Several limitations of existing prognostic studies for PTS support the need for a revised approach. Many studies are single-center and retrospective, and they often rely on conventional Cox nomograms with performance assessed at a single time point, providing limited insight into risk trajectories. Reporting of absolute-risk calibration is inconsistent, and clinical utility is seldom quantified using decision-curve methods. Moreover, some studies lack a clear separation between model development and independent evaluation in a held-out test cohort, increasing susceptibility to overfitting and optimistic performance estimates [10]. Prior work has measured actionable process-of-care factors inconsistently or omitted them altogether, and metabolic–inflammatory contributors, when addressed, have often been examined with limited control of confounding. These limitations motivate a prospective design, survival-oriented modeling across multiple horizons, and a more comprehensive evaluation, so that predictions can inform future interventions.

Survival-oriented machine learning offers a suitable framework for this problem. PTS risk unfolds over time, and clinical decisions are inherently horizon-specific. Single-time-point nomograms capture this poorly, whereas survival learners can estimate risk at multiple prespecified follow-up points while accommodating nonlinear effects and interactions between variables. A prespecified training–test workflow, with 10-fold cross-validation in the training set followed by a single evaluation in a held-out test set, helps control overfitting and yields more deployment-relevant performance estimates. Comprehensive reporting (time-dependent AUC, fixed-time ROC, concordance, calibration, overall prediction error, and decision-curve analysis) grounds the models’ clinical utility in quantifiable terms. Penalized Cox models provide the transparency needed for bedside use, whereas tree-based and boosting methods, when appropriate, offer more flexible function learning.

The objective of this study is to develop and compare multiple survival machine-learning models for predicting incident PTS after unprovoked lower-extremity DVT. All predictions are based on routinely available clinical information, and performance is quantified at clinically relevant horizons (3, 6, 9, and 12 months). The evaluation emphasizes discrimination, calibration, overall prediction error, and decision value; models generate continuous, patient-level risk estimates, with simple risk groupings as a secondary output. Model development and tuning occur entirely within the training set, and a single appraisal in an independent test set is used for final evaluation, approximating prospective use as closely as possible.

This study employs a prospective, dual-center cohort with standardized Villalta assessments at 3/6/9/12 months, a 70/30 training–test split stratified by center and event status, and harmonized preprocessing across eight survival learners (penalized Cox, CoxBoost, Random Survival Forest, Gradient Boosting Machine, survivalsvm, XGBoost with a Cox objective, superpc, and plsRcox). The intended contribution is to provide calibrated absolute-risk estimates and actionable summaries that can be integrated into clinical information systems to support tiered follow-up and preventive strategies after unprovoked DVT. External validation and implementation studies remain necessary next steps before these prediction tools can be translated into routine care.

2. Materials and Methods

2.1. Study Design and Participants

We conducted a prospective, dual-center cohort study at Fuxing Hospital and Xuanwu Hospital (Capital Medical University). The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Fuxing Hospital, Capital Medical University (protocol code 2025FXHEC-KSP043, approval date 14 January 2025). Adults (≥18 years) with a first episode of unprovoked lower-extremity DVT, confirmed by duplex ultrasound or CT venography, were enrolled. DVT location was limited to the femoral and iliofemoral veins; isolated distal DVT was not included. The index date was defined as the date of diagnosis, and participants were enrolled within a prespecified post-diagnosis window. Key exclusions were provoked DVT (post-operative/trauma, pregnancy/puerperium, or active cancer), any prior PTS, and missingness of essential baseline data beyond an allowable threshold. Because hyperuricemia was a prespecified predictor of interest, reflecting systemic inflammatory–metabolic status, conditions capable of confounding inflammatory profiles were also excluded: active infection, autoimmune disease, and chronic non-hyperuricemic inflammatory disorders. Informed consent was obtained from all subjects involved in the study. Standardized procedures for enrollment and case ascertainment, together with largely standardized data capture, were used to harmonize definitions across both sites; selection bias was considered a limited concern but cannot be fully excluded.

2.2. Outcome, Predictors, Preprocessing, and Data Split

The primary endpoint was incident PTS, adjudicated by trained assessors using the Villalta scale at 3, 6, 9, and 12 months; survival time was defined from the index date to the first occurrence of PTS, with censoring at last contact or end of study. Candidate predictors were prespecified and clinically motivated and comprised demographics and comorbidities (age, sex, body mass index (BMI), hyperuricemia, and chronic kidney disease (CKD)), thrombus characteristics (notably iliac–femoral involvement), care-process and treatment variables (time to anticoagulation, and class and duration of anticoagulant use), compression-stocking use and adherence, lifestyle factors (smoking and alcohol), and baseline laboratory values. Treatment delay (time to anticoagulant treatment) was defined as the interval (in days) from patient-reported symptom onset to initiation of therapeutic anticoagulation, not the delay after imaging diagnosis. “Anticoagulant type” was defined as the anticoagulant class used at the index presentation (initial treatment phase), whereas the overall duration of anticoagulation was recorded separately as “Anticoag Duration”. Concomitant pulmonary embolism at the index presentation was not prespecified for systematic capture in the case-report forms and was therefore not included in the current analyses; it was not an exclusion criterion. In the training set, we performed univariable followed by multivariable Cox regression to identify a parsimonious yet clinically plausible subset of predictors. Collinearity was screened, and the predictor set was reconciled accordingly before subsequent modeling. The dataset was randomly partitioned 70/30 into training and independent test sets, stratified by center and event status to support reproducible analysis.
Feature screening and all hyperparameter tuning were confined to the training set; the final model evaluation was performed once on the held-out test set.
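
The center-by-event stratified 70/30 split can be sketched as follows. This is a minimal Python illustration, not the authors' R code: the record keys (`id`, `center`, `event`) and the helper name `stratified_split` are hypothetical, and Python's `random.Random(123)` does not reproduce the random stream of R's `set.seed(123)`.

```python
import random
from collections import defaultdict


def stratified_split(records, train_frac=0.7, seed=123):
    """Split records train_frac/(1 - train_frac) within each (center, event) stratum.

    Hypothetical record layout: {"id": ..., "center": ..., "event": 0 or 1},
    where event = 1 means PTS was observed and 0 means censored.
    """
    rng = random.Random(seed)  # fixed seed -> identical split on every run
    strata = defaultdict(list)
    for rec in records:
        strata[(rec["center"], rec["event"])].append(rec)

    train, test = [], []
    for key in sorted(strata):  # sorted keys keep the shuffle order deterministic
        members = list(strata[key])
        rng.shuffle(members)
        n_train = round(train_frac * len(members))
        train.extend(members[:n_train])
        test.extend(members[n_train:])
    return train, test


if __name__ == "__main__":
    # Synthetic example: two centers, about 20% events (not study data).
    demo = [{"id": i, "center": "A" if i % 2 == 0 else "B", "event": int(i % 5 == 0)}
            for i in range(200)]
    tr, te = stratified_split(demo)
    print(len(tr), len(te))
```

Splitting within each stratum keeps both the center mix and the event proportion close to 70/30 in each partition, which matters when events are relatively sparse.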

2.3. Model Development

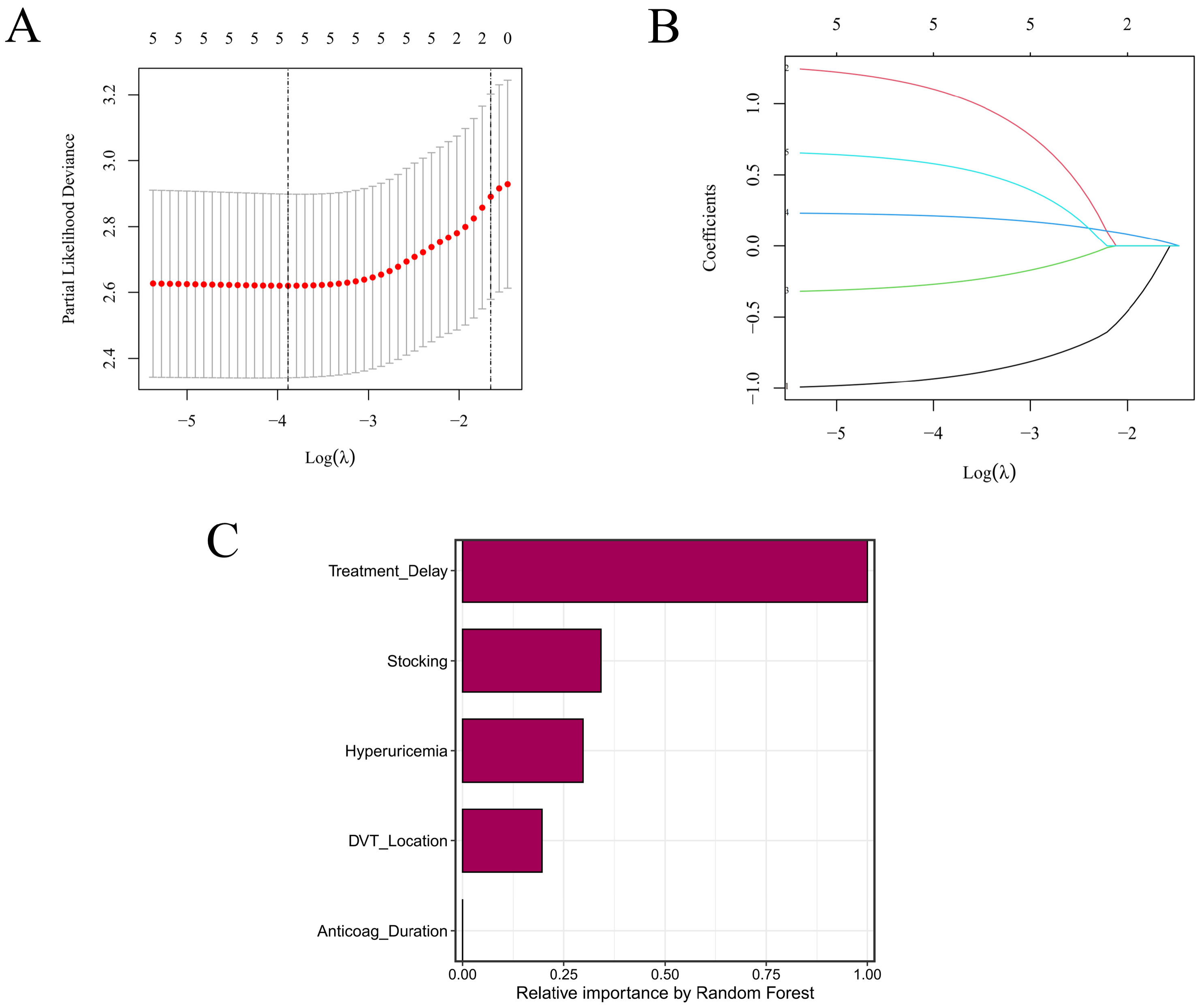

Eight survival learners were trained in the training set using 10-fold cross-validation for tuning and selection, with a fixed random seed (set.seed(123)) across pipelines. For Random Survival Forest (RSF), we used rfsrc with ntree = 1000, nodesize = 3, mtry = 2, and splitrule = "logrank", with importance, proximity, and forest output enabled. Gradient Boosting Machine (GBM) (gbm, distribution = "coxph") used n.trees = 1000, interaction.depth = 5, n.minobsinnode = 3, shrinkage = 0.01, and cv.folds = 10 (with parallel cores as available). LASSO + Cox employed glmnet (alpha = 1, maxit = 1000), with 10-fold CV (λ_min and λ_1se) for penalty selection, consistent with our LASSO CV plots. CoxBoost penalties were optimized via optimCoxBoostPenalty (start.penalty = 200), and the number of boosting steps was chosen by 10-fold cv.CoxBoost (maxstepno = 200, type = "verweij"); the final model was refit with stepno = cv.optimal and the selected penalty. For survivalsvm, we used a linear kernel (kernel = "lin_kernel") with gamma.mu = 0.1, opt.meth = "quadprog", diff.meth = "makediff3", sgf.sv = 5, sigf = 7, maxiter = 100, margin = 0.05, and bound = 10. XGBoost (Cox) used a tree booster with objective = "survival:cox", eval_metric = "cox-nloglik", eta = 0.03, max_depth = 3, subsample = 1, colsample_bytree = 1, gamma = 0.5, nrounds = 100, and early_stopping_rounds = 50. superpc was trained with s0.perc = 0.5 and cross-validated using n.fold = 10, n.threshold = 20, and n.components = 3 (with min.features = 5, max.features set to all available features, and full CV/prevalidation enabled). For plsRcox, component selection used 10-fold CV up to nt = 10, yielding a final model with nt = 3; risks were then predicted for both training and test sets. To aid interpretability, we examined LASSO coefficient paths and RSF permutation/Gini importance to summarize variable contributions across linear-penalized and tree-based learners. 
Final models were refit on the full training data using the selected hyperparameters and then evaluated once in the test set without further tuning.
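
For reference, the LASSO-penalized Cox criterion behind the glmnet fit can be written as below. This is a sketch assuming no tied event times; glmnet applies its own normalization of the likelihood term, so the 1/n scaling shown here is illustrative. Here δ_i is the event indicator, t_i the observed time, R(t_i) the risk set at t_i, and x_i the predictor vector of patient i.

```latex
\begin{align*}
\ell(\boldsymbol{\beta}) &= \sum_{i:\,\delta_i = 1} \Big[ \mathbf{x}_i^{\top}\boldsymbol{\beta}
  - \log \sum_{j \in R(t_i)} \exp\big(\mathbf{x}_j^{\top}\boldsymbol{\beta}\big) \Big] \\
\hat{\boldsymbol{\beta}}_{\lambda} &= \arg\min_{\boldsymbol{\beta}}
  \Big\{ -\tfrac{1}{n}\,\ell(\boldsymbol{\beta}) + \lambda \sum_{k=1}^{p} \lvert \beta_k \rvert \Big\}
\end{align*}
```

Under this criterion, λ_min is the penalty that minimizes the cross-validated partial-likelihood deviance, and λ_1se is the largest penalty whose cross-validated error lies within one standard error of that minimum, which yields a sparser model.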

2.4. Performance Evaluation and Clinical Utility

Model performance was first assessed within the training set using 10-fold cross-validation, followed by a single evaluation in the independently held-out test set. Accordingly, training-set metrics are presented for the model-development context, whereas test-set metrics represent the primary internal validation estimates. Discrimination was quantified with time-dependent AUCs at 3, 6, 9, and 12 months, complemented by fixed-time ROC AUCs at 9 and 12 months, with bootstrap-derived 95% CIs where applicable. Harrell’s C-index and its CI were also reported. Calibration was assessed graphically at the prespecified horizons during follow-up, with bootstrap optimism correction applied where possible. Overall prediction error was summarized with Brier and integrated Brier scores. Clinical usefulness was appraised with decision-curve analysis, comparing each model’s net benefit with the “treat-all” and “treat-none” strategies and tracing the upper envelope across models. Uncertainty around key estimates was characterized with 1000 resamples (two-sided α = 0.05). All prespecified thresholds, metrics, and plotting choices were applied identically to the training and test sets.
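
The core evaluation quantities can be illustrated with a small, dependency-free Python sketch. It is not the riskRegression/pec/timeROC code used in the analysis, and all function names are hypothetical. Assumptions: Harrell's C ignores pairs tied on time; the Brier score uses inverse-probability-of-censoring weights from a Kaplan–Meier censoring distribution (a Graf-type estimator); and net benefit for a censored outcome uses the Kaplan–Meier event probability among patients at or above the threshold, following the usual survival extension of decision-curve analysis. `risk_t` denotes each patient's predicted probability of PTS by horizon `t`.

```python
def km_survival(times, events, t, strict=False):
    """Kaplan–Meier S(t); with strict=True, returns the left limit S(t-)."""
    s = 1.0
    cut = {x for x, e in zip(times, events) if e and (x < t if strict else x <= t)}
    for u in sorted(cut):
        at_risk = sum(1 for x in times if x >= u)
        d = sum(1 for x, e in zip(times, events) if e and x == u)
        s *= 1.0 - d / at_risk
    return s


def harrell_c(times, events, risk):
    """Share of comparable pairs in which the earlier event has the higher risk."""
    concordant, comparable = 0.0, 0
    for i in range(len(times)):
        if not events[i]:
            continue  # a pair is comparable only if the shorter time is an event
        for j in range(len(times)):
            if times[i] < times[j]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5  # tied predictions count as half
    return concordant / comparable if comparable else float("nan")


def brier_ipcw(times, events, risk_t, t):
    """IPCW Brier score at horizon t for predicted event probabilities risk_t."""
    cens = [1 - e for e in events]  # censoring treated as the "event" for G
    g_t = km_survival(times, cens, t)
    total = 0.0
    for x, e, r in zip(times, events, risk_t):
        if x <= t and e:  # event by t: squared error (1 - r)^2, weight 1/G(x-)
            total += (1.0 - r) ** 2 / km_survival(times, cens, x, strict=True)
        elif x > t:  # event-free at t: squared error r^2, weight 1/G(t)
            total += r ** 2 / g_t
        # patients censored at or before t contribute 0; weights compensate
    return total / len(times)


def net_benefit(times, events, risk_t, t, pt):
    """Net benefit at threshold pt in [0, 1): TP/n - FP/n * pt / (1 - pt)."""
    high = [i for i, r in enumerate(risk_t) if r >= pt]
    if not high:
        return 0.0  # nobody flagged: equivalent to treat-none
    p_high = len(high) / len(times)
    s_high = km_survival([times[i] for i in high], [events[i] for i in high], t)
    tp = (1.0 - s_high) * p_high  # flagged and PTS by t
    fp = s_high * p_high  # flagged but PTS-free at t
    return tp - fp * pt / (1.0 - pt)
```

In this sketch, the treat-all curve is obtained by flagging everyone, e.g. `net_benefit(times, events, [1.0] * n, t, pt)`, and treat-none is 0 at every threshold; a decision curve evaluates `net_benefit` over a grid of thresholds for each model.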

2.5. Risk Stratification, Software, and Reproducibility

For each fitted model, we computed an individual risk score. Risk groups were defined separately within the training and test cohorts, using the cohort-specific median score to classify patients as high or low risk. Survival functions for these groups were estimated with the Kaplan–Meier method with Greenwood standard errors and compared using two-sided log-rank tests. Plots were generated with survminer::ggsurvplot using identical options: conf.int = TRUE, pval = TRUE, pval.method = TRUE, surv.median.line = "hv", legend.labs = c("high", "low"), ggtheme = theme_bw(), and a two-color palette; risk tables were suppressed. Time origin, censoring rules, and follow-up matched the primary analysis. All computations were performed in R (version 4.5.0) using the survival, survminer, glmnet, randomForestSRC, CoxBoost, survivalsvm, gbm, xgboost, riskRegression, pec, timeROC, pROC, superpc, plsRcox, and mice packages, among others. For reproducibility, set.seed(123) was applied across all pipelines, full hyperparameter grids were archived, and analysis environments were frozen under version control. De-identified data and scripts are stored securely on institutional servers to allow exact reruns of all analyses.
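
The median-split Kaplan–Meier comparison can be sketched in plain Python as below. This is an illustration rather than the survival/survminer code used in the study, and the helper names are hypothetical. Patients whose score equals the median are assigned to the low-risk group, a tie-handling convention assumed here because the text does not specify one; the p-value uses the chi-square distribution with one degree of freedom.

```python
import math
from statistics import median


def median_split(scores):
    """1 = high risk (score above the median), 0 = low risk (assumed tie rule)."""
    cut = median(scores)
    return [1 if s > cut else 0 for s in scores]


def km_curve(times, events):
    """Kaplan–Meier steps as (time, S(time), Greenwood SE) at each event time."""
    curve, s, gw = [], 1.0, 0.0
    for u in sorted({x for x, e in zip(times, events) if e}):
        at_risk = sum(1 for x in times if x >= u)
        d = sum(1 for x, e in zip(times, events) if e and x == u)
        s *= 1.0 - d / at_risk
        if at_risk > d:
            gw += d / (at_risk * (at_risk - d))  # Greenwood sum
            se = s * math.sqrt(gw)
        else:
            se = float("nan")  # undefined once everyone at risk has failed
        curve.append((u, s, se))
    return curve


def logrank_two_group(times, events, group):
    """Two-sided log-rank test for group in {0, 1}; returns (chi2, p)."""
    o_minus_e, var = 0.0, 0.0
    for u in sorted({x for x, e in zip(times, events) if e}):
        n = sum(1 for x in times if x >= u)
        n1 = sum(1 for x, g in zip(times, group) if x >= u and g == 1)
        d = sum(1 for x, e in zip(times, events) if e and x == u)
        d1 = sum(1 for x, e, g in zip(times, events, group) if e and x == u and g == 1)
        o_minus_e += d1 - d * n1 / n  # observed minus expected in group 1
        if n > 1:
            var += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    if var == 0:
        return 0.0, 1.0
    chi2 = o_minus_e ** 2 / var
    return chi2, math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square(1)
```

Because each cohort uses its own median, the split is descriptive; the same code applied to training and test scores yields cohort-specific groups, matching the procedure described above.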

4. Discussion

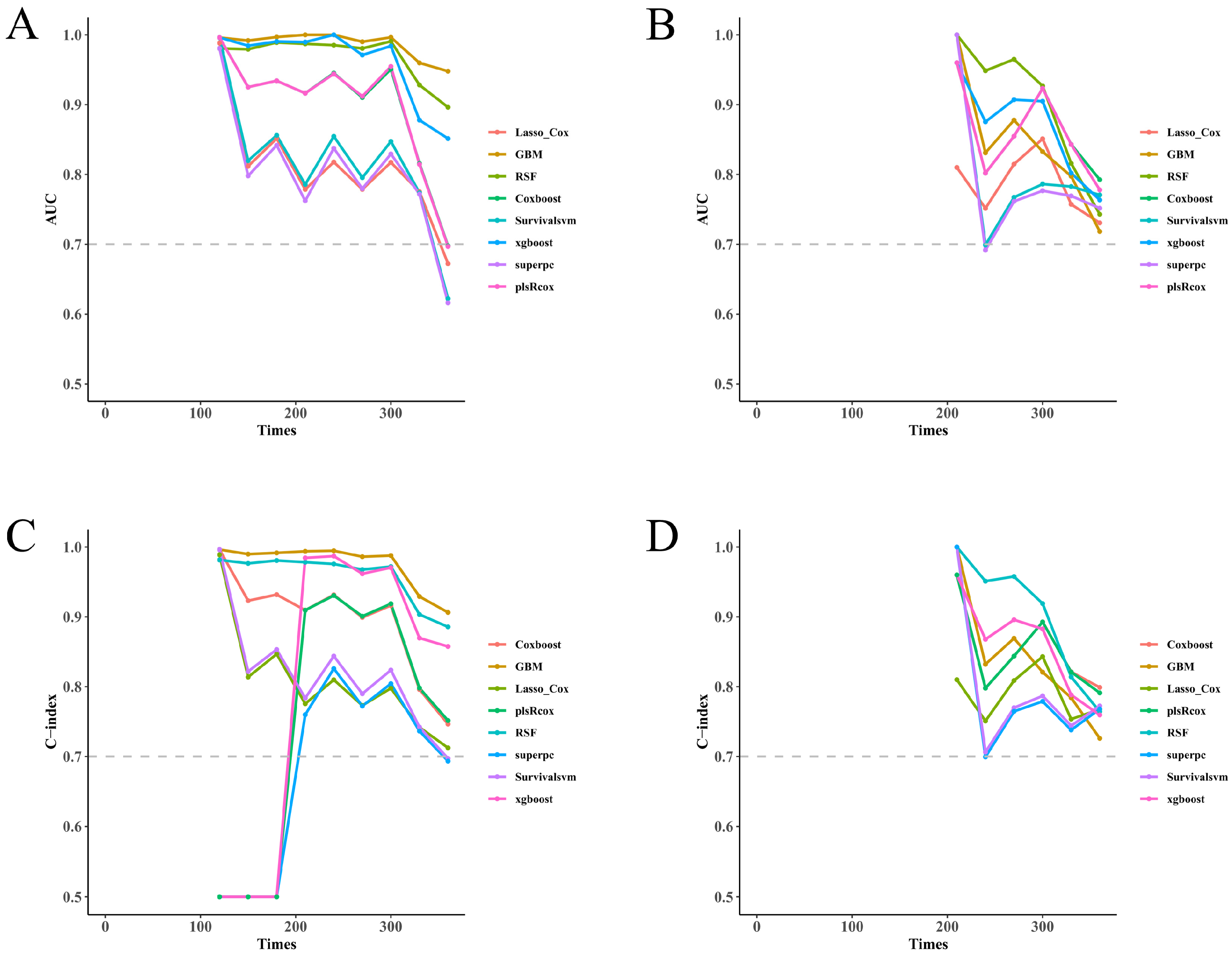

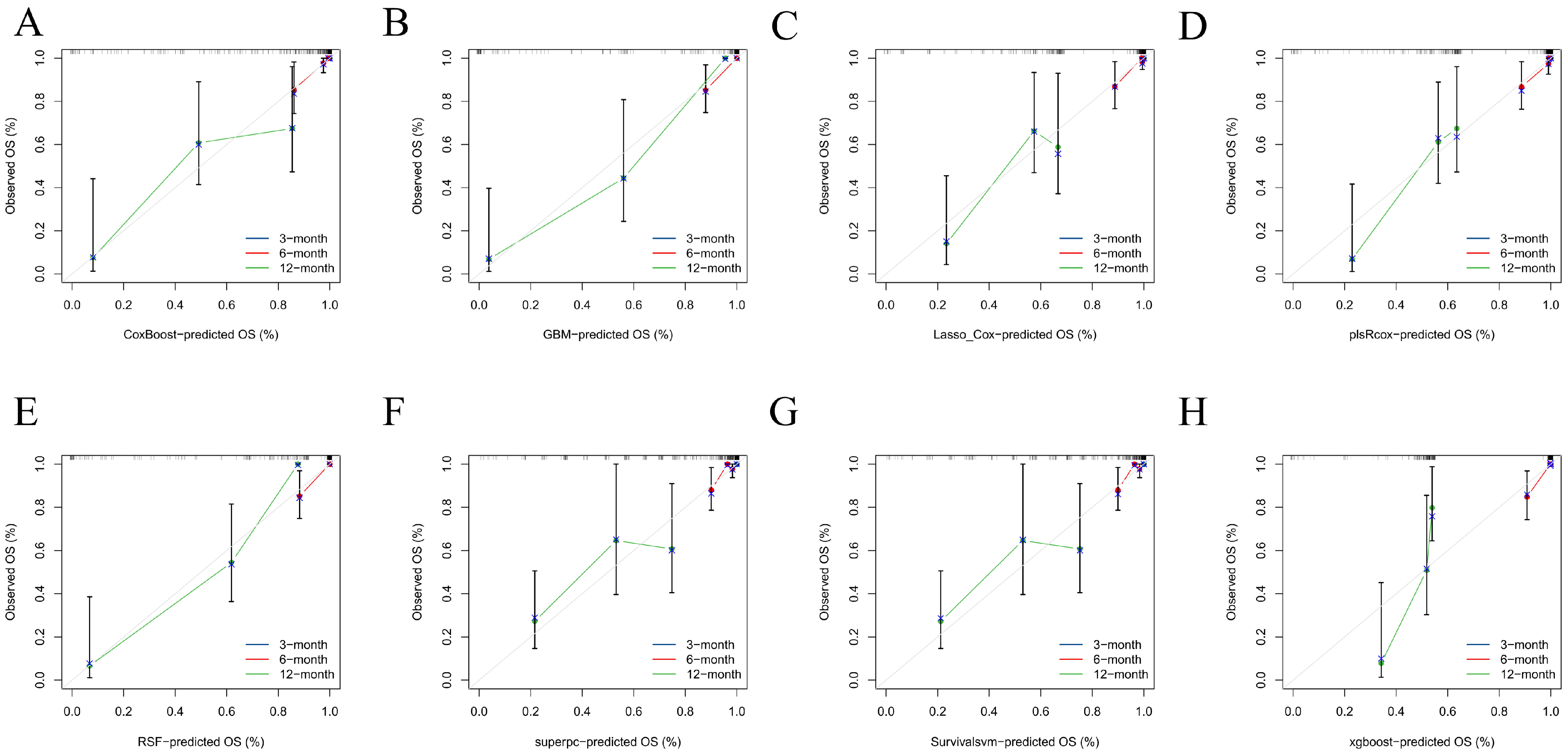

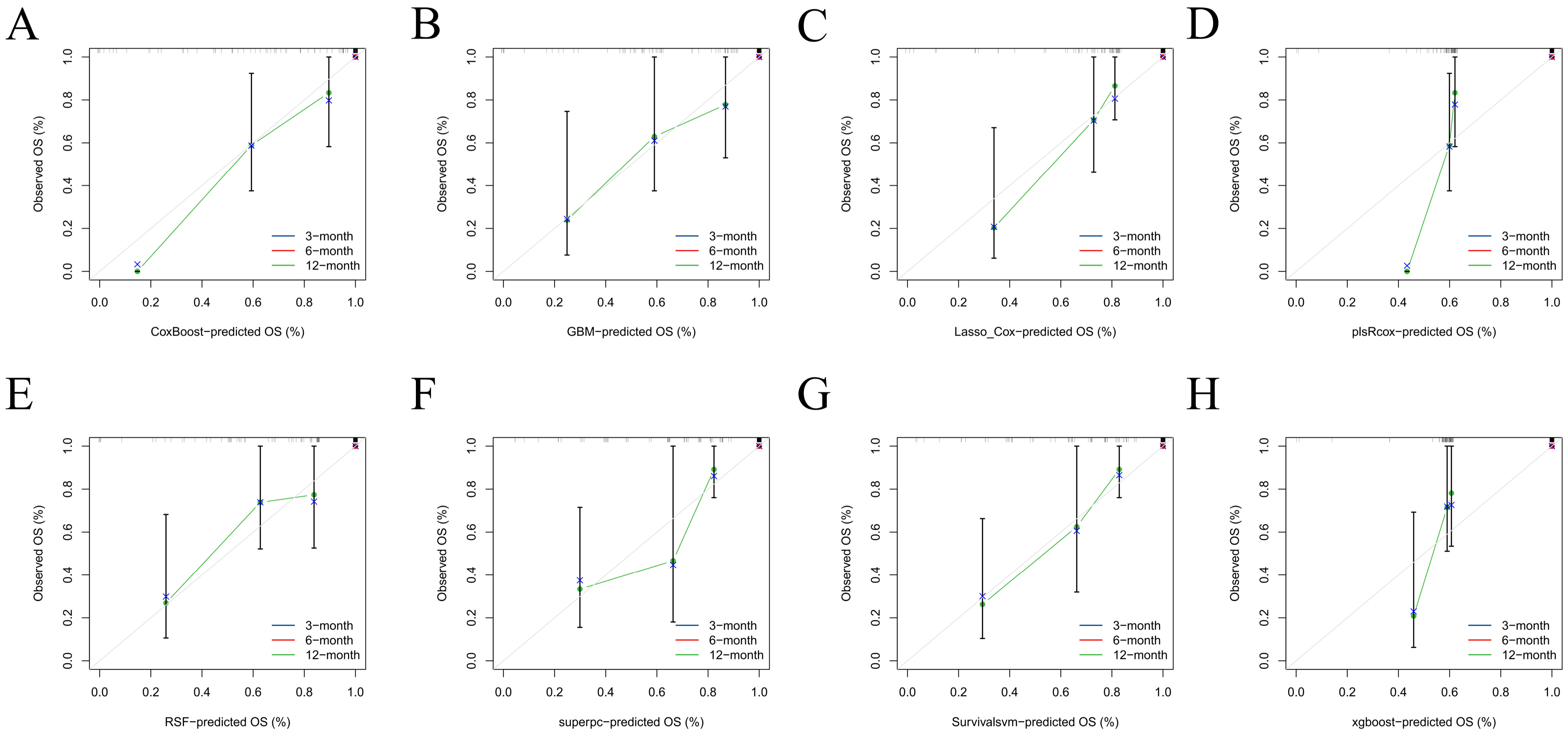

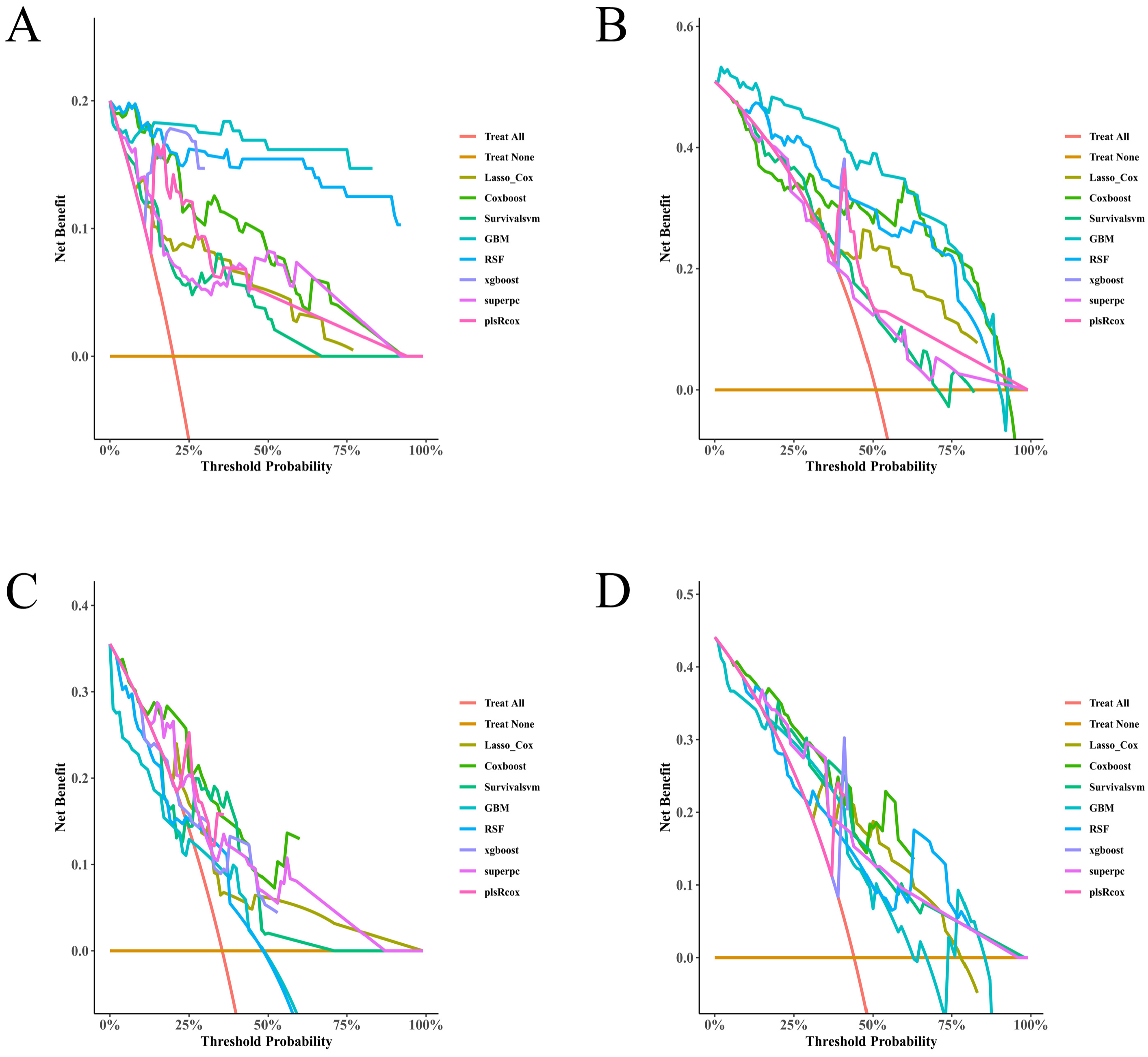

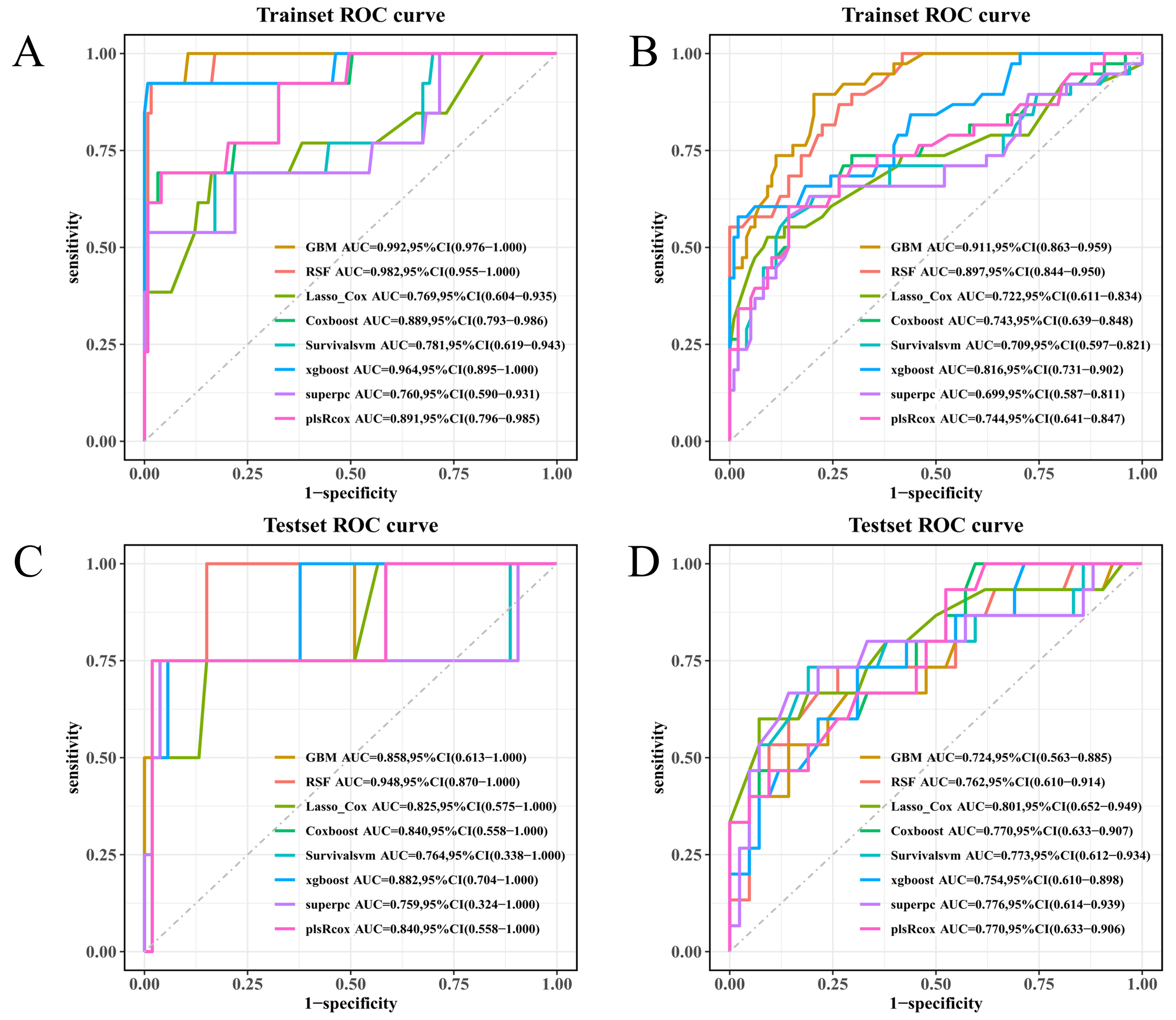

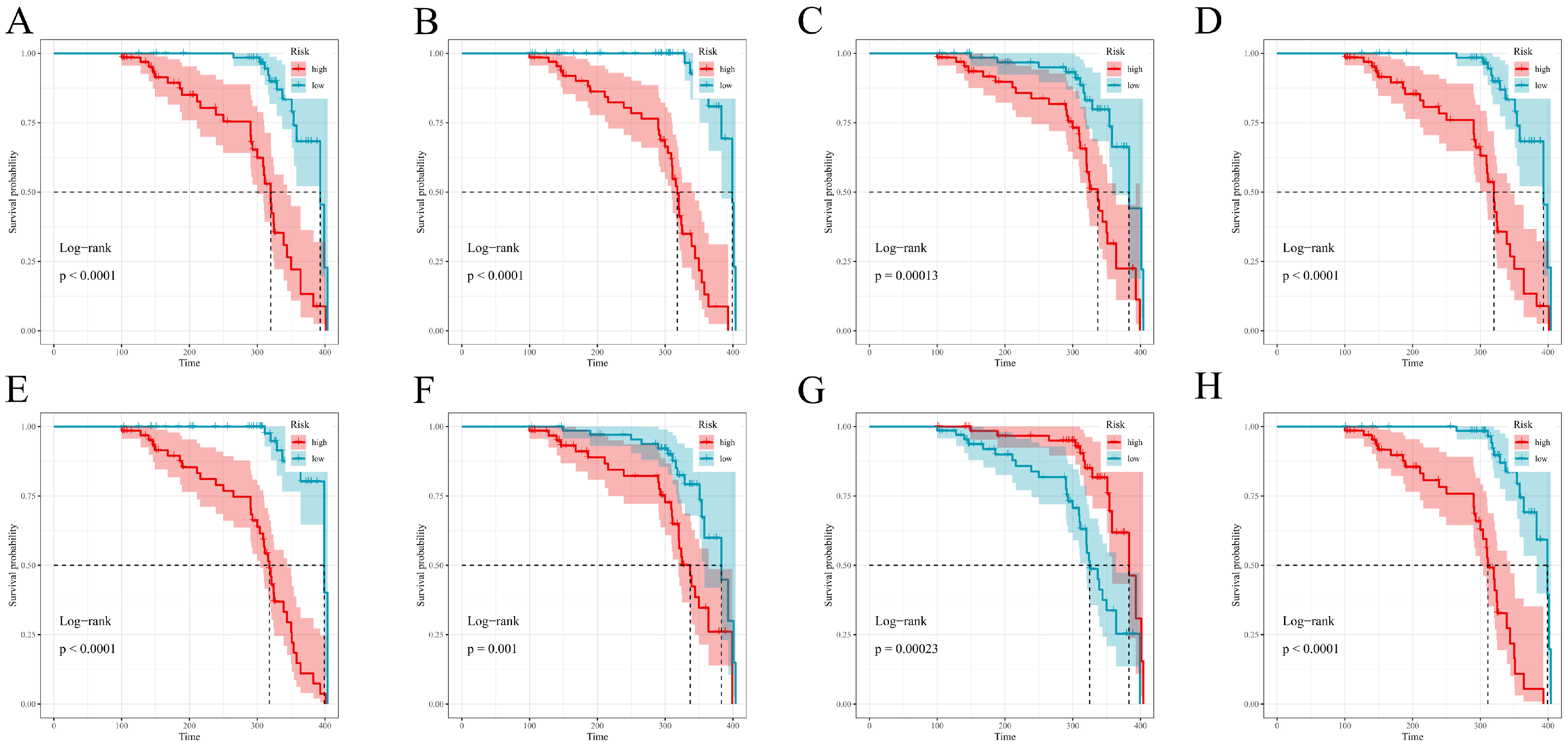

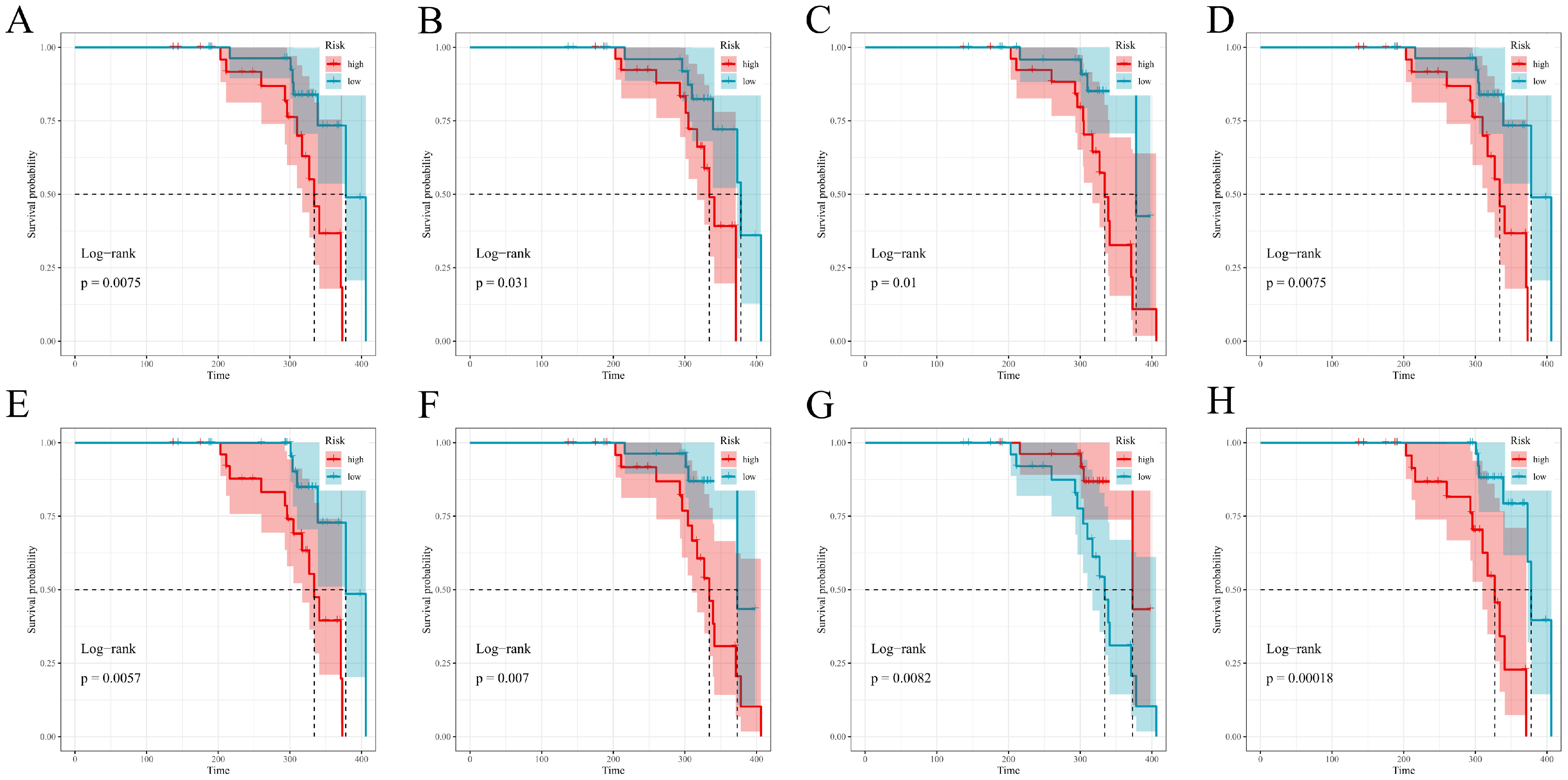

In this prospective, dual-center cohort of unprovoked lower-extremity DVT, multiple survival machine-learning models demonstrated consistent predictive performance and potential clinical utility. Discrimination was moderate to high in both the training and test cohorts as assessed by time-dependent AUC, and the relative ranking of models was broadly preserved (Figure 2A–D), with fixed-time ROC analyses at 9 and 12 months providing concordant results (Figure 6A–D). Predicted absolute risks corresponded well with observed outcomes at the prespecified horizons of 3, 6, and 12 months, indicating satisfactory calibration in both cohorts (Figures 3 and 4). Decision-curve analysis suggested positive net benefit for the top-performing models across clinically plausible thresholds, supporting the concept that model-based risk estimates could inform follow-up intensity and supportive care (Figure 5A–D). Risk scores derived from each model stratified patients into distinct PTS-free survival groups over follow-up (Figures 7 and 8). Overall, the prespecified train/test separation, 10-fold cross-validation within training, and multi-metric evaluation support reproducibility and provide a foundation for future external validation.

Prior prognostic studies of PTS have largely been retrospective and single-center, often relying on conventional Cox nomograms evaluated at a single horizon; absolute-risk calibration and explicit assessments of clinical utility have received comparatively limited attention. Across these studies, proximal thrombus burden, delayed or suboptimal anticoagulation, and inconsistent use of compression stockings have repeatedly been reported as relevant factors [11,12,13,14]. However, the effect sizes and transportability of these factors vary considerably, plausibly reflecting heterogeneity in case mix, endpoint definitions, and follow-up windows. By using a prospective dual-center cohort, strict training/test separation with 10-fold cross-validation confined to the training set, multi-horizon performance reporting, and decision-curve net benefit as a clinical utility metric, our study extends this line of work toward deployable predictive tools. A key emphasis was linking prediction to partially modifiable care processes assessed at clinically relevant time points (3, 6, and 12 months), which have been handled inconsistently in prior models.

The association of iliac–femoral thrombosis with higher PTS risk is biologically plausible: thrombus in this segment implies a greater clot burden and, by extension, more persistent outflow obstruction [7,14]. Valve injury and chronic venous hypertension are further consequences of this state, both central to the established pathophysiology of PTS [10]. The persistence of this predictor after multivariable adjustment suggests that it captures a structural substrate that demographic and treatment-related factors cannot fully account for. Time to anticoagulation emerged as another robust determinant. Earlier initiation plausibly limits thrombus propagation, promotes recanalization in at least some cases, and reduces inflammatory damage to still-competent venous valves, whereas each day of delay extends the window for thrombus organization and associated fibrosis [15,16]. From a care-delivery perspective, streamlined diagnostic pathways, timely initiation of anticoagulation, and standardized discharge processes may therefore represent actionable targets for mitigating downstream PTS risk.

To reduce inflammatory confounding, we excluded active infection, autoimmune disease, and chronic inflammatory disorders other than hyperuricemia; against this restricted background, an association with hyperuricemia can be interpreted in the context of inflammatory mechanisms relevant to venous disease. Initiation and propagation of DVT involve inflammatory pathways such as endothelial activation, leukocyte adhesion, monocyte tissue-factor expression, and NETosis, which promote thrombin generation and contribute to thrombus stabilization; systemic low-grade inflammation may prime both platelets and the endothelium [17,18,19]. Hyperuricemia may amplify these processes: xanthine oxidase-derived reactive oxygen species, reduced nitric-oxide bioavailability, and NLRP3 inflammasome activation (IL-1β/IL-18) can sustain a state of “inflammatory priming” [20], accompanied by upregulation of adhesion molecules and impaired endothelial repair. In the post-thrombotic phase, persistent venous hypertension drives sterile inflammation that culminates in vein-wall remodeling (leukocyte infiltration, MMPs, collagen deposition, and valve damage), and an ongoing pro-inflammatory, oxidative milieu may slow or impair venous healing [21,22]. Hyperuricemia may thus serve as a tractable marker of a hostile healing environment that affects both recanalization and PTS risk. Clinically, routine urate profiling within DVT pathways could inform risk conversations, and weight management, diet, and, in future, urate-lowering therapy could be considered. Causality remains to be established, but improved venous healing is an important clinical goal [23,24].

Consistent with venous-rehabilitation principles, compression-stocking use showed a protective association. Beyond edema control, effective compression reduces venous hypertension and the associated shear-induced inflammation, thereby limiting the downstream cascade toward fibrosis and valve incompetence. Because this benefit depends on adherence, our results argue for structured adherence support (education, fitting, and follow-up troubleshooting) rather than prescription of stockings alone [25]. Anticoagulation for more than 6 months was similarly associated with lower PTS risk than shorter courses in our cohort. Although anticoagulation is primarily aimed at preventing recurrent thromboembolism, an adequate duration may also promote more stable recanalization and prevent late thrombus extension, one of the scenarios most damaging to valve integrity [6,26]. Decisions regarding anticoagulation duration must continue to balance bleeding risk against competing indications; nevertheless, the observed associations support individualized discussion of time-dependent management factors. Collectively, timely anticoagulation, adherence to compression therapy, and other modifiable care-process factors identified by prediction models may help inform patient-level follow-up and supportive strategies.

For real-world translation, the risk model could be integrated into existing clinical information systems (e.g., an electronic health record or institutional electronic medical record). Implementation should (i) map the required predictors (e.g., thrombus segment, time to anticoagulation, urate level, and compression-stocking use) to routinely captured fields, (ii) compute a continuous risk estimate at prespecified follow-up time points and display it alongside brief, action-oriented guidance on follow-up intensity, and (iii) permit periodic monitoring of calibration drift and outcome-linked quality indicators (adherence, revisit rates, and patient-reported symptoms). Where computational resources are limited or transparency at the point of care is a priority, a parsimonious version of the model could be deployed, while tree-based implementations, if they offer a meaningful performance gain, could run as a back-end service. Predictive performance is not an end in itself; any implementation should ultimately help answer the clinical question the model was designed to address.
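
To show how a deployed Cox-type model could return horizon-specific absolute risks, the sketch below (plain Python, hypothetical helper names) estimates the Breslow baseline cumulative hazard H0(t) from training-set linear predictors and converts a new patient's linear predictor into 1 − exp(−H0(t)·exp(lp)). It assumes proportional hazards and that new linear predictors are computed with the same coefficients and centering as the training fit; it does not cover the tree-based learners, which produce risks differently.

```python
import math


def breslow_cumhaz(times, events, lp, t):
    """Breslow estimate of the baseline cumulative hazard H0(t).

    lp: training-set linear predictors x_i' beta from the fitted Cox model.
    """
    h0 = 0.0
    for u in sorted({x for x, e in zip(times, events) if e and x <= t}):
        d = sum(1 for x, e in zip(times, events) if e and x == u)  # events at u
        denom = sum(math.exp(l) for x, l in zip(times, lp) if x >= u)  # risk set
        h0 += d / denom
    return h0


def absolute_risk(h0_t, lp_new):
    """P(PTS by t | x) = 1 - S0(t) ** exp(lp) = 1 - exp(-H0(t) * exp(lp))."""
    return 1.0 - math.exp(-h0_t * math.exp(lp_new))


def risk_profile(times, events, lp, lp_new, horizons=(3, 6, 9, 12)):
    """Absolute risk for one new patient at each horizon (same time unit as times)."""
    return {t: absolute_risk(breslow_cumhaz(times, events, lp, t), lp_new)
            for t in horizons}
```

Because H0(t) is non-decreasing in t, the resulting risks are non-decreasing across the 3-, 6-, 9-, and 12-month horizons, which is the property a point-of-care display of risk trajectories would rely on.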

This study has several strengths. It used a prospective, dual-center cohort of unprovoked DVT with harmonized recruitment procedures and standardized Villalta assessments at 3, 6, 9, and 12 months. Model development followed a prespecified 70/30 training–test split, with 10-fold cross-validation restricted to the training set and a single final evaluation in the held-out test set. Multiple survival learners were benchmarked using consistent preprocessing and candidate-predictor handling, and performance was assessed using prespecified metrics, including discrimination, calibration, overall prediction error, and decision value. Reproducibility was supported through fixed random seeds, archived parameter grids, and a stable analysis environment. Finally, the exclusion of active infection, autoimmune disease, and most other chronic inflammatory conditions (while retaining hyperuricemia as the exposure of interest) reduced major inflammatory confounding and enabled a more targeted assessment of a metabolically related signal.

Several limitations merit consideration. This prospective observational cohort study was not registered on a public clinical trial registry. The cohort comprised proximal (femoral or iliofemoral) unprovoked DVT only; isolated distal DVT was not represented, which limits extrapolation of our findings to patients with distal DVT. Furthermore, because hyperuricemia was a prespecified predictor of interest, we excluded conditions that could substantially confound inflammatory profiles (e.g., active infection, autoimmune disease, and chronic non-hyperuricemic inflammatory disorders); our results may therefore be less generalizable to broader unprovoked DVT populations with concurrent inflammatory comorbidity. Sample size and event counts constrained precision at some horizons, particularly within subgroups, resulting in wider confidence intervals. Both centers belong to the same academic system, so transportability requires external validation. Despite targeted exclusions, residual confounding likely persists, and adherence and timing variables were captured pragmatically. Kaplan–Meier risk stratification used median splits for communication; these groupings should not be interpreted as optimal intervention thresholds. In addition, imaging-derived quantification of thrombus burden, longitudinal inflammatory biomarkers, and formal modeling of bleeding-risk trade-offs were not incorporated. Finally, the study design is predictive rather than causal, and future work could explore extensions such as competing-risk approaches.