Exploring AI’s Potential in Papilledema Diagnosis to Support Dermatological Treatment Decisions in Rural Healthcare

Abstract

1. Introduction

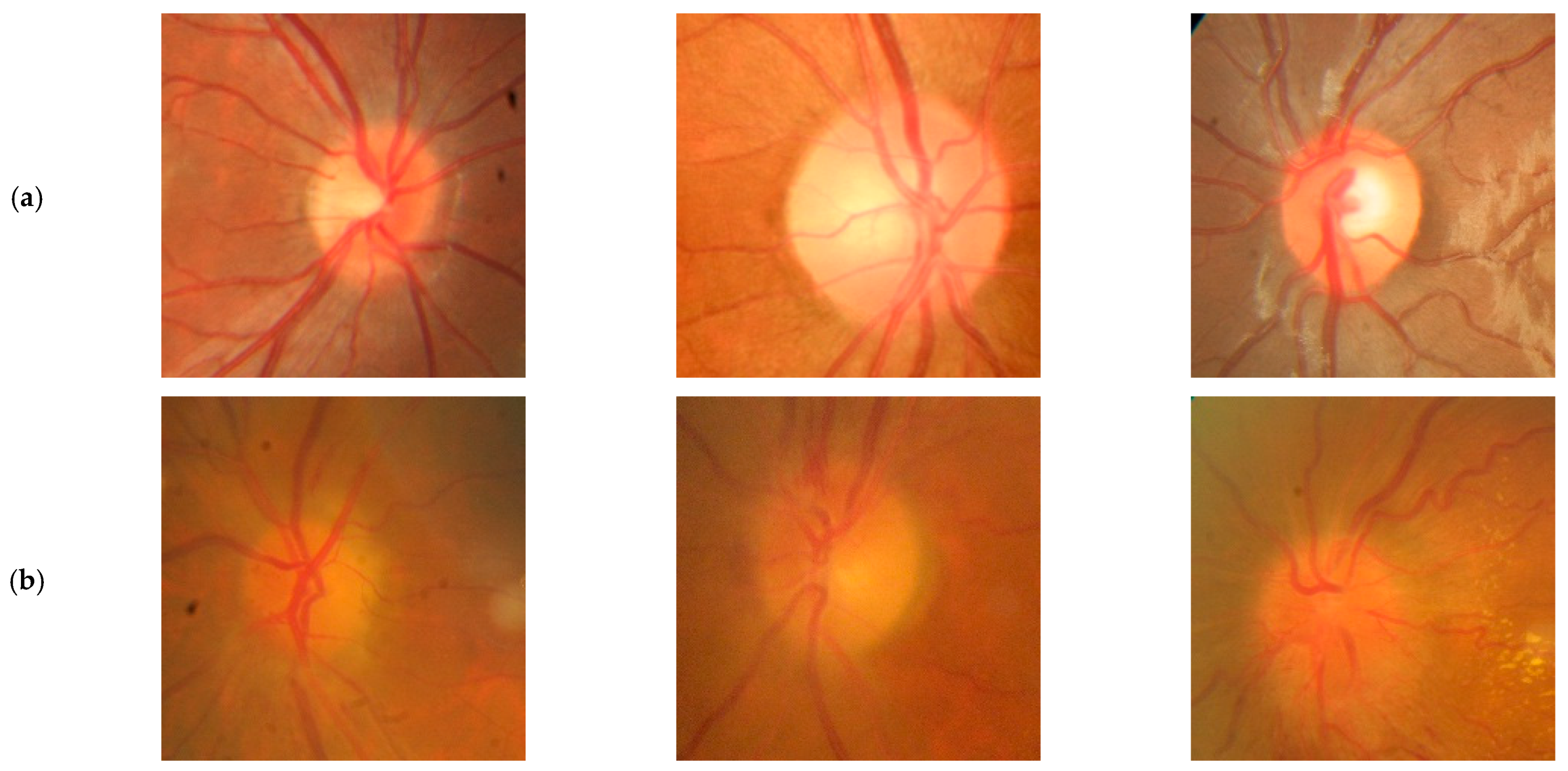

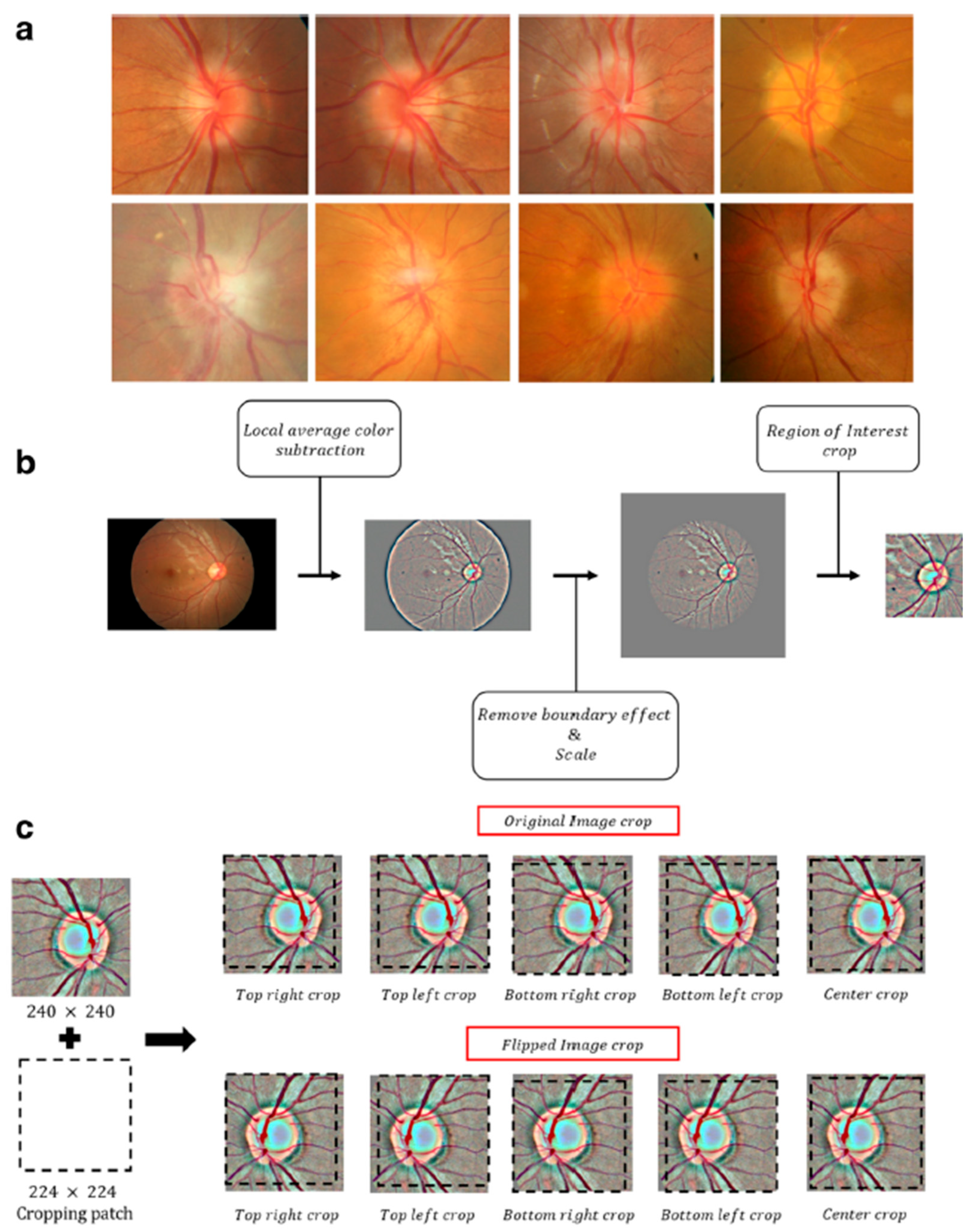

2. Methods

Statistical Analysis

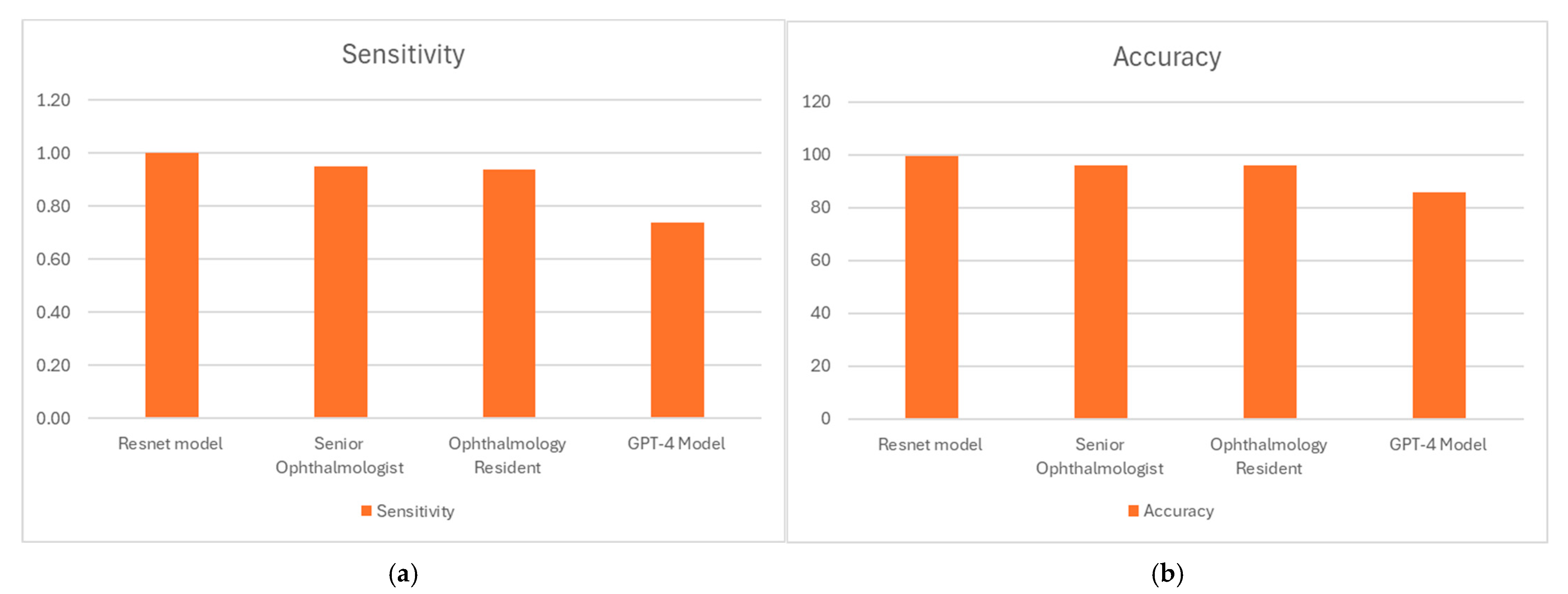

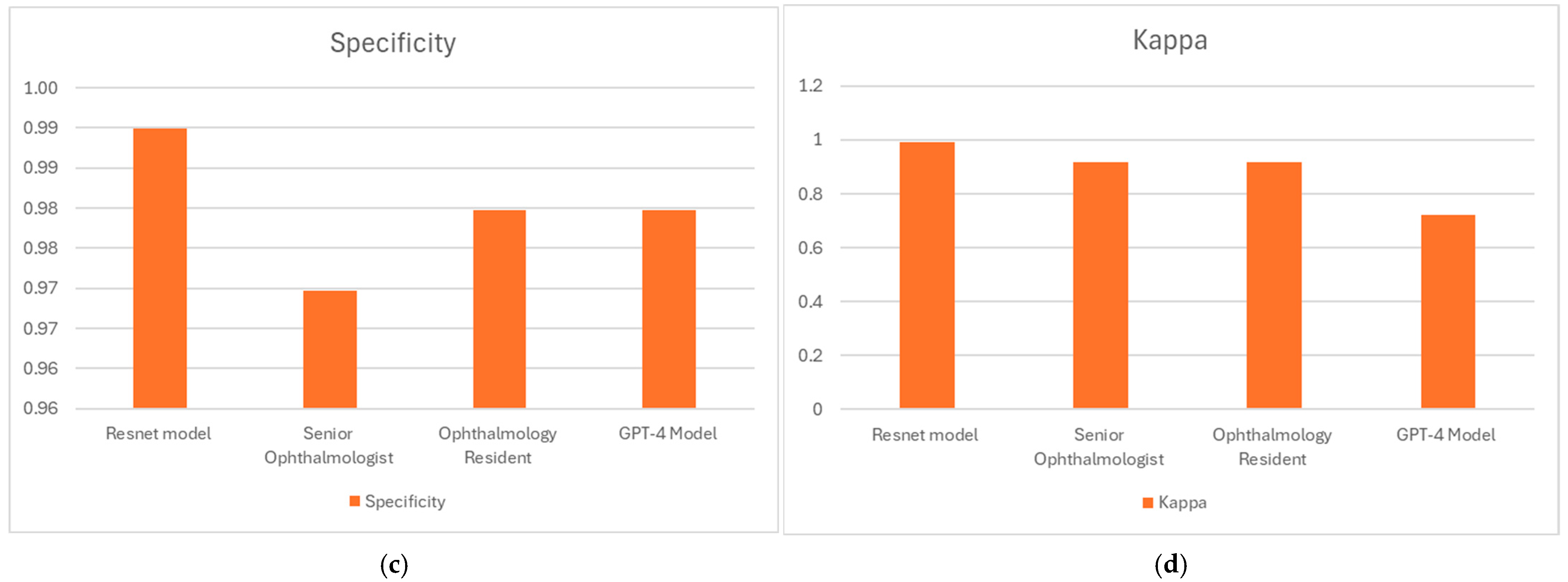

3. Results

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


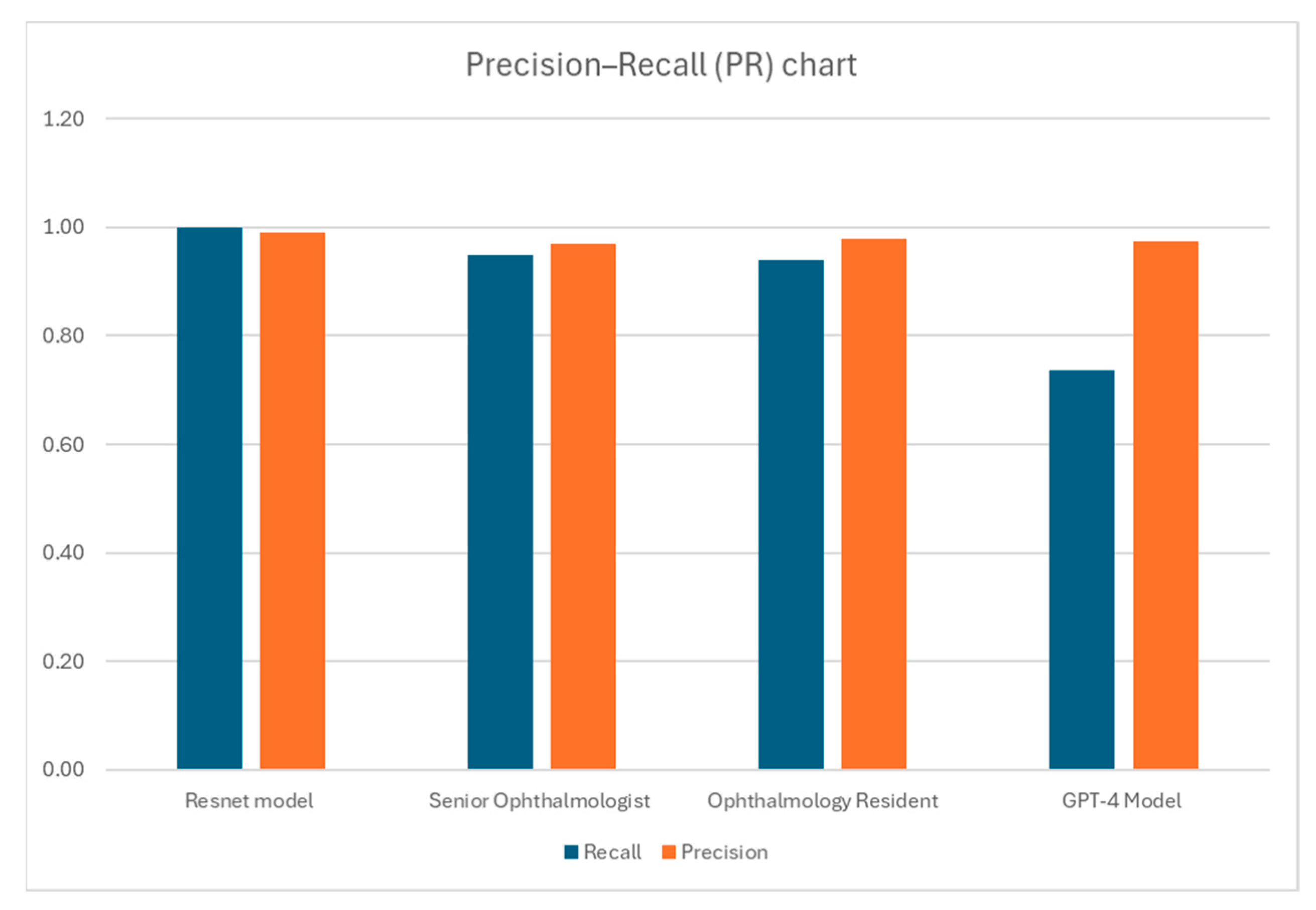

(a) ResNet AI Model

| | ResNet model P * | ResNet model N ** | Total |
|---|---|---|---|
| Labeled P | 99 | 0 | 99 |
| Labeled N | 1 | 98 | 99 |
| Total | 100 | 98 | 198 |
| Sensitivity | 1.0 | | |
| Specificity | 0.99 | | |
| Accuracy (%) | 99.5 | | |
| Cohen’s Kappa | 0.99 | | |

(b) Senior Ophthalmologists

| | Senior Ophthalmologist P * | Senior Ophthalmologist N ** | Total |
|---|---|---|---|
| Labeled P | 94 | 5 | 99 |
| Labeled N | 3 | 96 | 99 |
| Total | 97 | 101 | 198 |
| Sensitivity | 0.95 | | |
| Specificity | 0.97 | | |
| Accuracy (%) | 95.96 | | |
| Cohen’s Kappa | 0.9192 | | |

(c) Ophthalmology Resident

| | Ophthalmology Resident P * | Ophthalmology Resident N ** | Total |
|---|---|---|---|
| Labeled P | 93 | 6 | 99 |
| Labeled N | 2 | 97 | 99 |
| Total | 95 | 103 | 198 |
| Sensitivity | 0.94 | | |
| Specificity | 0.98 | | |
| Accuracy (%) | 95.96 | | |
| Cohen’s Kappa | 0.92 | | |

(d) GPT-4 Model

| | GPT-4o model P * | GPT-4o model N ** | Total |
|---|---|---|---|
| Labeled P | 73 | 26 | 99 |
| Labeled N | 2 | 97 | 99 |
| Total | 75 | 123 | 198 |
| Sensitivity | 0.74 | | |
| Specificity | 0.98 | | |
| Accuracy (%) | 85.86 | | |
| Cohen’s Kappa | 0.72 | | |
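
The summary rows in panels (a) to (d) follow from the four cell counts of each 2×2 table. The sketch below shows that arithmetic, assuming the standard definitions of sensitivity, specificity, accuracy, and Cohen's kappa for two raters and two classes. It is not the authors' analysis code; the function name `binary_metrics` and the `PANELS` dictionary are illustrative names introduced here, and the counts are copied from the tables above.

```python
def binary_metrics(tp, fn, fp, tn):
    """Summary metrics for a 2x2 table.

    tp: labeled P, rated P    fn: labeled P, rated N
    fp: labeled N, rated P    tn: labeled N, rated N
    """
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / total  # observed agreement with the labels

    # Agreement expected by chance, from the row and column marginals.
    p_both_positive = ((tp + fn) / total) * ((tp + fp) / total)
    p_both_negative = ((fp + tn) / total) * ((fn + tn) / total)
    expected = p_both_positive + p_both_negative

    kappa = (accuracy - expected) / (1 - expected)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy_pct": 100 * accuracy,
        "kappa": kappa,
    }


# Cell counts (tp, fn, fp, tn) taken from panels (a) to (d).
PANELS = {
    "ResNet": (99, 0, 1, 98),
    "Senior ophthalmologists": (94, 5, 3, 96),
    "Ophthalmology resident": (93, 6, 2, 97),
    "GPT-4o": (73, 26, 2, 97),
}

if __name__ == "__main__":
    for name, counts in PANELS.items():
        m = binary_metrics(*counts)
        print(
            f"{name}: sensitivity={m['sensitivity']:.2f}, "
            f"specificity={m['specificity']:.2f}, "
            f"accuracy={m['accuracy_pct']:.2f}%, kappa={m['kappa']:.2f}"
        )
```

Because each panel contains 99 labeled-positive and 99 labeled-negative images, the chance-agreement term equals 0.5 in every case, so kappa reduces to twice the accuracy (as a fraction) minus one. The reported values match this calculation up to rounding.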

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shapiro, J.; Atlas, M.; Fridman, N.; Cohen, I.; Khamaysi, Z.; Awwad, M.; Silverstein, N.; Kozlovsky, T.; Maharshak, I. Exploring AI’s Potential in Papilledema Diagnosis to Support Dermatological Treatment Decisions in Rural Healthcare. Diagnostics 2025, 15, 2547. https://doi.org/10.3390/diagnostics15192547
