Reproducibility of AI in Cephalometric Landmark Detection: A Preliminary Study

Abstract

1. Introduction

2. Materials and Methods

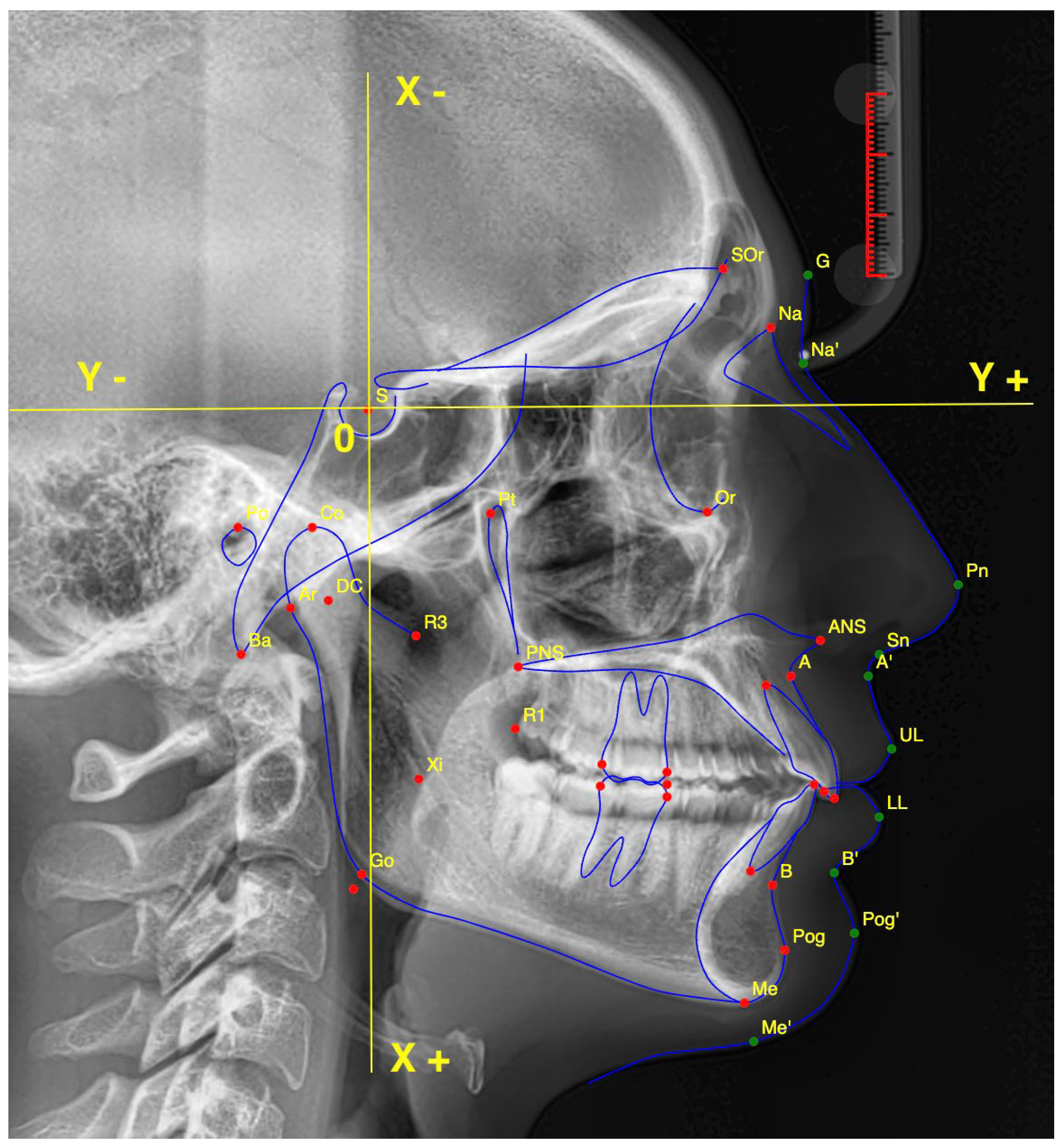

2.1. Cephalometric Landmarks

- A-point (A);

- Anterior Nasal Spine (ANS);

- Articulare (Ar);

- B-point (B);

- Gnathion (Gn);

- Gonion (Go);

- Lower Incisal Tip (L1);

- Lower Lip (LL);

- Menton (Me);

- Nasion (Na);

- Soft Tissue Pogonion (ST-Pog);

- Orbitale (Or);

- Posterior Nasal Spine (PNS);

- Porion (Po);

- Pogonion (Pog);

- Subnasale (SN);

- Upper Incisal Tip (U1);

- Upper Lip (UL).

2.2. Study Design and Experimental Groups

- Artificial Intelligence Cephalometric Tracing without Image Modification (AInocut): AI-assisted identification of the 18 landmarks was performed on the original, unmodified image. The procedure was repeated 10 times. Between trials, only the patient identification data were changed, so that the software could not recognize the image as a duplicate or reuse the result of a previous trial (memory effect).

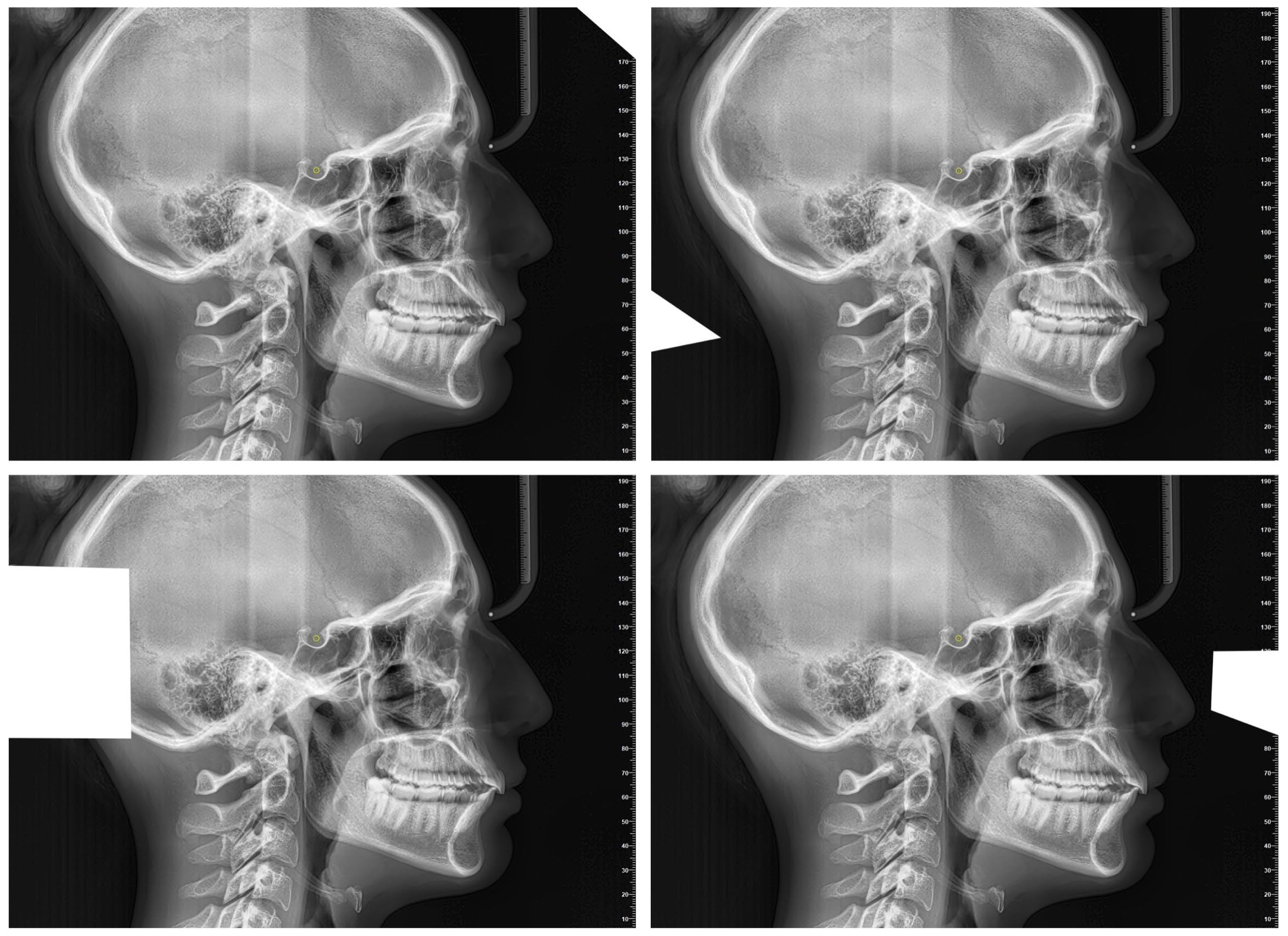

- Artificial Intelligence Cephalometric Tracing with Image Modification (AIcut): The same AI model was used to detect the landmarks, but the image was intentionally resized or cropped in each of the 10 repetitions to test the robustness and precision of the AI under altered input conditions (Figure 2). Cropping was intended to alter the grayscale values at the image margins, which the AI uses as one of its cues for orienting itself within the radiograph. Importantly, the crops did not affect any of the anatomical structures relevant to landmark localization.

- Experienced Operator Manual Cephalometric Tracing (Oper): The same 18 landmarks were traced manually by an experienced operator 10 times, with 15-day intervals between repetitions to minimize memory bias and to allow assessment of intra-operator reproducibility.

2.3. Data Processing

2.4. Statistical Analysis

3. Results

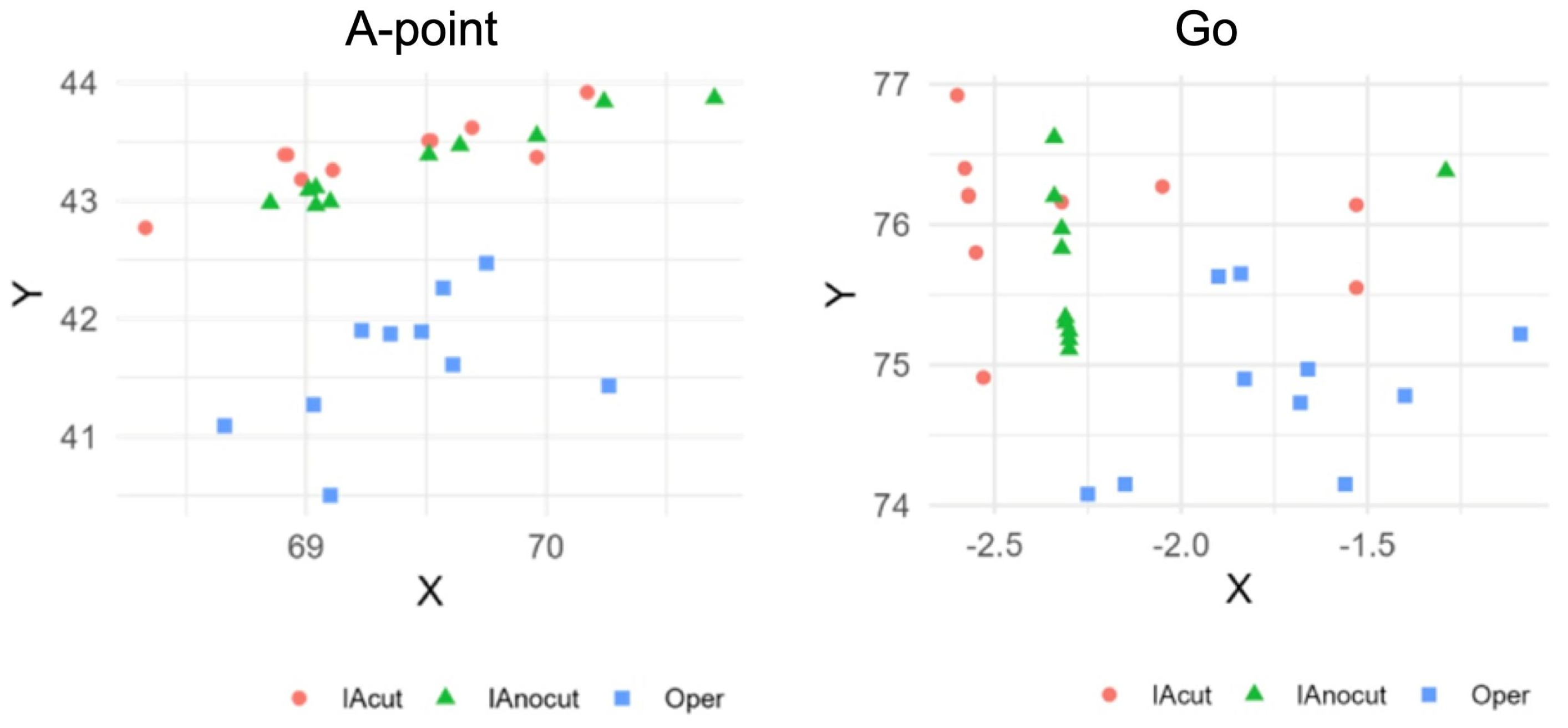

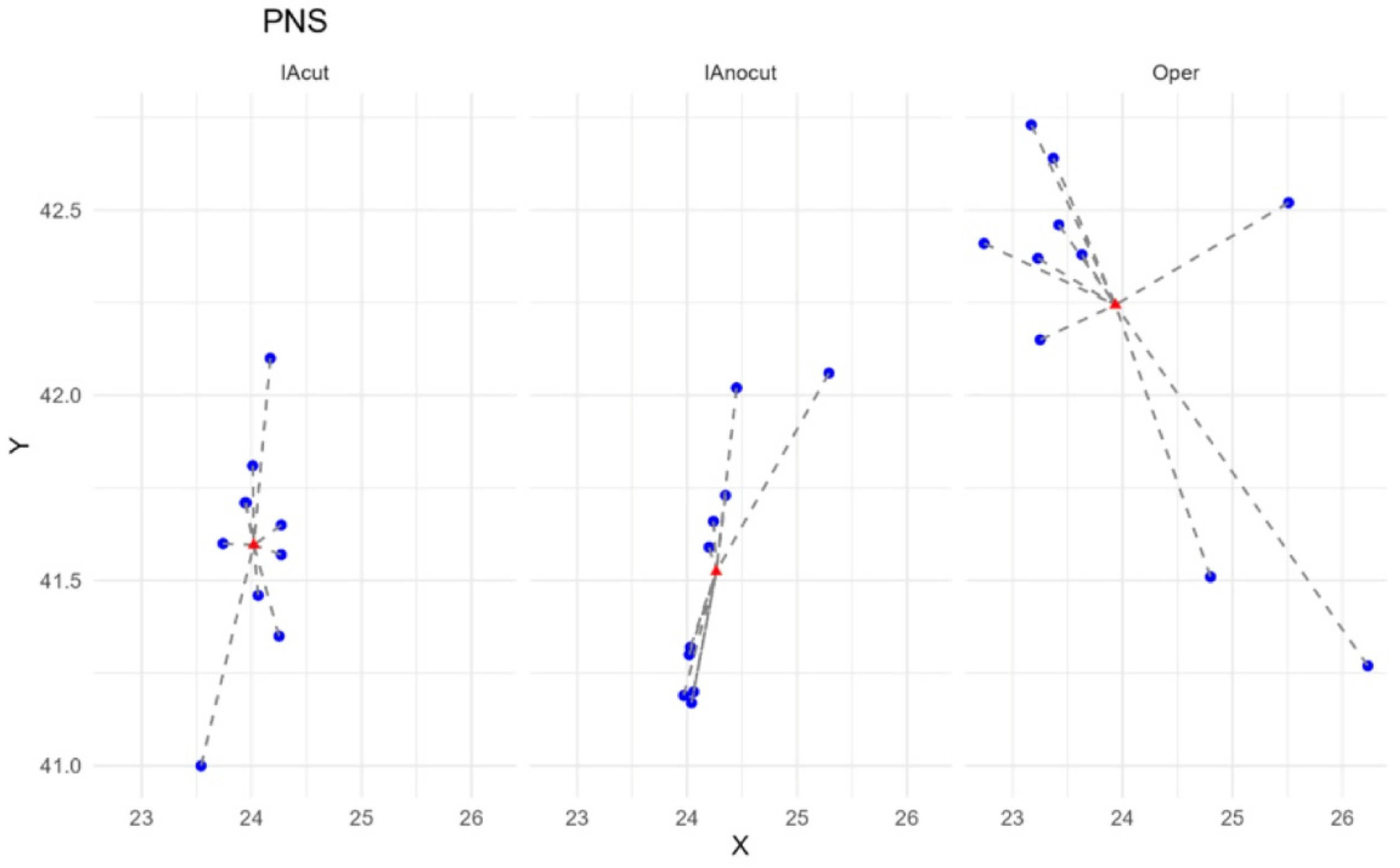

3.1. Analysis of Coordinate Variability

3.1.1. Coefficient of Variation (CV) per Single Point
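For a single landmark, the coefficient of variation (CV) of a coordinate is conventionally the standard deviation of the repeated measurements divided by their mean, usually expressed as a percentage. A minimal sketch of the per-point computation for one group's 10 repetitions follows. It assumes the coordinates are stored as (x, y) tuples in one shared reference frame and uses the sample (n − 1) standard deviation; neither choice is stated in this section, and the function names are hypothetical. Because the CV divides by the mean, it depends on where the coordinate origin lies: the same scatter yields a smaller CV for a landmark far from the origin, so values are comparable only within one coordinate frame.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """CV in percent: sample standard deviation divided by the mean, times 100."""
    m = mean(values)
    if m == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return stdev(values) / m * 100.0


def landmark_cv(trials):
    """Return (CV of x, CV of y, their average) for one landmark.

    `trials` holds one (x, y) tuple per repetition, all in the same
    coordinate frame (hypothetical data layout).
    """
    xs = [x for x, _ in trials]
    ys = [y for _, y in trials]
    cv_x = coefficient_of_variation(xs)
    cv_y = coefficient_of_variation(ys)
    return cv_x, cv_y, (cv_x + cv_y) / 2


# Hypothetical repeated tracings of one landmark, in pixels.
trials = [(100, 200), (102, 198), (98, 202)]
print(landmark_cv(trials))
```

In practice the list would hold the 10 repetitions of one landmark within one group, and the function would be called once per landmark and group.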

3.1.2. Overall Mean Coefficient of Variation by Evaluation Method
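An overall value per evaluation method can be obtained by averaging the per-landmark CVs over the 18 landmarks, separately for X and Y. In the summary table of this article, each Mean column equals the average of the corresponding X and Y values, for example (1.90 + 1.12) / 2 = 1.51 for the cropped-image AI group. With 18 X and 18 Y values, this is also what pooling all 36 values into a single mean gives. The sketch below assumes that aggregation. The function name and input layout are hypothetical, and the confidence intervals are not computed here.

```python
from statistics import mean


def method_summary(per_landmark_cv):
    """Average per-landmark CVs into one summary for an evaluation method.

    `per_landmark_cv` maps a landmark name to its (CV_x, CV_y) pair
    (hypothetical layout). Returns the mean CV for X, for Y, and their average.
    """
    cv_x = mean(cx for cx, _ in per_landmark_cv.values())
    cv_y = mean(cy for _, cy in per_landmark_cv.values())
    return {"X": cv_x, "Y": cv_y, "Mean": (cv_x + cv_y) / 2}


# Consistency check against the reported point estimates (X, Y, Mean).
reported = {
    "AIcut": (1.90, 1.12, 1.51),
    "AInocut": (1.81, 0.83, 1.32),
    "Oper": (2.15, 1.11, 1.63),
}
for group, (x, y, m) in reported.items():
    # Each reported Mean matches (X + Y) / 2 to two decimals.
    assert round((x + y) / 2, 2) == m, group
```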

3.1.3. Parameters with Highest and Lowest Variability

3.2. Analysis of Distances to the Centroid
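Distance to a centroid is a common way to summarize how tightly repeated tracings of a landmark cluster. A minimal sketch, assuming the centroid is the mean (x, y) position of the 10 repetitions within one group and that dispersion is measured as the Euclidean distance of each repetition from it; the function names are hypothetical, and distances are in the image's units (pixels unless calibrated to millimetres). Unlike a coordinate CV, these distances do not change if the coordinate origin is moved, although all repetitions must still be expressed in one common frame.

```python
import math
from statistics import mean


def centroid(points):
    """Mean (x, y) position of the repeated tracings of one landmark."""
    return mean(x for x, _ in points), mean(y for _, y in points)


def distances_to_centroid(points):
    """Euclidean distance of each repetition from the landmark's centroid."""
    cx, cy = centroid(points)
    return [math.hypot(x - cx, y - cy) for x, y in points]


# Hypothetical repetitions forming a 2 x 2 square around (1, 1).
points = [(0, 0), (2, 0), (2, 2), (0, 2)]
d = distances_to_centroid(points)
print(centroid(points), mean(d))
```

The per-repetition distances, rather than only their mean, are what a subsequent between-group comparison would typically use.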

Analysis of Variance
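A minimal sketch of a one-way analysis of variance, assuming the evaluation method (AIcut, AInocut, Oper) is the only factor and that the outcome compared is, for example, the distance to the centroid. Both the design and the function name are assumptions, not necessarily the analysis performed in the study. The F statistic is the ratio of the between-group mean square to the within-group mean square.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each argument is a sequence of observations for one group.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    group_means = [mean(g) for g in groups]
    grand_mean = mean(v for g in groups for v in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variation")
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical distances to the centroid for three groups.
f_stat, df1, df2 = one_way_anova_f([0.5, 0.7, 0.6], [0.4, 0.5, 0.45], [0.9, 1.1, 1.0])
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

The p-value follows from the F distribution with (df_between, df_within) degrees of freedom; if SciPy is available, `scipy.stats.f.sf(f_stat, df1, df2)` gives it, and `scipy.stats.f_oneway` performs the whole test in one call.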

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | AIcut X | AIcut Y | AIcut (Mean) | AInocut X | AInocut Y | AInocut (Mean) | Oper X | Oper Y | Oper (Mean) |
|---|---|---|---|---|---|---|---|---|---|
| Parameters (mean) | 1.90 | 1.12 | 1.51 | 1.81 | 0.83 | 1.32 | 2.15 | 1.11 | 1.63 |
| 95% CI | (0–4.01) | (0.75–1.49) | (0.46–2.56) | (0.20–3.42) | (0.76–0.92) | (0.52–2.12) | (0–4.39) | (0.89–1.33) | (0.53–2.73) |

| Landmark | Evaluator (Mean) |
|---|---|
| A-point | 0.87 |
| ANS | 0.81 |
| Ar | 1.51 |
| B-point | 0.84 |
| Gn | 0.86 |
| Go | 9.25 |
| L1 Incisal | 0.76 |
| Lower Lip | 0.92 |
| Me | 0.75 |
| Na | 1.56 |
| Soft Tissue Pogonion (ST-Pog) | 0.77 |
| Or | 1.40 |
| Upper Lip | 0.90 |
| U1 Incisal | 0.78 |
| Subnasale | 0.87 |
| Pog | 0.75 |
| Po | 1.49 |
| PNS | 1.69 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fracchia, D.E.; Bignotti, D.; Lai, S.; Cubeddu, S.; Curreli, F.; Lombardo, M.; Verdecchia, A.; Spinas, E. Reproducibility of AI in Cephalometric Landmark Detection: A Preliminary Study. Diagnostics 2025, 15, 2521. https://doi.org/10.3390/diagnostics15192521
