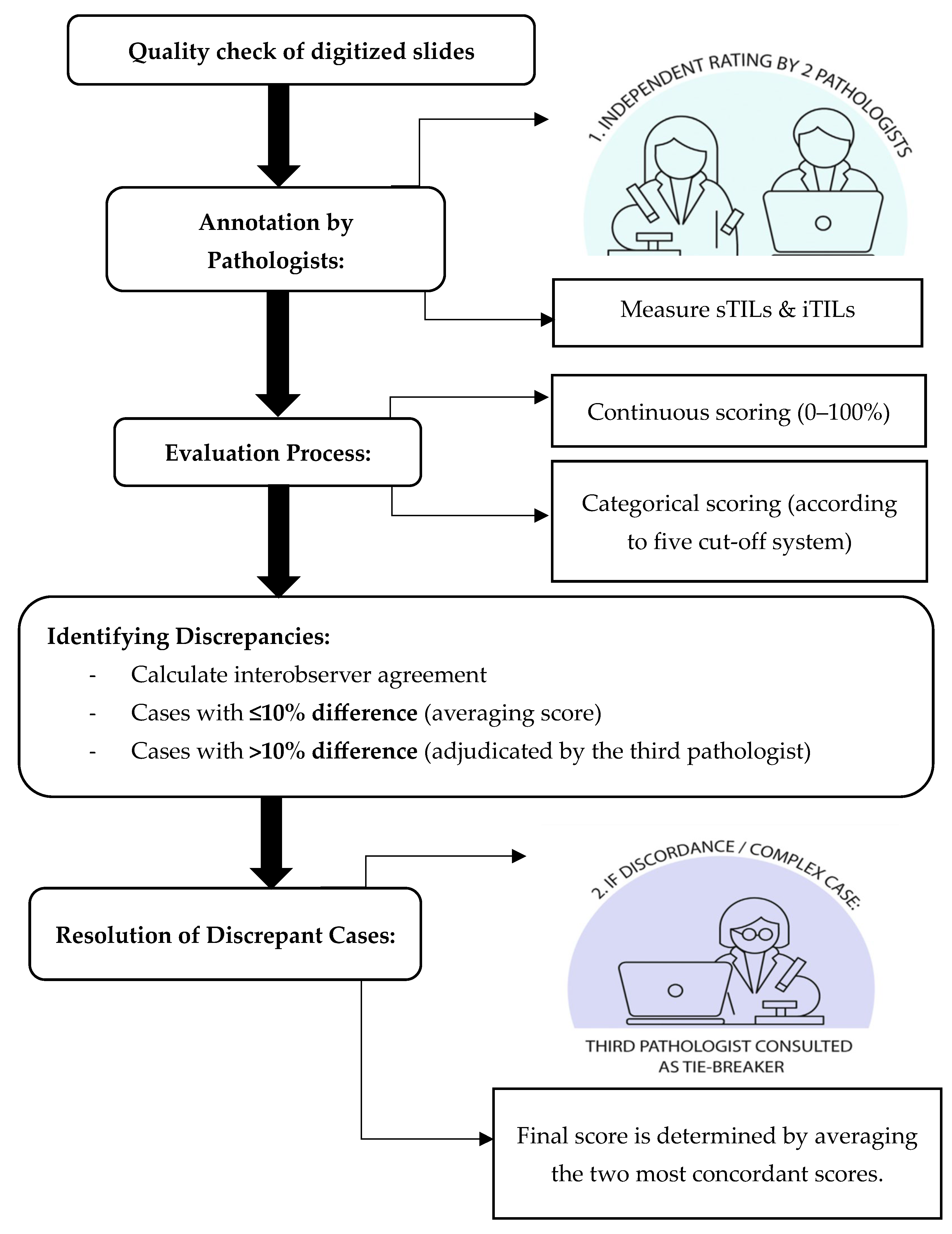

4.1. Interobserver Agreement and Consensus Review for Manual TIL Assessment

Accurate and reproducible assessment of TILs is essential for their use as prognostic biomarkers in TNBC. In this study, we evaluated interobserver variability among pathologists in scoring sTILs and iTILs using both continuous and categorical metrics. Our findings demonstrated moderate agreement for sTILs (ICC = 0.57–0.58, p < 0.001) and good agreement for iTILs (ICC = 0.70–0.75, p < 0.001), in line with previous studies reporting higher reproducibility for iTILs owing to their more clearly defined localization within tumor nests [13,27]. The small differences between the agreement and consistency ICC values, most evident in sTIL scoring, suggest that although raters ranked cases similarly, some variability in absolute scores remained, especially in the more subjective stromal regions. This reinforces the importance of standardized scoring protocols and consensus calibration to improve reproducibility in sTIL evaluation.
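The distinction between agreement and consistency ICCs can be illustrated numerically. The sketch below is a minimal, hypothetical example assuming a two-way model with one rating per rater per case (the single-measure forms often labelled ICC(A,1) and ICC(C,1)); it is not necessarily the ICC model or software used in this study, and the function name `icc_single` and the toy scores are illustrative only. A rater who scores every case exactly 10 points higher than another ranks cases identically, so consistency is perfect, but the systematic offset lowers absolute agreement.

```python
# Minimal sketch: single-measure two-way ICCs for agreement vs consistency.
# Function name and toy scores are hypothetical, not taken from the study.


def icc_single(scores):
    """Return (agreement, consistency) ICCs for an n-cases x k-raters table."""
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for a two-way layout with one rating per cell.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)               # between-case mean square
    msc = ss_cols / (k - 1)               # between-rater mean square
    mse = ss_error / ((n - 1) * (k - 1))  # residual mean square

    # Consistency ignores systematic rater offsets; agreement penalizes them.
    consistency = (msr - mse) / (msr + (k - 1) * mse)
    agreement = (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
    return agreement, consistency


# Hypothetical scores: rater 2 always scores 10 points above rater 1.
toy = [[5, 15], [20, 30], [40, 50], [60, 70], [10, 20]]
agreement, consistency = icc_single(toy)
print(round(agreement, 3), round(consistency, 3))  # 0.912 1.0
```

Because the agreement form adds a between-rater term to the denominator, it falls below the consistency form whenever between-rater variance exceeds residual error, which is the pattern described above for sTILs.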

In contrast, the higher agreement seen for iTILs suggests that identifying lymphocytes within tumor cell nests is more straightforward and consistent across observers [18]. The reduced reproducibility in sTIL scoring likely reflects the interpretive challenges associated with assessing lymphocytes dispersed across variable stromal regions. This heterogeneity may lead to subjective variation in identifying representative fields, especially in areas with ambiguous tumor–stroma boundaries or dense focal infiltrates.

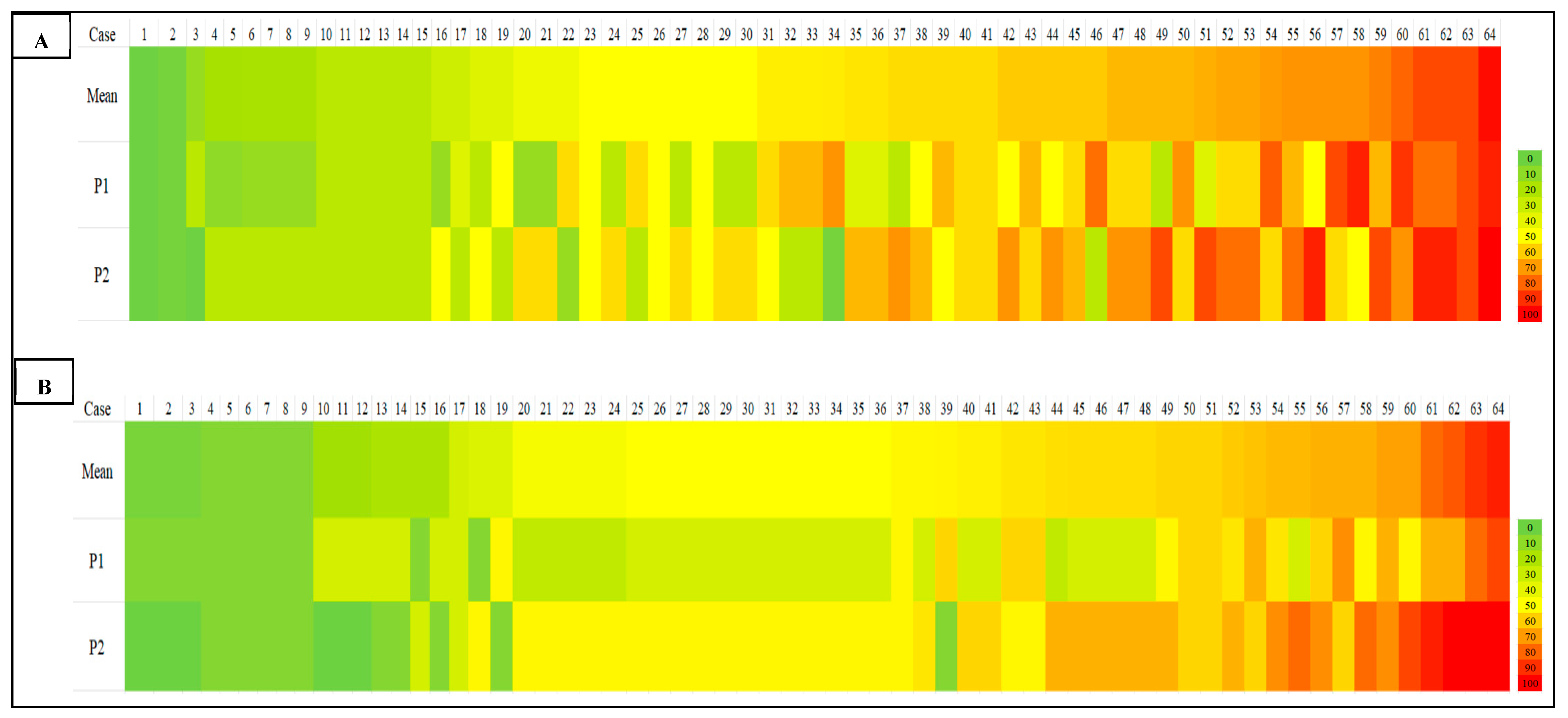

4.4. Heatmap Visualization of Scoring Discrepancies Across TIL Density Levels

Our heatmap analysis revealed notable variability in TIL scoring, particularly in cases with intermediate to high TIL densities, for both sTILs and iTILs. Pronounced color shifts between yellow, orange, and red across similar cases reflected inconsistent density estimates between observers, highlighting the subjectivity inherent in manual evaluation. One promising strategy to address this is the use of artificial intelligence (AI)-driven models for automated TIL (aTIL) quantification. A recent systematic review identified 27 studies applying such approaches in breast cancer, most of which used deep learning architectures, such as convolutional neural networks (CNNs) and fully convolutional networks (FCNs), for tasks such as image segmentation and lymphocyte detection. These models were generally trained on pathologist-annotated ground-truth datasets, and 58% of the studies reported moderate to strong correlation (r = 0.6–0.98) between AI-generated outputs and manual TIL scores [28], underscoring their potential to enhance reproducibility and diagnostic precision.

In summary, the notable interobserver variability shown in Tables 3–5 and Figures 2 and 3, despite a calibration session, illustrates the difficulty of achieving reproducibility in manual TIL scoring. This variability was most pronounced in regions with moderate-to-high TIL densities, where the lack of a clear morphological cut-off may have contributed to greater interpretive subjectivity. Such discrepancies reflect the intrinsic limitations of visual scoring, which remains highly context-dependent and susceptible to individual bias, particularly in histologically complex or ambiguous regions such as those with poorly defined tumor–stroma interfaces or clustered lymphoid infiltrates. These findings align with previous literature and together emphasize the need for more objective, standardized frameworks, potentially supported by digital pathology and AI-based quantification tools, to minimize inconsistencies and enhance reproducibility in both clinical and research settings.

Collectively, evidence from prior studies suggests that concordance between manual TIL (mTIL) assessment and automated TIL (aTIL) scoring ranges from weak to strong, driven primarily by differences in algorithm design, training data, and study methodology [4,5,20,29,30,31,32,33,34,35]. While these findings reinforce the growing potential of AI-based tools in pathology, they also reveal substantial variability in performance. Automated models may struggle to replicate the nuanced judgment of experienced pathologists, particularly in histologically complex cases. This underscores the importance of ongoing validation, refinement, and clinical benchmarking of AI algorithms before their routine integration into diagnostic workflows can be justified.

4.5. Impact of Consensus Review on Scoring Consistency

Of the 64 cases assessed, 36 showed scoring discrepancies exceeding 10% between the two primary observers. Following adjudication by a third pathologist, interobserver agreement improved markedly for both sTILs (ICC = 0.70–0.81) and iTILs (ICC = 0.81–0.84). This highlights the utility of structured consensus review in enhancing scoring reliability and reducing variability. Pairwise kappa analysis confirmed these improvements, with perfect agreement in iTIL scoring at the 50% cut-off (κ = 1.00 between P1 and P3). Overall, cases that underwent consensus review showed improved interobserver agreement, supporting the value of multi-observer assessment in reducing subjectivity and enhancing consistency, particularly in cases with initial scoring discrepancies.
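The triage step described above, in which cases whose two primary scores differed by more than 10% were referred to a third pathologist, can be sketched as follows. This is a hypothetical illustration: the threshold comes from the text, but the 10% is assumed here to mean an absolute difference of 10 percentage points, and the resolution rules (mean of a concordant pair, median of three scores for an adjudicated case) are assumptions for the example, not necessarily how consensus scores were derived in this study. The function names are likewise illustrative.

```python
# Hypothetical sketch of flagging discordant cases for third-reader review.
# The 10-point threshold follows the text; the resolution rules are assumed.
from statistics import median

THRESHOLD = 10.0  # assumed: absolute difference in TIL percentage points


def needs_adjudication(p1, p2, threshold=THRESHOLD):
    """True when the two primary scores differ by more than the threshold."""
    return abs(p1 - p2) > threshold


def resolve(p1, p2, p3=None, threshold=THRESHOLD):
    """Mean of P1/P2 if concordant; otherwise median of P1, P2 and P3."""
    if not needs_adjudication(p1, p2, threshold):
        return (p1 + p2) / 2
    if p3 is None:
        raise ValueError("discordant case requires a third-pathologist score")
    return median([p1, p2, p3])


# Hypothetical cases: (P1, P2, P3 or None when no adjudication was needed).
cases = {"case_01": (20, 25, None), "case_02": (10, 40, 30)}
for case_id, (p1, p2, p3) in cases.items():
    print(case_id, needs_adjudication(p1, p2), resolve(p1, p2, p3))
# case_01 False 22.5
# case_02 True 30
```

A median rule is used here only because it keeps an adjudicated score within the range of the observers' scores; other rules, such as adopting the third pathologist's score or a discussed consensus value, would fit the same triage structure.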

Consensus review methodologies have proven valuable in diagnostic settings involving subjective assessments and may serve as a quality control measure in pathology workflows, especially in multicenter trials or AI training datasets [36]. These improvements underscore the importance of incorporating consensus-based scoring into clinical and research workflows, particularly when addressing cases that exhibit significant interobserver variability.

As shown in Tables 3–5, the level of interobserver agreement measured by Cohen’s kappa varied with the cut-off threshold applied for TIL categorization. Notably, kappa values for sTIL assessment tended to be lower at intermediate cut-offs, particularly around 30%, which approximates the mean sTIL value in our cohort. This reduction reflects increased classification discordance when both observers assign scores close to the cut-off: small numerical differences then translate into disagreement in categorical classification (e.g., high vs. low TILs). In contrast, more extreme cut-offs (e.g., 10% and 50%) yielded higher kappa values because fewer cases had scores near the threshold; most cases fell consistently into one category, reducing the opportunity for disagreement even in the absence of true concordance. This phenomenon highlights a key limitation in interpreting kappa statistics when cut-off-based classification is applied to continuous scoring data. These findings emphasize the importance of selecting cut-off points based not only on clinical validity but also on the score distribution, to avoid skewed agreement metrics that misrepresent the true extent of interobserver variability.
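The cut-off effect can be reproduced with a toy example. The sketch below dichotomizes two hypothetical observers' continuous sTIL scores at 10%, 30%, and 50% and computes unweighted Cohen's kappa at each cut-off. The scores, the convention that a score equal to the cut-off counts as "high", and the function names are assumptions for illustration. In this toy data, two cases differ by only 7 and 3 points but fall on opposite sides of 30%, which lowers kappa to 0.5 at that threshold, while no case straddles 10% or 50%.

```python
# Minimal sketch: Cohen's kappa after dichotomizing continuous TIL scores.
# Scores and the ">= cut-off means high" convention are hypothetical.


def cohen_kappa(labels_a, labels_b):
    """Unweighted Cohen's kappa for two equal-length label sequences."""
    n = len(labels_a)
    categories = set(labels_a) | set(labels_b)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each observer's marginal category frequencies.
    expected = sum(
        (labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        return float("nan")  # both observers used one identical category
    return (observed - expected) / (1 - expected)


def kappa_at_cutoff(scores_a, scores_b, cutoff):
    """Label each score high (>= cutoff) or low, then compute kappa."""
    high_a = [s >= cutoff for s in scores_a]
    high_b = [s >= cutoff for s in scores_b]
    return cohen_kappa(high_a, high_b)


# Hypothetical sTIL percentages; two cases straddle the 30% cut-off.
p1 = [5, 25, 28, 32, 35, 60, 70, 15]
p2 = [8, 32, 26, 29, 38, 55, 75, 12]
for cut in (10, 30, 50):
    print(cut, kappa_at_cutoff(p1, p2, cut))
# 10 1.0
# 30 0.5
# 50 1.0
```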

4.6. Contributing Factors to Interobserver Discrepancies

To better understand the factors underlying scoring disagreements, the discrepant cases were reviewed in detail, and discussions with the participating pathologists were undertaken to gather expert perspectives on the potential histological causes. Several potential contributors to interobserver discrepancies in TIL scoring were identified. Among these, three morphologic features were most consistently associated with discrepant cases: (1) heterogeneous distribution of TILs, (2) poorly defined tumor–stroma boundaries, and (3) focal dense lymphoid aggregates. Each of the 36 discrepant cases was reviewed and categorized accordingly, and the frequency and nature of each feature across discrepant cases are summarized in Table 6. The most frequent feature was heterogeneous TIL distribution (32%), followed by poorly defined tumor–stroma boundaries (30%) and focal lymphoid aggregates (20%). Several cases exhibited more than one contributing factor, suggesting that combined histological complexity can exacerbate scoring variability.

Other contributing factors include necrosis and immune cell mimics, such as apoptotic bodies or reactive stromal cells, which may further interfere with accurate TIL identification. Reactive plasma cells sometimes closely resembled tumor cells, leading to possible misinterpretation during assessment. In cases with extensive tumor necrosis, it was challenging to distinguish viable tumor tissue from the surrounding stroma, which in turn obscured the identification of infiltrating lymphocytes. These sources of error are inherently subjective and can mislead even experienced pathologists. In several cases, TILs were densely packed into small, focal areas, making it difficult to determine whether those regions represented the overall immune response; consequently, observers selected different regions for evaluation, which contributed to scoring differences. These observations are consistent with previous reports indicating that tissue complexity, biological heterogeneity, and technical quality significantly influence TIL interpretation [19,20,37,38], and that such factors can produce notable variability even among experienced pathologists, particularly for ambiguous histological features.

Clinicopathological factors such as tumor size, nodal involvement, histological grade, and prior neoadjuvant chemotherapy may also influence interobserver variability in manual TIL scoring. These factors can modulate the immune microenvironment, thereby affecting the density and spatial distribution of tumor-infiltrating lymphocytes. For instance, larger tumors, tumors with extensive necrosis, or tumors showing treatment-induced regression may present with heterogeneous immune cell infiltration, creating interpretive challenges during manual assessment. Structured training programs focused on these morphologic pitfalls, along with consensus guidelines on region-of-interest (ROI) selection, could help reduce this variability and improve scoring consistency.

The absence of a widely accepted gold standard for TIL quantification remains a significant challenge in clinical and research applications. Although the TIL-WG has developed guidelines to enhance consistency in sTIL assessment, subjectivity persists because of interpretive variation, particularly in morphologically complex tissue regions. In this study, the observed interobserver variability highlights the inherent limitations of manual scoring, which is influenced by heterogeneous TIL distribution, ill-defined tumor–stroma boundaries, and focal dense lymphoid infiltrates. These challenges emphasize the need for a standardized quantification framework for TIL assessment. In parallel, there is growing support for integrating AI-based tools, which could improve consistency and objectivity in TIL quantification, especially in histologically complex or borderline cases. Despite advances in automated detection and multi-target segmentation, clinical adoption of AI in pathology remains limited. Barriers include interobserver variability in annotated datasets, complex tissue morphology, inconsistent labelling standards, and the limited transparency of AI decision-making [39,40]. These challenges highlight the need for robust, interpretable, and scalable AI models that can be embedded into real-world pathology workflows. This is particularly critical in TNBC, where accurate TIL quantification is essential for prognostic classification and treatment planning.

Although this study did not directly evaluate AI, the findings provide a valuable benchmark for future studies that aim to compare manual scoring with automated approaches. Moving forward, combining expert review with AI-driven support systems could be key to improving the reliability of TIL assessment in both clinical and research settings.

4.7. Limitations and Future Directions

This study presents several novel contributions to the field of TIL assessment in TNBC, including a dual-compartment analysis of both stromal and intratumoral TILs, the integration of CD4+ and CD8+ IHC as visual aids to guide manual annotation on H&E-stained slides, and a descriptive, semi-quantitative examination of histopathological features underlying interobserver discrepancies. By incorporating these elements, our work offers a more comprehensive and biologically grounded evaluation of TILs in TNBC while also addressing critical sources of interpretive variability not sufficiently explored in previous interobserver studies.

This study has several limitations that warrant consideration. First, the sample size was relatively modest (n = 64), which may limit the generalizability of the findings to broader TNBC populations. Second, although the pathologists involved had comparable experience and were trained in standardized scoring protocols, inherent subjectivity in manual TIL assessment could still influence outcomes. Third, while interobserver variability was thoroughly examined, the study did not assess intra-observer consistency, which is also relevant for clinical reproducibility. Although IHC staining with CD4 and CD8 markers was used to assist pathologists in estimating TIL density during manual TIL scoring, the inclusion of another T-cell marker, such as CD3, could have offered a more comprehensive representation of total TIL infiltration. Additionally, although the potential of AI-assisted TIL scoring was discussed, no automated tools were directly evaluated in this study. This limits our ability to draw empirical conclusions about the comparative performance of manual versus automated approaches. Finally, the absence of clinical outcome correlation (e.g., survival or treatment response) restricts interpretation of the prognostic relevance of the observed scoring discrepancies.

While this study establishes the reliability and limitations of manual TIL scoring, future work should explore integrating automated digital pathology tools for TIL quantification. Given the subjectivity and variability inherent in manual assessment, especially in morphologically complex regions, AI-based models offer an attractive pathway to reproducibility and scalability. Recent studies have demonstrated that deep learning approaches, such as CNNs and FCNs, can accurately segment lymphocytes and quantify TILs across large histological fields with minimal observer bias [20,33,34,35]. Tools such as QuPath, high-throughput analytics for learning and optimization (HALO) AI, and in-house trained pipelines have achieved moderate to strong correlation (r = 0.6–0.98) with pathologist-annotated ground truth. Applying such tools to our digitized TNBC slide dataset could provide valuable comparative insights into scoring consistency and identify cases where AI either resolves or contributes to interobserver disagreement.

A potential analytical pipeline would run AI-based TIL quantification on the same set of whole-slide images and evaluate agreement metrics (e.g., ICC, Bland–Altman analysis, and Spearman correlation) against consensus human scores. Additionally, discordant or outlier cases, particularly those resolved through adjudication by a third pathologist, could be re-evaluated to determine whether AI models align more closely with consensus outcomes. This would benchmark the practical utility of AI in reducing scoring bias and improving throughput in clinical settings. Although the current study focused exclusively on interobserver variability in manual TIL scoring of H&E-stained slides, we acknowledge the transformative potential of automated image analysis tools, such as ImageJ (https://imagej.net/software/imagej/, accessed on 3 February 2025), FIJI (https://imagej.net/software/fiji/, accessed on 3 February 2025), and QuPath (https://qupath.github.io/, accessed on 3 February 2025), in establishing reproducible reference standards. These platforms enable high-throughput, scalable TIL quantification, which could reduce subjectivity and enhance consistency across institutions. Ultimately, validating AI outputs against high-fidelity consensus scores may enable hybrid models in which human oversight is retained for ambiguous regions and AI handles bulk quantification. Future efforts should focus on building explainable AI frameworks that incorporate histological context, handle variable TIL distributions, and provide confidence scores to guide clinical decision making.
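Two of the agreement measures named in this proposed pipeline can be sketched without external dependencies. The example below assumes paired per-case scores on the same 0–100% scale (for instance, hypothetical AI outputs against consensus scores); the function names and values are illustrative, the 95% limits of agreement use the conventional bias ± 1.96 × SD of the differences, and Spearman's rho is computed as the Pearson correlation of average ranks so that tied scores are handled. In practice, an established statistical package would normally be preferred.

```python
# Hypothetical sketch: Bland-Altman limits of agreement and Spearman's rho
# for paired automated vs consensus TIL scores. All inputs are illustrative.
from statistics import mean, stdev


def bland_altman(method_a, method_b):
    """Return (bias, lower_loa, upper_loa) for paired measurements."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)  # 1.96 x sample SD of the differences
    return bias, bias - spread, bias + spread


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions i..j share the mean rank
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation between average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one method has no rank spread
    return cov / (var_x * var_y) ** 0.5


# Hypothetical per-case sTIL percentages.
consensus = [5, 15, 30, 45, 70]
ai_scores = [8, 12, 35, 50, 66]
print(bland_altman(ai_scores, consensus))
print(spearman_rho(ai_scores, consensus))  # 1.0 (same rank order)
```

The two measures are complementary: a perfect rank correlation, as in this toy data, can coexist with non-zero bias and wide limits of agreement, which is why absolute-agreement metrics are worth reporting alongside correlation.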

Another limitation of the present study is the lack of stratification by clinicopathological parameters, such as tumor size, lymph node status, histological grade, and treatment history. These variables are known to influence the tumor immune landscape and may confound the interpretation of TIL density and distribution. Stratified evaluation of TIL scoring agreement across clinical subgroups would provide a more detailed understanding of the contexts in which variability is most pronounced and may inform more refined scoring strategies.