Heart Murmur Detection in Phonocardiogram Data Leveraging Data Augmentation and Artificial Intelligence

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Availability

2.2. Audio Synchronization

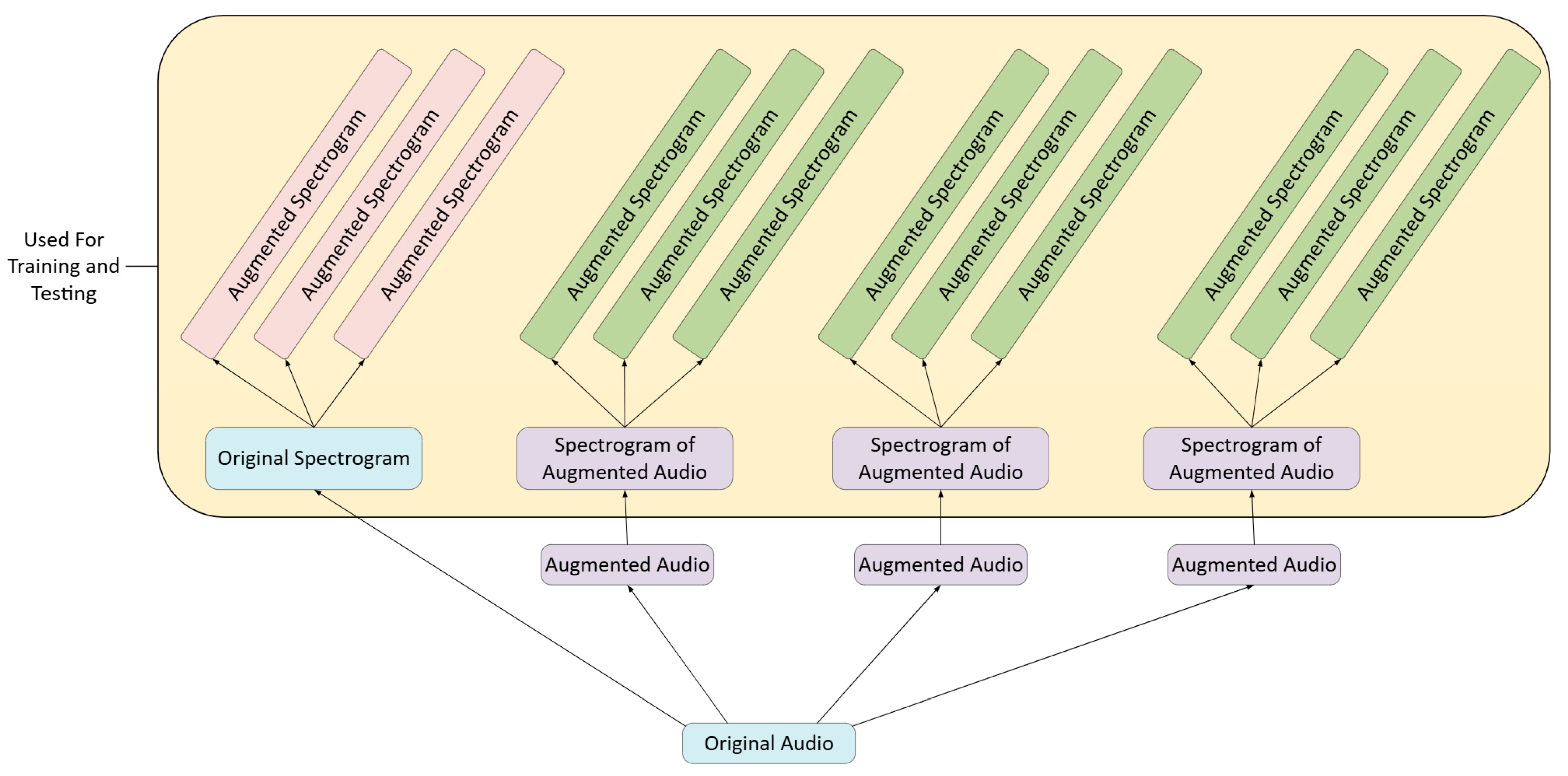

2.3. Data Augmentation

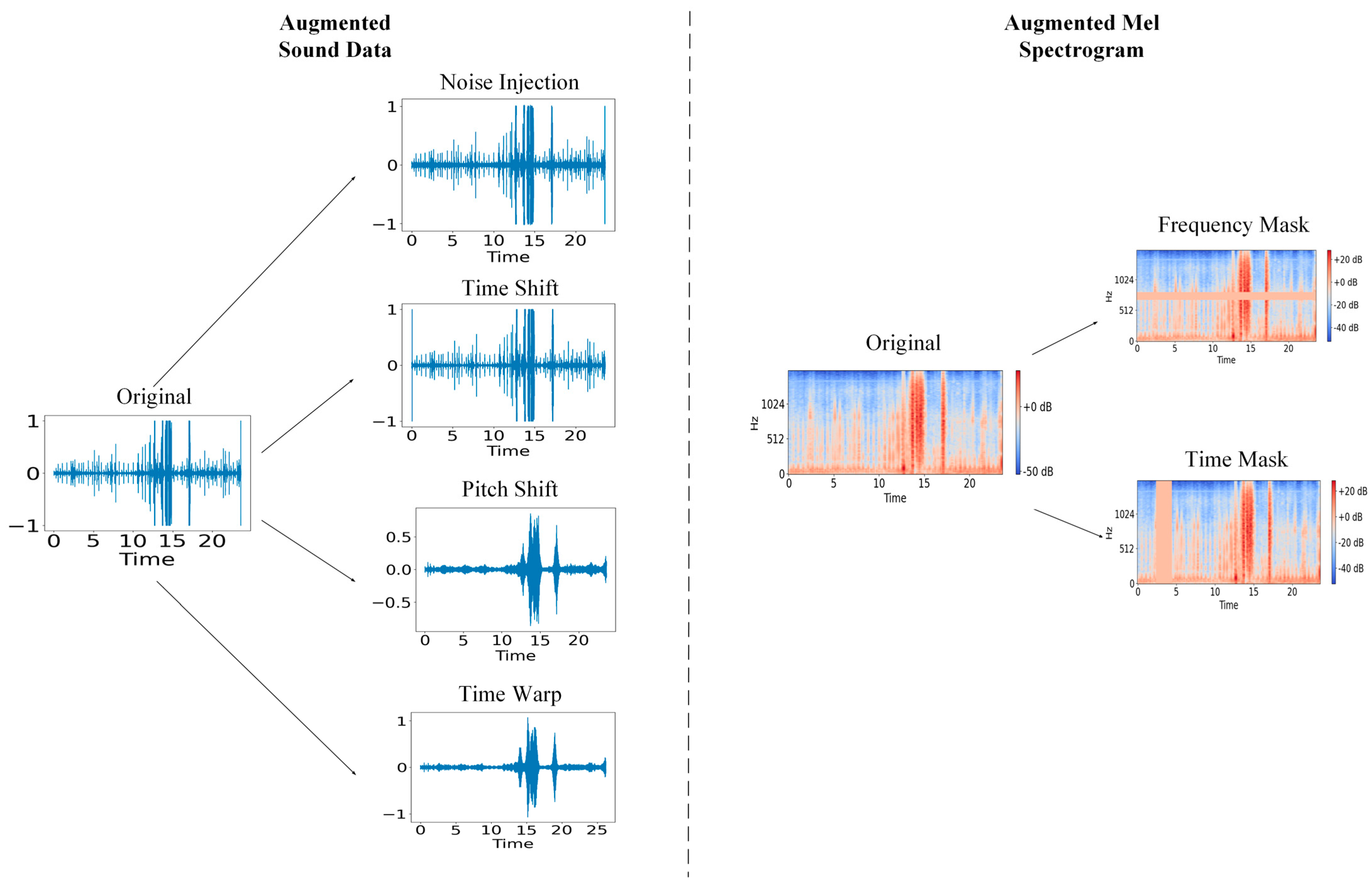

2.3.1. Audio File Augmentation
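
This section does not detail which waveform-level transforms were applied. As a hedged illustration of one common audio-domain augmentation, not necessarily one used in this study, the sketch below adds white Gaussian noise scaled to an exact target signal-to-noise ratio. The helper name `add_noise_at_snr`, its parameters, and the toy sine input are assumptions for illustration only.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, seed=0):
    """Return signal plus white Gaussian noise scaled to an exact target SNR (dB).

    Hypothetical helper for illustration; not taken from the study.
    """
    if not signal:
        raise ValueError("signal must be non-empty")
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10**(SNR_dB / 10)
    target_p_noise = p_signal / 10 ** (snr_db / 10)
    scale = math.sqrt(target_p_noise / p_noise)
    return [x + scale * n for x, n in zip(signal, noise)]


# Toy example: a 5 Hz sine sampled at 1 kHz (hypothetical values, not PCG data)
tone = [math.sin(2 * math.pi * 5 * t / 1000) for t in range(1000)]
noisy = add_noise_at_snr(tone, snr_db=20.0, seed=1)
```

Rescaling the drawn noise to the target power, rather than drawing it with a precomputed standard deviation, makes the realized SNR exact for each augmented copy.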

2.3.2. Mel Spectrogram Augmentation
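
One widely used family of spectrogram-level augmentations masks a random band of frequency bins and a random span of time frames, as in SpecAugment; whether this study used masking is not stated in this section. The sketch below shows the idea on a spectrogram stored as a list of mel-bin rows. The helper `mask_spectrogram`, its width parameters, and the mean-value fill (zero fill is another common choice) are illustrative assumptions.

```python
import random


def mask_spectrogram(spec, max_freq_width, max_time_width, rng):
    """Return a masked copy of spec (rows: mel bins, columns: time frames).

    One band of up to max_freq_width consecutive bins and one span of up to
    max_time_width consecutive frames are overwritten with the mean value.
    Hypothetical helper for illustration; not taken from the study.
    """
    n_freq, n_time = len(spec), len(spec[0])
    if max_freq_width > n_freq or max_time_width > n_time:
        raise ValueError("mask width exceeds spectrogram size")
    fill = sum(map(sum, spec)) / (n_freq * n_time)
    out = [row[:] for row in spec]  # leave the input untouched

    f = rng.randint(0, max_freq_width)   # band height (may be 0)
    f0 = rng.randint(0, n_freq - f)      # first masked bin
    for i in range(f0, f0 + f):
        out[i] = [fill] * n_time

    t = rng.randint(0, max_time_width)   # span length (may be 0)
    t0 = rng.randint(0, n_time - t)      # first masked frame
    for row in out:
        for j in range(t0, t0 + t):
            row[j] = fill
    return out


# Toy 40-bin x 50-frame "spectrogram" (hypothetical values)
toy = [[float(i * 50 + j) for j in range(50)] for i in range(40)]
augmented = mask_spectrogram(toy, max_freq_width=8, max_time_width=10, rng=random.Random(0))
```

Passing a seeded `random.Random` instance keeps each augmented copy reproducible.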

2.4. Algorithm Development

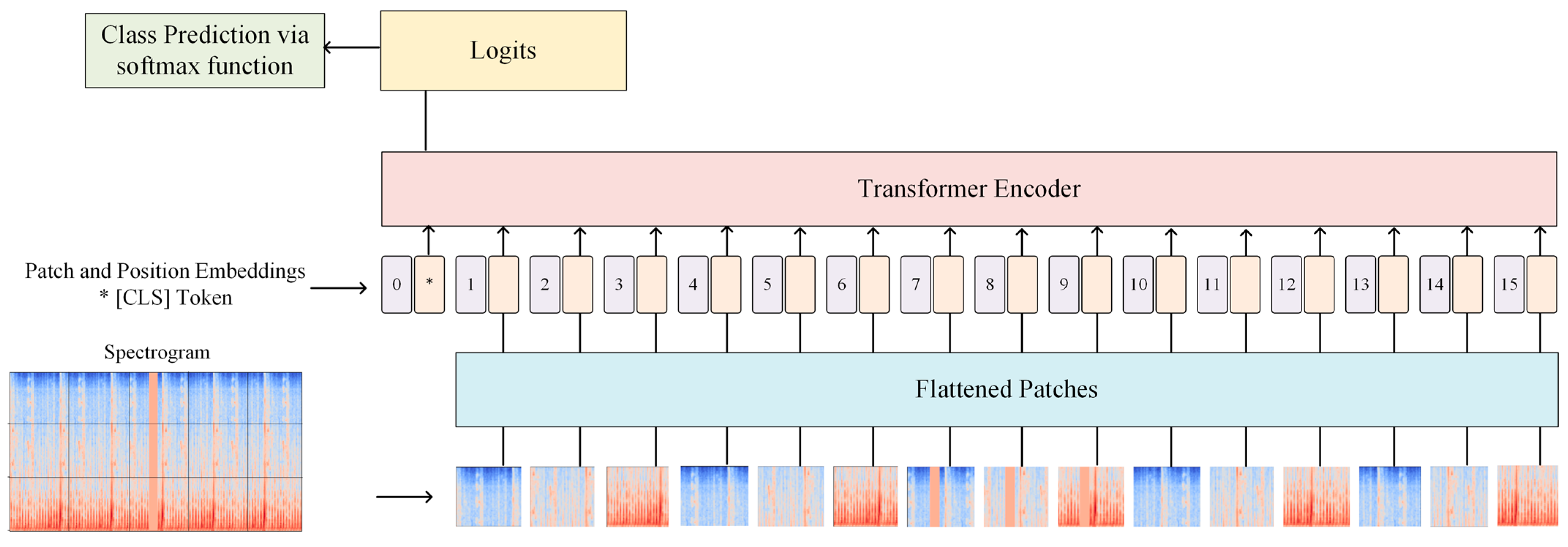

2.4.1. Vision Transformer

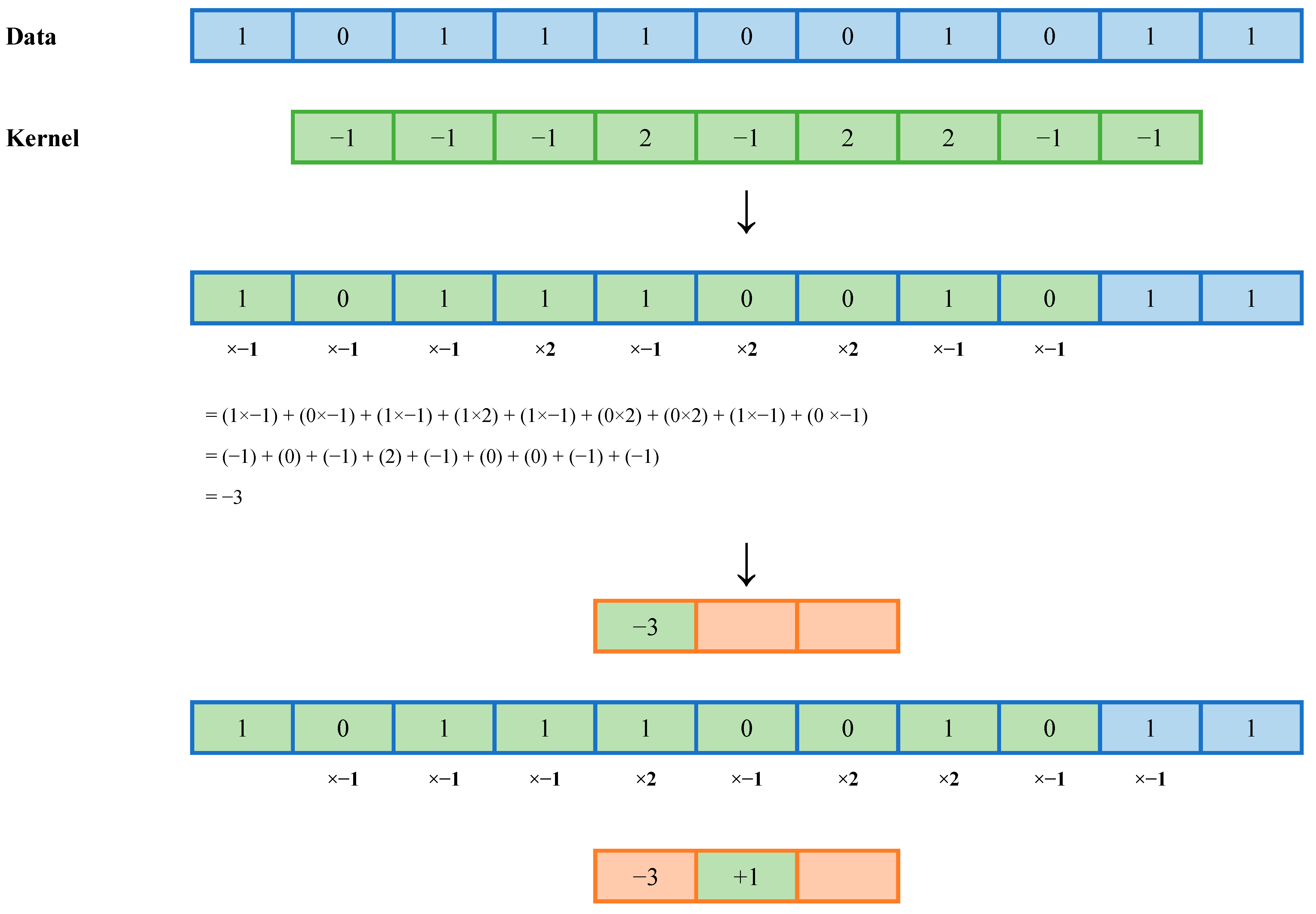
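
A Vision Transformer [13] treats an image, such as a spectrogram, as a sequence of fixed-size patches. The sketch below shows only that first tokenization step: splitting a 2-D array into non-overlapping patches and flattening each one into a vector. In a full ViT each vector is then linearly projected, a position embedding is added, and a learnable class token is prepended before the Transformer encoder; those steps are omitted. The 224 × 224 input and 16 × 16 patch size follow the standard setup in [13] and are assumptions, not this study's settings; `to_patches` is a hypothetical name.

```python
def to_patches(image, patch):
    """Split a 2-D list (H x W) into non-overlapping patch x patch blocks.

    Blocks are scanned row by row; each block is flattened row-major into
    one token vector of length patch * patch.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            tokens.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return tokens


# Hypothetical 224 x 224 input: (224 / 16) ** 2 = 196 tokens of 16 * 16 = 256 values
img = [[r * 224 + c for c in range(224)] for r in range(224)]
tokens = to_patches(img, 16)
```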

2.4.2. MiniROCKET
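
MiniROCKET [6] turns a time series into features by convolving it with a small fixed set of kernels and recording, for each kernel, dilation, and bias, the proportion of positive values (PPV) in the output; a linear classifier, for example ridge regression [14], is then trained on those features. The sketch below is a deliberately simplified pure-Python rendering of that idea, not the reference implementation and not the authors' configuration. It uses the 84 length-9 kernels with weights −1 and 2 described in [6], but omits padding, uses three fixed dilations, and takes biases from quantiles of a single reference series. `fit_biases`, `transform`, and the dilation and quantile values are illustrative assumptions.

```python
from itertools import combinations

KERNEL_LENGTH = 9
DILATIONS = (1, 2, 4)            # assumption: MiniRocket spaces dilations exponentially
QUANTILES = (0.25, 0.5, 0.75)    # assumption: illustrative bias quantiles


def minirocket_kernels():
    """All 84 length-9 kernels: weight 2 at three positions, -1 elsewhere.

    Six -1s and three 2s sum to zero, so each kernel ignores a constant offset.
    """
    return [[2.0 if i in chosen else -1.0 for i in range(KERNEL_LENGTH)]
            for chosen in combinations(range(KERNEL_LENGTH), 3)]


def dilated_convolution(x, kernel, dilation):
    """'Valid' dilated convolution (cross-correlation) without padding."""
    span = (len(kernel) - 1) * dilation
    if len(x) <= span:
        raise ValueError("series too short for this dilation")
    return [sum(w * x[t + i * dilation] for i, w in enumerate(kernel))
            for t in range(len(x) - span)]


def quantile(values, q):
    """Lower quantile without interpolation."""
    ordered = sorted(values)
    return ordered[int(q * (len(ordered) - 1))]


def fit_biases(reference, dilations=DILATIONS, quantiles=QUANTILES):
    """Fix biases once, from quantiles of a reference (training) series' outputs."""
    biases = []
    for kernel in minirocket_kernels():
        for d in dilations:
            conv = dilated_convolution(reference, kernel, d)
            biases.append([quantile(conv, q) for q in quantiles])
    return biases


def transform(x, biases, dilations=DILATIONS):
    """PPV features: share of convolution outputs above each fitted bias."""
    pairs = [(k, d) for k in minirocket_kernels() for d in dilations]
    features = []
    for (kernel, d), kernel_biases in zip(pairs, biases):
        conv = dilated_convolution(x, kernel, d)
        for b in kernel_biases:
            features.append(sum(v > b for v in conv) / len(conv))
    return features
```

Biases are fitted once and reused for every series; recomputing them from each series' own output would make every PPV roughly equal to one minus its quantile and carry no information. Because every kernel's weights sum to zero, adding a constant to the input leaves the convolution output unchanged.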

2.5. Statistical Analysis

- The effect of varying levels of data augmentation

- The overall accuracy of our MiniROCKET methodology

- The speed of our model
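
The effect of augmentation level is summarized in the results with an ANOVA p-value per metric, followed by pairwise mean differences. Assuming a one-way design with augmentation level as the single factor (the design is not spelled out in this section), the F statistic compares variation between level means with variation within levels. The sketch below computes it; `one_way_anova_f` is a hypothetical helper, and the inputs would be per-run metric values for each level.

```python
from statistics import mean


def one_way_anova_f(groups):
    """One-way ANOVA F statistic with its (between, within) degrees of freedom.

    groups: one list of observations per level, e.g. per-run scores for
    each augmentation level.
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    group_means = [mean(g) for g in groups]
    grand_mean = mean(v for g in groups for v in g)
    # Spread of level means around the grand mean, weighted by level size
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Spread of observations around their own level mean
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    if ss_within == 0:
        raise ValueError("no within-level variation; F is undefined")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

For example, the groups [1, 2, 3] and [4, 5, 6] give F = 13.5 with (1, 4) degrees of freedom. The p-value is the upper tail of the F distribution with those degrees of freedom, which SciPy exposes as `scipy.stats.f.sf(f_stat, df_between, df_within)`.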

3. Results

3.1. Assessment of Vision Transformer

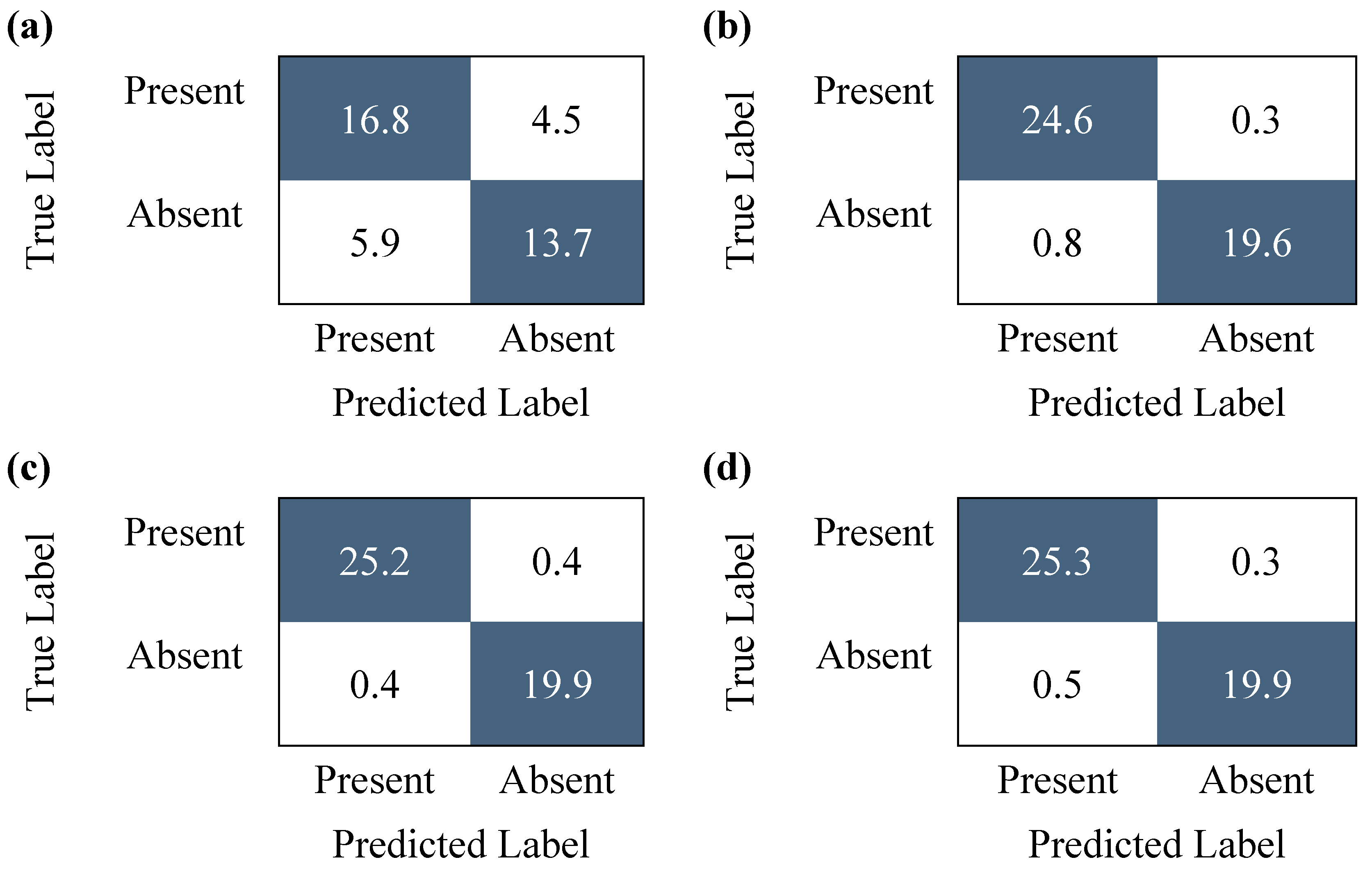

3.2. Assessment of Data Augmentation Techniques

3.3. Comparison with Pre-Existing Models

3.4. Evaluation Time Assessment

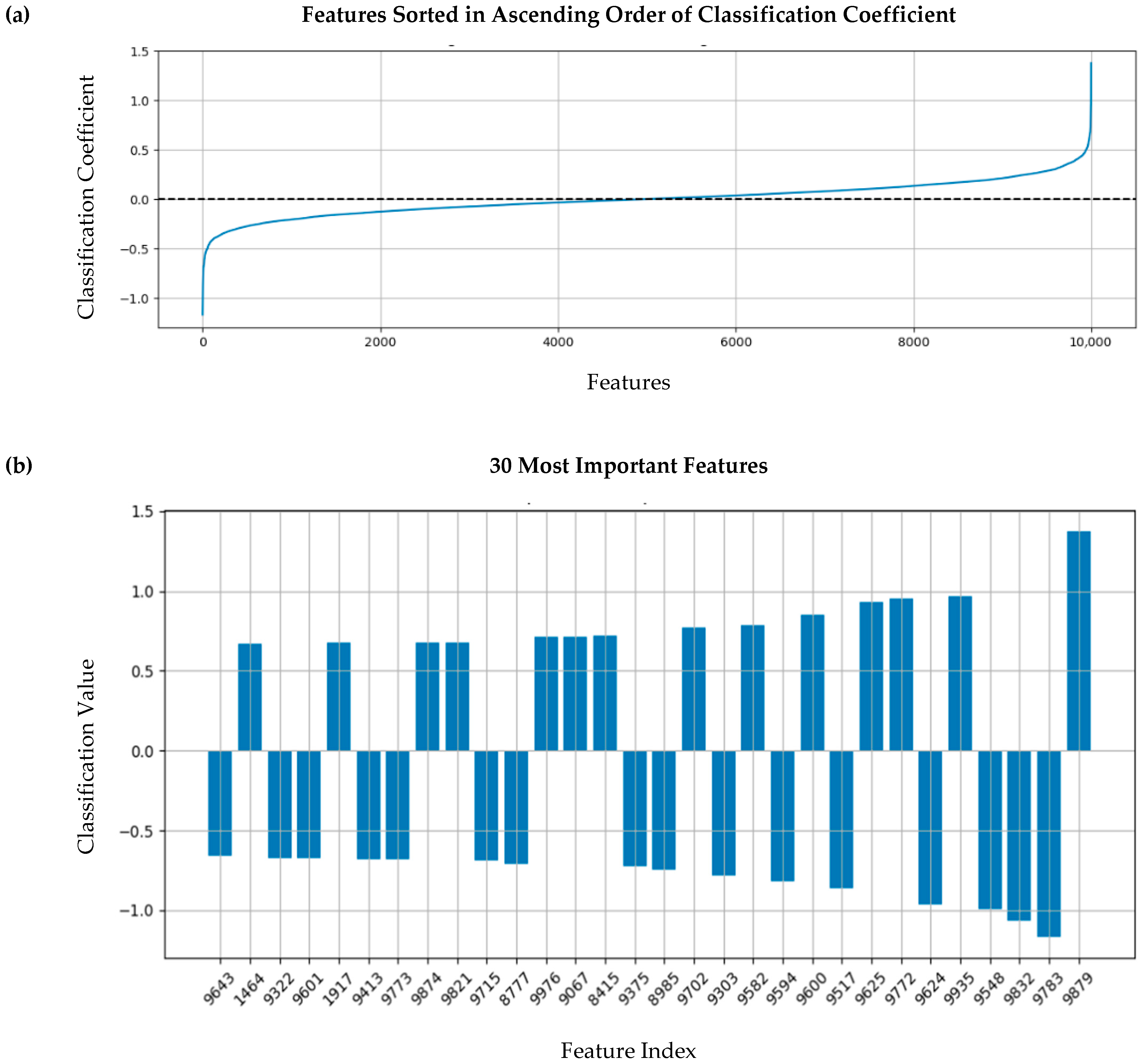
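
The results report wall-clock evaluation times, such as 0.019 s with the ViT versus 50.405 s without it, and about 0.02 s per sample for the final model; the measurement protocol is not described in this section. A minimal sketch of how a mean per-sample time could be measured, assuming a hypothetical `predict` callable that classifies one sample:

```python
import time
from statistics import mean


def time_per_sample(predict, samples, warmup=1):
    """Mean wall-clock seconds per call of predict(sample).

    The first `warmup` samples are run once untimed so that one-off costs
    (lazy initialization, caches) do not inflate the average.
    """
    for sample in samples[:warmup]:
        predict(sample)
    durations = []
    for sample in samples:
        start = time.perf_counter()
        predict(sample)
        durations.append(time.perf_counter() - start)
    return mean(durations)
```

`time.perf_counter` is a monotonic high-resolution clock, which suits short intervals like these better than `time.time`.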

3.5. Feature Importance Assessment

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MiniROCKET | Minimally Random Convolutional Kernel Transform |
| ViT | Vision Transformer |
| STFT | Short-Time Fourier Transform |

References

1. World Health Organization: Cardiovascular Diseases. Available online: https://www.who.int/health-topics/cardiovascular-diseases#tab=tab_1 (accessed on 6 August 2025).

2. Dornbush, S.; Turnquest, A.E. Physiology, Heart Sounds. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2023; Volume 2. Available online: https://www.ncbi.nlm.nih.gov/books/NBK541010/ (accessed on 5 August 2025).

3. Reyna, M.A.; Kiarashi, Y.; Elola, A.; Oliveira, J.; Renna, F.; Gu, A.; Perez Alday, E.A.; Sadr, N.; Sharma, A.; Kpodonu, J.; et al. Heart murmur detection from phonocardiogram recordings: The George B. Moody PhysioNet Challenge 2022. PLoS Digit. Health 2023, 2, e0000324.

4. Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 2, 73–81.

5. Fetzer, J.H. What is Artificial Intelligence? In Artificial Intelligence: Its Scope and Limits; Springer Netherlands: Dordrecht, The Netherlands, 1990; Volume 4. Available online: http://link.springer.com/10.1007/978-94-009-1900-6_1 (accessed on 5 August 2025).

6. Dempster, A.; Schmidt, D.F.; Webb, G.I. MiniRocket: A Very Fast (Almost) Deterministic Transform for Time Series Classification. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual Event, Singapore, 14 August 2021.

7. Oliveira, J.; Renna, F.; Costa, P.D.; Nogueira, M.; Oliveira, C.; Ferreira, C.; Jorge, A.; Mattos, S.; Hatem, T.; Tavares, T.; et al. The CirCor DigiScope Dataset: From Murmur Detection to Murmur Classification. IEEE J. Biomed. Health Inform. 2022, 26, 2524–2535.

8. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101, e215–e220.

9. Susič, D.; Gradišek, A.; Gams, M. PCGmix: A Data-Augmentation Method for Heart-Sound Classification. IEEE J. Biomed. Health Inform. 2024, 28, 6874–6885.

10. Moles, L.; Andres, A.; Echegaray, G.; Boto, F. Exploring Data Augmentation and Active Learning Benefits in Imbalanced Datasets. Mathematics 2024, 12, 1898.

11. Maharana, K.; Mondal, S.; Nemade, B. A review: Data pre-processing and data augmentation techniques. Glob. Transit. Proc. 2022, 3, 91–99.

12. Sacks, M.S.; Yoganathan, A.P. Heart valve function: A biomechanical perspective. Phil. Trans. R. Soc. B 2007, 362, 1369–1391.

13. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. Computer Vision and Pattern Recognition. 2021. Available online: http://arxiv.org/abs/2010.11929 (accessed on 5 August 2025).

14. Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67.

15. McDonald, A.; Gales, M.J.; Agarwal, A. Detection of Heart Murmurs in Phonocardiograms with Parallel Hidden Semi-Markov Models. 2022 Comput. Cardiol. 2022, 498, 1–4. Available online: https://www.cinc.org/archives/2022/pdf/CinC2022-020.pdf (accessed on 5 August 2025).

16. Manshadi, O.D.; Mihandoost, S. Murmur identification and outcome prediction in phonocardiograms using deep features based on Stockwell transform. Sci. Rep. 2024, 14, 7592.

17. Moukadem, A.; Bouguila, Z.; Abdeslam, D.O.; Dieterlen, A. A new optimized Stockwell transform applied on synthetic and real non-stationary signals. Digit. Signal Process. 2015, 46, 226–238.

18. Lu, H.; Yip, J.B.; Steigleder, T.; Grießhammer, S.; Heckel, M.; Jami, N.V.; Eskofier, B.; Ostgathe, C.; Koelpin, A. A Lightweight Robust Approach for Automatic Heart Murmurs and Clinical Outcomes Classification from Phonocardiogram Recordings. 2022 Comput. Cardiol. 2022, 498, 1–4. Available online: https://www.cinc.org/archives/2022/pdf/CinC2022-165.pdf (accessed on 5 August 2025).

19. Xu, Y.; Bao, X.; Lam, H.K.; Kamayuako, E.N. Hierarchical multi-scale convolutional network for murmurs detection on PCG signals. 2022 Comput. Cardiol. 2022, 498, 1–4.

20. Walker, B.; Krones, F.; Kiskin, I.; Parsons, G.; Lyons, T.; Mahdi, A. Dual Bayesian ResNet: A Deep Learning Approach to Heart Murmur Detection. 2022 Comput. Cardiol. 2022, 498, 1–4. Available online: https://www.cinc.org/archives/2022/pdf/CinC2022-355.pdf (accessed on 5 August 2025).

21. Lee, J.; Kang, T.; Kim, N.; Han, S.; Won, H.; Gong, W.; Kwak, I.-Y. Deep Learning Based Heart Murmur Detection using Frequency-time Domain Features of Heartbeat Sounds. 2022 Comput. Cardiol. 2022, 498, 1–4. Available online: https://www.cinc.org/archives/2022/pdf/CinC2022-071.pdf (accessed on 5 August 2025).

| Model | True Positive | False Positive | True Negative | False Negative | Accuracy | Evaluation Time (s) |
|---|---|---|---|---|---|---|
| Without ViT | 24 | 5 | 14 | 2 | 84.44% | 50.405 |
| With ViT | 22 | 6 | 14 | 2 | 81.82% | 0.019 |
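
The accuracy column of the ViT comparison follows directly from the four confusion-matrix counts. The sketch below computes the standard metrics used throughout the results tables from such counts, assuming murmur-present is the positive class (not stated explicitly in this section); `confusion_metrics` is a hypothetical helper name.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Binary screening metrics from confusion-matrix counts (assumed: murmur = positive)."""
    sensitivity = tp / (tp + fn)   # recall on positive cases
    specificity = tn / (tn + fp)   # recall on negative cases
    ppv = tp / (tp + fp)           # positive predictive value (precision)
    npv = tn / (tn + fn)           # negative predictive value
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "f_score": 2 * ppv * sensitivity / (ppv + sensitivity),  # F1: harmonic mean
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Counts (TP, FP, TN, FN) copied from the ViT comparison table
for name, counts in {"Without ViT": (24, 5, 14, 2), "With ViT": (22, 6, 14, 2)}.items():
    m = confusion_metrics(*counts)
    print(f"{name}: accuracy {m['accuracy']:.2%}, sensitivity {m['sensitivity']:.1%}")
```

The computed accuracies, 38/45 and 36/44, reproduce the table's 84.44% and 81.82%.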

| Metric | 928 Spectrograms (No Augmentation) | 3712 Spectrograms | 8352 Spectrograms | 14,848 Spectrograms | ANOVA p-Value |
|---|---|---|---|---|---|
| Number of Train Set Groups | 185 | 881 | 2041 | 3665 | N/A * |
| Number of Test Set Groups | 47 | 47 | 47 | 47 | N/A |
| Sensitivity | 81.5% [95% CI: 77.9% to 85.2%] | 95.8% [95% CI: 93.7% to 97.8%] | 100% [95% CI: 100% to 100%] | 100% [95% CI: 100% to 100%] | <2.00 × 10⁻¹⁶ ** |
| Specificity | 69.0% [95% CI: 66.2% to 71.9%] | 87.1% [95% CI: 83.2% to 91.1%] | 94.8% [95% CI: 91.0% to 98.5%] | 97.6% [95% CI: 95.8% to 99.4%] | 3.79 × 10⁻¹⁶ |
| Positive Predictive Value | 76.6% [95% CI: 74.7% to 78.4%] | 90.4% [95% CI: 87.8% to 93.0%] | 96.1% [95% CI: 93.4% to 98.8%] | 98.1% [95% CI: 96.8% to 99.5%] | <2.00 × 10⁻¹⁶ |
| Negative Predictive Value | 75.4% [95% CI: 71.7% to 79.1%] | 94.5% [95% CI: 92.0% to 96.9%] | 100% [95% CI: 100% to 100%] | 100% [95% CI: 100% to 100%] | <2.00 × 10⁻¹⁶ |
| F-Score | 0.789 [95% CI: 0.766 to 0.812] | 0.929 [95% CI: 0.915 to 0.944] | 0.980 [95% CI: 0.965 to 0.994] | 0.991 [95% CI: 0.983 to 0.998] | 4.35 × 10⁻¹⁶ |
| Accuracy | 76.0% [95% CI: 73.6% to 78.3%] | 91.9% [95% CI: 90.2% to 93.6%] | 97.7% [95% CI: 96.0% to 99.3%] | 98.9% [95% CI: 98.1% to 99.7%] | <2.00 × 10⁻¹⁶ |
| Weighted Accuracy | 79.8% [95% CI: 76.7% to 82.9%] | 94.6% [95% CI: 92.9% to 96.2%] | 99.3% [95% CI: 98.8% to 99.8%] | 99.7% [95% CI: 99.4% to 99.9%] | <2.00 × 10⁻¹⁶ |
| Testing Time per Sample (seconds) | 0.023 | 0.023 | 0.023 | 0.022 | N/A |
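
If the F-score in the augmentation table is F1, the harmonic mean of sensitivity and positive predictive value, it can be cross-checked against those two rows. Because the table reports means over evaluation runs, the F1 of the mean values need not equal the mean F1 exactly; the small check below (values copied from the table, helper name hypothetical) shows they agree to within 0.002 at every augmentation level.

```python
def f_score(sensitivity, ppv):
    """F1 score: harmonic mean of sensitivity (recall) and PPV (precision)."""
    return 2 * sensitivity * ppv / (sensitivity + ppv)


# (mean sensitivity, mean PPV, reported mean F-score) per augmentation level
REPORTED = {
    "928 (none)": (0.815, 0.766, 0.789),
    "3712": (0.958, 0.904, 0.929),
    "8352": (1.000, 0.961, 0.980),
    "14,848": (1.000, 0.981, 0.991),
}

for level, (sens, ppv, f_reported) in REPORTED.items():
    print(f"{level}: F1 of means = {f_score(sens, ppv):.3f}, reported = {f_reported:.3f}")
```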

| Levels of Augmentation | Statistic | Sensitivity | Specificity | Positive Predictive Value | Negative Predictive Value | F-Score | Accuracy | Weighted Accuracy |
|---|---|---|---|---|---|---|---|---|
| None-Light | Mean Difference | −0.142 [95% CI: −0.178 to −0.107] | −0.180 [95% CI: −0.235 to −0.127] | 0.138 [95% CI: 0.101 to 0.175] | −0.1901 [95% CI: −0.229 to −0.153] | 0.190 [95% CI: 0.128 to 0.252] | −0.160 [95% CI: −0.189 to −0.130] | −0.148 [95% CI: −0.178 to −0.117] |
| | p-value | <0.0001 * | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 |
| None-Medium | Mean Difference | −0.185 [95% CI: −0.220 to −0.150] | −0.257 [95% CI: −0.311 to −0.203] | 0.195 [95% CI: 0.158 to 0.232] | −0.246 [95% CI: −0.284 to −0.209] | 0.290 [95% CI: 0.228 to 0.352] | −0.217 [95% CI: −0.246 to −0.188] | −0.195 [95% CI: −0.225 to −0.164] |
| | p-value | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.001 | <0.0001 |
| None-Heavy | Mean Difference | −0.185 [95% CI: −0.220 to −0.150] | −0.286 [95% CI: −0.340 to −0.232] | 0.216 [95% CI: 0.179 to 0.253] | −0.246 [95% CI: −0.284 to −0.209] | 0.327 [95% CI: 0.265 to 0.389] | −0.230 [95% CI: −0.259 to −0.200] | −0.199 [95% CI: −0.229 to −0.168] |
| | p-value | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 | <0.0001 |
| Light-Medium | Mean Difference | 0.042 [95% CI: 0.007 to 0.077] | 0.076 [95% CI: 0.022 to 0.130] | −0.057 [95% CI: −0.094 to −0.020] | 0.055 [95% CI: 0.018 to 0.093] | −0.100 [95% CI: −0.162 to −0.038] | 0.057 [95% CI: 0.028 to 0.087] | 0.047 [95% CI: 0.017 to 0.078] |
| | p-value | 0.012 | 0.003 | 0.001 | 0.002 | 0.0003 | <0.0001 | 0.001 |
| Light-Heavy | Mean Difference | −0.042 [95% CI: −0.078 to −0.007] | −0.105 [95% CI: −0.159 to −0.051] | 0.078 [95% CI: 0.041 to 0.115] | −0.055 [95% CI: −0.093 to −0.018] | 0.137 [95% CI: 0.075 to 0.199] | −0.070 [95% CI: −0.100 to −0.041] | −0.051 [95% CI: −0.081 to −0.021] |
| | p-value | 0.0124 | <0.0001 | <0.0001 | 0.002 | <0.0001 | <0.0001 | 0.0003 |
| Medium-Heavy | Mean Difference | 1.11 × 10⁻¹⁶ [95% CI: −0.035 to 0.035] | −0.029 [95% CI: −0.083 to 0.026] | 0.021 [95% CI: −0.016 to 0.058] | 0.000 [95% CI: −0.038 to 0.038] | 0.038 [95% CI: −0.024 to 0.099] | −0.013 [95% CI: −0.042 to 0.017] | −0.004 [95% CI: −0.034 to 0.026] |
| | p-value | 1.000 | 0.494 | 0.447 | 1.000 | 0.374 | 0.647 | 0.985 |

| Number of Train Groups | Number of Test Groups | Sensitivity | Specificity | Positive Predictive Value | Negative Predictive Value | F-Score | Accuracy | Weighted Accuracy | Testing Time per Sample (s) |
|---|---|---|---|---|---|---|---|---|---|
| 2969 | 743 | 91.7% [95% CI: 90.4% to 92.9%] | 91.7% [95% CI: 90.4% to 92.9%] | 91.4% [95% CI: 90.7% to 92.1%] | 91.8% [95% CI: 90.6% to 93.1%] | 91.4% [95% CI: 90.7% to 92.2%] | 91.5% [95% CI: 90.8% to 92.3%] | 91.6% [95% CI: 90.6% to 92.7%] | 0.022 |
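
The group counts across the augmentation and final-model tables are internally consistent. Every spectrogram total is a whole multiple of four groups, the test set stays at 47 groups at every augmentation level, the augmented totals are 4, 9 and 16 times the 928-spectrogram baseline, and the final model's 2969 + 743 = 3712 groups match 14,848 spectrograms with roughly a 20% test split. The four-spectrograms-per-group figure is inferred from these counts and is not stated in this section; the constant and helper names below are hypothetical.

```python
SPECTROGRAMS_PER_GROUP = 4   # inferred from the reported counts, not stated in the text
BASELINE_SPECTROGRAMS = 928  # no-augmentation total

# (total spectrograms, reported train-set groups) for each augmentation level
AUGMENTATION_LEVELS = [(928, 185), (3712, 881), (8352, 2041), (14848, 3665)]


def total_groups(n_spectrograms, per_group=SPECTROGRAMS_PER_GROUP):
    """Number of groups implied by a spectrogram count."""
    if n_spectrograms % per_group:
        raise ValueError("spectrogram count is not a whole number of groups")
    return n_spectrograms // per_group


for n_spec, n_train in AUGMENTATION_LEVELS:
    groups = total_groups(n_spec)
    print(f"{n_spec} spectrograms = {n_spec // BASELINE_SPECTROGRAMS}x baseline, "
          f"{groups} groups = {n_train} train + {groups - n_train} test")

# Final model: 2969 train + 743 test groups
final_groups = 2969 + 743
print(final_groups, total_groups(14848), f"{743 / final_groups:.1%} test")
```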

| Reference | Method | WAcc | F-Score | Sensitivity | Evaluation Time (Seconds per Patient) |
|---|---|---|---|---|---|
| Lu et al., 2022 [18] | Lightweight Convolutional Neural Network | 0.780 | 0.619 | NR * | 0.62 |
| McDonald et al., 2022 [15] | Parallel Hidden Semi-Markov Model | 0.776 | 0.623 | NR | 0.70 |
| Xu et al., 2022 [19] | Hierarchical Multi-Scale Convolutional Network | 0.776 | 0.647 | NR | 0.24 |
| Walker et al., 2022 [20] | Dual Bayesian ResNet | 0.771 | 0.686 | NR | 35.31 |
| Lee et al., 2022 [21] | Light Convolutional Neural Network + ResMax | 0.767 | 0.521 | NR | 1.59 |
| Manshadi & Mihandoost, 2024 [16] | Deep Features + RFE + RF | 0.930 | 0.910 | 0.910 | NR |
| Our Model | ViT + MiniROCKET | 0.916 ** | 0.914 | 0.917 | 0.02 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Valaee, M.; Shirani, S. Heart Murmur Detection in Phonocardiogram Data Leveraging Data Augmentation and Artificial Intelligence. Diagnostics 2025, 15, 2471. https://doi.org/10.3390/diagnostics15192471

Valaee M, Shirani S. Heart Murmur Detection in Phonocardiogram Data Leveraging Data Augmentation and Artificial Intelligence. Diagnostics. 2025; 15(19):2471. https://doi.org/10.3390/diagnostics15192471

Chicago/Turabian Style: Valaee, Melissa, and Shahram Shirani. 2025. "Heart Murmur Detection in Phonocardiogram Data Leveraging Data Augmentation and Artificial Intelligence" Diagnostics 15, no. 19: 2471. https://doi.org/10.3390/diagnostics15192471

APA Style: Valaee, M., & Shirani, S. (2025). Heart Murmur Detection in Phonocardiogram Data Leveraging Data Augmentation and Artificial Intelligence. Diagnostics, 15(19), 2471. https://doi.org/10.3390/diagnostics15192471