Using Artificial Intelligence to Develop Clinical Decision Support Systems—The Evolving Road of Personalized Oncologic Therapy

Abstract

1. Introduction

- We developed a clinician-led, proof-of-concept CDSS for predicting complications of Bevacizumab using routinely available pretherapeutic data.

- We tested several machine learning algorithms (logistic regression, Random Forest, XGBoost) and selected the best-performing approach.

- We derived a simplified logistic regression-based risk score and translated it into an accessible offline HTML calculator for clinical use.

- We demonstrated feasibility by applying user-friendly AI tools to a real-world oncology dataset without advanced IT expertise.

- We positioned this work not as a final model but as a step toward reducing clinicians’ apprehension toward AI and fostering future multidisciplinary collaborations.

2. Materials and Methods

2.1. Patient Selection and Data Collection

2.2. Variable Selection

2.3. Database Pre-Processing and Leakage Prevention

2.4. Training Predictive Models

- Simple logistic regression: a model that assumes a linear relationship between the predictors and the log-odds of the outcome. It is relatively sensitive to outliers and noisy variables, but its coefficients are easy to interpret in a clinical context.

- Penalized logistic regression with Elastic Net: a method that combines the advantages of L1 regularization (sparsity and variable selection) and L2 regularization (stability).

- Random Forest: a robust nonlinear ensemble of decision trees, well suited to capturing complex relationships between variables.

- XGBoost: a gradient-boosting model in which each new tree corrects the errors of the previous ones. It often achieves high accuracy on complex data and handles class imbalance well, but at the cost of lower interpretability.

- Logistic regression: Inverse-frequency class weights were applied to correct class imbalance, and L1 (Lasso), L2 (Ridge) and Elastic Net penalties were tested to reduce overfitting and select the relevant variables.

- Random Forest: Hyperparameters (such as the number of trees, maximum tree depth and minimum number of samples per leaf) were tuned, and class weights were applied to reduce classification errors in the minority class.

- XGBoost: Boosting hyperparameters (learning_rate, max_depth, n_estimators, subsample) and class weights were tuned to improve the detection of cases with complications.
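The class weighting described above can be illustrated with a minimal plain-Python sketch. It assumes the weights followed scikit-learn's "balanced" convention (n_samples / (n_classes × n_samples_in_class)) and the usual negatives/positives heuristic for XGBoost's `scale_pos_weight`; the text does not state the exact formula, and the helper names are hypothetical. With the counts from the descriptive cohort table (218 episodes with complications, 177 without), the imbalance is mild, so both weights stay close to 1.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency class weights: n_samples / (n_classes * n_samples_in_class).

    This mirrors the formula scikit-learn uses for class_weight="balanced".
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def scale_pos_weight(labels, positive=1):
    """Negatives / positives: a common starting value for XGBoost's scale_pos_weight."""
    n_pos = sum(1 for y in labels if y == positive)
    return (len(labels) - n_pos) / n_pos


# Cohort counts: 218 episodes with complications (1), 177 without (0).
y = [1] * 218 + [0] * 177
weights = balanced_class_weights(y)
print({cls: round(w, 3) for cls, w in sorted(weights.items())})  # {0: 1.116, 1: 0.906}
print(round(scale_pos_weight(y), 3))  # 0.812
```

In scikit-learn, the same weighting can be requested with `class_weight="balanced"` on `LogisticRegression` or `RandomForestClassifier`; the dictionary returned here could equally be passed as an explicit `class_weight`.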

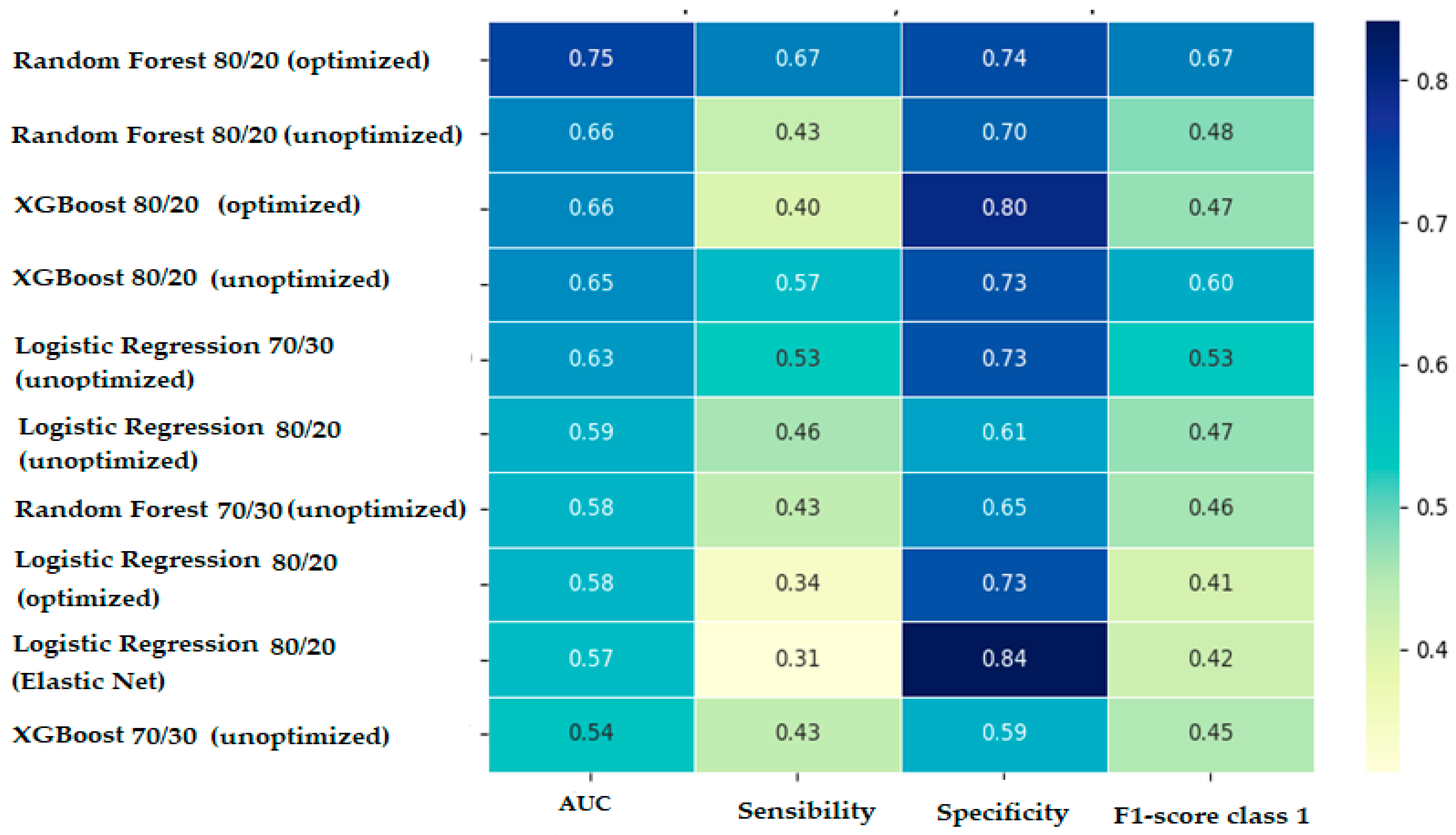

2.5. Comparing the Performance of Predictive Models

- Overall accuracy.

- AUC ROC (area under the receiver operating characteristic curve): measures the model's overall ability to discriminate between classes.

- Sensitivity (recall) for class 1, i.e., the ability to correctly identify patients with complications: essential in a medical context, because failing to identify patients who go on to develop complications can have serious clinical consequences.

- Specificity, i.e., the ability to correctly identify cases without complications: clinically significant, because it identifies patients in whom the drug can likely be administered safely.

- F1-score for class 1: the harmonic mean of precision and sensitivity, reflecting the balance between them.

- Error rate: the total proportion of incorrect classifications (false positives and false negatives), i.e., 1 − accuracy.
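The metrics listed above can all be derived from a confusion matrix and, for the AUC, from the ranking of predicted scores. The sketch below is plain Python with hypothetical helper names and made-up counts, not the study's data. The AUC is computed through its rank interpretation: the probability that a randomly chosen patient with complications receives a higher score than a randomly chosen patient without, with ties counted as one half. This is equivalent to the area under the empirical ROC curve.

```python
def classification_metrics(tp, fp, tn, fn):
    """Confusion-matrix metrics for the positive class (1 = complication)."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn)  # recall for class 1
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


def roc_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative), ties = 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical counts for illustration only (not the study's results).
m = classification_metrics(tp=30, fp=10, tn=25, fn=15)
print({k: round(v, 3) for k, v in m.items()})
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.2]))  # 0.75
```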

2.6. Internal Validation

3. Results

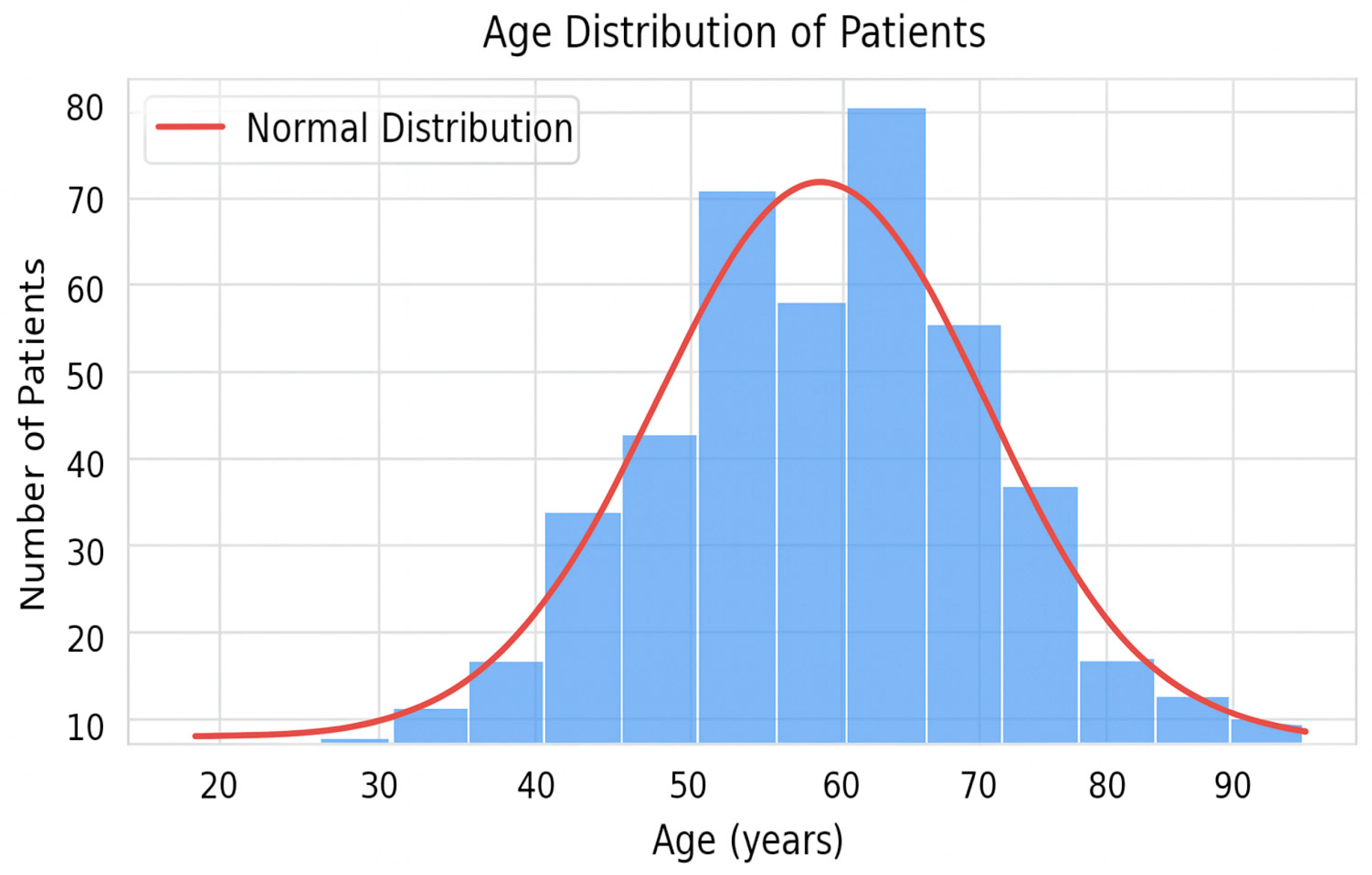

3.1. General Description of the Cohorts and Distribution of the Target Variable

3.2. Comparative Interpretation of Predictive Models’ Performances

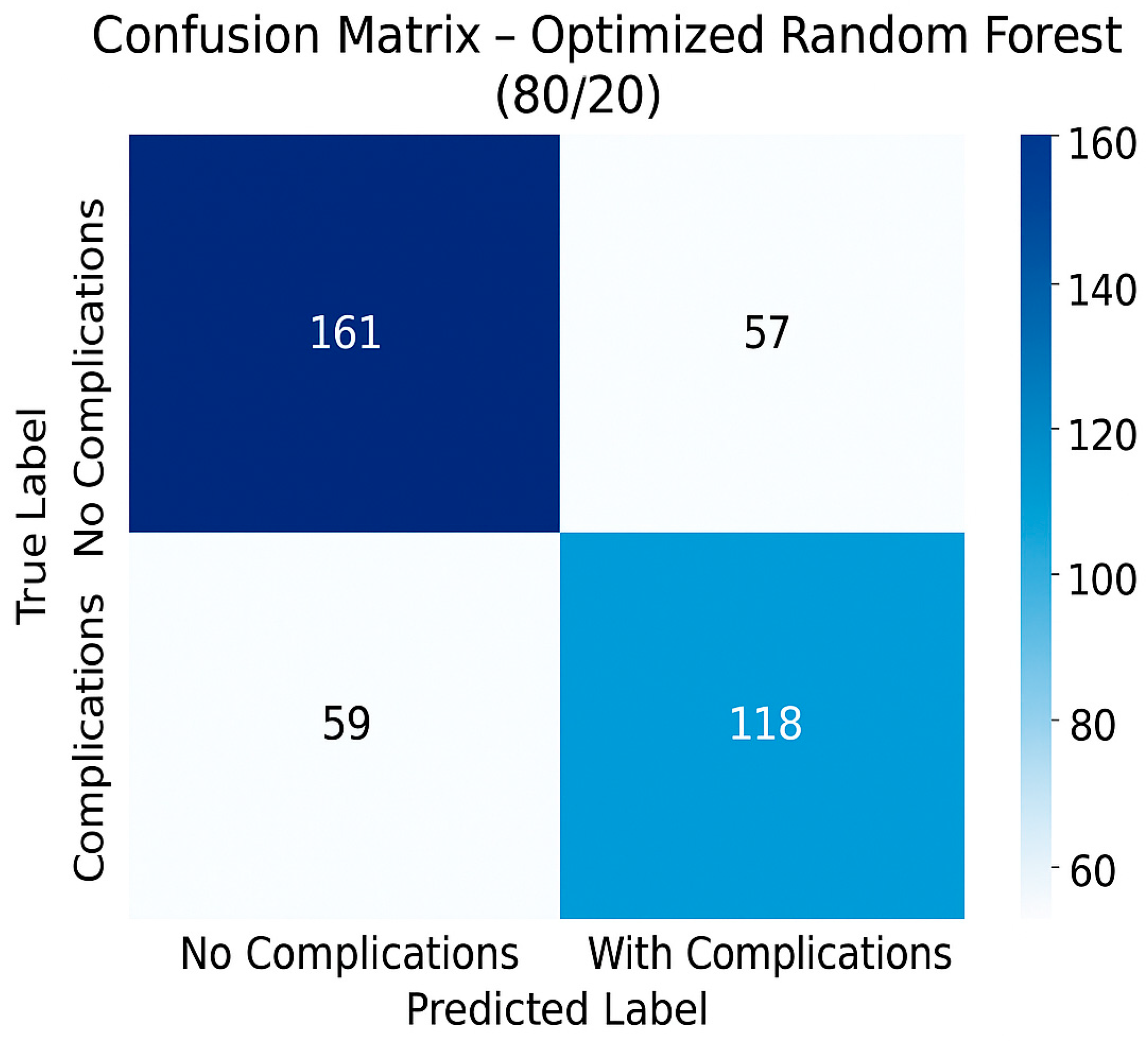

3.3. Performances of the Optimal Predictive Model

- Overall accuracy: 70.63%.

- Error rate: 29.37%.

- Sensitivity (recall)—detection of patients with complications: 66.67%.

- Specificity (detection of patients without complications): 73.85%.

- AUC ROC: 0.75.

- F1-score for class 1 (patients with complications): 0.67.

- Precision: 0.673.
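As a quick arithmetic check, the error rate and F1-score reported above follow from the other reported values. The error rate is 1 − accuracy, and the F1-score is the harmonic mean of precision and sensitivity. The values below are copied from the list above.

```python
# Values reported for the optimal model in Section 3.3.
accuracy = 0.7063
sensitivity = 0.6667  # recall for class 1
precision = 0.673

error_rate = 1 - accuracy
f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean

print(round(error_rate, 4))  # 0.2937
print(round(f1, 2))          # 0.67
```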

3.4. Model Validation

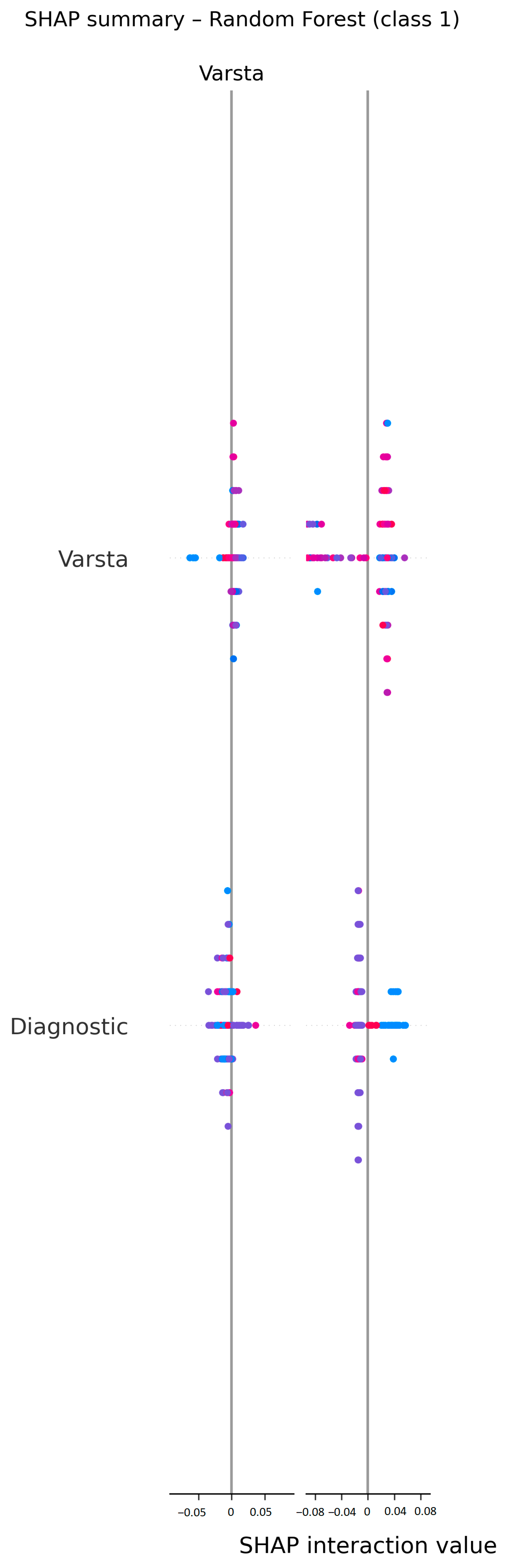

3.5. Model Interpretability (SHapley Additive exPlanations—SHAP)

3.6. Translating the Predictive Model into Clinical Tools Applicable in Oncological Practice

- Selection of major predictive variables: The first step in constructing the clinical score was to extract the most relevant predictive variables by analyzing the variable importance values generated by the selected model. This identified the variables that the model considered to have the greatest predictive power. Of these, we retained the variables that were easily accessible in current practice, available before therapy and clinically significant. The biological parameters considered predictive were hemoglobin level, platelet and leukocyte counts, blood glucose, creatinine, urea and transaminase values. Clinical parameters, such as age, location of the neoplastic disease, disease stage and degree of tumor differentiation, were also included.

- Construction of the initial clinical score: For each selected numerical variable, binary thresholds were defined (e.g., Hb < 11 g/dL, urea ≥ 40 mg/dL) based on the distribution in the dataset and on clinical expertise. Each category was then assigned a score proportional to its relative importance in the optimized Random Forest 80/20 model. The points were summed into a cumulative score, which was then divided into three risk classes: low (0–2 points), intermediate (3–5 points) and high (≥6 points).
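The threshold-and-points construction above can be sketched in plain Python under stated assumptions. The importance values, the age and platelet cut-offs and the rule that scales points so the least important variable scores 1 are all hypothetical. Only the Hb and urea thresholds and the three risk bands come from the text.

```python
# Hypothetical importances (e.g., normalized Random Forest importances); not the study's values.
IMPORTANCES = {"hemoglobin": 0.12, "urea": 0.10, "age": 0.06, "platelets": 0.05}

# Binary thresholds. Only Hb < 11 g/dL and urea >= 40 mg/dL come from the text;
# the age and platelet cut-offs are placeholders.
THRESHOLDS = {
    "hemoglobin": lambda v: v < 11,   # g/dL
    "urea": lambda v: v >= 40,        # mg/dL
    "age": lambda v: v >= 70,         # years (hypothetical)
    "platelets": lambda v: v >= 400,  # x10^9/L (hypothetical)
}


def points_from_importance(importances):
    """Integer points proportional to importance; the least important item gets 1."""
    smallest = min(importances.values())
    return {name: round(imp / smallest) for name, imp in importances.items()}


POINTS = points_from_importance(IMPORTANCES)


def initial_score(patient):
    """Sum the points of every variable whose threshold is met."""
    return sum(POINTS[name] for name, is_met in THRESHOLDS.items() if is_met(patient[name]))


def risk_class(score):
    """Risk bands from the text: low 0-2, intermediate 3-5, high >= 6."""
    if score <= 2:
        return "low"
    if score <= 5:
        return "intermediate"
    return "high"


patient = {"hemoglobin": 10.2, "urea": 45, "age": 64, "platelets": 250}
score = initial_score(patient)
print(POINTS)                    # {'hemoglobin': 2, 'urea': 2, 'age': 1, 'platelets': 1}
print(score, risk_class(score))  # 4 intermediate
```

Because the bands are fixed and the points are coarse integers, patients with quite different risk profiles can end up with the same score. This is the discreteness issue raised in the evaluation of the initial score.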

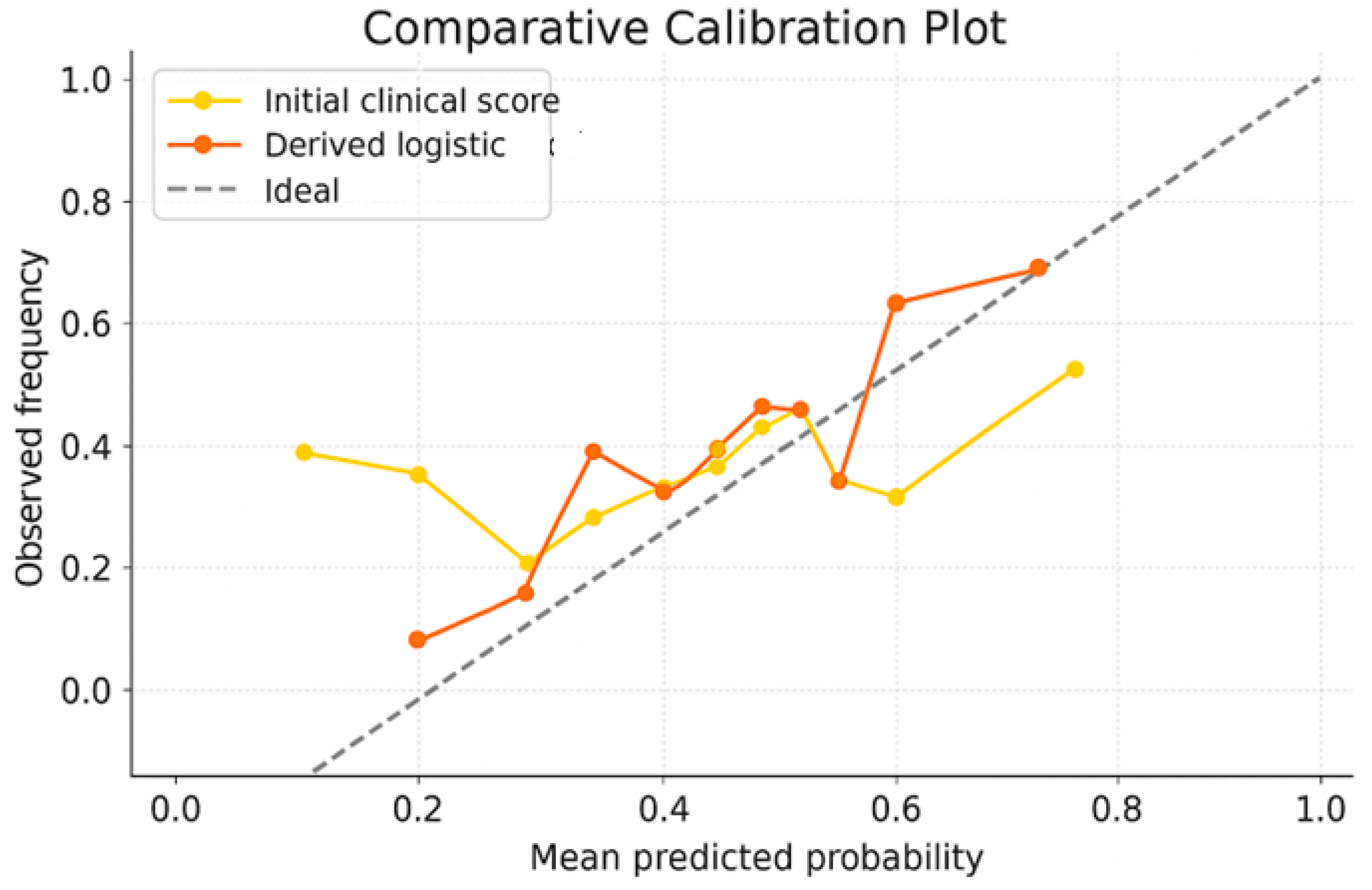

- Testing of the initial clinical score: The score was applied retrospectively to the entire cohort to evaluate its ability to identify patients at risk. We analyzed the distribution of patients according to score, the actual complication rate in each risk group and the statistical performance (AUC ROC, accuracy, sensitivity and specificity). The initial clinical score achieved an overall accuracy of 59%, an AUC ROC of 0.555, a sensitivity (recall for class 1) of 47.2%, a specificity of 60.8% and an F1-score for class 1 of 0.502. With an AUC close to 0.5, these results indicate a poor ability to triage patients treated with Bevacizumab according to their risk of developing specific complications. Moreover, because the initial score is discrete and relies on fixed thresholds, it cannot represent individual risk at a fine resolution. These limitations motivated us to optimize the score.

- Optimization of clinical score performance: The clinical score was refined by fitting a logistic regression model using the variables of the derived raw score as predictors. This generated a calibrated probability of complications, which removed the arbitrary thresholds, provided a continuous risk estimate and yielded a new, logistic-derived clinical score. The advantages of the logistic-derived score are personalized risk probabilities, better separation of high-risk from low-risk cases and the possibility of validation through calibration (observed vs. predicted ratio). A simplified logistic regression-based risk score was then derived from the multivariate logistic model: the regression coefficients (β) were scaled and rounded to integer weights, which were summed into a total score. Cut-off points for risk stratification (low, intermediate, high) were selected based on Youden's J index from ROC analysis.
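The steps described above (computing a logistic probability, turning β coefficients into integer points and choosing a cut-off with Youden's J) can be sketched in plain Python. Several assumptions are not stated in the text: coefficients are scaled by the smallest absolute β before rounding, predictors are binary, and all coefficient values and toy data are hypothetical. Youden's J also yields only a single optimal cut-off. The text does not describe how the two cut-offs separating three risk bands were derived, so only the single-threshold case is shown.

```python
import math


def predicted_probability(intercept, betas, x):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum_i b_i * x_i)))."""
    z = intercept + sum(b * x[name] for name, b in betas.items())
    return 1.0 / (1.0 + math.exp(-z))


def integer_weights(betas):
    """Scale coefficients by the smallest |beta| and round to integer points."""
    unit = min(abs(b) for b in betas.values())
    return {name: round(b / unit) for name, b in betas.items()}


def youden_cutoff(y_true, scores):
    """Cut-off maximizing J = sensitivity + specificity - 1 (positive if score >= cut-off)."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best_cut, best_j = None, -1.0
    for cut in sorted(set(scores)):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= cut)
        tn = sum(1 for y, s in zip(y_true, scores) if y == 0 and s < cut)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_cut, best_j = cut, j
    return best_cut, best_j


# Hypothetical coefficients for binary predictors (not the study's model).
betas = {"low_hemoglobin": 0.62, "high_urea": 0.41, "advanced_stage": 0.85}
print(integer_weights(betas))  # {'low_hemoglobin': 2, 'high_urea': 1, 'advanced_stage': 2}
x = {"low_hemoglobin": 1, "high_urea": 0, "advanced_stage": 1}
print(round(predicted_probability(-1.0, betas, x), 3))

# Toy integer scores and outcomes (1 = complication).
y = [0, 0, 0, 1, 0, 1, 1, 1]
scores = [0, 1, 1, 2, 3, 3, 4, 5]
print(youden_cutoff(y, scores))  # (2, 0.75)
```

Rounding trades some precision for bedside usability. The continuous probability from `predicted_probability` keeps the full resolution, while the integer points are easier to add up by hand.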

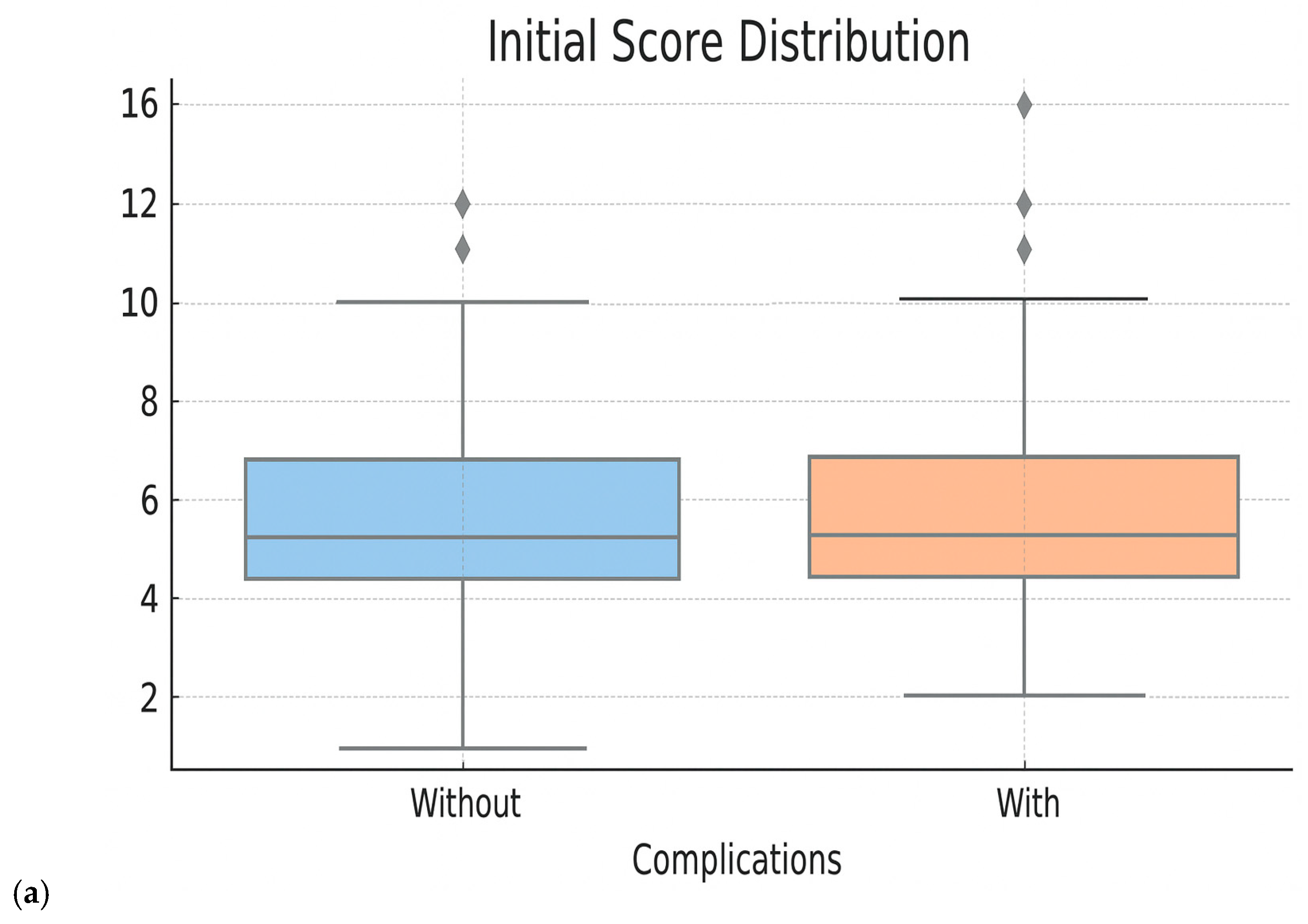

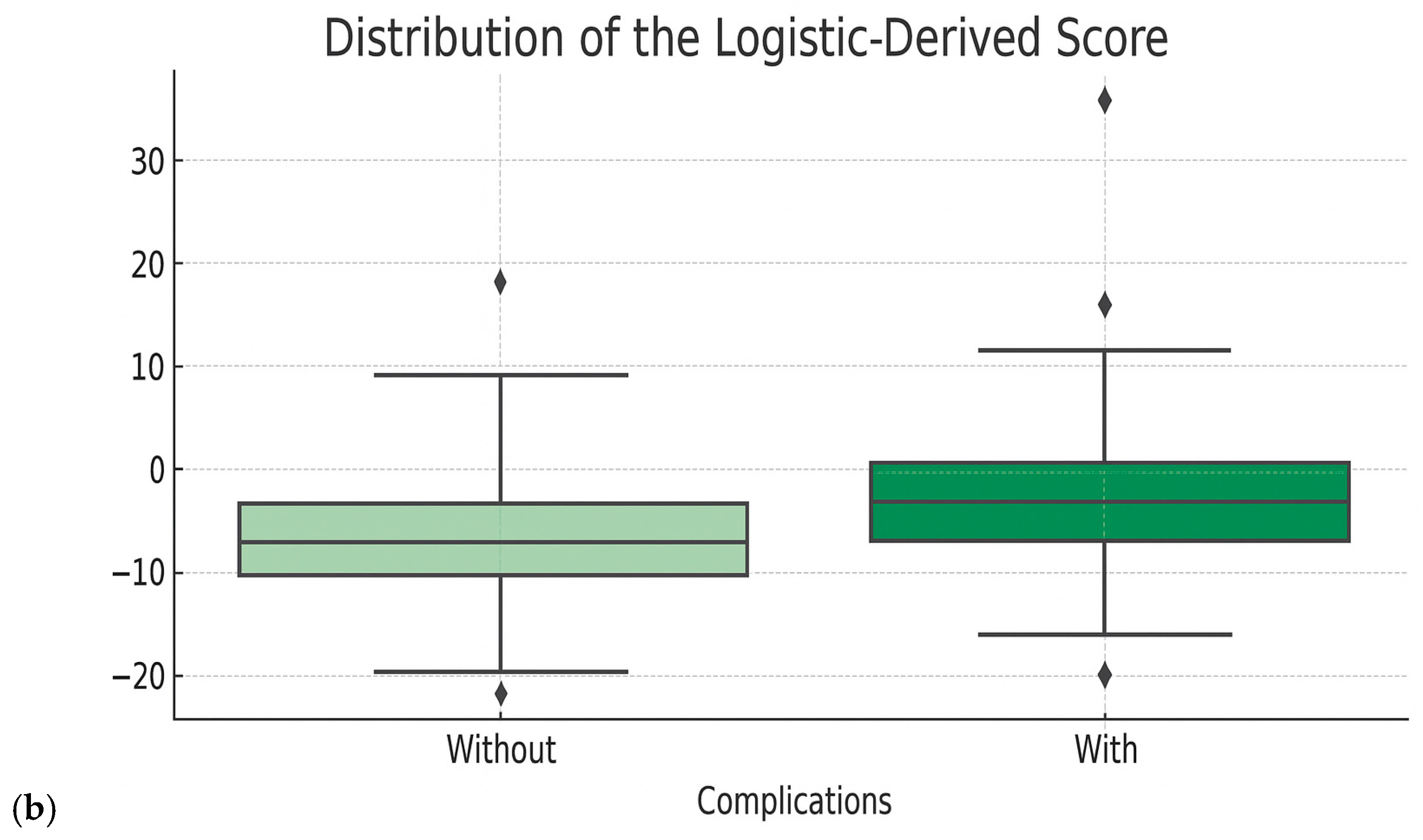

- Performance evaluation of the optimized score: The performance of the new score was compared with that of the initial score and of the Random Forest model using ROC curves, analysis of the score distributions and performance indicators (accuracy, sensitivity, specificity, F1-score). The optimized score outperformed the initial score and performed comparably to the Random Forest model, with the added advantages of clinical portability and greater interpretability (Figures 5 and 6 and Table 5). In the comparative distribution of scores, the initial score shows no clear separation: the scores of patients with and without complications often overlap, with close group means and large variance, indicating limited discriminative capacity. In contrast, the logistic-derived score separates the groups more clearly. Patients who developed complications tend to have higher probability scores, while patients without complications are concentrated in the lower part of the distribution.
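The calibration check mentioned for the logistic-derived score (observed vs. predicted ratio) can be illustrated with a small plain-Python sketch. It assumes one common approach: within each risk group, the number of observed complications is compared with the number expected from the summed predicted probabilities. The function name, grouping and data are hypothetical.

```python
from collections import defaultdict


def observed_expected(groups, y_true, probs):
    """Per risk group: observed events, expected events (sum of predicted
    probabilities) and the O/E ratio; O/E = 1 means calibration-in-the-large."""
    observed = defaultdict(int)
    expected = defaultdict(float)
    for group, y, p in zip(groups, y_true, probs):
        observed[group] += y
        expected[group] += p
    return {g: (observed[g], expected[g], observed[g] / expected[g]) for g in observed}


# Toy data (not from the study): risk group, outcome (1 = complication), predicted probability.
groups = ["low", "low", "low", "high", "high", "high"]
y = [0, 1, 0, 1, 1, 0]
probs = [0.2, 0.3, 0.1, 0.7, 0.6, 0.8]
for g, (o, e, ratio) in observed_expected(groups, y, probs).items():
    print(f"{g}: observed={o}, expected={e:.2f}, O/E={ratio:.2f}")
```

An O/E ratio above 1 means the model underestimates risk in that group, and a ratio below 1 means it overestimates it. With groups this small, the ratios are very noisy.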

3.7. Building an Interactive Form Applicable in Oncologic Practice

4. Discussion

- Machine learning algorithms (Random Forest, gradient boosting such as XGBoost, Support Vector Machines, logistic regression): used to develop predictive models from historical data. They identify patterns in a dataset and apply them to new cases, providing quantifiable and reproducible results. Possible applications include predicting the risk of toxicity or adverse effects, stratifying patients by prognosis and classifying therapeutic response to various agents. One example is xDECIDE, a web-based platform that combines AI with human clinical expertise to offer personalized oncological therapeutic recommendations. Studies have shown that such platforms have a real impact on decision making: clinicians changed their initially proposed treatment plans after consulting the platform [23,24].

- Deep Learning: an extension of machine learning that employs neural networks with multiple layers to learn highly abstract representations of data. These models, such as Convolutional Neural Networks (CNNs) or Long Short-Term Memory (LSTM) networks, can analyze medical images (such as CT, MRI and histopathology) or time-series data (such as the evolution of biological parameters). Deep Learning is used for the automatic recognition of anomalies and the interpretation of sequential data, for example to recognize lesions on CT and MRI scans or to detect early signs of toxicity from serial analyses. One real-world example of a Deep Learning-based CDSS is DeepMind Health (Google), which employs Complementarity-Driven Deferral to Clinical Workflow (CoDoC) for breast cancer screening. Compared with double reading with arbitration, the system reduced false-positive rates by 25% and clinician workload by 66% [25]. Clinical trials on the deployment of Deep Learning CDSSs in healthcare are underway, with encouraging results [26].

- Natural Language Processing (NLP): allows AI to “understand” and process written human language, so that it can extract valuable information from unstructured medical records (charts, clinical or trial notes, guidelines) and transform it into structured data usable for CDSS development. NLP tools, such as Named Entity Recognition (NER), text summarization or embedding models (e.g., Word2Vec, BERT), can be used to extract data automatically from medical records, identify patients eligible for clinical trials and generate guideline-concordant therapeutic recommendations [27,28,29,30,31,32].

- Large Language Models (LLMs): recent developments such as ChatGPT, Flamingo, PaLI or Gemini have led to conversational AIs capable of generating coherent text and synthesizing data from multiple sources in real time. LLMs can be incorporated into interactive interfaces where clinicians can query the system and ask for explanations or viable alternatives to the proposed clinical or therapeutic decision. LLMs can also generate documentation automatically, reducing clinician workload. AMIE and Med-Gemini (medical LLMs created by Google DeepMind in 2025) generated complex oncologic therapy plans in more than 90% of simulated cases and surpassed human experts in text summarization and document generation [33,34,35,36].

- Reinforcement Learning (RL): a lesser-known but very promising type of AI that learns through interactions and rewards. It can be used when therapeutic decisions need to adapt dynamically (e.g., adjusting doses based on an individual patient's response and adverse reactions) [37,38,39]. This type of AI resembles the way physicians refine their clinical intuition as they gain experience from the results of previous decisions.

- To be truly useful in clinical practice, AI needs to be transparent: the variables on which a decision was based must be easy to explain to the physician and the patient. Greater transparency will support the adoption of AI technologies in clinical practice. Explainable AI (XAI), through techniques such as LIME or SHAP, serves precisely this role [40,41].

- At the heart of all these technologies is Data Engineering: the process of collecting, cleaning and shaping medical data. Without this step, no AI model will work properly. Libraries such as pandas, NumPy or TensorFlow's tf.data API are used to convert raw data into formats that algorithms can use.

- Finally, in the context of multicenter collaboration, Federated Learning offers an ethical and safe way to train AI models on distributed databases (data from multiple medical centers) without sharing patient information between institutions, using frameworks such as Flower, NVIDIA Clara or TensorFlow Federated. In this way, hospitals can contribute to shared models while respecting data privacy under the GDPR or HIPAA.
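SHAP, mentioned in the XAI item above and used for model interpretability in this study, is based on Shapley values from cooperative game theory. Each variable's contribution is its weighted average marginal effect on the prediction over all subsets of the other variables. The plain-Python sketch below computes exact Shapley values for a tiny hypothetical model, replacing "absent" variables with a single baseline value. The SHAP library instead uses efficient algorithms (such as TreeSHAP for tree ensembles, or the sampling-based KernelSHAP) and a background dataset, so this sketch illustrates the principle rather than the library's implementation.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    A feature outside the coalition is set to its baseline value.
    Cost grows as 2**n_features, so this is only practical for a few features.
    """
    names = list(x)
    n = len(names)

    def value(coalition):
        z = {k: (x[k] if k in coalition else baseline[k]) for k in names}
        return model(z)

    phi = {}
    for i in names:
        others = [k for k in names if k != i]
        contribution = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi[i] = contribution
    return phi


def toy_model(z):
    """Hypothetical risk score with an interaction term."""
    return 2 * z["low_hb"] + 1 * z["high_urea"] + 1 * z["low_hb"] * z["high_urea"]


x = {"low_hb": 1, "high_urea": 1}
baseline = {"low_hb": 0, "high_urea": 0}
phi = shapley_values(toy_model, x, baseline)
print(phi)  # {'low_hb': 2.5, 'high_urea': 1.5}
# Efficiency property: the contributions sum to model(x) - model(baseline).
print(sum(phi.values()), toy_model(x) - toy_model(baseline))
```

The interaction term (worth 1 point) is split equally between the two variables. This is why each variable's Shapley value exceeds its main-effect coefficient by 0.5.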

4.1. Related Work and State-of-the-Art (SOTA) Approaches

4.2. Study Strengths, Limitations and Future Directions of Research

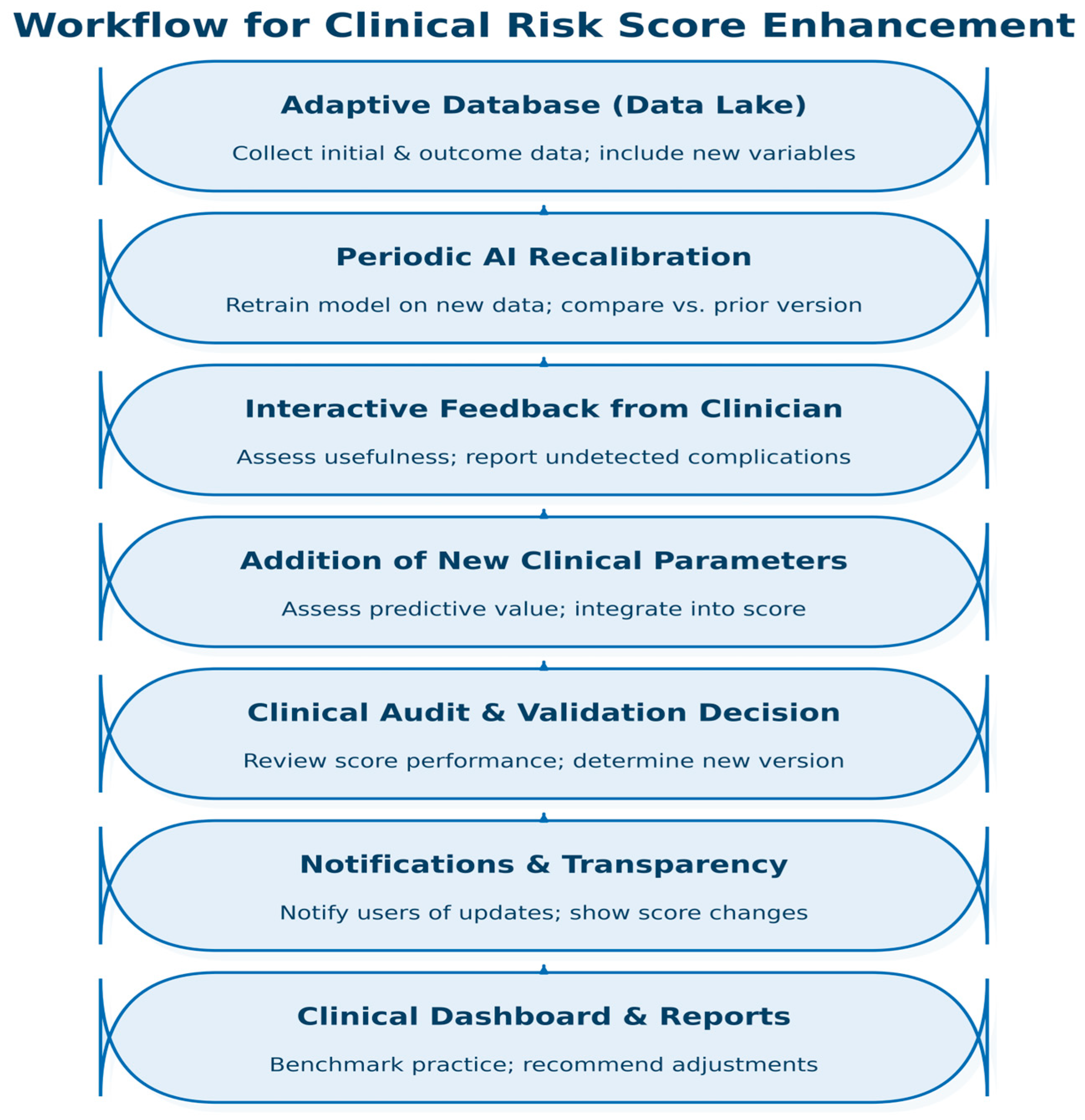

- The most significant limitation of our study is that the risk score was not developed on an adaptive database; as a result, our predictive models cannot be retrained as new clinical data become available. This reflects our deliberate choice to build the tools without a dedicated AI expert, to show that AI tools have become so readily available and intuitive that medical professionals can employ them in their current research and practice. However, collaboration with AI experts is highly recommended for optimal results.

- Relatively small and unbalanced sample size: The relatively modest size of the dataset and the unequal distribution between patients in the group with and without complications (unbalanced classes) may limit the generalizability of the predictive models that we trained. While 395 treatment episodes were included, we acknowledge that the sample size may still limit the statistical power of the model, particularly in edge-case scenarios. This reinforces the need for further multicenter validation.

- The data come from a single medical unit, which introduces institutional selection bias, reduces the external validity of the results and increases the risk of overfitting.

- Absence of potentially relevant variables: Some clinical and biological information that could have predictive value for the occurrence of complications (such as concomitant treatments, detailed ECOG performance status, immunohistochemical factors, oncogenic mutations and mismatch repair deficiency) was available for only a very small number of patients, which made it statistically unusable.

- The final risk score has not been validated on an external dataset, nor has it been prospectively tested in clinical practice to prove its reliability.

- Implementing an adaptive data-lake system would allow continuous refinement of the developed clinical tools and help maintain their clinical relevance.

- Increasing the sample size by introducing data from other medical centers (multicenter study) could strengthen the robustness and generalizability of the predictive models.

- Prospective validation of the score on an external sample is necessary to test its performance and utility in bedside decision making. The current study represents a single-center proof of concept. Future work should aim to externally validate the model on independent cohorts, ideally through multicentric collaborations.

- Close collaboration with IT experts would streamline the development of better AI tools.

- Future versions of the predictive model could include other variables (such as immunohistochemical and genetic markers or other imaging, clinical and therapeutic data) to increase the accuracy of predictions.

- The development of offline digital applications such as the one already created paves the way for personalized decision support tools, easy to use for clinicians.
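The sample-size limitation can be made concrete with a bootstrap confidence interval around the AUC. Resampling patients with replacement shows how much the estimate could vary in a cohort of this size. The plain-Python sketch below uses the percentile method with hypothetical toy scores and hypothetical function names. It is an illustration of the technique, not an analysis performed in this study.

```python
import random


def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties = 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(y_true, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the AUC."""
    rng = random.Random(seed)
    n = len(y_true)
    aucs = []
    while len(aucs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        y_b = [y_true[i] for i in idx]
        if 0 < sum(y_b) < n:  # a resample needs both classes to define an AUC
            aucs.append(roc_auc(y_b, [scores[i] for i in idx]))
    aucs.sort()
    lower = aucs[int(n_boot * alpha / 2)]
    upper = aucs[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper


# Toy data (not the study's): 20 patients with and 20 without complications.
y = [1] * 20 + [0] * 20
scores = [0.50 + 0.02 * i for i in range(20)] + [0.30 + 0.02 * i for i in range(20)]
print(round(roc_auc(y, scores), 3), bootstrap_auc_ci(y, scores))
```

A wide interval on a small cohort is expected. Narrowing it is one of the practical benefits of the multicenter data collection proposed above.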

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AI-CDSS | Artificial Intelligence-based Clinical Decision Support System |
| AMIE | Articulate Medical Intelligence Explorer |
| AUC | Area Under the Curve |
| BERT | Bidirectional Encoder Representations from Transformers |
| CDSS | Clinical Decision Support System |
| CNN | Convolutional Neural Network |
| CT | Computed Tomography |
| DVT | Deep Vein Thrombosis |
| ECOG | Eastern Cooperative Oncology Group (performance status score) |
| EGFR | Epidermal Growth Factor Receptor |
| EHR | Electronic Health Record |
| F1 | F1-Score (harmonic mean of precision and recall) |
| GDPR | General Data Protection Regulation |
| GGT | Gamma-Glutamyl Transferase |
| HIPAA | Health Insurance Portability and Accountability Act |
| HTML | HyperText Markup Language |
| LIME | Local Interpretable Model-agnostic Explanations |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| MINDACT | Microarray In Node-negative and 1 to 3 positive lymph node Disease may Avoid ChemoTherapy |
| ML | Machine Learning |
| MRI | Magnetic Resonance Imaging |
| NER | Named Entity Recognition |
| NLP | Natural Language Processing |
| NSCLC | Non-Small Cell Lung Cancer |

References

1. Nafees, A.; Khan, M.; Chow, R.; Fazelzad, R.; Hope, A.; Liu, G.; Letourneau, D.; Raman, S. Evaluation of clinical decision support systems in oncology: An updated systematic review. Crit. Rev. Oncol. Hematol. 2023, 192, 104143.

2. Oehring, R.; Ramasetti, N.; Ng, S.; Roller, R.; Thomas, P.; Winter, A.; Maurer, M.; Moosburner, S.; Raschzok, N.; Kamali, C.; et al. Use and accuracy of decision support systems using artificial intelligence for tumor diseases: A systematic review and meta-analysis. Front. Oncol. 2023, 13, 1224347.

3. Elhaddad, M.; Hamam, S. AI-Driven Clinical Decision Support Systems: An Ongoing Pursuit of Potential. Cureus 2024, 16, e57728.

4. Karuppan Perumal, M.K.; Rajan Renuka, R.; Kumar Subbiah, S.; Manickam Natarajan, P. Artificial intelligence-driven clinical decision support systems for early detection and precision therapy in oral cancer: A mini review. Front. Oral Health 2025, 6, 1592428.

5. Verlingue, L.; Boyer, C.; Olgiati, L.; Brutti Mairesse, C.; Morel, D.; Blay, J.Y. Artificial intelligence in oncology: Ensuring safe and effective integration of language models in clinical practice. Lancet Reg. Health Eur. 2024, 46, 101064.

6. Kann, B.H.; Hosny, A.; Aerts, H.J.W.L. Artificial Intelligence for Clinical Oncology. Cancer Cell 2021, 39, 916.

7. Cross, J.L.; Choma, M.A.; Onofrey, J.A. Bias in medical AI: Implications for clinical decision-making. PLoS Digit. Health 2024, 3, e0000651.

8. Abdeldjouad, F.Z.; Brahami, M.; Sabri, M. Evaluating the Effectiveness of Artificial Intelligence in Predicting Adverse Drug Reactions among Cancer Patients: A Systematic Review and Meta-Analysis. In Proceedings of the 2023 IEEE International Conference Challenges and Innovations on TIC, I2CIT 2023, Hammamet, Tunisia, 21–23 December 2023.

9. Wang, L.; Chen, X.; Zhang, L.; Li, L.; Huang, Y.B.; Sun, Y.; Yuan, X. Artificial intelligence in clinical decision support systems for oncology. Int. J. Med. Sci. 2023, 20, 79–86.

10. Hussain, Z.; Lam, B.D.; Acosta-Perez, F.A.; Riaz, I.B.; Jacobs, M.; Yee, A.J.; Sontag, D. Evaluating Physician-AI Interaction for Cancer Management: Paving the Path towards Precision Oncology. arXiv 2024, arXiv:2404.15187.

11. Grüger, J.; Geyer, T.; Brix, T.; Storck, M.; Leson, S.; Bley, L.; Braun, S.A. AI-Driven Decision Support in Oncology: Evaluating Data Readiness for Skin Cancer Treatment. arXiv 2025, arXiv:2503.09164.

12. Chitoran, E.; Rotaru, V.; Stefan, D.C.; Gullo, G.; Simion, L. Blocking Tumoral Angiogenesis VEGF/VEGFR Pathway: Bevacizumab, 20 Years of Therapeutic Success and Controversy. Cancers 2025, 17, 1126.

13. Chitoran, E.; Rotaru, V.; Ionescu, S.-O.; Gelal, A.; Capsa, C.-M.; Bohiltea, R.-E.; Mitroiu, M.-N.; Serban, D.; Gullo, G.; Stefan, D.-C.; et al. Bevacizumab-Based Therapies in Malignant Tumors: Real-World Data on Effectiveness, Safety, and Cost. Cancers 2024, 16, 2590.

14. Shateri, A.; Nourani, N.; Dorrigiv, M.; Nasiri, H. An Explainable Nature-Inspired Framework for Monkeypox Diagnosis: Xception Features Combined with NGBoost and African Vultures Optimization Algorithm. arXiv 2025, arXiv:2504.17540.

15. Welcome To Colab-Colab, n.d. Available online: https://colab.research.google.com/notebooks/intro.ipynb (accessed on 23 July 2025).

16. ChatGPT, n.d. Available online: https://chatgpt.com/?model=gpt-4o (accessed on 23 July 2025).

17. Farzipour, A.; Elmi, R.; Nasiri, H. Detection of Monkeypox Cases Based on Symptoms Using XGBoost and Shapley Additive Explanations Methods. Diagnostics 2023, 13, 2391.

18. De Paola, L.; Treglia, M.; Napoletano, G.; Treves, B.; Ghamlouch, A.; Rinaldi, R. Legal and Forensic Implications in Robotic Surgery. Clin. Ter. 2025, 176, 233–240.

19. Marinelli, S.; De Paola, L.; Stark, M.; Vergallo, G.M. Artificial Intelligence in the Service of Medicine: Current Solutions and Future Perspectives, Opportunities, and Challenges. Clin. Ter. 2025, 176, 77–82.

20. Cirimbei, C.; Rotaru, V.; Chitoran, E.; Cirimbei, S. Laparoscopic Approach in Abdominal Oncologic Pathology. In Proceedings of the 35th Balkan Medical Week, Athens, Greece, 25–27 September 2018; pp. 260–265. Available online: https://www.webofscience.com/wos/woscc/full-record/WOS:000471903700043 (accessed on 20 July 2023).

21. Rotaru, V.; Chitoran, E.; Cirimbei, C.; Cirimbei, S.; Simion, L. Preservation of Sensory Nerves During Axillary Lymphadenectomy. In Proceedings of the 35th Balkan Medical Week, Athens, Greece, 25–27 September 2018; pp. 271–276. Available online: https://www.webofscience.com/wos/woscc/full-record/WOS:000471903700045 (accessed on 20 July 2023).

22. Chitoran, E.; Rotaru, V.; Mitroiu, M.-N.; Durdu, C.-E.; Bohiltea, R.-E.; Ionescu, S.-O.; Gelal, A.; Cirimbei, C.; Alecu, M.; Simion, L. Navigating Fertility Preservation Options in Gynecological Cancers: A Comprehensive Review. Cancers 2024, 16, 2214.

23. Shapiro, M.A.; Stuhlmiller, T.J.; Wasserman, A.; Kramer, G.A.; Federowicz, B.; Hoos, W.; Kaufman, Z.; Chuyka, D.; Mahoney, W.; Newton, M.E.; et al. AI-Augmented Clinical Decision Support in a Patient-Centric Precision Oncology Registry. AI Precis. Oncol. 2024, 1, 58–68.

24. Xu, F.; Sepúlveda, M.-J.; Jiang, Z.; Wang, H.; Li, J.; Liu, Z.; Yin, Y.; Roebuck, M.C.; Shortliffe, E.H.; Yan, M.; et al. Effect of an Artificial Intelligence Clinical Decision Support System on Treatment Decisions for Complex Breast Cancer. JCO Clin. Cancer Inform. 2020, 4, CCI.20.00018.

25. Dvijotham, K.; Winkens, J.; Barsbey, M.; Ghaisas, S.; Stanforth, R.; Pawlowski, N.; Strachan, P.; Ahmed, Z.; Azizi, S.; Bachrach, Y.; et al. Enhancing the reliability and accuracy of AI-enabled diagnosis via complementarity-driven deferral to clinicians. Nat. Med. 2023, 29, 1814–1820.

26. Abràmoff, M.D.; Lavin, P.T.; Birch, M.; Shah, N.; Folk, J.C. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. npj Digit. Med. 2018, 1, 39.

27. Rojas-Carabali, W.; Agrawal, R.; Gutierrez-Sinisterra, L.; Baxter, S.L.; Cifuentes-González, C.; Wei, Y.C.; Abisheganaden, J.; Kannapiran, P.; Wong, S.; Lee, B.; et al. Natural Language Processing in medicine and ophthalmology: A review for the 21st-century clinician. Asia-Pac. J. Ophthalmol. 2024, 13, 100084.

28. Natural Language Processing in Healthcare: 8 Key Use Cases, n.d. Available online: https://kms-healthcare.com/blog/natural-language-processing-in-healthcare/ (accessed on 27 July 2025).

29. Mendonça, E.A.; Haas, J.; Shagina, L.; Larson, E.; Friedman, C. Extracting information on pneumonia in infants using natural language processing of radiology reports. J. Biomed. Inform. 2005, 38, 314–321.

30. Aramaki, E.; Wakamiya, S.; Yada, S.; Nakamura, Y. Natural Language Processing: From Bedside to Everywhere. Yearb. Med. Inform. 2022, 31, 243.

31. Locke, S.; Bashall, A.; Al-Adely, S.; Moore, J.; Wilson, A.; Kitchen, G.B. Natural language processing in medicine: A review. Trends Anaesth. Crit. Care 2021, 38, 4–9.

32. Natural Language Processing in Healthcare Medical Records, n.d. Available online: https://www.foreseemed.com/natural-language-processing-in-healthcare (accessed on 27 July 2025).

33. Palepu, A.; Dhillon, V.; Niravath, P.; Weng, W.H.; Prasad, P.; Saab, K.; Tu, T. Exploring Large Language Models for Specialist-level Oncology Care. arXiv 2024, arXiv:2411.03395.

34. Saab, K.; Tu, T.; Weng, W.H.; Tanno, R.; Stutz, D.; Wulczyn, E.; Natarajan, V. Capabilities of Gemini Models in Medicine. arXiv 2024, arXiv:2404.18416.

35. Yang, L.; Xu, S.; Sellergren, A.; Kohlberger, T.; Zhou, Y.; Ktena, I.; Golden, D. Advancing Multimodal Medical Capabilities of Gemini. arXiv 2024, arXiv:2405.03162.

36. McDuff, D.; Schaekermann, M.; Tu, T.; Palepu, A.; Wang, A.; Garrison, J.; Singhal, K.; Sharma, Y.; Azizi, S.; Kulkarni, K.; et al. Towards Accurate Differential Diagnosis with Large Language Models. Nature 2025, 642, 451–457.

37. Yu, C.; Liu, J.; Nemati, S.; Yin, G. Reinforcement Learning in Healthcare: A Survey. ACM Comput. Surv. 2022, 55, 1–36.

38. Eckardt, J.-N.; Wendt, K.; Bornhäuser, M.; Middeke, J.M. Reinforcement Learning for Precision Oncology. Cancers 2021, 13, 4624.

39. Jayaraman, P.; Desman, J.; Sabounchi, M.; Nadkarni, G.N.; Sakhuja, A. A Primer on Reinforcement Learning in Medicine for Clinicians. npj Digit. Med. 2024, 7, 337.

40. Almadhor, A.; Ojo, S.; Nathaniel, T.I.; Alsubai, S.; Alharthi, A.; Al Hejaili, A.; et al. An interpretable XAI deep EEG model for schizophrenia diagnosis using feature selection and attention mechanisms. Front. Oncol. 2025, 15, 1630291.

41. Abas Mohamed, Y.; Ee Khoo, B.; Shahrimie Mohd Asaari, M.; Ezane Aziz, M.; Rahiman Ghazali, F. Decoding the black box: Explainable AI (XAI) for cancer diagnosis, prognosis, and treatment planning: A state-of-the-art systematic review. Int. J. Med. Inform. 2025, 193, 105689.

42. Chang, J.S.; Kim, H.; Baek, E.S.; Choi, J.E.; Lim, J.S.; Kim, J.S.; Shin, S.J. Continuous multimodal data supply chain and expandable clinical decision support for oncology. npj Digit. Med. 2025, 8, 1–13.

43. Mahadevaiah, G.; Prasad, R.V.; Bermejo, I.; Jaffray, D.; Dekker, A.; Wee, L. Artificial intelligence-based clinical decision support in modern medical physics: Selection, acceptance, commissioning, and quality assurance. Med. Phys. 2020, 47, e228–e235.

44. Walsh, S.; de Jong, E.E.C.; van Timmeren, J.E.; Ibrahim, A.; Compter, I.; Peerlings, J.; Sanduleanu, S.; Refaee, T.; Keek, S.; Larue, R.T.H.M.; et al. Decision Support Systems in Oncology. JCO Clin. Cancer Inform. 2019, 3, 1–9.

45. Haddad, T.; Helgeson, J.M.; Pomerleau, K.E.; Preininger, A.M.; Roebuck, M.C.; Dankwa-Mullan, I.; Jackson, G.P.; Goetz, M.P. Accuracy of an Artificial Intelligence System for Cancer Clinical Trial Eligibility Screening: Retrospective Pilot Study. JMIR Med. Inform. 2021, 9, e27767.

46. Audeh, W.; Blumencranz, L.; Kling, H.; Trivedi, H.; Srkalovic, G. Prospective Validation of a Genomic Assay in Breast Cancer: The 70-gene MammaPrint Assay and the MINDACT Trial. Acta Med. Acad. 2019, 48, 18–34.

47. Filho, O.M.; Cardoso, F.; Poncet, C.; Desmedt, C.; Linn, S.; Wesseling, J.; Hilbers, F.; Delaloge, S.; Pierga, J.-Y.; Brain, E.; et al. Survival outcomes for patients with invasive lobular cancer by MammaPrint: Results from the MINDACT phase III trial. Eur. J. Cancer 2025, 217, 115222.

48. Cardoso, F.; Piccart-Gebhart, M.; Van’t Veer, L.; Rutgers, E. The MINDACT trial: The first prospective clinical validation of a genomic tool. Mol. Oncol. 2007, 1, 246–251.

49. Schefler, A.C.; Skalet, A.; Oliver, S.C.N.; Mason, J.; Daniels, A.B.; Alsina, K.M.; Plasseraud, K.M.; Monzon, F.A.; Firestone, B. Prospective evaluation of risk-appropriate management of uveal melanoma patients informed by gene expression profiling. Melanoma Manag. 2020, 7, MMT37.

50. Harbour, J.W.; Chen, R. The DecisionDx-UM Gene Expression Profile Test Provides Risk Stratification and Individualized Patient Care in Uveal Melanoma. PLoS Curr. 2013, 9, 5.

51. Sun, W.; Hu, G.; Long, G.; Wang, J.; Liu, D.; Hu, G. Predictive value of a serum-based proteomic test in non-small-cell lung cancer patients treated with epidermal growth factor receptor tyrosine kinase inhibitors: A meta-analysis. Curr. Med. Res. Opin. 2014, 30, 2033–2039.

52. Molina-Pinelo, S.; Pastor, M.D.; Paz-Ares, L. VeriStrat: A prognostic and/or predictive biomarker for advanced lung cancer patients? Expert Rev. Respir. Med. 2014, 8, 1–4.

53. Ferber, D.; El Nahhas, O.S.; Wölflein, G.; Wiest, I.C.; Clusmann, J.; Leßman, M.E.; Foersch, S.; Lammert, J.; Tschochohei, M.; Jäger, D.; et al. Autonomous Artificial Intelligence Agents for Clinical Decision Making in Oncology. arXiv 2024, arXiv:2404.04667.

54. Lee, C.S.; Lee, A.Y. Clinical applications of continual learning machine learning. Lancet Digit. Health 2020, 2, e279–e281.

55. Labkoff, S.; Oladimeji, B.; Kannry, J.; Solomonides, A.; Leftwich, R.; Koski, E.; Joseph, A.L.; Lopez-Gonzalez, M.; Fleisher, L.A.; Nolen, K.; et al. Toward a responsible future: Recommendations for AI-enabled clinical decision support. J. Am. Med. Inform. Assoc. 2024, 31, 2730–2739.

| Variable | Whole Sample | Study Group (with Complications) | Control Group (without Complications) | p-Value * |
|---|---|---|---|---|
| Sex | | | | 0.259 |
| Male | 130 (32.91%) | 66 | 64 | |
| Female | 265 (67.09%) | 152 | 113 | |
| Age (years) | | | | 0.475 |
| Mean ± SD (range 22–88) | 61.6 ± 10.88 | 61 ± 11.0 | 62.4 ± 10.7 | |
| Area of residence | | | | 0.167 |
| Urban | 341 (86.33%) | 183 | 158 | |
| Rural | 54 (13.67%) | 35 | 19 | |
| Blood type | | | | 0.987 |
| A | 164 (41.52%) | 92 | 72 | |
| O | 139 (35.19%) | 76 | 63 | |
| B | 58 (14.68%) | 32 | 26 | |
| AB | 34 (8.61%) | 18 | 16 | |
| Rhesus factor | | | | 0.667 |
| Rh-positive | 321 (81.27%) | 175 | 146 | |
| Rh-negative | 74 (18.73%) | 43 | 31 | |
| Comorbidities | | | | |
| Hypertension | 147 (37.22%) | 73 (33.5%) | 74 (41.8%) | 0.1103 |
| Ischemic heart disease | 29 (7.34%) | 10 (4.6%) | 19 (10.7%) | 0.0327 |
| Cardiac insufficiency | 33 (8.35%) | 12 (5.5%) | 21 (11.9%) | 0.0367 |
| Valvopathies | 62 (15.70%) | 26 (11.9%) | 36 (20.3%) | 0.0318 |
| Arrhythmias | 32 (8.10%) | 15 (6.9%) | 17 (9.6%) | 0.4230 |
| Stroke/TIA | 3 (0.76%) | 1 (0.5%) | 2 (1.1%) | 0.8560 |
| PTE/DVT | 20 (5.06%) | 6 (2.8%) | 14 (7.9%) | 0.0362 |
| Diabetes | 44 (11.14%) | 18 (8.3%) | 26 (14.7%) | 0.0629 |
| Chronic lung disease | 20 (5.06%) | 9 (4.1%) | 11 (6.2%) | 0.4779 |
| Chronic renal disease | 10 (2.53%) | 1 (0.5%) | 9 (5.1%) | 0.0096 |
| Chronic hepatitis or cirrhosis | 13 (3.29%) | 6 (2.8%) | 7 (4.0%) | 0.7020 |
| Previous cancer | 34 (8.61%) | 21 (9.6%) | 13 (7.3%) | 0.5313 |
| Psychiatric disorders | 15 (3.80%) | 6 (2.8%) | 9 (5.1%) | 0.3465 |
| Recurrent urinary infections | 15 (3.80%) | 11 (5.0%) | 4 (2.3%) | 0.2396 |
| Previous surgery | 288 (72.91%) | 158 (72.5%) | 130 (73.4%) | 0.9190 |
| Chronic/previous treatments | | | | |
| Oral anticoagulants | 29 (7.34%) | 9 (4.1%) | 20 (11.3%) | 0.0116 |
| Oral antidiabetics | 20 (5.06%) | 7 (3.2%) | 13 (7.3%) | 0.1025 |
| Insulin | 4 (1.01%) | 1 (0.5%) | 3 (1.7%) | 0.4746 |
| Bevacizumab | 55 (13.92%) | 28 (12.8%) | 27 (15.3%) | 0.5878 |
| Neoadjuvant immunotherapy | 94 (23.80%) | 44 (20.2%) | 50 (28.2%) | 0.0796 |
| Neoadjuvant chemotherapy | 234 (59.24%) | 134 (61.5%) | 100 (56.5%) | 0.3698 |
| Neoadjuvant radiotherapy | 92 (23.29%) | 47 (21.6%) | 45 (25.4%) | 0.4331 |
| Hormone therapy | 5 (1.27%) | 2 (0.9%) | 3 (1.7%) | 0.8143 |
| Nutritional status | | | | 0.2531 |
| Normal weight | 257 (65.06%) | 148 (67.9%) | 109 (61.6%) | |
| Overweight/obesity | 73 (18.48%) | 40 (18.3%) | 33 (18.6%) | |
| Underweight/cachexia | 65 (16.46%) | 30 (13.8%) | 35 (19.8%) | |
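
The footnote (*) that names the statistical tests is not part of this section. The p-values reported for binary variables are, however, consistent with Pearson's chi-square test with Yates' continuity correction on the 2×2 table (study vs. control, present vs. absent). Recomputing from the published counts reproduces, for example, 0.259 for sex and 0.0327 for ischemic heart disease. The minimal, standard-library-only Python sketch below illustrates that assumed test. The helper name `yates_chi2_2x2` is hypothetical, and nothing here is presented as the authors' actual analysis code.

```python
import math


def yates_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Chi-square test with Yates' continuity correction for the 2x2 table

                 present   absent
        study       a         b
        control     c         d

    Returns (chi2 statistic, p-value) with 1 degree of freedom.
    Assumes no row or column total is zero.
    """
    n = a + b + c + d
    # The continuity correction shrinks |ad - bc| by n/2, never below zero.
    numerator = n * max(0.0, abs(a * d - b * c) - n / 2) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    chi2 = numerator / denominator
    # For 1 degree of freedom: P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts from the table above (study group n = 218, control group n = 177).
_, p_sex = yates_chi2_2x2(66, 218 - 66, 64, 177 - 64)  # male vs. female
_, p_ihd = yates_chi2_2x2(10, 218 - 10, 19, 177 - 19)  # ischemic heart disease
print(f"Sex: p = {p_sex:.3f}")                     # → Sex: p = 0.259
print(f"Ischemic heart disease: p = {p_ihd:.4f}")  # → Ischemic heart disease: p = 0.0327
```

For 2×2 tables this closed form matches what `scipy.stats.chi2_contingency` computes with its default `correction=True`. Multi-category rows such as blood type or nutritional status would need the general r×c chi-square with more degrees of freedom.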

| Variable | Whole Sample | Study Group (with Complications) | Control Group (without Complications) | p-Value * |
|---|---|---|---|---|
| Type of cancer | | | | 0.0002 |
| Colorectal | 209 (52.91%) | 104 (47.7%) | 105 (59.3%) | |
| Ovarian/tubal/primary peritoneal | 102 (25.82%) | 70 (32.1%) | 32 (18.1%) | |
| Cervical | 40 (10.13%) | 16 (7.3%) | 24 (13.6%) | |
| Breast | 28 (7.09%) | 22 (10.1%) | 6 (3.4%) | |
| Pulmonary | 7 (1.77%) | 4 (1.8%) | 3 (1.7%) | |
| Others | 9 (2.28%) | 2 (0.9%) | 7 (4.0%) | |
| Histopathology | | | | 0.0013 |
| Adenocarcinoma | 206 (52.15%) | 104 (47.7%) | 102 (57.6%) | |
| Serous carcinoma | 93 (23.54%) | 63 (28.9%) | 30 (16.9%) | |
| Squamous carcinoma | 33 (8.35%) | 13 (6.0%) | 20 (11.3%) | |
| Invasive ductal/lobular carcinoma | 25 (6.33%) | 20 (9.2%) | 5 (2.8%) | |
| Mucinous adenocarcinoma | 23 (5.82%) | 9 (4.1%) | 14 (7.9%) | |
| Others | 15 (3.80%) | 9 (4.1%) | 6 (3.4%) | |
| Differentiation degree | | | | 0.0065 |
| Poorly differentiated (G3) | 144 (36.46%) | 92 (42.2%) | 53 (29.9%) | |
| Moderately differentiated (G2) | 227 (57.47%) | 110 (50.5%) | 117 (66.1%) | |
| Well differentiated (G1) | 24 (6.07%) | 16 (7.3%) | 8 (4.52%) | |
| Perineural invasion | 75 (18.99%) | 40 (18.3%) | 35 (19.8%) | 0.7049 |
| Lymphovascular invasion | 117 (29.62%) | 64 (29.4%) | 53 (29.9%) | 0.9877 |
| Peritumoral lymphocytic infiltrate | 79 (20.00%) | 36 (16.5%) | 43 (24.3%) | 0.1473 |
| Stage | | | | 0.3306 |
| IV | 316 (80.00%) | 168 (77.1%) | 148 (83.6%) | |
| III | 74 (18.73%) | 46 (21.1%) | 28 (15.8%) | |
| II | 4 (1.01%) | 3 (1.4%) | 1 (0.6%) | |
| I | 1 (0.25%) | 1 (0.5%) | 0 (0.0%) | |
| Metastatic sites | | | | |
| Peritoneal | 142 (35.95%) | 81 (37.2%) | 61 (34.5%) | 0.6533 |
| Bone | 25 (6.33%) | 14 (6.4%) | 11 (6.2%) | 1.0000 |
| Hepatic | 169 (42.78%) | 89 (40.8%) | 82 (46.3%) | 0.238 |
| Pulmonary | 95 (24.05%) | 53 (24.3%) | 42 (23.7%) | 0.9869 |
| Lymphatic | 46 (11.65%) | 28 (12.8%) | 18 (10.2%) | 0.5052 |
| Cerebral | 8 (2.03%) | 4 (1.8%) | 4 (2.3%) | 1.0000 |
| Others | 15 (3.80%) | 8 (3.7%) | 7 (4.0%) | 1.0000 |
| Type of disease | | | | 0.1061 |
| Metastatic | 314 (79.49%) | 170 (78.0%) | 144 (81.4%) | |
| Locally advanced/unresectable | 52 (13.16%) | 35 (16.1%) | 17 (9.6%) | |
| Recurrent | 29 (7.34%) | 13 (6.0%) | 16 (9.0%) | |
| Line of bevacizumab/biosimilar administration | | | | 0.3935 |
| First line | 180 (45.57%) | 95 (43.6%) | 85 (48.0%) | |
| Second line | 146 (36.96%) | 84 (38.5%) | 62 (35.0%) | |
| Subsequent lines | 54 (13.67%) | 28 (12.8%) | 26 (14.7%) | |
| Maintenance | 15 (3.80%) | 11 (5.0%) | 4 (2.3%) | |

| Variable | Study Group (with Complications) | Control Group (without Complications) | p-Value * |
|---|---|---|---|
| Hemoglobin (g/dL) | 11.84 ± 1.74 | 12.38 ± 1.56 | 0.0009 |
| Leucocytes (×10³/mm³) | 7.44 ± 2.70 | 7.07 ± 2.66 | 0.1378 |
| Thrombocytes (×10³/mm³) | 286.11 ± 108.42 | 272.79 ± 111.24 | 0.2500 |
| Glycemia (mg/dL) | 101.83 ± 26.25 | 103.79 ± 27.01 | 0.3049 |
| Creatinine (mg/dL) | 0.77 ± 0.21 | 0.82 ± 0.25 | 0.0845 |
| Urea (mg/dL) | 32.16 ± 13.29 | 34.55 ± 15.48 | 0.1354 |
| TGO/AST (U/L) | 23.67 ± 13.30 | 26.38 ± 23.24 | 0.8259 |
| TGP/ALT (U/L) | 22.29 ± 13.29 | 26.04 ± 38.48 | 0.5718 |
| GGT (U/L) | 67.38 ± 94.07 | 75.01 ± 106.16 | 0.0773 |
| Ca²⁺ (mg/dL) | 9.35 ± 0.59 | 9.30 ± 0.57 | 0.1655 |
| Coagulation abnormalities, n (%) | 29 (13.3%) | 39 (22.0%) | 0.0314 |
| Altered tumor markers, n (%) | 74 (33.9%) | 72 (40.7%) | 0.2027 |

| Type of Complications | Low-to-Medium Severity (Total Number of Cases = 245) | High Severity (Total Number of Cases = 60 *) | Total |
|---|---|---|---|
| Septic/infectious complications | 35 | 14 | 49 |
| Sepsis | 2 | 5 | 7 |
| Abscess | 7 | 3 | 10 |
| Urinary infection | 11 | 2 | 13 |
| Wound complications | 4 | 0 | 4 |
| Pneumonia | 1 | 2 | 3 |
| Others | 10 | 2 | 12 |
| Cardiovascular complications | 51 | 7 | 58 |
| Thromboembolic events | 12 | 1 | 13 |
| Hemorrhagic events (other than digestive) | 8 | 2 | 10 |
| Arrhythmia | 1 | 0 | 1 |
| Arterial hypertension | 30 | 4 | 34 |
| Gastrointestinal complications | 34 | 14 | 48 |
| Digestive hemorrhage | 4 | 2 | 6 |
| Ileus | 2 | 0 | 2 |
| Fistulas/perforative complications | 3 | 9 | 12 |
| Abdominal pain | 6 | 1 | 7 |
| Gastritis | 1 | 0 | 1 |
| Stomatitis | 3 | 0 | 3 |
| Nausea/vomiting | 10 | 0 | 10 |
| Diarrhea | 5 | 2 | 7 |
| Genitourinary complications | 29 | 13 | 42 |
| Proteinuria | 29 | 4 | 33 |
| Renal insufficiency | 0 | 7 | 7 |
| Genitourinary fistula | 0 | 2 | 2 |
| Hematologic complications | 72 | 9 | 81 |
| Anemia | 34 | 0 | 34 |
| Leucopenia | 18 | 6 | 24 |
| Thrombocytopenia | 18 | 2 | 20 |
| Neutropenia | 2 | 0 | 2 |
| Pancytopenia | 0 | 1 | 1 |
| Others | 24 | 3 | 27 |
| Headache/migraine | 5 | 1 | 6 |
| Neuropathies | 6 | 0 | 6 |
| Rhinitis | 1 | 0 | 1 |
| Dyspnea | 3 | 2 | 5 |
| Dehydration | 3 | 0 | 3 |
| Anorexia | 1 | 0 | 1 |
| Cutaneous complications | 5 | 0 | 5 |

| Performance Metrics | Initial Clinical Score | Logistic Derived Score |
|---|---|---|
| ROC AUC | 0.555 | 0.720 |
| Accuracy | 0.590 | 0.680 |
| Sensitivity (Recall) | 0.472 | 0.642 |
| Specificity | 0.608 | 0.698 |
| F1-Score | 0.502 | 0.662 |
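
The metrics compared in the table above all come either from a confusion matrix obtained by thresholding a score or, in the case of ROC AUC, from the score's ranking of patients. The minimal Python sketch below shows those definitions. The labels and scores are hypothetical toy values, not study data. The cut-off of 4 points used to turn a score into a yes/no prediction is also an assumption, because the threshold behind the reported accuracy, sensitivity, specificity, and F1 is not stated in this section. The function names are illustrative only.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 = complication."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def roc_auc(y_true, scores):
    """Rank-based AUC: probability that a random positive case scores higher
    than a random negative case; ties (common with point scores) count 1/2."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def score_metrics(y_true, scores, threshold):
    """Predict a complication when score >= threshold, then compute the metrics."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)  # recall
    precision = tp / (tp + fp)
    return {
        "auc": roc_auc(y_true, scores),
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Hypothetical toy data (NOT study data): 1 = complication, scores = risk points.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [8, 6, 5, 2, 5, 3, 1, 0]
metrics = score_metrics(y_true, scores, threshold=4)
print(metrics)
# → {'auc': 0.84375, 'accuracy': 0.75, 'sensitivity': 0.75, 'specificity': 0.75, 'f1': 0.75}
```

AUC is threshold-free, whereas the other four metrics change with the chosen cut-off. This is why a score can show a moderate AUC yet quite different sensitivity/specificity trade-offs depending on where the cut-off is placed.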

| Variable | Categories and Points Awarded |
|---|---|
| Age | <65 years: 0 points; ≥65 years: 1 point |
| Serum urea | <40 mg/dL: 0 points; ≥40 mg/dL: 1 point |
| Leucocytes | <10,000/mm³: 0 points; ≥10,000/mm³: 1 point |
| Hemoglobin | ≥10 g/dL: 0 points; <10 g/dL: 1 point |
| Transaminases (TGO/TGP) | <40 U/L: 0 points; ≥40 U/L: 1 point |
| Creatinine | <1.5 mg/dL: 0 points; ≥1.5 mg/dL: 1 point |
| Stage | Stage I–II: 0 points; Stage III–IV: 1 point |
| Differentiation degree | G1–G2: 0 points; G3: 1 point |
| Lymphovascular invasion | Absent: 0 points; Present: 1 point |
| Type of cancer | Breast, ovarian, cervical: 0 points; colorectal, pulmonary, others: 1 point |

| Points | Risk Group | Probability of Complications |
|---|---|---|
| 0–3 points | Low risk | <25% |
| 4–6 points | Intermediate risk | 25–60% |
| 7–10 points | High risk | >60% |
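
The simplified score is a sum of ten binary items, and the total is mapped to one of three risk groups. The minimal Python sketch below expresses that arithmetic using the thresholds in the two tables above. It is not the authors' offline HTML calculator. Several details are assumptions: the field names, the cancer-type labels, and reading the transaminase item as "either TGO or TGP ≥ 40 U/L". The example patient is hypothetical.

```python
from dataclasses import dataclass

# Cancer types scored 0 points; all others (colorectal, pulmonary, others)
# score 1 point. These label strings are hypothetical.
ZERO_POINT_CANCERS = {"breast", "ovarian", "cervical"}


@dataclass
class Patient:
    age_years: float
    urea_mg_dl: float
    leucocytes_per_mm3: float
    hemoglobin_g_dl: float
    tgo_u_l: float          # TGO (AST)
    tgp_u_l: float          # TGP (ALT)
    creatinine_mg_dl: float
    stage: int              # 1-4
    grade: int              # 1-3 (G1-G3)
    lymphovascular_invasion: bool
    cancer_type: str


def risk_points(p: Patient) -> int:
    """Sum the ten binary items of the simplified score (0-10 points)."""
    items = [
        p.age_years >= 65,
        p.urea_mg_dl >= 40,
        p.leucocytes_per_mm3 >= 10_000,
        p.hemoglobin_g_dl < 10,
        # Assumption: the item scores if EITHER transaminase is >= 40 U/L.
        max(p.tgo_u_l, p.tgp_u_l) >= 40,
        p.creatinine_mg_dl >= 1.5,
        p.stage >= 3,
        p.grade == 3,
        p.lymphovascular_invasion,
        p.cancer_type.lower() not in ZERO_POINT_CANCERS,
    ]
    return sum(int(item) for item in items)


def risk_group(points: int) -> tuple[str, str]:
    """Map a point total to its risk group and probability band."""
    if not 0 <= points <= 10:
        raise ValueError(f"score must be between 0 and 10, got {points}")
    if points <= 3:
        return "Low risk", "<25%"
    if points <= 6:
        return "Intermediate risk", "25-60%"
    return "High risk", ">60%"


# Hypothetical patient, for illustration only.
example = Patient(
    age_years=70, urea_mg_dl=45, leucocytes_per_mm3=8_000, hemoglobin_g_dl=9.5,
    tgo_u_l=30, tgp_u_l=42, creatinine_mg_dl=0.9, stage=4, grade=3,
    lymphovascular_invasion=True, cancer_type="colorectal",
)
pts = risk_points(example)
print(pts, risk_group(pts))  # → 8 ('High risk', '>60%')
```

Because every item carries the same weight, the score is easy to compute at the bedside. The trade-off is that the finer differences captured by the underlying logistic regression coefficients are lost.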

| CDSS/Test | Oncology Domain | AI Type | Key Results |
|---|---|---|---|
| Yonsei Cancer Data Library [42] | Multiple cancer types | Multimodal deep learning (electronic health record + genomic data) | Personalized care for >170K patients; streamlined workflow |
| AI for Trial Eligibility [45] | Breast cancer | NLP + rule-based screening | Higher sensitivity and specificity vs. manual screening |
| MammaPrint [46,47,48] | Breast cancer | Genomic risk classifier (ML-based) | Helps avoid chemotherapy in low-risk patients (MINDACT validation) |
| DecisionDx-UM [49,50] | Uveal melanoma | Gene expression classifier (ML-based) | Accurate metastasis risk classification; accepted standard of care |
| VeriStrat [51,52] | NSCLC | Proteomic classifier (ML-based) | Predicts response to EGFR inhibitors such as erlotinib |
| LLM Agents [33,53] | Oncology simulations | LLM + retrieval-augmented generation | >91% accuracy in simulated oncologic decisions with explainability |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chitoran, E.; Rotaru, V.; Gelal, A.; Ionescu, S.-O.; Gullo, G.; Stefan, D.-C.; Simion, L. Using Artificial Intelligence to Develop Clinical Decision Support Systems—The Evolving Road of Personalized Oncologic Therapy. Diagnostics 2025, 15, 2391. https://doi.org/10.3390/diagnostics15182391
