1. Introduction

Intensive care unit (ICU) patients are at a heightened risk of infections, partly due to gut microbiota imbalances [1]. Accurate identification of pathogens is crucial for diagnosis and treatment. High-resolution classification of bacterial colonies, essential for morphological analysis, necessitates substantial computational resources [2].

This study employs a high-resolution bacterial colony dataset [3] comprising 24 clinically relevant pathogens, annotated to facilitate precise morphological differentiation. The dataset enables algorithmic development for automated pathogen detection in critical care, addressing the computational challenges associated with real-time classification in resource-constrained environments. However, convolutional neural networks (CNNs) [4,5,6,7] encounter significant obstacles when processing such complex imagery, primarily owing to high computational demands and parameter redundancy. This makes it difficult to achieve both real-time inference and high classification accuracy, potentially resulting in missed or erroneous detection of critical pathogens. For instance, despite its robust feature extraction capability, ResNet-50 [8] exhibits substantial parameter redundancy due to its deep architecture and fully connected layers, rendering it unsuitable for low-power embedded systems. MobileNet V2 [9,10,11] reduces parameter count via depthwise separable convolutions; however, its lightweight design may compromise the extraction of fine-grained local features necessary for distinguishing intricate colony morphologies. The Inception family of architectures enhances feature diversity through multi-scale convolutions, yet its branched structure frequently introduces redundant parameters, thereby increasing computational overhead. Although earlier models such as AlexNet [12,13] use a shallower architecture, they lack sufficient representational capacity to capture subtle details in high-resolution colony images, leading to suboptimal classification performance.

To address these limitations, this study proposes a two-stage hierarchical pruning framework for CNN model compression (THP-CNN) [14]. The framework substantially reduces computational complexity while maintaining reliable colony image recognition, offering a new approach to automatic, lightweight pathogen detection.

2. Materials and Methods

Conventional techniques for pruning superfluous parameters and accelerating inference in CNNs often face critical limitations:

Imprecise assessment of kernel significance: Heuristic or statistical methods for quantifying the contribution of convolutional kernels to feature extraction frequently fail to distinguish between critical and non-critical components, potentially discarding essential information inadvertently [15,16].

Overhead from auxiliary model training: Many approaches rely on auxiliary large-scale models to distill knowledge into compressed architectures. This dependency introduces additional computational and memory costs as training such models demands substantial time and resources, thereby inflating algorithmic complexity [17].

Decoupled optimization of compression and acceleration: Traditional strategies typically address parameter pruning and inference speedup independently, neglecting the interplay between these objectives. This disjointed approach overlooks potential synergies between network compression and architectural simplification [18].

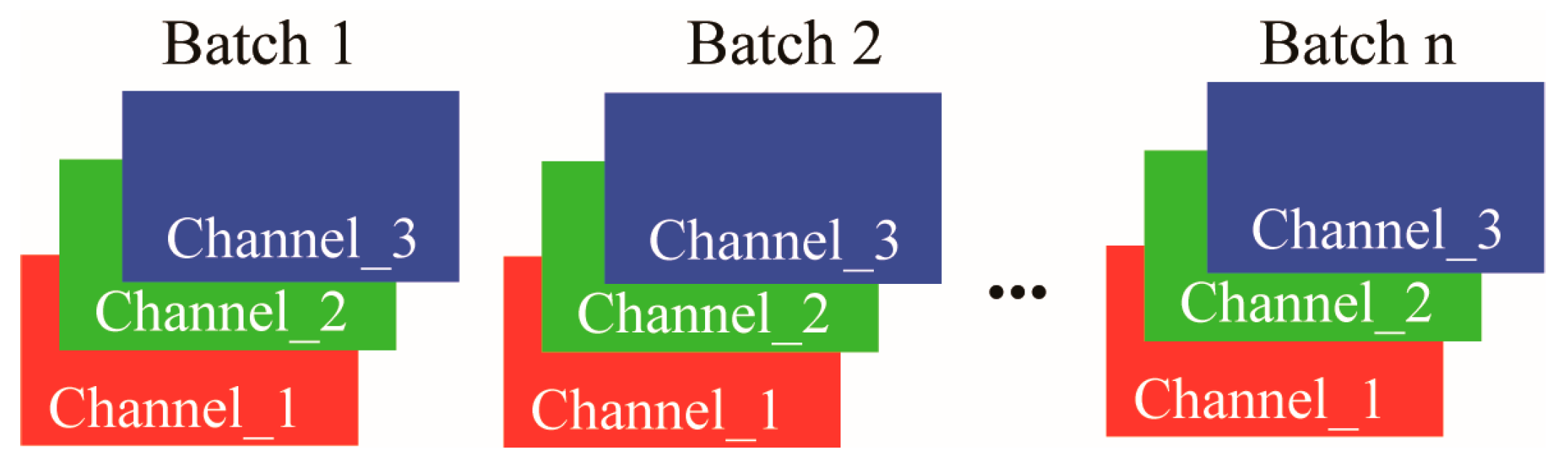

To address these challenges, the THP-CNN framework evaluates convolutional kernel importance through L2-norm quantification of the scaling parameters γ in batch normalization (BN) [19] layers. In contrast to conventional static pruning methods that depend on heuristic thresholds, or dynamic pruning approaches that incur additional computational costs, THP-CNN integrates γ into the gradient computation during backpropagation. This mechanism drives an algorithmic decay process that progressively pushes the scaling factors of redundant kernels toward zero, thereby eliminating the subjectivity associated with manually defined thresholds. By leveraging the normalization properties of BN layers, THP-CNN preserves feature integrity after pruning without requiring extensive retraining, effectively mitigating the performance degradation, often caused by dimensional mismatches, that is observed in traditional structured pruning methods such as filter pruning [20].
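The decay mechanism can be illustrated with a minimal sketch, assuming plain gradient descent on a few BN scaling factors and an added penalty λ·Σγ², whose gradient contributes 2λγ to each update. The task gradients, λ, learning rate, and helper names (`sgd_step`, `train`, `task_grad_fn`) are hypothetical choices for illustration, not the authors’ training code. Channels that receive no task gradient shrink geometrically toward zero, while channels the loss depends on settle at a nonzero value, so ranking by |γ| separates the two groups.

```python
# Minimal sketch with assumed values: gradient descent on BN scaling factors
# (gamma) when the loss includes an L2 penalty lam * sum(gamma ** 2).

def sgd_step(gammas, task_grads, lr, lam):
    """One gradient-descent step; the L2 penalty adds 2 * lam * gamma to each gradient."""
    return [g - lr * (tg + 2.0 * lam * g) for g, tg in zip(gammas, task_grads)]


def train(gammas, task_grad_fn, lr=0.1, lam=0.5, steps=50):
    """Run `steps` updates, querying the (hypothetical) task-loss gradient each time."""
    for _ in range(steps):
        gammas = sgd_step(gammas, task_grad_fn(gammas), lr, lam)
    return gammas


def task_grad_fn(gammas):
    """Hypothetical task-loss gradient: channels 0 and 2 are pulled toward 1.0,
    channels 1 and 3 receive no gradient from the task loss at all."""
    used = [1.0, 0.0, 1.0, 0.0]
    return [u * (g - 1.0) for g, u in zip(gammas, used)]


initial = [0.8, 0.8, 0.9, 0.7]
final = train(initial, task_grad_fn)
# Rank channels from least to most important by |gamma|.
ranking = sorted(range(len(final)), key=lambda i: abs(final[i]))
print([round(g, 4) for g in final])   # unused channels end up near zero
print("least important first:", ranking)
```

The penalty also pulls useful channels below their unpenalized optimum (here from 1.0 to 0.5), so λ trades compression pressure against fitting accuracy.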

Furthermore, THP-CNN introduces a principle of receptive field equivalence for convolutional layer fusion. This principle exploits the fact that cascaded convolutional operations cover the same spatial extent as a single convolution with a larger kernel (for example, two stacked 3 × 3 convolutions with stride 1 and one 5 × 5 convolution), enabling structural compression by consolidating sequential layers into a unified operation with an equivalent receptive field. The fusion reduces both network depth and parameter count while preserving representational capacity. Compared with low-rank decomposition techniques such as singular value decomposition, the proposed approach avoids the approximation errors inherent in matrix factorization and is effective for chain-structured architectures such as VGG. It reduces network depth and departs from the narrow optimization pathway of conventional lightweight models such as MobileNet, which rely primarily on depthwise separable convolutions.
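The receptive field equivalence can be checked numerically. The sketch below, in plain Python with hypothetical helper names, composes two 3 × 3 kernels into a single 5 × 5 kernel and confirms that one unpadded, stride-1 convolution with the merged kernel reproduces two stacked convolutions. It assumes a single channel, square kernels, no bias, and no nonlinear activation between the two layers; the composition is exact only under that last condition, since an activation such as Mish breaks linearity. For multiple channels, the merged kernel for each output/input channel pair is the sum of such compositions over the intermediate channels.

```python
import random


def xcorr2d_valid(x, k):
    """Unpadded, stride-1 2D cross-correlation (the operation CNN 'convolutions' compute)."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(k[a][b] * x[p + a][q + b] for a in range(kh) for b in range(kw))
             for q in range(ow)]
            for p in range(oh)]


def merge_kernels(first, second):
    """Single kernel equivalent to applying `first`, then `second` (square kernels).

    The merged size is k1 + k2 - 1, so two 3x3 kernels give one 5x5 kernel:
    merged[u][v] = sum over (i, j) of second[i][j] * first[u - i][v - j].
    """
    k1, k2 = len(first), len(second)
    size = k1 + k2 - 1
    merged = [[0.0] * size for _ in range(size)]
    for i in range(k2):
        for j in range(k2):
            for a in range(k1):
                for b in range(k1):
                    merged[i + a][j + b] += second[i][j] * first[a][b]
    return merged


rng = random.Random(0)
x = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(8)]
k3a = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
k3b = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]

two_layers = xcorr2d_valid(xcorr2d_valid(x, k3a), k3b)  # 8x8 -> 6x6 -> 4x4
k5 = merge_kernels(k3a, k3b)                             # one 5x5 kernel
one_layer = xcorr2d_valid(x, k5)                         # 8x8 -> 4x4
max_err = max(abs(p - q)
              for row2, row1 in zip(two_layers, one_layer)
              for p, q in zip(row2, row1))
print(len(k5), max_err)  # max_err is at floating-point round-off level
```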

THP-CNN also adopts a lightweight design that does not rely on auxiliary components, thereby circumventing the limitations of knowledge distillation, which requires a separate teacher model, and dynamic pruning, which depends on specialized hardware support [21]. The method directly produces a compact and deployable model upon completion of pruning. Its core contribution resides in the integration of mathematically equivalent transformations into the model compression pipeline, enabling synergistic optimization of pruning and layer fusion. This allows for substantial parameter reduction while preserving the model’s feature representation capability.

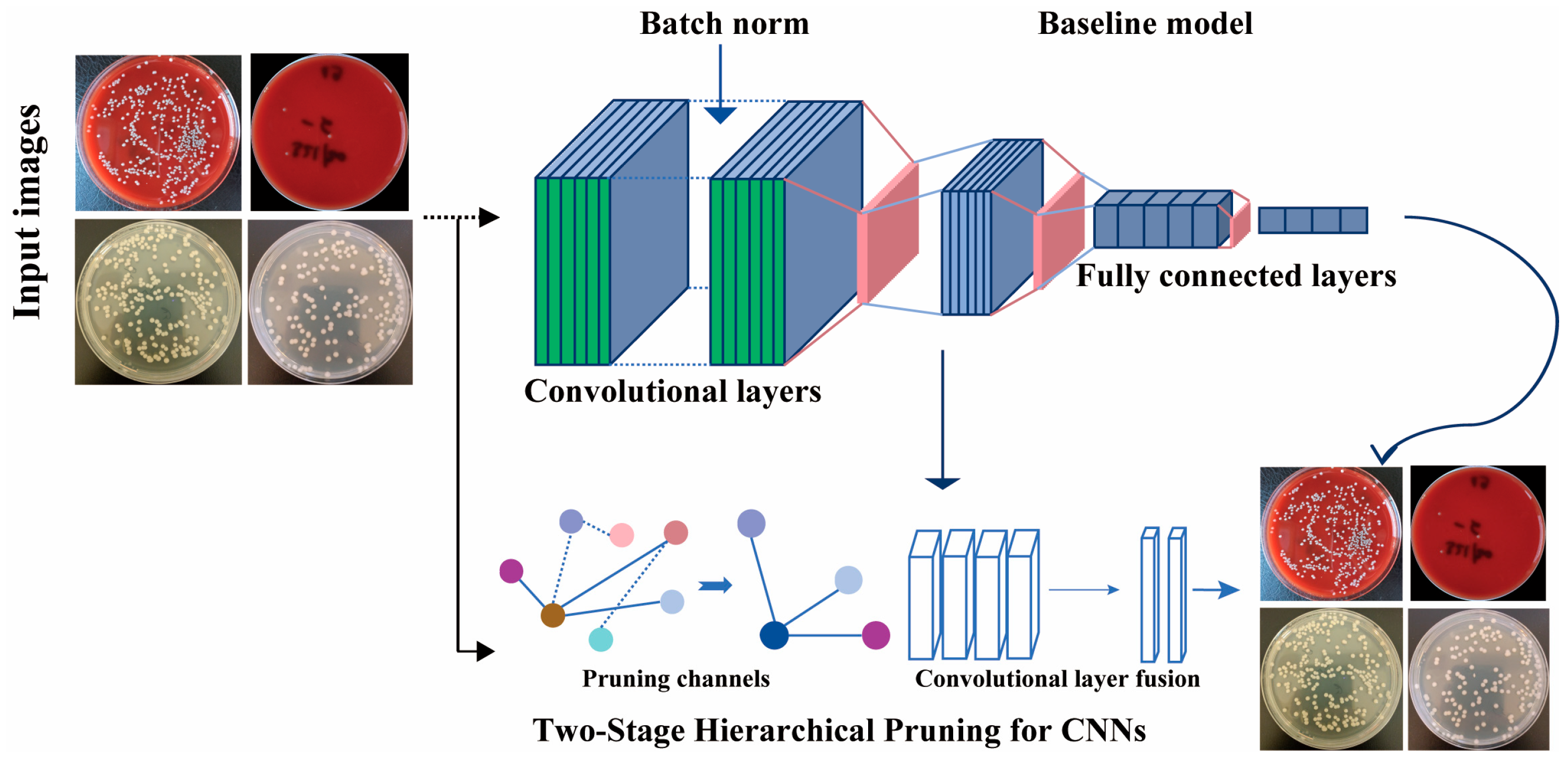

The proposed methodology (Figure 1) integrates BN layers with convolutional operations, eliminating the need for additional parameters. By incorporating the L2 norm of the scaling factor γ into the training process, the model adjusts γ through gradient updates, progressively reducing it across training epochs. This mechanism assigns implicit importance scores to convolutional kernels based on their γ values, enabling the systematic pruning of low-priority components. The retained kernels are those deemed most salient, that is, those to which feature extraction is most sensitive. Furthermore, the framework leverages receptive field equivalence (Figure 2) to consolidate convolutional layers, achieving structural simplification without compromising model performance.

2.1. Dataset

We conducted evaluations using a publicly available dataset of bacterial colonies [3], specifically curated for deep-learning-based colony detection in clinical microbiology contexts. This dataset comprises 369 high-resolution digital images of 24 bacterial species relevant to infectious diseases, including pathogens commonly associated with infections in patients. Each image is annotated with precise bounding boxes around individual colonies, enabling accurate object detection and classification tasks. Notably, the dataset was acquired under non-standardized, real-world laboratory conditions using mobile phone cameras, with variations in lighting and background to enhance generalizability. While the dataset includes metadata specifying the background type for each image, it does not provide segmentation masks for non-colony regions such as the Petri dish walls or other artifacts. In our experiments, we leveraged the background information provided to minimize interference from non-biological elements during preprocessing.

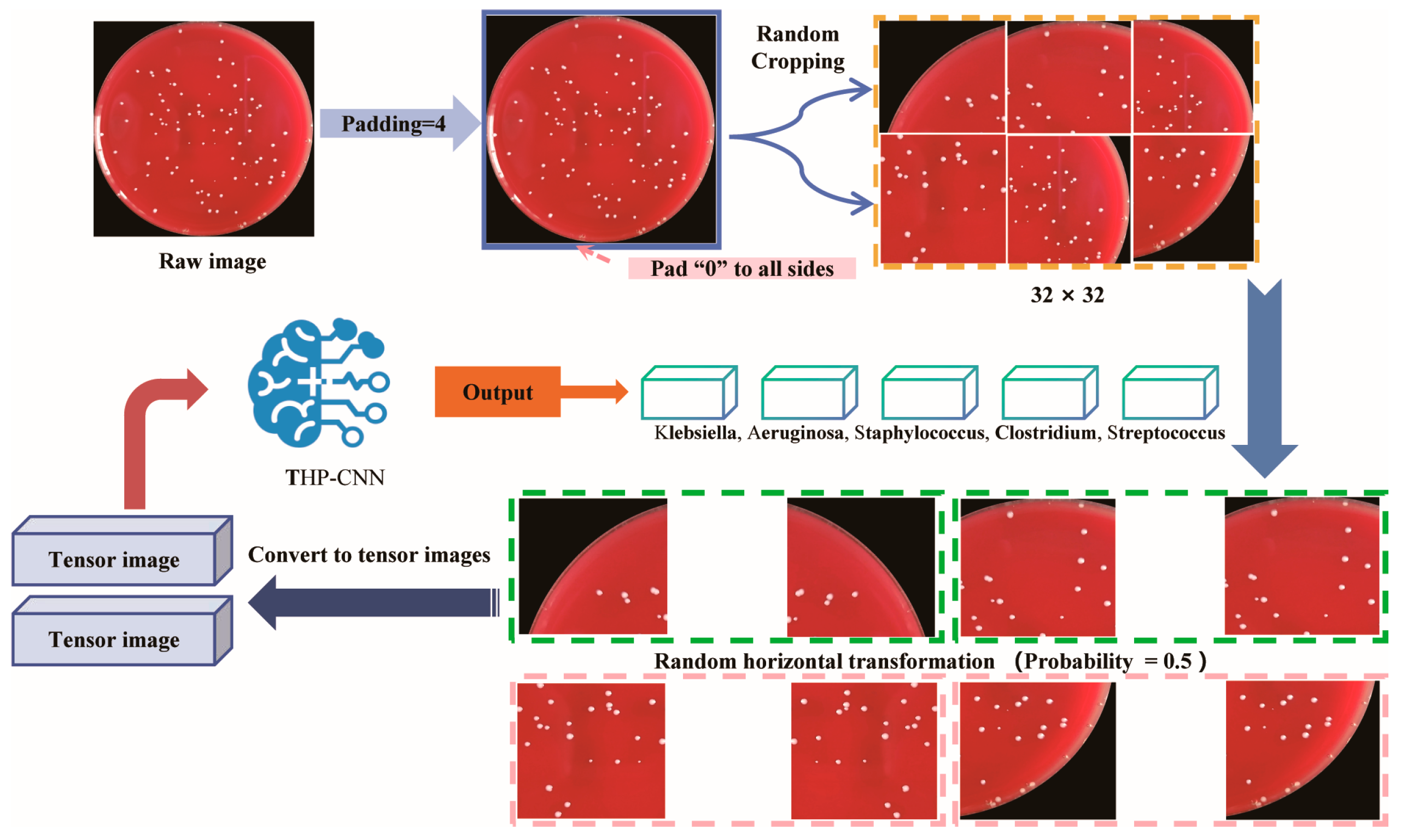

2.2. Data Augmentation

Data preprocessing is crucial for ensuring the accuracy of experimental results and enhancing the generalization capability of models [22]. To ensure the transparency of our research and the reproducibility of our experiments, we disclose the complete preprocessing pipeline applied to the original images. Before the images were fed into the model for training, initial screening and categorization were performed based on the metadata provided with the dataset (such as background color and Petri dish type) to exclude samples with abnormal imaging conditions or low annotation quality.

Subsequently, a series of standardization and data augmentation operations was applied to all retained images. Specifically, original images were resized to a fixed resolution to meet network input requirements, and normalization was applied to map pixel values to the numerical range required for model training. To further enhance the model’s adaptability to diverse morphologies and spatial distributions, several data augmentation strategies were introduced: random cropping, to simulate colonies located in different regions of the field of view and thereby improve the model’s sensitivity to local features; horizontal and vertical flipping [20,23,24,25], to increase sample diversity and improve robustness to changes in orientation; and additional augmentations, such as random rotations, brightness adjustments, and contrast perturbations, to mimic the effects of uneven lighting or angular deviations encountered during actual image capture (Figure 3).
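The preprocessing steps listed above can be sketched as follows. This is a minimal plain-Python illustration on a single-channel image stored as nested lists with values in [0, 1]; the crop size, flip probabilities, brightness and contrast ranges, and normalization statistics are assumed values, resizing is omitted, and rotations are restricted to multiples of 90° for simplicity, since the rotation angles used in this study are not specified. In practice such a pipeline would normally be built from an image library’s transforms.

```python
import random


def random_crop(img, size, rng):
    """Cut a size x size window at a random position."""
    h, w = len(img), len(img[0])
    top, left = rng.randint(0, h - size), rng.randint(0, w - size)
    return [row[left:left + size] for row in img[top:top + size]]


def hflip(img):
    return [row[::-1] for row in img]


def vflip(img):
    return img[::-1]


def rot90(img):
    """Rotate 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def brightness_contrast(img, brightness, contrast):
    """Scale deviations from the mean (contrast), shift (brightness), clamp to [0, 1]."""
    flat = [v for row in img for v in row]
    mean = sum(flat) / len(flat)
    return [[min(1.0, max(0.0, (v - mean) * contrast + mean + brightness)) for v in row]
            for row in img]


def augment(img, crop_size, rng):
    out = random_crop(img, crop_size, rng)
    if rng.random() < 0.5:
        out = hflip(out)
    if rng.random() < 0.5:
        out = vflip(out)
    for _ in range(rng.randint(0, 3)):  # 0, 90, 180 or 270 degrees
        out = rot90(out)
    return brightness_contrast(out, rng.uniform(-0.1, 0.1), rng.uniform(0.8, 1.2))


def normalize(img, mean, std):
    return [[(v - mean) / std for v in row] for row in img]


rng = random.Random(42)
image = [[(r * 16 + c) / 255 for c in range(16)] for r in range(16)]  # toy 16x16 image
sample = augment(image, crop_size=12, rng=rng)
model_input = normalize(sample, mean=0.5, std=0.25)
```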

2.3. Training Strategy

2.3.1. Hardware Configuration

The system is equipped with 256 GB of memory. The CPU is an Intel Xeon Silver 4510 processor (Intel, Santa Clara, CA, USA), featuring two physical sockets with 12 cores per socket and 2 threads per core, resulting in a total of 48 logical CPU threads. It operates within a frequency range of 800 MHz (minimum) to 4.10 GHz (maximum) and is based on the x86_64 architecture, ensuring sufficient computational and scheduling capabilities on the CPU side during the training process. For GPU acceleration, the system integrates two NVIDIA RTX A6000 graphics cards (NVIDIA, Santa Clara, CA, USA), each with approximately 48 GB (49,140 MiB) of VRAM. The GPU driver version is 570.133.07, supporting CUDA 12.8.

2.3.2. Hyperparameter Configuration

The experimental setup is configured as follows: the weight decay is set to …, momentum is fixed at 0.9, the initial learning rate is 0.001, the batch size is 32, and training runs for 160 epochs. The learning rate is decayed by a factor of 10 at epochs 80 and 120 to facilitate convergence in the later stages of training. For all models, the Mish [26] activation function is adopted, which has been shown to improve gradient flow and model performance through its smooth, non-monotonic properties. This configuration is applied consistently across all baseline and proposed models to ensure a fair comparison.
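The learning rate schedule and the activation can be written out in a few lines. This sketch assumes 0-indexed epochs with the decay applied at the start of epochs 80 and 120, which matches the behavior of PyTorch’s `MultiStepLR` with `milestones=[80, 120]` and `gamma=0.1` if a PyTorch training loop was used (an assumption); Mish is defined as x · tanh(softplus(x)). The function names are illustrative.

```python
import math


def learning_rate(epoch, base_lr=0.001, milestones=(80, 120), factor=0.1):
    """Step schedule: multiply the base LR by `factor` once per milestone already reached.

    Epochs are 0-indexed, so epochs 0-79 use 1e-3, 80-119 use 1e-4, 120-159 use 1e-5.
    """
    return base_lr * factor ** sum(1 for m in milestones if epoch >= m)


def mish(x):
    """Mish activation: x * tanh(softplus(x)), with softplus(x) = ln(1 + e^x)."""
    softplus = max(x, 0.0) + math.log1p(math.exp(-abs(x)))  # overflow-safe softplus
    return x * math.tanh(softplus)


schedule = [learning_rate(e) for e in range(160)]
print(schedule[0], schedule[80], schedule[120])
print([round(mish(v), 4) for v in (-2.0, -1.0, 0.0, 1.0, 2.0)])
```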

2.3.3. Pruning Ratio

Applying the pruning ratio depends on the original pre-trained CNN model, because channel importance is assessed from the weights of that pre-trained model. First, the parameters of all BN layers are extracted. Because the BN scaling coefficients γ undergo L2 regularization decay during pre-training, their absolute values reflect the importance of the corresponding channels, with larger absolute values indicating more significant contributions. Next, the absolute γ values from every two consecutive BN layers are grouped together; each group corresponds to two successive 3 × 3 convolutional layers that will subsequently be merged into an equivalent 5 × 5 convolution. All such groups are then aggregated into a single global set. Finally, the absolute values in this global set are sorted in ascending order, the pruning index is determined from a predefined pruning ratio (e.g., 50%, 60%, or 70%), and the channels ranked below that index are pruned.
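The global ranking procedure can be sketched as follows, assuming each BN layer’s γ values are available as a plain list in network order and that layers pair up as (0, 1), (2, 3), and so on. The function name `global_prune_masks`, the tie handling (values equal to the threshold are pruned), and the toy γ values are hypothetical; a practical implementation would also keep at least one channel per layer and physically remove the masked channels from the adjacent convolutions.

```python
def global_prune_masks(bn_gammas, ratio):
    """Per-layer keep masks from one global |gamma| threshold.

    bn_gammas: per-layer lists of BN scaling factors, in network order. Layers
    (0, 1), (2, 3), ... form one group, mirroring two 3x3 convs that are later
    merged into one 5x5 conv. Values tied with the threshold are pruned.
    """
    if len(bn_gammas) % 2:
        raise ValueError("expected pairs of BN layers (two 3x3 convs per group)")
    groups = [bn_gammas[i] + bn_gammas[i + 1] for i in range(0, len(bn_gammas), 2)]
    pooled = sorted(abs(g) for group in groups for g in group)  # global set, ascending
    cut = int(len(pooled) * ratio)                               # pruning index
    if cut == 0:
        return [[True] * len(layer) for layer in bn_gammas]
    threshold = pooled[cut - 1]  # largest |gamma| inside the pruned fraction
    return [[abs(g) > threshold for g in layer] for layer in bn_gammas]


# Toy gamma values (hypothetical) for two pairs of BN layers with 4 channels each.
gammas = [
    [0.90, 0.02, 0.45, 0.10],
    [0.05, 0.70, 0.30, 0.01],
    [0.60, 0.03, 0.25, 0.80],
    [0.15, 0.50, 0.04, 0.35],
]
masks = global_prune_masks(gammas, ratio=0.5)
kept = sum(m for layer in masks for m in layer)
print(masks, kept)
```

Because the threshold is global, layers whose γ values are uniformly small lose more channels than others, which is what allows the ratio to be applied to the network as a whole rather than layer by layer.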

2.4. Cross-Validation and Statistical Evaluation of Model Performance

For the classification tasks, we report the best-fold metrics for all models (Table 1). Additionally, all models were evaluated using cross-validation [27] (Table 2), with each fold partitioned into training, validation, and test sets at a ratio of 6:2:2. The standard deviation (SD) [28] quantifies the variability of evaluation metrics, such as accuracy and precision, across the folds of cross-validation (i.e., across distinct data subsets). A smaller SD indicates that model performance is less sensitive to minor variations in data distribution, reflecting greater stability. The 95% confidence interval (95% CI) gives the statistically estimated range for the true mean performance: an interval constructed this way would contain the true value in 95% of repeated experiments, and a narrower interval reflects higher estimation precision and greater confidence in generalizing model performance to real-world scenarios. Used together, SD and 95% CI provide a comprehensive assessment of model robustness and reliability and a sound statistical foundation for evaluating model performance.
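The fold construction and the summary statistics can be reproduced with the standard library. This sketch assumes five folds in which fold k tests on chunk k, validates on the next chunk, and trains on the remaining three (one way to obtain a 6:2:2 ratio; the exact rotation used in this study is not stated), and a t-based 95% CI for the mean, mean ± t(0.975, n − 1) · SD/√n. A normal approximation (1.96 instead of the t quantile) would give a narrower interval. The function names are hypothetical, and the fold accuracies in the example are invented for illustration, not results from this study.

```python
import math
import random
import statistics

# 0.975 quantiles of Student's t (two-sided 95% CI), keyed by degrees of freedom.
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def five_fold_622(n_samples, seed=0):
    """Yield (train, val, test) index lists for 5 folds with a 6:2:2 ratio.

    Fold k tests on chunk k, validates on chunk k + 1 (mod 5), trains on the rest.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    chunks = [idx[c::5] for c in range(5)]
    for k in range(5):
        test = chunks[k]
        val = chunks[(k + 1) % 5]
        train = [i for c in range(5) if c not in (k, (k + 1) % 5) for i in chunks[c]]
        yield train, val, test


def summarize(fold_scores):
    """Mean, sample SD (n - 1 denominator), and t-based 95% CI of the mean."""
    n = len(fold_scores)
    mean = statistics.mean(fold_scores)
    sd = statistics.stdev(fold_scores)
    half_width = T_975[n - 1] * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


folds = list(five_fold_622(369))                     # 369 images, as in the dataset
mean, sd, ci = summarize([0.78, 0.83, 0.76, 0.80, 0.78])  # made-up fold accuracies
print([tuple(map(len, f)) for f in folds])
print(f"{mean:.2f} ± {sd:.3f}, 95% CI {ci[0]:.3f}–{ci[1]:.3f}")
```

Splitting by image index keeps all colonies from one image within a single subset, which avoids leaking plate-level appearance between training and test data.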

3. Results

This study evaluates the 24-class classification performance of the THP-CNN model (with pruning rates of 50%, 60%, and 70%) against four benchmark models (ResNet-50, MobileNet V2, Inception, and AlexNet) on an annotated dataset comprising 24 clinically relevant bacterial species (369 images; 56,865 annotated colonies). Cross-validation was employed to assess model stability; the key findings are presented below.

3.1. Superiority of the THP-CNN Model

3.1.1. Best-Fold Performance Comparison

Regarding best-fold metrics, the THP-CNN series demonstrated clear advantages. The 70% pruned THP-CNN achieved the highest accuracy of 0.86, outperforming MobileNet V2 (0.81), ResNet-50 (0.72), Inception (0.74), and AlexNet (0.62). The 70% pruned THP-CNN also led with a precision of 0.85, surpassing the other models (0.61–0.82). The 60% pruned THP-CNN achieved a recall of 0.84, outperforming MobileNet V2 (0.81) and ResNet-50 (0.72). Both the 60% and 70% pruned THP-CNN models attained an F1 score of 0.82, outperforming MobileNet V2 (0.80) and the remaining models (0.59–0.74).

Regarding model compactness, the parameter count of THP-CNN decreased substantially with an increasing pruning rate: the 70% pruned version had only 0.62 M parameters, which was considerably lower than ResNet-50 (23.56 M), Inception (21.83 M), AlexNet (57.10 M), and MobileNet V2 (2.25 M), demonstrating superior parameter efficiency.

3.1.2. Cross-Validation Stability Analysis

The cross-validation results (mean ± SD, 95% CI) further support the overall superiority of THP-CNN. The 50% and 60% pruned THP-CNN models both achieved a mean accuracy of 0.79, higher than ResNet-50 (0.69 ± 0.02), MobileNet V2 (0.76 ± 0.03), Inception (0.62 ± 0.11), and AlexNet (0.58 ± 0.02). Moreover, their narrower 95% confidence intervals (0.74–0.84) indicate more precise and consistent performance estimates than the wider interval of the more volatile Inception model (0.48–0.75).

The mean trends for precision, recall, and F1 score align with those of accuracy: the 50% pruned THP-CNN achieved a precision of 0.77 ± 0.03, recall of 0.78 ± 0.04, and an F1 score of 0.75 ± 0.04, outperforming most baseline models. The 60% pruned THP-CNN showed a slight decrease in precision (0.75 ± 0.06) but maintained strong overall performance. Regarding model size, the THP-CNN variants exhibited mean parameter counts (0.77–3.01 M) well below those of the larger benchmark models (ResNet-50, Inception, and AlexNet), with low standard deviations (0.18–0.50), indicating controllable and consistent parameter reduction during pruning.

In sum, the THP-CNN model achieves a favorable balance between performance and efficiency in the 24-class bacterial colony classification task. Through its pruning strategy, it maintains or surpasses the classification accuracy of benchmark models while drastically reducing model size. Furthermore, the 50% and 60% pruned variants exhibit enhanced generalization stability, thereby providing a robust and efficient solution for the automated detection and classification of bacterial colonies.

3.2. Mathematical Modeling

To identify and prune superfluous convolutional kernels [26,27,28], we impose an L2 penalty on the scaling factors (γ) of the batch normalization (BN) layers associated with each kernel. This penalty is incorporated into the overall loss function during training. The magnitude of each γ coefficient thereby serves as a proxy for its corresponding kernel’s importance; kernels whose γ values are driven toward zero by the L2 regularization are deemed redundant and are subsequently pruned. The modified objective function is formulated as follows:

For a weight matrix W, the L2-norm objective function is defined as follows:
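Written out in standard notation, one form consistent with this description is shown below; the symbols L_task, λ, Γ (the set of all BN scaling factors), η, and w_ij are introduced here for illustration and may differ from the authors’ exact formulation. The first line is the penalized objective, the second the entry-wise L2 (Frobenius) norm of a weight matrix W ∈ ℝ^{m×n}, and the third the resulting gradient step on each γ, in which the 2λγ term drives unneeded scaling factors toward zero.

$$
\begin{aligned}
L &= L_{\text{task}}(W, \Gamma) + \lambda \sum_{\gamma \in \Gamma} \gamma^{2},\\
\lVert W \rVert_{F} &= \Big( \sum_{i=1}^{m} \sum_{j=1}^{n} w_{ij}^{2} \Big)^{1/2},\\
\gamma &\leftarrow \gamma - \eta \Big( \frac{\partial L_{\text{task}}}{\partial \gamma} + 2\lambda\gamma \Big).
\end{aligned}
$$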

The role of BN [21,29,30] in optimizing convolutional neural network training is well documented. This study places a normalization layer after the convolutional layers to stabilize the distributions of intermediate features. By reducing intra-batch statistical variability and accelerating gradient propagation, this strategy improves the efficiency of model training. The significance of convolutional kernels is evaluated using the γ coefficients defined in Equation (2), which guide the selective elimination of filters with minimal contribution. The proposed pruning framework is illustrated in Figure 2, highlighting the integration of normalization metrics into the model compression pipeline:
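For reference, the standard BN transform for a single channel over a mini-batch of m activations x_1, …, x_m is given below, written with this article’s symbols γ and b; the batch statistics μ_B and σ_B² and the small constant ε follow the usual BN definition rather than notation taken from the source.

$$
\mu_{\mathcal{B}} = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_{\mathcal{B}}^{2} = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_{\mathcal{B}}\right)^{2}, \qquad
\hat{x}_i = \frac{x_i - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^{2} + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + b.
$$

Because γ multiplies the normalized activation, a channel whose γ is close to zero emits an almost constant output b regardless of its input, which is why small |γ| values mark channels that can be removed with little loss of information.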

Although normalization partly alleviates gradient saturation, it also constrains the layer’s representational capacity (for example, by keeping the inputs of a saturating nonlinearity in its near-linear regime), which can compromise model generalization. To overcome this constraint, the BN layer applies an adaptive linear transformation after normalization to restore the representational properties of the input data. This transformation uses two learnable parameters, γ (scaling coefficient) and b (offset parameter), to restore feature diversity and maintain expressive capacity.

This study proposes an approach that adjusts the number of filters while preserving the effective receptive field dimensions in CNNs. The receptive field denotes the spatial extent in the input image that contributes to a specific feature map element at a given network layer; from the input perspective, it also characterizes the region from which the CNN extracts discriminative patterns. Decreasing the filter count reduces the total number of multiply–accumulate operations, thereby improving computational efficiency and accelerating inference. The pruned architecture must retain the filters essential for model accuracy, which requires careful optimization to prevent performance degradation. To balance computational efficiency with feature representation fidelity, we use receptive field equivalence transformations to minimize redundant filters. The receptive field size is calculated as follows:

$$R_l = R_{l-1} + (k_l - 1)\prod_{i=1}^{l-1} s_i$$

In this expression, R_l denotes the receptive field size at the l-th layer (with R_0 = 1 at the input), k_l represents the kernel size of the convolutional filter in the l-th layer, and s_i is the stride applied during convolution in the i-th layer. By exploiting the equivalence in receptive field between two sequential convolutional operations and a single convolutional operation, the proposed approach simplifies the architecture through layer reduction.
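The receptive field recursion can be evaluated with a short helper (the name `receptive_field` is hypothetical). It takes (kernel size, stride) pairs, ignores dilation and pooling, and confirms that two stacked 3 × 3 stride-1 convolutions cover the same 5 × 5 input region as a single 5 × 5 convolution.

```python
def receptive_field(layers):
    """Receptive field of a stack of conv layers given (kernel_size, stride) pairs.

    Implements R_l = R_{l-1} + (k_l - 1) * prod_{i<l} s_i with R_0 = 1.
    Dilation and pooling are not modeled.
    """
    rf, jump = 1, 1  # jump: product of the strides of all preceding layers
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(3, 1), (3, 1)]))  # two stacked 3x3 convs
print(receptive_field([(5, 1)]))          # one 5x5 conv
print(receptive_field([(3, 2), (3, 1)]))  # a stride-2 first layer widens the second layer's reach
```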

4. Discussion

This study systematically compares THP-CNN (with pruning rates of 50%, 60%, and 70%) against ResNet-50, MobileNet V2, Inception, and AlexNet on a 24-class bacterial colony classification task. THP-CNN achieves a markedly better balance between performance and parameter efficiency than the baseline models, maintaining an accuracy of 0.86 at 70% pruning (Table 1); this advantage is rooted in its structured pruning framework and network optimization logic.

THP-CNN employs a two-stage hierarchical pruning framework that quantifies convolutional kernel importance via the L2 norm of the scaling parameters γ in batch normalization (BN) layers, integrating γ into gradient computation during backpropagation. This mechanism lets the scaling factors of redundant kernels decay toward zero during training, substantially mitigating the subjectivity of manual thresholds in traditional static pruning and avoiding the additional computational cost of dynamic pruning. Consequently, THP-CNN identifies and retains the most salient convolutional kernels, those most relevant to bacterial colony features (e.g., edge textures and color gradients), while eliminating noise-sensitive redundant parameters, which helps explain its high accuracy of 0.86 at 70% pruning.

In contrast, ResNet-50 (23.56 M parameters) mitigates vanishing gradients via residual connections but suffers from inherent redundancy in its standard convolutional layers; its lower accuracy (0.72) may be attributable to excessive feature channels that also encode noise irrelevant to colony classification. MobileNet V2 (2.25 M parameters) relies on depthwise separable convolutions for parameter compression, a strategy that may compromise the extraction of critical colony features; its accuracy of 0.81 is below that of THP-CNN’s 70% pruned model (0.86). THP-CNN introduces a convolutional layer fusion principle based on receptive field equivalence: by leveraging the spatial equivalence between two cascaded 3 × 3 convolutions and a single 5 × 5 convolution, consecutive layers are consolidated into unified operations with equivalent receptive fields. This reduces network depth and brings the parameter count down to 0.62 M at 70% pruning, far below MobileNet V2’s 2.25 M, while avoiding the approximation errors of low-rank decompositions (e.g., singular value decomposition). This illustrates the principle of “precise compression rather than uniform simplification”.

Inception theoretically accommodates morphological diversity via parallel multi-scale convolutions but exhibits high sensitivity to data variability (accuracy: 0.62 ± 0.11; 95% CI: 0.48–0.75), whereas THP-CNN leverages BN layer normalization to maintain feature integrity after pruning, requiring minimal retraining and yielding more stable generalization (95% CI: 0.74–0.84) with less performance fluctuation. AlexNet (57.10 M parameters; accuracy: 0.62) lacks feature reuse mechanisms because of its simple layer stacking, highlighting the limitations of traditional architectures in fine-grained feature extraction.

THP-CNN achieves extreme parameter reduction (0.62–3.01 M) without auxiliary components (e.g., teacher models or specialized hardware), using γ-guided kernel selection and receptive field fusion to capture subtle colony features. Moderate pruning (50% and 60%) maintains a mean accuracy of 0.79 in cross-validation, suggesting that γ-guided decay preserves core feature channels. However, 70% pruning shows reduced stability (95% CI: 0.59–0.88), indicating that pruning should prioritize feature preservation over minimizing parameter count.

This study’s dataset comprises 369 images and 56,865 annotated colonies, a relatively limited scale that may constrain the learning of rare morphologies. To mitigate overfitting, various data augmentation techniques (e.g., rotation and brightness adjustment) were implemented. Future work will explore joint training with multiple bacterial colony datasets to enhance sample diversity. The present results suggest that THP-CNN’s lightweight design is well suited to small-data scenarios.

In conclusion, THP-CNN establishes an efficient framework for bacterial colony classification through γ-driven dynamic pruning, structural optimization based on receptive field fusion, and a lightweight design free of auxiliary dependencies. It achieves extreme parameter reduction while preserving key features, demonstrating clear advantages over ResNet-50 (residual connections), MobileNet V2 (uniform compression), Inception (multi-scale sensitivity), and AlexNet (traditional stacking), even under limited data conditions. It thus offers a potentially generalizable paradigm for lightweight model design in microbiological image classification.

5. Conclusions

This study proposes THP-CNN, a structured model compression framework that integrates channel importance estimation with receptive field transformation via layer fusion. The framework achieves a favorable efficiency–accuracy trade-off: at 60% pruning, THP-CNN attains an accuracy of 0.85 with 1.19 million parameters, outperforming all four baseline CNN models; at 70% pruning, it maintains a high accuracy of 0.86 with only 0.62 million parameters, demonstrating exceptional compression efficiency. THP-CNN eliminates the reliance on manual thresholding in structured pruning through a γ-guided dynamic decay mechanism during training, which adaptively suppresses redundant convolutional kernels while preserving essential feature representations. This approach addresses the dimensional mismatches inherent in conventional pruning methods and ensures structural coherence in the pruned network. The mechanism effectively removes noise-sensitive parameters while retaining critical morphological features such as edge textures and color gradients, as evidenced by the model’s high accuracy even under aggressive pruning. Experimental results confirm the effectiveness of THP-CNN in enabling efficient model compression for resource-constrained inference. By integrating importance quantification and structural optimization, this work explores a new avenue for structured pruning and provides insights into creating generalizable, lightweight models for microbiological image classification.

Author Contributions

K.L. conceived the project, analyzed the dataset utilization methodology, and revised the manuscript; C.X. implemented the code, organized the experimental data, and wrote the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the fund from Macao Polytechnic University (RP/FCA-14/2023), The Science and Technology Development Funds (FDCT) of Macao (0033/2023/RIB2), and Macau Science and Technology Development Fund and the Department of Science and Technology of Guangdong Province (FDCT-GDST, 0009/2024/AGJ) with the submission approval code of fca.d1f0.f0d3.f. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

We would like to acknowledge the support of the Faculty of Applied Sciences, Macao Polytechnic University, and note that the submission number for this manuscript is [fca.d1f0.f0d3.f].

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional Neural Network |
| THP-CNN | Two-Stage Hierarchical Pruning CNN method |
| ICU | Intensive Care Unit |
| BN | Batch Normalization |
| 95% CI | 95% Confidence Interval |
| SD | Standard Deviation |

References

- Neag, M.A.; Mitre, A.O.; Pomana, I.G.; Velescu, M.A.; Militaru, C.; Nagy, G.; Melincovici, C.S. Host–Microbiome Interaction in the Intensive Care Unit. Diseases 2025, 13, 250.

- Bravo-Frank, N.; Zende, R.; Feng, L.; Mesyngier, N.; Pachpute, A.; Hong, J. Realtime bacteria detection and analysis in sterile liquid products using deep learning holographic imaging. NPJ Biosens. 2024, 1, 8.

- Makrai, L.; Fodróczy, B.; Nagy, S.Á.; Czeiszing, P.; Csabai, I.; Szita, G.; Solymosi, N. Annotated dataset for deep-learning-based bacterial colony detection. Sci. Data 2023, 10, 497.

- Aboukhair, M.; Alsheref, F.; Assiri, A.; Koura, A.; Kayed, M. CNN filter sizes, effects, limitations, and challenges: An exploratory study. In Network: Computation in Neural Systems; Taylor & Francis: London, UK, 2025; pp. 1–29.

- Ma, X.; Wang, W.; Li, W.; Wang, J.; Ren, G.; Ren, P.; Liu, B. An Ultralightweight Hybrid CNN Based on Redundancy Removal for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5506212.

- Lu, J.; Wang, R.; Zuo, G.; Zhang, W.; Jin, X.; Rao, Y. Enhancing CNN efficiency through mutual information-based filter pruning. Digit. Signal Process. 2024, 151, 104547.

- Zhang, H.; Liu, Y. BFP: Balanced filter pruning via knowledge distillation for efficient deployment of CNNs on edge devices. Neurocomputing 2025, 650, 130946.

- Srivastava, R.; Kumar, N.; Sandhan, T. Binary Classification of Laryngeal Images Utilising ResNet-50 CNN Architecture. Indian J. Otolaryngol. Head Neck Surg. 2025, 77, 644–651.

- Huang, C.; Sarabi, M.; Ragab, A.E. MobileNet-V2/IFHO model for Accurate Detection of early-stage diabetic retinopathy. Heliyon 2024, 10, e37293.

- Nirupama; Virupakshappa. MobileNet-V2: An Enhanced Skin Disease Classification by Attention and Multi-Scale Features. J. Imaging Inf. Med. 2025, 38, 1734–1754.

- Cheng, X.; Lu, F.; Liu, Y. Lightweight hybrid model based on MobileNet-v2 and Vision Transformer for human–robot interaction. Eng. Appl. Artif. Intell. 2024, 127, 107288.

- Arias-Serrano, I.; Velásquez-López, P.A.; Avila-Briones, L.N.; Laurido-Mora, F.C.; Villalba-Meneses, F.; Tirado-Espin, A.; Cruz-Varela, J.; Almeida-Galárraga, D. Artificial intelligence based glaucoma and diabetic retinopathy detection using MATLAB—Retrained AlexNet convolutional neural network. F1000Research 2023, 12, 14.

- Wei, W.; Tao, H.; Chen, W.; Wu, X. Automatic recognition of micronucleus by combining attention mechanism and AlexNet. BMC Med. Inf. Decis. Mak. 2022, 22, 138.

- Xie, C.; Zhai, X.; Chi, H.; Li, W.; Li, X.; Sha, Y.; Li, K. A Novel Fusion Pruning-Processed Lightweight CNN for Local Object Recognition on Resource-Constrained Devices. IEEE Trans. Consum. Electron. 2024, 70, 6713–6724.

- Xue, Y.; Yao, W.; Peng, S.; Yang, S.; Zhou, K. One-shot Hierarchical Global Pruning via Channel Relation-aware Attention. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

- Gao, S.; Zhang, Y.; Huang, F.; Huang, H. BilevelPruning: Unified Dynamic and Static Channel Pruning for Convolutional Neural Networks. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16090–16100.

- Sun, S.; Ren, W.; Li, J.; Wang, R.; Cao, X. Logit Standardization in Knowledge Distillation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15731–15740.

- Cheng, H.; Zhang, M.; Shi, J.Q. A Survey on Deep Neural Network Pruning: Taxonomy, Comparison, Analysis, and Recommendations. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10558–10578.

- Cai, Z.; Zhu, H.; Shen, Q.; Wang, X.; Cao, X. Batch Normalization Alleviates the Spectral Bias in Coordinate Networks. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 25160–25171.

- Zhang, L.; Zhang, J.; Li, Z.; Song, Y. A multiple-channel and atrous convolution network for ultrasound image segmentation. Med. Phys. 2020, 47, 6270–6285.

- Ma, Y.; Klabjan, D. Diminishing Batch Normalization. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 6544–6557.

- Habib, M.; Okayli, M. Evaluating the Sensitivity of Machine Learning Models to Data Preprocessing Technique in Concrete Compressive Strength Estimation. Arab. J. Sci. Eng. 2024, 49, 13709–13727.

- Nguyen, K.C.T.; Duong, D.Q.; Almeida, F.T.; Major, P.W.; Kaipatur, N.R.; Pham, T.T.; Lou, E.H.M.; Noga, M.; Punithakumar, K.; Le, L.H. Alveolar Bone Segmentation in Intraoral Ultrasonographs with Machine Learning. J. Dent. Res. 2020, 99, 1054–1061.

- Zhou, G.; Chen, Y.; Chien, C. On the analysis of data augmentation methods for spectral imaged based heart sound classification using convolutional neural networks. BMC Med. Inf. Decis. Mak. 2022, 22, 226.

- Mutar, M.T.; Majid, M.; Ibrahim, M.J.; Obaid, A.H.; Alsammarraie, A.Z.; Altameemi, E.; Kareem, T.F. Transfer learning with different modified convolutional neural network models for classifying digital mammograms utilizing Local Dataset. Gulf J. Oncol. 2023, 1, 66–71.

- Wang, X.; Ren, H.; Wang, A. Smish: A Novel Activation Function for Deep Learning Methods. Electronics 2022, 11, 540.

- Bates, S.; Hastie, T.; Tibshirani, R. Cross-Validation: What Does It Estimate and How Well Does It Do It? J. Am. Stat. Assoc. 2023, 119, 1434–1445.

- Darling, H.S. Do you have a standard way of interpreting the standard deviation? A narrative review. Cancer Res. Stat. Treat. 2022, 5, 728–733.

- Peng, H.; Yu, Y.; Yu, S. Re-Thinking the Effectiveness of Batch Normalization and Beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 465–478.

- Bao, Y.; Kang, G.; Yang, L.; Duan, X.; Zhao, B.; Zhang, B. Normalizing Batch Normalization for Long-Tailed Recognition. IEEE Trans. Image Process. 2024, 34, 209–220.

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).