1. Introduction

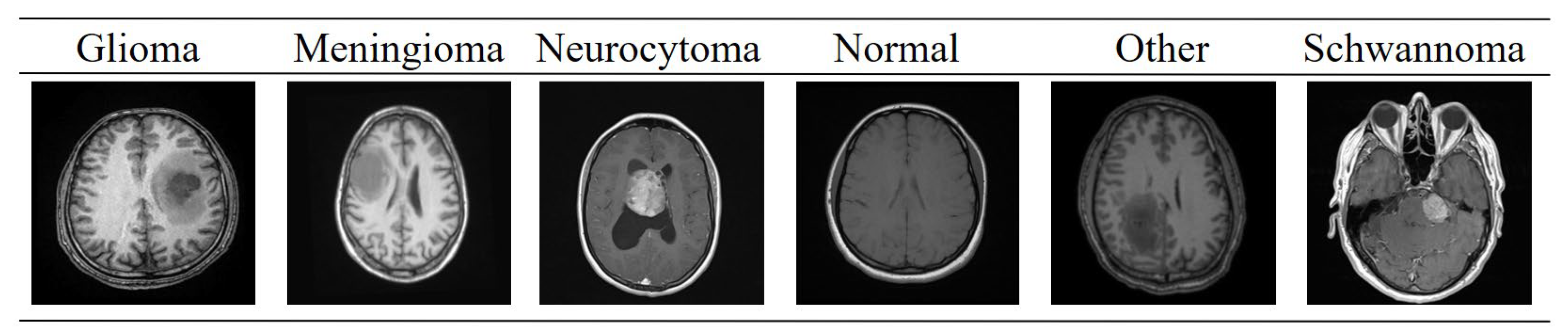

The growth of abnormal cells in the brain can lead to the formation of brain tumors, which are a major cause of morbidity and mortality worldwide. Gliomas, originating from glial cells, are broadly categorized into Higher Grade Gliomas (HGG) and Lower Grade Gliomas (LGG) [

1]. HGGs grow rapidly with extensive cellular infiltration, whereas LGGs exhibit slower progression. Accurate and timely diagnosis is crucial for determining the appropriate treatment strategy. Non-invasive imaging techniques such as Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), and Computed Tomography (CT) are widely used for tumor detection and monitoring. Among these, MRI has emerged as the preferred modality due to its superior ability to visualize soft tissue structures and provide high-resolution anatomical details [

2].

Manual segmentation of brain tumors in MRI images requires expert radiological knowledge and is prone to inter- and intra-observer variability. Automatic segmentation methods offer a reliable alternative by enabling the quantitative assessment of the tumor size, location, morphology, and grade [

3]. However, brain tumors, particularly gliomas, present significant challenges for segmentation due to their diffuse growth patterns, poor contrast, and intensity heterogeneity in MRI scans. Early approaches for MRI segmentation relied on classical image processing techniques, such as region growing, clustering-based methods, and watershed algorithms. Despite their utility, these methods are sensitive to noise and artifacts, limiting their overall effectiveness [

4].

Recent advances in Deep Learning (DL) have demonstrated remarkable performance in brain tumor segmentation by learning complex hierarchical features from MRI data [

5]. Convolutional Neural Networks (CNNs) and Fully Convolutional Networks (FCNs), including models such as U-Net [

6], DenseNet121 [

7], Xception [

8], Deep Neural Networks (DNNs) [

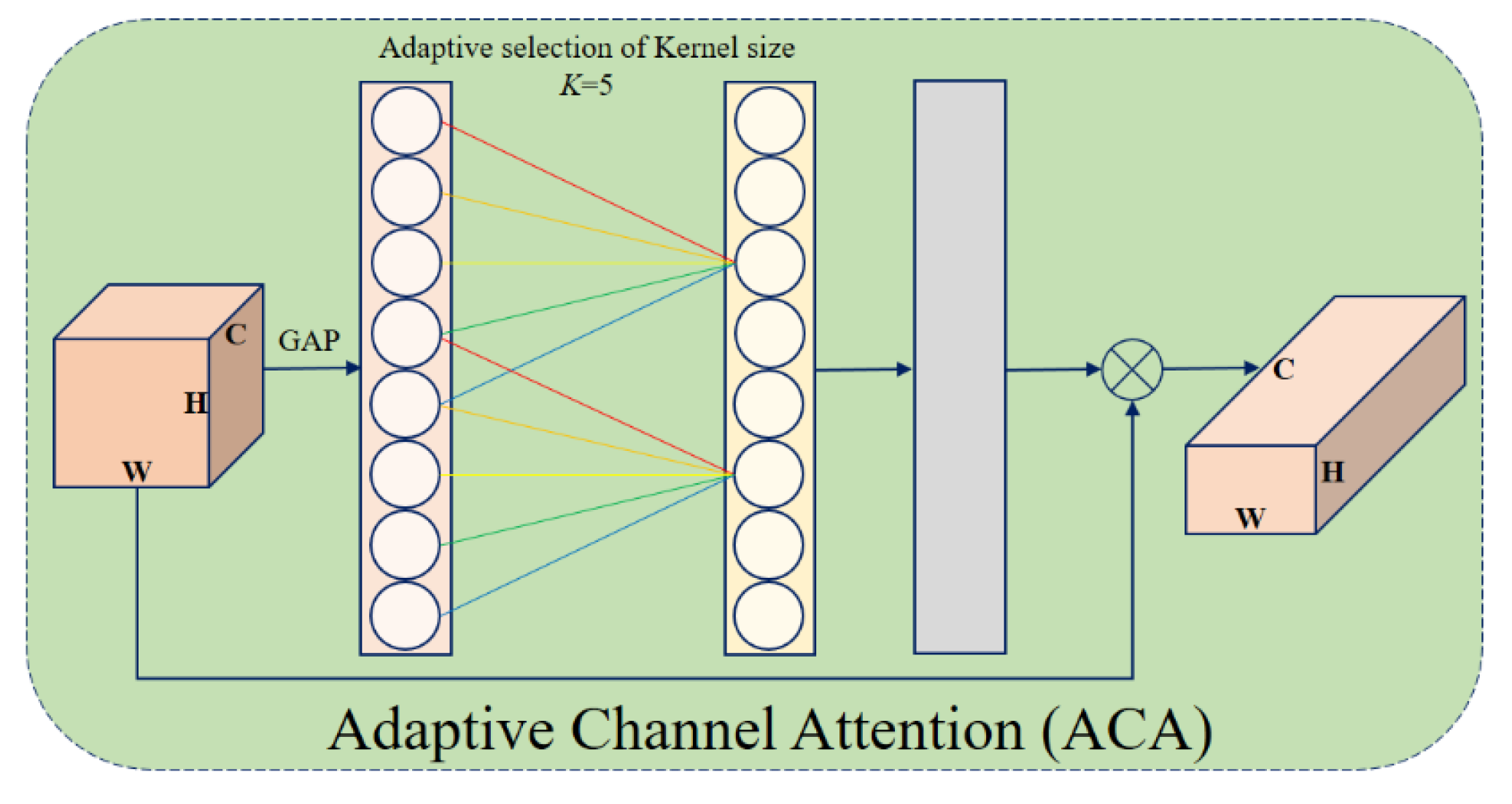

9], and their variants, have been successfully applied to this task, showing substantial improvements over traditional techniques. Building upon these advances, attention mechanisms such as Adaptive Channel Attention (ACA) [

10] and Triplet Attention [

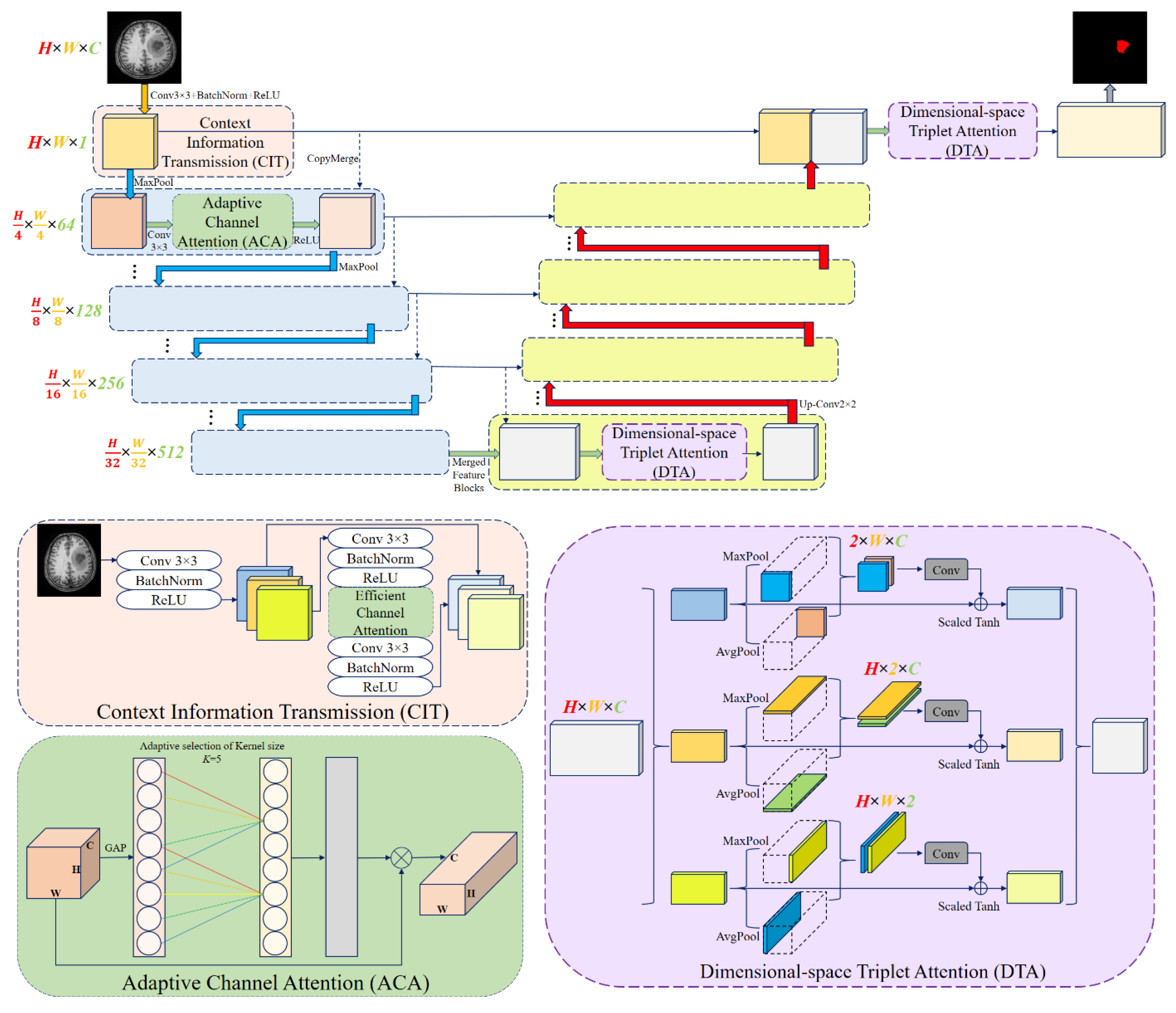

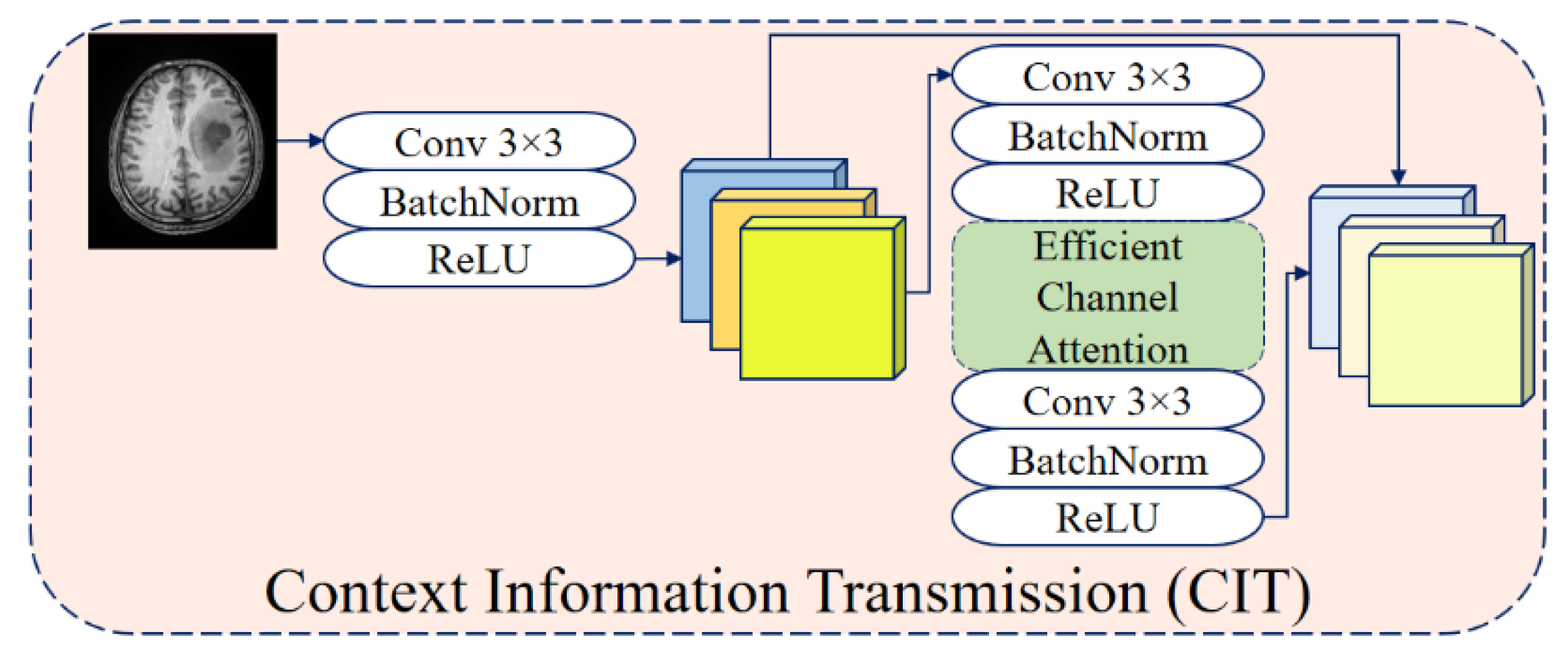

11] have been employed to enhance feature representation and multi-scale contextual understanding. Inspired by these developments, this study proposes Attention Res-UNet (ARU-Net), an improved U-Net-based [

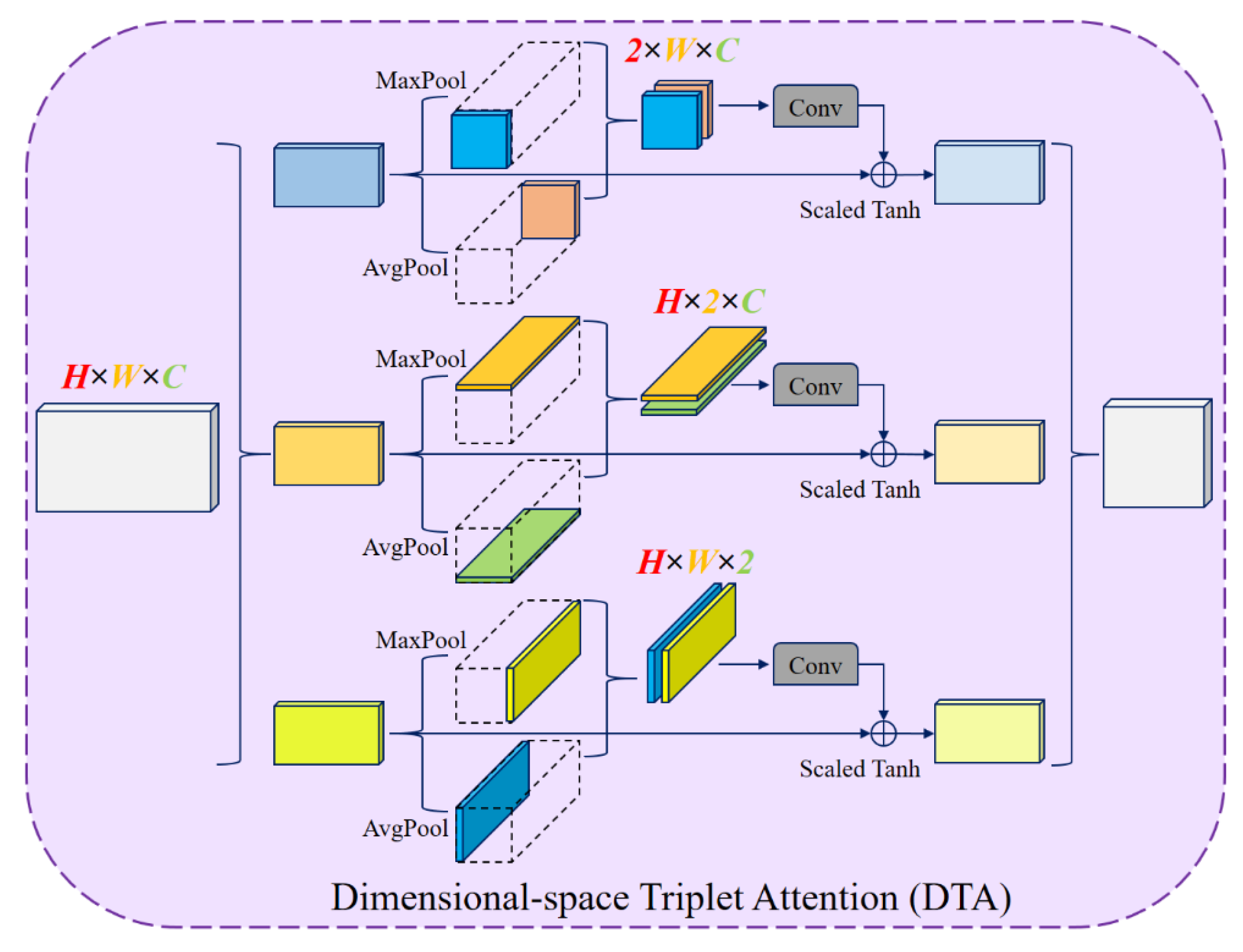

12] architecture incorporating residual connections, ACA, and Dimensional-space Triplet Attention (DTA) [

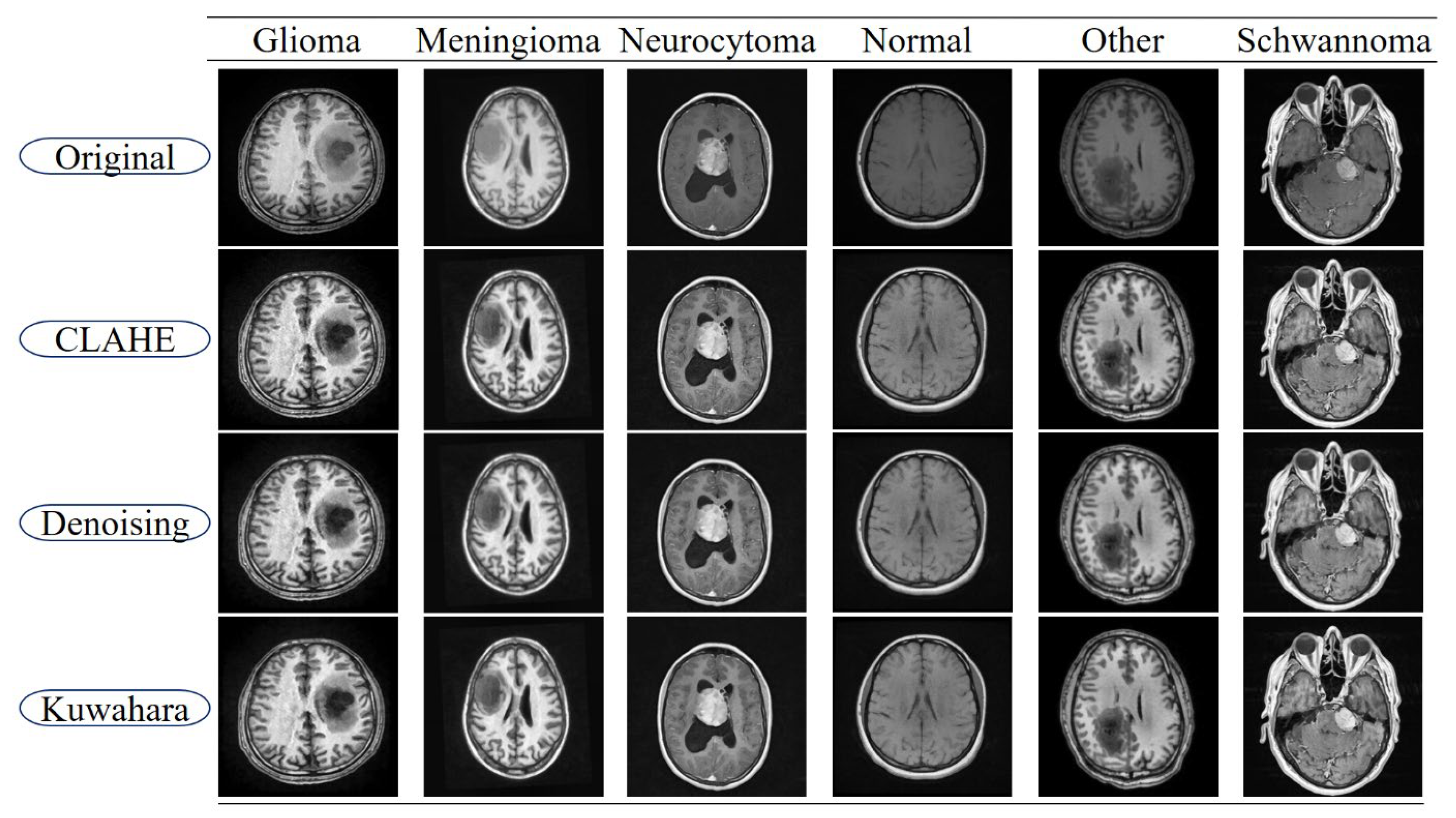

13] modules. Accurate tumor identification and segmentation are made possible by the network’s design, which maximizes local cross-channel interactions while reducing the model complexity. Furthermore, the study emphasizes the importance of pre-processing steps, such as Contrast Limited Adaptive Histogram Equalization (CLAHE), denoising, and Linear Kuwahara filtering, to enhance image quality, improve feature extraction, and facilitate more accurate segmentation [

14]. The main contributions of this paper are summarized as follows:

Pre-processing effectiveness: We demonstrate that applying CLAHE, denoising, and Linear Kuwahara filtering significantly enhances the MRI image quality and directly improves the segmentation performance across multiple tumor classes.

Novel ARU-Net architecture: We propose a U-shaped deep neural network that integrates residual connections with dual attention modules to better capture both channel and spatial dependencies.

Adaptive Channel Attention (ACA) in the encoder: Applied to the lower convolutional and residual layers, ACA improves the feature refinement and strengthens the representation learning.

Dimensional-space Triplet Attention (DTA) in the decoder: Applied to the upper convolutional layers, DTA enables more effective extraction and fusion of multi-scale features, leading to smoother and more accurate tumor boundaries.

Comprehensive evaluation: Experimental results on the BTMRII dataset demonstrate that ARU-Net consistently outperforms U-Net, DenseNet121, and Xception, achieving superior quantitative metrics and qualitative segmentation quality.

The remainder of the paper is organized as follows:

Section 2 reviews the related DL approaches for brain tumor segmentation.

Section 3 details the methodology, including the network architecture and component descriptions.

Section 4 presents the experimental results, ablation studies, and comparative analyses.

Section 5 discusses the experimental results and explains the limitations of the proposed method. Finally,

Section 6 concludes the study and discusses future research directions.

2. Related Works

Brain tumor segmentation has long been a critical research area in medical image analysis due to its importance in clinical decision-making. Early studies were dominated by classical image processing techniques such as region-growing [

15], clustering-based approaches [

16], and watershed algorithms [

17]. However, these methods were highly sensitive to noise, low contrast, and heterogeneous tumor appearance in MRI scans [

18], limiting their accuracy, particularly for complex glioma structures [

19].

With the advent of deep learning, CNN-based methods have rapidly replaced traditional approaches, achieving superior segmentation performance [

20]. Several architectures have extended the U-Net by incorporating residual learning and advanced modules. Examples include cascaded residual multi-scale convolutions [

21], multi-scale contextual attention [

22], multimodal spatial-boundary integration [

23], and deep residual encoders within U-Net [

24]. Other works, such as ResUNet-a [

25] and EffUNet++ [

26], enhanced feature fusion and skip connections, while hybrid models like 3DUV-NetR+ [

27] combined U-Net, V-Net, and Transformer encoders for richer contextual representation. These CNN-based approaches demonstrate the potential of hierarchical feature extraction but often face challenges in balancing computational complexity and segmentation detail.

Attention-based CNN extensions have also shown strong performance. Khorasani et al. embedded pre-trained backbones into U-Net for glioma subregion segmentation [

28], while DTASUnet [

29] applied dual-transformer attention to capture 3D volumetric context. Multi-task frameworks, such as the ensemble model by Wen et al. [

30], combined segmentation with tumor grading, and modality-specific studies [

31] highlighted the critical impact of input MRI sequences on accuracy.

More recently, Transformer and Mamba-based designs have gained attention. The SLCA-UNet [

32] incorporated spatial and local channel attention into the U-Net, while the Adaptive Cascaded Transformer U-Net [

33] employed cascaded transformer blocks for long-range dependency modeling. Wang et al. proposed S

3-Mamba [

34], optimized for small tumor segmentation, and Zhang et al. introduced the Edge-Interaction Mamba Network [

35] for refined boundary delineation. These works emphasize global context modeling and edge-sensitive learning, offering strong performance in heterogeneous tumor cases.

Overall, CNNs and FCNs have laid the foundation for modern brain tumor segmentation [

5,

6,

7,

8,

36], while recent efforts have focused on integrating attention mechanisms [

37,

38,

39], residual learning [

40], and hybrid architectures [

41]. Despite these advances, multi-scale tumor morphology and fine-grained boundaries remain challenging [

42]. Motivated by these limitations, the proposed ARU-Net integrates residual connections with dual attention mechanisms and enhanced pre-processing to achieve robust segmentation performance on the BTMRII dataset.

4. Experimental Results

In order to evaluate the effectiveness of the proposed model, a series of experiments were conducted under a controlled computational environment. The training and testing processes were implemented in Python 3.10 using the PyTorch 2.1.0 framework, running on a workstation equipped with an Intel Core i7 4.70 GHz processor, an NVIDIA RTX 4060Ti GPU, and 32 GB of RAM. During training, a batch size of six samples was employed to stabilize the gradient updates, while the Adam optimizer was adopted to achieve efficient convergence. In the pre-processing stage, all images from the BTMRII dataset were resized to ensure uniformity across the samples. To guarantee a fair evaluation, the dataset was randomly divided into three independent subsets: 60% for training, 20% for validation, and 20% for testing, following a 6:2:2 ratio. The results obtained from this setup are presented and analyzed in the subsequent subsections, with comparisons drawn against the related works in the literature.

In addition to the proposed framework, several well-established DL architectures were also trained for comparative analysis. DenseNet121 and Xception models were implemented using the TensorFlow/Keras 2.15 environment. The input resolution was set to 224 × 224 for DenseNet121 and 299 × 299 for Xception, respectively. Both models employed the Adamax optimizer (learning rate = 0.001), along with an early stopping strategy (patience = 10, based on validation accuracy) to prevent overfitting. The best-performing checkpoints were stored during training for subsequent evaluation. For the U-Net configuration, the network was structured as a segmentation-based classifier, where the encoder backbone was initialized with EfficientNetB0. The input resolution was set to 224 × 224, and training was performed using the Adamax optimizer (learning rate = 1 × 10−4). To enhance the training stability, the encoder weights were frozen in the initial stages before fine-tuning. As with the classification models, the training and validation losses and accuracies were monitored, while the performance was further evaluated using classification reports, confusion matrices, and multi-class ROC curves generated on the test set. Both the best-performing models (based on validation metrics) and the final trained models were preserved. The proposed ARU-Net architecture was developed and trained within the PyTorch/timm framework. For this model, an input resolution of 256 × 256 was selected, and the Adam optimizer (learning rate = 1 × 10−4) was used during training. Unlike the baseline models, the checkpoint selection criterion for ARU-Net was based on the validation weighted F1-score, enabling a more balanced performance assessment across multiple tumor classes. For the final evaluation, 20% of the BTMRII dataset was reserved as the independent test set, ensuring that no data leakage occurred between training and evaluation. This subset contained representative samples from all classes, thereby enabling a balanced assessment of classification and segmentation performance. Specifically, the test set consisted of 258 Glioma, 260 Meningioma, 108 Neurocytoma, 113 Normal, 51 Other, and 94 Schwannoma classes. The inclusion of multiple tumor subtypes in the evaluation phase ensured that the models were validated under diverse clinical scenarios, reflecting both common and relatively rare brain tumor categories.

In this study, DenseNet121, Xception, U-Net, and the proposed ARU-Net were selected for evaluation based on their complementary strengths and established performance in medical image analysis. DenseNet121, with its densely connected convolutional blocks, enables efficient feature reuse and mitigates vanishing gradient problems, making it a strong candidate for extracting discriminative features from MRI data. Xception, on the other hand, leverages depthwise separable convolutions to capture fine-grained spatial features while maintaining computational efficiency, which is particularly advantageous for high-resolution medical imaging tasks. For segmentation-oriented classification, U-Net was incorporated due to its widespread success in biomedical image segmentation, where the encoder–decoder structure and skip connections allow precise localization of tumor regions. To further advance this line of research, the proposed ARU-Net model was developed, integrating architectural refinements and an optimized training strategy to better handle class imbalance and heterogeneous tumor morphology. The inclusion of these models provided both baseline comparisons with well-established DL architectures and a platform to demonstrate the added value of the proposed ARU-Net in brain tumor classification and segmentation.

To address the class imbalance within the BTMRII dataset, we employed two complementary strategies. First, we utilized a class-weighted categorical cross-entropy loss function, where higher weights were assigned to underrepresented classes. This adjustment penalized misclassification of rare tumor types more strongly, guiding the model toward improved balance across categories. Second, a balanced mini-batch sampling procedure was adopted to ensure that each training batch contained approximately equal representation from both majority and minority tumor classes. This reduced the risk of biased learning toward dominant categories. During the training phase, categorical cross-entropy loss was employed as the objective function for all classification-based models, including DenseNet121, Xception, U-Net, and the proposed ARU-Net. Cross-entropy is widely recognized as a suitable loss function for multi-class classification problems, as it measures the dissimilarity between the true label distribution and the predicted probability distribution [

55]. Penalizing incorrect predictions more strongly enables stable convergence and effective optimization when combined with gradient-based learning. For the proposed ARU-Net, enhanced from the U-Net architecture, which was adapted as a segmentation-oriented classifier in this study, cross-entropy loss was also adopted to maintain consistency in evaluation and to ensure comparability across models. Its mathematical formula is given in Equation (11):

Here, the total number of samples is defined by

N,

C defines the total number of classes,

y defines the true label, and the probability that it is predicted by the model. To comprehensively assess the segmentation performance of the proposed ARU-Net model, multiple evaluation metrics were employed. These include the accuracy, which is given in Equation (12); the precision, which is given in Equation (13), the recall, which is given in Equation (14); the F1-score, which is given in Equation (15) [

56]; the IoU, which is given in Equation (16); and the DSC, which is given in Equation (17) [

57]. Each of these metrics provides complementary insights into the effectiveness of the segmentation by quantifying different aspects of the overlap between the predicted and ground truth regions. Their mathematical definitions are given as follows:

Here, TP (True Positive), TN (True Negative), FP (False Positive), and FN (False Negative) denote the fundamental components of the confusion matrix. While the IoU and DSC focus on evaluating the overlap between the predicted and true tumor regions, the precision and recall quantify the model’s ability to correctly detect positive samples without excessive false alarms. The accuracy gives an overall measure of the classification correctness, whereas the F1-score balances the precision and recall, making it especially useful in the presence of class imbalance.

Performance Analysis

To systematically compare the effectiveness of different DL approaches in brain tumor segmentation, a series of experiments were conducted using the BTMRII dataset, and the corresponding results are organized into comprehensive tables and visualizations.

Table 1 presents a detailed summary of eight evaluation metrics including the accuracy, precision, recall, F1-score, IoU, DSC, GFlops, and the number of parameters (Param) obtained from four state-of-the-art DL-based methods. These metrics collectively provide both computational and predictive performance insights, enabling a thorough assessment of each model. To visually illustrate the performance differences among the evaluated methods, representative segmentation results are provided, demonstrating the models’ ability to delineate tumor boundaries accurately. Additionally, to validate the quality and reliability of the segmentation outcomes, various diagnostic tools were employed, including confusion matrices, accuracy and loss curves, and multiclass ROC curves, which further highlight the precision and robustness of the segmented regions across different tumor categories. Furthermore, to assess the effectiveness of the pre-processing pipeline applied to the BTMRII dataset,

Table 1 also includes comparisons between the segmentation results obtained from the original images and those pre-processed through CLAHE, denoising, and Linear Kuwahara filtering. This comparative analysis provides a clear demonstration of how the pre-processing steps enhance the model performance and improve the quality of tumor delineation.

In this study, the performance of four DL models, DenseNet121, Xception, U-Net, and the proposed ARU-Net, was systematically evaluated on the BTMRII dataset using both original (raw) and pre-processed MRI images.

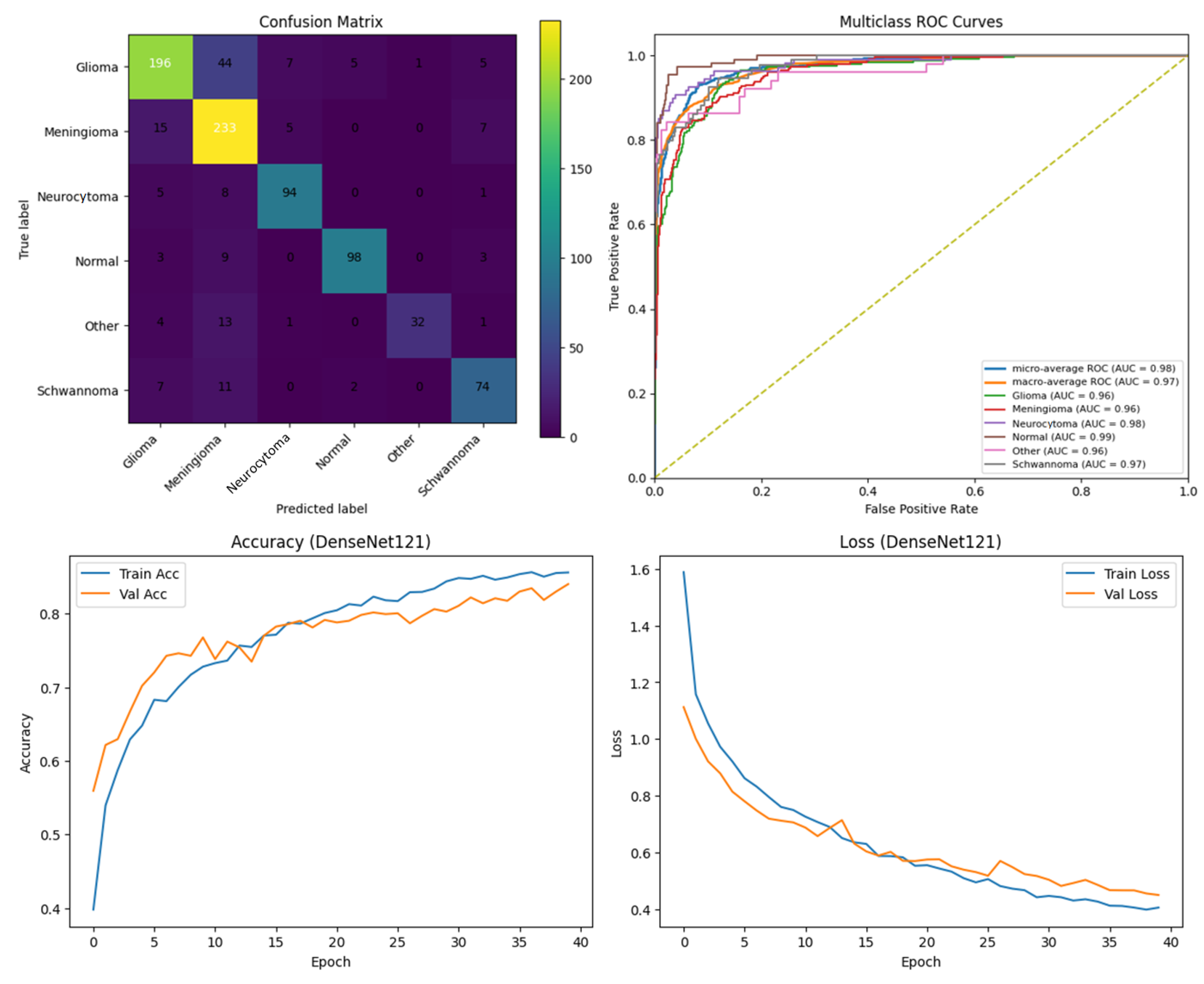

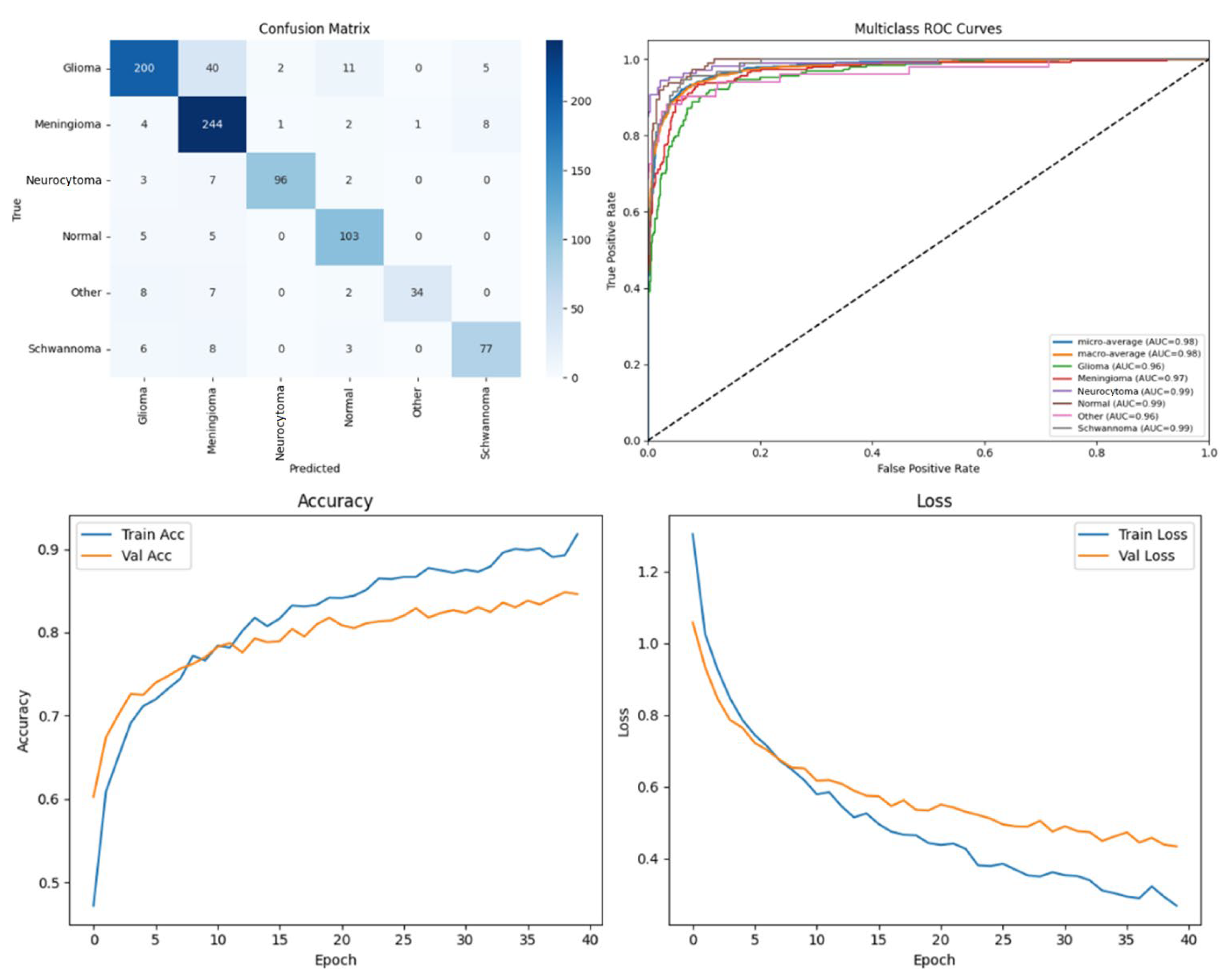

Table 1 summarizes the comparative performance metrics. The experimental results indicate a consistent improvement in segmentation and classification performance when pre-processing techniques (CLAHE, denoising, and Linear Kuwahara filtering) were applied. For instance, DenseNet121 achieved an accuracy increase from 77.5% on the raw images to 82.2% on the pre-processed images, accompanied by improvements in the precision (82.0–86.3%) and F1-score (78.4–82.4%). Similarly, Xception showed a gain in accuracy from 81.0% to 85.3%, and U-Net improved from 90.0% to 93.9%. The ARU-Net model, which already demonstrated the highest baseline performance, benefited further from pre-processing, achieving 98.3% accuracy compared to 96.0% on the raw images. These results clearly demonstrate that appropriate pre-processing enhances the feature contrast, reduces noise, and preserves structural details, which collectively contribute to more accurate segmentation and classification outcomes.

U-Net also performed strongly, achieving 93.9% accuracy on pre-processed images, which underscores the advantage of encoder–decoder architectures for pixel-wise segmentation tasks. DenseNet121 and Xception, while primarily designed for image-level classification, achieved reasonable performance improvements with pre-processing but were outperformed by U-Net and ARU-Net in metrics sensitive to spatial segmentation quality (IoU and DSC).

To evaluate the computational efficiency of each network, the training time per epoch was measured on an NVIDIA RTX 4060Ti GPU using a batch size of 8. This metric complements the reported accuracy and model complexity (GFlops and parameter count) by providing practical insights into the relative training speed of each architecture.

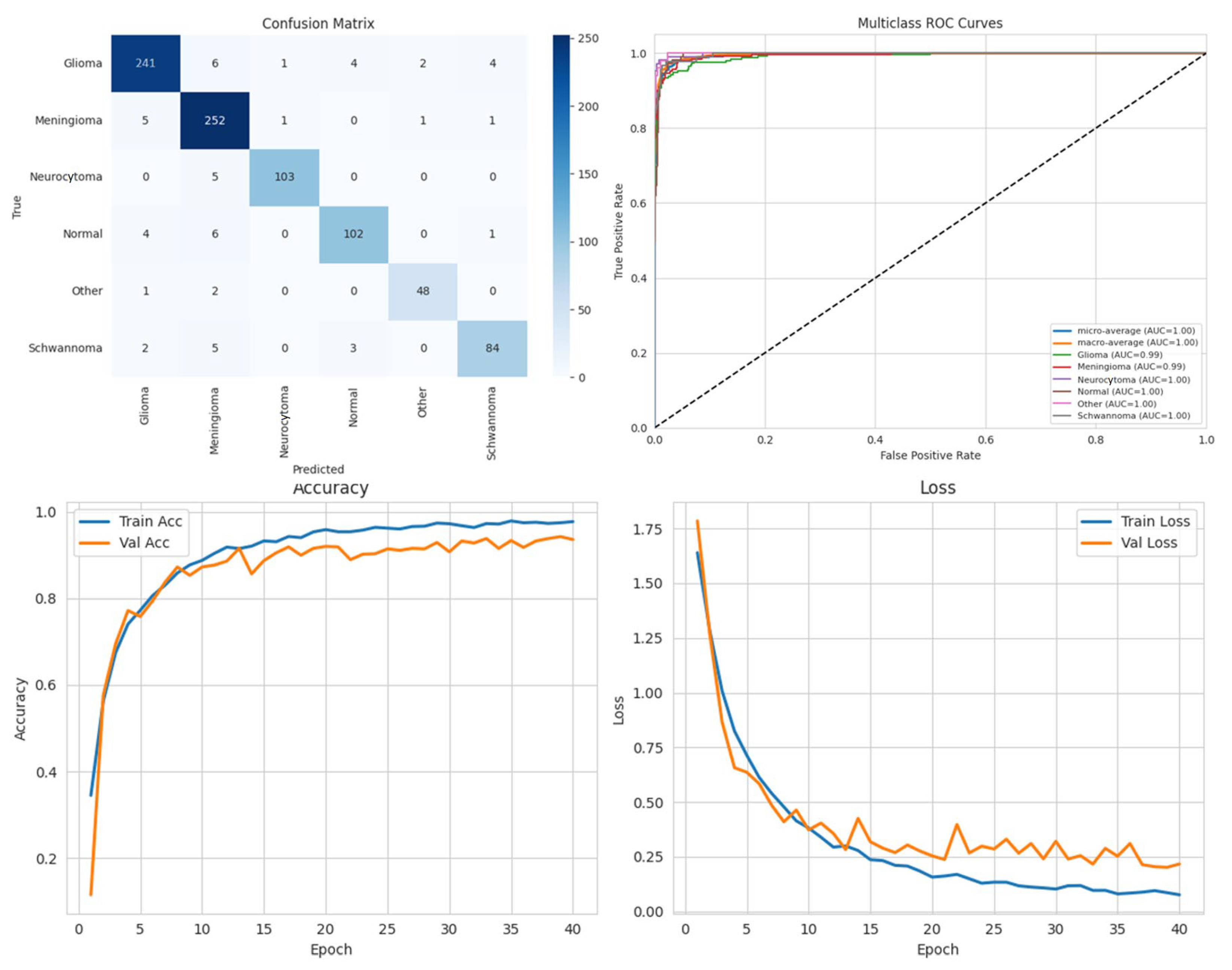

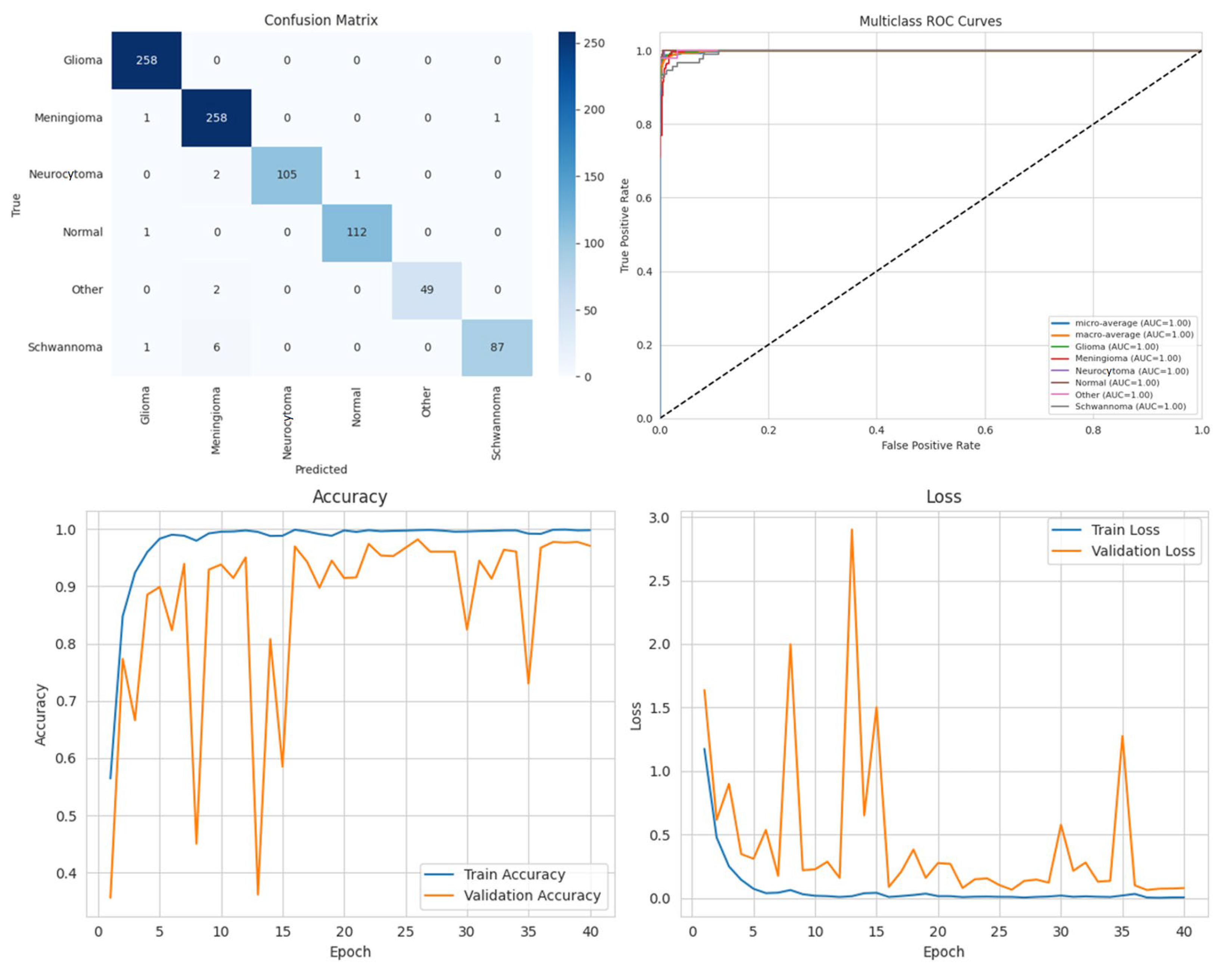

Among the four models, ARU-Net consistently outperformed the others across all metrics and dataset types. On the pre-processed images, ARU-Net achieved the highest precision (99.0%), recall (95.7%), F1-score (98.1%), IoU (96.3%), and DSC (98.1%), while maintaining moderate computational complexity (788.4 GFlops) and a manageable number of parameters (30.45 M). This demonstrates the efficacy of its attention-based architecture and EfficientNetB0 encoder in capturing multi-scale contextual information. Confusion matrices, accuracy and loss curves, and multiclass ROC curves of the pre-processed experiment results of the BTMRII dataset for four different models are shown in

Figure 7,

Figure 8,

Figure 9,

Figure 10 and

Figure 11.

Comparing the models on the raw versus pre-processed images highlights the importance of data enhancement strategies. All models exhibited lower performance on the raw images, particularly in IoU and DSC metrics, which are directly related to the segmentation quality. For example, DenseNet121’s IoU decreased from 70.3% (pre-processed) to 65.0% (raw), and ARU-Net’s DSC decreased from 98.1% to 95.0%. These differences suggest that pre-processing not only improves the classification accuracy but also enables models to better delineate tumor boundaries, which is critical for clinical applicability.

To mitigate the potential overfitting observed in the training curves spanning 0 to 40 epochs (plotted in increments of 5), early stopping was applied based on validation loss with a patience of 10 epochs. Additionally, the input images were normalized, and the pre-processing steps including CLAHE, denoising, and Linear Kuwahara filtering were used to enhance the feature quality. A mini batch size of eight provided balanced gradient stability with model generalization.

The comparative analysis also includes the computational efficiency. While U-Net has higher GFlops (796.5) due to its encoder–decoder design, ARU-Net achieves superior performance with lower computational cost (788.4 GFlops), demonstrating a favorable balance between accuracy and efficiency. DenseNet121 and Xception, although deeper and parameter-heavy, did not achieve comparable segmentation metrics, indicating that the model architecture and suitability for segmentation tasks are as important as the model depth and parameter count.

Overall, these results emphasize three key points: (i) the application of pre-processing significantly enhances the model performance by improving the image quality and tumor visibility; (ii) attention-based encoder–decoder architectures such as ARU-Net are particularly effective for multi-class brain tumor segmentation; (iii) computationally efficient models can achieve a high segmentation performance without excessive parameter overhead, which is crucial for practical deployment in clinical settings. In conclusion, the combination of pre-processing strategies and carefully designed DL architectures can substantially improve automated brain tumor segmentation, enabling more accurate diagnosis and treatment planning.

In this section, we present the experimental results obtained from the benchmark models (DenseNet121, Xception, and U-Net) and the proposed ARU-Net architecture. To comprehensively evaluate the performance, confusion matrices, multiclass ROC curves, and accuracy/loss curves were generated for each model. In addition, the quantitative performance metrics reported in

Table 1 are supported by qualitative segmentation visualizations, enabling a holistic comparison across models.

Table 1 summarizes the comparative performance of all models on both the original and pre-processed BTMRII datasets. As expected, the pre-processing pipeline comprising CLAHE enhancement, denoising, and Kuwahara filtering yielded significant improvements across all evaluation metrics. The proposed ARU-Net consistently outperformed the other models, achieving the highest accuracy (98.3%), F1-score (98.1%), and DSC (98.1%) on the pre-processed BTMRII dataset. Notably, ARU-Net also maintained superior performance when trained on raw unprocessed data, where it still outperformed U-Net, DenseNet121, and Xception by a considerable margin.

The observed volatility in validation loss and accuracy in

Figure 10 may be attributed to the moderate batch size of eight, the complexity of the ARU-Net architecture, and the heterogeneity of the BTMRII dataset. To address this instability, the learning rate was carefully tuned to 1 × 10

−4 with the Adam optimizer, and early stopping based on validation loss was employed. Additionally, pre-processing steps such as CLAHE, denoising, and Linear Kuwahara filtering improved the input consistency, helping to stabilize the training and enhance the generalization.

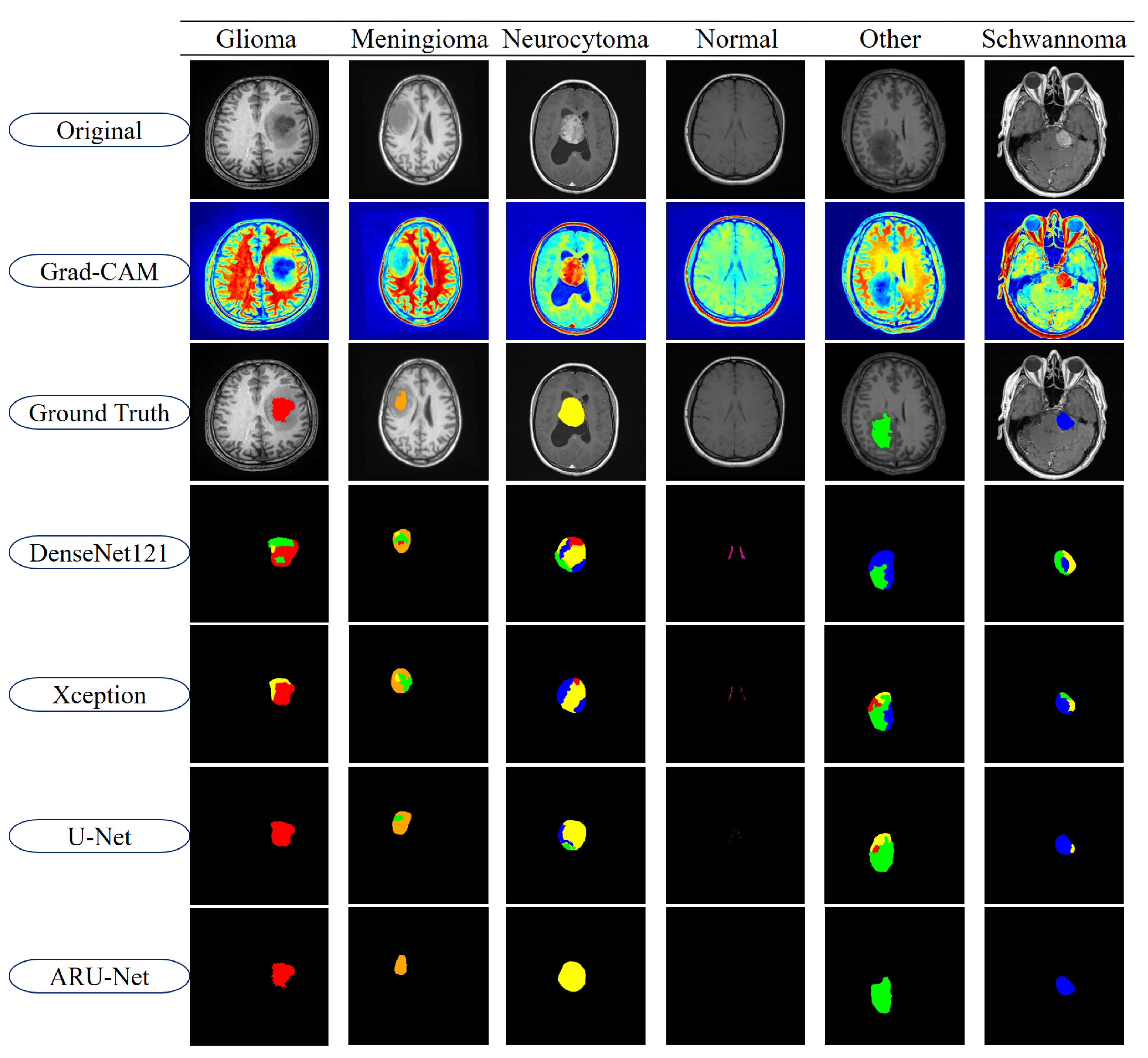

To further highlight the differences between models, segmentation outputs are visualized in

Figure 11. The figure displays representative examples from each of the six tumor classes in the BTMRII dataset. For each case, the original input, Grad-CAM heatmaps, ground truth annotations, and segmentation predictions from DenseNet121, Xception, U-Net, and ARU-Net are shown. The color-coded masks illustrate class-specific tumor regions and highlight discrepancies between the model predictions and ground truth boundaries.

As illustrated in

Figure 11, DenseNet121 and Xception exhibited noticeable misclassifications and boundary inconsistencies, particularly in glioma and meningioma cases. These models frequently produced incomplete or noisy segmentations, leading to reduced reliability in clinical applications. U-Net demonstrated considerably stronger segmentation capabilities, successfully capturing most tumor structures with relatively smoother boundaries. However, minor deviations, including boundary misalignments and occasional regional misclassifications, were observed in classes such as Meningioma and Other. In contrast, the proposed ARU-Net model achieved highly accurate and robust segmentation across all tumor classes. Its predictions were characterized by smooth contours, precise tumor boundaries, and minimal false classifications, even in challenging cases where other models failed. ARU-Net’s enhanced attention mechanism allowed it to adapt effectively to intra-class variability, providing superior generalization and stability. This advantage was particularly evident in complex tumor regions, where ARU-Net closely replicated the ground truth shapes without over-segmentation or under-segmentation.

Overall, these findings confirm that ARU-Net not only improves upon traditional CNN-based architectures but also extends the capabilities of U-Net in both accuracy and robustness. The integration of cross-dimensional attention and residual connections in ARU-Net enables more discriminative feature representation, contributing to its superior segmentation quality. This strong performance across both quantitative metrics and qualitative evaluations underscores ARU-Net’s potential as a reliable model for automated brain tumor segmentation in clinical practice.

As shown in

Table 2, ablation experiments were conducted on the BTMRII dataset to assess the contribution of each proposed module to the overall segmentation performance. The baseline U-Net model achieved an accuracy of 93.9%, a precision of 94.3%, an IoU of 86.6%, and a DSC of 93.7%. However, it showed limitations in segmenting tumors with irregular boundaries and heterogeneous structures. After integrating the residual module (U-Net + Res), the model’s performance significantly improved across all metrics, with the DSC increasing from 93.7% to 95.6% (a relative gain of +1.9%). This demonstrates that the residual module enhances the network’s feature extraction capability and improves its ability to capture fine boundary details, thereby achieving more complete tumor segmentation. Adding the ACA module (U-Net + Res + ACA) provided a further boost, increasing the DSC to 97.0% and IoU from 91.6% to 94.3% (a gain of +2.7%), which highlights the effectiveness of ACA in refining channel attention, allowing the model to focus on the most relevant features and suppress redundant information. Despite these improvements, minor segmentation errors remained in certain fine-grained regions. Finally, by incorporating the DTA module (ARU-Net), the model achieved the best overall performance, with an accuracy of 98.3%, DSC of 98.1%, and IoU of 96.3%. These results confirm that the DTA further strengthens the model’s contextual understanding by effectively integrating multi-scale information, thereby leading to the most robust and accurate segmentation performance among all variants.

5. Discussion

The results demonstrate that ARU-Net provides notable advantages over traditional U-Net and other deep learning architectures. The consistent performance gains indicate that residual connections effectively mitigate gradient degradation, while ACA and DTA modules enhance multi-scale feature extraction and channel–spatial dependency modeling. These findings align with recent studies that have emphasized the role of attention mechanisms in refining segmentation accuracy [

21,

22,

23,

24,

25,

26,

27]. Furthermore, the visual segmentation improvements observed in boundary delineation highlight ARU-Net’s potential clinical utility, as precise contours are critical for treatment planning. However, the performance variations across tumor classes also reflect the challenges posed by class imbalance, suggesting the need for future work incorporating balanced sampling or advanced augmentation strategies.

Although our framework does not include a separate feature selection or classifier stage, the impact of each architectural component was assessed through ablation studies. Specifically, residual connections, ACA, and DTA modules were incrementally added to the baseline U-Net, and their respective contributions to segmentation performance were quantified. This provides a clear understanding of how each design choice contributes to the overall effectiveness of ARU-Net.

One limitation of this study is the inherent class imbalance present in the BTMRII dataset, where certain tumor types are significantly underrepresented. To mitigate this issue, we adopted balanced mini-batch sampling and a class-weighted loss function rather than applying extensive data augmentation. This choice was made to preserve the original distributional characteristics of the dataset while still preventing bias toward the majority classes. Although effective in improving segmentation consistency across categories, future work could further investigate advanced imbalance handling strategies, such as focal loss or synthetic data generation, to enhance robustness.

While the BTMRII dataset provides high-quality manual annotations, one limitation is that intra-tumoral heterogeneity (e.g., necrosis, edema, enhancing regions) was not separately annotated. Instead, tumors were annotated as whole regions within their respective categories. This choice simplifies the segmentation task and ensures inter-rater consistency across a large dataset, but it does not fully capture the biological complexity of tumor sub-regions. Future work could leverage datasets such as BraTS, where multi-label annotations of tumor sub-structures are available, to extend ARU-Net for more fine-grained clinical applications.

Recent studies have also highlighted the effectiveness of integrating advanced deep learning strategies to enhance medical image segmentation. For instance, Sharif et al. demonstrated that hybrid convolutional recurrent architectures can significantly improve the modeling of spatial and contextual dependencies in medical images [

58]. Similarly, Khalil et al. proposed an attention-guided residual network that leverages channel and spatial information to achieve more accurate delineation of tumor regions [

59]. In addition, Khan et al. emphasized the importance of robust U-Net variants and demonstrated their capability to generalize effectively across diverse tumor subtypes [

60]. These findings are consistent with our results, as the proposed ARU-Net also benefits from residual and attention mechanisms, confirming their value in capturing heterogeneous tumor structures and improving segmentation performance.

In comparison with recent advanced architectures, the proposed ARU-Net demonstrates distinct advantages in multi-class brain tumor segmentation. For instance, the SLCA-UNet [

32] and Adaptive Cascaded Transformer U-Net [

33] primarily focus on integrating transformer modules to capture global contextual information, which can improve the segmentation of large homogeneous regions but may struggle with fine-grained tumor boundaries or highly heterogeneous tumor classes. Similarly, S

3-Mamba [

34] and the Edge-interaction Mamba Network [

35] leverage Mamba-based attention to enhance feature representation; however, their evaluation predominantly targets lesion sensitivity or glioma-specific datasets. In contrast, ARU-Net integrates residual connections, Adaptive Channel Attention (ACA), and Dimensional-space Triplet Attention (DTA) within a U-Net framework, explicitly designed to capture both channel-wise and spatial dependencies across multi-scale features. Experimental results on the BTMRII dataset show that ARU-Net not only achieves superior quantitative metrics (accuracy: 98.3%, DSC: 98.1%, IoU: 96.3%) compared to baseline U-Net, DenseNet121, and Xception but also provides smoother and more precise tumor boundary delineation across six heterogeneous tumor types. These findings suggest that, while transformer and Mamba-based models excel in capturing global contextual or edge-focused information, ARU-Net offers a more balanced approach by combining robust attention mechanisms with residual connections, effectively enhancing both segmentation accuracy and generalization across diverse tumor classes.

In conclusion, the experimental results demonstrate that ARU-Net delivers outstanding performance in brain tumor segmentation. Compared to the traditional U-Net and other attention-based variants, ARU-Net not only enhances the segmentation accuracy but also shows superior generalization capability. This establishes ARU-Net as an effective and practical solution for automated brain tumor image analysis, making it a strong candidate for clinical applications. Nevertheless, future work should focus on expanding evaluations to broader tumor types and larger datasets, with particular attention to addressing potential information loss in complex tumor shapes.

6. Conclusions

Brain tumors are among the most critical and life-threatening medical conditions, making accurate and automated segmentation of tumor regions in MRI scans essential for clinical diagnosis and treatment planning. In this study, we proposed ARU-Net, a novel DL architecture combining residual connections, ACA, and DTA modules to enhance the segmentation accuracy and robustness. The pre-processing pipeline, incorporating CLAHE, denoising, and Linear Kuwahara filtering, proved effective in improving the image quality, enhancing the feature clarity, and facilitating precise boundary delineation. Quantitative results on the BTMRII dataset show that ARU-Net significantly outperforms conventional methods, achieving 98.3% accuracy, 98.1% DSC, 96.3% IoU, and superior F1-scores, compared to DenseNet121, Xception, and U-Net. Moreover, visualizations of tumor regions demonstrate that ARU-Net provides smoother boundaries, more accurate contour delineation, and clearer differentiation of heterogeneous tumor structures than the other models. These findings confirm that the proposed architecture, combined with effective pre-processing, leads to highly reliable and precise tumor segmentation. Overall, ARU-Net presents a robust practical solution for automated brain tumor image analysis, contributing novel methodological insights to the literature and exhibiting strong potential for clinical application. Future work will focus on extending evaluations to more diverse tumor subtypes and larger datasets, while addressing challenges related to complex tumor morphology and information loss in segmentation.