Episode- and Hospital-Level Modeling of Pan-Resistant Healthcare-Associated Infections (2020–2024) Using TabTransformer and Attention-Based LSTM Forecasting

Abstract

1. Introduction

1.1. Antimicrobial Resistance in ICUs

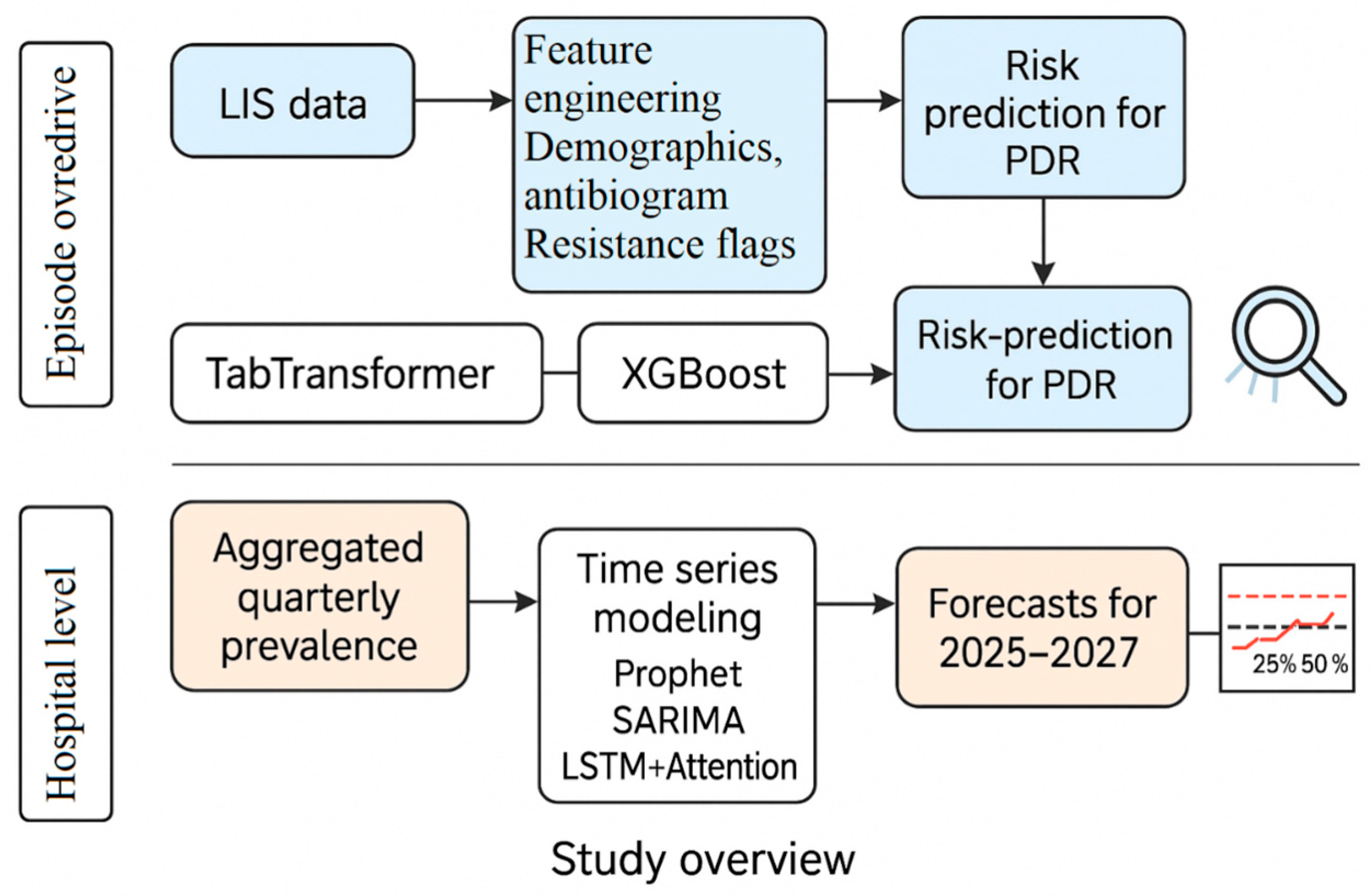

1.2. The Need for Dual-Scale Modeling

- Hospital-Level Forecasting: Infection control teams and administrators require forward-looking estimates of AMR trends to guide procurement, allocate ICU resources, and plan antimicrobial stewardship efforts [11].

1.3. Existing Work and Remaining Gaps

- Patient-level PDR classification using embedded categorical inputs (e.g., species and antibiograms);

- Multi-horizon hospital-level forecasting using attention-based temporal models.

1.4. Our Contributions

- Episode-Level Modeling: We used the TabTransformer architecture [19] to model structured EHR and LIS features, including demographics, ward location, infection type (IAAM), species flags, and 25-drug antibiograms. TabTransformer was selected for its ability to learn complex interactions among high-cardinality categorical variables. We compared its performance with that of XGBoost and interpreted predictions using SHAP.

- Hospital-Level Forecasting: We developed a Temporal Fusion Transformer-inspired model (TFT-lite) [20], implemented as an LSTM with an attention mechanism and seasonal encoding. This model was benchmarked against Prophet and SARIMA. LSTM-based attention models were chosen for their ability to capture non-linear, non-stationary patterns in short hospital time series.

- Modeling-Ready Dataset: We cleaned and structured LIS export data into 270 microbiologically confirmed infection episodes, each with 65 features, including time-to-infection and resistance classifications.

- Label Definition: PDR labels were assigned based on Magiorakos et al. (2012) [5], with “not tested” values imputed as susceptible to ensure consistent labeling from the 25-drug antibiogram.

2. Materials and Methods

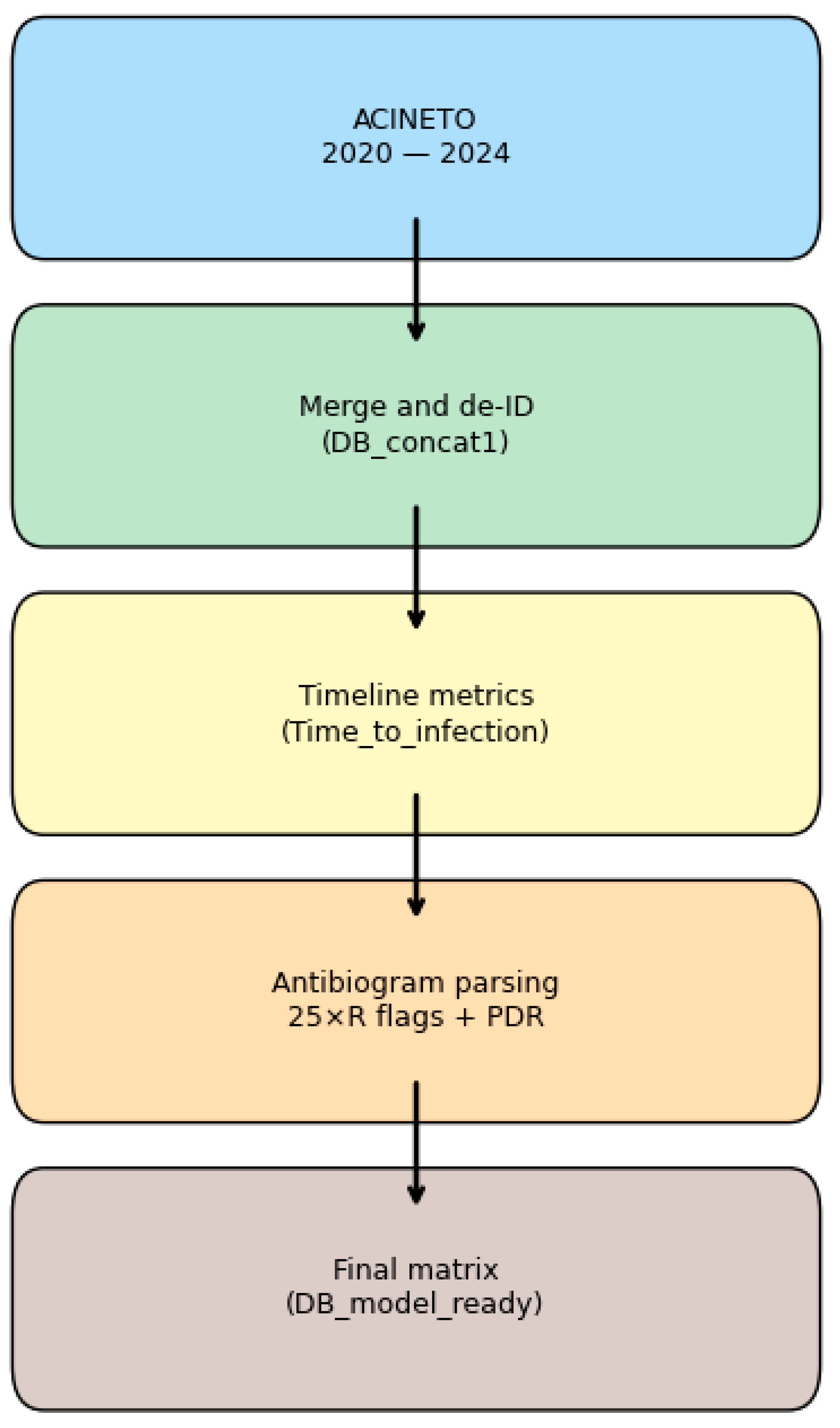

2.1. Dataset and Feature Engineering

2.1.1. Data Source and Cohort Description

- Inclusion Criteria: adult patients with a confirmed Acinetobacter isolate and a complete antibiogram.

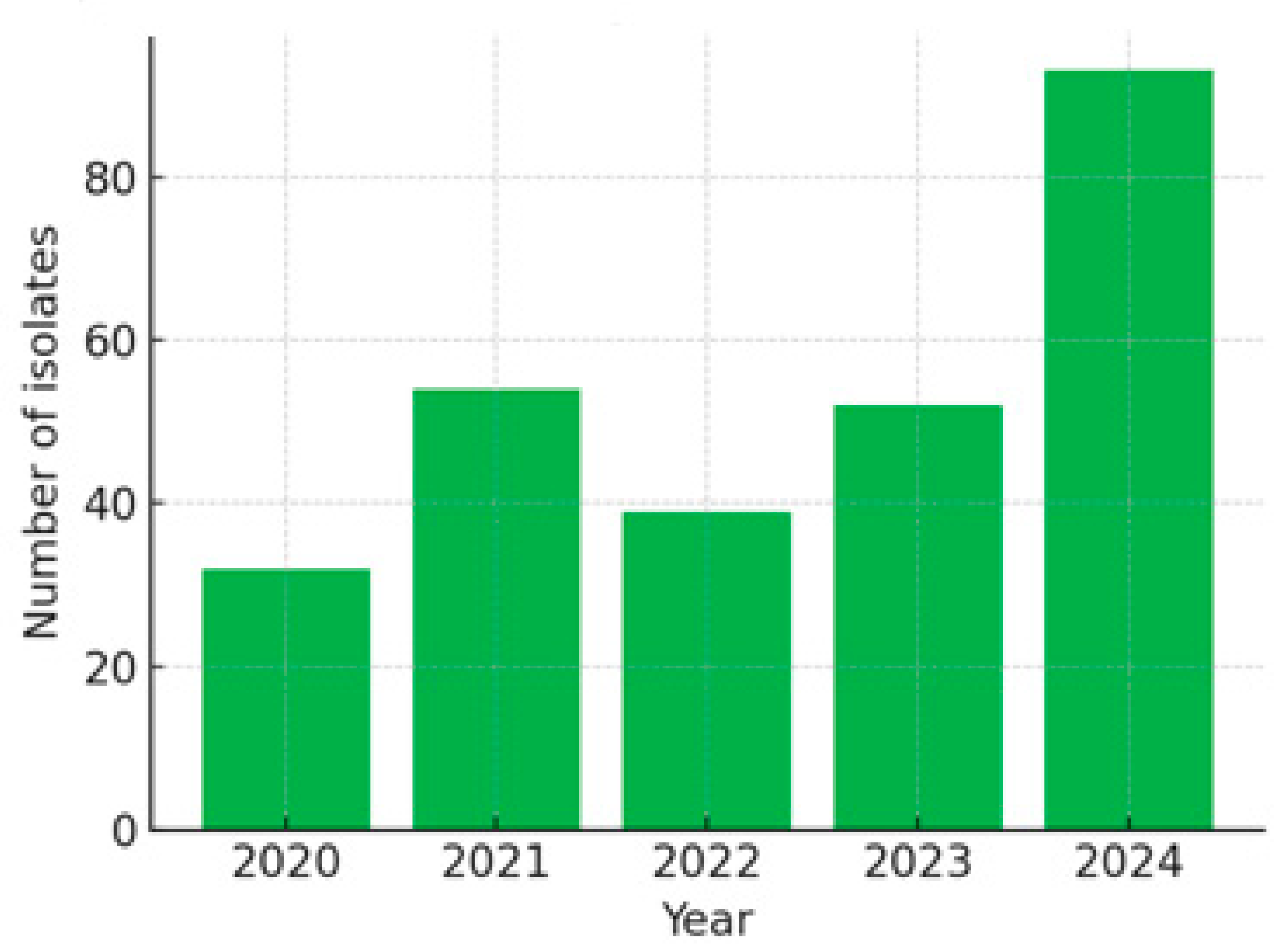

- Total Episodes: 270, distributed as follows: 2020: 32 episodes; 2021: 54 episodes; 2022: 39 episodes; 2023: 52 episodes; 2024: 93 episodes.

2.1.2. Timeline Features

2.1.3. Antibiogram Parsing and Resistance Labels

- Each drug was encoded as: 1 = Susceptible, 0.5 = Intermediate, 0 = Resistant.

- Non-tested values (e.g., NT or −1) were conservatively imputed as 1 (Susceptible) to avoid false inflation of resistance estimates. This assumption prioritizes specificity but may under-represent true resistance in some cases.

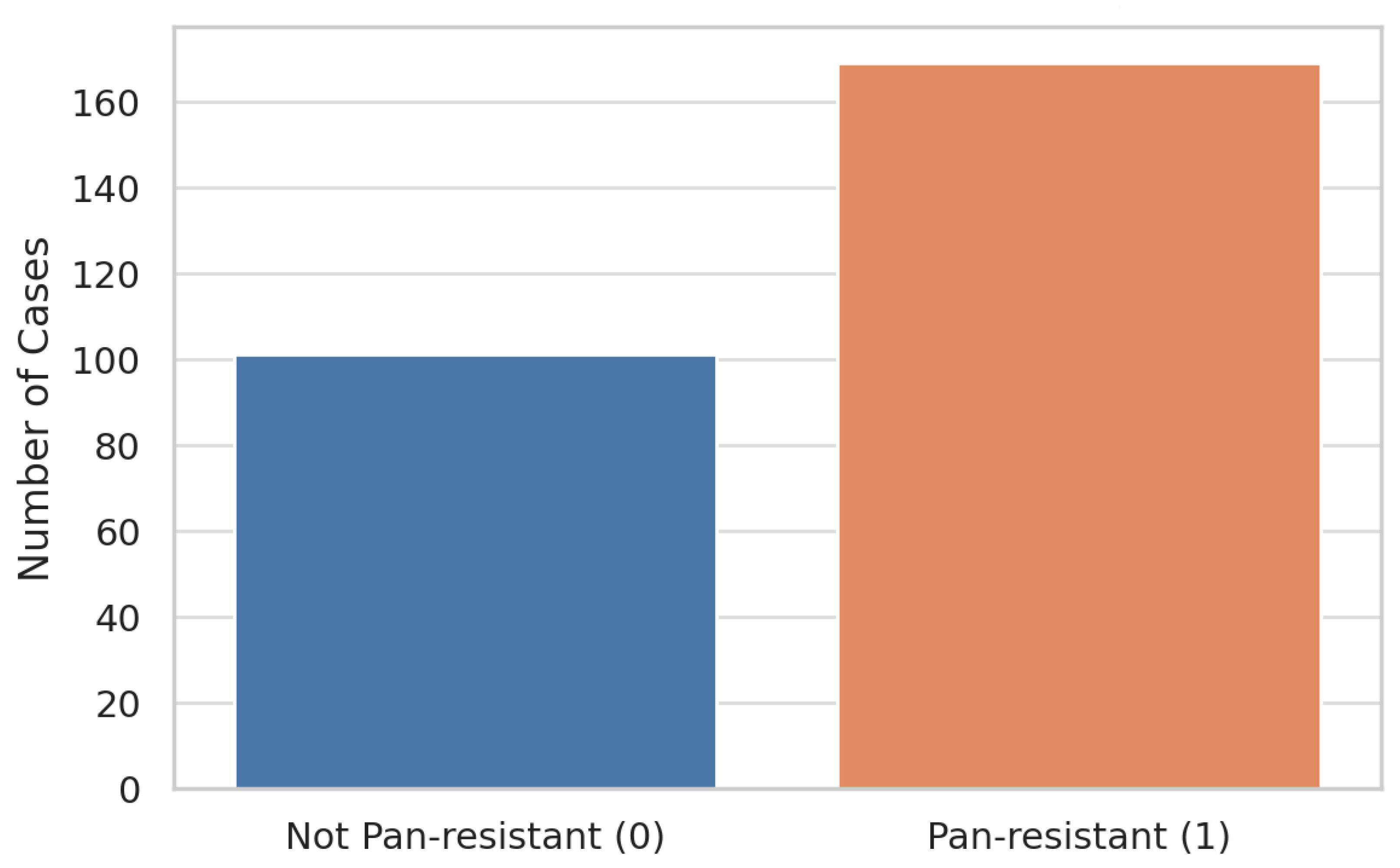

- Pan-drug resistance (PDR) was defined following Magiorakos et al. (2012) [5] as resistance to all tested agents. Using this definition, 169 of 270 episodes (62.6%) were labeled as PDR.
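A minimal Python sketch of the labeling rule described above follows. It assumes raw calls arrive as the strings "S", "I", "R", "NT" or "-1" (the exact LIS export format is not specified here), and it counts only R (coded 0) as resistant when assigning PDR; treating I as non-susceptible, as the Magiorakos et al. framework does, would mean testing `value < 1.0` instead. The function names are illustrative and are not the authors' code.

```python
from typing import List

# Assumed raw vocabulary of antibiogram calls; "NT", "-1" and blanks mean "not tested".
CODE = {"S": 1.0, "I": 0.5, "R": 0.0}
NOT_TESTED = {"NT", "-1", ""}


def encode_antibiogram(calls: List[str]) -> List[float]:
    """Map S/I/R calls to 1/0.5/0 and impute not-tested values as 1 (susceptible)."""
    encoded = []
    for call in calls:
        token = call.strip().upper()
        if token in NOT_TESTED:
            encoded.append(1.0)  # conservative imputation described in the text
        else:
            encoded.append(CODE[token])  # raises KeyError on an unexpected call
    return encoded


def is_pan_resistant(encoded: List[float]) -> int:
    """Return 1 if every agent is coded resistant (0) after imputation, else 0."""
    return int(all(value == 0.0 for value in encoded))


# Usage: one isolate resistant to all 25 agents, one with a single untested agent.
print(is_pan_resistant(encode_antibiogram(["R"] * 25)))           # 1
print(is_pan_resistant(encode_antibiogram(["R"] * 24 + ["NT"])))  # 0
```

Because untested agents are imputed as susceptible, a single untested agent is enough to prevent a PDR label, which is the specificity-first behavior noted above.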

2.1.4. Feature Engineering

- Demographics and Ward Assignment

- Sex (binary), Age (years), Ward (ICU/non-ICU), and Ward Dummies.

- Infection Type and Pathogen

- Infection_Type, Identified_Pathogen, is_A_calcoaceticus, and is_A_lwoffi.

- Resistance Class Flags

- is_RC, is_MDR, and is_ESBL (binary).

- Timeline Features

- Admission_Date, Infection_Detection_Date, Length_of_Stay, and Time_to_Infection.

- Antibiotic Susceptibility

- Twenty-five antibiotic columns, from AB_1 (AMIKACIN) to AB_25 (NITROFURANTOIN), each encoded as 1 = Susceptible, 0.5 = Intermediate, 0 = Resistant.

- Outcome

- Pan_resistant: binary outcome (1 = PDR).

- Temporal Metadata

- Year: integer (2020–2024).

2.2. Exploratory Data Analysis

2.2.1. Class Distribution and Handling of Imbalance

- Evaluation Metrics: Because overall accuracy can be misleading in imbalanced datasets, we emphasize threshold-independent metrics such as AUROC and AUPRC, along with recall and F1-score, to fairly evaluate model performance.

- Class Handling in Training: All cross-validation folds are stratified on the pan_resistant label to preserve class proportions. Where algorithmically supported, inverse-frequency class weights are applied to penalize misclassification of the minority class. In sensitivity analyses, we also evaluate the impact of SMOTE (Synthetic Minority Over-Sampling Technique) to address class imbalance.
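The sketch below shows one way to compute inverse-frequency class weights and stratified fold assignments in plain Python. The weight formula w_c = n / (k · n_c) matches scikit-learn's `class_weight="balanced"`; the round-robin fold assignment is a simplified stand-in for `StratifiedKFold`, not a reproduction of it. With 169 PDR and 101 non-PDR episodes, the minority (non-PDR) class receives the larger weight.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List


def inverse_frequency_weights(labels: List[int]) -> Dict[int, float]:
    """w_c = n / (k * n_c): the rarer class gets the larger weight."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def stratified_folds(labels: List[int], n_folds: int = 5, seed: int = 0) -> List[int]:
    """Return a fold id per episode so each fold keeps roughly the overall class ratio."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    fold_of = [0] * len(labels)
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            fold_of[idx] = pos % n_folds  # deal each class round-robin across folds
    return fold_of


labels = [1] * 169 + [0] * 101  # PDR vs non-PDR counts from Section 2.1.3
weights = inverse_frequency_weights(labels)
print({c: round(w, 3) for c, w in sorted(weights.items())})  # {0: 1.337, 1: 0.799}
```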

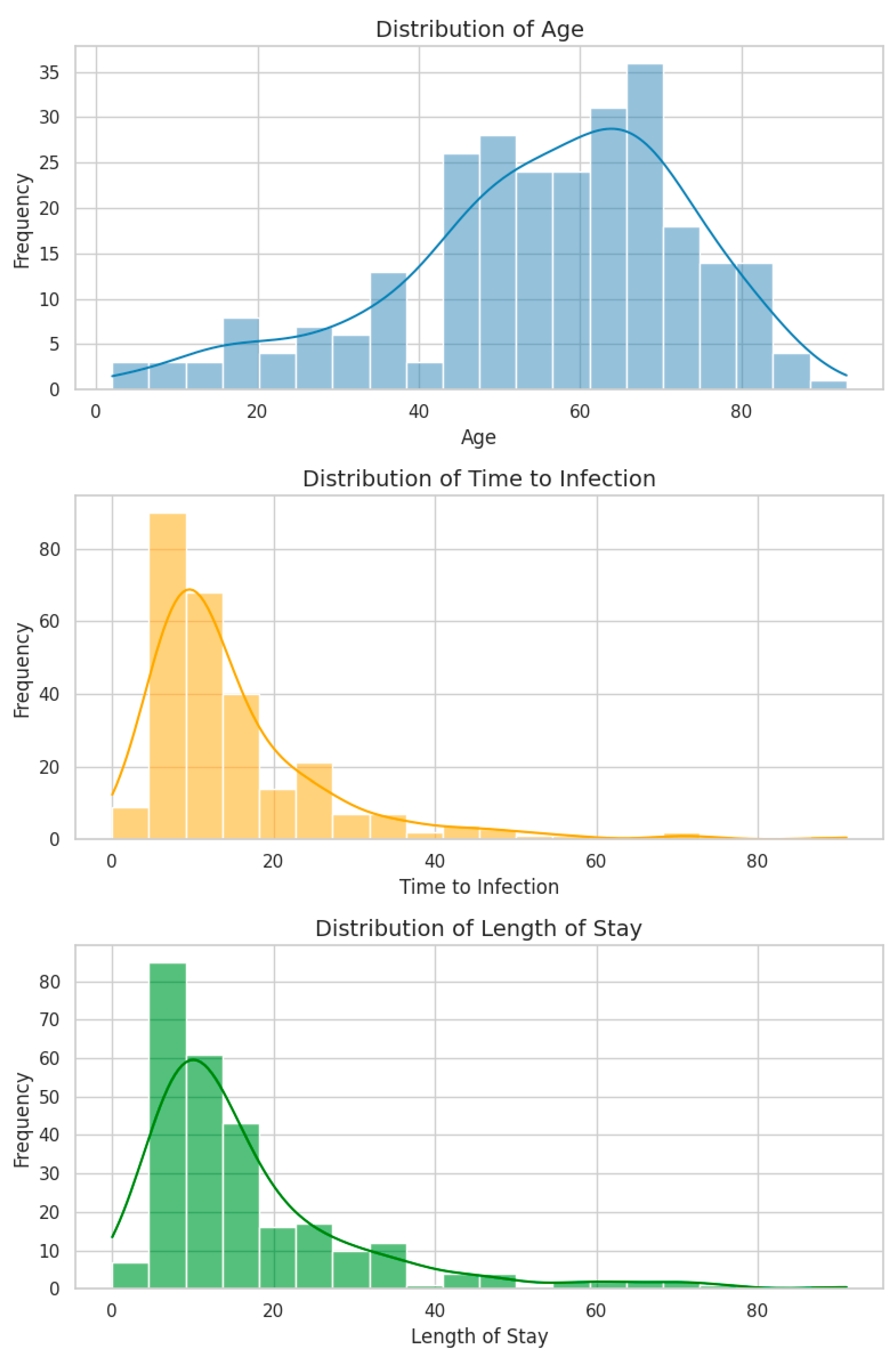

2.2.2. Numeric Feature Distributions

- Time to Infection and LOS exhibit positive (right-tailed) skewness, a typical pattern in clinical datasets involving hospitalization duration, whereas Age is roughly symmetric with a slight left skew (mean 55.4 below median 57.5).

- Age spans from 2 to 93 years (mean: 55.4, median: 57.5), with a concentration in the 55–65-year range, consistent with the age distribution of ICU patients.

- Time to Infection (days from admission to positive culture) and LOS show similar distributions, both peaking at 10–15 days and tapering into long tails extending beyond 90 days.

- Notably, 8 episodes (3.0%) had a Time to Infection of 0 days, potentially representing community-acquired infections or data-entry errors. These were retained because their impact on the distribution and on modeling was minimal.

- A strong positive correlation was observed between LOS and Time to Infection (Spearman’s ρ = 0.73, p < 0.001), suggesting that the two variables largely track the same clinical timeline. This raises a multicollinearity concern for regression models that include both.
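Spearman's ρ is the Pearson correlation of ranks. Because day counts contain many ties (both medians are 11 to 12 days), tied values should receive their average rank. The minimal stdlib sketch below returns only ρ; in practice `scipy.stats.spearmanr` also supplies the p-value.

```python
from typing import List


def average_ranks(values: List[float]) -> List[float]:
    """1-based ranks in which tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j form one tie group
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: List[float], y: List[float]) -> float:
    """Tie-aware Spearman's rho: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```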

2.2.3. Resistance Flags and Antibiotic Profiles

- Nearly all antibiotics show negative correlations with PDR, indicating that retained susceptibility (value = 1) is inversely related to pan-resistance. This is biologically expected and statistically reassuring.

- AB_4 stands out with a positive correlation with the pan_resistant label, raising concern about either mislabeling or a biologically atypical pattern. This feature will be specifically audited before modeling.

- Summary flags is_RC (resistance class) and is_ESBL show near-zero correlation with the outcome due to their low variance in the dataset—RC is nearly universal (~98%), and ESBL is rare (~1.5%).

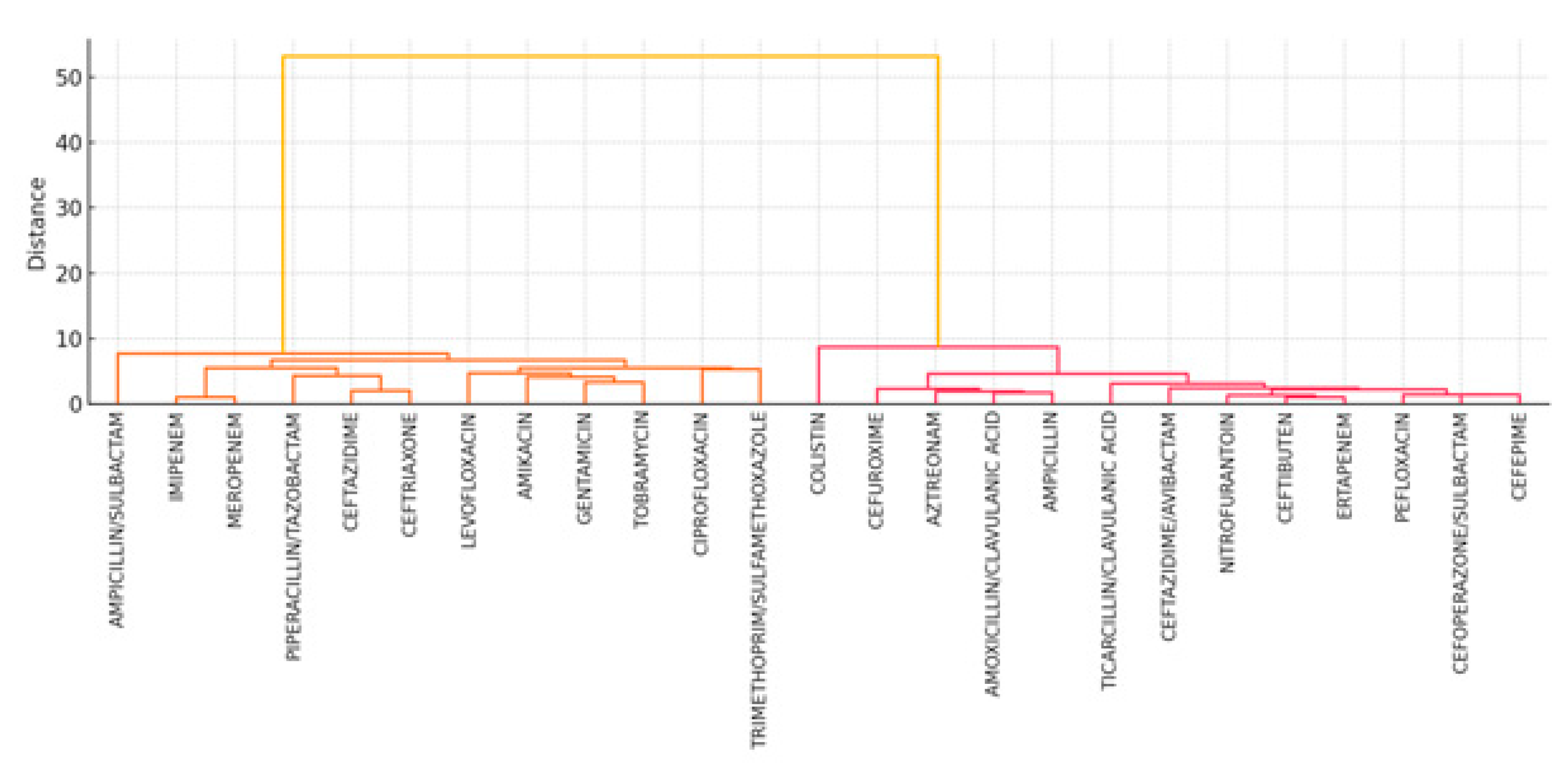

- Strong intra-class correlations are observed among antibiotics from the same pharmacologic groups (e.g., carbapenems and aminoglycosides), reflecting known patterns of cross-resistance in Acinetobacter spp.

- Individual antibiotic resistance flags appear more predictive of pan-resistance than high-level summary indicators like is_MDR or is_RC, which show low variability and low signal-to-noise ratios.

- Multicollinearity among antibiotics (particularly within classes) calls for elastic-net regularization in linear models and for tree-based methods (e.g., gradient boosting), which are more robust to correlated features.

2.2.4. Univariate Associations with Pan-Resistance

- None of the numeric variables demonstrated a statistically significant difference between the PDR and non-PDR groups after adjustment (q > 0.05).

- Among the categorical and antibiotic features, eleven antibiotics showed statistically significant associations with PDR (q < 0.05), with the strongest signals arising from carbapenems (e.g., AB_16 and AB_18), fluoroquinolones (e.g., AB_4), and aminoglycosides (e.g., AB_23).

- One infection-type category (IAAM_UNKNOWN) also retained significance and will be included in downstream multivariate analysis.
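The q-values reported for these univariate tests appear consistent with Benjamini–Hochberg false-discovery-rate adjustment: applying it to the three numeric-feature p-values (0.329, 0.379, 0.878) reproduces q = 0.568, 0.568, and 0.878. The method is not named in this section, so the sketch below is an assumption about the procedure rather than the authors' code; `statsmodels.stats.multitest.multipletests(..., method="fdr_bh")` provides the same adjustment.

```python
from typing import List


def benjamini_hochberg(p_values: List[float]) -> List[float]:
    """Return BH-adjusted q-values in the original order of p_values."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    q = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so q-values stay monotone in p.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        q[idx] = running_min
    return q


print([round(v, 4) for v in benjamini_hochberg([0.329, 0.379, 0.878])])  # [0.5685, 0.5685, 0.878]
```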

2.2.5. Multicollinearity in the Antibiotic Block

- Low-variance filtering removed four binary variables with near-zero variance: is_A_calcoaceticus, is_RC, is_ESBL, and is_A_lwoffi. These features contribute little signal and can inflate model variance.

- We computed Variance Inflation Factors (VIFs) for the 21 remaining antibiotic resistance features (coded as 1 = resistant, 0 = susceptible). Fifteen features exceeded the conventional VIF > 10 threshold, with AB_9 showing extreme multicollinearity (VIF ≈ 1300) (see Table 6).

- The highest VIFs were concentrated among carbapenems and β-lactams, consistent with known pharmacologic cross-resistance and redundancy.

- Elastic-net regularization in linear models (e.g., logistic regression);

- Tree-based ensemble methods (e.g., XGBoost), which are robust to multicollinearity;

- Sensitivity analysis using grouped drug-class features to assess robustness.
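VIF_j equals 1/(1 − R²_j), where R²_j comes from regressing feature j on all other features; equivalently, it is the j-th diagonal element of the inverse correlation matrix. The stdlib sketch below uses that identity on a precomputed correlation matrix (computing the matrix itself is omitted); `statsmodels.stats.outliers_influence.variance_inflation_factor` is the usual library route. A VIF near 1300, as reported for AB_9, corresponds to R²_j ≈ 0.9992, meaning AB_9 is almost a linear combination of the other antibiotics.

```python
from typing import List

Matrix = List[List[float]]


def invert(matrix: Matrix) -> Matrix:
    """Gauss-Jordan inversion with partial pivoting (fine for a few dozen features)."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif_from_correlation(corr: Matrix) -> List[float]:
    """VIF_j = 1 / (1 - R_j^2) = j-th diagonal entry of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[j][j] for j in range(len(corr))]


# Two features correlated at r = 0.9 each get VIF = 1 / (1 - 0.81), about 5.26.
print([round(v, 2) for v in vif_from_correlation([[1.0, 0.9], [0.9, 1.0]])])
```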

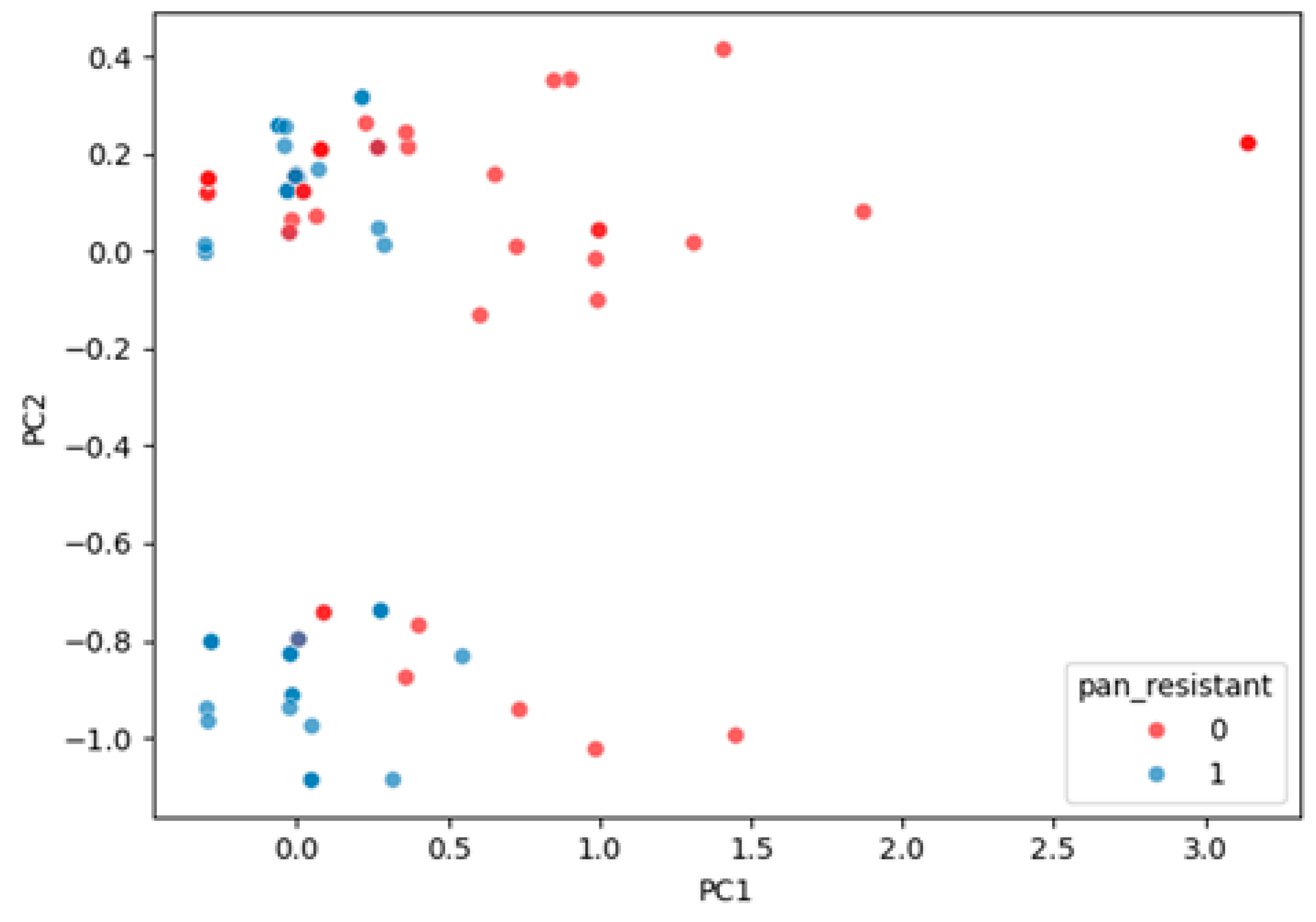

2.2.6. Latent Structure of Resistance

- The first principal component (PC1) accounted for 35.3% of total variance and strongly captured a global resistance gradient, effectively separating PDR from non-PDR cases.

- PC2 explained 11.9% of variance and reflected secondary co-resistance patterns but contributed limited additional discrimination.
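The explained-variance shares quoted above are eigenvalue ratios of the feature covariance matrix. The sketch below estimates the PC1 share by power iteration in plain Python. It assumes the features are already on the intended scale (standardize them first for a correlation-based PCA); `sklearn.decomposition.PCA(...).explained_variance_ratio_` is the standard library equivalent.

```python
import random
from typing import List


def explained_variance_pc1(rows: List[List[float]], iters: int = 500, seed: int = 0) -> float:
    """Share of total variance captured by PC1, via power iteration on the covariance matrix."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    centred = [[r[j] - means[j] for j in range(p)] for r in rows]
    cov = [[sum(c[a] * c[b] for c in centred) / (n - 1) for b in range(p)] for a in range(p)]
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(p)]  # positive random start vector
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0.0:
            return 0.0  # every feature is constant
        v = [x / norm for x in w]
    # Rayleigh quotient of the converged unit vector gives the leading eigenvalue.
    lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(p)) for a in range(p))
    total = sum(cov[j][j] for j in range(p))  # trace = total variance
    return lam / total
```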

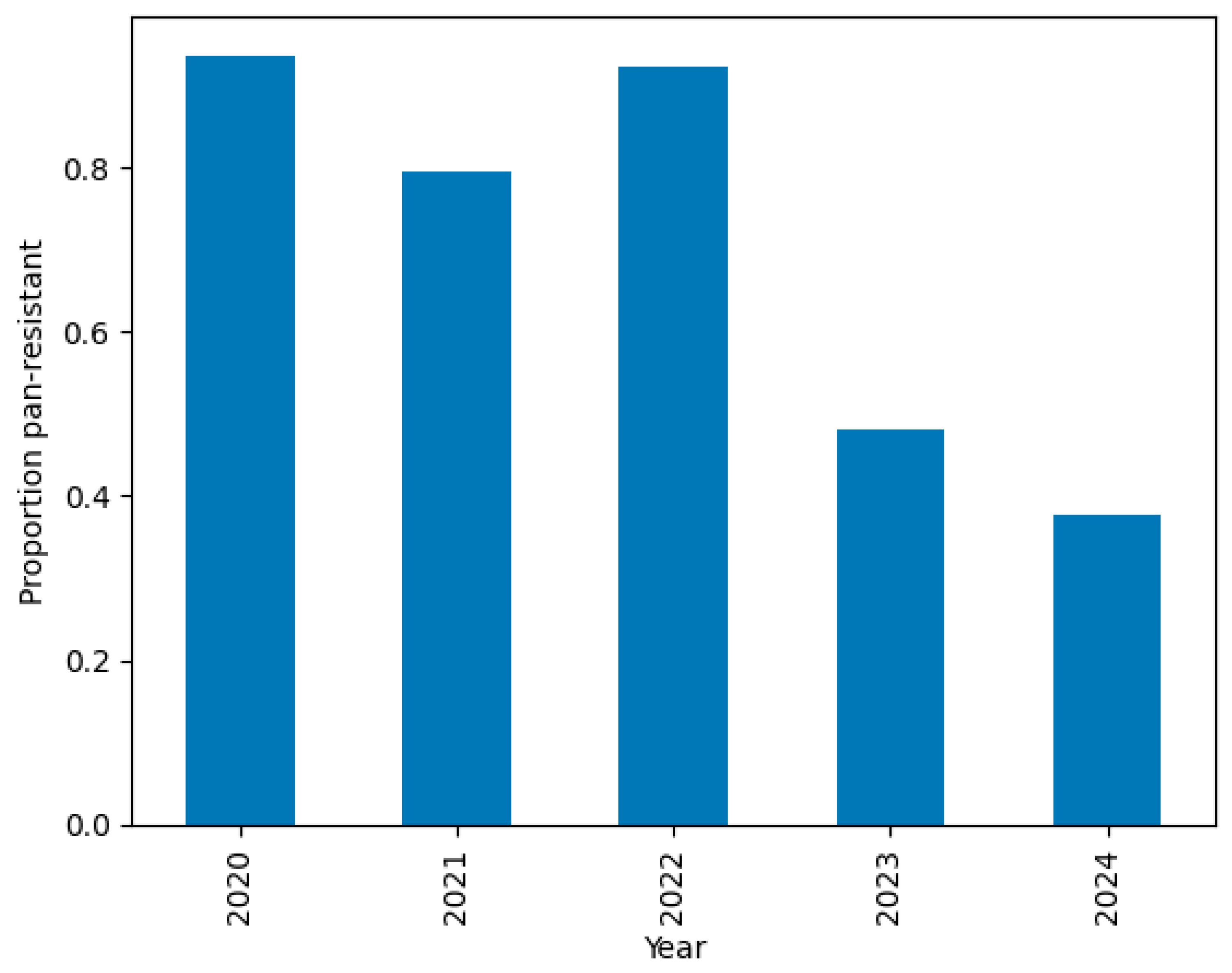

2.2.7. Temporal Drift

- PDR prevalence was >80% from 2020 to 2022 but dropped to 48% in 2023 and 38% in 2024.

- This temporal drift motivates the segregation of 2024 as a temporal hold-out set, used exclusively for external validation.
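Drift between the 2020–2023 training period and 2024 can be quantified with the Population Stability Index (PSI), which this study reports per feature. A minimal sketch for already-binned proportions follows; the small floor `eps` for empty bins is an assumption of this sketch, since the study's binning scheme is not described here.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum_i (a_i - e_i) * ln(a_i / e_i) over matching bins."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) in empty bins
        total += (a - e) * math.log(a / e)
    return total


def binary_shares(values: Sequence[int]) -> List[float]:
    """Proportions of 0s and 1s for a binary feature such as a species flag."""
    ones = sum(values) / len(values)
    return [1.0 - ones, ones]
```

A commonly cited rule of thumb reads PSI < 0.1 as stable, 0.1–0.25 as moderate shift, and > 0.25 as major shift. Continuous features such as Age need binning (for example, on reference-period quantiles) before the formula applies.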

2.2.8. Baseline Discriminative Performance

- Continuous features were z-standardized, and binary features were retained as-is.

- L2 regularization was applied using the saga solver, and class imbalance was addressed with inverse-frequency class weights.

- Five-fold stratified cross-validation yielded a mean AUROC ≈ 0.75 ± 0.11, establishing a baseline performance threshold.

2.2.9. Antibiogram Patterns by Species, Ward, and Year

- Figure 9 presents a heatmap of antibiotic resistance rates across calendar years. Resistance levels remain consistently high, particularly among carbapenems, but some fluctuations in specific antibiotics (e.g., meropenem and tobramycin) may reflect empirical prescribing changes or strain replacement.

- Figure 10 shows unsupervised hierarchical clustering of the 25 antibiotics. The resulting dendrogram supports known pharmacologic groupings (e.g., aminoglycosides and fluoroquinolones) and reinforces the earlier findings of intra-class correlation and redundancy.

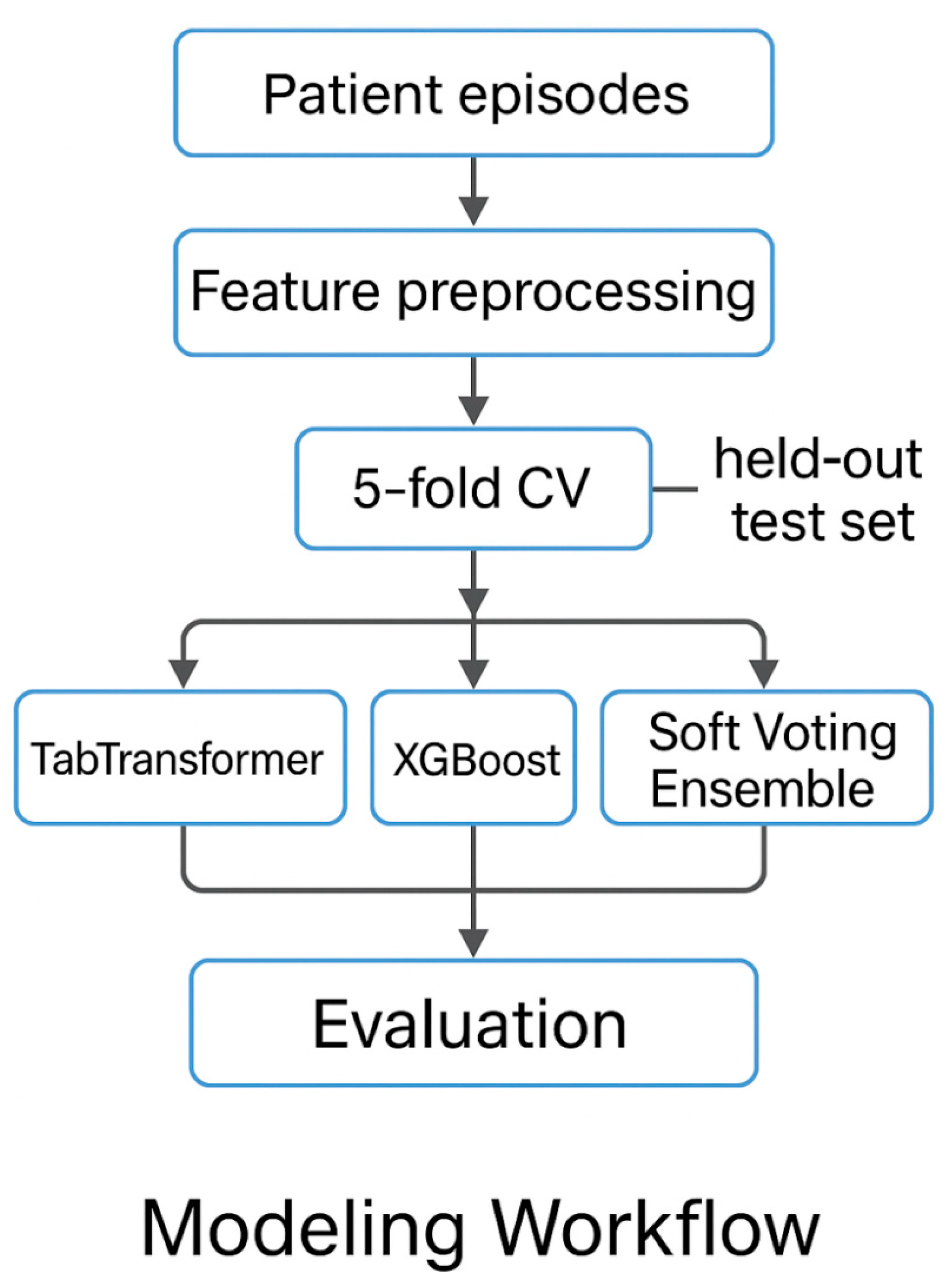

2.3. Episode-Level Prediction Models

- TabTransformer (transformer-based deep learning),

- XGBoost (gradient-boosted decision trees), and

- Soft Voting Ensemble (probabilistic averaging of TabTransformer and XGBoost).

2.3.1. Modeling Strategies and Technical Details

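- TabTransformer is a transformer-based neural architecture tailored for tabular data, particularly effective for high-cardinality categorical variables. It embeds each categorical column into a dense vector and passes the embeddings through self-attention blocks [19]. The key operation, scaled dot-product attention, is defined as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$, $K$, and $V$ are the query, key, and value projections of the column embeddings and $d_k$ is the key dimension.

- XGBoost is a gradient-boosting algorithm that builds an ensemble of decision trees by sequentially optimizing a regularized objective. For a given instance, $i$, the predicted probability is:

$$\hat{p}_i = \sigma\left(\sum_{k=1}^{K} f_k(\mathbf{x}_i)\right), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}$$

where $f_k$ is the output of the $k$-th of $K$ regression trees and $\mathbf{x}_i$ is the feature vector of instance $i$.

- The soft voting ensemble combines the probabilistic outputs of TabTransformer and XGBoost through unweighted averaging:

$$\hat{p}_i^{\mathrm{ens}} = \frac{1}{2}\left(\hat{p}_i^{\mathrm{TT}} + \hat{p}_i^{\mathrm{XGB}}\right)$$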
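To make the attention and soft-voting definitions concrete, the sketch below implements single-head scaled dot-product attention and the unweighted soft vote in plain Python. It is illustrative only: in TabTransformer, Q, K, and V are learned linear projections of the contextual column embeddings and several heads run in parallel, which this sketch omits.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for one head; each row is one token (column embedding)."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


def soft_vote(p_tab: List[float], p_xgb: List[float]) -> List[float]:
    """Unweighted average of the two models' predicted probabilities."""
    return [(a + b) / 2 for a, b in zip(p_tab, p_xgb)]


# Identical keys give uniform weights, so the output is the mean of the value rows.
print(attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]]))  # [[2.0, 4.0]]
```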

2.3.2. Dataset Partitioning and Cross-Validation

- Training set (2020–2023): n = 210 episodes;

- Hold-out test set (2024): n = 60 episodes.

2.3.3. Feature Engineering and Preprocessing

- ICU ward assignment;

- Infection type (e.g., ITU, PAI, IIC, sepsis, or IRI);

- Species flags (e.g., A. calcoaceticus or A. lwoffi);

- Resistance class flags (is_MDR, is_ESBL, or is_RC);

- Antibiotic susceptibility indicators (25 three-level ordinal features encoded as {0 = R, 0.5 = I, 1 = S}).

- Embedded as tokens in TabTransformer;

- One-hot-encoded in XGBoost.

2.3.4. TabTransformer Design

- Embedding layer: 16-dimensional embeddings for each categorical feature;

- Transformer encoder: 2 layers and 8 attention heads;

- Feedforward head: 2 dense layers (ReLU activations), dropout = 0.3;

- Output layer: sigmoid activation for binary probability output.

- Optimizer: AdamW;

- Loss function: Binary Cross-Entropy;

- Regularization: Early stopping on validation AUROC.

2.3.5. XGBoost Configuration

- Categorical variables were fully one-hot-encoded;

- Antibiotic features were used as-is (values in {0, 0.5, 1});

- Continuous variables were standardized.
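A plain-Python sketch of the two XGBoost preprocessing steps follows. It assumes (this is not stated above) that category levels and standardization statistics are fitted on the training years only and then reused for 2024, so that no test-period information leaks into preprocessing. The `IAAM` prefix mirrors column names used elsewhere in this paper; the helper names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple


def one_hot(values: Sequence[str], categories: Sequence[str], prefix: str) -> Dict[str, List[int]]:
    """Full one-hot encoding against training-set levels; unseen levels become all zeros."""
    return {f"{prefix}_{c}": [int(v == c) for v in values] for c in categories}


def fit_standardizer(train: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation, estimated on the training period only."""
    return mean(train), stdev(train)


def apply_standardizer(values: Sequence[float], params: Tuple[float, float]) -> List[float]:
    """z = (x - mean) / sd using the training-period statistics."""
    mu, sd = params
    return [(x - mu) / sd for x in values]


# Usage: levels come from training rows and are reused unchanged on test rows.
train_types = ["IRI", "sepsis", "IRI"]
levels = sorted(set(train_types))
print(one_hot(["sepsis", "PAI"], levels, "IAAM"))  # {'IAAM_IRI': [0, 0], 'IAAM_sepsis': [1, 0]}
```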

2.3.6. Evaluation Protocol

- Internal validation via stratified 5-fold cross-validation on the 2020–2023 training set;

- External validation on the 2024 hold-out test set (unseen during training).

- Area under the receiver operating characteristic curve (AUROC);

- Area under the precision–recall curve (AUPRC);

- F1-score and accuracy;

- Recall at fixed specificity (90%), reflecting a clinically relevant alert threshold.
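Both AUROC and recall at fixed specificity can be computed directly from predicted probabilities. The stdlib sketch below uses the rank (Mann–Whitney) interpretation of AUROC and scans candidate thresholds for the best recall whose specificity is at least 90%; `sklearn.metrics.roc_auc_score` and `sklearn.metrics.roc_curve` are the usual library routes. It assumes both classes are present in `y_true`.

```python
from typing import List


def auroc(y_true: List[int], scores: List[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def recall_at_specificity(y_true: List[int], scores: List[float], target_spec: float = 0.90) -> float:
    """Highest recall among thresholds whose specificity is at least target_spec."""
    best = 0.0  # a threshold above every score gives specificity 1 and recall 0
    for t in sorted(set(scores)):
        pred = [int(s >= t) for s in scores]
        tn = sum(1 for y, p in zip(y_true, pred) if y == 0 and p == 0)
        fp = sum(1 for y, p in zip(y_true, pred) if y == 0 and p == 1)
        tp = sum(1 for y, p in zip(y_true, pred) if y == 1 and p == 1)
        fn = sum(1 for y, p in zip(y_true, pred) if y == 1 and p == 0)
        if tn / (tn + fp) >= target_spec:
            best = max(best, tp / (tp + fn))
    return best
```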

2.3.7. Implementation Details

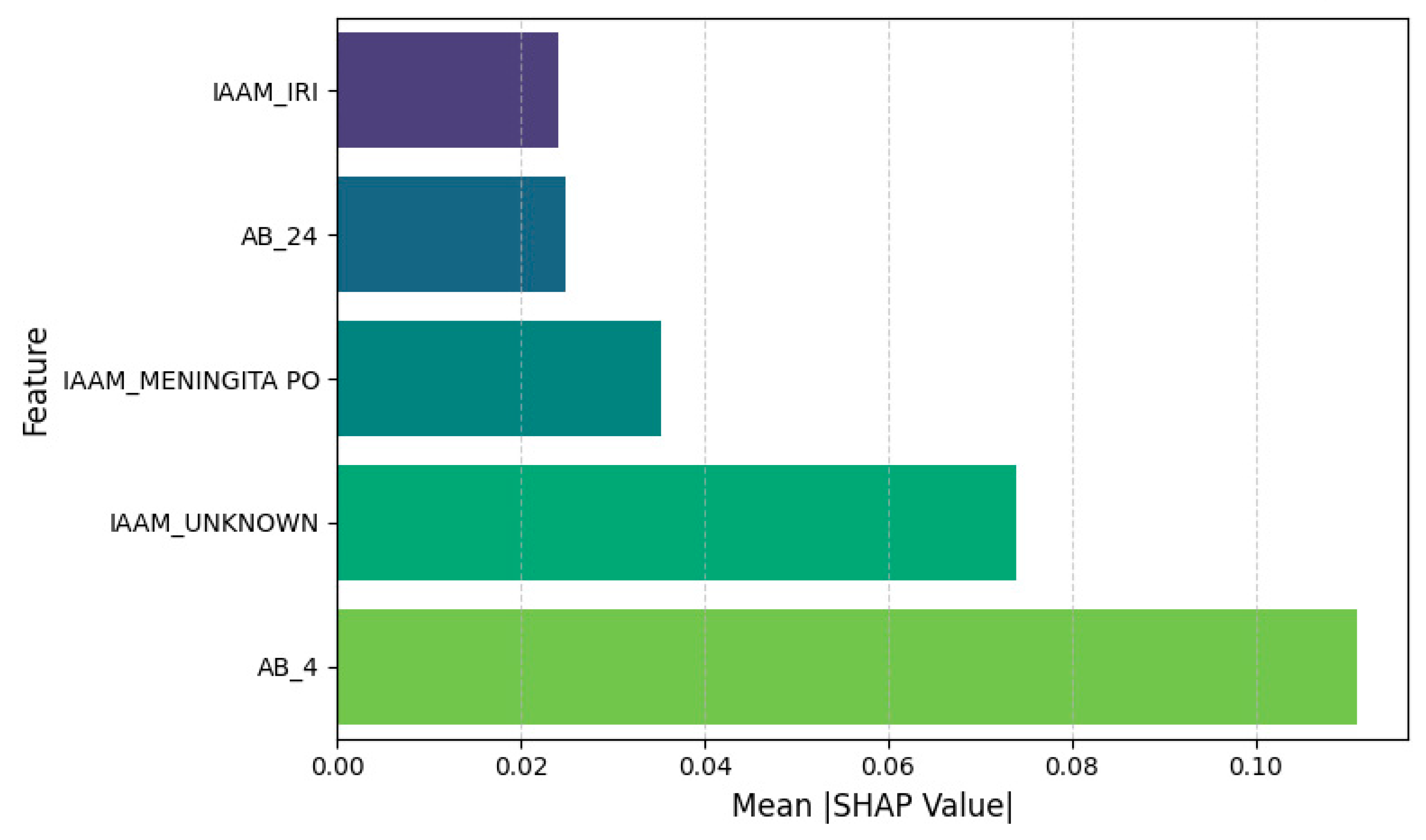

2.3.8. Feature Attribution

- Quantify the marginal contribution of each feature to the predicted probability;

- Identify key clinical and laboratory drivers of PDR classification.

2.3.9. Temporal Validation and Drift Analysis

2.4. Hospital-Level Forecasting

2.4.1. Quarterly Aggregation and Feature Construction

- Resistant_count: the number of PDR-classified episodes;

- Total_count: the total number of episodes recorded;

- Prevalence: the proportion of pan-resistant infections, computed as resistant_count/total_count.

- Seasonal Encoding: Applied sine and cosine transforms to the quarter index (cyclical encoding) to model periodic fluctuations.

- Lag Feature: Introduced lagged_prevalence [t − 1] to capture short-term autocorrelation in resistance rates.
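A sketch of the quarterly aggregation and the two engineered inputs follows. It assumes each episode is reduced to a (year, quarter, pan_resistant) tuple and that every quarter contains at least one episode; otherwise prevalence is undefined and the lag would silently skip a quarter. Column names follow the text; the input format and `quarterly_features` are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def quarterly_features(episodes: List[Tuple[int, int, int]]) -> List[Dict[str, float]]:
    """episodes: (year, quarter 1-4, pan_resistant 0/1). Returns one row per observed quarter."""
    counts = defaultdict(lambda: [0, 0])  # (year, quarter) -> [resistant, total]
    for year, quarter, pdr in episodes:
        counts[(year, quarter)][0] += pdr
        counts[(year, quarter)][1] += 1
    rows, prev = [], None
    for year, quarter in sorted(counts):
        resistant, total = counts[(year, quarter)]
        prevalence = resistant / total
        angle = 2 * math.pi * (quarter - 1) / 4  # place quarter-of-year on the unit circle
        rows.append({
            "year": year, "quarter": quarter,
            "resistant_count": resistant, "total_count": total,
            "prevalence": prevalence,
            "q_sin": math.sin(angle), "q_cos": math.cos(angle),
            "lagged_prevalence": prev if prev is not None else float("nan"),  # t - 1
        })
        prev = prevalence
    return rows
```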

2.4.2. Prophet Forecasting Model (Baseline)

- Training window: 2020 Q1 to 2024 Q4 (20 time points);

- Forecast horizon: 8 quarters (2025 Q1 to 2026 Q4);

- Seasonality: configured to quarterly frequency using Fourier terms (order = 3);

- Trend modeling: automatic changepoint detection with default priors.

2.4.3. SARIMA Forecasting

- Model specification: SARIMA(1,0,0)(1,0,0) with seasonal period s = 4, capturing annual seasonality (4 quarters/year).

- Transformation: Applied a square-root transformation to the prevalence series to stabilize variance and better approximate normality.

- Model selection: Parameter tuning was guided by Akaike Information Criterion (AIC) minimization.

- Residual diagnostics: Autocorrelation and partial autocorrelation plots were inspected to confirm white noise residuals.

2.4.4. LSTM with Attention (TFT-Lite) and Noise Injection

- Input features: quarter index; seasonal encodings (sine/cosine of time index); lagged prevalence (t − 1);

- Sequence length: 11 past quarters used as input context;

- Target window: prevalence values forecasted for the next 8 quarters.

- One-layer LSTM for temporal encoding;

- Multi-head attention layer for context weighting;

- Dense projection layer for output mapping;

- Sigmoid activation to constrain outputs between 0 and 1.

- Loss function: mean square error (MSE);

- Optimizer: Adam;

- Epochs: 200;

- Regularization: Gaussian label noise ε ∼ N(0, 0.05²) added to targets during training to mitigate overfitting.
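If the LSTM is trained on the same 20 quarterly points used for Prophet, an 11-quarter context with an 8-quarter target yields only 20 − 11 − 8 + 1 = 2 supervised windows, which helps explain why label noise is useful here. The sketch below builds such windows and adds Gaussian label noise with σ = 0.05. Clipping the noisy targets to [0, 1] to match the sigmoid output is an assumption of this sketch, not a stated detail of the study.

```python
import random
from typing import List, Tuple


def make_windows(series: List[float], context: int = 11,
                 horizon: int = 8) -> List[Tuple[List[float], List[float]]]:
    """Slide over the series: `context` past values -> the next `horizon` values."""
    windows = []
    for start in range(len(series) - context - horizon + 1):
        x = series[start:start + context]
        y = series[start + context:start + context + horizon]
        windows.append((x, y))
    return windows


def add_label_noise(targets: List[float], sigma: float = 0.05, seed: int = 0) -> List[float]:
    """Training-only Gaussian label noise, clipped to [0, 1] (clipping is an assumption)."""
    rng = random.Random(seed)
    return [min(1.0, max(0.0, y + rng.gauss(0.0, sigma))) for y in targets]
```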

2.4.5. Rolling-Origin Weekly Evaluation

2.5. Ethics Statement

2.6. Code Availability

3. Results

3.1. Episode-Level Model Results

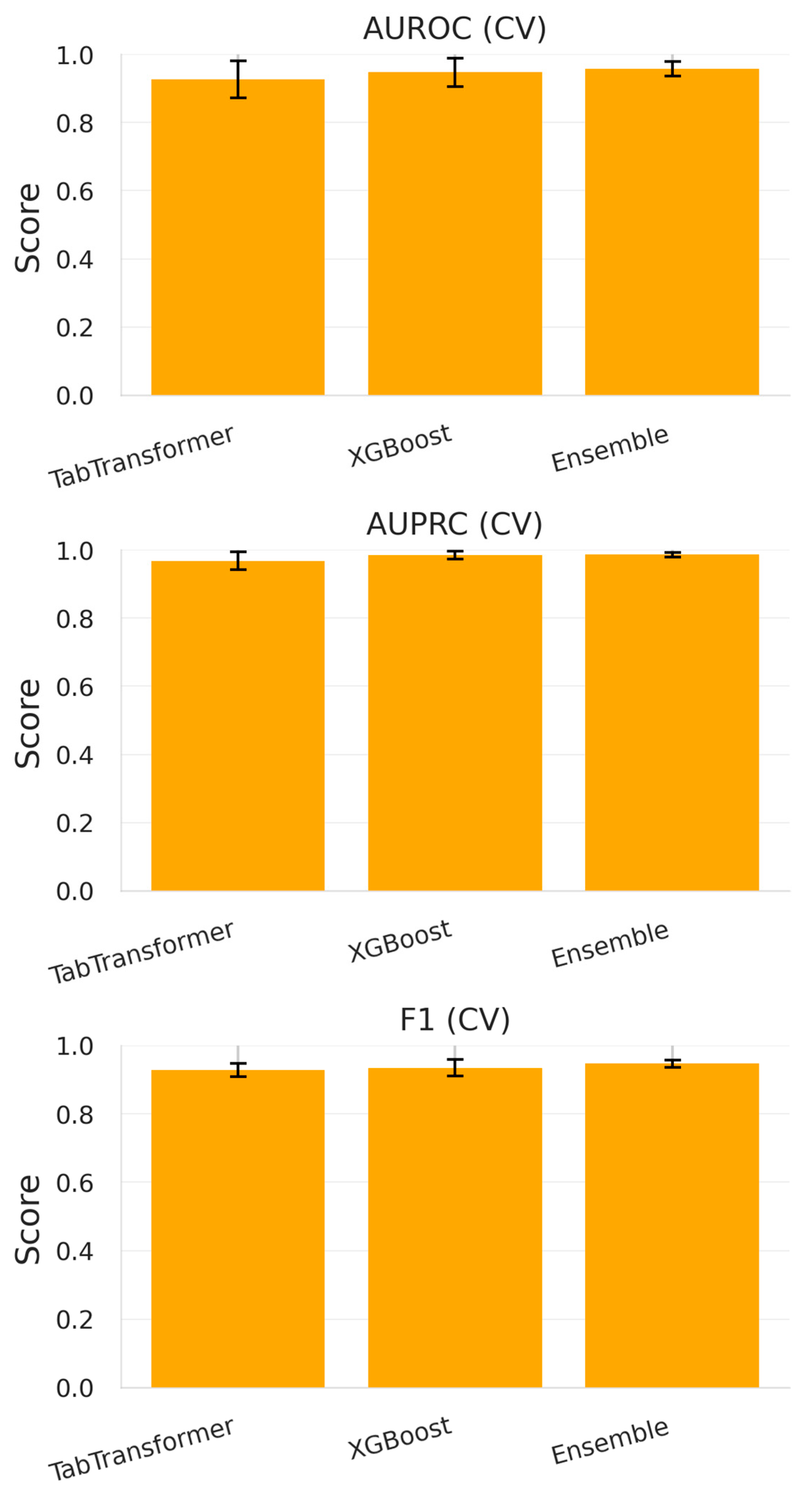

3.1.1. Cross-Validation Performance (2020–2023)

- TabTransformer achieved an average AUROC of 0.926 ± 0.054 and an AUPRC of 0.968 ± 0.026, suggesting its effectiveness in modeling complex interactions among high-cardinality categorical variables and sparse antibiotic resistance flags.

- XGBoost, the gradient-boosted decision tree baseline, slightly outperformed TabTransformer with an AUROC = 0.947 ± 0.041 and an AUPRC = 0.984 ± 0.012. This is consistent with XGBoost’s known strength in handling tabular biomedical data.

- The soft voting ensemble, which averaged predicted probabilities from both models, delivered the highest metrics: AUROC = 0.958 ± 0.021, AUPRC = 0.986 ± 0.007, and an F1-score of 0.947 ± 0.011.

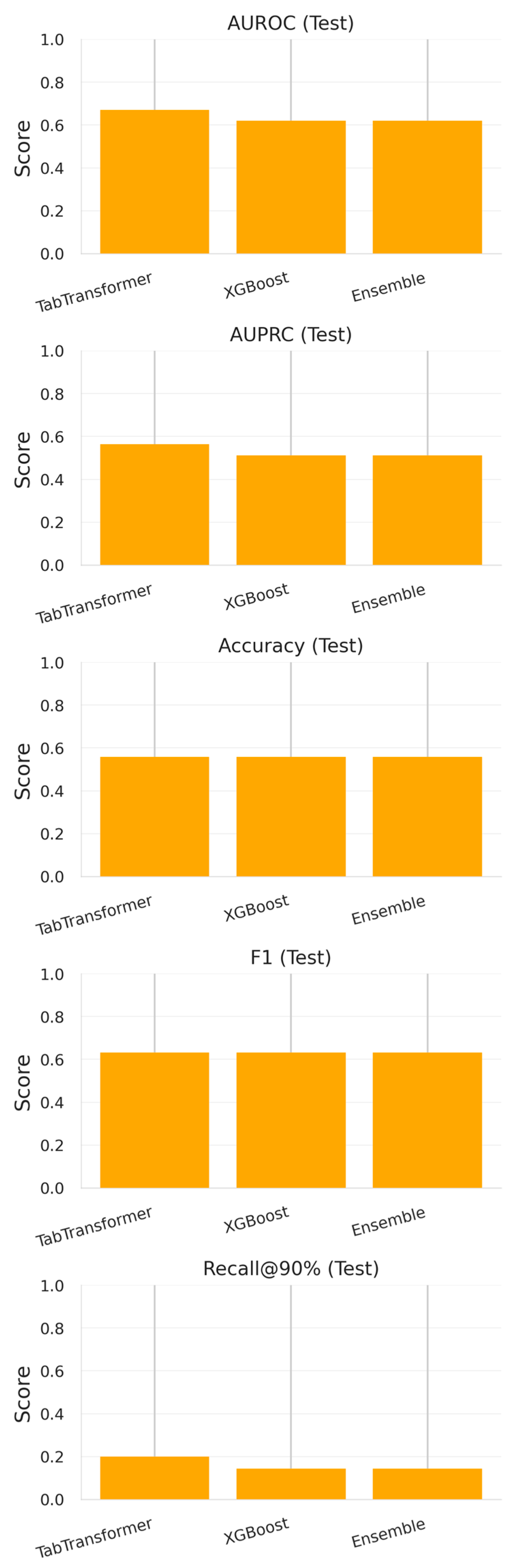

3.1.2. Evaluation on 2024 Test Set

- TabTransformer maintained moderate discriminative ability (AUROC = 0.671, AUPRC = 0.564) but exhibited diminished recall at high specificity thresholds (0.200 at 90% specificity).

- XGBoost showed a lower AUROC (0.620 vs. 0.671), an identical F1-score (0.631), and lower recall at 90% specificity (0.143 vs. 0.200).

- The ensemble model, despite achieving the best cross-validation results, did not outperform its individual constituents on the temporally shifted test data (AUROC = 0.620, AUPRC = 0.512).

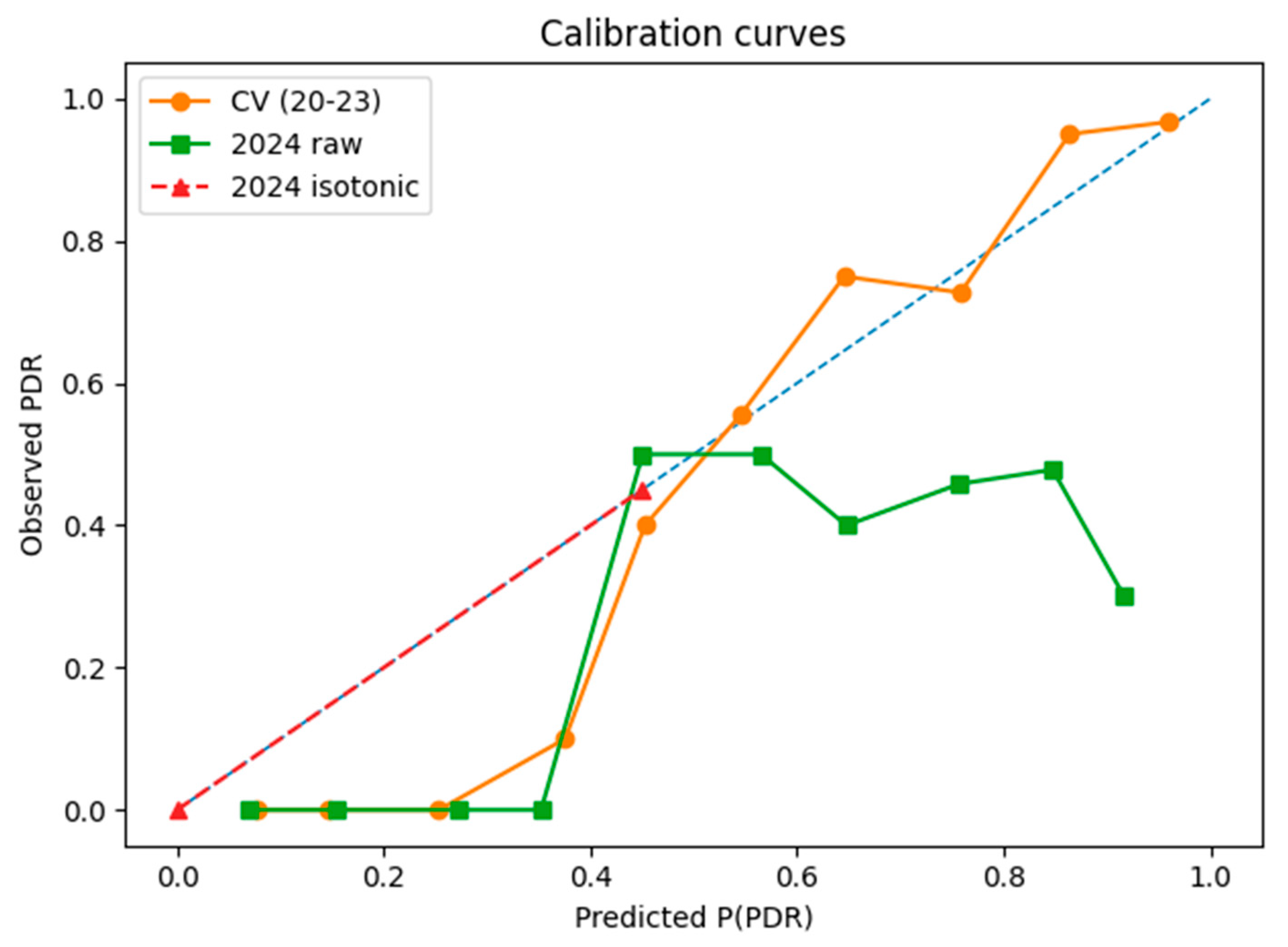

3.1.3. Temporal Validation and Drift Analysis

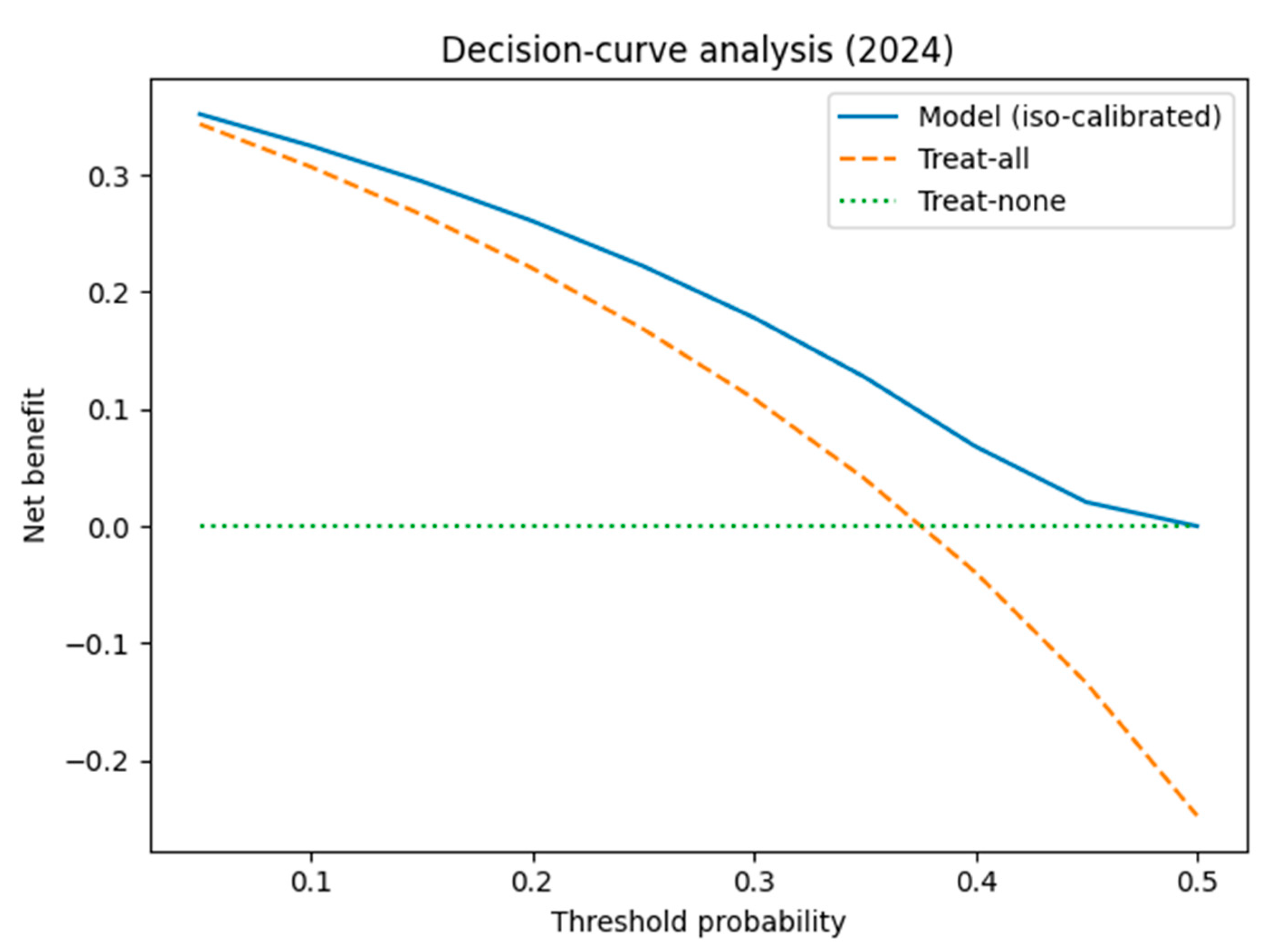

3.1.4. Calibration and Clinical Usefulness

3.1.5. Interpretation and Implications

- Temporal Drift: The resistance profile in 2024 differs significantly from that observed in 2020–2023, likely due to shifts in empirical antibiotic policies, circulating strains, or local stewardship measures.

- Sample Size Constraints: The relatively small training set (n = 210) limits the generalization capacity of deep learning models, particularly when rare resistance phenotypes are under-represented.

- Model Maintenance Requirements: These findings underscore the need for frequent model retraining and temporal cross-validation, especially in hospital infection forecasting where microbial ecology evolves rapidly.

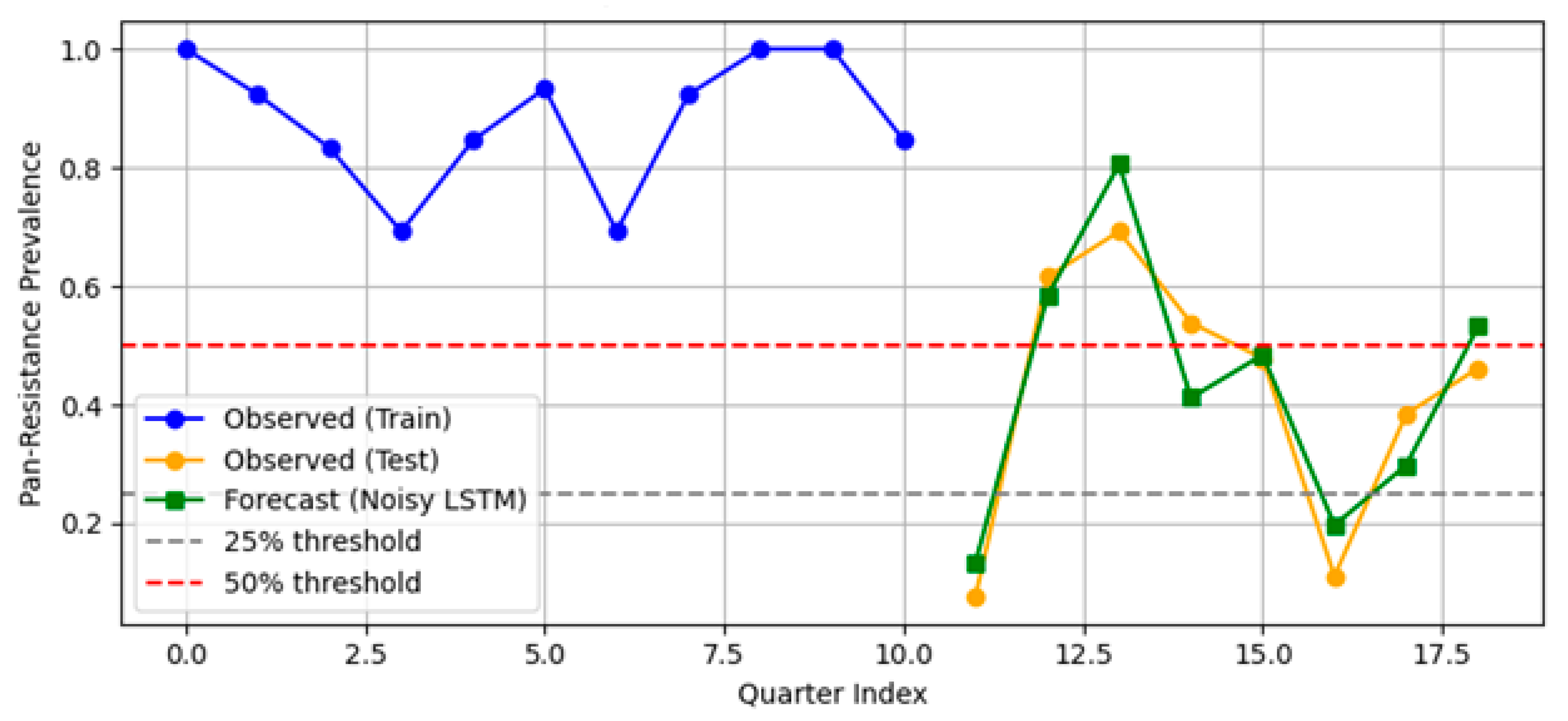

3.2. Hospital-Level Forecasting Results

3.2.1. Prophet Forecast Results

3.2.2. SARIMA

3.2.3. LSTM Plus Attention (TFT-Lite)

- MAPE = 6.2%.

- RMSE = 0.0318.
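For reference, the two quarterly error metrics can be written as below. Because prevalence is a proportion, RMSE is in prevalence units, so RMSE = 0.0318 corresponds to roughly 3.2 percentage points; MAPE divides by the observed value and becomes unstable when prevalence approaches zero.

```python
from typing import List


def mape(actual: List[float], forecast: List[float]) -> float:
    """Mean absolute percentage error in percent (undefined if any actual value is 0)."""
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual: List[float], forecast: List[float]) -> float:
    """Root mean square error, in the same units as the series (here, prevalence)."""
    return (sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)) ** 0.5
```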

3.2.4. Comparative Summary

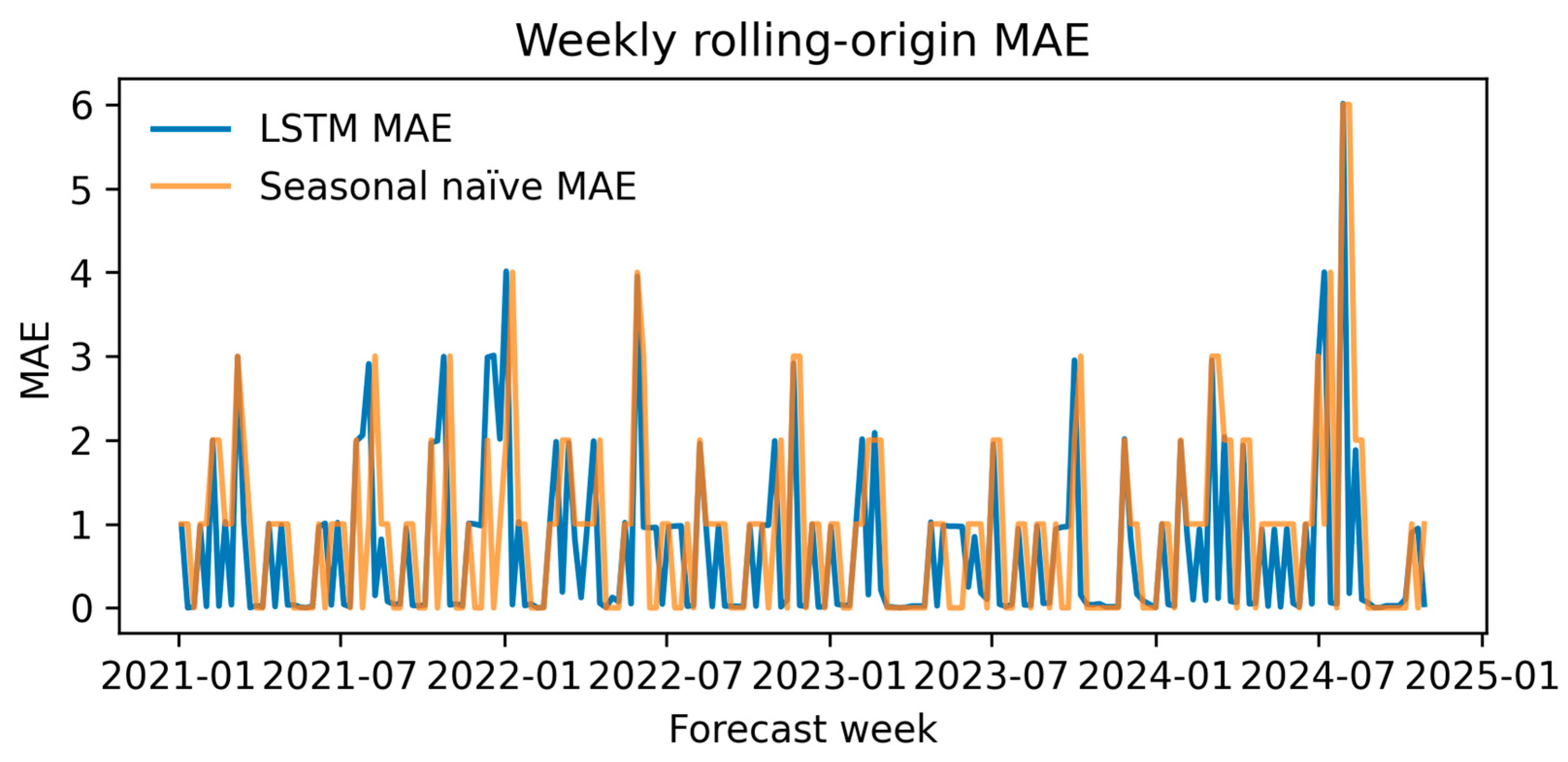

3.2.5. Rolling-Origin Performance

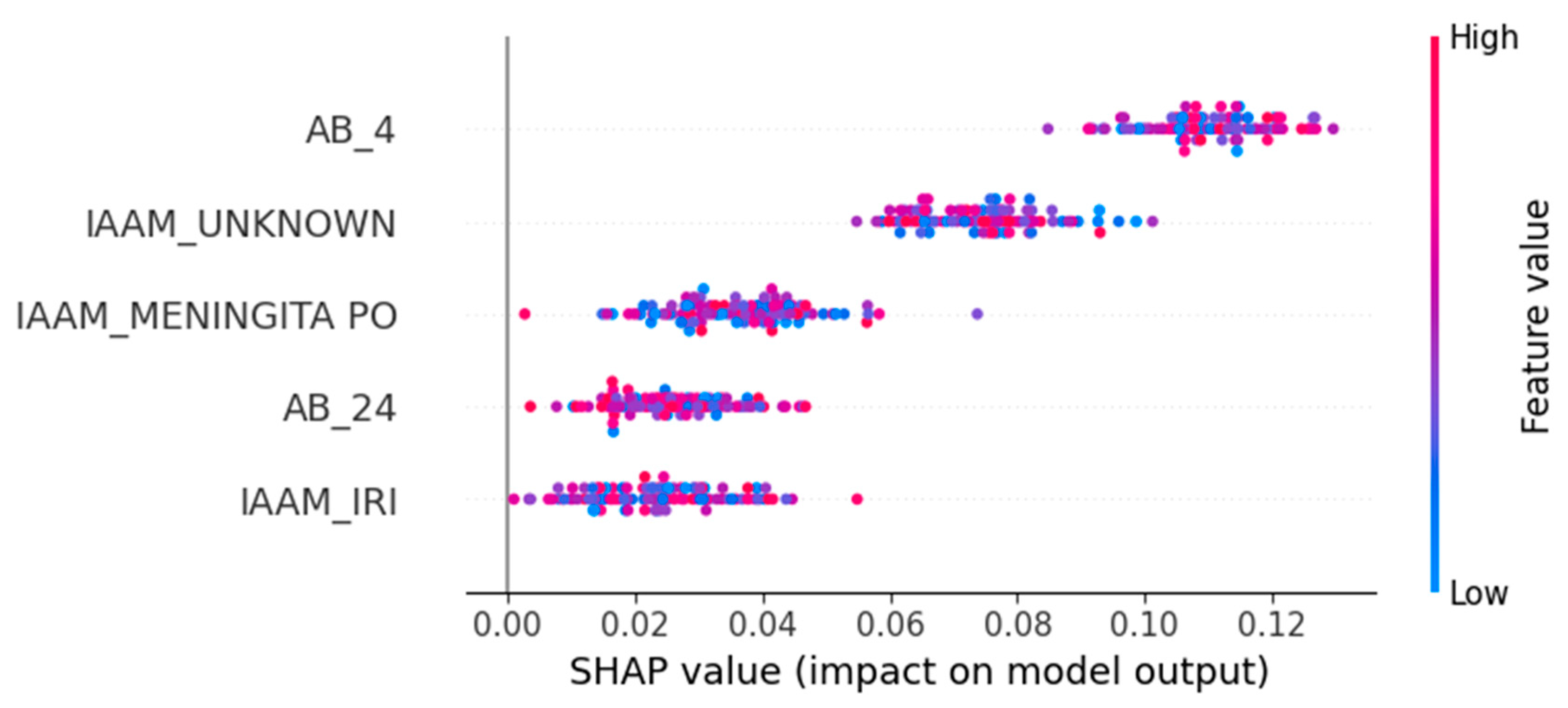

3.3. Feature Attribution and Interpretability

- AB_4—Ampicillin/Sulbactam resistance: Positively associated with PDR and the strongest overall predictor.

- IAAM_UNKNOWN—Unknown infection acquisition mode: Suggests that unclassified transmission contexts correlate with resistance.

- IAAM_MENINGITA PO—Postoperative meningitis: Specific clinical syndromes may indicate nosocomial origins.

- AB_24—Trimethoprim/Sulfamethoxazole resistance: Another strong antimicrobial marker of PDR.

- IAAM_IRI—Device-related infection: Reinforces the clinical relevance of infection site and acquisition mechanism.

4. Discussion

4.1. Clinical Implications at the Episode Level

4.2. Hospital-Level Operational Forecasting

4.3. Model Performance and Technical Contributions

4.4. Limitations

- Single-center data: All 270 episodes were drawn from a single tertiary hospital, limiting external validity.

- Assumptions and imputation: We treated “not tested” resistance values as susceptible and excluded some episodes with missing timestamps, potentially introducing bias.

- TFT approximation: Due to software constraints, we implemented a simplified LSTM model with attention rather than the full TFT architecture. Though this model performed well, future work should test whether the full TFT improves both accuracy and interpretability.

- The low EPV (2.1) and corresponding shrinkage factor (0.70) highlight the risk of overfitting, particularly when using high-capacity models such as TabTransformer. Although bootstrap correction and regularization were applied, these adjustments cannot fully eliminate optimism bias. Larger multi-center datasets are needed to ensure generalizability and model stability.

4.5. Future Directions

- Multi-center validation to ensure model robustness across diverse clinical contexts.

- Genomic feature integration such as resistance gene profiles or plasmid detection to boost phenotype prediction accuracy, especially in borderline or novel cases.

- Real-time clinical dashboards that present per-patient risk scores and dynamically update hospital-level forecasts for use by infectious disease teams and administrators.

4.6. Comparative Summary of AMR Modeling Approaches

5. Conclusions

- Episode-level performance. The TabTransformer achieved strong discrimination during five-fold cross-validation (AUROC = 0.924, optimism-corrected: 0.874). When applied to the held-out 2024 episodes, the AUROC fell to 0.586, reflecting a large shift in PDR prevalence (75→38%) and drift in three key covariates (PSI > 1.0). This sharp decline suggests overfitting to historical patterns and highlights the need for robust temporal validation. Isotonic recalibration reduced the 2024 Brier score from 0.326 to 0.207 and provided a positive net benefit, averting ~26 unnecessary isolation-days per 100 ICU admissions at a 0.20 decision threshold. SHAP analysis confirmed Ampicillin/Sulbactam resistance, unknown acquisition mode, and device-related infection as the most influential predictors.

- Hospital-level forecasting. Weekly rolling-origin evaluation (200 origins; four-week horizon) showed that the attention–LSTM model lowered the forecast error relative to a seasonal-naïve rule in 48.5% of weeks (median MAE = 0.10 vs. 1.00). On quarterly aggregates the same architecture (TFT-lite) outperformed Prophet and SARIMA (MAPE = 6.2%, RMSE = 0.032) and projected a ≥25% PDR tipping point in early 2025, with a continued rise through 2027.
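The calibration and clinical-utility quantities in the episode-level summary can be sketched as follows: the Brier score is the mean squared error of predicted probabilities, and decision-curve net benefit at threshold p_t is TP/n − (FP/n) · p_t/(1 − p_t). This is a minimal stdlib sketch, not the study's code; isotonic recalibration itself is available as `sklearn.isotonic.IsotonicRegression`.

```python
from typing import List


def brier_score(y_true: List[int], probs: List[float]) -> float:
    """Mean squared difference between predicted probability and observed 0/1 outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, probs)) / len(y_true)


def net_benefit(y_true: List[int], probs: List[float], threshold: float) -> float:
    """Decision-curve net benefit: TP/n - FP/n * pt / (1 - pt) at threshold pt."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 0)
    return tp / n - fp / n * threshold / (1 - threshold)
```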

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AMR | Antimicrobial resistance |
| AUPRC | Area under the precision–recall curve |
| AUROC | Area under the receiver-operating characteristic curve |
| CI | Confidence interval |
| CV | Cross-validation |
| DCA | Decision-curve analysis |
| EPV | Events per variable |
| ICU | Intensive care unit |
| JS | Jensen–Shannon distance |
| LSTM | Long short-term memory network |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error |
| PDR | Pan-drug-resistant |
| PSI | Population Stability Index |
| RMSE | Root mean square error |
| SARIMA | Seasonal autoregressive integrated moving average |
| SHAP | SHapley Additive exPlanations |
| TFT | Temporal Fusion Transformer |
| TFT-lite | Lightweight attention-based LSTM variant used in this study |

References

- World Health Organization (WHO). Global Antimicrobial Resistance and Use Surveillance System (GLASS) Report 2022; WHO: Geneva, Switzerland, 2022; Available online: https://www.who.int/publications/i/item/9789240062702 (accessed on 20 June 2025).

- Centers for Disease Control and Prevention (CDC). Antibiotic Resistance Threats in the United States, 2022; U.S. Department of Health and Human Services: Atlanta, GA, USA, 2022. Available online: https://www.cdc.gov/antimicrobial-resistance/media/pdfs/antimicrobial-resistance-threats-update-2022-508.pdf (accessed on 20 June 2025).

- European Centre for Disease Prevention and Control (ECDC). Antimicrobial Resistance Surveillance in Europe 2022–2023 Annual Report; ECDC: Stockholm, Sweden, 2025; Available online: https://www.ecdc.europa.eu/en/publications (accessed on 30 June 2025).

- Ayoub Moubareck, C.; Hammoudi Halat, D. Insights into Acinetobacter baumannii: A Review of Microbiological, Virulence, and Resistance Traits in a Threatening Nosocomial Pathogen. Antibiotics 2020, 9, 119.

- Magiorakos, A.P.; Srinivasan, A.; Carey, R.B.; Carmeli, Y.; Falagas, M.E.; Giske, C.G.; Harbarth, S.; Hindler, J.F.; Kahlmeter, G.; Olsson-Liljequist, B.; et al. Multidrug-Resistant, Extensively Drug-Resistant and Pandrug-Resistant Bacteria: An International Expert Proposal for Interim Standard Definitions for Acquired Resistance. Clin. Microbiol. Infect. 2012, 18, 268–281.

- Tamma, P.D.; Aitken, S.L.; Bonomo, R.A.; Mathers, A.J.; van Duin, D.; Clancy, C.J. Infectious Diseases Society of America Guidance on the Treatment of Extended-Spectrum β-Lactamase Producing Enterobacterales (ESBL-E), Carbapenem-Resistant Enterobacterales (CRE), and Pseudomonas aeruginosa with Difficult-to-Treat Resistance (DTR-P. aeruginosa). Clin. Infect. Dis. 2021, 72, e169–e183.

- Paul, M.; Daikos, G.L.; Durante-Mangoni, E.; Yahav, D.; Carmeli, Y.; Benattar, Y.D.; Skiada, A.; Andini, R.; Mantzarlis, K.; Dishon Benattar, Y.; et al. Colistin Alone versus Colistin Plus Meropenem for Treatment of Severe Infections Caused by Carbapenem-Resistant Gram-Negative Bacteria: An Open-Label, Randomised Controlled Trial. Lancet Infect. Dis. 2018, 18, 391–400.

- Falagas, M.E.; Tansarli, G.S.; Karageorgopoulos, D.E.; Vardakas, K.Z. Deaths Attributable to Carbapenem-Resistant Enterobacteriaceae Infections. Emerg. Infect. Dis. 2014, 20, 1170–1175.

- Kollef, M.H. Inadequate Antimicrobial Treatment: An Important Determinant of Outcome for Hospitalized Patients. Clin. Infect. Dis. 2000, 31, S131–S138.

- Bassetti, M.; Righi, E.; Vena, A.; Graziano, E.; Russo, A.; Peghin, M. Risk stratification and treatment of ICU-acquired pneumonia caused by multidrug-resistant/extensively drug-resistant/pandrug-resistant bacteria. Curr. Opin. Crit. Care 2018, 24, 385–393.

- Centers for Disease Control and Prevention (U.S.). Antimicrobial Stewardship Resources. National Healthcare Safety Network (NHSN); U.S. Department of Health and Human Services: Atlanta, GA, USA, 2025. Available online: https://www.cdc.gov/nhsn/ (accessed on 30 June 2025).

- Horcajada, J.P.; Montero, M.; Oliver, A.; Sorli, L.; Luque, S.; Gómez-Zorrilla, S.; Benito, N. Epidemiology and Treatment of Multidrug-Resistant and Extensively Drug-Resistant Pseudomonas aeruginosa Infections. Clin. Microbiol. Rev. 2019, 32, e00031-19.

- van Duin, D.; Paterson, D.L. Multidrug-Resistant Bacteria in the Community: Trends and Lessons Learned. Infect. Dis. Clin. N. Am. 2016, 30, 377–390.

- Tumbarello, M.; Viale, P.; Viscoli, C.; Trecarichi, E.M.; Tumietto, F.; Marchese, A.; Spanu, T.; Ambretti, S.; Ginocchio, F.; Cristini, F.; et al. Predictors of Mortality in Bloodstream Infections Caused by Klebsiella pneumoniae Carbapenemase–Producing K. pneumoniae: Importance of Combination Therapy. Clin. Infect. Dis. 2012, 55, 943–950.

- Hofer, U. The Cost of Antimicrobial Resistance. Nat. Rev. Microbiol. 2019, 17, 3.

- Hicks, L.A.; Bartoces, M.G.; Roberts, R.M.; Suda, K.J.; Hunkler, R.J.; Taylor, T.H.; Schrag, S.J. US Outpatient Antibiotic Prescribing Variation According to Geography, Patient Population, and Provider Specialty in 2011. Clin. Infect. Dis. 2015, 60, 1308–1316.

- Wang, Y.; Wang, G.; Zhao, Y.; Wang, C.; Chen, C.; Ding, Y.; Lin, J.; You, J.; Gao, S.; Pang, X. A deep learning model for predicting multidrug-resistant organism infection in critically ill patients. J. Intensive Care 2023, 11, 49.

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Huang, X.; Khetan, A.; Cvitkovic, M.; Karnin, Z. TabTransformer: Tabular Data Modeling Using Contextual Embeddings. arXiv 2020, arXiv:2012.06678.

- Lim, B.; Arık, S.Ö.; Loeff, N.; Pfister, T. Temporal Fusion Transformers for Interpretable Multi-Horizon Time Series Forecasting. Int. J. Forecast. 2021, 37, 1748–1764.

- Shateri, A.; Nourani, N.; Dorrigiv, M.; Nasiri, H. An Explainable Nature-Inspired Framework for Monkeypox Diagnosis: Xception Features Combined with NGBoost and African Vultures Optimization Algorithm. arXiv 2024, arXiv:2504.17540.

- Riley, R.D.; Snell, K.I.E.; Ensor, J.; Burke, D.L.; Harrell, F.E., Jr.; Moons, K.G.M.; Collins, G.S. Minimum sample size for developing a multivariable prediction model: Part II—Binary and time-to-event outcomes. Stat. Med. 2019, 38, 1276–1296.

- Luchian, N.; Olaru, I.; Pleșea-Condratovici, A.; Duceac (Covrig), M.; Mătăsaru, M.; Dabija, M.G.; Elkan, E.M.; Dabija, V.A.; Eva, L.; Duceac, L.D. Clinical and Epidemiological Aspects on Healthcare-Associated Infections with Acinetobacter spp. in a Neurosurgery Hospital in North-East Romania. Medicina 2025, 61, 990.

- Luchian, N.; Eva, L.; Dabija, M.G.; Druguș, D.; Duceac, M.; Mitrea, G.; Marcu, C.; Popescu, M.R.; Duceac, L.D. Health-Associated Infections in Hospital in the North-East Region of Romania—A Multidisciplinary Approach. Rom. J. Oral Rehab. 2023, 14, 219–229. Available online: https://rjor.ro/health-associated-infections-in-a-hospital-in-the-north-east-region-of-romania-a-multidisciplinary-approach/ (accessed on 10 July 2025).

- Duceac, L.-D.; Marcu, C.; Ichim, D.L.; Ciomaga, I.M.; Țarcă, E.; Iordache, A.C.; Ciuhodaru, M.I.; Florescu, L.; Tutunaru, D.; Luca, A.C.; et al. Antibiotic Molecules Involved in Increasing Microbial Resistance. Rev. Chim. 2019, 70, 2622–2626.

- Olaru, I.; Stefanache, A.; Gutu, C.; Lungu, I.I.; Mihai, C.; Grierosu, C.; Calin, G.; Marcu, C.; Ciuhodaru, T. Combating Bacterial Resistance by Polymers and Antibiotic Composites. Polymers 2024, 16, 3247.

- Vihta, K.-D.; Pritchard, E.; Pouwels, K.B.; Hopkins, S.; Guy, R.L.; Henderson, K.; Chudasama, D.; Hope, R.; Muller-Pebody, B.; Walker, A.S.; et al. Predicting Future Hospital Antimicrobial Resistance Prevalence Using Machine Learning. Commun. Med. 2024, 4, 197.

- Lewin-Epstein, O.; Baruch, S.; Hadany, L.; Stein, G.Y.; Obolski, U. Predicting Antibiotic Resistance in Hospitalized Patients by Applying Machine Learning to Electronic Medical Records. Clin. Infect. Dis. 2021, 72, e848–e855.

- Mintz, I.; Chowers, M.; Obolski, U. Prediction of Ciprofloxacin Resistance in Hospitalized Patients Using Machine Learning. Commun. Med. 2023, 3, 43.

- Laffont-Lozès, P.; Larcher, R.; Salipante, F.; Leguelinel-Blache, G.; Dunyach-Remy, C.; Lavigne, J.P.; Sotto, A.; Loubet, P. Usefulness of Dynamic Regression Time Series Models for Studying the Relationship between Antimicrobial Consumption and Bacterial Antimicrobial Resistance in Hospitals: A Systematic Review. Antimicrob. Resist. Infect. Control 2023, 12, 100, Erratum in Antimicrob. Resist. Infect. Control 2024, 13, 33. https://doi.org/10.1186/s13756-024-01387-4.

| Raw Group | Example Columns | Preprocessing Action |
|---|---|---|
| Administrative | Patient ID, File number | Removed (de-identification) |
| Demographics/Ward | Sex, Age, Ward | Encoded/one-hot |
| Infection Episode | Infection Type, Dates, LOS | Derived Time_to_Infection, imputed as needed |
| Pathogen Flags | A. calcoaceticus, A. lwoffi | Converted to binary |
| Pathogen Type | Identified_Pathogen | Retained for reference |
| Resistance Class | RC/MDR/ESBL | Parsed to three binary indicators |
| Antibiogram Lists | S/I/R for 25 drugs | Parsed to 25 binary flags + PDR |
| Free-Text Diagnoses | Admission/discharge notes | Removed |

| Stage | Rows | Columns |
|---|---|---|
| Raw merge (DB_concat1) | 270 | 13 |
| Final matrix (DB_model_ready) | 270 | 65 |

| Feature | Count | Mean | std | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|---|
| Age (years) | 270.00 | 55.37 | 18.03 | 2.00 | 46.00 | 57.50 | 68.75 | 93.00 |
| Time to Infection (days) | 270.00 | 15.10 | 11.93 | 0.00 | 8.00 | 11.00 | 18.00 | 91.00 |
| Length of Stay (days) | 270.00 | 16.93 | 13.96 | 0.00 | 8.00 | 12.00 | 20.00 | 91.00 |

| Feature | Median (Non-PDR) | Median (PDR) | U-Statistic | p-Value | q-Value |
|---|---|---|---|---|---|
| Age | 60.0 y | 56.0 y | 9140.5 | 0.329 | 0.568 |
| Time to Infection | 11.0 d | 11.0 d | 9080.5 | 0.379 | 0.568 |
| Length of Stay | 12.0 d | 12.0 d | 8630.5 | 0.878 | 0.878 |

| Feature | p-Value | q-Value |
|---|---|---|
| AB_4 | 8.72 × 10⁻²² | 3.05 × 10⁻²⁰ |
| AB_18 | 2.26 × 10⁻⁵ | 1.58 × 10⁻⁴ |
| AB_16 | 1.24 × 10⁻⁵ | 1.58 × 10⁻⁴ |
| AB_23 | 2.26 × 10⁻⁵ | 1.58 × 10⁻⁴ |
| AB_24 | 2.18 × 10⁻⁵ | 1.58 × 10⁻⁴ |
| AB_1 | 1.08 × 10⁻³ | 6.29 × 10⁻³ |
| AB_10 | 2.76 × 10⁻³ | 1.38 × 10⁻² |
| AB_17 | 5.81 × 10⁻³ | 2.54 × 10⁻² |
| AB_14 | 8.71 × 10⁻³ | 3.39 × 10⁻² |
| AB_7 | 9.84 × 10⁻³ | 3.44 × 10⁻² |
| AB_19 | 1.10 × 10⁻² | 3.50 × 10⁻² |
| IAAM_UNKNOWN | 1.53 × 10⁻² | 4.45 × 10⁻² |
| AB_21 | 1.07 × 10⁻¹ | 2.88 × 10⁻¹ |
| AB_22 | 1.61 × 10⁻¹ | 4.02 × 10⁻¹ |
| Ward_non-ICU | 3.32 × 10⁻¹ | 6.45 × 10⁻¹ |
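
The q-values for the binary features also follow the BH pattern, apparently over about 35 tests (for example, 8.72 × 10⁻²² × 35 ≈ 3.05 × 10⁻²⁰). The table does not say which test produced the raw p-values. For a binary flag such as AB_4 against the binary PDR label, a common choice is Fisher's exact test on the 2 × 2 contingency table; the sketch below assumes that choice purely for illustration. `fisher_exact_two_sided` is a hypothetical helper whose result can be cross-checked against `scipy.stats.fisher_exact`.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Illustrative layout: rows = feature flag 1/0, columns = PDR/non-PDR.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability that x of the col1 PDR episodes have flag = 1
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum all tables with the same margins that are no more probable than the observed one
    return sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))


# Classic "lady tasting tea" table
print(round(fisher_exact_two_sided(3, 1, 1, 3), 4))  # 0.4857
```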

| Feature | VIF |
|---|---|
| AB_9 | 1324.90 |
| AB_15 | 562.45 |
| AB_11 | 351.25 |
| AB_25 | 289.00 |
| AB_6 | 260.94 |
| AB_20 | 234.84 |
| AB_3 | 216.12 |
| AB_5 | 188.91 |
| AB_8 | 159.32 |
| AB_2 | 140.46 |
| AB_12 | 119.89 |
| AB_22 | 80.24 |
| AB_19 | 20.93 |
| AB_17 | 19.76 |
| AB_10 | 10.74 |
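
The variance inflation factor is VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing feature j on all other features (with an intercept). A VIF of 1324.90 for AB_9 therefore corresponds to R² ≈ 0.9992, meaning that flag is almost fully determined by the other antibiogram flags. The dependency-free sketch below reads VIFs off the diagonal of the inverse correlation matrix, an equivalent formulation; the helper names and toy columns are illustrative, and constant columns (zero variance) would need to be dropped first.

```python
from typing import List

Matrix = List[List[float]]


def correlation_matrix(cols: Matrix) -> Matrix:
    """Pearson correlation matrix of equally long columns."""
    n = len(cols[0])
    centered = []
    for col in cols:
        mean = sum(col) / n
        centered.append([v - mean for v in col])
    norms = [sum(v * v for v in c) ** 0.5 for c in centered]
    k = len(cols)
    return [[sum(x * y for x, y in zip(centered[i], centered[j])) / (norms[i] * norms[j])
             for j in range(k)] for i in range(k)]


def invert(mat: Matrix) -> Matrix:
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    k = len(mat)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(k)] for i, row in enumerate(mat)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [v / pv for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[k:] for row in aug]


def vif(cols: Matrix) -> List[float]:
    """VIF_j = 1 / (1 - R_j^2), read off the diagonal of the inverse correlation matrix."""
    inv = invert(correlation_matrix(cols))
    return [inv[j][j] for j in range(len(cols))]


x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 4, 6, 8, 10, 13]   # almost exactly 2 * x1
x3 = [1, -1, 1, -1, 1, -1]  # only weakly related to the others
print([round(v, 2) for v in vif([x1, x2, x3])])
```

Here the near-duplicate pair x1/x2 receives VIFs well above 100, while x3 stays at 1.25, mirroring the split between the redundant and the more informative flags in the table.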

| Task | Library (Version) | Notes |
|---|---|---|
| Tabular deep learning | PyTorch 2.1.0, tab-transformer-pytorch 1.5.9 | TabTransformer classifier |
| Gradient boosting | XGBoost 2.0.3 | Tree-based GBM baseline |
| Classical ML/preprocessing | scikit-learn 1.4.2 | Imputation, one-hot encoding, stratified splitting, AUROC, Brier score |
| Time-series forecasting | TensorFlow 2.15.0, Keras 2.15.0 | LSTM and quantile–LSTM models |
| Statistical tests and plots | statsmodels 0.14.1, SciPy 1.12.0, Matplotlib 3.8.3 | DeLong test, decision curves, figures |
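
The library table lists scikit-learn for AUROC and the Brier score. As a reading aid, both metrics can be written out directly: AUROC is the probability that a randomly chosen PDR episode receives a higher score than a randomly chosen non-PDR episode, and the Brier score is the mean squared error of the predicted probabilities. This pure-Python sketch is not the authors' code; on the same inputs it should agree with `sklearn.metrics.roc_auc_score` and `sklearn.metrics.brier_score_loss`.

```python
from typing import Sequence


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(random positive outscores random negative); ties count as 1/2."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def brier(y_true: Sequence[int], probs: Sequence[float]) -> float:
    """Mean squared difference between predicted probability and 0/1 outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, probs)) / len(y_true)


y = [0, 0, 1, 1]
p = [0.1, 0.4, 0.35, 0.8]
print(auroc(y, p), round(brier(y, p), 6))  # 0.75 0.158125
```

The pairwise loop is quadratic in the number of episodes, which is negligible at this study's sample size.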

| Model | AUROC (CV) | AUPRC (CV) | F1-Score (CV) | AUROC (Test) | AUPRC (Test) | Accuracy (Test) | F1-Score (Test) | Recall at 90% Specificity (Test) |
|---|---|---|---|---|---|---|---|---|
| TabTransformer | 0.926 ± 0.054 | 0.968 ± 0.026 | 0.928 ± 0.019 | 0.671 | 0.564 | 0.559 | 0.631 | 0.200 |
| XGBoost | 0.947 ± 0.041 | 0.984 ± 0.012 | 0.935 ± 0.025 | 0.620 | 0.512 | 0.559 | 0.631 | 0.143 |
| Ensemble (Soft) | 0.958 ± 0.021 | 0.986 ± 0.007 | 0.947 ± 0.011 | 0.620 | 0.512 | 0.559 | 0.631 | 0.143 |
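
Two quantities in the performance table can be made concrete. A soft-voting ensemble averages the per-model PDR probabilities, and recall at 90% specificity is the highest sensitivity achievable by any threshold that still keeps specificity at or above 0.90. The sketch below assumes equal ensemble weights and the threshold rule "predict PDR if score ≥ t"; neither detail is stated in the table, and the helper names are hypothetical.

```python
from typing import List, Optional, Sequence


def soft_vote(prob_lists: Sequence[Sequence[float]],
              weights: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted average of per-model positive-class probabilities (equal weights by default)."""
    k = len(prob_lists)
    w = list(weights) if weights is not None else [1.0 / k] * k
    return [sum(wi * probs[i] for wi, probs in zip(w, prob_lists))
            for i in range(len(prob_lists[0]))]


def recall_at_specificity(y_true: Sequence[int], scores: Sequence[float],
                          min_spec: float = 0.90) -> float:
    """Best sensitivity over thresholds t (predict PDR if score >= t) with specificity >= min_spec."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best = 0.0
    for t in sorted(set(scores)) + [float("inf")]:
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        tn = sum(1 for y, s in zip(y_true, scores) if y == 0 and s < t)
        if tn / n_neg >= min_spec:
            best = max(best, tp / n_pos)
    return best


# Toy example: 10 non-PDR and 5 PDR episodes
y = [0] * 10 + [1] * 5
neg = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.90]
pos = [0.50, 0.60, 0.95, 0.30, 0.20]
print(recall_at_specificity(y, neg + pos))  # 0.6
ensemble = soft_vote([[0.2, 0.8], [0.4, 0.6]])  # two models, two episodes
```

With only a handful of negatives in a small test split, each non-PDR episode moves specificity in large steps, so this metric can change abruptly between models.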

| Rank | Feature | PSI |
|---|---|---|
| – | Year † | 12.46 |
| 1 | is_A_lwoffi | 1.92 |
| 2 | is_A_calcoaceticus | 1.62 |
| 3 | Age | 0.37 |
| 4 | Length of Stay | 0.19 |
| 5 | Time to Infection | 0.15 |
| 6 | AB_21 | 0.10 |
| 7 | AB_13 | 0.10 |
| 8 | AB_23 | 0.07 |
| 9 | AB_14 | 0.07 |
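
The population stability index (PSI) compares a feature's distribution in a reference sample with its distribution in a later sample: PSI = Σ (a_b − e_b) · ln(a_b / e_b), summed over bins b, where e_b and a_b are the bin shares in the reference and comparison samples. The sketch below assumes categorical bins for binary flags and reference-quantile bins for continuous features such as Age; the flooring constant `eps` and the helper names are illustrative choices, not details taken from the study.

```python
import bisect
import math
from collections import Counter
from typing import Hashable, List, Sequence


def psi(expected: Sequence[Hashable], actual: Sequence[Hashable], eps: float = 1e-4) -> float:
    """Population stability index between two categorical (or pre-binned) samples.

    Shares are floored at eps so that empty bins do not produce log(0).
    """
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for cat in set(e_counts) | set(a_counts):
        e = max(e_counts[cat] / len(expected), eps)
        a = max(a_counts[cat] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


def quantile_bins(reference: Sequence[float], values: Sequence[float], n_bins: int = 10) -> List[int]:
    """Assign continuous values to bins cut at reference-sample quantiles."""
    ref = sorted(reference)
    edges = sorted({ref[len(ref) * k // n_bins] for k in range(1, n_bins)})
    return [bisect.bisect_right(edges, v) for v in values]


# Binary flag whose prevalence moves from 20% to 50% between periods
before = [0] * 80 + [1] * 20
after = [0] * 50 + [1] * 50
print(round(psi(before, after), 3))  # 0.416
```

For a continuous feature, both samples would first be passed through `quantile_bins(reference, ...)` with the same reference sample, and the resulting bin indices compared with `psi`.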

| Quantity | Value |
|---|---|
| Episodes (n) | 177 |
| PDR events (n) | 134 |
| Non-events (n) | 43 |
| Candidate predictors (p) | 65 |
| Events per variable (EPV) | 2.1 |
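
The EPV entry follows from the other rows: 134 PDR events / 65 candidate predictors ≈ 2.06, reported as 2.1. A widely used convention instead counts the less frequent outcome class as the events, which for these counts gives 43 / 65 ≈ 0.66. The hypothetical helper below computes both so the two conventions can be compared side by side.

```python
def events_per_variable(n_events: int, n_nonevents: int, n_predictors: int,
                        minority_class: bool = False) -> float:
    """EPV = outcome events / candidate predictors.

    minority_class=True counts the less frequent outcome class as the events.
    """
    events = min(n_events, n_nonevents) if minority_class else n_events
    return events / n_predictors


print(round(events_per_variable(134, 43, 65), 1))                       # 2.1
print(round(events_per_variable(134, 43, 65, minority_class=True), 1))  # 0.7
```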

| Quarter | Forecasted Prevalence (Prophet) |
|---|---|
| 2025 Q1 | 0.314 |
| 2025 Q2 | 0.286 |
| 2025 Q3 | 0.221 |
| 2025 Q4 | 0.171 |
| 2026 Q1 | 0.156 |
| 2026 Q2 | 0.119 |
| 2026 Q3 | 0.067 |

| Quarter | Forecasted Prevalence (SARIMA) |
|---|---|
| 2025 Q1 | 0.277 |
| 2025 Q2 | 0.289 |
| 2025 Q3 | 0.248 |
| 2025 Q4 | 0.242 |
| 2026 Q1 | 0.247 |
| 2026 Q2 | 0.225 |
| 2026 Q3 | 0.222 |
| 2026 Q4 | 0.207 |
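
The forecasts above operate on a quarterly PDR prevalence series, and the LSTM model uses a seasonal encoding. A minimal sketch of both inputs follows, under two assumptions not stated in the tables: quarterly prevalence is taken as the share of confirmed episodes labeled PDR in each calendar quarter, and seasonality is encoded as the sine and cosine of the quarter's position on the yearly cycle. The function names and toy episodes are hypothetical.

```python
import math
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple

Quarter = Tuple[int, int]  # (year, quarter 1-4)


def quarterly_prevalence(episodes: Iterable[Tuple[date, bool]]) -> Dict[Quarter, float]:
    """Share of episodes flagged PDR in each calendar quarter (hypothetical definition)."""
    counts: Dict[Quarter, List[int]] = defaultdict(lambda: [0, 0])  # [pdr, total]
    for infection_date, is_pdr in episodes:
        key = (infection_date.year, (infection_date.month - 1) // 3 + 1)
        counts[key][0] += int(is_pdr)
        counts[key][1] += 1
    return {q: pdr / total for q, (pdr, total) in sorted(counts.items())}


def seasonal_encoding(quarter: int) -> Tuple[float, float]:
    """Map quarter 1-4 onto the unit circle so that Q4 and Q1 end up adjacent."""
    angle = 2 * math.pi * (quarter - 1) / 4
    return math.sin(angle), math.cos(angle)


episodes = [
    (date(2024, 1, 5), True),
    (date(2024, 2, 1), False),
    (date(2024, 4, 2), True),
]
series = quarterly_prevalence(episodes)
print(series)  # {(2024, 1): 0.5, (2024, 2): 1.0}
print([seasonal_encoding(q) for q in (1, 2, 3, 4)])
```

The sine/cosine pair avoids treating Q4 and Q1 as far apart, which a plain quarter index (4 versus 1) would imply.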

| Model | Forecasted Trend (2025–2027) | First 25% PDR Quarter | MAPE (%) | RMSE |
|---|---|---|---|---|
| Prophet | Decreasing | 2025 Q1 | 12.8 * | 0.0447 * |
| SARIMA | Stable high | 2025 Q2 | 9.2 * | 0.0395 * |
| LSTM + Attention | Increasing | 2025 Q1 | 6.2 | 0.0318 |
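
MAPE and RMSE in this comparison are standard forecast-error measures: MAPE averages |actual − forecast| / |actual| and is expressed in percent, while RMSE is the square root of the mean squared error in prevalence units. The sketch below writes them out for illustration; the holdout window behind the reported values is not restated here, and the example numbers are made up.

```python
import math
from typing import Sequence


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; undefined if any actual value is 0."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an observed value is zero")
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Root mean squared error, in the units of the series (here: prevalence)."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


observed = [0.20, 0.25]
predicted = [0.22, 0.25]
print(round(mape(observed, predicted), 2), round(rmse(observed, predicted), 4))  # 5.0 0.0141
```

Because prevalence values are small fractions, a given absolute error inflates MAPE more in low-prevalence quarters, which is one reason to report RMSE alongside it.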

| Study/Model | Prediction Focus | AUROC/Accuracy/MAPE | Forecasting | Key Strengths |
|---|---|---|---|---|
| Vihta et al. (2024) [27] | Hospital-level AMR prevalence across multiple UK Trusts | MAE comparable (~XGBoost ≈ ?) | Yes | Leverages historical resistance and antibiotic use with XGBoost + SHAP |
| Lewin-Epstein et al. (2020) [28] | General antibiotic resistance (multiple drugs) | AUROC ≈ 0.85 (range across antibiotics) | No | ML on EMR data; species + prior resistance history |
| Mintz et al. (2023) [29] | Ciprofloxacin resistance in hospitalized patients | AUROC = 0.84–0.84 | No | GBDT + interpretable (Shapley) on clinical + microbiologic features |
| Laffont-Lozès et al. (2023) [30] | Hospital-level AMR forecasting (E. coli vs. AMC) | MAPE ≈ 10–15% | Yes (dynamic regression) | Ties antibiotic use to resistance trends |
| This Study (TabTransformer) | PDR Acinetobacter, episode-level | AUROC = 0.88 (test) | No | High-cardinality feature embeddings + SHAP interpretability |
| This Study (Prophet) | Hospital-level PDR prevalence | MAPE = 12.8% | Yes | Transparent forecasting with seasonality and CI |
| This Study (SARIMA) | Hospital-level PDR prevalence | MAPE = 9.2% | Yes | Captures baseline prevalence inertia |
| This Study (LSTM + Attention) | Hospital-level PDR prevalence | MAPE = 6.2% | Yes | Non-linear attention model with noise regularization |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luchian, N.; Salim, C.; Condratovici, A.P.; Marcu, C.; Buzea, C.G.; Matei, M.N.; Dinu, C.A.; Duceac, M.; Elkan, E.M.; Rusu, D.I.; et al. Episode- and Hospital-Level Modeling of Pan-Resistant Healthcare-Associated Infections (2020–2024) Using TabTransformer and Attention-Based LSTM Forecasting. Diagnostics 2025, 15, 2138. https://doi.org/10.3390/diagnostics15172138

Luchian N, Salim C, Condratovici AP, Marcu C, Buzea CG, Matei MN, Dinu CA, Duceac M, Elkan EM, Rusu DI, et al. Episode- and Hospital-Level Modeling of Pan-Resistant Healthcare-Associated Infections (2020–2024) Using TabTransformer and Attention-Based LSTM Forecasting. Diagnostics. 2025; 15(17):2138. https://doi.org/10.3390/diagnostics15172138

Chicago/Turabian Style: Luchian, Nicoleta, Camer Salim, Alina Plesea Condratovici, Constantin Marcu, Călin Gheorghe Buzea, Mădalina Nicoleta Matei, Ciprian Adrian Dinu, Mădălina Duceac (Covrig), Eva Maria Elkan, Dragoș Ioan Rusu, et al. 2025. "Episode- and Hospital-Level Modeling of Pan-Resistant Healthcare-Associated Infections (2020–2024) Using TabTransformer and Attention-Based LSTM Forecasting." Diagnostics 15, no. 17: 2138. https://doi.org/10.3390/diagnostics15172138

APA Style: Luchian, N., Salim, C., Condratovici, A. P., Marcu, C., Buzea, C. G., Matei, M. N., Dinu, C. A., Duceac, M., Elkan, E. M., Rusu, D. I., Ochiuz, L., & Duceac, L. D. (2025). Episode- and Hospital-Level Modeling of Pan-Resistant Healthcare-Associated Infections (2020–2024) Using TabTransformer and Attention-Based LSTM Forecasting. Diagnostics, 15(17), 2138. https://doi.org/10.3390/diagnostics15172138