1. Introduction

In recent years, artificial intelligence (AI) has become increasingly embedded in everyday life and has assumed a central role in both scientific research and real-world applications. In medicine, AI-based technologies are being integrated into clinical workflows with the aim of improving diagnostic accuracy, optimizing treatment planning, and enhancing the efficiency of healthcare delivery [

1].

Radiology, in particular, has emerged as a leading domain for AI implementation due to its reliance on high-volume data interpretation [

2,

3]. AI algorithms, trained on large-scale datasets, are now capable of detecting pathological findings in medical images, recognizing rare conditions, and offering evidence-based treatment suggestions in real time [

4,

5,

6]. The potential of AI to reduce diagnostic errors—estimated to affect 5–15% of all medical decisions—and to increase clinical throughput underscores its transformative potential across both inpatient and outpatient settings [

7,

8].

Among clinical environments, emergency departments (EDs) are especially well-suited for AI-supported systems due to their high patient turnover, limited availability of specialists, and demand for rapid and accurate decision-making [

9]. One key area of vulnerability is fracture detection on conventional radiographs, where diagnostic oversight remains a significant concern. Studies report miss rates ranging from 2% to 9%, highlighting the clinical importance of improving detection accuracy [

7].

In response to these challenges, several AI-powered diagnostic tools have received regulatory approval for acute fracture detection in recent years, including the Aidoc Fracture Detection Module (Tel Aviv, Israel) and BoneView by Gleamer (Saint-Mandé, France). These tools, designed as clinical decision support systems (CDSS), employ convolutional neural network (CNN) architectures to autonomously analyze radiographic images and flag critical findings—such as vertebral fractures, pulmonary embolisms, or intracranial hemorrhages—in real time [

10]. Despite regulatory clearance and promising performance metrics, the widespread adoption of such AI tools remains limited. Reported barriers include challenges with system integration into hospital infrastructure, non-intuitive user interfaces, insufficient training, and concerns about automation bias or overreliance on AI outputs [

11,

12].

In addition to technical and infrastructural challenges, clinician acceptance has emerged as a pivotal determinant for the successful implementation of AI-based tools in clinical settings. Factors such as perceived reliability, clinical utility, and compatibility with existing workflows significantly influence adoption decisions [

13]. Within the European Union, the significance of these human factors is further emphasized by the AI Act and Medical Device Regulation (MDR), which mandate transparency, user training, and accountability as prerequisites for safe and ethical AI deployment in healthcare [

2,

14].

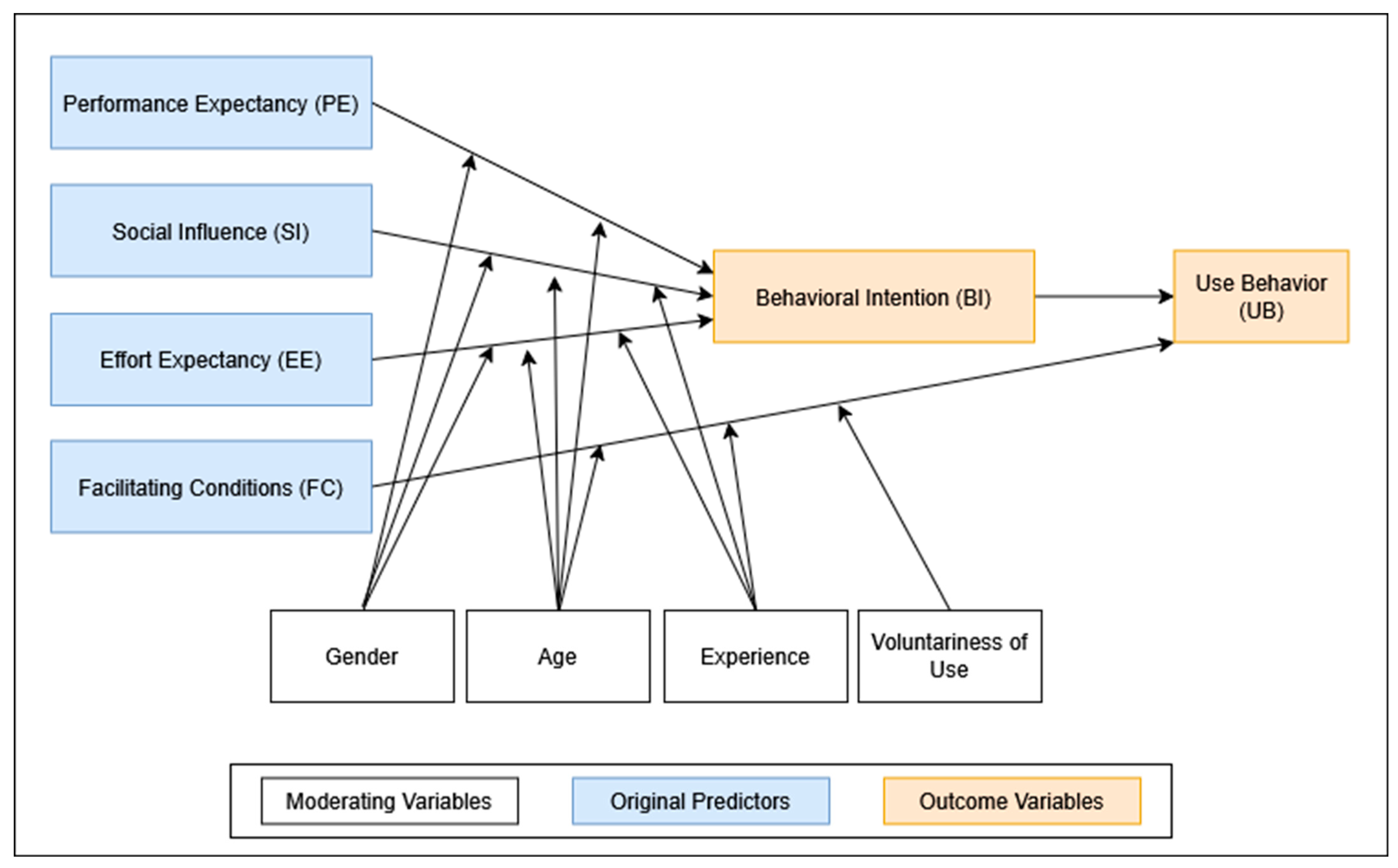

To systematically examine and predict technology adoption behavior, several theoretical frameworks have been developed. Among the most influential is the Unified Theory of Acceptance and Use of Technology (UTAUT), introduced by Venkatesh et al. in 2003 [

15]. Synthesizing eight prior models, UTAUT identifies four key predictors of behavioral intention to use technology: performance expectancy (PE), effort expectancy (EE), social influence (SI), and facilitating conditions (FC). These predictors are further moderated by demographic and experiential variables such as age, gender, professional experience, and voluntariness of use. The UTAUT framework has been extensively validated in healthcare research, including studies on clinician and patient adoption of digital innovations [

16,

17,

18]. Applications range from telemedicine platforms [

19] to digital learning environments [

20]. UTAUT’s adaptability has also allowed for extensions and modifications, such as age-specific analyses by Palas et al. [

21], and patient-focused perspectives explored by Zhang et al. [

22].

Despite the recognized promise of AI in radiologic diagnostics, particularly for fracture detection, empirical studies exploring clinician acceptance prior to clinical implementation remain scarce. Existing research often suffers from small sample sizes, technological heterogeneity, and insufficient theoretical grounding. Moreover, little is known about how acceptance varies by clinical specialty, years of experience, or previous exposure to AI technologies [

23,

24,

25]. Understanding these variables—and how they influence behavioral intention (BI) and subsequent use behavior (UB)—is critical for designing targeted implementation strategies and achieving successful integration into clinical practice.

To address these gaps, the present study conducts a pre-implementation assessment of physicians’ acceptance of AI-assisted fracture detection as part of the SMART Fracture Trial, a multicenter randomized controlled trial conducted in emergency care settings (ClinicalTrials.gov Identifier: NCT06754137). As one of the first theory-driven investigations in this area, the study aims to generate actionable insights to inform clinician training, facilitate effective implementation, and support the broader integration of AI tools into routine clinical workflows.

2. Materials and Methods

2.1. Study Design and Setting

This multicenter, cross-sectional survey represents the baseline (pre-implementation) assessment of physician attitudes within the SMART Fracture Trial—a prospective, randomized controlled study examining the influence of AI-assisted fracture-detection software on emergency department workflows. Data were collected from three sites: the Departments of Orthopedics and Traumatology, Pediatric Surgery, and Radiology at the University Hospital Salzburg (Austria); the corresponding departments at the University Hospital Nuremberg (Germany); and the Department of Orthopedics and Traumatology at the Regional Hospital Hallein (Austria). The two university hospitals are Level I trauma centers, each managing approximately 180,000 emergency presentations and more than 120,000 radiographic examinations annually, while Hallein functions as a regional acute-care hospital with approximately 50,000 emergency presentations and over 30,000 radiographic examinations per year. As these three sites are located within one Alpine healthcare region, the findings reflect a geographically confined physician cohort.

2.2. Survey Design and Conceptual Framework

The survey instrument was based on the Unified Theory of Acceptance and Use of Technology (UTAUT), proposed by Venkatesh et al. (2003) [

15] (see

Figure 1). The survey was administered to physicians participating in the SMART Fracture Trial (

n = 92) and included 21 items covering the five canonical UTAUT constructs: Performance Expectancy (PE), Effort Expectancy (EE), Social Influence (SI), Facilitating Conditions (FC), and Behavioral Intention (BI). Responses were recorded on a five-point Likert scale (1 = “strongly disagree”; 5 = “strongly agree”).

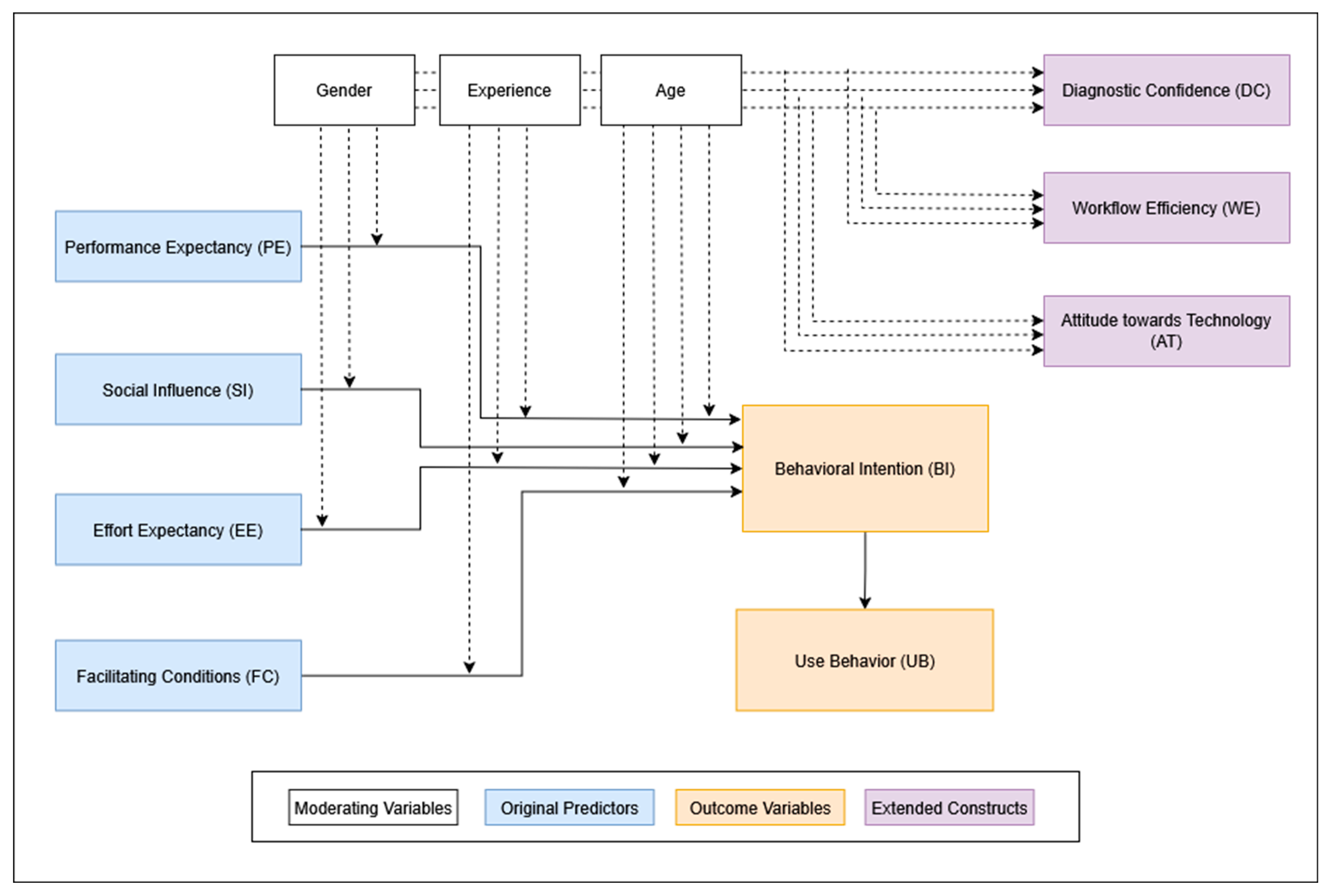

In line with the original UTAUT framework, these five constructs were modeled as predictors of BI, which was assumed to influence actual Use Behavior. To adapt the UTAUT model for the clinical context of AI-assisted fracture detection, we added three additional constructs: Attitude toward Technology (AT), Workflow Efficiency (WE), and Diagnostic Confidence (DC) (see

Figure 2). These were chosen to capture clinically relevant dimensions such as openness to innovation, perceived impact on daily workflow, and confidence in diagnostic support. To keep the statistical model predicting BI reasonably simple, AT, WE, and DC were not directly included as predictors but were analyzed in relation to various moderating variables.

The questionnaire also captured demographic data including age and gender, as well as clinical specialty, professional grade, years of postgraduate experience, and prior experience with AI in general and AI-based fracture detection in particular. These variables are well-established moderators within the UTAUT framework. Building on this foundation, we incorporated additional constructs relevant to AI in clinical settings, as recommended by Venkatesh, who emphasizes the importance of adapting UTAUT to the contextual, technological, and psychological characteristics of the target application [

26].

The construct Voluntariness of Use was deliberately excluded. As the survey was conducted before the AI tool was implemented, no usage policy had been established, and participants evaluated the tool hypothetically. Moreover, empirical evidence suggests that voluntariness has limited predictive value in protocol-driven clinical environments, such as hospitals, where usage decisions are often determined by institutional policies rather than personal discretion [

27].

The original English items were translated into German using OpenAI’s large language model (ChatGPT) and subsequently reviewed by a language editor with statistical expertise to ensure semantic and conceptual equivalence. Face validity was established through pilot testing with five physicians not involved in the main study, resulting in only minor wording adjustments. The final German questionnaire is included in the project files.

2.3. Participant Recruitment and Data Handling

All licensed physicians actively involved in fracture diagnosis or management within the participating departments during the SMART Trial recruitment period were eligible to participate. Exclusion criteria included medical students, visiting observers, and physicians not directly involved in patient care. From 1 March to 1 June 2025, each eligible clinician was invited to complete the survey. The invitation outlined study objectives and instructions. Respondents were asked to generate a four-character linkage code to enable anonymous pairing with the post-trial survey. Non-responders received reminders on days 14 and 28. Participation was voluntary, and no incentives were offered.

Paper-based questionnaires were double-entered by trained research staff. All records were anonymized and stored on EU-based servers in full compliance with the General Data Protection Regulation (GDPR). Only de-identified data were used for analysis.

2.4. Outcomes

The primary outcome was physicians’ Behavioral Intention (BI) to use AI-assisted fracture-detection software. We examined the extent to which BI could be predicted by the four UTAUT constructs—PE, EE, SI, and FC—and report the percentage of variance in BI explained by these predictors.

As a secondary aim, we evaluated whether the effects of PE, EE, SI, and FC on BI varied across four background variables: clinical specialty (radiology vs. surgical specialties), professional experience (<7 years vs. ≥7 years), prior experience with AI in general, and prior experience with AI-assisted fracture detection. If these variables significantly influenced the strength of the relationships, they were considered important moderators.

2.5. Statistical Analysis

The final analytic dataset included 92 physicians—sufficient for structural modeling under the guideline of approximately 10 observations per estimated parameter [

28]. To ensure model stability, we trimmed the structural equation model (SEM) and treated subsequent results as exploratory.

All analyses were conducted in R version 4.4.1 using the

lavaan,

semTools,

psych,

likert, and

ggplot2 packages. The analytic workflow (see

Figure 1) followed five sequential steps:

Descriptive summaries and scale reliability (Cronbach’s α; Spearman–Brown for two-item scales);

Confirmatory factor analysis (CFA) using robust diagonally weighted least squares (DWLS; ordered = TRUE, std.lv = TRUE), appropriate for ordinal data and small samples (<100 cases) [

29];

Trimmed SEM: BI_mean ≈ PE + EE + FC + SI;

Ordinary least squares regression analyses testing three binary moderators (sex, >6 years of experience, prior AI-fracture exposure);

Simplification strategies included the following: (i) no moderators within the SEM, (ii) dichotomization of moderator variables, and (iii) replacing the latent BI factor with a composite score (BI_mean), calculated as the arithmetic mean of its two items. Each latent construct comprised only two or three indicators to maintain a favorable parameter-to-sample ratio.

Descriptive and subgroup analyses followed standard procedures. Pairwise deletion was used for <1% missing values, and listwise deletion removed two incomplete responses from model estimation. Measurement quality was assessed via Cronbach’s α (target ≥ 0.70) and CFA fit indices (CFI ≥ 0.95, TLI ≥ 0.95, RMSEA ≤ 0.06, SRMR ≤ 0.08).

All statistical tests were two-sided, with significance set at p < 0.05. Factor loadings were classified as strong (≥0.70), moderate (≥0.50), or weak (<0.50).

2.6. Ethical Considerations

The SMART Trial was approved by the Ethics Committee of the Federal State of Salzburg (EK No. 1135/2024) on 8 January 2025. Participation in the pre-trial survey was voluntary; completion and return of the questionnaire were taken as informed consent. No identifiable personal data were collected, and the study conformed to the principles of the Declaration of Helsinki (2013 revision).

2.7. Use of Generative AI

OpenAI’s ChatGPT-4o (San Francisco, CA, USA), DeepSeek (Shanghai, China), and Grammarly AI (San Francisco, CA, USA) were used to translate and adapt the UTAUT questionnaire into German and to assist with grammar and style editing of the manuscript. These tools were not involved in study design, data collection, statistical analysis, or interpretation.

2.8. Transparency, Reproducibility, and Data Availability

All anonymized data, the German and English survey instruments, and the full R analysis script will be made available upon reasonable request, in line with MDPI’s open data policy.

3. Results

3.1. Response Rate and Participant Characteristics

A total of 92 physicians completed the baseline questionnaire, yielding a response rate of 83.8% among those invited during the SMART Trial pre-implementation phase. The mean age of respondents was 41.1 years (SD = 11.3), with a median age of 38.0 years.

Of the respondents, 58 (63.0%) identified as male and 34 (37.0%) as female. Clinical specialties were distributed as follows: 27 (29.3%) in Pediatric Surgery, 51 (55.4%) in Orthopedics or Trauma Surgery, 12 (13.0%) in Radiology, and 2 (2.2%) in other disciplines. Regarding professional roles, 44 (47.8%) were board-certified consultants, 41 (44.6%) were residents or physicians in training, and 4 (4.3%) worked as hospital-based general practitioners. As males and surgical specialists predominated (63% and 85%, respectively), the dataset is demographically unbalanced.

In terms of clinical experience, 43 (46.7%) reported more than 10 years of postgraduate experience, 7 (7.6%) had 7–10 years, 33 (35.9%) had 1–6 years, and 9 (9.8%) had less than 1 year. Regarding prior exposure to artificial intelligence in clinical practice, 27 (29.3%) reported no experience, 44 (47.8%) limited exposure, 13 (14.1%) moderate exposure, and 8 (8.7%) extensive exposure. More specifically, 51 physicians (55.4%) had no prior experience with AI-based fracture detection software such as Aidoc’s CE-marked fracture detection module (Version 1.x), while 27 (29.3%) reported limited, 10 (10.9%) moderate, and 4 (4.3%) extensive experience (see

Table 1).

3.2. Construct Scores and Internal Consistency

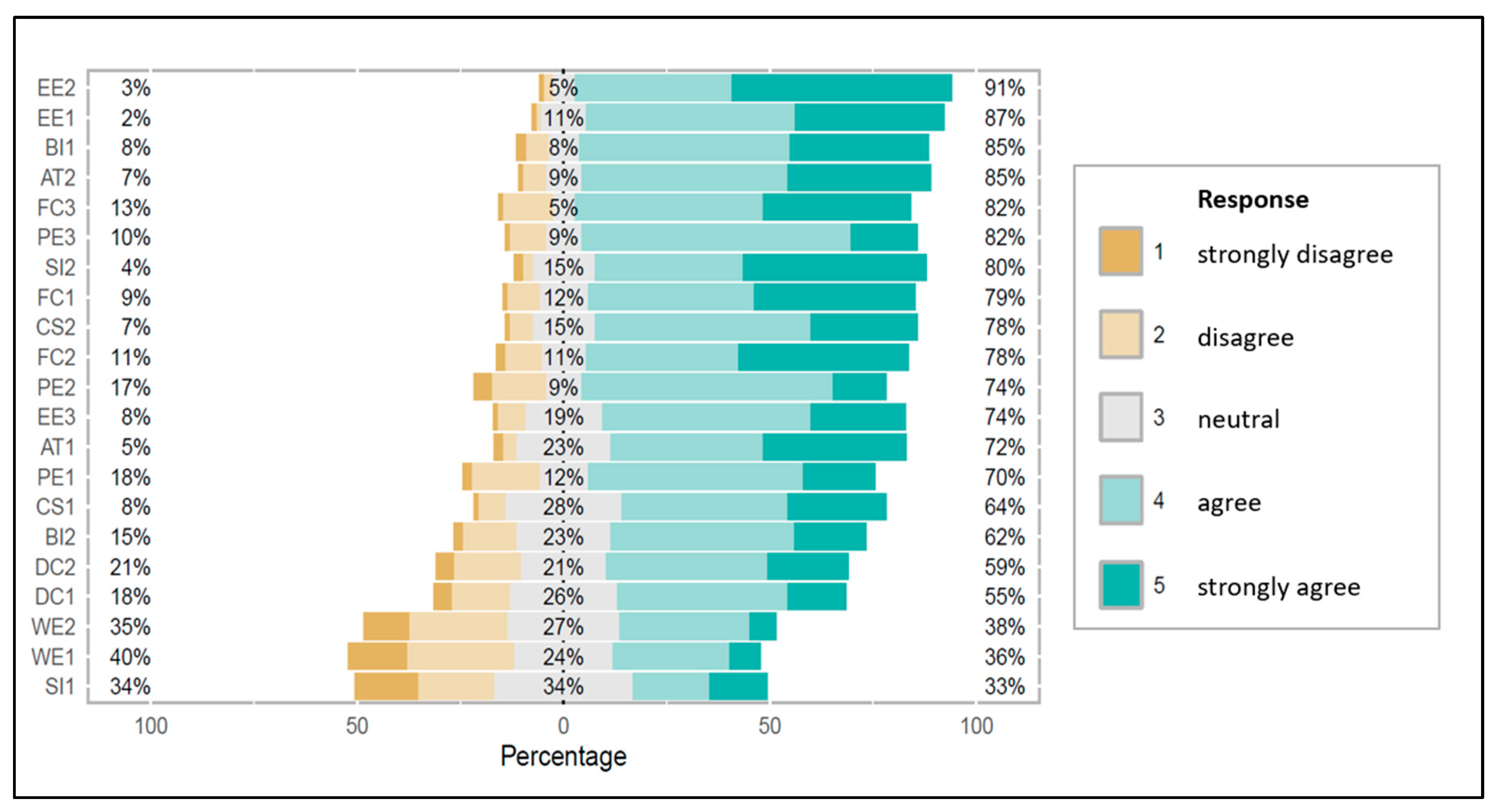

All 21 UTAUT items were assessed using five-point Likert scales (see

Figure 3). Mean scores ranged from 2.94 (WE) to 4.16 (EE), with standard deviations between 0.63 and 1.06, reflecting moderate variability in response patterns. The constructs with the highest mean agreement were EE (mean = 4.16, SD = 0.63), AT (mean = 4.05, SD = 0.80), and PE (mean = 3.71, SD = 0.83). The lowest agreement was observed for WE (mean = 2.94, SD = 1.05), indicating greater skepticism about the software’s potential impact on throughput and time savings (see

Table 2).

Internal consistency was assessed using Cronbach’s α for each of the nine latent constructs. The highest reliability was observed for CS (α = 0.854) and WE (α = 0.780), both interpreted as good. PE (α = 0.794) and DC (α = 0.783) also demonstrated good reliability. EE (α = 0.686), FC (α = 0.654), and AT (α = 0.695) showed borderline acceptable reliability, suggesting moderate item consistency with potential for refinement in future studies.

In contrast, the two-item scales SI (α = 0.537) and BI (α = 0.560) fell below the conventional acceptability threshold, indicating poor internal consistency. This is not uncommon for two-item scales; notably, all CFA loadings exceeded 0.62 and overall model fit remained acceptable, indicating that content validity was not compromised (see

Section 3.3).

3.3. Confirmatory Factor Analysis

A confirmatory factor analysis (CFA) was conducted to evaluate the construct validity of the variables derived from the UTAUT model (see

Table 3).

Three of the four fit indices—CFI (0.993), TLI (0.990), and SRMR (0.080)—met or were very close to commonly accepted thresholds for good model fit. The RMSEA (0.068) slightly exceeded the recommended threshold (≤0.06), indicating a marginal degree of model misfit.

All latent constructs showed statistically significant factor loadings (p < 0.001), indicating strong relationships between observed indicators and their respective latent dimensions. Overall, the hypothesized factor structure provided a reasonably good representation of the observed data, supporting its use for further model-based analyses, albeit with caution regarding residual error.

3.4. Structural Equation Modeling

To test the core assumptions of the UTAUT model, an SEM was specified with PE, EE, FC, and SI modeled as direct predictors of Behavioral Intention (BI). Due to convergence issues related to sample size and weak internal consistency of some constructs, BI could not be estimated as a latent variable. Instead, the mean of its two items (BI_mean) was used as a single observed outcome. This allowed estimation of a simplified SEM using the DWLS estimator.

All four canonical UTAUT predictors were statistically significant predictors of BI_mean. PE was the strongest predictor (β = 0.588,

p < 0.001), followed by SI (β = 0.391,

p < 0.001), FC (β = 0.263,

p < 0.001), and EE (β = 0.202,

p = 0.001) (

Table 4).

These results provide strong support for the UTAUT model in explaining physicians’ intention to use AI-assisted fracture detection tools in a clinical setting.

3.5. Moderator Effects

To explore whether physician characteristics influence the latent UTAUT constructs and the outcome variable (BI_mean), moderator analyses were performed using separate regression models using three binary moderators: gender, clinical experience (≤6 vs. >6 years), and prior experience with AI-based fracture detection. The results are presented below for each moderator.

3.5.1. Gender

None of the gender-based effects reached statistical significance at

p < 0.05, although associations with Clinical Satisfaction (

p = 0.075) and Diagnostic Confidence (

p = 0.109) approached significance (

Appendix A Table A1). This suggests a possible trend toward higher satisfaction and confidence among male physicians, though the effects were not robust. Gender had no effect on BI_mean.

3.5.2. Years of Experience

Physicians with more than six years of experience reported significantly higher perceived facilitating conditions (

p = 0.038) but lower workflow efficiency (

p = 0.030) and lower diagnostic confidence (

p = 0.023) (

Appendix A Table A2). These findings suggest that more experienced clinicians recognize stronger institutional support but remain more skeptical about AI’s efficiency and its ability to enhance diagnostic certainty. No significant effect was observed on BI_mean.

3.5.3. Experience with AI-Based Fracture Detection

The only significant moderation effect of prior AI-based fracture detection experience was on Effort Expectancy (

p = 0.008), indicating that physicians with hands-on experience perceived the software as easier to use (

Appendix A Table A3). No other latent constructs or BI_mean were significantly affected, though most coefficients trended positive.

4. Discussion

This multicenter, cross-sectional study assessed physicians’ acceptance of an AI-assisted fracture detection tool prior to its clinical deployment, applying an extended UTAUT model. A total of 92 physicians from orthopedics and trauma surgery, pediatric surgery, and radiology completed the pre-implementation survey as part of the SMART Fracture Trial, a randomized controlled study evaluating the impact of AI-supported fracture detection in emergency care.

The findings strongly support the UTAUT framework: All four core constructs—Performance Expectancy (PE), Effort Expectancy (EE), Social Influence (SI), and Facilitating Conditions (FC)—were significant predictors of Behavioral Intention (BI). These results align with prior UTAUT applications in clinical AI, including decision-support tools, where PE and EE have consistently emerged as the strongest determinants of BI and key drivers of technology adoption [

30,

31,

32,

33,

34].

Among the predictors, PE exerted the greatest influence on BI, indicating that physicians are more likely to adopt AI tools when they anticipate clear improvements in clinical performance. This mirrors earlier findings in radiology, primary care, and AI-enabled decision-making, where PE was consistently identified as the most important factor shaping intention to use digital health technologies [

30,

31,

32,

33]. In the present study, PE also ranked among the highest-scoring constructs, underscoring its central role in driving adoption.

EE was another strong predictor of BI and received high agreement scores. Previous UTAUT-based studies in clinical AI have emphasized that ease of use is essential for fostering trust and encouraging adoption, especially when technology demonstrably reduces cognitive workload [

31,

32,

33,

35,

36]. Moderator analysis revealed that physicians with prior experience in AI-assisted fracture detection reported significantly higher EE, suggesting that direct exposure enhances perceived usability.

Although FC had a smaller effect size than PE or EE, it remained a meaningful predictor of BI. This is consistent with evidence that institutional readiness, infrastructure, and IT support facilitate the transition from intention to actual use [

33]. Physicians with more than six years of experience rated FC significantly higher, possibly reflecting a greater awareness of available institutional resources to support AI implementation.

SI also significantly predicted BI, but its internal consistency was low. This finding is consistent with Lee et al. (2023), who observed similarly limited effects of social influence on AI acceptance among emergency physicians [

37]. In fast-paced, high-pressure environments such as emergency care, clinical decision-making tends to be autonomous, potentially reducing the influence of peer or supervisory expectations [

38,

39].

The BI construct itself showed relatively low internal consistency, suggesting that the two survey items used may not have fully captured a single underlying dimension. This limitation likely reflects the pre-implementation context, where physicians were asked to assess their intentions without hands-on experience with the tool. Similar challenges have been reported in other pre-implementation studies, where BI is shaped more by assumptions or external messaging than by direct experience [

32]. Despite this, BI scores were relatively high, indicating general openness to the technology. Moderator analyses revealed no significant effects of gender, professional experience, or prior exposure to AI-based fracture detection on BI. A comparative overview of our findings with those of other AI studies is provided in

Appendix A Table A4.

Beyond the core UTAUT constructs, this study examined three additional variables—Attitude toward Technology (AT), Workflow Efficiency (WE), and Diagnostic Confidence (DC)—to provide a more nuanced understanding of clinicians’ expectations before implementation. Although not part of the original UTAUT model, these constructs hold substantial clinical relevance. A positive AT can facilitate openness and reduce resistance to new digital systems. WE captures the extent to which AI is expected to streamline clinical routines—an especially critical factor in emergency settings. DC reflects confidence in clinical judgment when aided by AI, a determinant of both decision-making and patient safety [

35].

Moderator analysis revealed that physicians with more than six years of experience, while reporting higher FC, gave lower ratings for both DC and WE. This contrast may reflect experience-driven skepticism: less experienced clinicians may be more receptive to new technologies and more inclined to rely on AI support, whereas more experienced clinicians may be more aware of potential limitations, risks, and the possibility of automation bias. Such attitudes are consistent with prior research showing that clinical experience, perceived risk, and prior exposure shape how decision-support tools are evaluated [

40,

41,

42].

WE received the lowest agreement scores of all constructs. Although AI tools are often promoted as improving efficiency and reducing workload, many participants were uncertain whether these benefits would materialize in daily practice. This caution mirrors findings from emergency care studies, where clinicians tend to reserve judgment on workflow impact until a technology has demonstrated value in routine use [

33].

In contrast, AT was rated highly, suggesting a generally positive stance toward technological innovation among respondents. Combined with the strong ratings for PE and EE, these results indicate that acceptance is greatest when AI tools are perceived as clinically valuable, user-friendly, and supported by the broader institutional framework.

These findings underscore that effective implementation strategies must extend beyond leadership endorsement or regulatory approval. Successful adoption will require targeted peer-led training, opportunities for hands-on use, and transparent communication about the system’s capabilities and limitations. A gradual rollout, coupled with continuous feedback, may help align expectations with actual performance and increase acceptance through direct demonstration in clinical practice.

Several limitations must be acknowledged. First, the study exclusively reflects physicians’ perspectives and does not capture patient views, which are also relevant to the adoption of AI in clinical decision-making. Issues such as patient trust in AI-generated diagnoses, disclosure of AI involvement, and perceived transparency remain unexplored. Second, the cross-sectional design captures only stated intentions, not actual usage behavior. Many respondents had no direct experience with the AI tool, which may have affected their ratings, particularly for constructs such as BI. Consequently, no conclusions can be drawn about whether initial intentions will translate into sustained use after implementation.

Third, generalizability is limited by the specific clinical and technological context of this study. The focus on AI-assisted fracture detection in emergency care means results may not extend to other specialties, clinical settings, or AI applications, where determinants of acceptance may differ. Future research should assess whether the identified patterns hold in other domains, especially where time constraints and diagnostic complexity vary.

Fourth, despite careful translation of the UTAUT items into German, subtle differences in meaning or cultural interpretation may have influenced responses, particularly for constructs such as SI and FC. Constructs with only two items were especially susceptible to reliability issues. In addition, voluntary participation may have introduced selection bias toward respondents more favorably inclined toward digital technologies.

Future research should replicate these findings in larger and more diverse samples to enhance generalizability and allow more detailed subgroup analyses—particularly for constructs with lower reliability such as SI and BI. The planned second phase of this study will evaluate actual usage patterns following implementation, including usage frequency, clinical contexts, and user profiles, as well as follow-up survey data on satisfaction, perceived impact on diagnostic workflow, and changes in diagnostic confidence. This post-implementation phase will help determine whether initial acceptance translates into real-world utilization and sustained value and will identify barriers and opportunities for improving training, integration, and long-term adoption.