Abstract

Background: Myasthenia gravis (MG), a chronic autoimmune disorder with variable disease trajectories, presents considerable challenges for clinical stratification and acute care management. This systematic review evaluated machine learning models developed for prognostic assessment in patients with MG. Methods: Following PRISMA guidelines, we systematically searched PubMed, Embase, and Scopus for relevant articles published from January 2010 to May 2025. Studies using machine learning techniques to predict MG-related outcomes based on structured or semi-structured clinical variables were included. We extracted data on model targets, algorithmic strategies, input features, validation design, performance metrics, and interpretability methods. Risk of bias was assessed using the Prediction Model Risk of Bias Assessment Tool (PROBAST). Results: Eleven studies were included, targeting intensive care unit (ICU) admission (n = 2), myasthenic crisis (n = 1), treatment response (n = 2), prolonged mechanical ventilation (n = 1), hospitalization duration (n = 1), symptom subtype clustering (n = 1), and artificial intelligence (AI)-assisted examination scoring (n = 3). Commonly used algorithms included extreme gradient boosting, random forests, decision trees, multivariate adaptive regression splines, and logistic regression. Reported values of the area under the receiver operating characteristic curve (AUC) ranged from 0.765 to 0.944. Only two studies performed external validation using independent cohorts; the others relied on internal cross-validation or repeated holdout. Of the seven prognostic modeling studies, four were rated as having a high risk of bias, primarily due to issues with participant selection, predictor handling, and analytical design. The other four included studies focused on unsupervised symptom clustering or AI-assisted examination scoring and had no predictive modeling component. Conclusions: Despite promising performance metrics, limitations in generalizability, validation rigor, and measurement consistency currently restrict the clinical application of these models.
Future research should prioritize prospective multicenter studies, dynamic data sharing strategies, standardized outcome definitions, and real-time clinical workflow integration to advance machine learning-based prognostic tools for MG and support improved patient care in acute settings.

1. Introduction

Myasthenia gravis (MG) is a chronic, low-prevalence autoimmune neuromuscular disorder characterized by impaired neuromuscular transmission arising from autoantibodies targeting the acetylcholine receptor (AChR) or other components of the neuromuscular junction [1,2]. Clinically, MG manifests as reversible, fluctuating skeletal muscle weakness that can affect the extraocular, bulbar, and respiratory muscles. The condition may progress from isolated ocular involvement to life-threatening respiratory failure [3]. A subset of patients may experience early and rapid deterioration, progressing to myasthenic crisis, which necessitates mechanical ventilation and intensive immunotherapy. Approximately 15% to 20% of patients with MG experience a crisis during their lifetime, with mortality rates reaching 4% to 12% in the absence of timely intervention [3,4,5].

Despite the availability of various immunomodulatory and supportive therapies, MG exhibits considerable clinical heterogeneity, with marked interindividual variability in treatment response. Even with standard therapy, some patients experience frequent relapses or rapid deterioration, complicating prognostication [1,6,7]. This unpredictability presents significant challenges for risk stratification, resource allocation, and personalized therapy, creating an urgent need for reliable, data-driven systems to identify high-risk patients and inform care decisions.

Recent advances in artificial intelligence (AI) have substantially changed clinical decision making across many medical fields. AI encompasses a broad spectrum of technologies, including expert systems, natural language processing, machine learning, and computer vision. Among these, machine learning has emerged as a compelling tool for risk prediction and disease management, with demonstrated utility in complex and heterogeneous medical conditions [8,9,10,11]. Machine learning algorithms offer distinct advantages over traditional statistical methods: they can handle high-dimensional data, capture intricate interactions between variables, and uncover prognostic patterns that conventional approaches may miss [12,13,14,15]. Explainability tools such as SHapley Additive exPlanations (SHAP) have further improved transparency and acceptance among clinical stakeholders [10,11,14,16].

In the context of MG, several studies have applied machine learning models to support clinical decision making by predicting outcomes such as intensive care unit (ICU) admission, crisis occurrence, hospitalization duration, and treatment response [17,18,19,20,21,22,23]. Many of these models leverage routinely collected clinical data, including structured features (e.g., demographics, medications, and laboratory results) and semi-structured inputs (e.g., examination videos and smartphone logs) [24,25,26,27], rather than relying on specialized imaging or genomic modalities. However, to our knowledge, no comprehensive systematic reviews have examined this subset of machine learning-based prognostic models in MG. This gap continues to limit their clinical translation and standardization.

In this systematic review and risk-of-bias appraisal, we evaluated machine learning-based prognostic models developed for patients with MG, focusing on those trained using routinely available structured and semi-structured clinical data. To ensure clinical relevance and generalizability, we excluded studies relying primarily on imaging, genomic, or other specialized inputs.

We synthesized model characteristics, including data sources, algorithmic choices, performance metrics, interpretability strategies, and validation methods. Additionally, we performed a structured assessment of bias across included studies. By consolidating current evidence, our review identifies key limitations in existing tools and outlines priorities for future research aimed at developing clinically actionable, personalized prognostic models for MG.

2. Materials and Methods

2.1. Review Protocol and Registration

This systematic review adhered to the 2020 Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [28]. A completed PRISMA checklist is available in Supplementary Table S1. The study protocol was developed before the initiation of the review and included predefined objectives, article selection criteria, search strategies, data extraction procedures, and risk-of-bias assessment methods. The protocol was prospectively registered in the International Prospective Register of Systematic Reviews (PROSPERO) database (registration number: CRD420251026876), available at https://www.crd.york.ac.uk/PROSPERO/view/CRD420251026876 (accessed on 8 June 2025).

2.2. Eligibility Criteria

Studies were included if they (1) included patients diagnosed with MG; (2) applied machine learning or other AI algorithms for predictive model development, validation, or automated clinical assessment; (3) assessed at least one clinical outcome or relevant physiological feature linked to disease progression, such as exacerbation, hospitalization, or myasthenic crisis; and (4) used structured clinical or semi-structured observational data as input features, including demographic information, antibody status, disease severity, medication use, or laboratory results.

To ensure clinical applicability and focus on prognostic models based on routinely accessible clinical data, we deliberately excluded studies under the following conditions: (1) studies relying primarily on imaging data (e.g., magnetic resonance imaging, computed tomography, or positron emission tomography), genomic data, or electromyography; (2) studies that employed only conventional statistical methods (e.g., Cox regression or logistic regression without machine learning integration); (3) studies that focused exclusively on diagnostic classification without contributing to disease monitoring or prognostic inference; and (4) non-original research articles, including conference abstracts, commentaries, editorials, case reports, or review articles.

The exclusion of imaging, genomic, and electrophysiological studies intentionally prioritized models that can be readily applied in real-world clinical settings using easily obtainable data without specialized equipment or advanced processing techniques. This design choice aligns with our goal to promote practical and widely implementable machine learning solutions for MG prognostication.

2.3. Data Source and Search Strategy

PubMed, Embase, and Scopus were comprehensively searched to identify relevant studies published between 1 January 2010 and 31 May 2025. The search strategy was tailored to each database using controlled vocabulary (e.g., MeSH and Emtree terms) and keyword combinations related to myasthenia gravis, machine learning, artificial intelligence, and specific supervised and unsupervised algorithms, including support vector machines (SVMs), neural networks, random forests, extreme gradient boosting (XGBoost), linear discriminant analysis (LDA), soft independent modeling of class analogy (SIMCA), and chemometric approaches. The search was restricted to English-language publications. All retrieved records were imported into reference management software (EndNote 20, Clarivate Analytics, Philadelphia, PA, USA), and duplicates were removed before screening. The full search strategy for each database is presented in Supplementary Table S2.

2.4. Article Selection

Two independent reviewers screened all titles and abstracts retrieved from the database searches. Full-text articles were obtained and reviewed for studies meeting the inclusion criteria or when eligibility could not be determined from the abstract alone. Discrepancies were resolved through discussion until a consensus was reached. Studies meeting all predefined criteria were included in the analysis.

2.5. Data Extraction

The following data were extracted from each included study: first author, publication year, study design, sample size, machine learning approach, predicted outcomes, input variables, performance metrics, external validation status, and use of explainability methods. All data were recorded in a standardized spreadsheet. We also collected additional details on cross-validation, calibration, and specific interpretability techniques (e.g., SHAP and feature importance rankings).

2.6. Risk-of-Bias and Quality Assessments

Risk of bias was systematically evaluated using the Prediction Model Risk of Bias Assessment Tool (PROBAST) across four domains: participants, predictors, outcome definition, and statistical analysis [29]. Two researchers independently conducted the assessments. Discrepancies between reviewers were resolved through discussion, with arbitration by a third reviewer when necessary. All studies were retained in the synthesis regardless of their risk-of-bias ratings; the ratings were used to interpret model performance and evaluate the strength of the evidence.

2.7. Data Synthesis and Analysis

All extracted data were organized using a standardized format and reviewed narratively for structured comparison. Given the substantial heterogeneity observed across the included studies in designs, data sources, outcome definitions, population characteristics, and modeling approaches, a quantitative meta-analysis was not feasible. Instead, a thematic classification based on prediction targets and algorithm types was performed. Performance metrics and interpretability methods were compared among the studies to comprehensively assess clinical relevance and transparency.

3. Results

3.1. Review Sample

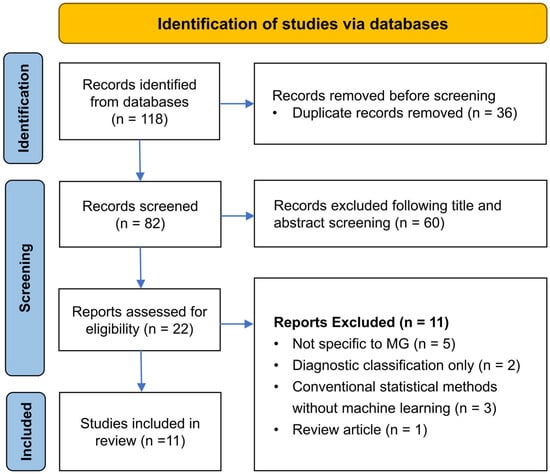

The systematic search identified a total of 118 records across three major databases (PubMed: 22, Embase: 52, and Scopus: 44). After the removal of 36 duplicates, 82 records were subjected to title and abstract screening. Of these records, 60 were excluded based on predefined eligibility criteria. The remaining 22 articles underwent full-text review, resulting in the exclusion of 11 studies for the following reasons: lack of relevance to MG (n = 5), emphasis solely on diagnostic modeling (n = 2), reliance on conventional statistical methods without machine learning integration (n = 3), and classification as non-original research (n = 1). Ultimately, 11 studies met the inclusion criteria and were thus included in the final systematic review. The selection process is detailed in Figure 1.

Figure 1.

PRISMA flow diagram of study selection process.

3.2. Study Characteristics

This systematic review included 11 original articles published between 2022 and 2025 (Table 1). Of these, seven developed predictive models, while four centered on clustering or automated scoring strategies. Study cohorts comprised 51 to 890 patients with MG. All studies used structured clinical or semi-structured observational data as input features, for example, demographic characteristics, autoantibody status, Myasthenia Gravis Foundation of America (MGFA) classification, comorbidities, medication use, laboratory values, and digital monitoring logs. Several studies incorporated novel data modalities, such as repeated video recordings or smartphone-based digital phenotyping. Four studies used prospective or pseudo-prospective designs, while the remaining seven were retrospective. Three studies integrated AI pipelines into telemedicine-based Myasthenia Gravis Core Examination (MG-CE) video examinations, and one developed a smartphone-based digital diary system for longitudinal symptom monitoring. The remaining studies focused on predicting clinical outcomes such as ICU admission, treatment response, length of hospital stay, myasthenic crisis, or prolonged mechanical ventilation.

Table 1.

Summary of included studies for myasthenia gravis prognostication using structured clinical data.

Regarding geographic distribution, five studies were conducted in Asia (three in Taiwan and two in China), three in the United States, and three in Europe (two in Germany, one in the Netherlands). All studies applied machine learning or AI algorithms to classify patients or predict clinical trajectories, with performance evaluated using cross-validation, external validation cohorts, or inter-rater reliability comparisons. While some studies incorporated interpretability frameworks (e.g., SHAP or decision rules), others focused on feasibility and algorithm development without formal performance benchmarking.

3.3. Model Characteristics and Performance

Of the 11 included studies, 7 developed predictive models for clinical outcomes; the best-performing models in these studies were based on C5.0 decision trees, random forests, XGBoost, multivariate adaptive regression splines (MARS), or logistic regression. Six of these studies addressed classification tasks and used the area under the receiver operating characteristic curve (AUC) as the primary performance metric. The highest performance was reported by Xu et al. [21] for an XGBoost model predicting 6-month post-intervention status (PIS) in AChR antibody-positive patients with generalized MG; it achieved AUC values of 0.944 and 0.908 in internal and external validation, respectively. Kuo et al. [18] also used XGBoost to predict ICU admission, achieving an AUC of 0.894 in cross-validation. Chang et al. [17] used C5.0 decision trees to predict the need for ICU admission, with an AUC of 0.814. Zhong et al. [19] used a random forest model to classify patients by treatment response, reporting AUC values of 0.84, 0.74, and 0.79. Bershan et al. [22] used a random forest model to identify patients at increased risk of crisis, with an AUC of 0.765. Heider et al. [23] used logistic regression to identify risk factors for prolonged mechanical ventilation during myasthenic crisis, yielding a cross-validated AUC of 0.78.
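To make the primary metric concrete, the following minimal Python sketch computes the AUC from its probabilistic definition: the probability that a randomly chosen patient with the outcome receives a higher risk score than a randomly chosen patient without it, with ties counted as one half. The labels and scores are hypothetical toy values, not data from any included study, and the pairwise loop is chosen for clarity rather than speed.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive case outscores a random
    negative case (ties count as 0.5); equals the area under the empirical ROC curve."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # correctly ranked pair
            elif p == n:
                wins += 0.5   # tied scores count as half
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = outcome occurred (e.g., ICU admission).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.7, 0.6, 0.4, 0.3, 0.1]
print(round(roc_auc(labels, scores), 3))  # → 0.889
```

Because the AUC depends only on how scores rank patients, it says nothing about whether the predicted probabilities are well calibrated, which is why calibration is assessed separately.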

Only Chang et al. [20] modeled a continuous outcome, using MARS to predict hospitalization duration. They reported a mean absolute percentage error of 0.524, a symmetric mean absolute percentage error of 0.409, and a relative absolute error of 1.133.
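The three error metrics named above are sketched below in Python under common textbook definitions. This is an assumption: the symmetric mean absolute percentage error in particular has several variants (one common form divides by the mean of the observed and predicted magnitudes, others by their sum), and the exact formulas used by Chang et al. [20] are not restated here. The length-of-stay values are hypothetical. Under this definition of relative absolute error, a value of 1 corresponds to always predicting the mean observed value, so a value above 1, if computed this way, would indicate a larger total absolute error than that naive baseline.

```python
from statistics import mean
from typing import Sequence


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, as a fraction (undefined if any y_true is 0)."""
    return mean(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred))


def smape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """One common SMAPE variant: |t - p| divided by the mean of |t| and |p|."""
    return mean(abs(t - p) / ((abs(t) + abs(p)) / 2) for t, p in zip(y_true, y_pred))


def rae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Relative absolute error: total model error over the total error of
    always predicting the mean observed value (1.0 = no better than the mean)."""
    y_bar = mean(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / sum(abs(t - y_bar) for t in y_true)


# Hypothetical observed and predicted lengths of stay, in days.
observed = [5, 10, 20, 7]
predicted = [6, 8, 15, 9]
print(round(mape(observed, predicted), 3), round(smape(observed, predicted), 3),
      round(rae(observed, predicted), 3))  # → 0.234 0.235 0.526
```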

Among the seven prognostic modeling studies, only two (Xu et al. [21] and Zhong et al. [19]) performed external validation using independent cohorts; the remaining five relied on internal validation methods such as cross-validation or dataset splitting. Kuo et al. [18] and Heider et al. [23] reported calibration analyses in detail: Kuo et al. used calibration plots and Brier scores, and Heider et al. used the Generalized Unbiased Evaluation of Scoring Systems (GUESS) algorithm for probability calibration. Four studies reported interpretability analyses based on SHAP values or decision tree rules (Table 1).
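The two calibration summaries mentioned for Kuo et al. can be sketched briefly: the Brier score (mean squared difference between predicted probability and observed outcome) and the binned table that underlies a calibration plot. This minimal Python sketch assumes equal-width probability bins, which is only one of several binning choices; the outcomes and probabilities are hypothetical, and it does not reproduce the GUESS method used by Heider et al.

```python
from typing import List, Sequence, Tuple


def brier_score(labels: Sequence[int], probs: Sequence[float]) -> float:
    """Mean squared difference between predicted probability and observed 0/1 outcome."""
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)


def calibration_table(labels: Sequence[int], probs: Sequence[float],
                      n_bins: int = 5) -> List[Tuple[float, float, int]]:
    """Bin predictions into equal-width probability intervals and return, for each
    non-empty bin, (mean predicted probability, observed event rate, count):
    the points plotted on a calibration curve."""
    bins: List[List[Tuple[int, float]]] = [[] for _ in range(n_bins)]
    for y, p in zip(labels, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls into the last bin
        bins[idx].append((y, p))
    table = []
    for members in bins:
        if members:
            mean_pred = sum(p for _, p in members) / len(members)
            event_rate = sum(y for y, _ in members) / len(members)
            table.append((mean_pred, event_rate, len(members)))
    return table


# Hypothetical predicted ICU-admission probabilities and observed outcomes.
outcomes = [0, 0, 1, 1, 0, 1]
predicted = [0.1, 0.2, 0.8, 0.9, 0.7, 0.3]
print(round(brier_score(outcomes, predicted), 3))
for row in calibration_table(outcomes, predicted):
    print(row)  # a well-calibrated model has mean_pred close to event_rate in each bin
```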

Distinct from the predictive modeling studies, four investigations explored either unsupervised clustering or AI-assisted clinical assessments. Steyaert et al. [24] applied k-means clustering with principal component analysis (PCA) visualization to stratify symptom fluctuation subtypes using smartphone-based monitoring. The remaining three studies (Lesport et al. [25]; Garbey et al. [26,27]) developed automated MG-CE scoring pipelines leveraging computer vision and natural language processing techniques. Although predictive performance was not evaluated, one study reported up to 25% inter-rater variability, underscoring the potential of AI-based scoring to enhance clinical consistency [27].
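As a rough illustration of the clustering step described for Steyaert et al. [24], the sketch below implements standard k-means (Lloyd's algorithm) in plain Python: points are repeatedly assigned to their nearest centroid, and each centroid is moved to the mean of its members until assignments stop changing. The two features per patient, the data points, and the initial centroids are hypothetical; the PCA step, which the study used for visualization, is omitted, and this is not the study's actual pipeline.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], init_centroids: Sequence[Point],
           max_iter: int = 100) -> Tuple[List[int], List[Point]]:
    """Lloyd's k-means: alternate nearest-centroid assignment and centroid update
    until assignments stop changing (or max_iter is reached)."""
    centroids: List[Point] = [tuple(c) for c in init_centroids]
    assignment: List[int] = [-1] * len(points)

    def nearest(p: Point) -> int:
        # Index of the centroid with the smallest Euclidean distance to p.
        return min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))

    for _ in range(max_iter):
        new_assignment = [nearest(p) for p in points]  # assignment step
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        for k in range(len(centroids)):  # update step
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:  # an empty cluster keeps its previous centroid
                centroids[k] = tuple(sum(vals) / len(members) for vals in zip(*members))
    return assignment, centroids


# Hypothetical per-patient features: (mean daily symptom score, day-to-day variability).
patients = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
labels, centers = kmeans(patients, init_centroids=[patients[0], patients[3]])
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

k-means is sensitive to the initial centroids and to feature scaling, so in practice features are usually standardized and several initializations are compared.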

3.4. Risk of Bias and Applicability

We used PROBAST to assess study quality and risk of bias across four domains: participants, predictors, outcome definitions, and statistical analysis. Only the studies by Zhong et al. [19], Xu et al. [21], and Heider et al. [23] were rated as having a low risk of bias in all domains, whereas the remaining studies were rated as high risk in two or more domains.
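The way domain ratings combine into an overall judgment can be expressed as a small decision rule. This Python sketch follows the basic PROBAST aggregation (overall low only if every domain is low, overall high if any domain is high, otherwise unclear). The domain ratings in the example are hypothetical and not taken from Table 2, and PROBAST guidance includes further considerations, for example for models developed without external validation, that the sketch deliberately leaves out.

```python
from typing import Dict

DOMAINS = ("participants", "predictors", "outcome", "analysis")


def probast_overall(ratings: Dict[str, str]) -> str:
    """Combine PROBAST domain ratings ('low', 'high', or 'unclear') into an
    overall risk-of-bias judgment using the basic aggregation rule."""
    values = [ratings[d].lower() for d in DOMAINS]  # KeyError if a domain is missing
    unknown = set(values) - {"low", "high", "unclear"}
    if unknown:
        raise ValueError(f"unexpected rating(s): {sorted(unknown)}")
    if "high" in values:
        return "high"      # any high-risk domain makes the study high risk overall
    if all(v == "low" for v in values):
        return "low"       # low overall only when every domain is low
    return "unclear"       # no high domain, but at least one unclear


# Hypothetical ratings for an illustrative study.
example = {"participants": "low", "predictors": "high",
           "outcome": "low", "analysis": "high"}
print(probast_overall(example))  # → high
```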

Risk of bias was concentrated predominantly in the predictor and analysis domains. Common methodological limitations in the studies by Chang et al. [17,20], Kuo et al. [18], Steyaert et al. [24], and Bershan et al. [22] included insufficient sample size, class imbalance, lack of external validation or calibration analysis, and temporal confounding between input variables and outcomes, which introduced a risk of information bias. The study by Steyaert et al. [24] diverged from conventional model development: the researchers used daily self-reported functional deterioration status as a prognostic variable and combined it with Myasthenia Gravis Activities of Daily Living (MG-ADL) scores and symptom clustering for feature analysis. Although conceptually innovative, this study was primarily exploratory and lacked a clinical intervention or validation against standard event-based outcomes. Because its methodology differed from that of the other reviewed studies, its risk-of-bias ratings should be interpreted in light of its exploratory objectives. By contrast, Xu et al. [21], Zhong et al. [19], and Heider et al. [23] applied well-defined inclusion criteria and structured input selection, used clinically relevant outcome definitions, and incorporated internal or external validation, partially mitigating limitations inherent to retrospective designs.

In terms of applicability, although most studies relied on clinical data, many faced implementation barriers due to restricted data sources, insufficient variable coverage, and undefined deployment scenarios. The studies by Xu et al. [21], Zhong et al. [19], and Heider et al. [23] showed alignment between data composition, model structure, and target populations, suggesting greater feasibility for integration into real-world settings. Although published as a Letter to the Editor, the study by Heider et al. [23] reported formal model development, multicenter data, and validation results; it therefore met our inclusion criteria and was included.

Three studies by Lesport et al. [25] and Garbey et al. [26,27] focused on AI-assisted video-based scoring of neurological examinations, specifically the MG-CE and MG-ADL scales. These studies aimed to demonstrate technical feasibility and inter-rater consistency rather than clinical outcome prediction. While methodologically innovative, their reliance on audiovisual input features and absence of comparator models highlight the need for further validation in diverse clinical contexts.

A detailed summary is provided in Table 2.

Table 2.

Risk of bias and applicability assessment using PROBAST.

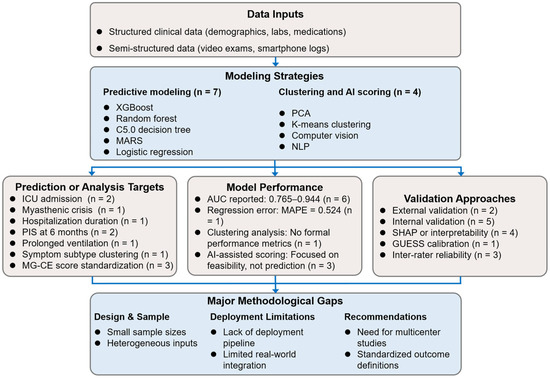

Collectively, the included studies predominantly employed ensemble and decision tree-based algorithms, with ICU admission and short-term functional outcomes being the most frequently targeted endpoints. However, substantial heterogeneity was observed in data sources, sample sizes, model architectures, and validation strategies, underscoring the fragmented and exploratory nature of current MG prognostic research. To summarize these patterns, we developed a conceptual framework that synthesizes data sources, machine learning methods, prediction targets, validation strategies, and principal methodological limitations across the included studies (Figure 2). This framework integrates the analytical workflow and key findings of this review and provides a structured overview to guide future research.

Figure 2.

Synthesis of data types, model strategies, outcome targets, and methodological considerations across included studies. AUC, area under the receiver operating characteristic curve; ICU, intensive care unit; MAPE, mean absolute percentage error; MARS, multivariate adaptive regression splines; MG-CE, Myasthenia Gravis Core Examination; NLP, natural language processing; PCA, principal component analysis; PIS, post-intervention status; SHAP, SHapley Additive exPlanations; XGBoost, eXtreme Gradient Boosting.

4. Discussion

This systematic review synthesized current evidence on machine learning and AI applications in MG, encompassing prognostic modeling and automated clinical assessment. While machine learning applications in MG remain nascent, our findings suggest that these models have potential to support clinical decision making, particularly in predicting adverse outcomes such as disease deterioration, hospitalization, and myasthenic crisis.

Most studies centered on prognostic modeling using structured, routinely collected clinical data—such as demographics, MGFA classification, and laboratory results—highlighting a focus on practical, implementation-ready inputs. A smaller subset explored non-predictive uses of AI, including automated examination scoring and symptom-based patient subtyping. These studies reflect a broadening interest in integrating AI into various stages of MG care, from early risk prediction to standardized assessment and remote monitoring.

While AI encompasses a broad spectrum of technologies, this review concentrated on machine learning applications with low technical barriers to adoption. Studies relying primarily on high-cost or specialized modalities—such as imaging for anatomical assessment, genomics, or electrophysiology—were excluded. For example, purely imaging-based AI applications in MG have mainly focused on thymoma detection or classification, often involving non-MG patients and offering limited prognostic utility [30,31,32]. In contrast, we prioritized models based on clinically pragmatic input sources with higher translational relevance and broader potential for deployment across healthcare settings.

Despite favorable performance metrics (AUC: 0.765–0.944), considerable methodological heterogeneity was observed. Only three studies [19,21,23] were rated as having a low risk of bias across all PROBAST domains, reflecting greater analytical rigor and potential generalizability. The remaining studies were limited by single-center designs, small sample sizes, lack of calibration assessment, and minimal deployment planning. While some studies used interpretability techniques to enhance clinical relevance, few addressed implementation concerns such as workflow integration, model usability, or deployment logistics. None of the included models had demonstrated readiness for validated deployment in routine practice.

A persistent barrier to progress in this field is the lack of large, diverse, and openly accessible clinical datasets. Six of the 11 included studies were derived from just two small data sources, each reused across three publications. Three studies [17,18,20] used data from the same single-center registry in Taiwan, resulting in substantial duplication of patient cohorts and input variables. Similarly, three studies of AI-assisted quantification of MG-CE and MG-ADL used the same video dataset [25,26,27]. Despite differing research aims, these studies were not based on independent data collections. This limited diversity constrains the variability of training inputs and the assessment of model performance across clinical settings and patient populations. Moreover, reusing the same cohort across multiple publications risks overstating the strength of evidence and can misrepresent the field’s maturity. These findings point to a structural bottleneck in MG AI research and an urgent need for collaborative multicenter efforts to build larger, demographically inclusive datasets. Developing such repositories and making them accessible will be essential for robust, generalizable, and clinically deployable AI applications in MG.

4.1. Targeted Outcomes and Their Relevance to Critical Care

Several of the targeted outcomes in the included studies relate directly to acute care triage and resource allocation. Chang et al. and Kuo et al. used the C5.0 decision tree and XGBoost algorithms, respectively, to develop models predicting the risk of ICU admission [17,18]. Both groups identified MGFA classification and disease duration as significant predictors. The model developed by Kuo et al. [18] achieved an AUC of 0.894, and its SHAP analysis revealed a nonlinear effect of age and an influence of thymectomy status. In their pseudo-prospective study, Bershan et al. [22] used a random forest classifier to estimate the risk of myasthenic crisis and highlighted serum creatinine fluctuations and interunit transfers as key indicators. Xu et al. [21] constructed a rigorously validated framework for predicting 6-month MGFA-PIS in AChR antibody-positive patients with generalized MG. The framework incorporated inflammatory indices, such as the systemic immune-inflammation index, neutrophil-to-lymphocyte ratio, and platelet-to-lymphocyte ratio, together with quantitative MG scores, highlighting the complementary utility of biological markers in personalized prognostication. Using a multicenter dataset and ensemble feature selection, Heider et al. [23] developed a logistic regression model to predict prolonged mechanical ventilation (>15 days) during myasthenic crisis. Their model, calibrated using GUESS, achieved an AUC of 0.78 and incorporated clinically accessible predictors such as age, MGFA classification, comorbidities, pneumonia, delirium, and cardiopulmonary resuscitation. In a secondary analysis, Chang et al. [20] used MARS to estimate hospitalization duration, the only continuous outcome assessed in our review. Steyaert et al. [24] conducted a prospective digital phenotyping investigation combining electronic patient-reported outcomes with wearable sensor data and used k-means clustering to identify symptom fluctuation patterns. Although exploratory, this approach illustrates how remote monitoring can be combined with unsupervised learning in MG management.
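The three inflammatory indices mentioned for Xu et al. [21] are simple ratios of routine blood count components. The Python sketch below uses their standard definitions (NLR = neutrophils / lymphocytes, PLR = platelets / lymphocytes, SII = platelets × neutrophils / lymphocytes), assuming absolute counts in 10^9 cells/L. The blood count values are hypothetical, and how the study measured, scaled, or thresholded these indices is not described here.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BloodCount:
    neutrophils: float  # absolute neutrophil count, 10^9 cells/L
    lymphocytes: float  # absolute lymphocyte count, 10^9 cells/L
    platelets: float    # platelet count, 10^9 cells/L


def inflammatory_indices(bc: BloodCount) -> Dict[str, float]:
    """Neutrophil-to-lymphocyte ratio (NLR), platelet-to-lymphocyte ratio (PLR),
    and systemic immune-inflammation index (SII = platelets x neutrophils / lymphocytes)."""
    if bc.lymphocytes <= 0:
        raise ValueError("lymphocyte count must be positive")
    nlr = bc.neutrophils / bc.lymphocytes
    return {
        "NLR": nlr,
        "PLR": bc.platelets / bc.lymphocytes,
        "SII": bc.platelets * nlr,  # equivalent to platelets * neutrophils / lymphocytes
    }


# Hypothetical blood count for one patient (illustrative values only).
print(inflammatory_indices(BloodCount(neutrophils=6.0, lymphocytes=1.5, platelets=240.0)))
# → {'NLR': 4.0, 'PLR': 160.0, 'SII': 960.0}
```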

4.2. Balancing Transparency and Accuracy in Algorithm Design

The tension between predictive performance and interpretability remains a central issue in MG prognostic modeling. Chang et al. [17] used C5.0 decision trees to derive explicit, visualizable clinical rules suited to rapid clinical judgment. Kuo et al. [18] adopted a gradient-boosting approach paired with SHAP to explain complex relationships, achieving higher discrimination at the cost of greater model complexity [16]. Heider et al. [23] combined logistic regression with GUESS-based calibration, balancing predictive performance with transparency through clinically grounded variables and probability refinement. Rule-based systems offer advantages in comprehensibility and ease of adoption but struggle with high-dimensional data and nonlinear interactions. Ensemble approaches overcome these limitations but require interpretive mechanisms to meet clinical and operational needs [14,16,33]. Model architecture should therefore be selected according to task characteristics and application scenarios rather than on performance metrics or algorithmic form alone. Beyond prognostic modeling, the automation-oriented studies by Lesport et al. and Garbey et al. [25,26,27] adopted computer vision and natural language processing pipelines, which pose their own interpretability challenges.
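To illustrate how a rule-based tree chooses its explicit splits, the Python sketch below computes the gain ratio, the split criterion introduced in Quinlan's C4.5 and generally described as retained by its successor C5.0: the information gain of a split divided by its split information, which penalizes splits that fragment patients into many small branches. The outcome labels and the candidate split (a hypothetical "MGFA class IV or higher" rule) are invented for illustration and are not taken from Chang et al. [17].

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy (bits) of the outcome distribution at a node."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain_ratio(labels: Sequence[Hashable], branches: List[Sequence[Hashable]]) -> float:
    """Information gain of splitting `labels` into `branches`, divided by the
    split information (entropy of the branch sizes themselves)."""
    n = len(labels)
    child_entropy = sum(len(b) / n * entropy(b) for b in branches if b)
    info_gain = entropy(labels) - child_entropy
    split_info = -sum((len(b) / n) * math.log2(len(b) / n) for b in branches if b)
    return info_gain / split_info if split_info > 0 else 0.0


# Hypothetical node with 8 patients and a candidate rule such as "MGFA class >= IV".
node = ["ICU"] * 4 + ["ward"] * 4
rule_true = ["ICU", "ICU", "ICU", "ward"]
rule_false = ["ICU", "ward", "ward", "ward"]
print(round(gain_ratio(node, [rule_true, rule_false]), 3))  # → 0.189
```

The tree grower evaluates every candidate split this way and keeps the best one, which is what makes the resulting rules explicit and auditable.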

In acute care settings, clarity and workflow compatibility often outweigh marginal gains in predictive power. By contrast, long-term management and personalized treatment benefit more from systems that can handle layered clinical and behavioral inputs and support nuanced patient stratification [12,34]. Embedding domain-specific considerations and task-oriented design principles during early model development phases can substantially enhance clinical relevance and implementation feasibility.

4.3. Bridging the Gaps Between Explainability and Clinical Acceptability

Four studies used interpretation techniques: SHAP values (Kuo et al., Xu et al., and Bershan et al. [18,21,22]) and decision tree rules (Chang et al. [17]). These methods make results easier to visualize and clarify how individual variables contribute to predictions, addressing explainability needs in clinical AI applications [33,34,35]. However, mature interpretation techniques do not necessarily translate into accurate understanding and practical use by clinical teams. Xu et al. identified inflammatory indicators as key prognostic factors, and Kuo et al. highlighted the effect of age on MGFA classification in ICU triage. Although graphs of feature contributions can illustrate model logic, the expertise required to interpret such graphical outputs may impede clinical adoption [16,36]. Bershan et al. conducted a SHAP analysis but did not define trigger conditions or operational guidelines. This suggests that most explanatory modules remain focused on post hoc analysis rather than on supporting real-time decision making [12,36].
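SHAP attributes a single prediction to its input features using Shapley values from cooperative game theory. The Python sketch below computes them exactly by enumerating feature coalitions, with features outside a coalition replaced by values from a single reference ("baseline") patient. This is a simplifying assumption: the `shap` library typically averages over a background dataset and uses faster model-specific algorithms, such as TreeSHAP for tree ensembles. The toy risk model, feature meanings, and values are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values for one prediction by enumerating all coalitions.
    Features in a coalition take the patient's values (x); the rest take baseline
    values. Runtime grows as 2^n, so this only suits a handful of features."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0..n-1, not containing feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution
    return phi


# Hypothetical risk model on [age in decades, MGFA class, thymectomy (0/1)], with an
# age x MGFA interaction so that contributions are not simply additive.
def toy_risk(z: Sequence[float]) -> float:
    return 0.05 * z[0] + 0.10 * z[1] - 0.08 * z[2] + 0.02 * z[0] * z[1]


patient, reference = [7.0, 4.0, 0.0], [5.0, 2.0, 1.0]
phi = shapley_values(toy_risk, patient, reference)
print([round(v, 3) for v in phi])  # → [0.22, 0.44, 0.08]
```

Two properties help when reading SHAP plots: the contributions sum exactly to the difference between the patient's prediction and the baseline prediction, and for a purely additive model each contribution reduces to the coefficient times the feature's deviation from the baseline.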

Our findings suggest that interpretation techniques should be designed alongside clinical workflows, for example by setting trigger points, standardizing output formats, and mapping outputs to clinical terminology, to improve usability and integration into decision-making processes.

4.4. The Blurred Boundary Between Prediction and Early Warning

The included studies primarily focused on risk assessment, with model performance evaluated using metrics such as the AUC. Once applied in clinical practice, risk assessment models often shift from retrospective analysis to real-time alert mechanisms designed to flag high-risk individuals and trigger timely interventions [34]. This shift changes the fundamental nature of the task: prediction emphasizes overall performance and accuracy, whereas early warning requires explicit definitions of alert thresholds, output frequency, and decision-triggering processes [33,34]. Bershan et al. [22] used routine clinical data to stratify patients by risk of severe exacerbation; however, the approach remained a proof of concept without real-time monitoring or response protocols. Similarly, Chang et al. and Kuo et al. [17,18] developed ICU-focused models but did not specify how outputs would be incorporated into frontline decision-making processes, such as rapid response team activation or initiation of ICU assessment. As machine learning tools transition from retrospective analysis to real-time decision support, their effectiveness depends not only on algorithmic precision but also on seamless integration into clinical workflows that translate outputs into actionable signals [14,33,34].
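The extra decision that early warning requires can be sketched as turning a continuous risk score into an alert by fixing a threshold. In this minimal Python sketch, the threshold policy (the highest threshold that reaches a target sensitivity) is a hypothetical choice made for illustration, and the scores and outcomes are invented; the resulting alert rate indicates the workload such an alert would generate.

```python
from typing import Dict, Optional, Sequence


def choose_alert_threshold(labels: Sequence[int], scores: Sequence[float],
                           min_sensitivity: float) -> Optional[Dict[str, float]]:
    """Return the highest score threshold whose sensitivity reaches
    min_sensitivity, with the resulting sensitivity, specificity, and alert rate
    (fraction of all patients who would trigger an alert)."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both patients with and without the event")
    for t in sorted(set(scores), reverse=True):  # strictest thresholds first
        alerts = [s >= t for s in scores]
        tp = sum(1 for a, y in zip(alerts, labels) if a and y == 1)
        tn = sum(1 for a, y in zip(alerts, labels) if not a and y == 0)
        if tp / n_pos >= min_sensitivity:
            return {
                "threshold": t,
                "sensitivity": tp / n_pos,
                "specificity": tn / n_neg,
                "alert_rate": sum(alerts) / len(labels),
            }
    return None  # only reached if min_sensitivity > 1


# Hypothetical risk scores for 8 patients (1 = later required ICU care).
events = [1, 1, 0, 1, 0, 0, 0, 0]
risk = [0.9, 0.8, 0.7, 0.6, 0.5, 0.3, 0.2, 0.1]
print(choose_alert_threshold(events, risk, min_sensitivity=0.9))
# → {'threshold': 0.6, 'sensitivity': 1.0, 'specificity': 0.8, 'alert_rate': 0.5}
```

Raising the target sensitivity generally lowers the threshold and increases the alert rate; in this toy example, a 0.9 target already means alerting on half of all patients, a trade-off that an AUC value alone does not reveal.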

4.5. Critical Care Applications and Implementation Challenges

Machine learning methods can outperform traditional statistical approaches by capturing complex patterns in high-dimensional clinical, laboratory, and physiological data, enabling tailored assessments in acute neuromuscular conditions such as myasthenic crisis [37,38,39,40]. Such approaches can facilitate risk stratification and optimize intervention timing during episodes of severe neuromuscular deterioration. Despite these advantages, the relationship between algorithm-generated output and clinical accountability remains unclear. Most included studies reported classification probabilities or risk assessments without stating whether these outputs were intended as reference recommendations or as determinants of resource allocation and care decisions. For ICU admission [17,18], whether accurate risk assessment actually changes the level of care depends on whether outputs can be rapidly interpreted, incorporated into current decision frameworks, and linked to standardized clinical trigger points. Without operational guidelines and response protocols, even highly accurate systems may remain disconnected from care processes and raise questions regarding accountability and patient rights.

Zhong et al. [19] deployed their multicenter random forest model as an online, real-time prediction platform, a rare example of preliminary clinical deployment. Bershan et al. [22] published their complete analysis code, making theirs the only included study that is fully reproducible and open source and demonstrating a commitment to transparency. Heider et al. [23] also developed a web-based interface (POLAR) to support probability estimation for prolonged mechanical ventilation, which may assist in clinical decision-making pending further prospective validation and workflow integration.

Although improving analytical accuracy is essential, real-world use demands clearly defined integration points, operational clarity, and shared responsibility between algorithm developers and clinical teams.

4.6. Research Limitations and Future Directions

Despite promising results, current machine learning models face substantial barriers to clinical integration and often lack methodological rigor.

First, to enhance generalizability, we excluded data modalities not routinely used for prognostic decision-making in MG, such as genetic analyses and specialized diagnostics. While this choice favors broad applicability, it may have omitted complementary prognostic insights. Additionally, AI studies in MG that use imaging predominantly focus on thymoma detection rather than prognostic prediction; they are often restricted to thymoma subgroups and may include non-MG patients, limiting generalizability. Excluding primarily imaging-based studies was therefore a pragmatic choice aligned with our focus on broad, subtype-inclusive prognostic modeling.

Second, most included studies were retrospective, single-center investigations with limited sample sizes. Only two studies performed external validation using multicenter data, and six of the eleven studies drew on just two overlapping data sources, constraining training variability and raising generalizability concerns. This reflects the broader scarcity of high-quality multicenter datasets. Future research requires expanded data-sharing initiatives and collaborative infrastructure to improve model robustness and real-world applicability.

Third, although several models applied interpretability tools such as SHAP or decision trees, these remained disconnected from clinical workflows. Most lacked real-time interfaces, operational triggers, or integration into routine decision-making processes, limiting their impact on actual care delivery [34,36].

Fourth, existing models emphasize acute outcomes (ICU admission, mechanical ventilation) and rely heavily on static, clinician-reported variables, which limits their ability to capture the temporal and individualized nature of MG progression. Intermediate and patient-centered endpoints, such as long-term functional status, quality of life, and rehospitalization, remain understudied. Few studies incorporated time-series data, and advanced sequence-based architectures such as recurrent neural networks and Transformers remain underexplored [41,42]. Additionally, patient-reported parameters, including fatigue, emotional distress, and sleep quality, are rarely included despite their potential value for prognostic assessment [36,43,44,45].

A growing body of recent research has applied novel machine learning techniques to diverse aspects of MG, including infrared spectroscopy [46], facial recognition-based assessment [47], multi-omics profiling (e.g., metabolomics, microbiome, genomics) [48,49,50,51], and health economic modeling [52]. Although methodologically innovative, these studies were excluded because this review focused on prognostic models built on routinely available structured clinical data, whereas most of these studies targeted diagnosis, multi-omics biomarker discovery, or cost prediction and often relied on unstructured inputs. Despite falling outside our inclusion criteria, these research directions hold substantial potential. As clinical workflows increasingly integrate sensor-based technologies, multi-omics analyses, and multimodal data fusion, such models may become integral to MG monitoring and personalized care. Continued AI advances may enable hybrid frameworks that combine structured clinical data with richer, non-traditional inputs, offering new opportunities for comprehensive prognostic modeling in MG.

Despite these limitations, the scarcity of machine learning studies utilizing structured clinical data for MG prognostic modeling underscores this systematic review’s unique contribution. Our findings provide a foundational framework for future research and may guide emerging model development while supporting the integration of diverse clinical data sources for eventual implementation.

In summary, MG has well-defined diagnostic criteria, quantifiable outcomes, and variable disease trajectories, making it a strong candidate for artificial intelligence-driven precision neuroimmunology. With continued integration of high-quality data repositories and advanced algorithms, intelligent systems may become core tools for predicting disease exacerbations and treatment responses and for optimizing care, ultimately improving clinical outcomes for patients with MG.

5. Conclusions

This systematic review comprehensively evaluated machine learning models for prognostication in MG, providing detailed insights into predictive targets, methodologies, and application readiness based on structured and semi-structured clinical data. The 11 included studies addressed ICU admission, myasthenic crisis, treatment response, prolonged mechanical ventilation, hospitalization duration, symptom subtype clustering, and AI-assisted clinical scoring. Prognostic models showed potential for acute care applications, especially risk stratification and resource allocation, while non-predictive studies illustrated opportunities for standardizing neurological assessments. However, methodology varied substantially across studies. Common limitations included reliance on retrospective single-center data, insufficient calibration reporting, limited external validation, and a lack of fairness assessments. Additionally, few studies provided interfaces or pathways for real-time clinical integration, limiting their practical deployment.
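Calibration and subgroup checks of the kind the included studies rarely reported can be computed from predicted probabilities and observed outcomes alone. The Python sketch below reports the Brier score, calibration-in-the-large (mean predicted risk minus observed event rate), and an equal-width-binned expected calibration error, and repeats them within each subgroup as a simple fairness check. The bin count and grouping variable are illustrative assumptions; a fuller assessment would also include calibration plots and calibration slope estimates.

```python
def calibration_summary(y_true, y_prob, n_bins=5):
    """Brier score, calibration-in-the-large, and binned expected calibration error (ECE)."""
    n = len(y_true)
    if n == 0 or n != len(y_prob):
        raise ValueError("y_true and y_prob must be non-empty and of equal length")
    brier = sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / n
    # Positive values mean the model over-predicts risk on average.
    citl = sum(y_prob) / n - sum(y_true) / n
    # Assign each prediction to one of n_bins equal-width probability bins.
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, y_prob):
        bins[min(int(p * n_bins), n_bins - 1)].append((y, p))
    # ECE: bin-size-weighted gap between observed event rate and mean prediction.
    ece = 0.0
    for b in bins:
        if b:
            observed = sum(y for y, _ in b) / len(b)
            predicted = sum(p for _, p in b) / len(b)
            ece += len(b) / n * abs(observed - predicted)
    return {"brier": brier, "calibration_in_the_large": citl, "ece": ece}


def calibration_by_group(y_true, y_prob, groups, n_bins=5):
    """Repeat calibration_summary within each subgroup (e.g., sex or age band)."""
    results = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        results[g] = calibration_summary(
            [y_true[i] for i in idx], [y_prob[i] for i in idx], n_bins
        )
    return results
```

In a toy example with outcomes `[0, 0, 1, 1]`, predictions `[0.1, 0.3, 0.7, 0.9]`, and groups `["a", "a", "b", "b"]`, overall calibration-in-the-large is zero, yet the model overestimates risk by 0.2 in group "a" and underestimates it by 0.2 in group "b", the kind of disparity a pooled metric can hide.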

This review introduces a comparative framework highlighting heterogeneity in prediction targets, data sources, algorithmic strategies, and validation approaches across current MG AI studies. It underscores critical gaps in deployment readiness, including trade-offs between model complexity and interpretability, and the absence of early warning integration and fairness considerations. These insights extend beyond MG, providing an evidence-based roadmap for developing adaptable tools that combine real-world utility with methodological rigor, particularly in critical care environments where timely, responsible implementation is essential.

Future studies should prioritize multicenter collaboration, dynamic data pipelines, and clear implementation pathways to enhance the clinical interpretability and usability of predictive tools. As data accessibility and algorithmic transparency advance, machine learning holds strong potential for improving risk assessment and enabling timely, personalized care in patients with MG.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/diagnostics15162044/s1, Supplementary Table S1. PRISMA 2020 Checklist; Supplementary Table S2. Search strategy for each database.

Author Contributions

Conceptualization, methodology, data curation, screening and assessment, writing—original draft preparation, visualization, C.-C.C.; methodology, data curation, data analysis, screening and assessment, writing—original draft preparation, visualization, I.-C.W.; data curation, screening and assessment, writing—review and editing, O.A.B.; methodology, screening and assessment, supervision, writing—review and editing, C.-T.H.; conceptualization, supervision, project administration, writing—review and editing, H.-C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study is a systematic review based entirely on previously published data. It does not involve any new data collection from human participants. Therefore, ethical approval from an institutional review board (IRB) was not required.

Informed Consent Statement

Because this study analyzed aggregated data from previously published studies and did not involve any individual-level or identifiable participant information, informed consent from participants was not required.

Data Availability Statement

All data utilized in this systematic review were derived exclusively from previously published studies available in the public domain. Data were extracted from peer-reviewed articles indexed in major databases, including PubMed, Embase, and Scopus. No new datasets were generated or collected since this review is based solely on secondary data. Consequently, data sharing is not applicable.

Acknowledgments

We sincerely thank the editors and reviewers for their invaluable insights and constructive feedback, which have substantially improved the quality and rigor of this manuscript.

Conflicts of Interest

The authors have no conflicts of interest to declare.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AChR | Acetylcholine receptor |
| ADL | Activities of daily living |
| AI | Artificial intelligence |
| AUC | Area under the curve |
| CART | Classification and regression tree |
| ICU | Intensive care unit |
| LR | Logistic regression |
| MAPE | Mean absolute percentage error |
| MARS | Multivariate adaptive regression splines |
| MG | Myasthenia gravis |
| MG-CE | Myasthenia Gravis Core Examination |
| MGFA | Myasthenia Gravis Foundation of America |
| MLR | Multiple linear regression |
| NLP | Natural language processing |
| NLR | Neutrophil-to-lymphocyte ratio |
| PCA | Principal component analysis |
| PIS | Postintervention status |
| PLR | Platelet-to-lymphocyte ratio |
| QMG | Quantitative myasthenia gravis |
| RAE | Relative absolute error |
| SHAP | SHapley Additive exPlanations |
| SII | Systemic immune-inflammation index |
| SMAPE | Symmetric mean absolute percentage error |
| SVM | Support vector machine |
| XGBoost | eXtreme gradient boosting |

References

- Gilhus, N.E. Myasthenia Gravis. N. Engl. J. Med. 2016, 375, 2570–2581.

- Gilhus, N.E.; Verschuuren, J.J. Myasthenia Gravis: Subgroup Classification and Therapeutic Strategies. Lancet Neurol. 2015, 14, 1023–1036.

- Claytor, B.; Cho, S.M.; Li, Y. Myasthenic Crisis. Muscle Nerve 2023, 68, 8–19.

- Neumann, B.; Angstwurm, K.; Mergenthaler, P.; Kohler, S.; Schönenberger, S.; Bösel, J.; Neumann, U.; Vidal, A.; Huttner, H.B.; Gerner, S.T.; et al. Myasthenic Crisis Demanding Mechanical Ventilation: A Multicenter Analysis of 250 Cases. Neurology 2020, 94, e299–e313.

- Wang, Y.; Huan, X.; Zhu, X.; Song, J.; Yan, C.; Yang, L.; Xi, C.; Xu, Y.; Xi, J.; Zhao, C.; et al. Independent Risk Factors for in-Hospital Outcome of Myasthenic Crisis: A Prospective Cohort Study. Ther. Adv. Neurol. Disord. 2024, 17, 17562864241226745.

- Schneider-Gold, C.; Hagenacker, T.; Melzer, N.; Ruck, T. Understanding the Burden of Refractory Myasthenia Gravis. Ther. Adv. Neurol. Disord. 2019, 12, 1756286419832242.

- Tsai, N.W.; Chien, L.N.; Hung, C.; Kuo, A.; Chiu, Y.T.; Lin, H.W.; Jian, L.S.; Chou, K.P.; Yeh, J.H. Epidemiology, Patient Characteristics, and Treatment Patterns of Myasthenia Gravis in Taiwan: A Population-Based Study. Neurol. Ther. 2024, 13, 809–824.

- Patel, U.K.; Anwar, A.; Saleem, S.; Malik, P.; Rasul, B.; Patel, K.; Yao, R.; Seshadri, A.; Yousufuddin, M.; Arumaithurai, K. Artificial Intelligence as an Emerging Technology in the Current Care of Neurological Disorders. J. Neurol. 2021, 268, 1623–1642.

- Myszczynska, M.A.; Ojamies, P.N.; Lacoste, A.M.B.; Neil, D.; Saffari, A.; Mead, R.; Hautbergue, G.M.; Holbrook, J.D.; Ferraiuolo, L. Applications of Machine Learning to Diagnosis and Treatment of Neurodegenerative Diseases. Nat. Rev. Neurol. 2020, 16, 440–456.

- Hong, C.T.; Bamodu, O.A.; Chiu, H.W.; Chiu, W.T.; Chan, L.; Chung, C.C. Personalized Predictions of Therapeutic Hypothermia Outcomes in Cardiac Arrest Patients with Shockable Rhythms Using Explainable Machine Learning. Diagnostics 2025, 15, 267.

- Chung, C.C.; Su, E.C.Y.; Chen, J.H.; Chen, Y.T.; Kuo, C.Y. XGBoost-Based Simple Three-Item Model Accurately Predicts Outcomes of Acute Ischemic Stroke. Diagnostics 2023, 13, 842.

- Rajkomar, A.; Dean, J.; Kohane, I. Machine Learning in Medicine. N. Engl. J. Med. 2019, 380, 1347–1358.

- Bamodu, O.A.; Chan, L.; Wu, C.H.; Yu, S.F.; Chung, C.C. Beyond Diagnosis: Leveraging Routine Blood and Urine Biomarkers to Predict Severity and Functional Outcome in Acute Ischemic Stroke. Heliyon 2024, 10, e26199.

- Band, S.S.; Yarahmadi, A.; Hsu, C.-C.; Biyari, M.; Sookhak, M.; Ameri, R.; Dehzangi, I.; Chronopoulos, A.T.; Liang, H.-W. Application of Explainable Artificial Intelligence in Medical Health: A Systematic Review of Interpretability Methods. Inform. Med. Unlocked 2023, 40, 101286.

- Chou, S.-Y.; Bamodu, O.A.; Chiu, W.-T.; Hong, C.-T.; Chan, L.; Chung, C.-C. Artificial Neural Network-Boosted Cardiac Arrest Survival Post-Resuscitation in-Hospital (CASPRI) Score Accurately Predicts Outcome in Cardiac Arrest Patients Treated with Targeted Temperature Management. Sci. Rep. 2022, 12, 7254.

- Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.-I. From Local Explanations to Global Understanding with Explainable AI for Trees. Nat. Mach. Intell. 2020, 2, 56–67.

- Chang, C.C.; Yeh, J.H.; Chiu, H.C.; Chen, Y.M.; Jhou, M.J.; Liu, T.C.; Lu, C.J. Utilization of Decision Tree Algorithms for Supporting the Prediction of Intensive Care Unit Admission of Myasthenia Gravis: A Machine Learning-Based Approach. J. Pers. Med. 2022, 12, 32.

- Kuo, C.-Y.; Su, E.C.-Y.; Yeh, H.-L.; Yeh, J.-H.; Chiu, H.-C.; Chung, C.-C. Predictive Modeling and Interpretative Analysis of Risks of Instability in Patients with Myasthenia Gravis Requiring Intensive Care Unit Admission. Heliyon 2024, 10, e41084.

- Zhong, H.; Ruan, Z.; Yan, C.; Lv, Z.; Zheng, X.; Goh, L.-Y.; Xi, J.; Song, J.; Luo, L.; Chu, L.; et al. Short-Term Outcome Prediction for Myasthenia Gravis: An Explainable Machine Learning Model. Ther. Adv. Neurol. Disord. 2023, 16, 17562864231154976.

- Chang, C.C.; Yeh, J.H.; Chiu, H.C.; Liu, T.C.; Chen, Y.M.; Jhou, M.J.; Lu, C.J. Assessing the Length of Hospital Stay for Patients with Myasthenia Gravis Based on the Data Mining MARS Approach. Front. Neurol. 2023, 14, 1283214.

- Xu, Y.; Li, Q.; Pan, M.; Jia, X.; Wang, W.; Guo, Q.; Luan, L. Interpretable Machine Learning Models for Predicting Short-Term Prognosis in AChR-Ab+ Generalized Myasthenia Gravis Using Clinical Features and Systemic Inflammation Index. Front. Neurol. 2024, 15, 1459555.

- Bershan, S.; Meisel, A.; Mergenthaler, P. Data-Driven Explainable Machine Learning for Personalized Risk Classification of Myasthenic Crisis. Int. J. Med. Inform. 2025, 194, 105679.

- Heider, D.; Stetefeld, H.; Meisel, A.; Bösel, J.; Artho, M.; Linker, R.; Angstwurm, K.; Neumann, B. POLAR: Prediction of Prolonged Mechanical Ventilation in Patients with Myasthenic Crisis. J. Neurol. 2024, 271, 2875–2879.

- Steyaert, S.; Lootus, M.; Sarabu, C.; Framroze, Z.; Dickinson, H.; Lewis, E.; Steels, J.C.; Rinaldo, F. A Decentralized, Prospective, Observational Study to Collect Real-World Data from Patients with Myasthenia Gravis Using Smartphones. Front. Neurol. 2023, 14, 1144183.

- Lesport, Q.; Palmie, D.; Öztosun, G.; Kaminski, H.J.; Garbey, M. AI-Powered Telemedicine for Automatic Scoring of Neuromuscular Examinations. Bioengineering 2024, 11, 942.

- Garbey, M.; Lesport, Q.; Girma, H.; Öztosun, G.; Abu-Rub, M.; Guidon, A.C.; Juel, V.; Nowak, R.J.; Soliven, B.; Aban, I.; et al. Application of Digital Tools and Artificial Intelligence in the Myasthenia Gravis Core Examination. Front. Neurol. 2024, 15, 1474884.

- Garbey, M.; Lesport, Q.; Girma, H.; Öztosun, G.; Kaminski, H.J. A Quantitative Study of Factors Influencing Myasthenia Gravis Telehealth Examination Score. Muscle Nerve 2025, 72, 34–41.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Wolff, R.F.; Moons, K.G.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann. Intern. Med. 2019, 170, 51–58.

- Liu, Z.; Zhu, Y.; Yuan, Y.; Yang, L.; Wang, K.; Wang, M.; Yang, X.; Wu, X.; Tian, X.; Zhang, R.; et al. 3D DenseNet Deep Learning Based Preoperative Computed Tomography for Detecting Myasthenia Gravis in Patients with Thymoma. Front. Oncol. 2021, 11, 631964.

- Ozkan, E.; Orhan, K.; Soydal, C.; Kahya, Y.; Tunc, S.S.; Celik, O.; Sak, S.D.; Cangir, A.K. Combined Clinical and Specific Positron Emission Tomography/Computed Tomography-Based Radiomic Features and Machine-Learning Model in Prediction of Thymoma Risk Groups. Nucl. Med. Commun. 2022, 43, 529–539.

- Liu, W.; Wang, W.; Guo, R.; Zhang, H.; Guo, M. Deep Learning for Risk Stratification of Thymoma Pathological Subtypes Based on Preoperative CT Images. BMC Cancer 2024, 24, 651.

- Abgrall, G.; Holder, A.L.; Chelly Dagdia, Z.; Zeitouni, K.; Monnet, X. Should AI Models Be Explainable to Clinicians? Crit. Care 2024, 28, 301.

- Gonzalez, F.A.; Santonocito, C.; Lamas, T.; Costa, P.; Vieira, S.M.; Ferreira, H.A.; Sanfilippo, F. Is Artificial Intelligence Prepared for the 24-h Shifts in the ICU? Anaesth. Crit. Care Pain Med. 2024, 43, 101431.

- Shamsutdinova, D.; Stamate, D.; Stahl, D. Balancing Accuracy and Interpretability: An R Package Assessing Complex Relationships Beyond the Cox Model and Applications to Clinical Prediction. Int. J. Med. Inform. 2025, 194, 105700.

- Cutillo, C.M.; Sharma, K.R.; Foschini, L.; Kundu, S.; Mackintosh, M.; Mandl, K.D. Machine Intelligence in Healthcare-Perspectives on Trustworthiness, Explainability, Usability, and Transparency. npj Digit. Med. 2020, 3, 47.

- Alshekhlee, A.; Miles, J.D.; Katirji, B.; Preston, D.C.; Kaminski, H.J. Incidence and Mortality Rates of Myasthenia Gravis and Myasthenic Crisis in US Hospitals. Neurology 2009, 72, 1548–1554.

- Kalita, J.; Kohat, A.K.; Misra, U.K. Predictors of Outcome of Myasthenic Crisis. Neurol. Sci. 2014, 35, 1109–1114.

- Liu, F.; Wang, Q.; Chen, X. Myasthenic Crisis Treated in a Chinese Neurological Intensive Care Unit: Clinical Features, Mortality, Outcomes, and Predictors of Survival. BMC Neurol. 2019, 19, 172.

- Nelke, C.; Stascheit, F.; Eckert, C.; Pawlitzki, M.; Schroeter, C.B.; Huntemann, N.; Mergenthaler, P.; Arat, E.; Öztürk, M.; Foell, D.; et al. Independent Risk Factors for Myasthenic Crisis and Disease Exacerbation in a Retrospective Cohort of Myasthenia Gravis Patients. J. Neuroinflammation 2022, 19, 89.

- Rajkomar, A.; Oren, E.; Chen, K.; Dai, A.M.; Hajaj, N.; Hardt, M.; Liu, P.J.; Liu, X.; Marcus, J.; Sun, M.; et al. Scalable and Accurate Deep Learning with Electronic Health Records. npj Digit. Med. 2018, 1, 18.

- Li, Y.; Rao, S.; Solares, J.R.A.; Hassaine, A.; Ramakrishnan, R.; Canoy, D.; Zhu, Y.; Rahimi, K.; Salimi-Khorshidi, G. BEHRT: Transformer for Electronic Health Records. Sci. Rep. 2020, 10, 7155.

- Ruiter, A.M.; Verschuuren, J.J.G.M.; Tannemaat, M.R. Fatigue in Patients with Myasthenia Gravis. A Systematic Review of the Literature. Neuromuscul. Disord. 2020, 30, 631–639.

- Marbin, D.; Piper, S.K.; Lehnerer, S.; Harms, U.; Meisel, A. Mental Health in Myasthenia Gravis Patients and Its Impact on Caregiver Burden. Sci. Rep. 2022, 12, 19275.

- Gelinas, D.; Parvin-Nejad, S.; Phillips, G.; Cole, C.; Hughes, T.; Silvestri, N.; Govindarajan, R.; Jefferson, M.; Campbell, J.; Burnett, H. The Humanistic Burden of Myasthenia Gravis: A Systematic Literature Review. J. Neurol. Sci. 2022, 437, 120268.

- Severcan, F.; Ozyurt, I.; Dogan, A.; Severcan, M.; Gurbanov, R.; Kucukcankurt, F.; Elibol, B.; Tiftikcioglu, I.; Gursoy, E.; Yangin, M.N.; et al. Decoding Myasthenia Gravis: Advanced Diagnosis with Infrared Spectroscopy and Machine Learning. Sci. Rep. 2024, 14, 19316.

- Ruiter, A.M.; Wang, Z.; Yin, Z.; Naber, W.C.; Simons, J.; Blom, J.T.; van Gemert, J.C.; Verschuuren, J.J.G.M.; Tannemaat, M.R. Assessing Facial Weakness in Myasthenia Gravis with Facial Recognition Software and Deep Learning. Ann. Clin. Transl. Neurol. 2023, 10, 1314–1325.

- Zhou, G.; Wang, S.; Lin, L.; Lu, K.; Lin, Z.; Zhang, Z.; Zhang, Y.; Cheng, D.; Szeto, K.; Peng, R.; et al. Screening for Immune-Related Biomarkers Associated with Myasthenia Gravis and Dilated Cardiomyopathy Based on Bioinformatics Analysis and Machine Learning. Heliyon 2024, 10, e28446.

- Liu, H.; Liu, G.; Guo, R.; Li, S.; Chang, T. Identification of Potential Key Genes for the Comorbidity of Myasthenia Gravis with Thymoma by Integrated Bioinformatics Analysis and Machine Learning. Bioinform. Biol. Insights 2024, 18, 11779322241281652.

- Sha, Q.; Zhang, Z.; Li, H.; Xu, Y.; Wang, J.; Du, A. Serum Metabolomic Profile of Myasthenia Gravis and Potential Values as Biomarkers in Disease Monitoring. Clin. Chim. Acta 2024, 562, 119873.

- Chang, C.C.; Liu, T.C.; Lu, C.J.; Chiu, H.C.; Lin, W.N. Explainable Machine Learning Model for Identifying Key Gut Microbes Metabolites Biomarkers Associated with Myasthenia Gravis. Comput. Struct. Biotechnol. J. 2024, 23, 1572–1583.

- Zhdanava, M.; Pesa, J.; Boonmak, P.; Schwartzbein, S.; Cai, Q.; Pilon, D.; Choudhry, Z.; Lafeuille, M.-H.; Lefebvre, P.; Souayah, N. Predictors of High Healthcare Cost among Patients with Generalized Myasthenia Gravis: A Combined Machine Learning and Regression Approach from a US Payer Perspective. Appl. Health Econ. Health Policy 2024, 22, 735–747.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).