1. Introduction

Abnormal cell proliferation in the brain is referred to as a brain tumor. The human brain has a complex structure, with different areas devoted to different nervous system functions. Tumors can develop in any part of the brain, including the protective membranes, the base of the brain, the brain stem, the sinuses, the nasal cavity, and many other places [1].

In the United States, brain tumors are detected in roughly 30 out of 100,000 people. Because they can invade or put pressure on healthy brain tissue, these tumors present serious hazards. Some brain tumors are malignant or may eventually turn malignant. By obstructing the flow of cerebrospinal fluid, they can raise the pressure inside the brain. Furthermore, certain tumor types may spread to distant brain regions through the cerebrospinal fluid [2].

Gliomas are classified as primary tumors since they are derived from glial cells, which support neurons in the brain. Astrocytomas, oligodendrogliomas, and ependymomas are among the kinds of gliomas commonly seen in the adult population. Meningiomas, which are usually benign, grow slowly and develop from the protective coverings that envelop the brain [3].

Diagnostic methods include MRI or CT scans followed by biopsies. Treatment options include chemotherapy, radiation therapy, targeted therapy, surgery, or a combination of these. Tumors are either malignant or benign, and the word “cancer” refers only to malignant tumors [4]. Although all types of cancer present as tumors, not all tumors are malignant. Compared to other cancer forms, brain tumors are linked to a poorer survival rate. Their irregular shapes, varying morphology, diverse locations, and indistinct borders make early identification extremely difficult [5]. Accurate tumor identification at this early stage is essential for medical specialists to make sound treatment decisions. Tumors are also categorized as primary or secondary. Primary tumors originate from cells inside the brain and may spread to other parts of the body, whereas secondary tumors, or metastases, develop from cells that originate in other body areas and then move to the brain [6].

Magnetic resonance (MR) images can be processed by manual, semi-automatic, or fully automated systems, which differ in how much user input they require. Precise segmentation and classification are critical in medical image processing, but they often require manual intervention by physicians, which can take a lot of time. A precise diagnosis allows patients to start the right treatment, which may result in longer lifespans. As a result, developing and applying novel frameworks that lessen the workload of radiologists in detecting and classifying tumors is of vital importance in the field of artificial intelligence [7].

Assessing the grade and type of a tumor is crucial, especially at the onset of a treatment strategy. The precise identification of abnormalities is vital for accurate diagnoses [8], which underscores the demand for effective classification and segmentation methods, or a combination of the two, that can analyze the brain qualitatively.

Traditional imaging techniques face precision and reliability challenges that frequently call for more complex computational methods to improve analysis. Deep learning has become well known in this domain for its ability to extract hierarchical features directly from data without hand-crafted processing steps, which significantly improves image classification tasks in medical diagnostics [9].

The convolutional neural network (CNN) has become a standard approach in clinical image classification. With its strong classification performance, the CNN is a popular technique that replaces classical hand-crafted feature extraction with learned features, and it performs best on large datasets [10].

VGG was designed by the Visual Geometry Group at the University of Oxford. Its primary contribution is increased network depth. VGG16 and VGG19 are two variants of the VGG architecture [11]. VGG16, which is very popular, has 13 convolutional layers and 3 fully connected layers, while the deeper VGG19 involves 16 convolutional layers and 3 fully connected layers; both can extract reliable features. VGG16 was selected for the classification of MRI brain tumor images [12].

Despite its high performance, a convolutional neural network is restricted by its limited receptive field. The vision transformer (ViT) network, by contrast, relies on self-attention to extract global information. Every transformer module contains a multi-head attention layer [13]. Although vision transformers lack the built-in inductive biases of convolutional neural networks, their results tend to be competitive. Unlike conventional fine-tuning techniques, the fine-tuned vision transformer (FTVT) structure adds a custom classifier head block that includes batch normalization (BN), ReLU, and dropout layers, which lets it learn task-specific representations directly from the dataset [14].
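To make the self-attention idea concrete, the following is a minimal sketch of single-head scaled dot-product attention in plain Python. It is not the authors' implementation: the helper names, the identity projection matrices, and the three toy "patch" tokens are illustrative assumptions, and a real ViT uses learned projections and several heads in parallel.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    tokens: n x d matrix of patch embeddings.
    w_q, w_k, w_v: d x d_k projection matrices (hypothetical weights).
    Returns (output, attention_weights).
    """
    q = matmul(tokens, w_q)
    k = matmul(tokens, w_k)
    v = matmul(tokens, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    # Every token scores every other token, so the context is global.
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Toy example: three 2-dimensional tokens and identity projections (assumed).
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
```

Each row of `weights` is a probability distribution over all tokens, which is what lets a transformer relate distant image regions in a single layer.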

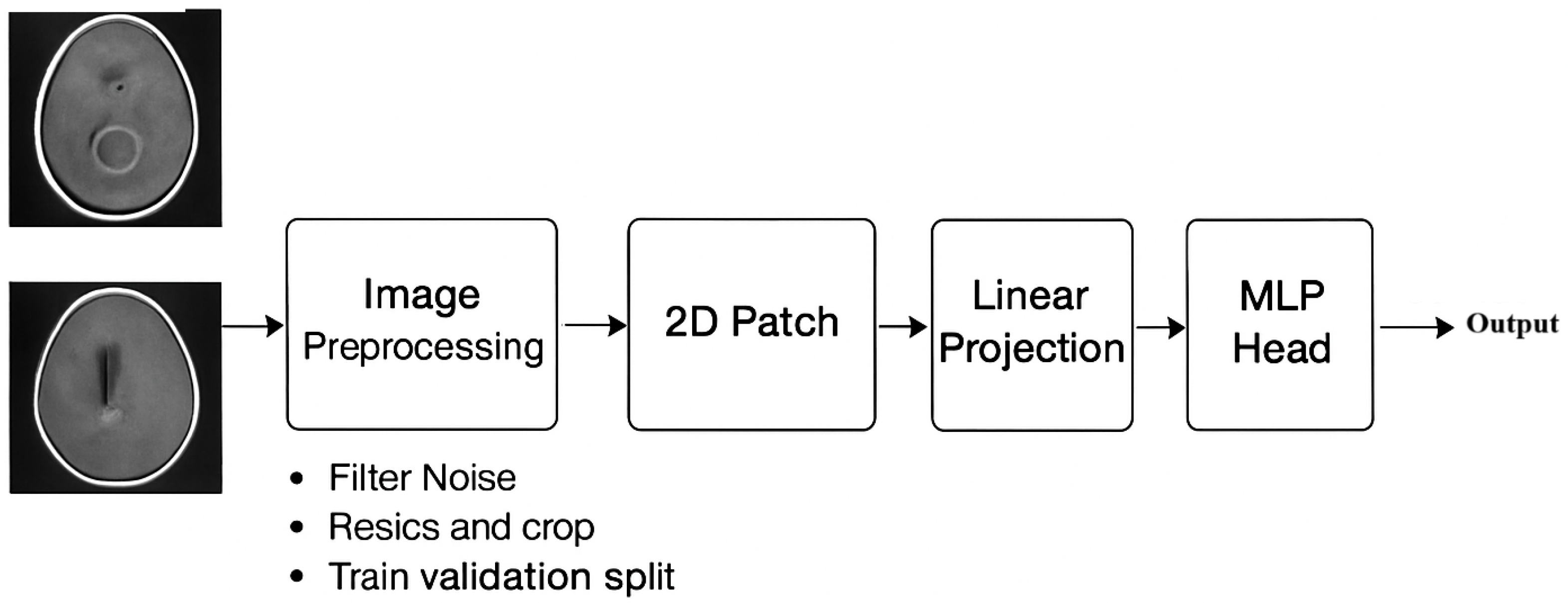

This paper presents an automated system developed for the classification and segmentation of brain tumors. The system has the potential to greatly improve the diagnostic abilities of specialists and other medical professionals, especially when analyzing brain cancers for prompt and precise diagnosis. Facilitating effective and accessible communication is one of the main goals of this study: the system seeks to narrow the knowledge gap between medical experts by simplifying the way magnetic resonance imaging (MRI) results are presented [15].

We introduce a hybrid of VGG-16 with fine-tuned vision transformer models to enhance brain tumor classification using MR images. The main contributions are as follows:

The fusion framework demonstrated outstanding performance, suggesting a promising direction for automated brain tumor detection.

By leveraging VGG-16’s deep convolutional feature extraction and FTVT-B16’s (or ViT-B16’s) transformer-based attention mechanisms, the hybrid model can capture both local and global features in MRI images more effectively than standalone models.

The combination helps in better distinguishing among tumor types (e.g., glioma, meningioma, pituitary tumors) by enhancing feature representation.

The fusion of CNN and transformer features can reduce overfitting compared to using only one architecture, leading to better performance on unseen datasets.

The transformer component (FTVT-B16/ViT-B16) provides attention maps that highlight tumor regions, aiding in model interpretability and helping clinicians understand classification decisions.

The hybrid VGG-16 + FTVT-B16 (or ViT-B16) model offers a powerful fusion of convolutional and transformer-based learning, significantly improving brain tumor classification in MRI.

Its contributions lie in higher accuracy, better feature fusion, and improved interpretability, making it a promising tool for medical imaging AI.
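As a rough illustration of what fusing convolutional and transformer features can look like, the sketch below concatenates two normalized feature vectors and passes them through a linear softmax layer. This section does not state the fusion operator, so late fusion by concatenation is an assumption here, as are the function names, the toy feature values, and the weights.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so neither branch dominates the fusion."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def fuse_features(cnn_features, vit_features):
    """Late fusion by concatenation (assumed; not confirmed by the text)."""
    return l2_normalize(cnn_features) + l2_normalize(vit_features)

def classify(fused, weights, biases):
    """Linear layer followed by softmax over the fused feature vector."""
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, biases)]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 2-dimensional features from each branch and a 2-class head.
fused = fuse_features([3.0, 4.0], [0.0, 2.0])
probs = classify(fused,
                 weights=[[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]],
                 biases=[0.0, 0.0])
```

In practice the two vectors would come from the penultimate layers of the CNN and the transformer, and the classification head would be trained jointly with, or on top of, both branches.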

Recent developments in deep learning for medical imaging, such as hybrid feature extraction for ulcer classification in wireless capsule endoscopy (WCE) data, demonstrate the effectiveness of combining deep and handcrafted features. In those non-neuroimaging applications, CNN features and texture descriptors were combined to enable robust ulcer classification. These techniques are useful, but they do not explain why a model predicts a particular class. Our work addresses this shortcoming with Grad-CAM visualizations for brain tumor MRI, meeting clinical demands for transparent AI by offering spatial explanations for brain tumor predictions.

Medical image fusion approaches show that combining MRI with PET-CT improves the sensitivity of tumor identification. However, these approaches do not provide mechanisms to explain how fused features contribute to a diagnosis, a crucial deficiency in high-stakes neuro-oncology. By showing model attention in single- or multi-modal data, our Grad-CAM framework fills this need. Recent studies that benchmark fusion approaches for tumor analysis likewise do not address interpretability. Our work, by contrast, classifies tumors and produces spatial explanations, ensuring that fusion preserves diagnostically significant traits.

Recent hybrid techniques show that combining deep and handcrafted features increases the accuracy of tumor identification. These approaches, however, do not reveal which characteristics (such as texture versus shape) influenced predictions. Our Grad-CAM study overcomes this drawback by displaying region-specific model attention.

The structure of this paper is as follows:

Section 2 introduces related research.

Section 3 describes the materials and methods.

Section 4 presents the experimental results.

Section 5 presents a discussion.

Finally, Section 6 presents the conclusions.

2. Related Work

The classification of brain tumors from MRI has improved greatly through deep learning techniques, but persistent challenges in feature representation, generalizability, and computational performance necessitate ongoing innovation. This section synthesizes seminal and contemporary works, delineating their contributions and limitations to contextualize the proposed hybrid framework. The papers discussed were selected for their focus on brain tumor detection and cover the last five years.

The capacity of convolutional neural networks (CNNs) to learn structural attributes automatically makes them an essential component of medical image analysis. Ozkaraca et al. (2023) [16] used VGG16 and DenseNet to obtain 97% accuracy, while Sharma et al. (2022) [17] used VGG-19 with considerable data augmentation to achieve 98% accuracy. For tumors with diffuse boundaries (like gliomas), CNNs are excellent at capturing local information but have trouble with global contextual relationships. VGG-19 and other deeper networks demand a lot of resources without corresponding increases in accuracy [16]. CNNs are also data dependent: without extensive, annotated datasets, performance declines significantly [17]. Eman et al. (2024) [18] proposed a framework that flattens the outputs of a CNN and EfficientNetV2B3 before feeding them into a KNN classifier. In a study with ResNet50 and DenseNet (97.32% accuracy), Sachdeva et al. (2024) [19] observed that skip connections enhanced gradient flow but added redundancy to feature maps. Their work highlighted the need for architectures that strike a balance between discriminative capability and depth. Rahman et al. (2023) [20] proposed PDCNN (98.12% accuracy) to fuse multi-scale features. While effective, the model’s complexity increased training time by 40% compared to standalone CNNs. Ullah et al. (2022) [21] and Alyami et al. (2024) [22] paired CNNs with SVMs (98.91% and 99.1% accuracy, respectively).

Vision transformers (ViTs) provide global context at a cost. ViTs, introduced by Dosovitskiy et al. (2020) [14], revolutionized image analysis with self-attention mechanisms. Tummala et al. (2022) [15] achieved 98.75% accuracy but noted ViTs’ dependency on large-scale datasets (>100 K images). Training from scratch on smaller medical datasets led to overfitting [23]. Reddy et al. (2024) [24] fine-tuned ViTs (98.8% accuracy) using transfer learning, mitigating data scarcity. However, computational costs remained high (30% longer inference times than CNNs).

Amin et al. (2022) [25] merged Inception-v3 with a Quantum Variational Classifier (99.2% accuracy), showcasing quantum computing’s potential. However, quantum implementations require specialized infrastructure, limiting clinical deployment. CNNs and ViTs excel at local and global features, respectively, but no framework optimally combined both [14,15,16,17,19,24]. Most models operated as “black boxes,” failing to meet clinical transparency needs [21,22]. Hybrid models often sacrificed speed for accuracy [20,24]. The proposed hybrid of VGG-16 and FTVT-b16 is innovative in that VGG-16 captures local textures (e.g., tumor margins), while FTVT-b16 models the global context (e.g., anatomical relationships).

While existing studies advanced classification accuracy, critical limitations persisted:

CNNs excelled in local texture analysis but ignored long-range dependencies, while ViTs prioritized global context at the expense of granular details.

Pure ViTs demanded large datasets, impractical for clinical settings with limited annotated MRIs.

Most frameworks operated as “black boxes,” lacking mechanisms with which to align predictions with radiological reasoning.

The proposed hybrid VGG-16 and FTVT-b16 framework addresses these gaps by harmonizing CNN-derived local features with ViT-driven global attention maps, using transfer learning to optimize data efficiency, and generating interpretable attention maps for clinical transparency. This work builds on foundational studies while introducing a novel fusion strategy that outperforms existing benchmarks, as detailed in Section 4.

4. Experimental Results

The performance of the proposed framework was evaluated using standard metrics, including accuracy and precision, as listed in Table 4, Table 5 and Table 6. The experiments indicated that the hybrid structure surpasses the overall performance of both standalone models, specifically in dealing with varied tumor presentations and noise in MRI images.

The three models were trained and run on Google Colaboratory (Colab), which is based on Jupyter Notebooks, using a virtual GPU (an Nvidia Tesla K80, manufactured by NVIDIA Corporation, Santa Clara, CA, USA) with 16 GB of RAM, the Keras library, and TensorFlow. Our proposed models, the datasets employed, and all source code are publicly available at https://github.com/mahmoudnasseraboali/VGG16-FTVTB16-for-brain-tumor-classifcation (accessed on 1 July 2025).

Table 1 and Table 2 summarize the experimental results of the three models (VGG-16, FTVT-B16, and VGG-16-FTVT-B16).

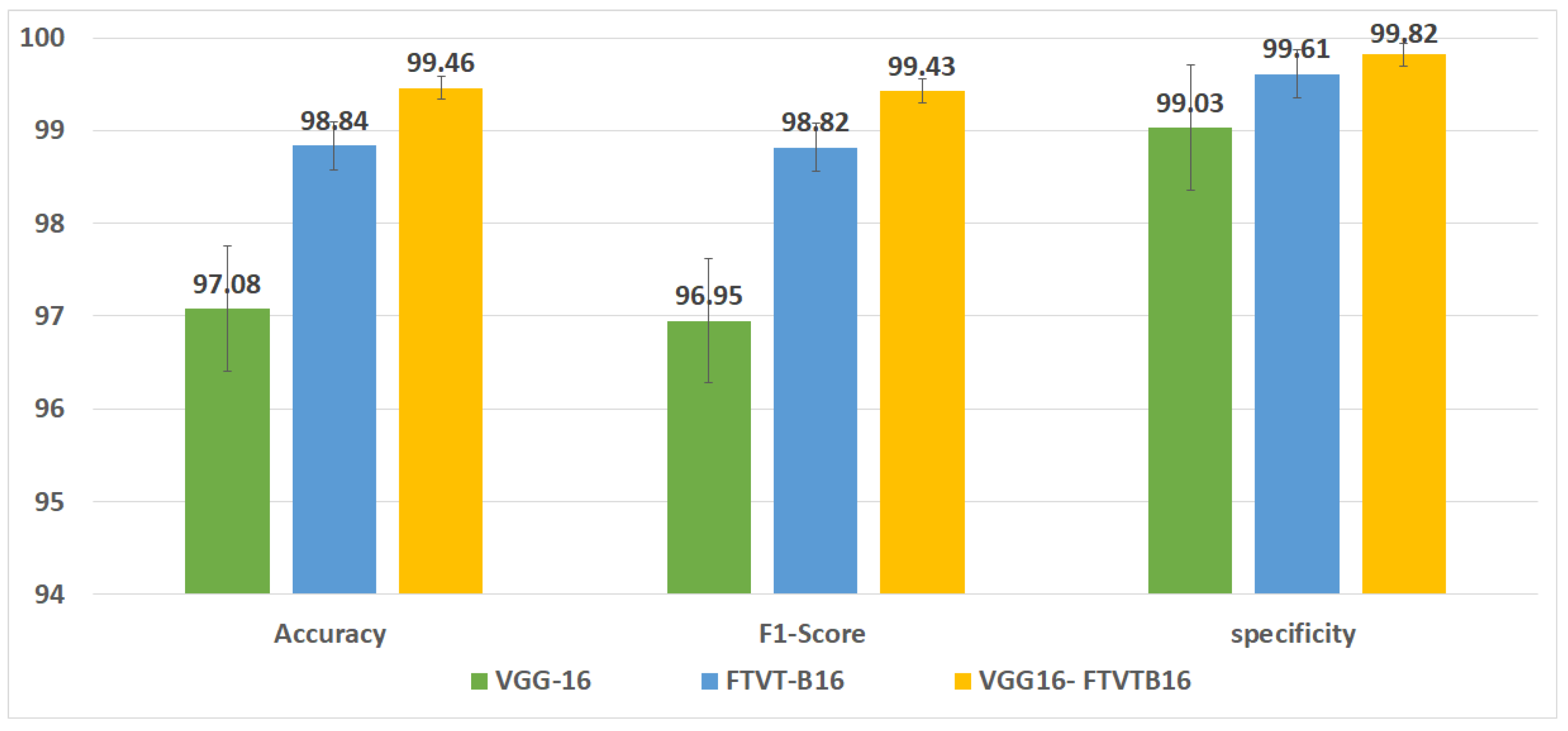

Figure 5 shows the accuracy according to each class of the dataset.

Figure 6 shows the overall accuracy of the suggested models.

Table 7 shows the computational complexity of the three models (VGG-16, FTVT-B16, and the hybrid model).

Table 8 reports the paired t-test results of the hybrid model (five-fold CV) on dataset no. 1.
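For readers unfamiliar with the paired t-test over cross-validation folds, the sketch below computes the t statistic from per-fold scores of two models evaluated on the same folds. The function name and the fold accuracies are hypothetical and are not taken from Table 8; a p-value would additionally require the Student t distribution with the returned degrees of freedom.

```python
import math

def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for paired samples.

    scores_a, scores_b: per-fold scores of two models on the same folds.
    Assumes the per-fold differences are not all identical (non-zero variance).
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    # Unbiased sample variance of the per-fold differences.
    variance = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    t_value = mean_diff / math.sqrt(variance / n)
    return t_value, n - 1

# Hypothetical five-fold accuracies for a hybrid model and a baseline.
hybrid_folds = [0.994, 0.995, 0.993, 0.996, 0.994]
baseline_folds = [0.970, 0.972, 0.969, 0.973, 0.971]
t_value, dof = paired_t_statistic(hybrid_folds, baseline_folds)
```

Pairing by fold removes the fold-to-fold variation shared by both models, so the test focuses on the difference between them.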

4.1. Evaluation Metrics

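Precision measures how often the model’s positive predictions are correct. It is defined as the number of true positives (TP) divided by the number of true positives plus the number of false positives (FP):

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall is defined as the number of true positives (TP) divided by the number of true positives plus the number of false negatives (FN):

$$\text{Recall} = \frac{TP}{TP + FN}$$

F1-score: the harmonic mean of precision and recall, given by the following formula:

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$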
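The three metrics above can be computed directly from the counts of true positives, false positives, and false negatives for a class. The following is a minimal sketch; the function name and the example counts are illustrative and do not come from the paper's tables.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from raw counts for one class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical counts: 90 true positives, 10 false positives, 30 false negatives.
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

For a multi-class problem such as this one, the counts are taken per class in a one-vs-rest fashion and the per-class scores are then averaged.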

Focal loss: a dynamically weighted variant of the standard cross-entropy loss, designed to address class imbalance by down-weighting easy-to-classify examples and focusing training on difficult misclassified samples. The focal loss for class-imbalanced classification is defined as

$$FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$$

where $p_t$ is the predicted probability of the true class;

$\alpha_t$ balances class importance;

$(1 - p_t)^{\gamma}$ down-weights easy examples;

$\gamma$ controls the focusing strength.
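The effect of the focusing term is easiest to see numerically. The sketch below evaluates the standard focal loss for a single sample; the default values of the class weight (0.25) and the focusing parameter (2.0) are common choices from the focal loss literature and are assumptions here, not values reported in this paper.

```python
import math

def focal_loss(p_t, alpha_t=0.25, gamma=2.0):
    """Focal loss for one sample.

    p_t: predicted probability of the true class.
    alpha_t: class-balancing weight (assumed default).
    gamma: focusing parameter (assumed default); gamma = 0 with alpha_t = 1
    reduces to the ordinary cross-entropy loss.
    """
    eps = 1e-12
    p_t = min(max(p_t, eps), 1.0)  # guard against log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy_example = focal_loss(0.9)  # confidently correct, so heavily down-weighted
hard_example = focal_loss(0.1)  # misclassified, so it keeps most of its loss
```

With these settings the hard example contributes far more to the total loss than the easy one, which is the behavior the definition above describes.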

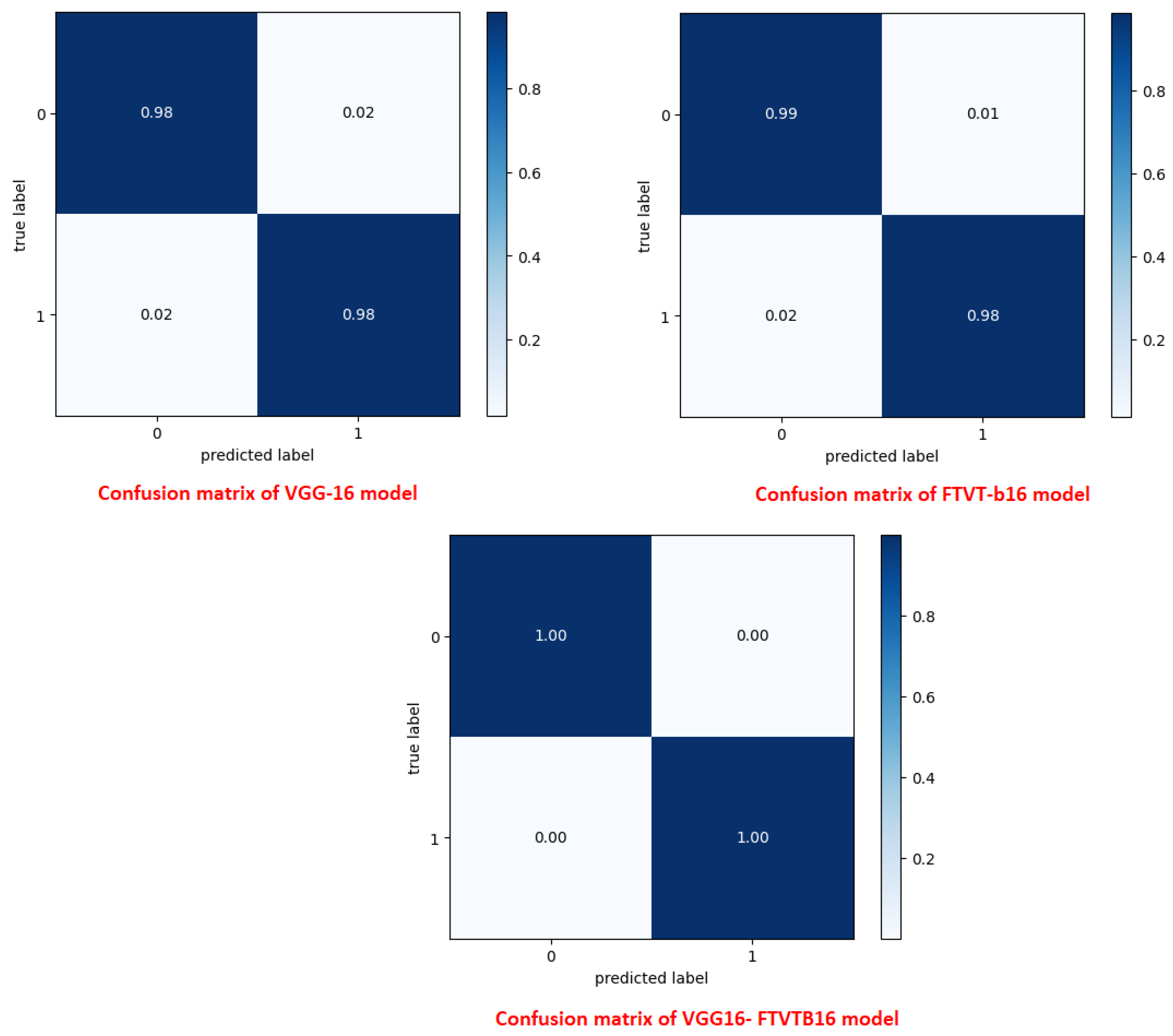

Each confusion matrix was normalized by dividing every element by the total of its class, which makes the misclassifications in every class easier to see. The labels G, P, M, and no-tumor (or 0, 1, 2, and 3) refer to glioma, pituitary tumor, meningioma, and no tumor, respectively. The values in the confusion matrix can be used to derive the overall classification accuracy and the recall for every class in the dataset. Figure 7 and Figure 8 illustrate the confusion matrix for each model.
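The normalization and the derived metrics can be sketched as follows. This assumes rows hold the true class and columns the predicted class, so that normalizing each row by its total places the per-class recall on the diagonal; the counts are hypothetical and are not the values from Figure 7 or Figure 8.

```python
def normalize_rows(matrix):
    """Divide every element by the total of its row (its true class)."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([count / total if total else 0.0 for count in row])
    return normalized

def overall_accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

# Hypothetical counts; class order: glioma, pituitary, meningioma, no tumor.
counts = [
    [48, 0, 2, 0],
    [0, 50, 0, 0],
    [1, 1, 47, 1],
    [0, 0, 0, 50],
]
normalized = normalize_rows(counts)
per_class_recall = [normalized[i][i] for i in range(len(normalized))]
accuracy = overall_accuracy(counts)
```

Row normalization keeps classes with fewer test images from appearing artificially better or worse than larger classes when the matrix is plotted.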

4.2. Visualization Analysis Using Grad-CAM

Gradient-weighted Class Activation Mapping (Grad-CAM) is an effective technique for illustrating which parts of an input image are most important to a prediction, and it was applied to the proposed hybrid model of VGG-16 and FTVT-B16. When employed for brain tumor classification, Grad-CAM highlights the discriminative regions the model focuses on, as shown in Figure 9 on dataset no. 1.

The Grad-CAM patterns observed for each class are as follows:

No tumor: no localized highlights; activation is diffuse, or may fall misleadingly on artifacts or normal variants.

Pituitary tumors: The heatmap focuses on the area around the pituitary gland; if it is large, it may indicate compression of the optic chiasm.

Glioma tumors: The heatmap emphasizes edema and the tumor core.

Meningioma tumors: The heatmap emphasizes strong activation close to the dural tail (if present) and smooth, rounded tumor borders.
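The heatmaps described above follow the general Grad-CAM recipe: average the gradients of the class score over each feature map to obtain channel weights, take the weighted sum of the feature maps, and apply a ReLU. The sketch below shows that computation on toy data in plain Python. The choice of layer, the function name, and the input values are assumptions, and the final upsampling of the map to the image size is omitted.

```python
def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM map.

    activations: list of K feature maps (each H x W) from a chosen layer.
    gradients: gradients of the class score with respect to those maps,
    in the same shape.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average of the gradients over each map.
    weights = [sum(sum(row) for row in grad) / (height * width)
               for grad in gradients]
    # Weighted sum of the feature maps, then ReLU to keep positive evidence.
    cam = [[max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)] for i in range(height)]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam

# Toy example with two 2 x 2 feature maps (hypothetical values).
activations = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
gradients = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
heatmap = grad_cam(activations, gradients)
```

Here the first map supports the class and the second opposes it, so only the top-left location remains highlighted after the ReLU.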

5. Results and Discussion

This study classified brain tumor types, following a preprocessing stage, using a hybrid of VGG-16 and a fine-tuned vision transformer, and compared performance metrics such as accuracy, precision, and recall. The fusion model achieved a classification accuracy of 99.46%, surpassing standalone VGG-16 (97.08%) and FTVT-b16 (98.84%). The suggested framework demonstrated outstanding performance in terms of sensitivity, precision, and recall, suggesting a promising direction for automated brain tumor detection.

Figure 10 shows the performance metrics (precision, recall, F1-score, specificity) from the fusion of the VGG-16 and FTVT-b16 models.

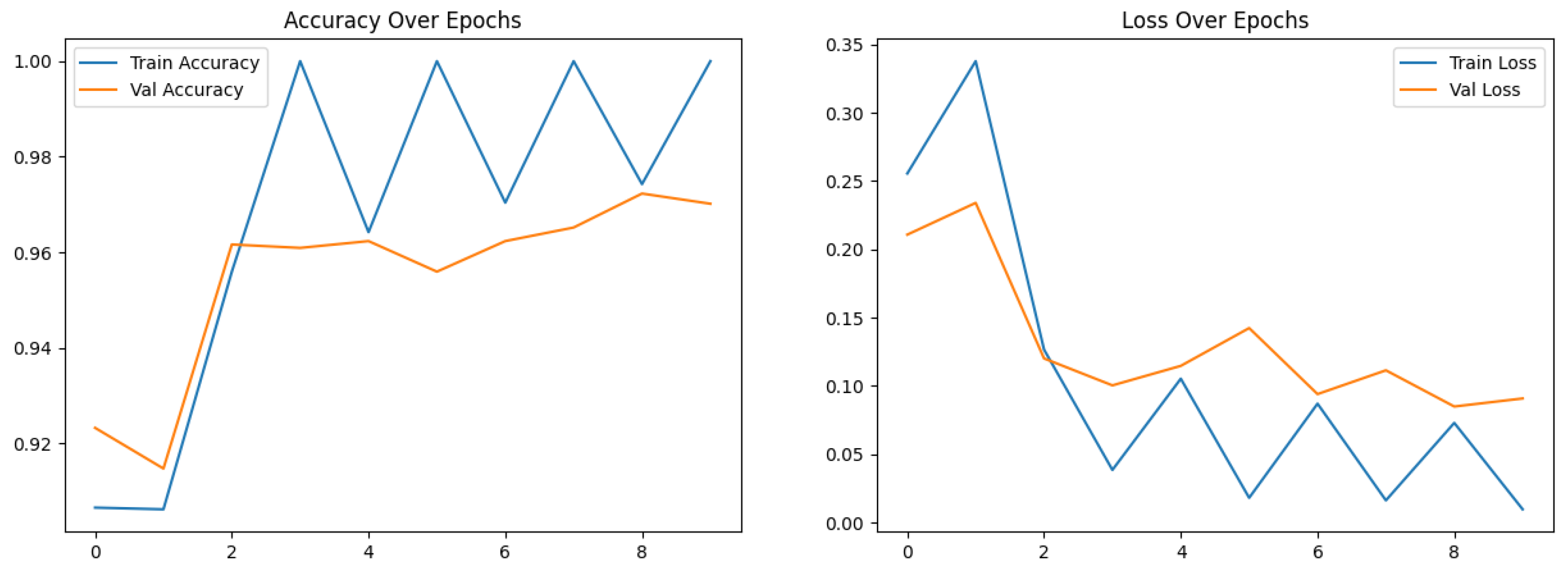

Figure 11 shows the accuracy and loss over epochs for the proposed hybrid model.

Table 9 compares the achieved accuracy with related work on dataset no. 1 (Kaggle dataset).

Table 10 compares the achieved accuracy with past work on dataset no. 2 (BR35H).

The hybrid model performs better than current benchmarks when compared to modern approaches. For example, it outperformed the parallel deep convolutional neural network (PDCNN, 98.12%; Rahman et al., 2023 [17]) and the Inception-QVR framework (99.2%; Amin et al., 2022 [22]), both of which depend on homogeneous designs, in terms of accuracy. While pure ViTs require large amounts of training data (Khan et al., 2022 [13]) and conventional CNNs like VGG-19 are limited by their localized receptive fields (Mascarenhas & Agarwal, 2021 [11]), the suggested hybrid makes use of FTVT-b16’s ability to resolve long-range pixel interactions and VGG-16’s effectiveness in hierarchical feature extraction. Confusion matrix analysis also showed that the standalone VGG-16 misclassified 15 pituitary cases, most likely because it cannot contextualize anatomical relationships outside of local regions. The hybrid model, on the other hand, decreased misclassifications to two cases per class, illustrating the role of transformer-driven attention in disambiguating heterogeneous tumor appearances (Tummala et al., 2022 [23]).

The model’s balanced class-wise measures and excellent specificity (99.82%) offer significant promise for improving diagnostic workflows from a clinical standpoint. By reducing false positives, the methodology tackles a widespread issue in neuro-oncology, where incorrect diagnosis can result in unnecessary surgical procedures (van den Bent et al., 2023 [1]). For instance, the model’s incorporation of both global and local features improves the accuracy of distinguishing pituitary adenomas from non-neoplastic cysts, a task that has historically been subjective. Additionally, the FTVT-b16 component produces attention maps that show the locations of tumors, providing radiologists with visually understandable explanations.

6. Conclusions

Brain tumor classification on MRI is a critical issue in neuro-oncology, necessitating advanced computational frameworks to enhance diagnostic performance. This paper proposed a hybrid deep learning framework that combines VGG-16 feature extraction with the global contextual modeling of FTVT-b16, a fine-tuned vision transformer (ViT), to address the limitations of standalone convolutional neural networks (CNNs) and transformer-based architectures. The proposed framework was tested on a dataset of 7023 MRI images spanning four classes: glioma, meningioma, pituitary, and no-tumor. It achieved a classification accuracy of 99.46% on dataset no. 1 (Kaggle) and 99.90% on dataset no. 2 (Br35H), surpassing both standalone VGG-16 (97.08%) and FTVT-b16 (98.84%). Key performance metrics, including precision (99.43%), recall (99.46%), and specificity (99.82%), underscore the model’s robustness, particularly in reducing misclassifications of anatomically complex cases such as pituitary tumors.

Table 11 shows the macro-averaged ROC-AUC comparison on dataset no. 1.

Combining CNN-derived local features with ViT-driven global attention maps addressed the “black-box” constraints of traditional deep learning systems, improving interpretability through visualized regions of interest and increasing diagnostic precision. This dual capability gives radiologists both high accuracy and useful insights into model decisions, in line with clinical workflows.

Despite these developments, generalization is limited by the study’s reliance on a single-source dataset because demographic variety and scanner variability were not taken into consideration. Multi-institutional validation should be given top priority in future studies in order to evaluate robustness across diverse demographics and imaging procedures. Furthermore, investigating advanced explainability tools (e.g., Grad-CAM, SHAP) and multi-modal data integration (e.g., diffusion-weighted imaging, PET) could improve diagnostic value and clinical acceptance. To advance AI-driven precision in neuro-oncology, real-world application through collaborations with healthcare organizations and benchmarking against advanced technology like transformers will be essential.

In conclusion, this paper lays a foundation for hybrid deep learning in medical image analysis by showing that the fusion of a CNN and a ViT can bridge the gap between computational innovation and clinical utility. By enhancing both accuracy and interpretability, the proposed framework has great potential to improve patient outcomes through earlier, more accurate diagnosis.