Exploration of 3D Few-Shot Learning Techniques for Classification of Knee Joint Injuries on MR Images

Abstract

1. Introduction

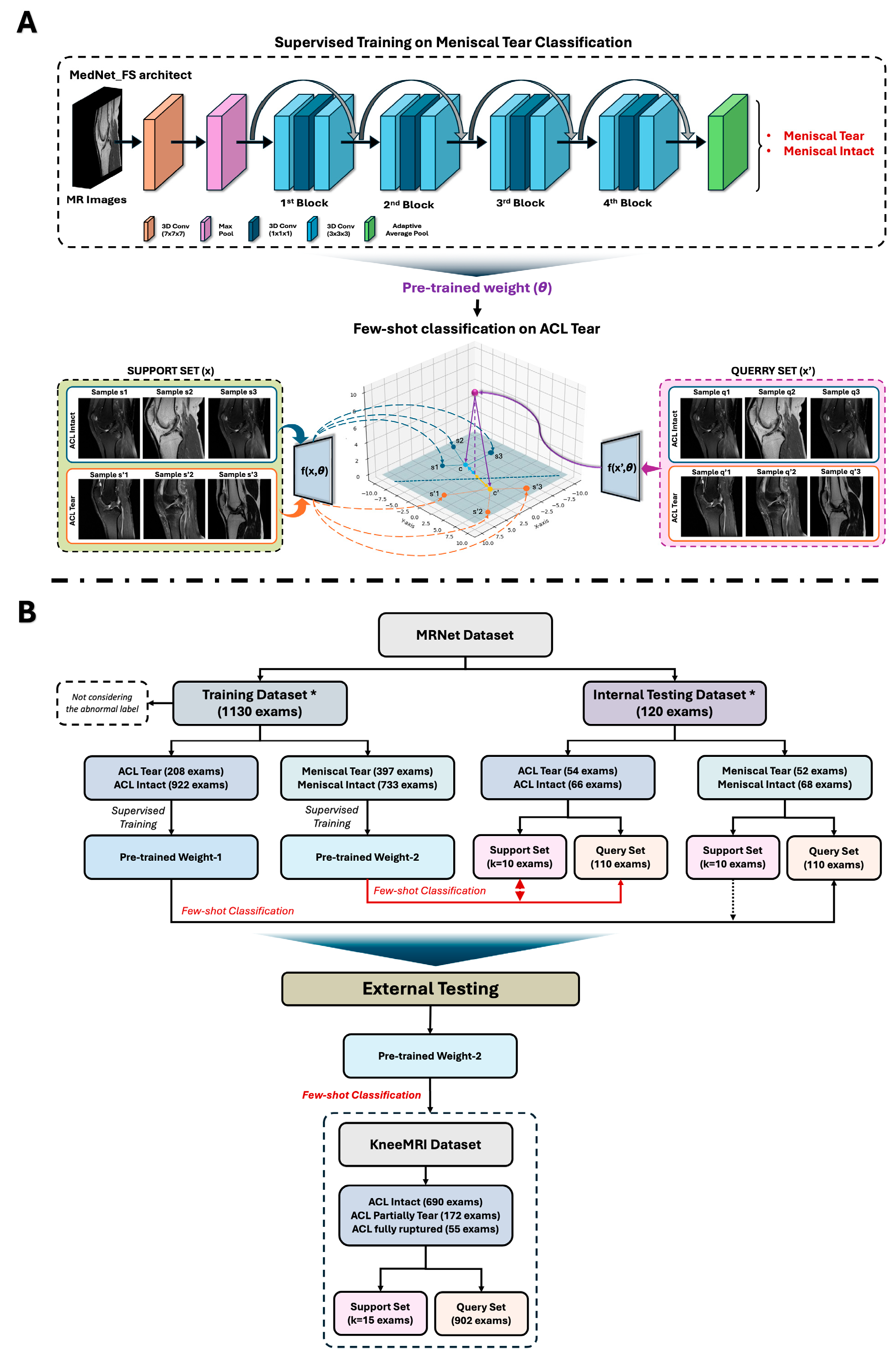

2. Materials and Methods

2.1. Data Selection

2.2. Data Usage

- Pre-trained Weight 1: obtained from supervised training to distinguish ACL tear from intact ACL.

- Pre-trained Weight 2: obtained from supervised training to distinguish meniscal injuries from non-meniscal injuries.

2.3. MedNet-FS Model Construction

2.4. Performance Metrics

2.5. Statistical Analysis

3. Results

3.1. Patient Characteristics

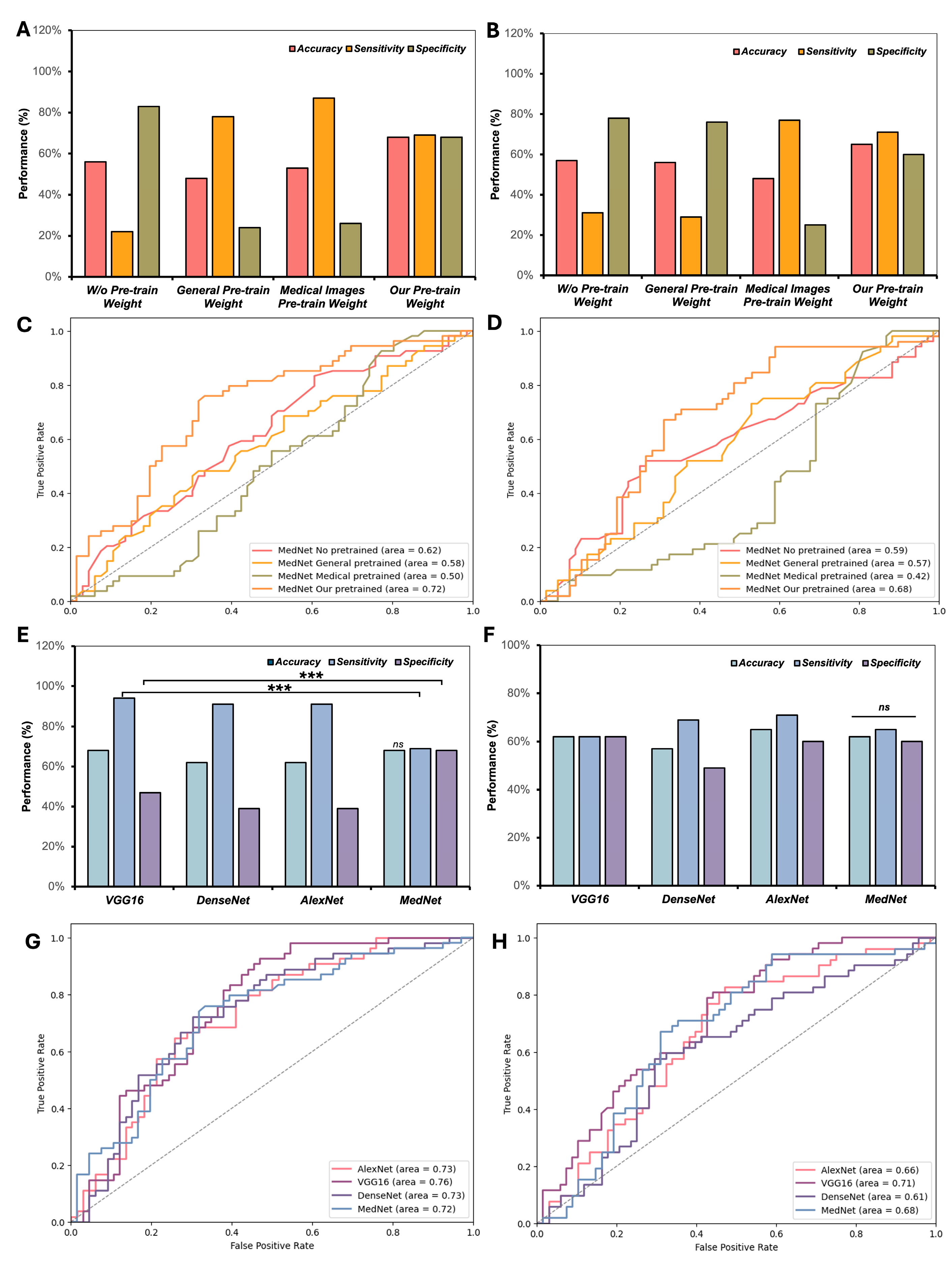

3.2. Investigation of Various Pre-Trained Weights and CNN Frameworks in Few-Shot Classification of Knee Injuries

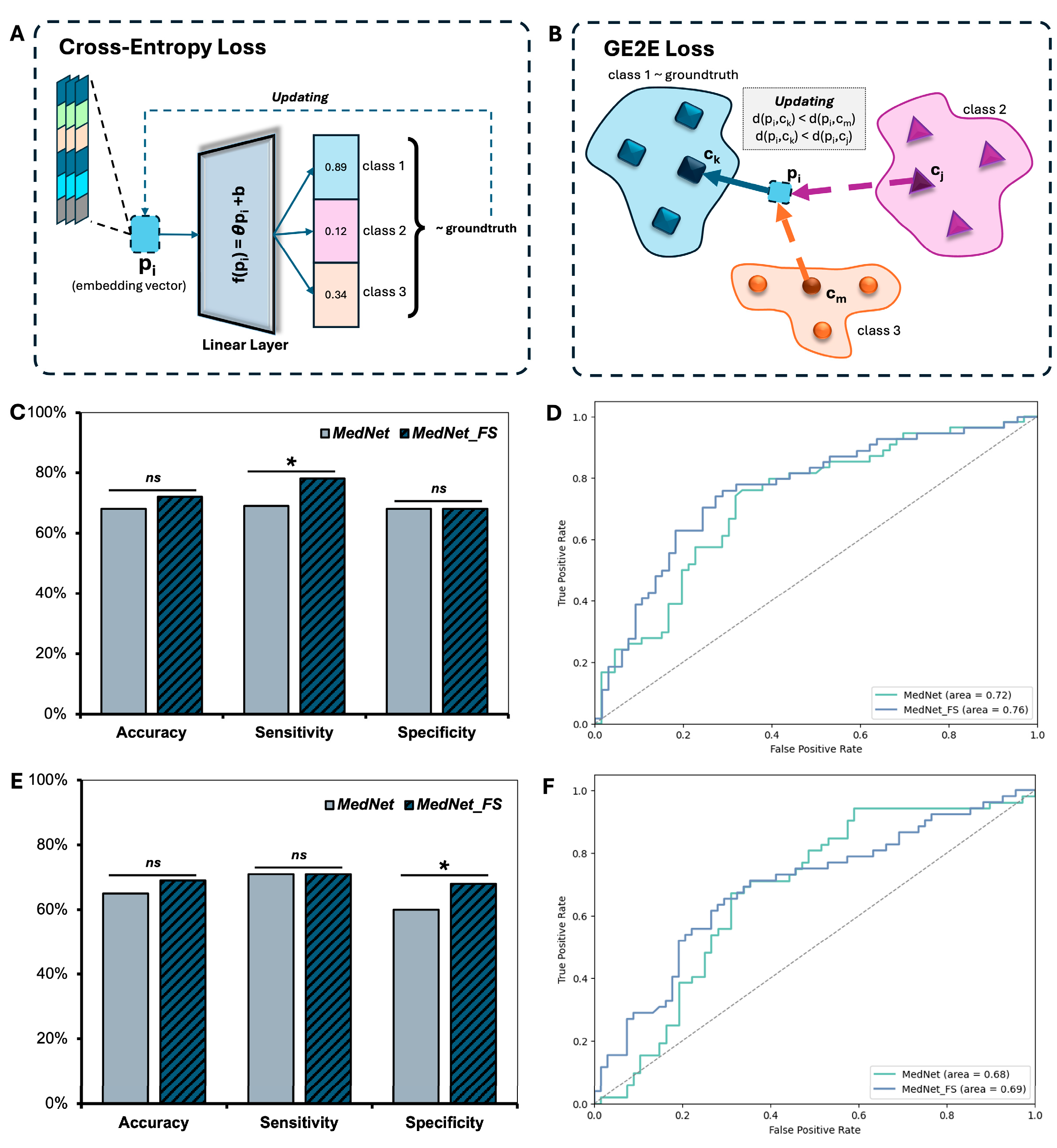

3.3. Performance of the MedNet-FS Model with GE2E Loss

3.4. Effectiveness of Few-Shot Learning Technique Compared to Supervised Learning on Small Samples

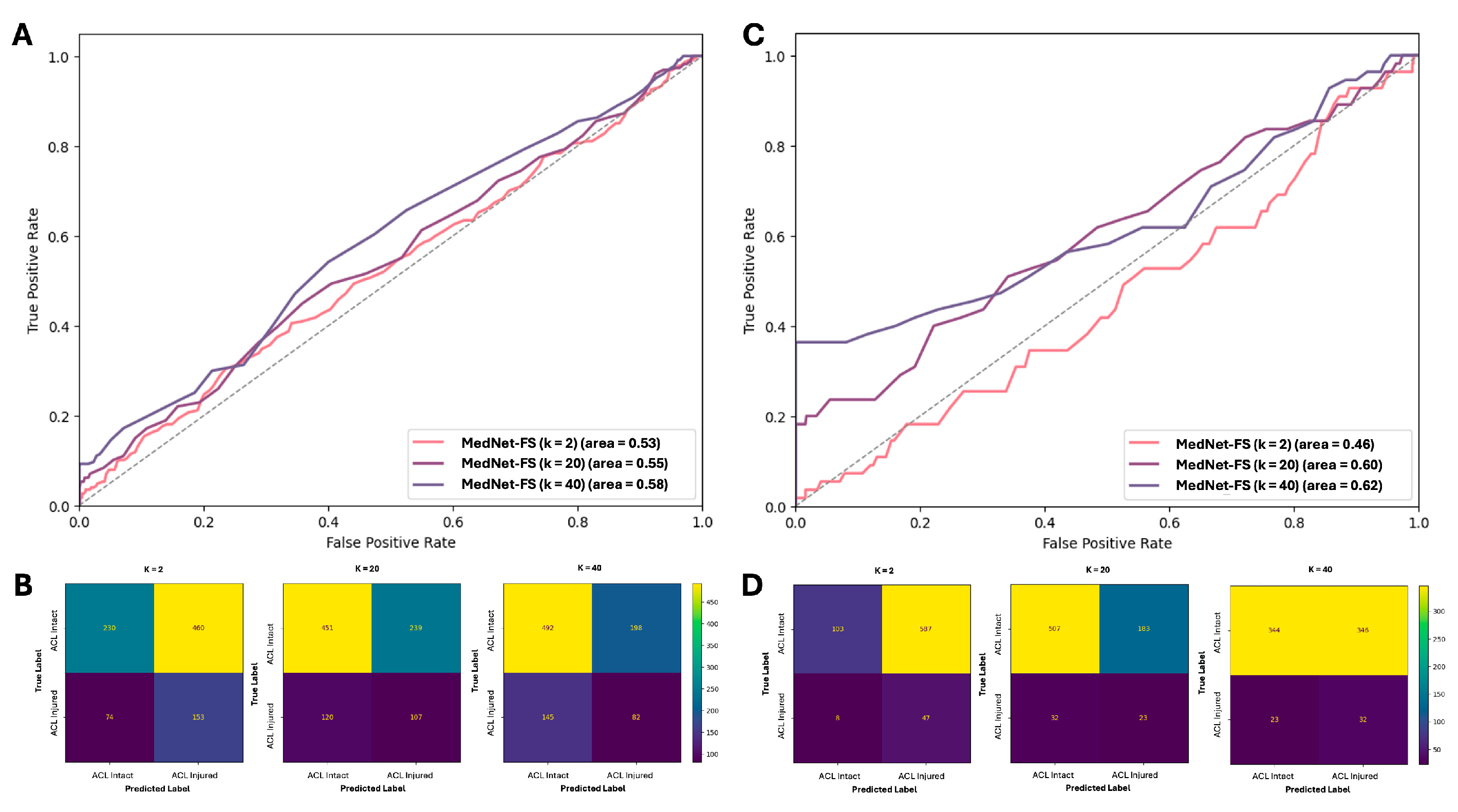

3.5. Performances of MedNet-FS Model on External Testing Datasets

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACL | Anterior cruciate ligament |
| PCL | Posterior cruciate ligament |
| MCL | Medial collateral ligament |
| LCL | Lateral collateral ligament |
| MR | Magnetic resonance |
| MRI | Magnetic resonance imaging |
| CNN | Convolutional neural network |
| DL | Deep learning |
| GE2E | Generalized end-to-end |
| ACC | Accuracy |
| AUC | Area under the curve |
| SEN | Sensitivity |
| SPE | Specificity |
| ROC-AUC | Area under the receiver operating characteristic curve |
| XAI | Explainable AI |
| FSL | Few-shot learning |
| SAG | Sagittal |

References

1. Maniar, N.; Verhagen, E.; Bryant, A.L.; Opar, D.A. Trends in Australian knee injury rates: An epidemiological analysis of 228,344 knee injuries over 20 years. Lancet Reg. Health–West. Pac. 2022, 21, 100409.

2. Snoeker, B.; Turkiewicz, A.; Magnusson, K.; Frobell, R.; Yu, D.; Peat, G.; Englund, M. Risk of knee osteoarthritis after different types of knee injuries in young adults: A population-based cohort study. Br. J. Sports Med. 2020, 54, 725–730.

3. Fanelli, G.C.; Orcutt, D.R.; Edson, C.J. The multiple-ligament injured knee: Evaluation, treatment, and results. Arthrosc. J. Arthrosc. Relat. Surg. 2005, 21, 471–486.

4. Moatshe, G.; Chahla, J.; LaPrade, R.F.; Engebretsen, L. Diagnosis and treatment of multiligament knee injury: State of the art. J. ISAKOS 2017, 2, 152–161.

5. Papp, D.F.; Khanna, A.J.; McCarthy, E.F.; Carrino, J.A.; Farber, A.J.; Frassica, F.J. Magnetic resonance imaging of soft-tissue tumors: Determinate and indeterminate lesions. JBJS 2007, 89, 103–115.

6. Hung, T.N.K.; Vy, V.P.T.; Tri, N.M.; Hoang, L.N.; Tuan, L.V.; Ho, Q.T.; Le, N.Q.K.; Kang, J.H. Automatic detection of meniscus tears using backbone convolutional neural networks on knee MRI. J. Magn. Reson. Imaging 2023, 57, 740–749.

7. Dung, N.T.; Thuan, N.H.; Van Dung, T.; Van Nho, L.; Tri, N.M.; Vy, V.P.T.; Hoang, L.N.; Phat, N.T.; Chuong, D.A.; Dang, L.H. End-to-end deep learning model for segmentation and severity staging of anterior cruciate ligament injuries from MRI. Diagn. Interv. Imaging 2023, 104, 133–141.

8. Namiri, N.K.; Flament, I.; Astuto, B.; Shah, R.; Tibrewala, R.; Caliva, F.; Link, T.M.; Pedoia, V.; Majumdar, S. Deep learning for hierarchical severity staging of anterior cruciate ligament injuries from MRI. Radiol. Artif. Intell. 2020, 2, e190207.

9. Bien, N.; Rajpurkar, P.; Ball, R.L.; Irvin, J.; Park, A.; Jones, E.; Bereket, M.; Patel, B.N.; Yeom, K.W.; Shpanskaya, K. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet. PLoS Med. 2018, 15, e1002699.

10. Liu, F.; Guan, B.; Zhou, Z.; Samsonov, A.; Rosas, H.; Lian, K.; Sharma, R.; Kanarek, A.; Kim, J.; Guermazi, A. Fully automated diagnosis of anterior cruciate ligament tears on knee MR images by using deep learning. Radiol. Artif. Intell. 2019, 1, 180091.

11. Liu, F.; Zhou, Z.; Samsonov, A.; Blankenbaker, D.; Larison, W.; Kanarek, A.; Lian, K.; Kambhampati, S.; Kijowski, R. Deep learning approach for evaluating knee MR images: Achieving high diagnostic performance for cartilage lesion detection. Radiology 2018, 289, 160–169.

12. Ambellan, F.; Tack, A.; Ehlke, M.; Zachow, S. Automated segmentation of knee bone and cartilage combining statistical shape knowledge and convolutional neural networks: Data from the Osteoarthritis Initiative. Med. Image Anal. 2019, 52, 109–118.

13. Štajduhar, I.; Mamula, M.; Miletić, D.; Uenal, G. Semi-automated detection of anterior cruciate ligament injury from MRI. Comput. Methods Programs Biomed. 2017, 140, 151–164.

14. Shin, Y.; Yang, J.; Lee, Y.H. Deep generative adversarial networks: Applications in musculoskeletal imaging. Radiol. Artif. Intell. 2021, 3, e200157.

15. Singh, M.; Prabhakar, K.R.; Pamulakanty Sudarshan, V.; Poduval, M.; Pal, A.; Gubbi, J. A Few-shot approach to MRI-based Knee Disorder Diagnosis using Fuzzy Layers. In Proceedings of the Thirteenth Indian Conference on Computer Vision, Graphics and Image Processing, Gandhinagar, India, 8–10 December 2022; pp. 1–9.

16. Wang, H.-H.; Mai, T.-H.; Ye, N.-X.; Lin, W.-I.; Lin, H.-T. CLImage: Human-Annotated Datasets for Complementary-Label Learning. arXiv 2023, arXiv:2305.08295.

17. Cheng, W.-C.; Mai, T.-H.; Lin, H.-T. From SMOTE to mixup for deep imbalanced classification. In Proceedings of the International Conference on Technologies and Applications of Artificial Intelligence, Yunlin, China, 1–2 December 2023; pp. 75–96.

18. Feng, Y.; Chen, J.; Xie, J.; Zhang, T.; Lv, H.; Pan, T. Meta-learning as a promising approach for few-shot cross-domain fault diagnosis: Algorithms, applications, and prospects. Knowl.-Based Syst. 2022, 235, 107646.

19. Işık, G.; Paçal, İ. Few-shot classification of ultrasound breast cancer images using meta-learning algorithms. Neural Comput. Appl. 2024, 20, 12047–12059.

20. Singh, R.; Bharti, V.; Purohit, V.; Kumar, A.; Singh, A.K.; Singh, S.K. MetaMed: Few-shot medical image classification using gradient-based meta-learning. Pattern Recognit. 2021, 120, 108111.

21. Kataoka, H.; Wakamiya, T.; Hara, K.; Satoh, Y. Would mega-scale datasets further enhance spatiotemporal 3D CNNs? arXiv 2020, arXiv:2004.04968.

22. Hood, D.C.; Birch, D.G. Human cone receptor activity: The leading edge of the a-wave and models of receptor activity. Vis. Neurosci. 1993, 10, 857–871.

23. Alzubaidi, L.; Santamaría, J.; Manoufali, M.; Mohammed, B.; Fadhel, M.A.; Zhang, J.; Al-Timemy, A.H.; Al-Shamma, O.; Duan, Y. MedNet: Pre-trained convolutional neural network model for the medical imaging tasks. arXiv 2021, arXiv:2110.06512.

24. Wan, L.; Wang, Q.; Papir, A.; Moreno, I.L. Generalized end-to-end loss for speaker verification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4879–4883.

25. Iandola, F.; Moskewicz, M.; Karayev, S.; Girshick, R.; Darrell, T.; Keutzer, K. Densenet: Implementing efficient convnet descriptor pyramids. arXiv 2014, arXiv:1404.1869.

26. Qassim, H.; Verma, A.; Feinzimer, D. Compressed residual-VGG16 CNN model for big data places image recognition. In Proceedings of the 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; pp. 169–175.

27. Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Van Esesn, B.C.; Awwal, A.A.S.; Asari, V.K. The history began from AlexNet: A comprehensive survey on deep learning approaches. arXiv 2018.

28. Swift, M.L. GraphPad prism, data analysis, and scientific graphing. J. Chem. Inf. Comput. Sci. 1997, 37, 411–412.

29. Ge, Y.; Guo, Y.; Das, S.; Al-Garadi, M.A.; Sarker, A. Few-shot learning for medical text: A review of advances, trends, and opportunities. J. Biomed. Inform. 2023, 144, 104458.

30. Littlefield, N.; Moradi, H.; Amirian, S.; Kremers, H.M.; Plate, J.F.; Tafti, A.P. Enforcing explainable deep few-shot learning to analyze plain knee radiographs: Data from the osteoarthritis initiative. In Proceedings of the 2023 IEEE 11th International Conference on Healthcare Informatics (ICHI), Houston, TX, USA, 26–29 June 2023; pp. 252–260.

31. Dai, Z.; Yi, J.; Yan, L.; Xu, Q.; Hu, L.; Zhang, Q.; Li, J.; Wang, G. PFEMed: Few-shot medical image classification using prior guided feature enhancement. Pattern Recognit. 2023, 134, 109108.

32. Gull, S.; Kim, J. Metric-Based Meta-Learning Approach for Few-Shot Classification of Brain Tumors Using Magnetic Resonance Images. Electronics 2025, 14, 1863.

33. Wang, W.; Li, Y.; Lu, K.; Zhang, J.; Chen, P.; Yan, K.; Wang, B. Medical tumor image classification based on Few-shot learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 21, 715–724.

34. York, T.J.; Szyszka, B.; Brivio, A.; Musbahi, O.; Barrett, D.; Cobb, J.P.; Jones, G.G. A radiographic artificial intelligence tool to identify candidates suitable for partial knee arthroplasty. Arch. Orthop. Trauma Surg. 2024, 144, 4963–4968.

35. Bizzo, B.C.; Almeida, R.R.; Michalski, M.H.; Alkasab, T.K. Artificial intelligence and clinical decision support for radiologists and referring providers. J. Am. Coll. Radiol. 2019, 16, 1351–1356.

36. Hussain, D.; Al-Masni, M.A.; Aslam, M.; Sadeghi-Niaraki, A.; Hussain, J.; Gu, Y.H.; Naqvi, R.A. Revolutionizing tumor detection and classification in multimodality imaging based on deep learning approaches: Methods, applications and limitations. J. X-Ray Sci. Technol. 2024, 32, 857–911.

37. Xu, X.; Li, J.; Zhu, Z.; Zhao, L.; Wang, H.; Song, C.; Chen, Y.; Zhao, Q.; Yang, J.; Pei, Y. A comprehensive review on synergy of multi-modal data and AI technologies in medical diagnosis. Bioengineering 2024, 11, 219.

| Dataset name | MRNet [9] | KneeMRI [13] |
|---|---|---|
| Usage | Training/Internal testing | External testing |
| Total exams | 1250 exams | 917 exams |
| Number of patients | 1199 patients | N/A |
| Number of women (%) | 530 (44.20) | N/A |
| Age, mean (SD) | 38.11 (16.90) | N/A |
| BMI (kg/m²), mean (SD) | N/A | N/A |
| Source | Stanford University Medical Center, Stanford, CA, USA | Clinical Hospital Centre Rijeka, Rijeka, Croatia |
| Scanner | GE scanner (GE Healthcare) | Siemens Avanto scanner (Siemens Healthineers) |
| Magnetic field | 1.5 T and 3.0 T | 1.5 T |
| Number of classes | 3 classes | 3 classes |
| Class distribution: case number (%) | ACL tear: 262 (20.96%) | Intact ACL: 690 (75.25%) |
| | Meniscal tear: 449 (35.92%) | ACL partial tear: 172 (18.76%) |
| | Abnormal: 1008 (80.64%) | ACL completely ruptured: 55 (5.99%) |
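
The class-distribution percentages in the table above can be recomputed from the listed counts and exam totals. The following is a minimal sketch of that arithmetic; the variable and function names are illustrative and not taken from the study. It also shows that the three MRNet label counts add up to more than the 1250 exams, which is consistent with MRNet labels being assigned per exam rather than as mutually exclusive classes, whereas the three KneeMRI counts sum exactly to the 917 exams.

```python
# Counts and totals transcribed from the dataset table; names are illustrative.
MRNET_TOTAL = 1250
KNEEMRI_TOTAL = 917

mrnet_counts = {"ACL tear": 262, "Meniscal tear": 449, "Abnormal": 1008}
kneemri_counts = {
    "Intact ACL": 690,
    "ACL partial tear": 172,
    "ACL completely ruptured": 55,
}


def class_percentages(counts, total):
    """Return each class count as a percentage of the total exams (2 decimals)."""
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


mrnet_pct = class_percentages(mrnet_counts, MRNET_TOTAL)
kneemri_pct = class_percentages(kneemri_counts, KNEEMRI_TOTAL)

# MRNet label counts overlap (their sum exceeds the number of exams),
# while the KneeMRI classes partition the exams exactly.
mrnet_label_sum = sum(mrnet_counts.values())
kneemri_label_sum = sum(kneemri_counts.values())

if __name__ == "__main__":
    print(mrnet_pct)
    print(kneemri_pct)
    print(mrnet_label_sum, kneemri_label_sum)
```

Note that 55/917 is about 5.998%, which standard rounding reports as 6.00 while the table lists 5.99.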

| Model | Classification Target | ACC | SEN | SPE | AUC |
|---|---|---|---|---|---|
| Supervised training 1 | ACL Tear | 0.67 | 0.48 | 0.79 | 0.75 |
| | Meniscus Tear | 0.67 | 0.32 | 0.93 | 0.64 |
| MedNet-FS (k = 2) | ACL Tear | 0.43 | 0.22 | 0.57 | 0.31 |
| | Meniscus Tear | 0.39 | 0.13 | 0.61 | 0.25 |
| MedNet-FS (k = 20) | ACL Tear | 0.68 | 0.65 | 0.72 | 0.70 |
| | Meniscus Tear | 0.73 | 0.72 | 0.74 | 0.76 |
| MedNet-FS (k = 40) | ACL Tear | 0.72 | 0.73 | 0.72 | 0.76 |
| | Meniscus Tear | 0.69 | 0.67 | 0.72 | 0.70 |
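
For readers less familiar with the ACC, SEN, SPE and AUC columns reported above, the sketch below shows the standard definitions of these metrics for a binary task. It is not the authors' evaluation code: the function names, the toy labels and scores, and the 0.5 decision threshold are all assumptions made for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity from binary labels (1 = injury)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    acc = (tp + tn) / len(pairs)
    # Sensitivity: fraction of true positives among all actual positives.
    sen = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity: fraction of true negatives among all actual negatives.
    spe = tn / (tn + fp) if (tn + fp) else float("nan")
    return {"ACC": acc, "SEN": sen, "SPE": spe}


def roc_auc(y_true, scores):
    """ROC-AUC as the probability that a random positive case receives a
    higher score than a random negative case (ties count as 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example (hypothetical data, not from the study).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.6]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

metrics = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)

if __name__ == "__main__":
    print(metrics, auc)
```

ACC, SEN and SPE depend on the chosen decision threshold, whereas AUC is computed from the ranking of the scores alone, which is why a model can show a reasonable AUC alongside a low SEN or SPE at a particular operating point.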

| | Initial Input Sample (k) | ACC | SEN | SPE | AUC |
|---|---|---|---|---|---|
| Including partially torn cases | k = 2 | 0.42 | 0.67 | 0.33 | 0.53 |
| | k = 20 | 0.63 | 0.36 | 0.71 | 0.55 |
| | k = 40 | 0.61 | 0.47 | 0.65 | 0.58 |
| Excluding partially torn cases | k = 2 | 0.20 | 0.85 | 0.15 | 0.46 |
| | k = 20 | 0.71 | 0.42 | 0.73 | 0.60 |
| | k = 40 | 0.50 | 0.58 | 0.50 | 0.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Dang, V.H.; Nguyen, M.T.; Le, N.H.; Nguyen, T.P.; Tran, Q.-V.; Mai, T.H.; Vy, V.P.T.; Hung, T.N.K.; Lee, C.-Y.; Tseng, C.-L.; Le, N.Q.K.; Nguyen, P.-A. Exploration of 3D Few-Shot Learning Techniques for Classification of Knee Joint Injuries on MR Images. Diagnostics 2025, 15, 1808. https://doi.org/10.3390/diagnostics15141808