Robust Autism Spectrum Disorder Screening Based on Facial Images (For Disability Diagnosis): A Domain-Adaptive Deep Ensemble Approach

Abstract

1. Introduction

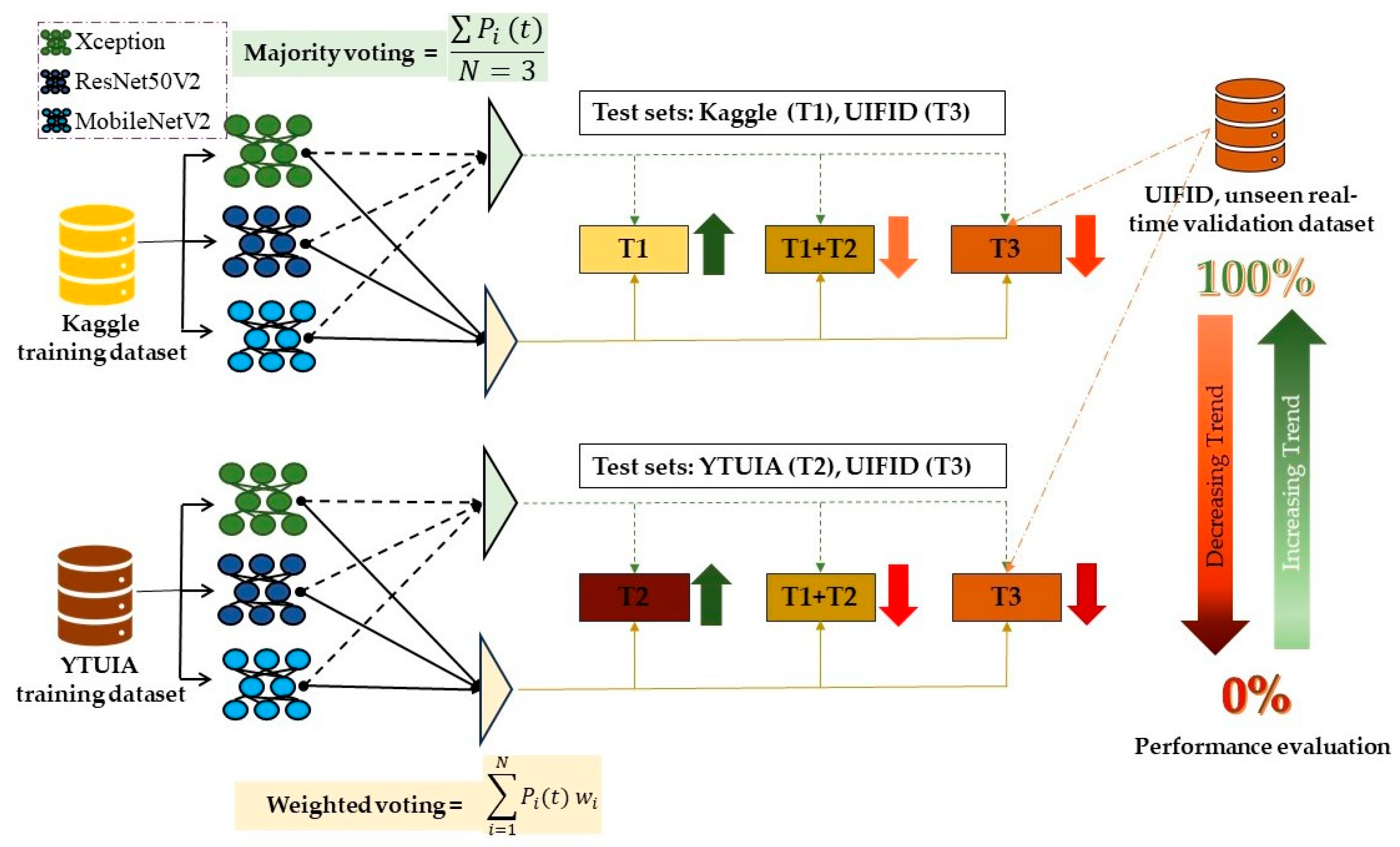

- Develop and evaluate a novel deep ensemble learning framework that integrates domain adaptation techniques to enhance the robustness and generalisation capabilities of facial image-based ASD diagnosis across diverse real-world data. Our selection of pre-trained MobileNetV2, ResNet50V2, and Xception models for this framework was driven by an ablation study [15] that identified their optimal balance of high accuracy and computational efficiency for facial image classification, making them suitable for real-time application.

- Introduce and validate the UIFID, a new clinically validated facial image dataset, to provide a more robust and non-artificial benchmark for evaluating ASD screening models.

- Implement and assess the Fifty Percent Priority (FPP) algorithm within the ensemble framework to intelligently prioritise model contributions, thereby improving diagnostic accuracy and reliability.

- I. Acquisition of a Novel Facial Image Dataset (UIFID): To address the shortcomings of earlier ASD diagnosis research, which often used artificial or limited datasets, we introduce UIFID as a means to validate how well diagnostic tools perform in real-world scenarios. Because UIFID is based on clinical assessments performed before individuals were even admitted, it offers a more reliable way to test the accuracy of ASD screening models.

- II. Optimisation and Validation of Domain Adaptation: In this study, we fine-tuned pre-trained models within an ensemble learning framework, using data from two sources: facial images from the Kaggle ASD and YTUIA datasets. This helped the models learn to recognise facial features associated with ASD across different groups of people and image qualities. Our strategic integration of diverse and efficient CNN architectures (MobileNetV2, ResNet50V2, Xception) within this ensemble framework is critical for capturing complementary insights across domains. We then tested how well the models adapt to real-world clinical settings using the UIFID dataset. This step was crucial to ensure that the approach can be applied effectively in practice.

- III. Development and Implementation of the FPPR for Weighted Ensemble Learning: We have developed a new algorithm called Fifty Percent Priority (FPP) to help the models better capture the important features of facial images. FPP works by selecting varied examples from each of our data sources. Unlike typical methods of combining different models, FPP places greater importance on the models that performed best during initial testing. This leads to more accurate and reliable ASD screening.
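The idea of giving better-performing models more influence can be illustrated with a minimal sketch of performance-weighted soft voting. This is not the FPP algorithm itself, whose exact weighting rule is not given in this section; the accuracy-proportional weights, the function names, and the per-model probabilities below are all assumptions chosen for illustration.

```python
from typing import Dict


def accuracy_weights(val_accuracy: Dict[str, float]) -> Dict[str, float]:
    """Normalise per-model accuracies into weights that sum to 1 (assumed rule)."""
    total = sum(val_accuracy.values())
    if total <= 0:
        raise ValueError("at least one model needs a positive accuracy")
    return {name: acc / total for name, acc in val_accuracy.items()}


def weighted_probability(probs: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of the per-model ASD probabilities for one image."""
    return sum(weights[name] * probs[name] for name in probs)


# Hypothetical accuracies from an initial evaluation and hypothetical
# per-model outputs for a single image.
val_accuracy = {"Xception": 0.95, "MobileNetV2": 0.92, "ResNet50V2": 0.94}
weights = accuracy_weights(val_accuracy)
probs = {"Xception": 0.80, "MobileNetV2": 0.40, "ResNet50V2": 0.70}

p_asd = weighted_probability(probs, weights)
label = 1 if p_asd >= 0.5 else 0  # 1 = ASD, 0 = non-ASD
print(f"p_asd={p_asd:.3f}, label={label}")
```

With weights this close to uniform the result is near a plain average; a rule that prioritises the best model more strongly would move the decision further towards that model's output.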

2. Literature Review

3. Materials and Methods

3.1. Dataset

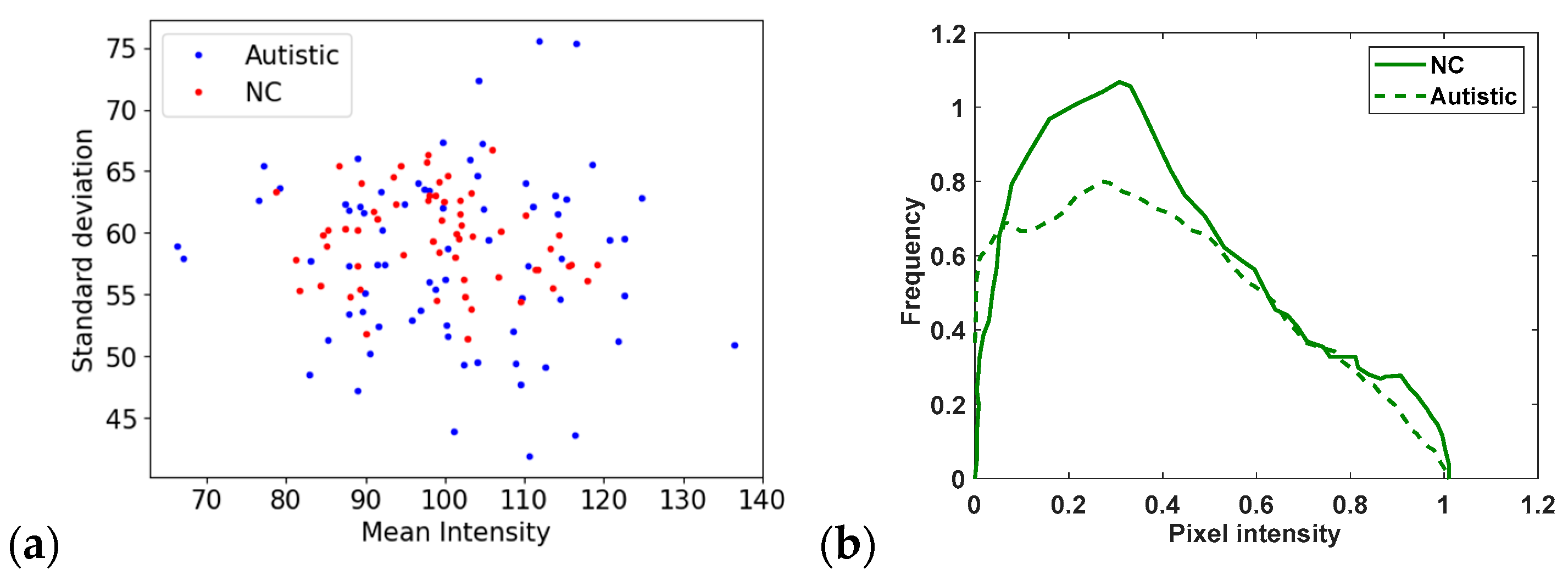

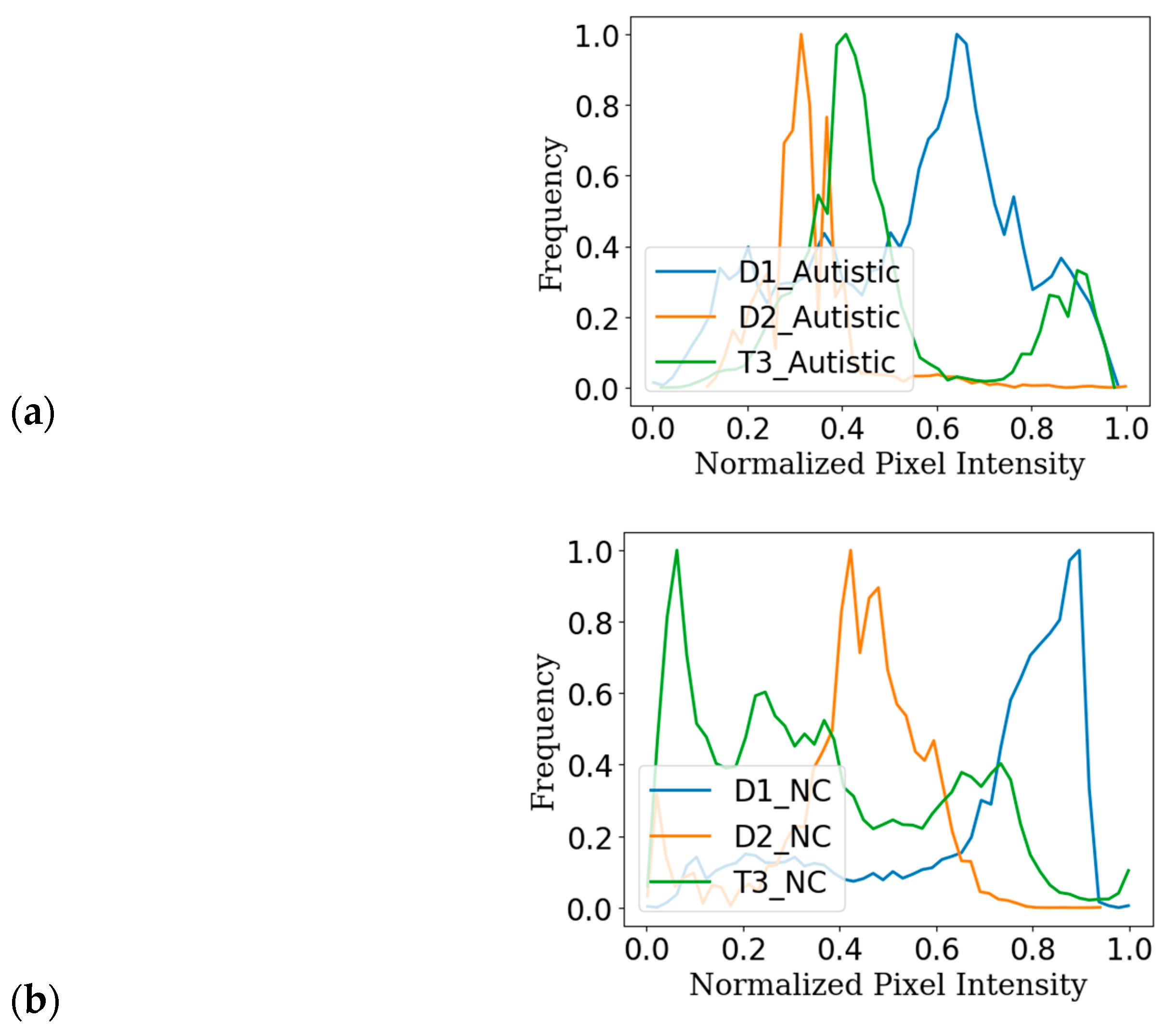

3.1.1. Kaggle ASD Dataset (D1)

3.1.2. YouTube Dataset Developed in UIA (YTUIA) (D2)

3.1.3. University Islam Facial Image Dataset (UIFID)

- Information Sharing: Parents/guardians received a thorough explanation of the study’s purpose, methods, risks, benefits, and confidentiality, with a signature section for formal consent.

- Voluntary Participation: They were notified of the voluntary nature of the study and their right to decline or withdraw at any point without consequence.

- Consent for Data Collection: Explicit parental/guardian consent via signature on the Certificate of Consent was required for recording facial RGB imaging data. All personal data remained confidential and were used solely for research.

- Consent for Children Unable to Provide Assent: For children unable to provide assent, only the parent or guardian’s signature was required, along with documentation of the representative’s relationship.

- Right to Withdraw: Parents/guardians retained the right to withdraw their child at any time, without penalty.

- Gender-based groups: Male and Female.

- Age-based groups within each gender:

  - Group 1 (G1): 2–6 years old.

  - Group 2 (G2): 7–11 years old.

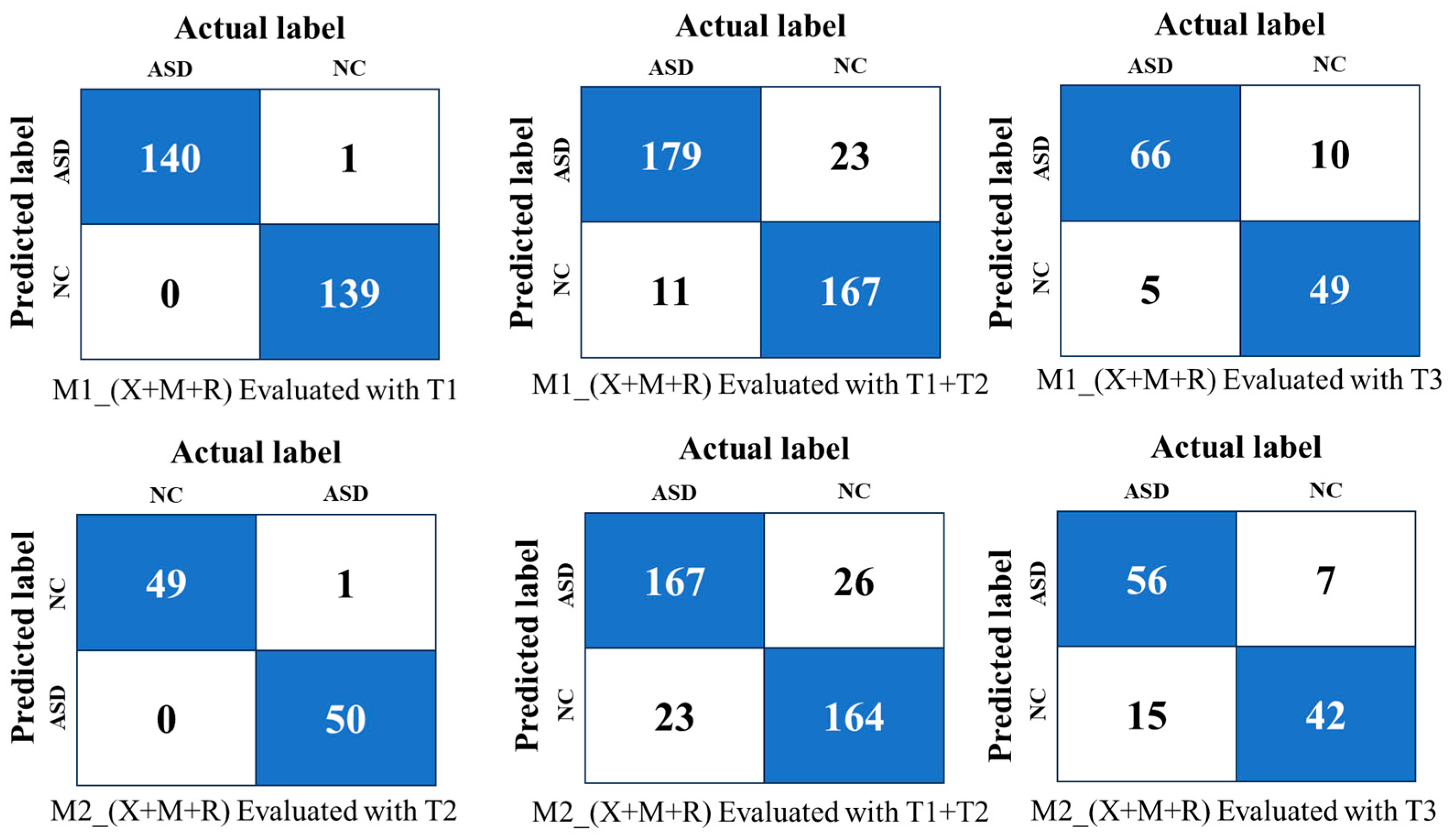

- M1_X: An ensemble model trained using dataset D1 (Kaggle) as the primary source.

- M2_R: An ensemble model trained using dataset D2 (YTUIA) with additional feature refinement techniques.

- M1_X+M2_R: A combined ensemble integrating M1_X (trained on D1) and M2_R (trained on D2), leveraging complementary learning patterns from both datasets to improve generalisation and mitigate gender- and age-related biases.
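A per-subgroup accuracy breakdown of the kind described above can be sketched as follows. The record layout, function names, and example records are hypothetical; only the gender split and the G1 (2–6 years) and G2 (7–11 years) age bands come from the text.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

Record = Tuple[str, int, int, int]  # (gender, age_years, true_label, predicted_label)


def age_group(age_years: int) -> Optional[str]:
    """Map an age to the G1/G2 bands; ages outside 2-11 are not grouped."""
    if 2 <= age_years <= 6:
        return "G1"
    if 7 <= age_years <= 11:
        return "G2"
    return None


def subgroup_accuracy(records: Iterable[Record]) -> Dict[Tuple[str, str], float]:
    """Accuracy per (gender, age group) pair."""
    hits: Dict[Tuple[str, str], int] = defaultdict(int)
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    for gender, age, y_true, y_pred in records:
        group = age_group(age)
        if group is None:
            continue  # skip samples outside the defined age bands
        key = (gender, group)
        totals[key] += 1
        hits[key] += int(y_true == y_pred)
    return {key: hits[key] / totals[key] for key in totals}


# Hypothetical predictions, for illustration only.
records = [
    ("Male", 4, 1, 1), ("Male", 5, 0, 1), ("Male", 9, 1, 1),
    ("Female", 3, 1, 0), ("Female", 10, 0, 0), ("Female", 8, 1, 1),
]
per_group = subgroup_accuracy(records)
print(per_group)
```

Reporting accuracy per subgroup rather than only overall makes it visible when a small group, such as the single G2 female ASD sample in UIFID, contributes little evidence to the headline number.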

3.2. Domain Adaptation Strategies

3.3. CNN Models and Transfer Learning

3.4. Ensemble Learning

3.4.1. Majority Voting

3.4.2. Weighted Voting

3.5. Experimental Setup

- Optimiser: Adagrad was selected, with a learning_rate of 0.001 and an initial_accumulator_value of 0.1.

- Regularisation: No weight decay was applied.

- Batch Size: A batch size of 32 was used.

- Epochs: The models were trained for a fixed count of 50 epochs. No early stopping criteria were used, as the epoch count was set in consistency with the methodology to maintain a standardised training duration across all models.

- Stage 1: Initial Training and Evaluation

- Stage 2: Ensemble Learning within Domains

- Stage 3: Cross-Domain Ensemble Modelling
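To make the optimiser settings above concrete, the following is a minimal pure-Python sketch of the standard Adagrad update with learning_rate = 0.001 and initial_accumulator_value = 0.1. The toy quadratic loss, the epsilon value, and its placement outside the square root are assumptions; deep learning frameworks differ slightly in these details, and this is not the training code used in the study.

```python
import math
from typing import List


def adagrad_step(
    params: List[float],
    grads: List[float],
    accumulators: List[float],
    learning_rate: float = 0.001,
    epsilon: float = 1e-7,  # assumed value for numerical stability
) -> None:
    """Apply one in-place Adagrad update to every parameter."""
    for i, g in enumerate(grads):
        accumulators[i] += g * g  # running sum of squared gradients
        params[i] -= learning_rate * g / (math.sqrt(accumulators[i]) + epsilon)


params = [1.0, -2.0]
accumulators = [0.1] * len(params)  # initial_accumulator_value = 0.1

for _ in range(3):
    grads = [2.0 * p for p in params]  # gradient of the toy loss sum(p ** 2)
    adagrad_step(params, grads, accumulators)

print(params, accumulators)
```

Because the accumulator only grows, the effective step size of each parameter shrinks over time, which is one reason a fixed 50-epoch schedule without early stopping remains stable with this optimiser.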

3.6. Evaluation Metrics

4. Results

4.1. Performance Evaluation of Transfer Learning

- (i) T1 Evaluation: Xception achieved the highest accuracy (95%) and AUC (98%), while MobileNetV2 and ResNet50V2 followed closely with accuracies of 92% and 94%, respectively.

- (ii) T1+T2 Evaluation: All models showed decreased performance, with the accuracy dropping to 83% (Xception), 82% (MobileNetV2), and 81% (ResNet50V2). This indicates challenges in adapting to more diverse combined-domain data.

- (iii) T3 Evaluation: Performance further declined, highlighting difficulties in generalising to unseen datasets with potentially different characteristics.

- (i) T2 Evaluation: ResNet50V2 (95%) and Xception (94%) outperformed MobileNetV2 (74%) in accuracy, demonstrating strong performance on same-domain data.

- (ii) T1+T2 Evaluation: Performance metrics dropped for all algorithms, suggesting reduced generalisability to combined-domain datasets.

- (iii) T3 Evaluation: Accuracy fell to 60% or lower for all models, and below 50% for Xception (46%) and ResNet50V2 (45%), underscoring significant challenges in predicting samples from unseen real-time datasets (refer to Table 6).
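The accuracy figures above, together with the precision, recall, and F1-score reported in the tables, follow from the confusion counts of a binary classifier. The sketch below shows that arithmetic on hypothetical labels (1 = ASD, 0 = non-ASD); AUC is omitted because it needs the predicted probabilities rather than hard labels, and the function name is an assumption.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for the positive (ASD = 1) class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical ground truth and predictions for eight images.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```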

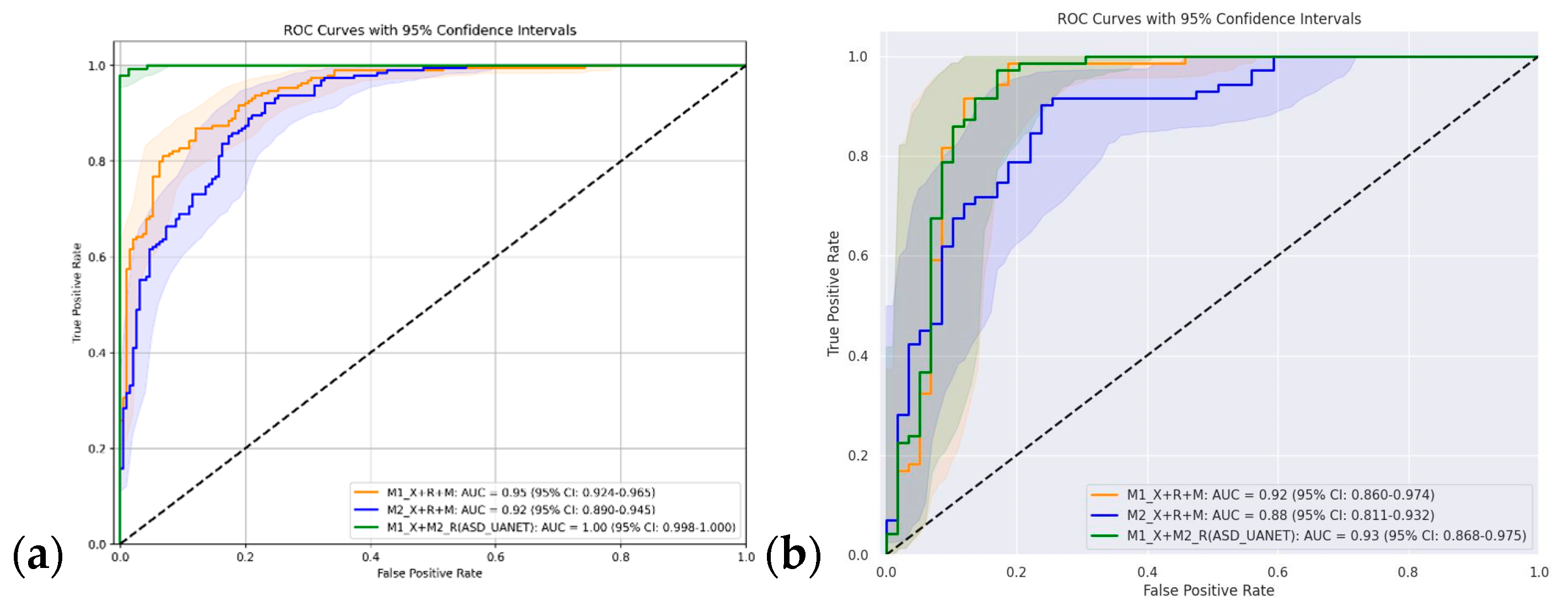

4.2. Same-Domain Ensemble Evaluation

- (i) Performance on T1 (Same-Domain): The weighted ensemble of Xception, MobileNetV2, and ResNet50V2 powered by FPPR achieved the highest accuracy (99.6%) and AUC (99.9%), outperforming majority voting (95.7% accuracy, 99.4% AUC) due to better integration of model predictions.

- (ii) Performance on T1+T2 (Cross-Domain): On the combined dataset, the weighted ensemble maintained robustness with the highest accuracy of 91.0% and an AUC of 94.5%, surpassing majority voting’s performance (86.7% accuracy, 94.3% AUC). This demonstrates the weighted ensemble’s ability to adapt to domain shifts.

- (iii) Performance on T3 (Unseen Validation): For unseen data, the weighted ensemble achieved 82.0% accuracy and an AUC of 89.0%, reflecting its ability to generalise better than majority voting (79.6% accuracy, 86.0% AUC).
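For contrast with the weighted ensemble, the majority-voting baseline compared above can be sketched as a hard vote over the per-model labels. The 0.5 threshold and the tie-breaking rule for an even number of models (falling back to the mean probability) are assumptions made for this illustration; the section does not state how ties in two-model ensembles were resolved.

```python
from typing import Sequence


def majority_vote(probs: Sequence[float], threshold: float = 0.5) -> int:
    """Hard majority vote over per-model ASD probabilities (1 = ASD, 0 = non-ASD)."""
    if not probs:
        raise ValueError("at least one model probability is required")
    asd_votes = sum(1 for p in probs if p >= threshold)
    if 2 * asd_votes > len(probs):
        return 1
    if 2 * asd_votes < len(probs):
        return 0
    # Tie, possible with an even number of models: assumed fallback to the mean.
    return 1 if sum(probs) / len(probs) >= threshold else 0


# Hypothetical per-model probabilities for single images.
three_model = majority_vote([0.80, 0.40, 0.70])  # two of three vote ASD
two_model_tie = majority_vote([0.90, 0.45])      # tie, mean 0.675
print(three_model, two_model_tie)
```

A hard vote discards how confident each model is, which is one plausible reason a probability-weighted combination can outperform it when the models disagree.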

4.3. Cross-Domain Ensemble Evaluation

5. Discussion

5.1. Domain Adaptation Insights

5.2. Challenges of Transfer Learning in Diverse Domains

5.3. Advancements Through Ensemble Learning and FPPR

5.4. Domain Adaptation and Ensemble Learning

5.5. Comparison with Previous Research

5.6. Gender Bias and Age-Range Differences

- Increasing Female ASD Representation: Expanding datasets through targeted recruitment efforts and utilising synthetic augmentation techniques to improve female sample diversity.

- Age-Specific Feature Extraction: Exploring age-stratified deep learning models that adjust feature representations based on developmental stages.

5.7. Practical, Clinical, and Ethical Implications

A Proposed Clinical Screening Workflow

- Camera and Data Collection:

  - A standard clinical RGB camera (e.g., a 1080p webcam) captures frontal facial images of children (ages 2–11) during structured interactions, such as watching visual stimuli.

  - An appointed ASD specialist or a trained technician supervises the session to ensure proper presentation of stimuli and adherence to data quality protocols.

- Automated Inference Process:

  - Images are securely uploaded to a HIPAA-compliant server, where the ASD-UANet ensemble model, as described in Section 3, performs the analysis.

  - The system generates a preliminary risk score (e.g., “High/Low ASD Probability”) in near-real-time. This is supplemented with explainable AI outputs, such as attention maps highlighting the facial regions that are most influential in the model’s decision.

- Clinician Hand-off and Review:

  - The results are populated in a secure clinician dashboard. This report includes the risk score, model confidence level, the raw images, and any contextual notes from the supervising specialist. Crucially, the report should also highlight the patient’s demographic group (e.g., age and gender) to provide context for the clinician, given the observed performance variations across subgroups.

  - A paediatric neurologist or a qualified clinician reviews the case. This “human-in-the-loop” approach is critical. For cases where the model’s output and the specialist’s initial impression may differ, pre-approved medical protocols guide the next steps for a comprehensive diagnostic assessment, ensuring the AI tool serves as a decision-support system, not a final arbiter.

- Ethical Safeguards:

  - Strict institutional oversight ensures that the entire process complies with established diagnostic standards and data privacy regulations.

  - Informed parental consent is mandatory before any data collection begins, with clear opt-out options made available to the family at any stage of the process.
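The inference and hand-off steps of this workflow could be wired together roughly as in the sketch below. Everything here is hypothetical: the `ScreeningReport` fields, the `build_report` function, the 0.5 threshold, and the confidence definition are illustrative assumptions, not part of the proposed system. The one constraint taken from the text is that every case is routed to a clinician.

```python
from dataclasses import dataclass


@dataclass
class ScreeningReport:
    risk_label: str            # "High ASD Probability" or "Low ASD Probability"
    probability: float         # ensemble output for the ASD class
    confidence: float          # assumed: probability of the predicted class
    demographic_group: str     # shown to the clinician for context
    needs_clinician_review: bool


def build_report(p_asd: float, age_years: int, gender: str,
                 threshold: float = 0.5) -> ScreeningReport:
    """Turn an ensemble probability into a dashboard entry (illustrative only)."""
    if not 0.0 <= p_asd <= 1.0:
        raise ValueError("p_asd must be a probability in [0, 1]")
    if not 2 <= age_years <= 11:
        raise ValueError("the workflow targets children aged 2-11")
    group = "G1 (2-6 years)" if age_years <= 6 else "G2 (7-11 years)"
    risk = "High ASD Probability" if p_asd >= threshold else "Low ASD Probability"
    return ScreeningReport(
        risk_label=risk,
        probability=p_asd,
        confidence=max(p_asd, 1.0 - p_asd),
        demographic_group=f"{gender}, {group}",
        needs_clinician_review=True,  # human-in-the-loop: every case is reviewed
    )


report = build_report(p_asd=0.82, age_years=5, gender="Female")
print(report)
```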

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lord, C.; Brugha, T.S.; Charman, T.; Cusack, J.; Dumas, G.; Frazier, T.; Jones, E.J.; Jones, R.M.; Pickles, A.; State, M.W. Autism spectrum disorder. Nat. Rev. Dis. Primers 2020, 6, 5.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Arlington, VA, USA, 2013.

- WHO. Autism. Available online: https://www.who.int/news-room/fact-sheets/detail/autism-spectrum-disorders (accessed on 9 June 2025).

- Ghosh, T.; Al Banna, M.H.; Rahman, M.S.; Kaiser, M.S.; Mahmud, M.; Hosen, A.S.; Cho, G.H. Artificial intelligence and internet of things in screening and management of autism spectrum disorder. Sustain. Cities Soc. 2021, 74, 103189.

- Al Banna, M.H.; Ghosh, T.; Taher, K.A.; Kaiser, M.S.; Mahmud, M. A monitoring system for patients of autism spectrum disorder using artificial intelligence. In Proceedings of the International Conference on Brain Informatics, Padua, Italy, 19 September 2020; pp. 251–262.

- Kojovic, N.; Natraj, S.; Mohanty, S.P.; Maillart, T.; Schaer, M. Using 2D video-based pose estimation for automated prediction of autism spectrum disorders in young children. Sci. Rep. 2021, 11, 15069.

- Rashid, M.M.; Alam, M.S. Power of Alignment: Exploring the Effect of Face Alignment on ASD Diagnosis Using Facial Images. IIUM Eng. J. 2024, 25, 317–327.

- Waltes, R.; Freitag, C.M.; Herlt, T.; Lempp, T.; Seitz, C.; Palmason, H.; Meyer, J.; Chiocchetti, A.G. Impact of autism-associated genetic variants in interaction with environmental factors on ADHD comorbidities: An exploratory pilot study. J. Neural Transm. 2019, 126, 1679–1693.

- Schaaf, C.P.; Betancur, C.; Yuen, R.K.; Parr, J.R.; Skuse, D.H.; Gallagher, L.; Bernier, R.A.; Buchanan, J.A.; Buxbaum, J.D.; Chen, C.-A. A framework for an evidence-based gene list relevant to autism spectrum disorder. Nat. Rev. Genet. 2020, 21, 367–376.

- Shan, J.; Eliyas, S. Exploring AI facial recognition for real-time emotion detection: Assessing student engagement in online learning environments. In Proceedings of the 2024 3rd International Conference on Artificial Intelligence For Internet of Things (AIIoT), Vellore, India, 3–4 May 2024; pp. 1–6.

- LokeshNaik, S.; Punitha, A.; Vijayakarthik, P.; Kiran, A.; Dhangar, A.N.; Reddy, B.J.; Sudheeksha, M. Real time facial emotion recognition using deep learning and CNN. In Proceedings of the 2023 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 23–25 January 2023; pp. 1–5.

- Alkahtani, H.; Aldhyani, T.H.; Alzahrani, M.Y. Deep learning algorithms to identify autism spectrum disorder in children-based facial landmarks. Appl. Sci. 2023, 13, 4855.

- Al-Nefaie, A.H.; Aldhyani, T.H.; Ahmad, S.; Alzahrani, E.M. Application of artificial intelligence in modern healthcare for diagnosis of autism spectrum disorder. Front. Med. 2025, 12, 1569464.

- Guan, H.; Liu, M. Domain adaptation for medical image analysis: A survey. IEEE Trans. Biomed. Eng. 2021, 69, 1173–1185.

- Alam, M.S.; Rashid, M.M.; Roy, R.; Faizabadi, A.R.; Gupta, K.D.; Ahsan, M.M. Empirical study of autism spectrum disorder diagnosis using facial images by improved transfer learning approach. Bioengineering 2022, 9, 710.

- Mahajan, P.; Uddin, S.; Hajati, F.; Moni, M.A. Ensemble learning for disease prediction: A review. Healthcare 2023, 11, 1808.

- Yang, Y.; Lv, H.; Chen, N. A survey on ensemble learning under the era of deep learning. Artif. Intell. Rev. 2023, 56, 5545–5589.

- Asif, S.; Zheng, X.; Zhu, Y. An optimized fusion of deep learning models for kidney stone detection from CT images. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 102130.

- Khodatars, M.; Shoeibi, A.; Sadeghi, D.; Ghaasemi, N.; Jafari, M.; Moridian, P.; Khadem, A.; Alizadehsani, R.; Zare, A.; Kong, Y. Deep learning for neuroimaging-based diagnosis and rehabilitation of autism spectrum disorder: A review. Comput. Biol. Med. 2021, 139, 104949.

- Mujeeb Rahman, K.; Subashini, M.M. Identification of autism in children using static facial features and deep neural networks. Brain Sci. 2022, 12, 94.

- Mohanta, A.; Mittal, V.K. Classifying speech of ASD affected and normal children using acoustic features. In Proceedings of the 2020 National Conference on Communications (NCC), Kharagpur, India, 21–23 February 2020; pp. 1–6.

- Ahmed, M.R.; Zhang, Y.; Liu, Y.; Liao, H. Single volume image generator and deep learning-based ASD classification. IEEE J. Biomed. Health Inform. 2020, 24, 3044–3054.

- Budarapu, A.; Kalyani, N.; Maddala, S. Early Screening of Autism among Children Using Ensemble Classification Method. In Proceedings of the 2021 3rd International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 17–18 December 2021; pp. 162–169.

- Kim, J.H.; Hong, J.; Choi, H.; Kang, H.G.; Yoon, S.; Hwang, J.Y.; Park, Y.R.; Cheon, K.-A. Development of deep ensembles to screen for autism and symptom severity using retinal photographs. JAMA Netw. Open 2023, 6, e2347692.

- Kang, H.; Yang, M.; Kim, G.-H.; Lee, T.-S.; Park, S. DeepASD: Facial Image Analysis for Autism Spectrum Diagnosis via Explainable Artificial Intelligence. In Proceedings of the 2023 Fourteenth International Conference on Ubiquitous and Future Networks (ICUFN), Paris, France, 4–7 July 2023; pp. 625–630.

- Jaby, A.; Islam, M.B.; Ahad, M.A.R. ASD-EVNet: An Ensemble Vision Network based on Facial Expression for Autism Spectrum Disorder Recognition. In Proceedings of the 2023 18th International Conference on Machine Vision and Applications (MVA), Hamamatsu, Japan, 23–25 July 2023; pp. 1–5.

- Alam, M.S.; Elsheikh, E.A.; Suliman, F.; Rashid, M.M.; Faizabadi, A.R. Innovative Strategies for Early Autism Diagnosis: Active Learning and Domain Adaptation Optimization. Diagnostics 2024, 14, 629.

- Feng, Y.; Xu, X.; Wang, Y.; Lei, X.; Teo, S.K.; Sim, J.Z.T.; Ting, Y.; Zhen, L.; Zhou, J.T.; Liu, Y. Deep supervised domain adaptation for pneumonia diagnosis from chest x-ray images. IEEE J. Biomed. Health Inform. 2021, 26, 1080–1090.

- Musser, M. Detecting Autism Spectrum Disorder in Children with Computer Vision. Available online: https://github.com/mm909/Kaggle-Autism (accessed on 14 June 2024).

- Yutia_2D. Available online: https://drive.google.com/drive/u/2/folders/1g8iyO2Q0jnWLj6w6l5Nb8vm7GogTzK0J (accessed on 31 March 2025).

- UIFID. Available online: https://drive.google.com/drive/u/1/folders/16U9r_4s90NtoHJKdmxopDYrN42vSlp4V (accessed on 31 March 2025).

- Rashid, M.M.; Alam, M.S.; Ali, M.Y.; Yvette, S. Developing a multi-modal dataset for deep learning-based neural networks in autism spectrum disorder diagnosis. AIP Conf. Proc. 2024, 3161, 020123.

- Alam, M.S.; Tasneem, Z.; Khan, S.A.; Rashid, M.M. Effect of Different Modalities of Facial Images on ASD Diagnosis using Deep Learning-Based Neural Network. J. Adv. Res. Appl. Sci. Eng. Technol. 2023, 32, 59–74.

- Singhal, P.; Walambe, R.; Ramanna, S.; Kotecha, K. Domain adaptation: Challenges, methods, datasets, and applications. IEEE Access 2023, 11, 6973–7020.

- Karim, S.; Iqbal, M.S.; Ahmad, N.; Ansari, M.S.; Mirza, Z.; Merdad, A.; Jastaniah, S.D.; Kumar, S. Gene expression study of breast cancer using Welch Satterthwaite t-test, Kaplan-Meier estimator plot and Huber loss robust regression model. J. King Saud Univ. Sci. 2023, 35, 102447.

- Zhou, X.; Zheng, W.; Li, Y.; Pearce, R.; Zhang, C.; Bell, E.W.; Zhang, G.; Zhang, Y. I-TASSER-MTD: A deep-learning-based platform for multi-domain protein structure and function prediction. Nat. Protoc. 2022, 17, 2326–2353.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part IV, pp. 630–645.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Müller, D.; Soto-Rey, I.; Kramer, F. An analysis on ensemble learning optimized medical image classification with deep convolutional neural networks. IEEE Access 2022, 10, 66467–66480.

- Gunasekaran, H.; Ramalakshmi, K.; Swaminathan, D.K.; Mazzara, M. GIT-Net: An ensemble deep learning-based GI tract classification of endoscopic images. Bioengineering 2023, 10, 809.

- Hayat, M.; Bennamoun, M.; An, S. Deep reconstruction models for image set classification. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 713–727.

- Alam, S.; Rashid, M.M. Enhanced Early Autism Screening: Assessing Domain Adaptation with Distributed Facial Image Datasets and Deep Federated Learning. IIUM Eng. J. 2025, 26, 113–128.

- Alam, M.S.; Rashid, M.M.; Faizabadi, A.R.; Mohd Zaki, H.F.; Alam, T.E.; Ali, M.S.; Gupta, K.D.; Ahsan, M.M. Efficient Deep Learning-Based Data-Centric Approach for Autism Spectrum Disorder Diagnosis from Facial Images Using Explainable AI. Technologies 2023, 11, 115.

- Sarafraz, G.; Behnamnia, A.; Hosseinzadeh, M.; Balapour, A.; Meghrazi, A.; Rabiee, H.R. Domain adaptation and generalization of functional medical data: A systematic survey of brain data. ACM Comput. Surv. 2024, 56, 1–39.

- Zhou, K.; Liu, Z.; Qiao, Y.; Xiang, T.; Loy, C.C. Domain generalization: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4396–4415.

- Rane, N.; Choudhary, S.P.; Rane, J. Ensemble deep learning and machine learning: Applications, opportunities, challenges, and future directions. Stud. Med. Health Sci. 2024, 1, 18–41.

- Zhou, Z.-H. Ensemble Methods: Foundations and Algorithms; CRC Press: Boca Raton, FL, USA, 2025.

- Supekar, K.; Iyer, T.; Menon, V. The influence of sex and age on prevalence rates of comorbid conditions in autism. Autism Res. 2017, 10, 778–789.

- Fombonne, E. Epidemiology of pervasive developmental disorders. Pediatr. Res. 2009, 65, 591–598.

- Loomes, R.; Hull, L.; Mandy, W.P.L. What is the male-to-female ratio in autism spectrum disorder? A systematic review and meta-analysis. J. Am. Acad. Child Adolesc. Psychiatry 2017, 56, 466–474.

- Kreiser, N.L.; White, S.W. ASD in females: Are we overstating the gender difference in diagnosis? Clin. Child Fam. Psychol. Rev. 2014, 17, 67–84.

- Sánchez-Pedroche, A.; Aguilar-Mediavilla, E.; Valera-Pozo, M.; Adrover-Roig, D.; Valverde-Gómez, M. A preliminary study on the relationship between symptom severity and age of diagnosis in females versus males with autistic spectrum disorder. Front. Psychol. 2025, 16, 1472646.

- Ibadi, H.; Lakizadeh, A. ASDvit: Enhancing autism spectrum disorder classification using vision transformer models based on static features of facial images. Intell. Based Med. 2025, 11, 100226.

- Zhou, X.; Siddiqui, H.; Rutherford, M. Face perception and social cognitive development in early autism: A prospective longitudinal study from 3 months to 7 years of age. Child Dev. 2025, 96, 104–121.

- Goktas, P.; Grzybowski, A. Shaping the future of healthcare: Ethical clinical challenges and pathways to trustworthy AI. J. Clin. Med. 2025, 14, 1605.

- Alderman, J.E.; Palmer, J.; Laws, E.; McCradden, M.D.; Ordish, J.; Ghassemi, M.; Pfohl, S.R.; Rostamzadeh, N.; Cole-Lewis, H.; Glocker, B. Tackling algorithmic bias and promoting transparency in health datasets: The STANDING Together consensus recommendations. Lancet Digit. Health 2025, 7, e64–e88.

| Ref. | Multiple Datasets | Algorithms | Domain Adaptation | Reported Accuracy (%) |
|---|---|---|---|---|
| [21] | No (speech data) | SVM, KNN | No | 93.00 |
| [22] | No (ABIDE) | Xception, VGG16 | No | 87.00 |
| [23] | Yes (Kaggle ASD, FER2013, own eye gaze data) | Own CNN | No | 84.00 |
| [24] | No (eye gaze) | ResNet | No | 66.00 |
| [25] | No (Kaggle ASD) | MobileNet, EfficientNet, Xception | No | 80.00 |
| [26] | Yes (Kaggle ASD, FADC) | ViT, SVM | No | 99.81 |

| Dataset | Total Samples | Male–Female Ratio | Age Range (Years) | ASD–NC Ratio | Notes |
|---|---|---|---|---|---|
| Kaggle | 3014 | 3:1 | 2–8 | 1:1 | Freely available online [29] |
| YTUIA | 1168 | 3:1 | 1–11 | 1:1 | Freely available online [30] |
| UIFID | 130 | 2:1 | 3–11 | 3:2 | Used only for validation [31] |

(a)

| Class | Minimum Age (Years) | Maximum Age (Years) | Age Standard Deviation (Years) | Average Age (Years) | Female | Male | Total |
|---|---|---|---|---|---|---|---|
| Autistic (ASD) | 3 | 12 | 2.4 | 8.03 | 7 | 24 | 31 |
| Normal (NC) | 5 | 8 | 1 | 6.95 | 10 | 10 | 20 |

(b)

| Stage | Class | Male | Female | Age (2–6) Male | Age (7–11) Male | Age (2–6) Female | Age (7–11) Female |
|---|---|---|---|---|---|---|---|
| Before Balancing (130 samples) | ASD | 24 | 7 | 5 | 19 | 6 | 1 |
| Before Balancing (130 samples) | NC | 10 | 10 | 2 | 8 | 4 | 6 |
| After Balancing (118 samples) | ASD | 19 | 7 | 5 | 14 | 6 | 1 |
| After Balancing (118 samples) | NC | 10 | 10 | 2 | 8 | 4 | 6 |

| Split | Kaggle | YTUIA | UIFID | Binary Class |
|---|---|---|---|---|
| Training set | 2654 (D1) | 1068 (D2) | - | 0: non-ASD; 1: ASD |
| Testing set | 280 (T1) | 100 (T2) | 130 (T3) | 0: non-ASD; 1: ASD |
| Validation set | 80 | - | - | 0: non-ASD; 1: ASD |

| Sl No | Same-Domain Models | Cross-Domain Models |
|---|---|---|
| 1 | Xception + MobileNetV2 + ResNet50V2 | M1-Xception + M2-Xception |
| 2 | Xception + MobileNetV2 | M1-ResNet50V2 + M2-ResNet50V2 |
| 3 | Xception + ResNet50V2 | M1-MobileNetV2 + M2-MobileNetV2 |
| 4 | ResNet50V2 + MobileNetV2 | M1-Xception + M2-ResNet50V2 |

| Algorithm | Model | Evaluated on | Accuracy | AUC | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| M1 (Trained with D1) | Xception | T1 | 0.95 | 0.98 | 0.95 | 0.95 | 0.95 |
| M1 (Trained with D1) | Xception | T1+T2 | 0.83 | 0.9 | 0.83 | 0.83 | 0.83 |
| M1 (Trained with D1) | Xception | T3 | 0.792 | 0.882 | 0.792 | 0.792 | 0.792 |
| M1 (Trained with D1) | MobileNetV2 | T1 | 0.92 | 0.96 | 0.92 | 0.92 | 0.92 |
| M1 (Trained with D1) | MobileNetV2 | T1+T2 | 0.82 | 0.86 | 0.82 | 0.82 | 0.82 |
| M1 (Trained with D1) | MobileNetV2 | T3 | 0.723 | 0.801 | 0.723 | 0.723 | 0.723 |
| M1 (Trained with D1) | ResNet50V2 | T1 | 0.94 | 0.96 | 0.94 | 0.94 | 0.94 |
| M1 (Trained with D1) | ResNet50V2 | T1+T2 | 0.81 | 0.87 | 0.81 | 0.81 | 0.81 |
| M1 (Trained with D1) | ResNet50V2 | T3 | 0.769 | 0.84 | 0.769 | 0.769 | 0.769 |
| M2 (Trained with D2) | Xception | T2 | 0.94 | 0.98 | 0.94 | 0.94 | 0.94 |
| M2 (Trained with D2) | Xception | T1+T2 | 0.64 | 0.66 | 0.64 | 0.64 | 0.64 |
| M2 (Trained with D2) | Xception | T3 | 0.46 | 0.53 | 0.46 | 0.46 | 0.46 |
| M2 (Trained with D2) | MobileNetV2 | T2 | 0.74 | 0.83 | 0.74 | 0.74 | 0.74 |
| M2 (Trained with D2) | MobileNetV2 | T1+T2 | 0.6 | 0.64 | 0.6 | 0.6 | 0.6 |
| M2 (Trained with D2) | MobileNetV2 | T3 | 0.6 | 0.67 | 0.6 | 0.6 | 0.6 |
| M2 (Trained with D2) | ResNet50V2 | T2 | 0.95 | 0.96 | 0.95 | 0.95 | 0.95 |
| M2 (Trained with D2) | ResNet50V2 | T1+T2 | 0.66 | 0.69 | 0.66 | 0.66 | 0.66 |
| M2 (Trained with D2) | ResNet50V2 | T3 | 0.45 | 0.5 | 0.45 | 0.45 | 0.45 |

Ensemble configurations M1 (trained with D1). MV: majority voting; WE: weighted ensemble.

| Evaluated on | Ensemble Configuration | MV Accuracy | MV AUC | MV Precision | MV Recall | MV F1-Score | WE Accuracy | WE AUC | WE Precision | WE Recall | WE F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| T1 | Xception + MobileNetV2 + ResNet50V2 | 0.957 | 0.994 | 0.957 | 0.957 | 0.957 | 0.996 | 0.999 | 0.996 | 0.996 | 0.996 |
| T1 | Xception + MobileNetV2 | 0.988 | 0.999 | 0.988 | 0.988 | 0.988 | 0.988 | 0.999 | 0.988 | 0.988 | 0.988 |
| T1 | Xception + ResNet50V2 | 0.969 | 0.991 | 0.969 | 0.969 | 0.969 | 0.984 | 0.994 | 0.984 | 0.984 | 0.984 |
| T1 | ResNet50V2 + MobileNetV2 | 0.984 | 0.997 | 0.984 | 0.984 | 0.984 | 0.953 | 0.996 | 0.953 | 0.953 | 0.953 |
| T1+T2 | Xception + MobileNetV2 + ResNet50V2 | 0.867 | 0.943 | 0.867 | 0.867 | 0.867 | 0.91 | 0.945 | 0.91 | 0.91 | 0.91 |
| T1+T2 | Xception + MobileNetV2 | 0.879 | 0.925 | 0.879 | 0.879 | 0.879 | 0.901 | 0.944 | 0.901 | 0.901 | 0.901 |
| T1+T2 | Xception + ResNet50V2 | 0.897 | 0.94 | 0.897 | 0.897 | 0.897 | 0.909 | 0.956 | 0.909 | 0.909 | 0.909 |
| T1+T2 | ResNet50V2 + MobileNetV2 | 0.845 | 0.947 | 0.845 | 0.845 | 0.845 | 0.901 | 0.945 | 0.901 | 0.901 | 0.901 |
| T3 | Xception + MobileNetV2 + ResNet50V2 | 0.796 | 0.86 | 0.796 | 0.796 | 0.796 | 0.82 | 0.89 | 0.82 | 0.82 | 0.82 |
| T3 | Xception + MobileNetV2 | 0.766 | 0.86 | 0.766 | 0.766 | 0.766 | 0.81 | 0.875 | 0.81 | 0.81 | 0.81 |
| T3 | Xception + ResNet50V2 | 0.8 | 0.88 | 0.8 | 0.8 | 0.8 | 0.79 | 0.851 | 0.79 | 0.79 | 0.79 |
| T3 | ResNet50V2 + MobileNetV2 | 0.758 | 0.78 | 0.758 | 0.758 | 0.758 | 0.76 | 0.828 | 0.76 | 0.76 | 0.76 |

Ensemble configurations M2 (trained with D2). MV: majority voting; WE: weighted ensemble.

| Evaluated on | Ensemble Configuration | MV Accuracy | MV AUC | MV Precision | MV Recall | MV F1-Score | WE Accuracy | WE AUC | WE Precision | WE Recall | WE F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| T1 | Xception + MobileNetV2 + ResNet50V2 | 0.958 | 0.979 | 0.958 | 0.958 | 0.958 | 0.989 | 0.986 | 0.989 | 0.989 | 0.989 |
| T1 | Xception + MobileNetV2 | 0.958 | 0.982 | 0.958 | 0.958 | 0.958 | 0.969 | 0.987 | 0.969 | 0.969 | 0.969 |
| T1 | Xception + ResNet50V2 | 0.969 | 0.996 | 0.969 | 0.969 | 0.969 | 0.979 | 0.988 | 0.979 | 0.979 | 0.979 |
| T1 | ResNet50V2 + MobileNetV2 | 0.906 | 0.968 | 0.906 | 0.906 | 0.906 | 0.979 | 0.988 | 0.979 | 0.979 | 0.979 |
| T1+T2 | Xception + MobileNetV2 + ResNet50V2 | 0.787 | 0.871 | 0.787 | 0.787 | 0.787 | 0.869 | 0.921 | 0.869 | 0.869 | 0.869 |
| T1+T2 | Xception + MobileNetV2 | 0.81 | 0.901 | 0.81 | 0.81 | 0.81 | 0.855 | 0.916 | 0.855 | 0.855 | 0.855 |
| T1+T2 | Xception + ResNet50V2 | 0.855 | 0.9 | 0.855 | 0.855 | 0.855 | 0.855 | 0.915 | 0.855 | 0.855 | 0.855 |
| T1+T2 | ResNet50V2 + MobileNetV2 | 0.81 | 0.899 | 0.81 | 0.81 | 0.81 | 0.844 | 0.918 | 0.844 | 0.844 | 0.844 |
| T3 | Xception + MobileNetV2 + ResNet50V2 | 0.797 | 0.864 | 0.797 | 0.797 | 0.797 | 0.82 | 0.877 | 0.82 | 0.82 | 0.82 |
| T3 | Xception + MobileNetV2 | 0.758 | 0.818 | 0.758 | 0.758 | 0.758 | 0.81 | 0.839 | 0.81 | 0.81 | 0.81 |
| T3 | Xception + ResNet50V2 | 0.719 | 0.789 | 0.719 | 0.719 | 0.719 | 0.79 | 0.817 | 0.79 | 0.79 | 0.79 |
| T3 | ResNet50V2 + MobileNetV2 | 0.687 | 0.74 | 0.687 | 0.687 | 0.687 | 0.76 | 0.802 | 0.76 | 0.76 | 0.76 |

Ensemble of models (M1 trained on D1 and M2 trained on D2). MV: majority voting; WE: weighted ensemble.

| Evaluated on | Ensemble Configuration | MV Accuracy | MV AUC | MV Precision | MV Recall | MV F1-Score | WE Accuracy | WE AUC | WE Precision | WE Recall | WE F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| T1+T2 | (M1+M2) Xception | 0.957 | 0.990 | 0.957 | 0.957 | 0.957 | 0.950 | 0.991 | 0.950 | 0.950 | 0.950 |
| T1+T2 | (M1+M2) ResNet50V2 | 0.910 | 0.976 | 0.910 | 0.910 | 0.910 | 0.943 | 0.989 | 0.943 | 0.943 | 0.943 |
| T1+T2 | (M1+M2) MobileNetV2 | 0.912 | 0.961 | 0.912 | 0.912 | 0.912 | 0.946 | 0.978 | 0.946 | 0.946 | 0.946 |
| T1+T2 | M1_Xception + M2_ResNet50V2 (ASD-UANet) | 0.951 | 0.990 | 0.951 | 0.951 | 0.951 | 0.960 | 0.990 | 0.960 | 0.960 | 0.960 |
| T3 | Xception + MobileNetV2 + ResNet50V2 | 0.813 | 0.881 | 0.813 | 0.813 | 0.813 | 0.867 | 0.921 | 0.867 | 0.867 | 0.867 |
| T3 | Xception + MobileNetV2 | 0.742 | 0.796 | 0.742 | 0.742 | 0.742 | 0.750 | 0.801 | 0.750 | 0.750 | 0.750 |
| T3 | Xception + ResNet50V2 | 0.742 | 0.831 | 0.742 | 0.742 | 0.742 | 0.860 | 0.920 | 0.860 | 0.860 | 0.860 |
| T3 | ResNet50V2 + MobileNetV2 | 0.812 | 0.877 | 0.812 | 0.812 | 0.812 | 0.906 | 0.930 | 0.906 | 0.906 | 0.906 |

| Ref. | Method | Algorithm | Training/Validation Dataset | Accuracy |
|---|---|---|---|---|
| [27] | Active learning (using w12) | MobileNetV2 | Kaggle + YTUIA/UIFID | 0.828 |
| [46] | Dataset federation | Xception | Kaggle + YTUIA/UIFID | 0.680 |
| [47] | Data-centric approach (Align only) | Xception | Kaggle/UIFID | 0.823 |
| Current work | Proposed weighted Ensemble | ASD-UANet | Kaggle + YTUIA/UIFID | 0.906 |

| Ref. | Algorithms | Accuracy (%) | Improvement (%) | Numbers and Names of Datasets | Validation Dataset |
|---|---|---|---|---|---|
| [22] | Xception, VGG16, own CNN | 87.00 | 0.5 | 01 (ABIDE) | Same-domain (15%) |
| [23] | Own CNN | 84.00 | 7.5 | 03 (Kaggle ASD, FER2013, eye gaze data) | Same-domain (17%) |
| [24] | ResNet | 66.00 | 6.3 | 01 (eye gaze) | Same-domain |
| [25] | MobileNet, EfficientNet, and Xception | 80.00 | 4.9 | 01 (Kaggle ASD) | Same-domain (10%) |
| [26] | ASD-EVNet | 99.81 | 2.7 | 02 (Kaggle ASD, FADC) | Same-domain (20%) |
| A proposed method for domain adaptation with ensemble learning | | | | | |
| Current work | Xception + MobileNetV2 + ResNet50V2 | 99.60 | 4.6 | 02 (Kaggle ASD, YTUIA) | Same-domain (T1) |
| Current work | (ASD-UANet) | 96.0 | 30 | 02 (Kaggle ASD, YTUIA) | Cross-domain (T1+T2) |
| Current work | (ASD-UANet) | 90.6 | 30 | 02 (Kaggle ASD, YTUIA) | UIFID (T3) |

| Model | Male (G1) | Male (G2) | Female (G1) | Female (G2) | Overall Accuracy |
|---|---|---|---|---|---|
| M1_X | 86.50% | 91.20% | 79.80% | 83.50% | 88.30% |
| M2_R | 84.10% | 89.50% | 81.20% | 82.70% | 86.90% |
| M1_X+M2_R | 87.30% | 90.80% | 80.50% | 84.20% | 89.10% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alam, M.S.; Rashid, M.M.; Jazlan, A.; Alahi, M.E.E.; Kchaou, M.; Alharthi, K.A.B. Robust Autism Spectrum Disorder Screening Based on Facial Images (For Disability Diagnosis): A Domain-Adaptive Deep Ensemble Approach. Diagnostics 2025, 15, 1601. https://doi.org/10.3390/diagnostics15131601
