Interpretable Detection of Diabetic Retinopathy, Retinal Vein Occlusion, Age-Related Macular Degeneration, and Other Fundus Conditions

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

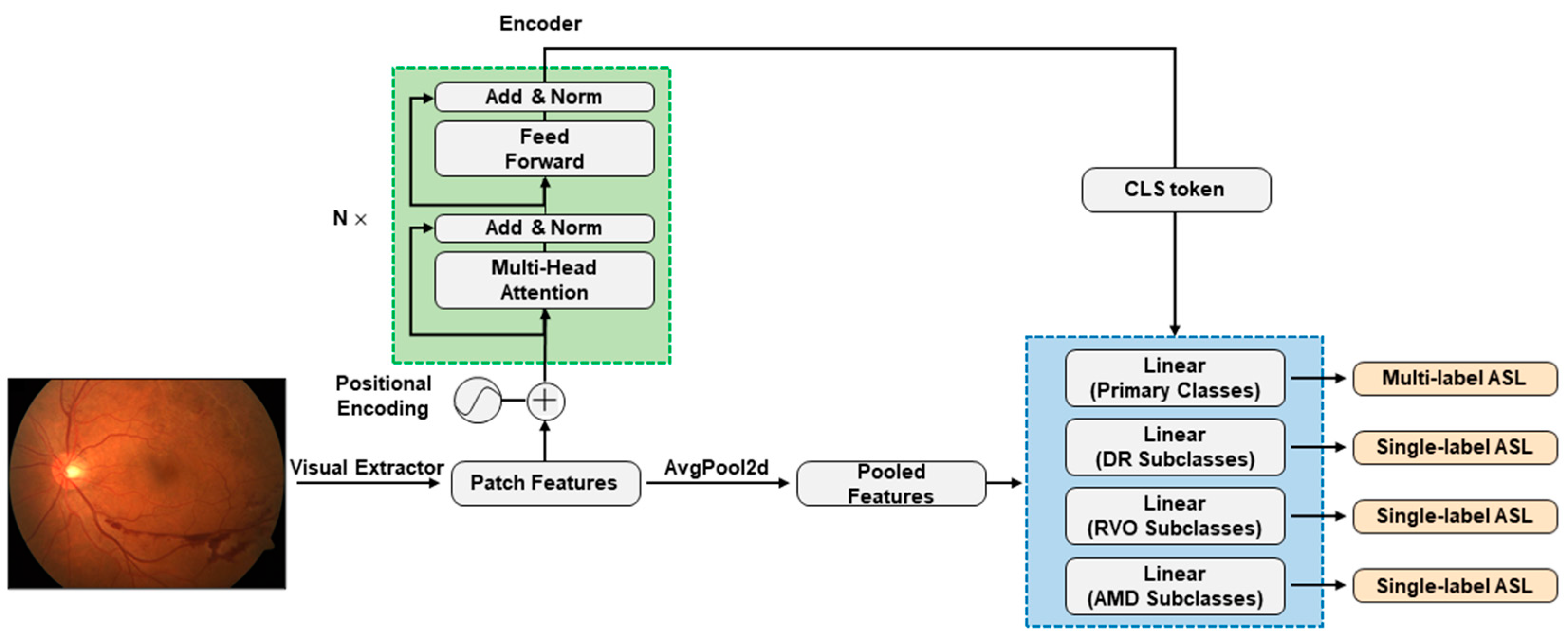

2.2. Model Construction and Configurations

3. Results

3.1. Data Characteristics

3.2. Performance of the Models

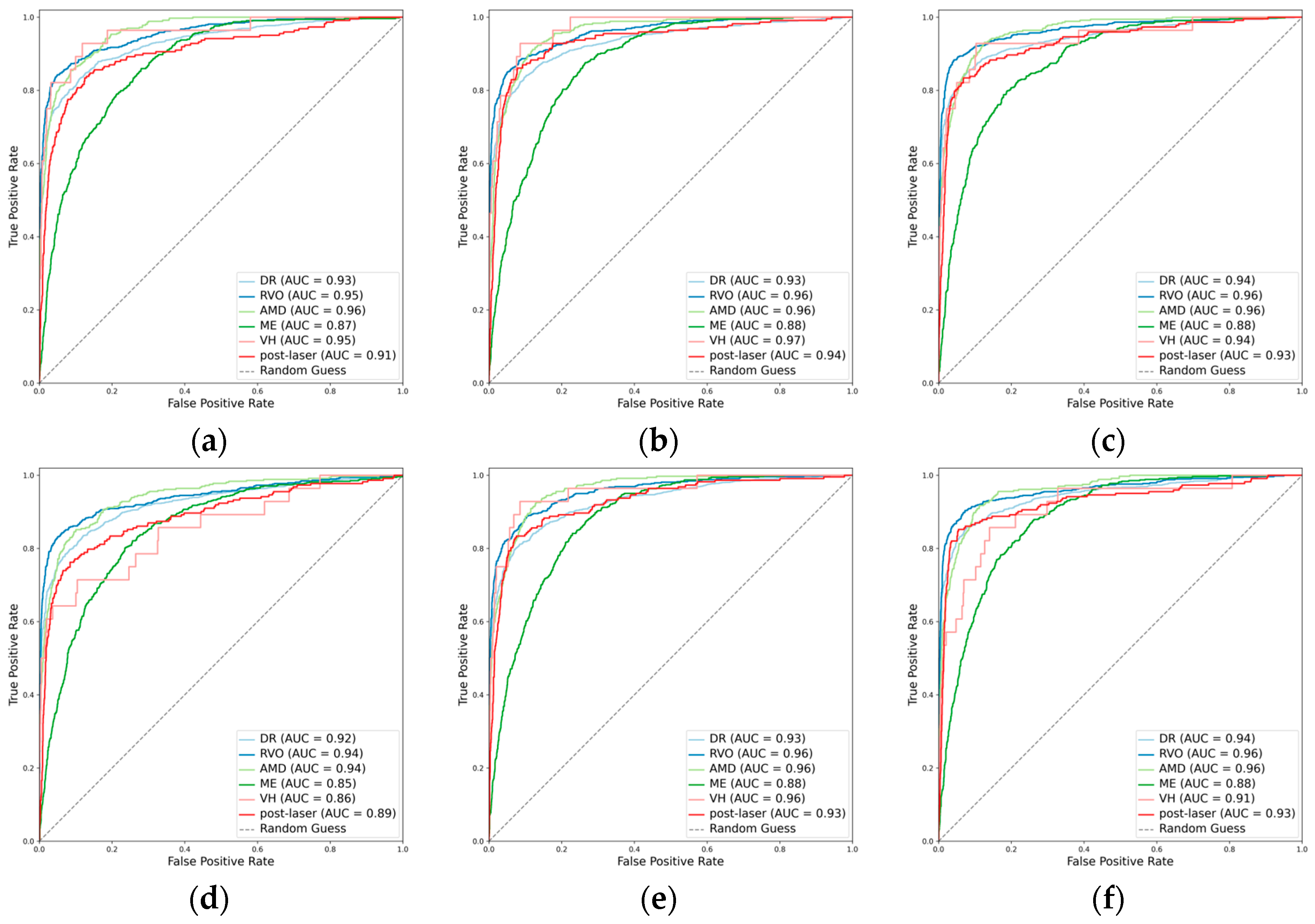

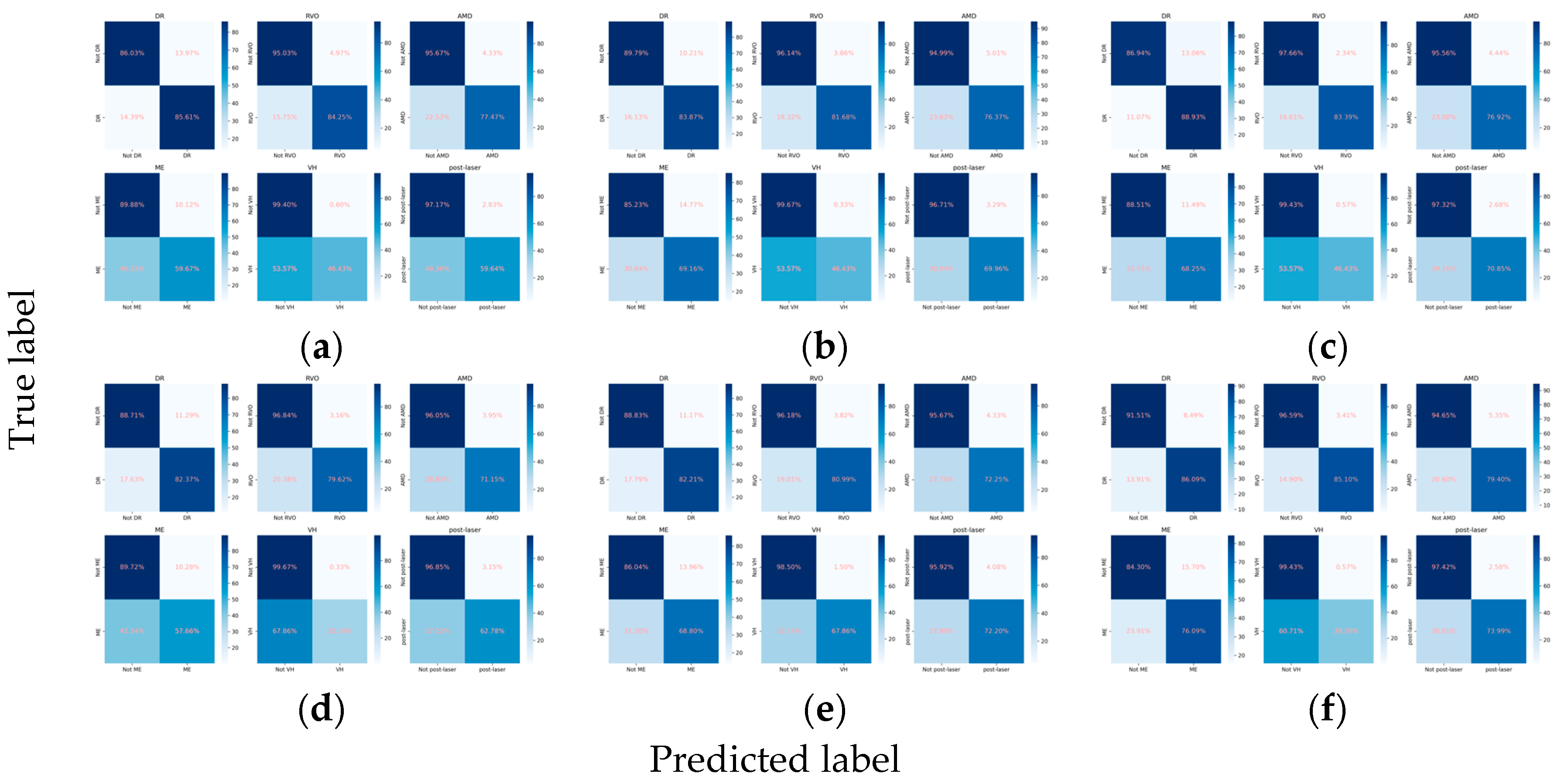

3.2.1. Classification Performance for the Primary Classes

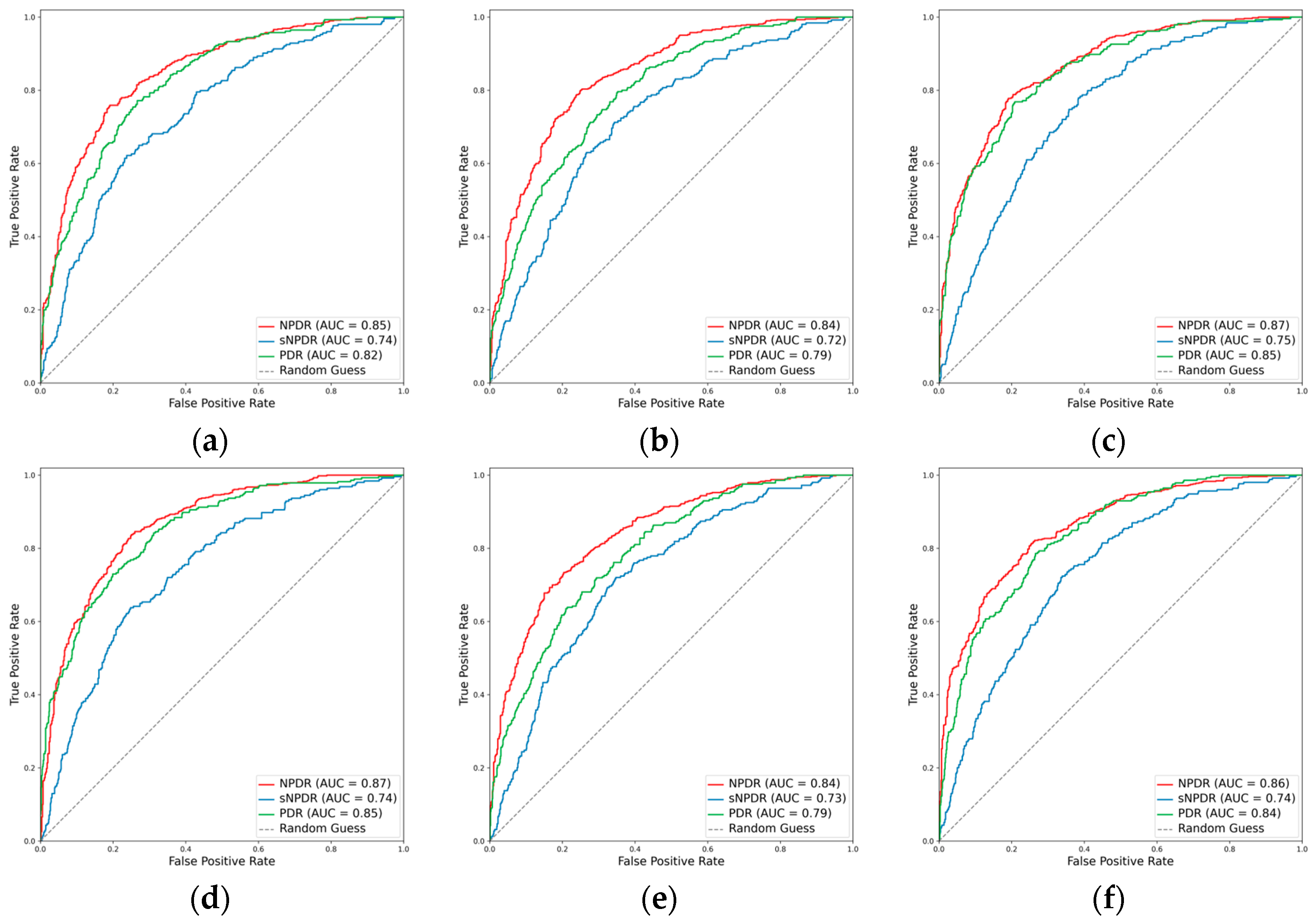

3.2.2. Classification Performance for the DR Subclasses

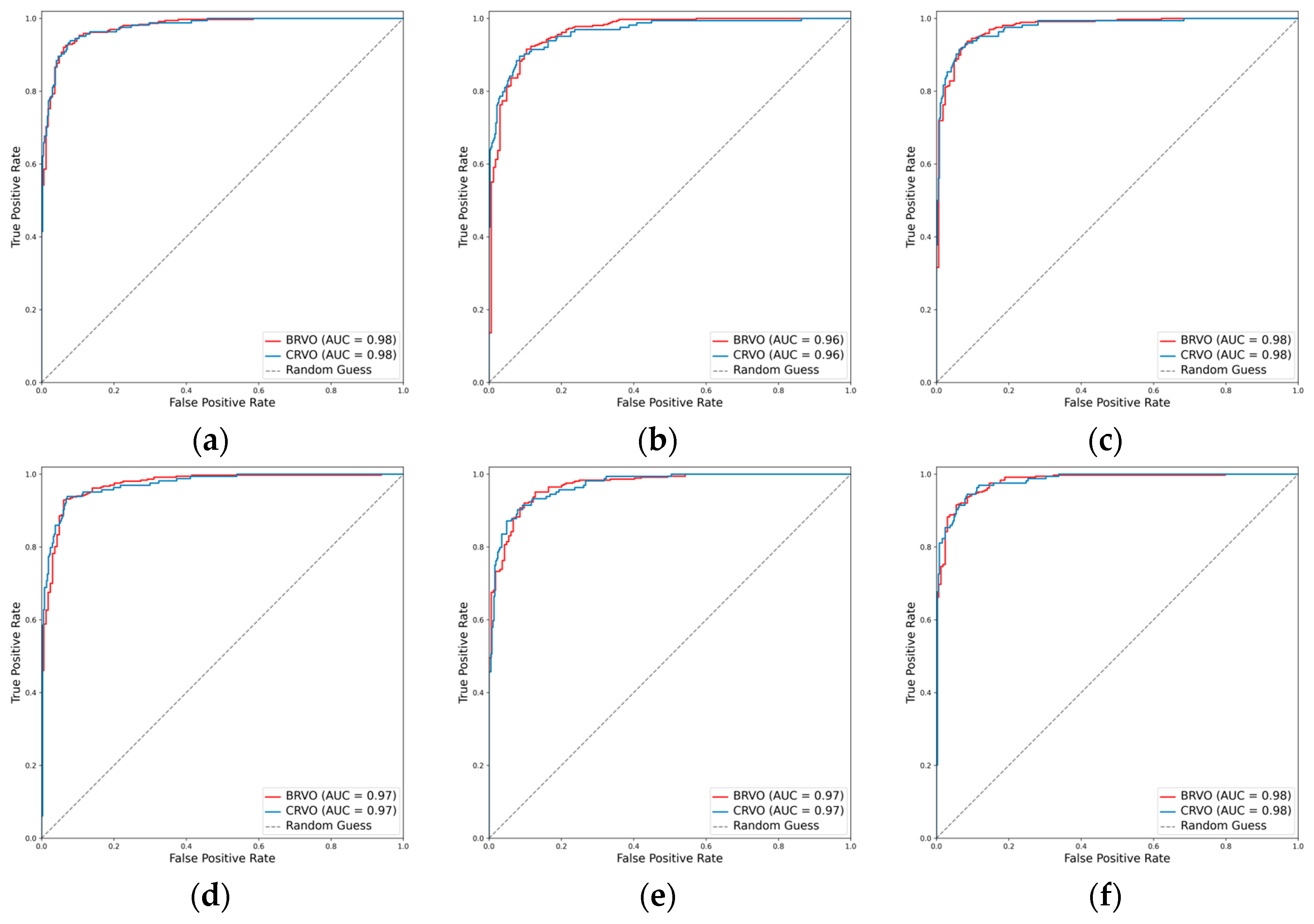

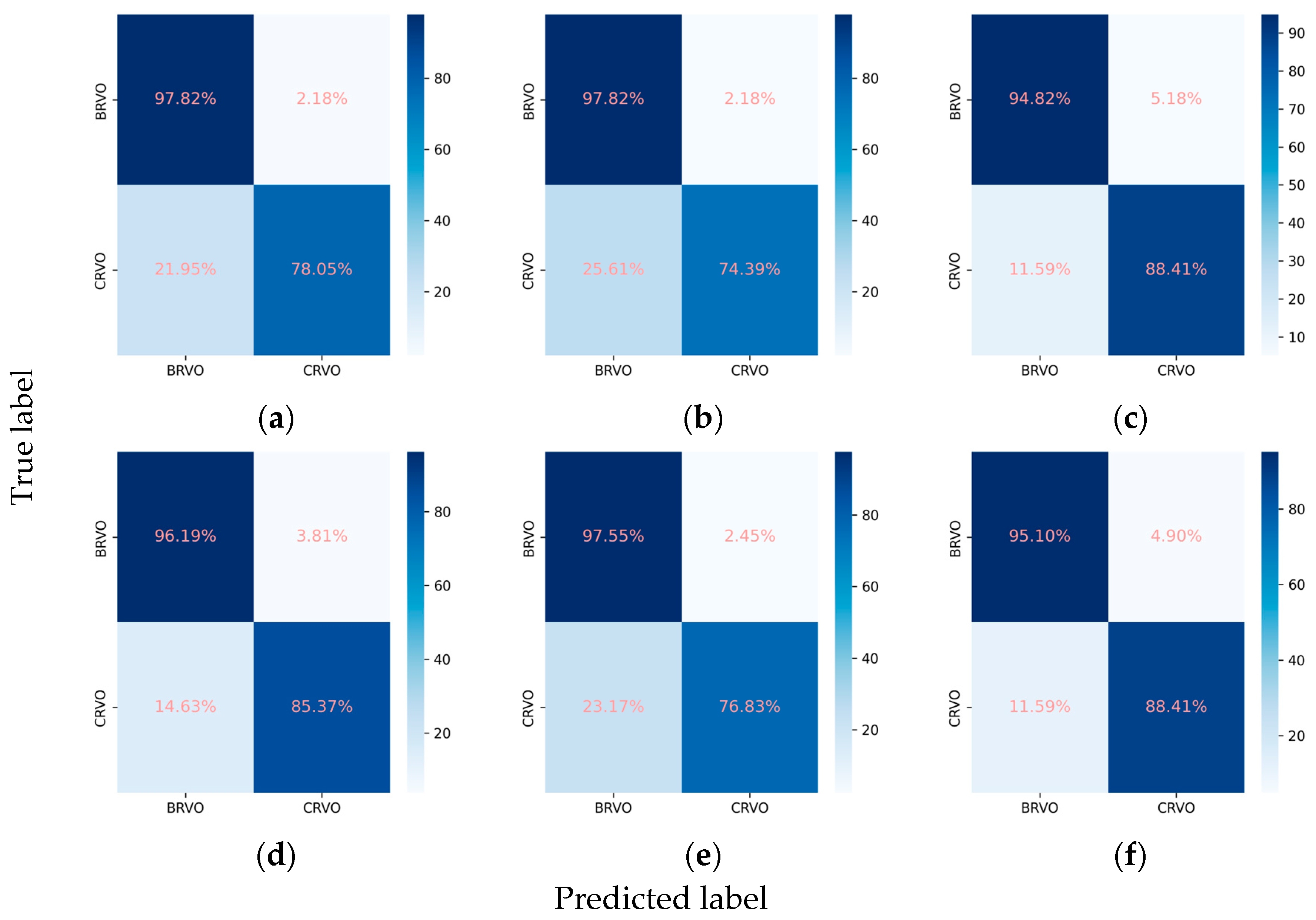

3.2.3. Classification Performance for the RVO Subclasses

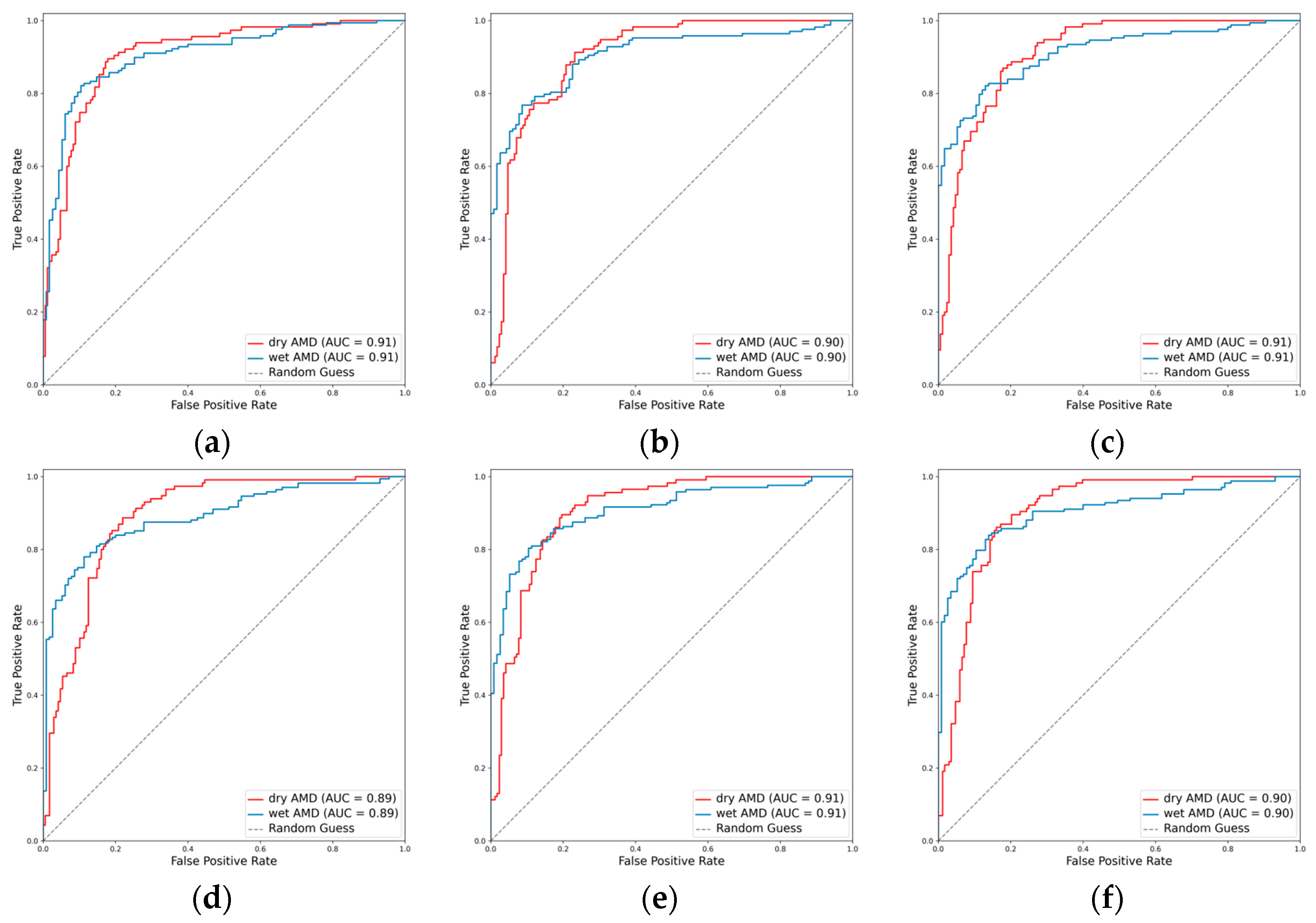

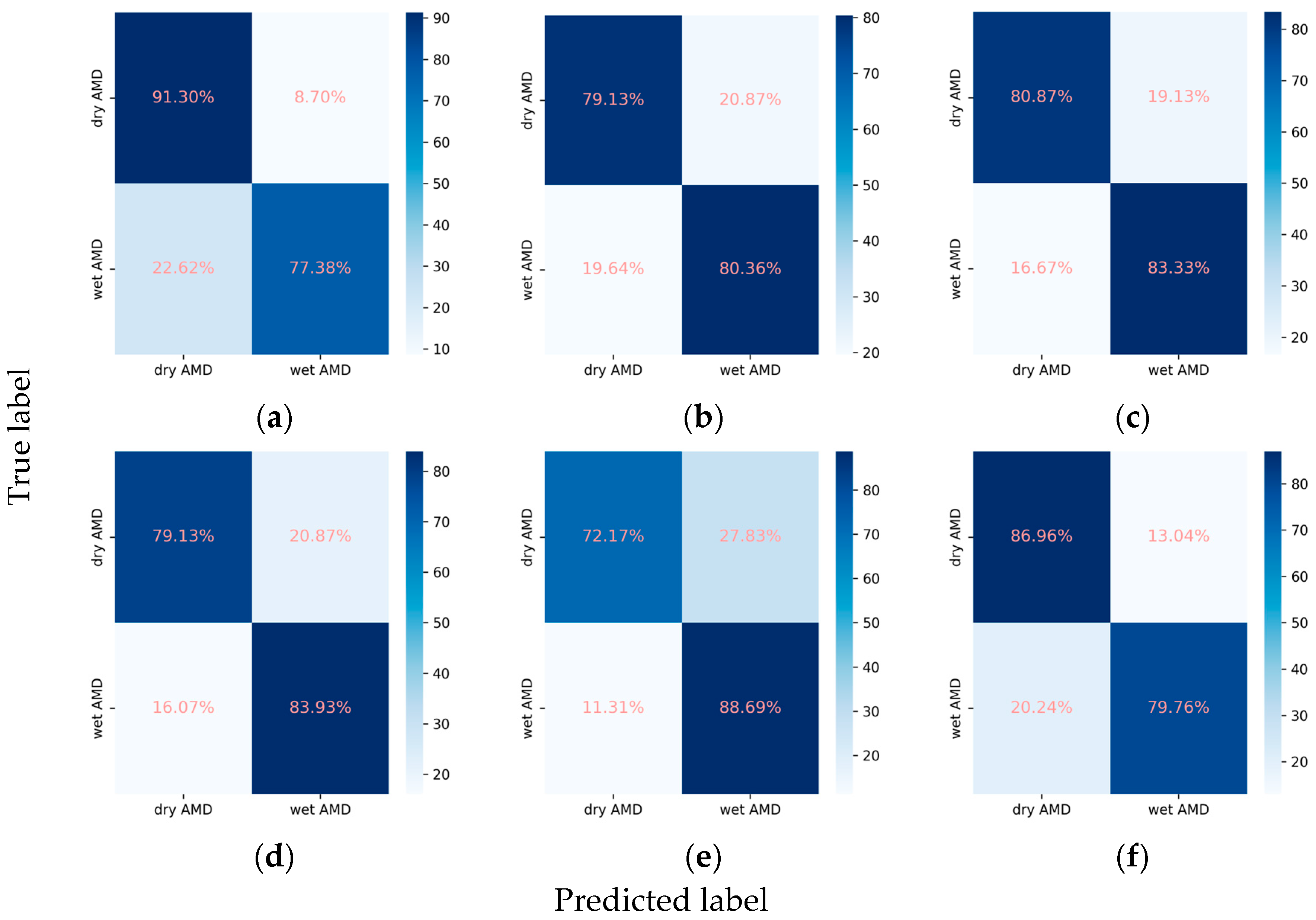

3.2.4. Classification Performance for the AMD Subclasses

3.3. Ablation Studies of Key Parameters

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | Train | Validation | Test | Whole |
|---|---|---|---|---|
| Number of images (eyes) | 9656 | 2414 | 3019 | 15,089 |
| Number of unique patients ᵃ | 6768 | 2245 | 2719 | 8110 |
| Age, mean (SD ᵇ) ᶜ | 66.40 (14.35) | 66.24 (14.50) | 66.30 (14.17) | 66.40 (14.35) |
| Sex, female, n (%) ᶜ | 3317 (49.01) | 1093 (45.28) | 1342 (49.36) | 3963 (48.87) |
| Diagnoses ᶜ | | | | |
| Primary classes: DR | 3973 | 953 | 1265 | 6191 |
| Primary classes: RVO | 1848 | 477 | 584 | 2909 |
| Primary classes: AMD | 1131 | 314 | 364 | 1809 |
| Primary classes: ME | 1775 | 454 | 548 | 2777 |
| Primary classes: VH | 111 | 28 | 28 | 167 |
| Primary classes: Laser spots | 759 | 199 | 223 | 1181 |
| DR subclasses: NPDR | 1820 | 422 | 590 | 2832 |
| DR subclasses: sNPDR | 777 | 201 | 254 | 1232 |
| DR subclasses: PDR | 928 | 223 | 285 | 1436 |
| DR subclasses: Unspecified | 448 | 107 | 136 | 691 |
| RVO subclasses: BRVO | 1081 | 290 | 367 | 1738 |
| RVO subclasses: CRVO | 580 | 147 | 164 | 891 |
| RVO subclasses: Unspecified | 187 | 40 | 53 | 280 |
| AMD subclasses: Dry AMD | 328 | 105 | 115 | 548 |
| AMD subclasses: Wet AMD | 507 | 127 | 168 | 802 |
| AMD subclasses: Unspecified | 296 | 82 | 81 | 459 |
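The image counts of the three splits add up to the whole dataset. The same identity should hold for every diagnosis row. Within each disease, the subclass rows (including "Unspecified") should also add up to the primary-class row.

The Python sketch below checks both identities using the counts transcribed from the table above. The dictionary keys and function names are our own illustrative choices, not anything from the study. The unique-patient row is deliberately left out. Its split counts sum to 11,732, which is more than the 8110 unique patients overall, so some patients are counted in more than one split. Footnote ᵃ, whose text is not reproduced here, presumably defines how patients were counted.

```python
# Counts transcribed from the dataset table: (train, validation, test, whole).
# Keys and layout are illustrative; they are not taken from the authors' code.
COUNTS = {
    "images": (9656, 2414, 3019, 15089),
    "DR": (3973, 953, 1265, 6191),
    "RVO": (1848, 477, 584, 2909),
    "AMD": (1131, 314, 364, 1809),
    "ME": (1775, 454, 548, 2777),
    "VH": (111, 28, 28, 167),
    "Laser spots": (759, 199, 223, 1181),
    "NPDR": (1820, 422, 590, 2832),
    "sNPDR": (777, 201, 254, 1232),
    "PDR": (928, 223, 285, 1436),
    "DR unspecified": (448, 107, 136, 691),
    "BRVO": (1081, 290, 367, 1738),
    "CRVO": (580, 147, 164, 891),
    "RVO unspecified": (187, 40, 53, 280),
    "Dry AMD": (328, 105, 115, 548),
    "Wet AMD": (507, 127, 168, 802),
    "AMD unspecified": (296, 82, 81, 459),
}

# Each primary class mapped to its subclass rows ("unspecified" included).
SUBCLASSES = {
    "DR": ["NPDR", "sNPDR", "PDR", "DR unspecified"],
    "RVO": ["BRVO", "CRVO", "RVO unspecified"],
    "AMD": ["Dry AMD", "Wet AMD", "AMD unspecified"],
}


def split_mismatches(counts):
    """Return the rows where train + validation + test != whole."""
    return [name for name, (tr, va, te, whole) in counts.items()
            if tr + va + te != whole]


def subclass_mismatches(counts, subclasses):
    """Return (primary, column index) pairs where subclasses do not sum to the primary row."""
    bad = []
    for primary, subs in subclasses.items():
        for col in range(4):  # train, validation, test, whole
            if sum(counts[s][col] for s in subs) != counts[primary][col]:
                bad.append((primary, col))
    return bad


print(split_mismatches(COUNTS))                 # → []
print(subclass_mismatches(COUNTS, SUBCLASSES))  # → []
```

Both checks return empty lists for these counts. This means every image and diagnosis row, and every subclass breakdown, is internally consistent.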

| Diagnosis / summary | Model | AUC | Accuracy | Recall | Precision | F1 Score | Specificity | Cohen’s Kappa |
|---|---|---|---|---|---|---|---|---|
| Primary classes: DR | R | 0.930 | 0.859 | 0.856 | 0.816 | 0.835 | 0.860 | 0.712 |
| | R + A | 0.923 | 0.861 | 0.824 | 0.840 | 0.832 | 0.887 | 0.713 |
| | E | 0.933 | 0.873 | 0.839 | 0.856 | 0.847 | 0.898 | 0.739 |
| | E + A | 0.929 | 0.861 | 0.822 | 0.841 | 0.832 | 0.888 | 0.713 |
| | C | 0.942 | 0.878 | 0.889 | 0.831 | 0.859 | 0.869 | 0.751 |
| | C + A | 0.943 | 0.892 | 0.861 | 0.880 | 0.870 | 0.915 | 0.778 |
| Primary classes: RVO | R | 0.954 | 0.929 | 0.842 | 0.803 | 0.822 | 0.950 | 0.778 |
| | R + A | 0.939 | 0.935 | 0.796 | 0.858 | 0.826 | 0.968 | 0.786 |
| | E | 0.958 | 0.933 | 0.817 | 0.835 | 0.826 | 0.961 | 0.785 |
| | E + A | 0.955 | 0.932 | 0.810 | 0.836 | 0.823 | 0.962 | 0.781 |
| | C | 0.965 | 0.949 | 0.834 | 0.895 | 0.863 | 0.977 | 0.832 |
| | C + A | 0.960 | 0.944 | 0.851 | 0.857 | 0.854 | 0.966 | 0.819 |
| Primary classes: AMD | R | 0.960 | 0.935 | 0.775 | 0.710 | 0.741 | 0.957 | 0.704 |
| | R + A | 0.941 | 0.930 | 0.712 | 0.712 | 0.712 | 0.960 | 0.672 |
| | E | 0.958 | 0.927 | 0.764 | 0.676 | 0.717 | 0.950 | 0.676 |
| | E + A | 0.960 | 0.928 | 0.723 | 0.696 | 0.709 | 0.957 | 0.668 |
| | C | 0.962 | 0.933 | 0.769 | 0.704 | 0.735 | 0.956 | 0.697 |
| | C + A | 0.959 | 0.928 | 0.794 | 0.671 | 0.727 | 0.947 | 0.686 |
| Primary classes: ME | R | 0.874 | 0.844 | 0.597 | 0.567 | 0.581 | 0.899 | 0.486 |
| | R + A | 0.851 | 0.839 | 0.577 | 0.554 | 0.565 | 0.897 | 0.467 |
| | E | 0.877 | 0.823 | 0.692 | 0.509 | 0.587 | 0.852 | 0.477 |
| | E + A | 0.881 | 0.829 | 0.688 | 0.522 | 0.594 | 0.860 | 0.488 |
| | C | 0.880 | 0.848 | 0.682 | 0.568 | 0.620 | 0.885 | 0.526 |
| | C + A | 0.885 | 0.828 | 0.761 | 0.518 | 0.616 | 0.843 | 0.511 |
| Primary classes: VH | R | 0.955 | 0.989 | 0.464 | 0.419 | 0.441 | 0.994 | 0.435 |
| | R + A | 0.857 | 0.990 | 0.321 | 0.474 | 0.383 | 0.997 | 0.378 |
| | E | 0.969 | 0.992 | 0.464 | 0.565 | 0.510 | 0.997 | 0.506 |
| | E + A | 0.956 | 0.982 | 0.679 | 0.297 | 0.413 | 0.985 | 0.405 |
| | C | 0.943 | 0.989 | 0.464 | 0.433 | 0.448 | 0.994 | 0.443 |
| | C + A | 0.911 | 0.989 | 0.393 | 0.393 | 0.393 | 0.994 | 0.387 |
| Primary classes: Laser spots | R | 0.906 | 0.944 | 0.596 | 0.627 | 0.611 | 0.972 | 0.581 |
| | R + A | 0.888 | 0.943 | 0.628 | 0.614 | 0.621 | 0.969 | 0.590 |
| | E | 0.935 | 0.947 | 0.700 | 0.629 | 0.662 | 0.967 | 0.634 |
| | E + A | 0.928 | 0.942 | 0.722 | 0.585 | 0.647 | 0.959 | 0.615 |
| | C | 0.932 | 0.954 | 0.709 | 0.678 | 0.693 | 0.973 | 0.668 |
| | C + A | 0.928 | 0.957 | 0.740 | 0.696 | 0.717 | 0.974 | 0.694 |
| Weighted metrics and mean Cohen’s kappa for the primary classes | R | - | - | 0.774 | 0.737 | 0.755 | - | 0.616 |
| | R + A | - | - | 0.741 | 0.756 | 0.748 | - | 0.601 |
| | E | - | - | 0.785 | 0.748 | 0.763 | - | 0.636 |
| | E + A | - | - | 0.775 | 0.741 | 0.754 | - | 0.612 |
| | C | - | - | 0.809 | 0.765 | 0.785 | - | 0.653 |
| | C + A | - | - | 0.819 | 0.766 | 0.788 | - | 0.646 |
| Subset accuracy | R | - | 0.610 | - | - | - | - | - |
| | R + A | - | 0.604 | - | - | - | - | - |
| | E | - | 0.600 | - | - | - | - | - |
| | E + A | - | 0.589 | - | - | - | - | - |
| | C | - | 0.644 | - | - | - | - | - |
| | C + A | - | 0.629 | - | - | - | - | - |
| DR subclasses: NPDR | R | 0.850 | 0.748 | 0.900 | 0.702 | 0.789 | 0.583 | - |
| | R + A | 0.865 | 0.775 | 0.863 | 0.746 | 0.800 | 0.679 | - |
| | E | 0.843 | 0.746 | 0.851 | 0.716 | 0.778 | 0.631 | - |
| | E + A | 0.839 | 0.752 | 0.844 | 0.726 | 0.781 | 0.651 | - |
| | C | 0.865 | 0.764 | 0.855 | 0.736 | 0.792 | 0.664 | - |
| | C + A | 0.859 | 0.775 | 0.773 | 0.792 | 0.782 | 0.777 | - |
| DR subclasses: sNPDR | R | 0.741 | 0.775 | 0.362 | 0.500 | 0.420 | 0.895 | - |
| | R + A | 0.743 | 0.751 | 0.429 | 0.445 | 0.437 | 0.845 | - |
| | E | 0.725 | 0.731 | 0.406 | 0.402 | 0.404 | 0.825 | - |
| | E + A | 0.725 | 0.736 | 0.429 | 0.416 | 0.422 | 0.825 | - |
| | C | 0.751 | 0.750 | 0.413 | 0.441 | 0.427 | 0.848 | - |
| | C + A | 0.741 | 0.724 | 0.531 | 0.412 | 0.464 | 0.779 | - |
| DR subclasses: PDR | R | 0.823 | 0.798 | 0.432 | 0.651 | 0.519 | 0.922 | - |
| | R + A | 0.849 | 0.815 | 0.488 | 0.688 | 0.571 | 0.925 | - |
| | E | 0.794 | 0.774 | 0.354 | 0.587 | 0.442 | 0.916 | - |
| | E + A | 0.789 | 0.775 | 0.372 | 0.587 | 0.455 | 0.911 | - |
| | C | 0.853 | 0.821 | 0.505 | 0.702 | 0.588 | 0.928 | - |
| | C + A | 0.837 | 0.807 | 0.512 | 0.649 | 0.573 | 0.906 | - |
| Weighted metrics and Cohen’s kappa for the DR subclasses | R | - | - | 0.661 | 0.644 | 0.638 | - | 0.491 |
| | R + A | - | - | 0.671 | 0.664 | 0.661 | - | 0.570 |
| | E | - | - | 0.625 | 0.613 | 0.609 | - | 0.467 |
| | E + A | - | - | 0.632 | 0.621 | 0.618 | - | 0.476 |
| | C | - | - | 0.668 | 0.661 | 0.658 | - | 0.566 |
| | C + A | - | - | 0.653 | 0.670 | 0.658 | - | 0.575 |
| RVO subclasses: BRVO | R | 0.976 | 0.917 | 0.978 | 0.909 | 0.942 | 0.780 | - |
| | R + A | 0.972 | 0.928 | 0.962 | 0.936 | 0.949 | 0.854 | - |
| | E | 0.963 | 0.906 | 0.978 | 0.895 | 0.935 | 0.744 | - |
| | E + A | 0.969 | 0.911 | 0.975 | 0.904 | 0.938 | 0.768 | - |
| | C | 0.976 | 0.928 | 0.948 | 0.948 | 0.948 | 0.884 | - |
| | C + A | 0.981 | 0.930 | 0.951 | 0.948 | 0.950 | 0.884 | - |
| RVO subclasses: CRVO | R | 0.976 | 0.917 | 0.780 | 0.941 | 0.853 | 0.978 | - |
| | R + A | 0.972 | 0.928 | 0.854 | 0.909 | 0.881 | 0.962 | - |
| | E | 0.963 | 0.906 | 0.744 | 0.938 | 0.830 | 0.978 | - |
| | E + A | 0.969 | 0.911 | 0.768 | 0.933 | 0.843 | 0.975 | - |
| | C | 0.976 | 0.928 | 0.884 | 0.884 | 0.884 | 0.948 | - |
| | C + A | 0.981 | 0.930 | 0.884 | 0.890 | 0.887 | 0.951 | - |
| Weighted metrics and Cohen’s kappa for the RVO subclasses | R | - | - | 0.917 | 0.919 | 0.915 | - | 0.796 |
| | R + A | - | - | 0.928 | 0.928 | 0.928 | - | 0.829 |
| | E | - | - | 0.906 | 0.907 | 0.902 | - | 0.766 |
| | E + A | - | - | 0.911 | 0.913 | 0.909 | - | 0.782 |
| | C | - | - | 0.928 | 0.928 | 0.928 | - | 0.832 |
| | C + A | - | - | 0.930 | 0.930 | 0.930 | - | 0.837 |
| AMD subclasses: Dry AMD | R | 0.906 | 0.830 | 0.913 | 0.734 | 0.814 | 0.774 | - |
| | R + A | 0.890 | 0.820 | 0.791 | 0.771 | 0.781 | 0.839 | - |
| | E | 0.905 | 0.799 | 0.791 | 0.734 | 0.762 | 0.804 | - |
| | E + A | 0.907 | 0.820 | 0.722 | 0.814 | 0.765 | 0.887 | - |
| | C | 0.912 | 0.823 | 0.809 | 0.769 | 0.788 | 0.833 | - |
| | C + A | 0.905 | 0.827 | 0.870 | 0.746 | 0.803 | 0.798 | - |
| AMD subclasses: Wet AMD | R | 0.906 | 0.830 | 0.774 | 0.926 | 0.844 | 0.913 | - |
| | R + A | 0.890 | 0.820 | 0.839 | 0.855 | 0.847 | 0.791 | - |
| | E | 0.905 | 0.799 | 0.804 | 0.849 | 0.826 | 0.791 | - |
| | E + A | 0.907 | 0.820 | 0.887 | 0.823 | 0.854 | 0.722 | - |
| | C | 0.912 | 0.823 | 0.833 | 0.864 | 0.848 | 0.809 | - |
| | C + A | 0.905 | 0.827 | 0.798 | 0.899 | 0.845 | 0.870 | - |
| Weighted metrics and Cohen’s kappa for the AMD subclasses | R | - | - | 0.830 | 0.850 | 0.832 | - | 0.661 |
| | R + A | - | - | 0.820 | 0.821 | 0.820 | - | 0.628 |
| | E | - | - | 0.799 | 0.802 | 0.800 | - | 0.588 |
| | E + A | - | - | 0.820 | 0.820 | 0.818 | - | 0.620 |
| | C | - | - | 0.823 | 0.825 | 0.824 | - | 0.637 |
| | C + A | - | - | 0.827 | 0.837 | 0.828 | - | 0.650 |
| Mean Cohen’s kappa (best epoch/100) | R | - | - | - | - | - | - | 0.627 (16) |
| | R + A | - | - | - | - | - | - | 0.626 (73) |
| | E | - | - | - | - | - | - | 0.626 (12) |
| | E + A | - | - | - | - | - | - | 0.616 (9) |
| | C | - | - | - | - | - | - | 0.661 (24) |
| | C + A | - | - | - | - | - | - | 0.660 (27) |
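The results table combines per-label confusion-matrix metrics with two kinds of summary. The first is a support-weighted average. The second, for the multi-label primary classes, is subset (exact-match) accuracy.

The NumPy sketch below shows the conventional definitions: recall = TP/(TP+FN), specificity = TN/(TN+FP), and Cohen's kappa = (p_o - p_e)/(1 - p_e). It is an illustration, not the authors' evaluation code, and the function names are hypothetical. It assumes predictions have already been thresholded to 0/1; the threshold used in the study is not stated here. AUC is omitted because it needs the continuous scores rather than thresholded predictions.

The toy example illustrates a property visible in the RVO and AMD subclass rows. In a two-way task, one class's recall is the other class's specificity, and the support-weighted recall equals the accuracy. For example, model R gives BRVO a recall of 0.978 and CRVO a specificity of 0.978, and its weighted RVO-subclass recall of 0.917 equals its accuracy of 0.917.

The last lines check two primary-class summaries for model R against the per-label values:
- The mean kappa of 0.616 is the plain average of the six per-label kappas.
- Weighting the rounded per-label recalls by the test-set counts from the dataset table gives about 0.773. This is within rounding of the reported 0.774, which suggests the weights are the test-set label counts.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for one label from 0/1 ground truth and predictions.

    Raises ZeroDivisionError if a denominator is zero (e.g. a label with no positives).
    """
    t = np.asarray(y_true, dtype=bool)
    p = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(t & p))
    tn = int(np.sum(~t & ~p))
    fp = int(np.sum(~t & p))
    fn = int(np.sum(t & ~p))
    n = tp + tn + fp + fn
    recall = tp / (tp + fn)            # sensitivity
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / n           # observed agreement p_o
    f1 = 2 * precision * recall / (precision + recall)
    # Chance agreement p_e from the marginal rates of positive and negative calls.
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    kappa = (accuracy - p_e) / (1 - p_e)
    return {"accuracy": accuracy, "recall": recall, "precision": precision,
            "f1": f1, "specificity": specificity, "kappa": kappa}


def weighted_average(values, supports):
    """Support-weighted mean of a per-class metric (weights = true-class counts)."""
    w = np.asarray(supports, dtype=float)
    return float(np.dot(w, np.asarray(values, dtype=float)) / w.sum())


def subset_accuracy(Y_true, Y_pred):
    """Multi-label exact match: share of images whose whole label vector is correct."""
    Y_true = np.asarray(Y_true, dtype=bool)
    Y_pred = np.asarray(Y_pred, dtype=bool)
    return float(np.mean(np.all(Y_true == Y_pred, axis=1)))


# Toy two-way subclass task (1 = BRVO, 0 = CRVO); these labels are made up.
y = np.array([1, 1, 1, 1, 0, 0])
yhat = np.array([1, 1, 1, 0, 0, 1])
brvo = binary_metrics(y, yhat)
crvo = binary_metrics(1 - y, 1 - yhat)     # same predictions, CRVO as positive
print(brvo["recall"], crvo["specificity"])  # 0.75 0.75
# Support-weighted recall over both classes equals plain accuracy.
print(weighted_average([brvo["recall"], crvo["recall"]], [4, 2]), brvo["accuracy"])

# Model R, primary classes (DR, RVO, AMD, ME, VH, laser spots): per-label values
# from the results table; supports are the test-set counts from the dataset table.
recalls_R = [0.856, 0.842, 0.775, 0.597, 0.464, 0.596]
support_test = [1265, 584, 364, 548, 28, 223]
print(round(weighted_average(recalls_R, support_test), 3))  # about 0.773 (reported 0.774)
kappas_R = [0.712, 0.778, 0.704, 0.486, 0.435, 0.581]
print(round(float(np.mean(kappas_R)), 3))  # 0.616
```

Binary kappa is unchanged when the positive class is swapped, so a two-way subclass task yields a single kappa, as the subclass rows report.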


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, W.; Bian, L.; Ma, B.; Sun, T.; Liu, Y.; Sun, Z.; Zhao, L.; Feng, K.; Yang, F.; Wang, X.; et al. Interpretable Detection of Diabetic Retinopathy, Retinal Vein Occlusion, Age-Related Macular Degeneration, and Other Fundus Conditions. Diagnostics 2024, 14, 121. https://doi.org/10.3390/diagnostics14020121


Chicago/Turabian Style: Li, Wenlong, Linbo Bian, Baikai Ma, Tong Sun, Yiyun Liu, Zhengze Sun, Lin Zhao, Kang Feng, Fan Yang, Xiaona Wang, et al. 2024. "Interpretable Detection of Diabetic Retinopathy, Retinal Vein Occlusion, Age-Related Macular Degeneration, and Other Fundus Conditions." Diagnostics 14, no. 2: 121. https://doi.org/10.3390/diagnostics14020121

APA Style: Li, W., Bian, L., Ma, B., Sun, T., Liu, Y., Sun, Z., Zhao, L., Feng, K., Yang, F., Wang, X., Chan, S., Dou, H., & Qi, H. (2024). Interpretable Detection of Diabetic Retinopathy, Retinal Vein Occlusion, Age-Related Macular Degeneration, and Other Fundus Conditions. Diagnostics, 14(2), 121. https://doi.org/10.3390/diagnostics14020121