Feasibility of Encord Artificial Intelligence Annotation of Arterial Duplex Ultrasound Images

Abstract

1. Introduction

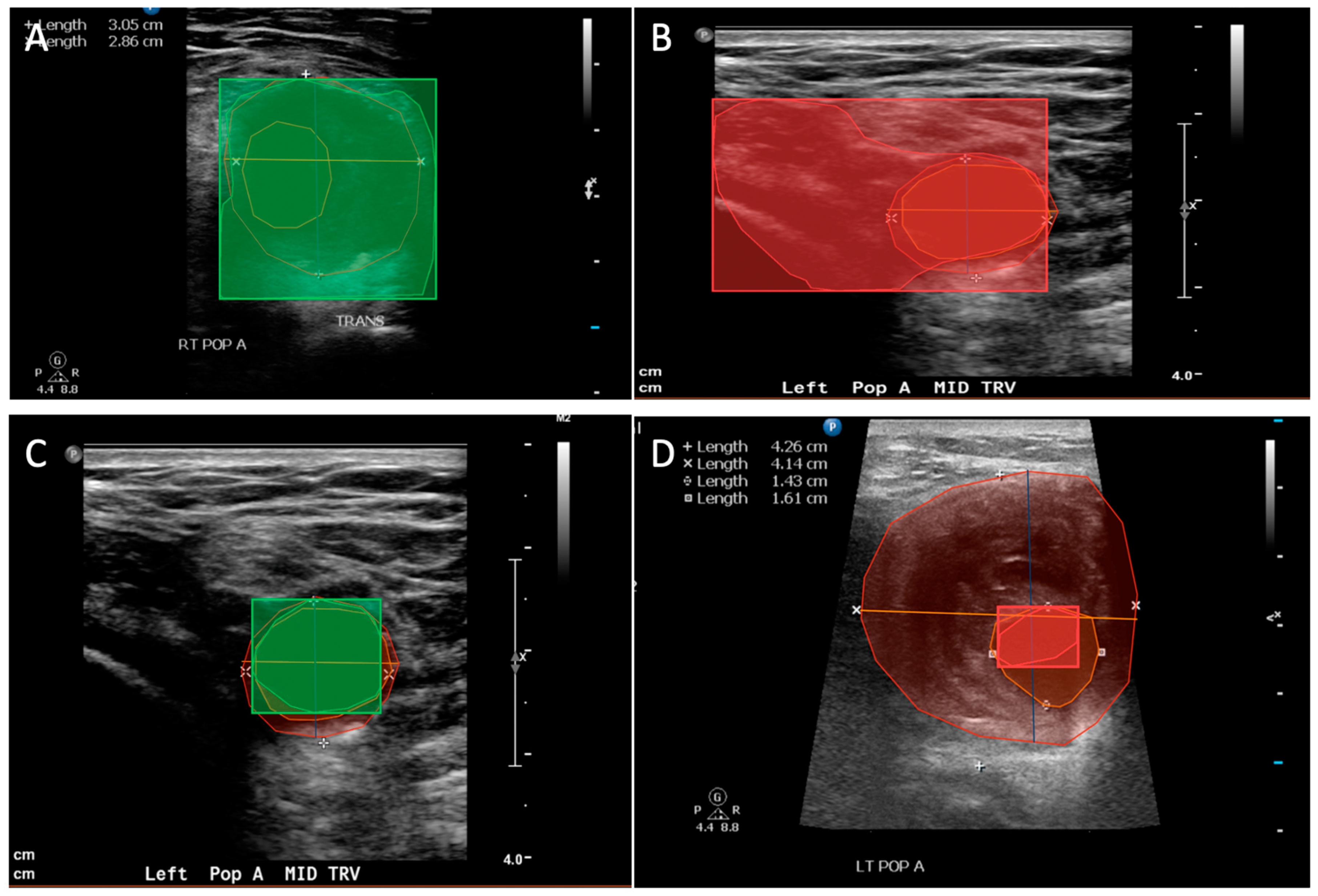

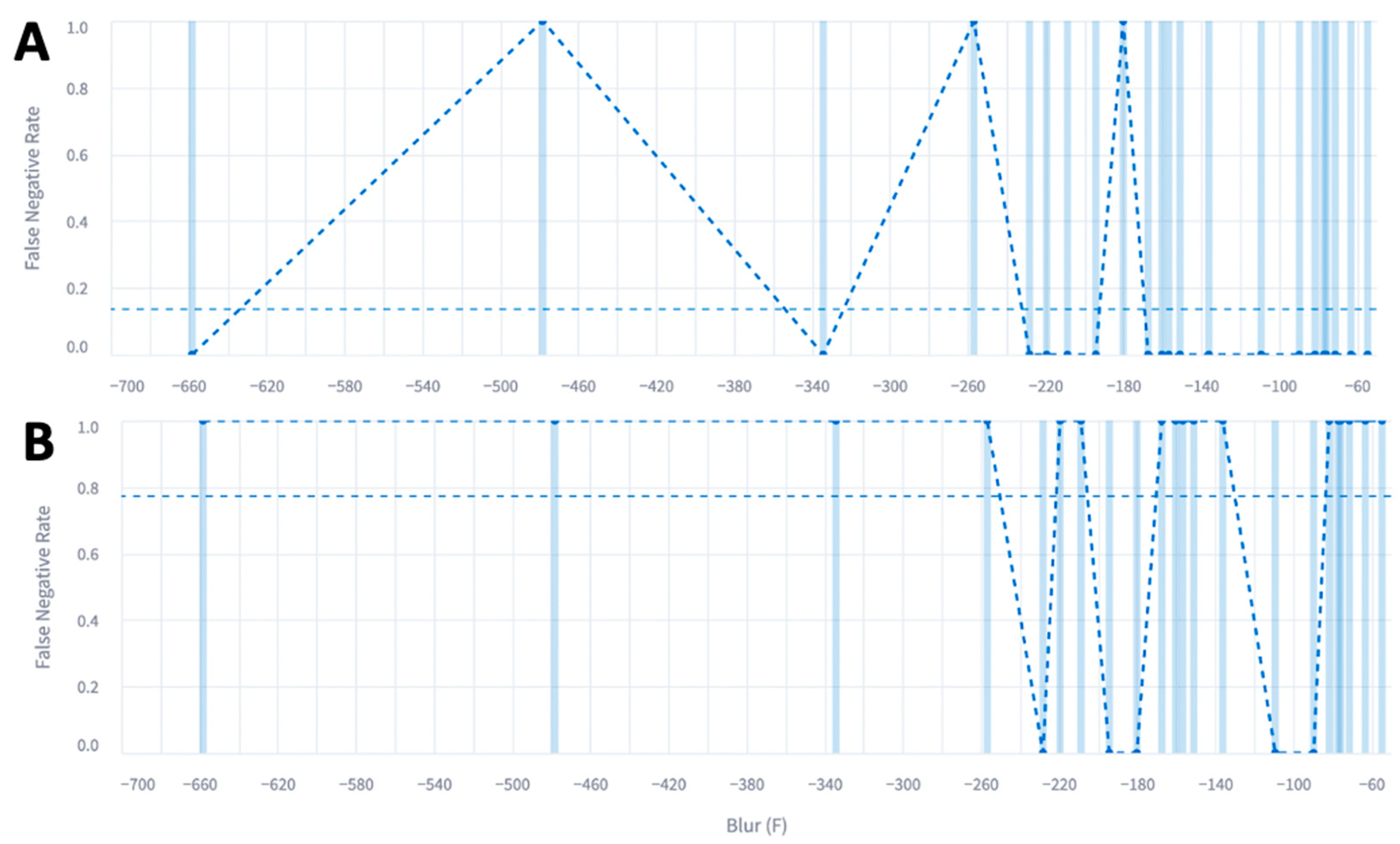

2. Materials and Methods

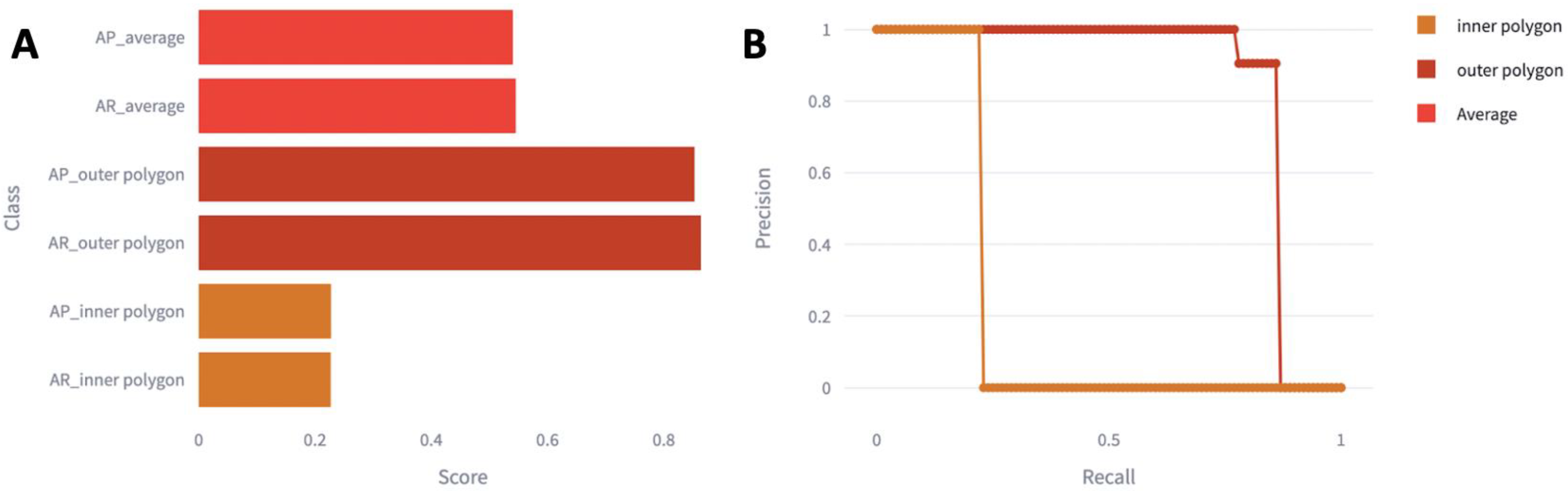

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Total Number of Patients | 44 |
|---|---|
| Race, n (%) | |
| White | 42 (95) |
| African American | 2 (5) |
| Sex, n (%) | |
| Male | 44 (100) |
| Age, median years (IQR) | 76 (56, 93) |
| Laterality of PAA, n (%) | |
| Left | 11 (25) |
| Right | 33 (75) |
| Ever Smoker, n (%) | 27 (61) |
| Hyperlipidemia, n (%) | 40 (91) |
| Hypertension, n (%) | 39 (89) |
| Type 2 Diabetes, n (%) | 13 (30) |
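As an arithmetic check on the patient characteristics table (a sketch added for illustration, not part of the authors' analysis), each percentage can be reproduced from its count. The denominator of 44 is inferred rather than stated: the Male row reports 44 (100%), and the Race and Laterality rows each sum to 44. Rounding half-up to whole percentages is an assumption about how the table was rounded.

```python
# Counts transcribed from the patient characteristics table.
# Assumption: every percentage uses the same denominator of 44 patients.
TOTAL = 44

counts = {
    "White": 42,
    "African American": 2,
    "Left PAA": 11,
    "Right PAA": 33,
    "Ever smoker": 27,
    "Hyperlipidemia": 40,
    "Hypertension": 39,
    "Type 2 diabetes": 13,
}

def percent(n: int, total: int = TOTAL) -> int:
    """Whole-number percentage, rounded half-up.

    int(x + 0.5) rounds half-up for non-negative x; Python's built-in
    round() would use round-half-to-even instead.
    """
    return int(100 * n / total + 0.5)

table = {name: percent(n) for name, n in counts.items()}
for name, pct in table.items():
    print(f"{name}: {counts[name]} ({pct})")
```

Every computed value matches the reported percentage, which supports reading all rows against the full cohort of 44.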

| | 20-Image Model | 60-Image Model | 80-Image Model |
|---|---|---|---|
| Outer Polygon | | | |
| mAP | 0.85 | 0.058 | 0 |
| True-Positive Rate | 0.86 | 0.18 | 0 |
| Inner Polygon | | | |
| mAP | 0.29 | 0.29 | 0.29 |
| True-Positive Rate | 0.23 | 0.23 | 0.23 |
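The model metrics table reports mAP and true-positive rate, but the section does not say how Encord computes them (for example, which intersection-over-union threshold makes a predicted polygon count as a match). The sketch below shows the conventional all-point interpolated average precision for one class, assuming each predicted polygon has already been marked as a true or false positive; mAP is then the unweighted mean of the per-class AP values. The function names and the upstream matching step are hypothetical and are not Encord's API.

```python
from typing import Dict, List, Tuple

def average_precision(detections: List[Tuple[float, bool]],
                      num_ground_truth: int) -> float:
    """All-point interpolated AP for a single class.

    detections: (confidence, is_true_positive) pairs. Deciding
    is_true_positive (e.g. polygon IoU above some threshold) is assumed
    to happen upstream and is not shown here.
    """
    if num_ground_truth <= 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)  # true-positive rate at this cut-off
        precisions.append(tp / (tp + fp))
    # Pad the curve so it spans recall 0 to recall 1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Replace each precision with the best precision at any higher recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the resulting step-shaped precision-recall curve.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))

def mean_average_precision(per_class: Dict[str, float]) -> float:
    """mAP: unweighted mean of per-class AP values."""
    return sum(per_class.values()) / len(per_class) if per_class else 0.0

# Hypothetical example: three predicted polygons, two clinician polygons.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)
print(round(ap, 3))  # 0.833
```

Under this definition the true-positive rate is the final recall, TP / (TP + FN), which is why the two metrics move together for the outer polygon but need not be equal.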

| | Clinician-Labeled US: Accurate | Clinician-Labeled US: Non-Accurate |
|---|---|---|
| Outer Polygon | | |
| Positive (Correct) | 19 | 3 |
| Negative (Incorrect) | 3 | NA |
| Inner Polygon | | |
| Positive (Correct) | 5 | 1 |
| Negative (Incorrect) | 17 | NA |
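The 20-Image Model true-positive rates in the model metrics table (0.86 for the outer polygon, 0.23 for the inner polygon) can be reproduced from the clinician-comparison counts if "Positive (Correct)" is read as true positives and "Negative (Incorrect)" as false negatives among the 22 images the clinician labeled accurately (19 + 3 and 5 + 17). That reading, and the link to the 20-image model, is an inference from the numbers rather than something the section states.

```python
# Counts transcribed from the clinician-comparison table, "Accurate" column.
# Assumption: "positive" = true positives, "negative" = false negatives.
table3 = {
    "Outer Polygon": {"positive": 19, "negative": 3},
    "Inner Polygon": {"positive": 5, "negative": 17},
}

def true_positive_rate(tp: int, fn: int) -> float:
    """TPR (sensitivity, recall) = TP / (TP + FN)."""
    return tp / (tp + fn)

rates = {
    name: round(true_positive_rate(c["positive"], c["negative"]), 2)
    for name, c in table3.items()
}
print(rates)  # {'Outer Polygon': 0.86, 'Inner Polygon': 0.23}
```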

| | 20-Image Model | 60-Image Model | 80-Image Model | 100 Images Total |
|---|---|---|---|---|
| Blur Metric (Median, IQR) | −138 (−268, 8) | −190 (−367, 19) | −161 (−314, 4) | −183 (−315, 5) |
| Doppler Images, % (n) | 35% (7) | 33% (20) | 33% (26) | 35% (35) |
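The section does not define the blur metric, and its negative values suggest it is not a simple variance-based score. Purely to illustrate how a no-reference sharpness score can be computed for an image, the sketch below uses a different, widely used measure, the variance of a discrete Laplacian (higher means sharper); it is not the metric behind the blur row above. The helper names and the synthetic checkerboard input are hypothetical, and the code assumes NumPy is available.

```python
import numpy as np

# Illustrative only: variance-of-Laplacian sharpness, NOT the (undefined)
# blur metric reported in the blur and Doppler table.

def laplacian_variance(image: np.ndarray) -> float:
    """Variance of the 4-neighbour discrete Laplacian; higher = sharper."""
    img = np.asarray(image, dtype=np.float64)
    # Laplacian on interior pixels: up + down + left + right - 4 * centre.
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())

def box_blur(image: np.ndarray, k: int = 5) -> np.ndarray:
    """k x k mean filter over the valid region, used here to simulate blur."""
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    out = np.zeros((h - k + 1, w - k + 1))
    for dy in range(k):
        for dx in range(k):
            out += img[dy:dy + h - k + 1, dx:dx + w - k + 1]
    return out / (k * k)

# Synthetic 64 x 64 checkerboard with 4-pixel squares as a stand-in image.
rows, cols = np.indices((64, 64))
sharp = (((rows // 4) + (cols // 4)) % 2) * 255.0
blurred = box_blur(sharp)
print(laplacian_variance(sharp) > laplacian_variance(blurred))  # True
```

Per-image scores from any such measure could then be summarized as median (IQR), the format the table uses.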

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bellomo, T.R.; Goudot, G.; Lella, S.K.; Landau, E.; Sumetsky, N.; Zacharias, N.; Fischetti, C.; Dua, A. Feasibility of Encord Artificial Intelligence Annotation of Arterial Duplex Ultrasound Images. Diagnostics 2024, 14, 46. https://doi.org/10.3390/diagnostics14010046

Bellomo TR, Goudot G, Lella SK, Landau E, Sumetsky N, Zacharias N, Fischetti C, Dua A. Feasibility of Encord Artificial Intelligence Annotation of Arterial Duplex Ultrasound Images. Diagnostics. 2024; 14(1):46. https://doi.org/10.3390/diagnostics14010046

Chicago/Turabian Style: Bellomo, Tiffany R., Guillaume Goudot, Srihari K. Lella, Eric Landau, Natalie Sumetsky, Nikolaos Zacharias, Chanel Fischetti, and Anahita Dua. 2024. "Feasibility of Encord Artificial Intelligence Annotation of Arterial Duplex Ultrasound Images." Diagnostics 14, no. 1: 46. https://doi.org/10.3390/diagnostics14010046

APA Style: Bellomo, T. R., Goudot, G., Lella, S. K., Landau, E., Sumetsky, N., Zacharias, N., Fischetti, C., & Dua, A. (2024). Feasibility of Encord Artificial Intelligence Annotation of Arterial Duplex Ultrasound Images. Diagnostics, 14(1), 46. https://doi.org/10.3390/diagnostics14010046