Automatic Analysis of MRI Images for Early Prediction of Alzheimer’s Disease Stages Based on Hybrid Features of CNN and Handcrafted Features

Abstract

1. Introduction

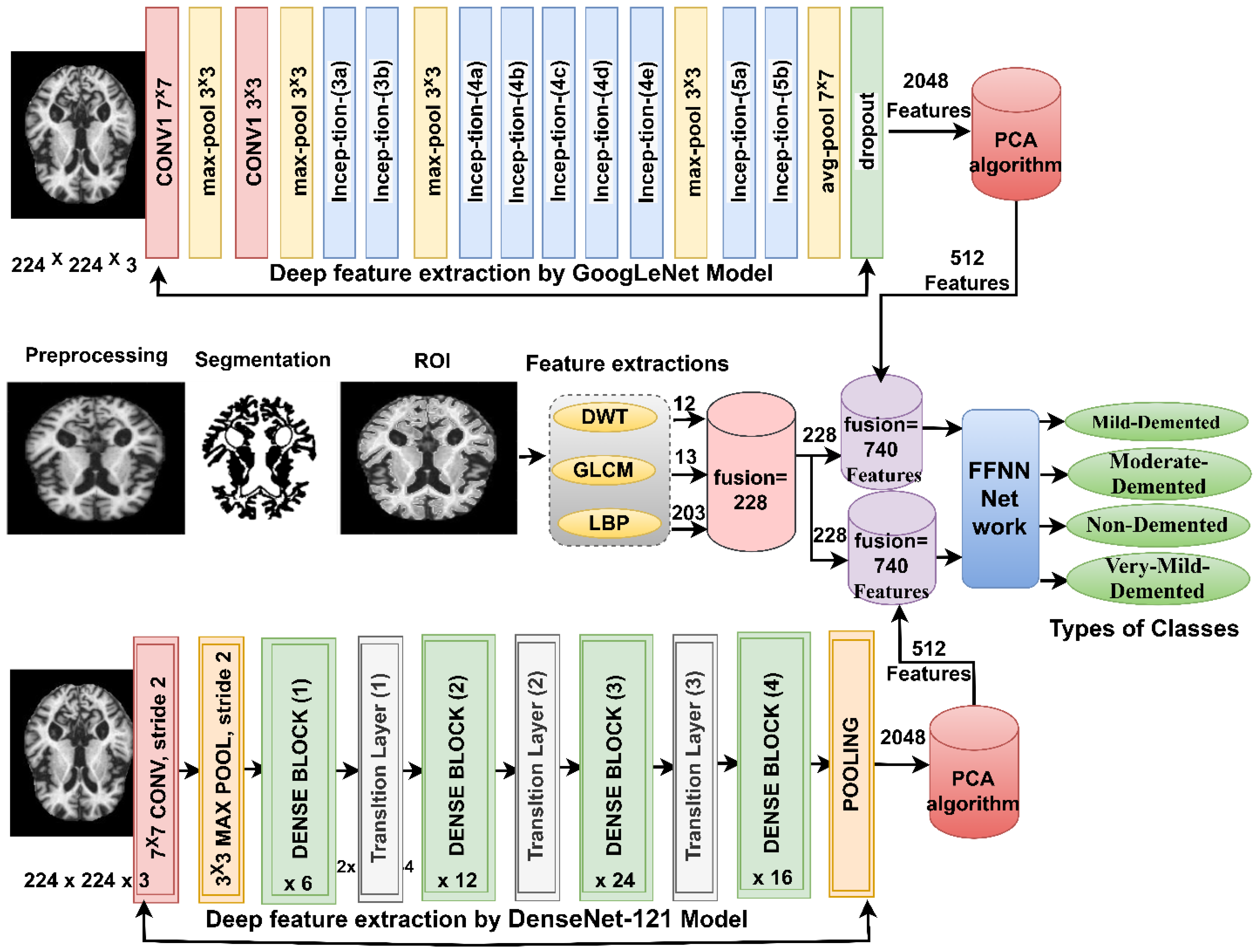

- Enhancing MRI images of Alzheimer’s disease by combining an average filter with contrast-limited adaptive histogram equalization (CLAHE).

- Combining the features of the GoogLeNet and DenseNet-121 models, both before and after reducing their high dimensionality with principal component analysis (PCA), and feeding the fused features to a feed-forward neural network (FFNN) to detect Alzheimer’s disease and predict its progression.

- Combining the features of GoogLeNet and of DenseNet-121, each separately, with handcrafted features and feeding the result to the FFNN to detect Alzheimer’s disease and predict its progression.

- Developing effective systems that help physicians and radiologists diagnose Alzheimer’s disease early and predict its progression.

Section 2 discusses previous studies on the early detection of Alzheimer’s disease. Section 3 presents the methods and materials used to analyze MRI images of Alzheimer’s disease. Section 4 summarizes the performance of the proposed systems in detecting Alzheimer’s disease and predicting its progression. Section 5 discusses the performance of all systems. Section 6 presents the conclusions of this work.

2. Related Work

3. Methods and Materials

3.1. Description of the MRI Dataset

3.2. Enhancement of MRI Images
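
The introduction lists enhancing the MRI images by combining an average filter with CLAHE. A minimal NumPy sketch of such a pipeline follows, under stated assumptions: the average filter is applied first and CLAHE second, and the 3 × 3 kernel, 8 × 8 tile grid, and clip limit of 2.0 are hypothetical settings, not values reported in the paper. The names `mean_filter`, `clahe`, and `enhance` are illustrative only.

```python
import numpy as np

def mean_filter(img, k=3):
    """k x k average filter with edge padding; returns uint8."""
    pad = k // 2
    p = np.pad(img.astype(float), pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + h, dx:dx + w]              # accumulate each shifted copy
    return np.round(out / (k * k)).astype(np.uint8)

def clahe(img, grid=(8, 8), clip=2.0):
    """Contrast-limited adaptive histogram equalization of a 2-D uint8 image."""
    h, w = img.shape
    gy, gx = grid
    th, tw = -(-h // gy), -(-w // gx)                  # tile size (ceiling division)
    p = np.pad(img, ((0, th * gy - h), (0, tw * gx - w)), mode="edge")
    n = th * tw
    limit = max(1.0, clip * n / 256)                   # clip limit in counts per bin
    luts = np.empty((gy, gx, 256))
    for i in range(gy):
        for j in range(gx):
            tile = p[i * th:(i + 1) * th, j * tw:(j + 1) * tw]
            hist = np.bincount(tile.ravel(), minlength=256).astype(float)
            excess = np.maximum(hist - limit, 0).sum()
            hist = np.minimum(hist, limit) + excess / 256  # redistribute clipped counts uniformly
            luts[i, j] = np.cumsum(hist) * 255.0 / n       # equalization mapping for this tile
    # Bilinear interpolation between the mappings of the four nearest tile centers.
    ys = (np.arange(h) + 0.5) / th - 0.5
    xs = (np.arange(w) + 0.5) / tw - 0.5
    y0 = np.clip(np.floor(ys).astype(int), 0, gy - 1)
    x0 = np.clip(np.floor(xs).astype(int), 0, gx - 1)
    y1, x1 = np.minimum(y0 + 1, gy - 1), np.minimum(x0 + 1, gx - 1)
    wy = np.clip(ys - y0, 0, 1)[:, None]
    wx = np.clip(xs - x0, 0, 1)[None, :]
    v = img.astype(int)
    Y0, Y1, X0, X1 = y0[:, None], y1[:, None], x0[None, :], x1[None, :]
    top = (1 - wx) * luts[Y0, X0, v] + wx * luts[Y0, X1, v]
    bottom = (1 - wx) * luts[Y1, X0, v] + wx * luts[Y1, X1, v]
    out = (1 - wy) * top + wy * bottom
    return np.clip(np.round(out), 0, 255).astype(np.uint8)

def enhance(img, k=3, grid=(8, 8), clip=2.0):
    """Average filter first (noise suppression), then CLAHE (local contrast)."""
    return clahe(mean_filter(img, k), grid, clip)
```

Smoothing before equalization keeps CLAHE from amplifying pixel-level noise along with the anatomical contrast, while the clip limit bounds how steeply each tile's mapping can stretch intensities.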

3.3. Evaluating Systems
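
The results tables report accuracy, sensitivity, AUC, precision, and specificity per class, together with an average. A minimal sketch of how the threshold-based metrics can be derived one-vs-rest from a multi-class confusion matrix and then macro-averaged is given below; AUC is omitted because it requires the classifier's continuous scores rather than a confusion matrix. For sensitivity, AUC, precision, and specificity, the average rows in the tables equal the unweighted mean of the per-class values (for GoogLeNet features, (89.12 + 69.36 + 98.29 + 93.87) / 4 = 87.66), whereas the average accuracy appears to be overall accuracy rather than a per-class mean. The function names and the toy matrix are hypothetical.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics per class; cm[i, j] counts true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp        # class k samples predicted as another class
    fp = cm.sum(axis=0) - tp        # other classes predicted as class k
    tn = cm.sum() - tp - fn - fp    # samples involving class k in neither role
    return {
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),
    }

def macro_summary(cm):
    """Unweighted (macro) mean of the per-class metrics, plus overall accuracy."""
    cm = np.asarray(cm, dtype=float)
    summary = {name: float(v.mean()) for name, v in per_class_metrics(cm).items()}
    summary["accuracy"] = float(np.trace(cm) / cm.sum())
    return summary

# Toy 2-class confusion matrix (illustrative, not data from the paper).
cm = [[8, 2],
      [1, 9]]
summary = macro_summary(cm)
```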

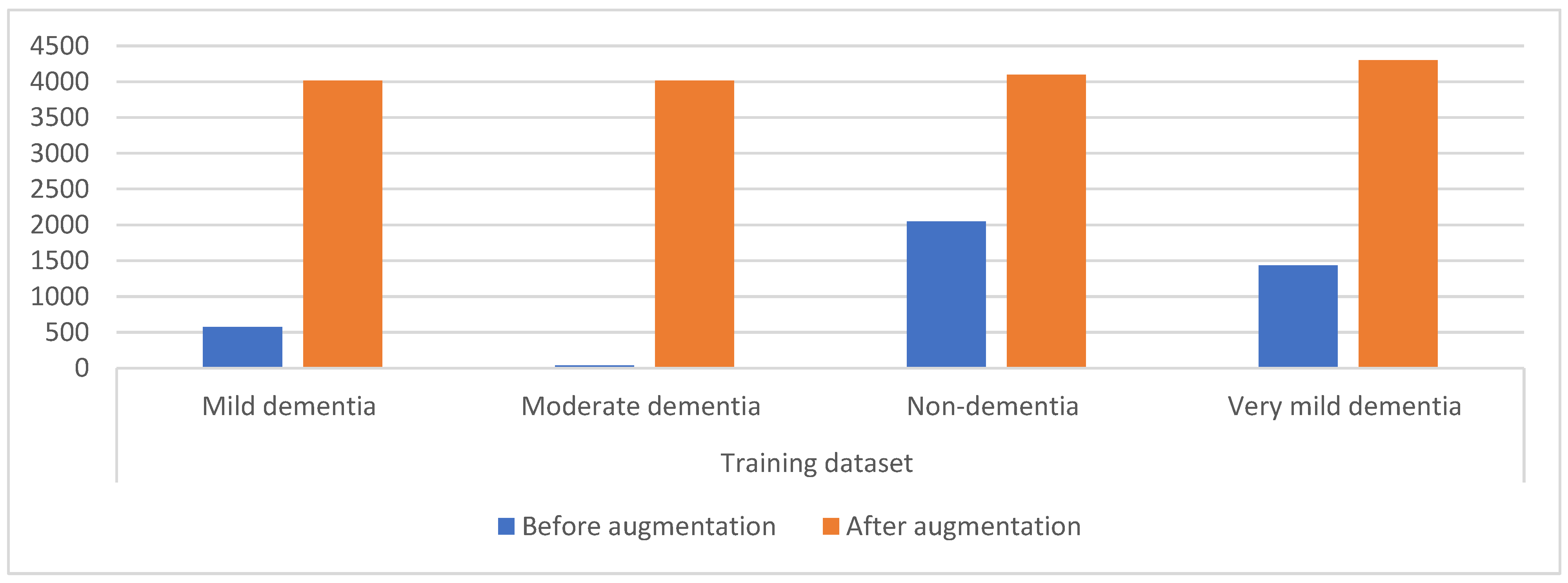

3.4. Data Augmentation and Balancing Dataset

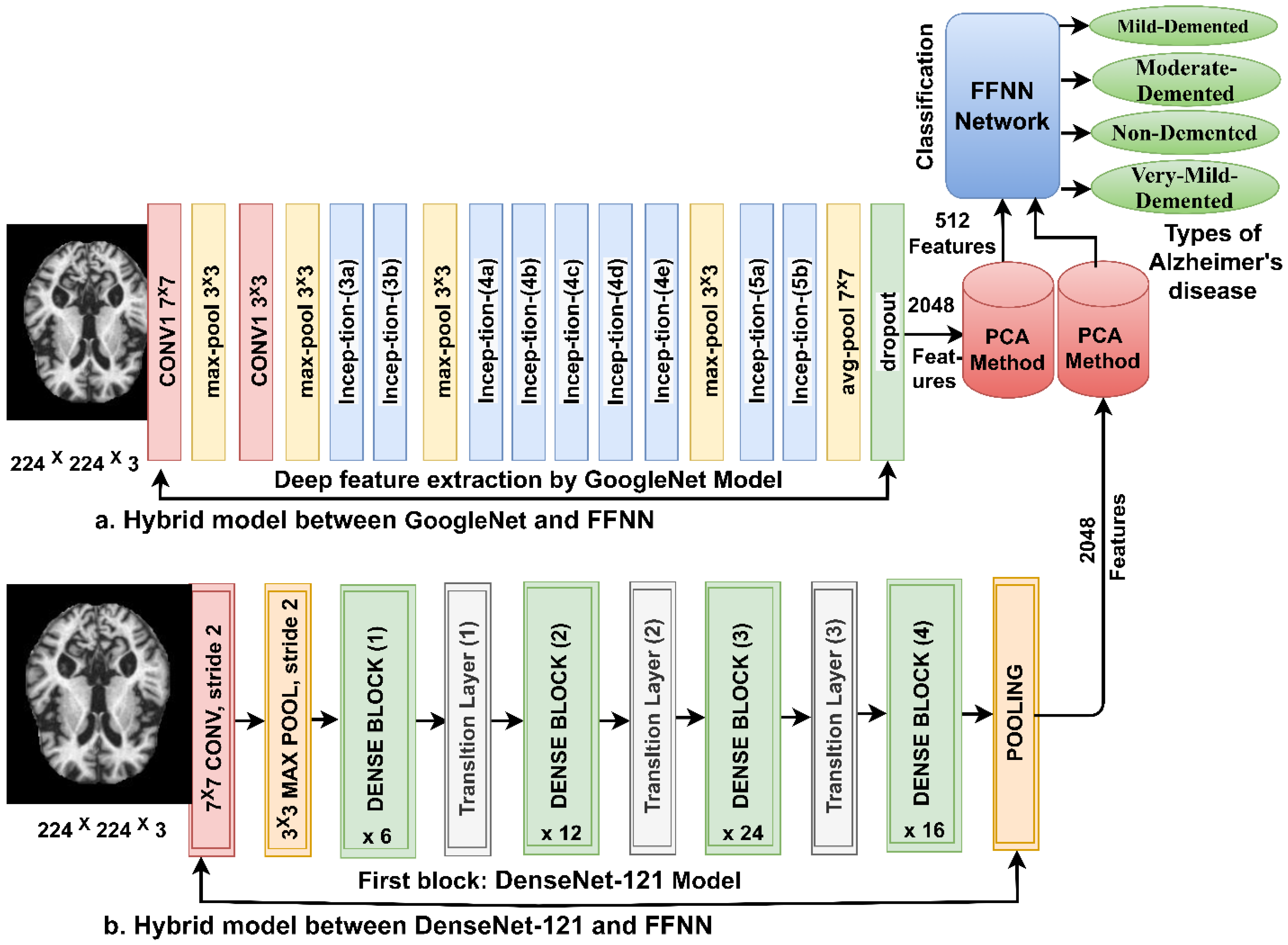

3.5. FFNN Network According to the Features of CNN Models

3.5.1. Extracting the Deep Features

3.5.2. FFNN Network
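
The FFNN classifier is named here without architectural detail. A minimal single-hidden-layer sketch trained with plain full-batch gradient descent on the cross-entropy loss (the quantity examined in Section 4.3.3) is shown below. The tanh activation, 16 hidden units, learning rate, epoch count, and toy clusters are all assumptions, and the training algorithm used in the paper may differ.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)          # shift logits for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_ffnn(X, y, n_classes, hidden=16, lr=0.5, epochs=500, seed=0):
    """One tanh hidden layer + softmax output, full-batch gradient descent on cross-entropy."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, n_classes))
    b2 = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                       # one-hot targets
    losses = []
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)                   # hidden activations
        P = softmax(H @ W2 + b2)                   # class probabilities
        losses.append(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1)))
        dZ2 = (P - Y) / len(X)                     # gradient of mean cross-entropy w.r.t. logits
        dH = (dZ2 @ W2.T) * (1.0 - H ** 2)         # back-propagate through tanh (before W2 update)
        W2 -= lr * (H.T @ dZ2)
        b2 -= lr * dZ2.sum(axis=0)
        W1 -= lr * (X.T @ dH)
        b1 -= lr * dH.sum(axis=0)
    return (W1, b1, W2, b2), losses

def predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)

# Toy usage: four well-separated 2-D clusters standing in for fused feature vectors.
rng = np.random.default_rng(1)
centres = np.array([[3.0, 3.0], [-3.0, 3.0], [-3.0, -3.0], [3.0, -3.0]])
y = rng.integers(0, 4, 400)
X = centres[y] + rng.normal(0.0, 0.5, (400, 2))
params, losses = train_ffnn(X, y, n_classes=4)
train_acc = float(np.mean(predict(params, X) == y))
```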

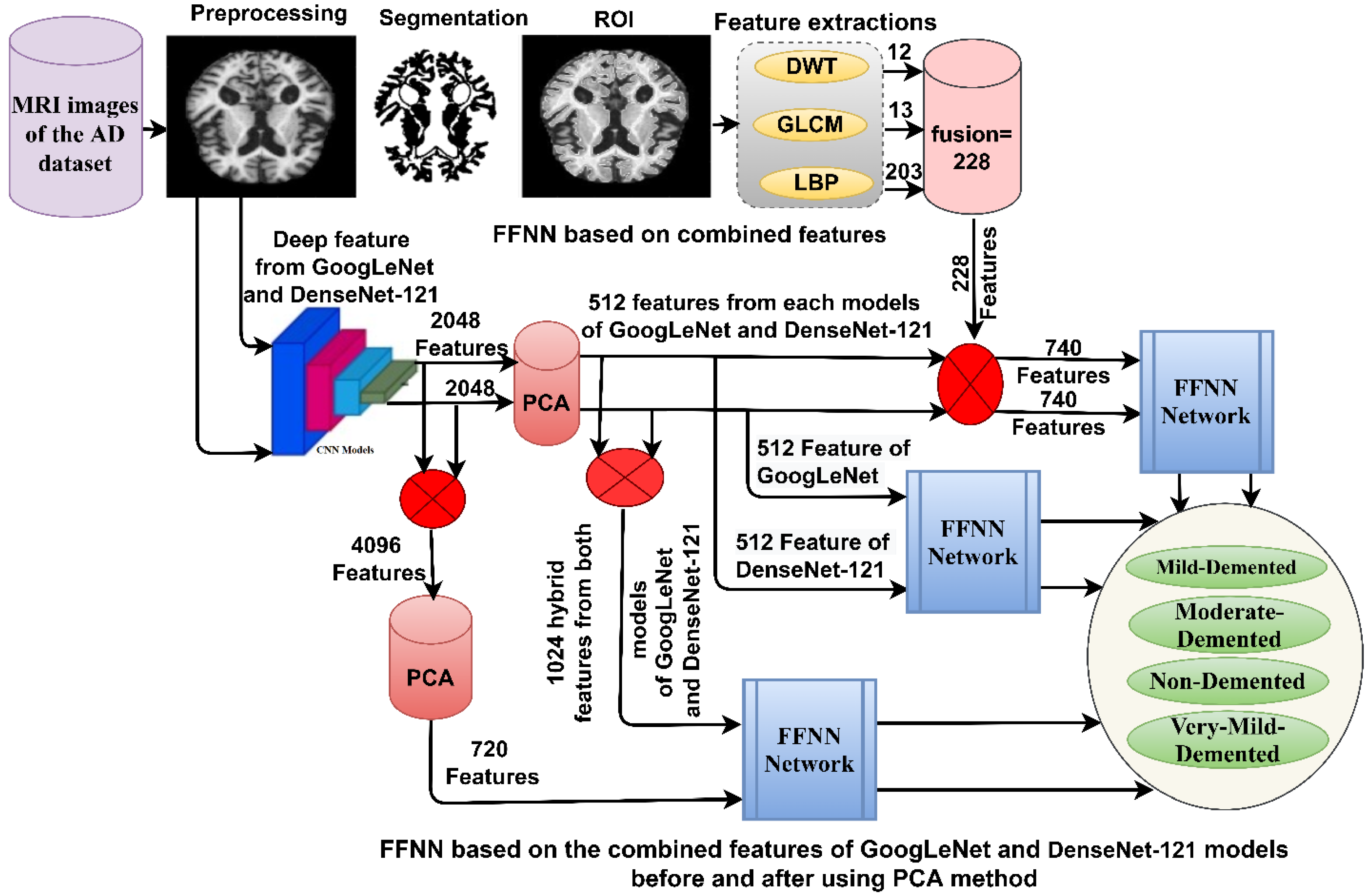

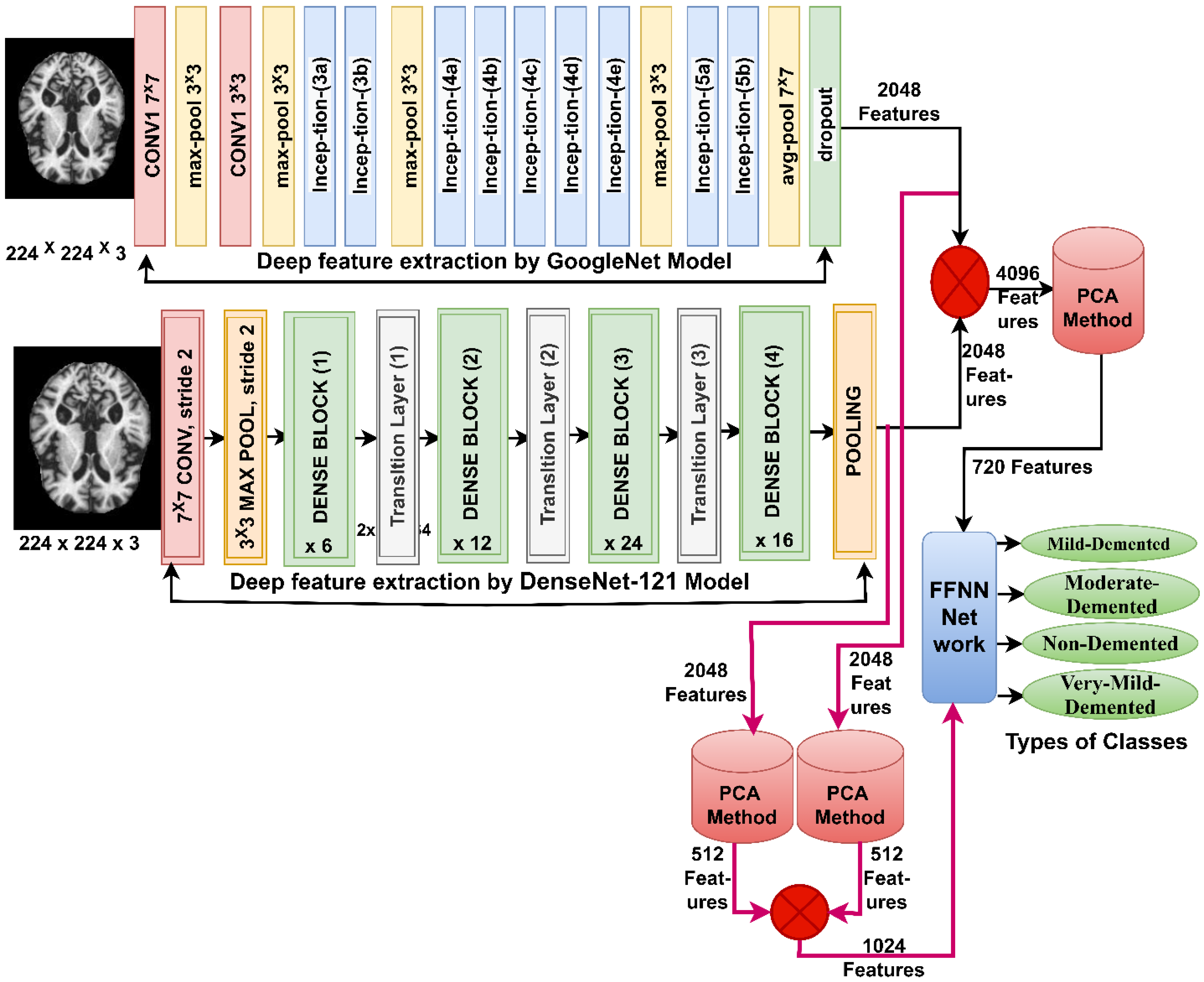

3.6. FFNN Network According to Fusing Features of CNN Models
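
The introduction describes combining GoogLeNet and DenseNet-121 features before and after dimensionality reduction with PCA. The NumPy sketch below assumes one plausible reading: "before PCA" concatenates the raw per-image feature vectors, and "after PCA" reduces each model's features separately and then concatenates them. The 1024-dimensional inputs (the size of each network's final pooled layer), the 64 retained components, and the random stand-in features are hypothetical. PCA is fitted on the training split only, so no test information leaks into the projection.

```python
import numpy as np

def pca_fit(X, k):
    """Mean and top-k principal axes of the rows of X, via SVD of the centered data."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]

def pca_apply(X, mean, axes):
    return (X - mean) @ axes.T

def fuse_before_pca(g, d):
    """Serial fusion: concatenate raw GoogLeNet and DenseNet-121 vectors per image."""
    return np.hstack([g, d])

def fuse_after_pca(g_train, d_train, g_test, d_test, k):
    """Reduce each model's features with PCA fitted on training data, then concatenate."""
    g_mean, g_axes = pca_fit(g_train, k)
    d_mean, d_axes = pca_fit(d_train, k)
    train = np.hstack([pca_apply(g_train, g_mean, g_axes), pca_apply(d_train, d_mean, d_axes)])
    test = np.hstack([pca_apply(g_test, g_mean, g_axes), pca_apply(d_test, d_mean, d_axes)])
    return train, test

# Hypothetical stand-ins for pooled CNN features of 200 training and 50 test images.
rng = np.random.default_rng(0)
g_tr, d_tr = rng.normal(size=(200, 1024)), rng.normal(size=(200, 1024))
g_te, d_te = rng.normal(size=(50, 1024)), rng.normal(size=(50, 1024))
raw = fuse_before_pca(g_tr, d_tr)
tr, te = fuse_after_pca(g_tr, d_tr, g_te, d_te, k=64)
```

Either fused matrix can then be passed to the FFNN; reducing the dimensionality first shrinks the input layer and the number of weights the classifier must learn from a limited number of images.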

3.7. FFNN Network According to Fusing the Features of CNN Models with Handcrafted Features
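
The handcrafted descriptors are not specified in this section. Purely as an illustration, the sketch below assumes two common texture descriptors, GLCM statistics (contrast, homogeneity, energy, and correlation at a horizontal offset of one pixel) and a 256-bin local binary pattern (LBP) histogram, and appends them to a CNN feature vector for one image. The descriptor choice, the quantization to 8 gray levels, and the function names are hypothetical.

```python
import numpy as np

def glcm_features(img, levels=8):
    """Contrast, homogeneity, energy, correlation from a symmetric GLCM (offset: 1 pixel right)."""
    q = (img.astype(int) * levels) // 256          # quantize 0..255 to 0..levels-1
    a, b = q[:, :-1].ravel(), q[:, 1:].ravel()     # horizontally adjacent pixel pairs
    P = np.zeros((levels, levels))
    np.add.at(P, (a, b), 1)
    P = P + P.T                                    # make the co-occurrence matrix symmetric
    P /= P.sum()
    i, j = np.indices(P.shape)
    mu_i, mu_j = (i * P).sum(), (j * P).sum()
    sd_i = np.sqrt((((i - mu_i) ** 2) * P).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * P).sum())
    # Correlation is undefined for a constant image; it is set to 1.0 in that case.
    corr = ((i - mu_i) * (j - mu_j) * P).sum() / (sd_i * sd_j) if sd_i * sd_j > 0 else 1.0
    return np.array([
        ((i - j) ** 2 * P).sum(),                  # contrast
        (P / (1.0 + np.abs(i - j))).sum(),         # homogeneity
        (P ** 2).sum(),                            # energy (angular second moment)
        corr,                                      # correlation
    ])

def lbp_histogram(img):
    """Normalized 256-bin histogram of basic 8-neighbor LBP codes (border pixels excluded)."""
    x = img.astype(int)
    h, w = x.shape
    c = x[1:-1, 1:-1]                              # center pixels
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(c)
    for bit, (dy, dx) in enumerate(offsets):
        nb = x[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]   # neighbor at (dy, dx) of every center
        codes |= (nb >= c).astype(int) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()

def fuse_with_handcrafted(cnn_vec, img):
    """Serial fusion of one image's CNN features with its handcrafted texture features."""
    return np.concatenate([cnn_vec, glcm_features(img), lbp_histogram(img)])
```

Because the deep and handcrafted parts have very different scales, standardizing each feature (for example, z-scoring with training-set statistics) before feeding the fused vector to the FFNN is usually advisable.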

4. Results of the Performance of the Systems

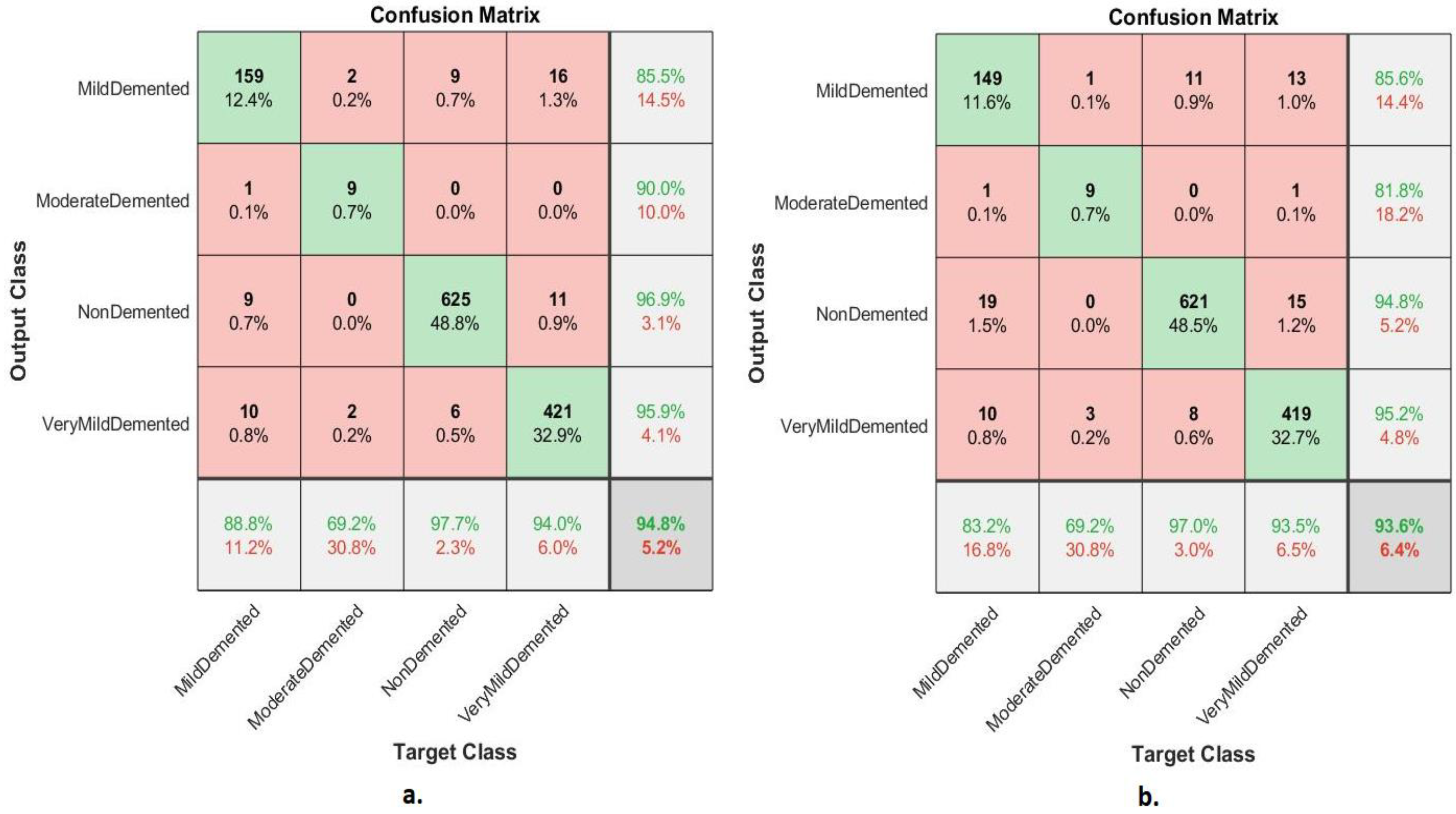

4.1. Results of FFNN Network According to the Features of CNN Models

4.2. Results of FFNN According to Fusing the Features of CNN Models

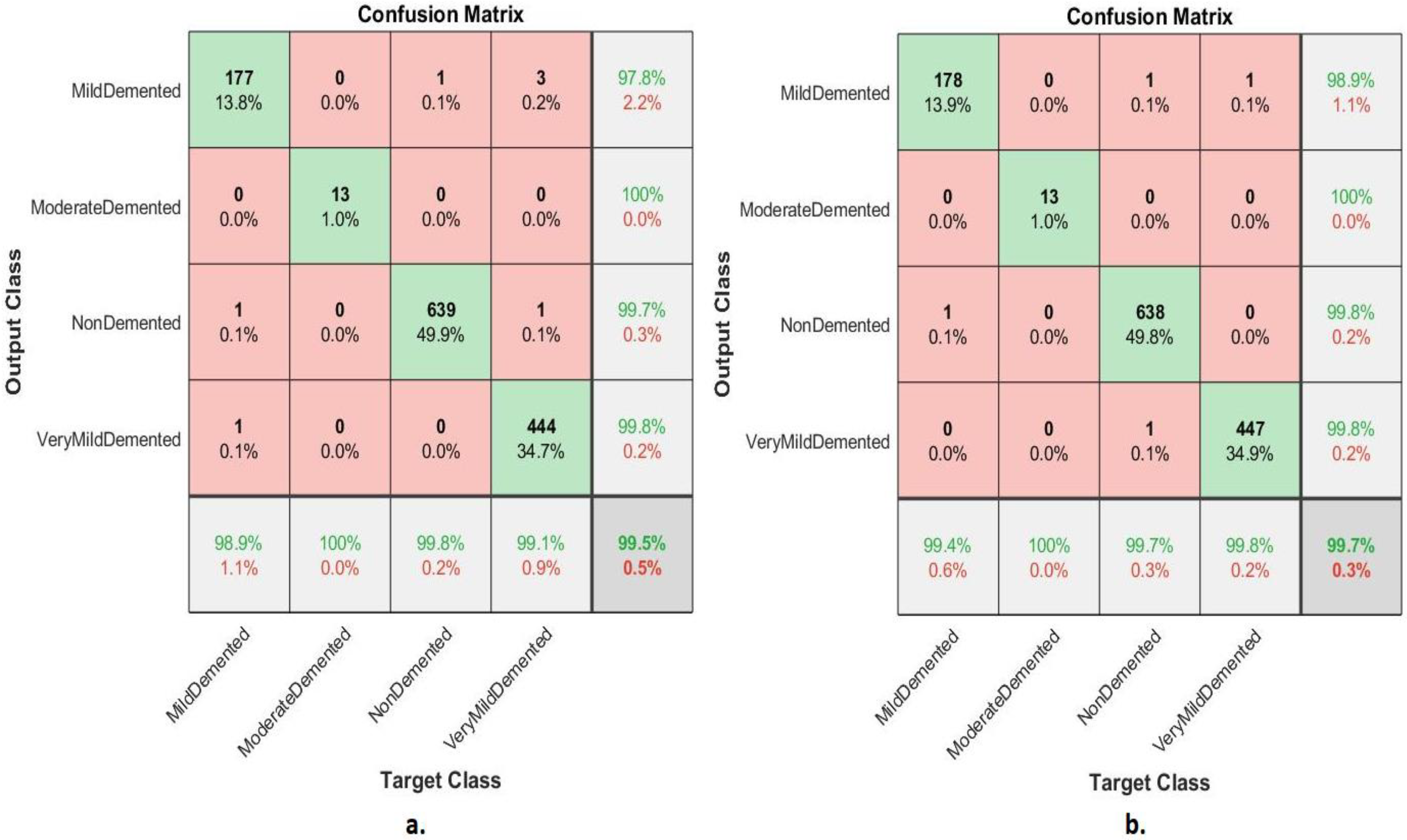

4.3. Results of FFNN According to Fusing the CNN Features with Handcrafted Features

4.3.1. Confusion Matrix

4.3.2. Error Histogram

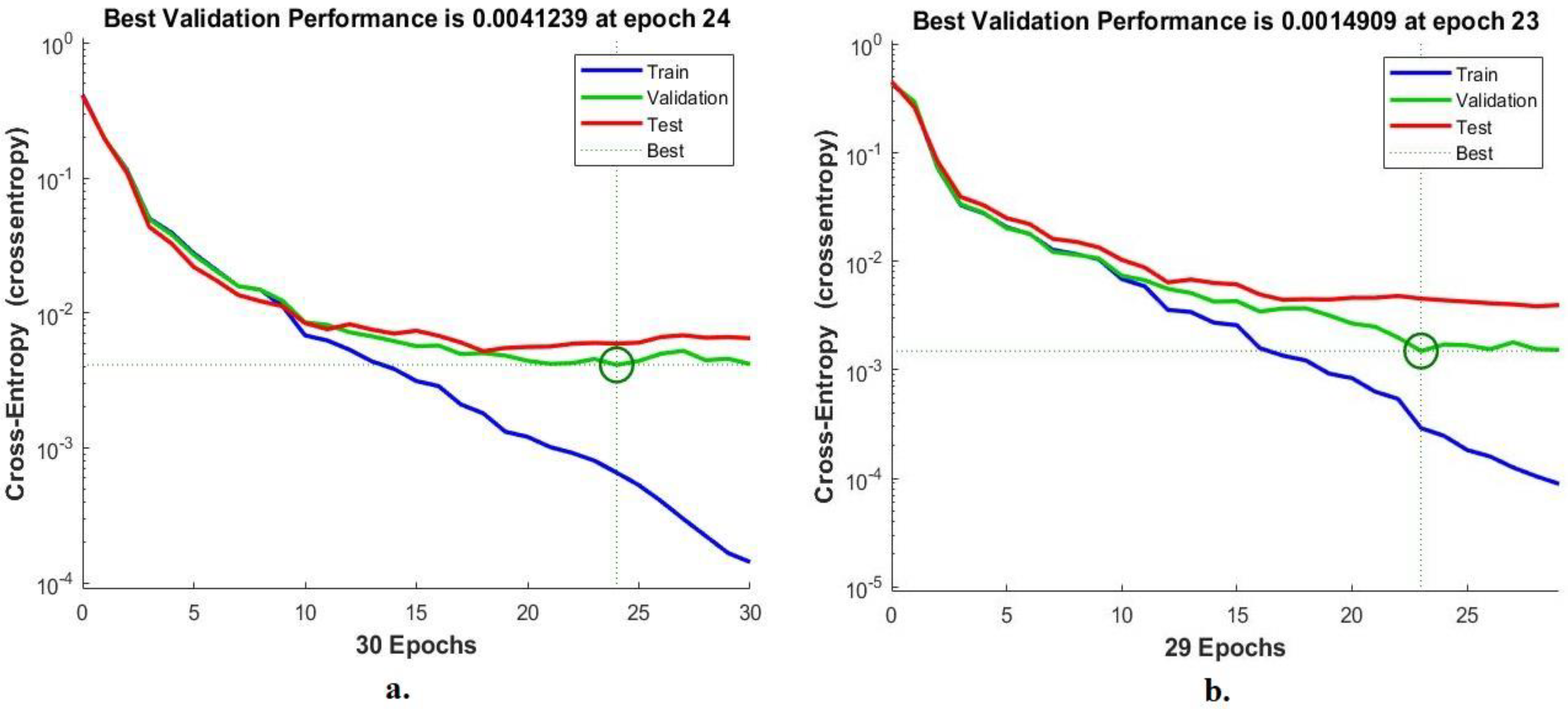

4.3.3. Cross-Entropy

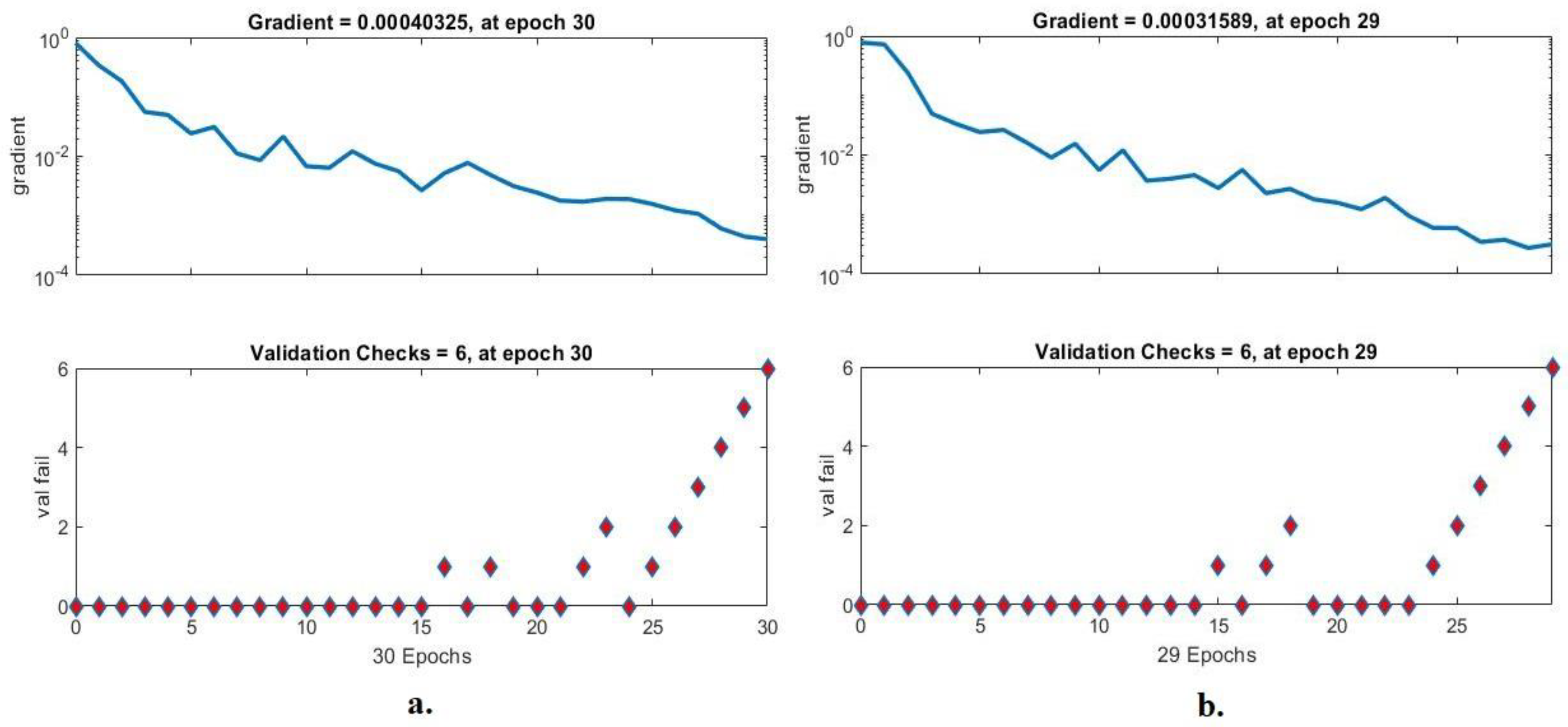

4.3.4. Gradient and Validation Checks

4.4. Results of Systems Generalization on the ADNI Dataset

5. Discussion of the Performance of Approaches

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Training dataset | Mild Dementia | Moderate Dementia | Non-Dementia | Very Mild Dementia |
|---|---|---|---|---|
| Before augmentation | 574 | 41 | 2048 | 1434 |
| After augmentation | 4018 | 4018 | 4096 | 4302 |
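
Each after-augmentation count in the training-set table is an exact integer multiple of the corresponding original count (×7, ×98, ×2, ×3), which suggests a fixed number of augmented copies per original image in each class; that reading is an inference from the numbers, not a procedure stated here. The short check below computes the factors and the largest-to-smallest class ratio before and after augmentation.

```python
# Per-class image counts from the training-set table.
before = {"Mild Dementia": 574, "Moderate Dementia": 41,
          "Non-Dementia": 2048, "Very Mild Dementia": 1434}
after = {"Mild Dementia": 4018, "Moderate Dementia": 4018,
         "Non-Dementia": 4096, "Very Mild Dementia": 4302}

factors = {}
for cls, n in before.items():
    q, r = divmod(after[cls], n)        # r == 0 means an exact integer multiple
    factors[cls] = q if r == 0 else after[cls] / n

imbalance_before = max(before.values()) / min(before.values())
imbalance_after = max(after.values()) / min(after.values())
print(factors)
print(f"largest/smallest class: {imbalance_before:.1f} before, {imbalance_after:.2f} after")
```

The class imbalance drops from roughly 50:1 (2048 vs. 41 images) to about 1.07:1 (4302 vs. 4018), so the classifier no longer sees the Moderate Dementia class almost fifty times less often than the Non-Dementia class.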

| Models | Classes of AD | Accuracy % | Sensitivity % | AUC % | Precision % | Specificity % |
|---|---|---|---|---|---|---|
| FFNN based on features from GoogLeNet | Mild_Demented | 88.8 | 89.12 | 92.51 | 85.5 | 97.68 |
| | Moderate_Demented | 69.2 | 69.36 | 85.72 | 90 | 99.73 |
| | Non_Demented | 97.7 | 98.29 | 97.25 | 96.9 | 97.18 |
| | Very_Mild_Demented | 94 | 93.87 | 94.69 | 95.9 | 98.41 |
| | Average | 94.80 | 87.66 | 92.54 | 92.08 | 98.25 |
| FFNN based on features from DenseNet-121 | Mild_Demented | 83.2 | 83.34 | 91.49 | 85.6 | 98.44 |
| | Moderate_Demented | 69.2 | 69.1 | 89.12 | 81.8 | 99.55 |
| | Non_Demented | 97 | 97.21 | 96.84 | 94.8 | 95.16 |
| | Very_Mild_Demented | 93.5 | 93.97 | 95.79 | 95.2 | 97.38 |
| | Average | 93.60 | 85.91 | 93.31 | 89.35 | 97.63 |

| Models | Classes of AD | Accuracy % | Sensitivity % | AUC % | Precision % | Specificity % |
|---|---|---|---|---|---|---|
| FFNN based on the merging of CNN features before PCA | Mild_Demented | 94.4 | 94.27 | 97.52 | 93.9 | 98.96 |
| | Moderate_Demented | 69.2 | 69.44 | 84.56 | 69.2 | 99.62 |
| | Non_Demented | 98.6 | 98.84 | 98.25 | 98.9 | 99.1 |
| | Very_Mild_Demented | 97.1 | 97.24 | 96.67 | 96.75 | 98.37 |
| | Average | 97.20 | 89.95 | 94.25 | 89.69 | 99.01 |
| FFNN based on the merging of CNN features after PCA | Mild_Demented | 96.1 | 96.29 | 97.95 | 96.1 | 99.4 |
| | Moderate_Demented | 92.3 | 92.1 | 94.64 | 92.3 | 99.82 |
| | Non_Demented | 99.2 | 98.78 | 98.1 | 99.8 | 99.71 |
| | Very_Mild_Demented | 99.3 | 99.24 | 97.54 | 98.5 | 99.22 |
| | Average | 98.80 | 96.60 | 97.06 | 96.68 | 99.54 |

| Models | Classes of AD | Accuracy % | Sensitivity % | AUC % | Precision % | Specificity % |
|---|---|---|---|---|---|---|
| FFNN with GoogLeNet and handcrafted features | Mild_Demented | 98.9 | 99.4 | 99.12 | 97.8 | 99.52 |
| | Moderate_Demented | 100 | 99.56 | 98.52 | 100 | 99.68 |
| | Non_Demented | 99.8 | 99.87 | 99.56 | 99.7 | 99.86 |
| | Very_Mild_Demented | 99.1 | 98.72 | 99.46 | 99.8 | 99.72 |
| | Average | 99.50 | 99.39 | 99.17 | 99.33 | 99.70 |
| FFNN with DenseNet-121 and handcrafted features | Mild_Demented | 99.4 | 99.3 | 99.63 | 98.9 | 99.8 |
| | Moderate_Demented | 100 | 99.98 | 99.84 | 100 | 99.55 |
| | Non_Demented | 99.7 | 99.58 | 99.28 | 99.8 | 99.71 |
| | Very_Mild_Demented | 99.8 | 99.68 | 99.49 | 99.8 | 99.6 |
| | Average | 99.70 | 99.64 | 99.56 | 99.63 | 99.67 |

| Systems | Classes of ADNI Dataset | Accuracy % | Sensitivity % | AUC % | Precision % | Specificity % |
|---|---|---|---|---|---|---|
| FFNN with GoogLeNet and handcrafted features | AD | 88.2 | 88.2 | 89.2 | 85.7 | 97.7 |
| | CN | 98.3 | 98.1 | 93.2 | 95 | 96.4 |
| | EMCI | 87.5 | 87.7 | 93.9 | 95.5 | 98.6 |
| | LMCI | 71.4 | 71.2 | 87.6 | 83.3 | 99 |
| | MCI | 91.5 | 90.9 | 92.1 | 89.6 | 97.9 |
| | Average | 92.3 | 87.22 | 91.2 | 89.82 | 97.92 |
| FFNN with DenseNet-121 and handcrafted features | AD | 94.1 | 94.2 | 95.1 | 82.1 | 96.9 |
| | CN | 95.7 | 96.1 | 97.2 | 98.2 | 99.2 |
| | EMCI | 95.8 | 95.8 | 96.1 | 95.8 | 98.7 |
| | LMCI | 78.6 | 79.4 | 88.4 | 73.3 | 98.1 |
| | MCI | 89.4 | 88.9 | 94.9 | 95.5 | 99.2 |
| | Average | 93.4 | 90.88 | 94.34 | 88.98 | 98.42 |

| Technique | Features | Mild Dementia % | Moderate Dementia % | Non-Dementia % | Very Mild Dementia % | Accuracy % |
|---|---|---|---|---|---|---|
| FFNN network | GoogLeNet | 88.8 | 69.2 | 97.7 | 94 | 94.8 |
| | DenseNet-121 | 83.2 | 69.2 | 97 | 93.5 | 93.6 |
| FFNN network, combined features before PCA | GoogLeNet + DenseNet-121 | 94.4 | 69.2 | 98.6 | 97.1 | 97.2 |
| FFNN network, combined features after PCA | GoogLeNet + DenseNet-121 | 96.1 | 92.3 | 99.2 | 99.3 | 98.8 |
| FFNN network, combined features | GoogLeNet and handcrafted | 98.9 | 100 | 99.8 | 99.1 | 99.5 |
| | DenseNet-121 and handcrafted | 99.4 | 100 | 99.7 | 99.8 | 99.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khalid, A.; Senan, E.M.; Al-Wagih, K.; Al-Azzam, M.M.A.; Alkhraisha, Z.M. Automatic Analysis of MRI Images for Early Prediction of Alzheimer’s Disease Stages Based on Hybrid Features of CNN and Handcrafted Features. Diagnostics 2023, 13, 1654. https://doi.org/10.3390/diagnostics13091654
