Abstract

It is crucial to accurately categorize cancers using microarray data. Researchers have employed a variety of computational intelligence approaches to analyze gene expression data. It is believed that the most difficult part of the problem of cancer diagnosis is determining which genes are informative. Therefore, selecting genes to study as a starting point for cancer classification is common practice. We offer a novel approach that combines the Runge Kutta optimizer (RUN) with a support vector machine (SVM) as the classifier to select the significant genes in the detection of cancer tissues. As a means of dealing with the high dimensionality that characterizes microarray datasets, the preprocessing stage of the ReliefF method is implemented. The proposed RUN–SVM approach is tested on binary-class microarray datasets (Breast2 and Prostate) and multi-class microarray datasets in order to assess its efficacy (i.e., Brain Tumor1, Brain Tumor2, Breast3, and Lung Cancer). Based on the experimental results obtained from analyzing six different cancer gene expression datasets, the proposed RUN–SVM approach was found to statistically beat the other competing algorithms due to its innovative search technique.

Keywords:

feature selection; Runge Kutta optimizer; microarray; gene expression; support vector machines; cancer classification MSC:

68T99; 68U99

1. Introduction

For the past few years, gene expression profiling has allowed scientists to simultaneously examine thousands of genes. As a result, significant knowledge has been gained regarding the processes that take place within cells. In situations involving cancer, having access to such particular information is helpful for both diagnosing the disease and evaluating the patient’s prognosis. Microarray data features such as low sample size, high noise levels, and high dimensionality persist in making gene selection a challenging task. Identifying the best candidates for gene classification is essential to resolving this problem. This method will not only cut down on computational costs, but it will also enable physicians to focus on a small subset of physiologically significant genes that are linked to specific cancers, allowing for the formulation of more cost-effective studies [1]. In addition, a method with a high degree of accuracy can aid people with cancer by facilitating early detection and the development of new treatments [2].

The “curse of dimensionality”, manifested by small sample sizes and the presence of genes that are unrelated to one another, plagues the microarray data [3,4,5]. Due to the presence of this condition, it is more challenging to classify the typical gene expression profile for any given sample. Gene selection has been applied to microarray gene expression analysis as a means of overcoming the “curse of dimensionality” by determining the subset of features that are most significant [6].

There are a few different ways to go about selecting genes, but they can all be broken down into three broad categories: embedding, wrapping, and filtering. Using statistical methods like minimum redundancy maximum relevance, information gain, and ReliefF, the filter strategy collects intrinsic gene properties in order to differentiate the targeted phenotypic class [7]. This approach is easy to implement, but it does not take into account the complex web of interactions between genes. In order to evaluate potential new feature gene subsets, the “wrapper” strategy [8] attempts to pick a tiny subset of the initial feature set, typically through the use of an induction algorithm. Wrapper methods typically outperform filters because they account for multivariate inter-correlation among genes. The optimum number of feature genes for a given classifier can be calculated automatically using the wrapper approach. The embedded method, like the wrapper strategy, permits the combination of many strategies for doing feature subset selection (Kahavi and John, 1997; [9]). Genetic algorithms (GAs) are commonly employed as the search engine for the feature subset in the embedding approach (GAs) [10], while other classification techniques, such as GA-SVM [11], GA-K closest neighbors (GA-KNN) [12], and so on, are used to pick the smallest feature set.

Selecting the significant genes from gene expression data has been implemented using the wellknown machine learning approaches, including Neural Networks (NN), support vector machines (SVMs), and K-nearest neighbor (KNN). There is some evidence that gene selection can improve the accuracy of sample classification [13]. According to studies, the “no free lunch theory” states that no single optimization strategy is generally accepted as providing a globally optimal solution to any given optimization problem [14]. Different practical engineering applications were used to evaluate the RUN algorithm’s efficacy in comparison to numerous metaheuristic algorithms. The RUN’s outcomes were encouraging and on par with previous competitions [15]. Consequently, the objective of this study is to select the genes in the gene expression profile that are the most predictive and beneficial by making use of RUN, a recently proposed optimization method. A preprocessing stage that uses the ReliefF filtering strategy is used to reduce the dimensionality of gene expression datasets and select the valuable genes from among these datasets. As they perform better than other classification methods [16], SVMs have become the most widely used classification method in the field of cancer research, most notably in the analysis of gene expression data [17,18]. in this study, SVM is used in a hybrid cancer classification algorithm. The article also compares the effectiveness of the RUN algorithm as a generation selection algorithm to the effectiveness of other well-known selection algorithms [19], such as the Whale Optimization Algorithm (WOA) [20], the Artificial Bee Colony (ABC) [21], the Harris Hawks Optimization (HHO) [22], the Hunger Games Search (HGS) [23], the Golden Jackal Optimization (GJO) [24], and Manta Ray Foraging Optimization (MRFO) [25]. To evaluate how well the suggested RUN—SVM strategy performs in comparison to existing selection algorithms, the experimental study makes use of six unique microarray datasets.

To emphasize the significance of this study, the following are the contributions that it makes:

- Here we provide RUN—SVM, a novel approach to classifying gene expression data that combines the SVM classifier with the RUN feature selection optimizer.

- Overcomes the “feature dimensionality curse” in order to eliminate unnecessary features and focus on the most informative ones when classifying cancers based on gene expression data.

- Assesses the effectiveness of the introduced classifier in binary and multiclass classification tasks using six benchmark datasets.

- Compares the capability and feasibility of the proposed classification algorithm to existing approaches through a comprehensive evaluation.

The remaining sections of the article are presented in the following order: The review of the available literature can be found in Section 2. In Section 3, we will go over the first stages of the classification methods that make use of ReliefF, RUN, and SVM. The RUN–SVM technique that has been suggested is discussed in Section 4. In Section 5, In Section, both the experimental design and the results of the experiments are described. Section 6 contains a summary of the findings as well as recommendations for further research.

2. Literature Review

A number of different gene selection optimization approaches have been presented in the literature as a way to improve the accuracy with which cancers are diagnosed. The following is a list of the most recent studies that have been conducted for the purpose of making suggestions as to which genes should be employed in the classification and diagnosis of cancer.

Li [1], proposed a strategy for gene selection that included a GA and a PSO with support vector machine (SVM) classification. Their proposed methodology was put to the test using data from microarray samples. The authors’ intention with their combined strategy was to steer the proposed method away from the local optimum. Using an improved binary PSO and an SVM classifier.

In order to analyze microarray datasets, it was suggested that combining SVM with the artificial bee colony approach might be beneficial [26]. The proposed model was implemented on the group of genes that were chosen in order to evaluate how well it worked. The high computing cost associated with the large dimensionality of the microarray data was a hindrance for all of the strategies, despite the fact that the proposed model performed better than earlier studies that used this methodology. The authors of this study conducted experiments with both genetic algorithm, and particle swarm optimization [2]. It was determined that the suggested approach of GA augmented with SVM is the best option. Instead, both of the techniques required training a classifier with every gene simultaneously due to the high complexity of gene expression datasets. Comparisons with sundry state-of-the-art techniques revealed outcomes that were competitive when measured against industry standards. In the majority of executions, they achieved results with a classification rate of 100% and few genes per subgroup (3 and 4). By combining IG and SVM, the authors of [27] achieved the best results possible for classifying cancers. The most informative and important genes were selected using IG from the original datasets, and then redundant genes were omitted using SVM. They then used the LIBSVM classifier to evaluate the quality of the collected informative genes [28]. For this investigation, the authors prioritized selecting the fewest genes while ignoring the pursuit of the highest accuracy.

In [29], the authors applied filter-, wrapper-, and correlation-based feature selector (CFS) techniques to the acute leukemia microarray dataset. These methods had been used with a number of ML algorithms, such as naive Bayes, decision tree (DT), and SVM. They demonstrated that by integrating several feature selection and classification techniques, relevant genes can be selected with strong certainty. This was the first paper to discuss the biological and computational evidence for zyxin’s role in leukaemogenesis.

In [30], The researchers used a 1-norm support vector machine with squared loss and a second predictor made up of an SVM and the nearest neighbor to classify cancers rapidly using NN. When it comes to assessment and storage that algorithm just needs a few genes. This, of course, ignores the question of how many genes were actually selected.

Mohamad et al., [31], suggested a near-optimal, small selection of the most beneficial genes relevant to cancer classification. The current rule for updating velocity and particle position was updated by the method of Mohamad et al.

Wrapper strategies combining the SVM classification method and the FireFly algorithm (FF-SVM) have been proposed as a means to classify cancer microarray gene expression profiles [32]. The experimental results showed that the suggested algorithm performed better in terms of classification accuracy and selected fewer genes than the other existing techniques. Also, the top five biologically significant genes associated with cancer across all ten datasets were determined.

The authors of [33] concentrated on the filter-based feature selection approach. The primary goals of the proposed effort were to shorten computation times and improve categorization and prediction accuracy. They suggested work that decreases the complexity of data collection as well as the redundancy between different features in order to accomplish this. They employed a score-based criterion fusion approach for feature selection that increased prediction accuracy and cut down on calculation time.

A hybrid model, SVM-mRMRe, was presented for gene selection by the authors of [34]. The most important genes from high-throughput microarrays have been extracted using the proposed model, which combines the feature selection approach, mRMRe, with the SVM classifier. The introduced algorithm was evaluated using eight benchmarking microarray datasets. Several aspects of the selected data set were analyzed by four different classifiers including NN, KNN, SVM, and random forest (RF). As demonstrated experimentally, the suggested approach enhances the current state of the art in cancer tissue classification while using fewer data.

In [35], a number of combinations of wrapping approaches (using NB and KNN with rank search, greedy stepwise, and best first) and ranking methods (using information gain [IG] with threshold of 1% and 5%) were employed for choosing the most crucial genes for microarray datasets. Brain cancer, CNS, breast cancer, and lung cancer datasets were among these datasets. Comparing the scenario where all features were employed with the experimental findings, all feature selection strategies consistently performed well (no feature selection methods). The NB with IG and wrapper (NB and best first) and the KNN with IG and wrapper (KNN and best first) acquired the best performance and defeated all other approaches out of the several techniques that were utilized.

A new hybrid feature selection technique was introduced in [36]. It involved five filters and a wrapper mechanism. It was discovered that the hybrid collection of individual filters has yielded better performance than the retrieved one of the single filter. The suggested method had been tried out and evaluated against well-known hybrid features. Using 10 benchmark micro-array datasets, the suggested strategy was evaluated and contrasted with cutting-edge approaches. Experimental results showed that the proposed strategy exhibited superior performance to state-of-the-art methods in regards to precision in classification.

3. The Methodology

Here, we provide an overview of the methods that would be employed in the proposed RUN—SVM classification technique. These methods include the SVM classification method, the RUN optimization method, and the ReliefF feature selection method for microarray data.

3.1. ReliefF Filter Method

The first Relief algorithm [37] has been developed further with the addition of ReliefF [38]. Selecting one instance at random from the data, the primary structure of Relief then finds its nearest neighbor from the other class as well as its nearest neighbor from the same class. Sampled instances’ attribute values are compared to those of their nearest neighbors in order to revise the relevance scores for each attribute. According to this line of thinking, a valuable feature should be able to distinguish between instances of several classes while maintaining the same value for instances of the same class. With its enhanced capabilities, ReliefF is better able to handle multiclass situations and input that is illegible or noisy. This methodology has global applicability, low bias, involves feature interaction, and has the potential to uncover local dependencies that are missed by other methodologies.

3.2. Runge Kutta Optimizer (RUN)

The goal of optimization algorithms is to enhance the efficiency with which they solve various optimization problems by striking a balance between exploiting and exploring the problem space. This is done in order to improve the efficiency with which they tackle various optimization problems. Iman Ahmadianfar and her colleagues recently presented the RUN, which is one example of such an optimization method [15]. This algorithm makes use of a specialized search mechanism that is founded on the Runge Kutta method, and RUN is an essential component of this algorithm. The second stage is termed solution quality enhancement, and its purpose is to increase the quality of the solutions produced for the researched optimization problem while simultaneously minimizing the hazards of becoming trapped in a locally optimal solution. The mathematical formulation of RUN is explained with the help of an example that can be found in the next subsection.

3.2.1. Initialization

The decision variables of the optimization problem are initialized at random to begin the optimization process in RUN by:

where the lowest and highest ranges of the decision variable v of dimensions 1,2,…, and M are, respectively, , and .

3.2.2. Solution Update

The well-known RUN mechanism is applied to the following equations in order to modify the solutions at each iteration:

where , and are solutions selected at random and is a typical distributed random number. is a random number in the range of [0, 1]. r is an integer number that may be 1 or −1. g is a random number in the range of [0, 2]. and are solutions around which the local search is performed in order to explore the promising regions in the search space. was used to achieve an appropriate balance between exploration and exploitation.

Additionally, using , which is the position increment, and RUN coefficients through , the subsequent equation can be used to calculate , an adaptable factor, and , a RUN-guided search method:

3.2.3. Enhanced Solution Quality (ESQ)

Using the ESQ technique, RUN incorporates better solution quality, escape from the local optima, and quick convergence. In order to use this approach, three new solutions—, , and —will be created based on the equation shown below:

where the value of is a random number between [0, 1]. is the global best solution throughout iterations. r is an integer that can be either 1, 0, or −1. is the best solution per iteration. A random number v is equal to 2 × . The random number w gets smaller as the number of iterations rises.

The pseudo-code steps of the RUN method is depicted in Algorithm 1.

| Algorithm 1 The RUN algorithm |

|

3.3. Support Vector Machine (SVM)

In the realm of supervised machine learning, SVMs are a helpful tool for dealing with classification and regression issues [39]. Despite this, the majority of their applications are found in classification-related debates. It is difficult to find a linear classifier in the dataset that can differentiate between the different classes. The SVM, widely regarded as the only classification method capable of handling this situation, discovers a linear classifier model for categorizing the input data within an excess margin limit after mapping and modifying the input space to turn it into a high-dimensional space. This model is used to determine where the data falls within the space. When dealing with data that has a lot of dimensions, SVM performs the best as a classifier [40]. In light of this, an SVM classification technique is utilized in this investigation in order to assess how useful RUN is when applied to microarrays classification.

The results of a support vector machine are controlled by two variables. C regulates the compromise between accurate training-data classification and a consistently smooth resolution limit. Meanwhile, represents the effect of a single training session. Algorithm 2 demonstrates the pseudo-code for the SVM model.

| Algorithm 2 The SVM algorithm |

|

In order to partition the data into K separate sets, a statistical technique known as K-fold cross-validation is utilized. As a result, only the training subsets were employed at first; however, more recently, other subsets have been used to test or validate the training subsets. When testing the model, a pending subsample will be used for validating the data, and K-1 sets would be employed for training points.

4. The Proposed RUN-SVM Approach

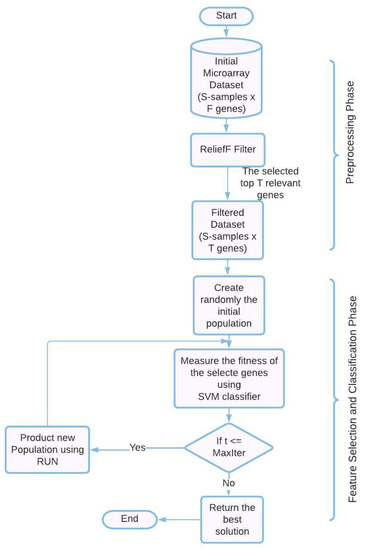

The combination of ReliefF, RUN, and SVM into a single framework for the effective classification of microarray datasets is novel, as far as we are aware. Not only is RUN being used for the first time as a gene selection method for cancer gene expression datasets, but it is also being used in a novel way. Here, we offer an overview of the hybrid cancer classification method we’ve proposed for picking the most precise SVM-classified genes from cancer gene expression datasets. In order to offer a set of genes that are predictive as well as informative, the proposed RUN–SVM approach, which is depicted in Figure 1, includes two processes: (1) a preprocessing step, and (2) a feature selection (FS) and classification Step. These steps are displayed in order. It is also possible to evaluate the predictability and informability of the other gene selection strategies by using the same two steps of the RUN–SVM approach that we have presented.

Figure 1.

The proposed RUN-SVM approach.

4.1. Preprocessing Phase

Applying algorithms directly to microarray datasets is a real challenge due to the fact that these datasets are of a high dimension and contain thousands of genes that need to be filtered. As a result of this, using these kinds of datasets without first filtering them makes it difficult to accurately train the SVM classification algorithm. For its excellent performance, great efficiency, and widespread application in the field of resolving problems with huge dimensions, we decided to make use of the ReliefF algorithm in this particular piece of work. As a result, the first stage of the proposed RUN–SVM methodology is called ReliefF. It can be utilized for the purpose of removing redundant genes, which contribute to the background noise and lower the classification accuracy (for more information on ReliefF, see Section 3.1).

4.2. Feature Welection and Classification Phase

To pick the most important genes from the smaller pool of genes generated in the first stage, we use wrapper approaches like the RUN algorithm and the SVM. First, RUN generates a randomly selected initial population, which is then subjected to an evaluation based on a fitness function. When the FS and classification phase procedures have been completed, the criterion has been met, and the ideal solution has been created, one with the highest classification performance and the fewest informative genes. This guarantees a productive outcome from the procedure. The SVM classifier performs an evaluation of the RUN solutions through the repetition phase in order to gauge how effective they are. The fitness function is evaluated based on the percentage of samples that are correctly categorized in comparison to the total number of samples (for more information on RUN and SVM, see Section 3.2 and Section 3.3).

where C indicates samples that were successfully classified, and T stands for the overall number of input samples.

The pseudo-code of the proposed RUN–SVM approach is demonstrated in Algorithm 3.

| Algorithm 3 Pseudo code of the proposed RUN-SVM approach |

|

5. Experimental Evaluation and Discussion

5.1. Datasets and the Running Environment

In this investigation, we obtained the gene expression datasets by downloading them from a public database referred to as the GEMS Database. This database is a repository for gene expression data [41,42]. There are two types of gene expression datasets that have been downloaded: multi-class microarray datasets (i.e., Brain Tumor1, Brain Tumor2, Breast3, and Lung Cancer) and binary-class microarray datasets (Breast2 and Prostate). The dataset has been preprocessed to produce the gene expression matrix—S by F matrix, where S is the number of samples and F is the number of genes present in the given samples—which is used to represent the gene expression dataset. The six cancer gene expression datasets employed to evaluate the effectiveness of the proposed RUN–SVM approach are shown in Table 1. A computer with Microsoft Windows 8.1 with core i-5, 2.50 GHz, 64-bit, and 8 GB main memory was employed for the user testing environment, and Matlab R2015a served as the executable environment. Each of the 30 independent runs of the proposed RUN–SVM approach includes 30 search agents and 100 iterations.

Table 1.

An overview of the datasets acquired by the microarrays.

5.2. Evaluation Metrics

The proposed RUN—SVM method was compared to the other approaches using four different measures of accuracy: best fitness function, standard deviation (STD), worst fitness function, and mean fitness function. In general, these are the four accuracy measurements that were used. The best fitness score at a run I is .

- 1.

- Mean: is calculated by multiplying the number of times the algorithm is run by its fitness value (N).

- 2.

- Best fitness function: is a value that, after running the algorithm N times, outperforms all other best fitness values.

- 3.

- Worst fitness function: the smallest value for each maximum fitness metric that can be achieved by repeated iterations of the method N times

- 4.

- The standard deviation (STD): is a quantity that can be obtained by running the algorithm N times and measuring the amount of change in the fitness values. Data points with a smaller STD value show that they cluster tightly around the mean, while data points with a larger STD value suggest that they may deviate greatly from the mean.

5.3. Experimental Findings

The cross-validation method was implemented in this investigation in order to enhance classification performance, minimize bias in point selection for testing and training, and improve overall accuracy. Therefore, 10-fold cross-validation was utilized during the process of training the SVM classifier. In addition, the ReliefF filter and SVM classifier-improved comparison algorithms were each subjected to 30 separate runs with each run consisting of 100 iterations.

5.3.1. Statistical Results Analysis

According to the results displayed in Table 2, Table 3 and Table 4, RUN ranks first in terms of best fitness values, worst fitness values, and mean in the Brain Tumor1, Brain Tumor2, and Breast3 datasets, respectively. Table 5 displays that the three fitness metrics with the highest rankings in Beast2 are RUN, MRFO, and HGS, with RUN being the gold standard. Table 6 displays that RUN and HGS have the highest fitness values in the Lung Cancer dataset, but RUN also has the lowest standard deviation. While HGS is rated highest for greatest fitness, Table 7 shows that RUN ranks highest for mean, worst, and standard deviation on the Prostate dataset.

Table 2.

Performance evaluation of the proposed algorithm on the Brain Tumor 1 dataset.

Table 3.

Performance evaluation of the proposed algorithm on the Brain Tumor 2 dataset.

Table 4.

Performance evaluation of the proposed algorithm on the Breast 3 dataset.

Table 5.

Performance evaluation of the proposed algorithm on the Breast 2 dataset.

Table 6.

Performance evaluation of the proposed algorithm on the Lung Cancer dataset.

Table 7.

Performance evaluation of the proposed algorithm on the Prostate dataset.

5.3.2. Convergence Behavior Analysis

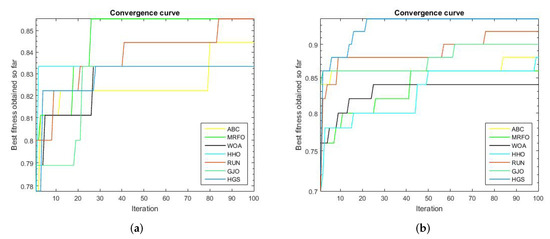

We compared the proposed RUN–SVM approach to some other popular optimization techniques and showed how it converges after 100 iterations. Based on the results shown in Figure 2a, the proposed RUN–SVM approach and the MRFO algorithm are the only ones that can be recognized to have the best accuracy for the Brain Tumor1 dataset. According to Figure 2c, only the proposed RUN–SVM technique and the GJO algorithm are able to achieve the maximum accuracy for the Breast2 dataset within the 100 iterations.

Figure 2.

Convergence curves for the comparison algorithms. (a) Brain Tumor1 Convergence Curve. (b) Brain Tumor2 Convergence Curve. (c) Breast2 Convergence Curve. (d) Breast3 Convergence Curve. (e) Lung Cancer Convergence Curve. (f) Prostate Convergence Curve.

Figure 2d, shows that the introduced RUN–SVM algorithm, together with the HGS algorithm, are the only algorithms that can be said to attain the best performance in the classification of samples in the Breast3 dataset. In addition, Figure 2e, the proposed RUN–SVM approach is the sole algorithm to achieve the greatest accuracy for the Lung Cancer dataset. Only the WOA algorithm and the suggested RUN—SVM method have been shown to achieve the maximum precision on the Prostate database, as depicted in Figure 2f.

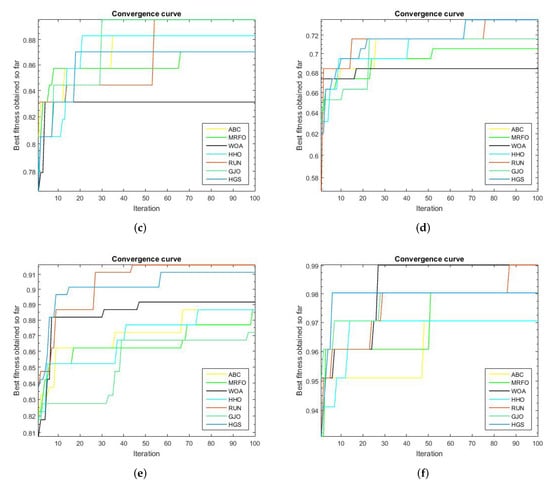

5.3.3. Boxplot Behavior Analysis

In this section, we draw attention to the results of the proposed RUN–SVM approach. The proposed RUN–SVM approach outperformed the competition in this evaluation, as is shown by the boxplot curves in Figure 3a–f.

Figure 3.

Boxplots curves for the comparison algorithms. (a) Brain Tumor1 Boxplot. (b) Brain Tumor2 Boxplot. (c) Breast2 Boxplot. (d) Breast3 Boxplot. (e) Lung Cancer Boxplot. (f) Prostate Boxplot.

5.4. Discussion

The purpose of this section is to examine the numerous systems now in use for classifying cancers. The goal of this research is to propose a search strategy for the FS problem that takes into account the challenges posed by high-dimensional datasets. The research suggested using a ReliefF filtering approach for preprocessing and then combining RUN and SVM for the FS and classification stages. The effectiveness of the proposed RUN–SVM approach is demonstrated by experimental analysis and a comparison study. The following is a list of benefits that come with using our proposed RUN–SVM technique:

- The datasets that were utilized for the analysis of this study contain feature sizes that range anywhere from 4869 to 12,600 features, which makes for an excellent testing environment for an optimization method.

- Any chance for improving RUN–SVM can easily be implemented due to its straightforward architecture.

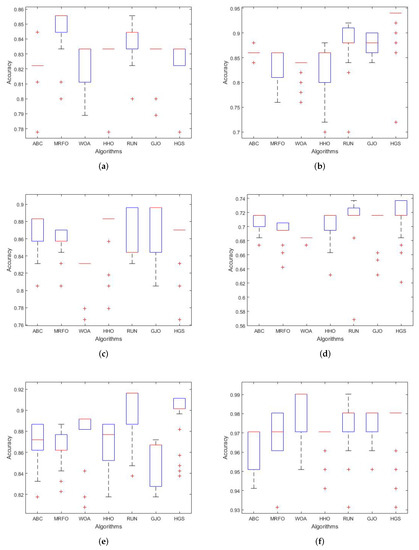

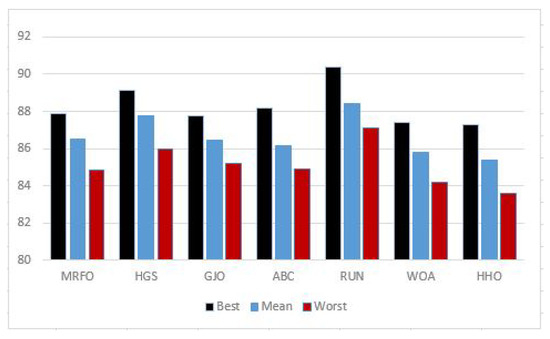

- Figure 4 shows how RUN–SVM outperforms other optimization algorithms in terms of classifying cancer.

Figure 4. Average accuracy percentage.

Figure 4. Average accuracy percentage.

On the other hand, the proposed RUN–SVM method offers certain restrictions, and these are listed below.

- Due to the fact that RUN–SVM uses a randomization-based optimization technique, the features it uses may vary from run to run. The feature subset picked in one run may not be present in another.

- As most genes are related to one another either directly or indirectly, microarray data processing is quite complex.

- The limited number of samples available in microarray datasets, the presence of noise and genes that are not relevant to the study, and the “curse of dimensionality” all add to the difficulty of classifying a particular sample.

6. Conclusions and Future Work

Using the Runge Kutta optimizer (RUN) approach, this investigation presents an improved way for choosing significant genes from cancer microarray patterns. The first primary goal was to pick the genes from the microarray datasets that were the most relevant and helpful with the least amount of effort, and the second primary goal was to select the genes that were the most accurate with the least amount of effort. In this paper, a wrapper gene selection approach called RUN—SVM is proposed. RUN—SVM combines the RUN algorithm with the support vector machine (SVM) classifier in order to identify the most relevant genes from a small dataset after removing redundant genes and selecting related genes from gene expression datasets. Six standard microarray datasets were used to evaluate the proposed RUN–SVM approach, namely Brain Tumor1, Brain Tumor2, Breast2, Breast3, Lung Cancer, and Prostate. The findings of the experiments showed that the RUN-SVM methodology obtains the highest level of accuracy in comparison to other comparative methodologies, such as HHO, MRFO, GJO, HGS, WOA, and ABC. The effectiveness of recent deep learning classifiers, such as transformer-based approaches and self-attention methods, could be examined for cancer classification using microarray datasets as part of future work that is associated with this research. Also, the performance of those classifiers could be evaluated in order to determine whether or not it would be enhanced by making use of the optimizer that was proposed in this study.

Author Contributions

Supervision, E.H.H.; conceptualization, N.A.S. and M.M.J.; methodology, E.H.H. and H.N.H.; software, E.H.H. and H.N.H.; validation, N.A.S. and M.M.J.; formal analysis, E.H.H. and H.N.H.; investigation, E.H.H. and H.N.H.; resources, N.A.S. and M.M.J.; data curation, N.A.S. and M.M.J.; writing—original draft preparation, E.H.H., H.N.H., N.A.S. and M.M.J.; writing—review and editing, E.H.H., H.N.H., N.A.S. and M.M.J.; visualization, E.H.H. and H.N.H.; funding acquisition, N.A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R104), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

This article does not contain any studies with human participants or animals performed by any authors.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Acknowledgments

The authors would like to express their gratitude to Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R104), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare that there are no conflict of interest.

References

- Li, S.; Wu, X.; Tan, M. Gene selection using hybrid particle swarm optimization and genetic algorithm. Soft. Comput. 2008, 12, 1039–1048. [Google Scholar] [CrossRef]

- Alba, E.; Garcia-Nieto, J.; Jourdan, L.; Talbi, E.G. Gene selection in cancer classification using PSO/SVM and GA/SVM hybrid algorithms. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 284–290. [Google Scholar]

- Alshamlan, H.M.; Badr, G.H.; Alohali, Y. A study of cancer microarray gene expression profile: Objectives and approaches. In Proceedings of the World Congress on Engineering, London, UK, 3–5 July 2013; Volume 2, pp. 1–6. [Google Scholar]

- Ghorai, S.; Mukherjee, A.; Sengupta, S.; Dutta, P.K. Multicategory cancer classification from gene expression data by multiclass NPPC ensemble. In Proceedings of the 2010 International Conference on Systems in Medicine and Biology, Kharagpur, India, 16–18 December 2010; pp. 41–48. [Google Scholar]

- Guo, S.B.; Lyu, M.R.; Lok, T.M. Gene selection based on mutual information for the classification of multi-class cancer. In Proceedings of the International Conference on Intelligent Computing, Kunming, China, 16–19 August 2006; pp. 454–463. [Google Scholar]

- Alanni, R.; Hou, J.; Azzawi, H.; Xiang, Y. A novel gene selection algorithm for cancer classification using microarray datasets. BMC Med. Genom. 2019, 12, 10. [Google Scholar] [CrossRef]

- Su, Y.; Murali, T.; Pavlovic, V.; Schaffer, M.; Kasif, S. RankGene: Identification of diagnostic genes based on expression data. Bioinformatics 2003, 19, 1578–1579. [Google Scholar] [CrossRef]

- Ron, K.; George, H.J. Wrappers for feature subset selection. Artif. Intell. 1997, 97, 273–324. [Google Scholar]

- Li, X.; Rao, S.; Wang, Y.; Gong, B. Gene mining: A novel and powerful ensemble decision approach to hunting for disease genes using microarray expression profiling. Nucleic Acids Res. 2004, 32, 2685–2694. [Google Scholar] [CrossRef]

- Zhao, X.M.; Cheung, Y.M.; Huang, D.S. A novel approach to extracting features from motif content and protein composition for protein sequence classification. Neural Netw. 2005, 18, 1019–1028. [Google Scholar] [CrossRef]

- Li, L.; Jiang, W.; Li, X.; Moser, K.L.; Guo, Z.; Du, L.; Wang, Q.; Topol, E.J.; Wang, Q.; Rao, S. A robust hybrid between genetic algorithm and support vector machine for extracting an optimal feature gene subset. Genomics 2005, 85, 16–23. [Google Scholar] [CrossRef]

- Li, L.; Darden, T.A.; Weingberg, C.; Levine, A.; Pedersen, L.G. Gene assessment and sample classification for gene expression data using a genetic algorithm/k-nearest neighbor method. Comb. Chem. High Throughput Screen. 2001, 4, 727–739. [Google Scholar] [CrossRef]

- Alshamlan, H.M.; Badr, G.H.; Alohali, Y.A. The performance of bio-inspired evolutionary gene selection methods for cancer classification using microarray dataset. Int. J. Biosci. Biochem. Bioinform. 2014, 4, 166. [Google Scholar] [CrossRef]

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Search; Technical Report SFI-TR-95-02-010; Santa Fe Institute: Santa Fe, NM, USA, 1995. [Google Scholar]

- Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 2021, 181, 115079. [Google Scholar] [CrossRef]

- Alshamlan, H.; Badr, G.; Alohali, Y. A comparative study of cancer classification methods using microarray gene expression profile. In Proceedings of the First International Conference on Advanced Data and Information Engineering (DaEng-2013); Springer: Berlin/Heidelberg, Germany, 2014; pp. 389–398. [Google Scholar]

- Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002, 46, 389–422. [Google Scholar] [CrossRef]

- Huerta, E.B.; Duval, B.; Hao, J.K. A hybrid GA/SVM approach for gene selection and classification of microarray data. In Proceedings of the Workshops on Applications of Evolutionary Computation, Budapest, Hungary, 10–12 April 2006; pp. 34–44. [Google Scholar]

- Houssein, E.H.; Sayed, A. Dynamic Candidate Solution Boosted Beluga Whale Optimization Algorithm for Biomedical Classification. Mathematics 2023, 11, 707. [Google Scholar] [CrossRef]

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67. [Google Scholar] [CrossRef]

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Department of Computer Engineering, Engineering Faculty, Erciyes University: Kayseri, Turkey, 2005. [Google Scholar]

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872. [Google Scholar] [CrossRef]

- Yang, Y.; Chen, H.; Heidari, A.A.; Gandomi, A.H. Hunger games search: Visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Syst. Appl. 2021, 177, 114864. [Google Scholar] [CrossRef]

- Chopra, N.; Ansari, M.M. Golden jackal optimization: A novel nature-inspired optimizer for engineering applications. Expert Syst. Appl. 2022, 198, 116924. [Google Scholar] [CrossRef]

- Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300. [Google Scholar] [CrossRef]

- Alshamlan, H.M.; Badr, G.H.; Alohali, Y.A. Abc-svm: Artificial bee colony and svm method for microarray gene selection and multi class cancer classification. Int. J. Mach. Learn. Comput. 2016, 6, 184. [Google Scholar] [CrossRef]

- Gao, L.; Ye, M.; Lu, X.; Huang, D. Hybrid method based on information gain and support vector machine for gene selection in cancer classification. Genom. Proteom. Bioinform. 2017, 15, 389–395. [Google Scholar] [CrossRef]

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27. [Google Scholar] [CrossRef]

- Wang, Y.; Tetko, I.V.; Hall, M.A.; Frank, E.; Facius, A.; Mayer, K.F.; Mewes, H.W. Gene selection from microarray data for cancer classification—A machine learning approach. Comput. Biol. Chem. 2005, 29, 37–46. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Zhou, W.; Wang, B.; Zhang, Z.; Li, F. Applying 1-norm SVM with squared loss to gene selection for cancer classification. Appl. Intell. 2018, 48, 1878–1890. [Google Scholar] [CrossRef]

- Mohamad, M.S.; Omatu, S.; Deris, S.; Yoshioka, M. Particle swarm optimization for gene selection in classifying cancer classes. Artif. Life Robot. 2009, 14, 16–19. [Google Scholar] [CrossRef]

- Almugren, N.; Alshamlan, H. FF-SVM: New FireFly-based gene selection algorithm for microarray cancer classification. In Proceedings of the 2019 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Siena, Italy, 9–11 July 2019; pp. 1–6. [Google Scholar]

- Kavitha, K.; Prakasan, A.; Dhrishya, P. Score-based feature selection of gene expression data for cancer classification. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 261–266. [Google Scholar]

- El Kafrawy, P.; Fathi, H.; Qaraad, M.; Kelany, A.K.; Chen, X. An efficient SVM-based feature selection model for cancer classification using high-dimensional microarray data. IEEE Access 2021, 9, 155353–155369. [Google Scholar] [CrossRef]

- Qasem, S.N.; Saeed, F. Hybrid feature selection and ensemble learning methods for gene selection and cancer classification. Int. J. Adv. Comput. Sci. Appl. 2021, 12. [Google Scholar] [CrossRef]

- Chaudhuri, A.; Sahu, T.P. A hybrid feature selection method based on Binary Jaya algorithm for micro-array data classification. Comput. Electr. Eng. 2021, 90, 106963. [Google Scholar] [CrossRef]

- Kira, K.; Rendell, L.A. The feature selection problem: Traditional methods and a new algorithm. In Proceedings of the Tenth National Conference on Artificial Intelligence, San Jose, CA, USA, 12–16 July 1992; Volume 2, pp. 129–134. [Google Scholar]

- Kononenko, I. Estimating attributes: Analysis and extensions of RELIEF. In Proceedings of the European Conference on Machine Learning, Catania, Italy, 6–8 April 1994; pp. 171–182. [Google Scholar]

- Rodríguez-Peérez, R.; Vogt, M.; Bajorath, J. Support vector machine classification and regression prioritize different structural features for binary compound activity and potency value prediction. ACS Omega 2017, 2, 6371–6379. [Google Scholar] [CrossRef]

- Wang, X.; Gotoh, O. Microarray-based cancer prediction using soft computing approach. Cancer Inform. 2009, 7, CIN-S2655. [Google Scholar] [CrossRef]

- Statnikov, A.; Tsamardinos, I.; Dosbayev, Y.; Aliferis, C.F. GEMS: A system for automated cancer diagnosis and biomarker discovery from microarray gene expression data. Int. J. Med. Inform. 2005, 74, 491–503. [Google Scholar] [CrossRef]

- Bolón-Canedo, V.; Sánchez-Marono, N.; Alonso-Betanzos, A.; Benítez, J.M.; Herrera, F. A review of microarray datasets and applied feature selection methods. Inf. Sci. 2014, 282, 111–135. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).