Specific Binding Ratio Estimation of [123I]-FP-CIT SPECT Using Frontal Projection Image and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. SPECT Imaging

2.2. SBR Calculation

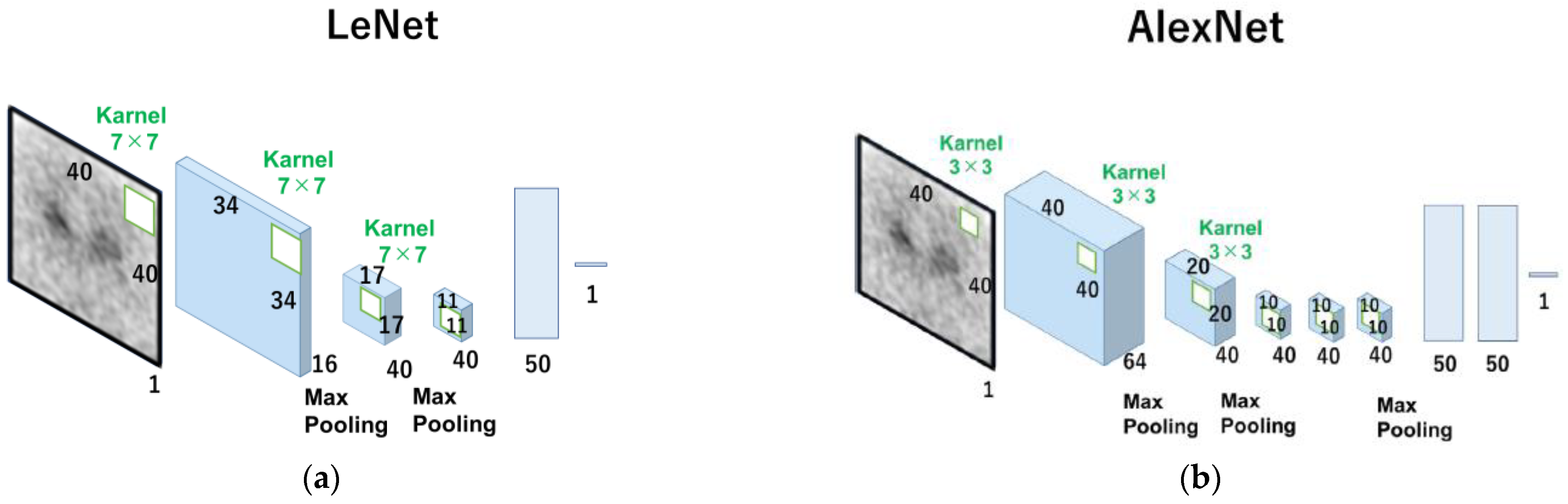

2.3. Network Architecture

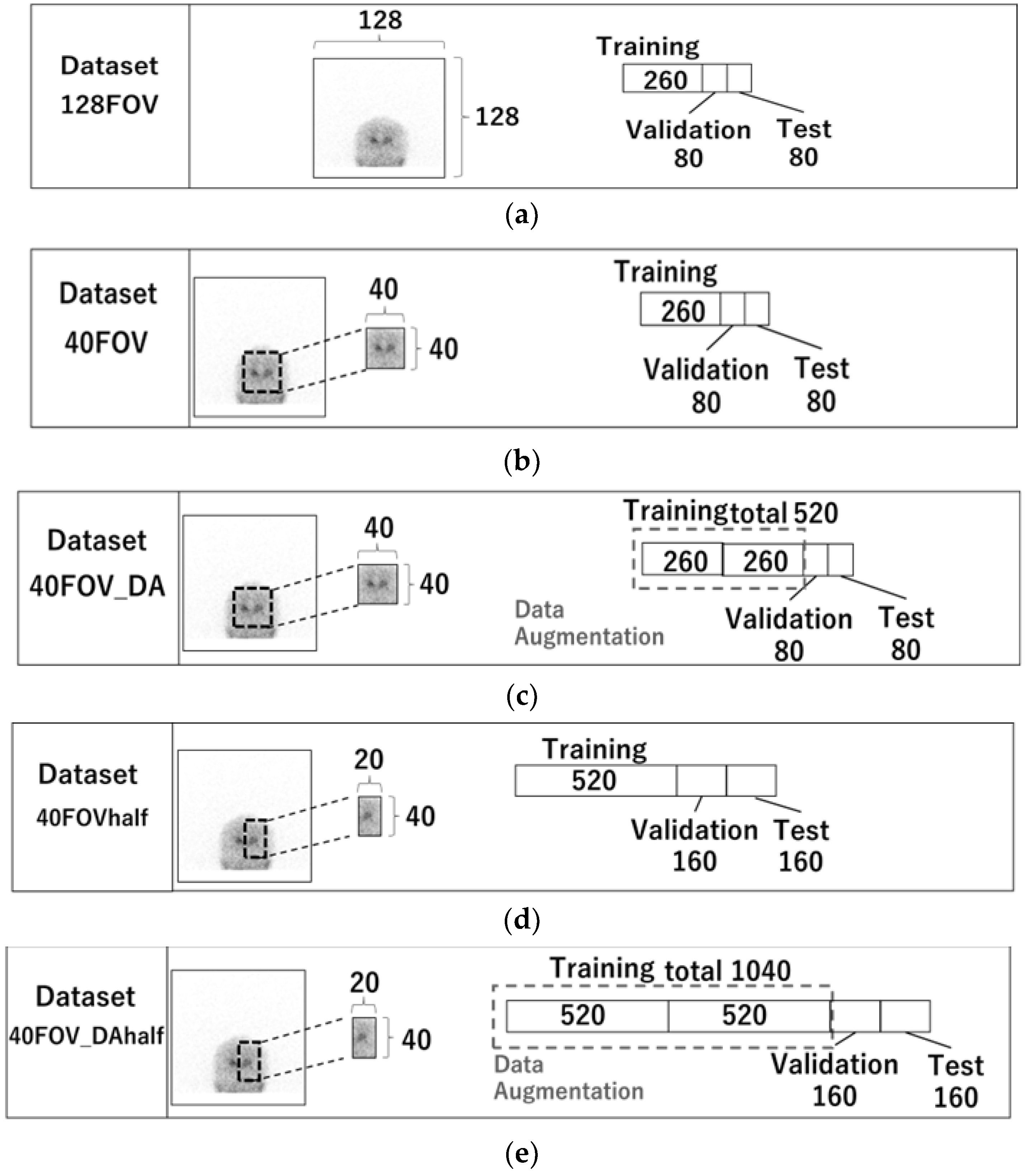

2.4. Datasets

2.5. Evaluation

2.6. Statistical Analysis

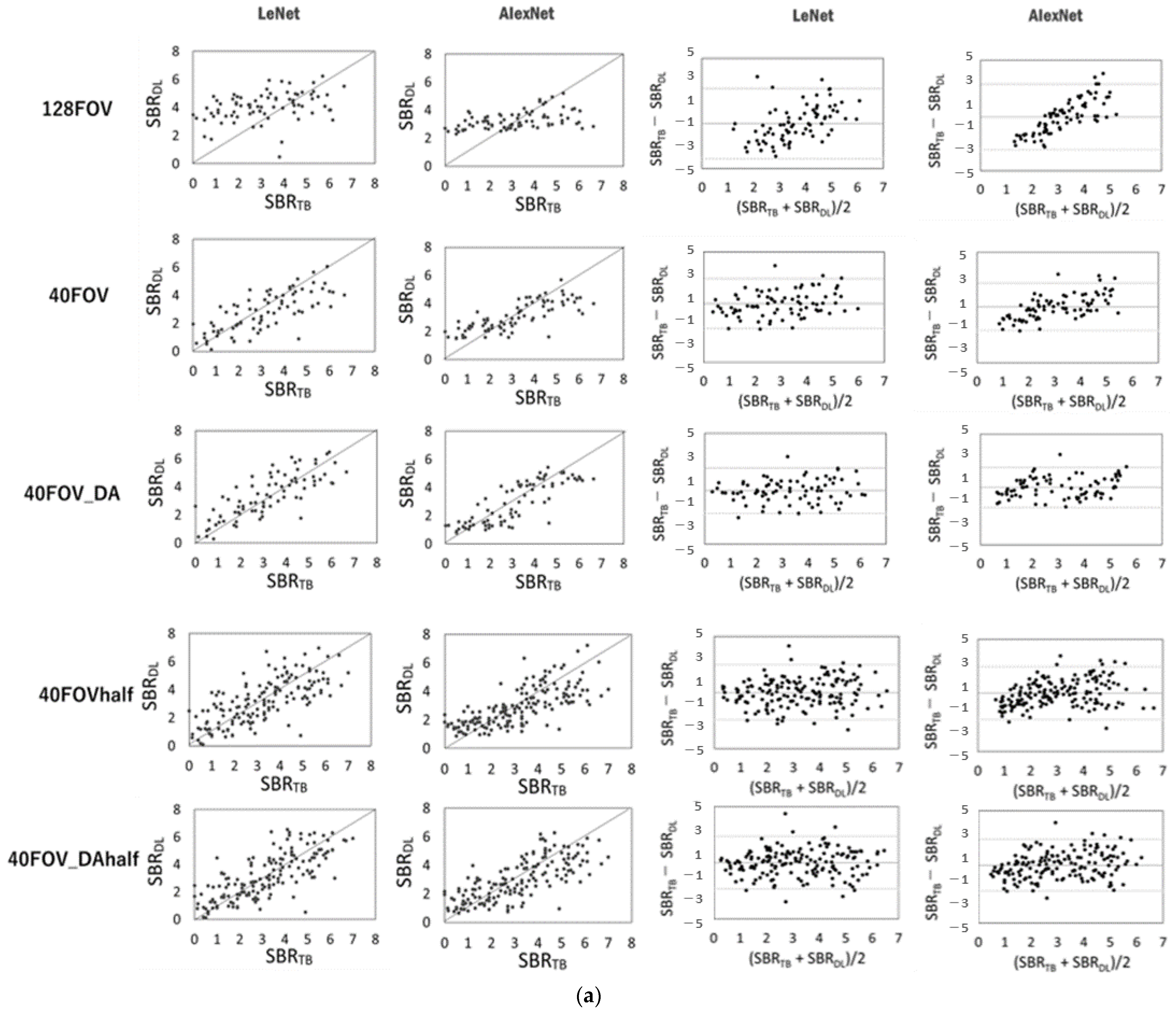

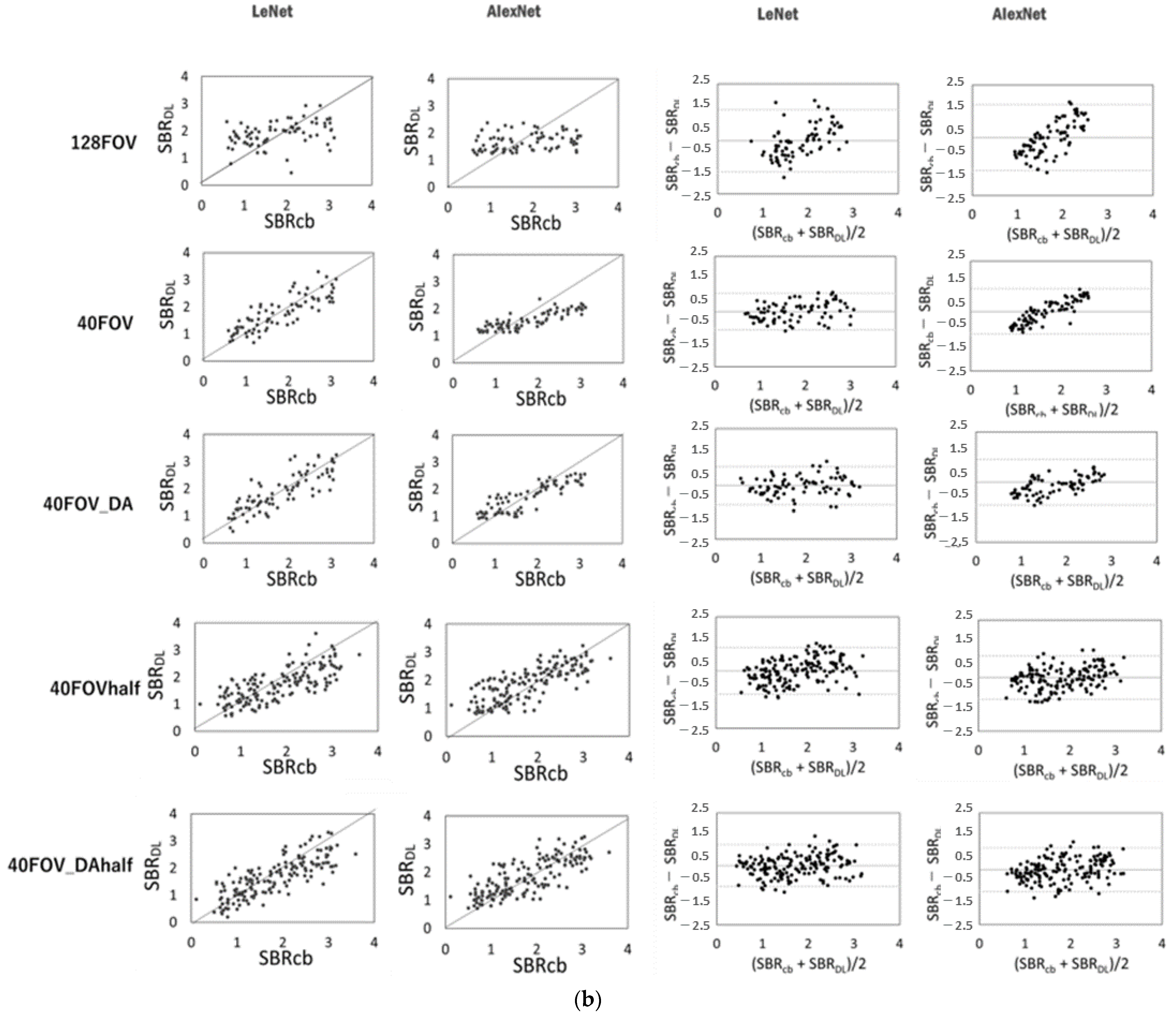

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Catafau, A.M.; Tolosa, E.; DaTSCAN Clinically Uncertain Parkinsonian Syndromes Study Group. Impact of dopamine transporter SPECT using 123I-Ioflupane on diagnosis and management of patients with clinically uncertain Parkinsonian syndromes. Mov. Disord. 2004, 19, 1175–1182. [Google Scholar] [CrossRef] [PubMed]

- Walker, Z.; Jaros, E.; Walker, R.W.H.; Lee, L.; Costa, D.C.; Livingston, G.; Ince, P.G.; Perry, R.; Mckeith, I.; Katona, C.L.E. Dementia with Lewy bodies: A comparison of clinical diagnosis, FP-CIT single photon emission computed tomography imaging and autopsy. J. Neurol. Neurosurg. Psychiatry 2007, 78, 1176–1181. [Google Scholar] [CrossRef] [PubMed]

- McKeith, I.; O’Brien, J.; Walker, Z.; Tatsch, K.; Booij, J.; Darcourt, J.; Padovani, A.; Giubbini, R.; Bonuccelli, U.; Volterrani, D.; et al. Sensitivity and specificity of dopamine transporter imaging with 123I-FP-CIT SPECT in dementia with Lewy bodies: A phase III, multicentre study. Lancet Neurol. 2007, 6, 305–313. [Google Scholar] [CrossRef] [PubMed]

- Benamer, T.S.; Patterson, J.; Grosset, D.G.; Booij, J.; de Bruin, K.; van Royen, E.; Speelman, J.D.; Horstink, M.H.I.M.; Sips, H.J.W.A.; Dierckx, R.A.; et al. Accurate differentiation of parkinsonism and essential tremor using visual assessment of [123I]FP-CIT SPECT imaging. Mov. Disord. 2000, 15, 503–510. [Google Scholar] [CrossRef] [PubMed]

- Badiavas, K.; Molyvda, E.; Iakovou, I.; Tsolaki, M.; Psarrakos, K.; Karatzas, N. SPECT imaging evaluation in movement disorders: Far beyond visual assessment. Eur. J. Nucl. Med. Mol. Imaging 2011, 38, 764–773. [Google Scholar] [CrossRef]

- Booij, J.; Habraken, J.B.; Bergmans, P.; Tissingh, G.; Winogrodzka, A.; Wolters, E.C.; Janssen, A.G.; Stoof, J.C.; van Royen, E.A. Imaging of dopamine transporters with iodine-123-FP-CIT SPECT in healthy controls and patients with Parkinson’s disease. J. Nucl. Med. 1998, 39, 1879–1884. [Google Scholar]

- Soderlund, T.A.; Dickson, J.C.; Prvulovich, E.; Ben-Haim, S.; Kemp, P.; Booij, J.; Nobili, F.; Thomsen, G.; Sabri, O.; Koulibaly, P.M.; et al. Value of semiquantitative analysis for clinical reporting of 123I-2-β-carbomethoxy-3β-(4-iodophenyl)-N-(3-fluoropropyl) nortropane SPECT studies. J. Nucl. Med. 2013, 54, 714–722. [Google Scholar] [CrossRef]

- Djang, D.S.; Janssen, M.J.; Bohnen, N.; Booij, J.; Henderson, T.A.; Herholz, K.; Minoshima, S.; Rowe, C.C.; Sabri, O.; Seibyl, J.; et al. SNM Practice Guideline for Dopamine Transporter Imaging with I-123-Ioflupane SPECT 1. 0. J. Nucl. Med. 2012, 53, 154–163. [Google Scholar] [CrossRef]

- Darcourt, J.; Booij, J.; Tatsch, K.; Varrone, A.; Vander Borght, T.; Kapucu, O.L.; Nagren, K.; Nobili, F.; Walker, Z.; Van Laere, K. EANM procedure guidelines for brain neurotransmission SPECT using 123I-labelled dopamine transporter ligands, version 2. Eur. J. Nucl. Med. Mol. Imaging 2010, 37, 443–450. [Google Scholar] [CrossRef]

- Kita, A.; Okazawa, H.; Sugimoto, K.; Kaido, R.; Kidoya, E.; Kimura, H. Acquisition count dependence of the specific binding ratio in 123I-FP-CIT SPECT. Ann. Nucl. Med. 2021, 62, 3028. [Google Scholar] [CrossRef]

- Kita, A.; Onoguchi, M.; Shibutani, T.; Horita, H.; Oku, Y.; Kashiwaya, S.; Isaka, M.; Saitou, M. Standardization of the specific binding ratio in [123I]FP-CIT SPECT: Study by striatum phantom. Nucl. Med. Commun. 2019, 40, 484–490. [Google Scholar] [CrossRef]

- Nakajima, K.; Saito, S.; Chen, Z.; Komatsu, J.; Maruyama, K.; Shirasaki, N.; Watanabe, S.; Inaki, A.; Ono, K.; Kinuya, S. Diagnosis of Parkinson syndrome and Lewy-body disease using 123I-ioflupane images and a model with image features based on machine learning. Ann. Nucl. Med. 2022, 36, 765–776. [Google Scholar] [CrossRef]

- Ogawa, K.; Harata, Y.; Ichihara, T.; Kubo, A.; Hashimoto, S.; Kashiwaya, S. A practical method for position-dependent Compton-scatter correction in single photon emission CT. IEEE Trans. Med. Imaging 1991, 10, 408–412. [Google Scholar] [CrossRef]

- Tossici-Bolt, L.; Hoffmann, S.M.; Kemp, P.M.; Mehta, R.L.; Fleming, J.S. Quantification of [123I]FP-CIT SPECT brain images: An accurate technique measurement of the specific binding ratio. Eur. J. Nucl. Med. Mol. Imaging 2006, 33, 1491–1499. [Google Scholar] [CrossRef]

- Rahman, M.G.M.; Islam, M.M.; Tsujikawa, T.; Kiyono, Y.; Okazawa, H. Count-based method for specific binding ratio calculation in [I-123]FP-CIT SPECT analysis. Ann. Nucl. Med. 2019, 33, 14–21. [Google Scholar] [CrossRef]

- Rahman, M.G.M.; Islam, M.M.; Tsujikawa, T.; Okazawa, H. A novel automatic approach for calculation of the specific binding ratio in [i-123]fp-citspect. Diagnostics 2020, 10, 289. [Google Scholar] [CrossRef]

- Iwabuchi, Y.; Nakahara, T.; Kameyama, M.; Yamada, Y.; Hashimoto, M.; Matsusaka, Y.; Osada, T.; Ito, D.; Tabuchi, H.; Jinzaki, M. Impact of a combination of quantitative indices representing uptake intensity, shape, and asymmetry in DAT SPECT using machine learning: Comparison of different volume of interest settings. EJNMMI Res. 2019, 9, 7. [Google Scholar] [CrossRef]

- Murakami, H.; Kimura, A.; Yasumoto, T.; Miki, A.; Yamamoto, K.; Ito, N.; Momma, Y.; Owan, Y.; Yano, S.; Ono, K. Usefulness differs between the visual assessment and specific binding ratio of 123i-ioflupane spect in assessing clinical symptoms of drug-naïve parkinson’s disease patients. Front. Aging Neurosci. 2018, 10, 412. [Google Scholar] [CrossRef]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, E.G. ImageNet classification with deep convolutional neural networks. In Proceedings of the NIPS’12 25th International Conference on Neural Information Processing Systems, New York, NY, USA, 3 December 2012; Volume 1, pp. 1097–1105. [Google Scholar]

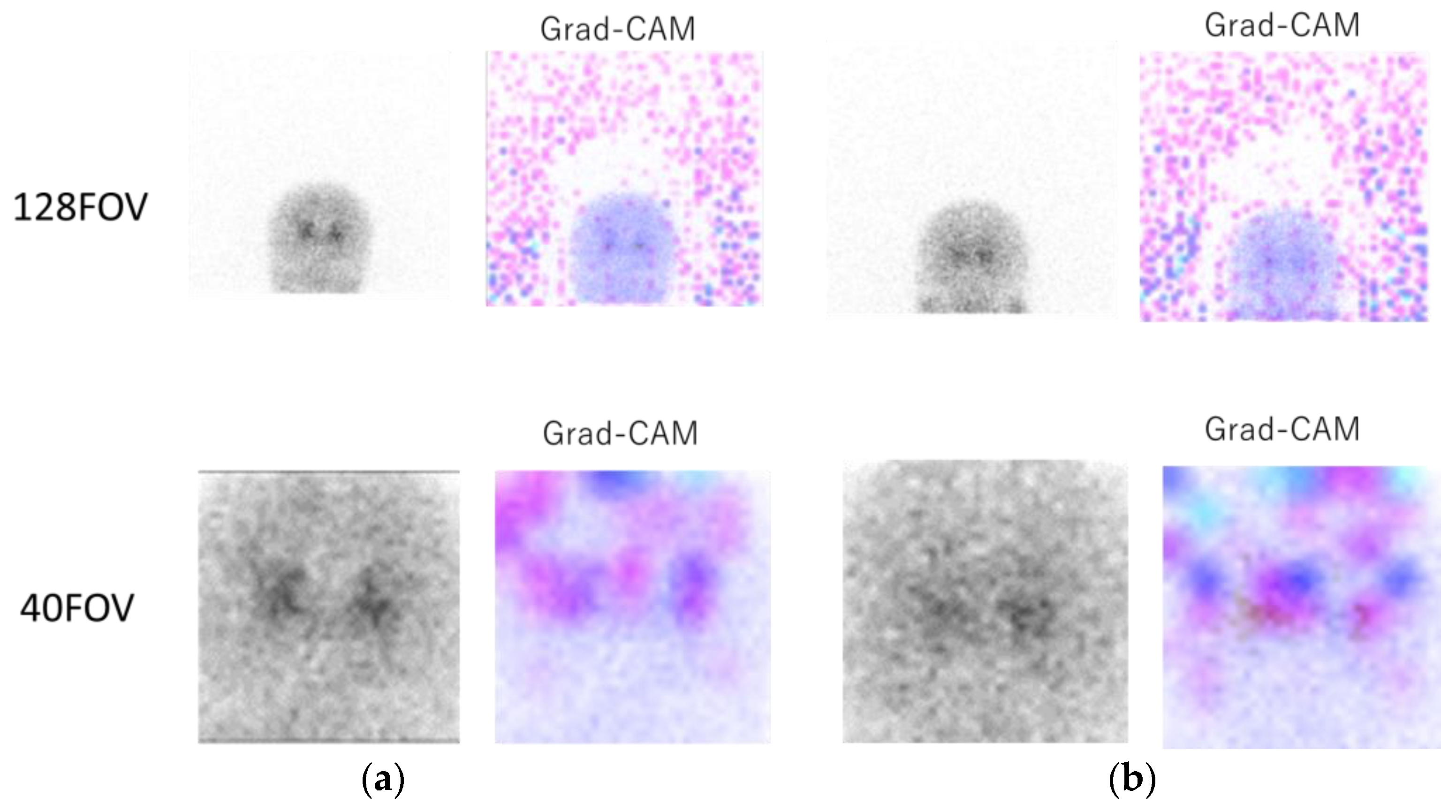

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 2020, 128, 336–359. [Google Scholar] [CrossRef]

- Kanda, Y. Investigation of the freely available easy-to-use software “EZR” for medical statistics. Bone Marrow Transpl. 2013, 48, 452–458. [Google Scholar] [CrossRef] [PubMed]

- Shigekiyo, T.; Arawaka, S. Laterality of specific binding ratios on DAT-SPECT for differential diagnosis of degenerative parkinsonian syndromes. Sci. Rep. 2020, 10, 15761. [Google Scholar] [CrossRef] [PubMed]

- Booij, J.; Busemann Sokole, E.; Stabin, M.G.; Janssen, A.G.; de Bruin, K.; van Royen, E.A. Human biodistribution and dosimetry of [123I]FP-CIT: A potent radioligand for imaging of dopamine transporters. Eur. J. Nucl. Med. 1998, 25, 24–30. [Google Scholar] [CrossRef] [PubMed]

- Szegedy, C.; Wei, L.; Yangqing, J.; Pierre, S.; Scott, R.; Scott, R.; Dragomir, A.; Dumitru, E.; Vincent, V.; Andrew, R. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2015; pp. 770–778. [Google Scholar] [CrossRef]

| | Training | Validation | Test |
|---|---|---|---|
| Number of cases | 260 | 80 | 80 |
| Age (y, mean ± SD) | 72.2 ± 9.6 | 71.8 ± 10.3 | 72.4 ± 9.8 |
| Men/women (N) | 137/123 | 49/31 | 44/36 |

| | Dataset | LeNet MAE | LeNet RMSE | LeNet Corr | LeNet Slope | AlexNet MAE | AlexNet RMSE | AlexNet Corr | AlexNet Slope |
|---|---|---|---|---|---|---|---|---|---|
| DaT View | 128FOV | 1.56 | 1.87 | 0.36 | 0.21 | 1.21 | 1.51 | 0.44 | 0.16 |
| DaT View | 40FOV | 0.92 | 1.18 | 0.74 | 0.60 | 0.86 | 1.09 | 0.78 | 0.47 |
| DaT View | 40FOV_DA | 0.83 | 1.05 | 0.80 | 0.74 | 0.76 | 0.94 | 0.84 | 0.74 |
| DaT View | 40FOVhalf | 1.00 | 1.25 | 0.71 | 0.67 | 0.98 | 1.21 | 0.72 | 0.60 |
| DaT View | 40FOV_DAhalf | 0.94 | 1.20 | 0.74 | 0.73 | 0.95 | 1.18 | 0.73 | 0.61 |
| Count-based methods | 128FOV | 0.58 | 0.72 | 0.41 | 0.23 | 0.63 | 0.76 | 0.25 | 0.10 |
| Count-based methods | 40FOV | 0.35 | 0.42 | 0.84 | 0.70 | 0.48 | 0.57 | 0.84 | 0.36 |
| Count-based methods | 40FOV_DA | 0.35 | 0.44 | 0.83 | 0.76 | 0.35 | 0.42 | 0.87 | 0.59 |
| Count-based methods | 40FOVhalf | 0.45 | 0.55 | 0.73 | 0.59 | 0.39 | 0.50 | 0.78 | 0.64 |
| Count-based methods | 40FOV_DAhalf | 0.40 | 0.49 | 0.80 | 0.71 | 0.40 | 0.50 | 0.77 | 0.62 |
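The four columns reported per network can be illustrated with a minimal sketch. It assumes that MAE and RMSE are the mean absolute error and root mean square error between estimated and reference SBR, that Corr is the Pearson correlation coefficient, and that Slope is the least-squares slope of estimated SBR regressed on reference SBR. These definitions, the function name `regression_metrics`, and the toy values are illustrative assumptions, not the authors' code or data.

```python
import math


def regression_metrics(reference, estimated):
    """Return MAE, RMSE, Pearson r, and the least-squares slope.

    Hypothetical helper: `reference` holds the SBR values taken as ground
    truth, `estimated` holds the network outputs for the same cases.
    """
    if len(reference) != len(estimated) or len(reference) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    n = len(reference)

    # Per-case error: estimated minus reference.
    errors = [e - r for r, e in zip(reference, estimated)]
    mae = sum(abs(d) for d in errors) / n
    rmse = math.sqrt(sum(d * d for d in errors) / n)

    # Sums of squares and cross-products about the means.
    mean_r = sum(reference) / n
    mean_e = sum(estimated) / n
    sxx = sum((r - mean_r) ** 2 for r in reference)
    syy = sum((e - mean_e) ** 2 for e in estimated)
    sxy = sum((r - mean_r) * (e - mean_e) for r, e in zip(reference, estimated))
    if sxx == 0 or syy == 0:
        raise ValueError("correlation and slope are undefined for constant input")

    corr = sxy / math.sqrt(sxx * syy)  # Pearson correlation coefficient
    slope = sxy / sxx                  # slope of estimated regressed on reference
    return {"mae": mae, "rmse": rmse, "corr": corr, "slope": slope}


# Toy values (made up for illustration only).
reference_sbr = [1.0, 2.0, 3.0, 4.0]
estimated_sbr = [1.5, 2.5, 3.5, 4.5]
metrics = regression_metrics(reference_sbr, estimated_sbr)
```

Under these definitions, a slope well below 1 alongside a moderate correlation (as in the 128FOV rows) would mean the estimates are compressed toward the mean rather than merely noisy.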


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kita, A.; Okazawa, H.; Sugimoto, K.; Kosaka, N.; Kidoya, E.; Tsujikawa, T. Specific Binding Ratio Estimation of [123I]-FP-CIT SPECT Using Frontal Projection Image and Machine Learning. Diagnostics 2023, 13, 1371. https://doi.org/10.3390/diagnostics13081371