1. Introduction

Blood is the dynamic engine and one of the basic elements of the human body, which consists of three main components with different weights: red blood cells (45%), plasma (55%), and white blood cells (WBC) (less than 1%) [

1]. These components differ according to their color, shape, texture, composition, size, and any increase or decrease in the percentage of each element in the blood that causes a specific disease [

2]. Leukemia is a deadly blood cancer that produces a malignant WBC that attacks normal blood cells and causes death [

3]. It is one of the most common cancers among children and adults. The bone marrow produces the basic components of blood [

4]. Therefore, malignant WBC affects the bone marrow due to its ability to make the basic components of blood normally and causes a weakening of the immune system. In addition, malignant WBC travels through the bloodstream and causes damage to the liver, spleen, kidneys, brain, etc., and causes other forms of fatal diseases [

5]. The affected WBC type determines the type of leukemia: lymphoid (Acute myeloidleukemia) if the cells are monocytes, or myelogenous (acute lymphoblastic leukemia) if the cells are lymphocytes [

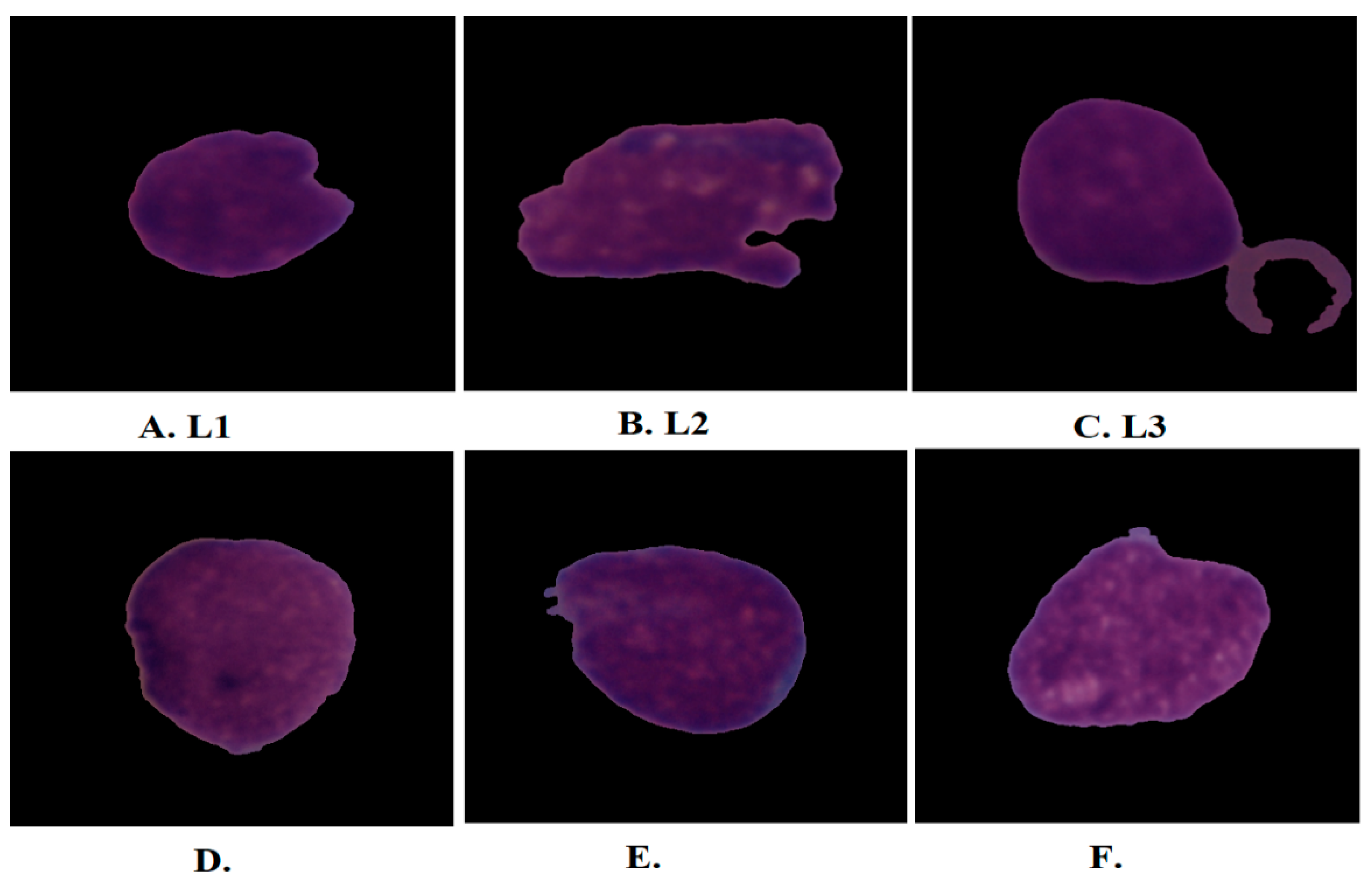

6]. Acute lymphoblastic leukemia (ALL) is classified into three forms according to its shape and size: L1, L2, and L3 as shown in

Figure 1. Type L1 cells are symmetrical in shape, of a regular small round size, and surrounded by little cytoplasm. Type L2 cells are asymmetric in form, irregular, larger than L1, and have different cytoplasm. L3-type cells have a normal shape and size, an oval or circular nucleus, its size is larger than L1, and a quantity of cytoplasm surrounds it with vacuoles [

7]. When lymphoid or myeloid cells grow abnormally and uncontrollably, they cause leukemia. These cancer cells reduce the possibility of the growth of normal blood cells in the bone marrow [

8]. The few normal blood cells are discharged into the bloodstream which cannot supply the body’s organs with sufficient oxygen, so that the immune system will weaken and the blood clotting will be weak. ALL represents 25% of childhood cancers; 74% of leukemia cases in people under 20 have ALL. The five-year survival rate for children under 14 is 91%, while people between the ages of 15 and 20 have a five-year survival rate of 75%. In all cases diagnosed early and treated, the ALL disease cannot return, meaning that children diagnosed with ALL, after five years, became healthy and recovered completely. However, the recovery rate for adults five years after their injury is not high, ranging between 20–35% [

9]. ALL spreads quickly if left undiagnosed and can lead to death within months. ALL is usually diagnosed by a complete blood count test, in which a doctor checks for certain clinical signs of leukemia [

10]. Sometimes the doctor is unsatisfied with the symptoms of a complete blood count smear to decide on leukemia. Therefore, the doctor resorts to suctioning a sample of bone marrow and examining it under a microscope to confirm the presence of leukemia. All manual methods for diagnosing leukemia depend on the experience of specialists and doctors. The procedures for manual leukemia diagnosis are difficult, complex, prone to human error, costly, and time-consuming. To overcome the limitations of manual diagnosis, it would be beneficial to automate the examination processes of blood and bone marrow samples. Deep and machine-learning networks have proven their ability to address the limitations of manual diagnosis for early detection of ALL. Machine-learning algorithms are highly capable of classifying handcrafted attributes. Pre-processing is one of the most important steps of AI to improve images and remove artefacts that degrade system performance. Additionally, the blood slice images contain many blood components and non-target cells, so the segmentation method works to crop white blood cells only, called regions of interest (ROI), which are sent to feature extraction methods to extract features of white blood cells only. In recent years, CNN networks have inputted the medical field, which has high capabilities to identify many diseases, including distinguishing between normal and blasted blood cells. CNN automatically learns the hierarchy of spatial features from the input images. CNN has millions of parameters, biases, weights, and connections that can be learned and adapted while training the data. The weights and parameters are adjusted by minimizing the difference between the actual and expected data through the backpropagation of the network. The main motivation of this work is to develop effective microscopic blood slide analysis models for the diagnosis of ALL. 
The medical datasets contain too few images to train a CNN from scratch, so pre-trained CNN models were used to extract features from the C-NMC 2019 and ALL_IDB2 datasets. To improve the performance of the proposed systems, PCA was used to select only the important features, the features of the CNN models were combined, and the combined features were fed to the RF and XGBoost algorithms.

The main contributions of this study are as follows:

Enhancement of microscopic blood images by two consecutive filters;

Application of the active contour algorithm to extract only the WBC regions and feed them to CNN models;

Analysis and diagnosis of ALL images using hybrid techniques of CNN-RF and CNN-XGBoost;

Selection of highly representative features and removal of redundant ones using PCA;

Fusion of deep feature maps of CNN models to obtain hybrid deep feature map vectors for DenseNet121-ResNet50, ResNet50-MobileNet, DenseNet121-MobileNet, and DenseNet121-ResNet50-MobileNet models and their classification by RF and XGBoost classifiers.

The rest of the paper is organized as follows:

Section 2 analyzes previous studies’ techniques for diagnosing ALL and summarizes their findings.

Section 3 explains proposed strategies for analyzing microscopic blood images to diagnose ALL.

Section 4 summarizes the results of the proposed methods.

Section 5 discusses the performances of all systems and compares them.

Section 6 concludes the study.

2. Related Work

This section presents a collection of previous studies in which researchers focused on diagnosing glass slide images for ALL detection.

Ghada et al. [11] used a CNN based on a Bayesian approach to analyze microscopic smear images to diagnose ALL. The Bayesian approach repeatedly searches for optimal parameters to minimize the objective error. Muhammad et al. [12] used a VGG16 model based on Efficient Channel Attention (ECA) to extract deep features for better classification. The ECA helps to overcome the similarity between normal and blast cell images. The model achieved an accuracy of 91.1% on the C-NMC dataset. Niranjana et al. [13] converted images to the HSI color space, segmented the WBCs, then trained and tested the ALLNET model, which achieved an accuracy of 95.54% and a sensitivity of 95.91%. Pradeep et al. [14] used three CNN models to extract image features from an ALL dataset and classified them with RF and SVM. Sorayya et al. [15] modified the weights and parameters of the ResNet50 and VGG16 models to train on an ALL dataset. They also proposed six machine-learning algorithms and a convolutional network with ten convolutional layers and a classification layer. The convolutional network achieved an accuracy of 82.1%, while the VGG16 network achieved an accuracy of 84.62%. The best accuracy among the machine-learning algorithms was 81.72%, achieved by RF. Gundepudi et al. [16] used a hybrid of AlexNet and four machine-learning algorithms to analyze microscopic blood images and classify the ALL-IDB2 dataset. AlexNet extracts the features and feeds them into the machine-learning algorithms for classification. Raheel et al. [17] used a hybrid of two CNNs for detecting ALL. The background was removed, noise was reduced, and cells of interest were segmented. The features of the leukemia dataset were extracted by the two CNNs, combined, and classified by SVM. Mohamed et al. [18] used a generative adversarial network to classify blood samples from the ALL-IDB dataset, with the results evaluated by a hematologist. Rana et al. [19] used heat mapping and a PCA assessment of ANN predictions from whole blood cell counts, which helped to increase the accuracy of diagnosing leukemia samples from morphometric parameters. A heat map fed with cell population data produced a cluster to separate bone marrow from lymphoblastic leukemia. The network achieved an accuracy of 89.4%. Sanam et al. [20] used a generative adversarial algorithm to validate data augmentation and fed the leukemia dataset to a CNN model based on the Tversky loss function with controlled convolution and dense layers. Tulasi et al. [21] used the GBHSV–Leuk method for segmenting and classifying ALL cells. The technique consists of two stages: a Gaussian blurring filter to improve the images and the Hue Saturation Value technique to separate the cell from the rest of the image. The GBHSV–Leuk method achieved an accuracy of 95.41%. Yunfei et al. [22] used the ternary stream-driven, WT-DFN-augmented data classification network to identify lymphoblasts. An attention map is generated for each image to highlight infected cells, and distinctive features are obtained by attention erasing and cropping. Luis et al. [23] used the LeukNet model, which is based on the VGG16 model with fewer dense layers. Network parameters were adjusted to evaluate the leukemia dataset, and the model achieved an accuracy of 82.46%. Mohamed et al. [24] used a DNN hybrid network trained on CLL MRD from 202 F-DNN patients and 138 L-DNN patients. The DNN proved its ability to detect CLL MRD with an overall accuracy of 97.1%. Ibrahim et al. [25] extracted handcrafted features and classified them with FFNN and SVM. They also used a hybrid technique between CNN and SVM to classify the two datasets, ALL_IDB1 and ALL_IDB2.

Jan et al. [26] used a flow cytometry method which detects and analyzes specific genetic drift through machine-learning algorithms such as decision trees and gradient boosting. The deviation appears in t(12;21)/ETV6-RUNX1 blast cells with high CD10 and CD34 and low CD81 expression. Nada et al. [27] used a machine-learning algorithm for acute leukemia classification based on feature selection by the gray-wolf optimization method. Adaptive thresholding was applied to improve the images, which were then classified by SVM, KNN, and NB. The SVM achieved an accuracy of 96%, a sensitivity of 89.5%, and an accuracy of 94.5%.

Ahmad et al. [28] proposed four machine-learning algorithms for image analysis of the C-NMC dataset for leukemia prediction. The images were optimized and features were extracted using three DNN models. ANOVA was used to analyze the data, then the features were selected by random forest. The SVM algorithm achieved the best accuracy of 90% compared with the other algorithms.

The previous studies show a diversity of methodologies, with researchers focused on reaching satisfactory results for the early detection of ALL. This study therefore focused on extracting highly representative features by applying the active contour technique to obtain the WBC region and sending it to CNN models for analysis. It also focused on extracting deep feature maps from the CNN models and merging the feature maps of multiple models. Finally, the RF and XGBoost classifiers classify the fused CNN features.

4. Results of System Evaluation

4.1. Splitting the C-NMC 2019 and ALL_IDB2 Datasets

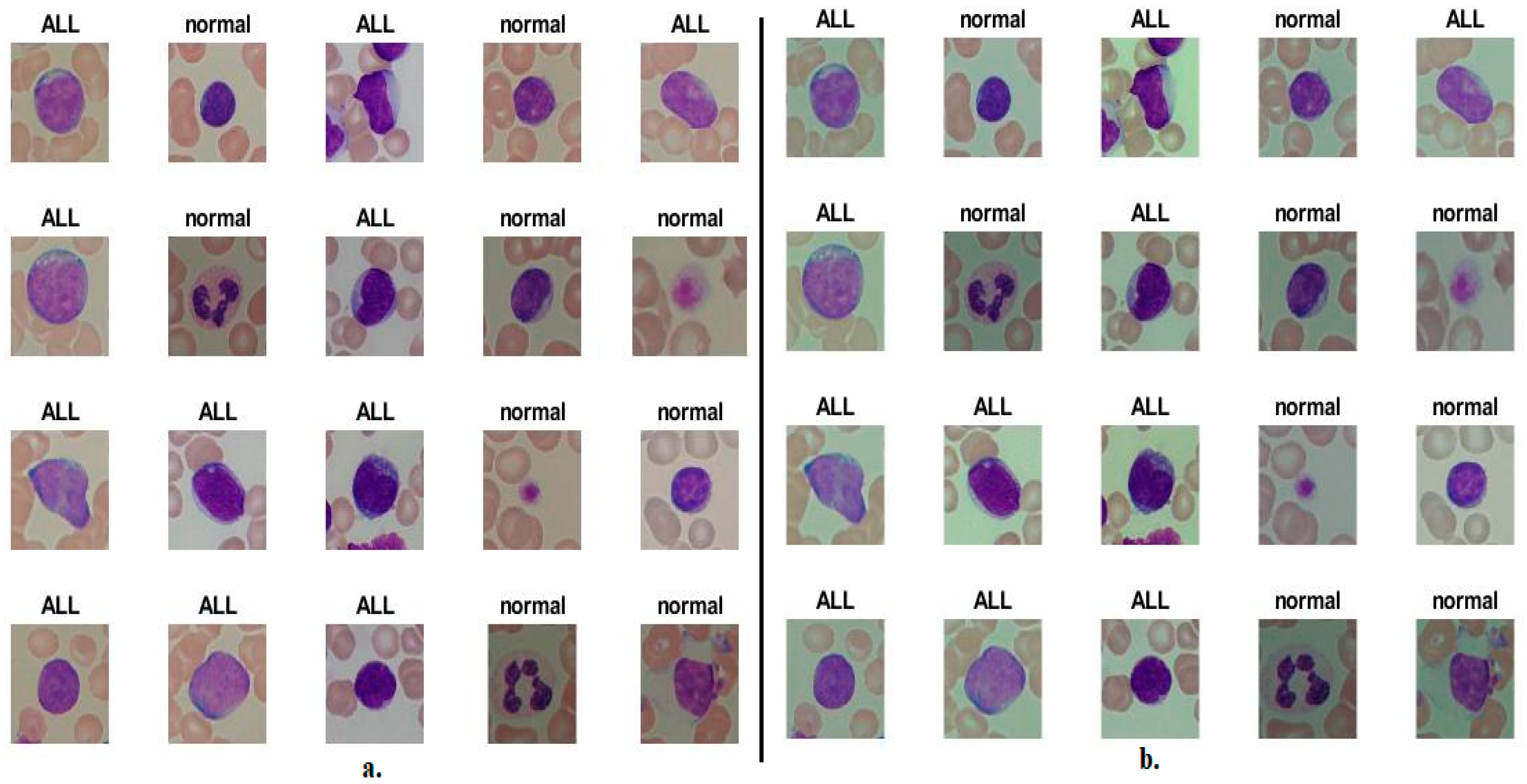

This study developed several hybrid deep and machine-learning systems based on hybrid features from two acute leukemia datasets, C-NMC 2019 and ALL-IDB2, which contain 10,661 and 260 micrographs, respectively. It is worth noting that the C-NMC 2019 dataset contains microscopic images of WBCs only, while the ALL-IDB2 dataset contains microscopic images of WBCs along with other essential blood components. During the implementation of the systems, each dataset was divided into 80% for training and validation (itself split 80:20) and 20% for testing, as shown in Table 1. The training portion is intended to give the systems enough information to generalize to other datasets.
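The nested split described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `split_sizes` is hypothetical, rounding to the nearest image is assumed, and the per-class counts reported in Table 1 may differ if the split was stratified or rounded differently.

```python
def split_sizes(n_images, test_frac=0.20, val_frac=0.20):
    """Return (train, validation, test) counts for a nested hold-out split.

    test_frac is taken from the whole dataset; val_frac is taken from the
    remaining training-and-validation portion (the 80:20 split in the text).
    """
    n_test = round(n_images * test_frac)
    n_trainval = n_images - n_test
    n_val = round(n_trainval * val_frac)
    n_train = n_trainval - n_val
    return n_train, n_val, n_test


# Dataset sizes as stated in the text.
for name, total in {"C-NMC 2019": 10661, "ALL-IDB2": 260}.items():
    train, val, test = split_sizes(total)
    print(f"{name}: train={train}, validation={val}, test={test}")
```

In practice the split would normally be drawn at random and per class, so that both the ALL and normal classes appear in every subset.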

4.2. Systems Evaluation Metrics and Hyperparameters

The confusion matrix is the core criterion for evaluating systems' performance on a medical dataset. In this study, the systems received the images of the two datasets and produced the confusion matrix as an evaluation tool. The confusion matrix is a square matrix in which the rows represent the output classes and the columns represent the target classes. Its cells contain all test images: those that the systems classify correctly (TP and TN) and those that the systems classify incorrectly (FP and FN) [53]. Therefore, the performance of the methods in this study was measured through Equations (7)–(11).
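As a hedged illustration of how such measures follow from the four confusion-matrix counts, the sketch below uses the standard textbook definitions of accuracy, sensitivity, precision, and specificity. It is assumed, not confirmed here, that these match the referenced equations; AUC is omitted because it cannot be derived from a single confusion matrix. The function name and the counts are hypothetical.

```python
def binary_metrics(tp, tn, fp, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,     # all correct / all images
        "sensitivity": tp / (tp + fn),     # recall on the positive class
        "precision": tp / (tp + fp),       # correct share of positive predictions
        "specificity": tn / (tn + fp),     # recall on the negative class
    }


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = binary_metrics(tp=90, tn=80, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```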

Table 2 shows the hyperparameters tuned during model training for the two acute leukemia datasets.

4.3. Augmentation Data Technique for Balancing Classes

Deep learning models require a large number of images during the training phase in order to perform well during the testing phase, generalize to other datasets, and avoid overfitting. In addition, unbalanced classes bias the accuracy toward the majority class, which poses a challenge to artificial intelligence systems. Data augmentation addresses both challenges in parallel [54]. To reduce overfitting and improve generalization, the data was artificially increased by creating new images from the same dataset through operations such as rotation at different angles, shifting up and down, flipping, and others. To solve the problem of class imbalance, the images of the majority class were increased by a smaller factor than those of the minority class, as shown in Table 3.
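The balancing idea can be sketched as choosing a per-class multiplication factor so that every class reaches roughly the same size. The class counts and target below are hypothetical and are not the values of Table 3; `augmentation_factors` is an illustrative name.

```python
import math


def augmentation_factors(class_counts, target_per_class):
    """Images to produce per original image (original included) for each class.

    A smaller class receives a larger factor, so the classes end up
    approximately balanced after augmentation.
    """
    return {
        label: math.ceil(target_per_class / count)
        for label, count in class_counts.items()
    }


# Hypothetical training-set counts for an imbalanced two-class problem.
counts = {"ALL": 6000, "normal": 3000}
factors = augmentation_factors(counts, target_per_class=12000)
print(factors)
```

Each factor would then drive how many rotated, shifted, or flipped variants are generated for every training image of that class.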

4.4. Results of CNN Model

This section presents the performances of the DenseNet121, ResNet50, and MobileNet models in analyzing microscopic blood images from the two acute leukemia datasets. The models received acute leukemia images and extracted feature maps through their convolutional layers. Fully connected layers received the high-level feature maps and flattened them into one-dimensional feature vectors. The SoftMax activation function then assigned each image to the ALL or normal class.
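A minimal sketch of the final SoftMax step is given below, assuming the last layer emits one raw score (logit) per class. The logit values are hypothetical and the class order is an assumption made for illustration.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ("ALL", "normal")   # assumed class order
logits = [2.0, 0.5]           # hypothetical output of the last dense layer
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```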

The DenseNet121, ResNet50, and MobileNet models yielded good results for classifying the C-NMC 2019 and ALL-IDB2 acute leukemia datasets, as shown in Table 4 and Figure 7.

First, for the C-NMC 2019 dataset, DenseNet121 achieved an AUC of 94.65%, accuracy of 90.3%, sensitivity of 88.8%, precision of 89%, and specificity of 88.35%. ResNet50 achieved an AUC of 95.15%, accuracy of 90.8%, sensitivity of 90.55%, precision of 90.5%, and specificity of 90.53%. MobileNet achieved an AUC of 93.05%, accuracy of 89.4%, sensitivity of 87.95%, precision of 87.7%, and specificity of 88.3%.

Second, for the ALL-IDB2 dataset, DenseNet121 achieved an AUC of 93.8%, accuracy of 90.4%, sensitivity of 90.15%, precision of 90.45%, and specificity of 90.2%. ResNet50 achieved an AUC of 92.35%, accuracy of 92.3%, sensitivity of 92.25%, precision of 92.55%, and specificity of 91.8%. MobileNet achieved an AUC of 93.05%, accuracy of 90.4%, sensitivity of 90.25%, precision of 90.45%, and specificity of 89.95%.

4.5. Results of CNN Model Based on Segmentation Algorithm

This section presents the performances of the DenseNet121, ResNet50, and MobileNet models based on WBC region segmentation for analyzing blood micrographs from the two acute leukemia datasets. The enhanced images were fed into the active contour algorithm to isolate the WBC region from the rest of the blood components. The DenseNet121, ResNet50, and MobileNet models then received the images of the regions of interest (WBCs) and extracted feature maps through their convolutional layers. Fully connected layers received the high-level feature maps and flattened them into one-dimensional feature vectors. The SoftMax activation function then assigned each image to the ALL or normal class.
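The sketch below illustrates only the last part of the segmentation step: cropping an image to the region of interest once a binary mask of the WBC is available. The mask is assumed to come from the active contour step, which is not implemented here; the tiny image, the mask, and the name `crop_to_mask` are hypothetical.

```python
def crop_to_mask(image, mask):
    """Crop `image` (a list of pixel rows) to the bounding box of the mask.

    `mask` has the same height and width as `image`; truthy entries mark
    pixels that belong to the region of interest.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return []  # empty mask: nothing to crop
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Hypothetical 4x4 grayscale image and a mask covering a small region.
image = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11],
         [12, 13, 14, 15]]
mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 0],
        [0, 0, 0, 0]]
roi = crop_to_mask(image, mask)
print(roi)
```

The cropped region would then be resized to the input size expected by each CNN model before feature extraction.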

Based on the segmentation algorithm, the DenseNet121, ResNet50, and MobileNet models yielded good results for classifying the C-NMC 2019 and ALL-IDB2 acute leukemia datasets, as shown in Table 5 and Figure 8.

First, for the C-NMC 2019 dataset, DenseNet121 with features of the WBC region achieved an AUC of 95.95%, accuracy of 94%, sensitivity of 93.3%, precision of 93.15%, and specificity of 92.75%. ResNet50 with features of the WBC region achieved an AUC of 94.9%, accuracy of 93.5%, sensitivity of 93.1%, precision of 92.7%, and specificity of 92.85%. MobileNet with features of the WBC region achieved an AUC of 96.5%, accuracy of 94.2%, sensitivity of 93.25%, precision of 93.4%, and specificity of 93.05%.

Second, for the ALL-IDB2 dataset, DenseNet121 with features of the WBC region achieved an AUC of 96.95%, accuracy of 94.2%, sensitivity of 93.65%, precision of 94.85%, and specificity of 93.9%. ResNet50 with features of the WBC region achieved an AUC of 97.1%, accuracy of 96.2%, sensitivity of 95.8%, precision of 96.45%, and specificity of 95.75%. MobileNet with features of the WBC region achieved an AUC of 96.65%, accuracy of 96.2%, sensitivity of 96.1%, precision of 96.15%, and specificity of 96.35%.

4.6. Results of Strategy of Machine-Learning with Features CNN

This section presents the performance of hybrid deep and machine-learning systems for analyzing microscopic blood images from the two acute leukemia datasets. The technique first segments the WBC region and isolates it from the rest of the basic blood components. The DenseNet121, ResNet50, and MobileNet models receive the region of interest and extract deep feature maps, and PCA then reduces their dimensions while retaining the most representative features. The RF and XGBoost classifiers receive these features and classify them with high accuracy.

The RF and XGBoost classifiers with DenseNet121, ResNet50, and MobileNet features yielded good results for classifying the C-NMC 2019 and ALL-IDB2 acute leukemia datasets.

First, for the C-NMC 2019 dataset, as shown in Table 6 and Figure 9, the RF classifier with DenseNet121 features achieved an AUC of 98.15%, accuracy of 95%, sensitivity of 94.8%, precision of 94.15%, and specificity of 94.3%. RF with ResNet50 features achieved an AUC of 97.25%, accuracy of 95.5%, sensitivity of 95.35%, precision of 94.8%, and specificity of 94.7%. RF with MobileNet features achieved an AUC of 96.75%, accuracy of 96.2%, sensitivity of 95.75%, precision of 95.55%, and specificity of 95.65%.

The XGBoost classifier with DenseNet121 features achieved an AUC of 95.5%, accuracy of 94.7%, sensitivity of 96.1%, precision of 93.7%, and specificity of 93.8%. XGBoost with ResNet50 features achieved an AUC of 97.65%, accuracy of 95.4%, sensitivity of 94.15%, precision of 95.25%, and specificity of 94.3%. XGBoost with MobileNet features achieved an AUC of 96%, accuracy of 95.3%, sensitivity of 94.35%, precision of 94.45%, and specificity of 94.75%.

Second, for the ALL-IDB2 dataset, the RF and XGBoost classifiers with CNN features achieved promising results. The RF classifier with features of DenseNet121, ResNet50, and MobileNet achieved accuracy, AUC, sensitivity, precision, and specificity of 100%. Likewise, the XGBoost classifier with features of DenseNet121, ResNet50, and MobileNet achieved accuracy, AUC, sensitivity, precision, and specificity of 100%.

When the systems were evaluated on the C-NMC 2019 dataset, the RF classifier with CNN features produced the confusion matrices shown in Figure 10. The hybrid systems DenseNet121-RF, ResNet50-RF, and MobileNet-RF achieved good results for each class. First, DenseNet121-RF attained an accuracy of 96.1% and 92.6% for the ALL and normal classes. Second, ResNet50-RF attained an accuracy of 96.6% and 93.4% for the ALL and normal classes. Third, MobileNet-RF attained an accuracy of 97.1% and 94.2% for the ALL and normal classes.

Figure 11 shows the confusion matrix generated by the hybrid systems DenseNet121-XGBoost, ResNet50-XGBoost, and MobileNet-XGBoost. First, DenseNet121-XGBoost attained an accuracy of 95.8% and 92.2% for ALL and normal classes. Second, ResNet50-XGBoost attained an accuracy of 97.7% and 90.4% for ALL and normal classes. Third, MobileNet-XGBoost attained an accuracy of 96.3% and 93.2% for ALL and normal classes.
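Per-class accuracies of this kind can be read off a confusion matrix as sketched below. The layout follows the convention stated in Section 4.2 (rows are output classes, columns are target classes), so each class accuracy is the diagonal cell divided by its column total. The counts are hypothetical and are not taken from the figures.

```python
def per_class_accuracy(matrix, labels):
    """Per-class accuracy for a matrix with predicted rows and target columns."""
    result = {}
    for j, label in enumerate(labels):
        column_total = sum(row[j] for row in matrix)  # all images of this class
        result[label] = matrix[j][j] / column_total   # correctly classified share
    return result


# Hypothetical confusion matrix: rows = predicted, columns = target.
confusion = [[95, 8],    # predicted ALL
             [5, 42]]    # predicted normal
print(per_class_accuracy(confusion, ["ALL", "normal"]))
```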

When the systems were evaluated on the ALL-IDB2 dataset, the RF classifier with CNN features produced the confusion matrices shown in Figure 12. The hybrid systems DenseNet121-RF, ResNet50-RF, and MobileNet-RF each attained an accuracy of 100% for both the ALL and normal classes.

Figure 13 shows the confusion matrices generated by the hybrid systems DenseNet121-XGBoost, ResNet50-XGBoost, and MobileNet-XGBoost, each of which attained an accuracy of 100% for both the ALL and normal classes.

4.7. Results of Strategy of Machine-Learning with Fusion Features CNN

This section presents the performances of hybrid systems based on fused CNN features for analyzing blood micrographs from the two acute leukemia datasets. The technique first improves the microscopic blood images, then segments the WBC region and isolates it from the rest of the main blood components. The DenseNet121, ResNet50, and MobileNet models receive the region of interest (WBCs) and extract feature maps, and PCA then selects the important features and removes redundant ones. The deep features of the CNN models are serially merged as follows: DenseNet121-ResNet50, ResNet50-MobileNet, DenseNet121-MobileNet, and DenseNet121-ResNet50-MobileNet. The fused CNN features are fed to the RF and XGBoost classifiers for classification.
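The reduce-then-concatenate idea can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the study's implementation: the feature matrices are random stand-ins for CNN outputs, the feature sizes and the number of retained components are hypothetical, PCA is computed through a plain SVD, and the RF or XGBoost classifier that would consume the fused matrix is omitted.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project the rows of `features` onto their top principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(0)
# Random stand-ins for deep features of 50 images (sizes are illustrative).
deep_features = {
    "DenseNet121": rng.normal(size=(50, 128)),
    "ResNet50": rng.normal(size=(50, 256)),
    "MobileNet": rng.normal(size=(50, 128)),
}

# Reduce each model's features separately, then merge them serially
# (column-wise concatenation) into one fused vector per image.
reduced = {name: pca_reduce(x, n_components=16) for name, x in deep_features.items()}
fused = np.concatenate(
    [reduced["DenseNet121"], reduced["ResNet50"], reduced["MobileNet"]], axis=1
)
print(fused.shape)
```

Pairwise fusions such as DenseNet121-ResNet50 follow the same pattern with two blocks instead of three.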

The RF and XGBoost classifiers with CNN fusion features yielded superior results for classifying the C-NMC 2019 and ALL-IDB2 acute leukemia datasets.

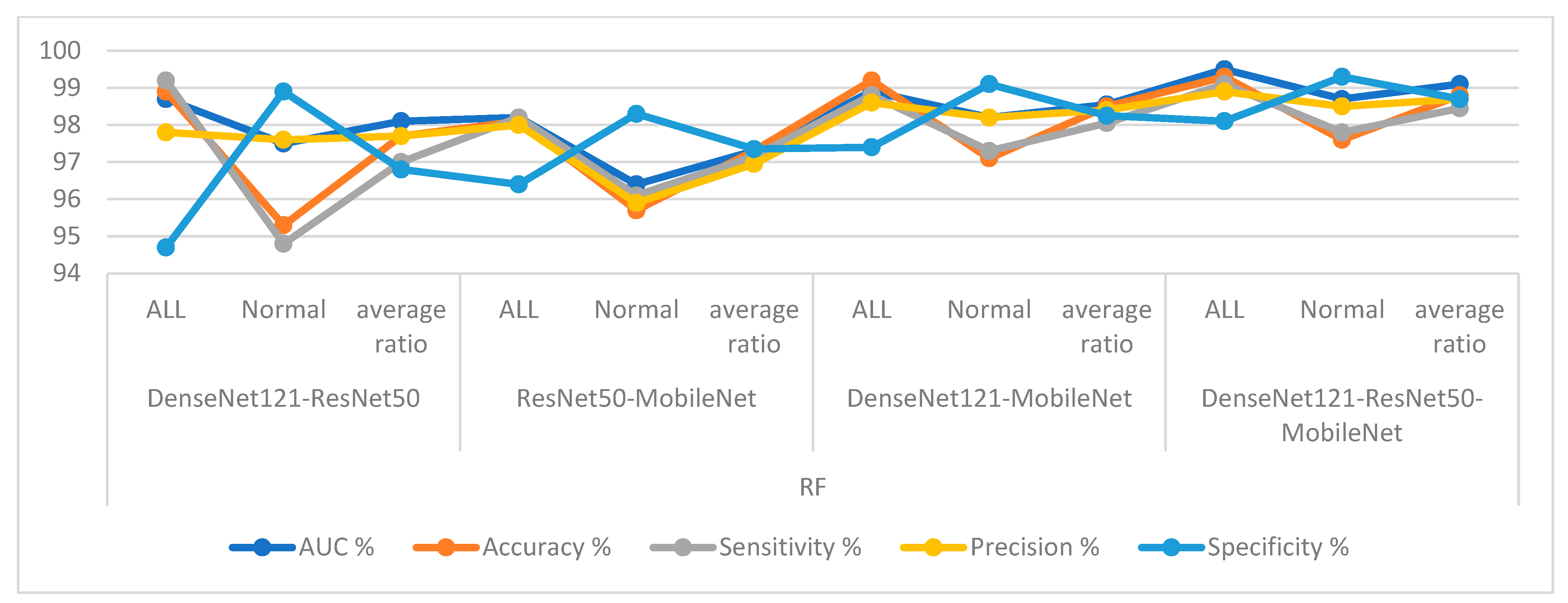

First, for the C-NMC 2019 dataset, the RF and XGBoost classifiers with CNN-fused features achieved promising results, as shown in Table 7 and Figure 14. The RF classifier with fusion features of DenseNet121-ResNet50 achieved an AUC of 98.1%, accuracy of 97.7%, sensitivity of 97%, precision of 97.75%, and specificity of 96.8%. The RF classifier with fusion features of ResNet50-MobileNet achieved an AUC of 97.4%, accuracy of 97.3%, sensitivity of 97.15%, precision of 96.95%, and specificity of 97.35%. The RF classifier with fusion features of DenseNet121-MobileNet achieved an AUC of 98.55%, accuracy of 98.5%, sensitivity of 98.05%, precision of 98.4%, and specificity of 98.25%. The RF classifier with fusion features of DenseNet121-ResNet50-MobileNet achieved an AUC of 99.1%, accuracy of 98.8%, sensitivity of 98.45%, precision of 98.7%, and specificity of 98.85%.

The XGBoost classifier with fusion features of DenseNet121-ResNet50 achieved an AUC of 97.05%, accuracy of 96.3%, sensitivity of 96.1%, precision of 95.45%, and specificity of 95.8%. The XGBoost classifier with fusion features of ResNet50-MobileNet achieved an AUC of 97.85%, accuracy of 97.6%, sensitivity of 97.8%, precision of 97.15%, and specificity of 97.35%. The XGBoost classifier with fusion features of DenseNet121-MobileNet achieved an AUC of 98.85%, accuracy of 98.1%, sensitivity of 97.8%, precision of 97.85%, and specificity of 97.95%. The XGBoost classifier with fusion features of DenseNet121-ResNet50-MobileNet achieved an AUC of 98.55%, accuracy of 98.2%, sensitivity of 98%, precision of 97.85%, and specificity of 98.15%.

Second, for the ALL-IDB2 dataset, the RF and XGBoost classifiers with CNN-fused features achieved promising results. The RF classifier with fusion features of DenseNet121-ResNet50, ResNet50-MobileNet, DenseNet121-MobileNet, and DenseNet121-ResNet50-MobileNet achieved accuracy, AUC, sensitivity, precision, and specificity of 100%. Likewise, the XGBoost classifier with the same four sets of fusion features achieved accuracy, AUC, sensitivity, precision, and specificity of 100%.

When the systems were evaluated on the C-NMC 2019 dataset, the RF classifier with fused CNN features produced the confusion matrices shown in Figure 15. First, DenseNet121-ResNet50-RF attained an accuracy of 98.9% and 95.3% for the ALL and normal classes. Second, ResNet50-MobileNet-RF attained an accuracy of 98.1% and 95.7% for the ALL and normal classes. Third, DenseNet121-MobileNet-RF attained an accuracy of 99.2% and 97.1% for the ALL and normal classes. Fourth, DenseNet121-ResNet50-MobileNet-RF attained an accuracy of 99.3% and 97.6% for the ALL and normal classes.

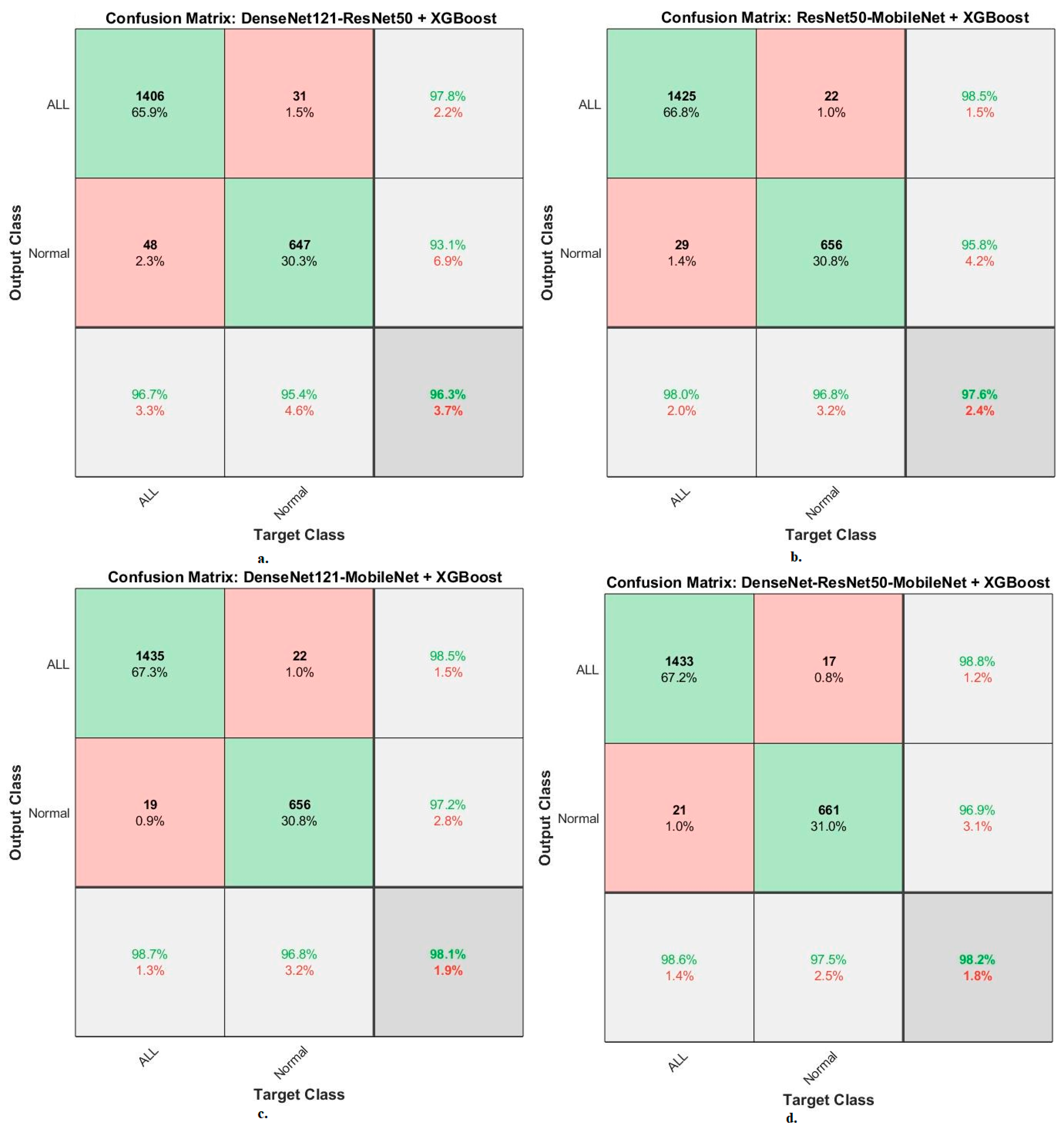

The XGBoost classifier with fused CNN features produced the confusion matrices shown in Figure 16. First, DenseNet121-ResNet50-XGBoost achieved an accuracy of 96.7% and 95.4% for the ALL and normal classes. Second, ResNet50-MobileNet-XGBoost achieved an accuracy of 98% and 96.8% for the ALL and normal classes. Third, DenseNet121-MobileNet-XGBoost achieved an accuracy of 98.7% and 96.8% for the ALL and normal classes. Fourth, DenseNet121-ResNet50-MobileNet-XGBoost achieved an accuracy of 98.6% and 97.5% for the ALL and normal classes.

When the systems were evaluated on the ALL-IDB2 dataset, the RF classifier with fused CNN features produced the confusion matrices shown in Figure 17. The DenseNet121-ResNet50-RF, ResNet50-MobileNet-RF, DenseNet121-MobileNet-RF, and DenseNet121-ResNet50-MobileNet-RF systems each attained an accuracy of 100% for both the ALL and normal classes.

The XGBoost classifier with fused CNN features produced the confusion matrices shown in Figure 18. The DenseNet121-ResNet50-XGBoost, ResNet50-MobileNet-XGBoost, DenseNet121-MobileNet-XGBoost, and DenseNet121-ResNet50-MobileNet-XGBoost systems each achieved an accuracy of 100% for both the ALL and normal classes.

5. Discussion of the Results of the Performances of the Systems

Acute leukemia is fatal for children and young adults if not diagnosed early. The characteristics of blast cells are similar to those of normal white blood cells, especially in the first stage, which poses a challenge for hematologists. Computer-aided automated systems therefore help in distinguishing infected WBCs from normal ones. This work focused on developing systems consisting of two parts, deep learning and machine learning, based on fused features. Microscopic blood images were improved for all systems, and WBCs were isolated from the rest of the main blood components using the active contour method.

The first strategy was to evaluate the pre-trained DenseNet121, ResNet50, and MobileNet models on the C-NMC 2019 and ALL-IDB2 acute leukemia datasets. With the C-NMC 2019 dataset, DenseNet121, ResNet50, and MobileNet achieved an accuracy of 90.3%, 91.8%, and 89.4%, respectively. With the ALL-IDB2 dataset, the DenseNet121, ResNet50, and MobileNet models achieved 90.4%, 92.3%, and 90.4% accuracy, respectively.

The second strategy analyzed the blood micrographs of the C-NMC 2019 and ALL-IDB2 datasets using the DenseNet121, ResNet50, and MobileNet models based on the active contour method. With the C-NMC 2019 dataset, DenseNet121, ResNet50, and MobileNet achieved an accuracy of 94%, 93.5%, and 94.2%, respectively. With the ALL-IDB2 dataset, the DenseNet121, ResNet50, and MobileNet models achieved 94.2%, 96.2%, and 96.2% accuracy, respectively.

The third strategy diagnosed the images of the C-NMC 2019 and ALL-IDB2 datasets using hybrid techniques between the CNN models (DenseNet121, ResNet50, and MobileNet) and the RF and XGBoost classifiers, based on the active contour method. With the C-NMC 2019 dataset, the DenseNet121-RF, ResNet50-RF, and MobileNet-RF systems achieved an accuracy of 95%, 95.5%, and 96.2%, respectively, while the DenseNet121-XGBoost, ResNet50-XGBoost, and MobileNet-XGBoost systems achieved an accuracy of 94.7%, 95.4%, and 95.3%, respectively. With the ALL-IDB2 dataset, all three CNN-RF systems and all three CNN-XGBoost systems achieved an accuracy of 100%.

The fourth strategy diagnosed the images of the C-NMC 2019 and ALL-IDB2 datasets by hybrid techniques between the CNN models (DenseNet121, ResNet50, and MobileNet) and the RF and XGBoost classifiers, based on fused CNN features. With the C-NMC 2019 dataset, the DenseNet121-ResNet50-RF, ResNet50-MobileNet-RF, DenseNet121-MobileNet-RF, and DenseNet121-ResNet50-MobileNet-RF systems achieved an accuracy of 97.7%, 97.3%, 98.5%, and 98.8%, respectively, while the DenseNet121-ResNet50-XGBoost, ResNet50-MobileNet-XGBoost, DenseNet121-MobileNet-XGBoost, and DenseNet121-ResNet50-MobileNet-XGBoost systems achieved an accuracy of 96.3%, 97.6%, 98.1%, and 98.2%, respectively. With the ALL-IDB2 dataset, all four fused-feature RF systems and all four fused-feature XGBoost systems achieved an accuracy of 100%.

Table 8 and Figure 19 summarize the results of the proposed systems for analyzing microscopic blood images of the C-NMC 2019 and ALL-IDB2 acute leukemia datasets. The table shows each system's overall accuracy and its accuracy for each class. The pre-trained CNN models alone did not produce promising results for the detection of acute leukemia, because the ImageNet dataset on which they were trained lacks medical images. The results improved when the images were optimized and the region of interest (WBC) was segmented and then fed to the CNN models for classification. The hybrid CNN-RF and CNN-XGBoost systems improved the results further and achieved superior results for diagnosing the two leukemia datasets. The accuracy improved most when the CNN features were serially combined and classified with the RF and XGBoost classifiers.

For the C-NMC 2019 dataset, RF with fused features of DenseNet121-ResNet50-MobileNet reached the best accuracy for the ALL and normal classes, with 99.3% and 97.6%, respectively. For the ALL-IDB2 dataset, the CNN-RF and CNN-XGBoost systems achieved an accuracy of 100% for both the ALL and normal classes, as did the RF and XGBoost classifiers with fused CNN features.

6. Conclusions

Acute leukemia is one of the deadliest forms of leukemia affecting children and adults. Early detection, before the disease spreads to other body parts, is essential for obtaining appropriate treatment. This research contributed to developing effective strategies for early diagnosis of acute leukemia by analyzing the images of the C-NMC 2019 and ALL-IDB2 leukemia datasets. The blood micrographs were optimized and fed into the active contour method to extract only the WBC region for analysis by CNN models. This study focused on WBC region analysis, feature extraction by CNN models (DenseNet121, ResNet50, and MobileNet), and classification using RF and XGBoost classifiers. Each CNN model produces high-dimensional and redundant features; therefore, PCA was applied to select the most important features and delete the redundant ones. Due to the similarity of the characteristics of infected and normal WBCs in the early stages, and to obtain more efficient features, the CNN features were serially combined as follows: DenseNet121-ResNet50, ResNet50-MobileNet, DenseNet121-MobileNet, and DenseNet121-ResNet50-MobileNet, and then classified using RF and XGBoost classifiers. The systems achieved promising results and proved effective in assisting hematologists in the early detection of acute leukemia. On the C-NMC 2019 dataset, the RF classifier based on the fused features of DenseNet121-ResNet50-MobileNet achieved an AUC of 99.1%, accuracy of 98.8%, sensitivity of 98.45%, precision of 98.7%, and specificity of 98.85%.

The most important limitation faced in this study is the low number of images in the datasets, which causes overfitting; this was addressed by the data augmentation technique.

In future works, systems for diagnosing acute leukemia by hybrid techniques will be developed based on combining features of CNN models with color, shape, and texture features extracted by traditional feature extraction methods.