The U-Net Approaches to Evaluation of Dental Bite-Wing Radiographs: An Artificial Intelligence Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Selection

2.2. Radiographic Dataset

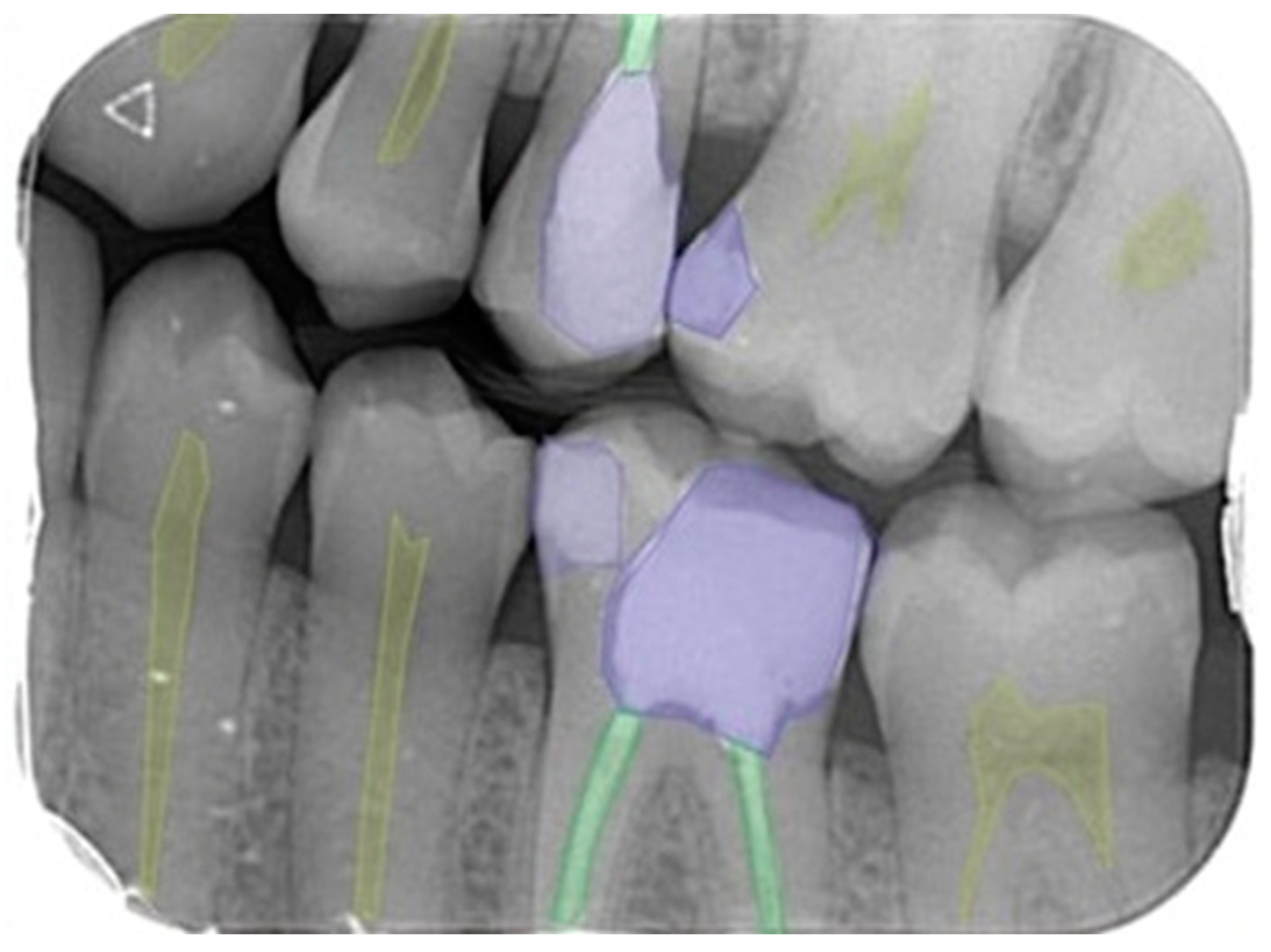

2.3. Image Evaluation

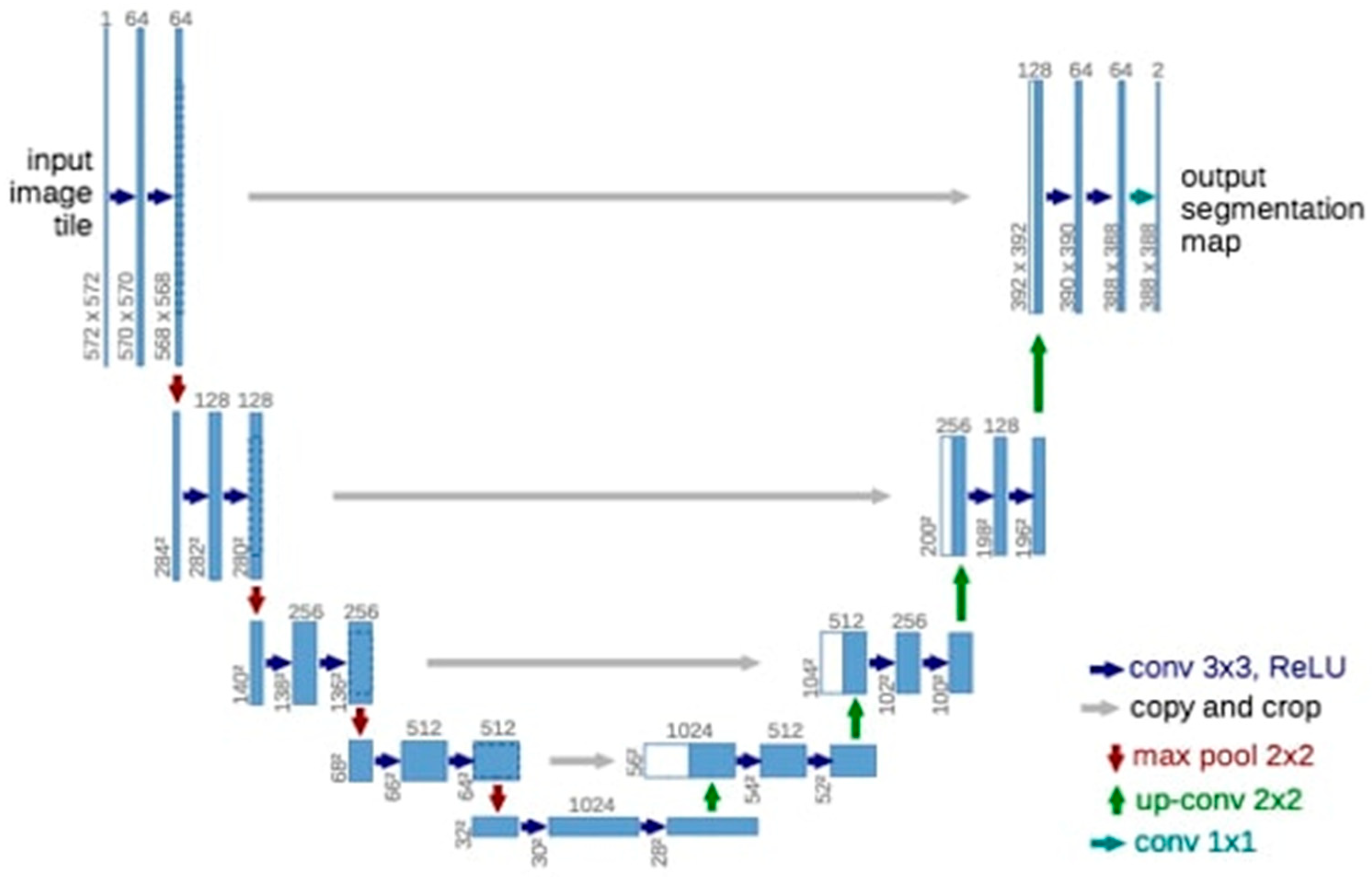

2.4. Deep Convolutional Neural Network

2.5. Model Pipeline

2.6. Training Phase

2.7. Statistical Analysis

2.8. Metrics Calculation Procedure

- True positive (TP): dental diagnoses correctly detected and segmented.

- False positive (FP): dental diagnoses detected but incorrectly segmented.

- False negative (FN): dental diagnoses that were present but were not detected and segmented.

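- Sensitivity (Recall): $\mathrm{TP} / (\mathrm{TP} + \mathrm{FN})$

- Precision, positive predictive value (PPV): $\mathrm{TP} / (\mathrm{TP} + \mathrm{FP})$

- F1 score: $2\,\mathrm{TP} / (2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN})$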
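The three metrics listed above can be computed directly from the TP, FP, and FN counts. The following is a minimal sketch, assuming the standard definitions of sensitivity, precision, and F1 score; the function name `detection_metrics` and the example counts are hypothetical and are not taken from the study.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Return sensitivity, precision and F1 score from raw counts.

    Assumes the standard definitions:
      sensitivity = TP / (TP + FN)
      precision   = TP / (TP + FP)
      F1          = 2*TP / (2*TP + FP + FN)
    A metric whose denominator is zero is reported as 0.0.
    """
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"sensitivity": sensitivity, "precision": precision, "f1": f1}


# Hypothetical counts, for illustration only (not the study's data).
example = detection_metrics(tp=90, fp=5, fn=10)
print({name: round(value, 4) for name, value in example.items()})
```

Writing F1 as 2TP / (2TP + FP + FN) is algebraically the same as the harmonic mean of precision and sensitivity, but it avoids dividing by zero when one of the two rates is undefined.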

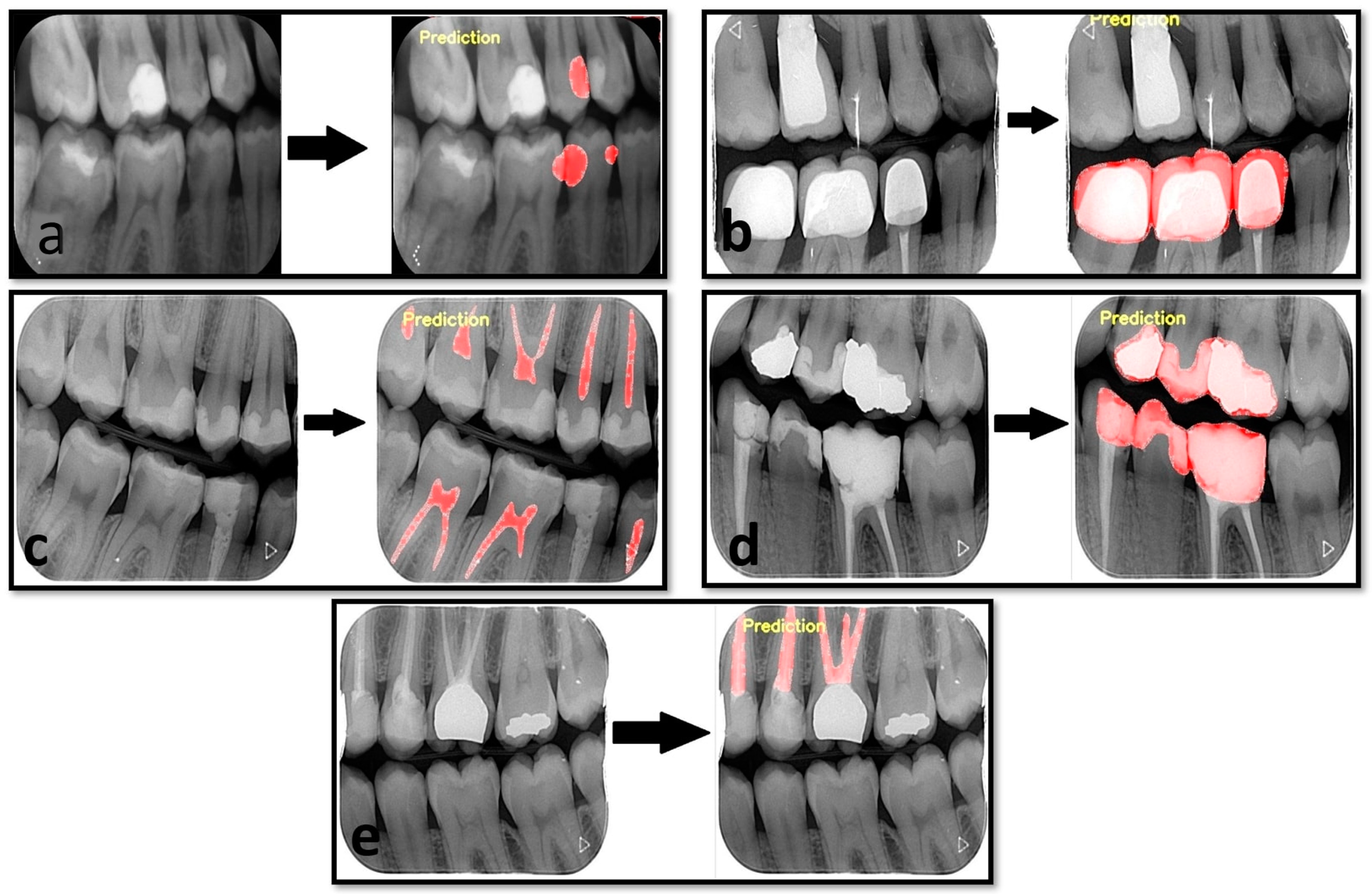

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Bite-Wing Images

| Diagnoses | Training Group | Testing Group | Validation Group |
|---|---|---|---|
| Dental caries | 1052 | 33 | 132 |
| Dental crown | 264 | 9 | 36 |
| Dental pulp | 1560 | 50 | 200 |
| Dental restoration filling material | 1308 | 41 | 164 |
| Dental root-canal filling material | 812 | 25 | 100 |
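To see how the data were divided, the counts in the table above can be converted into per-group shares. This is a small sketch that only restates the tabulated numbers as fractions; the names `counts`, `groups`, and `split_shares` are illustrative, and the group labels are copied from the table as given.

```python
# Counts copied from the table above, in the order:
# (Training Group, Testing Group, Validation Group).
counts = {
    "Dental caries": (1052, 33, 132),
    "Dental crown": (264, 9, 36),
    "Dental pulp": (1560, 50, 200),
    "Dental restoration filling material": (1308, 41, 164),
    "Dental root-canal filling material": (812, 25, 100),
}
groups = ("Training Group", "Testing Group", "Validation Group")


def split_shares(row):
    """Return each group's fraction of the row total."""
    total = sum(row)
    return {group: n / total for group, n in zip(groups, row)}


shares = {name: split_shares(row) for name, row in counts.items()}
for name, share in shares.items():
    print(name, {group: round(value, 3) for group, value in share.items()})
```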

Measurement Value

| Diagnoses | Sensitivity (Recall) | Precision | F1 Score |
|---|---|---|---|
| Dental caries | 0.8235 | 0.9491 | 0.8818 |
| Dental crown | 0.9285 | 1 | 0.9629 |
| Dental pulp | 0.9843 | 0.9429 | 0.9631 |
| Dental restoration filling material | 0.9622 | 0.9807 | 0.9714 |
| Dental root-canal filling material | 0.9459 | 1 | 0.9722 |
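The reported F1 scores can be cross-checked against the reported sensitivity and precision, since F1 is the harmonic mean of the two. The sketch below uses the values from the table above; the names `reported` and `f1_from_rates` are illustrative, and small differences are expected because the published figures are given to four decimal places.

```python
# Values copied from the table above:
# (sensitivity, precision, reported F1 score).
reported = {
    "Dental caries": (0.8235, 0.9491, 0.8818),
    "Dental crown": (0.9285, 1.0, 0.9629),
    "Dental pulp": (0.9843, 0.9429, 0.9631),
    "Dental restoration filling material": (0.9622, 0.9807, 0.9714),
    "Dental root-canal filling material": (0.9459, 1.0, 0.9722),
}


def f1_from_rates(sensitivity: float, precision: float) -> float:
    """Harmonic mean of precision and sensitivity."""
    return 2 * precision * sensitivity / (precision + sensitivity)


recomputed = {
    name: f1_from_rates(sens, prec) for name, (sens, prec, _) in reported.items()
}
# Largest absolute gap between recomputed and reported F1 scores.
max_gap = max(abs(recomputed[name] - reported[name][2]) for name in reported)

for name in reported:
    print(f"{name}: reported={reported[name][2]:.4f}, recomputed={recomputed[name]:.4f}")
print(f"largest gap: {max_gap:.5f}")
```

A gap well below the fourth decimal place for every row suggests that the three columns are internally consistent.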


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baydar, O.; Różyło-Kalinowska, I.; Futyma-Gąbka, K.; Sağlam, H. The U-Net Approaches to Evaluation of Dental Bite-Wing Radiographs: An Artificial Intelligence Study. Diagnostics 2023, 13, 453. https://doi.org/10.3390/diagnostics13030453
