Predicting the Efficacy of Neoadjuvant Chemotherapy for Pancreatic Cancer Using Deep Learning of Contrast-Enhanced Ultrasound Videos

Abstract

1. Introduction

2. Materials and Methods

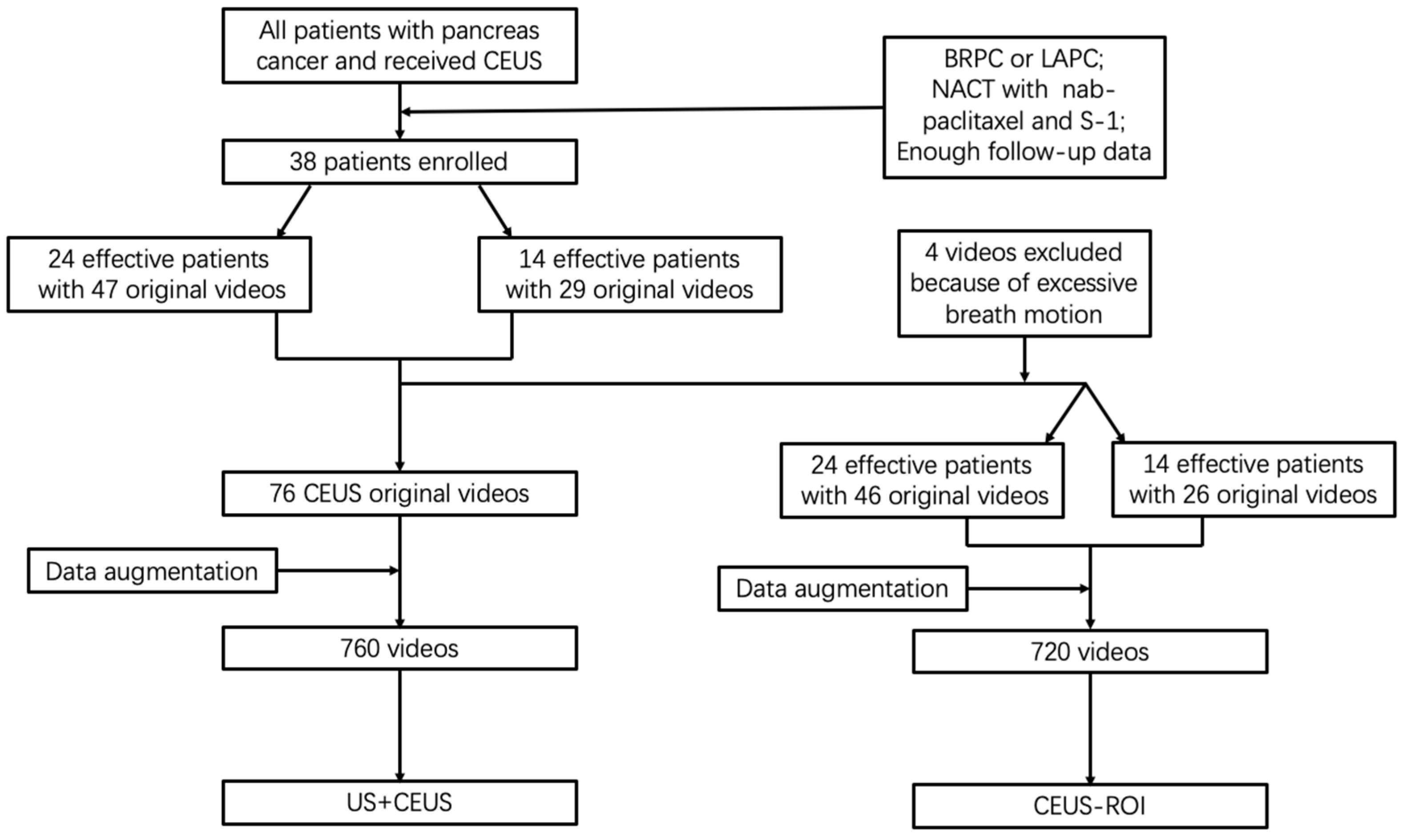

2.1. Patient Enrollment

2.2. Ultrasound and CEUS

2.3. CEUS Video Preprocessing

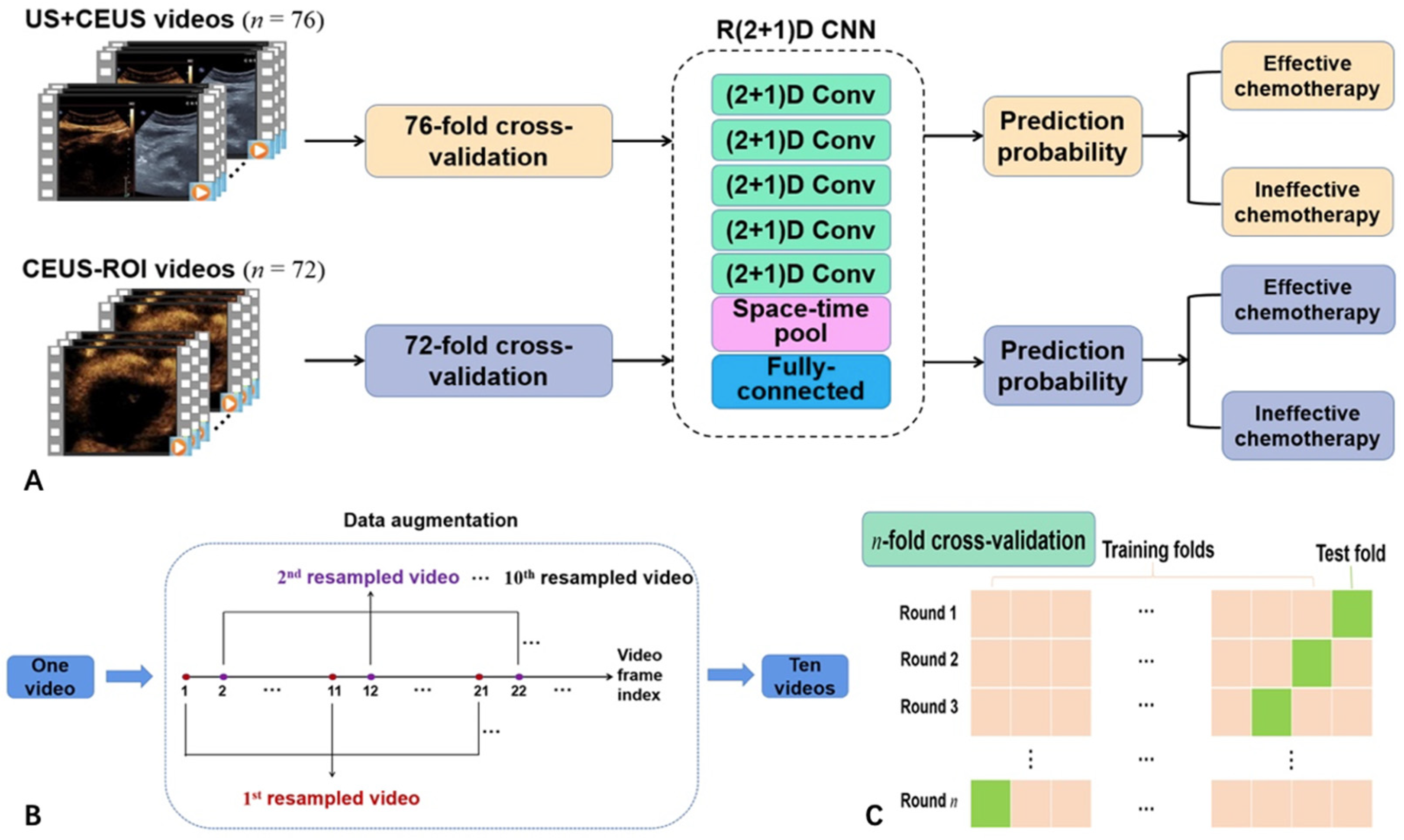

2.4. Network Structure

2.5. Experimental Configuration

2.6. Statistical Analysis

3. Results

3.1. Clinical and Imaging Features of Patients

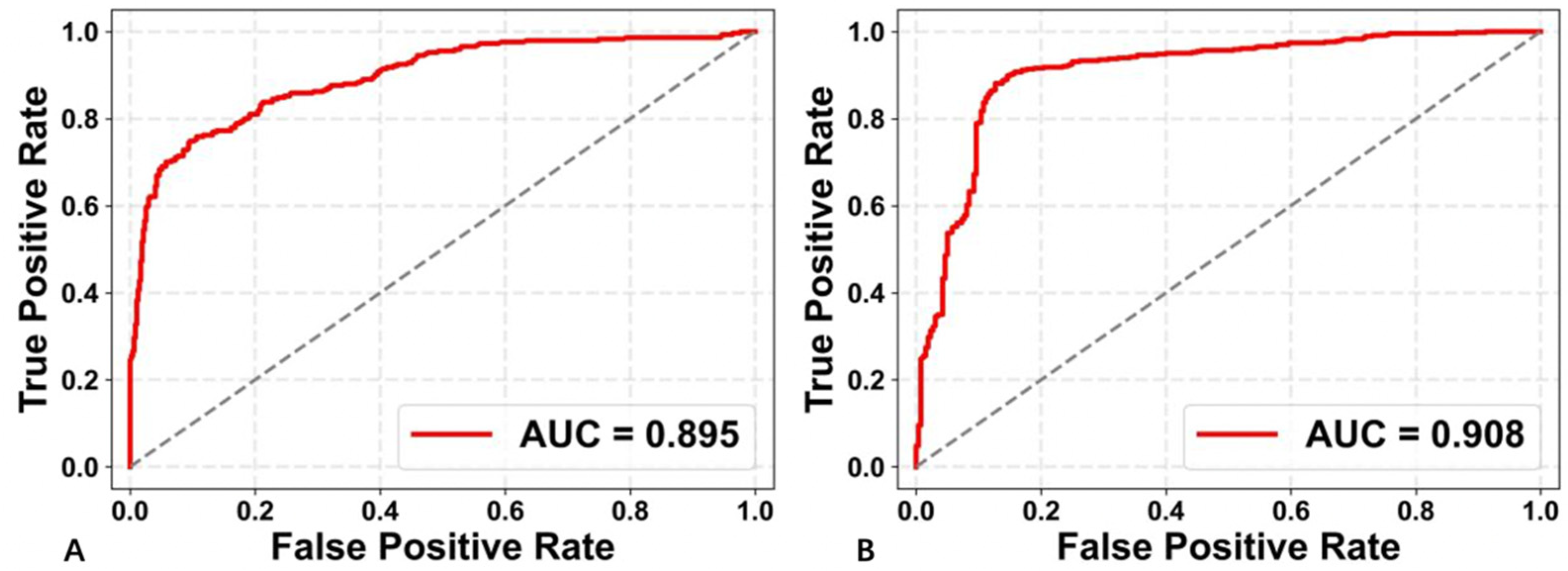

3.2. Performance of the Two Deep Learning Strategies

3.3. Performance of Different 3D CNN Models

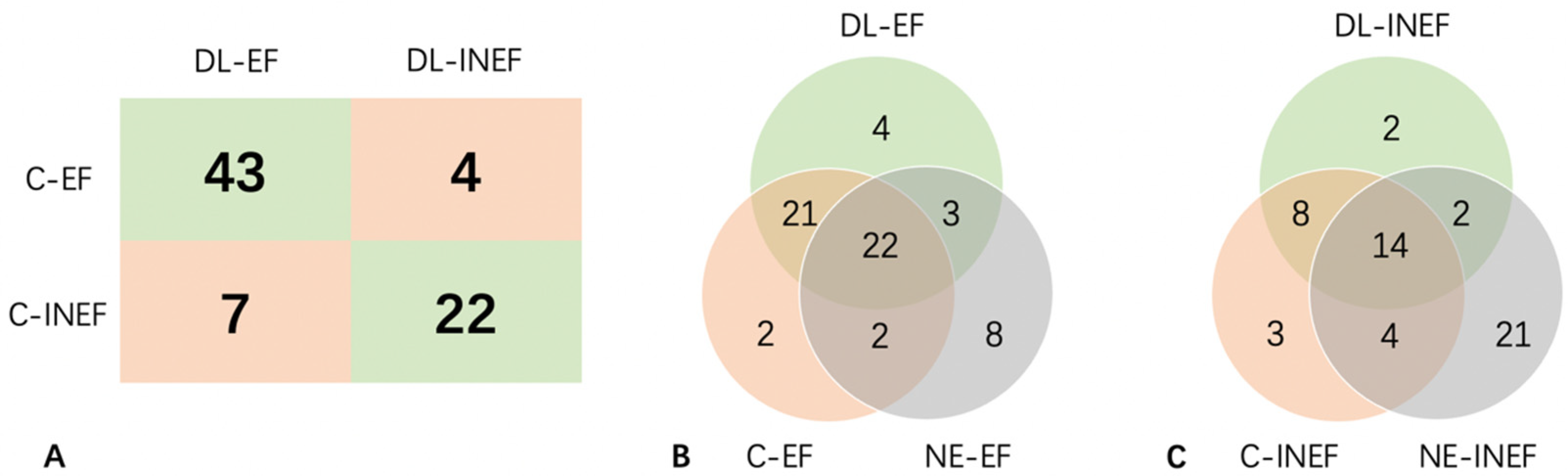

3.4. Prediction of Each Original Video by US+CEUS

3.5. Prediction of Each Original Video by CEUS-ROI

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021, 71, 209–249.

2. Rahib, L.; Smith, B.D.; Aizenberg, R.; Rosenzweig, A.B.; Fleshman, J.M.; Matrisian, L.M. Projecting cancer incidence and deaths to 2030: The unexpected burden of thyroid, liver, and pancreas cancers in the United States. Cancer Res. 2014, 74, 2913–2921.

3. Heinrich, S.; Lang, H. Neoadjuvant therapy of pancreatic cancer: Definitions and benefits. Int. J. Mol. Sci. 2017, 18, 1622.

4. Tawada, K.; Yamaguchi, T.; Kobayashi, A.; Ishihara, T.; Sudo, K.; Nakamura, K.; Hara, T.; Denda, T.; Matsuyama, M.; Yokosuka, O. Changes in tumor vascularity depicted by contrast-enhanced ultrasonography as a predictor of chemotherapeutic effect in patients with unresectable pancreatic cancer. Pancreas 2009, 38, 30–35.

5. Sidhu, P.S.; Cantisani, V.; Dietrich, C.F.; Gilja, O.H.; Saftoiu, A.; Bartels, E.; Bertolotto, M.; Calliada, F.; Clevert, D.A.; Cosgrove, D.; et al. The EFSUMB guidelines and recommendations for the clinical practice of contrast-enhanced ultrasound (CEUS) in non-hepatic applications: Update 2017 (Short Version). Ultraschall Med. 2018, 39, 154–180.

6. Lee, S.; Kim, S.H.; Park, H.K.; Jang, K.T.; Hwang, J.A.; Kim, S. Pancreatic ductal adenocarcinoma: Rim enhancement at MR imaging predicts prognosis after curative resection. Radiology 2018, 288, 456–466.

7. Zhu, L.; Shi, X.; Xue, H.; Wu, H.; Chen, G.; Sun, H.; He, Y.; Jin, Z.; Liang, Z.; Zhang, Z. CT imaging biomarkers predict clinical outcomes after pancreatic cancer surgery. Medicine 2016, 95, e2664.

8. Zhou, T.; Tan, L.; Gui, Y.; Zhang, J.; Chen, X.; Dai, M.; Xiao, M.; Zhang, Q.; Chang, X.; Xu, Q.; et al. Correlation between enhancement patterns on transabdominal ultrasound and survival for pancreatic ductal adenocarcinoma. Cancer Manag. Res. 2021, 13, 6823–6832.

9. Akasu, G.; Kawahara, R.; Yasumoto, M.; Sakai, T.; Goto, Y.; Sato, T.; Fukuyo, K.; Okuda, K.; Kinoshita, H.; Tanaka, H. Clinicopathological analysis of contrast-enhanced ultrasonography using perflubutane in pancreatic adenocarcinoma. Kurume Med. J. 2012, 59, 45–52.

10. Wang, F.; Casalino, L.P.; Khullar, D. Deep learning in medicine-Promise, progress, and challenges. JAMA Intern. Med. 2019, 179, 293–294.

11. Huang, Q.; Wang, D.; Lu, Z.; Zhou, S.; Li, J.; Liu, L.; Chang, C. A novel image-to-knowledge inference approach for automatically diagnosing tumors. Expert Syst. Appl. 2023, 229, 120450.

12. Liang, X.; Yu, X.; Gao, T. Machine learning with magnetic resonance imaging for prediction of response to neoadjuvant chemotherapy in breast cancer: A systematic review and meta-analysis. Eur. J. Radiol. 2022, 150, 110247.

13. Li, Y.; Fan, Y.; Xu, D.; Li, Y.; Zhong, Z.; Pan, H.; Huang, B.; Xie, X.; Yang, Y.; Liu, B. Deep learning radiomic analysis of DCE-MRI combined with clinical characteristics predicts pathological complete response to neoadjuvant chemotherapy in breast cancer. Front. Oncol. 2023, 12, 1041142.

14. Wang, S.; Liu, Z.; Rong, Y.; Zhou, B.; Bai, Y.; Wei, W.; Wang, M.; Guo, Y.; Tian, J. Deep learning provides a new computed tomography-based prognostic biomarker for recurrence prediction in high-grade serous ovarian cancer. Radiother. Oncol. 2019, 132, 171–177.

15. Bi, W.L.; Hosny, A.; Schabath, M.B.; Giger, M.L.; Birkbak, N.J.; Mehrtash, A.; Allison, T.; Arnaout, O.; Abbosh, C.; Dunn, I.F.; et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J. Clin. 2019, 69, 127–157.

16. Huang, J.; Xie, X.; Wu, H.; Zhang, X.; Zheng, Y.; Xie, X.; Wang, Y.; Xu, M. Development and validation of a combined nomogram model based on deep learning contrast-enhanced ultrasound and clinical factors to predict preoperative aggressiveness in pancreatic neuroendocrine neoplasms. Eur. Radiol. 2022, 32, 7965–7975.

17. Tong, T.; Gu, J.; Xu, D.; Song, L.; Zhao, Q.; Cheng, F.; Yuan, Z.; Tian, S.; Yang, X.; Tian, J.; et al. Deep learning radiomics based on contrast-enhanced ultrasound images for assisted diagnosis of pancreatic ductal adenocarcinoma and chronic pancreatitis. BMC Med. 2022, 20, 74.

18. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 2014, 1, 27.

19. Tran, D.; Wang, H.; Torresan, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.

20. Wilson, S.R.; Lyshchik, A.; Piscaglia, F.; Cosgrove, D.; Jang, H.J.; Sirlin, C.; Dietrich, C.F.; Kim, T.K.; Willmann, J.K.; Kono, Y. CEUS LI-RADS: Algorithm, implementation, and key differences from CT/MRI. Abdom. Radiol. 2018, 43, 127–142.

21. Lerchbaumer, M.H.; Putz, F.J.; Rübenthaler, J.; Rogasch, J.; Jung, E.M.; Clevert, D.A.; Hamm, B.; Makowski, M.; Fischer, T. Contrast-enhanced ultrasound (CEUS) of cystic renal lesions in comparison to CT and MRI in a multicenter setting. Clin. Hemorheol. Microcirc. 2020, 75, 419–429.

22. Liu, L.; Tang, C.; Li, L.; Chen, P.; Tan, Y.; Hu, X.; Chen, K.; Shang, Y.; Liu, D.; Liu, H. Deep learning radiomics for focal liver lesions diagnosis on long-range contrast-enhanced ultrasound and clinical factors. Quant. Imaging Med. Surg. 2022, 12, 3213–3226.

23. Zhang, Y.; Wei, Q.; Huang, Y.; Yao, Z.; Yan, C.; Zou, X.; Han, J.; Li, Q.; Mao, R.; Liao, Y. Deep learning of liver contrast-enhanced ultrasound to predict microvascular invasion and prognosis in hepatocellular carcinoma. Front. Oncol. 2022, 12, 878061.

24. Guang, Y.; He, W.; Ning, B.; Zhang, H.; Yin, C.; Zhao, M.; Nie, F.; Huang, P.; Zhang, R.F.; Yong, Q.; et al. Deep learning-based carotid plaque vulnerability classification with multicentre contrast-enhanced ultrasound video: A comparative diagnostic study. BMJ Open 2021, 11, e047528.

25. Chen, C.; Wang, Y.; Niu, J.; Liu, X.; Li, Q.; Gong, X. Domain knowledge powered deep learning for breast cancer diagnosis based on contrast-enhanced ultrasound videos. IEEE Trans. Med. Imaging 2021, 40, 2439–2451.

26. Liu, F.; Liu, D.; Wang, K.; Xie, X.; Su, L.; Kuang, M.; Huang, G.; Peng, B.; Wang, Y.; Lin, M.; et al. Deep learning radiomics based on contrast-enhanced ultrasound might optimize curative treatments for very-early or early-stage hepatocellular carcinoma patients. Liver Cancer 2020, 9, 397–413.

27. Soffer, S.; Ben-Cohen, A.; Shimon, O.; Amitai, M.M.; Greenspan, H.; Klang, E. Convolutional neural networks for radiologic images: A radiologist’s guide. Radiology 2019, 290, 590–606.

| Characteristic | Effective (n = 24) | Ineffective (n = 14) | p |
|---|---|---|---|
| Age (years) | 57.8 ± 7.2 | 55.9 ± 10.7 | 0.58 |
| Gender | | | 0.56 |
| Male | 16 | 8 | |
| Female | 8 | 6 | |
| Tumor size (cm) | 4.47 | 4.11 | 0.40 |
| Location | | | 0.62 |
| Head and neck 1 | 10 | 7 | |
| Body and tail | 14 | 7 | |
| CA199 (U/mL) | 672.4 ± 1241.3 | 460.1 ± 726.0 | 0.27 |
| Doppler blood flow signals | | | 0.68 |
| Positive | 6 | 2 | |
| Negative | 18 | 12 | |
| Enhancement pattern | | | 0.27 |
| Iso-enhanced | 13 | 5 | |
| Hypo-enhanced | 11 | 9 | |
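
The p-values for the categorical rows of the table above can be checked from the counts alone. The sketch below is illustrative only: the statistical methods are not described in this text, so the choice of tests is an assumption. It applies a Pearson chi-square test without continuity correction to gender, location, and enhancement pattern, and a two-sided Fisher exact test to Doppler blood flow signals, where the counts are small. The function names `chi_square_2x2` and `fisher_exact_2x2` are hypothetical helpers, not part of the study's code.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square p-value (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact p-value for [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)

    def weight(x):
        # Unnormalized hypergeometric probability, kept as an exact integer.
        return math.comb(col1, x) * math.comb(n - col1, row1 - x)

    observed = weight(a)
    # Sum every table that is no more likely than the observed one.
    extreme = sum(weight(x) for x in range(lo, hi + 1) if weight(x) <= observed)
    return extreme / math.comb(n, row1)

# Counts copied from the table: (effective, ineffective) for each of two levels.
# Assumption: these three rows were tested with an uncorrected chi-square test.
chi_square_rows = {
    "Gender": (16, 8, 8, 6),                # male, female
    "Location": (10, 7, 14, 7),             # head and neck, body and tail
    "Enhancement pattern": (13, 5, 11, 9),  # iso-enhanced, hypo-enhanced
}
# Assumption: this row was tested with Fisher's exact test.
doppler = (6, 2, 18, 12)                    # positive, negative

p_values = {name: chi_square_2x2(*cells) for name, cells in chi_square_rows.items()}
p_values["Doppler blood flow signals"] = fisher_exact_2x2(*doppler)

for name, p in p_values.items():
    print(f"{name}: p = {p:.2f}")
```

Under these assumptions, the computed values agree with the reported p-values to two decimal places. The p-values for age, tumor size, and CA199 cannot be checked this way because they depend on patient-level data that the table does not include.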

| Strategy | AUC | Accuracy | Recall | Precision | F1 Score |
|---|---|---|---|---|---|
| US+CEUS | 0.895 | 0.829 | 0.759 | 0.786 | 0.772 |
| CEUS-ROI | 0.908 | 0.864 | 0.930 | 0.866 | 0.897 |

| Model | AUC | Accuracy | Recall | Precision | F1 Score |
|---|---|---|---|---|---|
| R(2 + 1)D | 0.908 | 0.864 | 0.930 | 0.866 | 0.897 |
| R3D [19] | 0.889 | 0.814 | 0.612 | 0.828 | 0.704 |
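
The F1 scores in the two performance tables follow from the reported precision and recall through the standard harmonic-mean definition, F1 = 2PR / (P + R). The short check below is a sketch for illustration, not the authors' evaluation code; the helper name `f1_score` and the dictionary `reported` are introduced here, and the numbers are copied from the tables.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) per row; R(2 + 1)D is the CEUS-ROI model.
reported = {
    "US+CEUS": (0.786, 0.759, 0.772),
    "CEUS-ROI / R(2 + 1)D": (0.866, 0.930, 0.897),
    "R3D": (0.828, 0.612, 0.704),
}

recomputed = {
    name: round(f1_score(precision, recall), 3)
    for name, (precision, recall, _) in reported.items()
}

for name, (_, _, f1_reported) in reported.items():
    print(f"{name}: recomputed {recomputed[name]:.3f}, reported {f1_reported:.3f}")
```

All three recomputed values match the reported F1 scores to three decimal places, so the recall, precision, and F1 columns are internally consistent.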

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shao, Y.; Dang, Y.; Cheng, Y.; Gui, Y.; Chen, X.; Chen, T.; Zeng, Y.; Tan, L.; Zhang, J.; Xiao, M.; Yan, X.; Lv, K.; Zhou, Z. Predicting the Efficacy of Neoadjuvant Chemotherapy for Pancreatic Cancer Using Deep Learning of Contrast-Enhanced Ultrasound Videos. Diagnostics 2023, 13, 2183. https://doi.org/10.3390/diagnostics13132183