Developing a Supplementary Diagnostic Tool for Breast Cancer Risk Estimation Using Ensemble Transfer Learning

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

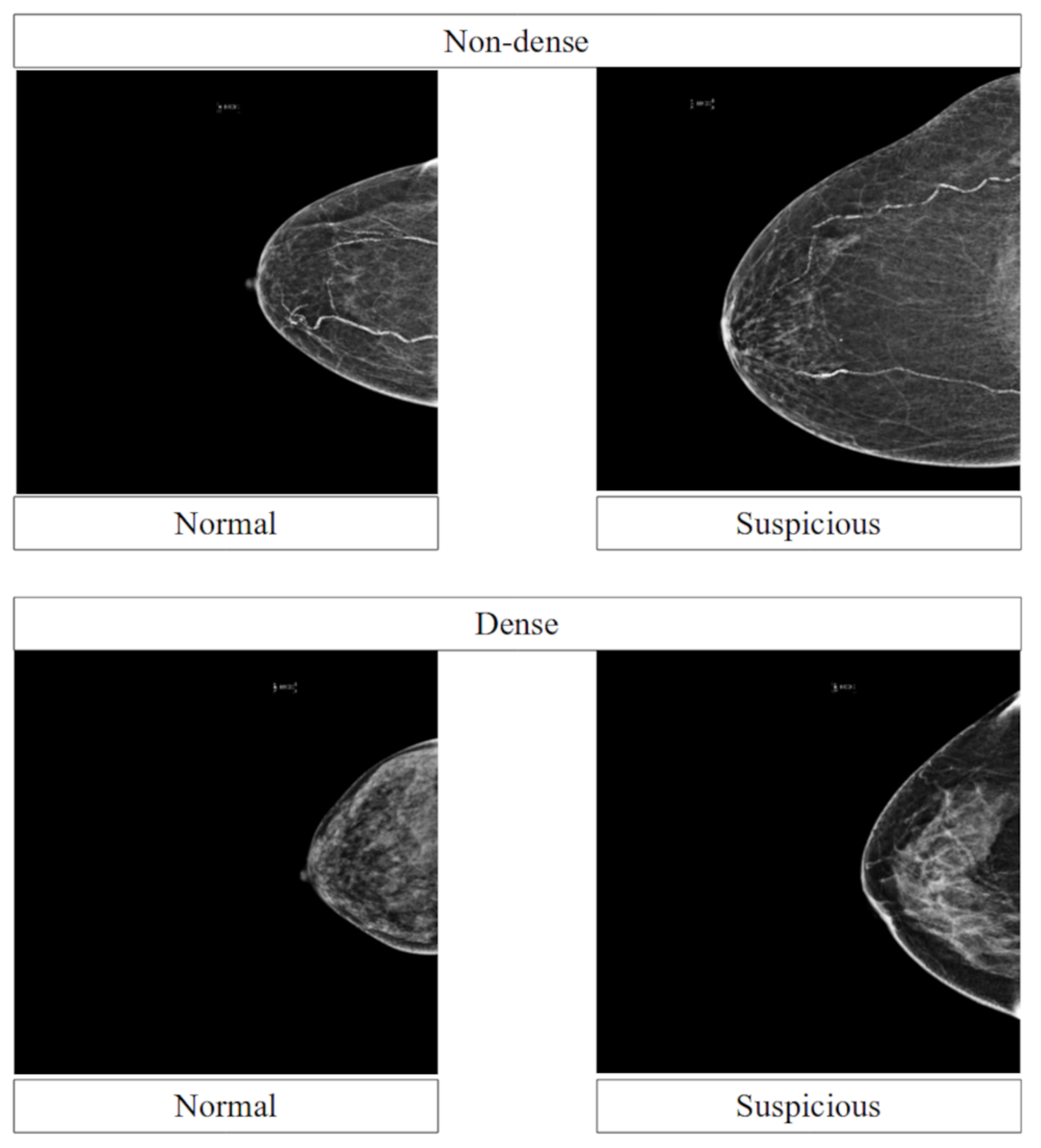

3.1. Data

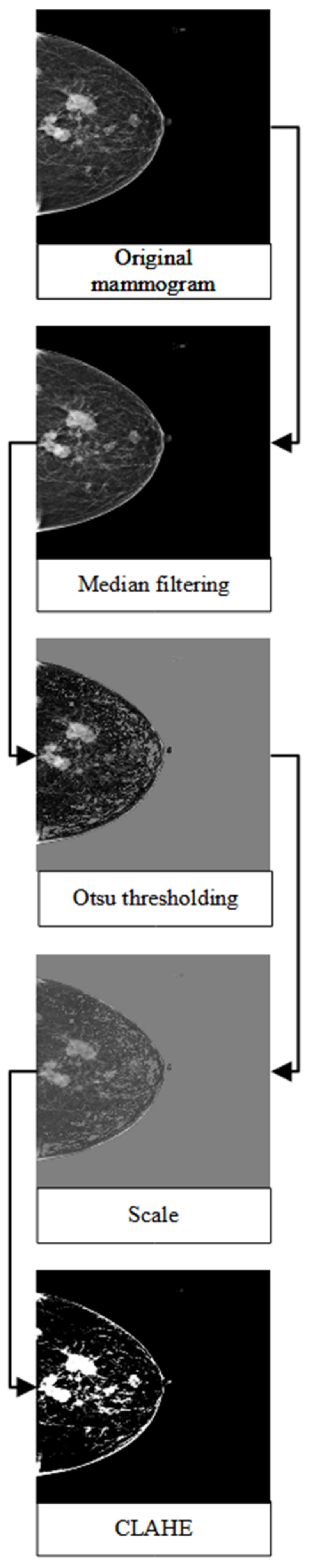

3.2. Pre-Processing Steps

3.3. Pre-Trained Network Architecture

3.4. Model Development and Comparison

3.5. Performance Metrics

3.6. Performance across Breast Densities

4. Results

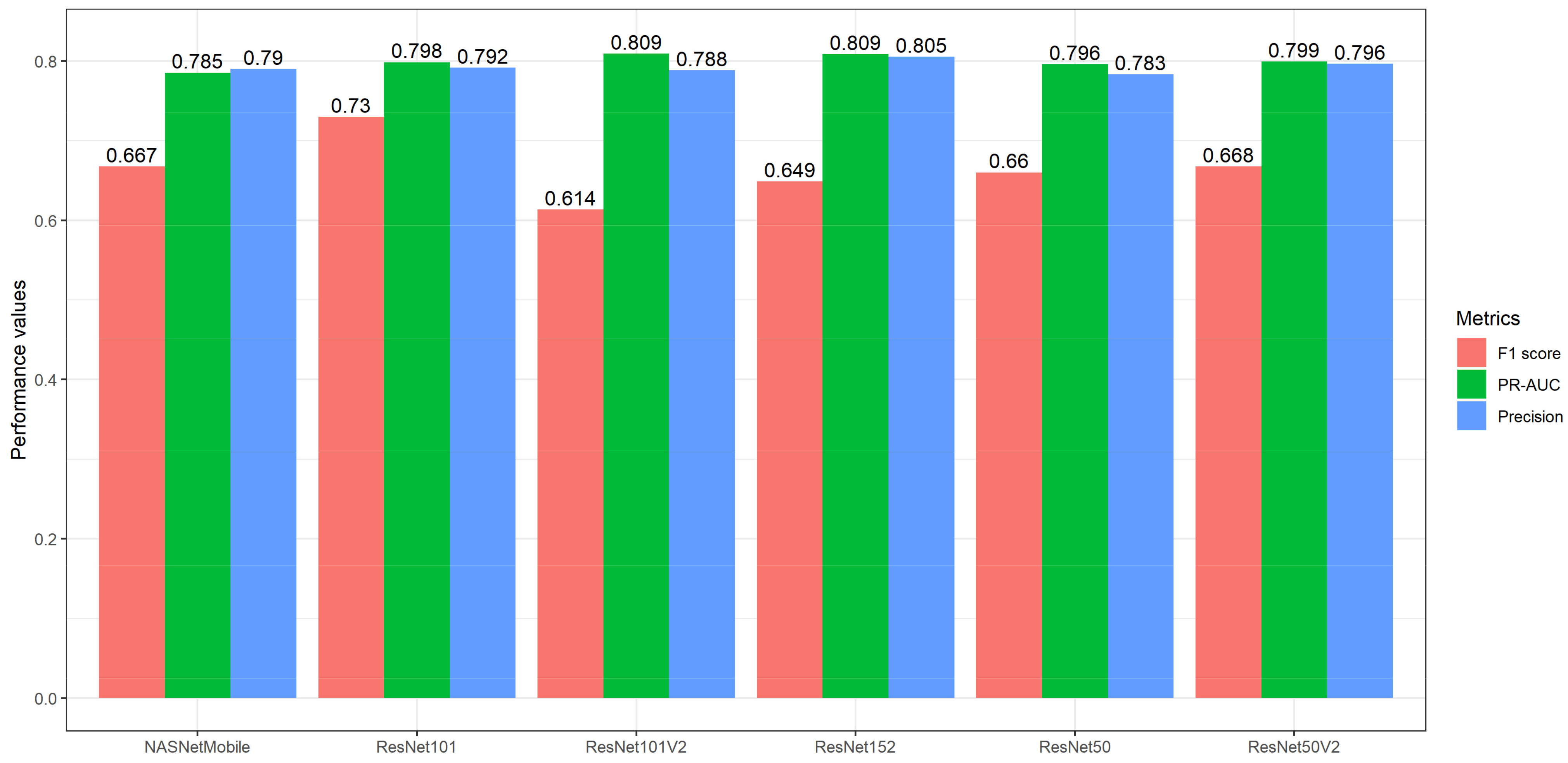

4.1. Model Development

4.2. Ensemble Transfer Learning

4.3. Performance across Breast Densities

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Arnold, M.; Morgan, E.; Rumgay, H.; Mafra, A.; Singh, D.; Laversanne, M.; Vignat, J.; Gralow, J.R.; Cardoso, F.; Siesling, S.; et al. Current and future burden of breast cancer: Global statistics for 2020 and 2040. Breast 2022, 66, 15–23.

- Ferlay, J.; Colombet, M.; Soerjomataram, I.; Parkin, D.M.; Piñeros, M.; Znaor, A.; Bray, F. Cancer statistics for the year 2020: An overview. Int. J. Cancer 2021, 149, 778–789.

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

- World Health Organization. Global Breast Cancer Initiative Implementation Framework: Assessing, Strengthening and Scaling-Up of Services for the Early Detection and Management of Breast Cancer; World Health Organization: Geneva, Switzerland, 2023.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Seely, J.; Alhassan, T. Screening for Breast Cancer in 2018—What Should We be Doing Today? Curr. Oncol. 2018, 25, 115–124.

- Rodríguez-Ruiz, A.; Krupinski, E.; Mordang, J.-J.; Schilling, K.; Heywang-Köbrunner, S.H.; Sechopoulos, I.; Mann, R.M. Detection of Breast Cancer with Mammography: Effect of an Artificial Intelligence Support System. Radiology 2019, 290, 305–314.

- Chollet, F.; Kalinowski, T.; Allaire, J.J. Deep Learning with R, 2nd ed.; Manning: Shelter Island, NY, USA, 2022.

- Iman, M.; Arabnia, H.R.; Rasheed, K. A Review of Deep Transfer Learning and Recent Advancements. Technologies 2023, 11, 40.

- Ayana, G.; Dese, K.; Choe, S.-W. Transfer Learning in Breast Cancer Diagnoses via Ultrasound Imaging. Cancers 2021, 13, 738.

- Yu, X.; Wang, J.; Hong, Q.-Q.; Teku, R.; Wang, S.-H.; Zhang, Y.-D. Transfer learning for medical images analyses: A survey. Neurocomputing 2022, 489, 230–254.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; Bengio, Y., LeCun, Y., Eds.; 2015; pp. 1–14.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- Sagi, O.; Rokach, L. Ensemble learning: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1249.

- Ganaie, M.; Hu, M.; Malik, A.; Tanveer, M.; Suganthan, P. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

- Haghanifar, A.; Majdabadi, M.M.; Haghanifar, S.; Choi, Y.; Ko, S.-B. PaXNet: Tooth segmentation and dental caries detection in panoramic X-ray using ensemble transfer learning and capsule classifier. Multimed. Tools Appl. 2023, 1–21.

- Shaik, N.S.; Cherukuri, T.K. Transfer learning based novel ensemble classifier for COVID-19 detection from chest CT-scans. Comput. Biol. Med. 2021, 141, 105127.

- Hasan, K.; Elahi, T.E.; Alam, A.; Jawad, T.; Martí, R. DermoExpert: Skin lesion classification using a hybrid convolutional neural network through segmentation, transfer learning, and augmentation. Inform. Med. Unlocked 2022, 28, 100819.

- Nanni, L.; Interlenghi, M.; Brahnam, S.; Salvatore, C.; Papa, S.; Nemni, R.; Castiglioni, I.; The Alzheimer’s Disease Neuroimaging Initiative. Comparison of Transfer Learning and Conventional Machine Learning Applied to Structural Brain MRI for the Early Diagnosis and Prognosis of Alzheimer’s Disease. Front. Neurol. 2020, 11, 576194.

- Shahabi, M.S.; Shalbaf, A.; Maghsoudi, A. Prediction of drug response in major depressive disorder using ensemble of transfer learning with convolutional neural network based on EEG. Biocybern. Biomed. Eng. 2021, 41, 946–959.

- Burton, A.; Maskarinec, G.; Perez-Gomez, B.; Vachon, C.; Miao, H.; Lajous, M.; López-Ridaura, R.; Rice, M.; Pereira, A.; Garmendia, M.L.; et al. Mammographic density and ageing: A collaborative pooled analysis of cross-sectional data from 22 countries worldwide. PLoS Med. 2017, 14, e1002335.

- Mokhtary, A.; Karakatsanis, A.; Valachis, A. Mammographic Density Changes over Time and Breast Cancer Risk: A Systematic Review and Meta-Analysis. Cancers 2021, 13, 4805.

- Vourtsis, A.; Berg, W.A. Breast density implications and supplemental screening. Eur. Radiol. 2018, 29, 1762–1777.

- Saber, A.; Sakr, M.; Abo-Seida, O.M.; Keshk, A.; Chen, H. A Novel Deep-Learning Model for Automatic Detection and Classification of Breast Cancer Using the Transfer-Learning Technique. IEEE Access 2021, 9, 71194–71209.

- Khamparia, A.; Bharati, S.; Podder, P.; Gupta, D.; Khanna, A.; Phung, T.K.; Thanh, D.N.H. Diagnosis of breast cancer based on modern mammography using hybrid transfer learning. Multidimens. Syst. Signal Process. 2021, 32, 747–765.

- Guan, S.; Loew, M. Using generative adversarial networks and transfer learning for breast cancer detection by convolutional neural networks. SPIE 2019, 10954, 109541C.

- Mendel, K.; Li, H.; Sheth, D.; Giger, M. Transfer Learning From Convolutional Neural Networks for Computer-Aided Diagnosis: A Comparison of Digital Breast Tomosynthesis and Full-Field Digital Mammography. Acad. Radiol. 2019, 26, 735–743.

- Falconi, L.G.; Perez, M.; Aguilar, W.G.; Conci, A. Transfer Learning and Fine Tuning in Breast Mammogram Abnormalities Classification on CBIS-DDSM Database. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 154–165.

- Guan, S.; Loew, M. Breast Cancer Detection Using Transfer Learning in Convolutional Neural Networks. In Proceedings of the 2017 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 10–12 October 2017; pp. 1–8.

- Yu, X.; Wang, S.-H. Abnormality Diagnosis in Mammograms by Transfer Learning Based on ResNet18. Fundam. Inform. 2019, 168, 219–230.

- Falconi, L.G.; Perez, M.; Aguilar, W.G. Transfer Learning in Breast Mammogram Abnormalities Classification With Mobilenet and Nasnet. In Proceedings of the 2019 International Conference on Systems, Signals and Image Processing (IWSSIP), Osijek, Croatia, 5–7 June 2019; pp. 109–114.

- Ansar, W.; Shahid, A.R.; Raza, B.; Dar, A.H. Breast Cancer Detection and Localization Using MobileNet Based Transfer Learning for Mammograms. In Proceedings of the Intelligent Computing Systems: Third International Symposium, ISICS 2020, Sharjah, United Arab Emirates, 18–19 March 2020; pp. 11–21.

- Jiang, F.; Liu, H.; Yu, S.; Xie, Y. Breast mass lesion classification in mammograms by transfer learning. In Proceedings of the 5th International Conference on Bioinformatics and Computational Biology, ICBCB 2017; Association for Computing Machinery: Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 6–8 January 2017; pp. 59–62.

- Mednikov, Y.; Nehemia, S.; Zheng, B.; Benzaquen, O.; Lederman, D. Transfer Representation Learning using Inception-V3 for the Detection of Masses in Mammography. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 2587–2590.

- Pattanaik, R.K.; Mishra, S.; Siddique, M.; Gopikrishna, T.; Satapathy, S. Breast Cancer Classification from Mammogram Images Using Extreme Learning Machine-Based DenseNet121 Model. J. Sens. 2022, 2022, 2731364.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- George, M.J.; Dhas, D.A.S. Preprocessing filters for mammogram images: A review. In Proceedings of the 2017 Conference on Emerging Devices and Smart Systems (ICEDSS), Piscataway, NJ, USA, 3–4 March 2017; pp. 1–7.

- George, M.J.; Sankar, S.P. Efficient preprocessing filters and mass segmentation techniques for mammogram images. In Proceedings of the 2017 IEEE International Conference on Circuits and Systems (ICCS), Thiruvananthapuram, India, 20–21 December 2017; pp. 408–413.

- Lu, H.-C.; Loh, E.-W.; Huang, S.-C. The Classification of Mammogram Using Convolutional Neural Network with Specific Image Preprocessing for Breast Cancer Detection. In Proceedings of the 2019 2nd International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 25–28 May 2019; pp. 9–12.

- Omer, A.M.; Elfadil, M. Preprocessing of Digital Mammogram Image Based on Otsu’s Threshold. Am. Sci. Res. J. Eng. Technol. Sci. 2017, 37, 220–229.

- Khairnar, S.; Thepade, S.D.; Gite, S. Effect of image binarization thresholds on breast cancer identification in mammography images using OTSU, Niblack, Burnsen, Thepade’s SBTC. Intell. Syst. Appl. 2021, 10–11, 200046.

- Lbachir, I.A.; Es-Salhi, R.; Daoudi, I.; Tallal, S. A New Mammogram Preprocessing Method for Computer-Aided Diagnosis Systems. In Proceedings of the 2017 IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Piscataway, NJ, USA, 30 October–3 November 2017; pp. 166–171.

- Radzi, S.F.M.; Karim, M.K.A.; Saripan, M.I.; Abd Rahman, M.A.; Osman, N.H.; Dalah, E.Z.; Noor, N.M. Impact of Image Contrast Enhancement on Stability of Radiomics Feature Quantification on a 2D Mammogram Radiograph. IEEE Access 2020, 8, 127720–127731.

- Kharel, N.; Alsadoon, A.; Prasad, P.W.C.; Elchouemi, A. Early diagnosis of breast cancer using contrast limited adaptive histogram equalization (CLAHE) and Morphology methods. In Proceedings of the 2017 8th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 4–6 April 2017; pp. 120–124.

- R Core Team. R: A Language and Environment for Statistical Computing. 2022. Available online: https://www.R-project.org/ (accessed on 23 March 2023).

- Ushey, K.; Allaire, J.J.; Tang, Y. Reticulate: Interface to “Python”. 2023. Available online: https://rstudio.github.io/reticulate/ (accessed on 23 March 2023).

- Mason, D. SU-E-T-33: Pydicom: An Open Source DICOM Library. Med. Phys. 2011, 38, 3493.

- Nolan, R.; Alvarez, L.A.J.; Elegheert, J.; Iliopoulou, M.; Jakobsdottir, G.M.; Rodriguez-Muñoz, M.; Aricescu, A.R.; Padilla-Parra, S. nandb—Number and brightness in R with a novel automatic detrending algorithm. Bioinformatics 2017, 33, 3508–3510.

- Pau, G.; Fuchs, F.; Sklyar, O.; Boutros, M.; Huber, W. EBImage--An R package for image processing with applications to cellular phenotypes. Bioinformatics 2010, 26, 979–981.

- Landini, G.; Randell, D.; Fouad, S.; Galton, A. Automatic thresholding from the gradients of region boundaries. J. Microsc. 2016, 265, 185–195.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Amsterdam, The Netherlands, 2016; Volume 9908, pp. 630–645. ISBN 9783319464923.

- Allaire, J.J.; Chollet, F. Keras: R Interface to “Keras”. 2022. Available online: https://github.com/rstudio/keras (accessed on 23 March 2023).

- Allaire, J.J.; Tang, Y. Tensorflow: R Interface to “TensorFlow”. 2022. Available online: https://tensorflow.rstudio.com/ (accessed on 23 March 2023).

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- Brabec, J.; Machlica, L. Bad practices in evaluation methodology relevant to class-imbalanced problems. arXiv 2018, arXiv:1812.01388.

- Saito, T.; Rehmsmeier, M. Precrec: Fast and accurate precision–recall and ROC curve calculations in R. Bioinformatics 2016, 33, 145–147.

- Hanis, T.M.; Islam, A.; Musa, K.I. Diagnostic Accuracy of Machine Learning Models on Mammography in Breast Cancer Classification: A Meta-Analysis. Diagnostics 2022, 12, 1643.

- Khan, S.; Islam, N.; Jan, Z.; Din, I.U.; Rodrigues, J.J.P.C. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit. Lett. 2019, 125, 1–6.

- Zheng, Y.; Li, C.; Zhou, X.; Chen, H.; Xu, H.; Li, Y.; Zhang, H.; Li, X.; Sun, H.; Huang, X.; et al. Application of transfer learning and ensemble learning in image-level classification for breast histopathology. Intell. Med. 2022.

- Shreffler, J.; Huecker, M.R. Diagnostic Testing Accuracy: Sensitivity, Specificity, Predictive Values and Likelihood Ratios; StatPearls Publishing: Treasure Island, FL, USA, 2022.

- Lynge, E.; Vejborg, I.; Andersen, Z.; von Euler-Chelpin, M.; Napolitano, G. Mammographic Density and Screening Sensitivity, Breast Cancer Incidence and Associated Risk Factors in Danish Breast Cancer Screening. J. Clin. Med. 2019, 8, 2021.

- Sherratt, M.J.; McConnell, J.C.; Streuli, C.H. Raised mammographic density: Causative mechanisms and biological consequences. Breast Cancer Res. 2016, 18, 45.

- Nazari, S.S.; Mukherjee, P. An overview of mammographic density and its association with breast cancer. Breast Cancer 2018, 25, 259–267.

- Løberg, M.; Lousdal, M.L.; Bretthauer, M.; Kalager, M. Benefits and harms of mammography screening. Breast Cancer Res. 2015, 17, 63.

| Study | Database | Pre-Trained Network | Performance Metrics ¹ |
|---|---|---|---|
| Pattanaik (2022) [37] | DDSM | VGG19, MobileNet, Xception, ResNet50V2, InceptionV3, InceptionResNetV2, DenseNet201, DenseNet121, DenseNet121 + ELM ² | Accuracy = 0.97; Sensitivity = 0.99; Specificity = 0.99 |
| Khamparia (2021) [27] | DDSM | AlexNet, ResNet50, MobileNet, VGG16, VGG19, MVGG16, MVGG16, ImageNet ² | Accuracy = 0.94; AUC = 0.93; Sensitivity = 0.94; Precision = 0.94; F1 score = 0.94 |
| Saber (2021) [26] | MIAS | InceptionV3, InceptionV2, ResNet, VGG16 ², VGG19, ResNet50 | Accuracy = 0.99; AUC = 1.00; Sensitivity = 0.98; Specificity = 0.99; Precision = 0.97; F1 score = 0.98 |
| Ansar (2020) [34] | DDSM, CBIS-DDSM | AlexNet, VGG16, VGG19, ResNet50, GoogLeNet, MobileNetV1 ², MobileNetV2 | Accuracy = 0.87; Sensitivity = 0.95; Precision = 0.84 |
| Falconi (2020) [30] | CBIS-DDSM | VGG16 ², VGG19, Xception, ResNet101, ResNet152, ResNet50 | Accuracy = 0.84; AUC = 0.84; F1 score = 0.85 |
| Falconi (2019) [33] | CBIS-DDSM | MobileNet, ResNet50 ², InceptionV3, NASNet | Accuracy = 0.78 |
| Guan (2019) [28] | DDSM | VGG16 ² | Accuracy = 0.92 |
| Mendel (2019) [29] | Primary data | VGG19 ² | AUC = 0.81 |
| Yu (2019) [32] | Mini-MIAS | ResNet18 ², ResNet50, ResNet101 | Accuracy = 0.96 |
| Mednikov (2018) [36] | INbreast | InceptionV3 ² | AUC = 0.91 |
| Jiang (2017) [35] | BCDR-F03 | GoogLeNet ², AlexNet | AUC = 0.88 |
| Guan (2017) [31] | MIAS, DDSM | VGG16 ² | Accuracy = 0.91; AUC = 0.96 |

| Architecture | PR-AUC (Mean, SD) | Precision (Mean, SD) | F1 Score (Mean, SD) | Youden J Index (Mean, SD) |
|---|---|---|---|---|
| MobileNets | 0.79 (0.01) | 0.79 (0.00) | 0.49 (0.07) | 0.02 (0.01) |
| MobileNetV2 | 0.79 (0.00) | 0.79 (0.01) | 0.46 (0.11) | 0.02 (0.04) |
| MobileNetV3Small | 0.80 (0.01) | 0.81 (0.02) | 0.56 (0.09) | 0.06 (0.04) |
| NASNetLarge | 0.80 (0.03) | 0.80 (0.03) | 0.68 (0.09) | 0.06 (0.09) |
| NASNetMobile | 0.79 (0.02) | 0.79 (0.02) | 0.67 (0.06) | 0.03 (0.05) |
| ResNet101 | 0.80 (0.03) | 0.79 (0.01) | 0.73 (0.08) | 0.04 (0.04) |
| ResNet101V2 | 0.81 (0.01) | 0.79 (0.01) | 0.61 (0.07) | 0.02 (0.03) |
| ResNet152 | 0.81 (0.01) | 0.81 (0.01) | 0.65 (0.04) | 0.07 (0.03) |
| ResNet152V2 | 0.80 (0.03) | 0.80 (0.03) | 0.60 (0.17) | 0.07 (0.07) |
| ResNet50 | 0.80 (0.03) | 0.78 (0.02) | 0.66 (0.08) | 0.01 (0.03) |
| ResNet50V2 | 0.80 (0.03) | 0.80 (0.01) | 0.67 (0.01) | 0.05 (0.03) |
| VGG16 | 0.79 (0.03) | 0.77 (0.04) | 0.61 (0.14) | −0.01 (0.08) |
| VGG19 | 0.78 (0.02) | 0.78 (0.01) | 0.57 (0.11) | 0.00 (0.04) |

| Model | Precision (Mean, SD) | F1 Score (Mean, SD) | Youden J Index (Mean, SD) |
|---|---|---|---|
| Ensemble model 1 | 0.81 (0.01) | 0.65 (0.01) | 0.09 (0.03) |
| Ensemble model 2 | 0.81 (0.01) | 0.66 (0.01) | 0.09 (0.04) |
| Ensemble model 3 | 0.82 (0.01) | 0.68 (0.01) | 0.12 (0.03) |
| NASNetMobile | 0.79 (0.02) | 0.67 (0.06) | 0.03 (0.05) |
| ResNet101 | 0.79 (0.01) | 0.73 (0.08) | 0.04 (0.04) |
| ResNet101V2 | 0.79 (0.01) | 0.61 (0.07) | 0.02 (0.03) |
| ResNet152 | 0.81 (0.01) | 0.65 (0.04) | 0.07 (0.03) |
| ResNet50V2 | 0.80 (0.01) | 0.67 (0.01) | 0.05 (0.03) |

| Metrics | Overall | Dense | Non-Dense |
|---|---|---|---|
| Precision | 0.82 (0.01) | 0.86 (0.01) | 0.77 (0.00) |
| F1 score | 0.68 (0.01) | 0.75 (0.01) | 0.60 (0.02) |
| Youden J Index | 0.12 (0.03) | 0.21 (0.04) | 0.03 (0.03) |
| Sensitivity | 0.58 (0.02) | 0.67 (0.01) | 0.49 (0.03) |
| Specificity | 0.54 (0.02) | 0.54 (0.03) | 0.54 (0.01) |
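Two of the metrics in the density table above are functions of the others: the F1 score is the harmonic mean of precision and sensitivity, F1 = 2PR/(P + R), and the Youden J index is sensitivity + specificity − 1, so J = 0 means the classifier is no better than chance. The minimal Python sketch below recomputes both from the reported mean precision, sensitivity, and specificity as a consistency check. It assumes only the rounded means shown in the table; because the paper's values were averaged over repeated runs, and the F1 of mean inputs need not equal the mean of per-run F1 scores, agreement is expected only to within rounding.

```python
"""Recompute F1 score and Youden J index from reported mean metrics.

Assumption: inputs are the rounded means from the density table, so the
check is approximate (the mean of per-run F1 scores need not equal the F1
computed from mean precision and sensitivity).
"""


def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall)."""
    return 2 * precision * sensitivity / (precision + sensitivity)


def youden_j(sensitivity: float, specificity: float) -> float:
    """Youden J = sensitivity + specificity - 1; 0 means chance-level."""
    return sensitivity + specificity - 1


# Mean (precision, sensitivity, specificity) copied from the table.
reported = {
    "Overall": (0.82, 0.58, 0.54),
    "Dense": (0.86, 0.67, 0.54),
    "Non-Dense": (0.77, 0.49, 0.54),
}

derived = {}
for group, (prec, sens, spec) in reported.items():
    # Round to two decimals, matching the precision used in the table.
    derived[group] = (round(f1_score(prec, sens), 2), round(youden_j(sens, spec), 2))
    f1, j = derived[group]
    print(f"{group:<10} F1 = {f1:.2f}  Youden J = {j:.2f}")
```

Running it reproduces the tabulated F1 scores (0.68, 0.75, 0.60) and Youden J indices (0.12, 0.21, 0.03), which also makes explicit that the low J values stem from specificity staying near 0.54 in every group.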

| Metrics | Dense Median (IQR) | Non-Dense Median (IQR) | W Statistic | p-Value |
|---|---|---|---|---|
| Precision | 0.86 (0.01) | 0.77 (0.00) | 9 | 0.1 |
| F1 score | 0.75 (0.01) | 0.60 (0.02) | 9 | 0.1 |
| Youden J Index | 0.22 (0.04) | 0.03 (0.03) | 9 | 0.1 |
| Sensitivity | 0.67 (0.01) | 0.49 (0.03) | 9 | 0.1 |
| Specificity | 0.55 (0.03) | 0.54 (0.01) | 6 | 0.7 |
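The W statistics and p-values in the table above are consistent with an exact two-sided Wilcoxon rank-sum (Mann–Whitney) test on three values per density group. The group size is an assumption here, since the table does not state it. Under that assumption there are only C(6, 3) = 20 equally likely rank assignments, so W = 9 (every dense value exceeds every non-dense value) is the most extreme possible outcome, and the smallest attainable two-sided p-value is 2/20 = 0.1. The sketch below enumerates the exact null distribution; it assumes no ties, takes W in its Mann–Whitney U form (the form reported by R's `wilcox.test`), and doubles the smaller tail, which is a common two-sided convention.

```python
"""Exact null distribution of the Wilcoxon rank-sum (Mann-Whitney U) statistic.

Assumptions: three values per group, no ties, and W reported as U, i.e. the
rank sum of the first group minus its minimum possible value n1*(n1+1)/2.
"""
from fractions import Fraction
from itertools import combinations


def exact_u_distribution(n1: int, n2: int) -> dict[int, int]:
    """Count the rank assignments that produce each U under the null hypothesis."""
    counts: dict[int, int] = {}
    for ranks in combinations(range(1, n1 + n2 + 1), n1):
        u = sum(ranks) - n1 * (n1 + 1) // 2
        counts[u] = counts.get(u, 0) + 1
    return counts


def two_sided_p(w: int, n1: int, n2: int) -> Fraction:
    """Exact two-sided p-value: double the smaller tail probability, capped at 1."""
    counts = exact_u_distribution(n1, n2)
    total = sum(counts.values())
    upper = sum(c for u, c in counts.items() if u >= w)
    lower = sum(c for u, c in counts.items() if u <= w)
    return min(Fraction(1), 2 * Fraction(min(upper, lower), total))


for w in (9, 6):
    print(f"W = {w}: p = {float(two_sided_p(w, 3, 3)):.2f}")
```

This yields p = 0.10 for W = 9 and p = 0.70 for W = 6, matching the table. If the groups do contain three values each, no comparison could have reached p < 0.05 however large the difference, so the non-significant p-values reflect the small number of repetitions at least as much as the size of the density effect.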

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hanis, T.M.; Ruhaiyem, N.I.R.; Arifin, W.N.; Haron, J.; Wan Abdul Rahman, W.F.; Abdullah, R.; Musa, K.I. Developing a Supplementary Diagnostic Tool for Breast Cancer Risk Estimation Using Ensemble Transfer Learning. Diagnostics 2023, 13, 1780. https://doi.org/10.3390/diagnostics13101780
