Deep versus Handcrafted Tensor Radiomics Features: Prediction of Survival in Head and Neck Cancer Using Machine Learning and Fusion Techniques

Abstract

1. Introduction

2. Methods and Materials

2.1. Dataset and Image Pre-Processing

2.2. Fusion Techniques

2.3. Handcrafted Features

2.4. Deep Features

2.5. Tensor Radiomics Paradigm

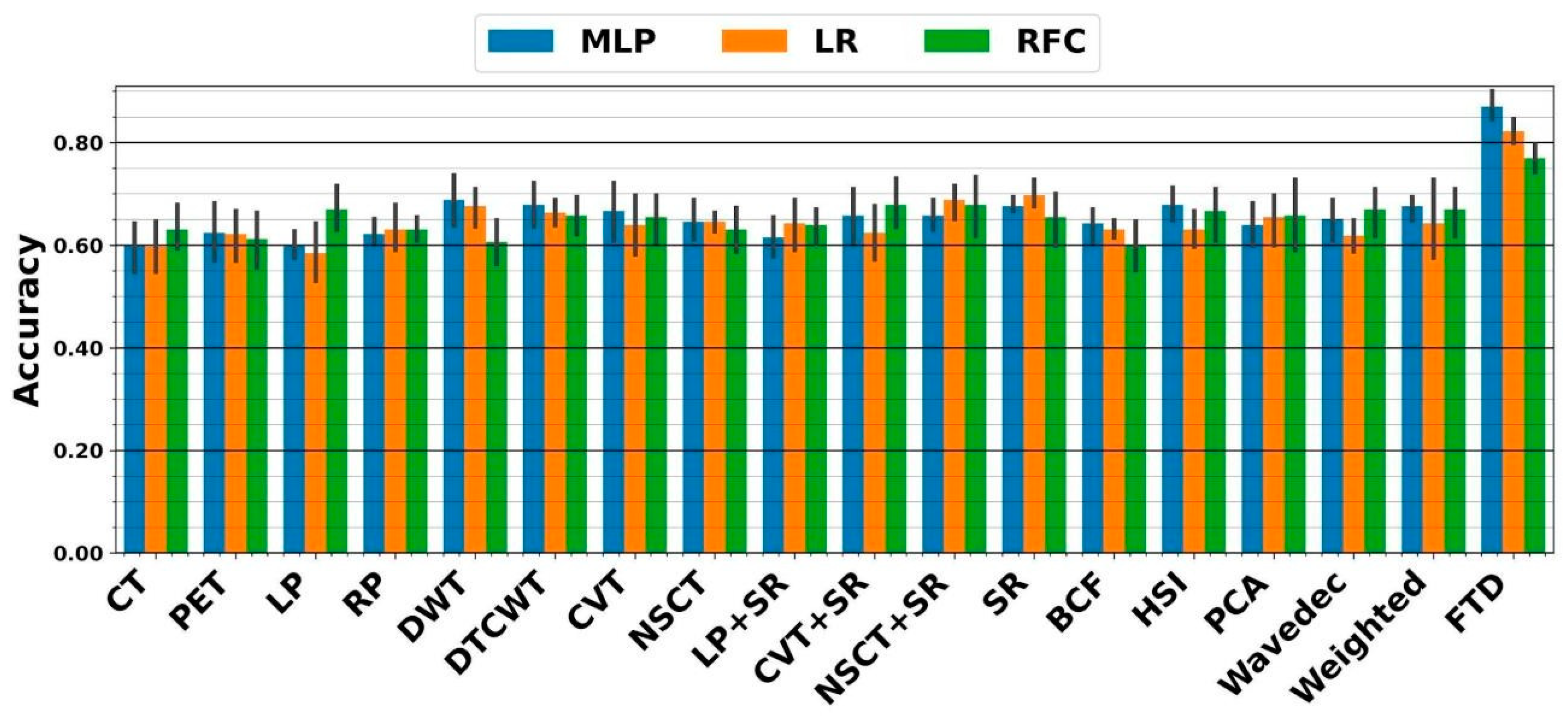

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Feature Selection Using Analysis of Variance (ANOVA)

Appendix A.2. Attribute Extraction Algorithms

Appendix A.3. Classifiers

Appendix A.3.1. Convolutional Neural Network (CNN)

Appendix A.3.2. Logistic Regression (LR)

Appendix A.3.3. Multilayer Perceptron (MLP)

Appendix A.3.4. Random Forest (RFC)

| Category | Algorithms |
|---|---|
| Fusion techniques | Laplacian Pyramid (LP), Ratio of the low-pass Pyramid (RP), Discrete Wavelet Transform (DWT), Dual-Tree Complex Wavelet Transform (DTCWT), Curvelet Transform (CVT), NonSubsampled Contourlet Transform (NSCT), Sparse Representation (SR), DTCWT + SR, CVT + SR, NSCT + SR, Bilateral Cross Filter (BCF), Wavelet Fusion, Weighted Fusion, Principal Component Analysis (PCA), and Hue, Saturation and Intensity fusion (HSI) |
| Dimension reduction algorithms | Analysis of Variance (ANOVA) and Principal Component Analysis (PCA) |
| Classifiers | Multilayer Perceptron (MLP), Random Forest (RFC), and Logistic Regression (LR) |
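ANOVA appears above as one of the dimension reduction algorithms (see also Appendix A.1). The article text available here does not show the authors' implementation, so the following is only a minimal sketch of the general technique, under stated assumptions: each radiomics feature is scored with the one-way ANOVA F statistic across outcome classes, and the k highest-scoring features are kept. The function names `anova_f` and `select_top_k`, the binary survival labels, and the toy feature matrix are hypothetical and chosen for illustration.

```python
from statistics import fmean


def anova_f(values, labels):
    """One-way ANOVA F statistic of a single feature across outcome classes."""
    groups = {}
    for v, label in zip(values, labels):
        groups.setdefault(label, []).append(v)
    n_classes, n = len(groups), len(values)
    if n_classes < 2 or n <= n_classes:
        raise ValueError("need at least 2 classes and more samples than classes")
    grand_mean = fmean(values)
    means = {label: fmean(g) for label, g in groups.items()}
    # Between-class sum of squares: how far class means sit from the grand mean.
    ss_between = sum(len(g) * (means[label] - grand_mean) ** 2
                     for label, g in groups.items())
    # Within-class sum of squares: spread of samples around their own class mean.
    ss_within = sum((v - means[label]) ** 2
                    for label, g in groups.items() for v in g)
    if ss_within == 0:
        # No within-class spread: perfectly separating feature -> inf, constant -> 0.
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (n_classes - 1)) / (ss_within / (n - n_classes))


def select_top_k(X, y, k):
    """Score every column of X with anova_f; return the k best indices and all scores."""
    n_features = len(X[0])
    scores = [anova_f([row[j] for row in X], y) for j in range(n_features)]
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return ranked[:k], scores


# Hypothetical toy data: 6 patients x 3 features, binary outcome labels.
X = [
    [1.0, 2.0, 5.0],
    [1.1, 1.0, 6.0],
    [0.9, 3.0, 5.5],
    [3.0, 2.0, 6.0],
    [3.1, 3.0, 5.0],
    [2.9, 1.0, 6.5],
]
y = [0, 0, 0, 1, 1, 1]

top, scores = select_top_k(X, y, k=2)
print(top)  # prints [0, 2]
```

Feature 0 separates the two classes cleanly (F = 600), feature 2 only weakly (F = 0.4), and feature 1 has identical class means (F = 0), so the first two are retained. scikit-learn's `SelectKBest` with `f_classif` computes the same ANOVA F statistic; the standard-library version is shown only to make the arithmetic explicit. In either case, the selector should be fitted on training folds only, so that the held-out data does not leak into feature ranking.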

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salmanpour, M.R.; Rezaeijo, S.M.; Hosseinzadeh, M.; Rahmim, A. Deep versus Handcrafted Tensor Radiomics Features: Prediction of Survival in Head and Neck Cancer Using Machine Learning and Fusion Techniques. Diagnostics 2023, 13, 1696. https://doi.org/10.3390/diagnostics13101696