1. Introduction

Colorectal cancer (CRC) is the second deadliest cancer worldwide [1]. The precise and timely diagnosis of CRC is critical for improving treatment efficacy. However, conventional CRC diagnosis requires thorough visual examinations by highly experienced endoscopists. Conventional approaches [2,3,4,5] toward CRC management include the sampling of suspicious lesions via regular colonoscopy and deciding future countermeasures via histological analysis [6,7]. Consequently, conventional CRC diagnosis requires a long time for proper examination (ranging from several days to weeks); if an abnormality is identified, it is cumbersome to repeat the secondary endoscopic examination [7,8]. Moreover, endoscopists must maintain a high level of concentration during examinations to avoid possible errors. Therefore, it is imperative to develop a reliable system for CRC analysis that can improve clinical efficiency and minimize potential errors during diagnosis.

In recent years, there has been increased interest worldwide in exploring methods for the prevention, diagnosis, and improved visualization of CRC [2,3,4,5]. Most techniques [9,10,11,12] integrate confocal laser endomicroscopy (CLE) and narrow-band imaging into the tip of a flexible endoscope to provide high-resolution endomicroscopy for real-time virtual biopsies. For example, the Cellvizio probe-based CLE has been commercialized. However, CRC diagnosis with such a system remains difficult because of the long processing time, inconsistent efficiency, physician disagreement, long learning curves, and low consistency across observers [13,14,15]. To address this issue, computer-assisted assessment that uses artificial intelligence technology has recently been explored extensively for lesion identification [5,16,17,18].

Specifically, machine learning and deep learning-based methods have been proposed [2,19,20,21]. Tamaki et al. [20] extracted image features by using the bag-of-visual-words method and then classified colorectal tumor images into three tumor types (A, B, and C3) by using a support vector machine (SVM) classifier. Zhou et al. [19] developed a dense convolutional network by using colonoscopic images to distinguish CRC from normal tissues. To compensate for the small amount of training data, Kolligs [2] and Ito et al. [2,21] adopted a deep transfer learning method that pre-trained the model with ImageNet and updated the last layer of the model with CRC data. However, even though these studies achieved high accuracy scores on classification tasks, the scores were not compared with the predictions of endoscopists, which makes it difficult to judge how challenging the underlying tasks were. Furthermore, most methods primarily focused on CRC classification, with relatively little attention paid to inflammatory bowel disease (IBD) [22]. The number of patients with IBD, which has recently established itself as a global disease, is rapidly increasing [23,24,25,26]. However, it is difficult to accurately distinguish CRC from colonic inflammation because the patterns appearing in tissue confocal microscopy images look similar [27,28,29,30]. To the best of our knowledge, no study has classified CRC and IBD by using a machine learning-based method. Therefore, this study presents a deep learning method for classifying confocal microscopy images into colorectal neoplasms, colon inflammation, and normal tissues. We collected 411 confocal microscopy images from normal, CRC, and IBD tissues that were subjected to histological analysis and then trained and tested a deep learning model to classify them in a 4-fold cross-validation setting. We then compared the performance of the proposed model with those of three machine learning-based methods that use radiomic features and with the predictions of four endoscopists.

2. Materials and Methods

2.1. Dataset

Fresh colon tissues were collected from 29 individuals who were over 18 years old, provided informed consent, and underwent elective colonoscopies at Korea University Medical Center, Anam Hospital, by following a protocol approved by the institutional review board (2019AN0051). Bright field images of the tissues were obtained using a confocal microscope (Leica TCS SP2, Leica, Solms, Germany) with a mode-locked Ti:sapphire laser source (Chameleon, Coherent Inc., Santa Clara, CA, USA) set at a wavelength of 750 nm and with a 10× dry objective with a numerical aperture of 0.30. A total of 132 ex vivo colon tissues were obtained from the 29 patients, including 68 normal colon tissues, 18 inflamed colon tissues, and 46 neoplasm colon tissues. Tissue types were determined using histological analysis, which is the gold standard for diagnosing colon tissue as tumor, inflammation, or normal. From the tissues, we acquired 411 confocal microscopy images, including 178 normal images, 173 tumor images, and 60 inflammatory images.

2.2. Assessment by Endoscopists

Four endoscopists independently evaluated each anonymized confocal microscopy image of the colon biopsy samples to determine whether it showed a neoplasm, inflammation, or normal tissue. The four endoscopists were all experts and had each performed more than 200 colonoscopies. There was no communication among the endoscopists regarding the classification.

2.3. Classification Using Machine Learning Methods

We extracted image features from the confocal microscopy images and utilized them as inputs for the three machine learning methods. Specifically, the PyRadiomics toolbox [31] was used to extract radiomic features [32] from the collected colon images. We extracted first-order statistical features that describe the distribution of individual pixel values, as well as second-order statistical features (also called textural features) and higher-order statistical features obtained by applying statistical methods after filters or mathematical transforms were applied to the images [33]. Among the extracted features, we selected the important ones to exclude information that is uninformative for classification. To determine which features are fundamental to classification, we computed importance scores by using the scikit-learn machine learning library. By referring to the importance scores, we empirically selected the top 94 features and used them for classification. Features related to gray-level intensity and texture, such as gray-level non-uniformity, short-run emphasis, and zone percentage, were selected more often than first-order features. By using the selected radiomic features, we trained three classification models: random forest (RF) [34], SVM [35], and extreme gradient boost (XGB) [36].
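As a rough illustration of the two ideas in this subsection, the sketch below computes a few first-order statistics from raw pixel values and then keeps the top-ranked features by importance score. It is a minimal standard-library sketch, not the pipeline used in the study: the function names `first_order_features` and `select_top_k` are hypothetical, the importance scores are made-up numbers, and PyRadiomics applies its own binning and definitions that may differ from these simplified formulas.

```python
import math
from collections import Counter


def first_order_features(pixels):
    """Return simple first-order statistics of a flat list of gray levels."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    energy = sum(p * p for p in pixels)
    # Shannon entropy (base 2) of the gray-level histogram.
    counts = Counter(pixels)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {"mean": mean, "variance": variance, "energy": energy, "entropy": entropy}


def select_top_k(importance, k):
    """Keep the k feature names with the highest importance scores."""
    ranked = sorted(importance, key=importance.get, reverse=True)
    return ranked[:k]


patch = [0, 0, 1, 1, 2, 2, 3, 3]  # toy 8-pixel "image", not real data
features = first_order_features(patch)

# Made-up importance scores purely for illustration.
scores = {"mean": 0.10, "variance": 0.40, "energy": 0.05, "entropy": 0.45}
selected = select_top_k(scores, k=2)
```

In the study itself the same ranking idea was applied with k = 94 over the full radiomic feature set, with scores obtained from scikit-learn.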

2.4. Classification Using Deep Convolutional Neural Networks

Unlike the machine learning methods that use hand-crafted features, deep convolutional neural networks can learn the relationship between images and labels in an end-to-end manner. We trained a deep learning model by using the residual network [37], which is known to achieve high performance in various image classification tasks. We used data augmentation and transfer learning to handle the lack of annotated colon data. Specifically, we sampled 20 images during each mini-batch training and transformed them with random horizontal and vertical flips and random rotations between −180° and 180°. Data augmentation was also performed on machine learning-based methods for fair comparisons. For transfer learning, we used the ImageNet pre-trained model and then froze the model except for the last layer. We additionally trained the model and updated the last layer with confocal colorectal images.
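The augmentation step described above can be sketched as follows. This is a minimal standard-library illustration on a 2D list of pixel values, not the PyTorch code used in the study; the helper names (`hflip`, `vflip`, `rotate`, `random_augment`), the 0.5 flip probability, the nearest-neighbour sampling, and the zero fill for uncovered pixels are all assumptions, since the text does not specify them.

```python
import math
import random


def hflip(img):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in img]


def vflip(img):
    """Reverse the row order (up-down flip)."""
    return [row[:] for row in img[::-1]]


def rotate(img, degrees):
    """Rotate about the image centre; nearest-neighbour sampling, zero fill."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            y, x = r - cy, c - cx
            # Inverse mapping: locate the source pixel that lands on (r, c).
            src_c = int(round(cx + x * cos_t + y * sin_t))
            src_r = int(round(cy - x * sin_t + y * cos_t))
            if 0 <= src_r < h and 0 <= src_c < w:
                out[r][c] = img[src_r][src_c]
    return out


def random_augment(img, rng):
    """Apply random flips (assumed probability 0.5) and a rotation in [-180, 180]."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    return rotate(img, rng.uniform(-180.0, 180.0))


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # toy 3 x 3 image
augmented = random_augment(image, random.Random(0))
```

Because a fresh random transform is drawn every time an image is sampled, the network rarely sees exactly the same input twice, which is the point of augmenting a small dataset.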

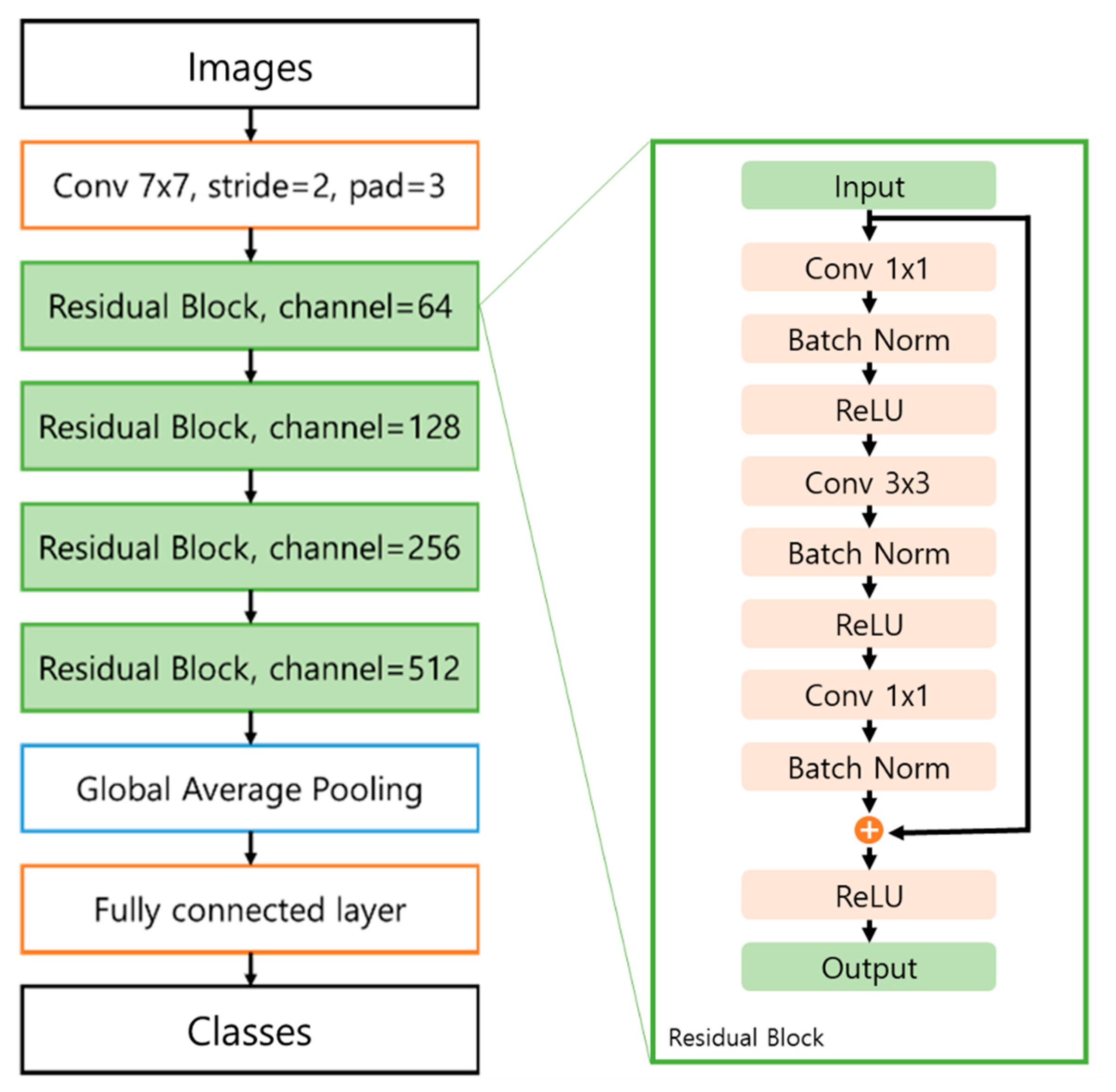

The residual network consists of four residual blocks with skip connections, followed by a global average pooling (GAP) layer and a fully connected layer (Figure 1). Convolutional layers and max pooling layers were used to extract informative features from images and prominent signals from the feature maps. We used 3 × 3 filters for each convolution layer and max pooling layer and used batch normalization after each convolution layer. To extract the prominent signals, the pooling layer downsamples the image to a smaller size. As the pooling and convolution layers reduced the image size, the number of channels doubled stepwise from 64 to 512. A rectified linear unit (ReLU) [38], which alleviates the vanishing gradient problem, was used as the activation function for all the convolution layers. The ResNet50 model applied the GAP [39] layer at the end of the model, which can conserve location information. The conserved location information can then be used to visualize the influential area in the testing stage.

To train the proposed model, the mini-batch size was fixed to 20. The error between the label and prediction was calculated using cross-entropy with the softmax function. The model was optimized by the ADAM optimizer [41]. The learning rate was fixed to 0.001 with a total of 100 epochs. Experiments were performed using a PC equipped with an Intel i7-8700K 3.7 GHz CPU, an NVIDIA GTX 1080 Ti GPU, and 64 GB of RAM, and the algorithms were implemented in PyTorch [42].
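For readers unfamiliar with the loss named above, the following standard-library sketch shows cross-entropy computed on softmax outputs for a three-class problem. The function names are hypothetical, and averaging the loss over the mini-batch is an assumption; the study used the PyTorch implementation, whose details are not given in the text.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[label])


def batch_loss(batch_logits, labels):
    """Mean cross-entropy over a mini-batch (averaging is an assumption)."""
    losses = [cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


# Toy scores for the classes (normal, tumor, inflammation); not real outputs.
uninformed = cross_entropy([0.0, 0.0, 0.0], label=1)
confident_right = cross_entropy([10.0, 0.0, 0.0], label=0)
confident_wrong = cross_entropy([10.0, 0.0, 0.0], label=1)
```

A model that assigns equal scores to all three classes incurs a loss of ln 3, while a confident wrong prediction is penalized far more heavily than a confident correct one.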

During the testing stage, the proposed model computes the prediction score from a given colorectal image. We employed class activation maps (CAMs) [43], which highlight the image regions that have a significant influence on the classification. To construct the CAM, we retrieved the GAP layer of the trained network that conserved the location information. By using the location information, we obtained a map of the most salient features used in classifying the image pixels as neoplasms.
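Following the formulation of Zhou et al. [43], the CAM for a class is the sum of the final convolutional feature maps, each weighted by the fully connected weight that links its pooled value to that class. The sketch below illustrates this on toy numbers with the standard library only; the function names, feature maps, and weights are hypothetical and the bias term is ignored.

```python
def global_average_pool(feature_maps):
    """Average each H x W feature map down to a single value."""
    return [
        sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]


def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps: cam[r][c] = sum_k w_k * f_k[r][c]."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for fmap, weight in zip(feature_maps, class_weights):
        for r in range(h):
            for c in range(w):
                cam[r][c] += weight * fmap[r][c]
    return cam


# Two toy 2 x 2 feature maps and the weights of one class (made-up values).
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, -1.0]

cam = class_activation_map(feature_maps, class_weights)
pooled = global_average_pool(feature_maps)
score = sum(wk * pk for wk, pk in zip(class_weights, pooled))
cam_mean = sum(sum(row) for row in cam) / 4
```

The spatial mean of the CAM equals the class score computed from the pooled features, which is why the map can be read as a per-location breakdown of the prediction.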

2.5. Evaluation Settings

For evaluation, we performed 4-fold cross-validation by dividing 411 images into 4 sets (namely, 102, 103, 103, and 103), and each set had an equal distribution of the 3 classes. We used 3 of the 4 sets as training data and 1 set as test data, and the aforementioned process was repeated for every fold to obtain the prediction scores of all 411 images. A similar procedure was repeated for the machine learning-based methods with the same data splits.
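One way to obtain such a split is sketched below with the standard library. The function name `stratified_folds`, the seed, and the dealing strategy are assumptions, as the text does not describe how the folds were generated; the class counts are those reported in Section 2.1, and the split is made at the image level.

```python
import random


def stratified_folds(labels, n_folds, seed=0):
    """Deal the indices of each class round-robin into n_folds lists."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    folds = [[] for _ in range(n_folds)]
    position = 0  # continue where the previous class stopped to keep sizes even
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        for index in indices:
            folds[position % n_folds].append(index)
            position += 1
    return folds


labels = ["normal"] * 178 + ["tumor"] * 173 + ["inflammation"] * 60
folds = stratified_folds(labels, n_folds=4)
fold_sizes = sorted(len(fold) for fold in folds)

# Each fold serves once as the test set; the other three form the training set.
splits = []
for k, test_indices in enumerate(folds):
    train_indices = [i for j, fold in enumerate(folds) if j != k for i in fold]
    splits.append((train_indices, test_indices))
```

With these class counts the folds contain 102 or 103 images each, and every image appears in exactly one test set, so predictions are obtained for all 411 images.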

We evaluated the performance in terms of accuracy, precision, recall, F1-score, false positive rate (FPR), and false negative rate (FNR). Moreover, to demonstrate the effectiveness of the proposed method, we compared its performance to the assessments of 4 endoscopists for all 411 images. We computed confusion matrices to check for inter-observer variability among the assessments of the four endoscopists and highlighted the efficacy of the proposed method over the assessments. To confirm the robustness of the proposed method, we investigated the prediction trends by evaluating the FPR and FNR.
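The metrics listed above can be derived from a confusion matrix by treating each class in turn as the positive class. The sketch below uses the standard library and a toy matrix that is not taken from the study; the function names are hypothetical, and whether the reported scores were macro-averaged over classes is not stated in the text.

```python
def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total


def per_class_metrics(confusion, k):
    """One-vs-rest metrics for class k; confusion[i][j] = true i, predicted j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(confusion[i][k] for i in range(n)) - tp
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    fnr = fn / (fn + tp) if fn + tp else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr, "fnr": fnr}


# Toy confusion matrix (rows: true normal, tumor, inflammation); not real results.
toy = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 2, 8],
]
overall = accuracy(toy)
normal = per_class_metrics(toy, 0)
```

Note that FNR is simply 1 − recall, so a low FNR for the tumor class means few neoplasms are missed, while a low FPR means few healthy or inflamed tissues are flagged as neoplasms.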

3. Results

3.1. Performance of Predictions by the Endoscopists

Table 1 and Table 2 show the classification scores of the four endoscopists. The accuracy scores of the four endoscopists were 0.51, 0.62, 0.59, and 0.64. The average accuracy of the four endoscopists on normal images was 72% even though 14% of images were misinterpreted as tumors and inflammation. They predicted 55% of tumor images well, but 38% were incorrectly predicted as inflammation. For inflammation images, 34% were well predicted, but 53% of images were incorrectly classified as tumors.

3.2. Comparison of Learning-Based Methods

Table 3 and Table 4 present the 4-fold cross-validation performance of the three machine learning-based approaches and the proposed deep learning-based method. Among the machine learning-based methods, XGB achieved the highest score in all performance metrics. The performance difference between RF and SVM was insignificant, but SVM performed the worst in terms of accuracy, precision, and F1-score. The proposed method achieved the best results and outperformed all the machine learning-based methods. The proposed method achieved an accuracy of 81.73% in categorizing confocal microscopy images of colon biopsy samples into three categories. Compared with the machine learning-based methods, this corresponds to an average improvement of +9.21% in accuracy. Moreover, the proposed method obtained the lowest FPR and FNR (lower FPR and lower FNR are better).

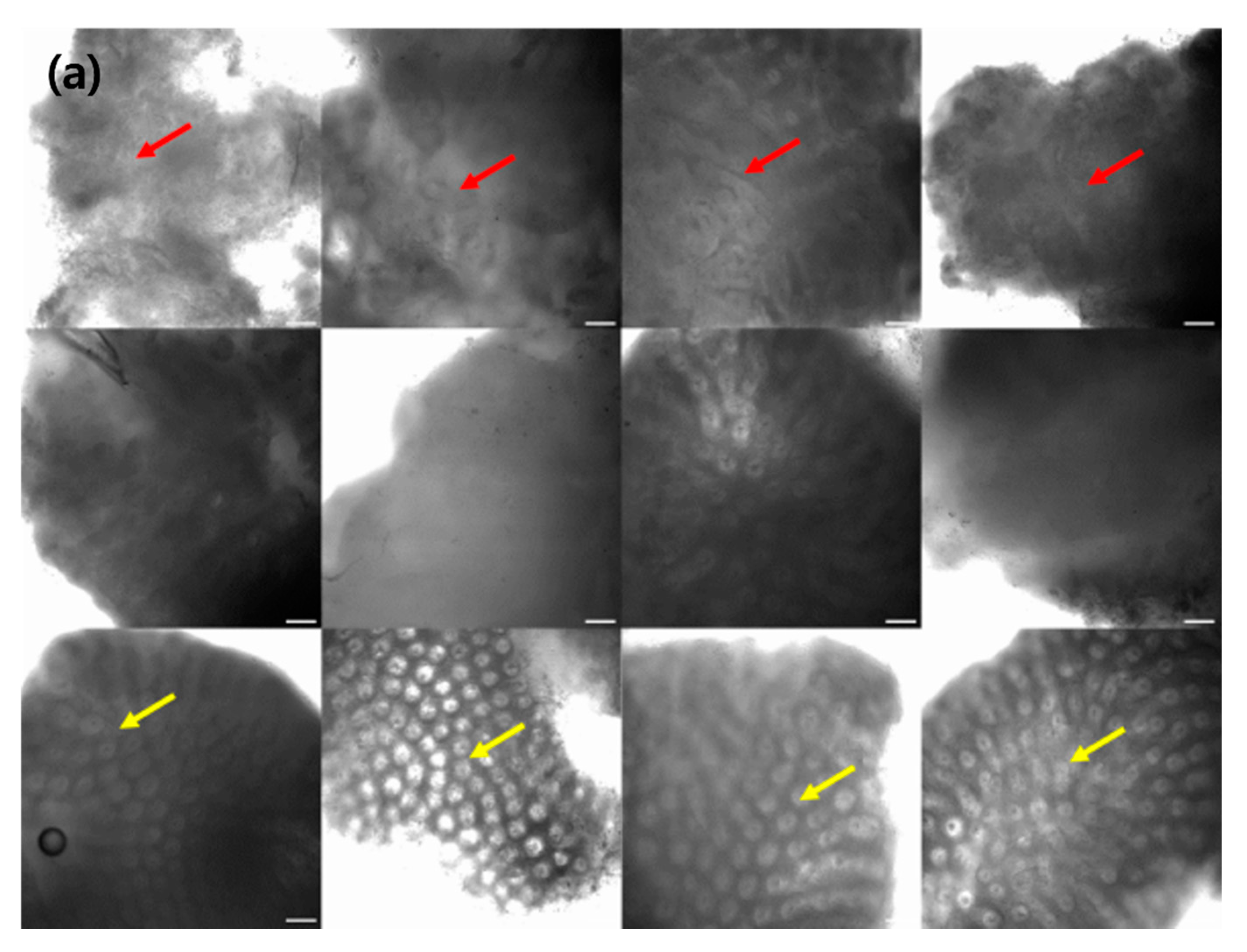

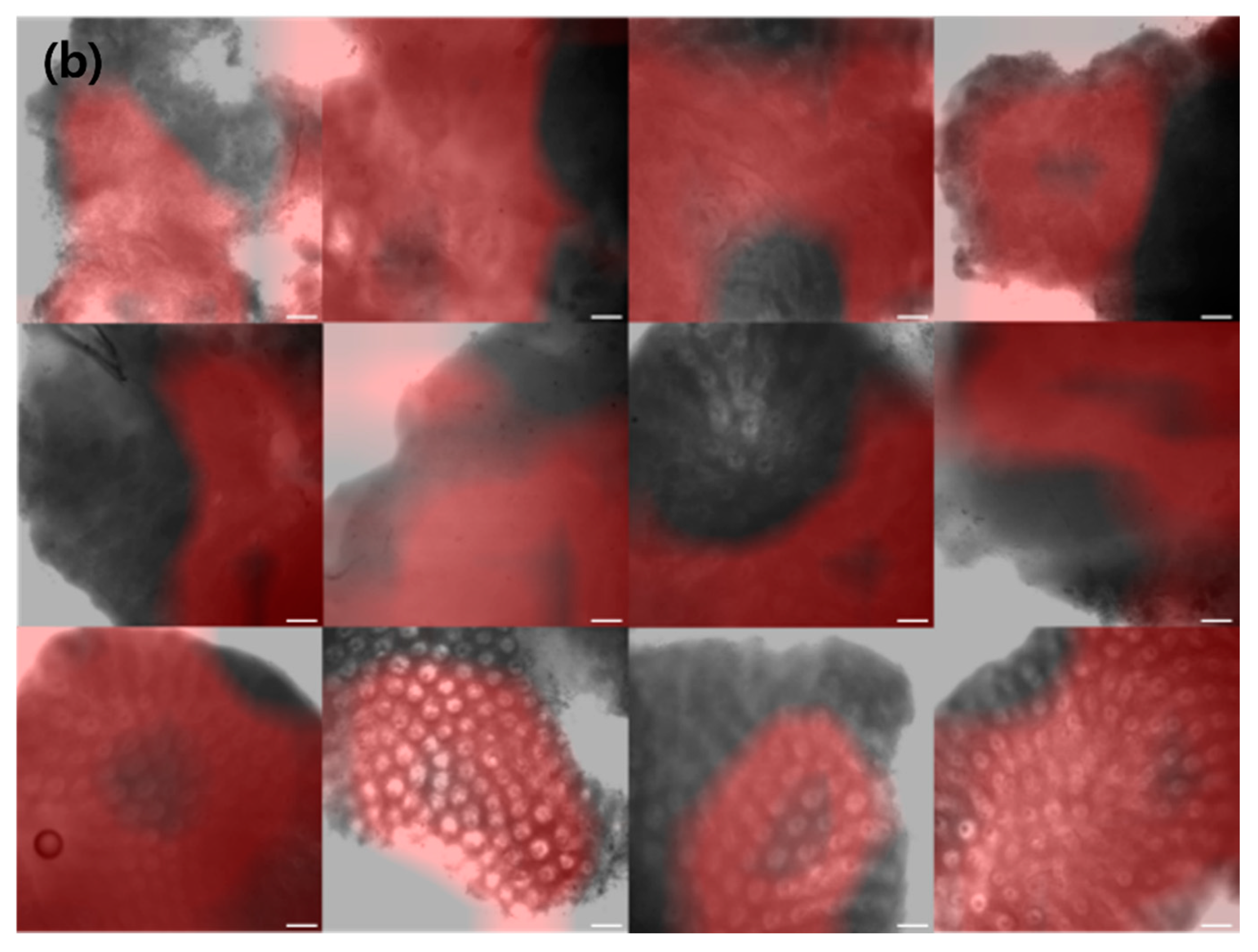

To assist endoscopists in the clinical diagnosis of CRC, we employed a CAM, which activates the regions that are crucial for CRC categorization in a confocal microscopy image. Figure 2 depicts various examples of normal, tumor, and inflammation-activated regions using CAMs. A healthy normal colon mucosa is characterized by dark goblet cells, regular and narrow vessels surrounding crypts, and a round crypt structure [44], whereas inflammation of the colon mucosa is characterized by variations in the shape, size, and distribution of crypts, increased distance between crypts, focal crypt distribution, mild-to-moderate increase in capillaries, and dilated and distorted crypts [45,46,47]. Neoplasm colon mucosa is characterized by a ridge-lined irregular epithelial layer with the loss of crypts and goblet cells, irregular cell architecture with little or no mucin, dilated and distorted vessels with increased leakage, irregular architecture with little or no orientation to adjunct tissue, disorganized villiform or lack of structure, dark and irregularly thickened epithelium, and dilated vessels [14,48]. Our CAM results show that the characteristic areas of the neoplasm, inflammation, and normal tissue classes are well activated (Figure 2); for example, the regular and round crypt structures of normal tissue, the dispersed crypt structure of inflammation, and the irregular cell architecture of neoplasms.

4. Discussion

This study demonstrated the efficacy of the proposed model by comparing it with the performance of three machine learning methods and the predictions of four endoscopists. The proposed method provides a significant performance improvement compared with the XGB, SVM, and RF methods. Machine learning-based methods frequently misclassify normal and tumor classes, which may lead to incorrect treatment for the patients. In contrast, the proposed deep learning-based method obtained 12% and 17% better accuracy rates for classifying normal and tumor classes in images, respectively. The proposed method also produced better results than the evaluations of the endoscopists in terms of accuracy, precision, recall, and F1-score by +22.60%, +25.14%, +22.26%, and +24.15%, respectively. There was a high degree of inter-observer variability among the decisions of the endoscopists, particularly in differentiating between inflammation and neoplasm classes. For example, endoscopists A and C were inclined to predict neoplasm as inflammation, whereas endoscopists B and D were inclined to predict inflammation as neoplasm. Therefore, the classification evaluations of the endoscopists indicate a significant bias and low accuracy in neoplasm–inflammation classification. On the other hand, the proposed method obtained consistent performance in the classification of neoplasm and inflammation. Based on this evaluation, we expect that the proposed method can perform reliably when employed in the clinical workflow for diagnosing neoplasms in colonoscopies.

In the literature, prior studies [49,50] have shown 93.1% and 89.1% accuracy rates for colon classification. However, these techniques only consider binary classification tasks. Our method achieved an accuracy of 81.7% for the three-class classification task, which includes the difficult task of distinguishing neoplasm and inflammation classes. In the binary classification problem that classifies CRC and normal tissue, our method can obtain an accuracy of 95.1%.

In medical settings, diagnosis is dependent on the subjective opinions of analysts and often shows a significant discrepancy between experienced and novice endoscopists. Our results suggest that the proposed deep learning-based method may assist novice endoscopists in confusing situations, particularly in tumor-inflammation classification. Furthermore, the proposed method can accelerate the analysis. In particular, our proposed method predicts the confocal image class with higher accuracy in less than 1 s, whereas endoscopists often require 5–10 s to diagnose a single confocal image. Moreover, CAM visualization can help novice endoscopists, who tend to be less reliable and slower when interpreting confocal images.

Despite the significant performance of the proposed method across various settings, we highlight some shortcomings and constraints that require careful consideration. First, although data augmentation and transfer learning methods were used, the annotated colon dataset was insufficient in our experiments. Additionally, the current work is limited to a single-domain study. In the future, we would like to expand our model to address more general tissue images taken from various hospitals. Since the lens and laser specifications of commercially available confocal microscopes differ between hospitals, there may be differences in the images. However, the commercial confocal microscope used in this study is not highly specialized equipment, and we expect the model to generalize if more images from various hospitals can be used in the training stage. We will verify the performance of the proposed method on a large amount of data obtained from more sites. Second, the images used in our experiments were acquired using a microscope instead of a probe-based CLE. In the future, we plan to create a system that can assist decision making while viewing the CLE in real time.

5. Conclusions

We proposed a colorectal neoplasm classification system that uses a deep learning model with data augmentation and transfer learning to effectively identify neoplasm, inflammation, and normal tissue in confocal microscopy images. The proposed method outperforms the classification accuracy of experienced endoscopists, as well as the accuracy of the three machine learning-based methods. We expect that the proposed deep learning-based method is feasible and capable of assisting endoscopists in decision making with high precision for colorectal neoplasm classification.

Author Contributions

Conceptualization, J.J., S.T.H., E.S.K. and S.H.P.; methodology, J.J. and S.H.P.; formal analysis, J.J., S.T.H., E.S.K. and S.H.P.; writing—review and editing, J.J., S.T.H., I.U. and S.H.P.; data curation, E.S.K.; software, J.J.; funding acquisition, E.S.K. and S.H.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Grant of Artificial Intelligence Bio-Robot Medical Convergence Technology funded by the Ministry of Trade, Industry and Energy, the Ministry of Science and ICT, and the Ministry of Health and Welfare (No. 20001533).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of Korea University Medical Center, Anam Hospital (protocol code 2019AN0051 and date of approval was 31 January 2019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Rawla, P.; Sunkara, T.; Barsouk, A. Epidemiology of colorectal cancer: Incidence, mortality, survival, and risk factors. Prz. Gastroenterol. 2019, 14, 89–103.

2. Kolligs, F.T. Diagnostics and epidemiology of colorectal cancer. Visc. Med. 2016, 32, 158–164.

3. Mahasneh, A.; Al-Shaheri, F.; Jamal, E. Molecular biomarkers for an early diagnosis, effective treatment and prognosis of colorectal cancer: Current updates. Exp. Mol. Pathol. 2017, 102, 475–483.

4. López, P.J.T.; Albero, J.S.; Rodríguez-Montes, J.A. Primary and secondary prevention of colorectal cancer. Clin. Med. Insights Gastroenterol. 2014, 7, 33–46.

5. Mitsala, A.; Tsalikidis, C.; Pitiakoudis, M.; Simopoulos, C.; Tsaroucha, A.K. Artificial Intelligence in Colorectal Cancer Screening, Diagnosis and Treatment. A New Era. Curr. Oncol. 2021, 28, 1581–1607.

6. Lieberman, D.A.; Rex, D.K.; Winawer, S.J.; Giardiello, F.M.; Johnson, D.A.; Levin, T.R. Guidelines for colonoscopy surveillance after screening and polypectomy: A consensus update by the US Multi-Society Task Force on Colorectal Cancer. Gastroenterology 2012, 143, 844–857.

7. ASGE Standards of Practice Committee; Fisher, D.A.; Shergill, A.K.; Early, D.S.; Acosta, R.D.; Chandrasekhara, V.; Chathadi, K.V.; Decker, G.A.; Evans, J.A.; Fanelli, R.D.; et al. Role of endoscopy in the staging and management of colorectal cancer. Gastrointest. Endosc. 2013, 78, 8–12.

8. Janssen, R.M.; Takach, O.; Nap-Hill, E.; Enns, R.A. Time to endoscopy in patients with colorectal cancer: Analysis of wait-times. Can. J. Gastroenterol. Hepatol. 2016, 2016, 8714587.

9. Nguyen, N.Q.; Leong, R.W. Current application of confocal endomicroscopy in gastrointestinal disorders. J. Gastroenterol. Hepatol. 2008, 23, 1483–1491.

10. Tsuji, S.; Takeda, Y.; Tsuji, K.; Yoshida, N.; Takemura, K.; Yamada, S.; Doyama, H. Clinical outcomes of the “resect and discard” strategy using magnifying narrow-band imaging for small (<10 mm) colorectal polyps. Endosc. Int. Open 2018, 6, E1382–E1389.

11. Rangrez, M.; Bussau, L.; Ifrit, K.; Preul, M.C.; Delaney, P. Fluorescence In Vivo Endomicroscopy Part 2: Applications of High-Resolution, 3-Dimensional Confocal Laser Endomicroscopy. MTO 2021, 29, 14–26.

12. Popa, P.; Streba, C.T.; Caliţă, M.; Iovănescu, V.F.; Florescu, D.N.; Ungureanu, B.S.; Stănculescu, A.D.; Ciurea, R.N.; Oancea, C.N.; Georgescu, D.; et al. Value of endoscopy with narrow-band imaging and probe-based confocal laser endomicroscopy in the diagnosis of preneoplastic lesions of gastrointestinal tract. Rom. J. Morphol. Embryol. 2020, 61, 759.

13. Gómez, V.; Shahid, M.W.; Krishna, M.; Heckman, M.G.; Crook, J.E.; Wallace, M.B. Classification criteria for advanced adenomas of the colon by using probe-based confocal laser endomicroscopy: A preliminary study. Dis. Colon Rectum 2013, 56, 967–973.

14. Shahid, M.W.; Buchner, A.M.; Coron, E.; Woodward, T.A.; Raimondo, M.; Dekker, E.; Fockens, P.; Wallace, M.B. Diagnostic accuracy of probe-based confocal laser endomicroscopy in detecting residual colorectal neoplasia after EMR: A prospective study. Gastrointest. Endosc. 2012, 75, 525–533.

15. Wang, K.K.; Carr-Locke, D.L.; Singh, S.K.; Neumann, H.; Bertani, H.; Galmiche, J.P.; Arsenescu, R.I.; Caillol, F.; Chang, K.J.; Chaussade, S. Use of probe-based confocal laser endomicroscopy (pCLE) in gastrointestinal applications. A consensus report based on clinical evidence. United Eur. Gastroenterol. J. 2015, 3, 230–254.

16. Taunk, P.; Atkinson, C.D.; Lichtenstein, D.; Rodriguez-Diaz, E.; Singh, S.K. Computer-assisted assessment of colonic polyp histopathology using probe-based confocal laser endomicroscopy. Int. J. Colorectal. Dis. 2019, 34, 2043–2051.

17. Nogueira-Rodríguez, A.; Domínguez-Carbajales, R.; López-Fernández, H.; Iglesias, A.; Cubiella, J.; Fdez-Riverola, F.; Reboiro-Jato, M.; Glez-Peña, D. Deep neural networks approaches for detecting and classifying colorectal polyps. Neurocomputing 2021, 423, 721–734.

18. Ozawa, T.; Ishihara, S.; Fujishiro, M.; Kumagai, Y.; Shichijo, S.; Tada, T. Automated endoscopic detection and classification of colorectal polyps using convolutional neural networks. Therap. Adv. Gastroenterol. 2020, 13.

19. Zhou, D.; Tian, F.; Tian, X.; Sun, L.; Huang, X.; Zhao, F.; Zhou, N.; Chen, Z.; Zhang, Q.; Yang, M. Diagnostic evaluation of a deep learning model for optical diagnosis of colorectal cancer. Nat. Commun. 2020, 11, 2961.

20. Tamaki, T.; Yoshimuta, J.; Kawakami, M.; Raytchev, B.; Kaneda, K.; Yoshida, S.; Takemura, Y.; Onji, K.; Miyaki, R.; Tanaka, S. Computer-aided colorectal tumor classification in NBI endoscopy using local features. Med. Image. Anal. 2013, 17, 78–100.

21. Ito, N.; Kawahira, H.; Nakashima, H.; Uesato, M.; Miyauchi, H.; Matsubara, H. Endoscopic diagnostic support system for cT1b colorectal cancer using deep learning. Oncology 2019, 96, 44–50.

22. Gubatan, J.; Levitte, S.; Patel, A.; Balabanis, T.; Wei, M.T.; Sinha, S.R. Artificial intelligence applications in inflammatory bowel disease: Emerging technologies and future directions. World J. Gastroenterol. 2021, 27, 1920–1935.

23. Maeda, Y.; Kudo, S.E.; Mori, Y.; Misawa, M.; Ogata, N.; Sasanuma, S.; Wakamura, K.; Oda, M.; Mori, K.; Ohtsuka, K. Fully automated diagnostic system with artificial intelligence using endocytoscopy to identify the presence of histologic inflammation associated with ulcerative colitis (with video). Gastrointest. Endosc. 2019, 89, 408–415.

24. Chen, G.; Shen, J. Artificial Intelligence Enhances Studies on Inflammatory Bowel Disease. Front. Bioeng. Biotechnol. 2021, 9, 635764.

25. Sundaram, S.; Choden, T.; Mattar, M.C.; Desai, S.; Desai, M. Artificial intelligence in inflammatory bowel disease endoscopy: Current landscape and the road ahead. Ther. Adv. Gastrointest. Endosc. 2021, 14.

26. Quénéhervé, L.; David, G.; Bourreille, A.; Hardouin, J.B.; Rahmi, G.; Neunlist, M.; Brégeon, J.; Coron, E. Quantitative assessment of mucosal architecture using computer-based analysis of confocal laser endomicroscopy in inflammatory bowel diseases. Gastrointest. Endosc. 2019, 89, 626–636.

27. Kim, E.R.; Chang, D.K. Colorectal cancer in inflammatory bowel disease: The risk, pathogenesis, prevention and diagnosis. World J. Gastroenterol. 2014, 20, 9872–9881.

28. Lukas, M. Inflammatory bowel disease as a risk factor for colorectal cancer. Dig. Dis. 2010, 28, 619–624.

29. Ananthakrishnan, A.N.; Cagan, A.; Cai, T.; Gainer, V.S.; Shaw, S.Y.; Churchill, S.; Karlson, E.W.; Murphy, S.N.; Kohane, I.; Liao, K.P. Colonoscopy is associated with a reduced risk for colon cancer and mortality in patients with inflammatory bowel diseases. Clin. Gastroenterol. Hepatol. 2015, 13, 322–329.e1.

30. Schmitt, M.; Greten, F.R. The inflammatory pathogenesis of colorectal cancer. Nat. Rev. Immunol. 2021, 21, 653–667.

31. Van Griethuysen, J.J.M.; Fedorov, A.; Parmar, C.; Hosny, A.; Aucoin, N.; Narayan, V.; Beets-Tan, R.G.H.; Fillion-Robin, J.C.; Pieper, S.; Aerts, H.J.W.L. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017, 77, e104–e107.

32. Gillies, R.J.; Kinahan, P.E.; Hricak, H. Radiomics: Images are more than pictures, they are data. Radiology 2016, 278, 563–577.

33. Rizzo, S.; Botta, F.; Raimondi, S.; Origgi, D.; Fanciullo, C.; Morganti, A.G.; Bellomi, M. Radiomics: The facts and the challenges of image analysis. Eur. Radiol. Exp. 2018, 2, 1–8.

34. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

35. Hearst, M.A.; Dumais, S.T.; Osman, E.; Platt, J.C.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. 1998, 13, 18–28.

36. Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

38. Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

39. Lin, M.; Chen, Q.; Yan, S. Network in network. In Proceedings of the 2nd International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

40. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 448–456.

41. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

42. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural. Inf. Process. Syst. 2019, 32, 8026–8037.

43. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

44. Ştefănescu, D.; Streba, C.; Cârţână, E.T.; Săftoiu, A.; Gruionu, G.; Gruionu, L.G. Computer aided diagnosis for confocal laser endomicroscopy in advanced colorectal adenocarcinoma. PLoS ONE 2016, 11, e0154863.

45. Kiesslich, R.; Goetz, M.; Lammersdorf, K.; Schneider, C.; Burg, J.; Stolte, M.; Vieth, M.; Nafe, B.; Galle, P.R.; Neurath, M.F. Chromoscopy-guided endomicroscopy increases the diagnostic yield of intraepithelial neoplasia in ulcerative colitis. Gastroenterology 2007, 132, 874–882.

46. Kiesslich, R.; Duckworth, C.; Moussata, D.; Gloeckner, A.; Lim, L.G.; Goetz, M.; Pritchard, D.M.; Galle, P.R.; Neurath, M.F.; Watson, A.J.M. Local barrier dysfunction identified by confocal laser endomicroscopy predicts relapse in inflammatory bowel disease. Gut 2012, 61, 1146–1153.

47. Neumann, H.; Vieth, M.; Atreya, R.; Grauer, M.; Siebler, J.; Bernatik, T.; Neurath, M.F.; Mudter, J. Assessment of Crohn’s disease activity by confocal laser endomicroscopy. Inflamm. Bowel. Dis. 2012, 18, 2261–2269.

48. Wallace, M.; Lauwers, G.Y.; Chen, Y.; Dekker, E.; Fockens, P.; Sharma, P.; Meining, A. Miami classification for probe-based confocal laser endomicroscopy. Endoscopy 2011, 43, 882–891.

49. Xu, F.; Qin, Y.; He, W.; Huang, G.; Lv, J.; Xie, X.; Diao, C.; Tang, F.; Jiang, L.; Lan, R. A deep transfer learning framework for the automated assessment of corneal inflammation on in vivo confocal microscopy images. PLoS ONE 2021, 16, e0252653.

50. Gessert, N.; Wittig, L.; Drömann, D.; Keck, T.; Schlaefer, A.; Ellebrecht, D. Feasibility of colon cancer detection in confocal laser microscopy images using convolution neural networks. In Bildverarbeitung für die Medizin 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 327–332.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).