Employing Energy and Statistical Features for Automatic Diagnosis of Voice Disorders

Abstract

1. Introduction

2. Materials and Methods

2.1. Voice Disorder Databases

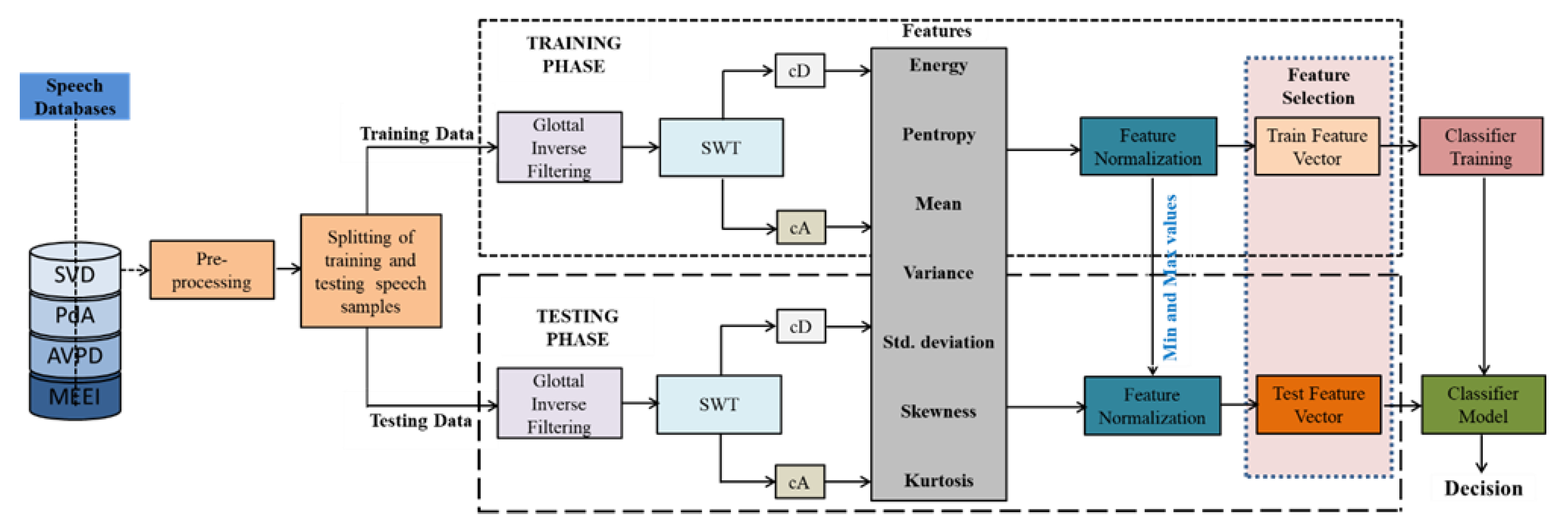

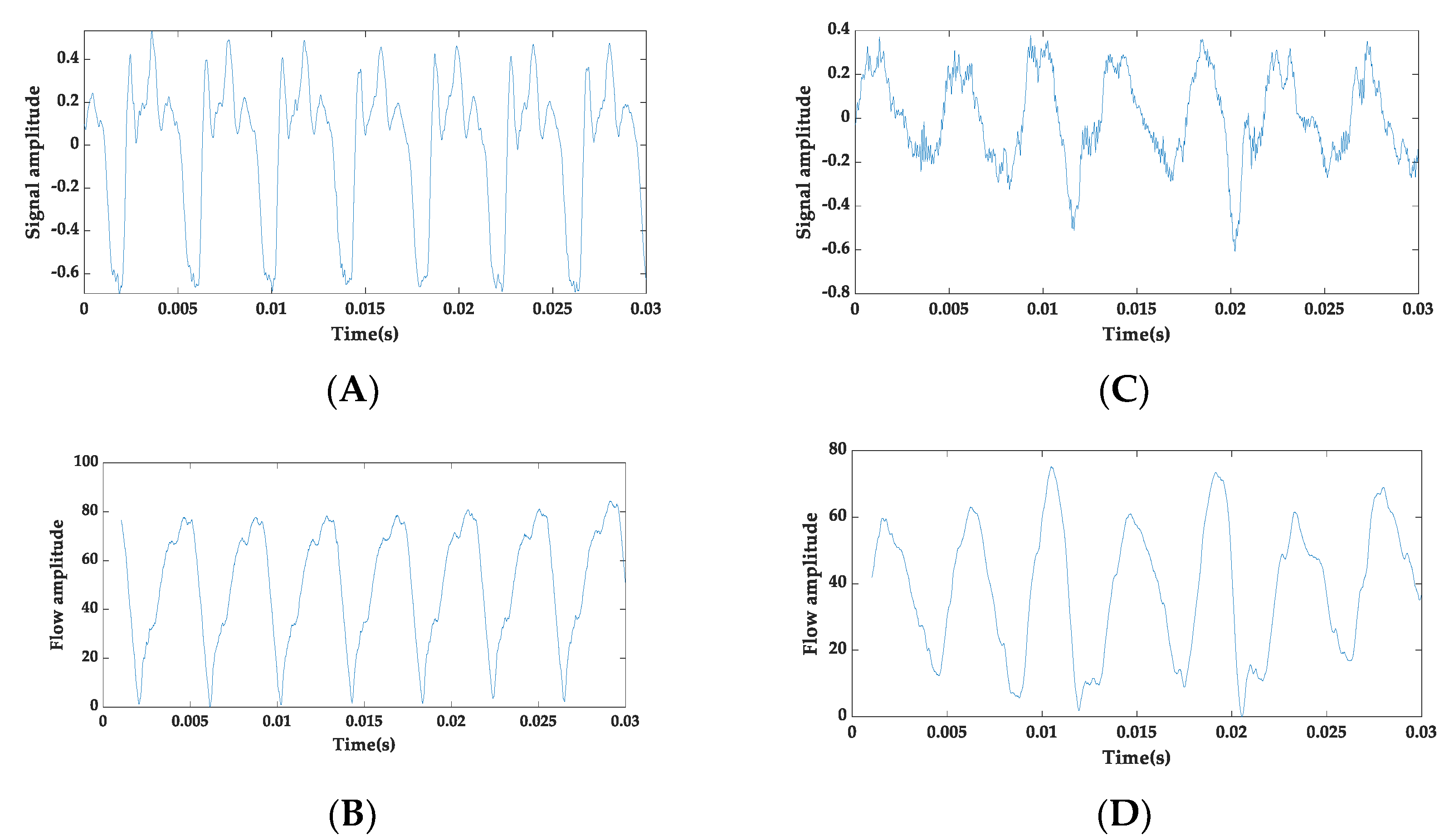

2.2. Proposed Speech Pathology Assessment Technique

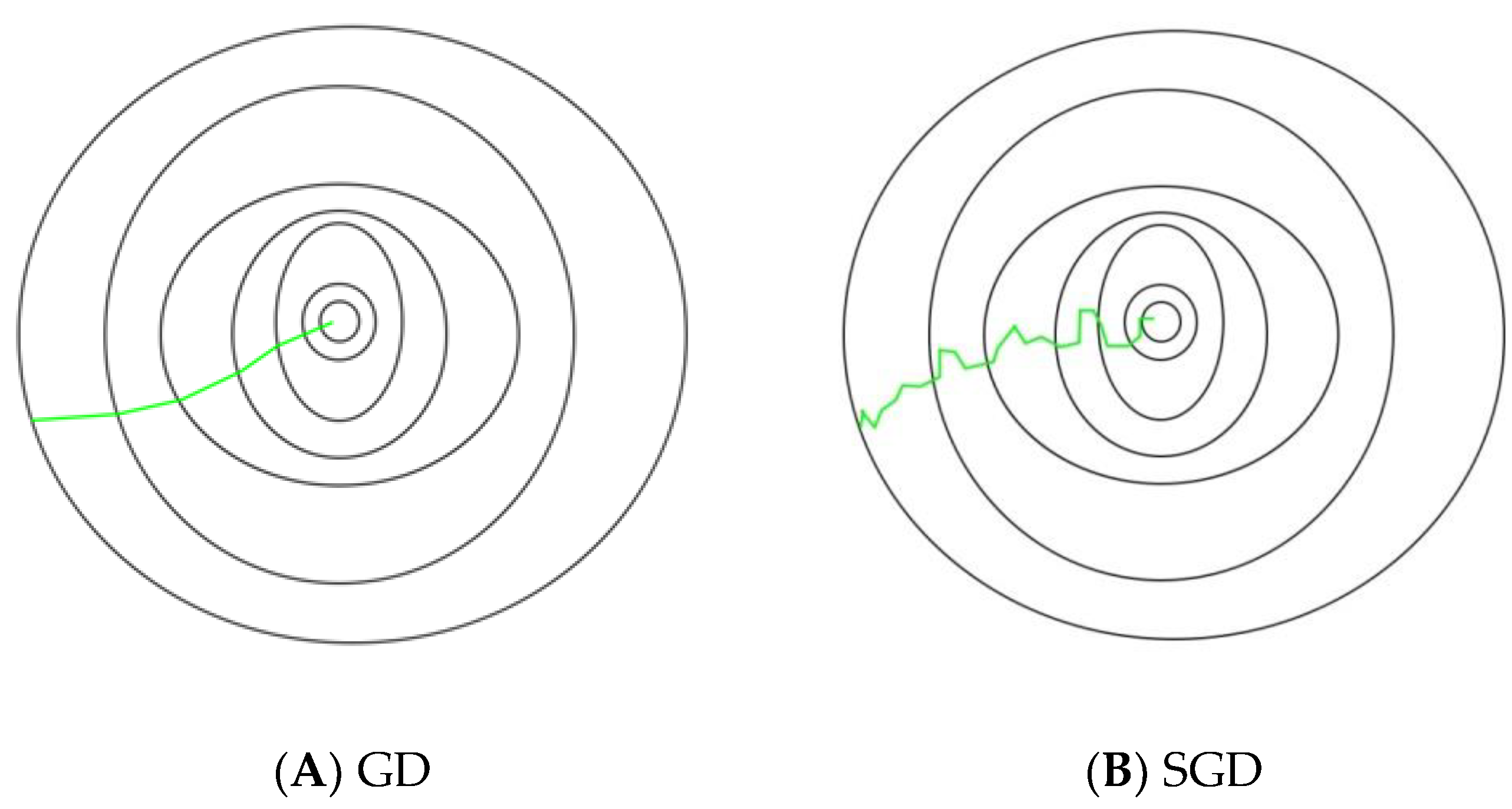

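- Select an initial vector of parameters $w$ and step size (learning rate) $\eta$.

- Repeat until an approximate minimum is obtained:

    - Shuffle the observations in the training dataset randomly.

    - For $i = 1, 2, \ldots, n$, do:

        - $w := w - \eta \nabla Q_i(w)$, where $Q_i(w)$ is the loss on the $i$-th of the $n$ training observations.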
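The SGD steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. It assumes that the per-sample objective $Q_i$ is the L2-regularised hinge loss of a linear SVM. The step size, regularisation strength and epoch count are hypothetical, as are the helper names `sgd_linear_svm` and `predict`. A fixed number of epochs stands in for the "approximate minimum" test.

```python
import random


def sgd_linear_svm(X, y, eta=0.1, lam=0.001, epochs=50, seed=0):
    """Plain SGD on a per-sample objective Q_i(w) (assumed, for illustration):
    Q_i(w) = max(0, 1 - y_i * w.x_i) + (lam / 2) * ||w||^2.
    Labels must be +1 / -1. A constant 1.0 is appended to each x_i so the last
    weight acts as a bias (in this sketch the bias is regularised as well)."""
    rng = random.Random(seed)
    w = [0.0] * (len(X[0]) + 1)              # initial parameter vector
    order = list(range(len(X)))
    for _ in range(epochs):                  # stands in for "repeat until minimum"
        rng.shuffle(order)                   # shuffle the training observations
        for i in order:                      # for i = 1, ..., n
            xi = list(X[i]) + [1.0]
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, xi))
            grad = [lam * wj for wj in w]    # gradient of the L2 term
            if margin < 1:                   # hinge term is active: add its subgradient
                grad = [g - y[i] * xj for g, xj in zip(grad, xi)]
            w = [wj - eta * g for wj, g in zip(w, grad)]  # w := w - eta * grad Q_i(w)
    return w


def predict(w, x):
    """Sign of the linear score, with the bias term appended."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1
```

For example, `sgd_linear_svm([[2, 2], [-2, -2]], [1, -1])` returns a three-element weight vector (two weights plus a bias). Shuffling at every epoch, as in the listed steps, prevents the update order from biasing the trajectory.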

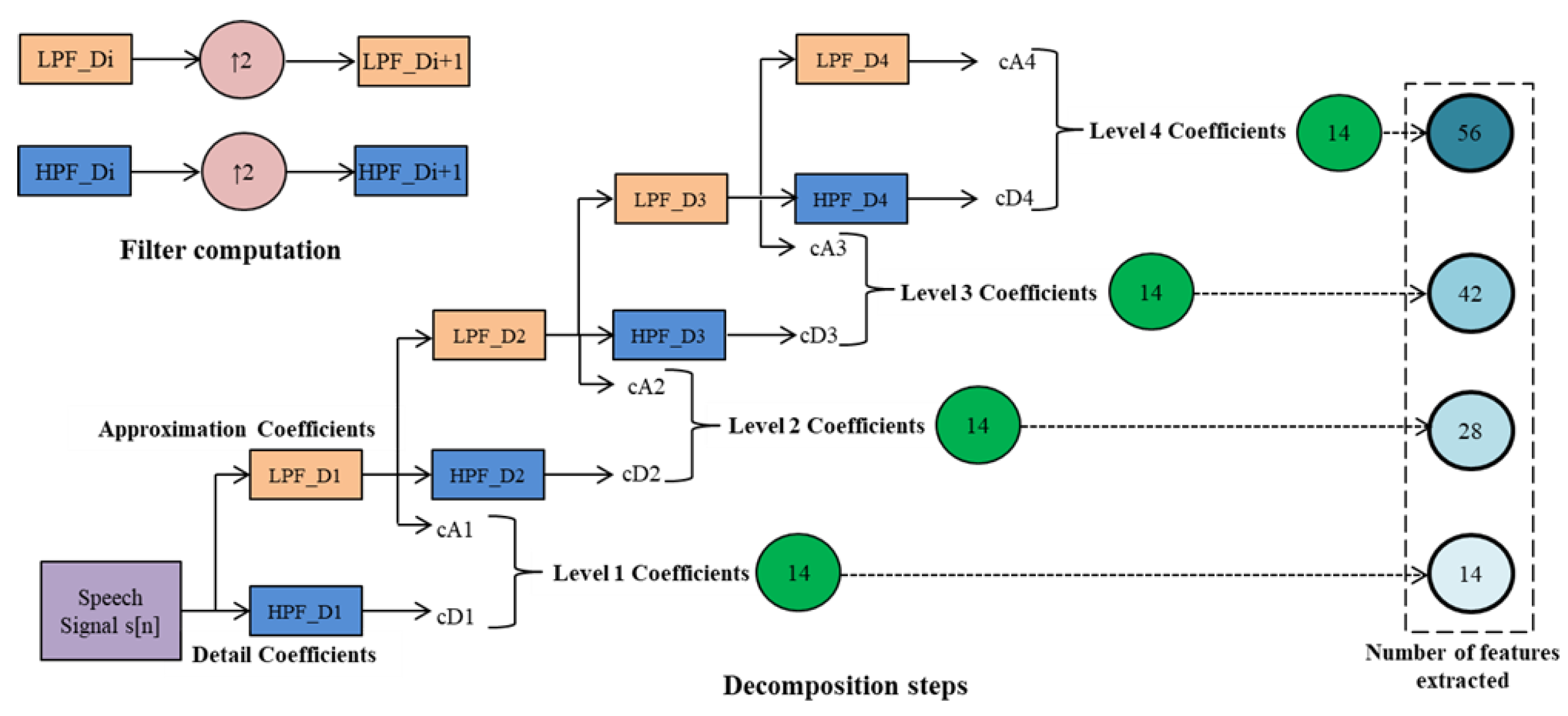

2.2.1. Stationary Wavelet Transform
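A minimal pure-Python sketch of a stationary (undecimated) wavelet decomposition follows. It makes three assumptions:

- It uses the Haar wavelet, which gives the highest accuracies among the wavelets compared in the results (Haar and db2 to db12).
- It applies periodic extension at the signal edges.
- It uses four levels, which yield the sub-bands A1 to A4 and D1 to D4 that appear in the feature names (e.g. Mean-D4).

The function name `haar_swt` is hypothetical. Libraries such as PyWavelets (`pywt.swt`) may return the levels in a different order or use different sign and shift conventions.

```python
import math


def haar_swt(signal, levels):
    """Undecimated Haar wavelet decomposition (a trous scheme, periodic edges).

    Returns [(A1, D1), (A2, D2), ...]. No sub-band is downsampled: at level j
    the two Haar taps are spaced 2**(j-1) samples apart instead.
    """
    n = len(signal)
    s = 1.0 / math.sqrt(2.0)                 # orthonormal Haar filter gain
    approx = [float(v) for v in signal]
    bands = []
    for j in range(1, levels + 1):
        step = 2 ** (j - 1)                  # upsampled (dilated) filter spacing
        a_next = [s * (approx[k] + approx[(k + step) % n]) for k in range(n)]  # low-pass
        d_next = [s * (approx[k] - approx[(k + step) % n]) for k in range(n)]  # high-pass
        bands.append((a_next, d_next))
        approx = a_next                      # the next level splits A_j further
    return bands
```

Because no downsampling takes place, every sub-band has the same length as the input. This shift invariance is the usual motivation for choosing the SWT over the decimated DWT.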

2.2.2. SWT Sub-Band Features

1. Energy: the energy of each sub-band.

2. Spectral entropy: the spectral entropy of each sub-band.

3. Mean (μ): the mean of the coefficients of each approximation and detail sub-band.

4. Variance: the variance of the coefficients of each approximation and detail sub-band.

5. Standard deviation: the square root of the variance.

6. Kurtosis: a measure of the tailedness of the coefficient distribution of each sub-band.

7. Skewness: the asymmetry of the coefficient distribution relative to the sample mean.
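The seven descriptors can be computed per sub-band as sketched below. The exact formulas are not reproduced in this section, so the sketch assumes common textbook definitions:

- Energy is the sum of squared coefficients.
- Variance is the population (1/N) variance.
- Kurtosis is the non-excess form m4/σ^4.
- Skewness is m3/σ^3.
- Spectral entropy is the Shannon entropy of the normalised one-sided DFT power spectrum, divided by its maximum.

All function names are hypothetical. The feature keys follow the naming used in the ranking tables (e.g. Mean-D4, Pentropy-A1). Seven descriptors over eight sub-bands give the 56 features referred to as "All 56".

```python
import cmath
import math


def spectral_entropy(x):
    """Normalised Shannon entropy (0..1) of the one-sided DFT power spectrum.
    Assumed definition; the paper's exact variant is not shown here."""
    n = len(x)
    half = n // 2 + 1                              # one-sided spectrum bins
    power = []
    for k in range(half):                          # naive DFT, O(N^2)
        c = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power.append(abs(c) ** 2)
    total = sum(power)
    if total == 0 or half < 2:
        return 0.0
    h = -sum(p / total * math.log2(p / total) for p in power if p > 0)
    return h / math.log2(half)                     # divide by the maximum entropy


def subband_features(c):
    """Seven descriptors of one sub-band's coefficient list."""
    n = len(c)
    mu = sum(c) / n
    var = sum((v - mu) ** 2 for v in c) / n       # population variance (assumed)
    std = math.sqrt(var)
    m3 = sum((v - mu) ** 3 for v in c) / n        # third central moment
    m4 = sum((v - mu) ** 4 for v in c) / n        # fourth central moment
    return {
        "Energy": sum(v * v for v in c),
        "Pentropy": spectral_entropy(c),
        "Mean": mu,
        "Variance": var,
        "Std-Dev": std,
        "Kurtosis": m4 / var ** 2 if var > 0 else 0.0,   # non-excess kurtosis
        "Skewness": m3 / std ** 3 if std > 0 else 0.0,
    }


def feature_vector(bands):
    """bands: dict mapping sub-band names ('A1', ..., 'D4') to coefficient lists."""
    out = {}
    for name, coeffs in bands.items():
        for feat, value in subband_features(coeffs).items():
            out[f"{feat}-{name}"] = value            # e.g. "Mean-D4"
    return out
```

The naive DFT only suits short illustrative inputs. A real pipeline would use an FFT.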

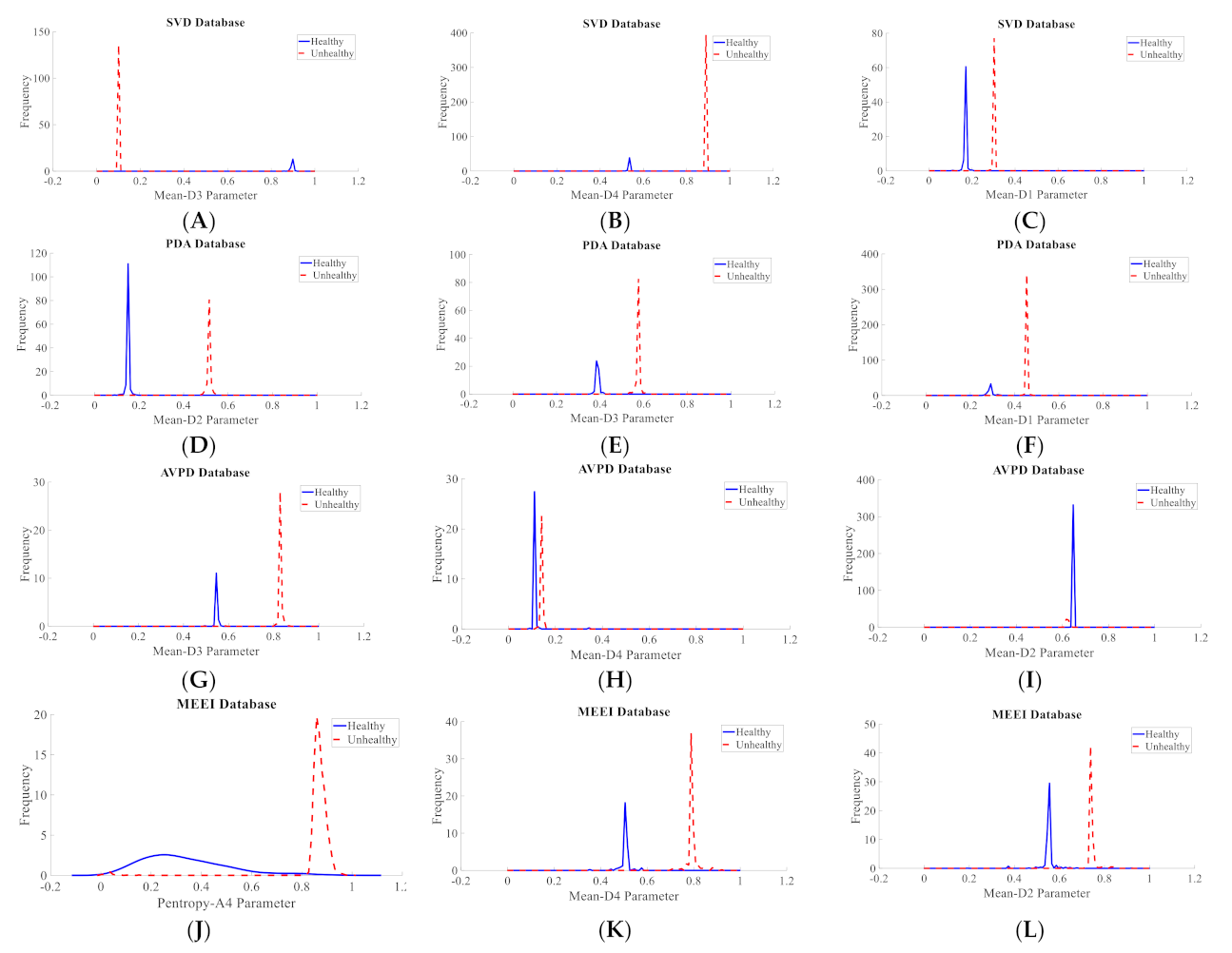

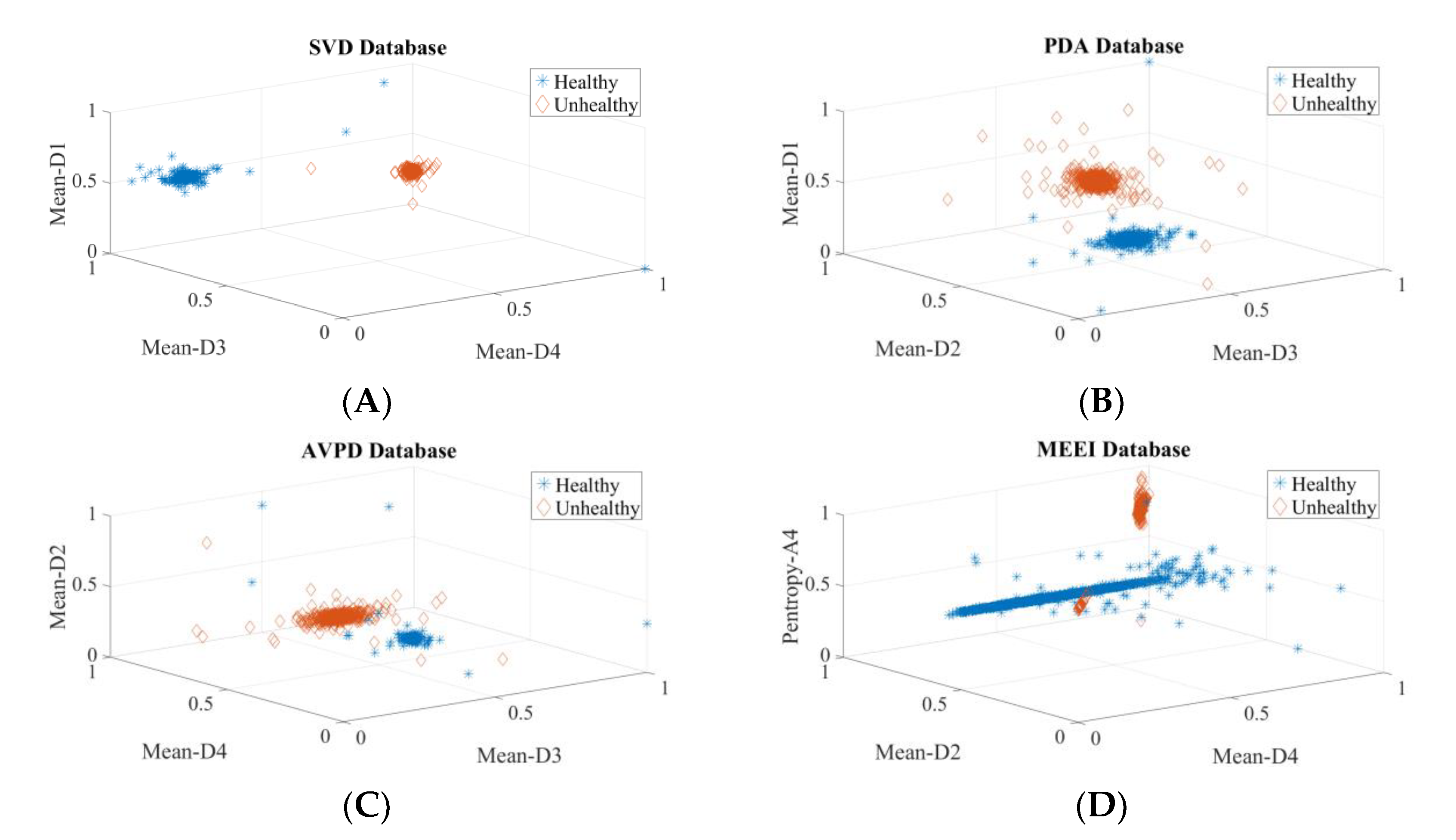

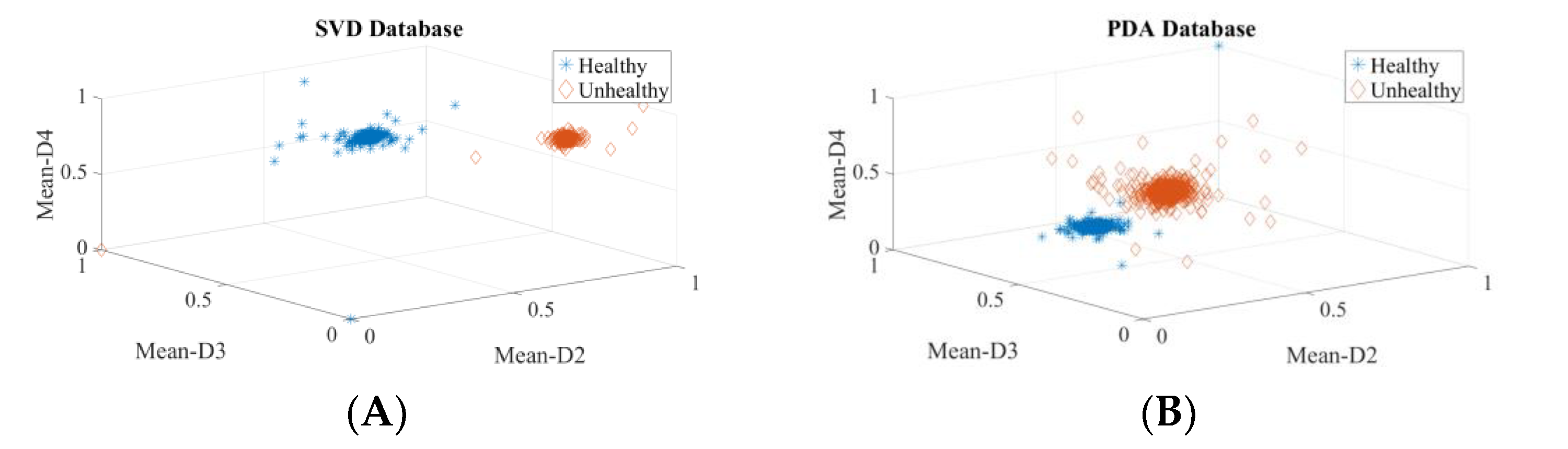

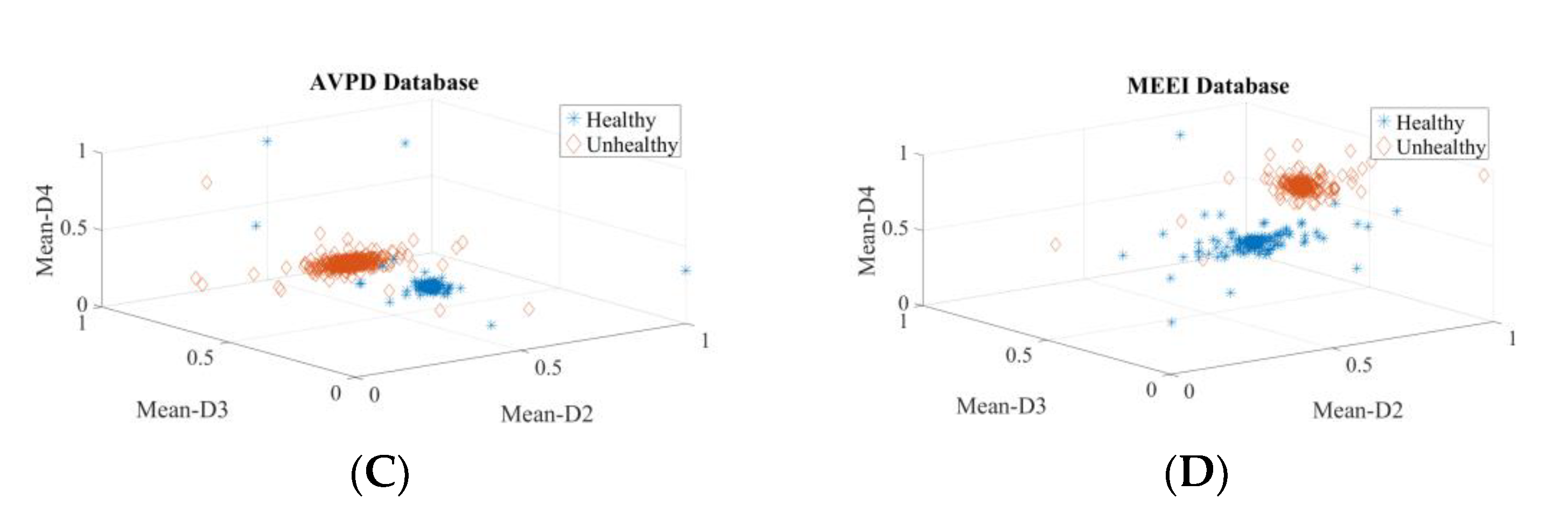

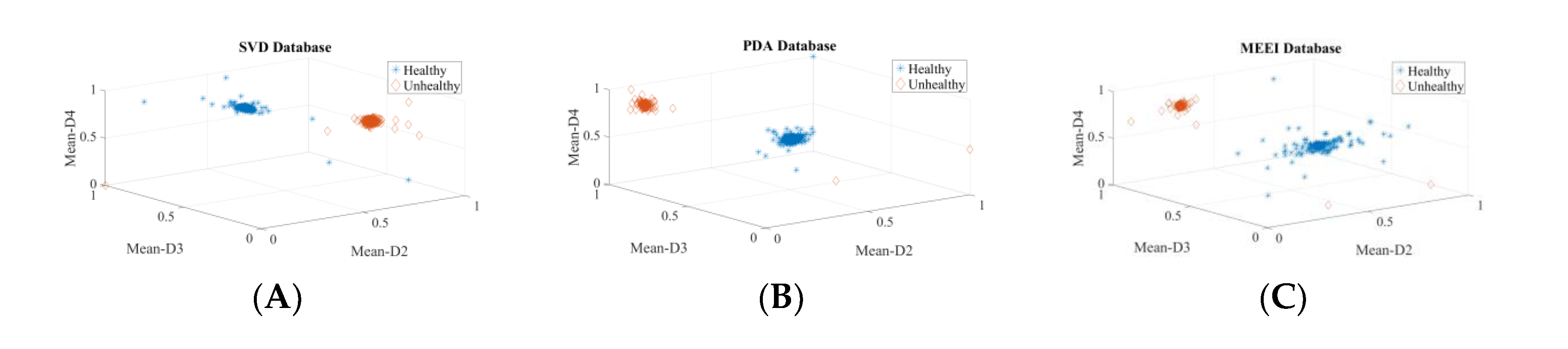

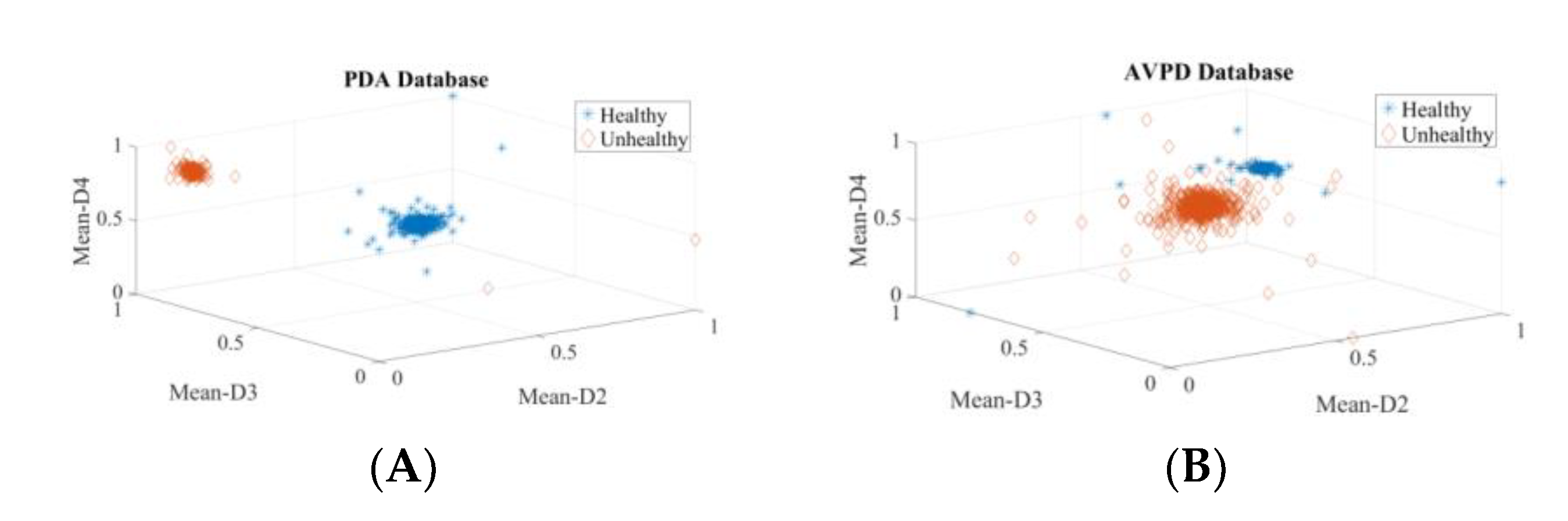

2.2.3. Evaluation of Discernment Potential of Attributes
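Information gain (IG) measures how much knowing an attribute reduces uncertainty about the class: IG = H(C) − H(C | F). The sketch below ranks continuous attributes this way. It assumes equal-width discretisation into four bins and base-2 logarithms, because toolkits discretise continuous attributes in different ways. The helper names are hypothetical. With base-2 logarithms, IG cannot exceed the class entropy: 1 bit for a two-class problem and 2 bits for four classes.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, bins=4):
    """IG = H(class) - H(class | binned attribute).
    Assumption: equal-width bins between the attribute's min and max."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0          # guard against a constant attribute
    binned = [min(int((v - lo) / width), bins - 1) for v in values]
    n = len(labels)
    cond = 0.0
    for b in set(binned):
        subset = [lab for lab, bb in zip(labels, binned) if bb == b]
        cond += len(subset) / n * entropy(subset)   # weighted conditional entropy
    return entropy(labels) - cond


def rank_features(features, labels, bins=4):
    """features: dict name -> list of values (one per recording); best first."""
    scores = {name: information_gain(v, labels, bins) for name, v in features.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```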

3. Results

3.1. Intra-Dataset Voice Pathology Detection Experiments

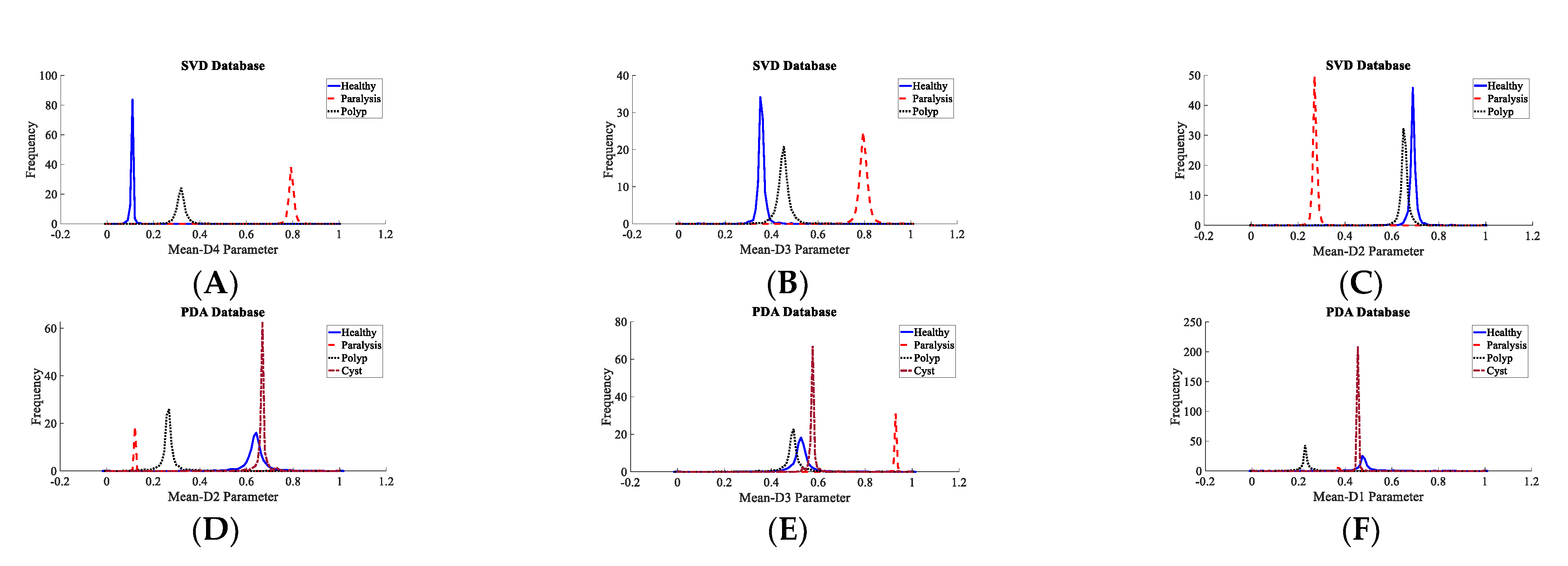

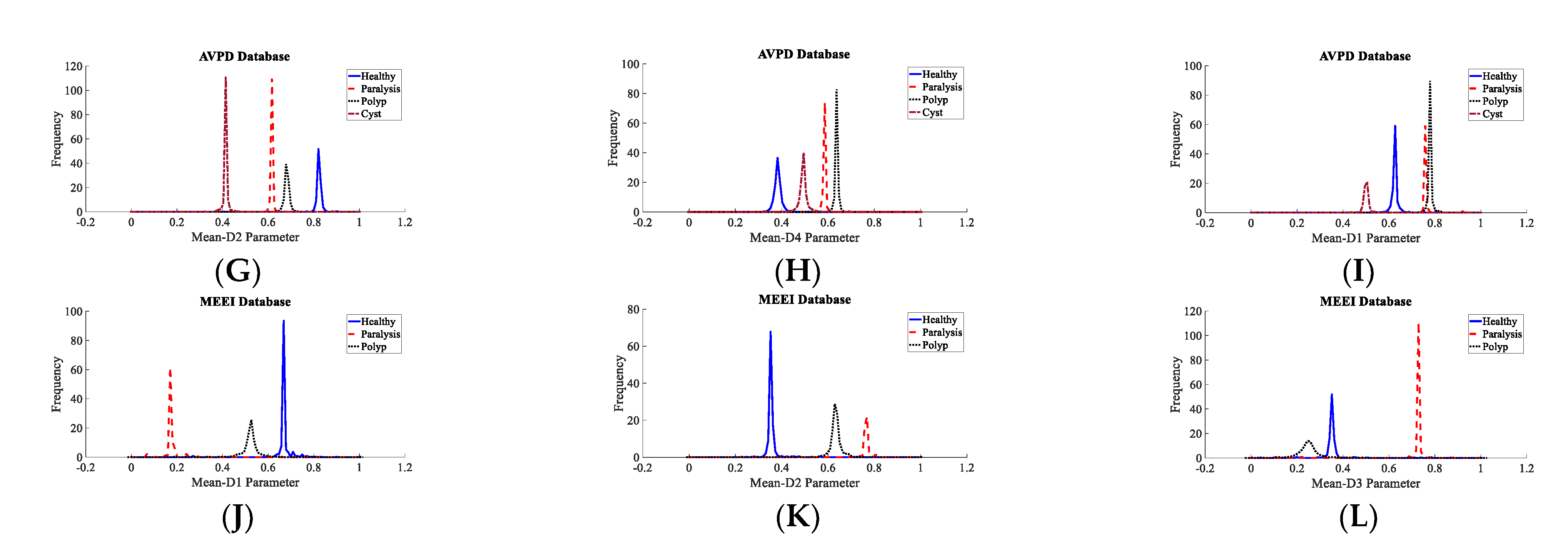

3.2. Voice Disorder Categorization

3.3. Dataset-Independent Voice Illness Assessment System

3.4. Voice Disorder Detection (Inter-Database)

3.5. Voice Pathology Independent System

3.6. Impact of Wavelet Decomposition Level on the Accuracy of Detecting and Categorization

4. Discussion

- The voice-acquiring equipment and acoustic settings in each corpus were different.

- The sampling frequency varies by dataset, which affects the accuracy and reliability of voice analysis.

- In the MEEI database, the healthy subjects were not clinically evaluated, so there is no way of confirming that they were truly healthy.

- The recordings are edited to retain only the stable section of phonation, although research has shown that the onset and offset of vocalization carry more acoustic cues than the steady section.

- In the German and English datasets, many recordings are labeled with multiple disorders.

- Only a few recordings are available for certain disorders.

- The severity of pathology differs across datasets.

- The distribution of subjects’ age and sex varies by dataset.

- The SVD dataset does not specify whether paralysis is unilateral or bilateral.

- The PdA dataset includes audio files with strong background noise or barely discernible vocalizations.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Verdolini, K.; Ramig, L. Review: Occupational risks for voice problems. Logoped. Phoniatr. Vocol. 2001, 26, 37–46.

- Nemr, K.; Marcia Simões-Zenari, G.F.; Cordeiro, D.; Tsuji, A.I.; Ogawa, M.; Menezes, M. GRBAS and Cape-V scales: High reliability and consensus when applied at different times. J. Voice 2012, 26, 812-e17.

- Arjmandi, M.; Pooyan, M. An optimum algorithm in pathological voice quality assessment using wavelet-packet-based features, linear discriminant analysis and support vector machine. Biomed. Signal Process. Control 2012, 7, 3–19.

- Rosa, M.; Pereira, J.; Grellet, M. Adaptive estimation of residue signal for voice pathology diagnosis. IEEE Trans. Biomed. Eng. 2000, 47, 96–104.

- Narendra, N.P.; Alku, P. Glottal source information for pathological voice detection. IEEE Access 2020, 8, 67745–67755.

- Kadiri, S.R.; Alku, P. Analysis and detection of pathological voice using glottal source features. IEEE J. Sel. Top. Signal Process. 2020, 14, 367–379.

- Reddy, M.K.; Alku, P. A Comparison of Cepstral Features in the Detection of Pathological Voices by Varying the Input and Filterbank of the Cepstrum Computation. IEEE Access 2021, 9, 135953–135963.

- Hegde, S.; Shetty, S.; Rai, S.; Dodderi, T. A survey on machine learning approaches for automatic detection of voice. J. Voice 2019, 33, 947-e11.

- Parsa, V.; Jamieson, D. Acoustic discrimination of pathological voice. J. Speech Lang. Hear. Res. 2001, 44, 327–339.

- Zhang, Y.; Jiang, J.; Biazzo, L.; Jorgensen, M. Perturbation and nonlinear dynamic analyses of voices from patients with unilateral laryngeal paralysis. J. Voice 2005, 19, 519–528.

- Michaelis, D.; Gramss, T.; Strube, H. Glottal-to-noise excitation ratio a new measure for describing pathological voices. Acta Acust. United Acust. 1997, 83, 700–706.

- Manfredi, C.; D’Aniello, M.; Bruscaglioni, P.; Ismaelli, A. A comparative analysis of fundamental frequency estimation methods with application to pathological voices. Med. Eng. Phys. 2000, 22, 135–147.

- Watts, C.; Awan, S. Use of spectral/cepstral analyses for differentiating normal from hypofunctional voices in sustained vowel and continuous speech contexts. J. Speech Lang. Hear. Res. 2011, 54, 1525–1537.

- Patel, R.R.; Awan, S.N.; Barkmeier-Kraemer, J.; Courey, M.; Deliyski, D.; Eadie, T.; Paul, D.; Švec, J.G.; Hillman, R. Recommended protocols for instrumental assessment of voice: American speech-language hearing association expert panel to develop a protocol for instrumental assessment of vocal function. Am. J. Speech Lang. Pathol. 2018, 27, 887–905.

- Benba, A.; Jilbab, A.; Hammouch, A. Discriminating between patients with Parkinson’s and neurological diseases using cepstral analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 1100–1108.

- Ali, Z.; Elamvazuthi, I.; Alsulaiman, M.; Muhammad, M. Automatic voice pathology detection with running speech by using estimation of auditory spectrum and cepstral coefficients based on the all-pole model. J. Voice 2016, 30, 757-e7.

- Godino-Llorente, J.; Gomez-Vilda, P. Automatic detection of voice impairments by means of short-term cepstral parameters and neural network based detectors. IEEE Trans. Biomed. Eng. 2004, 51, 380–384.

- Godino-Llorente, J.; Vilda, P.; Blanco-Velasco, M. Dimensionality reduction of a pathological voice quality assessment system based on Gaussian mixture models and short-term cepstral parameters. IEEE Trans. Biomed. Eng. 2006, 53, 1943–1953.

- Sáenz-Lechón, N.; Godino-Llorente, J.; Osma-Ruiz, V.; Gómez-Vilda, P. Methodological issues in the development of automatic systems for voice pathology detection. Biomed. Signal Process. Control 2006, 1, 120–128.

- Godino-Llorente, J.; Fraile, R.; Sáenz-Lechón, N.; Osma-Ruiz, V.; Gómez-Vilda, P. Automatic detection of voice impairments from text-dependent running speech. Biomed. Signal Process. Control 2009, 4, 176–182.

- Ali, Z.; Alsulaiman, M.; Muhammad, G.; Elamvazuthi, I.; Al-Nasheri, A.; Mesallam, T.M.; Farahat, M.; Malki, K.H. Intra- and inter-database study for Arabic, English, and German databases: Do conventional speech features detect voice pathology? J. Voice 2017, 31, 386-e1.

- Arias-Londoño, J.; Godino-Llorente, J. Entropies from Markov models as complexity measures of embedded attractors. Entropy 2015, 17, 3595–3620.

- Titze, I. The Myoelastic Aerodynamic Theory of Phonation; National Center for Voice and Speech: Salt Lake City, UT, USA, 2006.

- Zhang, Y.; Jiang, J. Nonlinear dynamics analysis in signal typing of pathological human voices. Electron. Lett. 2003, 39, 1021–1023.

- Zhang, Y.; Jiang, J. Acoustic analyses of sustained and running voices from patients with laryngeal pathologies. J. Voice 2008, 22, 1–9.

- Little, M.; McSharry, P.; Roberts, S.; Costello, D.; Moroz, I. Exploiting nonlinear recurrence and fractal scaling properties for voice disorder detection. Biomed. Eng. Online 2007, 6, 1–23.

- Arias-Londoño, J.; Godino-Llorente, J.; Sáenz-Lechón, N.; Osma-Ruiz, V.; Castellanos-Domínguez, G. Automatic detection of pathological voices using complexity measures, noise parameters, and mel-cepstral coefficients. IEEE Trans. Biomed. Eng. 2011, 58, 370–379.

- Forero, L.; Kohler, M.; Vellasco, M.; Cataldo, E. Analysis and classification of voice pathologies using glottal signal parameters. J. Voice 2016, 30, 549–556.

- Muhammad, G.; Alsulaiman, M.; Ali, Z.; Mesallam, T.A.; Farahat, M.; Malki, K.H.; Al-nasheri, A.; Bencherifa, M.A. Voice pathology detection using interlaced derivative pattern on glottal source excitation. Biomed. Signal Process. Control 2017, 31, 156–164.

- Gidaye, G.; Nirmal, J.; Ezzine, K.; Shrivas, A.; Frikha, M. Application of glottal flow descriptors for pathological voice diagnosis. Int. J. Speech Technol. 2020, 23, 205–222.

- Farouk, M. Clinical Diagnosis and Assessment of Speech Pathology, 1st ed.; Springer International Publishing: Cham, Switzerland, 2018.

- Saarbrucken Voice Database. Available online: http://www.Stimmdatenbank.coli.uni-saarland.de (accessed on 2 June 2021).

- Mesallam, T.A.; Farahat, M.; Malki, K.H.; Alsulaiman, M.; Ali, Z.; Al-Nasheri, A.; Muhammad, G. Development of the Arabic voice pathology database and its evaluation by using speech features and machine learning algorithms. J. Healthc. Eng. 2017, 2017, 1–13.

- MEEI: Disordered Voice Database; Version 1.03 (CD-ROM); Voice and Speech Lab, Kay Elemetrics Corp.: Lincoln Park, NJ, USA, 1994.

- Drugman, T.; Bozkurt, B.; Dutoit, T. A comparative study of glottal source estimation techniques. Comput. Speech Lang. 2012, 26, 20–34.

- El-Jaroudi, A.; Makhoul, J. Discrete all-pole modelling. IEEE Trans. Signal Process. 1991, 39, 411–423.

- Alku, P. Glottal wave analysis with pitch synchronous iterative adaptive inverse filtering. Speech Commun. 1992, 11, 109–118.

- Airas, M. TKK Aparat: An environment for voice inverse filtering and parameterization. Logoped. Phoniatr. Vocol. 2008, 33, 49–64.

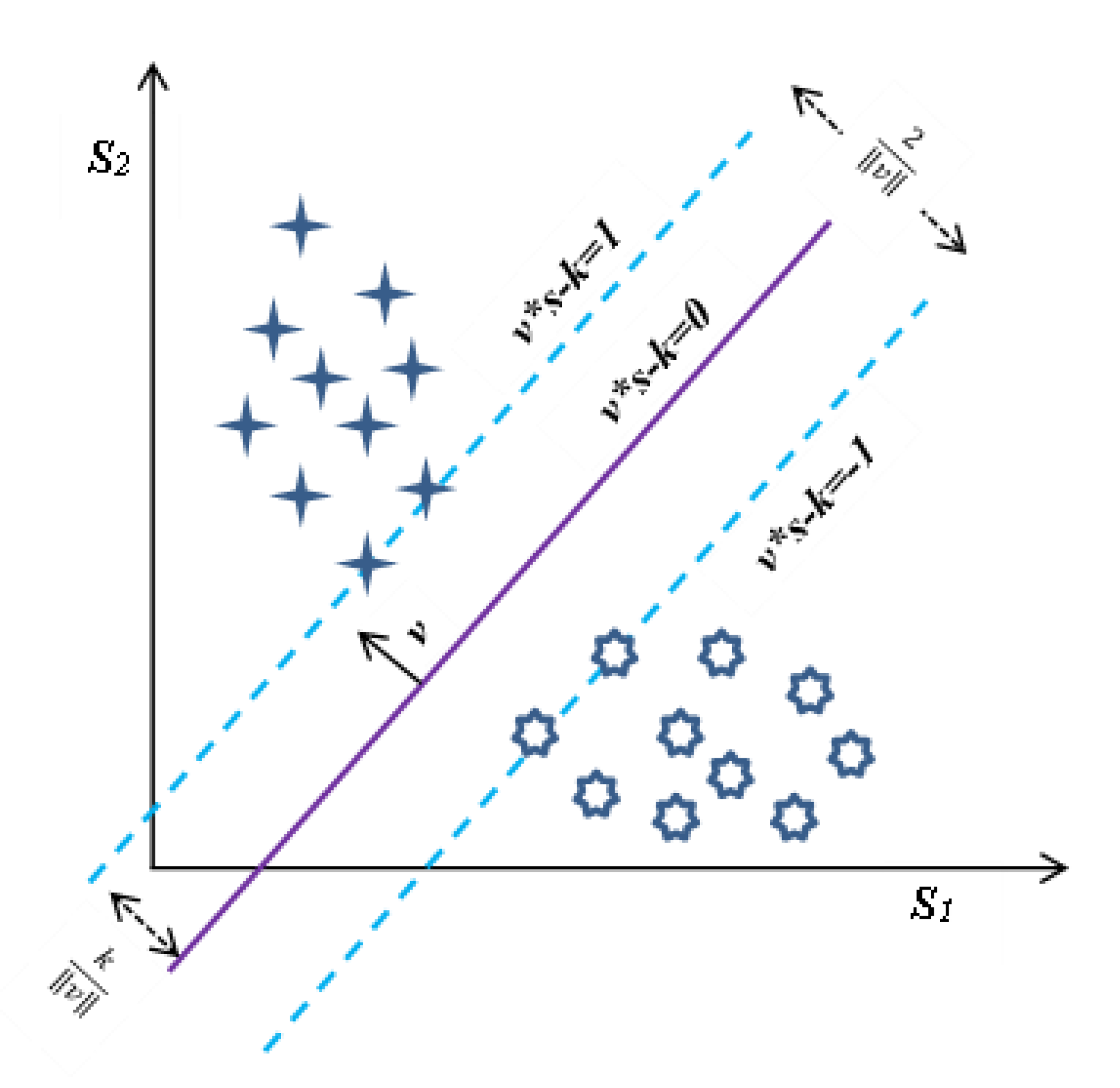

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Ben-Hur, A.; Horn, D.; Siegelmann, H.; Vapnik, V. Support vector clustering. J. Mach. Learn. Res. 2001, 2, 125–137.

- Ferguson, T. An inconsistent maximum likelihood estimate. J. Am. Stat. Assoc. 1982, 77, 831–834.

- Xing, C.; Arpit, D.; Tsirigotis, C.; Bengio, Y. A walk with SGD. arXiv 2018, arXiv:1802.08770.

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, San Mateo, CA, USA, 20–25 August 1995; pp. 1137–1143.

- Sreehari, V.; Mary, L. Automatic speaker recognition using stationary wavelet coefficients of LP residual. In Proceedings of the TENCON 2018 IEEE Region 10 Conference, Jeju, Korea, 28–31 October 2018.

- Nongpiur, R.; Shpak, D. Impulse-noise suppression in speech using the stationary wavelet transform. J. Acoust. Soc. Am. 2013, 133, 866–879.

- Al-Nasheri, A.; Muhammad, G.; Alsulaiman, M.; Ali, Z.; Malki, K.; Mesallam, T.; Ibrahim, M. Voice pathology detection and classification using auto-correlation and entropy features in different frequency regions. IEEE Access 2018, 6, 6961–6974.

- Al-Nasheri, A.; Muhammad, G.; Alsulaiman, M.; Ali, Z. Investigation of Voice Pathology Detection and Classification on Different Frequency Regions Using Correlation Functions. J. Voice 2017, 31, 3–15.

- Al-Nasheri, A.; Muhammad, G.; Alsulaiman, M.; Ali, Z.; Mesallam, T.A.; Farahat, M.; Malki, K.H.; Bencherif, M.A. An investigation of multidimensional voice program parameters in three different databases for voice pathology detection and classification. J. Voice 2016, 31, 113-e9.

- Akbari, A.; Arjmandi, M. Employing linear prediction residual signal of wavelet sub-bands in automatic detection of laryngeal pathology. Biomed. Signal Process. Control 2015, 18, 293–302.

| Dataset | Normal | Cyst | Paralysis | Polyp | Laryngitis | Reinke’s Edema | Nodules | Sulcus |
|---|---|---|---|---|---|---|---|---|
| SVD [32] | 262 | 6 | 194 | 44 | 140 | 68 | -- | -- |
| PdA [27] | 238 | 20 | 18 | 28 | 30 | 29 | 29 | 24 |
| AVPD [33] | 118 | 24 | 45 | 34 | -- | -- | 20 | 41 |
| MEEI [34] | 53 | 10 | 52 | 15 | 8 | 53 | -- | -- |

In Sections 3.1–3.4, sustained vowel /a/ samples from healthy speakers and from speakers with cyst, paralysis, and polyp were used.

In addition to the recordings listed in rows 1 to 4, the following pathological speech samples from the PdA [27] database were used for the experiments in Section 3.5: acquired iatrogenic trauma on the vocal cords = 2, upper motor neuron injury = 14, extrapyramidal alterations = 1, and lack of closure = 6.

| Sr. No. | Characteristics | SVD [32] | PdA [27] | AVPD [33] | MEEI [34] |
|---|---|---|---|---|---|
| 1 | Language | German | Spanish | Arabic | English |
| 2 | Recording place | Institute of Phonetics of the Saarland University, Germany | Príncipe de Asturias Hospital, Madrid | King Abdul Aziz University Hospital, Saudi Arabia | Massachusetts Eye and Ear Infirmary voice and speech laboratory, USA |
| 3 | Sampling rate of speech samples | 50 kHz (all samples) | 25 kHz (all samples) | 48 kHz (all samples) | 10 kHz, 16 kHz, 25 kHz, and 50 kHz |
| 4 | Audio format | .WAV and .NSP | .WAV | .WAV and .NSP | .NSP |
| 5 | Recording session(s) | • Sustained vowels /a/, /i/, and /u/ • A sentence | • Sustained vowel /a/ | • Vowels /a/, /i/, and /u/ • Al-Fateha (continuous speech) • Arabic digits • Isolated common words | • Sustained vowel /a/ • Rainbow passage (continuous speech) |
| 6 | Duration of recorded speech samples | • Vowels: 1–3 s • Sentence: 2 s | Vowels: 1–3 s | • Vowel: 5 s • Al-Fateha: 18 s • Digits: 10 s • Words: 3 s | Healthy: • Vowel: 3 s • Passage: 12 s; Pathological: • Vowel: 1 s • Passage: 9 s |
| 7 | Proportion of healthy and pathological subjects | Healthy: 33%, Pathological: 67% | Healthy: 54.32%, Pathological: 45.68% | Healthy: 51%, Pathological: 49% | Healthy: 7%, Pathological: 93% |
| 8 | Severity of disordered voice quality | Not available | Rated on the GRBAS scale | Rated on a scale of 1 (low) to 3 (high) | Not available |
| 9 | Voice illness types | Functional and organic | Organic and traumatic etiologies | Organic | Organic and functional |
| 10 | Evaluation of normal subjects | No information available | No information available | Evaluated using laryngeal stroboscopy | No information available |

(a) Detection

| Rank | SVD Parameter | SVD IG Value | PdA Parameter | PdA IG Value | AVPD Parameter | AVPD IG Value | MEEI Parameter | MEEI IG Value |
|---|---|---|---|---|---|---|---|---|
| 1 | Mean-D4 | 0.9904 | Mean-D3 | 0.9844 | Mean-D3 | 0.9857 | Mean-D4 | 0.9866 |
| 2 | Mean-D3 | 0.9841 | Mean-D2 | 0.9711 | Mean-D4 | 0.9746 | Mean-D2 | 0.9453 |
| 3 | Mean-D1 | 0.8880 | Mean-D1 | 0.8929 | Mean-D2 | 0.8959 | Pentropy-A4 | 0.8862 |
| 4 | Mean-D2 | 0.5902 | Mean-D4 | 0.6226 | Mean-D1 | 0.8802 | Mean-D1 | 0.8672 |
| 5 | Pentropy-D2 | 0.2034 | Skewness-D4 | 0.5664 | Kurtosis-A1 | 0.2414 | Mean-D3 | 0.8611 |
| 6 | Pentropy-D1 | 0.2019 | Skewness-D3 | 0.5128 | Kurtosis-A2 | 0.2412 | Pentropy-A3 | 0.7601 |
| 7 | Pentropy-A2 | 0.1758 | Skewness-D2 | 0.2123 | Kurtosis-A3 | 0.2391 | Pentropy-A1 | 0.7408 |
| 8 | Skewness-D4 | 0.1436 | Energy-D1 | 0.2015 | Pentropy-D3 | 0.2149 | Pentropy-A2 | 0.7178 |
| 9 | Pentropy-A4 | 0.1383 | Variance-D1 | 0.2015 | Kurtosis-A4 | 0.2075 | Skewness-D2 | 0.5615 |
| 10 | Pentropy-D3 | 0.1282 | Std-Dev-D1 | 0.1736 | Pentropy-D4 | 0.1985 | Skewness-D1 | 0.3802 |

(b) Classification

| Rank | SVD Parameter | SVD IG Value | PdA Parameter | PdA IG Value | AVPD Parameter | AVPD IG Value | MEEI Parameter | MEEI IG Value |
|---|---|---|---|---|---|---|---|---|
| 1 | Mean-D4 | 1.1168 | Mean-D1 | 1.5628 | Mean-D2 | 1.9189 | Mean-D1 | 1.0684 |
| 2 | Mean-D3 | 1.0786 | Mean-D2 | 1.5520 | Mean-D4 | 1.8214 | Mean-D2 | 1.0468 |
| 3 | Mean-D2 | 0.9874 | Mean-D3 | 1.3169 | Mean-D1 | 1.7703 | Mean-D3 | 0.9598 |
| 4 | Mean-D1 | 0.9810 | Mean-D4 | 1.1685 | Mean-D3 | 1.7252 | Mean-D4 | 0.6896 |
| 5 | Pentropy-A3 | 0.8053 | Variance-A4 | 0.6850 | Mean-A1 | 0.3323 | Pentropy-A4 | 0.5580 |
| 6 | Pentropy-A2 | 0.7660 | Variance-A3 | 0.6830 | Mean-A2 | 0.3323 | Pentropy-A3 | 0.4609 |
| 7 | Pentropy-A4 | 0.7356 | Variance-A2 | 0.6821 | Mean-A3 | 0.3323 | Kurtosis-D3 | 0.4108 |
| 8 | Pentropy-A1 | 0.6911 | Variance-A1 | 0.6806 | Mean-A4 | 0.3323 | Pentropy-A2 | 0.4023 |
| 9 | Pentropy-D4 | 0.5450 | Kurtosis-D4 | 0.6631 | Energy-A4 | 0.2966 | Pentropy-A1 | 0.3881 |
| 10 | Pentropy-D3 | 0.4738 | Variance-D4 | 0.6384 | Energy-A3 | 0.2963 | Variance-D2 | 0.3307 |

| Number of Features | Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| All 56 | SVD | 0.9999 | 99.55 | 0.9955 | 99.55 | 99.55 | 0.9930 | 99.30 | 0.9930 | 99.31 | 99.30 |
|  | PdA | 0.9999 | 99.06 | 0.9906 | 99.07 | 99.06 | 0.9815 | 98.15 | 0.9814 | 98.18 | 98.15 |
|  | AVPD | 0.9999 | 99.87 | 0.9987 | 99.87 | 99.87 | 0.9997 | 99.97 | 0.9997 | 99.97 | 99.97 |
|  | MEEI | 1.0000 | 99.93 | 0.9993 | 99.93 | 99.93 | 0.9993 | 99.93 | 0.9993 | 99.93 | 99.93 |
| Top 3 | SVD | 0.9999 | 99.97 | 0.9997 | 99.97 | 99.97 | 0.9998 | 99.98 | 0.9998 | 99.98 | 99.98 |
|  | PdA | 1.0000 | 99.97 | 0.9997 | 99.97 | 99.97 | 0.9988 | 99.88 | 0.9988 | 99.88 | 99.88 |
|  | AVPD | 0.9999 | 99.97 | 0.9997 | 99.97 | 99.97 | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 |
|  | MEEI | 1.0000 | 99.93 | 0.9993 | 99.93 | 99.93 | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 |
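In the table above, Recall (%) equals CA (%) in every row. This is what one would expect if PPV, recall and F1 are support-weighted averages over the classes. Weighted recall Σ_c (n_c/N)(TP_c/n_c) reduces to Σ_c TP_c/N, which is the classification accuracy. The sketch below assumes that weighting scheme, since the section does not state it, and omits AUC. `weighted_metrics` is a hypothetical helper.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy (CA) plus support-weighted precision (PPV), recall and F1.
    Classes that occur only in y_pred get zero weight in this sketch."""
    n = len(y_true)
    support = Counter(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    ppv = rec = f1 = 0.0
    for c, n_c in support.items():
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        predicted_c = sum(p == c for p in y_pred)
        prec_c = tp / predicted_c if predicted_c else 0.0
        rec_c = tp / n_c
        f1_c = 2 * prec_c * rec_c / (prec_c + rec_c) if prec_c + rec_c else 0.0
        w = n_c / n                          # weight = share of class c in y_true
        ppv += w * prec_c
        rec += w * rec_c
        f1 += w * f1_c
    return {"CA": accuracy, "PPV": ppv, "Recall": rec, "F1": f1}
```

Under macro averaging (equal class weights), recall would generally differ from CA whenever the classes are imbalanced.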

| Number of Features | Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| All 56 | SVD | 0.9999 | 98.62 | 0.9862 | 98.68 | 98.62 | 0.9904 | 98.72 | 0.9872 | 98.76 | 98.72 |
|  | PdA | 0.9998 | 98.93 | 0.9893 | 98.94 | 98.93 | 0.9939 | 99.09 | 0.9909 | 99.11 | 99.09 |
|  | AVPD | 0.9999 | 99.85 | 0.9985 | 99.85 | 99.85 | 0.9983 | 99.75 | 0.9975 | 99.75 | 99.75 |
|  | MEEI | 0.9999 | 99.47 | 0.9947 | 99.48 | 99.47 | 1.0000 | 99.99 | 0.9999 | 99.99 | 99.99 |
| Top 5 | SVD | 0.9999 | 98.86 | 0.9886 | 98.89 | 98.86 | 0.9944 | 99.26 | 0.9926 | 99.27 | 99.26 |
|  | PdA | 0.9992 | 98.24 | 0.9824 | 98.25 | 98.24 | 0.9805 | 97.08 | 0.9705 | 97.11 | 97.08 |
|  | AVPD | 0.9999 | 99.87 | 0.9987 | 99.87 | 99.87 | 0.9987 | 99.81 | 0.9981 | 99.81 | 99.81 |
|  | MEEI | 1.0000 | 99.65 | 0.9965 | 99.65 | 99.65 | 0.9986 | 99.82 | 0.9982 | 99.82 | 99.82 |
| Top 3 | SVD | 0.9982 | 98.19 | 0.9818 | 98.26 | 98.19 | 0.9861 | 98.15 | 0.9814 | 98.18 | 98.15 |
|  | PdA | 0.7779 | 73.83 | 0.6663 | 68.49 | 73.83 | 0.9628 | 94.42 | 0.9439 | 94.56 | 94.42 |
|  | AVPD | 0.9999 | 99.73 | 0.9973 | 99.73 | 99.73 | 0.9959 | 99.39 | 0.9939 | 99.40 | 99.39 |
|  | MEEI | 1.0000 | 99.65 | 0.9965 | 99.65 | 99.65 | 0.9986 | 99.82 | 0.9982 | 99.83 | 99.82 |

| Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 0.9999 | 99.96 | 0.9996 | 99.96 | 99.96 | 0.9995 | 99.95 | 0.9995 | 99.95 | 99.95 |
| PdA | 0.9999 | 99.77 | 0.9977 | 99.77 | 99.77 | 0.9971 | 99.71 | 0.9971 | 99.71 | 99.71 |
| AVPD | 0.9999 | 99.97 | 0.9997 | 99.97 | 99.97 | 0.9996 | 99.96 | 0.9996 | 99.96 | 99.96 |
| MEEI | 1.0000 | 99.86 | 0.9986 | 99.86 | 99.86 | 0.9993 | 99.93 | 0.9993 | 99.93 | 99.93 |

| Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 0.9982 | 98.19 | 0.9818 | 98.26 | 98.19 | 0.9861 | 98.15 | 0.9814 | 98.18 | 98.15 |
| PdA | 0.9975 | 97.29 | 0.9727 | 97.29 | 97.29 | 0.9798 | 96.97 | 0.9695 | 96.99 | 96.97 |
| AVPD | 0.9998 | 99.87 | 0.9987 | 99.87 | 99.87 | 0.9989 | 99.83 | 0.9983 | 99.83 | 99.83 |
| MEEI | 0.9999 | 99.47 | 0.9947 | 99.48 | 99.47 | 0.9986 | 99.82 | 0.9982 | 99.82 | 99.82 |

| Experiment No. | Model Trained on | SVM: Tested on SVD | SVM: Tested on PdA | SVM: Tested on AVPD | SVM: Tested on MEEI | SGD: Tested on SVD | SGD: Tested on PdA | SGD: Tested on AVPD | SGD: Tested on MEEI |
|---|---|---|---|---|---|---|---|---|---|
| 1 | SVD | -- | 99.98 | 99.97 | 99.99 | -- | 99.92 | 99.98 | 99.99 |
| 2 | PdA | 99.98 | -- | 99.99 | 99.99 | 99.99 | -- | 99.99 | 99.99 |
| 3 | AVPD | 99.88 | 99.76 | -- | 99.81 | 99.99 | 99.93 | -- | 99.93 |
| 4 | MEEI | 99.86 | 99.14 | 99.57 | -- | 99.80 | 98.89 | 99.54 | -- |
| 5 | SVD + PdA | -- | -- | 99.99 | 99.99 | -- | -- | 99.99 | 99.99 |
| 6 | AVPD + SVD | -- | 99.99 | -- | 99.99 | -- | 99.99 | -- | 99.99 |
| 7 | PdA + AVPD | 99.99 | -- | -- | 99.99 | 99.99 | -- | -- | 99.99 |
| 8 | MEEI + SVD | -- | 99.98 | 99.97 | -- | -- | 99.99 | 99.98 | -- |
| 9 | PdA + MEEI | 99.98 | -- | 99.99 | -- | 99.99 | -- | 99.99 | -- |
| 10 | MEEI + AVPD | 99.97 | 99.97 | -- | -- | 99.99 | 99.94 | -- | -- |
| 11 | SVD + PdA + MEEI | -- | -- | 99.99 | -- | -- | -- | 99.98 | -- |
| 12 | SVD + PdA + AVPD | -- | -- | -- | 99.99 | -- | -- | -- | 99.99 |
| 13 | PdA + MEEI + AVPD | 99.99 | -- | -- | -- | 99.99 | -- | -- | -- |
| 14 | SVD + MEEI + AVPD | -- | 98.99 | -- | -- | -- | 99.19 | -- | -- |
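The fourteen experiments in the table above follow a simple pattern. Every single database, every pair and every triple is used once as training data, and the resulting model is tested on each database left out. The hypothetical helper below only enumerates that plan; training and scoring are omitted.

```python
from itertools import combinations

DATABASES = ["SVD", "PdA", "AVPD", "MEEI"]


def cross_corpus_protocol(databases):
    """Return (train, test) pairs: every non-empty proper subset of the
    databases is a training set, and the remaining databases are test sets."""
    plan = []
    for k in range(1, len(databases)):               # subset sizes 1, 2, 3
        for train in combinations(databases, k):
            test = tuple(d for d in databases if d not in train)
            plan.append((train, test))
    return plan
```

With four databases this gives 4 + 6 + 4 = 14 training sets and 28 train/test evaluations per classifier. These match the 14 rows and the 28 non-empty cells per classifier in the table.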

| Rank | SVD Parameter | SVD IG Value | PdA Parameter | PdA IG Value | MEEI Parameter | MEEI IG Value |
|---|---|---|---|---|---|---|
| 1 | Mean-D4 | 0.9915 | Mean-D4 | 0.9956 | Mean-D4 | 0.9870 |
| 2 | Mean-D3 | 0.9901 | Mean-D3 | 0.9906 | Mean-D2 | 0.9788 |
| 3 | Pentropy-A4 | 0.9853 | Mean-D2 | 0.9618 | Mean-D3 | 0.9691 |
| 4 | Pentropy-A1 | 0.9850 | Mean-D1 | 0.8881 | Pentropy-A4 | 0.9059 |
| 5 | Pentropy-A2 | 0.9848 | Energy-D3 | 0.6470 | Mean-D1 | 0.8725 |
| 6 | Pentropy-A3 | 0.9847 | Variance-D3 | 0.6470 | Pentropy-A3 | 0.7945 |
| 7 | Mean-D2 | 0.9622 | Energy-D4 | 0.6448 | Pentropy-A2 | 0.7448 |
| 8 | Mean-D1 | 0.8922 | Variance-D4 | 0.6448 | Pentropy-A1 | 0.7307 |
| 9 | Pentropy-D4 | 0.2313 | Energy-D2 | 0.6350 | Variance-A4 | 0.5659 |
| 10 | Kurtosis-A4 | 0.1651 | Variance-D2 | 0.6350 | Variance-A1 | 0.5628 |

| Number of Descriptors | Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| All 56 | SVD | 0.9999 | 99.94 | 0.9994 | 99.94 | 99.94 | 0.9995 | 99.95 | 0.9995 | 99.95 | 99.95 |
|  | PdA | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9992 | 99.92 | 0.9992 | 99.92 | 99.92 |
|  | MEEI | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9988 | 99.88 | 0.9988 | 99.88 | 99.88 |
| Common 3 | SVD | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9997 | 99.97 | 0.9997 | 99.97 | 99.97 |
|  | PdA | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 |
|  | MEEI | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 |

| Rank | PdA Parameter | PdA IG Value | AVPD Parameter | AVPD IG Value |
|---|---|---|---|---|
| 1 | Mean-D4 | 0.9962 | Mean-D4 | 0.9956 |
| 2 | Mean-D3 | 0.9883 | Mean-D2 | 0.9660 |
| 3 | Mean-D2 | 0.9619 | Mean-D3 | 0.9650 |
| 4 | Mean-D1 | 0.8768 | Mean-D1 | 0.8829 |
| 5 | Variance-D3 | 0.6070 | Variance-A4 | 0.8494 |
| 6 | Energy-D3 | 0.6070 | Variance-A1 | 0.8493 |
| 7 | Variance-D4 | 0.6012 | Variance-A2 | 0.8493 |
| 8 | Energy-D4 | 0.6012 | Variance-A3 | 0.8490 |
| 9 | Variance-D2 | 0.5928 | Std-Dev-A4 | 0.8473 |
| 10 | Energy-D2 | 0.5928 | Std-Dev-A2 | 0.8470 |

| Number of Descriptors | Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| All 56 | PdA | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9994 | 99.94 | 0.9994 | 99.94 | 99.94 |
|  | AVPD | 0.9999 | 99.92 | 0.9992 | 99.92 | 99.92 | 0.9997 | 99.97 | 0.9997 | 99.97 | 99.97 |
| Common 3 | PdA | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 |
|  | AVPD | 0.9999 | 99.99 | 0.9999 | 99.99 | 99.99 | 0.9997 | 99.97 | 0.9997 | 99.97 | 99.97 |

| Rank | PdA Parameter | PdA IG Value | AVPD Parameter | AVPD IG Value |
|---|---|---|---|---|
| 1 | Mean-D2 | 1.6651 | Mean-D2 | 1.4317 |
| 2 | Mean-D1 | 1.3641 | Mean-D4 | 1.4194 |
| 3 | Mean-D3 | 1.2962 | Mean-D1 | 1.1262 |
| 4 | Mean-D4 | 1.0894 | Mean-D3 | 0.9220 |
| 5 | Variance-A2 | 0.7819 | Skewness-D4 | 0.6898 |
| 6 | Variance-A1 | 0.7819 | Skewness-D3 | 0.4225 |
| 7 | Variance-A3 | 0.7817 | Variance-D4 | 0.3733 |
| 8 | Variance-A4 | 0.7784 | Energy-D4 | 0.3714 |
| 9 | Variance-D4 | 0.7726 | Variance-D3 | 0.3618 |
| 10 | Energy-D4 | 0.7726 | Energy-D3 | 0.3601 |

| Number of Descriptors | Database | SVM: AUC | SVM: CA (%) | SVM: F1 Score | SVM: PPV (%) | SVM: Recall (%) | SGD: AUC | SGD: CA (%) | SGD: F1 Score | SGD: PPV (%) | SGD: Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| All 56 | PdA | 0.9999 | 99.01 | 0.9901 | 99.01 | 99.01 | 0.9899 | 98.24 | 0.9825 | 98.31 | 98.24 |
|  | AVPD | 0.9997 | 97.61 | 0.9762 | 97.77 | 97.61 | 0.9903 | 98.38 | 0.9838 | 98.45 | 98.38 |
| Top 10 | PdA | 0.9135 | 75.21 | 0.7063 | 75.84 | 75.21 | 0.9602 | 93.04 | 0.9298 | 93.14 | 93.04 |
|  | AVPD | 0.9998 | 97.58 | 0.9760 | 97.77 | 97.58 | 0.9868 | 97.80 | 0.9779 | 97.84 | 97.80 |
| Common 3 | PdA | 0.9481 | 73.69 | 0.6749 | 74.89 | 73.69 | 0.8786 | 78.76 | 0.7438 | 70.88 | 78.76 |
|  | AVPD | 0.9991 | 98.11 | 0.9811 | 98.12 | 98.11 | 0.9707 | 95.12 | 0.9506 | 95.44 | 95.12 |

| Database | SVM: Level 1 | SVM: Level 2 | SVM: Level 3 | SVM: Level 4 | SVM: Level 5 | SGD: Level 1 | SGD: Level 2 | SGD: Level 3 | SGD: Level 4 | SGD: Level 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 99.89 | 99.50 | 99.53 | 99.55 | 99.88 | 99.42 | 99.24 | 99.32 | 99.30 | 99.35 |
| PdA | 99.88 | 99.65 | 99.68 | 99.06 | 99.91 | 99.88 | 98.77 | 99.80 | 98.15 | 99.85 |
| AVPD | 99.97 | 99.96 | 99.92 | 99.87 | 99.96 | 99.93 | 99.99 | 99.98 | 99.97 | 99.93 |
| MEEI | 99.99 | 99.99 | 99.99 | 99.93 | 99.99 | 99.93 | 99.93 | 99.93 | 99.93 | 99.99 |

| Database | SVM: Level 1 | SVM: Level 2 | SVM: Level 3 | SVM: Level 4 | SVM: Level 5 | SGD: Level 1 | SGD: Level 2 | SGD: Level 3 | SGD: Level 4 | SGD: Level 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 96.75 | 98.05 | 98.49 | 98.62 | 98.25 | 96.24 | 99.19 | 98.22 | 98.72 | 98.69 |
| PdA | 94.10 | 92.14 | 97.92 | 98.93 | 99.04 | 93.20 | 96.49 | 98.93 | 99.09 | 99.94 |
| AVPD | 95.66 | 99.55 | 98.45 | 99.85 | 99.85 | 97.91 | 99.61 | 98.62 | 99.75 | 99.67 |
| MEEI | 97.22 | 98.95 | 99.13 | 99.47 | 98.95 | 96.00 | 99.13 | 99.65 | 99.99 | 98.78 |

| Ref. No. | Descriptors Used | SVD | PdA | AVPD | MEEI | Private |
|---|---|---|---|---|---|---|
| Reported accuracies (%) for voice disorder identification | | | | | | |
| [21] | MFCC | 80.20 | -- | 83.65 | 94.60 | -- |
| [29] | IDP | 93.20 | -- | 91.50 | 99.40 | -- |
| [30] | Glottal flow descriptors | 99.80 | 99.70 | 99.80 | 99.80 | -- |
| [46] | Auto-correlation and entropy | 92.79 | -- | 99.79 | 99.69 | -- |
| [47] | Peak and lag | 90.97 | -- | 91.16 | 99.80 | -- |
| [48] | MDVP | 99.68 | -- | 72.53 | 88.21 | -- |
| [49] | Linear prediction of discrete wavelet coefficients | -- | -- | -- | 97.01 | -- |
| Proposed approach | SWT-based energy and statistical features | 99.98 | 99.98 | 99.99 | 99.99 | -- |
| Reported accuracies (%) for voice disorder classification | | | | | | |
| [28] | Short-time and glottal flow descriptors | -- | -- | -- | -- | 96.20 |
| [30] | Glottal flow descriptors | 90.40 | 99.30 | 99.80 | 90.10 | -- |
| [46] | Auto-correlation and entropy features | 99.53 | 96.02 | 99.54 | -- |  |
| [47] | Peak and lag | 98.94 | -- | 95.18 | 99.25 | -- |
| Proposed approach | SWT-based energy and statistical features | 99.86 | 98.93 | 99.85 | 99.65 | -- |

| Database | SVM: Haar | SVM: db2 | SVM: db4 | SVM: db6 | SVM: db8 | SVM: db10 | SVM: db12 | SGD: Haar | SGD: db2 | SGD: db4 | SGD: db6 | SGD: db8 | SGD: db10 | SGD: db12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 99.55 | 62.50 | 73.99 | 61.80 | 64.85 | 61.10 | 77.05 | 99.30 | 99.28 | 96.91 | 99.39 | 98.49 | 99.12 | 98.92 |
| PdA | 99.06 | 87.53 | 82.58 | 91.00 | 79.70 | 72.82 | 86.62 | 98.15 | 98.97 | 98.06 | 99.03 | 93.45 | 97.55 | 96.92 |
| AVPD | 99.87 | 95.96 | 92.57 | 83.51 | 85.94 | 90.94 | 82.50 | 99.97 | 98.90 | 99.86 | 99.39 | 99.00 | 98.16 | 99.11 |
| MEEI | 99.93 | 99.72 | 99.86 | 99.93 | 99.79 | 99.86 | 99.79 | 99.93 | 98.68 | 99.37 | 99.79 | 99.16 | 99.65 | 99.37 |

| Database | SVM: Haar | SVM: db2 | SVM: db4 | SVM: db6 | SVM: db8 | SVM: db10 | SVM: db12 | SGD: Haar | SGD: db2 | SGD: db4 | SGD: db6 | SGD: db8 | SGD: db10 | SGD: db12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SVD | 98.62 | 81.60 | 87.16 | 90.01 | 86.16 | 91.22 | 90.75 | 98.72 | 95.27 | 96.51 | 96.51 | 95.41 | 94.37 | 97.42 |
| PdA | 98.93 | 83.43 | 86.94 | 79.72 | 90.92 | 81.84 | 93.25 | 99.09 | 95.64 | 91.98 | 92.94 | 91.82 | 90.92 | 95.06 |
| AVPD | 99.85 | 71.55 | 62.16 | 83.02 | 59.73 | 66.34 | 57.74 | 99.75 | 91.06 | 90.89 | 93.51 | 91.95 | 94.26 | 87.93 |
| MEEI | 99.47 | 93.75 | 91.31 | 94.44 | 88.71 | 88.36 | 86.97 | 99.99 | 98.43 | 95.65 | 93.57 | 89.93 | 92.70 | 88.71 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shrivas, A.; Deshpande, S.; Gidaye, G.; Nirmal, J.; Ezzine, K.; Frikha, M.; Desai, K.; Shinde, S.; Oza, A.D.; Burduhos-Nergis, D.D.; et al. Employing Energy and Statistical Features for Automatic Diagnosis of Voice Disorders. Diagnostics 2022, 12, 2758. https://doi.org/10.3390/diagnostics12112758


Chicago/Turabian Style: Shrivas, Avinash, Shrinivas Deshpande, Girish Gidaye, Jagannath Nirmal, Kadria Ezzine, Mondher Frikha, Kamalakar Desai, Sachin Shinde, Ankit D. Oza, Dumitru Doru Burduhos-Nergis, et al. 2022. "Employing Energy and Statistical Features for Automatic Diagnosis of Voice Disorders" Diagnostics 12, no. 11: 2758. https://doi.org/10.3390/diagnostics12112758

APA Style: Shrivas, A., Deshpande, S., Gidaye, G., Nirmal, J., Ezzine, K., Frikha, M., Desai, K., Shinde, S., Oza, A. D., Burduhos-Nergis, D. D., & Burduhos-Nergis, D. P. (2022). Employing Energy and Statistical Features for Automatic Diagnosis of Voice Disorders. Diagnostics, 12(11), 2758. https://doi.org/10.3390/diagnostics12112758