Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

2.1. Identification of Microbial Keratitis

2.2. Exclusion Criteria

2.3. Image Collection

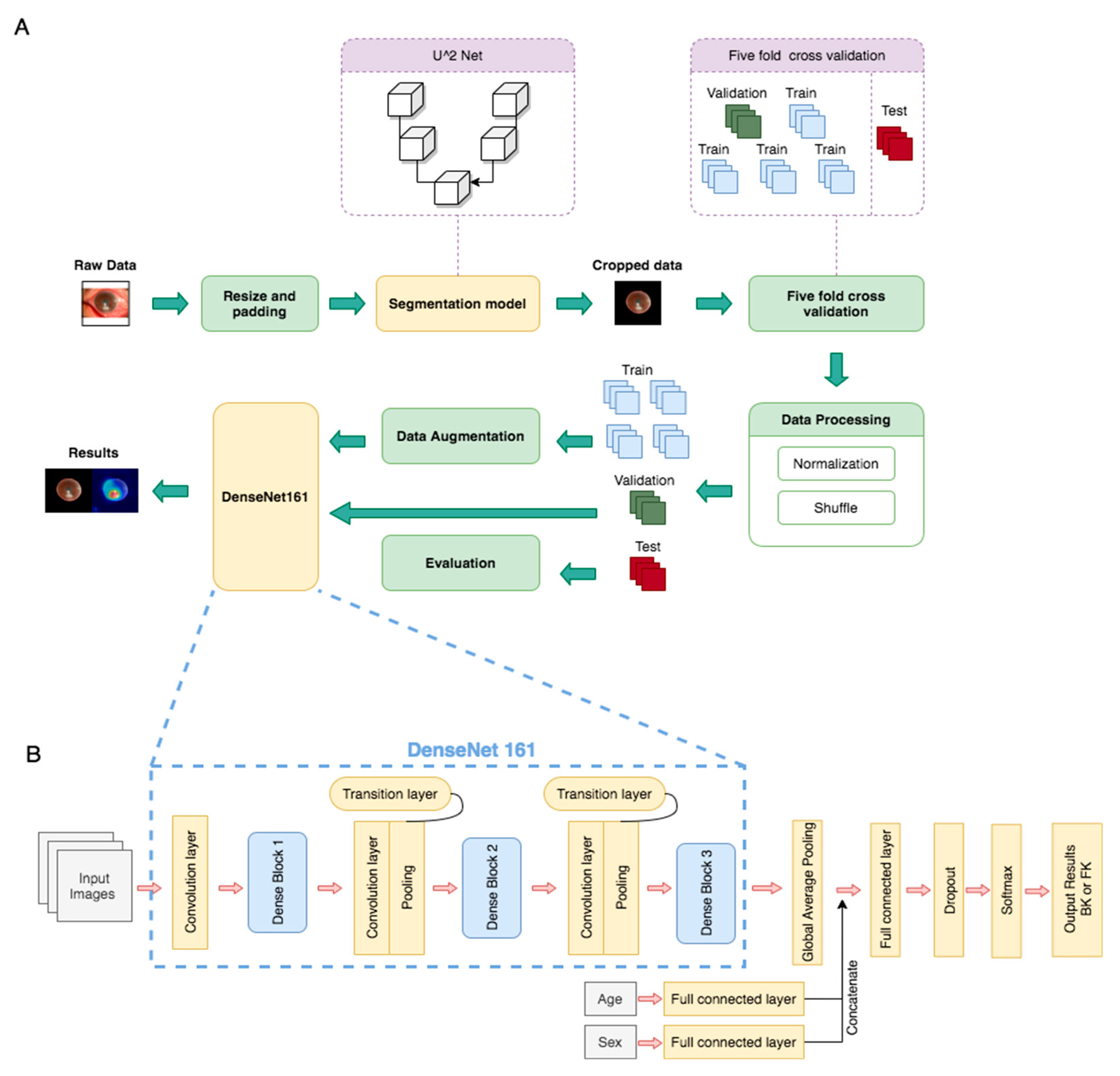

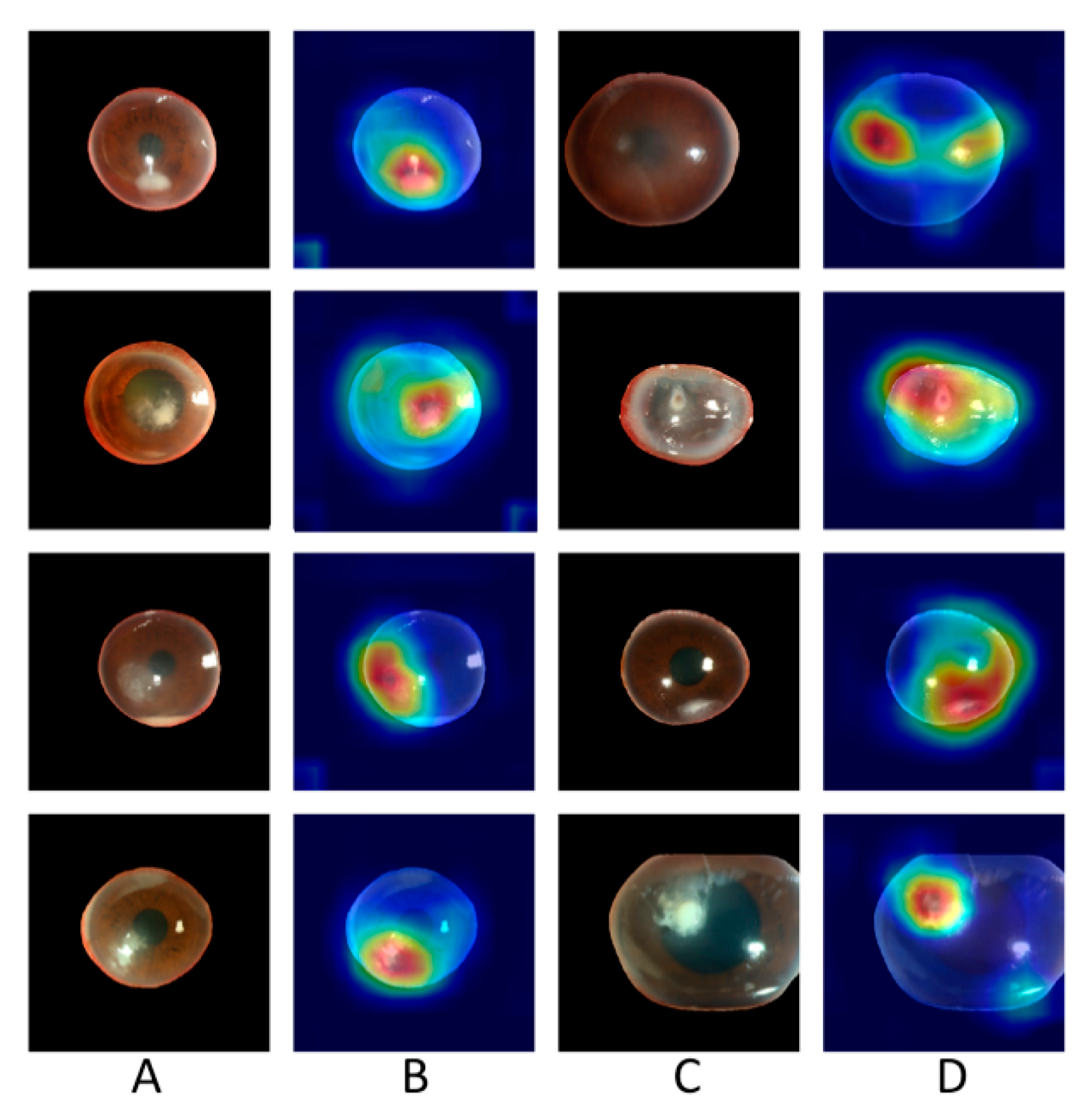

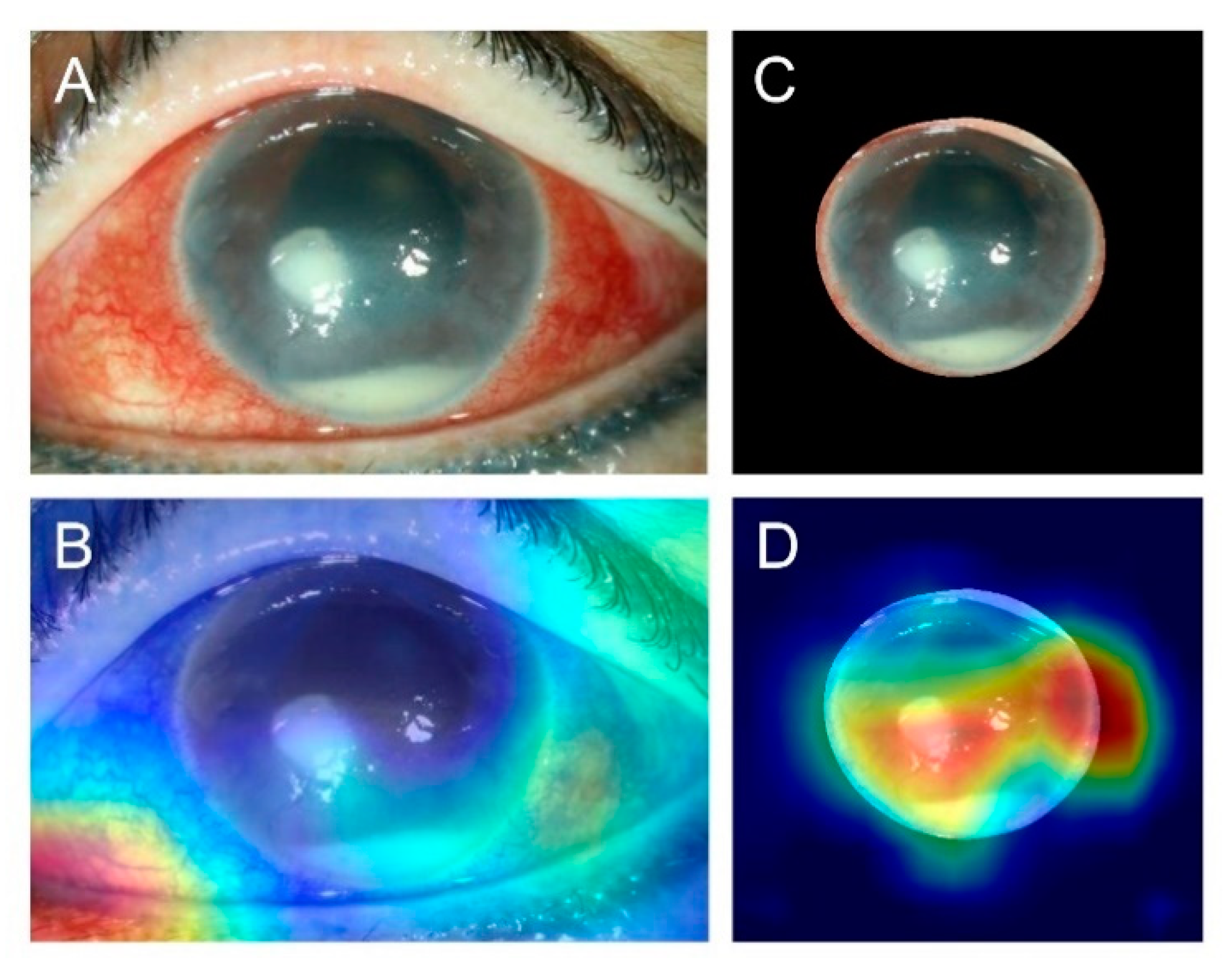

2.4. Algorithm Architecture

2.5. Performance Interpretation and Statistics

3. Results

3.1. Patient Classification and Characteristics

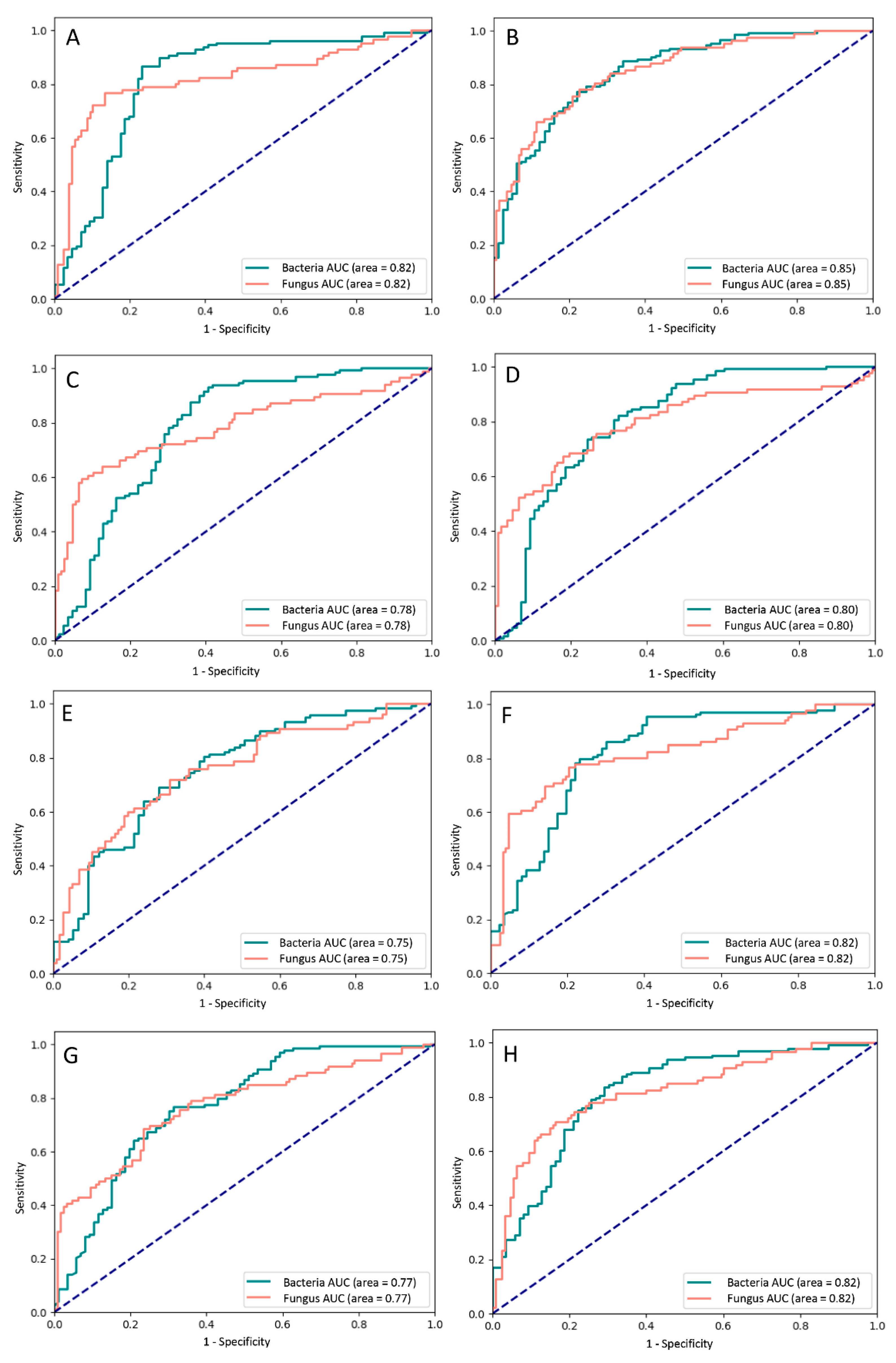

3.2. Performance of Different Models

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristics | Total (n = 580) | Training (n = 388) | Validation (n = 96) | Testing (n = 96) |
|---|---|---|---|---|
| Male, n (%) | 420 (72.4) | 278 (71.6) | 74 (77.1) | 68 (70.8) |
| Age, years, mean ± SD | 55.4 ± 20.2 | 53.6 ± 19.7 | 62.1 ± 20.0 | 55.8 ± 21.2 |
| Patients, n (% of all 580 patients) / Photos, n | | | | |
| Bacteria | 346 (59.7)/824 | 231 (39.8)/562 | 60 (10.3)/134 | 55 (9.5)/128 |
| Fungus | 234 (40.3)/506 | 157 (27.1)/342 | 36 (6.2)/78 | 41 (7.1)/86 |
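The percentages in the patient rows of the characteristics table use the whole cohort (580 patients) as the denominator, whereas the "Male, n (%)" row uses each split's own size (for example, 278/388 = 71.6%). The short Python check below uses only counts copied from that table: it reproduces the published cohort-level percentages and also computes the class balance within each split, which the table does not report directly. The function names are illustrative, and the sketch says nothing about how the authors actually assigned patients to splits.

```python
# Patient counts per split and class, copied from the characteristics table.
TOTAL_PATIENTS = 580
PATIENTS = {
    "Training": {"Bacteria": 231, "Fungus": 157},
    "Validation": {"Bacteria": 60, "Fungus": 36},
    "Testing": {"Bacteria": 55, "Fungus": 41},
}


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, matching the table."""
    return round(100 * part / whole, 1)


def cohort_percentages(counts: dict, total: int) -> dict:
    """Share of the whole cohort, the denominator used in the table's patient rows."""
    return {
        split: {cls: pct(n, total) for cls, n in by_class.items()}
        for split, by_class in counts.items()
    }


def within_split_percentages(counts: dict) -> dict:
    """Share of each split's own patients, i.e. the class balance inside a split."""
    result = {}
    for split, by_class in counts.items():
        split_size = sum(by_class.values())
        result[split] = {cls: pct(n, split_size) for cls, n in by_class.items()}
    return result


if __name__ == "__main__":
    print(cohort_percentages(PATIENTS, TOTAL_PATIENTS))
    print(within_split_percentages(PATIENTS))
```

With these counts, bacterial keratitis makes up 59.5%, 62.5%, and 57.3% of the training, validation, and testing patients, respectively, close to the 59.7% seen in the full cohort.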

| Model | Validation accuracy (%) | Testing accuracy (%) | Average accuracy (%) | BK accuracy (%) | FK accuracy (%) | Sensitivity (95% CI) | Specificity (95% CI) | PPV (95% CI) | NPV (95% CI) |
|---|---|---|---|---|---|---|---|---|---|
| DenseNet121 | 79.7 | 77.9 | 78.8 | 85.0 | 59.6 | 59.7 (45.8–72.4) | 85.0 (78.2–90.4) | 60.7 (49.9–70.6) | 84.5 (79.7–88.2) |
| DenseNet161 | 77.5 | 79.7 | 78.6 | 87.3 | 65.8 | 65.8 (41.5–65.8) | 87.3 (86.0–95.3) | 74.0 (65.1–82.9) | 82.4 (74.9–82.4) |
| DenseNet169 | 83.6 | 75.0 | 79.3 | 79.6 | 63.2 | 63.2 (49.3–75.6) | 79.6 (72.2–85.8) | 54.6 (45.2–63.6) | 84.8 (79.7–88.8) |
| DenseNet201 | 78.0 | 78.9 | 78.4 | 87.8 | 56.1 | 56.1 (42.4–69.3) | 87.8 (81.3–92.6) | 64.0 (52.1–74.4) | 83.8 (79.3–87.4) |
| EfficientNetB3 | 77.8 | 74.3 | 76.1 | 85.2 | 58.1 | 58.1 (47.0–68.7) | 85.2 (77.8–90.8) | 72.5 (62.6–80.5) | 75.2 (70.0–79.7) |
| InceptionV3 | 79.7 | 78.0 | 78.9 | 89.1 | 61.6 | 61.6 (50.5–71.9) | 89.1 (82.3–93.9) | 79.1 (69.2–86.5) | 77.6 (72.4–82.0) |
| ResNet101 | 79.2 | 80.9 | 80.0 | 93.2 | 49.1 | 49.1 (35.6–62.7) | 93.2 (87.9–96.7) | 73.7 (59.3–84.3) | 82.5 (78.5–86.0) |
| ResNet50 | 78.2 | 76.5 | 77.3 | 95.9 | 26.3 | 26.3 (15.5–39.7) | 95.9 (91.3–98.5) | 71.4 (50.5–86.0) | 77.1 (74.1–79.7) |

BK, bacterial keratitis; FK, fungal keratitis; PPV, positive predictive value; NPV, negative predictive value; CI, confidence interval. Average accuracy is the mean of validation and testing accuracy (within rounding). BK and FK accuracy are the percentages of bacterial and fungal cases classified correctly; they match specificity and sensitivity, respectively (within rounding), which therefore treat FK as the positive class.
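The model-comparison table reports sensitivity, specificity, PPV, and NPV with 95% confidence intervals, and its FK column equals sensitivity, so FK is the positive class. The Python sketch below shows how all four metrics, plus overall accuracy, follow from a 2×2 confusion matrix under that convention. The source does not state how its intervals were computed, so the Wilson score interval used here is a swapped-in, assumed method (an exact Clopper-Pearson interval is another common choice), and the confusion counts in the example are hypothetical, not taken from the study.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist
from typing import Dict, Tuple


@dataclass
class Confusion:
    """2x2 confusion counts with fungal keratitis (FK) as the positive class."""
    tp: int  # FK images predicted as FK
    fn: int  # FK images predicted as BK
    tn: int  # BK images predicted as BK
    fp: int  # BK images predicted as FK


def wilson_interval(successes: int, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (assumed CI method)."""
    if n <= 0:
        raise ValueError("n must be positive")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


def summarize(c: Confusion) -> Dict[str, Tuple[float, Tuple[float, float]]]:
    """Point estimate and 95% Wilson interval for each metric."""
    pairs = {
        "sensitivity": (c.tp, c.tp + c.fn),  # equals FK accuracy
        "specificity": (c.tn, c.tn + c.fp),  # equals BK accuracy
        "ppv": (c.tp, c.tp + c.fp),
        "npv": (c.tn, c.tn + c.fn),
        "accuracy": (c.tp + c.tn, c.tp + c.fn + c.tn + c.fp),
    }
    return {name: (k / n, wilson_interval(k, n)) for name, (k, n) in pairs.items()}


if __name__ == "__main__":
    # Hypothetical counts for illustration only; not taken from the study.
    example = Confusion(tp=40, fn=20, tn=90, fp=10)
    for name, (value, (lo, hi)) in summarize(example).items():
        print(f"{name}: {100 * value:.1f} ({100 * lo:.1f}-{100 * hi:.1f})")
```

The Wilson interval is used here only because it needs nothing beyond the standard library and stays within [0, 1]; with the hypothetical counts above, a sensitivity of 66.7% gets an interval of roughly 54.1 to 77.3%. Note also that PPV and NPV depend on the FK:BK ratio of the evaluation set, whereas sensitivity and specificity do not.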

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Hung, N.; Shih, A.K.-Y.; Lin, C.; Kuo, M.-T.; Hwang, Y.-S.; Wu, W.-C.; Kuo, C.-F.; Kang, E.Y.-C.; Hsiao, C.-H. Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks. Diagnostics 2021, 11, 1246. https://doi.org/10.3390/diagnostics11071246

Hung N, Shih AK-Y, Lin C, Kuo M-T, Hwang Y-S, Wu W-C, Kuo C-F, Kang EY-C, Hsiao C-H. Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks. Diagnostics. 2021; 11(7):1246. https://doi.org/10.3390/diagnostics11071246

Chicago/Turabian Style: Hung, Ning, Andy Kuan-Yu Shih, Chihung Lin, Ming-Tse Kuo, Yih-Shiou Hwang, Wei-Chi Wu, Chang-Fu Kuo, Eugene Yu-Chuan Kang, and Ching-Hsi Hsiao. 2021. "Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks" Diagnostics 11, no. 7: 1246. https://doi.org/10.3390/diagnostics11071246

APA Style: Hung, N., Shih, A. K.-Y., Lin, C., Kuo, M.-T., Hwang, Y.-S., Wu, W.-C., Kuo, C.-F., Kang, E. Y.-C., & Hsiao, C.-H. (2021). Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks. Diagnostics, 11(7), 1246. https://doi.org/10.3390/diagnostics11071246