Proof-of-Concept Study on an Automatic Computational System in Detecting and Classifying Occlusal Caries Lesions from Smartphone Color Images of Unrestored Extracted Teeth

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

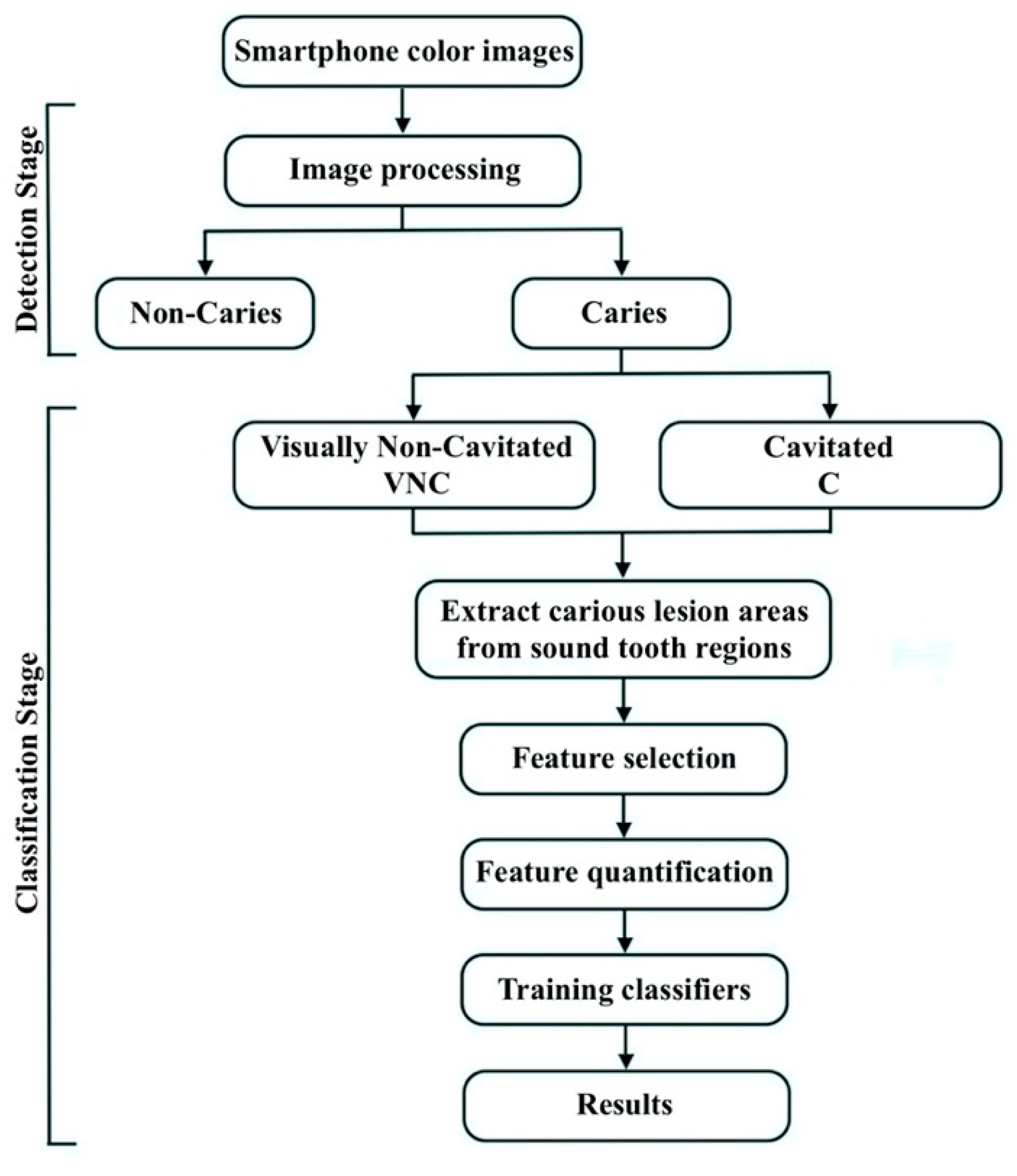

2.2. Caries Detection Stage

2.2.1. Image Processing

2.2.2. Evaluation Process for Caries Detection Stage

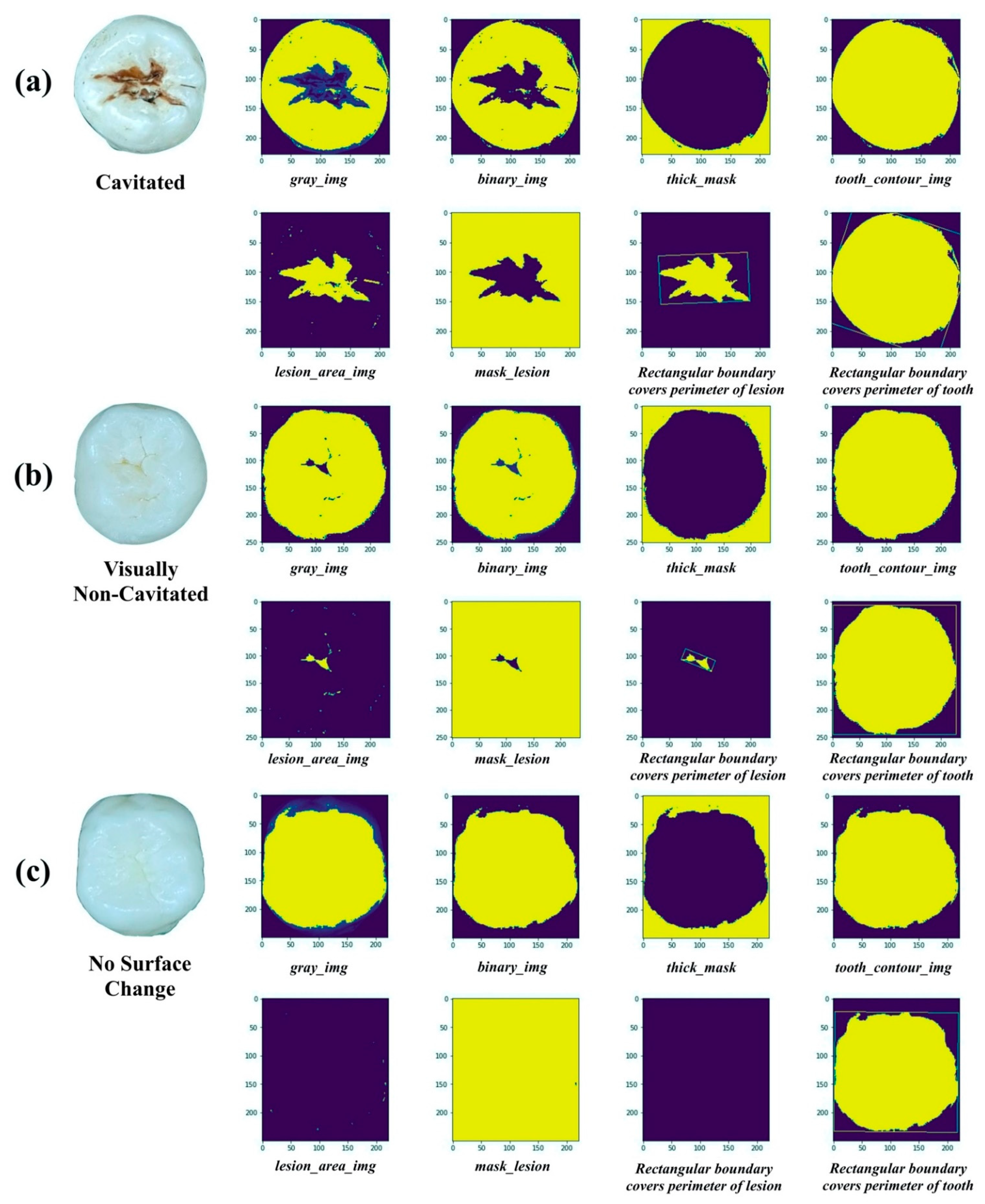

2.3. Caries Classification Stage

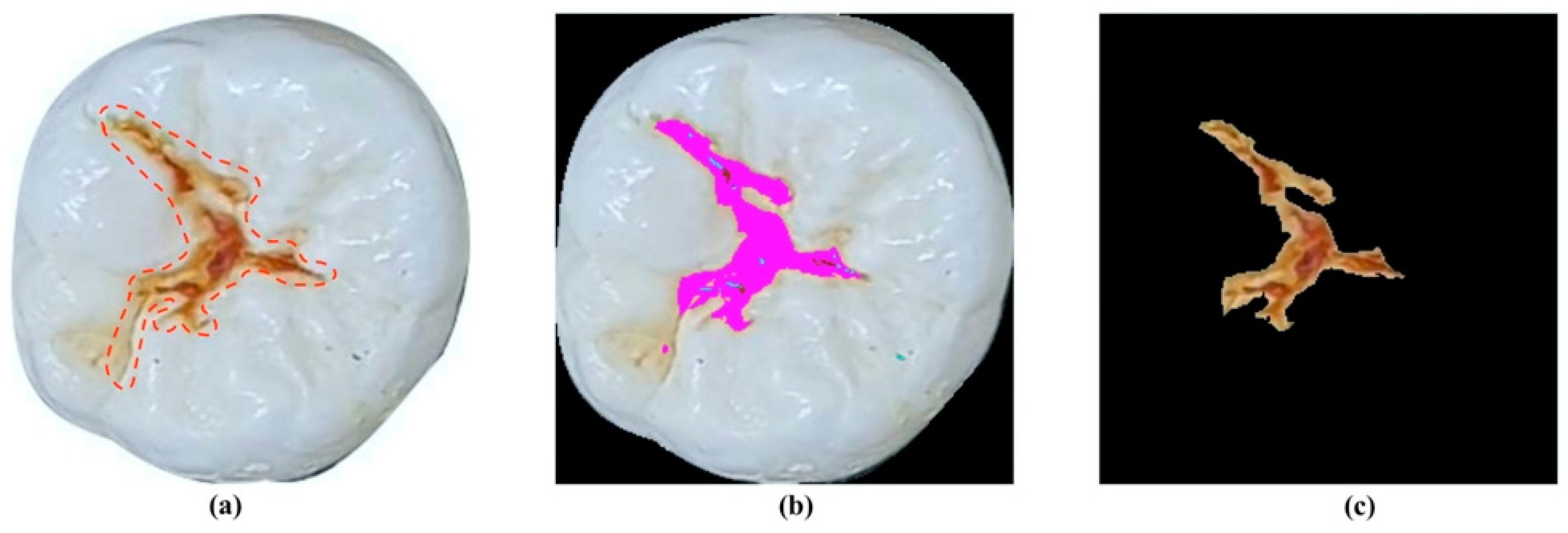

2.3.1. Feature Selection and Quantification

2.3.2. Classification Algorithms and Evaluation Metrics

2.4. Convolutional Neural Network

3. Results

3.1. Caries Detection Stage

3.2. Caries Classification Stage

3.2.1. Caries Classification with Selected Features

3.2.2. Convolutional Neural Network Classification

4. Discussion

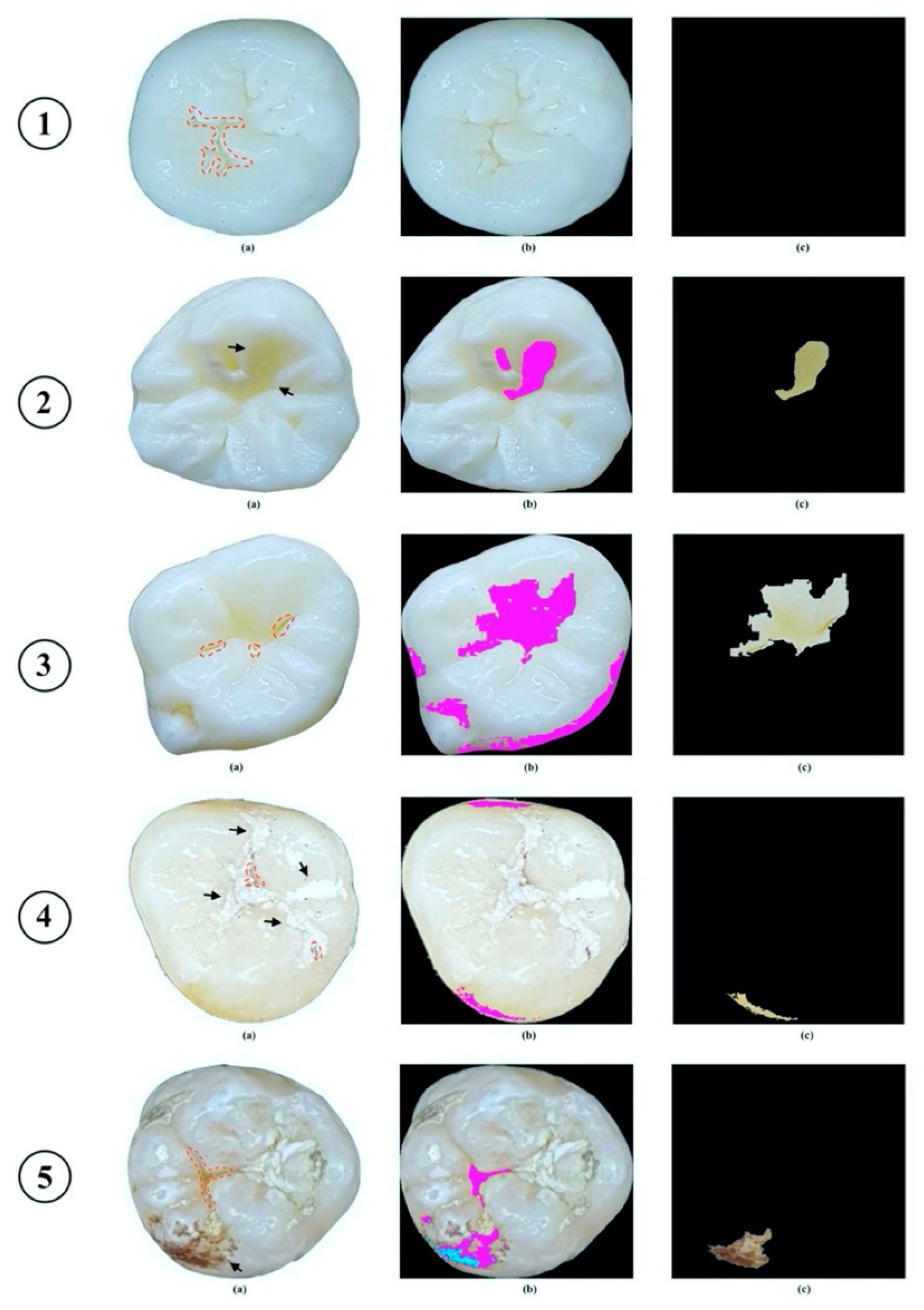

- Minor changes on the occlusal surface were not detected by the proposed method.

- The proposed method misidentified a shadow on the occlusal surface of a “Non-Caries” tooth as a carious lesion (indicated by arrow).

- The proposed method correctly recognized the carious lesion on the occlusal surface, but because of light reflection, the lesion was identified as larger than it actually is.

- The proposed method misidentified a shadow on the occlusal surface as a carious lesion, while the true lesion could not be identified because of the presence of dental plaque (indicated by arrow).

- The proposed method misidentified the carious lesion because of stains on the occlusal surface (indicated by arrow).

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Global Burden of Disease Study 2013 Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990–2013: A systematic analysis for the Global Burden of Disease Study 2013. Lancet 2015, 386, 743–800.

- Neuhaus, K.W.; Ellwood, R.; Lussi, A.; Pitts, N.B. Traditional lesion detection aids. Monogr. Oral Sci. 2009, 21, 42–51.

- Amaechi, B.T.; Podoleanu, A.; Higham, S.M.; Jackson, D.A. Correlation of quantitative light-induced fluorescence and optical coherence tomography applied for detection and quantification of early dental caries. J. Biomed. Opt. 2003, 8, 642–647.

- Abrams, S.H.; Sivagurunathan, K.S.; Silvertown, J.D.; Wong, B.; Hellen, A.; Mandelis, A.; Hellen, W.M.; Elman, G.I.; Mathew, S.; Mensinkai, P.K. Correlation with caries lesion depth of the Canary System, DIAGNODENT and ICDAS II. Open Dent. J. 2017, 11, 679.

- Jeon, R.J.; Matvienko, A.; Mandelis, A.; Abrams, S.H.; Amaechi, B.T.; Kulkarni, G. Detection of interproximal demineralized lesions on human teeth in vitro using frequency-domain infrared photothermal radiometry and modulated luminescence. J. Biomed. Opt. 2007, 12, 034028.

- Attrill, D.C.; Ashley, P.F. Occlusal caries detection in primary teeth: A comparison of DIAGNOdent with conventional methods. Br. Dent. J. 2001, 190, 440–443.

- Söchtig, F.; Hickel, R.; Kühnisch, J. Caries detection and diagnostics with near-infrared light transillumination: Clinical experiences. Quintessence Int. 2014, 45.

- Davies, G.M.; Worthington, H.V.; Clarkson, J.E.; Thomas, P.; Davies, R.M. The use of fibre-optic transillumination in general dental practice. Br. Dent. J. 2001, 191, 145–147.

- Ricketts, D.N.; Kidd, E.A.; Liepins, P.J.; Wilson, R.F. Histological validation of electrical resistance measurements in the diagnosis of occlusal caries. Caries Res. 1996, 30, 148–155.

- Bottenberg, P.; Jacquet, W.; Behrens, C.; Stachniss, V.; Jablonski-Momeni, A. Comparison of occlusal caries detection using the ICDAS criteria on extracted teeth or their photographs. BMC Oral Health 2016, 16, 93.

- Boye, U.; Walsh, T.; Pretty, I.A.; Tickle, M. Comparison of photographic and visual assessment of occlusal caries with histology as the reference standard. BMC Oral Health 2012, 12, 10.

- Umemori, S.; Tonami, K.; Nitta, H.; Mataki, S.; Araki, K. The possibility of digital imaging in the diagnosis of occlusal caries. Int. J. Dent. 2010, 2010, 860515.

- Kositbowornchai, S.; Siriteptawee, S.; Plermkamon, S.; Bureerat, S.; Chetchotsak, D. An Artificial Neural Network for Detection of Simulated Dental Caries. Int. J. Comput. Assist. Radiol. Surg. 2006, 1, 91–96.

- Olsen, G. Fundamental Work toward an Image Processing-Empowered Dental Intelligent Educational System. Ph.D. Thesis, Virginia Commonwealth University, Richmond, VA, USA, 2010.

- Ghaedi, L.; Gottlieb, R.; Sarrett, D.C.; Ismail, A.; Belle, A.; Najarian, K.; Hargraves, R.H. An automated dental caries detection and scoring system for optical images of tooth occlusal surface. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2014, 2014, 1925–1928.

- Berdouses, E.D.; Koutsouri, G.D.; Tripoliti, E.E.; Matsopoulos, G.K.; Oulis, C.J.; Fotiadis, D.I. A computer-aided automated methodology for the detection and classification of occlusal caries from photographic color images. Comput. Biol. Med. 2015, 62, 119–135.

- Ahmad, I. Digital dental photography. Part 4: Choosing a camera. Br. Dent. J. 2009, 206, 575–581.

- Kohara, E.K.; Abdala, C.G.; Novaes, T.F.; Braga, M.M.; Haddad, A.E.; Mendes, F.M. Is it feasible to use smartphone images to perform telediagnosis of different stages of occlusal caries lesions? PLoS ONE 2018, 13, e0202116.

- Estai, M.; Kanagasingam, Y.; Huang, B.; Shiikha, J.; Kruger, E.; Bunt, S.; Tennant, M. Comparison of a Smartphone-Based Photographic Method with Face-to-Face Caries Assessment: A Mobile Teledentistry Model. Telemed. J. E Health 2017, 23, 435–440.

- Banting, D.; Eggertsson, H.; Ekstrand, K.; Ferreira-Zandoná, A.; Ismail, A.; Longbottom, C.; Pitts, N.; Reich, E.; Ricketts, D.; Selwitz, R. Rationale and evidence for the international caries detection and assessment system (ICDAS II). Ann. Arbor. 2005, 1001, 48109-1078.

- Duong, D.L.; Kabir, M.H.; Kuo, R.F. Automated caries detection with smartphone color photography using machine learning. Health Inform. J. 2021, 27, 14604582211007530.

- Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–125.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Keller, J.; Gray, M.R.; Givens, J.A. A fuzzy K-nearest neighbor algorithm. IEEE Trans. Syst. Man Cybern. 1985, SMC-15, 580–585.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning, 2nd ed.; Springer: New York, NY, USA, 2009; pp. 119–127.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Bader, J.D.; Shugars, D.A.; Bonito, A.J. Systematic reviews of selected dental caries diagnostic and management methods. J. Dent. Educ. 2001, 65, 960–968.

- Bader, J.D.; Shugars, D.A.; Bonito, A.J. A systematic review of the performance of methods for identifying carious lesions. J. Public Health Dent. 2002, 62, 201–213.

- Tu, J.V. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J. Clin. Epidemiol. 1996, 49, 1225–1231.

ICDAS criteria codes 0–6 were grouped into three labeled classes:

| Labeled class | Number of images (n) |
|---|---|
| No Surface Change (NSC) | 73 |
| Visually Non-Cavitated (VNC) | 220 |
| Cavitated (C) | 294 |

Features of Carious Lesions

| Class | Length | Width | Smoothness | Ratio | Convex Area |
|---|---|---|---|---|---|
| NSC | 0 | 0 | 0 | 0 | 0 |
| VNC | ✓ | ✓ | ✓ | ✓ | ✓ |
| C | ✓ | ✓ | ✓ | ✓ | ✓ |
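The feature table lists length, width, smoothness, ratio and convex area for lesion regions, with every feature equal to zero for NSC teeth. This section does not define how each feature is measured, so the following is only a hypothetical sketch in plain Python. It assumes the detection stage yields a binary lesion mask, takes length and width as the longer and shorter sides of the axis-aligned bounding box, ratio as length/width, and convex area as the area of the convex hull of the lesion pixel centres. Smoothness is omitted because no definition is given here. The function names (`shape_features`, `convex_hull`, `polygon_area`) are illustrative and do not come from the authors' code.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[int, int]  # (row, col) of a lesion pixel


def lesion_pixels(mask: Sequence[Sequence[int]]) -> List[Point]:
    """Coordinates of all nonzero pixels in a binary lesion mask."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


def _cross(o: Point, a: Point, b: Point) -> int:
    """Z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone-chain convex hull (collinear points are dropped)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(vertices: List[Point]) -> float:
    """Shoelace formula; degenerate hulls (< 3 vertices) have zero area."""
    if len(vertices) < 3:
        return 0.0
    twice_area = 0
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def shape_features(mask: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Hypothetical lesion descriptors; all zero when no lesion is present (NSC)."""
    pts = lesion_pixels(mask)
    if not pts:
        return {"length": 0, "width": 0, "ratio": 0.0, "convex_area": 0.0}
    rows = [r for r, _ in pts]
    cols = [c for _, c in pts]
    extent_r = max(rows) - min(rows) + 1
    extent_c = max(cols) - min(cols) + 1
    length, width = max(extent_r, extent_c), min(extent_r, extent_c)
    return {
        "length": length,
        "width": width,
        "ratio": length / width,
        # Hull of pixel centres: a solid h x w block gives (h - 1) * (w - 1).
        "convex_area": polygon_area(convex_hull(pts)),
    }


if __name__ == "__main__":
    toy_mask = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0],
    ]
    print(shape_features(toy_mask))  # length 3, width 2, ratio 1.5, convex_area 2.0
```

The pure-Python hull keeps the sketch dependency-free. With OpenCV, which the reference list cites, `cv2.findContours`, `cv2.convexHull` and `cv2.contourArea` would be the usual building blocks, and a rotated bounding box would be a reasonable alternative definition of length and width.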

| Predicted class | True class: Caries | True class: Non-Caries |
|---|---|---|
| Caries | 462 | 22 |
| Non-Caries | 52 | 51 |
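The detection-stage metrics follow directly from the confusion matrix, treating Caries as the positive class. The column totals (514 true Caries, 73 true Non-Caries) equal VNC + C = 220 + 294 and NSC = 73, consistent with NSC images forming the Non-Caries class in this stage. The minimal sketch below recomputes the metrics from the four counts; the class name `BinaryConfusion` is illustrative. The counts reproduce the 87.39% accuracy and 89.88% sensitivity listed for "Our method" in the comparison table, and give a specificity of 51/73 ≈ 69.86%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryConfusion:
    """Counts for the caries-detection stage; 'positive' means Caries."""
    tp: int  # predicted Caries, true Caries
    fp: int  # predicted Caries, true Non-Caries
    fn: int  # predicted Non-Caries, true Caries
    tn: int  # predicted Non-Caries, true Non-Caries

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn

    def accuracy(self) -> float:
        return (self.tp + self.tn) / self.total

    def sensitivity(self) -> float:  # recall of the Caries class
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:  # recall of the Non-Caries class
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:  # positive predictive value
        return self.tp / (self.tp + self.fp)


# Counts copied from the detection confusion matrix.
detection = BinaryConfusion(tp=462, fp=22, fn=52, tn=51)

for name in ("accuracy", "sensitivity", "specificity", "precision"):
    print(f"{name}: {100 * getattr(detection, name)():.2f}%")
```

Because only 73 of the 587 images are Non-Caries, accuracy is dominated by the Caries class; specificity is the figure that exposes how often sound surfaces are flagged.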

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 Score (%) | Sensitivity (%) | Specificity (%) | AUC-ROC (%) |
|---|---|---|---|---|---|---|---|
| SVM | 88.76 | 92.31 | 86.19 | 89.14 | 92.31 | 85.21 | 95 |
| RF | 86.39 | 87.57 | 85.55 | 86.55 | 87.57 | 85.21 | 94 |
| KNN | 86.09 | 86.98 | 85.47 | 86.22 | 86.98 | 85.21 | 94 |
| GBT | 85.21 | 85.80 | 84.80 | 85.29 | 85.80 | 84.62 | 92 |
| LR | 82.25 | 84.62 | 80.79 | 82.66 | 84.62 | 79.88 | 92 |
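Two relationships can be checked directly against the classifier table. F1 is the harmonic mean of precision and recall. Recall and sensitivity are the same quantity, which is why those two columns coincide, and the mean of sensitivity and specificity (balanced accuracy) equals plain accuracy only when the two test classes, VNC and C, contain the same number of images. The sketch below recomputes both from the rounded table values. All five rows agree within rounding, which suggests a class-balanced test split; the section itself does not state the split, so treat that as an inference rather than a reported fact.

```python
# Values copied from the classifier table, in percent:
# (accuracy, precision, recall, specificity, reported F1)
RESULTS = {
    "SVM": (88.76, 86.19, 92.31, 85.21, 89.14),
    "RF": (86.39, 85.55, 87.57, 85.21, 86.55),
    "KNN": (86.09, 85.47, 86.98, 85.21, 86.22),
    "GBT": (85.21, 84.80, 85.80, 84.62, 85.29),
    "LR": (82.25, 80.79, 84.62, 79.88, 82.66),
}

# Inputs are rounded to 2 decimals, so recomputed values can differ slightly.
TOLERANCE = 0.015


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def balanced_accuracy(sensitivity: float, specificity: float) -> float:
    """Mean of the per-class recalls; equals plain accuracy when both classes are the same size."""
    return (sensitivity + specificity) / 2


for model, (acc, prec, rec, spec, f1_reported) in RESULTS.items():
    f1 = f1_score(prec, rec)
    bal = balanced_accuracy(rec, spec)
    ok = abs(f1 - f1_reported) < TOLERANCE and abs(bal - acc) < TOLERANCE
    print(f"{model}: F1 {f1:.2f} (table {f1_reported}), "
          f"balanced acc {bal:.2f} (table {acc}), consistent={ok}")
```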

| Model | Validation Accuracy (%) | Test Accuracy (%) | Training Epochs | CPU Time (min) |
|---|---|---|---|---|
| ResNet18 | 71.67 | 51.72 | 10 | 50 |
| ResNet50 | 68.33 | 63.79 | 10 | 94 |
| GoogLeNet | 71.67 | 65.52 | 10 | 42 |

| Authors | Methodology | Dataset | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|---|
| Kositbowornchai et al. [13] | Images from a charge-coupled device (CCD) camera and intra-oral digital radiography | Training set: 49 images (26 teeth that were sound or had artificially created buccal or lingual carious lesions; 23 teeth with sound or artificially induced proximal caries). Test set: 322 images (160 CCD images and 162 digital radiographs) | CCD: 77%; radiograph: 81% | CCD: 85%; radiograph: 93% | |
| Berdouses et al. [16] | Digital color images; preprocessing, segmentation, postprocessing | 103 images: 12 in vivo, 91 in vitro | 80% | 80% | |
| Our method | Smartphone color images; image processing | 587 in vitro images | 87.39% | 89.88% | 69.86% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Duong, D.L.; Nguyen, Q.D.N.; Tong, M.S.; Vu, M.T.; Lim, J.D.; Kuo, R.F. Proof-of-Concept Study on an Automatic Computational System in Detecting and Classifying Occlusal Caries Lesions from Smartphone Color Images of Unrestored Extracted Teeth. Diagnostics 2021, 11, 1136. https://doi.org/10.3390/diagnostics11071136
