1. Introduction

Keratoconus is a non-inflammatory, progressive, bilateral dystrophic disease characterised by conical corneal protrusion, irregular astigmatism and stromal thinning of the cornea at the apex. The main diagnostic methods for assessing the presence of keratoconus are topography, tomography, pachymetry and biomicroscopy [1]. Corneal topography and Scheimpflug imaging with a Pentacam system are used to analyse the corneal surface. This system is based on elevation detection and allows for the assessment of decentration, anterior and posterior surface conditions and corneal pachymetry [2]. The most common problem in the treatment of keratoconus is diagnosing it in its initial stages. Detecting keratoconus at an early stage gives patients the opportunity to start treatment sooner, thus slowing or even halting the progression of the disease. Patients with forme fruste keratoconus are at high risk of developing iatrogenic ectasia after corneal refractive procedures such as LASIK [3]. Various indices and classifications have been developed to quantify the severity of keratoconus. Among them are the TKC parameter and the Belin–Ambrosio ectasia index of the Pentacam system [4]. One of the surgical classifications of keratoconus was proposed in 2014 by Izmailova S.B. [5]. It is based on several parameters, including corrected visual acuity, maximum keratometry, pachymetry, corneal height maps measured with the Pentacam, and biomicroscopy and confocal microscopy data. The most widely used classification, which is included in the European clinical guidelines for the treatment of keratoconus [6], is the one proposed by Amsler in 1946 [7]. It is based on keratometric criteria and includes pachymetric and refractive data. Its modern adapted versions, the Amsler–Krumeich algorithm (1998) [8] and the George Asimellis algorithm (2013) [9], are currently in wide use. A new ABCD classification system was presented by Michael Belin in 2017 [10]. It uses four parameters to assess disease severity: the anterior and posterior radii of curvature, minimum corneal thickness and corrected visual acuity.

With advances in technology and the accumulation of data, a growing number of studies show that the process of keratoconus diagnosis can be automated. Various machine-learning methods, which are a subset of artificial intelligence methods, are being used for this purpose. The term “artificial intelligence” was introduced in 1956 by John McCarthy and is a general term that “refers to hardware or software that exhibits behaviour which appears intelligent” [11]. Numerous studies using machine-learning techniques to diagnose keratoconus have focused on determining its subclinical form. Indices have been created for the Pentacam, Galilei, Sirius and Orbscan topographers, with an accuracy of 0.96 for subclinical keratoconus detection [12]. It has also been shown that using not only keratotopography data but also parameters from other keratotomography devices can improve diagnostic accuracy [13,14]. In addition to numerical device parameters, topographic maps from keratotopographers are used to classify eyes with normal topography and eyes with keratoconus [15]. Machine-learning techniques can help both to diagnose keratoconus and to predict the course of the disease. For example, Yousefi et al. showed that it was possible to predict the probability of keratoplasty using optical coherence tomography data. An unsupervised learning method, clustering, was used for this purpose, and the probability of surgery was estimated as the ratio of the number of operated eyes in a cluster to the total number of eyes in that cluster [16]. In a study by Velázquez-Blázquez et al., a predictive model that classified the initial stages of keratoconus according to the RETICS scale with an accuracy of 73% was developed based on a set of demographic, optical, pachymetric and morphogeometric variables [17,18].
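The cluster-based estimate described above can be read as the share of operated eyes within each cluster. The following minimal sketch illustrates that reading on made-up data; the function name and the toy values are hypothetical and are not taken from the cited study.

```python
from collections import Counter


def surgery_probability_by_cluster(cluster_labels, had_surgery):
    """Return {cluster: operated eyes / all eyes in that cluster}."""
    totals = Counter(cluster_labels)
    operated = Counter(c for c, s in zip(cluster_labels, had_surgery) if s)
    return {c: operated[c] / totals[c] for c in totals}


# Toy, made-up data: six eyes assigned to two clusters.
clusters = [0, 0, 0, 0, 1, 1]
surgery = [False, False, False, True, True, True]

probabilities = surgery_probability_by_cluster(clusters, surgery)
print(probabilities)
```

A new eye assigned to a cluster would then inherit that cluster's estimated probability.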

There are numerous nomograms for the management of patients with keratoconus, which mainly include parameters such as the degree of keratoconus, disease progression and contact lens wearability. For example, in Izmailova’s algorithm, the main factor influencing the choice of treatment tactics is the stage of keratoconus, which is determined using modern diagnostic methods [5]. The algorithms developed by Mohammadpour [19] and Andreanos [20] represent a synthesis of previous studies. They are based on disease progression, corrected visual acuity, corneal thickness and keratometry.

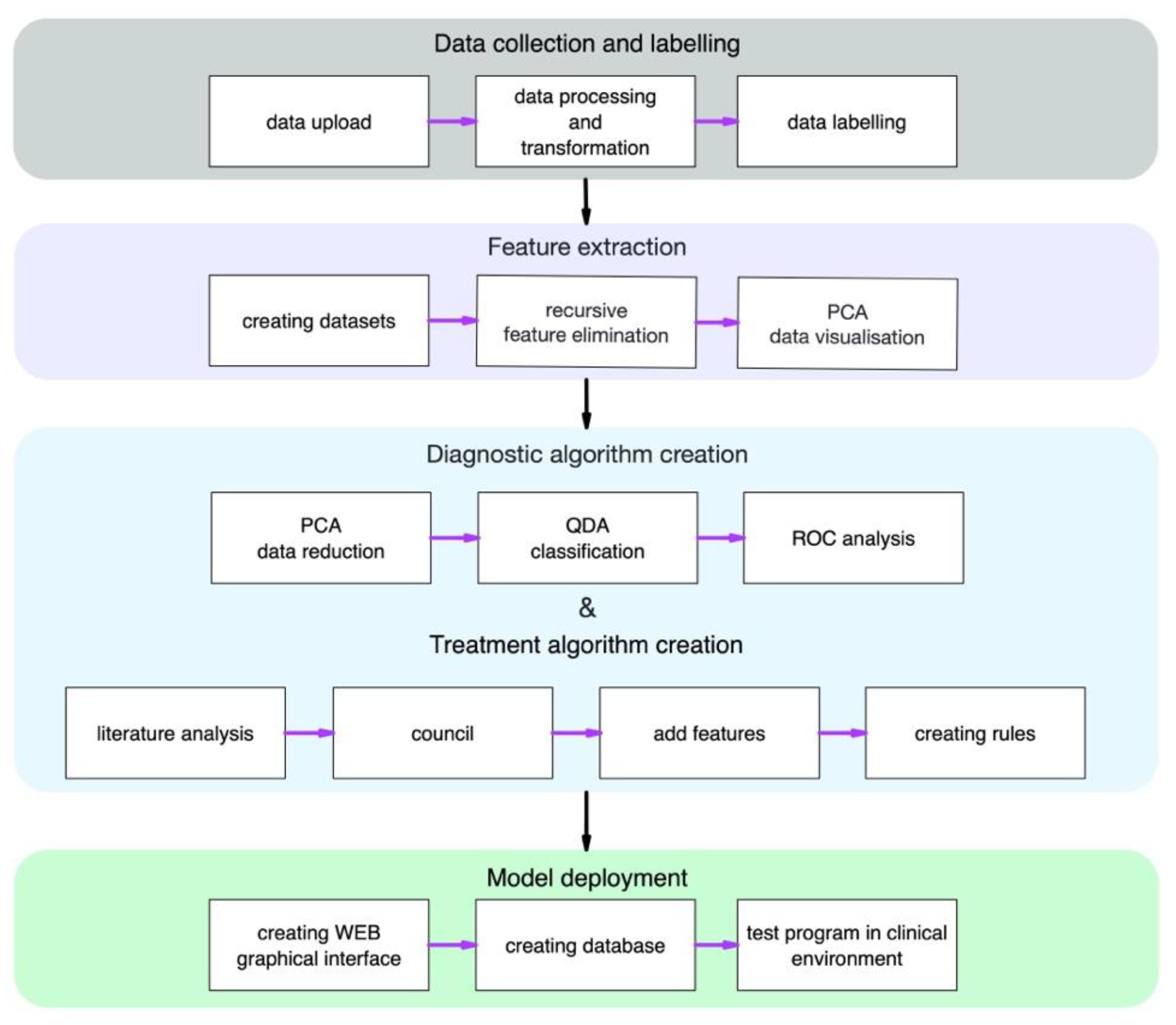

In this paper, we present the results of developing a machine-learning model for the diagnosis of keratoconus, as well as an algorithm that summarises the recommendations for treatment tactics.

2. Materials and Methods

2.1. Data

Patients’ data from Pentacam devices were exported automatically as eight CSV tables at the S. Fyodorov Eye Microsurgery Complex Head Office (Moscow) and its branches located in Krasnodar, Cheboksary and St. Petersburg during the period from 2015 to 2021. Baseline device settings were used for the measurements. After merging the data from the different tables and different instruments, only the rows with optimal data quality, indicated by an “OK” examination status, were retained. Repeated measurements of the same eye of the same patient obtained on the same day with an “OK” status were deleted. In the database, which contained all the data from the Pentacam, 47,419 rows remained. Data on keratoconus patients from electronic medical records, containing information on the keratoconus stage and visual acuity, were collected manually. These data were merged with the Pentacam database by patient ID, after which all the data were de-identified. In the final database, 734 rows contained information on the Pentacam measurements, keratoconus stage and visual acuity. The data were processed and analysed using the Python 3 programming language.
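The preparation steps above (status filtering, removal of same-day repeats, merging by patient ID and de-identification) could be sketched with pandas as follows. This is only an illustration under stated assumptions: the column names and toy rows are hypothetical, since the actual Pentacam CSV headers are not given, and keeping the first of several same-day measurements is an assumed rule.

```python
import pandas as pd

# Hypothetical column names and toy values; the real export format is not described.
pentacam = pd.DataFrame({
    "patient_id": [1, 1, 1, 2, 3],
    "eye": ["OD", "OD", "OS", "OD", "OS"],
    "exam_date": ["2019-03-01", "2019-03-01", "2019-03-01", "2020-06-10", "2021-01-15"],
    "status": ["OK", "OK", "OK", "Blink", "OK"],
    "k_max_front": [47.1, 47.3, 44.0, 52.8, 43.5],
})
records = pd.DataFrame({
    "patient_id": [1, 3],
    "kc_stage": [2, 1],
    "visual_acuity": [0.6, 0.9],
})

# Keep only examinations flagged "OK".
ok = pentacam[pentacam["status"] == "OK"]

# Keep one row per patient, eye and day (assumption: the first one is kept).
deduplicated = ok.drop_duplicates(subset=["patient_id", "eye", "exam_date"], keep="first")

# Attach stage and visual acuity by patient ID, then drop the identifier.
merged = deduplicated.merge(records, on="patient_id", how="inner")
deidentified = merged.drop(columns=["patient_id"])
print(deidentified)
```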

This study was performed in accordance with the ethical standards in the Declaration of Helsinki and was approved by the Local Ethical Committee. Informed consent was obtained from the participants. The data protection measures included the de-identification of the data, and the use of local computers to store and process the data within the organisation’s network. In describing this work, we considered the TRIPOD recommendations [21].

2.2. Data Labelling and Obtaining the Dataset

As the aim of our study was to determine the stage of keratoconus, the first step was to select a suitable classification. For this purpose, keratoconus stages were added to the Pentacam device readings according to the ABCD classification [10] as well as according to an adapted Amsler–Krumeich (AK) algorithm [9]. The parameters and reference values for these algorithms are shown in Table 1.

The amount of data obtained after applying the classifications, as well as from electronic case histories, is presented in Table 2. In addition, after combining the keratotopographer data with the keratoconus stage data from electronic medical records, the final database included keratoconus stages that were defined by ophthalmologists. A sufficient number of rows for training the ML model was obtained only for the AK classification: the one or seven cases available for some stages under the other labelling schemes were too few to select the most influential parameters from among 490 measurements, and any result obtained from them would be approximate and inaccurate. Therefore, the AK classification was taken as the basis for the further stages of the work. From the resulting database, we obtained a dataset that contained 400 healthy eyes, 400 eyes with preclinical keratoconus or stages 1, 2 and 3 (selected by random sampling) and 52 eyes with stage 4.
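Random sampling with an upper limit per group, as used to assemble the dataset, might look like the sketch below. It assumes one plausible reading in which the limit is applied separately to each class; the helper name `cap_per_class`, the column names and the toy data are hypothetical.

```python
import pandas as pd


def cap_per_class(df, label_col, cap, seed=0):
    """Randomly keep at most `cap` rows for every value of `label_col`."""
    parts = []
    for _, group in df.groupby(label_col):
        n = min(len(group), cap)  # small classes are kept in full
        parts.append(group.sample(n=n, random_state=seed))
    return pd.concat(parts).reset_index(drop=True)


# Toy data: 10 "normal" rows and 3 "stage 4" rows, capped at 5 per class.
toy = pd.DataFrame({"stage": ["normal"] * 10 + ["stage 4"] * 3, "value": range(13)})
balanced = cap_per_class(toy, "stage", cap=5)
counts = balanced["stage"].value_counts().to_dict()
print(counts)
```

With a cap of 400, a class with only 52 rows would be retained in full, as in the stage 4 group described above.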

2.3. Feature Selection

The stages of keratoconus determined by the adapted AK algorithm served as the dependent variable for the feature-selection step. In this study, we used StandardScaler to standardise the independent variables (zero mean and unit variance) and the recursive feature elimination (RFE) algorithm with logistic regression as the estimator that provided the importance of features. At each iteration, the estimator was refitted and its coefficients were updated; important features corresponded to coefficients with high absolute values. We used ‘newton-cg’ as the solver with the ‘multinomial’ option for the multiclass problem. As a result, the 7 most significant of the 490 Pentacam parameters were selected using the RFE method. The descriptions of the selected parameters are presented in Table 3.
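The feature-selection step can be illustrated with scikit-learn roughly as follows. This is a sketch rather than the study's code: it uses synthetic data from `make_classification` with 30 features instead of the 490 Pentacam parameters, and the sample size, random seed and `max_iter` value are arbitrary assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the Pentacam table: 6 classes (normal, preclinical, stages 1-4).
X, y = make_classification(
    n_samples=600, n_features=30, n_informative=8, n_redundant=2,
    n_classes=6, n_clusters_per_class=1, random_state=0,
)

# Standardise every feature to zero mean and unit variance.
X_scaled = StandardScaler().fit_transform(X)

# With the newton-cg solver, recent scikit-learn versions fit a multinomial
# model for multiclass targets by default.
estimator = LogisticRegression(solver="newton-cg", max_iter=1000)

# Repeatedly drop the feature with the smallest coefficient magnitude until 7 remain.
selector = RFE(estimator, n_features_to_select=7, step=1)
selector.fit(X_scaled, y)

selected = np.flatnonzero(selector.support_)
print("Selected feature indices:", selected)
```

Standardising before RFE matters here because coefficient magnitudes are only comparable across features that share a common scale.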

2.4. Machine-Learning Algorithms and Evaluation of Results

For ML model development, we used the same dataset as in Section 2.3, restricted to the parameters that had been selected in the feature-selection step. The data were split in a 60:40 ratio: 60% of the data were used for training and 40% for testing. First, we used principal component analysis (PCA) to reduce the dataset dimension from seven parameters to two components and quadratic discriminant analysis (QDA) to classify the condition of the patients. Then, we applied the model to the test data. To check the quality of the model, we used the AUC metric as well as a visual analysis of the data distribution to compare the resulting model with the adapted AK algorithm. The machine-learning model was developed using Python 3 and the open-source library scikit-learn.
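A minimal scikit-learn sketch of such a workflow (60:40 split, standardisation, PCA to two components, then QDA) is shown below. It runs on synthetic seven-feature data, and the stratified split, the random seeds and the use of a single pipeline are assumptions made for illustration, not details confirmed by the study.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the seven selected parameters and six classes.
X, y = make_classification(
    n_samples=1200, n_features=7, n_informative=5, n_redundant=2,
    n_classes=6, n_clusters_per_class=1, class_sep=2.0, random_state=0,
)

# 60% of the rows for training, 40% for testing (stratification is an assumption).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)

# Scaling and PCA are fitted on the training rows only, then reused for the test rows.
model = make_pipeline(
    StandardScaler(),
    PCA(n_components=2),
    QuadraticDiscriminantAnalysis(),
)
model.fit(X_train, y_train)

predicted = model.predict(X_test)
test_accuracy = model.score(X_test, y_test)
print(f"Test accuracy on synthetic data: {test_accuracy:.2f}")
```

Wrapping the steps in one pipeline keeps the test data out of the scaling and PCA fits, which avoids information leaking from the test set into the model.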

Figure 1 shows the complete study design for the developed algorithm for the diagnosis and treatment of keratoconus.

3. Results

The first step in our work was to determine the stages of keratoconus in the resulting database. For this purpose, we selected two classifications: the ABCD classification presented in 2017 and the adapted AK algorithm. Applying these classifications to the overall database yielded stages 0–4 of keratoconus for AK and stages 1 and 2 for ABCD. The database also contained cases with stages 1 and 2 determined by the ophthalmologists. The number of rows for stages 3 and 4, whether defined by ABCD or by physicians, was insufficient for further analysis.

Thus, the keratoconus stages determined by the adapted AK algorithm were used as the dependent variable for the RFE method to select the most significant parameters. Out of 490 keratotopographer parameters, the 7 parameters most influential for keratoconus stage determination were identified (Table 3).

Table 4 shows the calculated mean values for the parameters selected by RFE. A gradual increase in ISV, IVA, KI, IHD, K Max (Front) and IS-Value and a decrease in R Min from normal to stage 4 keratoconus can be observed.

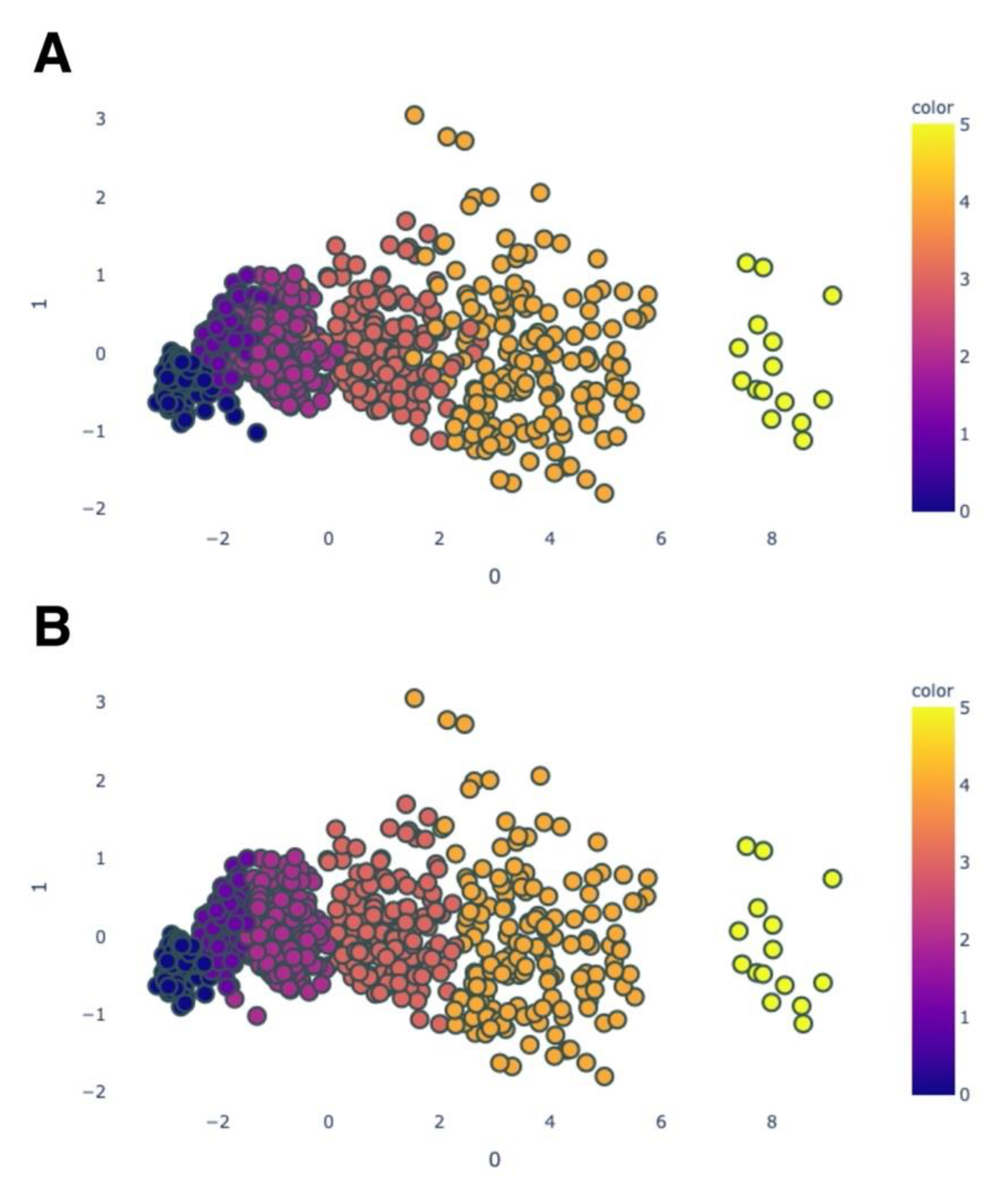

The next step of our work was to create the diagnostic algorithm. After normalising the training data, we applied PCA to linearly transform the seven parameters into two principal components.

Figure 2 shows the distribution of the training data, which are coloured according to the stage of keratoconus (normal; preclinical keratoconus; and stages 1, 2, 3 and 4).

In the next step, quadratic discriminant analysis was fitted to the training dataset, which consisted of the two principal components determined by PCA. As a result, we obtained a classification model for keratoconus stages. We then applied this model to predict the keratoconus stages in the test data.

Figure 3 visualises the test data after PCA (A) and QDA (B).

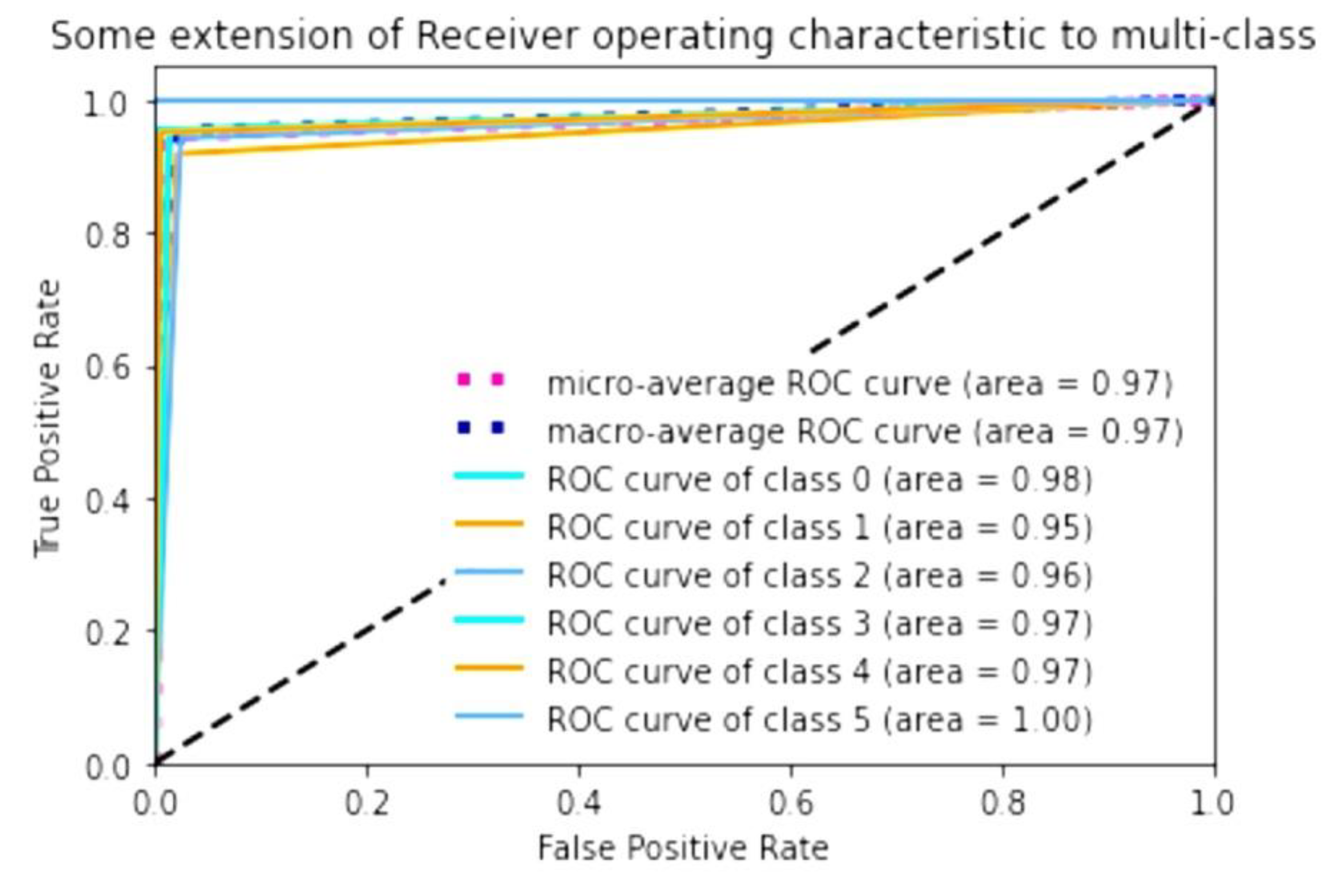

To evaluate the accuracy of the algorithm, we calculated the AUC for the prediction of the keratoconus stage in the test data relative to the adapted AK algorithm. ROC analysis showed high AUC values. The macro-average (the metric computed independently for each class and then averaged) and the micro-average (the metric computed after aggregating the contributions of all the classes) were both 0.97. Among the individual stages, the AUC was highest for stage 4 (1.00, class 5). For normal eyes (class 0), it was 0.98; for stages 2 (class 3) and 3 (class 4), it was 0.97; and for stage 1 (class 2), it was 0.96. Preclinical keratoconus (class 1) had the lowest AUC, 0.95 (Figure 4).
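The difference between the per-class, macro-averaged and micro-averaged AUC can be made concrete with a small one-vs-rest computation. The sketch below uses three classes and made-up scores purely for illustration; it does not reproduce the study's data or results.

```python
import numpy as np
from sklearn.metrics import roc_auc_score
from sklearn.preprocessing import label_binarize

classes = [0, 1, 2]
y_true = np.array([0, 0, 1, 1, 2, 2])

# Made-up class scores, one row per eye and one column per class.
y_score = np.array([
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.6, 0.2],
    [0.3, 0.3, 0.4],
    [0.1, 0.2, 0.7],
    [0.2, 0.5, 0.3],
])

# One-vs-rest encoding: column k is 1 where the true class is k.
y_onehot = label_binarize(y_true, classes=classes)

# Per-class AUC, then the macro-average as their unweighted mean.
per_class = [roc_auc_score(y_onehot[:, k], y_score[:, k]) for k in range(len(classes))]
macro = float(np.mean(per_class))

# Micro-average: pool every (eye, class) decision before computing a single AUC.
micro = roc_auc_score(y_onehot.ravel(), y_score.ravel())

print("per class:", per_class)
print("macro:", macro, "micro:", micro)
```

The macro-average weights every class equally, whereas the micro-average weights every individual decision equally, so the two can diverge when classes are imbalanced.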

The next step in our work was to create an algorithm for the automated determination of the best keratoconus treatment tactics. For this purpose, additional diagnostic measurements of corneal structure were identified by reviewing the literature and consulting physicians and were used to construct the algorithm. The parameters and values used in our algorithm to determine the treatment tactics are shown in Table 5. These parameters include the keratoconus stage, determined using our developed machine-learning model, as well as the minimum corneal thickness (measured by a keratotopographer), endothelial cell density (ECD) (biomicroscopy), presence of scarring or opacities (biomicroscopy), ability to correct vision with lenses or glasses (from the medical record), maximum corrected visual acuity (from the medical record) and presence of keratoconus progression (from the medical record).

A graphical interface was developed for the keratoconus diagnosis model derived from the study as well as for the algorithm for determining the indication for surgical intervention. The resulting web application has input fields (Figure 5A,B) for entering the parameters measured with the Pentacam, as well as additional diagnostic parameters. The result is displayed as a graphical representation of the model with the determined keratoconus stage (Figure 5C) or the type of surgical intervention (Figure 5D).

This application has a web form and is located at the following address: mntk.predictspace.com (the program is not registered as a medical device and is not a substitute for a medical diagnosis).

4. Discussion

Studies dedicated to the use of machine-learning techniques have mainly focused on determining the presence of keratoconus, especially its subclinical form [12,14], as treatment of the initial stages is less invasive and helps to avoid further disease progression. The aim of our study was to develop an algorithm for determining both the presence of keratoconus and its stage. For this purpose, our first task was to choose the keratotopographer parameters most relevant for keratoconus diagnostics. The parameters that have the greatest influence on the stage of keratoconus according to the adapted AK algorithm are also those most commonly used in the diagnosis of keratoconus in clinical practice [22].

There are several topographic criteria for diagnosing KC. They can be divided into three main subgroups: curvature-based, elevation-based, and pachymetry-based. The rotating Scheimpflug camera (Pentacam, Oculus GmbH, Wetzlar, Germany) can generate various indices within each of the three index subgroups. The TKC parameter provides the doctor with information about the keratoconus stage. Additionally, information about the stage and status of keratoconus is presented in the extended Belin–Ambrosio ectasia index of the Pentacam. Unfortunately, none of these indices is 100% sensitive or specific. Some authors believe that elevation maps are better than axial curvature maps for KC screening, while others claim that curvature is still the most sensitive parameter [4].

Earlier studies on keratoconus diagnosis using machine-learning methods have mainly used supervised methods such as support vector machine, random forest and regression analysis. In the present study, we used a supervised machine-learning method, quadratic discriminant analysis (QDA). The AUC of this method averaged 0.97. On the test dataset, the AUC was highest for stage 4 and lowest for preclinical keratoconus. These results indicate that the distribution of keratoconus stages predicted by the developed model is very similar to that determined by the AK algorithm.

The present work has a number of limitations that we plan to address in future studies. To build the machine-learning model, we used the keratoconus stages assigned by the adapted AK algorithm and the parameters identified as most significant with respect to that algorithm. This algorithm is the most widely used, but a newer ABCD classification is now available. In the present study, we obtained datasets for stages 1, 2, 3 and 4 and preclinical keratoconus using the AK algorithm; stages 1 and 2 using the ABCD classification; and stages 1 and 2 as defined by ophthalmologists. The ABCD classification, in addition to parameters such as the anterior and posterior radii of curvature and minimum corneal thickness, also uses corrected visual acuity. Because corrected visual acuity data were lacking in the electronic database, this parameter was not taken into account when applying the classification, which may explain why too few stage 3 and 4 cases appeared in the resulting dataset. In the near future, we plan to add data containing corrected visual acuity and to create a model based on the ABCD classification for comparison with the present algorithm.

We also attempted to collect data containing information on the stages of keratoconus diagnosed by clinical physicians. In the obtained dataset, sufficient cases for further analysis were only collected for stages 1 and 2, making it impossible to use this method of determining the keratoconus stage for model development.

Another limitation is that we did not compare the results obtained using the algorithm with the doctor’s decision regarding the choice of treatment tactics. Therefore, we plan to test our algorithm in the clinical environment. We are also planning to collect data about doctors’ decisions and to develop a fully automated model of keratoconus treatment.

Thus, after passing the testing phase and the practical confirmation of high accuracy, the developed software solution could become a complementary diagnostic method and allow the standardisation of the treatment process.

5. Conclusions

In summary, we have developed a diagnostic software solution that includes a model for the automated determination of the keratoconus stage, with AUC values from 0.95 to 1.00 as measured against the adapted AK algorithm. In addition, this software contains a standardised algorithm for determining the indication for surgical intervention, based on data from the literature and recommendations from the expert community. The software has a web interface, which will allow us to introduce it into wider clinical practice and conduct further research in the near future.

6. Patents

Certificate of state registration of a computer program No. 2021662273 Russian Federation. Keratoconus Diagnostic and Treatment Program.

Author Contributions

Conceptualisation, B.M., S.S. and V.M.; methodology, L.A. and K.A.; software, K.A.; validation, S.S., B.M. and S.I.; formal analysis, L.A.; investigation, L.A. and K.A.; resources, E.B., A.T. (Aleksej Titov), N.P. and S.S.; data curation, B.M., A.T. (Anna Terentyeva) and N.P.; writing—original draft preparation, L.A.; writing—review and editing, B.M., S.S., S.I., L.A., K.A., V.M. and T.Z.; visualisation, L.A.; supervision, S.S. and V.M.; project administration, V.M. and K.A. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by The Fyodorov Eye Microsurgery Federal State Institution, 127-486 Moscow, Russia.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Local Ethical Committee of The S.N. Fyodorov Eye Microsurgery Federal State Institution (protocol code №1 11.09.2019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Restrictions apply to the availability of these data. The data were obtained from The S. Fyodorov Eye Microsurgery Complex Federal State Institution and are available from Axenova L. with the permission of the S. Fyodorov Eye Microsurgery Complex Federal State Institution.

Acknowledgments

We express our gratitude to Anatoly Bessarabov N. (Moscow), Roman Yakovlev A., Ekaterina Yurtaeva A. (Cheboksary) and Kirsanov S.L. (Krasnodar) for assistance in the data collection. We thank Zakaraiia Eteri for the translation and language amendments of the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. George, A.; Kaufman, E.J. Keratoconus. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2021; Available online: http://www.ncbi.nlm.nih.gov/books/NBK470435/ (accessed on 4 August 2021).

2. Lynett Erita, M.; Moodley, V. A Review of Corneal Imaging Methods for the Early Diagnosis of Pre-Clinical Keratoconus. J. Optom. 2020, 13, 269–275.

3. Binder, P.S. Analysis of Ectasia after Laser in Situ Keratomileusis: Risk Factors. J. Cataract. Refract. Surg. 2007, 33, 1530–1538.

4. Wahba, S.S.; Roshdy, M.M.; Elkitkat, R.S.; Naguib, K.M. Rotating Scheimpflug Imaging Indices in Different Grades of Keratoconus. J. Ophthalmol. 2016, 2016, 1–9.

5. Svetlana, I.; Komarova, O.; Semykin, A.; Zimina, M.; Konovalova, M. Revising the Question of Keratoconus Classification. Int. J. Keratoconus Ectatic Corneal Dis. 2018, 7, 82–89.

6. Peña-García, P.; Sanz-Díez, P.; Durán-García, M.L. Keratoconus Management Guidelines. Int. J. Keratoconus Ectatic Corneal Dis. 2015, 4, 1–39.

7. Amsler, M. Kératocône Classique et Kératocône Fruste; Arguments Unitaires. Ophthalmologica 1946, 111, 96–101.

8. Krumeich, J.H.; Jan, D.; Knülle, A. Live-Epikeratophakia for Keratoconus. J. Cataract. Refract. Surg. 1998, 24, 456–463.

9. John, A.K.; Asimellis, G. Revisiting Keratoconus Diagnosis and Progression Classification Based on Evaluation of Corneal Asymmetry Indices, Derived from Scheimpflug Imaging in Keratoconic and Suspect Cases. Clin. Ophthalmol. 2013, 7, 1539.

10. Belin, M.; Duncan, J. Keratoconus: The ABCD Grading System. Klin. Mon. Für Augenheilkd. 2016, 233, 701–707.

11. Shanthi, S.; Aruljyothi, L.; Balasundaram, M.B.; Janakiraman, A.; Nirmala, D.K.; Pyingkodi, M. Artificial Intelligence Applications in Different Imaging Modalities for Corneal Topography. Surv. Ophthalmol. 2021.

12. Cao, K.; Verspoor, K.; Sahebjada, S.; Baird, P.N. Evaluating the Performance of Various Machine Learning Algorithms to Detect Subclinical Keratoconus. Transl. Vis. Sci. Technol. 2020, 9, 24.

13. Hallett, N.; Yi, K.; Dick, J.; Hodge, C.; Sutton, G.; Wang, Y.G.; You, J. Deep Learning Based Unsupervised and Semi-Supervised Classification for Keratoconus. arXiv 2020, arXiv:2001.11653. Available online: http://arxiv.org/abs/2001.11653 (accessed on 4 August 2021).

14. Kato, N.; Masumoto, H.; Tanabe, M.; Sakai, C.; Negishi, K.; Torii, H.; Tabuchi, H.; Tsubota, K. Predicting Keratoconus Progression and Need for Corneal Crosslinking Using Deep Learning. J. Clin. Med. 2021, 10, 844.

15. Lavric, A.; Valentin, P. KeratoDetect: Keratoconus Detection Algorithm Using Convolutional Neural Networks. Comput. Intell. Neurosci. 2019, 2019, 1–9.

16. Yousefi, S.; Takahashi, H.; Hayashi, T.; Tampo, H.; Inoda, S.; Arai, Y.; Tabuchi, H.; Asbell, P. Predicting the Likelihood of Need for Future Keratoplasty Intervention Using Artificial Intelligence. Ocul. Surf. 2020, 18, 320–325.

17. Velázquez-Blázquez, J.S.; Bolarín, J.M.; Cavas-Martínez, F.; Alió, J.L. EMKLAS: A New Automatic Scoring System for Early and Mild Keratoconus Detection. Transl. Vis. Sci. Technol. 2020, 9, 30.

18. Bolarín, J.M.; Cavas, F.; Velázquez, J.S.; Alió, J.L. A Machine-Learning Model Based on Morphogeometric Parameters for RETICS Disease Classification and GUI Development. Appl. Sci. 2020, 10, 1874.

19. Mohammadpour, M.; Heidari, Z.; Hashemi, H. Updates on Managements for Keratoconus. J. Curr. Ophthalmol. 2018, 30, 110–124.

20. Andreanos, K.D.; Hashemi, K.; Petrelli, M.; Droutsas, K.; Georgalas, I.; Kymionis, G.D. Keratoconus Treatment Algorithm. Ophthalmol. Ther. 2017, 6, 245–262.

21. Collins, G.S.; Reitsma, J.B.; Altman, D.G.; Moons, K.G. Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD): The TRIPOD Statement. Eur. J. Clin. Investig. 2015, 45, 204–214.

22. Naderan, M.; Shoar, S.; Kamaleddin, M.A.; Rajabi, M.T.; Naderan, M.; Khodadadi, M. Keratoconus Clinical Findings According to Different Classifications. Cornea 2015, 34, 1005–1011.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).