Predicting Mechanical Ventilation and Mortality in COVID-19 Using Radiomics and Deep Learning on Chest Radiographs: A Multi-Institutional Study

Abstract

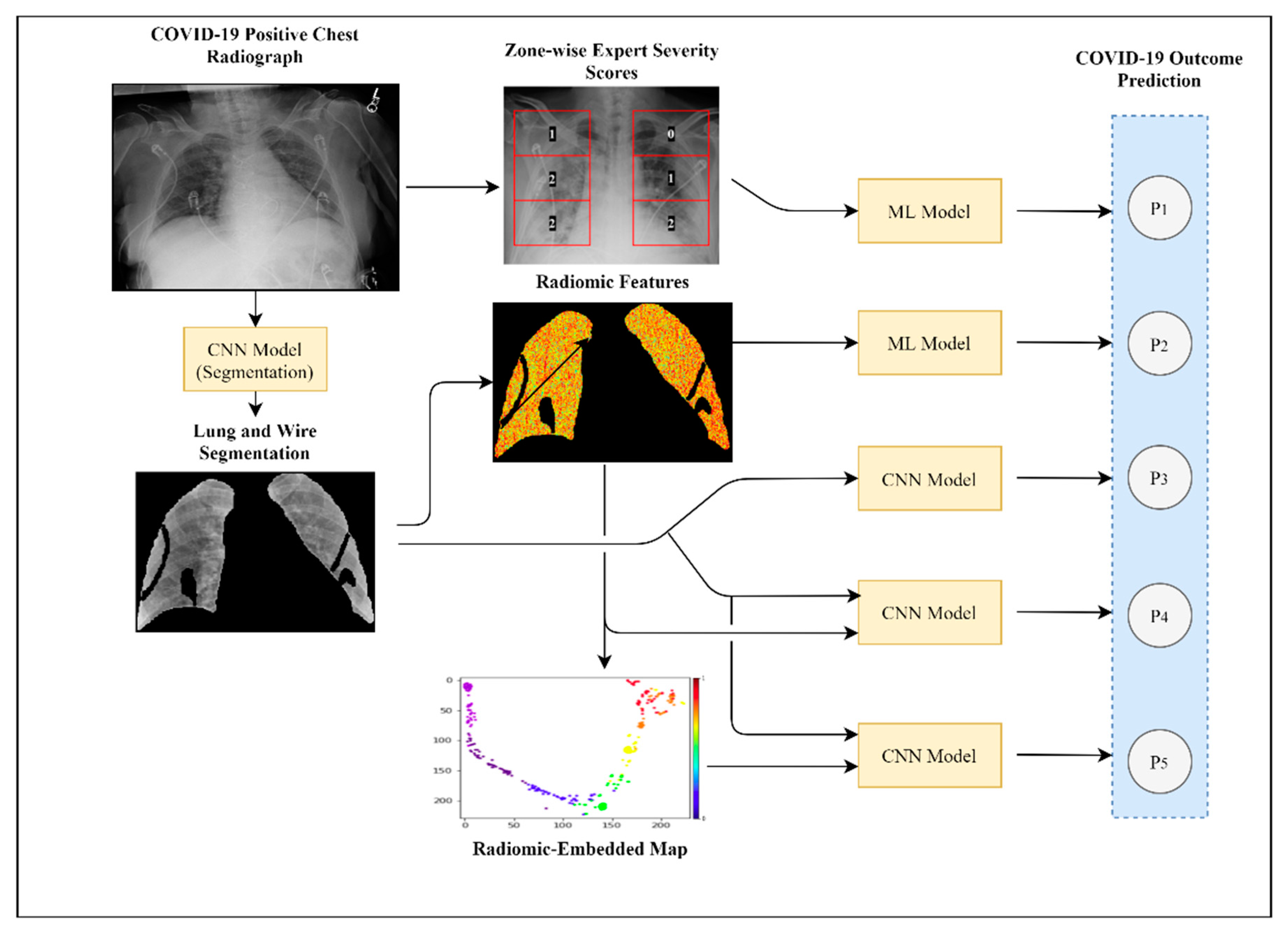

1. Introduction

Related Work

2. Materials and Methods

2.1. Cohort Description

2.2. Image Preprocessing

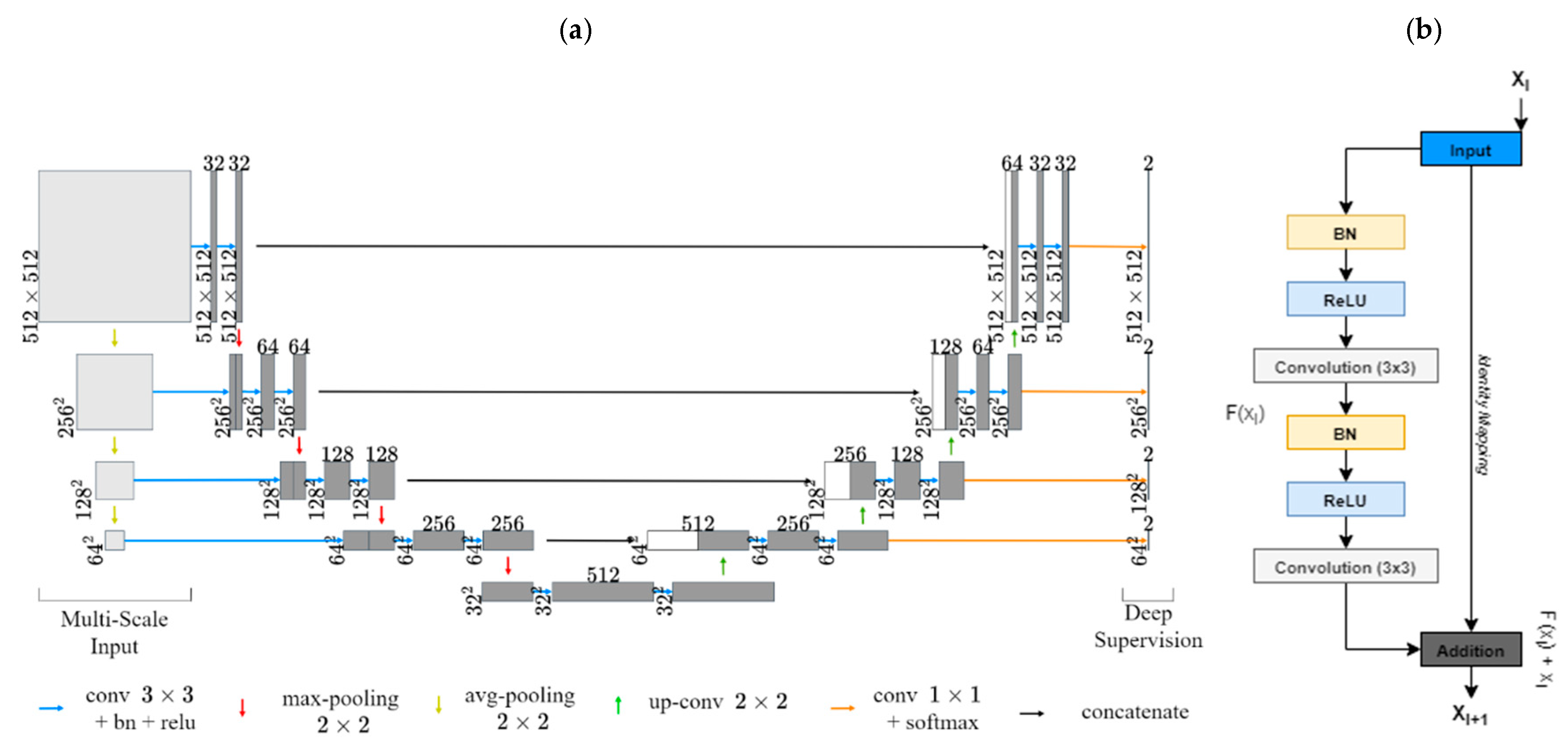

2.2.1. Lung and Artifact Segmentation

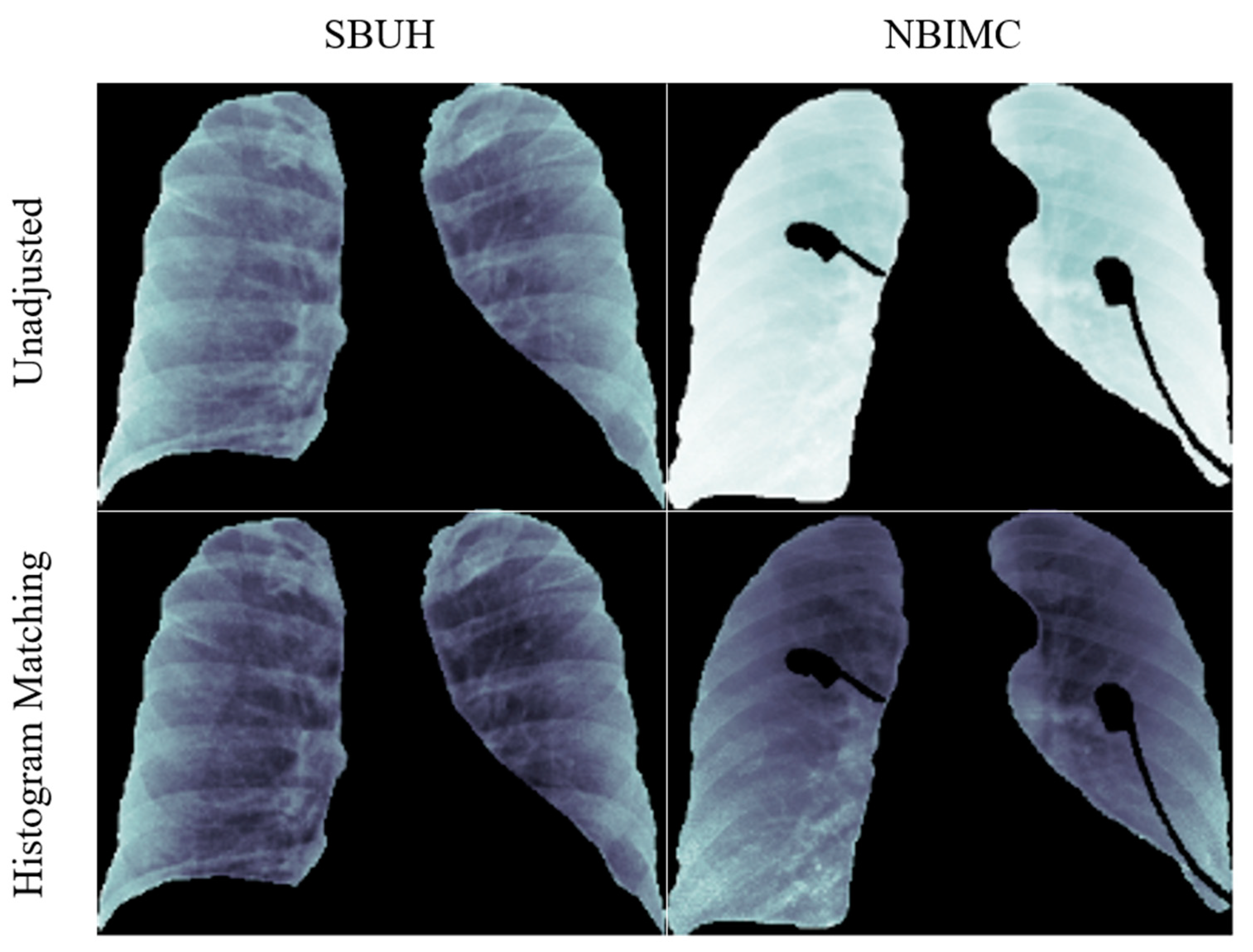

2.2.2. Average Histogram Matching (HM)
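Average histogram matching can be sketched as follows. This is a minimal sketch, not the authors' implementation. It assumes that the reference is the mean normalized grey-level histogram of a set of images, and that each radiograph is remapped by classic CDF matching. The helper names (`average_histogram`, `match_to_reference`) are hypothetical, and pixels are plain Python lists of integer grey levels so the example stays self-contained.

```python
from bisect import bisect_left

def histogram(pixels, levels):
    """Count how often each integer grey level in [0, levels) occurs."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    return counts

def cdf(hist):
    """Normalized cumulative distribution of a (possibly fractional) histogram."""
    total = sum(hist)
    out, running = [], 0.0
    for h in hist:
        running += h
        out.append(running / total)
    return out

def average_histogram(images, levels):
    """Mean of the per-image normalized histograms (assumed reference)."""
    avg = [0.0] * levels
    for img in images:
        for g, c in enumerate(histogram(img, levels)):
            avg[g] += c / len(img) / len(images)
    return avg

def match_to_reference(pixels, reference_hist, levels):
    """Remap grey levels so the image CDF follows the reference CDF."""
    src_cdf = cdf(histogram(pixels, levels))
    ref_cdf = cdf(reference_hist)
    # Lookup table: for each source level, the first reference level whose CDF
    # reaches the source CDF (clamped in case of floating-point shortfall).
    lut = [min(bisect_left(ref_cdf, v), levels - 1) for v in src_cdf]
    return [lut[p] for p in pixels]

if __name__ == "__main__":
    dark, bright = [0, 0, 1, 1], [2, 2, 3, 3]
    ref = average_histogram([dark, bright], levels=4)
    print(match_to_reference(dark, ref, 4), match_to_reference(bright, ref, 4))
```

In the toy call, both images come out as `[1, 1, 3, 3]`. A uniformly darker image and a uniformly brighter one are pulled onto the same reference distribution, which shows how matching can reduce brightness differences between acquisitions.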

2.3. Experiment 1: Outcome Classification Using Radiologist Severity Scores

2.4. Experiment 2: Outcome Classification Using Radiomic Features

2.5. Experiment 3: Outcome Classification Using Convolutional Neural Networks

2.6. Experiment 4: Outcome Classification Using Convolutional Neural Networks and Radiomic-Map Embedding

2.6.1. Feed-Forward Concatenation of Radiomic Features

2.6.2. Radiomic-Embedded Feature Maps

3. Results

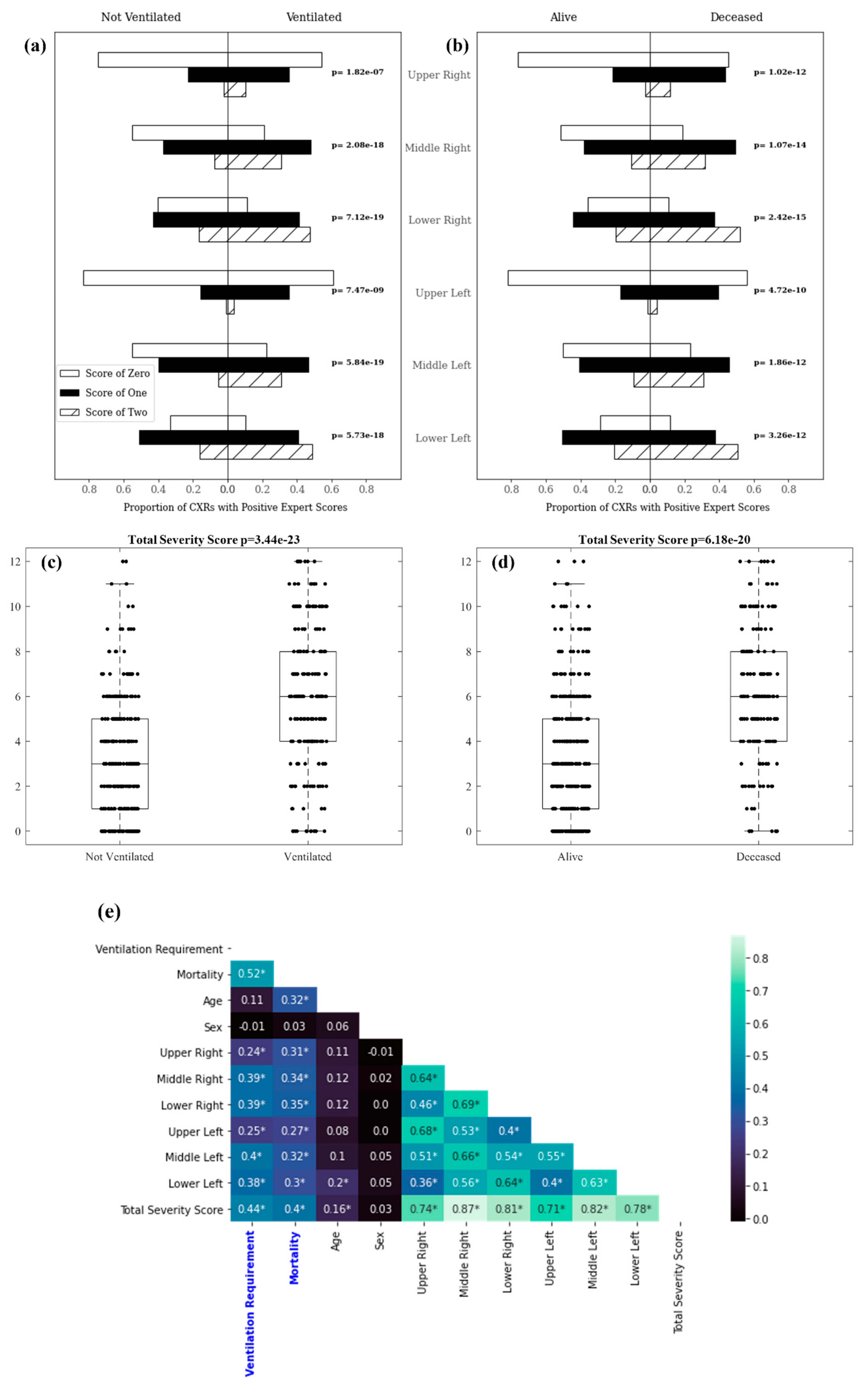

3.1. Experiment 1: Outcome Classification Using Radiologist Severity Scores

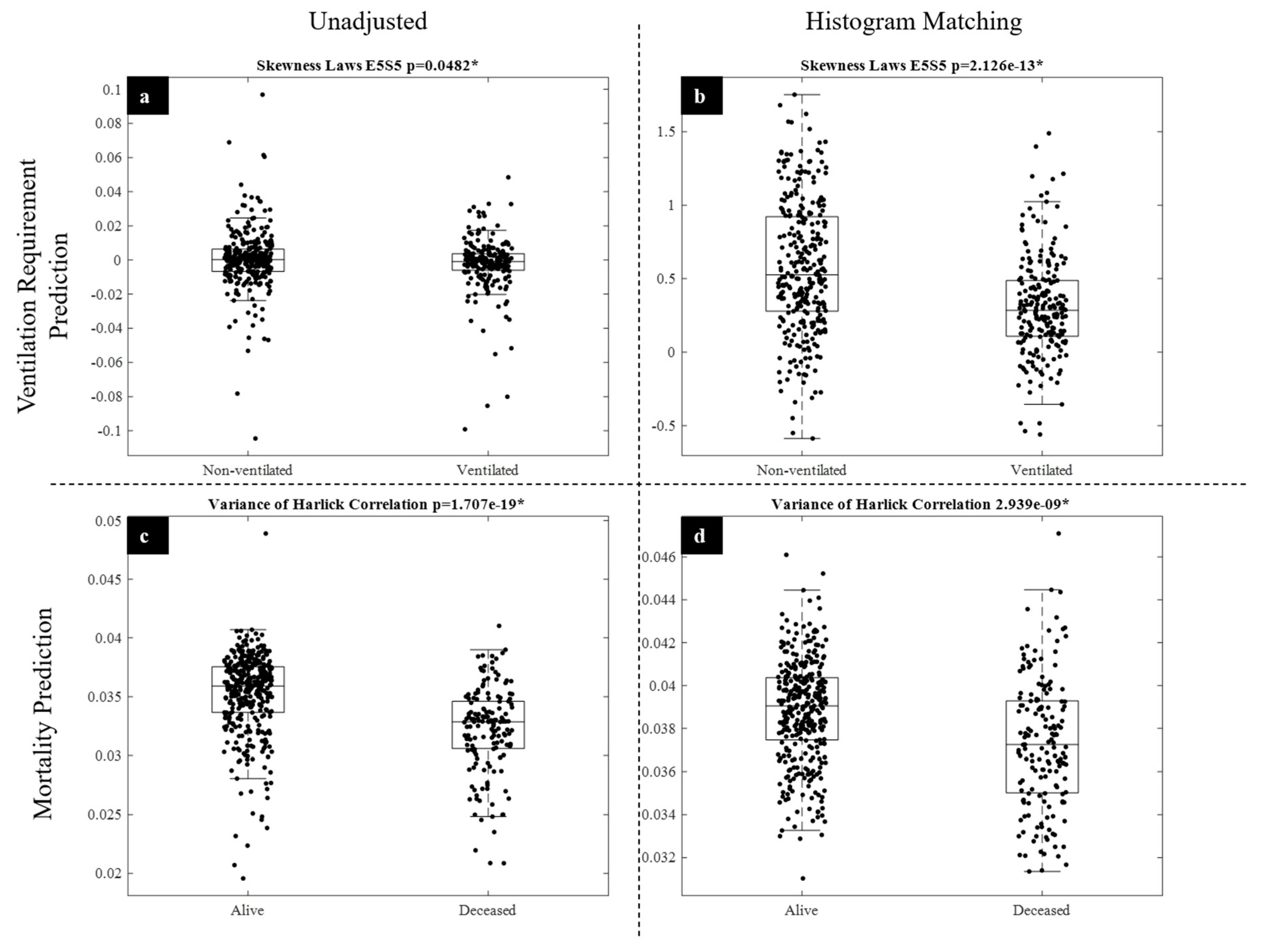

3.2. Experiment 2: Outcome Classification Using Radiomic Features

3.3. Experiment 3: Outcome Classification Using Convolutional Neural Networks

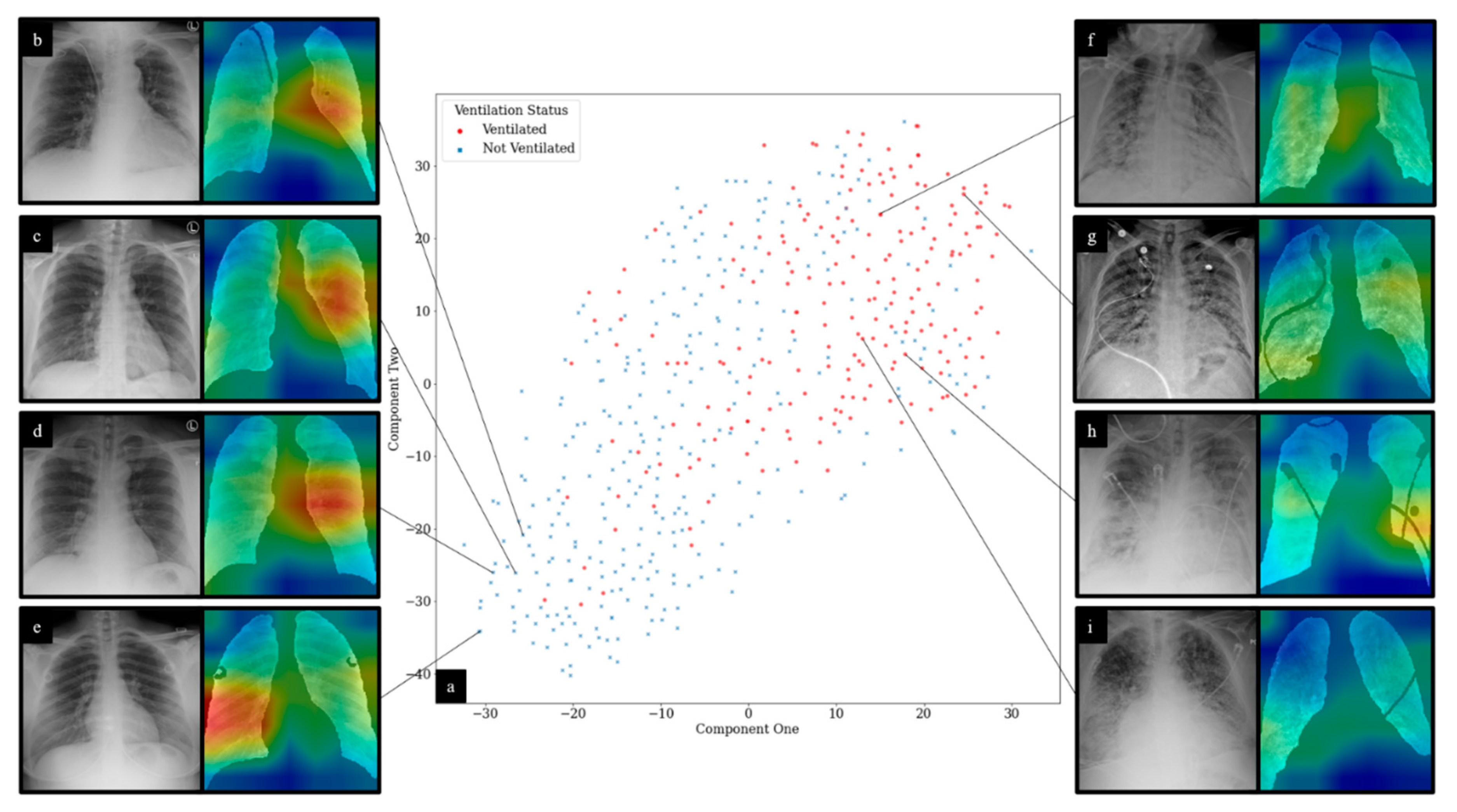

3.4. Experiment 4: Outcome Classification Using Convolutional Neural Networks and Radiomic-Map Embedding

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Stony Brook University Hospital Patients (n = 522) | Newark Beth Israel Medical Center Patients (n = 169) |
|---|---|---|
| Sex | 267 male (175 COVID-19+); 255 female (171 COVID-19+) | 96 male; 73 female |
| Age | 55 ± 18.630 (p = 0.0989 *); 57 ± 16.969 (COVID-19+, p = 0.1170 *) | 59 ± 14.256 (p = 0.6821 *) |

| Age | Sex | Number of COVID-19 Positive Patients | Number Requiring Mechanical Ventilation | Number Deceased |
|---|---|---|---|---|
| 18–19 (n = 1) | Male | 1 | 0 | 0 |
| | Female | 0 | 0 | 0 |
| 20–29 (n = 22) | Male | 11 | 1 | 1 |
| | Female | 11 | 4 | 2 |
| 30–39 (n = 47) | Male | 29 | 8 | 4 |
| | Female | 18 | 4 | 2 |
| 40–49 (n = 75) | Male | 42 | 11 | 5 |
| | Female | 33 | 9 | 4 |
| 50–59 (n = 130) | Male | 64 | 24 | 17 |
| | Female | 66 | 30 | 13 |
| 60–69 (n = 108) | Male | 60 | 33 | 24 |
| | Female | 48 | 27 | 18 |
| 70–79 (n = 79) | Male | 41 | 24 | 20 |
| | Female | 38 | 17 | 19 |
| 80+ (n = 53) | Male | 23 | 6 | 11 |
| | Female | 30 | 7 | 18 |
| Total (n = 515) | Male | 271 | 107 | 82 |
| | Female | 244 | 98 | 76 |
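The age-band rows can be re-aggregated to check the totals row and to compute crude (not age-adjusted) proportions of COVID-19-positive patients who were ventilated or who died. The counts below are copied from the table. The function names are hypothetical, and ventilation and death are counted independently, since a patient may appear in both columns.

```python
# (age band, sex, COVID-19 positive, mechanically ventilated, deceased),
# copied row by row from the age/sex table above.
ROWS = [
    ("18-19", "Male", 1, 0, 0), ("18-19", "Female", 0, 0, 0),
    ("20-29", "Male", 11, 1, 1), ("20-29", "Female", 11, 4, 2),
    ("30-39", "Male", 29, 8, 4), ("30-39", "Female", 18, 4, 2),
    ("40-49", "Male", 42, 11, 5), ("40-49", "Female", 33, 9, 4),
    ("50-59", "Male", 64, 24, 17), ("50-59", "Female", 66, 30, 13),
    ("60-69", "Male", 60, 33, 24), ("60-69", "Female", 48, 27, 18),
    ("70-79", "Male", 41, 24, 20), ("70-79", "Female", 38, 17, 19),
    ("80+", "Male", 23, 6, 11), ("80+", "Female", 30, 7, 18),
]

def totals_by_sex(rows):
    """Sum positive, ventilated, and deceased counts for each sex."""
    totals = {}
    for _, sex, pos, vent, dead in rows:
        p, v, d = totals.get(sex, (0, 0, 0))
        totals[sex] = (p + pos, v + vent, d + dead)
    return totals

def crude_rates(pos, vent, dead):
    """Fraction of COVID-19+ patients ventilated and deceased (no age adjustment)."""
    return vent / pos, dead / pos

if __name__ == "__main__":
    for sex, (pos, vent, dead) in totals_by_sex(ROWS).items():
        v_rate, d_rate = crude_rates(pos, vent, dead)
        print(f"{sex}: n={pos}, ventilated={vent} ({v_rate:.1%}), deceased={dead} ({d_rate:.1%})")
```

The sums reproduce the totals row (271 male and 244 female patients, 515 overall). The crude ventilation rates are about 39.5% for male patients and 40.2% for female patients.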

| Radiomic Feature Family | Features Used for Clinical Outcome Prediction | Description |
|---|---|---|
| Laws Energy | L5E5, E5S5, W5E5, L5E5, W5R5, S5E5, R5E5, W5W5, S5E5, S5W5, S5L5, L5S5, E3S3, R5R5 | Combinations of these filters at different window sizes (3 × 3, 5 × 5) identify qualitative patterns such as waves, ripples, edges, and spots. |
| Gabor Wavelet | θ = 1.571, λ = 1.786; θ = 0.785, λ = 1.276; θ = 1.963, λ = 1.276; θ = 1.178, λ = 1.786; θ = 1.178, λ = 0.765 | Captures oriented textures across directions and scales to characterize microarchitecture in lung regions. Each descriptor quantifies the response to a Gabor filter at a specific wavelength (λ) and orientation (θ). |
| Haralick | Entropy, Correlation, Information | Extracted from the grey-level co-occurrence matrix (GLCM) of an image; these features measure local disorder, homogeneity, and heterogeneity. |
| Gradient | X, Y, Diagonal | Measures changes in intensity along different directions. |
| Grey | Standard Deviation, Mean | Standard measures of intensity. |
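The Laws and Haralick descriptions above can be made concrete with a small pure-Python sketch. The five-tap (L5, E5, S5, W5, R5) and three-tap (L3, E3, S3) vectors are the standard Laws kernels. Several choices are simplifying assumptions rather than the study's pipeline:

* the outer-product orientation, with the first kernel vertical;
* a single global energy value per mask;
* a global GLCM with one horizontal offset.

This sketch therefore does not reproduce the windowed variants; the "Ws" suffixes in the feature tables appear to denote window sizes. All function names are hypothetical.

```python
import math

# Standard Laws 1D kernels: Level, Edge, Spot, Wave, Ripple (5-tap) and 3-tap variants.
LAWS_1D = {
    "L5": [1, 4, 6, 4, 1], "E5": [-1, -2, 0, 2, 1], "S5": [-1, 0, 2, 0, -1],
    "W5": [-1, 2, 0, -2, 1], "R5": [1, -4, 6, -4, 1],
    "L3": [1, 2, 1], "E3": [-1, 0, 1], "S3": [-1, 2, -1],
}

def laws_mask(name):
    """2D mask such as 'L5E5' as the outer product of two 1D kernels
    (first kernel applied vertically, second horizontally: an assumed convention)."""
    a, b = LAWS_1D[name[:2]], LAWS_1D[name[2:]]
    return [[x * y for y in b] for x in a]

def filter_valid(image, mask):
    """2D correlation of a list-of-lists image with a square mask ('valid' region only)."""
    k = len(mask)
    rows, cols = len(image), len(image[0])
    return [
        [sum(mask[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
         for c in range(cols - k + 1)]
        for r in range(rows - k + 1)
    ]

def laws_energy(image, name):
    """Mean absolute filter response: one global texture-energy value per mask."""
    resp = filter_valid(image, laws_mask(name))
    values = [abs(v) for row in resp for v in row]
    return sum(values) / len(values)

def glcm_entropy(image, levels, dx=1, dy=0):
    """Entropy (bits) of a normalized GLCM for one non-negative pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows - dy):
        for c in range(cols - dx):
            counts[image[r][c]][image[r + dy][c + dx]] += 1
    total = sum(map(sum, counts))
    probs = [v / total for row in counts for v in row if v > 0]
    return -sum(p * math.log2(p) for p in probs)

if __name__ == "__main__":
    # Toy 6 x 6 image with a vertical step edge between columns 2 and 3.
    edge = [[0, 0, 0, 9, 9, 9] for _ in range(6)]
    for name in ("L5E5", "E5L5"):
        print(name, laws_energy(edge, name))
    print("GLCM entropy", glcm_entropy(edge, levels=10))
```

On the toy edge image, `L5E5` (horizontal edge detector) returns 432.0 and `E5L5` returns 0.0. This shows how each mask combination isolates one oriented pattern.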

| Classification Type | Sensitivity | Specificity | AUC |
|---|---|---|---|
| Ventilation Requirement | 0.67 ± 0.08 | 0.69 ± 0.07 | 0.75 ± 0.02 |
| Mortality | 0.69 ± 0.08 | 0.76 ± 0.08 | 0.79 ± 0.05 |
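The metrics reported in these tables can be computed as sketched below. This assumes that the positive class is the adverse outcome (ventilation or death). It also assumes that each "mean ± SD" entry summarizes per-fold values from repeated cross-validation, which the tables do not state explicitly. AUC is computed through its rank interpretation: the probability that a random positive case scores higher than a random negative one, with ties counting one half. The function names are hypothetical.

```python
from statistics import mean, stdev

def sensitivity_specificity(labels, predictions):
    """labels and predictions are 0/1 lists; 1 = adverse outcome (assumed)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(labels, scores):
    """ROC AUC as P(score_pos > score_neg), counting ties as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def summarize(fold_values):
    """Format per-fold metric values as 'mean ± sample standard deviation'."""
    return f"{mean(fold_values):.2f} ± {stdev(fold_values):.2f}"

if __name__ == "__main__":
    labels = [1, 1, 0, 0]
    scores = [0.9, 0.4, 0.6, 0.1]
    preds = [1 if s >= 0.5 else 0 for s in scores]  # hypothetical 0.5 threshold
    print(sensitivity_specificity(labels, preds), auc(labels, scores))
```

Unlike sensitivity and specificity, the AUC does not depend on a decision threshold. This is one reason the tables can show a higher AUC alongside modest sensitivity.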

| Classification Type | Image Adjustment | Clinical Features | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|
| Ventilation Requirement | Unadjusted | None | 0.64 ± 0.07 | 0.67 ± 0.07 | 0.72 ± 0.05 |
| | | Expert Scores, patient age and sex | 0.67 ± 0.08 | 0.73 ± 0.07 | 0.77 ± 0.05 |
| | Histogram Matching | None | 0.72 ± 0.07 | 0.72 ± 0.06 | 0.78 ± 0.05 |
| | | Expert Scores, patient age and sex | 0.71 ± 0.06 | 0.71 ± 0.08 | 0.79 ± 0.04 |
| Mortality | Unadjusted | None | 0.72 ± 0.09 | 0.72 ± 0.08 | 0.77 ± 0.05 |
| | | Expert Scores, patient age and sex | 0.79 ± 0.07 | 0.74 ± 0.09 | 0.83 ± 0.04 |
| | Histogram Matching | None | 0.70 ± 0.09 | 0.73 ± 0.09 | 0.78 ± 0.06 |
| | | Expert Scores, patient age and sex | 0.77 ± 0.08 | 0.71 ± 0.09 | 0.80 ± 0.06 |

| Metric | Model | Ventilation Requirement (Unadjusted) | Ventilation Requirement (Histogram Matching) | Mortality (Unadjusted) | Mortality (Histogram Matching) |
|---|---|---|---|---|---|
| Sensitivity | CXR | 0.55 ± 0.09 | 0.64 ± 0.09 | 0.56 ± 0.15 | 0.59 ± 0.13 |
| | CLC | 0.63 ± 0.08 | 0.61 ± 0.01 | 0.58 ± 0.17 | 0.67 ± 0.09 |
| | REM | 0.54 ± 0.08 | 0.68 ± 0.05 | 0.66 ± 0.07 | 0.64 ± 0.07 |
| | REM CLC | 0.58 ± 0.09 | 0.62 ± 0.08 | 0.61 ± 0.14 | 0.77 ± 0.07 |
| | RAD | 0.63 ± 0.06 | 0.66 ± 0.04 | 0.58 ± 0.12 | 0.59 ± 0.12 |
| | RAD CLC | 0.62 ± 0.07 | 0.67 ± 0.07 | 0.59 ± 0.07 | 0.69 ± 0.08 |
| Specificity | CXR | 0.72 ± 0.08 | 0.73 ± 0.07 | 0.72 ± 0.07 | 0.74 ± 0.04 |
| | CLC | 0.66 ± 0.08 | 0.76 ± 0.05 | 0.65 ± 0.06 | 0.71 ± 0.09 |
| | REM | 0.59 ± 0.05 | 0.63 ± 0.02 | 0.58 ± 0.08 | 0.73 ± 0.07 |
| | REM CLC | 0.65 ± 0.07 | 0.68 ± 0.06 | 0.63 ± 0.08 | 0.60 ± 0.09 |
| | RAD | 0.69 ± 0.06 | 0.75 ± 0.06 | 0.67 ± 0.03 | 0.76 ± 0.03 |
| | RAD CLC | 0.69 ± 0.06 | 0.78 ± 0.05 | 0.71 ± 0.02 | 0.67 ± 0.03 |
| AUC | CXR | 0.70 ± 0.07 | 0.75 ± 0.02 | 0.72 ± 0.07 | 0.75 ± 0.04 |
| | CLC | 0.69 ± 0.03 | 0.77 ± 0.02 | 0.70 ± 0.07 | 0.74 ± 0.04 |
| | REM | 0.61 ± 0.03 | 0.71 ± 0.02 | 0.67 ± 0.04 | 0.76 ± 0.04 |
| | REM CLC | 0.64 ± 0.02 | 0.72 ± 0.02 | 0.68 ± 0.02 | 0.77 ± 0.01 |
| | RAD | 0.70 ± 0.03 | 0.77 ± 0.03 | 0.69 ± 0.07 | 0.74 ± 0.06 |
| | RAD CLC | 0.72 ± 0.02 | 0.78 ± 0.02 | 0.71 ± 0.04 | 0.75 ± 0.07 |

| Classification Type | Image Adjustment | Clinical Features | Radiomic Features |
|---|---|---|---|
| Ventilation Requirement | Unadjusted | None | 1. Laws L5E5; 2. Gabor XY θ = 1.571, λ = 1.786; 3. Gradient Diagonal; 4. Laws E5S5; 5. Laws W5E5; 6. Laws L5E5; 7. Laws W5R5; 8. Laws S5E5; 9. Haralick Entropy Ws7; 10. Haralick Correlation Ws7 |
| | | Expert Scores, Patient Age and Sex | 1. ES Lower Left; 2. Age; 3. ES Middle Left; 4. Sex; 5. ES Middle Right; 6. Laws W5E5; 7. Laws W5R5; 8. Laws E5S5; 9. Gradient Diagonal; 10. ES Lower Right; 11. Laws R5E5; 12. Laws E5E5; 13. Laws E3S3; 14. Laws R5W5; 15. Laws W5W5; 16. Laws S5E5; 17. Laws S5W5; 18. Laws S5L5; 19. Gradient dy |
| | Histogram Matching | None | 1. Gradient Y; 2. Laws E5S5; 3. Laws L5S5 |
| | | Expert Scores, Patient Age and Sex | 1. Laws E3S3; 2. Laws R5R5; 3. ES Middle Right; 4. ES Lower Right; 5. Gabor XY θ = 0.785, λ = 1.276; 6. ES Middle Left; 7. ES Lower Left; 8. Gabor XY θ = 1.963, λ = 1.276; 9. Grey Standard Deviation; 10. Laws L5S5; 11. Gabor XY θ = 1.178, λ = 1.786; 12. Haralick Entropy Ws3; 13. Gradient Sobel Y; 14. Gabor XY θ = 1.178, λ = 0.765; 15. Haralick Information Ws5 |
| Mortality | Unadjusted | None | 1. Haralick Correlation Ws5 |
| | | Expert Scores, Patient Age and Sex | 1. Age; 2. Haralick Correlation Ws5; 3. ES Middle Right; 4. ES Lower Left |
| | Histogram Matching | None | 1. Laws R5E5; 2. Gradient Y; 3. Laws E3S3; 4. Haralick Entropy Ws5 |
| | | Expert Scores, Patient Age and Sex | 1. Age; 2. ES Lower Left; 3. ES Middle Right; 4. Laws R5E5; 5. ES Upper Right; 6. ES Lower Right; 7. Gradient Y; 8. Gradient Sobel YX; 9. Laws E3S3; 10. Gradient dx; 11. Haralick Entropy |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bae, J.; Kapse, S.; Singh, G.; Gattu, R.; Ali, S.; Shah, N.; Marshall, C.; Pierce, J.; Phatak, T.; Gupta, A.; et al. Predicting Mechanical Ventilation and Mortality in COVID-19 Using Radiomics and Deep Learning on Chest Radiographs: A Multi-Institutional Study. Diagnostics 2021, 11, 1812. https://doi.org/10.3390/diagnostics11101812


Chicago/Turabian style: Bae, Joseph, Saarthak Kapse, Gagandeep Singh, Rishabh Gattu, Syed Ali, Neal Shah, Colin Marshall, Jonathan Pierce, Tej Phatak, Amit Gupta, et al. 2021. "Predicting Mechanical Ventilation and Mortality in COVID-19 Using Radiomics and Deep Learning on Chest Radiographs: A Multi-Institutional Study." Diagnostics 11, no. 10: 1812. https://doi.org/10.3390/diagnostics11101812

APA style: Bae, J., Kapse, S., Singh, G., Gattu, R., Ali, S., Shah, N., Marshall, C., Pierce, J., Phatak, T., Gupta, A., Green, J., Madan, N., & Prasanna, P. (2021). Predicting Mechanical Ventilation and Mortality in COVID-19 Using Radiomics and Deep Learning on Chest Radiographs: A Multi-Institutional Study. Diagnostics, 11(10), 1812. https://doi.org/10.3390/diagnostics11101812