Abstract

Human–machine collaboration in assistive aerial teleoperation is frequently compromised by trust imbalances, which arise from the vehicle’s complex dynamics and the operator’s constrained perceptual feedback. We introduce a novel framework that enhances collaboration by dynamically integrating a model of human trust into the unmanned aerial vehicle’s trajectory planning. We first propose a Machine-Performance-Dependent trust model, specifically tailored for aerial teleoperation, that quantifies trust based on real-time safety and visibility metrics. This model then informs a trust-aware trajectory planning algorithm, which generates smooth and adaptive trajectories that continuously align with the operator’s trust level and intent inferred from control inputs. Extensive simulations conducted in diverse forest environments validate our approach. The results demonstrate that our method achieves task efficiency comparable to that of a trust-unaware baseline while significantly reducing operator workload and improving trajectory smoothness, achieving reductions of up to 23.2% and 43.2%, respectively, in challenging dense environments. By embedding trust dynamics directly into the trajectory optimization loop, this work pioneers a more intuitive, efficient, and resilient paradigm for assistive aerial teleoperation.

1. Introduction

Unmanned aerial vehicle (UAV) teleoperation technology enables UAVs to access hazardous or human-inaccessible environments, performing tasks such as search and rescue [1] and surveillance [2]. It keeps operators out of harm’s way while still drawing on human judgment and skill. However, in the traditional direct teleoperation mode, operators are responsible for all control tasks of the UAV, including flight path planning and obstacle avoidance [3], while the UAV itself lacks any high-level decision-making autonomy. This mode not only requires operators to possess extensive professional knowledge and experience but also leads to cognitive fatigue due to frequent manual operations [4]. Therefore, reducing operational difficulty and alleviating the operator’s burden, especially when performing tasks in complex and hazardous environments, remains a key challenge in UAV teleoperation technology [3,5].

With the advancement of UAV autonomous technology, the assistive aerial teleoperation mode has been widely recognized as an effective solution to address the aforementioned challenges, for example, by reducing high cognitive load and mitigating the need for extensive operator expertise [3,6]. In this mode, operators provide commands through remote control, while the UAV offers assistance by inferring the operators’ intent [5,6,7] and autonomously plans appropriate action strategies based on mission requirements. By combining human decision-making with machine-assisted execution, this collaborative approach can significantly reduce the operator’s workload while improving task efficiency and safety.

However, while assistive aerial teleoperation technology has made significant progress in reducing operators’ workload, it has also introduced the issue of trust imbalance in human–machine collaboration [8,9]. Due to the complex, high-dimensional, nonlinear dynamics of a UAV and the limited perceptual capabilities of operators through remote control terminals [10], it is difficult for humans to intuitively understand the UAV’s status and performance. Such information asymmetry [11] can lead to human trust imbalance, with serious consequences: over-trust may cause operators to fail to intervene in a timely manner in the UAV’s erroneous behaviors [12], thereby leading to mission failure, while a lack of trust may cause operators to frequently conduct unnecessary interventions [13], increasing the operational burden and reducing task execution efficiency.

Addressing this critical issue of trust imbalance reveals a twofold research gap, spanning from theory to application, that has hindered progress in the field. First, in the area of trust modeling, research specific to assistive aerial teleoperation remains limited. Most existing trust models are developed for other scenarios, such as ground mobile robots [14,15] and autonomous vehicles [16,17]. However, as these models do not account for the higher environmental complexity and the operator’s limited perceptual capabilities inherent to UAV teleoperation, they cannot be directly applied. Second, there is a practical gap in trust application. Even with a suitable model, the question of how to operationally use this dynamic trust information to guide the UAV’s behavior remains largely unanswered. Most current assistive planners focus on aligning with operator intent [3,5,7], but they lack a mechanism to modulate their behavior based on the operator’s dynamic trust level. A planning framework that can integrate a real-time trust metric into its decision-making process is a crucial missing piece.

To address this twofold research gap, this paper introduces a novel framework for trust-aware trajectory planning in assistive aerial teleoperation. Our approach is founded upon the Machine-Performance-Dependent (MPD) human trust model framework, which provides a quantitative basis for linking objective system performance to an operator’s trust. We adapt and specialize this general framework for the UAV teleoperation scenario and, crucially, integrate it into a real-time trajectory planner. By tackling both the modeling and application gaps, the key contributions of this work are summarized as follows:

- We propose an MPD trust model tailored for assistive aerial teleoperation. The model quantifies the UAV’s performance capacity using safety and visibility metrics, which, in contrast to methods relying on abstract or binary scores, enables a more nuanced and dynamic representation of human trust that can inform trajectory planning.

- Building upon this model, we develop a trust-aware trajectory planner that, for the first time, integrates a dynamic trust level directly into the optimization loop. This enables the planner to continuously adapt its assistance strategy, moving beyond simple intent-matching to achieve true human–machine collaboration.

- We validate our approach through extensive simulations in challenging, randomly generated forest environments. The results confirm that our trust-aware method significantly reduces operator workload and enhances trajectory smoothness, achieving superior collaborative performance without compromising task efficiency compared to a trust-unaware baseline.

Our approach assumes a stable communication link between the operator and the UAV. The trust model parameters are calibrated using experimental data to estimate human factors. However, the model does not account for real-time adaptation to individual differences. The proposed trust model is validated in simulated forest environments, and its applicability in highly dynamic urban settings remains a subject for future work.

The remainder of this paper is structured as follows. Section 2 surveys the literature on assistive aerial teleoperation, along with related work on human-factor-aware trajectory planning and trust modeling. Section 3 outlines the problem formulation and introduces the foundational MPD trust model. Building on this, Section 4 details the proposed trust model tailored for UAVs, while Section 5 presents our core trust-aware trajectory planning framework. Section 6 describes the experiments and analyzes the results. Section 7 interprets the findings, illustrates the framework’s practical significance through a scenario-based evaluation, and outlines open issues for future work. Finally, Section 8 concludes this paper with a summary of our contributions.

2. Related Work

2.1. Assistive Aerial Teleoperation

Existing research in assistive aerial teleoperation primarily focuses on two levels: operational control and trajectory planning [3]. At the control level, some works model the task as a local safety problem by generating constraints to mitigate collision risks [18,19]. Other works, such as Backman et al. [20], propose a shared autonomy approach that learns a policy module to provide assistive control inputs for safe landing via the reinforcement learning algorithm TD3. At the planning level, other works concentrate on longer-horizon human–machine collaborative planning. For instance, Yang and Michael [7] propose a motion-primitive-based sampling method to generate long-horizon trajectories which align with the human intention while circumventing obstacles. Similarly, Wang et al. [6] present a gaze-enhanced teleoperation framework that generates a safe and perception-aware trajectory by capturing the operator’s exploratory intent from gaze information. However, while these approaches show increasing technical sophistication, their interaction models often lack a deep consideration of human factors, such as operator preferences and trust, which are critical for effective collaboration.

2.2. Trajectory Planning Considering Human Factors

In the field of autonomous driving, significant research has focused on trajectory planning that incorporates human factors to create more intelligent and acceptable vehicle behaviors. Some works focus on understanding the human-like behaviors of surrounding vehicles for better prediction. For instance, Zhang et al. [21] propose an interaction-aware and driving-style-aware model that first uses an unsupervised clustering algorithm to classify the driving styles of other drivers and then employs an encoder–decoder architecture for long-term trajectory prediction. Other works aim to make the autonomous vehicle’s own path feel more natural to the user. Igneczi et al. [22] develop a curve trajectory model based on a linear driver model, which calculates human-preferred node points from road curvature data to design a path that emulates average human driving patterns. Furthermore, to cater to individual user needs, some methods allow for explicit personalization. Huang et al. [23] present a personalized trajectory planning method for lane-change maneuvers, which uses an improved RRT algorithm to generate a cluster of feasible trajectories and then utilizes fuzzy linguistic preference relations and TOPSIS to select the optimal one that matches the user’s subjective preferences for safety and comfort. While these methods effectively integrate human factors like driving style and preference into ground-based trajectory planning, their underlying models and assumptions are tied to the structured nature of road networks and vehicle dynamics, which do not directly apply to the challenges of 3D aerial teleoperation. Among these human factors, the operator’s dynamic trust in the system’s capability is arguably the most critical yet least understood, especially in high-stakes aerial tasks.

2.3. Trust Models in Human–Machine Interaction

In fields beyond UAV teleoperation, particularly in autonomous driving and ground mobile robotics, trust modeling has been explored to facilitate smoother and safer human–machine collaboration. For instance, Li et al. [14] proposed a bidirectional trust-based variable autonomy (BTVA) approach for the field of semi-autonomous mobile robots to balance control between a human and a robot. Similarly, Saeidi and Wang [15] proposed a trust framework for the same field to resolve human–machine intent conflicts by continuously modulating the degree of automation. In the autonomous driving domain, Hu et al. [16] proposed a quantitative trust model to dynamically allocate control authority by measuring the deviation between vehicle performance and driver expectations. Furthermore, Fang et al. [17] proposed a hierarchical framework based on mutual trust evaluation for shared steering control to optimize authority allocation by evaluating driver skill and human–machine conflicts. However, these frameworks’ underlying assumptions do not hold in UAV teleoperation. The key distinction is the shift from navigating on a plane to maneuvering in complex 3D space, a challenge compounded by the operator’s limited situational awareness [10]. A critical consequence is that the machine’s objective capability becomes highly volatile and context-dependent. Existing trust models for more predictable ground systems do not explicitly parameterize this performance volatility. Our work, therefore, builds upon the MPD human trust model, which addresses this gap by modeling the machine’s context-dependent capability to achieve a more accurate and adaptive trust assessment.

3. Problem Formulation and Preliminaries

We formalize assistive aerial teleoperation as a human–UAV collaborative trajectory planning problem and employ the MPD trust model to characterize human trust dynamics. This section provides an overview of human–UAV collaborative trajectory planning and the MPD trust model.

3.1. Human–UAV Collaborative Trajectory Planning

Let the state vector of the UAV be denoted as , where represents the state space and n is the dimension of the state vector. Typically, comprises the UAV’s position, velocity, and other variables relevant to its dynamics. The control input is defined as , where denotes the admissible set of control inputs and m is the dimension of the control input. The control input governs the state transitions of the UAV.

To ensure both safety and operational feasibility during flight, the UAV’s trajectory must satisfy multiple constraints. These constraints arise from the system’s physical limitations and environmental factors. Specifically, we define as the position component of the state vector , representing the trajectory over a time horizon . The dynamic feasibility constraints are expressed as shown in (1):

where the i-th-order derivative of the trajectory is upper-bounded by a known limit of the UAV. Additionally, the UAV must avoid obstacles in the environment during flight. To this end, the trajectory should always lie within the safe region, as specified by the constraint in (2):

where , and denotes the set of obstacles.
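As a rough illustration of constraints (1) and (2), the sketch below checks a uniformly sampled position trajectory against derivative bounds and an obstacle set. It makes several assumptions that are not part of the formulation above: derivatives are approximated by forward differences, each bound applies to the Euclidean norm of the derivative, and obstacles are modeled as spheres. All function names are hypothetical.

```python
import math

def finite_difference(points, dt):
    """Approximate the time derivative of a sampled curve by forward differences."""
    return [
        tuple((b - a) / dt for a, b in zip(p0, p1))
        for p0, p1 in zip(points, points[1:])
    ]

def is_dynamically_feasible(points, dt, limits):
    """Check that the norm of the i-th derivative stays within limits[i - 1].

    Bounding the norm (rather than each axis) is an assumption of this sketch.
    """
    derivative = points
    for bound in limits:
        derivative = finite_difference(derivative, dt)
        if any(math.hypot(*d) > bound for d in derivative):
            return False
    return True

def is_collision_free(points, obstacles, margin=0.0):
    """Check that every sample lies outside all obstacles.

    Obstacles are (center, radius) spheres, which is an assumption of this sketch.
    """
    for p in points:
        for center, radius in obstacles:
            if math.dist(p, center) <= radius + margin:
                return False
    return True
```

A planner would typically run both checks on every candidate before scoring it, so that the cost in the optimization problem is only evaluated on admissible trajectories.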

In human–UAV collaborative scenarios without a predefined target, it is more appropriate to model the operator’s intent as directional preferences rather than specific state-space targets [24]. The operator conveys their intent by providing control signals through input devices, such as joysticks. To integrate this intent into trajectory planning, we introduce an intent cost function , which quantifies the alignment between the trajectory and the operator’s directives. Concurrently, a flight quality cost function is defined to assess metrics such as trajectory smoothness and energy efficiency.

Thus, the human–UAV collaborative trajectory planning problem can be formulated as the following optimization problem: given the operator’s input , compute a trajectory within the feasible space to minimize the combined cost, as shown in (3):

3.2. The MPD Human Trust and Its Dynamic Model

The MPD trust (denoted by ) is defined as the ratio of subjective machine capability (denoted by , referring to the machine capability perceived by the human) to objective machine capability (denoted by , referring to the machine’s actual capability at a given time). This relationship is formally expressed in (4):

where is the time when the human updates their subjective machine capability for the k-th time ( is the starting time of the considered human–machine system).

A dynamic model for MPD human trust was built, as detailed in (5):

where is defined in (6):

and is given in (7):

with specified in (8):

The four human factors (the static human factor via informed capability , the static human factor via observed performance , and the conservativeness and risk-awareness human factors and ), together with the actual machine performance during time interval , can be estimated using experimental data.

4. MPD Trust Model for Assistive Aerial Teleoperation

This section specifies the general MPD trust model, presented in (5), for the context of assistive aerial teleoperation. The initial step is to determine the machine’s performance and its objective capability . While is often assumed to be a constant value for a given task, as expressed in (9),

this assumption may not hold in practice. Especially for UAVs operating in unknown environments, the machine’s capability can vary due to dynamic environmental factors. Therefore, this work focuses on quantifying both and the context-dependent to formulate a specialized trust model for assistive aerial teleoperation.

4.1. Quantifying Machine Performance and Objective Machine Capability

In practice, the objective machine capability is an idealized benchmark derived from data on actual machine performance collected over an extended period. Consequently, both the machine performance and the objective machine capability originate from the same set of evaluation metrics. Their primary distinction lies in the temporal scope of the assessment: is evaluated over a discrete time interval , whereas reflects the aggregated performance across a long-term horizon.

We first define the evaluation metrics for machine performance and objective machine capability in the context of assistive aerial teleoperation. We then present the specific algorithms used to compute and .

4.1.1. UAV Trajectory Performance Metrics

To ensure compliance with both flight safety and operator visibility constraints, this work proposes a multi-dimensional metric that incorporates a safety factor and a visibility factor.

- (a) Safety. Safety is defined as a quantitative index of collision risk. This index reflects the principle that the collision risk increases as the radial distance to obstacles decreases and the velocity component towards them increases. Specifically, suppose that at time k, the position vector from the UAV to the nearest obstacle is . The corresponding minimum radial distance is modeled as the Euclidean norm of this position vector, as given in (10):

  Meanwhile, the flight velocity vector is . The velocity component in the obstacle direction is calculated by vector projection, as expressed in (11):

  where denotes the dot product of the velocity vector and the obstacle direction vector. To characterize the relative motion risk, we employ a kinematic risk indicator , which represents the constant deceleration required to avoid a collision [14]. The safety factor is then formulated as given in (12):

  where is a tunable coefficient that regulates risk sensitivity.

- (b)

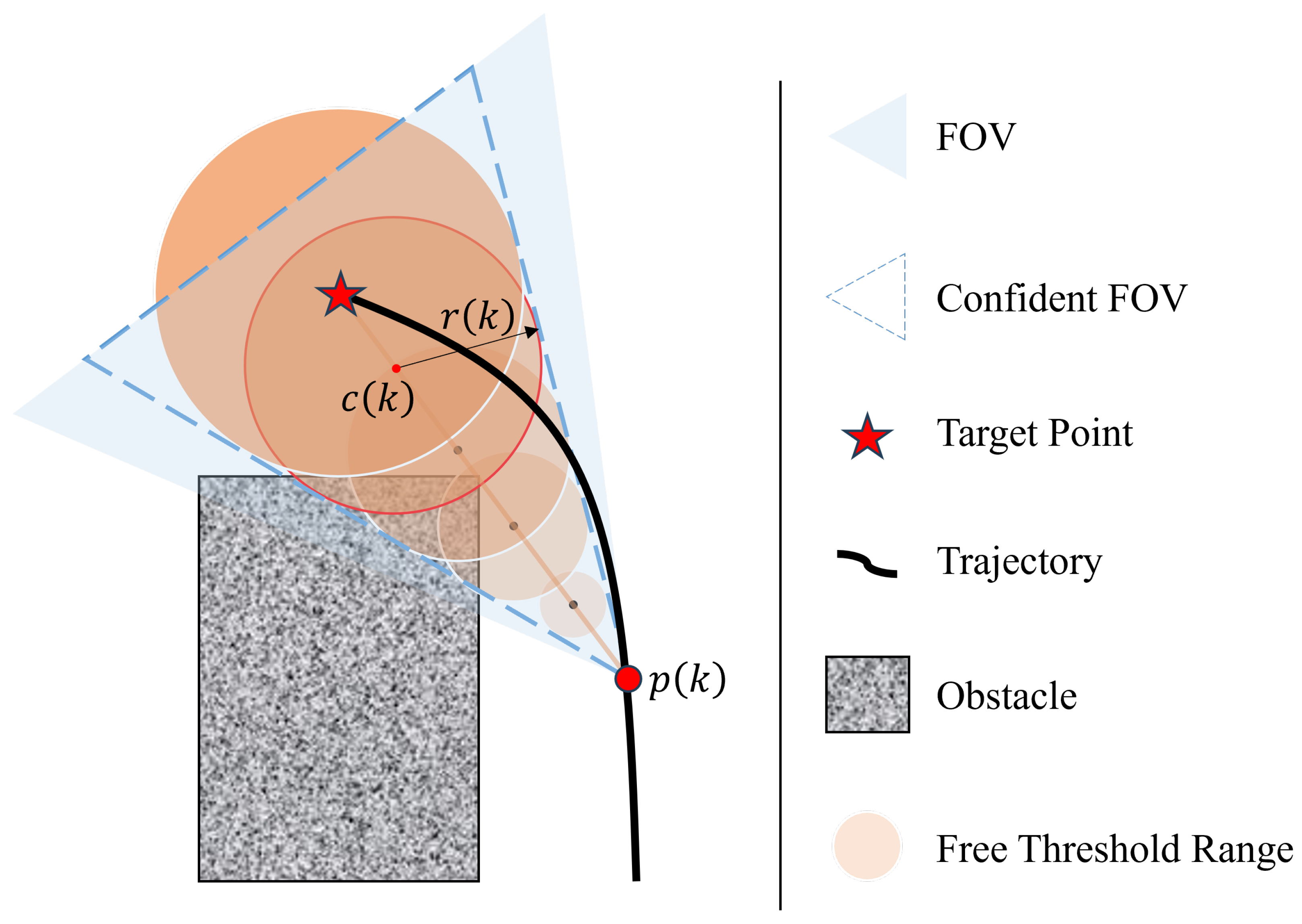

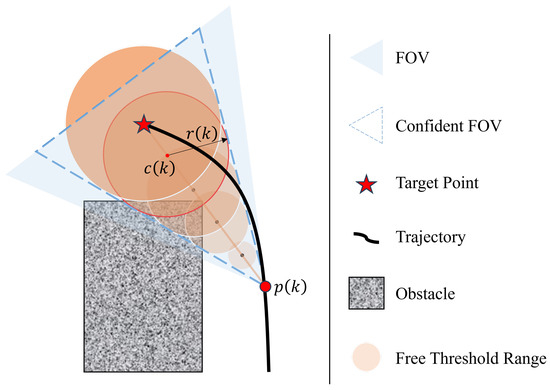

- VisibilityVisibility is a metric designed to quantify the level of occlusion within the UAV’s field of view (FOV) [25]. When the occluded area within the FOV exceeds a threshold, the environmental information available to the operator will significantly diminish, thereby affecting decision-making efficiency. As shown in Figure 1, the UAV trajectory point , and the target position . It is assumed that the UAV always faces the target. The blue area in the figure is the UAV’s FOV, and the blue dashed area is defined as the confident FOV, i.e., the core observation area where no occlusion is required in the ideal state. Considering that the computational complexity of the analytical solution for the occluded area of obstacles in the FOV is extremely high, we approximate the visibility by constructing a series of spherical regions , where N is the total number of spherical regions. The center and radius of the spherical regions are defined as given in (13):where , and is a parameter related to the size of the FOV. The visibility of the FOV is measured by comparing the minimum distance from the center point to the nearest obstacle with the radius of the corresponding sphere. The visibility of each area is ensured by the following condition, as given in (14):where denotes the minimum distance from to the nearest obstacle. Accordingly, the visibility factor at time k is defined as given in (15):

Figure 1. Illustration of the visibility metric definition.
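The two metrics can be sketched in a few lines of Python. The distance and projection steps follow the descriptions of (10) and (11). The remaining choices are assumptions made only for illustration, not the paper's Equations (12) to (15): the required deceleration is taken as the closing speed squared over twice the distance, it is mapped to a score by an exponential with a hypothetical coefficient `kappa`, the spheres are centered on the segment from the UAV to the target with radii growing linearly through a hypothetical parameter `rho`, and the visibility factor is the fraction of unobstructed spheres.

```python
import math

def safety_factor(rel_obstacle, velocity, kappa=1.0):
    """Score in [0, 1]; 1 means no braking is needed to avoid the nearest obstacle."""
    distance = math.hypot(*rel_obstacle)  # radial distance, as described for (10)
    if distance == 0.0:
        return 0.0
    # Velocity component toward the obstacle via projection, as described for (11).
    closing_speed = sum(v * p for v, p in zip(velocity, rel_obstacle)) / distance
    if closing_speed <= 0.0:
        return 1.0  # moving away from or tangentially to the obstacle
    # Constant deceleration needed to stop within `distance` (assumed form).
    required_decel = closing_speed ** 2 / (2.0 * distance)
    return math.exp(-kappa * required_decel)  # assumed mapping, not Eq. (12)

def visibility_factor(uav, target, nearest_obstacle_distance, n_spheres=5, rho=0.2):
    """Fraction of FOV spheres that contain no obstacle (assumed aggregation)."""
    visible = 0
    for i in range(1, n_spheres + 1):
        s = i / (n_spheres + 1)
        center = tuple(u + s * (t - u) for u, t in zip(uav, target))
        radius = rho * math.dist(uav, center)  # assumed linear growth with range
        if nearest_obstacle_distance(center) >= radius:
            visible += 1
    return visible / n_spheres
```

Here `nearest_obstacle_distance` is a caller-supplied function, for example a lookup into a distance field built from the onboard map.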


4.1.2. Determining Machine Performance

The machine performance is quantified by aggregating trajectory performance metrics within the time interval . Research in human–machine interaction suggests that trust formation is subject to two key temporal effects. First, recent performance immediately influences trust, an effect known as immediacy. Second, the cumulative effect of historical performance shapes long-term trust assessment [26]. This dual influence necessitates a modeling approach for machine performance that balances short-term responsiveness with long-term stability.

Specifically, within the interval , we define a sampling interval , where the interval length is , comprising N equally spaced sampling points. To incorporate observation data dynamically, we employ a sliding window spanning the most recent N sampling points, covering a duration of . Within this window, the safety indicator and visibility indicator of the UAV trajectory are computed using an Exponentially Weighted Moving Average (EWMA) to emphasize recent performance, as follows in (16):

where represents the i-th sampling point backward from the current time, and is a decay factor that adjusts the degree of influence of historical data. A smaller value of places greater emphasis on more recent data. The machine performance is then defined as given in (17):

where and are weighting coefficients for safety and visibility, respectively, satisfying . The value quantifies the overall trajectory performance within .
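A minimal sketch of this aggregation step is given below. It assumes that the EWMA weights are powers of the decay factor and that they are normalized to sum to one; the normalization, the default values, and the function names are illustrative assumptions rather than the exact form of (16) and (17).

```python
def ewma(samples, decay):
    """Exponentially weighted average of a window.

    samples[0] is the most recent value and samples[i] lies i steps in the past,
    so a smaller decay puts more weight on recent data.
    """
    if not samples:
        raise ValueError("the sliding window must contain at least one sample")
    weights = [decay ** i for i in range(len(samples))]
    # Normalizing by the weight sum is an assumption of this sketch.
    return sum(w * s for w, s in zip(weights, samples)) / sum(weights)

def machine_performance(safety_samples, visibility_samples,
                        decay=0.8, w_safety=0.6, w_visibility=0.4):
    """Weighted sum of the smoothed safety and visibility indicators."""
    if abs(w_safety + w_visibility - 1.0) > 1e-9:
        raise ValueError("the two weights must sum to 1")
    return (w_safety * ewma(safety_samples, decay)
            + w_visibility * ewma(visibility_samples, decay))
```

With a decay of 0.5 and the window `[1.0, 0.0]`, the most recent sample carries two thirds of the weight, which illustrates the immediacy effect described above.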

4.1.3. Determining Objective Machine Capability

Given the dynamic and uncertain environments that UAVs encounter during missions, their objective machine capability may vary due to environmental factors. To calculate , we integrate prior knowledge from the system’s predefined capability model with posterior knowledge derived from real-time environmental feedback. The machine capability at time step k is thus expressed as given in (18):

where represents the actual machine performance over , and is a dynamic factor reflecting the contribution of recent performance to the capability assessment.
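One simple way to combine prior and posterior knowledge is a convex blend, sketched below. This form is an assumption chosen for illustration and may differ from (18); `eta` stands in for the dynamic factor and `prior_capability` for the value given by the predefined capability model.

```python
def objective_capability(prior_capability, recent_performance, eta):
    """Blend the predefined capability with the most recent measured performance.

    The convex combination is an assumed form, not necessarily Eq. (18).
    """
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")
    return (1.0 - eta) * prior_capability + eta * recent_performance
```

Setting `eta` to zero recovers the constant-capability assumption of (9), while larger values let the capability estimate track the environment more closely.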

4.2. The MPD Human Trust Model for Assistive Aerial Teleoperation

The MPD human trust model for assistive aerial teleoperation can now be formulated as follows in (19):

where , , and can be similarly defined as in (6), (7), and (8), respectively. In particular, and can be calculated as in (17) and (18), respectively.

Regarding the human factors from (5), the initial trust value, , must be determined before the system operates. In this work, we initialize this value using a pre-task trust scale. The three human-factor parameters, , , and , must be estimated from runtime data. Various methods are suitable for this purpose, such as the Least Squares Estimation approach.

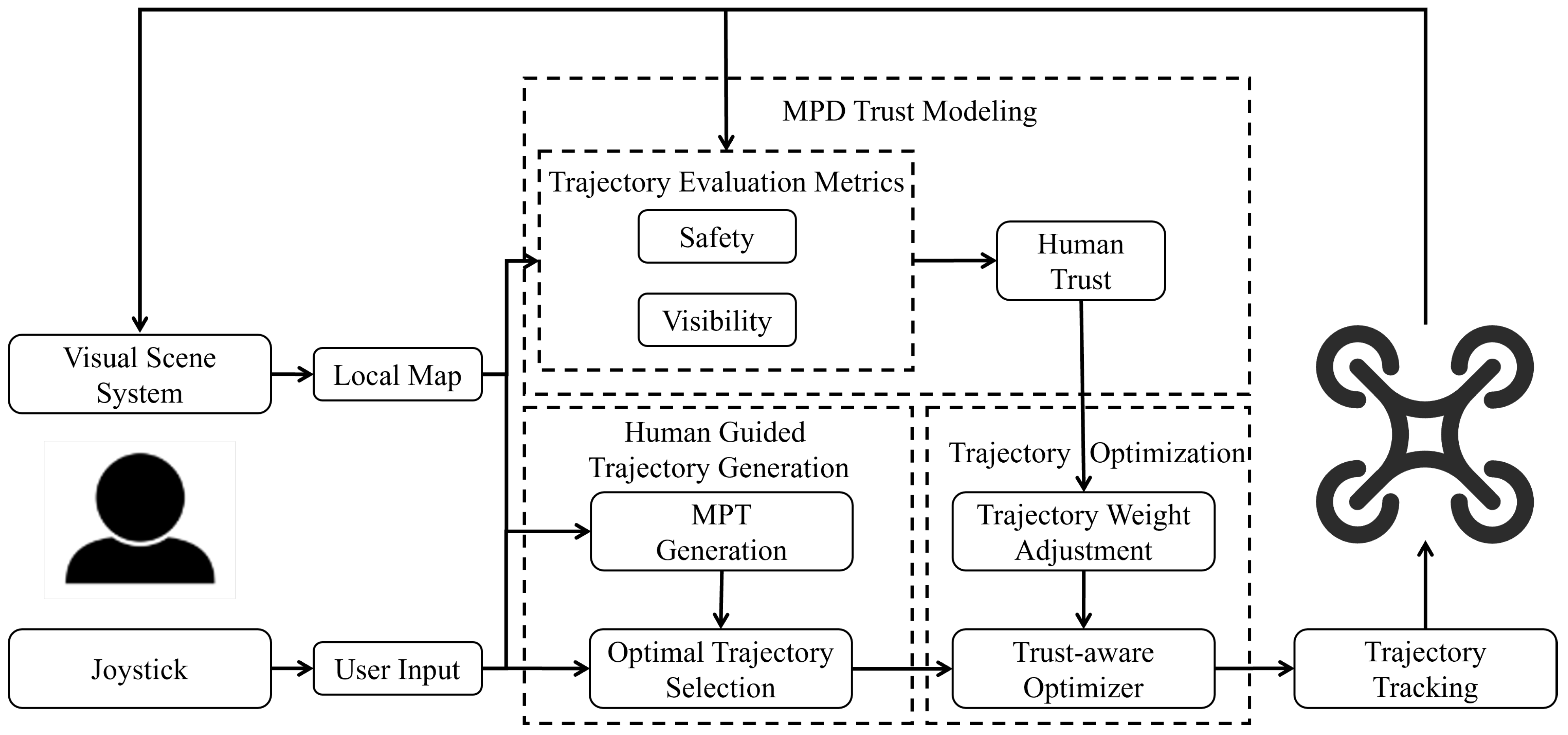

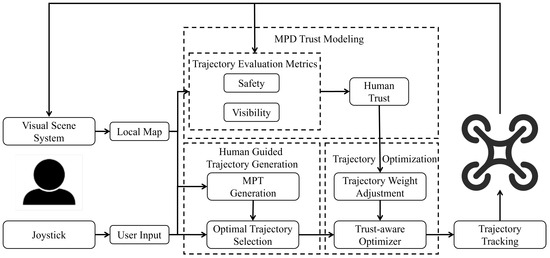

5. Trust-Aware Human–UAV Collaborative Trajectory Planning

This section presents a trust-aware method for human–UAV collaborative trajectory planning. First, a trajectory generation method is adopted to generate a candidate trajectory set. Subsequently, the optimal trajectory is selected from the candidate set by minimizing a cost function that incorporates the discrete Fréchet distances to the current trajectory and the human input primitive, as well as the trust value associated with each trajectory. Finally, the selected trajectories are reparameterized and optimized in conjunction with human trust levels using MINCO’s representation. The specific process is detailed in Algorithm 1 and an overview of the entire framework is shown in Figure 2.

| Algorithm 1 Trust-aware human–UAV collaborative trajectory planning. |
| --- |
| Require: Initial state , User input , Motion primitive tree , Sample set , Human trust |
| Ensure: Human ideal trajectory |

Figure 2. The framework diagram presents a human–UAV system for assistive aerial teleoperation that incorporates human trust into trajectory planning. The system uses human input to guide trajectory planning and generate an initial trajectory while simultaneously modeling human trust via the MPD trust model. Subsequently, the system optimizes the initial trajectory to match the dynamic trust level, ultimately forming an optimized path for UAV trajectory tracking.

5.1. Trajectory Generation Guided by Human Intentions

5.1.1. Motion Primitive Tree Generation

Existing studies have demonstrated that quadrotors are differentially flat systems [27]; thus, we can compute precise inputs to make the vehicle follow a specified trajectory in terms of the flat outputs x, y, z, and yaw angle . We define the state as , where the state space . The operator provides inputs with respect to a level frame , which is a world-z-aligned frame rigidly attached to the vehicle’s origin.

Inspired by [5], we generate motion primitives as polynomial trajectories. This method constrains the trajectory by the vehicle’s current state and a target velocity profile guided by the operator’s input. The state of the motion primitive is set to evolve according to the unicycle model [28], as expressed in (20):

The motion primitive, denoted as , is an eighth-order polynomial trajectory in the world frame of the form . Its coefficients are uniquely determined. Given the current state and its higher-order derivatives (up to the fourth order), along with a duration , the operator’s input primitive for is generated by enforcing the following boundary conditions, as given in (21):

where is the transformation matrix from the level frame to the world frame at time t. The primary role of this guiding primitive is to direct the search process, ensuring the generated trajectories align with the user’s desired direction.
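To make the target velocity profile concrete, the sketch below integrates the standard unicycle model extended with a vertical velocity, using forward Euler steps. It only illustrates where a given input would steer the vehicle. The polynomial fitting through the boundary conditions in (21) is not reproduced, and the function name and step count are hypothetical.

```python
import math

def rollout_unicycle(state, forward_speed, yaw_rate, vertical_speed,
                     duration, steps=50):
    """Integrate the unicycle model from state = (x, y, z, yaw) with Euler steps."""
    x, y, z, yaw = state
    dt = duration / steps
    path = [(x, y, z, yaw)]
    for _ in range(steps):
        x += forward_speed * math.cos(yaw) * dt   # forward motion along the heading
        y += forward_speed * math.sin(yaw) * dt
        z += vertical_speed * dt                  # independent climb or descent
        yaw += yaw_rate * dt                      # heading change
        path.append((x, y, z, yaw))
    return path
```

The endpoint of such a rollout gives an intuitive picture of the terminal velocity direction that the boundary conditions impose on the guiding primitive.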

For generating the tree, we fix the forward velocity to the value provided by the human operator’s input . We then perform discrete sampling on the remaining action parameters: angular velocity , vertical velocity , and duration T. By iteratively sampling the action space , we propagate from node to its child node set . We generate a corresponding candidate primitive by applying the exact same polynomial generation process defined by Equations (20) and (21). We then check if it lies within the safe space to ensure all generated primitives are collision-free. This acts as a hard constraint, immediately discarding any inherently unsafe paths and guaranteeing that only collision-free trajectories are considered for the subsequent stages.

To evaluate the directional similarity between a candidate primitive and the human-guided primitive, we compute a cost based on their direction vectors. We define a mapping that extracts the spatial coordinates from a full state vector. The cost is then given by (22):

where is the duration of the motion primitive corresponding to node , is the initial state corresponding to node , and is the current state. We sort the costs and select the node with the minimum cost from the candidate node set to add to the sampling set , which is used for the next round of motion primitive expansion.
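The node selection step can be sketched as follows. Because the exact form of (22) is not reproduced here, the cost is assumed to be one minus the cosine of the angle between the displacement of a candidate primitive and that of the human-guided primitive, both measured from the current position. All names are hypothetical.

```python
import math

def direction_cost(candidate_end, guide_end, current_position):
    """Return 0 for aligned directions and 2 for opposite ones (assumed cost form)."""
    cand = [c - p for c, p in zip(candidate_end, current_position)]
    guide = [g - p for g, p in zip(guide_end, current_position)]
    norm = math.hypot(*cand) * math.hypot(*guide)
    if norm == 0.0:
        return 1.0  # undefined direction: treat as orthogonal
    cosine = sum(a * b for a, b in zip(cand, guide)) / norm
    return 1.0 - cosine

def select_best(candidate_ends, guide_end, current_position):
    """Pick the candidate endpoint whose direction best matches the guide."""
    return min(candidate_ends,
               key=lambda end: direction_cost(end, guide_end, current_position))
```

In the tree expansion, the selected node would then be appended to the sampling set and used as the parent for the next round of primitives.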

When exceeds the range of the local perception, or the number of nodes reaches a preset threshold, the motion primitive tree ceases to expand. By connecting nodes at different depths, a series of trajectories are obtained.

5.1.2. Trajectory Selection

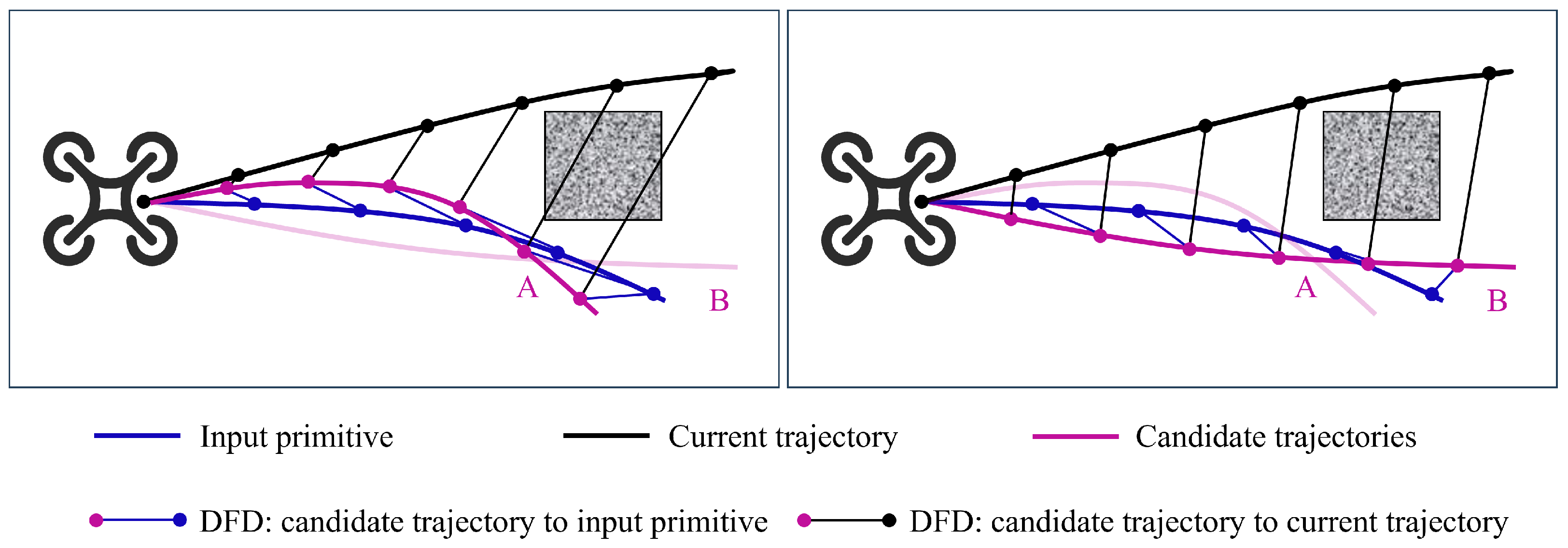

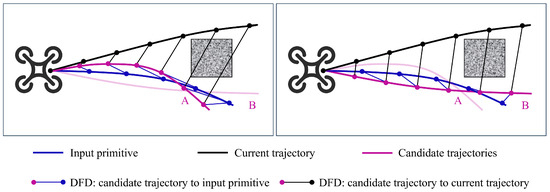

Given a set of candidate trajectories , we select the human-desired trajectory from them. We need to balance three objectives: minimizing the deviation from the current trajectory to ensure a smooth transition, minimizing the deviation from the human input primitives [3], and maximizing the average trust value associated with each trajectory. To this end, we introduce a selection cost function, which evaluates the proximity of each trajectory to the current trajectory and the operator input primitive by calculating the discrete Fréchet distance (see Figure 3) [29] while also considering the trust value of each trajectory. A brief definition of the discrete Fréchet distance is given as follows.

Figure 3. Illustration of the calculation of discrete Fréchet distance (DFD) in trajectory selection. The DFD of each candidate trajectory with respect to the operator input primitives and the current trajectory is calculated.

Consider a discrete sampling of two continuous functions f and g, forming sequences and , consisting of n and m discrete points, respectively. An order-preserving complete correspondence between and is defined by a pair of discrete monotone reparameterizations [5], where maps to and maps to . The discrete Fréchet distance of and is given by (23):

where range over all order-preserving complete correspondences between and . Thus, for time-parameterized trajectories and , their Fréchet distance is defined as given in (24):

where denotes sampling at a fixed time interval .
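The discrete Fréchet distance between two sampled curves can be computed with the classic dynamic programming recurrence, as sketched below. The function name is hypothetical, and the curves are assumed to have already been sampled at a fixed time interval as in (24).

```python
import math

def discrete_frechet(curve_p, curve_q):
    """Discrete Fréchet distance between two point sequences via dynamic programming."""
    n, m = len(curve_p), len(curve_q)
    if n == 0 or m == 0:
        raise ValueError("both curves must contain at least one point")
    # table[i][j] holds the distance between the prefixes ending at points i and j.
    table = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(curve_p[i], curve_q[j])
            if i == 0 and j == 0:
                table[i][j] = d
            elif i == 0:
                table[i][j] = max(table[0][j - 1], d)
            elif j == 0:
                table[i][j] = max(table[i - 1][0], d)
            else:
                best_previous = min(table[i - 1][j],
                                    table[i - 1][j - 1],
                                    table[i][j - 1])
                table[i][j] = max(best_previous, d)
    return table[-1][-1]
```

The table has n × m entries, so the cost grows with the product of the two sample counts; a coarser sampling interval trades accuracy for speed.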

Based on the above definitions, we derive the trajectory selection mechanism as follows: Given the current trajectory , the operator input primitive , and the average trust value for each candidate trajectory, the desired trajectory is determined by (25):

where weights the smoothness with the current trajectory, weights the deviation from the human input primitive, and weights the trust value.
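The selection rule can be sketched as a weighted argmin over precomputed quantities. The sketch assumes that the trust term enters with a negative sign, so that higher average trust lowers the cost; this sign convention, the dictionary keys, and the default weights are illustrative assumptions rather than the exact form of (25).

```python
def select_trajectory(candidates, w_current=1.0, w_input=1.0, w_trust=1.0):
    """Return the candidate with the lowest selection cost.

    Each candidate is a dict with:
      'dfd_current': discrete Fréchet distance to the current trajectory,
      'dfd_input':   discrete Fréchet distance to the operator input primitive,
      'trust':       average trust value along the candidate.
    """
    def cost(c):
        # Subtracting the trust term rewards high-trust candidates (assumed sign).
        return (w_current * c["dfd_current"]
                + w_input * c["dfd_input"]
                - w_trust * c["trust"])
    return min(candidates, key=cost)
```

Setting `w_trust` to zero reduces the rule to a purely geometric selection, which corresponds to a trust-unaware planner.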

5.2. Optimization Problem Formulation

The desired human trajectory is selected from the motion primitive tree. In this section, a trajectory representation method called MINCO [30] is adopted to reparameterize the trajectory. MINCO performs a spatiotemporal decoupling transformation on the M-piece trajectory with flat output through a linear-complexity mapping, thereby decoupling the spatial parameters from the temporal parameters. Specifically, the s-order MINCO trajectory is a -order polynomial spline with constant boundary conditions, which is defined as given in (26):

where is an m-dimensional M-piece polynomial trajectory. is the polynomial coefficient, and is a linear-complexity smooth map from intermediate points and a time allocation for all pieces to the coefficients of splines. The i-th piece of the trajectory, , is defined as given in (27):

where is the natural basis, is the coefficient matrix, , and .

Define the constraint points . The sampling manner on each piece of the trajectory is given by , where and denotes the number of sampling points in the i-th piece. These points serve to convert the time integrals of certain constraint functions into a weighted sum of sampling penalty functions. Considering our requirements, all constraints are converted into penalty terms, and the trajectory optimization problem is formulated as given in (28):

where is the execution time penalty, is the safety penalty, is the control effort penalty, and is the dynamic feasibility penalty. is the weight vector used to balance these respective penalties.

5.2.1. Execution Time Penalty

To enhance the flight speed of the UAV, we impose a penalty term on the total execution time . Its gradients are given by and .

5.2.2. Safety Penalty

Inspired by [31], the safety penalty term for obstacle avoidance is computed using the Euclidean Signed Distance Field (ESDF). We penalize sampling points that are too close to obstacles, as expressed in (29):

where is the safety distance threshold set according to practical scenarios, and represents the distance from to the nearest obstacle. The safety penalty term is then derived by computing the weighted sum of the sampled constraint functions, specifically as given in (30):

where are the quadrature coefficients following the trapezoidal rule [32]. The detailed derivation of the gradient of is as given in (31):

where t is the relative time on this piece. For the case where , the gradient is given as expressed in (32):

where is the gradient of the ESDF at . Otherwise, the gradients become and .
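The structure of this penalty can be sketched as follows. The cubic form of the per-point penalty is a common choice in ESDF-based planners and is assumed here rather than taken from (29); distances are passed in directly instead of being queried from an ESDF, and all names are hypothetical.

```python
def point_safety_penalty(distance, threshold):
    """Return (penalty, d penalty / d distance) for one constraint point."""
    violation = threshold - distance
    if violation <= 0.0:
        return 0.0, 0.0          # far enough from obstacles: no penalty, no gradient
    # Cubic penalty keeps the gradient continuous at the threshold (assumed form).
    return violation ** 3, -3.0 * violation ** 2

def trapezoid_weights(n_intervals):
    """Quadrature coefficients of the trapezoidal rule for n_intervals + 1 points."""
    if n_intervals < 1:
        raise ValueError("at least one interval is required")
    return [0.5] + [1.0] * (n_intervals - 1) + [0.5]

def piece_safety_penalty(distances, piece_duration, threshold):
    """Approximate the time integral of the penalty over one trajectory piece."""
    n_intervals = len(distances) - 1
    weights = trapezoid_weights(n_intervals)
    total = sum(w * point_safety_penalty(d, threshold)[0]
                for w, d in zip(weights, distances))
    return piece_duration / n_intervals * total
```

The returned derivative with respect to distance would be chained with the ESDF gradient at the constraint point to obtain the gradient with respect to the trajectory coefficients.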

5.2.3. Control Effort Penalty

According to [33], the s-order control input and its gradient for the i-th piece of the trajectory can be expressed as given in (33):
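As an illustration, the control effort of one polynomial piece and its gradients can be computed in closed form. The sketch below assumes the piece is written as the coefficient matrix times the natural basis $[1, t, \ldots, t^N]$ and takes the control effort as the integral of the squared s-th derivative over the piece duration. These closed forms follow from direct integration of a polynomial; whether they match (33) term by term cannot be confirmed from the text. The function names are hypothetical.

```python
from math import factorial

import numpy as np


def basis_derivative(t, degree, s):
    """s-th derivative of the natural basis [1, t, ..., t^degree] at time t."""
    values = np.zeros(degree + 1)
    for k in range(s, degree + 1):
        values[k] = factorial(k) / factorial(k - s) * t ** (k - s)
    return values


def effort_matrix(duration, degree, s):
    """Integral over [0, duration] of the outer product of basis derivatives."""
    q = np.zeros((degree + 1, degree + 1))
    for k in range(s, degree + 1):
        for l in range(s, degree + 1):
            coef = (factorial(k) / factorial(k - s)) * (factorial(l) / factorial(l - s))
            power = k + l - 2 * s + 1
            q[k, l] = coef * duration**power / power
    return q


def control_effort(coeffs, duration, s):
    """Return the effort, its gradient w.r.t. coeffs, and w.r.t. duration."""
    degree = coeffs.shape[0] - 1
    q = effort_matrix(duration, degree, s)
    cost = float(np.trace(coeffs.T @ q @ coeffs))
    grad_coeffs = 2.0 * q @ coeffs
    # Leibniz rule: the derivative w.r.t. the upper limit is the integrand there.
    end_value = coeffs.T @ basis_derivative(duration, degree, s)
    grad_duration = float(end_value @ end_value)
    return cost, grad_coeffs, grad_duration


# Toy check: p(t) = t^3 in one dimension has constant jerk 6, so with s = 3
# the effort over [0, 2] is 36 * 2 = 72 and its time derivative is 36.
c = np.zeros((6, 1))
c[3, 0] = 1.0
cost, grad_c, grad_T = control_effort(c, 2.0, 3)
```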

5.2.4. Dynamic Feasibility Penalty

When the trajectory of the UAV approaches physical constraints, the dynamic feasibility penalty mechanism is triggered. To ensure that the UAV can execute the trajectory, we impose limits on the maximum values of velocity, acceleration, and jerk, as expressed in (34):

where $v$, $a$, $j$, $\mu$, and $t$ denote velocity, acceleration, jerk, the coordinate axis index, and the sampling time, respectively, and $v_{\max}$, $a_{\max}$, and $j_{\max}$ are the maximum allowable velocity, acceleration, and jerk. The dynamic feasibility penalty term [6] is obtained by calculating the weighted sum of the sampled constraint functions, as given in (35):

where . The gradient can be expressed as given in (36):

In practical implementation, to reserve some power resources and ensure the feasibility of the trajectory, we take 90% of the maximum allowable velocity, acceleration, and jerk as the thresholds.
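The effect of the 90% margin can be sketched as follows. This is a minimal Python illustration under assumptions: the per-axis penalty is taken as the amount by which the squared quantity exceeds the squared threshold, which is one common smooth choice and not necessarily the form of (34), and the limit values in the example are hypothetical.

```python
import numpy as np


def feasibility_violation(values, limit, margin=0.9):
    """Per-axis violation of a squared limit, using margin * limit as threshold."""
    threshold = margin * limit
    return np.maximum(np.asarray(values, dtype=float) ** 2 - threshold**2, 0.0)


def feasibility_penalty(vel, acc, jerk, v_max, a_max, j_max, margin=0.9):
    """Sum of velocity, acceleration, and jerk violations at one sample."""
    return float(
        feasibility_violation(vel, v_max, margin).sum()
        + feasibility_violation(acc, a_max, margin).sum()
        + feasibility_violation(jerk, j_max, margin).sum()
    )


# Hypothetical limits: 2 m/s, 3 m/s^2, 20 m/s^3.
# A speed of 1.9 m/s is below the hard limit but above the 1.8 m/s threshold.
penalized = feasibility_penalty([1.9, 0.0, 0.0], [0.0] * 3, [0.0] * 3, 2.0, 3.0, 20.0)
unpenalized = feasibility_penalty([1.7, 0.0, 0.0], [0.0] * 3, [0.0] * 3, 2.0, 3.0, 20.0)
```

With the margin in place, the optimizer starts to push back before the physical limit is reached, which leaves headroom for tracking errors during execution.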

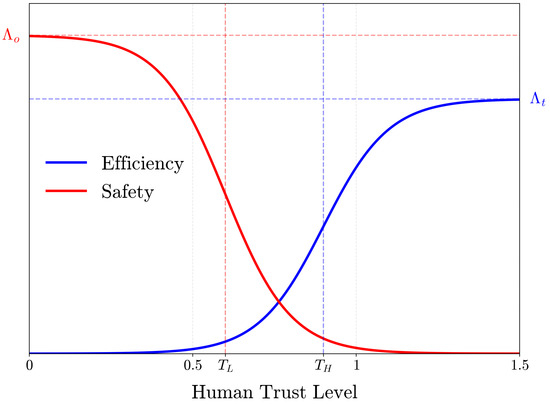

5.3. Trajectory Weight Adjustment Based on Human Trust

Considering the changes in human trust in the UAV, the optimized trajectory should balance search efficiency and safety. The efficiency (execution time) weight and the safety weight are therefore further adjusted as given in (37):

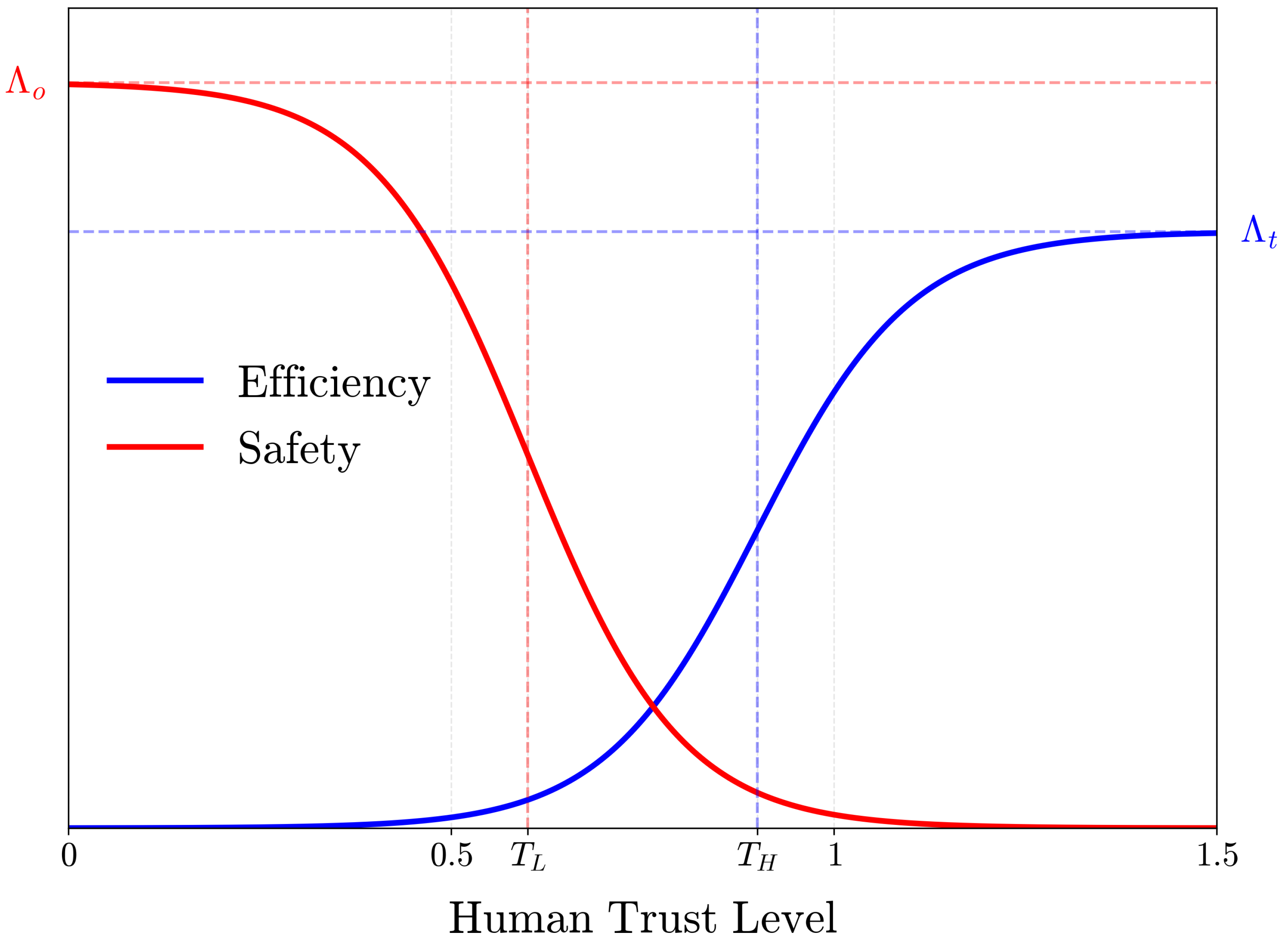

Based on the historical trajectory of the UAV and the MPD human trust model, we can calculate the human trust level $T$. We categorize the trust level into three ranges from low to high: $T < T_{\mathrm{low}}$, $T_{\mathrm{low}} \le T \le T_{\mathrm{high}}$, and $T > T_{\mathrm{high}}$, where $T_{\mathrm{low}}$ and $T_{\mathrm{high}}$ are predefined trust thresholds. When the operator’s trust in the UAV is low ($T < T_{\mathrm{low}}$), the operator may compete with the UAV for control, posing a significant safety risk. In this scenario, the efficiency weight is assigned a smaller value, while the safety weight is assigned a larger value to enhance the safety of the UAV during human intervention. As the operator’s trust increases ($T_{\mathrm{low}} \le T \le T_{\mathrm{high}}$), the efficiency weight is gradually increased and the safety weight is gradually decreased to balance trajectory rapidity and safety. When the operator’s trust is high ($T > T_{\mathrm{high}}$), reflecting strong confidence in the UAV’s capabilities, the efficiency weight is set to a larger value and the safety weight to a smaller value to optimize search efficiency and reduce the operator’s long-term workload. To accommodate the requirements of these distinct trust intervals, the specific expressions for the efficiency weight adjustment and the safety weight adjustment, as functions of the human trust level $T$, are given by (38) and illustrated in Figure 4:

Figure 4.

Trust-aware trajectory weight adjustment function.

The key parameters are set based on a combination of pilot studies and design principles. The trust thresholds are set to and based on pilot studies. The adjustment bounds follow a safety-first principle where , ensuring safety concerns elicit a stronger system response than efficiency gains. Finally, the transition slopes () were tuned through iterative user feedback to achieve a behavioral transition that operators perceived as smooth and predictable.
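The qualitative behavior described in this subsection can be sketched with a simple piecewise-linear schedule. The Python example below is not Equation (38): the thresholds, the weight bounds, and the linear transition are all hypothetical placeholders chosen only to reproduce the three trust ranges and the safety-first asymmetry, in which the safety weight varies over a wider range than the efficiency weight.

```python
def interpolate_weight(trust, t_low, t_high, w_at_low, w_at_high):
    """Hold the weight constant outside [t_low, t_high], interpolate inside."""
    if trust <= t_low:
        return w_at_low
    if trust >= t_high:
        return w_at_high
    ratio = (trust - t_low) / (t_high - t_low)
    return w_at_low + ratio * (w_at_high - w_at_low)


def trust_aware_weights(trust, t_low=0.4, t_high=0.8,
                        efficiency_bounds=(0.5, 1.5), safety_bounds=(3.0, 1.0)):
    """Return (efficiency_weight, safety_weight) for a trust level in [0, 1].

    All default values are illustrative assumptions, not the paper's settings.
    Low trust gives a small efficiency weight and a large safety weight;
    high trust gives the opposite.
    """
    efficiency = interpolate_weight(trust, t_low, t_high, *efficiency_bounds)
    safety = interpolate_weight(trust, t_low, t_high, *safety_bounds)
    return efficiency, safety


low = trust_aware_weights(0.2)
mid = trust_aware_weights(0.6)
high = trust_aware_weights(0.9)
```

A smoother transition, for example a sigmoid with a tunable slope, would avoid the kinks at the two thresholds; the linear form is used here only because it is the simplest schedule with the stated monotonic behavior.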

6. Experiments and Results

In this section, we design and conduct an experiment on human–UAV collaboration to compare the effectiveness of our proposed method against a baseline approach that does not account for human trust. The experiments are performed on a high-performance desktop equipped with an Intel Core i9-14900KF CPU (24 cores, 32 threads, base frequency 3.2 GHz, maximum turbo frequency 6.0 GHz) and an NVIDIA GeForce RTX 4090 GPU (24 GB VRAM).

6.1. Implementation Details

To evaluate the proposed method, we conducted simulation experiments in three simulated random forest environments with varying tree densities. The simulation area was a rectangular volume measuring 70 m in length, 20 m in width, and 3 m in height, containing 50, 100, or 200 trees to represent sparse, medium, and dense scenarios, respectively. For the experiments, 15 students (ages 22–28) with no prior drone piloting experience were recruited. After a 5 min familiarization session, each operator was tasked with navigating a simulated quadrotor vehicle from one end of the forest to the other end. The experiment followed a within-subjects design, where each participant tested both our proposed method and a baseline approach. The order of the two methods was counterbalanced across participants. For each method, the operator performed the task once in each of the three random forest environments. The task was deemed complete upon reaching the opposite side. Operators were provided with a first-person view of the vehicle and sent commands via a joystick to guide its flight. They were permitted to intervene in the vehicle’s flight at any time.

We evaluate two aspects of the performance of our assistive aerial teleoperation system: (1) navigation efficiency, which mainly includes the distance, duration, and average velocity during the mission; and (2) the cost of human–UAV interaction, for which the core evaluation metrics are the number of operator inputs, jerk integral, and average trust. Specifically, the number of operator inputs indicates the intervention frequency of the assistive system, while the jerk integral reflects the piloting burden and trajectory smoothness. If the former two interaction indicators (operator inputs and jerk integral) are high, it indicates that the path searched by the assistive system does not match the human’s true intention, leading to poor flight quality and requiring more operator interaction to correct, which is often accompanied by lower trust. In contrast, if these indicators are low, it indicates that the operator agrees with the system’s path planning, which implies fluid human–machine collaboration and a smooth flight trajectory, ultimately building and maintaining a higher level of trust.
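For readers who wish to reproduce such metrics from logged data, the following Python sketch computes distance, duration, average velocity, and a jerk integral from uniformly sampled positions. It rests on assumptions: the text does not define the jerk integral precisely, so the sketch uses the time integral of the squared jerk norm, estimated with third-order finite differences, and the function names are hypothetical.

```python
import numpy as np


def navigation_metrics(positions, dt):
    """Return (distance, duration, average velocity) for uniform samples."""
    positions = np.asarray(positions, dtype=float)
    steps = np.diff(positions, axis=0)
    distance = float(np.linalg.norm(steps, axis=1).sum())
    duration = dt * (len(positions) - 1)
    return distance, duration, distance / duration


def jerk_integral(positions, dt):
    """Approximate the integral of squared jerk norm (assumed definition)."""
    positions = np.asarray(positions, dtype=float)
    jerk = np.diff(positions, n=3, axis=0) / dt**3  # third finite difference
    return float((np.linalg.norm(jerk, axis=1) ** 2).sum() * dt)


# Toy trajectory: x(t) = t^3 on [0, 2] s sampled at 10 Hz, so the jerk is 6.
dt = 0.1
times = np.arange(21) * dt
samples = np.stack([times**3, np.zeros(21), np.zeros(21)], axis=1)
distance, duration, avg_velocity = navigation_metrics(samples, dt)
smoothness = jerk_integral(samples, dt)
```

The number of operator inputs needs no numerical treatment: it is simply the count of joystick commands logged during the run.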

The motion primitive parameters used in the experiment are shown in Table 1. The maximum linear velocity input by the operator is set to 2 m/s. The parameters of the visibility metric are , . The parameters of the safety metric are . The specific parameters for the MPD trust model are detailed in Table 2. In our implementation, these parameters are empirically determined by referencing previous work and our own experiments, and their systematic optimization is beyond the scope of this paper.

Table 1.

Motion primitive parameters.

Table 2.

MPD trust model parameters.

6.2. Results Analysis

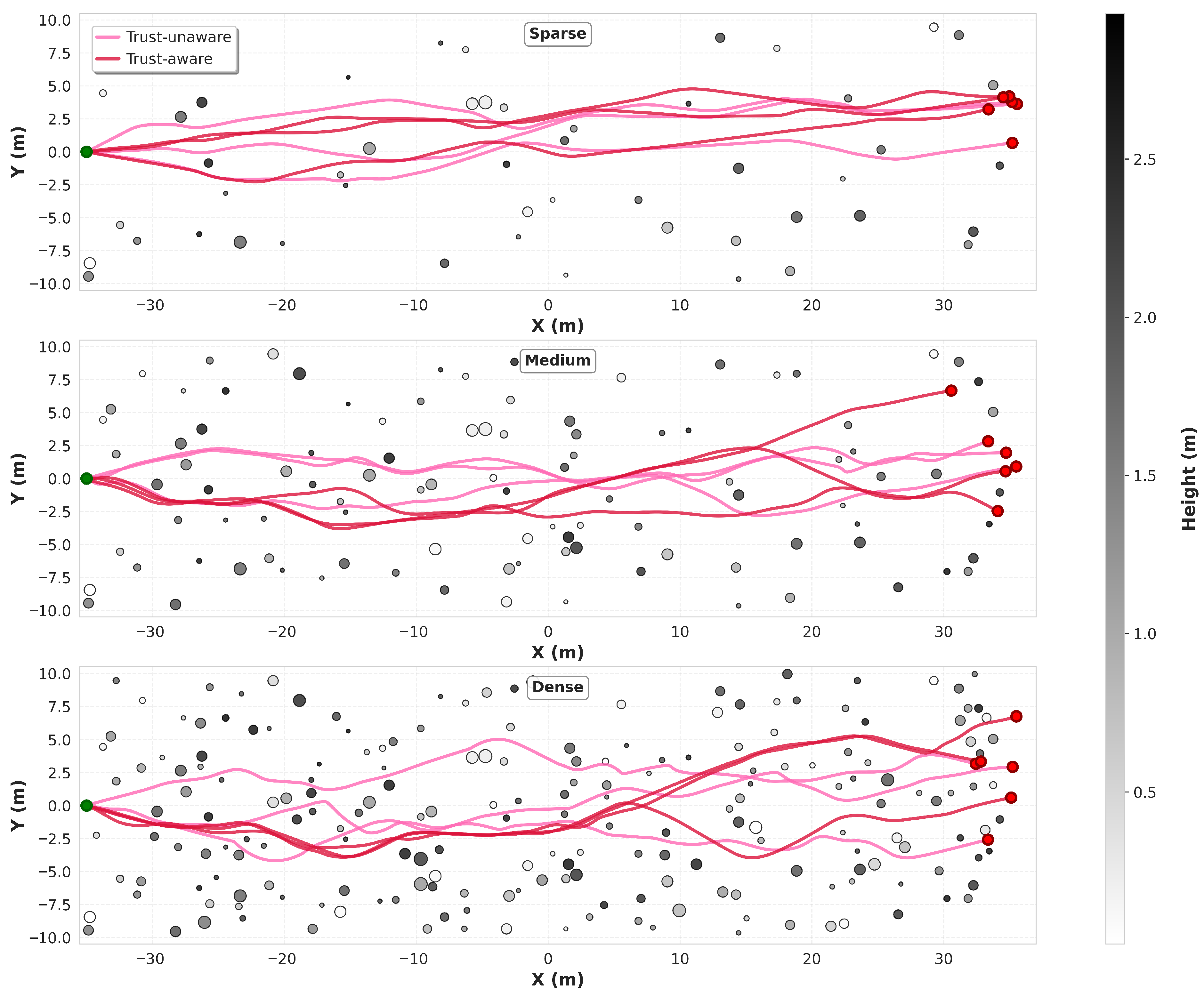

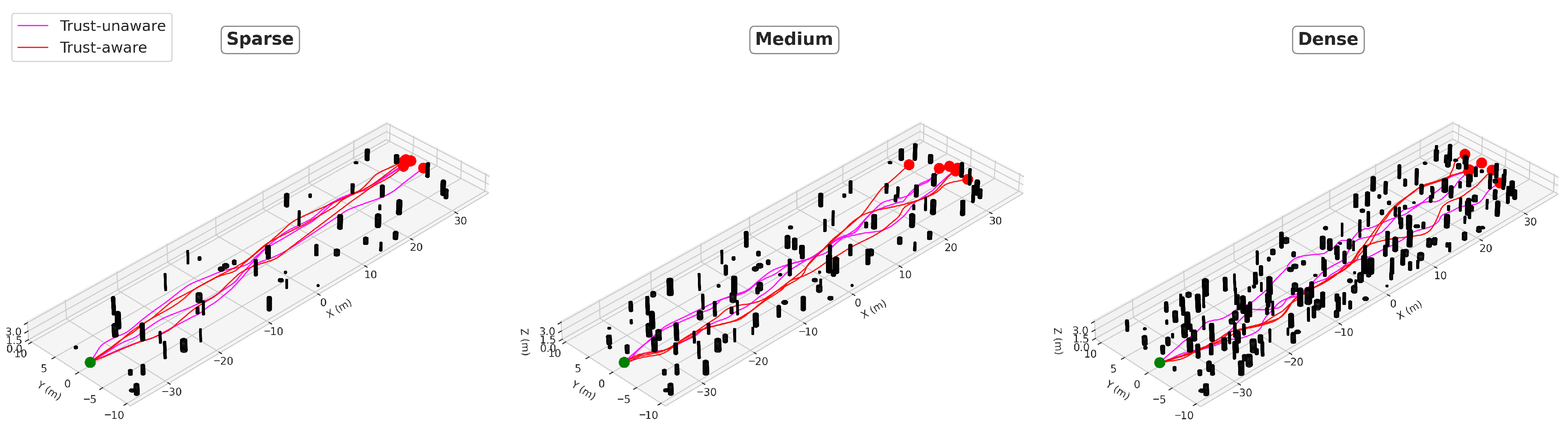

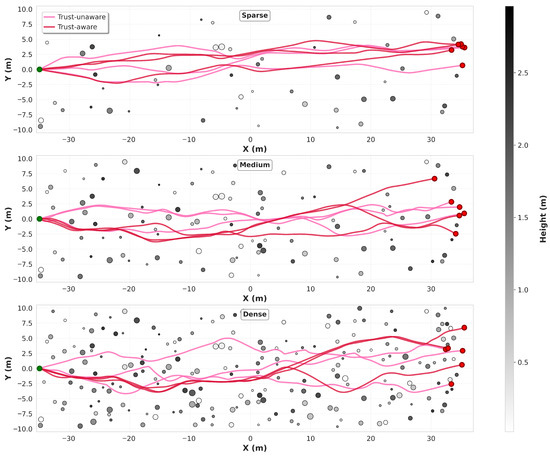

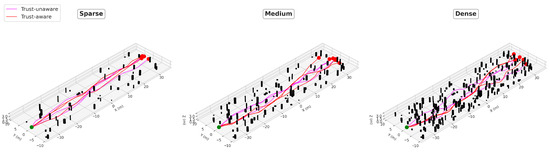

The quantitative results of our experiments are summarized in Table 3, which compares the performance of our proposed trust-aware approach against the trust-unaware baseline across four key dimensions: task completion efficiency, operator workload, trajectory quality, and human–machine trust. These experiments were conducted in three distinct environments to assess the robustness of both methods under varying levels of complexity. Trajectory comparisons are visually illustrated in Figure 5 (2D) and Figure 6 (3D), offering additional insights into the behavioral differences between the two approaches.

Table 3.

Results for the random forest environment.

Figure 5.

Two-dimensional trajectory comparison between trust-aware and trust-unaware methods under different obstacle densities. The three panels show trajectories in sparse (top, 50 trees), medium (middle, 100 trees), and dense (bottom, 200 trees) environments. The colorbar on the right indicates obstacle heights in meters. Green and red circles represent start and end points, respectively.

Figure 6.

Three-dimensional visualization of trajectory comparison across sparse, medium, and dense obstacle environments.

In terms of fundamental task completion metrics, both methods exhibit comparable performance across all environments. Flight distance is nearly identical between the trust-aware and trust-unaware approaches, and duration is of similar magnitude. For instance, in the dense environment, the trust-aware method recorded a flight distance of 71.38 m and a duration of 49.86 s, compared to 71.73 m and 54.89 s for the trust-unaware method. Similar trends are observed in the sparse and medium environments, indicating that incorporating the trust model does not compromise basic task execution efficiency: both methods plan effective paths of similar length across sparse, medium, and dense settings. However, the trust-aware method demonstrates a slight advantage in average velocity, particularly in the dense environment. This improvement is primarily attributed to smoother flight trajectories and fewer unnecessary pauses. Compared to the trust-unaware baseline, the trust-aware method produces paths with fewer sharp turns and abrupt changes, especially in the dense environment, enhancing the overall efficiency of motion.
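As a quick arithmetic check on the dense-environment figures quoted above, the mean speed implied by each distance and duration pair can be computed directly. This is a back-of-the-envelope sketch: the average velocity reported in Table 3 may have been computed differently (for example, as a time average of instantaneous speed), so the values below are indicative only.

```python
def mean_speed(distance_m, duration_s):
    """Mean speed implied by total path length and total flight time."""
    return distance_m / duration_s


trust_aware_speed = mean_speed(71.38, 49.86)    # about 1.43 m/s
trust_unaware_speed = mean_speed(71.73, 54.89)  # about 1.31 m/s
```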

The number of operator inputs serves as a core metric for evaluating operator intervention frequency and effort, directly reflecting the workload imposed on the operator. As shown in Table 3, the trust-aware method significantly reduces the number of operator inputs compared to the trust-unaware method across all scenarios. In the sparse environment, inputs decreased from 39 to 34 (a reduction of approximately 12.8%), and this gap widened in the dense environment, where inputs dropped from 56 to 43 (a reduction of approximately 23.2%). These results strongly demonstrate that our trust-aware method effectively alleviates the operator’s workload. The trust-aware system’s ability to dynamically adjust its assistance strategy based on real-time trust assessments underlies this improvement. When trust is high, the system operates more autonomously, reducing the need for operator fine-tuning. Conversely, when trust declines, the system adopts conservative behaviors that align better with the operator’s expectations, minimizing interventions caused by mismatches between system decisions and human intent.
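The reported percentages follow directly from the input counts. The short Python check below reproduces them; the helper name `percent_reduction` is hypothetical.

```python
def percent_reduction(baseline, proposed):
    """Relative reduction of `proposed` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline


sparse_reduction = percent_reduction(39, 34)  # sparse environment inputs
dense_reduction = percent_reduction(56, 43)   # dense environment inputs
```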

The jerk integral metric reflects the smoothness of the UAV’s flight, with lower values indicating smoother trajectories with fewer abrupt changes. The experimental results in Table 3 reveal that the trajectory quality of the trust-aware method consistently surpasses that of the trust-unaware baseline. In the sparse environment, the jerk integral was reduced by approximately 22.5%, and this improvement was even more pronounced in the dense environment, with a reduction of approximately 43.2%. These smoother trajectories imply safer, more energy-efficient flights and provide a more comfortable first-person view experience for the operator. The reduction in the jerk integral is closely tied to the decrease in operator inputs; fewer external interventions prevent frequent, abrupt changes in flight state. Furthermore, the trust-aware model’s ability to predict and align with the operator’s long-term intent results in more proactive and coherent paths, contrasting with the passive, jerky reactions of the trust-unaware baseline, particularly evident in the dense environment.

Finally, the average trust metric directly validates the effectiveness of our trust-aware approach. In all environments, the average trust level of operators was higher with the trust-aware method than with the trust-unaware baseline. For example, in the medium-density environment, the trust-aware method achieved an average trust of 0.750 compared to 0.657 for the trust-unaware method. Trust levels for both methods decreased as environmental complexity increased, which is expected due to the greater challenges in denser settings. However, the decline was gentler for the trust-aware method: a drop of 0.120, from 0.813 in the sparse environment to 0.693 in the dense environment, compared to a drop of 0.133, from 0.744 to 0.611, for the trust-unaware method. This suggests that by actively modeling and adapting to the operator’s trust state, our system establishes and maintains a more robust and resilient human–machine trust relationship. The higher trust levels both result from and contribute to the benefits observed (fewer inputs, smoother trajectories). As the system’s behavior becomes more reliable and predictable, operators are more willing to reduce interventions, creating a virtuous cycle.

7. Discussion

7.1. Discussion of Findings

The empirical findings provide compelling evidence that our trust-aware framework substantially elevates the quality of human–UAV interactions, as evidenced by reductions in operator workload, smoother trajectories, and more resilient trust dynamics—all without compromising core task metrics such as flight efficiency. This aligns with the framework’s core innovation: integrating an MPD trust model into the real-time planner, which dynamically predicts and adapts to operator intent and trust states. Unlike traditional trust-unaware baselines that react passively to inputs, our approach proactively aligns system behavior with human expectations and fosters more intuitive collaboration. These improvements extend beyond mere performance gains, underscoring the framework’s value in making human–machine systems safer and more user-centric, particularly in variable environments where baseline methods falter.

The core of this success lies in the formation of a virtuous cycle in operator–UAV interaction, a phenomenon observed across different environments. Specifically, this cycle occurs when the system’s trust-aware behavior aligns with operator expectations, which reduces unnecessary interventions and in turn reinforces the operator’s trust, fostering smoother and more effective collaboration. This cycle not only validates the important role of trust in human–machine interaction (e.g., [13]) but also provides a crucial extension to previous studies focused on intent-aligned trajectory generation [3,5]. While these prior works demonstrated that aligning with operator intent improves collaboration, our findings reveal that intent-alignment alone is insufficient under trust-eroding conditions. Specifically, our results in dense environments show that without a trust model, operator interventions remained high despite intent alignment, whereas our trust-aware approach successfully mitigated conflicts by dynamically increasing system caution. Our work thus extends these intent-based models by incorporating a vital second feedback loop, the operator’s dynamic trust state, which proves essential for robust performance in complex scenarios.

Furthermore, our framework makes a tangible contribution to the formal practice of risk analysis in human–UAV interaction. As conceptualized in frameworks like that of Sanz et al. [34], effective risk management for UAVs involves a cycle of hazard identification, assessment, and reduction. Our work provides a concrete, real-time implementation of this cycle. Specifically, the MPD trust model functions as a dynamic risk assessment tool, quantifying the risk of human–machine goal conflicts based on system performance and the operator’s trust level. The adaptive trajectory planner then serves as the risk reduction mechanism, translating this assessed risk into tangible actions by modulating the safety and efficiency weights. By formalizing the link between trust dynamics and system safety behaviors, our approach offers a more structured method for managing risk in collaborative aerial tasks, moving beyond the static safety rules common in prior systems.

However, while these findings affirm the framework’s efficacy in simulated scenarios, they also reveal critical limitations that warrant careful consideration, primarily stemming from the inherent sim-to-real gap. It must be acknowledged that our controlled simulation cannot fully replicate the complex uncertainties of the physical world. These include unmodeled aerodynamics, complex sensor noise profiles, and unpredictable communication latencies. Crucially, such factors could directly corrupt the real-time safety and visibility metrics that are fundamental inputs to our MPD trust model. Furthermore, beyond these physical uncertainties, our study utilized a limited operator cohort, and the framework’s generalizability across operators with varying expertise levels and risk tolerances remains an underexplored but vital area. These limitations, therefore, highlight critical open research issues regarding the framework’s real-world robustness and its capacity for personalization, paving the way for future enhancements.

7.2. Practical Significance and Scenario-Based Illustration

To illuminate the practical significance of our trust-aware framework, we present an illustrative scenario in a high-stakes application: post-disaster search and rescue.

Imagine a rescue mission where an operator must remotely pilot a UAV through a collapsed building to locate survivors. When using a UAV equipped only with basic obstacle avoidance, the operator faces immense cognitive load, simultaneously interpreting video feeds, navigating complex 3D spaces, and fighting against a system that may refuse to approach cluttered areas where a survivor might be. This often leads to human–machine conflict; to complete the mission, the operator might disable the assistance system, risking a catastrophic crash due to a moment of inattention. The loss of an expensive UAV not only incurs a financial cost but, more critically, could prematurely end a life-saving search.

Now, let us envision the same mission executed with our trust-aware framework. Its practical value unfolds in several stages:

- Initial trust calibration: As the UAV enters the building, it first navigates through less cluttered corridors. Its smooth, predictable trajectories, reflecting high safety and visibility, rapidly build the operator’s trust in the system’s competence.

- Intent-driven investigation: The operator spots a potential sign of life and issues a command to move closer for a better view. Because the operator’s trust is high, our framework accurately infers a strong, deliberate intent. It then generates a precise trajectory that navigates assertively yet safely around debris, fulfilling the operator’s goal without resistance. This is a stark contrast to a trust-unaware system that might have simply halted.

- Adaptive safety preservation: As the UAV ventures deeper, it enters an area with poor visibility, causing its onboard sensors to become less reliable. Our framework’s machine performance metrics objectively detect this degradation. Consequently, the trust model dynamically lowers its trust value, shifting the system into a more cautious state. If the anxious operator now commands a rapid forward movement, the system will provide stronger assistance, moderating the speed and trajectory to prioritize the UAV’s survival. It intelligently prevents a trust-induced error, safeguarding the mission’s most critical asset.

In summary, this scenario demonstrates clear practical significance. By fostering a more intuitive human–machine collaboration, our framework simultaneously enhances mission effectiveness and reduces operator cognitive load. Crucially, its ability to dynamically adapt to risk ensures the safety of critical assets, ultimately empowering human–machine teams to confidently undertake complex, high-stakes operations that were previously too hazardous or inefficient, thereby significantly expanding their operational boundaries.

7.3. Open Issues and Future Work

Future work will address several open issues by focusing on three core directions to further enhance the trust-aware framework’s generalization, accuracy, and application scope.

First, in human–machine interaction, a central open issue is how to bridge the sim-to-real gap while enabling genuine user adaptivity. Existing studies have primarily validated trust-aware frameworks in simulation [17,35], and their robustness against real-world uncertainties, such as sensor noise and communication latency, remains insufficiently tested. At the same time, current generic trust models often ignore differences in operator expertise, limiting their ability to meet personalized needs. To address this, our future research will (i) transition the framework from simulated to physical environments to verify its robustness in practice and (ii) develop personalized trust models that identify and adapt to the trust characteristics of operators with varying experience levels, thereby creating a system that is both robust and user-adaptive.

Second, in trust modeling, another open issue is how to reduce the over-reliance on empirical, manually tuned parameters. Existing approaches largely depend on human expertise [14,17] for parameter settings, which restricts scalability and adaptability. To overcome this, we will explore data-driven paradigms such as Inverse Reinforcement Learning (IRL) [13], enabling the model to autonomously learn and optimize intrinsic parameters from interaction data, replacing manual tuning with a more robust self-learning mechanism.

Finally, at the application level, an important open issue is how to scale trust-aware frameworks beyond constrained laboratory tasks into complex, high-stakes real-world scenarios. Prior work has mostly focused on basic navigation [3,5,7] in controlled settings, whereas missions such as large-scale inspection and search and rescue require balancing multiple performance metrics—including speed, safety, and coverage—while maintaining stability in highly dynamic environments that include challenges such as dynamic obstacles. To meet this challenge, we will extend the framework toward these high-stakes missions, using them as testbeds to rigorously evaluate and strengthen the adaptive capabilities of the trust-aware planner under realistic conditions.

8. Conclusions

This work proposes and validates a trust-aware trajectory planning framework to address the critical issue of human–machine trust imbalance in assistive aerial teleoperation. By embedding an MPD trust model into a real-time planner, the approach reduces operator workload, improves trajectory smoothness, and establishes a more stable human–machine trust relationship, all while maintaining task efficiency. The results demonstrate that introducing dynamic trust quantification into the control loop of autonomous systems holds significant potential, enabling a virtuous cycle of reduced human intervention, greater system predictability, and enhanced collaborative performance. Taken together, this work establishes a foundation for developing more intuitive and efficient human–machine collaboration models. In the future, by addressing the open issues outlined in the Discussion section, this framework holds promise for extension to a broader range of autonomous system applications.

Author Contributions

Conceptualization, Q.Z.; methodology, K.H.; software, K.H.; validation, K.H. and X.J.; formal analysis, X.J.; investigation, Q.Z.; resources, Q.Z.; writing—original draft preparation, K.H.; writing—review and editing, P.L. and Y.Z.; visualization, K.H.; supervision, Y.K.; project administration, P.L. and Y.Z.; funding acquisition, Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the State Key Laboratory of Avionics Integration and Aviation System-of-Systems Synthesis (grant number 2024AIASS0701) and supported by the Dreams Foundation of Jianghuai Advance Technology Center (grant number 2023-ZM01G003).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MPD | Machine-Performance-Dependent |
| UAV | Unmanned Aerial Vehicle |
| MPT | Motion Primitive Tree |
| DFD | Discrete Fréchet Distance |
| IRL | Inverse Reinforcement Learning |

References

1. Perez-Grau, F.; Ragel, R.; Caballero, F.; Viguria, A.; Ollero, A. Semi-autonomous teleoperation of UAVs in search and rescue scenarios. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1066–1074.
2. Kanso, A.; Elhajj, I.H.; Shammas, E.; Asmar, D. Enhanced teleoperation of UAVs with haptic feedback. In Proceedings of the 2015 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Busan, Republic of Korea, 7–11 July 2015; pp. 305–310.
3. Shi, H.; Luo, L.; Gao, S.; Yu, Q.; Hu, S. Human-guided motion planner with perception awareness for assistive aerial teleoperation. Adv. Robot. 2024, 38, 152–167.
4. Boksem, M.A.; Meijman, T.F.; Lorist, M.M. Effects of mental fatigue on attention: An ERP study. Cogn. Brain Res. 2005, 25, 107–116.
5. Yang, X.; Cheng, J.; Michael, N. An Intention Guided Hierarchical Framework for Trajectory-based Teleoperation of Mobile Robots. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 482–488.
6. Wang, Q.; He, B.; Xun, Z.; Xu, C.; Gao, F. GPA-Teleoperation: Gaze Enhanced Perception-Aware Safe Assistive Aerial Teleoperation. IEEE Robot. Autom. Lett. 2022, 7, 5631–5638.
7. Yang, X.; Michael, N. Assisted Mobile Robot Teleoperation with Intent-aligned Trajectories via Biased Incremental Action Sampling. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10998–11003.
8. Gebru, B.; Zeleke, L.; Blankson, D.; Nabil, M.; Nateghi, S.; Homaifar, A.; Tunstel, E. A Review on Human–Machine Trust Evaluation: Human-Centric and Machine-Centric Perspectives. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 952–962.
9. Alhaji, B.; Beecken, J.; Ehlers, R.; Gertheiss, J.; Merz, F.; Müller, J.P.; Prilla, M.; Rausch, A.; Reinhardt, A.; Reinhardt, D.; et al. Engineering Human–Machine Teams for Trusted Collaboration. Big Data Cogn. Comput. 2020, 4, 35.
10. Ibrahim, B.; Elhajj, I.H.; Asmar, D. 3D Autocomplete: Enhancing UAV Teleoperation with AI in the Loop. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 17829–17835.
11. Steyvers, M.; Tejeda, H.; Kerrigan, G.; Smyth, P. Bayesian modeling of human–AI complementarity. Proc. Natl. Acad. Sci. USA 2022, 119, e2111547119.
12. Lyons, J.B.; Stokes, C.K. Human–human reliance in the context of automation. Hum. Factors 2012, 54, 112–121.
13. Nam, C.; Walker, P.; Li, H.; Lewis, M.; Sycara, K. Models of Trust in Human Control of Swarms With Varied Levels of Autonomy. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 194–204.
14. Li, Y.; Cui, R.; Yan, W.; Zhang, S.; Yang, C. Reconciling Conflicting Intents: Bidirectional Trust-Based Variable Autonomy for Mobile Robots. IEEE Robot. Autom. Lett. 2024, 9, 5615–5622.
15. Saeidi, H.; Wang, Y. Incorporating Trust and Self-Confidence Analysis in the Guidance and Control of (Semi)Autonomous Mobile Robotic Systems. IEEE Robot. Autom. Lett. 2019, 4, 239–246.
16. Hu, C.; Shi, Y.; Ge, S.; Hu, H.; Zhao, J.; Zhang, X. Trust-Based Shared Control of Human-Vehicle System Using Model Free Adaptive Dynamic Programming. IEEE Trans. Intell. Veh. 2024, 1–13.
17. Fang, Z.; Wang, J.; Liang, J.; Yan, Y.; Pi, D.; Zhang, H.; Yin, G. Authority Allocation Strategy for Shared Steering Control Considering Human-Machine Mutual Trust Level. IEEE Trans. Intell. Veh. 2024, 9, 2002–2015.
18. Nieuwenhuisen, M.; Droeschel, D.; Schneider, J.; Holz, D.; Läbe, T.; Behnke, S. Multimodal obstacle detection and collision avoidance for micro aerial vehicles. In Proceedings of the 2013 European Conference on Mobile Robots, Barcelona, Spain, 25–27 September 2013; pp. 7–12.
19. Odelga, M.; Stegagno, P.; Bülthoff, H.H. Obstacle detection, tracking and avoidance for a teleoperated UAV. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2984–2990.
20. Backman, K.; Kulić, D.; Chung, H. Learning to Assist Drone Landings. IEEE Robot. Autom. Lett. 2021, 6, 3192–3199.
21. Zhang, Q.; Xing, Y.; Wang, J.; Fang, Z.; Liu, Y.; Yin, G. Interaction-Aware and Driving Style-Aware Trajectory Prediction for Heterogeneous Vehicles in Mixed Traffic Environment. IEEE Trans. Intell. Transp. Syst. 2025, 26, 10710–10724.
22. Igneczi, G.F.; Horvath, E.; Toth, R.; Nyilas, K. Curve Trajectory Model for Human Preferred Path Planning of Automated Vehicles. Automot. Innov. 2024, 7, 59–70.
23. Huang, C.; Huang, H.; Hang, P.; Gao, H.; Wu, J.; Huang, Z.; Lv, C. Personalized Trajectory Planning and Control of Lane-Change Maneuvers for Autonomous Driving. IEEE Trans. Veh. Technol. 2021, 70, 5511–5523.
24. Yang, X.; Sreenath, K.; Michael, N. A framework for efficient teleoperation via online adaptation. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5948–5953.
25. Wang, Q.; Gao, Y.; Ji, J.; Xu, C.; Gao, F. Visibility-aware Trajectory Optimization with Application to Aerial Tracking. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5249–5256.
26. Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.
27. Mellinger, D.; Kumar, V. Minimum snap trajectory generation and control for quadrotors. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2520–2525.
28. Falanga, D.; Foehn, P.; Lu, P.; Scaramuzza, D. PAMPC: Perception-Aware Model Predictive Control for Quadrotors. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.
29. Eiter, T.; Mannila, H. Computing Discrete Fréchet Distance; Technical Report CD-TR 94/64; Christian Doppler Laboratory: Vienna, Austria, 1994.
30. Wang, Z.; Zhou, X.; Xu, C.; Gao, F. Geometrically Constrained Trajectory Optimization for Multicopters. IEEE Trans. Robot. 2022, 38, 3259–3278.
31. Zhou, B.; Gao, F.; Wang, L.; Liu, C.; Shen, S. Robust and Efficient Quadrotor Trajectory Generation for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2019, 4, 3529–3536.
32. Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical Recipes 3rd Edition: The Art of Scientific Computing; Cambridge University Press: New York, NY, USA, 2007.
33. Quan, L.; Yin, L.; Xu, C.; Gao, F. Distributed Swarm Trajectory Optimization for Formation Flight in Dense Environments. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 4979–4985.
34. Sanz, D.; Valente, J.; del Cerro, J.; Colorado, J.; Barrientos, A. Safe operation of mini UAVs: A review of regulation and best practices. Adv. Robot. 2015, 29, 1221–1233.
35. Broad, A.; Schultz, J.; Derry, M.; Murphey, T.; Argall, B. Trust Adaptation Leads to Lower Control Effort in Shared Control of Crane Automation. IEEE Robot. Autom. Lett. 2017, 2, 239–246.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).