1. Introduction

In modern industrial applications, rotating machinery is a core asset, with rolling bearings as one of its most critical components. Mechanical failures of rolling bearings are a common issue that can lead to motor malfunctions, reduced production efficiency, and even severe safety incidents [1,2]. Therefore, developing efficient and accurate fault diagnosis methods is of paramount importance. Such methods enable the prompt identification and localization of system anomalies, preventing further damage and thereby improving system reliability while reducing maintenance costs [3,4].

In the diagnostic process, extracting effective fault information from raw signals encounters two primary challenges: severe background noise interference and the limited availability of fault samples under real-world operating conditions. To overcome these challenges, researchers have employed a range of data preprocessing techniques. For instance, in the field of filtering technology, empirical mode decomposition (EMD) [5] has been widely applied, although it suffers from limitations such as mode mixing (also called mode aliasing). To address this issue, ensemble empirical mode decomposition (EEMD) [6,7] was developed as an improved variant capable of further decomposing high-frequency signal components. Other advanced methods include wavelet packet transform (WPT) [8] and empirical wavelet transform (EWT) [9,10], the latter of which integrates the concepts of wavelet transform and empirical mode decomposition to enable adaptive frequency band partitioning for signal decomposition.

As the volume of collected data continues to grow, the feature signals extracted from raw data often contain redundant noise and suffer from poor sample quality, which can negatively affect subsequent model training and analytical outcomes [11]. Therefore, the application of denoising techniques constitutes a critical step in the diagnostic process. Signal decomposition approaches, with wavelet transform (WT) as a representative example, have been extensively utilized to suppress noise and highlight fault-related features. Wavelet threshold denoising leverages multi-scale decomposition to simultaneously capture both time and frequency features of the signal. This makes it particularly effective in handling non-stationary data, especially those with abrupt changes [12,13]. Meanwhile, to effectively address the issue of class imbalance resulting from insufficient fault data, algorithms such as the Synthetic Minority Over-sampling Technique (SMOTE) have emerged as widely adopted data augmentation strategies [14]. For instance, Wang et al. [15] effectively combined an improved SMOTE model with a CNN to tackle imbalanced bearing data, underscoring the importance of this preprocessing step. However, the majority of existing studies apply these techniques in a fragmented manner, without establishing a comprehensive, multi-phase preprocessing framework capable of addressing both poor signal quality and sample imbalance issues in a coordinated manner.

In the feature extraction and classification phase, deep learning models, with convolutional neural networks (CNNs) as a representative example, have gained widespread adoption owing to their capability to automatically extract discriminative features and effectively capture local patterns. For instance, Yang et al. [16] proposed an interpretable intelligent fault diagnosis framework to address the black-box issue caused by mixed feature decisions in standard CNNs for rotating machinery fault diagnosis, achieving both fault signal recognition and visual interpretation of model features. Reference [3] proposes a deeply integrated framework termed SKND-TSACNN, which effectively integrates Time-Scale Adaptive CNN (TSACNN) and Neural Denoiser (SKND). The proposed framework is specifically designed to overcome the limitations of traditional CNN models, including low diagnostic accuracy and insufficient noise resistance, particularly under complex operational conditions and in the presence of significant noise interference. Zhong et al. [17] proposed a remaining useful life prediction method for rolling bearings based on an improved convolutional bidirectional gated recurrent unit neural network (CNN-BGRU), which significantly enhanced the accuracy of predicting remaining useful life compared to existing AI-based approaches. CNNs offer advantages such as translation-invariant classification, weight sharing, and efficient convolution operations [18]. However, due to the limitation of their local receptive fields [19], modeling long-range dependencies remains challenging [20,21].

To address this limitation, researchers have increasingly explored the application of the Transformer framework in fault diagnosis. Initially developed for natural language processing (NLP) [22], the Transformer has since been extended to various domains, including computer vision, multimodal learning, industrial monitoring, and fault diagnosis. In [23], a rolling bearing fault diagnosis method was proposed that integrates sub-domain adaptation (SA) with an improved visual Transformer network (IVTN), effectively combining local and global information to achieve high-precision fault diagnosis under variable-speed and variable-load conditions. In [24], a self-supervised learning (SSL) approach incorporating a self-attention mechanism was introduced, leveraging the Transformer architecture to enhance temporal feature learning and global modeling, thereby improving diagnostic performance under novel operating conditions. In [25], a PRT model based on the Transformer was proposed, utilizing dense overlapping splitting and a class attention mechanism to improve feature learning. Li et al. [26] introduced a variational attention mechanism into the Transformer architecture to better capture the correlation among vibration signals in rotating machinery fault diagnosis and to enhance model interpretability. However, Transformer models generally necessitate large-scale datasets for effective training, and the optimization process entails substantial computational resources, thereby restricting their deployment in real-world industrial applications.

To overcome the limitations of CNNs in modeling long-range dependencies and to reduce the high computational complexity associated with Transformers, researchers have developed CNN-Transformer hybrid architectures [27]. These models effectively integrate the local feature extraction capability of CNNs with the global modeling strengths of Transformers. The C-ECAFormer architecture proposed by Wang et al. combines the spatial sensitivity of CNNs with the global attention mechanism of Transformers, achieving robust fault diagnosis performance under conditions of strong noise and limited sample sizes [28]. In [29], a multi-task CNN-Transformer (MT-ConvFormer) method was proposed for bearing fault diagnosis, addressing key challenges in current intelligent diagnosis approaches, including the limited exploration of multi-fault task analysis and the challenge of capturing complementary fault characteristics. This method also enhances diagnostic performance under noisy environments and in cases of imbalanced data distribution. Fang et al. proposed the CLFormer architecture, enhancing the resilience and precision of Transformers in the context of bearing fault identification by incorporating convolutional embedding and a linear self-attention mechanism (LSA) [30]. Although these hybrid approaches partially mitigate the limitations of both CNNs and Transformers, they still face challenges such as performance redundancy and limited engineering adaptability due to complex network structures. Furthermore, while previous studies have explored individual components of our proposed framework, such as the CNN-Transformer architecture for multi-task learning [29], the use of SMOTE with CNNs for imbalanced data [15], and the application of Bayesian optimization to standard CNNs [31], they often focus on improving a single stage of the diagnostic pipeline.

A systematic integration that synergistically combines advanced signal preprocessing, a hybrid feature extractor, and intelligent hyperparameter optimization for such a complex architecture remains underexplored. Therefore, building upon the CNN-Transformer hybrid framework, this study innovatively incorporates two critical components—a comprehensive data preprocessing pipeline (EMDWS) and an automated hyperparameter optimization strategy (BO)—aiming to create a holistic, end-to-end diagnostic solution. This integrated approach not only mitigates the practical application challenges of existing hybrid models but also enhances diagnostic accuracy and robustness under complex conditions such as strong noise and limited sample sizes, providing a more engineering-feasible solution for the efficient fusion of CNN and Transformer architectures.

The principal contributions of this study are delineated as follows:

(1) We propose a holistic preprocessing framework, EMDWS, that systematically integrates EMD, Wavelet Denoising, and SMOTE to simultaneously address the dual challenges of poor signal quality and data imbalance in complex conditions.

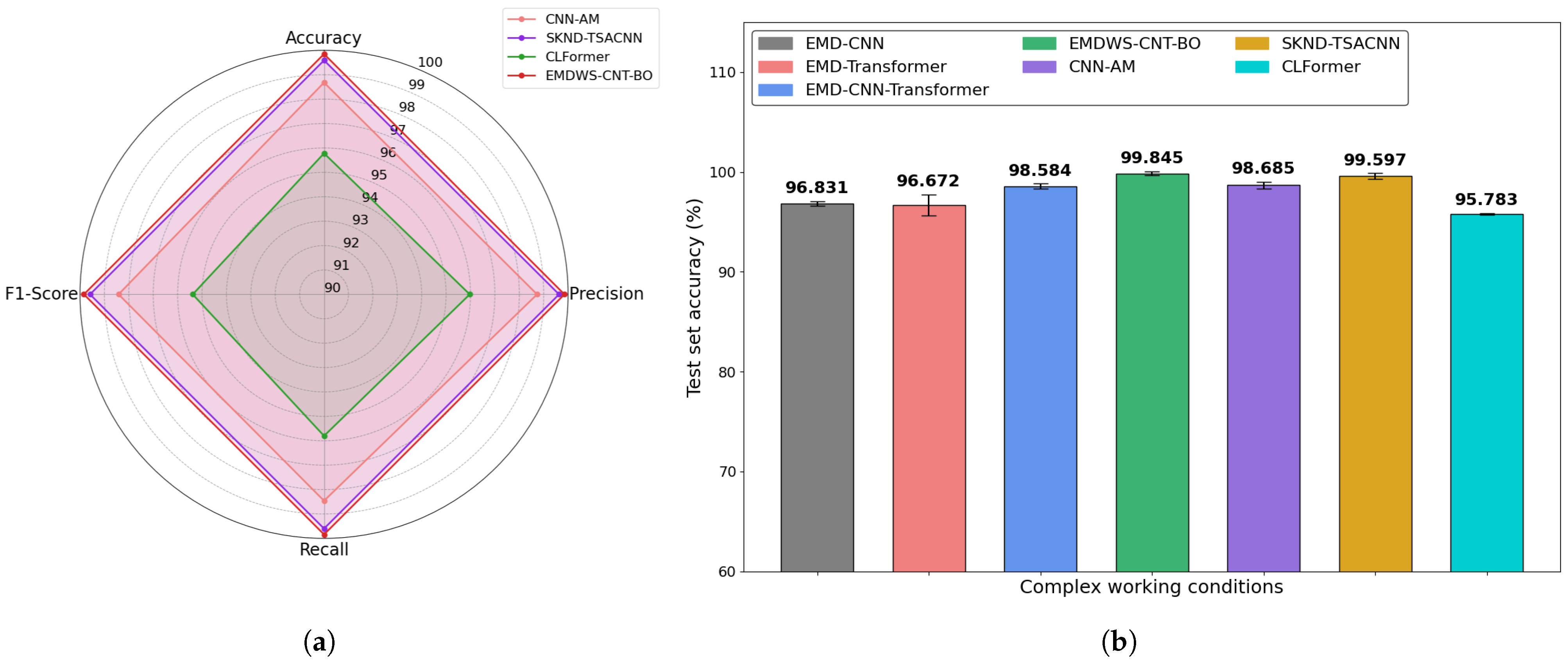

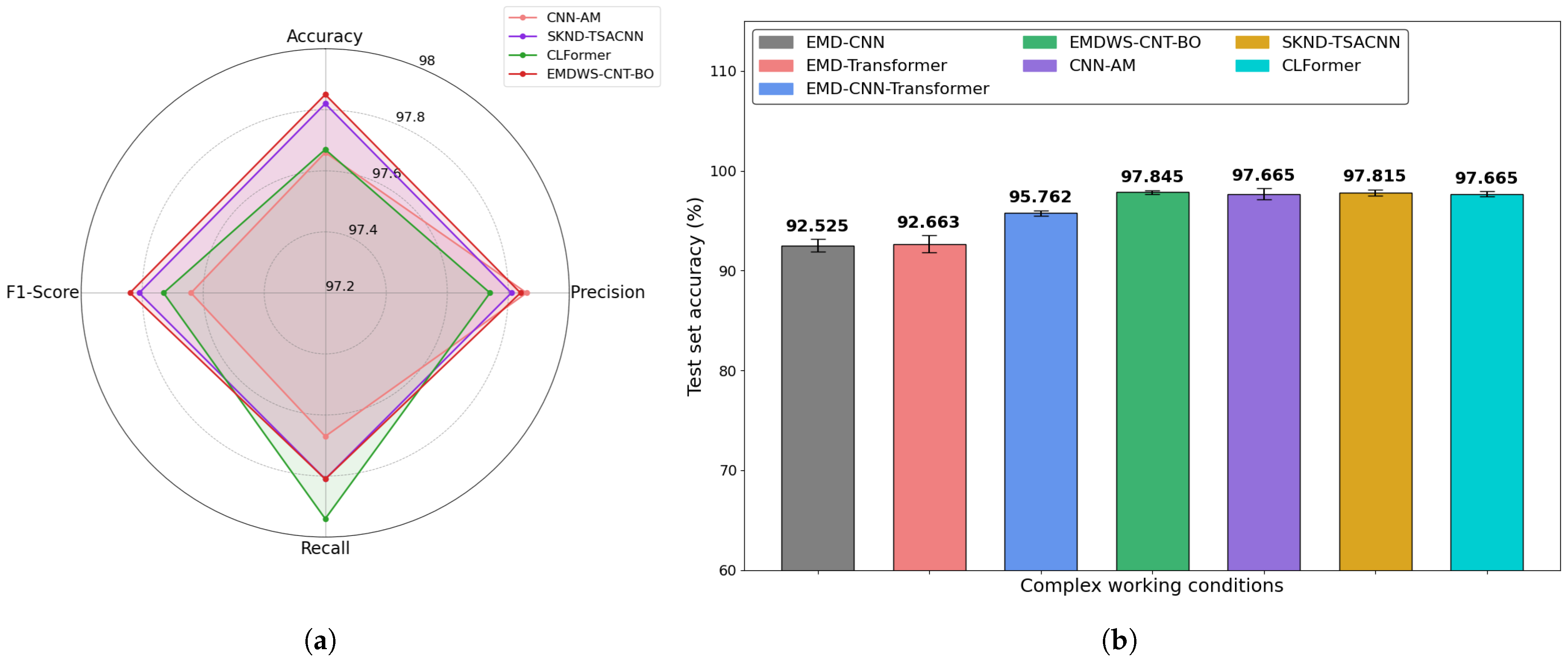

(2) We validate the synergistic effect between a hybrid CNN-Transformer architecture and Bayesian Optimization (BO), demonstrating that BO is a critical component—not merely an add-on—for maximizing the diagnostic performance of such a complex model.

(3) We present and validate an end-to-end automated diagnostic pipeline, EMDWS-CNT-BO. Experiments on multiple datasets show that this systematically integrated framework is significantly superior to baseline models that lack either comprehensive preprocessing or hyperparameter optimization.

3. The Proposed Approach

To address the signal processing challenges identified in Section 2 and fulfill the critical requirements for adaptability, accuracy, and robustness in diagnostic modeling, this study proposes and establishes an end-to-end, systematically integrated framework for rolling bearing fault diagnosis, termed EMDWS-CNT-BO. Unlike conventional approaches that merely stack individual techniques, the proposed framework is designed as a synergistically optimized and cohesive system, comprising three core components: (1) the EMDWS collaborative signal preprocessing module; (2) the CNT (CNN-Transformer) hybrid feature extraction module; and (3) the Bayesian hyperparameter intelligent optimization module.

3.1. The EMDWS Synergistic Signal Preprocessing Module

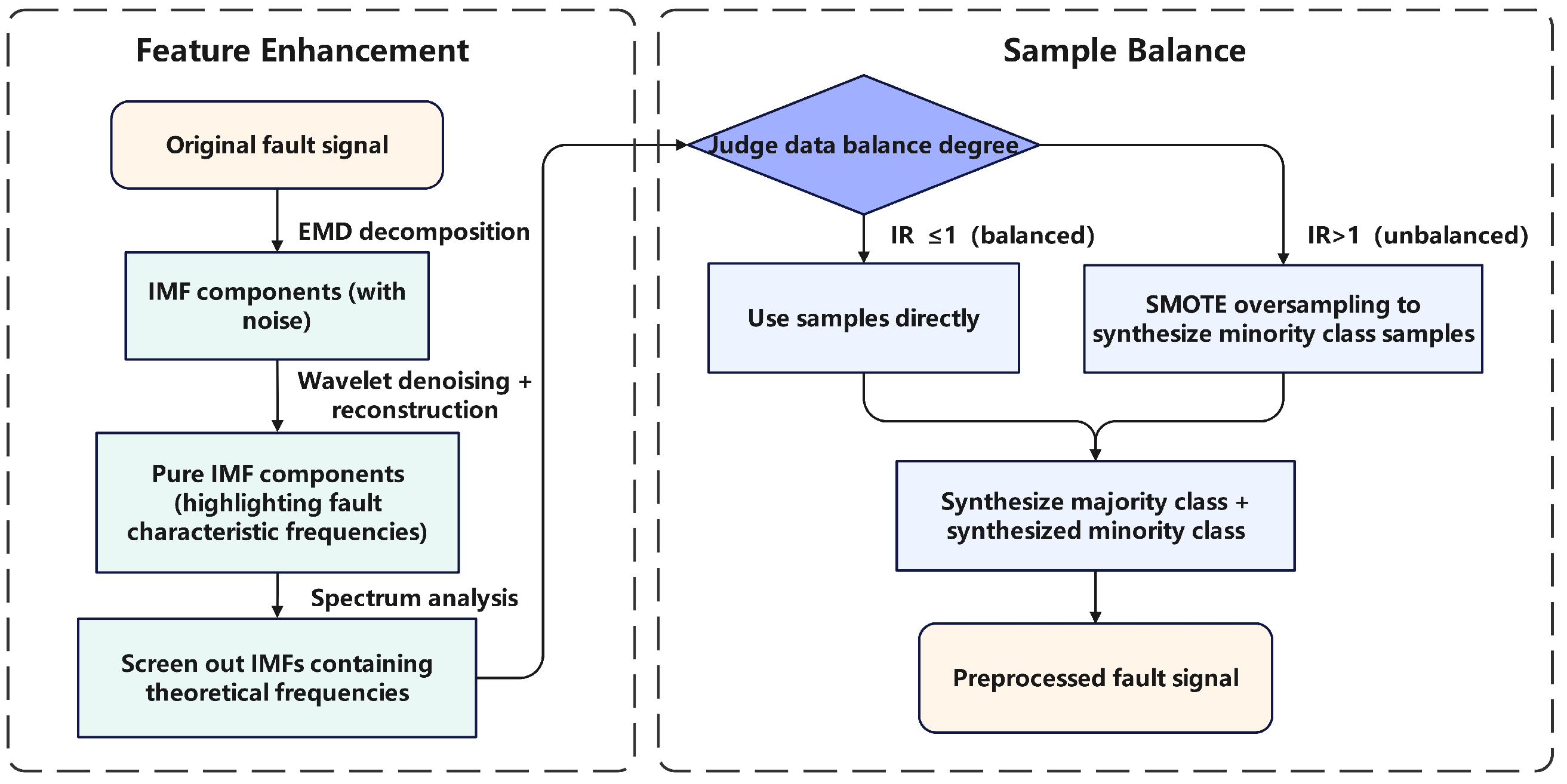

To tackle the two primary challenges, low signal-to-noise ratio in raw vibration signals and imbalanced fault sample distribution, this study proposes the EMDWS preprocessing pipeline. The objective of this pipeline is to generate a high-quality dataset characterized by enhanced feature representation and balanced class distribution, thereby facilitating improved performance of downstream deep learning models. The detailed processing steps are illustrated in Figure 2 and are elaborated below:

Stage 1: Adaptive Signal Decomposition

The process initiates with the original non-stationary vibration signal $x(t)$. Utilizing the fully data-driven nature of Empirical Mode Decomposition (EMD), the signal is adaptively decomposed into a sequence of Intrinsic Mode Functions (IMFs) ordered from high frequency to low frequency, denoted as $\{\mathrm{IMF}_1, \mathrm{IMF}_2, \ldots, \mathrm{IMF}_n\}$. This decomposition requires no predefined basis functions or parameters, thereby ensuring its applicability across diverse operating conditions.
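For reference, the decomposition in Stage 1 can be written as the standard EMD reconstruction identity. The symbols $x(t)$, $\mathrm{IMF}_i(t)$, and the residual $r_n(t)$ are notation assumed here for illustration and may differ from the authors' own:

```latex
x(t) = \sum_{i=1}^{n} \mathrm{IMF}_i(t) + r_n(t)
```

Here $n$ is the number of IMFs obtained by sifting and $r_n(t)$ is the slowly varying residual that remains once no further oscillatory mode can be extracted.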

Stage 2: IMF Screening and Targeted Denoising

To ensure the validity of the decomposition, we screen the resulting Intrinsic Mode Functions (IMFs), discarding samples that yield fewer than seven IMFs as required by this study. Following this, to further purify the signal, wavelet threshold denoising is independently applied to each screened IMF, $\mathrm{IMF}_i$. For this stage, we utilized the db8 wavelet basis with a 3-level decomposition and applied hard thresholding with Donoho's universal threshold for noise suppression. Owing to the more homogeneous characteristics of IMFs compared to the original signal, this wavelet-based approach can effectively suppress noise while preserving critical information associated with fault features. This process results in a set of denoised IMF components, $\{\widehat{\mathrm{IMF}}_i\}$.
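The thresholding rule used in Stage 2 can be illustrated with a minimal NumPy sketch. It assumes the wavelet detail coefficients have already been computed (for example, by a db8, 3-level decomposition in a wavelet library). The MAD-based noise estimate, the function names, and the synthetic coefficients are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np

def universal_threshold(detail_coeffs: np.ndarray, n_samples: int) -> float:
    """Donoho-Johnstone universal threshold: sigma * sqrt(2 * ln(N))."""
    # Robust noise estimate from the median absolute deviation of the
    # detail coefficients (assumed here; the source does not state the estimator).
    sigma = np.median(np.abs(detail_coeffs)) / 0.6745
    return float(sigma * np.sqrt(2.0 * np.log(n_samples)))

def hard_threshold(coeffs: np.ndarray, thr: float) -> np.ndarray:
    """Zero every coefficient whose magnitude is below thr; keep the rest unchanged."""
    return np.where(np.abs(coeffs) >= thr, coeffs, 0.0)

rng = np.random.default_rng(0)
# Hypothetical detail coefficients: mostly noise plus a few large, fault-like spikes.
coeffs = rng.normal(0.0, 0.1, size=1024)
coeffs[[100, 400, 900]] = [3.0, -2.5, 4.0]

thr = universal_threshold(coeffs, n_samples=coeffs.size)
denoised = hard_threshold(coeffs, thr)
```

Hard thresholding leaves the surviving coefficients untouched, which is why large impulsive components related to faults keep their amplitude while small noise coefficients are removed.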

Stage 3: Data Balancing

Finally, to mitigate the adverse effects of data imbalance on model training, the Synthetic Minority Over-sampling Technique (SMOTE) is applied to the training set consisting of the denoised Intrinsic Mode Functions (IMFs). By generating synthetic samples within the feature space of minority class instances, SMOTE effectively increases their representation, thereby ensuring balanced data exposure during the training process and preventing the model from developing a bias toward the majority class.
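The interpolation idea behind SMOTE can be sketched in a few lines of NumPy. This is a simplified illustration, not the authors' implementation. It assumes each training sample has been flattened into a feature vector, and the function name `smote_oversample`, the neighbour count `k = 5`, and the synthetic data are hypothetical.

```python
import numpy as np

def smote_oversample(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate n_new synthetic minority samples by interpolating between a
    chosen sample and one of its k nearest minority-class neighbours."""
    rng = np.random.default_rng(seed)
    n = X_min.shape[0]
    k = min(k, n - 1)
    # Pairwise Euclidean distances within the minority class.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                 # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]   # indices of the k nearest neighbours

    base = rng.integers(0, n, size=n_new)                        # starting samples
    picked = neighbours[base, rng.integers(0, k, size=n_new)]    # one neighbour per start
    gap = rng.random((n_new, 1))                                 # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[picked] - X_min[base])

rng = np.random.default_rng(1)
X_major = rng.normal(0.0, 1.0, size=(100, 8))   # hypothetical majority-class features
X_minor = rng.normal(3.0, 1.0, size=(20, 8))    # hypothetical minority-class features
X_synth = smote_oversample(X_minor, n_new=len(X_major) - len(X_minor))
X_minor_balanced = np.vstack([X_minor, X_synth])
```

Because every synthetic point is a convex combination of two real minority samples, it stays inside the region already occupied by that class. Oversampling only the training split, as the text describes, keeps synthetic samples out of the validation and test data.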

Figure 2. Flowchart of the EMDWS Preprocessing Procedure.


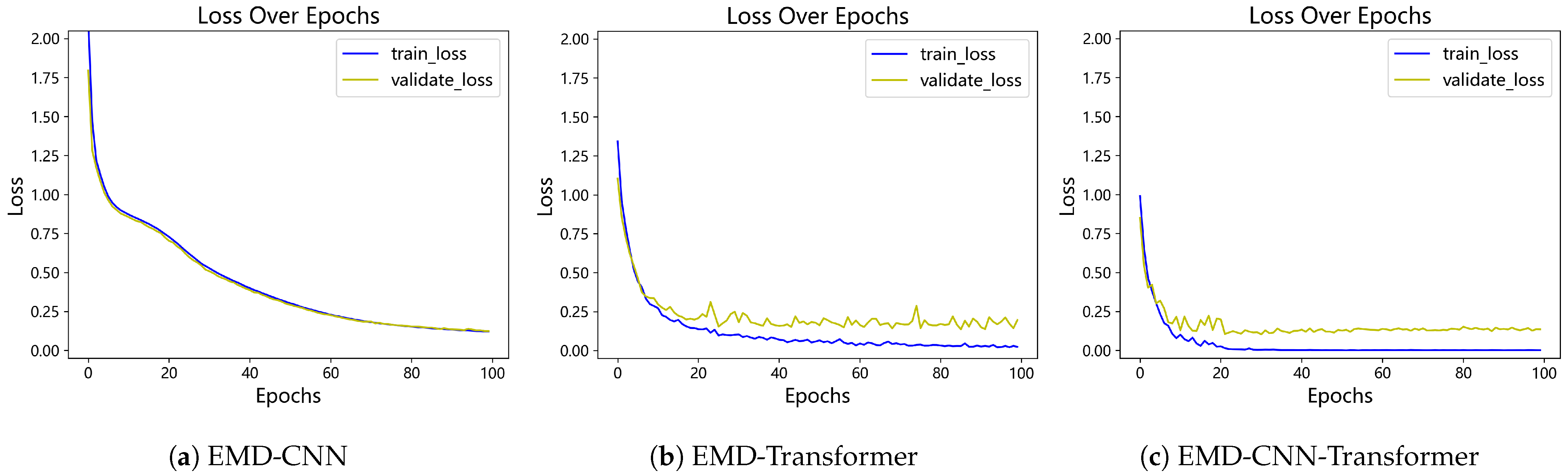

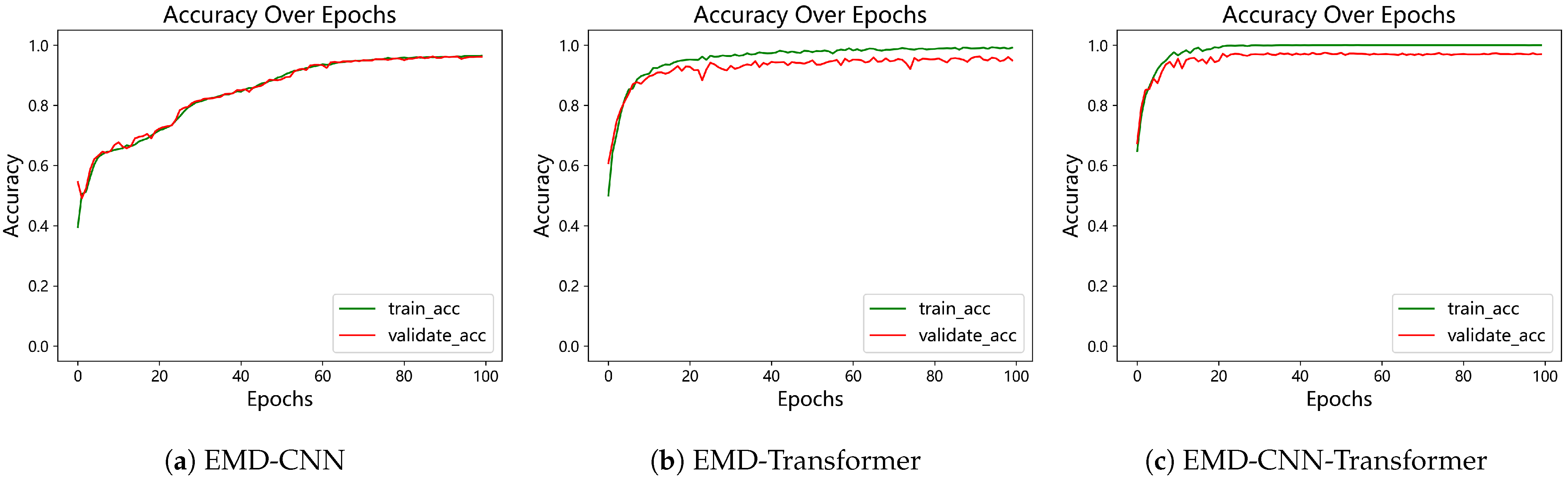

3.2. The CNN-Transformer Hybrid Feature Extraction Model

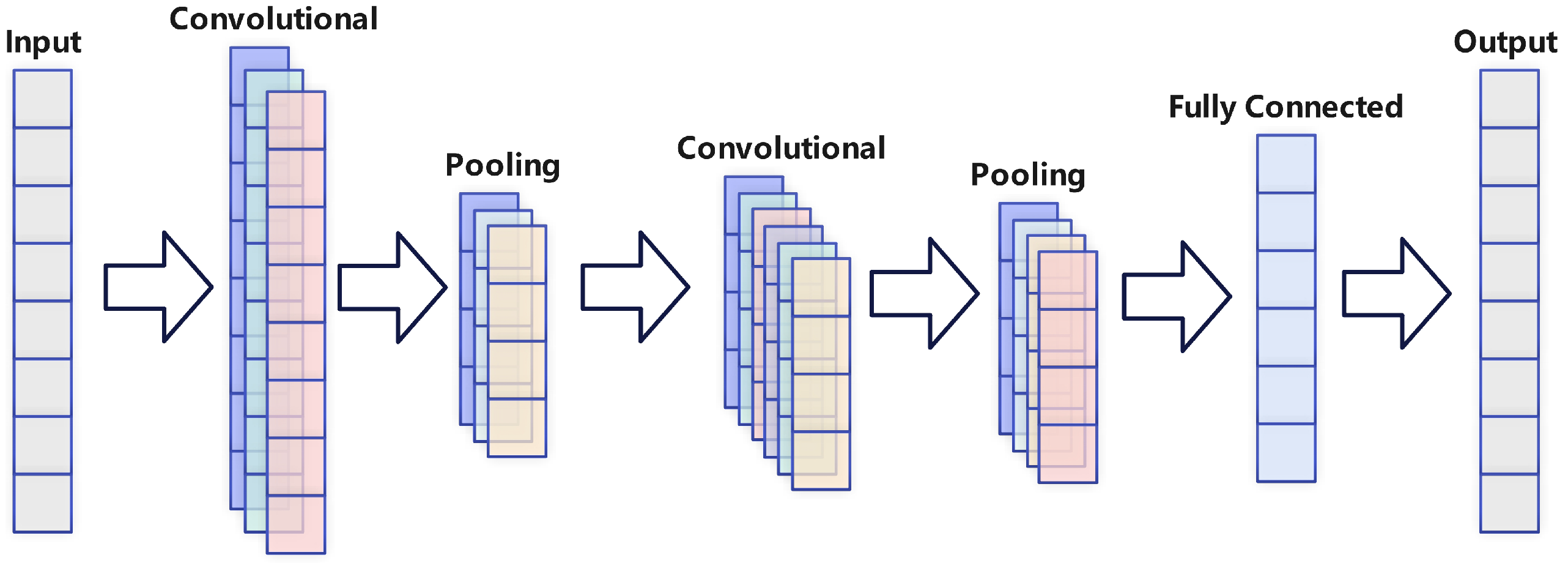

The integration of CNN and Transformer architectures enables complementary advantages. Convolutional Neural Networks (CNNs) possess translation invariance and localized receptive fields, making them highly effective at identifying local patterns in signals, such as edges, textures, or transient features. In contrast, the self-attention mechanism of the Transformer exhibits strong global perception capabilities, enabling the capture of long-range dependencies between any two positions in a sequence. A hybrid design, as depicted in Figure 3, typically employs the initial CNN layers to rapidly extract low-level features and reduce sequence length, followed by the Transformer, which then learns global contextual information on the compressed representation. This approach not only enhances computational efficiency but also preserves expressive modeling capacity. Furthermore, CNNs benefit from inductive biases, such as weight sharing in convolutional kernels, that allow effective training with smaller datasets, whereas pure Transformer models often require large-scale data. Consequently, in scenarios with limited data availability or where robustness is critical, hybrid models tend to demonstrate superior performance.

- (1) CNN Feature Extraction Stage

The CNN component captures fundamental local features by processing the input sequence through multiple stages. Initially, convolutional layers identify localized patterns, followed by normalization and application of the ReLU activation function to model nonlinear relationships. Afterwards, max pooling layers reduce the spatial size of the feature maps, minimizing dimensionality while maintaining crucial information, ultimately producing a refined and compact feature representation.

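Convolution and max pooling block:

$$X_{\text{cnn}} = \mathrm{MaxPool}\big(\mathrm{ReLU}\big(\mathrm{BN}(W_c * X + b_c)\big)\big)$$

where $X$ is the input feature map, $W_c$ and $b_c$ are the weights and biases of the convolutional layer, $*$ denotes the convolution operation, BN stands for Batch Normalization, ReLU is the activation function, and MaxPool is the max pooling layer.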

- (2) Feature Dimension Adjustment Stage

The CNN module outputs feature maps with dimension (B, C, L), whereas the transformer module requires input data in the format (B, L, C). To ensure compatibility, a dimension permutation operation is applied to reorganize the feature maps from (B, C, L) to (B, L, C) before they are fed into the transformer module.

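Adjusted feature map:

$$X_{\text{perm}} = \mathrm{Permute}(X_{\text{cnn}}), \quad X_{\text{cnn}} \in \mathbb{R}^{B \times C \times L}, \; X_{\text{perm}} \in \mathbb{R}^{B \times L \times C}$$

where $X_{\text{cnn}}$ is the feature map output by the CNN module, B is the batch size, C is the number of channels, L is the sequence length, and Permute is the dimension adjustment operation.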

- (3) Transformer Encoder Stage

The Transformer encoding phase involves passing the feature sequences through multiple identical encoder layers. Each layer carries out a two-stage transformation: first utilizing a multi-head self-attention mechanism, and then applying a position-based feedforward neural network (FFN). Importantly, residual connections combined with layer normalization are employed after both stages to enhance training efficiency and model convergence.

The complete transformation process within a single Transformer encoder layer, which transforms the input sequence $x$ into the output sequence $y$, can be formulated as follows.

Initially, an intermediate sequence $x'$ is produced by processing the input $x$ through the multi-head self-attention mechanism, after which a residual connection and layer normalization are applied:

$$x' = \mathrm{LayerNorm}\big(x + \mathrm{MHSA}(x)\big)$$

Subsequently, this intermediate sequence $x'$ is passed through the feed-forward network, again with a residual connection and layer normalization, to produce the final output $y$:

$$y = \mathrm{LayerNorm}\big(x' + \mathrm{FFN}(x')\big)$$

where the component functions are defined as:

$\mathrm{MHSA}(\cdot)$ is the multi-head self-attention mechanism, with each head calculated as $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\big(QK^{\top}/\sqrt{d_k}\big)V$.

$\mathrm{FFN}(\cdot)$ represents the feed-forward network, typically computed as $\mathrm{FFN}(x') = \max(0,\, x'W_1 + b_1)W_2 + b_2$.

LayerNorm denotes the layer normalization operation. The terms $Q$, $K$, and $V$ are the query, key, and value matrices derived from the input, $d_k$ is the dimension of the key vectors, and $W_1$, $W_2$, $b_1$, and $b_2$ are learnable model parameters.
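The dimension permutation and the two-stage encoder transformation can be traced with a small NumPy sketch. To stay short it uses a single attention head and omits the learnable scale and shift of layer normalization, so it is a simplified stand-in for the multi-head layer described in the text. All shapes and weight names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalise each token vector (last axis) to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def encoder_layer(x, Wq, Wk, Wv, W1, b1, W2, b2):
    """Single-head, post-norm encoder layer applied to x of shape (B, L, C)."""
    Q, K, V = x @ Wq, x @ Wk, x @ Wv
    d_k = K.shape[-1]
    attn = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)) @ V   # (B, L, C)
    x_mid = layer_norm(x + attn)                                  # residual + LayerNorm
    ffn = np.maximum(0.0, x_mid @ W1 + b1) @ W2 + b2              # position-wise FFN
    return layer_norm(x_mid + ffn)                                # residual + LayerNorm

rng = np.random.default_rng(0)
B, C, L, H = 2, 8, 16, 32                  # hypothetical batch, channels, length, FFN width
cnn_out = rng.normal(size=(B, C, L))       # stand-in for the CNN output, shape (B, C, L)
x = cnn_out.transpose(0, 2, 1)             # permute to (B, L, C) for the encoder

Wq, Wk, Wv = (rng.normal(scale=0.1, size=(C, C)) for _ in range(3))
W1, b1 = rng.normal(scale=0.1, size=(C, H)), np.zeros(H)
W2, b2 = rng.normal(scale=0.1, size=(H, C)), np.zeros(C)
y = encoder_layer(x, Wq, Wk, Wv, W1, b1, W2, b2)
```

The attention matrix has shape (B, L, L), so its cost grows quadratically with the sequence length. This is one reason for shortening the sequence with convolution and pooling before the encoder.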

- (4) Global Average Pooling and Classification Stage

To transform the output of the Transformer encoder into a fixed-dimensional representation, an adaptive average pooling layer is employed to perform global average pooling across the encoder’s feature maps. This operation computes the average value for each channel, resulting in a compact and fixed-length feature vector. Subsequently, the pooled features are passed through a fully connected layer to generate the final classification predictions.

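Adaptive average pooling layer:

$$z = \mathrm{AdaptiveAvgPool1d}(X_{\text{enc}})$$

Fully connected layer:

$$\hat{y} = \mathrm{Softmax}(Wz + b)$$

where $X_{\text{enc}}$ is the output of the Transformer encoder, $z$ is the pooled feature vector, $\hat{y}$ is the vector of predicted class probabilities, AdaptiveAvgPool1d is the adaptive average pooling layer, $W$ and $b$ are the weights and biases of the fully connected layer, and Softmax is the activation function.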
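A minimal NumPy sketch of this classification head is given below. It averages over the sequence axis, which matches pooling each channel down to a single value, and uses a row-vector convention (`pooled @ W + b`). The number of classes and all array shapes are hypothetical.

```python
import numpy as np

def classify(enc_out, W, b):
    """enc_out: (B, L, C) encoder output -> (B, n_classes) class probabilities."""
    pooled = enc_out.mean(axis=1)                        # global average per channel -> (B, C)
    logits = pooled @ W + b                              # fully connected layer -> (B, n_classes)
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilise the exponentials
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)          # softmax over classes

rng = np.random.default_rng(0)
enc_out = rng.normal(size=(4, 16, 8))            # hypothetical (B, L, C) encoder output
W, b = rng.normal(size=(8, 10)), np.zeros(10)    # hypothetical head with 10 fault classes
probs = classify(enc_out, W, b)
pred = probs.argmax(axis=1)                      # predicted class index per sample
```

Because the pooled vector has a fixed length C regardless of L, the same head can serve inputs whose sequence length differs after the CNN stage.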

The proposed architecture achieves an effective fusion of CNN and Transformer functionalities. The CNN module initially processes segments of the input sequence to extract localized feature patterns. Subsequently, the Transformer architecture models the long-range temporal dependencies across these features. Final classification is conducted using a global average pooling layer followed by a fully connected layer. This hybrid approach fully leverages the respective strengths of CNNs and Transformers, enhancing the model’s performance.

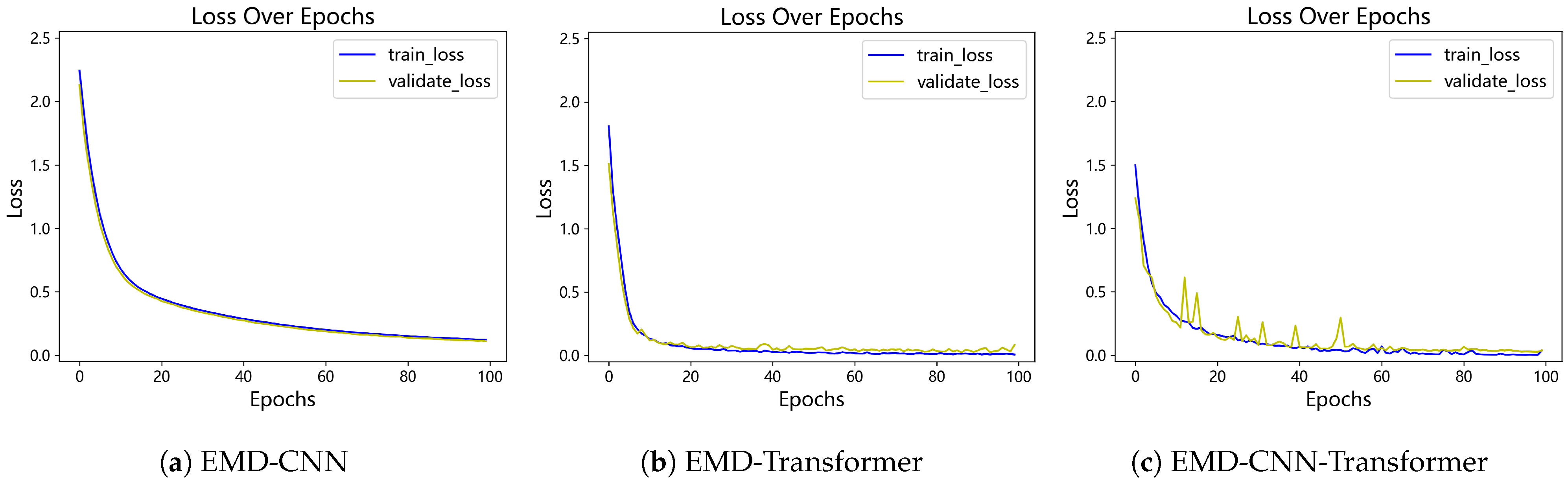

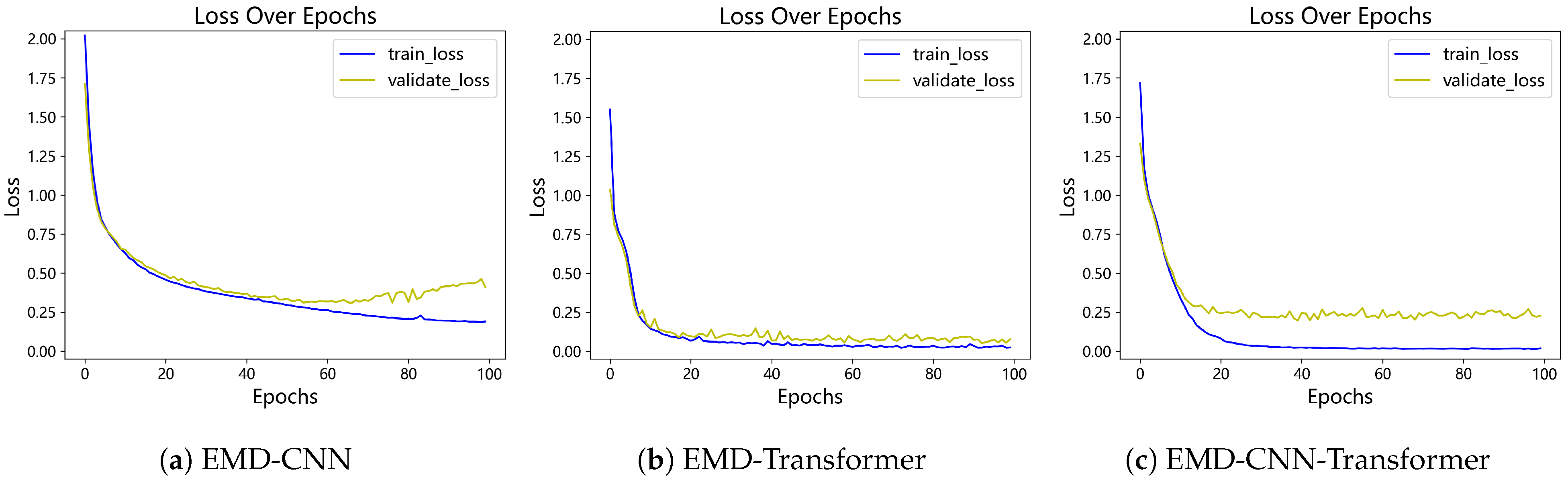

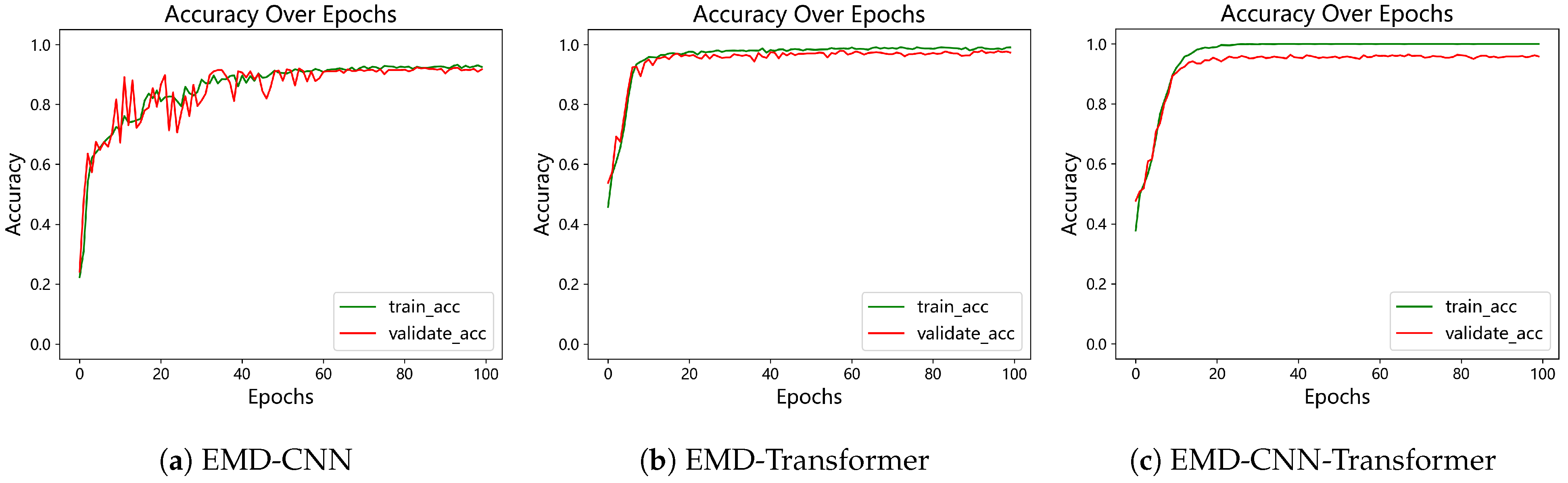

To ensure a stable and efficient training process for the designed hybrid model and to fully exploit its performance potential, we adopted a comprehensive training strategy. We selected the Adam optimizer for the iterative updating of model parameters and employed Focal Loss as the loss function in place of the conventional cross-entropy loss. By setting the gamma parameter ($\gamma$) to 2.5, Focal Loss enables the model to focus more on hard-to-classify examples during training, thereby enhancing the model’s overall robustness.
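The effect of the focusing parameter can be seen in a short NumPy sketch of the multi-class focal loss, $-(1 - p_t)^{\gamma} \log p_t$, where $p_t$ is the predicted probability of the true class. The optional class-weighting term of focal loss is omitted because the text does not mention it, and the example probabilities are invented for illustration.

```python
import numpy as np

def focal_loss(probs, targets, gamma=2.5):
    """Mean multi-class focal loss: -(1 - p_t)^gamma * log(p_t)."""
    p_t = probs[np.arange(len(targets)), targets]   # probability assigned to the true class
    p_t = np.clip(p_t, 1e-12, 1.0)                  # avoid log(0)
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

probs = np.array([[0.95, 0.03, 0.02],    # easy, confidently correct sample
                  [0.40, 0.35, 0.25]])   # hard, ambiguous sample
targets = np.array([0, 0])

easy = focal_loss(probs[:1], targets[:1])
hard = focal_loss(probs[1:], targets[1:])
cross_entropy = focal_loss(probs, targets, gamma=0.0)   # gamma = 0 recovers cross-entropy
```

With $\gamma = 2.5$ the factor $(1 - p_t)^{\gamma}$ shrinks the contribution of the easy sample far more than that of the hard one, so the gradient is dominated by examples the model still gets wrong.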

To further enhance training stability and promote model convergence, we implemented two key techniques. First, we introduced a gradient clipping mechanism, limiting the maximum norm of the gradients to 1.0, which effectively prevents the problem of exploding gradients. Second, we utilized the ReduceLROnPlateau learning rate scheduler. This strategy continuously monitors the validation loss and reduces the learning rate by a factor of 0.2 if no improvement is observed for 5 consecutive epochs. This helps the model to more finely search for the optimal solution in the later stages of training. Finally, throughout the training process, we saved the model weights that achieved the lowest validation loss. This set of weights was selected as the final model for testing and deployment, serving as an effective strategy to prevent overfitting.
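The two stabilization techniques can be sketched without any deep learning framework. The scheduler below follows the rule as stated in the text (multiply the learning rate by 0.2 after 5 consecutive epochs without improvement). It is a simplified assumption: a library scheduler such as ReduceLROnPlateau typically offers further options, and its exact patience counting may differ. The class and function names are hypothetical.

```python
import numpy as np

def clip_grad_norm(grads, max_norm=1.0):
    """Rescale a list of gradient arrays so their global L2 norm is at most max_norm."""
    total = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    scale = min(1.0, max_norm / (total + 1e-12))
    return [g * scale for g in grads]

class PlateauScheduler:
    """Multiply lr by `factor` after `patience` consecutive epochs without improvement."""

    def __init__(self, lr, factor=0.2, patience=5):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0   # improvement: reset the counter
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr *= self.factor                 # plateau detected: shrink the step size
                self.bad_epochs = 0
        return self.lr

# Gradients with global norm 13 are rescaled to norm 1.
clipped = clip_grad_norm([np.array([3.0, 4.0]), np.array([12.0])])

# Validation loss improves once, then stalls for five epochs.
sched = PlateauScheduler(lr=1e-3)
history = [sched.step(loss) for loss in [0.9, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]]
```

Clipping by the global norm preserves the direction of the update while bounding its size, which is why it guards against exploding gradients without biasing individual parameters.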

3.3. Bayesian Optimization for Hyperparameter Adaptation

3.3.1. Hyperparameter Optimization Process Design

To determine the optimal model configuration and ensure maximal performance, this study utilized Bayesian Optimization for automated hyperparameter tuning. The flowchart of this process is illustrated in Figure 4, and the procedure comprises the following key stages:

- (1) Data and Search Space Initialization

Initially, independent training, validation, and test sets are established to ensure evaluation objectivity. Guided by domain-specific prior knowledge and the structural properties of the model, the hyperparameter search space is defined—including learning rate, network architecture parameters, and regularization coefficients—along with their corresponding value ranges and initial default settings, thereby establishing constraint boundaries for the subsequent Bayesian search process.

- (2) Bayesian Iterative Search Mechanism

In this study, the optimization process was implemented using the BayesSearchCV framework from the scikit-optimize library in Python (version 3.9), which integrates Bayesian optimization with a cross-validation scheme. We configured the search to run for a total of 10 iterations (n_iter = 10).

For each iteration, a promising hyperparameter combination was sampled from the search space. The performance of the model with these hyperparameters was then evaluated using a 3-fold stratified cross-validation (cv = 3) strategy on the training data. This cross-validation approach ensures a robust estimation of the model’s performance and mitigates potential biases from a single data partition. The mean cross-validated accuracy score served as the objective function that the Bayesian optimizer sought to maximize. After each iteration, this score was fed back to the Gaussian Process surrogate model, which updated its posterior distribution to intelligently guide the selection of the next hyperparameter combination, effectively balancing exploration and exploitation.
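The search described above can be summarized as a single optimization problem. The notation below ($\theta$ for a hyperparameter combination, $\Theta$ for the search space, $\mathrm{Acc}_k$ for the validation accuracy on fold $k$) is assumed here for illustration:

```latex
\theta^{*} = \arg\max_{\theta \in \Theta} \; \frac{1}{K} \sum_{k=1}^{K} \mathrm{Acc}_k(\theta), \qquad K = 3
```

With n_iter = 10, the objective is evaluated for ten candidate values of $\theta$, and each evaluation requires training the model $K = 3$ times, once per fold.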

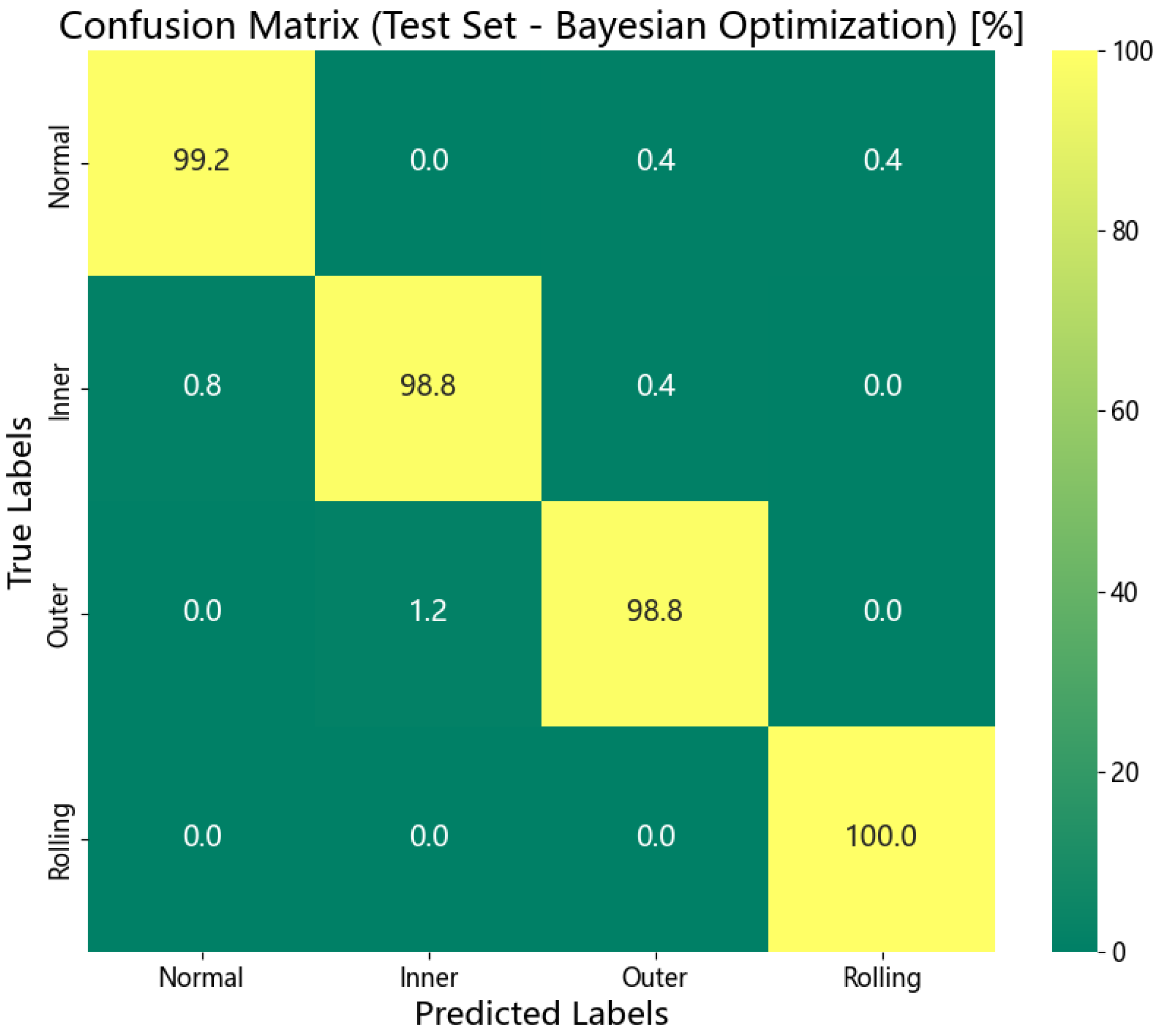

- (3)

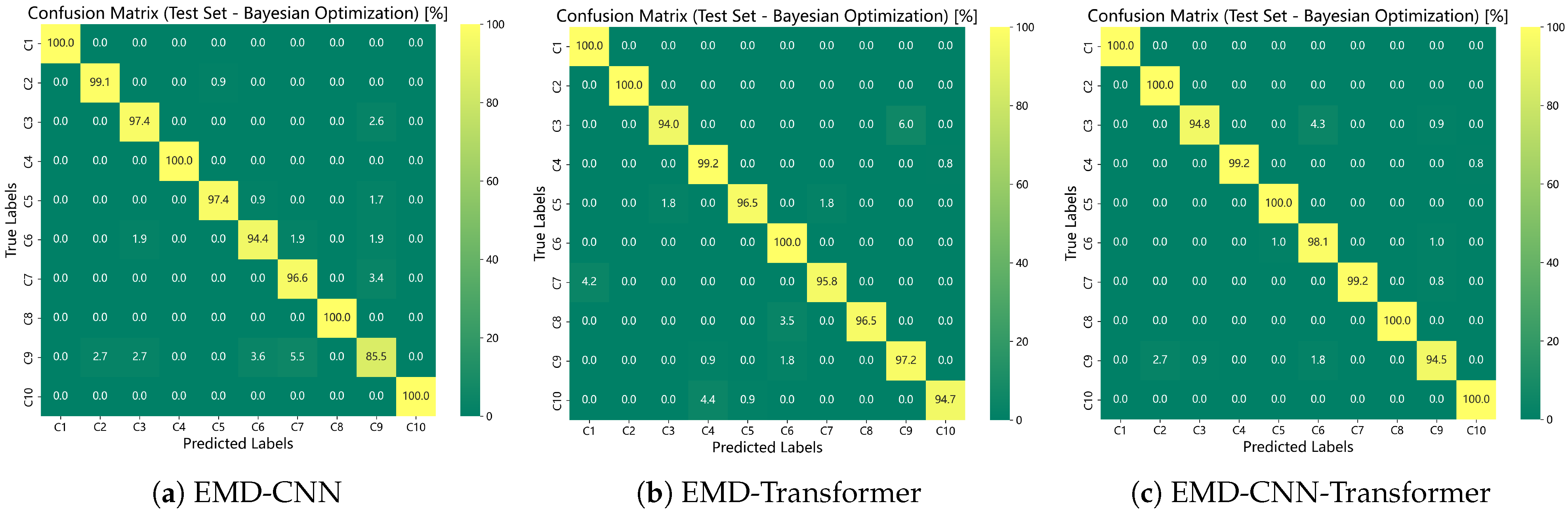

Verification and Deployment of Optimal Parameters

Upon completion of the iterative process, the optimal hyperparameter configuration generated by the Bayesian framework is retrieved. The model is subsequently retrained on the entire training dataset, and its performance is rigorously evaluated using the test set across multiple metrics—including accuracy, recall rate, and computational efficiency—to objectively assess the impact of hyperparameter optimization on both model generalization and inference speed.

Figure 4. Bayesian hyperparameter optimization flowchart.


3.3.2. Hyperparameter Configuration and Search Space Design

Considering the characteristics of the hybrid architecture, the hyperparameter search space is systematically defined across four key dimensions: training strategy, Transformer architecture, CNN-based feature extraction, and regularization constraints. The detailed configuration is summarized in Table 1.

5. Discussion

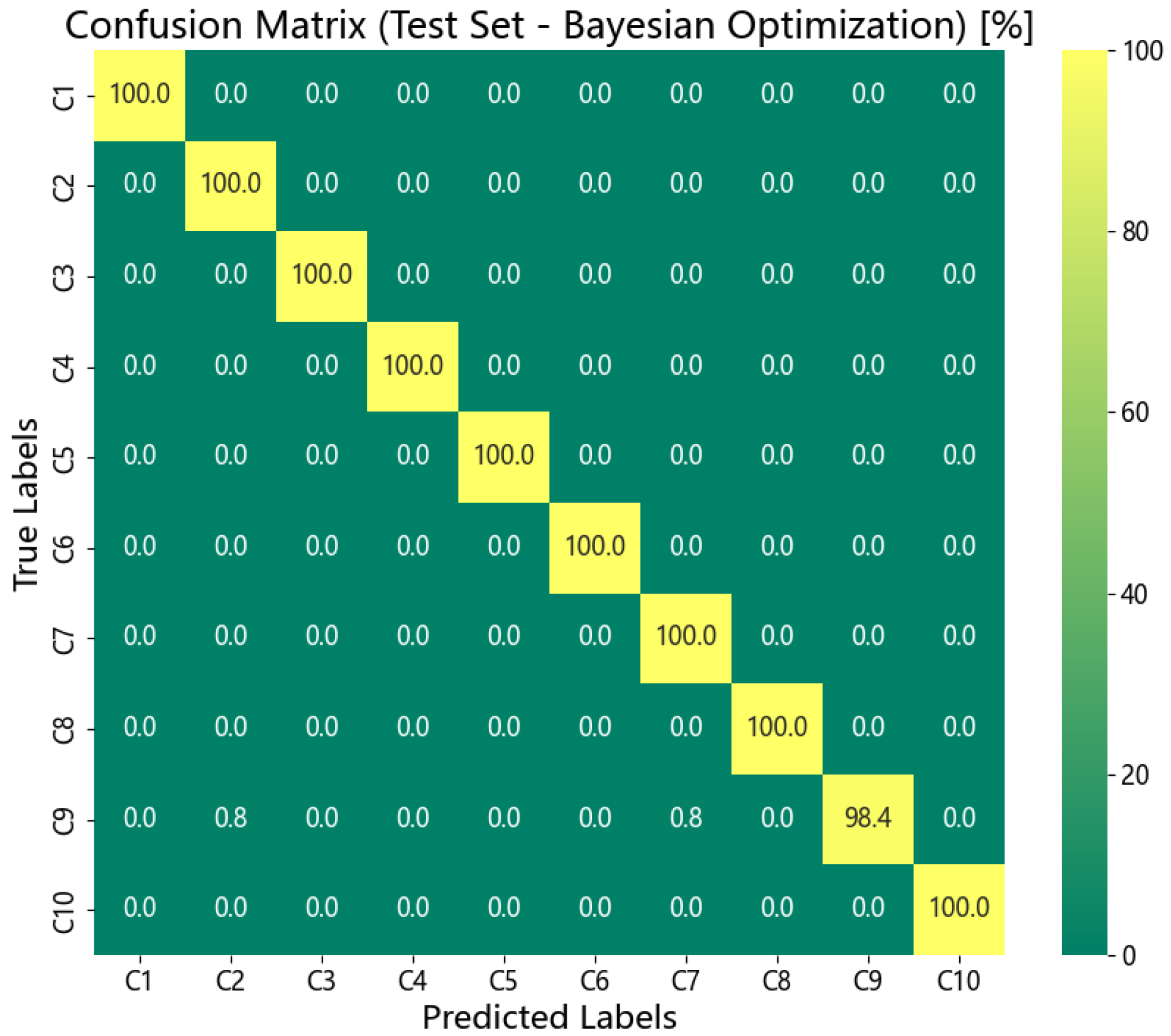

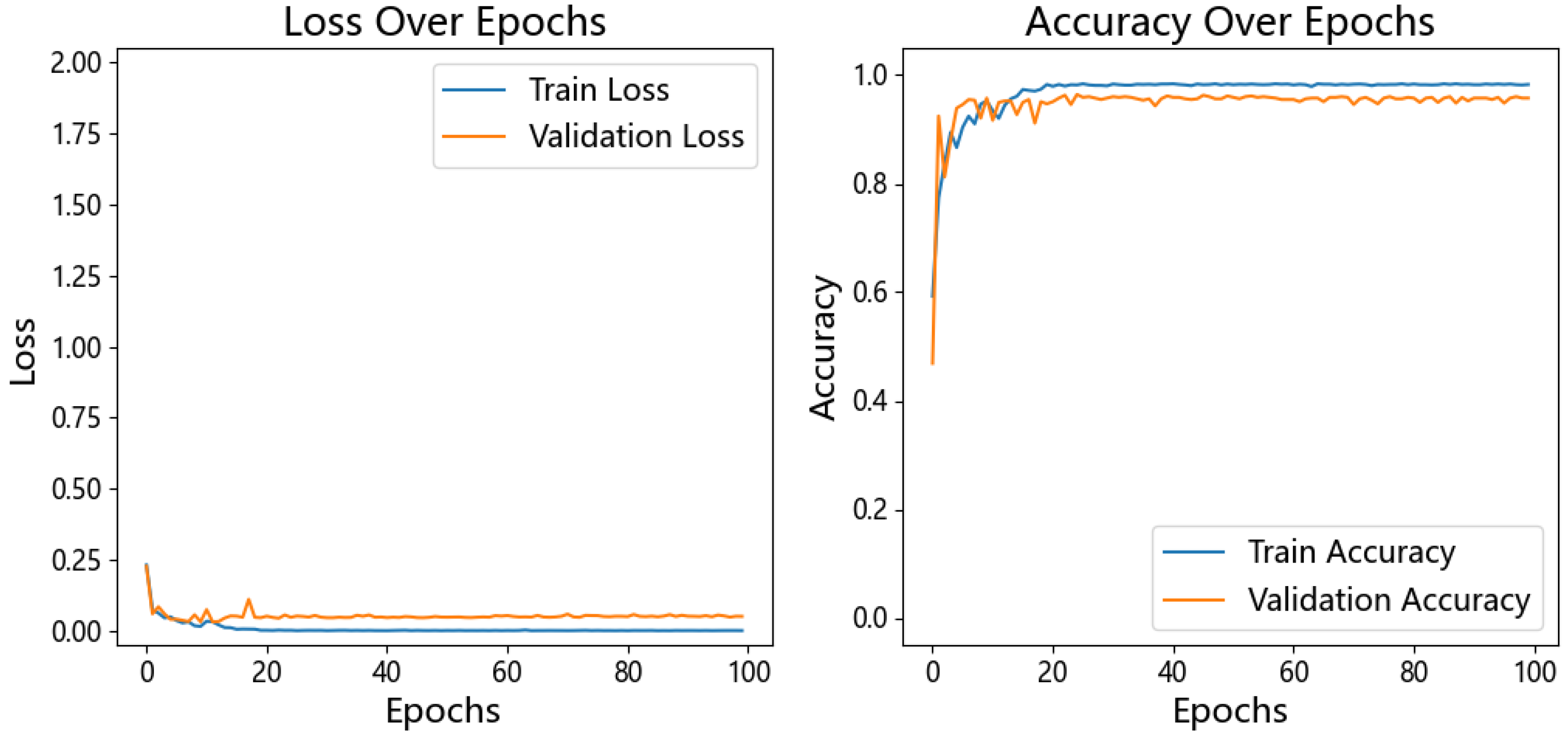

The EMDWS-CNT-BO framework proposed in this study, through its deep integration of systematic signal preprocessing, a hybrid CNN-Transformer architecture, and Bayesian hyperparameter optimization, has demonstrated its superiority as an advanced diagnostic paradigm across multiple bearing datasets. The framework not only achieved near-perfect recognition accuracy on standard datasets but also showcased its robust capability in handling non-stationary, noisy, and imbalanced data.

However, despite the significant success of our framework, we must objectively acknowledge its limitations in the context of complex industrial applications. First, this study validates the model’s effectiveness in a closed-set recognition scenario. A closed-set assumption posits that a test sample must belong to one of the classes known during training. This setup is common in many early simulation-driven AI diagnostic studies, such as the work by Xiang & Zhong [47], who utilized FEM simulation data to train an SVM model for distinguishing several known shaft system faults. While this approach is valuable for validating diagnostic logic and analyzing fault mechanisms, it carries a risk of misclassification in real-world industrial settings, where novel or compound faults not seen during training may emerge at any time.

Second, this challenge is preliminarily highlighted in the custom-built dataset from Case Study 3. By introducing a more realistic domain shift through a change in the experimental venue, the model’s performance, while still high, exhibited a slight degradation compared to its performance on stable datasets. This indicates that subtle variations in data distribution, caused by factors such as background noise and minor adjustments in sensor placement, still pose a challenge to the model’s generalization ability.

Therefore, future work will primarily focus on overcoming the aforementioned limitations. A core direction is to upgrade the model from a closed-set framework to an open-set recognition (OSR) paradigm, endowing it with the ability to identify “unknown” faults and thus avoid forced misclassification. Concurrently, research into model lightweighting and edge deployment is crucial. This involves employing techniques like knowledge distillation and network pruning to maintain high diagnostic accuracy while meeting the real-time monitoring demands of practical equipment such as machine tools. Furthermore, we will continue to optimize the model architecture and incorporate techniques like domain adaptation to enhance its generalization capabilities across different operating conditions and address the challenge of domain shift. Finally, to verify the versatility of our framework, we plan to apply it to a broader range of industrial time-series tasks, such as gearbox fault diagnosis and rotor crack detection, to explore its potential as a general-purpose time-series analysis paradigm.