1. Introduction

As the last process of modern manufacturing, mechanical assembly has a direct impact on product quality. In the era of Industry 5.0 [

1,

2,

3], robots are extensively utilized in modern industrial manufacturing fields, such as those of computer, communication and consumer electronics (3C) [

4] manufacturing, automobile assembly [

5], and reducer installation [

6].

However, most robots are limited to position-controlled operations, such as gripping, placing, and painting [

7]. They lack the flexibility and perception required for precision work like peg-in-hole (PiH) assembly [

8], welding [

9,

10], and grinding. Additionally, autonomous planning and decision-making abilities are necessary for such tasks. Therefore, force sensors [

11,

12] are typically installed at the end of a robot to enable real-time motion adjustment based on force-moment information from the sensors. This technology is known as active flexible assembly technology, and the PiH task represents a typical application of this approach.

The PiH task can be divided into two primary processes: hole-searching and insertion. During the hole-searching phase, conventional techniques, such as Archimedean spiral search [

13], grid search [

14], and random search [

15], are commonly employed in blind searches. The blind search method using force sensors may require extensive time and distance during the search phase, potentially exacerbating issues associated with such a technique. As a result, visual servoing is frequently utilized in PiH tasks [

16]. Visual servoing can partially address the issue of difficult assembly convergence caused by position errors [

17]. However, the introduction of visual servoing increases the amount of information to be processed. Therefore, machine learning is applied in the field of vision-based PiH assembly [

18,

19].

Chang implemented a dynamic visual servo-based miniature PiH assembly method [

20]. The PiH task is the most intricate process in assembly, encompassing several key components such as robotic trajectory planning, peg and hole contact state determination, and pose adjustment. As a result, numerous scholars have integrated machine learning into PiH tasks to emulate human operations through reinforcement learning [

21,

22], convolutional neural networks [

23], piecewise strategies, hierarchical learning [

24] and decoupling control [

25].

However, the current PiH assembly tasks are predominantly limited to circular peg and hole assembly, with research on shaped-PiH assembly still in its infancy. Song carried out complex-shaped PiH assembly under the teaching mode of a robot [

26], while Park experimented with circular, triangular, and square PiH assembly using a manipulator [

27]. However, they all used the blind searching method, which is less efficient. Li employed a multi-stage search method to perform PiH assembly with irregular shapes under partial constraints; yet, this approach failed to achieve the precise relative pose of the two assembly platforms and lacks universality [

28].

To avoid having too many training samples for neural networks, which can damage the device, it is transformed into a regression problem [

29], combining vision and force perception to complete the assembly of 16 kinds of sockets. Ahn completed the PiH assembly task for square, star, and double cylinder shapes through blind search using deep reinforcement learning algorithms. This study used hand-guiding to initially locate the assembling surface, so that the robotic arm only had to perform the insertion task. However, this design overlooks a crucial aspect: the most difficult point of automated PiH assembly is allowing the robotic arm to find the location of the hole with eyes (visual servoing) and to use hands (force sense) like a human to adjust the peg’s angle, position, and speed for smooth insertion into the hole. If human intervention is used to guide the peg near the hole, then the entire assembly system also intervenes in the intelligent body and greatly reduces subsequent insertion difficulties. This assembly strategy goes against the original purpose of automated assembly.

The aim of this paper is to devise a novel and versatile autonomous assembly mechanism for PiH tasks, as obtaining the pose presents a significant challenge in PiH assembly. Once the precise relative pose between the two assembly platforms is obtained, the robotic arm can easily replicate the PiH operation. Due to the ability of a circular peg to rotate around its axis even after assembly, achieving precise positional alignment between the working end and the fixed end of the robot is unattainable. Therefore, it is necessary to design a peg that constrains circumferential rotation.

The new assembly strategy allows for the replacement of pegs and holes of any shape once cross-shaped peg-in-hole assembly has been achieved, without the need to reformulate the assembly strategy or design specialized sensors. Only calibration of the robotic arm and the sensor is required to reproduce the research content presented in this paper and obtain the relative pose between the peg and the hole. This feature allows for the replacement of the shapes and sizes of the pegs and holes while maintaining proper functionality, thereby reducing the workload of users and improving industrial production efficiency.

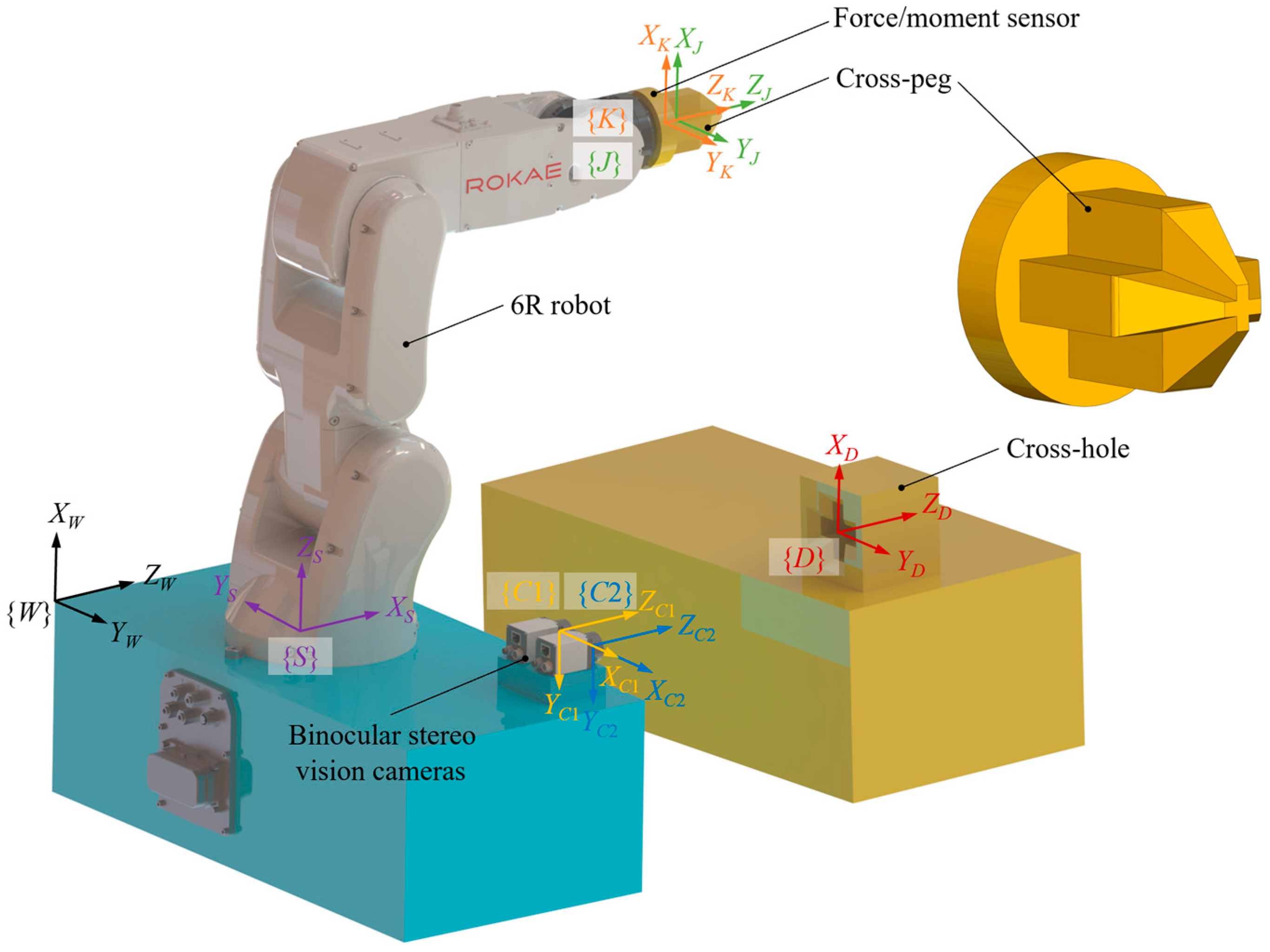

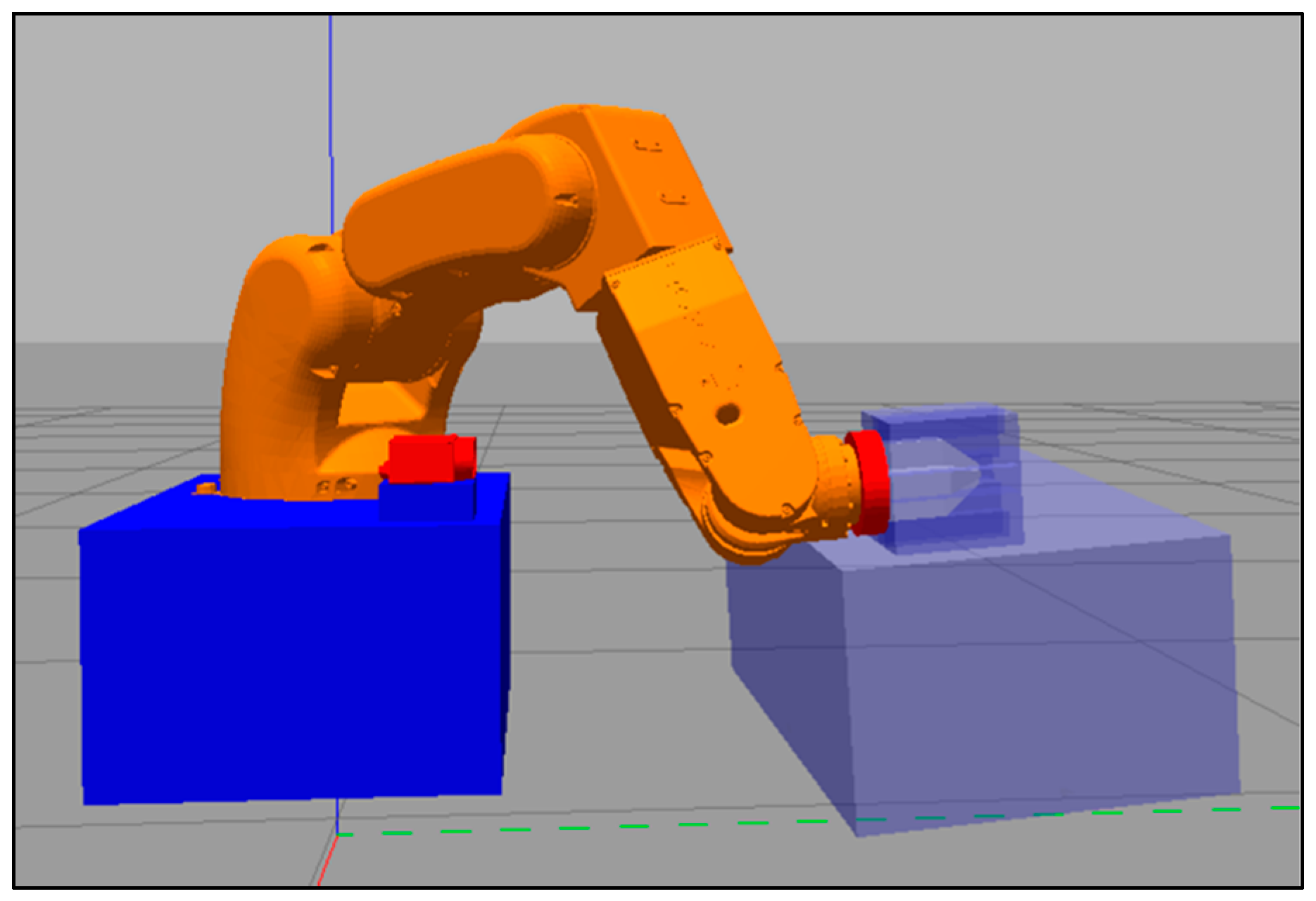

2. Binocular Stereo Vision Based Hole-Searching

Responding to the current problems in PiH assembly, a novel assembly strategy is proposed that utilizes a binocular camera to determine the center coordinates of the cross-hole [

30,

31]. This enables the robot to autonomously locate the hole without requiring manual guidance. This autonomous assembly system consists of a 6R robot, a force-moment sensor, a cross-shaped feedback peg, a cross-shaped hole, and a binocular camera, as shown in

Figure 1; {

J} is the cross-peg coordinate system, {

K} is the sensor coordinate system, {

S} is the robot base coordinate system, {

C1} is the left camera coordinate system, {

C2} is the right camera coordinate system, {

D} is the cross-hole coordinate system, and {

W} is the actual world coordinate system.

The cross-section of the cross-peg and cross-hole is cross-shaped, which effectively constrains the rotation degrees of freedom (DoF) about the x, y, and z axes and the translation DoF about the x and y axes at the same time after completing the assembly. This limits the end joint of a 6R robot to 5 DoF, addressing the issue that the rotation DoF about the z-axis cannot be constrained by a circular peg. Meanwhile, the cross-peg features a tapered end, and the front of the cross-hole is chamfered. The tapered design reduces the requirement for the accuracy of the initial positioning using the binocular camera and solves the problem that the assembly of the circular peg is prone to failures of convergence.

The 6R robot and the binocular camera are fixed on the same platform, with a force-moment sensor and a cross-shaped feedback peg connected in series at the end of the robot, and the cross-hole is fixed on another assembly platform. A binocular stereo vision system was constructed using two monocular industrial cameras, specifically the JAI Go-X model. The binocular vision camera can capture the coordinates of the cross-hole center, and the force-moment sensor can collect the force/moment information during the assembly process, which can be combined with machine learning to train the robot to complete the autonomous PiH task. Based on the required arm reach and the workload of the robot, the ROKAE XB4 series industrial robot was selected as the 6R robot for this research project. This particular model features a payload capacity of 4 kg, an arm reach of 596 mm, and a positioning repeatability of ±0.02 mm.

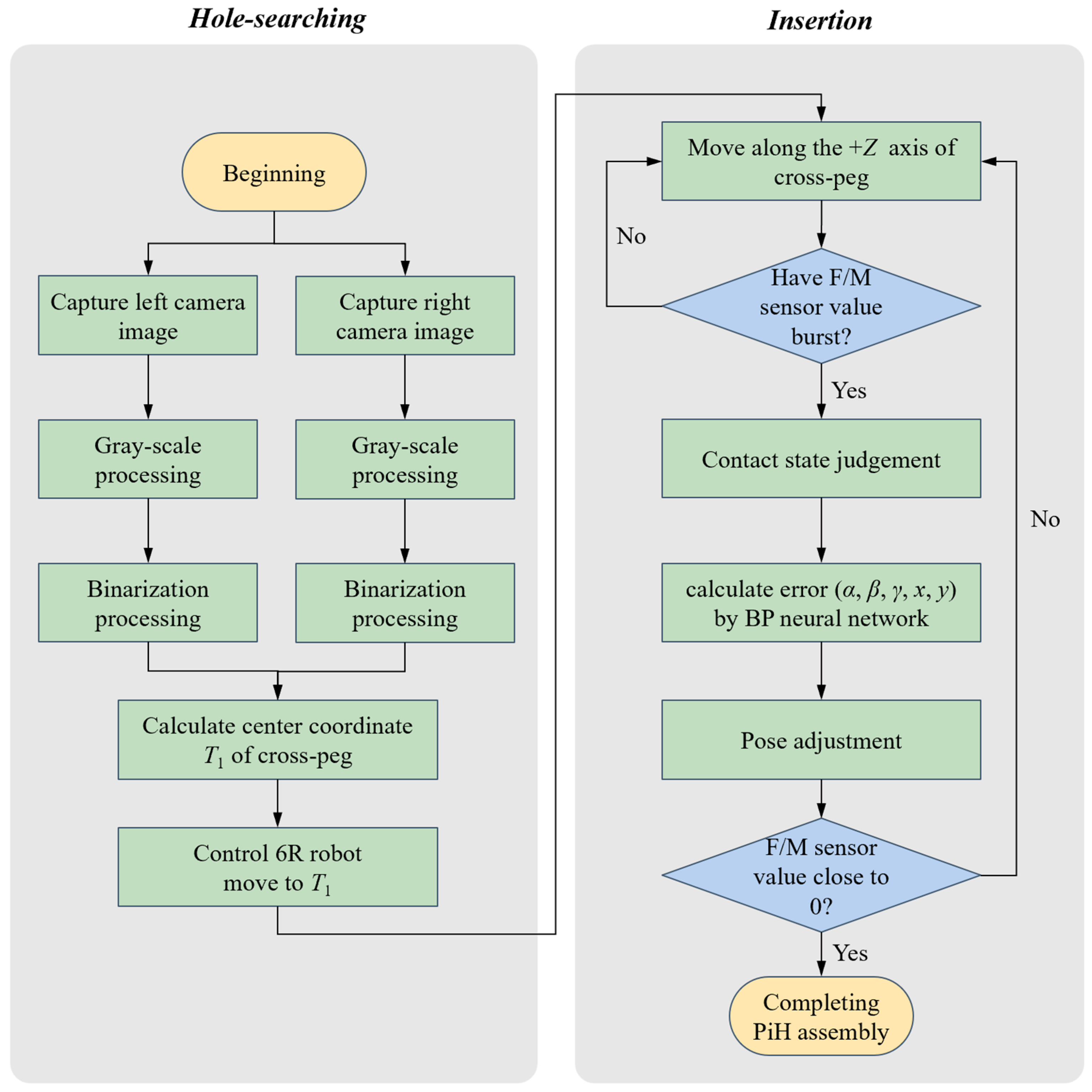

In this study, the Robot Operating System (ROS) was selected as the development platform due to its capability of achieving 3D visualization and motion simulation. By integrating MATLAB for data processing and backpropagation (BP) neural network training, the ROS system can efficiently accomplish autonomous assembly tasks. The PiH assembly flowchart is shown in

Figure 2. This flowchart shows that the autonomous assembly system is divided into two processes: hole-searching and insertion. In the hole-searching process, the binocular camera image processing and the calculation of the center coordinate of the cross-hole are mainly carried out. In the insertion process, the 26 contact states are judged based on force-moment sensor values, and then the pose error is calculated using the BP neural network. This iterative process continues until PiH assembly is completed.

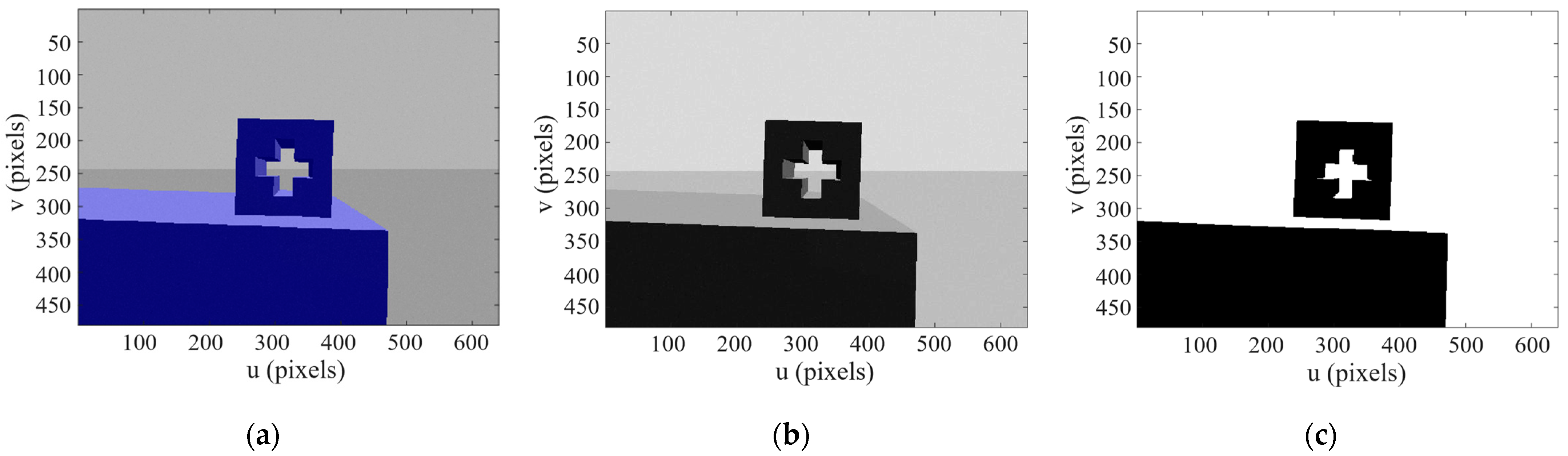

2.1. Feature Extraction for Cross-Peg

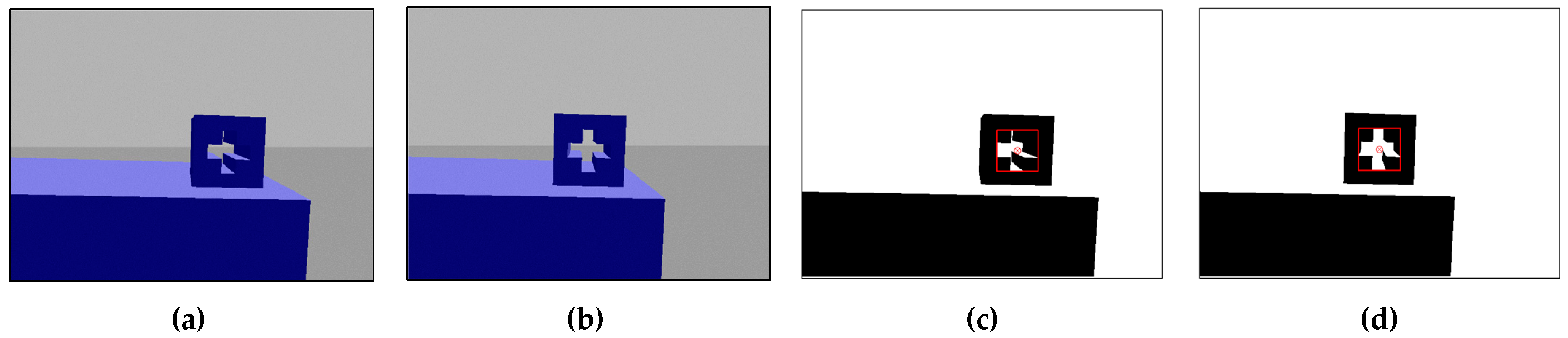

To calculate the pose of the cross-hole coordinate system relative to the robot base coordinate system {

S}, it is necessary to perform image processing first in order to identify the cross-hole features. Then, based on the principle of 3D reconstruction by binocular vision, the spatial coordinates of the center of the cross-hole are obtained. The image of the cross-hole captured by the binocular stereo vision camera is shown in

Figure 3a. After obtaining the image, the method of gray-scale classification [

32,

33] is used to extract features of the image.

Figure 3b displays the image after undergoing gray-scale processing.

The grayscale image’s pixels are binarized into black and white based on a predetermined threshold value

t. The pixel type is set to 0 when the grayscale value of the pixel is less than

t; the pixel type is set to 1 when the grayscale value of the pixel is greater than

t:

where

c is the pixel type, represented by an integer,

I is the grayscale value of each pixel point in the grayscale image, in the range 0 to 1,

t is the threshold value, and after binarization, the value of 1 represents the background and is displayed in white, while the value of 0 represents the target after binarization process and is displayed in black.

Figure 3c shows the image after the binarization process. Since the cross-hole is a through hole, the cross-hole is shown as a white cross shape surrounded by black annulus in the binarized image.

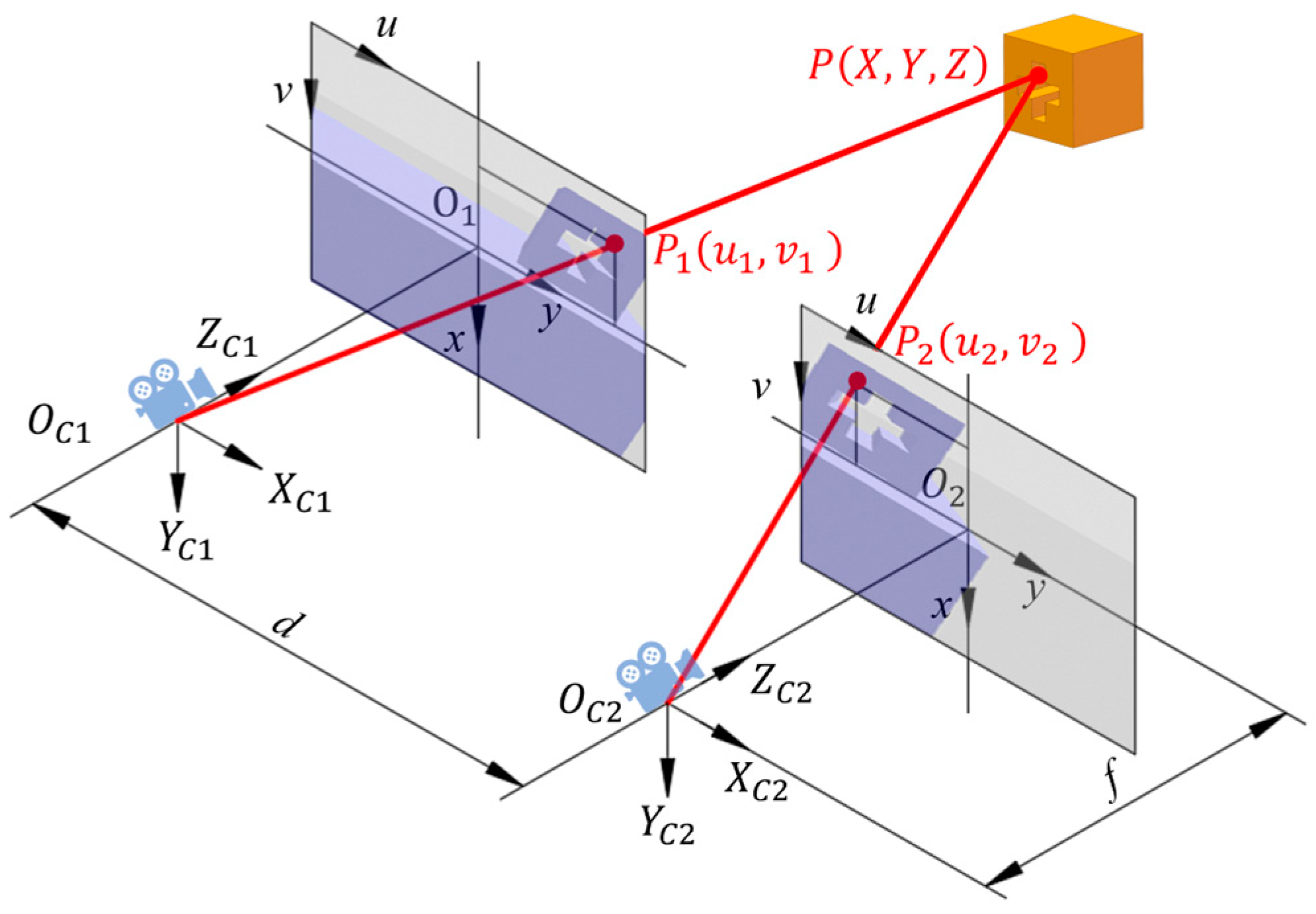

2.2. Calculation of Three-Dimensional Center Coordinates of the Cross-Hole

The monocular camera solely captures the planar information of an object while losing its depth perception. Therefore, a parallel binocular stereo vision camera is employed to simultaneously gather information from two views and the parallax ranging method is utilized for 3D reconstruction [

34] of spatial points, ultimately obtaining the 3D coordinates of the cross-hole’s center [

35].

As illustrated in

Figure 4, the operational principle of binocular stereo vision [

36] involves two camera coordinate systems: {

C1} for the left camera with

, and {

C2} for the right camera with

. Both cameras have a focal length

f and parallel optical axes separated by a fixed distance

d. The binocular cameras utilized in this study have a focal length of 381.2612 mm.

Point

P is projected onto the left camera at

and onto the right camera at

, then the point

P is located at the intersection of the line

and the line

. The coordinates of the point

P in the coordinate system {

C1} are

and in the coordinate system {

C2} are

; then, the relationship between the two coordinate systems {

C1} and {

C2} is

The coordinates of imaging points

and

in the image coordinate system

and

can be obtained according to Equation (2), and the relationship between the image coordinate system and the camera coordinate system can be determined by applying the principle of similar triangles:

Simultaneously with Equations (2) and (3), the spatial coordinate

of the point

P in the left camera coordinate system {

C1} is obtained as

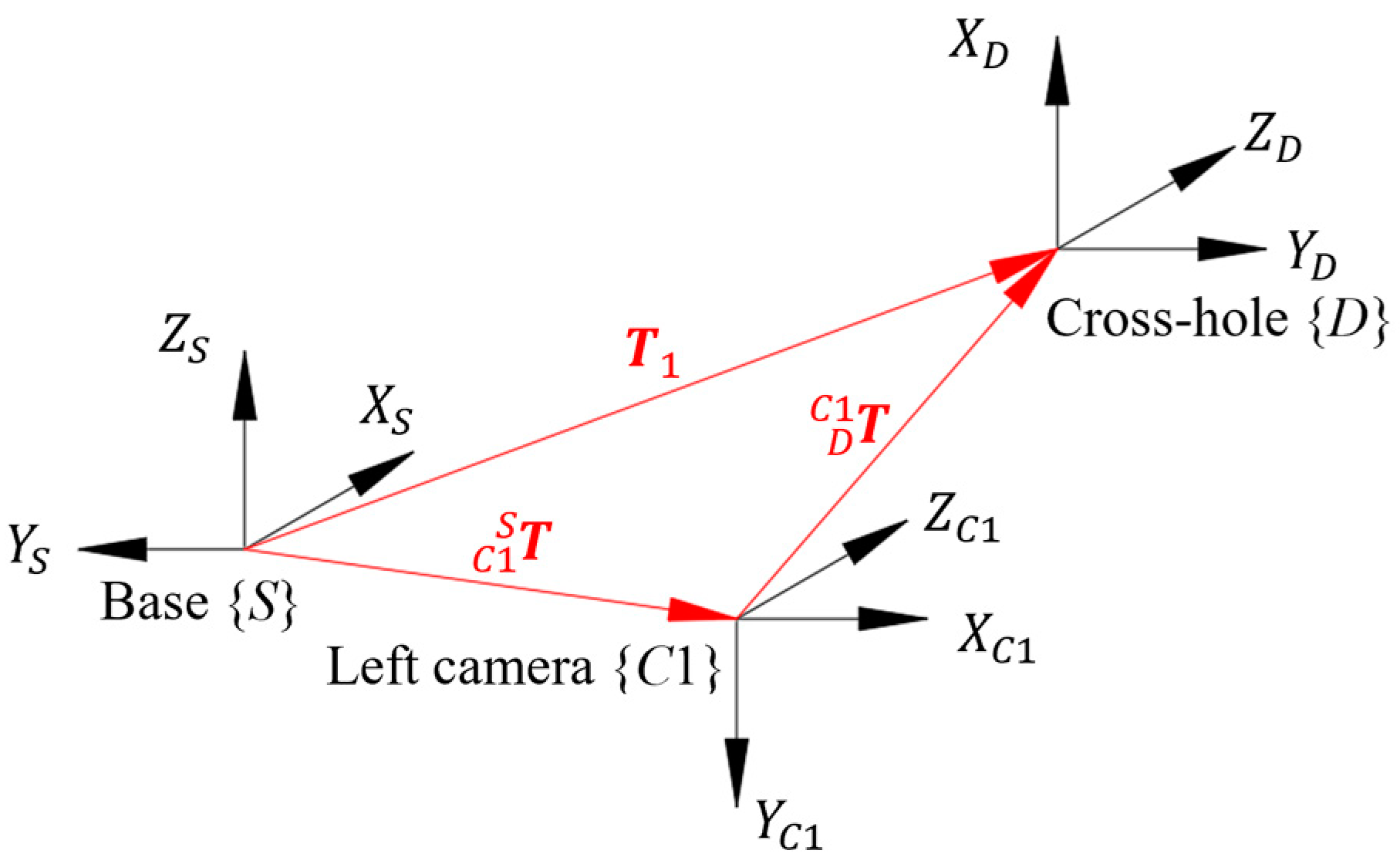

A schematic diagram of the coordinate and relative pose of the binocular vision system is depicted in

Figure 5. The initial pose

of the cross-hole coordinate system can be derived from the poses

and

, which respectively represent the left camera coordinate system {

C1}, with respect to the base coordinate system {

S}, and the cross-hole coordinate system {

D}, with respect to the left camera coordinate system {

C1}.

The poses

of the left camera coordinate system {

C1} with respect to the robot base coordinate system {

S} are

where

,

,

is the position of the camera coordinate system {

C1} relative to the base coordinate system {

S}.

The pose

of the cross hole coordinate system {

D} with respect to the left camera coordinate system {

C1} is

where

,

,

is the position of the cross-hole center in the left camera coordinate system {

C1}.

Then the pose

of the coordinate system {

D} under the coordinate system {

S} of the robot arm base is

Using the 3D reconstruction principle of binocular vision, the three-dimensional coordinates

of the center of the cross-hole in the left camera coordinate system {

C1} are calculated as (0.0507, 0.0085, 0.3002), in meters. The transformation matrix

of the left camera coordinate system {

C1} with respect to the base coordinate system {

S} can be determined as

Substituting (8) into (7), the target pose

of the cross-peg is obtained as

The images captured by the binocular camera in the simulation system are presented in

Figure 6a, while

Figure 6b displays the image obtained after image processing. The area enclosed by the red rectangle represents the cross-hole region, with its center marked by a red dot. In the left camera, the pixel coordinates of this center are (385, 253), while in the right camera they are (321.5, 252.5). The distance between both cameras’ optical axes is 0.05 m.

2.3. 6R Robot Trajectory Planning

To achieve the initial positioning and movement of the PiH task, it is necessary to plan the trajectory of the 6R robot so that it can move to position

. To ensure the smoothness of velocity and acceleration, a fifth-order polynomial is employed for interpolation [

37], with

representing the joint position of the robot at time

t.

The position, velocity and acceleration of the interpolation starting point

A are

,

, and

, respectively, and the position, velocity, and acceleration of the interpolation target destination

B are

,

, and

, respectively. The time duration between point

A and point

B is denoted as

T, which commences from the initial position

A at

and terminates at the destination

B at

t =

T. Consequently, we can derive

The coefficients can be obtained by inputting the initial joint angle and target joint angle into Equation (11), which are then substituted in to determine the joint angle of the robot arm at each time step. This information is transmitted to the robot for precise control over its movement, allowing it to reach the specified position and complete initial positioning of the PiH task.

The target joint angle

is

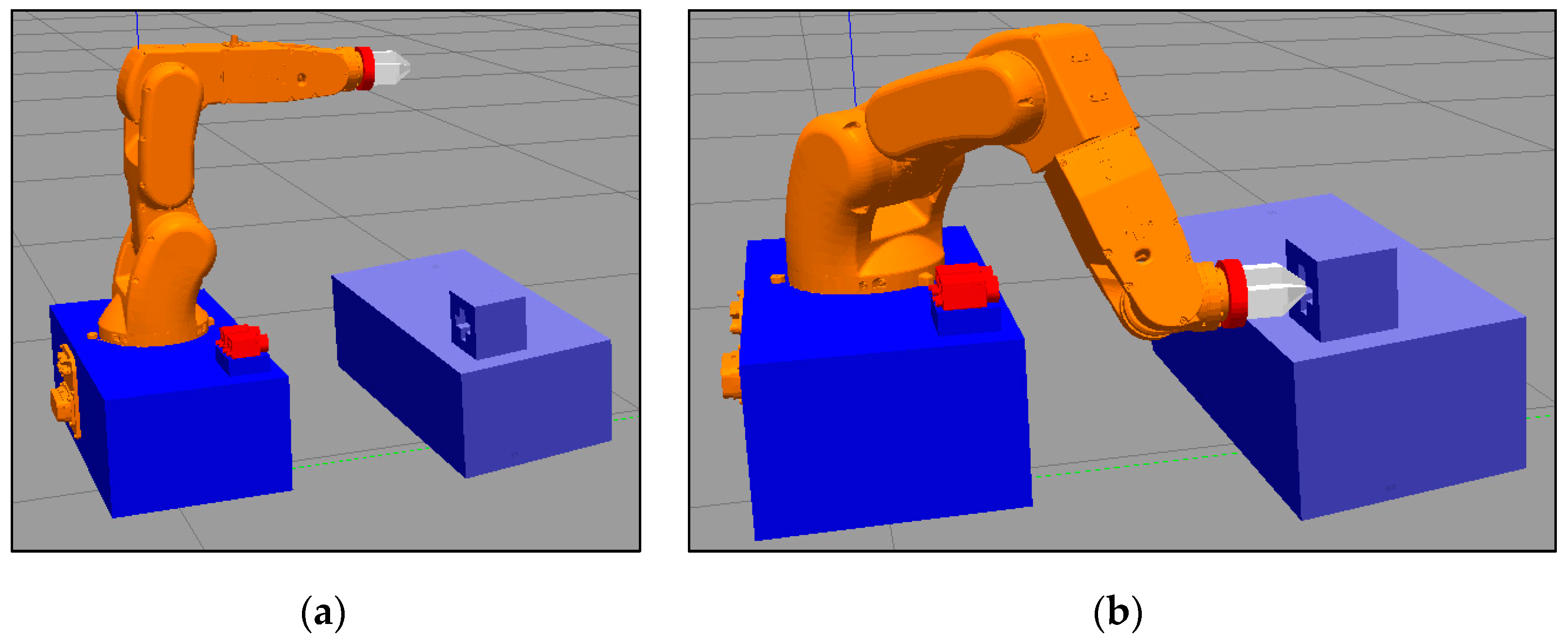

Figure 7a shows the initial pose of the 6R robot. As measured by ROS, in the initial state, the pose relationship between the cross-hole coordinate system {

D} and the robot base coordinate system {

S} is

, which is recorded for subsequent verification:

After the interpolation trajectory planning of the 6R robot,

Figure 7b depicts the robot’s attitude subsequent to its relocation to the designated position

. At this point, the axes of both cross-peg and cross-hole are collinear, but do not make contact. This marks the completion of the initial pose adjustment for the PiH task.

3. Force-Moment Sensor-Based Cross-Peg Insertion

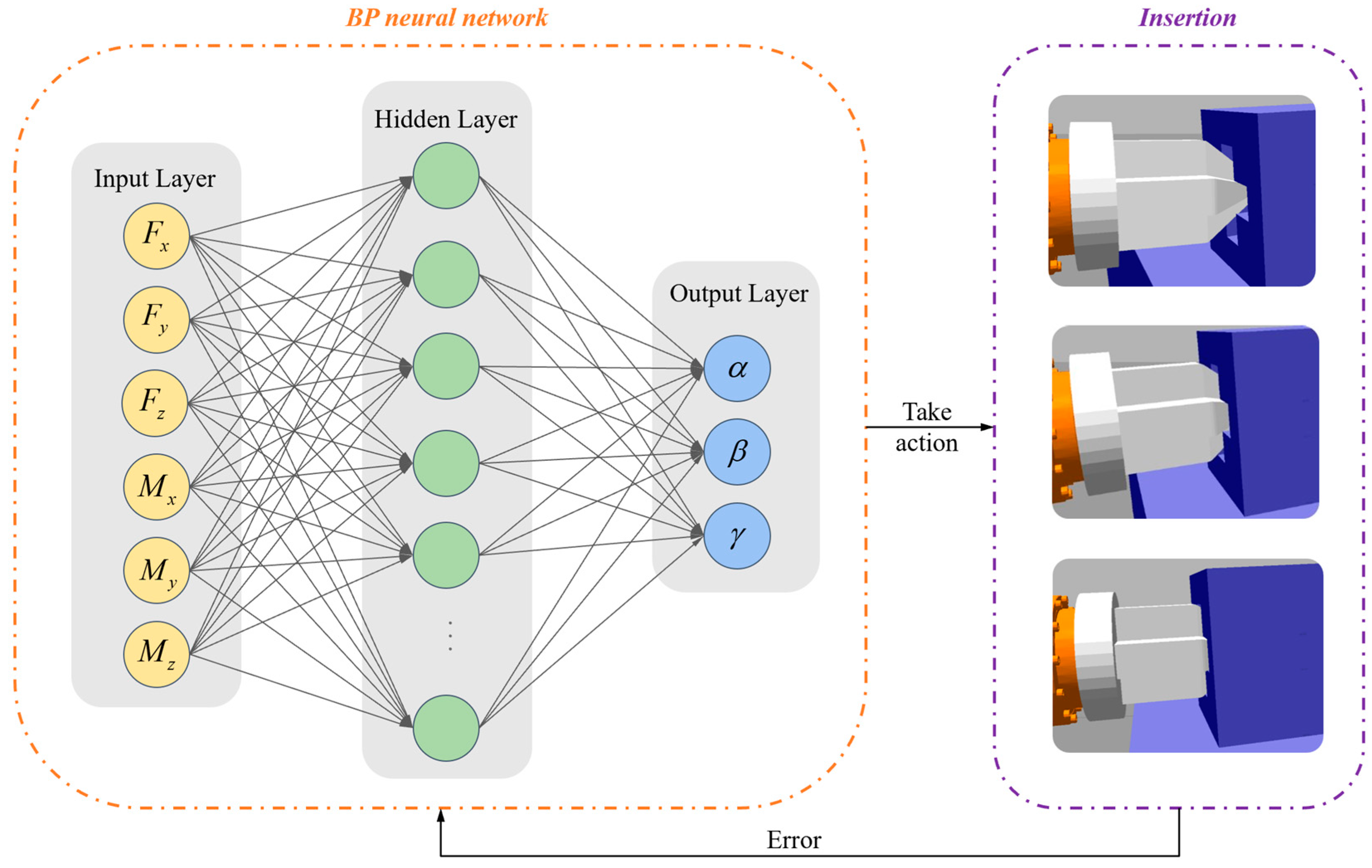

After completing the initial positioning through a hole-searching process, the cross-peg can be inserted into the cross-hole by continuously adjusting its position according to the force-moment sensor value until the PiH task is completed. In this section, the force-moment sensor will be calibrated and gravity compensation will be implemented to mitigate the impact of inherent errors and external loads on the sensor’s measurements. Subsequently, the contact state of the peg and hole is classified and the mechanics of the assembly process are analyzed. Finally, a BP neural network mapping relationship between sensor data and assembly error is established to enable the independent assembly of the cross-peg.

3.1. Force-Moment Sensor Calibration and Gravity Compensation

The measured data of the force-moment sensor consists of three components: the inherent error of the sensor, the gravity acting on both the sensor and peg, and the external contact force applied to the feedback peg [

38,

39]. The gravity of the sensor and peg is denoted as

G, and has its centroid located at

in coordinate system {

D}. Based on the material and structural design, the gravity acting upon the force-moment sensor and cross-peg is 9.558 N.

The user has the flexibility to adjust the data in accordance with their own study. The value of the force-moment sensor at no load

, the measured value of the force-moment sensor

, and the contact force of the cross-peg

can be recorded as

According to the theory of least squares [

40], it is obtained that

where

,

,

are the constants containing the force under no load, moment, and centroid coordinates.

To solve the matrix using least squares theory, it is necessary to guide the end joint of the robot through six distinct poses while ensuring that at least three of these poses have non-coplanar end-pointing vectors. Additionally, force-moment sensor data must be recorded for each pose.

Table 1 shows the force-moment sensor data in six different poses.

The least squares solution

p of the matrix from the force-moment sensor data is

Similarly, the results of the force-moment sensor calibrated by the least squares method are as follows:

3.2. Peg-in-Hole Contact State Classification

The contact point of the PiH task is located at the intersection of the tapered end of the front guide section of the cross-peg and the edge line of the cross-hole, as shown in

Figure 8.

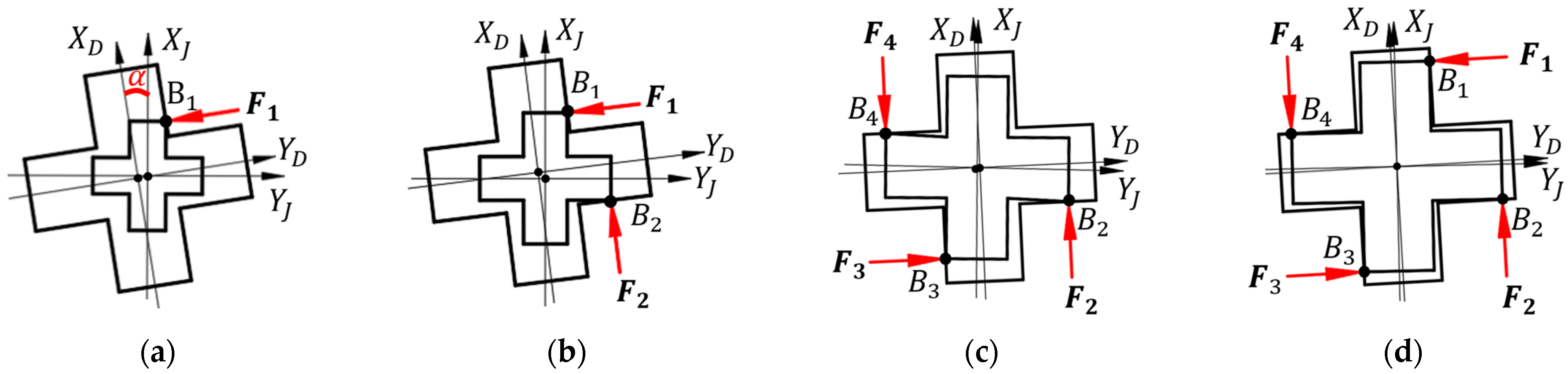

Based on the advantages of the central symmetry cross-shaped peg and hole, two classification methods are proposed for categorizing contact states in the PiH insertion task: classification by the number of contact points and the rotation error of the cross-peg.

3.2.1. Classification by the Number of Contact Points

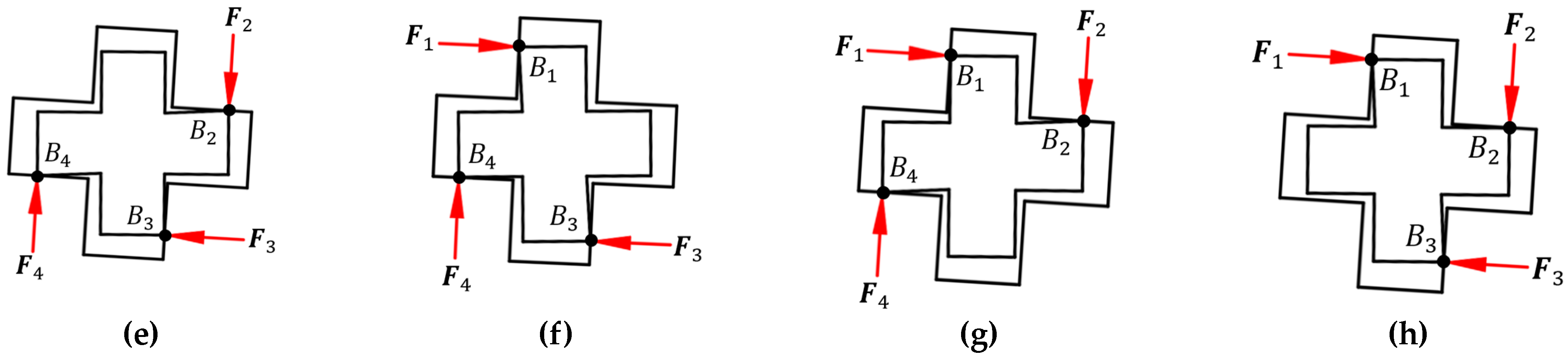

As shown in

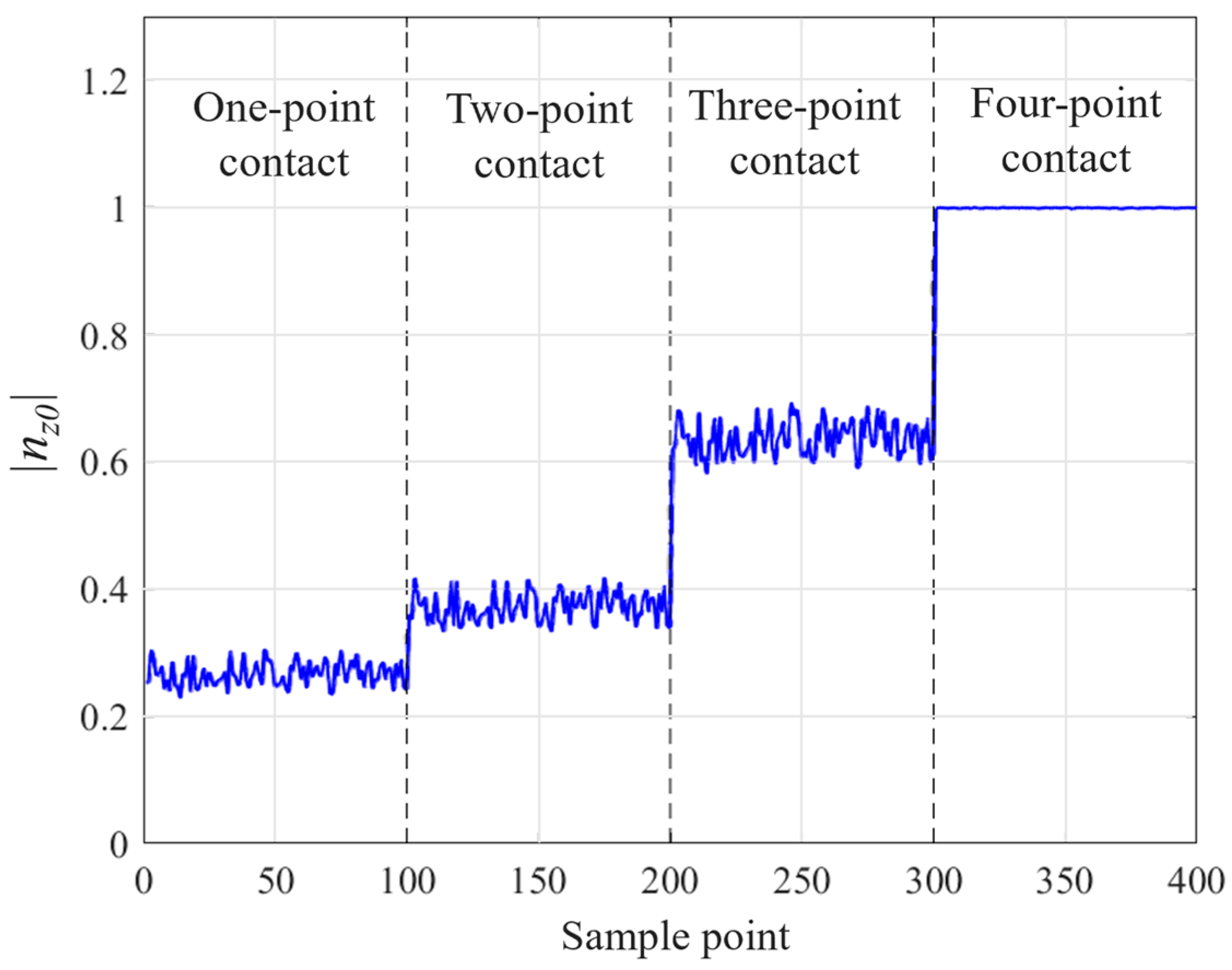

Figure 9, during the insertion of a cross-shaped peg, there are four types of contact states according to the number of contact points: one-point contact, two-point contact, three-point contact, and four-point contact.

In

Figure 9, it can be seen that the cross-peg circumferential forces and moments are more complex when the guide area of the cross-peg is in contact with the cross-hole, while the radial forces

are simpler and may be related to the number of contact points. To verify this hypothesis, the robot was manipulated to create 100 contacts between the cross-axis and cross-hole in one-point, two-point, three-point, and four-point states. The unit vector of the external force on the cross-peg is as follows:

where the data of

are shown in

Figure 10.

It can be seen in

Figure 10 that

has a clear mapping relationship with the contact state; it is concluded that

is distributed between 0.23 and 0.31 with a mean value of 0.278 in the case of one-point contact, between 0.33 and 0.42 with a mean value of 0.376 in the case of two-point contact, between 0.58 and 0.71 with a mean value of 0.649 in the case of three-point contact, and is infinitely close to 1 in the case of four-point contact. According to the mapping relationship between

and the contact state, the contact state of the peg can be determined for subsequent inserting operations.

3.2.2. Classification by Rotation Error

The rotation angle of the cross-hole coordinate system {

D} relative to the

axis of the cross-peg coordinate system {

D} is

α. Depending on the direction of rotation, it can be divided into the cases of

and

, and

Figure 11 shows the diagrams of the two different contact states.

3.3. Force-Moment Sensor for Each Contact State

The two states can be distinguished according to the direction of

in the force-moment sensor data; when

,

is negative, and when

,

is positive. By combining the two classification methods mentioned in

Section 3.2.1 and

Section 3.2.2, a total of 26 different contact cases were obtained. To succinctly describe each contact state, a numbering system is utilized to express the contact cases. The format for this numbering system is as follows:

where

indicates the number of contact points, ranging from 1 to 4;

indicates the direction of rotation—

when

and

when

;

indicates the contact case when

and

are identical. For example, case 1-2-3 represents the third contact case with one-point contact and

.

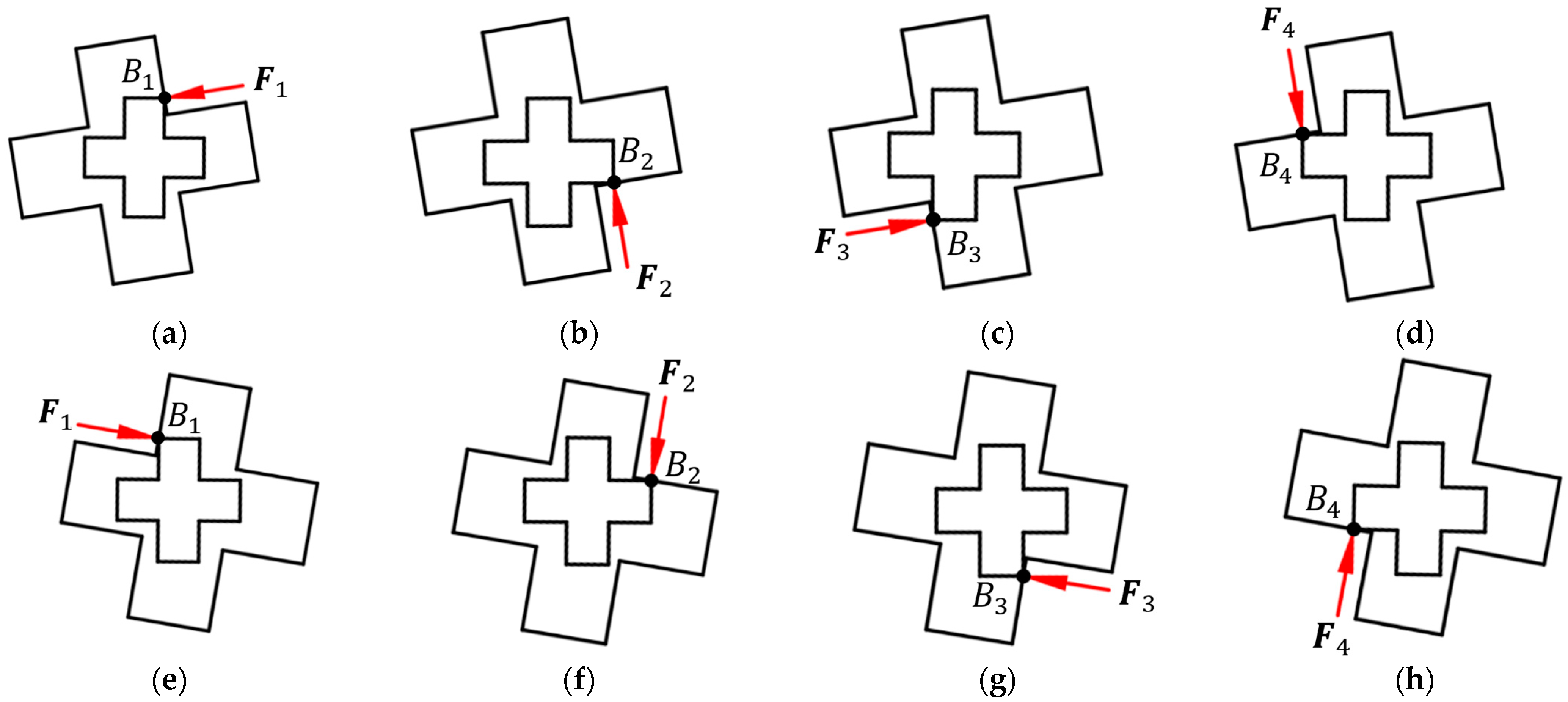

3.3.1. One-Point Contact

There are eight one-point contact cases, as shown in

Figure 12. The force-moment sensor data for the one-point contact case is presented in

Table 2.

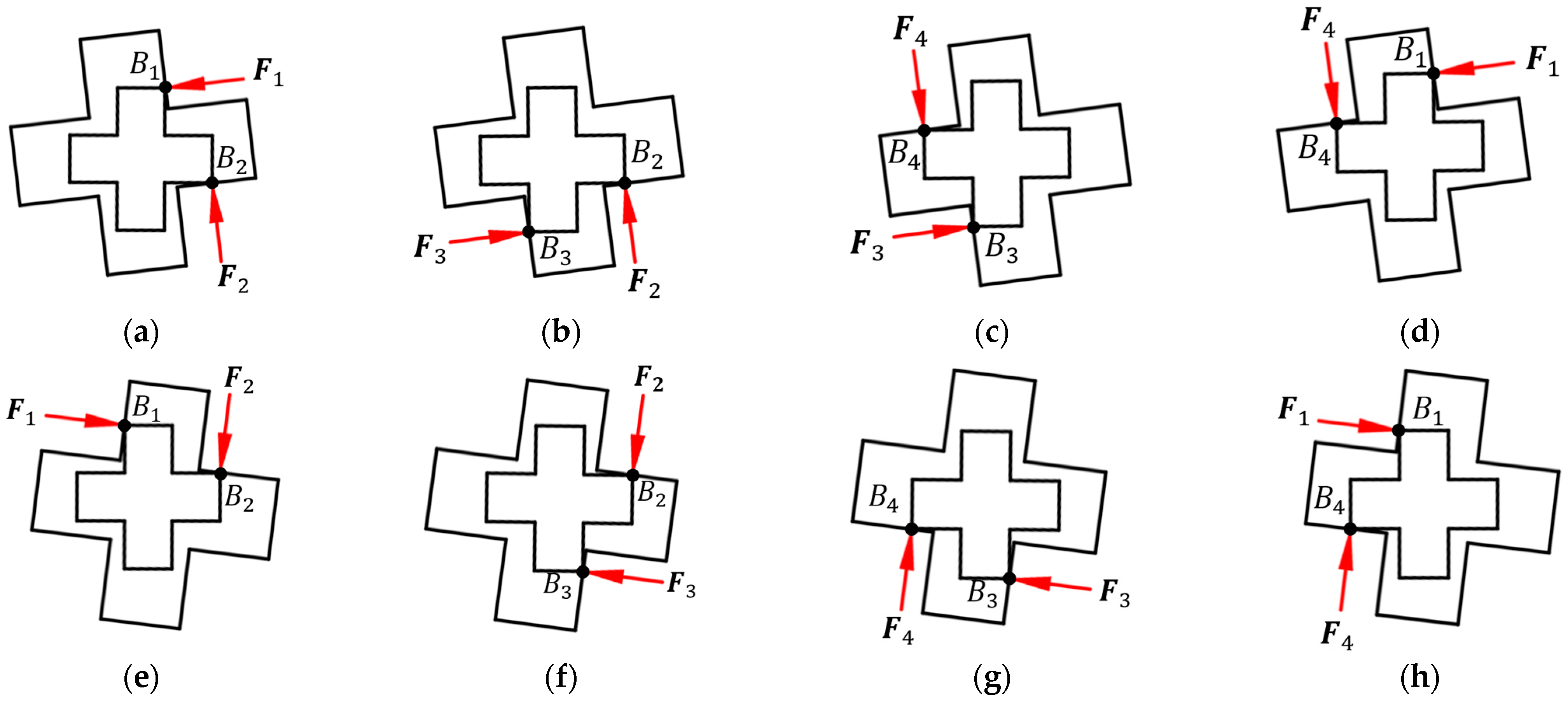

3.3.2. Two-Point Contact

There are eight two-point contact cases, as shown in

Figure 13. The force-moment sensor data for the two-point contact case is presented in

Table 3.

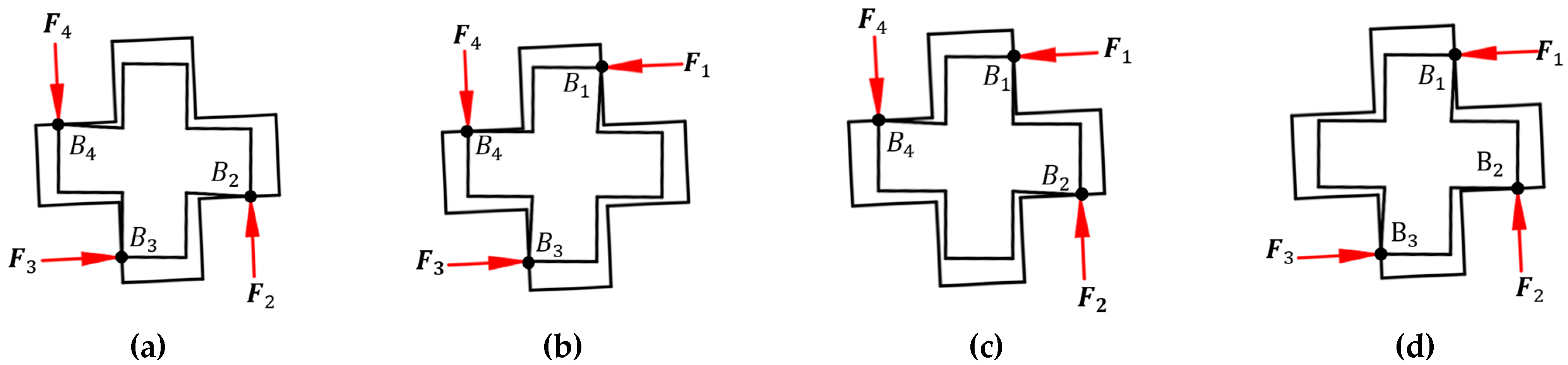

3.3.3. Three-Point Contact

There are eight three-point contact cases, as shown in

Figure 14. The force-moment sensor data for the three-point contact case is presented in

Table 4.

3.3.4. Four-Point Contact

There are two four-point contact cases, as shown in

Figure 15. The force-moment sensor data for the four-point contact case is presented in

Table 5.

3.4. Mechanical Analysis of PiH Assembly

Figure 16 illustrates the force exerted when the cross-peg and cross-hole make contact with case 1-1-1. The contact point of the PiH task is located at the intersection of line

and line

. The direction of the contact force

F on the cross-peg is a normal vector of the plane formed by two lines, pointing to the inside of the cross-hole. The direction of the friction force

is along the tapered end of the cross-peg from point

to point

.

The coordinates of the point

are

, and the coordinates of the point

are

. The coordinates of point

and point

in the cross-peg coordinate system {

D} are

and

, respectively. Then the coordinates of the contact point

is

The coordinates of each contact point in other contact cases can be solved using the same method, which will not be repeated in this paper.

The cross-peg is subjected to a contact force and a frictional force at the contact point . The direction of the contact force is normal to the plane formed by line and line , pointing inside the cross-peg; the direction of the frictional force is along line , opposite to the direction of movement of the cross-peg.

The direction vector of the contact force

is

Assuming the magnitude of the contact force on the cross-peg at the contact point

is

, then

Since the direction of the frictional force

is

Then the frictional force

is

Additionally, the force-moment sensor value on the cross-peg is then

where

represents the contact point number for each contact state.

The relative error between the peg and hole can be obtained by solving Equation (24) and then adjusting the peg pose according to the error.

5. Experiments

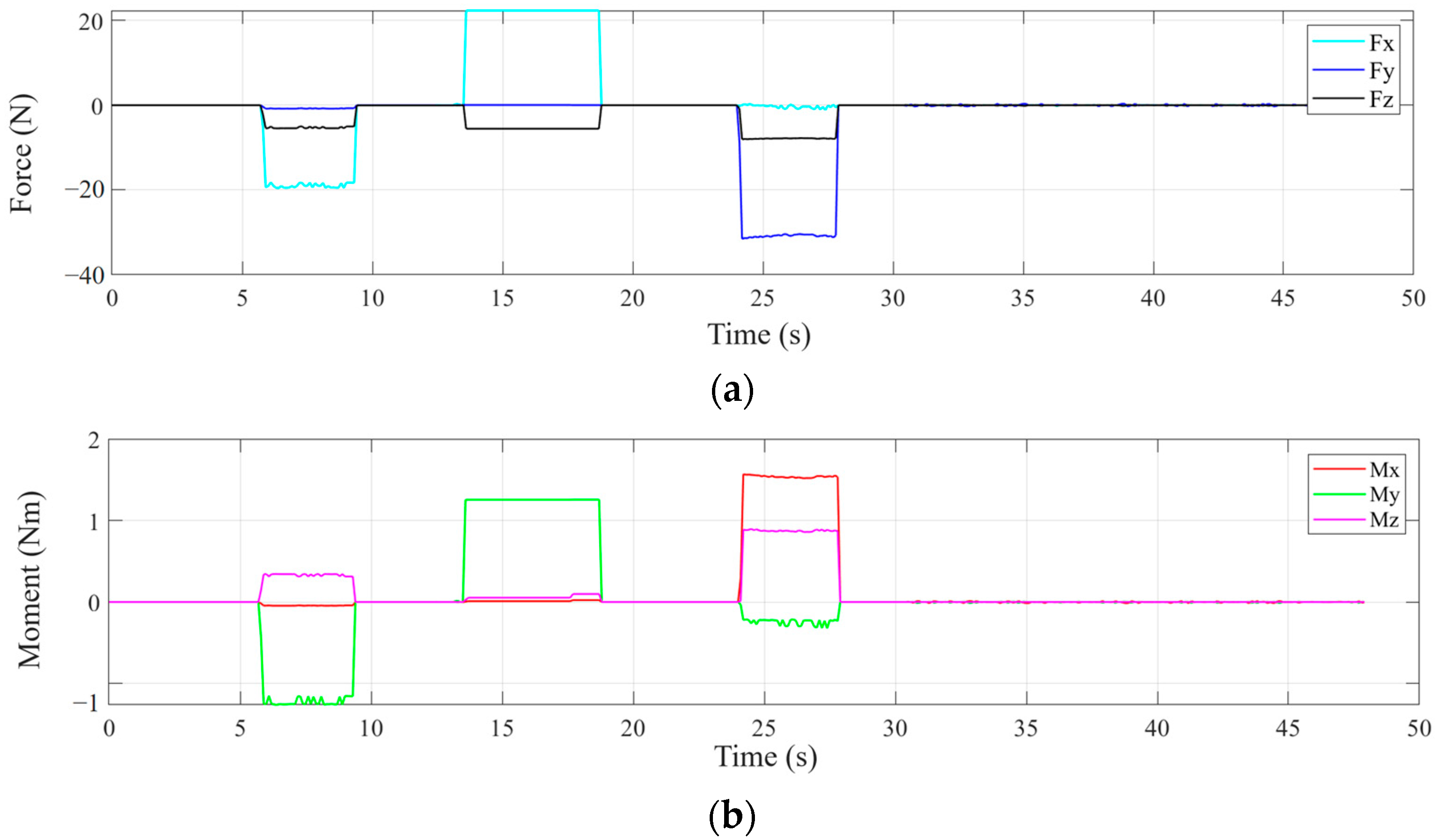

After completing the calibration of the force-moment sensor and BP neural network training, the assembly process of the cross-peg and cross-hole can be executed; the changes in the contact force and moment between the peg and the hole during the assembly process are shown in

Figure 19.

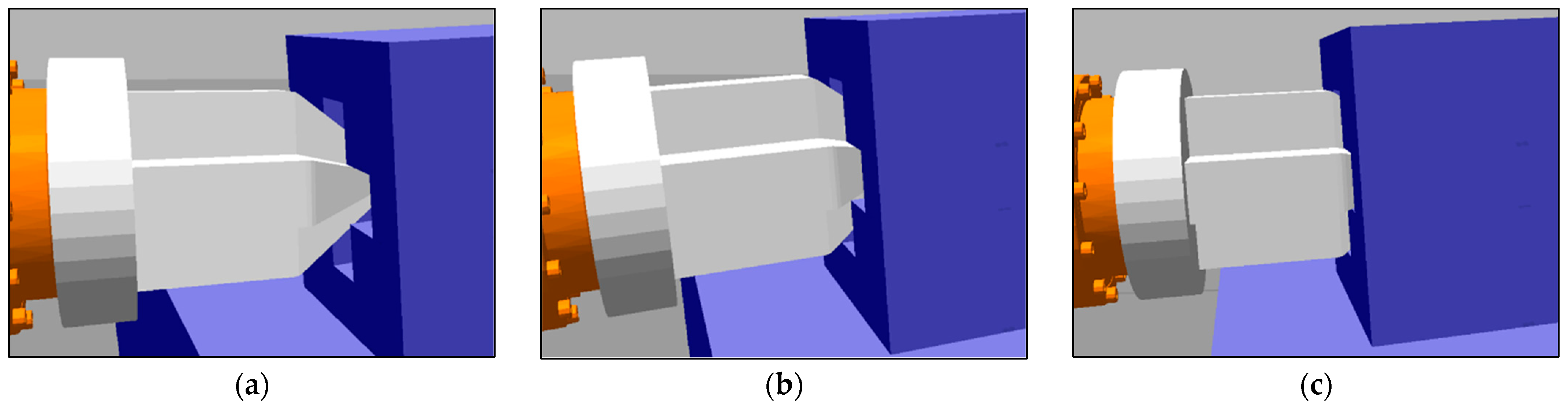

At approximately 0 to 5 s, the cross-peg begins to move along its axis without any contact with the cross-hole, as shown in

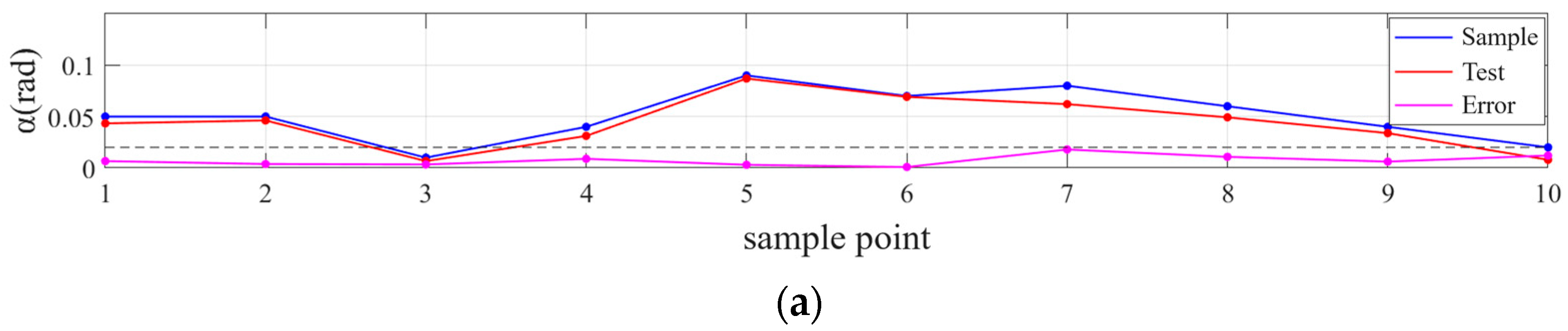

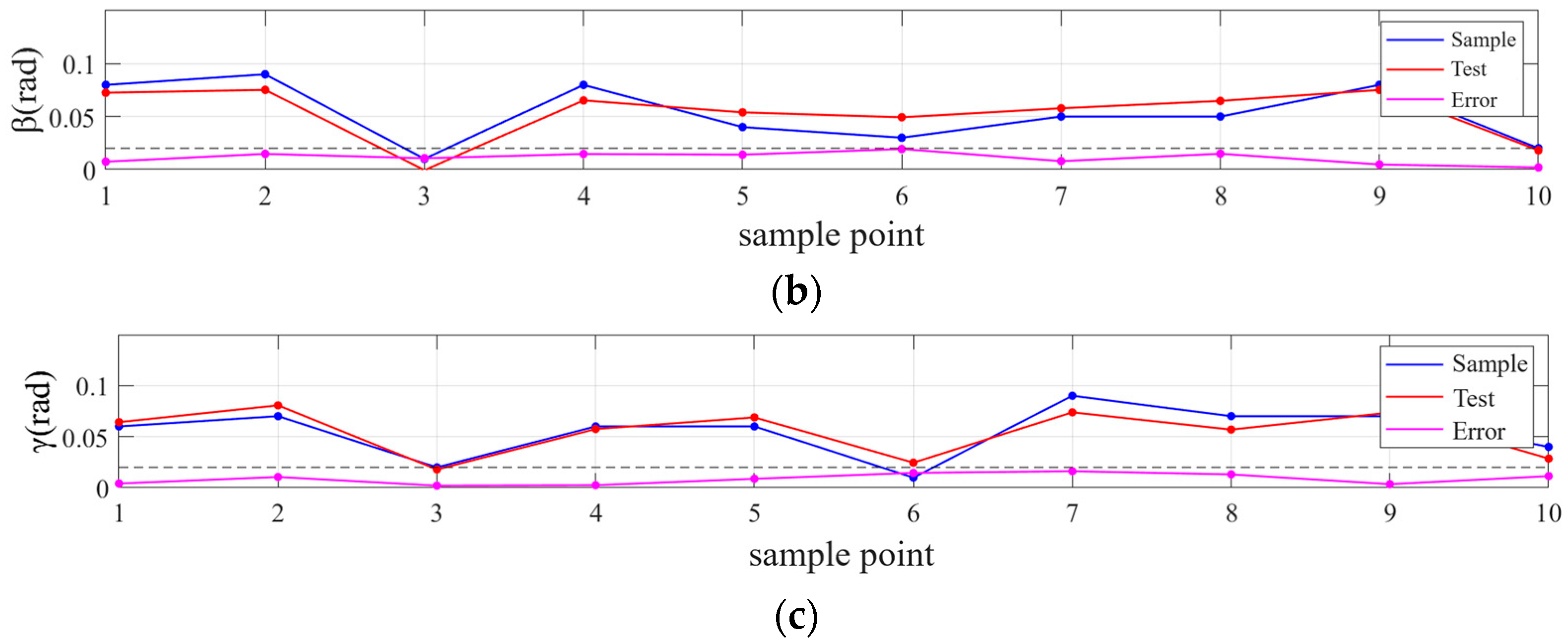

Figure 20a. The force-moment sensor reading value remains at zero. At around 6 s, the cross-peg continues to approach and insert into the cross-hole, resulting in an abrupt change in sensor value that indicates initial contact between the peg and the hole. The contact force value is (−19.247, −0.785, −5.3953) N, while the moment value is (−0.044, −1.244, 0.339) Nm.

Using the established BP neural network, the contact state is judged to be 1-2-2, and the mapping relationship between force-moment sensor values and angle errors is obtained as (0.057, 0.074, 0.076) rad in the cross-hole coordinate system. The corresponding force-moment sensor values and angle errors are (−0.011, 0.006, 0.065) m. According to the calculated error, the robot pose is adjusted, the contact force between the peg and the hole is restored to zero after the adjustment, and the cross-peg continues to move along its axis. Similar to the above process, the second contact occurs around 13 s, as shown in

Figure 20b, after automatic pose adjustment; the third contact occurs around 24 s, as shown in

Figure 20c, and the assembly is completed after 27 s.

After the third adjustment is completed, the force-moment sensor data is close to 0 due to the frictional force existing in the peg. The increase in cross-peg insertion depth no longer generates abrupt changes, indicating that the relative error between the peg and the hole has been adjusted within acceptable accuracy limits. The state of the system after completion of PiH assembly is shown in

Figure 21, wherein the cross-peg coordinate system {

D} coincides with the cross-hole coordinate system {

D}, and the position of each joint angle of the robot arm is

The joint angle is substituted into the transformation matrix of the robot link to obtain the relative pose

of the cross-hole coordinate system {

D} relative to the robot base coordinate system {

S}, which is

The relative pose obtained by the autonomous assembly system exhibits a negligible error relative to the pose measured by ROS in the initial state as shown in Equation (13), thereby validating the accuracy and correctness of the autonomous assembly system.

6. Conclusions

Based on the limitations of current peg-in-hole assembly, which mostly involves circular pegs and holes, and the fact that rotational DoF cannot be restricted about their axes after circular hole assembly, resulting in difficulty in obtaining the precise relative pose between the robot base and the hole, this paper proposes a new PiH assembly strategy by designing a cross-shaped peg and hole.

As many studies have focused solely on the insertion of pegs in PiH tasks, this paper aimed to establish a comprehensive autonomous assembly system that includes hole-searching and insertion based on force/moment and visual information. Therefore, the central coordinates of the cross-hole were obtained based on the three-dimensional reconstruction theory of binocular stereo vision. The trajectory for the robot was planned to align the axis of the cross-peg with the cross-hole without physical contact, thus achieving an autonomous hole-searching process. Then, all 26 contact states of cross-peg and cross-hole were classified and numbered according to the number of contact points and angle errors between the peg and the hole, and the force-moment sensor value under different contact states was summarized; then, the BP neural network was trained based on the sensor information and the relative errors of the cross peg and cross holes to establish the mapping relationship between force and error. Then, the cross-peg and cross-hole contact states were classified and numbered based on the number of contact points and angle errors between them. The force-moment sensor values under different contact states were summarized, and a BP neural network was trained using this information with relative errors to establish a mapping relationship between force and error. Finally, the PiH task was successfully executed to address the autonomous assembly challenge of the cross-peg and cross-hole, thereby obtaining the relative pose between the robot base coordinate system and the cross-hole coordinate system.

This new assembly strategy can replace the use of cross-pegs and cross-holes once this pose is obtained, enabling the assembly of more complex-shaped PiH tasks, such as spline assembly and square peg assembly. This solves the problem in which users need to reformulate the assembly strategy or design special sensors according to the size and shape of the peg. This not only reduces users’ workload but also enhances industrial production efficiency.

However, the research on this topic still needs to be improved, and our future research directions are as follows:

Firstly, to compare the assembly efficiency and convergence speed of the mapping model under different algorithms, such as reinforcement learning and iterative learning control;

Secondly, the replacement of various shaped pegs and holes to test their assembly effects was not conducted in this article. However, future research will focus on this area;

Finally, if the 6R robot can be fixed on a 6-DoF platform, after obtaining the relative pose of the robot base coordinate system and the cross-hole coordinate system, the platform can achieve rendezvous and docking with another platform while continuously detecting the docking process based on the follow-up function of the 6R robot. After the system is built, it can realize the assembly task of heavy machinery, such as rocket tank assembly and missile assembly.